Abstract

Objective

Hospitals in the National Healthcare Safety Network began reporting laboratory-identified (LabID) Clostridium difficile infection (CDI) events in January 2013. Our study quantified the differences between the LabID and traditional surveillance methods.

Design

Cohort study.

Setting

A cohort of 29 community hospitals in the southeastern United States.

Methods

Six months (January 1 to June 30, 2013) of data collected prospectively using both LabID and traditional surveillance definitions were analyzed. CDI events with mismatched surveillance categories between LabID and traditional definitions were identified and characterized further. Hospital-onset CDI (HO-CDI) rates for the entire cohort of hospitals were calculated using each method; then hospital-specific HO-CDI rates and standardized infection ratios (SIRs) were calculated. Hospital rankings based on each CDI surveillance measure were compared.

Results

A total of 1,252 incident LabID CDI events were identified during 708,551 patient-days; 286 (23%) mismatched CDI events were detected. The overall HO-CDI rate was 6.0 vs 4.4 per 10,000 patient-days for LabID and traditional surveillance, respectively (P < .001); of 29 hospitals, 25 (86%) detected a higher CDI rate using LabID than using the traditional method. Hospital rank in the cohort differed greatly between surveillance measures. A rank change of at least 5 places occurred in 9 of 28 hospitals (32%) when traditional surveillance ranks were compared with SIR ranks.

Conclusions

LabID surveillance resulted in a higher hospital-onset CDI incidence rate than did traditional surveillance. Hospital-specific rankings varied based on the HO-CDI surveillance measure used. A clear understanding of differences in CDI surveillance measures is important when interpreting national and local CDI data.

Clostridium difficile infection (CDI) is the most common healthcare-acquired infection in the United States; it increases healthcare costs by approximately $35 billion per year.1,2 A highly efficient and accurate surveillance system is essential for assessing infection prevention efforts. Traditional surveillance for CDI requires manual chart review and is labor intensive, subjective, and expensive.3

The National Healthcare Safety Network (NHSN) enacted a new surveillance method in January 2013 termed laboratory-identified (LabID) CDI events. The LabID method was designed to use electronically captured laboratory data and hospital admission dates to classify events as hospital-onset (HO) or community-onset (CO). Hospitals receiving payment from the Centers for Medicare and Medicaid Services (CMS) were required to report CDI LabID events to the NHSN.
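Because LabID uses only dates, its onset logic fits in a few lines. The sketch below is illustrative, not NHSN code: the function name `labid_onset_category` is hypothetical, and the cut-offs (admission date = hospital day 1; a specimen on day 4 or later is HO; an earlier specimen is CO-HCFA if the patient was discharged from the same facility within the previous 4 weeks) reflect our reading of the NHSN LabID protocol and should be checked against the current manual. Outpatient and emergency department specimens are not handled.

```python
from datetime import date
from typing import Optional


def labid_onset_category(admit: date, specimen: date,
                         prior_discharge: Optional[date] = None) -> str:
    """Assign a LabID onset category from dates alone (illustrative sketch).

    Assumed cut-offs (our reading of the NHSN LabID protocol, not NHSN code):
    the admission date is hospital day 1; a specimen collected on day 4 or
    later is hospital-onset (HO); an earlier specimen is community-onset (CO),
    or CO-HCFA if the patient was discharged from the same facility within
    the previous 4 weeks (28 days).
    """
    hospital_day = (specimen - admit).days + 1
    if hospital_day >= 4:
        return "HO"
    if prior_discharge is not None and 0 <= (specimen - prior_discharge).days <= 28:
        return "CO-HCFA"
    return "CO"


# Symptoms play no part: a patient with diarrhea on admission but tested on
# hospital day 5 is still classified as HO.
print(labid_onset_category(date(2013, 1, 1), date(2013, 1, 5)))  # HO
```

Because no symptom information enters the rule, a patient who is symptomatic on admission but tested late is counted as HO, which is the delayed-diagnosis mechanism examined in the Results.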

LabID CDI events and, more specifically, LabID HO-CDI event rates have been publicly reported in many states.3,4 Many hospitals now report LabID CDI events alone or in parallel with traditional, manually performed CDI surveillance. Public reporting generally uses the standardized infection ratio (SIR), a risk-adjusted measure that compares a hospital's observed LabID HO-CDI events with the number predicted from a baseline standard population of NHSN hospitals from 2010 to 2011. However, little is known about how the newer LabID method of identifying CDI events compares with traditional surveillance. Gase et al3 previously identified significant differences between traditional and LabID surveillance methods, but their study was primarily limited to large academic medical centers in the New York metropolitan region, and their results may not be generalizable to other hospital settings.

The aims of the current study were twofold. First, we aimed to compare LabID and traditional infection surveillance method estimates of C. difficile incidence in a cohort of community hospitals and thus validate the findings of Gase et al. Second, we aimed to understand how measurement of HO-CDI by LabID, traditional surveillance, or SIR influences an individual hospital's rank within a cohort of community hospitals.

Methods

We performed a prospective observational cohort study of patients admitted to 29 community hospitals in the Duke Infection Control Outreach Network (DICON) between January 1, 2013, and June 30, 2013. DICON is a collaborative network of 43 community hospitals in the southeastern United States that share surveillance data and consultative services.5

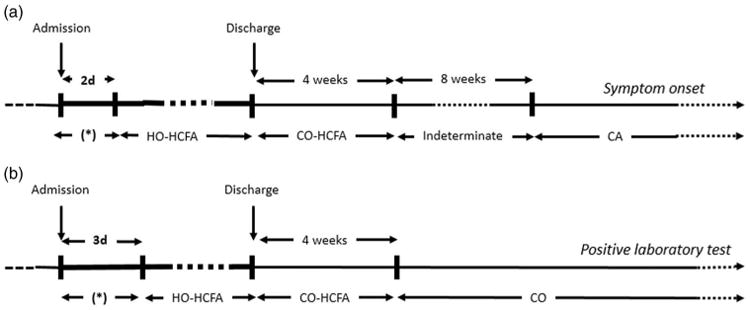

Infection preventionists (IPs) at each hospital prospectively collected surveillance data using both the NHSN's CDI surveillance definition and the LabID module definition (Figure 1).6,7 IPs were required to enter a corresponding traditional surveillance definition category for each CDI event identified by LabID. Thus, the total number of CDI events was the same for both surveillance methods, but the distribution of CDI events varied by surveillance category.

Figure 1.

Timeline for definitions of Clostridium difficile infection (CDI) for both (a) traditional surveillance and (b) LabID surveillance methods.

Note. This figure is adapted from McDonald et al.6 It is designed for illustrative purposes and does not include all surveillance definition categories. Case patients with events (symptom onset versus positive laboratory test) occurring during the window of hospitalization marked by an asterisk (*) could be classified as having community-acquired/community-onset disease, indeterminate disease, or community-onset, healthcare-facility-associated disease, depending on the timing of their previous hospitalization. HO-HCFA, hospital-onset healthcare-facility-associated CDI; CO-HCFA, community-onset healthcare-facility-associated CDI; CA, community-acquired CDI; CO, community-onset CDI.

The following additional data were collected for each CDI event: date of admission, date of discharge, date of CDI test, indicator for signs or symptoms within 48 hours of admission, traditional surveillance category, LabID surveillance category, location prior to admission (eg, other hospital, nursing home, home), hospital identifier, number of admissions at each hospital, number of patient-days at each hospital, facility bed size, CDI test type (eg, nucleic acid amplification test or enzyme immunoassay), medical school affiliation, and facility type (eg, acute vs nonacute). The traditional surveillance categories included those in Figure 1 as well as recurrent events (ie, cases occurring between 2 and 8 weeks after the onset of a previous event) and continuation events (ie, a CDI event occurring within 14 days of a previous CDI event for that patient). We followed NHSN surveillance guidelines and included only new LabID events in the analysis of incidence rates.7 Therefore, duplicate positive CDI tests occurring within 14 days of a preceding positive test were excluded. LabID CDI event categories are delineated in Figure 1 and also include recurrent events (positive tests obtained between 2 and 8 weeks after the most recent event for that patient). All hospitals used the same surveillance definitions and protocols for data entry. Laboratory policies of every participating hospital stipulated that only stool specimens conforming to the shape of the container would be processed.
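The duplicate and recurrence rules above amount to a single pass over one patient's sorted positive test dates. The sketch below is illustrative only: `classify_positive_tests` is a hypothetical helper, and reading "within 14 days" as 14 days or fewer and "between 2 and 8 weeks" as 15 to 56 days are boundary assumptions of ours, not taken from the NHSN manual.

```python
from datetime import date
from typing import List, Tuple


def classify_positive_tests(test_dates: List[date]) -> List[Tuple[date, str]]:
    """Label one patient's positive C. difficile tests (hypothetical helper).

    Assumed rules, following the Methods text:
    - duplicate: within 14 days of the preceding positive test (excluded);
    - recurrent: more than 14 days after the preceding positive test but no
      more than 8 weeks (56 days) after the most recent counted event;
    - incident: everything else.
    """
    labels = []
    last_test = None   # most recent positive test, duplicates included
    last_event = None  # most recent counted (incident or recurrent) event
    for d in sorted(test_dates):
        if last_test is not None and (d - last_test).days <= 14:
            label = "duplicate"
        elif last_event is not None and (d - last_event).days <= 56:
            label = "recurrent"
        else:
            label = "incident"
        if label != "duplicate":
            last_event = d
        last_test = d
        labels.append((d, label))
    return labels


tests = [date(2013, 1, 1), date(2013, 1, 10), date(2013, 2, 1), date(2013, 4, 30)]
for d, label in classify_positive_tests(tests):
    print(d.isoformat(), label)
# 2013-01-01 incident
# 2013-01-10 duplicate
# 2013-02-01 recurrent
# 2013-04-30 incident
```

Only the non-duplicate labels would enter event counts; whether a recurrent event is counted toward incidence is a separate reporting choice.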

Traditional surveillance categories and the corresponding LabID categories were compared to evaluate discordance. CDI cases assigned to different surveillance categories by the two methods (eg, HO vs CO) were deemed mismatches. A cause for each mismatched CDI case was determined using information in our standardized database. If a mismatch had more than one explanation, each reason was counted.

We compared LabID and traditional surveillance methods for CDI. First, we calculated the total HO-CDI incidence rate per 10,000 patient-days for LabID versus traditional surveillance by combining data from all 29 participating hospitals. Then, we compared these rates using a χ² test. Second, we calculated incidence rates per 10,000 patient-days for each hospital for each surveillance method. Then, we compared individual hospital rates using a Wilcoxon signed-rank test. Third, we used the LabID NHSN risk adjustment formula to calculate the expected number of HO-CDI LabID events for each hospital.8 This formula uses defined covariates (ie, CDI test type, CO-CDI admission prevalence rate, facility bed size, and medical school affiliation) to estimate the number of expected LabID events based on the NHSN standard population from 2010 to 2011.8 For each hospital, the number of LabID HO-CDI events was divided by the expected number of LabID HO-CDI events to calculate an SIR. Hospitals with <1 expected LabID HO-CDI event (n = 0) or missing data on necessary covariates (n = 1) were excluded from the SIR analyses. We ranked each hospital from lowest to highest based on HO-CDI LabID incidence rates, traditional HO-CDI incidence rates, and SIR. Scatter plots with reference lines were used to show the degree of correlation between hospital ranks.
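The rate and SIR arithmetic described above can be sketched as follows. The function names are hypothetical, and the expected count passed to the SIR function must come from the NHSN risk adjustment formula,8 which this sketch does not reproduce; only the exclusion rule (expected count missing or below 1) is taken from the text.

```python
from typing import Optional


def rate_per_10000(events: int, patient_days: int) -> float:
    """Incidence rate per 10,000 patient-days."""
    return events * 10_000 / patient_days


def standardized_infection_ratio(observed: int,
                                 expected: Optional[float]) -> Optional[float]:
    """SIR = observed / expected LabID HO-CDI events.

    `expected` is assumed to come from the NHSN risk adjustment formula (not
    reproduced here). As in the Methods, no SIR is returned when the expected
    count is missing (missing covariates) or below 1.
    """
    if expected is None or expected < 1:
        return None
    return observed / expected


# Cohort totals: 425 LabID and 311 traditional HO events over 708,551 patient-days.
print(round(rate_per_10000(425, 708_551), 1))  # 6.0
print(round(rate_per_10000(311, 708_551), 1))  # 4.4
```

The cohort totals used in the usage lines are the HO row and column totals of Table 2. For the paired hospital-level comparison, an equivalent of the Wilcoxon signed-rank test outside SAS is `scipy.stats.wilcoxon(x, y)` applied to the two lists of hospital rates.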

To investigate how delayed diagnosis in patients who had symptoms on admission affected LabID HO-CDI rates, we identified cases categorized as LabID HO-CDI events that also had an indicator for signs and symptoms in the first 48 hours of admission. These cases were subtracted from the LabID HO-CDI event counts, and facility-level rates were recalculated to give a “corrected” LabID HO-CDI rate. We then compared original and corrected LabID HO-CDI events, incidence rates, and hospital rankings.
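A minimal sketch of the correction, assuming per-hospital counts of LabID HO events and of the subset with early symptoms are available; the function name and the example hospital below are hypothetical.

```python
def corrected_ho_rate(labid_ho_events: int, ho_with_early_symptoms: int,
                      patient_days: int) -> float:
    """LabID HO-CDI rate per 10,000 patient-days after removing HO events whose
    signs or symptoms were documented within 48 hours of admission."""
    if not 0 <= ho_with_early_symptoms <= labid_ho_events:
        raise ValueError("early-symptom cases must be a subset of the LabID HO events")
    corrected_events = labid_ho_events - ho_with_early_symptoms
    return corrected_events * 10_000 / patient_days


# Hypothetical hospital: 18 LabID HO events, 5 with early symptoms, 20,000 patient-days.
original = 18 * 10_000 / 20_000               # 9.0 per 10,000 patient-days
corrected = corrected_ho_rate(18, 5, 20_000)  # 6.5 per 10,000 patient-days
print(original, corrected, original - corrected)
```

Applying this to every hospital gives the paired original and corrected rates that the Results compare with a Wilcoxon signed-rank test.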

All statistical analyses were performed using SAS software, version 9.3 (SAS Institute, Cary, North Carolina). P < .05 was considered statistically significant. This research was deemed exempt from review by the Duke Institutional Review Board.

Results

A total of 1,262 CDI events were observed over 708,551 patient-days in 29 hospitals during the 6-month study period. After 10 duplicate events were removed, a total of 1,252 CDI events were analyzed. Hospital demographic information is summarized in Table 1.

Table 1. Characteristics of 29 Study Hospitals, Duke Infection Control Outreach Network, 2013.

| Characteristic | Hospital Cohort (n = 29) |
|---|---|
| 6-mo admissions, median (IQR) | 4,548 (2,921–6,691) |
| 6-mo patient-days, median (IQR) | 19,803 (11,089–28,609) |
| Facility size, median (IQR) | 249 beds (147–289) |
| Facility size, No. (%) | |
| >245 beds | 12 (41) |
| 101–245 beds | 15 (52) |
| ≤100 beds | 2 (7) |
| CDI test type, No. (%) | |
| NAAT/PCR | 13 (45) |
| EIA (toxin) | 15 (52) |
| Otherᵃ | 1 (3) |
| Medical school affiliation, No. (%) | |
| Major | 1 (3) |
| Graduate | 1 (3) |
| None | 27 (93) |
| Facility type, No. (%) | |
| General acute care | 28 (97) |
| Other | 1 (3) |
| State, No. (%) | |
| North Carolina | 20 (69) |
| South Carolina | 3 (10) |
| Georgia | 3 (10) |
| Virginia | 2 (7) |
| Florida | 1 (3) |

Note. IQR, interquartile range; CDI, Clostridium difficile infection; NAAT, nucleic acid amplification test; PCR, polymerase chain reaction; EIA, enzyme immunoassay.

ᵃ Hospital was transitioning from EIA to NAAT during the study period and performed both tests.

Overall, 286 mismatched events (23%) were identified between the two surveillance methods (Table 2). We identified three major causes of mismatched events. The most frequent cause was the lack of an indeterminate category in LabID definitions (n = 140; 46%). Of these 140 cases, 121 (86%) were categorized as CO-CDI by LabID. The second most frequent cause was a diagnostic testing delay of >3 days despite the presence of CDI symptoms in the first 2 days of admission (n = 107; 37%). The third most frequent cause was misclassification of recurrent or continuation episodes as incident events by LabID (n = 57; 4.6% of all 1,252 events).

Table 2. Comparison of 1,252 Incident Cases of Clostridium difficile Infection Using the National Healthcare Safety Network LabID Versus Traditional Surveillance C. difficile Events, Duke Infection Control Outreach Network, 2013ᵃ

| Traditional CDI Surveillance Event | LabID HO | LabID CO-HCFA | LabID CO | LabID Recurrent | Total, No. (%) |
|---|---|---|---|---|---|
| HO | 310 | 0 | 0 | 1 | 311 (25) |
| CO-HCFA | 26 | 153 | 0 | 2 | 181 (14) |
| CA | 58 | 0 | 426 | 0 | 484 (39) |
| Indeterminate | 14 | 3 | 121 | 2 | 140 (11) |
| Recurrent | 12 | 13 | 17 | 77 | 119 (9.5) |
| Continuation | 5 | 3 | 7 | 2 | 17 (1.4) |
| Total, No. (%) | 425 (34) | 172 (14) | 571 (46) | 84 (6.7) | 1,252 |

Note. CDI, Clostridium difficile infection; LabID, laboratory identified; HO, hospital-onset CDI; CO-HCFA, community-onset–healthcare-facility-associated CDI; CO, community-onset CDI; CA, community-associated CDI.

ᵃ Duplicate events were excluded from analysis because they are not counted as new events by the National Healthcare Safety Network.
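The 286 mismatches can be recomputed from Table 2 by counting every cell off the matching diagonal. The correspondence used below (traditional CA matching LabID CO, and indeterminate and continuation events never matching because LabID has no such category) is our reading of the table, and the names are hypothetical.

```python
# Table 2 counts: rows = traditional category,
# columns = LabID category in the order of LABID_COLUMNS.
LABID_COLUMNS = ["HO", "CO-HCFA", "CO", "Recurrent"]
TABLE_2 = {
    "HO":            [310, 0, 0, 1],
    "CO-HCFA":       [26, 153, 0, 2],
    "CA":            [58, 0, 426, 0],
    "Indeterminate": [14, 3, 121, 2],
    "Recurrent":     [12, 13, 17, 77],
    "Continuation":  [5, 3, 7, 2],
}

# Assumed correspondence; categories absent here have no LabID counterpart.
MATCHING_LABID = {"HO": "HO", "CO-HCFA": "CO-HCFA", "CA": "CO", "Recurrent": "Recurrent"}


def count_mismatches(table):
    """Return (total events, events whose two categories do not correspond)."""
    total = matched = 0
    for traditional, counts in table.items():
        total += sum(counts)
        target = MATCHING_LABID.get(traditional)
        if target is not None:
            matched += counts[LABID_COLUMNS.index(target)]
    return total, total - matched


total, mismatched = count_mismatches(TABLE_2)
print(total, mismatched, f"{mismatched / total:.0%}")  # 1252 286 23%
```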

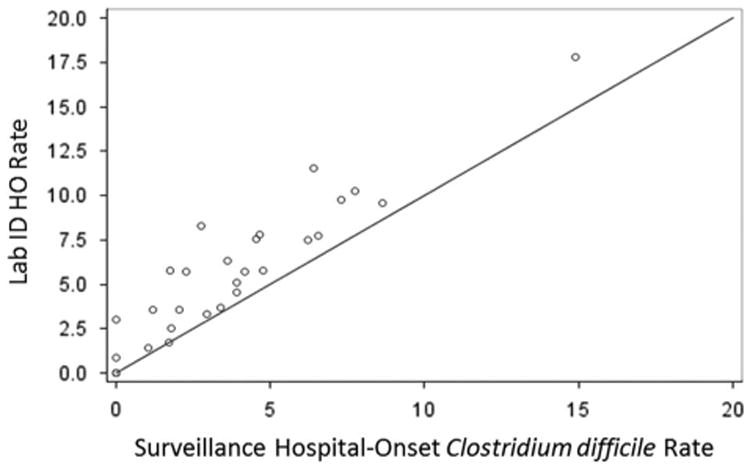

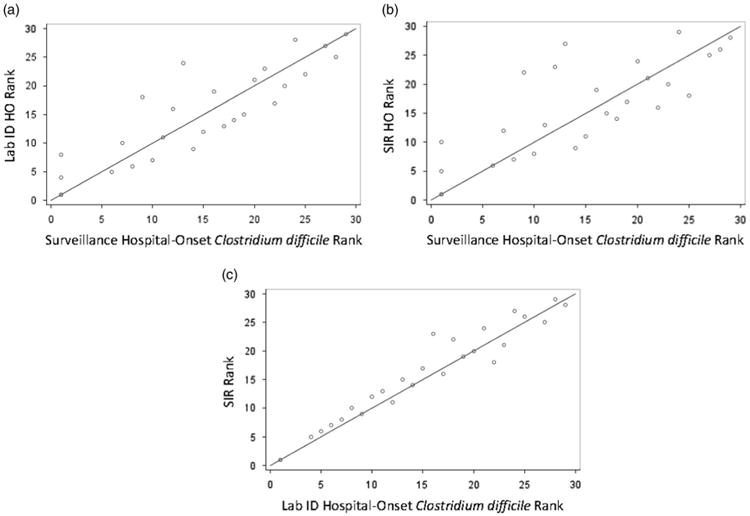

A statistically significant difference in total HO-CDI incidence rates was observed between the LabID and traditional surveillance methods. Specifically, the total LabID HO-CDI incidence rate was 36% higher than the traditional surveillance rate (6.0 vs 4.4 per 10,000 patient-days; P < .001). Comparison of hospital-specific estimates revealed that 25 of 29 hospitals (86%) had a higher HO-CDI incidence rate using LabID than using traditional surveillance (Figure 2). Statistically significant differences were also observed between hospital-specific rates for CO-CDI and recurrent events (Table 3). SIR point estimates for 17 of 28 hospitals (61%) were <1, which the NHSN considers better than the national average.9 Individual hospital rankings differed depending on the surveillance method used for comparison (Figure 3), as the following two examples illustrate. First, a 200-bed hospital reported 6 traditional surveillance events and 18 LabID events; as a result, its rank within the cohort changed by 14 positions (rank = 13 for traditional surveillance; rank = 24 for LabID; rank = 27 for SIR). Second, a 220-bed hospital that reported 3 traditional surveillance events and 10 LabID events changed by 13 positions (rank = 9 for traditional surveillance; rank = 18 for LabID; rank = 22 for SIR).
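As a worked check, the cohort-level rates follow from the HO totals in Table 2 (425 LabID and 311 traditional events) and the 708,551 patient-days, with the relative increase computed from the rounded rates as reported:

```latex
\begin{aligned}
\text{HO-CDI rate} &= \frac{\text{HO-CDI events}}{\text{patient-days}} \times 10{,}000 \\
\text{LabID:} \quad \frac{425}{708{,}551} \times 10{,}000 &\approx 6.0 \\
\text{Traditional:} \quad \frac{311}{708{,}551} \times 10{,}000 &\approx 4.4 \\
\text{Relative increase:} \quad \frac{6.0 - 4.4}{4.4} &\approx 0.36
\end{aligned}
```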

Figure 2.

Traditional surveillance and LabID hospital-onset Clostridium difficile infection incidence in 28 study hospitals from the Duke Infection Control Outreach Network. Note. HO, hospital-onset. Rates are number of events per 10,000 patient-days. Three hospitals share the [0,0] coordinate.

Table 3. Summary of Clostridium difficile Event Rates per Hospital, Duke Infection Control Outreach Network, 2013ᵃ

| CDI Incidence Rate | LabID, Median (IQR) | Traditional Surveillance, Median (IQR) | Median Difference (IQR)ᵃ | P Value |
|---|---|---|---|---|
| HO rate per 10,000 patient-days | 5.70 (3.03–7.76) | 3.40 (1.71–4.75) | 1.24 (0.63–2.89) | <.001 |
| CO-HCFA rate per 10,000 patient-days | 2.30 (1.25–3.89) | 2.53 (1.24–3.73) | 0 (−0.48 to 0) | .50 |
| CA rate per 1,000 admissionsᵇ | 4.01 (1.68–5.56) | 2.77 (1.50–5.51) | 0.51 (0–1.06) | <.001 |
| Recurrent CDI rate per 1,000 admissionsᵇ | 0.330 (0–1.13) | 0.478 (0.19–1.19) | −0.017 (−0.41 to 0) | <.001 |
| LabID standardized infection ratioᶜ | 0.78 (0.50–1.15) | N/A | N/A | N/A |

Note. CDI, Clostridium difficile infection; LabID, laboratory identified; IQR, interquartile range; HO, hospital-onset CDI; CO-HCFA, community-onset–healthcare-facility-associated CDI; CO, community-onset CDI; CA, community-associated CDI.

ᵃ Median difference between LabID and traditional surveillance CDI incidence rates.

ᵇ Observed LabID and traditional surveillance HO-CDI rates in this table differ from the overall data because one hospital with missing LabID data was excluded from these analyses.

ᶜ Predicted NHSN LabID HO-CDI incidence for the SIR was calculated with the Centers for Disease Control and Prevention risk adjustment calculator.

Figure 3.

Comparison of hospital-onset Clostridium difficile infection (HO-CDI) rankings based on surveillance method in a network of 28 hospitals during 2013. Rankings are from lowest to highest CDI incidence rate. Reference line is in blue. Note. HO, hospital-onset CDI; SIR, standardized infection ratio.

In total, 9 of 28 hospitals (32%) had a rank change of ≥5 positions between traditional surveillance and SIR ranks. Medium-sized hospitals (101–245 beds) with SIR values >1 showed the most ranking variation in the study cohort: 6 of the 9 hospitals with a rank change of ≥5 were in the 101–245-bed size category, and 4 of these 9 hospitals had an SIR >1. Ranks by LabID and SIR did not differ by >5 positions except for one hospital, which reported 2 traditional surveillance events and 5 LabID events (traditional surveillance rank = 12, LabID rank = 16, SIR rank = 23) (Figure 3C).
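The rank comparison can be sketched as follows. The Methods do not say how ties were handled, and ties do occur (three hospitals had zero HO events by both methods), so giving tied hospitals the average of the ranks they span is an assumption here, as are the function names.

```python
from typing import Dict, List


def rank_lowest_to_highest(values: Dict[str, float]) -> Dict[str, float]:
    """Rank hospitals from lowest (rank 1) to highest value.

    Tied values share the average of the ranks they span (assumed tie rule).
    """
    ordered = sorted(values, key=values.get)
    ranks: Dict[str, float] = {}
    i = 0
    while i < len(ordered):
        j = i
        # Extend j over every hospital tied with ordered[i].
        while j + 1 < len(ordered) and values[ordered[j + 1]] == values[ordered[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[ordered[k]] = average_rank
        i = j + 1
    return ranks


def large_rank_changes(rank_a: Dict[str, float], rank_b: Dict[str, float],
                       threshold: int = 5) -> List[str]:
    """Hospitals whose rank differs by at least `threshold` positions."""
    return [h for h in rank_a if abs(rank_a[h] - rank_b[h]) >= threshold]


# Hypothetical rates: hospitals A and B tie at zero.
print(rank_lowest_to_highest({"A": 0.0, "B": 0.0, "C": 2.5, "D": 1.0}))
```

With ranks computed this way for traditional rates and for SIRs, `large_rank_changes` returns the hospitals that moved by 5 or more positions; the two Results examples (13 to 27 and 9 to 22) would both qualify.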

Of 29 hospitals, 24 had at least 1 LabID HO-CDI case affected by misclassification as HO-CDI due to delayed diagnosis when symptom onset was documented within the first 48 hours of admission. The median (IQR) number of LabID HO-CDI events with delayed diagnosis was 3 (1–6) per hospital, representing 23% (10%–43%) of LabID HO-CDI cases. Removing delayed-diagnosis cases from reporting would have produced a median decrease in the LabID HO-CDI rate of 1.14 events per 10,000 patient-days (IQR, 0.37–2.37; Wilcoxon signed-rank P = .04). Hospital rankings based on corrected LabID HO rates were similar to rankings based on traditional surveillance.

Discussion

We observed large differences in overall HO-CDI measurement between surveillance methods. Specifically, HO-CDI incidence was 36% higher when measured with LabID than with traditional surveillance definitions. Mismatched cases were largely related to the lack of an indeterminate surveillance category and to delayed diagnostic testing. Approximately one-third of hospitals (9 of 28, 32%) changed rank by 5 or more positions when LabID surveillance, traditional surveillance, and SIR rankings were compared. These data support previously observed differences between LabID and traditional surveillance methods.3

Hospitals that adopt the LabID surveillance method should expect to observe higher HO-CDI incidence rates than with traditional surveillance. However, the magnitude of increase in HO-CDI incidence for an individual hospital transitioning from traditional to LabID CDI surveillance is variable and difficult to predict. We theorize that this variability may be due to a combination of three factors: heterogeneity in diagnostic testing practices,10 the number of “imported” CO-CDI cases, and the quality of traditional surveillance practices. Of these, diagnostic testing practices may be particularly important.

Mismatched cases between LabID and traditional surveillance that are due to delays in diagnostic testing may penalize hospitals on publicly reported SIR measures in two ways. First, a diagnostic testing delay of >3 days in patients with symptoms on admission shifts CO-CDI events into the HO-CDI category, which inflates the LabID HO-CDI rate. Second, this shift lowers the CO-CDI prevalence rate, which is a risk adjustment variable in the SIR equation. In our analysis of LabID HO-CDI cases with symptom onset documented in the first 48 hours of admission, we found that most hospitals (24 of 29) were affected by delayed laboratory diagnosis of symptomatic patients. Therefore, prompt diagnostic testing of patients with diarrheal illness within the first 3 days of admission will not only improve patient care but will also improve the accuracy of CDI surveillance in hospitals using LabID definitions.
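To make the two-way effect concrete, the toy calculation below moves late-tested cases from CO to HO. It is deliberately not the NHSN risk model: `toy_sir`, its `baseline` and `slope` parameters, and the linear form are hypothetical, chosen only so that expected HO events rise with CO admission prevalence, the direction implied by its role as a risk adjustment variable.

```python
def toy_sir(ho_events: int, co_events: int, admissions: int,
            baseline: float = 1.0, slope: float = 0.5) -> float:
    """Toy SIR = observed HO events / a made-up expected count.

    NOT the NHSN model: `baseline` and `slope` are hypothetical and only make
    the expected count increase with CO admission prevalence.
    """
    co_prevalence_per_1000 = co_events * 1000 / admissions
    expected = baseline + slope * co_prevalence_per_1000
    return ho_events / expected


# Hypothetical hospital with 4,000 admissions, before and after 4 symptomatic
# patients are tested late and shift from CO to HO.
before = toy_sir(ho_events=10, co_events=20, admissions=4000)
after = toy_sir(ho_events=14, co_events=16, admissions=4000)
print(round(before, 2), round(after, 2))  # 2.86 4.67
```

The numerator rises by the shifted cases while the expected count falls with the lower CO prevalence, so both effects push the SIR in the same unfavorable direction.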

The most common causes of discordance between surveillance methods in our study cohort of community hospitals were similar to those reported by Gase et al3 in metropolitan New York academic medical centers. Both studies identified delayed diagnostic testing and classification of recurrent or duplicate episodes as new events as major reasons for discordance between surveillance methods. However, we note important differences between our findings and those of Gase et al.

First, almost 15% of mismatched CDI events in the study by Gase et al were documented as LabID-only events due to lack of documented diarrhea. In contrast, IPs were required to select a corresponding traditional surveillance category for each identified LabID case in our study. This requirement was incorporated into the data entry process for our surveillance database based on two assumptions. First, we assumed that hospital laboratories follow their policies to reject specimens that do not conform to the shape of the container. Second, we assumed that medical record documentation was less reliable than the presence of an acceptable liquid stool specimen for detecting patients with diarrheal illness. Other investigators have observed that diarrhea is documented in <60% of patients who undergo CDI testing.11 Undoubtedly, some of our study patients had true diarrhea due to CDI, while others were colonized with C. difficile and had diarrheal symptoms for other reasons. The parallel surveillance approach practiced in our cohort may differ from surveillance programs in other hospitals, which may reduce the generalizability of our findings. Specifically, our method may have inflated the traditional surveillance rate and underestimated the difference between traditional and LabID incidence rates. However, our observed LabID HO-CDI rate increase of 36% was similar to the 28% increase in prevalidation results reported by Gase et al.3

Second, the rate of misclassification due to recurrent or continuing CDI episodes (4.6%) in our cohort was lower than that of Gase et al (15.2%). Finally, unlike Gase et al, we did not perform any further evaluation of mismatched events. Thus, we could not detect subjective biases, errors, or adjudications that are known to occur with traditional surveillance. Our database contained deidentified patient data, so we were not able to pursue additional individual chart reviews for validation purposes. Despite these limitations, this study provides an illustrative sample of community hospital CDI surveillance that is an important addition to the existing literature.

Hospitals now have the choice to continue traditional CDI surveillance in addition to LabID or to use LabID CDI surveillance exclusively. This facility-level decision may be based on a variety of factors, such as infection-control personnel resources, the need to identify community reservoirs of CDI, and demands for external reporting. LabID surveillance considerably reduces the resource burden of data collection. However, LabID provides limited information on the location of infection onset, the date of symptom onset, and prior episodes of CDI, information that is available from chart review but absent from laboratory data. IPs and hospital epidemiologists investigating outbreaks or increased rates of CDI benefit from reliable information on the location and timing of symptom onset. This information allows investigators to identify likely reservoirs and locations of CDI transmission and to formulate plans for intervention. In contrast, for external benchmarking, a more objective measure like LabID offers an advantage over methods that are susceptible to subjective biases. Thus, we believe that continuing both surveillance methods for CDI in parallel is beneficial. However, external reporting will often be a higher priority for hospitals with limited resources.

Our study has several limitations. First, our network includes community hospitals in the southeastern United States, and our findings may not be generalizable to larger academic centers in other geographic locations. For example, we observed little effect of SIR risk adjustment compared with LabID HO-CDI rates on hospital ranking in our cohort (Figure 3C). The included hospitals were very similar in 2 of the 3 SIR risk adjustment variables: medical school affiliation and hospital size (Table 1). Second, all identified CDI events were required to have both a traditional and a LabID surveillance category defined, which could lead to higher traditional surveillance CDI rates and limit generalizability, as discussed in detail above. Third, we were unable to perform any further evaluation of mismatched events. Our estimates of traditional CDI surveillance rates may include errors in the application of surveillance definitions that are known to occur with manual review methods. Similarly, we were unable to evaluate errors in data entry into our database. However, most community hospitals do not conduct parallel CDI surveillance or routinely perform further evaluation of all mismatched CDI events. Thus, our results illustrate community hospital experiences with two methods of CDI surveillance performed in parallel.

In summary, LabID surveillance produced higher estimates of HO-CDI incidence than traditional surveillance methods, and this result has now been demonstrated in both urban academic medical centers and smaller community hospitals. Prompt CDI testing of patients with diarrheal illness within the first 3 days of hospital admission will improve HO-CDI LabID reporting accuracy and may improve SIR estimates for individual hospitals. A clear understanding of differences in CDI surveillance measures is important when interpreting national and local CDI data. Facility-level decisions about the use of parallel CDI surveillance methods should consider the advantages and disadvantages of both methods.

Acknowledgments

We would like to thank all of the participating hospitals in the Duke Infection Control Outreach Network.

Financial support: DJA was supported by a grant from the National Institutes of Health/National Institute of Allergy and Infectious Diseases (No. K23AI095357).

Footnotes

Previous Presentation: A preliminary report of this research was presented at IDWeek, October 2–6, 2013, San Francisco, CA. Abstract 41953.

Potential conflicts of interest: All authors report no conflicts of interest relevant to this article.

References

1. Miller BA, Chen LF, Sexton DJ, Anderson DJ. Comparison of the burdens of hospital-onset, healthcare facility-associated Clostridium difficile infection and of healthcare-associated infection due to methicillin-resistant Staphylococcus aureus in community hospitals. Infect Control Hosp Epidemiol. 2011;32:387–390. doi: 10.1086/659156.

2. Centers for Disease Control and Prevention. Antibiotic Resistance Threats in the United States. Centers for Disease Control and Prevention website. http://www.cdc.gov/drugresistance/threat-report-2013/pdf/ar-threats-2013-508.pdf. Published 2013. Accessed September 30, 2013.

3. Gase KA, Haley VB, Xiong K, Van Antwerpen C, Stricof RL. Comparison of 2 Clostridium difficile surveillance methods: National Healthcare Safety Network's laboratory-identified event reporting module versus clinical infection surveillance. Infect Control Hosp Epidemiol. 2013;34:284–290. doi: 10.1086/669509.

4. Illinois Department of Public Health. Illinois Statewide Report of Clostridium difficile Infections in Acute Care Hospitals, NHSN Reporting January 1, 2012–December 31, 2012. Illinois Department of Public Health website. http://www.healthcarereportcard.illinois.gov/files/pdf/C_diff_IL_acute_hosp_NHSN_2012_final.pdf. Published June 2013. Accessed September 30, 2013.

5. Anderson DJ, Miller BA, Chen LF, et al. The network approach for prevention of healthcare-associated infections: long-term effect of participation in the Duke Infection Control Outreach Network. Infect Control Hosp Epidemiol. 2011;32:315–322. doi: 10.1086/658940.

6. McDonald LC, Coignard B, Dubberke E, et al. Recommendations for surveillance of Clostridium difficile-associated disease. Infect Control Hosp Epidemiol. 2007;28:140–145. doi: 10.1086/511798.

7. Centers for Disease Control and Prevention. Multidrug-Resistant Organism & Clostridium difficile Infection (MDRO/CDI) Module. Centers for Disease Control and Prevention website. http://www.cdc.gov/nhsn/PDFs/pscManual/12pscMDRO_CDADcurrent.pdf. Published January 2014. Accessed September 13, 2014.

8. Centers for Disease Control and Prevention. Risk Adjustment for Healthcare Facility-Onset C. difficile and MRSA Bacteremia Laboratory-identified Event Reporting in NHSN. Centers for Disease Control and Prevention website. http://www.cdc.gov/nhsn/PDFs/mrsa-cdi/RiskAdjustment-MRSA-CDI.pdf. Published March 2013. Accessed November 7, 2014.

9. Centers for Disease Control and Prevention. Your Guide to the Standardized Infection Ratio (SIR). National Healthcare Safety Network e-News Special Edition. http://www.cdc.gov/nhsn/PDFs/Newsletters/NHSN_NL_OCT_2010SE_final.pdf. Published October 2010. Accessed November 7, 2014.

10. Moehring RW, Lofgren ET, Anderson DJ. Impact of change to molecular testing for Clostridium difficile infection on healthcare facility-associated incidence rates. Infect Control Hosp Epidemiol. 2013;34:1055–1061. doi: 10.1086/673144.

11. Jury L, S B, Stiefel, Donskey CJ. How smart is the chart? Diarrhea, documentation, fecal incontinence, and Clostridium difficile infection. IDWeek 2012. Abstract 360. Published October 12, 2012.