Researchers examined the interrater reliability of the Record of Driving Errors (RODE) using 2 occupational therapy driver rehabilitation specialists working with 24 older adults diagnosed with dementia.

MeSH TERMS: automobile driving, dementia, occupational therapy, reproducibility of results, task performance and analysis

Abstract

The Record of Driving Errors (RODE) is a novel standardized tool designed to quantitatively document the specific types of driving errors that occur during a standardized performance-based road test. The purpose of this study was to determine interrater reliability between two occupational therapy driver rehabilitation specialists who quantitatively scored specific driving errors using the RODE in a sample of older adults diagnosed with dementia (n = 24). Intraclass correlation coefficients of major driving error and intervention categories indicated almost perfect agreement between raters. When raters have adequate training and similar professional backgrounds, good interrater reliability can be achieved using the RODE on a standardized road test.

Comprehensive driving assessments, usually performed by occupational therapy driver rehabilitation specialists (OT/DRSs), are used to determine whether a person is safe to continue driving when affected by a medical condition such as dementia. Typically, these assessments include a standardized clinical evaluation of vision and cognitive and motor skills and an on-the-road evaluation. The performance-based on-the-road test is the most widely accepted method for determining driving competency, with poor test results being associated with a history of at-fault crashes (Davis, Rockwood, Mitnitski, & Rockwood, 2011; De Raedt & Ponjaert-Kristoffersen, 2001; Fitten et al., 1995; Wood et al., 2009). In spite of being the most widely accepted measure of driving competency, road tests have limitations, which can include potential lack of objective scoring procedures, lack of established interrater reliability, variability in road courses and traffic conditions, and safety concerns (Lee, Cameron, & Lee, 2003; Stutts & Wilkins, 2003).

Road test performance has traditionally been classified using global scoring systems (e.g., safe, marginal, or unsafe). Although such global scoring systems lack reporting on specific driving errors, they have been found to have fairly good interrater reliability on overall driving performance (Brown et al., 2005; Carr, Barco, Wallendorf, Snellgrove, & Ott, 2011; Grace et al., 2005; Ott, Papandonatos, Davis, & Barco, 2012; Stav, Justiss, McCarthy, Mann, & Lanford, 2008).

Driving research is moving toward a closer examination of the types of specific errors that occur while driving. Driving errors have been classified in a variety of ways. Michon’s (1985) model is commonly used as a framework to examine driving errors operationally (operating the vehicle), tactically (maneuvering the vehicle), and strategically (navigating the vehicle). Some researchers have used various modifications of the Michon model to develop error scoring classifications. Other researchers have developed error scoring classifications that are based on state licensing scoring procedures (Dawson, Anderson, Uc, Dastrup, & Rizzo, 2009; Di Stefano & Macdonald, 2003) and used team consensus to distinguish more serious errors from less serious errors (Dawson et al., 2009). Researchers have also developed unique scoring classifications that include the location where the driving error occurred (e.g., controlled intersections), the type of driving behavior error observed (e.g., lack of signaling), and the type of instructor intervention required (verbal or physical; Wood et al., 2009).

Studies have more recently attempted to objectify driving performance by using Likert-based scales to summarize driving characteristics or to quantify the number of errors possible per road test by recording errors at various points on a standardized road test (Akinwuntan et al., 2003; Anstey & Wood, 2011; Dawson et al., 2009; Fitten et al., 1995; Grace et al., 2005; Hunt et al., 1997; Janke & Eberhard, 1998; Odenheimer et al., 1994; Shechtman, Awadzi, Classen, Lanford, & Joo, 2010; Wood et al., 2009). These quantitative approaches may provide better understanding of the specific errors that occur with driving impairment, allowing OT/DRSs to better tailor driving recommendations to prolong or improve driving skills. However, interrater reliability information regarding road tests that addresses specific numbers and types of driving errors is lacking. Most interrater reliability studies do not include quantitative scoring methods, only global scoring methodology.

Studies on interrater reliability of quantitative scoring of driving errors (using a fixed-error or Likert scoring approach) are scarce in the literature. Moreover, the few published studies used varied approaches. One study compared scores from an in-car evaluator with those from a second evaluator reviewing a video of the same road test and had an intraclass correlation coefficient (ICC) of .62 for total errors (Akinwuntan et al., 2003). Another study reported interrater reliability on a quantitative error (fixed-error) scoring system and was able to reach a significant (but not high) correlation of .51 to .54 between two state driving examiners, one in the front passenger seat and one in the back seat (Janke & Eberhard, 1998). The purpose of the current study was to determine interrater reliability between two OT/DRSs who quantitatively scored specific driving errors using the Record of Driving Errors (RODE) in a sample of older adults diagnosed with dementia and referred for fitness-to-drive evaluations.

Method

Research Design

This cross-sectional study was part of a larger study investigating medical fitness to drive funded by the Missouri Department of Transportation (Carr et al., 2011). It was conducted at Washington University School of Medicine in St. Louis, Missouri, through the Driving Connections Clinic at The Rehabilitation Institute of St. Louis (TRISL) and approved by the Human Studies Committee at Washington University and the Health South Research Committee Corporate Office, a managing partner of TRISL.

Participants

Participants were recruited from January to August 2013 through the Memory Diagnostic Center of Washington University School of Medicine. Inclusion criteria were a diagnosis of dementia and a physician’s referral for a driving assessment, status as an active driver with a current driver’s license, an Assessing Dementia–8 (AD8; Galvin, Roe, Xiong, & Morris, 2006) score of 2 or greater by the informant, a Clinical Dementia Rating (CDR; Hughes, Berg, Danziger, Coben, & Martin, 1982) of 0.5 or greater, at least 10 yr of driving experience, an informant available to answer questions and attend portions of the driving assessment, visual acuity acceptable under state driving guidelines, and ability to speak English. Exclusion criteria were any major chronic unstable diseases or conditions (e.g., seizures); severe orthopedic, musculoskeletal, or neuromuscular impairments that would require adaptive equipment to drive; sensory or language impairments that would interfere with testing; use of sedating drugs; or a driving evaluation in the past 12 mo. All participants went through a comprehensive driving assessment that included the clinical assessment and on-road assessment (Carr et al., 2011).

Performance-Based Road Evaluation

The modified Washington University Road Test (mWURT; Carr et al., 2011) replaced the Washington University Road Test (WURT; Hunt et al., 1997) as a result of route changes caused by road construction. However, the mWURT uses common traffic situations from the WURT. The course currently consists of 14 right-hand turns (5 at stop signs, 3 at traffic lights, and 6 unprotected), 11 left-hand turns (6 at traffic lights, 5 unprotected), 33 traffic lights, and 10 stop signs. The road test begins in a closed, quiet parking lot and then progresses to low-traffic conditions (in a park setting) and on to moderate- to high-traffic conditions (e.g., complex intersections, greater traffic demands, traffic lights), as safety permits. The course is 13 miles long and takes approximately 1 hr to complete.

For this study, the road testing occurred in a midsized driving evaluation car with an occupational therapy certified driver rehabilitation specialist (OT/CDRS) positioned in the front passenger seat. The OT/CDRS provided directions and maintained safety of the vehicle. Two OT/DRSs used the RODE to record driving errors made by the participant. The two OT/DRSs sat in the back seat (one in the back middle and the other behind the passenger seat) with a large board between them to prevent them from viewing each other’s scoring tool. Both OT/DRSs who were scoring driving errors were blinded to results of any clinical testing.

Record of Driving Errors

The RODE, developed and implemented in 2007, is an objective approach to quantifying the number and type of driving errors in a standardized performance-based road test. As noted previously, published studies on scoring driving errors are scarce. The RODE incorporates knowledge of common driving errors identified from the literature and from numerous case examples from clinically based driving evaluations. Unlike most road test scoring approaches, the RODE allows for a continuous measure of errors throughout the road test, without a maximum limit. During driving, errors of varying type and frequency can occur at any time between Point A and Point B. To capture the true extent of the errors, it is necessary to measure them not only at designated points along the route but also consistently throughout the whole driving experience. The RODE incorporates categories of driving errors (i.e., driving situation and driving behavior errors) from previous studies (Wood et al., 2009) and operationally defines specific errors within each category.

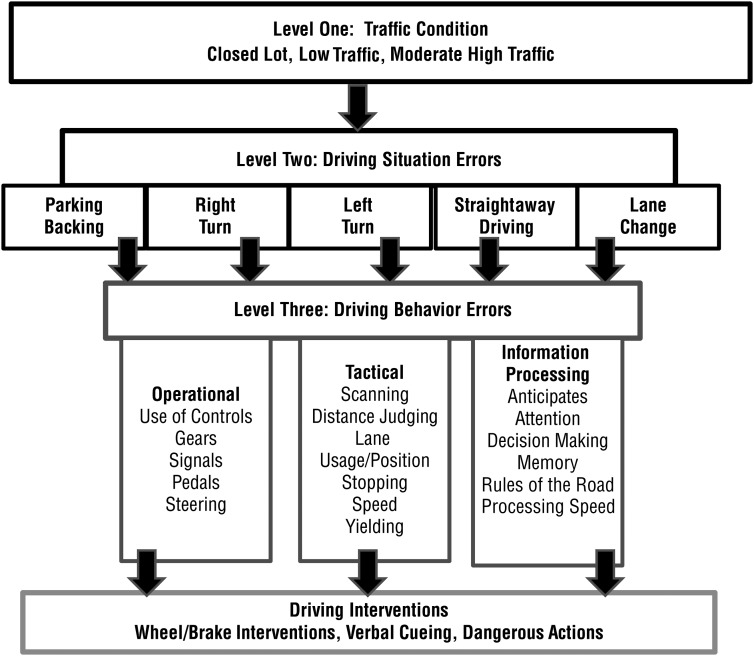

The RODE scoring methodology follows a three-tiered approach. First, all driving errors are recorded according to three levels of traffic condition in which they occur (closed parking lot, low traffic, and moderate to high traffic). Second, within each traffic condition, driving errors are recorded according to the specific driving situation that is involved (parking or backing, left turn, right turn, straightaway driving, or lane change). Third, within each driving situation, errors are further described by the type of driving behavior errors that occur (operational, tactical, or information-processing errors; Figure 1).

Figure 1. Record of Driving Errors scoring.

Building on the Michon model, the RODE defines operational errors as those that involve the direct operation of the car (e.g., use of turn signals and foot pedals), tactical errors as those that require driving tactics or skill in traffic (e.g., maintaining lane position, visual scanning, yielding), and information-processing errors as those related to higher processing skills (e.g., following directions and using memory, making decisions, anticipating maneuvers, knowing traffic signs and rules of the road). Information-processing errors are analogous to but extend beyond the “strategic/planning” driving errors described in other studies (Grace et al., 2005; Withaar, Brouwer, & van Zomeren, 2000). The RODE uniquely acknowledges that multiple driving behavior errors can simultaneously occur and can result in one driving situation error. For example, a driving situation error in making a left turn could be the result of multiple driving behavior errors (such as failure to signal, failure to yield to a vehicle or pedestrian, and turning from an incorrect lane). Finally, the evaluator records any necessary interventions to maintain safety (e.g., verbal cue, wheel intervention, brake intervention) and whether the errors resulted in an occurrence of a dangerous event (e.g., errors that resulted in immediate, imminent danger of a collision or hazard; see Figure 1).
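The three-tiered scoring described above can be pictured as a small data structure. The sketch below is illustrative only and is not the RODE scoring sheet: the class name `SituationError`, the function `tally`, and all identifier spellings are hypothetical, and only the category names are taken from the text. It shows how one driving situation error (a left turn) can carry several driving behavior errors, with counts accumulating without a maximum limit.

```python
from collections import Counter
from dataclasses import dataclass, field

# Category names come from the text; the identifiers are hypothetical.
TRAFFIC = {"closed_lot", "low_traffic", "moderate_high_traffic"}
SITUATIONS = {"parking_backing", "left_turn", "right_turn",
              "straightaway", "lane_change"}
BEHAVIORS = {"operational", "tactical", "information_processing"}
INTERVENTIONS = {"verbal_cue", "wheel", "brake"}


@dataclass
class SituationError:
    """One driving situation error and the behavior errors behind it."""
    traffic: str
    situation: str
    behaviors: list                      # (behavior type, description) pairs
    interventions: list = field(default_factory=list)
    dangerous: bool = False

    def __post_init__(self):
        # Reject labels that are not among the categories named in the text.
        if self.traffic not in TRAFFIC:
            raise ValueError(f"unknown traffic condition: {self.traffic}")
        if self.situation not in SITUATIONS:
            raise ValueError(f"unknown driving situation: {self.situation}")
        for kind, _ in self.behaviors:
            if kind not in BEHAVIORS:
                raise ValueError(f"unknown behavior type: {kind}")
        for item in self.interventions:
            if item not in INTERVENTIONS:
                raise ValueError(f"unknown intervention: {item}")


def tally(errors):
    """Accumulate open-ended counts for each tier of the record."""
    situational, behavior, intervention = Counter(), Counter(), Counter()
    dangerous = 0
    for err in errors:
        situational[(err.traffic, err.situation)] += 1
        behavior.update(kind for kind, _ in err.behaviors)
        intervention.update(err.interventions)
        dangerous += int(err.dangerous)
    return {"situational": situational, "behavior": behavior,
            "intervention": intervention, "dangerous": dangerous}


# The left-turn example from the text: one situation error, three behavior errors.
left_turn = SituationError(
    traffic="moderate_high_traffic",
    situation="left_turn",
    behaviors=[("operational", "failure to signal"),
               ("tactical", "failure to yield"),
               ("tactical", "turning from an incorrect lane")],
    interventions=["verbal_cue"],
)
counts = tally([left_turn])
```

Keeping the situation count separate from the behavior counts is what lets a single left-turn error contribute one situational error and three behavior errors at the same time.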

Training on Scoring

We implemented a rigorous training protocol for scoring driving errors. Training occurred over a 3-mo period, and the primary investigator (Barco, an OT/DRS with 15+ years of experience in driving evaluation) served as the trainer. Training included approximately 30 hr of written and verbal practice activities involving scoring methodology (familiarity with the scoring sheets, tallying of errors, and objective definitions of the errors and driving situations) and 15 hr of practice trials of recording errors during road testing to ensure understanding of the methodology, discuss and resolve differences in scoring, and demonstrate proficiency in the administration of the RODE. All training was completed before data collection.

Statistics

Interrater reliability was assessed using ICCs (Shrout & Fleiss, 1979). ICCs reflect the degree of agreement between raters. Higher ICCs indicate better reliability, with guidelines as follows: ≤0 = poor, .01–.20 = slight, .21–.40 = fair, .41–.60 = moderate, .61–.80 = substantial, and .81–1.00 = almost perfect agreement (Landis & Koch, 1977).
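The text does not say which of the Shrout and Fleiss (1979) forms was used. For orientation only, the single-rater forms from that paper are reproduced below for n participants and k raters (here n = 24 and k = 2). BMS, WMS, JMS, and EMS denote the between-participants, within-participants, between-raters, and residual mean squares from the corresponding analysis of variance.

$$
\begin{aligned}
\mathrm{ICC}(1,1) &= \frac{\mathrm{BMS} - \mathrm{WMS}}{\mathrm{BMS} + (k-1)\,\mathrm{WMS}} \\
\mathrm{ICC}(2,1) &= \frac{\mathrm{BMS} - \mathrm{EMS}}{\mathrm{BMS} + (k-1)\,\mathrm{EMS} + k\,(\mathrm{JMS} - \mathrm{EMS})/n} \\
\mathrm{ICC}(3,1) &= \frac{\mathrm{BMS} - \mathrm{EMS}}{\mathrm{BMS} + (k-1)\,\mathrm{EMS}}
\end{aligned}
$$

Each ratio falls below zero when the within-participants (or residual) mean square exceeds the between-participants mean square, which is why negative ICC estimates are possible.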

Results

Twenty-four participants met the inclusion criteria. Participants had CDR scores that reflected very mild (0.5, n = 20) or mild (1, n = 4) dementia. Their mean AD8 score was 5.7 (standard deviation [SD] = 1.8), and their mean score on the Short Blessed Test (Katzman et al., 1983), a brief test of memory, concentration, and orientation, was 7.0 (SD = 5.5). Seven (29.2%) participants were women, and 3 (12.5%) were of minority race. Participants had a mean age of 69.1 yr (SD = 9.3 yr) and a mean of 15.3 yr of education (SD = 3.5 yr).

We calculated ICCs and associated 95% confidence intervals for situational (Table 1), driving behavior (Table 2), and intervention (Table 3) errors. Using the guidelines of Landis and Koch (1977), we determined that ICCs indicated almost perfect agreement between raters for total situational errors; total situational errors for closed lot, low traffic, and moderate to high traffic (see Table 1); total operational, tactical, and information-processing errors (see Table 2); and all intervention error categories (see Table 3). However, some of the situational and driving behavior subcategories that make up the totals showed lower interrater reliability (see Tables 1 and 2).
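As a quick check on the classification, the Landis and Koch (1977) cutoffs quoted in the Statistics section can be written as a lookup and applied to the main-category ICCs reported in Tables 1 to 3. This is a minimal sketch: the function name `landis_koch` and the dictionary `MAIN_CATEGORY_ICCS` are hypothetical, and the values are copied from the tables.

```python
def landis_koch(icc):
    """Map an ICC to the agreement labels quoted from Landis and Koch (1977)."""
    if icc <= 0:
        return "poor"
    cutoffs = ((0.20, "slight"), (0.40, "fair"),
               (0.60, "moderate"), (0.80, "substantial"))
    for upper, label in cutoffs:
        if icc <= upper:
            return label
    return "almost perfect"


# ICCs for the main categories, copied from Tables 1-3.
MAIN_CATEGORY_ICCS = {
    "total situational errors": 0.97,
    "situational errors, closed lot": 0.84,
    "situational errors, low traffic": 0.90,
    "situational errors, moderate to high traffic": 0.97,
    "total operational errors": 0.91,
    "total tactical errors": 0.95,
    "total information-processing errors": 0.84,
    "verbal cueing": 0.98,
    "wheel interventions": 0.94,
    "brake interventions": 0.96,
    "dangerous actions": 0.93,
}

labels = {name: landis_koch(value) for name, value in MAIN_CATEGORY_ICCS.items()}
for name, label in labels.items():
    print(f"{name}: {label}")
```

The same function places several subcategories lower, for example straightaway driving in low traffic (ICC = .44) in the moderate band and pedal control (ICC = –.02) in the poor band.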

Table 1. Reliability of Situational Errors

| Situational errors | ICC | 95% CI LL | 95% CI UL |
|---|---|---|---|
| Total situational errors | .97 | .93 | .99 |
| Total situational errors, closed lot | .84 | .67 | .93 |
| Total situational errors, low traffic | .90 | .79 | .95 |
| Right turn | .85 | .69 | .93 |
| Straightaway | .44 | .07 | .71 |
| Total situational errors, moderate to high traffic | .97 | .93 | .99 |
| Right turn | .89 | .77 | .95 |
| Left turn | .89 | .77 | .95 |
| Straightaway | .96 | .91 | .98 |
| Lane change | .67 | .39 | .84 |
| Open parking lot | .88 | .75 | .95 |

Note. CI = confidence interval; ICC = intraclass correlation coefficient; LL = lower limit; UL = upper limit.

Table 2. Reliability of Driving Behavior Errors

| Behavior errors | ICC | 95% CI LL | 95% CI UL |
|---|---|---|---|
| Total operational errors | .91 | .81 | .96 |
| Use of controls | .31 | –.03 | .65 |
| Use of gears | .88 | .75 | .95 |
| Turn signal use | .91 | .81 | .96 |
| Pedal control | –.02 | –.40 | .37 |
| Steering control | –.02 | –.40 | .37 |
| Total tactical errors | .95 | .89 | .98 |
| Visual scanning | .72 | .47 | .87 |
| Distance judging | .35 | –.04 | .65 |
| Lane use and position | .97 | .93 | .99 |
| Stopping inappropriately | .50 | .14 | .74 |
| Speed (slow) | .86 | .71 | .94 |
| Speed (fast) | .77 | .55 | .89 |
| Yield to vehicle | .37 | –.02 | .66 |
| Yield to pedestrian | .66 | .37 | .83 |
| Total information-processing errors | .84 | .67 | .93 |
| Failure to anticipate | –.07 | –.44 | .32 |
| Attention | .45 | .08 | .71 |
| Decision making | .64 | .34 | .82 |
| Memory (following directions) | .81 | .62 | .91 |
| Lack of knowledge of rules of the road | .73 | .48 | .87 |
| Speed of processing | .32 | –.07 | .63 |

Note. CI = confidence interval; ICC = intraclass correlation coefficient; LL = lower limit; UL = upper limit.

Table 3. Reliability of Intervention Errors

| Intervention errors | ICC | 95% CI LL | 95% CI UL |
|---|---|---|---|
| Verbal cueing | .98 | .96 | .99 |
| Wheel interventions | .94 | .87 | .97 |
| Brake interventions | .96 | .91 | .98 |
| Dangerous actions | .93 | .85 | .97 |

Note. CI = confidence interval; ICC = intraclass correlation coefficient; LL = lower limit; UL = upper limit.

Discussion

Although quantitative error counts in driving research are becoming more common, interrater reliability information regarding these measures is scarce. For the RODE, we found strong interrater reliability across the important main categories of driving errors that occur during the on-road assessment. The stronger interrater reliability of this tool compared with that of other quantitative error scoring approaches is likely attributable to the intensive training program in error observation using the RODE, the similar professional background of both raters, the standardized road course, and the objective definitions of error types. Moreover, both raters were positioned in the back seat, rather than one scorer in the front seat and one in the back, as in Janke and Eberhard (1998). Seating position in the car has the potential to influence the type of driving errors that are observed and subsequently recorded because different seating positions give different views of the driver and the road.

Although the majority of driving behavior errors showed strong interrater reliability, some specific driving behavior errors had lower interrater reliability. The lower interrater reliability could be the result of a lower frequency of occurrence of the specific errors. The lowest ICCs tended to be found for errors that occurred with low frequency. For example, errors in pedal control (ICC = –.02) and in steering control (ICC = –.02) each occurred only one time for one participant (see Table 2). Moreover, lower reliability for some specific errors could be the result of different OT/DRSs emphasizing different driving behaviors. Ott et al. (2012) found that different driving evaluators weighted various driving behaviors differently, which affected interrater reliability on scoring road test performance.
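The effect of low error frequency on the ICC can be illustrated numerically. The sketch below uses entirely hypothetical ratings, not study data, and assumes the one-way ICC(1,1) form of Shrout and Fleiss (1979), because the form used in the study is not stated. It compares a rare error on which the two raters disagree once with a frequent error on which they also disagree once.

```python
def icc_one_way(ratings):
    """ICC(1,1) from Shrout and Fleiss (1979); ratings is a list of per-participant tuples."""
    n = len(ratings)        # participants
    k = len(ratings[0])     # raters
    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    # Between-participants and within-participants mean squares.
    bms = k * sum((m - grand_mean) ** 2 for m in row_means) / (n - 1)
    wms = sum((x - m) ** 2
              for row, m in zip(ratings, row_means)
              for x in row) / (n * (k - 1))
    return (bms - wms) / (bms + (k - 1) * wms)


N = 24  # same sample size as the study; the counts below are hypothetical

# Rare error: each rater records it once, but for different participants.
rare = [(0, 0)] * N
rare[0] = (1, 0)
rare[1] = (0, 1)
icc_rare = icc_one_way(rare)          # exactly -1/45, about -0.022

# Frequent error: counts of 0-5 per participant, raters differ by one on a single drive.
frequent = [(i % 6, i % 6) for i in range(N)]
frequent[0] = (0, 1)
icc_frequent = icc_one_way(frequent)  # stays close to 1

print(f"rare error ICC: {icc_rare:.3f}")
print(f"frequent error ICC: {icc_frequent:.3f}")
```

Under these assumptions a single disagreement leaves the ICC for the frequent error close to 1 but pushes the ICC for the rare error slightly below zero, because almost all of the variance in the rare error comes from the disagreement itself.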

Limitations

This study had several limitations. Although the road course was standardized, the traffic occurrences on each route were not; when driving in real traffic conditions, it is not possible to standardize the various traffic-related situations that occur at any given time on the performance-based road course. Moreover, the study was limited to one site and a small sample. Replication with larger samples and other standardized routes needs to occur to increase generalizability of these results.

Recommendations for Future Research

Future studies need to address how the types and quantity of errors affect passing or failing a road test in a comprehensive driving assessment. Studies should examine how the types of errors may differ across different medical impairments and whether the RODE can be validated with prospective or retrospective crash history.

Because the RODE uses a continuous measure of errors across a performance-based road test (in contrast to a point system at predesignated locations), it may be more easily transferred to use at other locations. Future studies are necessary to test the reliability and validity of the RODE across various sites and with different performance-based road tests.

It is unknown how the seating position in the car affects evaluator observation of driving errors. Therefore, future research could focus on whether error observations using the RODE differ because of evaluator seating position (back seat vs. front seat). Studies should also compare errors observed directly by an evaluator with errors detected by instrumented vehicles (i.e., vehicles equipped with various electronic mechanisms and sensors to record driving performance for later review using video analysis). Most clinically based occupational therapy road assessments include only one driving evaluator (positioned in the front seat) who is responsible for observing errors, providing directions, and maintaining safety of the vehicle. In such cases, it may not be possible for this person to record errors with the level of detail included in the RODE. Future studies will focus on ways to adapt the RODE to make it more usable in clinically based driving assessments.

Implications for Occupational Therapy Practice

The results of this study have the following implications for occupational therapy practice:

- With adequate training and objective definitions, it is possible for OT/DRSs to reliably identify driving errors on a standardized performance-based road assessment using the RODE.

- Being able to reliably identify the type of driving errors that occur during the road assessment is a beginning step toward identifying the type of occupational therapy–based interventions needed for improving and prolonging driving ability to maintain independence and participation throughout the life span.

- Care should be taken when using any complex driving error scoring tool, such as the RODE, to not distract the OT/DRS (in the front passenger seat) from effectively managing safety of the vehicle during the road assessment.

Acknowledgments

We express appreciation to the Missouri Department of Transportation, Traffic and Highway Safety Division, for grant funding to support this study. We also acknowledge Steven Ice of Independent Drivers, LLC, for his assistance in the implementation of this project. Partial salary support for Dr. Roe’s work on this study was provided by the National Institute on Aging (R01 AG04343401). Dr. Roe’s and Dr. Xiong’s salaries are also supported by grants from the National Institute on Aging (P50 AG005681, P01 AG003991, and P01 AG026276). For more information on the RODE, contact Peggy P. Barco.

Contributor Information

Peggy P. Barco, OTD, BSW, OTR/L, SCDC, is Instructor, Program in Occupational Therapy and Department of Medicine, Washington University School of Medicine, St. Louis, MO; barcop@wusm.wustl.edu

David B. Carr, MD, is Professor of Medicine and Neurology, Washington University School of Medicine, St. Louis, MO.

Kathleen Rutkoski, BS, OTR/L, is Research Assistant, Department of Medicine, Washington University School of Medicine, St. Louis, MO.

Chengjie Xiong, PhD, is Professor of Biostatistics, Knight Alzheimer’s Disease Research Center and Division of Biostatistics, Washington University School of Medicine, St. Louis, MO.

Catherine M. Roe, PhD, is Research Assistant Professor, Knight Alzheimer’s Disease Research Center and Department of Neurology, Washington University School of Medicine, St. Louis, MO.

References

- Akinwuntan A. E., DeWeerdt W., Feys H., Baten G., Arno P., & Kiekens C. (2003). Reliability of a road test after stroke. Archives of Physical Medicine and Rehabilitation, 84, 1792–1796. http://dx.doi.org/10.1016/s0003-9993(03)00767-6

- Anstey K. J., & Wood J. (2011). Chronological age and age-related cognitive deficits are associated with an increase in multiple types of driving errors in late life. Neuropsychology, 25, 613–621. http://dx.doi.org/10.1037/a0023835

- Brown L. B., Stern R. A., Cahn-Weiner D. A., Rogers B., Messer M. A., Lannon M. C., . . . Ott B. R. (2005). Driving scenes test of the Neuropsychological Assessment Battery (NAB) and on-road driving performance in aging and very mild dementia. Archives of Clinical Neuropsychology, 20, 209–215.

- Carr D. B., Barco P. P., Wallendorf M. J., Snellgrove C. A., & Ott B. R. (2011). Predicting road test performance in drivers with dementia. Journal of the American Geriatrics Society, 59, 2112–2117. http://dx.doi.org/10.1111/j.1532-5415.2011.03657.x

- Davis D. H. J., Rockwood M. R. H., Mitnitski A. B., & Rockwood K. (2011). Impairments in mobility and balance in relation to frailty. Archives of Gerontology and Geriatrics, 53, 79–83. http://dx.doi.org/10.1016/j.archger.2010.06.013

- Dawson J. D., Anderson S. W., Uc E. Y., Dastrup E., & Rizzo M. (2009). Predictors of driving safety in early Alzheimer disease. Neurology, 72, 521–527.

- De Raedt R., & Ponjaert-Kristoffersen I. (2001). Predicting at-fault car accidents of older drivers. Accident Analysis and Prevention, 33, 809–819. http://dx.doi.org/10.1016/s0001-4575(00)00095-6

- Di Stefano M., & Macdonald W. (2003). Assessment of older drivers: Relationships among on-road errors, medical conditions and test outcome. Journal of Safety Research, 34, 415–429. http://dx.doi.org/S0022437503000537

- Fitten L. J., Perryman K. M., Wilkinson C. J., Little R. J., Burns M. M., Pachana N., . . . Ganzell S. (1995). Alzheimer and vascular dementias and driving: A prospective road and laboratory study. JAMA, 273, 1360–1365. http://dx.doi.org/10.1001/jama.273.17.1360

- Galvin J. E., Roe C. M., Xiong C., & Morris J. C. (2006). Validity and reliability of the AD8 informant interview in dementia. Neurology, 67, 1942–1948. http://dx.doi.org/10.1212/01.wnl.0000247042.15547.eb

- Grace J., Amick M. M., D’Abreu A., Festa E. K., Heindel W. C., & Ott B. R. (2005). Neuropsychological deficits associated with driving performance in Parkinson’s and Alzheimer’s disease. Journal of the International Neuropsychological Society, 11, 766–775. http://dx.doi.org/10.1017/s135561770505848

- Hughes C. P., Berg L., Danziger W. L., Coben L. A., & Martin R. L. (1982). A new clinical scale for the staging of dementia. British Journal of Psychiatry, 140, 566–572. http://dx.doi.org/10.1192/bjp.140.6.566

- Hunt L. A., Murphy C. F., Carr D., Duchek J. M., Buckles V., & Morris J. C. (1997). Reliability of the Washington University Road Test: A performance-based assessment for drivers with dementia of the Alzheimer type. Archives of Neurology, 54, 707–712.

- Janke M. K., & Eberhard J. W. (1998). Assessing medically impaired older drivers in a licensing agency setting. Accident Analysis and Prevention, 30, 347–361. http://dx.doi.org/10.1016/s0001-4575(97)00112-7

- Katzman R., Brown T., Fuld P., Peck A., Schechter R., & Schimmel H. (1983). Validation of a short Orientation–Memory–Concentration Test of cognitive impairment. American Journal of Psychiatry, 140, 734–739.

- Landis J. R., & Koch G. G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33, 159–174. http://dx.doi.org/10.2307/2529310

- Lee H. C., Cameron D., & Lee A. H. (2003). Assessing the driving performance of older adult drivers: On-road versus simulated driving. Accident Analysis and Prevention, 35, 797–803. http://dx.doi.org/10.1016/S0001-4575(02)00083-0

- Michon J. (1985). A critical review of driver behavior models: What do we know, what should we do? In Evans L. & Schwing R. (Eds.), Human behavior and traffic safety (pp. 495–520). New York: Plenum Press.

- Odenheimer G. L., Beaudet M., Jette A. M., Albert M. S., Grande L., & Minaker K. L. (1994). Performance-based driving evaluation of the elderly driver: Safety, reliability, and validity. Journal of Gerontology, 49, M153–M159.

- Ott B. R., Papandonatos G. D., Davis J. D., & Barco P. P. (2012). Naturalistic validation of an on-road driving test of older drivers. Human Factors, 54, 663–674. http://dx.doi.org/10.1177/0018720811435235

- Shechtman O., Awadzi K. D., Classen S., Lanford D. N., & Joo Y. (2010). Validity and critical driving errors of on-road assessment for older drivers. American Journal of Occupational Therapy, 64, 242–251. http://dx.doi.org/10.5014/ajot.64.2.242

- Shrout P. E., & Fleiss J. L. (1979). Intraclass correlations: Uses in assessing rater reliability. Psychological Bulletin, 86, 420–428. http://dx.doi.org/10.1037//0033-2909.86.2.420

- Stav W. B., Justiss M. D., McCarthy D. P., Mann W. C., & Lanford D. N. (2008). Predictability of clinical assessments for driving performance. Journal of Safety Research, 39, 1–7. http://dx.doi.org/10.1016/j.jsr.2007.10.004

- Stutts J. C., & Wilkins J. W. (2003). On-road driving evaluations: A potential tool for helping older adults drive safely longer. Journal of Safety Research, 34, 431–439.

- Withaar F. K., Brouwer W. H., & van Zomeren A. H. (2000). Fitness to drive in older drivers with cognitive impairment. Journal of the International Neuropsychological Society, 6, 480–490.

- Wood J. M., Anstey K. J., Lacherez P. F., Kerr G. K., Mallon K., & Lord S. R. (2009). The on-road difficulties of older drivers and their relationship with self-reported motor vehicle crashes. Journal of the American Geriatrics Society, 57, 2062–2069. http://dx.doi.org/10.1111/j.1532-5415.2009.02498.x