Abstract

Background

Implementation science has progressed towards increased use of theoretical approaches to provide better understanding and explanation of how and why implementation succeeds or fails. The aim of this article is to propose a taxonomy that distinguishes between different categories of theories, models and frameworks in implementation science, to facilitate appropriate selection and application of relevant approaches in implementation research and practice and to foster cross-disciplinary dialogue among implementation researchers.

Discussion

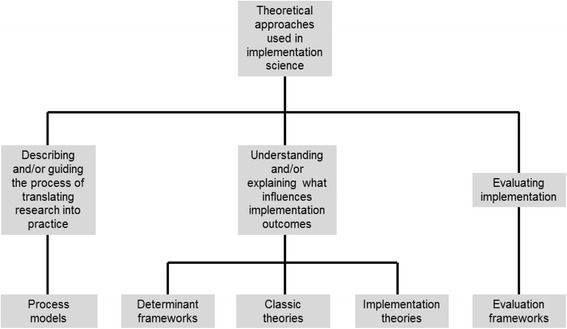

Theoretical approaches used in implementation science have three overarching aims: describing and/or guiding the process of translating research into practice (process models); understanding and/or explaining what influences implementation outcomes (determinant frameworks, classic theories, implementation theories); and evaluating implementation (evaluation frameworks).

Summary

This article proposes five categories of theoretical approaches to achieve three overarching aims. These categories are not always recognized as separate types of approaches in the literature. While there is overlap between some of the theories, models and frameworks, awareness of the differences is important to facilitate the selection of relevant approaches. Most determinant frameworks provide limited “how-to” support for carrying out implementation endeavours since the determinants usually are too generic to provide sufficient detail for guiding an implementation process. And while the relevance of addressing barriers and enablers to translating research into practice is mentioned in many process models, these models do not identify or systematically structure specific determinants associated with implementation success. Furthermore, process models recognize a temporal sequence of implementation endeavours, whereas determinant frameworks do not explicitly take a process perspective of implementation.

Keywords: Theory, Model, Framework, Evaluation, Context

Background

Implementation science was born out of a desire to address challenges associated with the use of research to achieve more evidence-based practice (EBP) in health care and other areas of professional practice. Early implementation research was empirically driven and did not always pay attention to the theoretical underpinnings of implementation. Eccles et al. ([1]:108) remarked that this research seemed like “an expensive version of trial-and-error”. A review of guideline implementation strategies by Davies et al. [2] noted that only 10% of the studies identified provided an explicit rationale for their strategies. Mixed results of implementing EBP in various settings were often attributed to a limited theoretical basis [1,3-5]. Poor theoretical underpinning makes it difficult to understand and explain how and why implementation succeeds or fails, thus limiting opportunities to identify factors that predict the likelihood of implementation success and to develop better strategies to achieve more successful implementation.

However, the last decade of implementation science has seen wider recognition of the need to establish the theoretical bases of implementation and strategies to facilitate implementation. There is mounting interest in the use of theories, models and frameworks to gain insights into the mechanisms by which implementation is more likely to succeed. Implementation studies now apply theories borrowed from disciplines such as psychology, sociology and organizational theory as well as theories, models and frameworks that have emerged from within implementation science. There are now so many theoretical approaches that some researchers have complained about the difficulty of choosing the most appropriate one [6-11].

This article seeks to further implementation science by providing a narrative review of the theories, models and frameworks applied in this research field. The aim is to describe and analyse how theories, models and frameworks have been applied in implementation science and propose a taxonomy that distinguishes between different approaches to advance clarity and achieve a common terminology. The ambition is to facilitate appropriate selection and application of relevant approaches in implementation studies and foster cross-disciplinary dialogue among implementation researchers. The need for a clarifying taxonomy became apparent through the author’s many discussions with fellow implementation researchers over the past few years about the theoretical approaches used within implementation science, as well as through reflection on the utility of different approaches in various situations.

Implementation science is defined as the scientific study of methods to promote the systematic uptake of research findings and other EBPs into routine practice to improve the quality and effectiveness of health services and care [12]. The terms knowledge translation, knowledge exchange, knowledge transfer, knowledge integration and research utilization are used to describe overlapping and interrelated research on putting various forms of knowledge, including research, to use [8,13-16]. Implementation is part of a diffusion-dissemination-implementation continuum: diffusion is the passive, untargeted and unplanned spread of new practices; dissemination is the active spread of new practices to the target audience using planned strategies; and implementation is the process of putting to use or integrating new practices within a setting [16,17].

A narrative review of selected literature was undertaken to identify key theories, models and frameworks used in implementation science. The narrative review approach gathers information about a particular subject from many sources and is considered appropriate for summarizing and synthesizing the literature to draw conclusions about “what we know” about the subject. Narrative reviews yield qualitative results, with strengths in capturing diversities and pluralities of understanding [18,19]. Six textbooks that provide comprehensive overviews of research regarding implementation science and implementation of EBP were consulted: Rycroft-Malone and Bucknall [20], Nutley et al. [21], Greenhalgh et al. [17], Grol et al. [22], Straus et al. [23] and Brownson et al. [24]. A few papers presenting overviews of theories, models and frameworks used in implementation science were also used: Estabrooks et al. [14], Sales et al. [4], Graham and Tetroe [25], Mitchell et al. [8], Flottorp et al. [26], Meyers et al. [27] and Tabak et al. [28]. In addition, Implementation Science (first published in 2006) was searched using the terms “theory”, “model” and “framework” to identify relevant articles. The titles and abstracts of the identified articles were scanned, and those that were relevant to the study aim were read in full.

Discussion

Theories, models and frameworks in the general literature

Generally, a theory may be defined as a set of analytical principles or statements designed to structure our observation, understanding and explanation of the world [29-31]. Authors usually describe a theory as being made up of definitions of variables, a domain where the theory applies, a set of relationships between the variables and specific predictions [32-35]. A “good theory” provides a clear explanation of how and why specific relationships lead to specific events. Theories can be described on an abstraction continuum. High abstraction level theories (general or grand theories) have an almost unlimited scope, middle abstraction level theories explain limited sets of phenomena and low abstraction level theories are empirical generalizations of limited scope and application [30,36].

A model typically involves a deliberate simplification of a phenomenon or a specific aspect of a phenomenon. Models need not be completely accurate representations of reality to have value [31,37]. Models are closely related to theory and the difference between a theory and a model is not always clear. Models can be described as theories with a more narrowly defined scope of explanation; a model is descriptive, whereas a theory is explanatory as well as descriptive [29].

A framework usually denotes a structure, overview, outline, system or plan consisting of various descriptive categories, e.g. concepts, constructs or variables, and the relations between them that are presumed to account for a phenomenon [38]. Frameworks do not provide explanations; they only describe empirical phenomena by fitting them into a set of categories [29].

Theories, models and frameworks in implementation science

It was possible to identify three overarching aims of the use of theories, models and frameworks in implementation science: (1) describing and/or guiding the process of translating research into practice, (2) understanding and/or explaining what influences implementation outcomes and (3) evaluating implementation. Theoretical approaches which aim at understanding and/or explaining influences on implementation outcomes (i.e. the second aim) can be further broken down into determinant frameworks, classic theories and implementation theories based on descriptions of their origins, how they were developed, what knowledge sources they drew on, stated aims and applications in implementation science. Thus, five categories of theoretical approaches used in implementation science can be delineated (Table 1; Figure 1):

- Process models
- Determinant frameworks
- Classic theories
- Implementation theories
- Evaluation frameworks

Table 1.

Five categories of theories, models and frameworks used in implementation science

| Category | Description | Examples |
|---|---|---|
| Process models | Specify steps (stages, phases) in the process of translating research into practice, including the implementation and use of research. The aim of process models is to describe and/or guide the process of translating research into practice. An action model is a type of process model that provides practical guidance in the planning and execution of implementation endeavours and/or implementation strategies to facilitate implementation. Note that the terms “model” and “framework” are both used, but the former appears to be the most common | Model by Huberman [40], model by Landry et al. [41], model by Davis et al. [43], model by Majdzadeh et al. [44], the CIHR Model of Knowledge Translation [42], the K2A Framework [15], the Stetler Model [47], the ACE Star Model of Knowledge Transformation [48], the Knowledge-to-Action Model [13], the Iowa Model [49,50], the Ottawa Model [51,52], model by Grol and Wensing [53], model by Pronovost et al. [54], the Quality Implementation Framework [27] |
| Determinant frameworks | Specify types (also known as classes or domains) of determinants and individual determinants, which act as barriers and enablers (independent variables) that influence implementation outcomes (dependent variables). Some frameworks also specify relationships between some types of determinants. The overarching aim is to understand and/or explain influences on implementation outcomes, e.g. predicting outcomes or interpreting outcomes retrospectively | PARIHS [5,64], Active Implementation Frameworks [63,68], Understanding-User-Context Framework [62], Conceptual Model [17], framework by Grol et al. [22], framework by Cochrane et al. [59], framework by Nutley et al. [21], Ecological Framework by Durlak and DuPre [57], CFIR [60], framework by Gurses et al. [58], framework by Ferlie and Shortell [61], Theoretical Domains Framework [66] |
| Classic theories | Theories that originate from fields external to implementation science, e.g. psychology, sociology and organizational theory, which can be applied to provide understanding and/or explanation of aspects of implementation | Theory of Diffusion [107], social cognitive theories, theories concerning cognitive processes and decision making, social networks theories, social capital theories, communities of practice, professional theories, organizational theories |
| Implementation theories | Theories that have been developed by implementation researchers (from scratch or by adapting existing theories and concepts) to provide understanding and/or explanation of aspects of implementation | Implementation Climate [116], Absorptive Capacity [117], Organizational Readiness [118], COM-B [119], Normalization Process Theory [120] |
| Evaluation frameworks | Specify aspects of implementation that could be evaluated to determine implementation success | RE-AIM [124]; PRECEDE-PROCEED [125]; framework by Proctor et al. [126] |

ACE Academic Center for Evidence-Based Practice, CFIR Consolidated Framework for Implementation Research, CIHR Canadian Institutes of Health Research, COM-B Capacity-Opportunities-Motivation-Behaviour, Conceptual Model Conceptual Model for Considering the Determinants of Diffusion, Dissemination, and Implementation of Innovations in Health Service Delivery and Organization (full title), K2A Knowledge-to-Action, PARIHS Promoting Action on Research Implementation in Health Services, PRECEDE-PROCEED Predisposing, Reinforcing and Enabling Constructs in Educational Diagnosis and Evaluation-Policy, Regulatory, and Organizational Constructs in Educational and Environmental Development, RE-AIM Reach, Effectiveness, Adoption, Implementation, Maintenance.

Figure 1.

Three aims of the use of theoretical approaches in implementation science and the five categories of theories, models and frameworks.

Although theories, models and frameworks are distinct concepts, the terms are sometimes used interchangeably in implementation science [9,14,39]. A theory in this field usually implies some predictive capacity (e.g. to what extent do health care practitioners’ attitudes and beliefs concerning a clinical guideline predict their adherence to this guideline in clinical practice?) and attempts to explain the causal mechanisms of implementation. Models in implementation science are commonly used to describe and/or guide the process of translating research into practice (i.e. “implementation practice”) rather than to predict or analyse what factors influence implementation outcomes (i.e. “implementation research”). Frameworks in implementation science often have a descriptive purpose by pointing to factors believed or found to influence implementation outcomes (e.g. health care practitioners’ adoption of an evidence-based patient intervention). Neither models nor frameworks specify the mechanisms of change; they are typically more like checklists of factors relevant to various aspects of implementation.

Describing and/or guiding the process of translating research into practice

Process models

Process models are used to describe and/or guide the process of translating research into practice. Models by Huberman [40], Landry et al. [41], the CIHR (Canadian Institutes of Health Research) Model of Knowledge Translation [42], Davis et al. [43], Majdzadeh et al. [44] and the K2A (Knowledge-to-Action) Framework [15] outline phases or stages of the research-to-practice process, from discovery and production of research-based knowledge to implementation and use of research in various settings.

Early research-to-practice (or knowledge-to-action) models tended to depict rational, linear processes in which research was simply transferred from producers to users. However, subsequent models have highlighted the importance of facilitation to support the process and placed more emphasis on the contexts in which research is implemented and used. Thus, the attention has shifted from a focus on production, diffusion and dissemination of research to various implementation aspects [21].

So-called action (or planned action) models are process models that facilitate implementation by offering practical guidance in the planning and execution of implementation endeavours and/or implementation strategies. Action models elucidate important aspects that need to be considered in implementation practice and usually prescribe a number of stages or steps that should be followed in the process of translating research into practice. Action models have been described as active by Graham et al. ([45]:185) because they are used “to guide or cause change”. It should be noted that the terminology is not fully consistent, as some of these models are referred to as frameworks, for instance the Knowledge-to-Action Framework [46].

Many of the action models originate from the nursing-led field of research use/utilization; well-known examples include the Stetler Model [47], the ACE (Academic Center for Evidence-Based Practice) Star Model of Knowledge Transformation [48], the Knowledge-to-Action Framework [13], the Iowa Model [49,50] and the Ottawa Model [51,52]. There are also numerous examples of similar “how-to-implement” models that have emerged from other fields, including the models developed by Grol and Wensing [53] and Pronovost et al. [54] and the Quality Implementation Framework [27], all of which are intended to provide support for planning and managing implementation endeavours.

The how-to-implement models typically emphasize the importance of careful, deliberate planning, especially in the early stages of implementation endeavours. In many ways, they present an ideal view of implementation practice as a process that proceeds step-wise, in an orderly, linear fashion. Still, authors behind most models emphasize that the actual process is not necessarily sequential. Many of the action models mentioned here have been subjected to testing or evaluation, and some have been widely applied in empirical research, underscoring their usefulness [9,55].

The process models vary with regard to how they were developed. Models such as the Stetler Model [47,56] and the Iowa Model [49,50] were based on the originators’ own experiences of implementing new practices in various settings (although they were also informed by research and expert opinion). In contrast, models such as the Knowledge-to-Action Framework [45] and the Quality Implementation Framework [27] have relied on literature reviews of theories, models, frameworks and individual studies to identify key features of successful implementation endeavours.

Understanding and explaining what influences implementation outcomes

Determinant frameworks

Determinant frameworks describe general types (also referred to as classes or domains) of determinants that are hypothesized or have been found to influence implementation outcomes, e.g. health care professionals’ behaviour change or adherence to a clinical guideline. Each type of determinant typically comprises a number of individual barriers (hindrances, impediments) and/or enablers (facilitators), which are seen as independent variables that have an impact on implementation outcomes, i.e. the dependent variable. Some frameworks also hypothesize relationships between these determinants (e.g. [17,57,58]), whereas others recognize such relationships without clarifying them (e.g. [59,60]). Information about what influences implementation outcomes is potentially useful for designing and executing implementation strategies that aim to change relevant determinants.

The determinant frameworks do not address how change takes place or any causal mechanisms, underscoring that they should not be considered theories. Many frameworks are multilevel, identifying determinants at different levels, from the individual user or adopter (e.g. health care practitioners) to the organization and beyond. Hence, these integrative frameworks recognize that implementation is a multidimensional phenomenon, with multiple interacting influences.

The determinant frameworks were developed in different ways. Many frameworks (e.g. [17,21,22,57,59,61]) were developed by synthesizing results from empirical studies of barriers and enablers for implementation success. Other frameworks have relied on existing determinant frameworks and relevant theories in various disciplines, e.g. the frameworks by Gurses et al. [58] and CFIR (Consolidated Framework for Implementation Research) [60].

Several frameworks have drawn extensively on the originators’ own experiences of implementing new practices. For instance, the Understanding-User-Context Framework [62] and Active Implementation Frameworks [63] were both based on a combination of literature reviews and the originators’ implementation experiences. Meanwhile, PARIHS (Promoting Action on Research Implementation in Health Services) [5,64] emerged from the observation that successful implementation in health care might be premised on three key determinants (characteristics of the evidence, context and facilitation), a proposition which was then analysed in four empirical case studies; PARIHS has subsequently undergone substantial research and development work [64] and has been widely applied [65].

The Theoretical Domains Framework represents another approach to developing determinant frameworks. It was constructed on the basis of a synthesis of 128 constructs related to behaviour change found in 33 behaviour change theories, including many social cognitive theories [10]. The constructs are sorted into 14 theoretical domains (originally 12 domains), e.g. knowledge, skills, intentions, goals, social influences and beliefs about capabilities [66]. The Theoretical Domains Framework does not specify the causal mechanisms found in the original theories, thus sharing many characteristics with determinant frameworks.

The determinant frameworks account for five types of determinants, as shown in Table 2, which provides details of eight of the most commonly cited frameworks in implementation science. The frameworks are superficially quite disparate, with a broad range of terms, concepts and constructs as well as different outcomes, yet they are quite similar with regard to the general types of determinants they account for. Hence, implementation researchers agree to a large extent on what the main influences on implementation outcomes are, albeit to a lesser extent on which terms are best used to describe these determinants.

Table 2.

Implementation determinants and outcomes in eight determinant frameworks

| Framework | Characteristics of the implementation object | Characteristics of the users/adopters (e.g. health care practitioners) | Characteristics of the end users (e.g. patients) | Characteristics of the context | Characteristics of the strategy or other means of facilitating implementation | Outcomes |
|---|---|---|---|---|---|---|
| PARIHS (Kitson et al. [5]; Rycroft-Malone [64]) | Characteristics of the evidence | Characteristics of the clinical experience (addressed as an aspect of the evidence element) | Characteristics of the patient experience (addressed as an aspect of the evidence element) | Characteristics of the context (comprising culture, leadership and evaluation) | Characteristics of the facilitation, i.e. the process of enabling or making easier the implementation | Successful implementation of research |
| Conceptual Model (Greenhalgh et al. [17]) | Innovation attributes | Aspects of adopters (e.g. psychological antecedents and nature of the adoption decision) and assimilation by organizations | Not addressed | Features of the inner context (organizational antecedents and organizational readiness for innovation) and outer context (e.g. informal interorganizational networks and political directives) | Influences (e.g. opinion leaders, champions and network structure) lying on a continuum from diffusion to dissemination | Successful diffusion, dissemination and implementation of innovations |
| Grol et al. [22] | Features of the innovation | Features of the professionals who should use the innovation | Features of the patients | Features of the social setting (e.g. attitudes of colleagues, culture and leadership) and of the economic, administrative and organizational context | Features of the methods and strategies for dissemination and implementation used | Implementation of new evidence, guidelines and best practices or procedures |
| Nutley et al. [21] | Nature of the research to be applied | Personal characteristics of researchers and potential research users and links between research and its users | Not addressed | Context for the use of research | Strategies to improve the use of research | Use of research |
| Cochrane et al. [59] | Guidelines and evidence barriers | Cognitive and behavioural barriers, attitudinal and rational-emotional barriers, health care professional and physician barriers | Patient barriers | Support and resource barriers, system and process barriers | Not addressed | Adherence to guidelines or implementation of evidence into clinical practice |
| Ecological Framework (Durlak and DuPre [57]) | Characteristics of the innovation | Provider characteristics | Not addressed | Community-level factors (comprising general organizational features, specific organizational practices and processes, and specific staffing considerations) | Features of the prevention support system (comprising training and technical assistance) | Successful implementation of innovations |
| CFIR (Damschroder et al. [60]) | Intervention characteristics | Characteristics of individuals | Patient needs and resources (addressed as an aspect of the outer setting) | Characteristics of the inner setting (e.g. structural characteristics, networks and communications, culture) and outer setting (e.g. cosmopolitanism, external policies and incentives) | Effectiveness of process by which implementation is accomplished (comprising planning, engaging, executing, reflecting and evaluating) | Successful implementation of interventions |
| Gurses et al. [58] | Guideline characteristics | Clinician characteristics | Not addressed | Systems characteristics (e.g. physical environment, organizational characteristics) and implementation characteristics (e.g. tension for change and getting ideas from outside the organization) | Implementation characteristics (e.g. change agents’ characteristics, relative strengths of supporters and opponents) | Adherence to guidelines |

Conceptual Model Conceptual Model for Considering the Determinants of Diffusion, Dissemination, and Implementation of Innovations in Health Service Delivery and Organization (full title, [17]), CFIR Consolidated Framework for Implementation Research, PARIHS Promoting Action on Research Implementation in Health Services.

The frameworks describe “implementation objects” in terms of research, guidelines, interventions, innovations and evidence (i.e. research-based knowledge in a broad sense). Outcomes differ correspondingly, from adherence to guidelines and research use, to successful implementation of interventions, innovations, evidence, etc. (i.e. the application of research-based knowledge in practice). The relevance of the end users (e.g. patients, consumers or community populations) of the implemented object (e.g. an EBP) is not explicitly addressed in some frameworks (e.g. [17,21,67]), suggesting that this is an area where further research is needed for better analysis of how various end users may influence implementation effectiveness.

Determinant frameworks imply a systems approach to implementation because they point to multiple levels of influence and acknowledge that there are relationships within and across the levels and different types of determinants. A system can be understood only as an integrated whole because it is composed not only of the sum of its components but also of the relationships among those components [68]. However, determinants are often assessed individually in implementation studies (e.g. [69-72]), (implicitly) assuming a linear relationship between the determinants and the outcomes and ignoring that individual barriers and enablers may interact in ways that can be difficult to predict. For instance, there could be synergistic effects such that two seemingly minor barriers constitute an important obstacle to successful outcomes if they interact. Another issue is whether all relevant barriers and enablers are examined in these studies, which are often based on survey questionnaires and are thus biased by the researcher’s selection of determinants. Surveying the perceived importance of a finite set of predetermined barriers can yield insights into the relative importance of these particular barriers but may overlook other factors that affect implementation outcomes. Furthermore, there is the issue of whether the barriers and enablers are actual determinants (i.e. whether they have actually been experienced or encountered) or are merely perceived to exist (i.e. they are more hypothetical barriers and enablers). The perceived importance of particular factors may not always correspond with their actual importance.
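The point about interacting determinants can be made concrete with a simple illustrative formalization. This is an assumption introduced for exposition only, not a model specified by any of the determinant frameworks discussed here. Let $Y$ be an implementation outcome, such as adherence to a clinical guideline, and let $B_1, B_2 \in \{0, 1\}$ indicate whether each of two barriers is present. Assessing determinants one at a time implicitly treats their effects as additive, as in the first line; allowing the barriers to interact adds a product term, as in the second:

$$
\begin{aligned}
Y &= \beta_0 + \beta_1 B_1 + \beta_2 B_2 + \varepsilon && \text{(additive)}\\
Y &= \beta_0 + \beta_1 B_1 + \beta_2 B_2 + \beta_3 B_1 B_2 + \varepsilon && \text{(with interaction)}
\end{aligned}
$$

Under the interaction form, the expected change in $Y$ when both barriers are present, relative to when neither is, equals $\beta_1 + \beta_2 + \beta_3$. If $\beta_1$ and $\beta_2$ are close to zero but $\beta_3$ is large and negative, each barrier on its own appears minor, while the two together markedly lower the outcome. This is the synergistic effect described above, and an analysis that examines each barrier separately contains no term that captures $\beta_3$.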

The context is an integral part of all the determinant frameworks. Described as “an important but poorly understood mediator of change and innovation in health care organizations” ([73]:79), the context lacks a unifying definition in implementation science (and related fields such as organizational behaviour and quality improvement). Still, context is generally understood as the conditions or surroundings in which something exists or occurs, typically referring to an analytical unit that is higher than the phenomena directly under investigation. The role afforded the context varies, from studies (e.g. [74-77]) that essentially view the context in terms of a physical “environment or setting in which the proposed change is to be implemented” ([5]:150) to studies (e.g. [21,74,78]) that assume that the context is something more active and dynamic that greatly affects the implementation process and outcomes. Hence, although implementation science researchers agree that the context is a critically important concept for understanding and explaining implementation, there is a lack of consensus regarding how this concept should be interpreted, in what ways the context is manifested and the means by which contextual influences might be captured in research.

The different types of determinants specified in determinant frameworks can be linked to classic theories. Thus, psychological theories that delineate factors influencing individual behaviour change are relevant for analysing how user/adopter characteristics affect implementation outcomes, whereas organizational theories concerning organizational climate, culture and leadership are more applicable for addressing the influence of the context on implementation outcomes.

Classic theories

Implementation researchers are also wont to apply theories from other fields such as psychology, sociology and organizational theory. These theories have been referred to as classic (or classic change) theories to distinguish them from research-to-practice models [45]. They might be considered passive in relation to action models because they describe change mechanisms and explain how change occurs without ambitions to actually bring about change.

Psychological behaviour change theories such as the Theory of Reasoned Action [79], the Social Cognitive Theory [80,81], the Theory of Interpersonal Behaviour [82] and the Theory of Planned Behaviour [83] have all been widely used in implementation science to study determinants of “clinical behaviour” change [84]. Theories such as the Cognitive Continuum Theory [85], the Novice-Expert Theory [86], the Cognitive-Experiential Self-Theory [87] and habit theories (e.g. [88,89]) may also be applicable for analysing cognitive processes involved in clinical decision-making and implementing EBP, but they are not as extensively used as the behaviour change theories.

Theories regarding the collective (such as health care teams) or other aggregate levels are relevant in implementation science, e.g. theories concerning professions and communities of practice, as well as theories concerning the relationships between individuals, e.g. social networks and social capital [14,53,90-93]. However, their use is not as prevalent as that of individual-level theories.

There is increasing interest among implementation researchers in using theories concerning the organizational level because the context of implementation is becoming more widely acknowledged as an important influence on implementation outcomes. Theories concerning organizational culture, organizational climate, leadership and organizational learning are relevant for understanding and explaining organizational influences on implementation processes [21,53,57,94-101]. Several organization-level theories might have relevance for implementation science. For instance, Estabrooks et al. [14] have proposed the use of the Situated Change Theory [102] and the Institutional Theory [103,104], whereas Plsek and Greenhalgh [105] have suggested the use of complexity science [106] for better understanding of organizations. Meanwhile, Grol et al. [22] have highlighted the relevance of economic theories and theories of innovative organizations. However, despite increased interest in organizational theories, their actual use in empirical implementation studies thus far is relatively limited.

The Theory of Diffusion, as popularized through Rogers’ work on the spread of innovations, has also influenced implementation science. The theory’s notion of innovation attributes, i.e. relative advantage, compatibility, complexity, trialability and observability [107], has been widely applied in implementation science, both in individual studies (e.g. [108-110]) and in determinant frameworks (e.g. [17,58,60]) to assess the extent to which the characteristics of the implementation object (e.g. a clinical guideline) affect implementation outcomes. Furthermore, the Theory of Diffusion highlights the importance of intermediary actors (opinion leaders, change agents and gate-keepers) for successful adoption and implementation [107], which is reflected in roles described in numerous implementation determinant frameworks (e.g. [63,64]) and implementation strategy taxonomies (e.g. [111-114]). The Theory of Diffusion is considered the single most influential theory in the broader field of knowledge utilization of which implementation science is a part [115].

Implementation theories

There are also numerous theories that have been developed or adapted by researchers for potential use in implementation science to achieve enhanced understanding and explanation of certain aspects of implementation. Some of these have been developed by modifying certain features of existing theories or concepts, e.g. concerning organizational climate and culture. Examples include theories such as Implementation Climate [116], Absorptive Capacity [117] and Organizational Readiness [118]. The adaptation allows researchers to prioritize the aspects considered most critical for analysing how and why implementation succeeds or fails, thus improving the relevance and appropriateness to the particular circumstances at hand.

COM-B (Capability, Opportunity, Motivation and Behaviour) represents another approach to developing theories that might be applicable in implementation science. This theory began by identifying motivation as a process that energizes and directs behaviour. Capability and opportunity were added as necessary conditions for a volitional behaviour to occur, given sufficient motivation, on the basis of a US consensus meeting of behavioural theorists and a principle of US criminal law (which considers prerequisites for performance of specified volitional behaviours) [119]. COM-B posits that capability, opportunity and motivation generate behaviour, which in turn influences the three components. Opportunity and capability can influence motivation, while enacting a behaviour can alter capability, motivation and opportunity [66].

Another theory used in implementation science, the Normalization Process Theory [120], began life as a model, constructed on the basis of empirical studies of the implementation of new technologies [121]. The model was subsequently expanded upon and developed into a theory as change mechanisms and interrelations between various constructs were delineated [122]. The theory identifies four determinants of embedding (i.e. normalizing) complex interventions in practice (coherence or sense making, cognitive participation or engagement, collective action and reflexive monitoring) and the relationships between these determinants [123].

Evaluating implementation

Evaluation frameworks

There is a category of frameworks that provide a structure for evaluating implementation endeavours. Two common frameworks that originated in public health are RE-AIM (Reach, Effectiveness, Adoption, Implementation, Maintenance) [124] and PRECEDE-PROCEED (Predisposing, Reinforcing and Enabling Constructs in Educational Diagnosis and Evaluation-Policy, Regulatory, and Organizational Constructs in Educational and Environmental Development) [125]. Both frameworks specify implementation aspects that should be evaluated as part of intervention studies.

Proctor et al. [126] have developed a framework of implementation outcomes that can be applied to evaluate implementation endeavours. On the basis of a narrative literature review, they propose eight conceptually distinct outcomes for potential evaluation: acceptability, adoption (also referred to as uptake), appropriateness, costs, feasibility, fidelity, penetration (integration of a practice within a specific setting) and sustainability (also referred to as maintenance or institutionalization).

Although evaluation frameworks may be considered in a category of their own, theories, models and frameworks from the other four categories can also be applied for evaluation purposes because they specify concepts and constructs that may be operationalized and measured. For instance, the Theoretical Domains Framework (e.g. [127,128]), Normalization Process Theory [129] and COM-B (e.g. [130,131]) have all been widely used as evaluation frameworks. Furthermore, many theories, models and frameworks have spawned instruments that serve evaluation purposes, e.g. tools linked to PARIHS [132,133], CFIR [134] and the Theoretical Domains Framework [135]. Other examples include the EBP Implementation Scale to measure the extent to which EBP is implemented [136] and the BARRIERS Scale to identify barriers to research use [137], as well as instruments to operationalize theories such as Implementation Climate [138] and Organizational Readiness [139].

Summary

Implementation science has progressed towards increased use of theoretical approaches to address various implementation challenges. While this article is not intended as a complete catalogue of all individual approaches available in implementation science, it is obvious that the menu of potentially usable theories, models and frameworks is extensive. Researchers in the field have pragmatically looked into other fields and disciplines to find relevant approaches, thus emphasizing the interdisciplinary and multiprofessional nature of the field.

This article proposes a taxonomy of five categories of theories, models and frameworks used in implementation science. These categories are not always recognized as separate types of approaches in the literature. For instance, systematic reviews and overviews by Graham and Tetroe [25], Mitchell et al. [8], Flottorp et al. [26], Meyers et al. [27] and Tabak et al. [28] have not distinguished among process models, determinant frameworks and classic theories because they all deal with factors believed or found to have an impact on implementation processes and outcomes. However, what matters most is not how an individual approach is labelled but the recognition that these theories, models and frameworks differ in terms of their assumptions, aims and other characteristics, which have implications for their use.

There is considerable overlap between some of the categories. Thus, determinant frameworks, classic theories and implementation theories can also help to guide implementation practice (i.e. functioning as action models), because they identify potential barriers and enablers that might be important to address when undertaking an implementation endeavour. They can also be used for evaluation because they describe aspects that might be important to evaluate. A framework such as the Active Implementation Frameworks [68] appears to have a dual aim of providing hands-on support to implement something and identifying determinants of this implementation that should be analysed. Somewhat similarly, PARIHS [5] can be used by “anyone either attempting to get evidence into practice, or anyone who is researching or trying to better understand implementation processes and influences” ([64]:120), suggesting that it has ambitions that go beyond its primary function as a determinant framework.

Despite the overlap between different theories, models and frameworks used in implementation science, knowledge about the three overarching aims and five categories of theoretical approaches is important for identifying and selecting relevant approaches in various situations. Most determinant frameworks provide limited “how-to” support for carrying out implementation endeavours since the determinants may be too generic to provide sufficient detail for guiding users through an implementation process. While the relevance of addressing barriers and enablers to translating research into practice is mentioned in many process models, these models do not identify or systematically structure specific determinants associated with implementation success. Another key difference is that process models recognize a temporal sequence of implementation endeavours, whereas determinant frameworks do not explicitly take a process perspective of implementation since the determinants typically relate to implementation as a whole.

Theories applied in implementation science can be characterized as middle level. Higher level theories can be built from theories at lower abstraction levels, so-called theory ladder climbing [140]. May [141] has discussed how a “general theory of implementation” might be constructed by linking the four constructs of Normalization Process Theory with constructs from relevant sociology and psychology theories to provide a more comprehensive explanation of the constituents of implementation processes. Still, it seems unlikely that there will ever be a grand implementation theory since implementation is too multifaceted and complex a phenomenon to allow for universal explanations. There has been debate in the policy implementation research field for many years about whether researchers should strive to produce a theory applicable to public policy as a whole [38]. However, policy implementation researchers have increasingly argued that it would be a futile undertaking because “the world is too complex and there are too many causes of outcomes to allow for parsimonious explanation” ([37]:31). Determinant frameworks in implementation science clearly suggest that many different theories are relevant for understanding and explaining the many influences on implementation.

The use of a single theory that focuses only on a particular aspect of implementation will not tell the whole story. Choosing one approach often means placing weight on some aspects (e.g. certain causal factors) at the expense of others, thus offering only partial understanding. Combining the merits of multiple theoretical approaches may offer more complete understanding and explanation, yet such combinations may mask contrasting assumptions regarding key issues. For instance, are people driven primarily by their individual beliefs and motivation or does a pervasive organizational culture impose norms and values that regulate how people behave and make individual characteristics relatively unimportant? Is a particular behaviour primarily influenced by reflective thought processes or is it an automatically enacted habit? Furthermore, different approaches may require different methods, based on different epistemological and ontological assumptions.

There is a current wave of optimism in implementation science that using theoretical approaches will contribute to reducing the research-practice gap [4,10,11,63,142]. Although the use of theories, models and frameworks has many advocates in implementation science, there have also been critics [143,144], who have argued that theory is not necessarily better than common sense for guiding implementation. Common sense has been defined as a group’s shared tacit knowledge concerning a phenomenon [145]. One could argue that common sense about how or why something works (or does not) also constitutes a theory, albeit an informal and non-codified one. In either case, empirical research is needed to study how, and to what extent, the use of implementation theories, models and frameworks contributes to more effective implementation, and under what contextual conditions or circumstances they apply (and do not apply). It is also important to explore how the current theoretical approaches can be further developed to better address implementation challenges. Hence, both inductive construction of theory and deductive application of theory are needed.

While the use of theory does not necessarily yield more effective implementation than using common sense, there are certain advantages to applying formal theory over common sense (i.e. informal theory). Theories are explicit and open to question and examination; common sense usually consists of implicit assumptions, beliefs and ways of thinking and is therefore more difficult to challenge. If deductions from a theory are incorrect, the theory can be adapted, extended or abandoned. Theories are more consistent with existing facts than common sense, which typically means that a hypothesis based on an established theory is a more educated guess than one based on common sense. Furthermore, theories give individual facts a meaningful context and contribute towards building an integrated body of knowledge, whereas common sense is more likely to produce isolated facts [145,146]. On the other hand, theory may serve as blinders, as suggested by Kuhn [147] and Greenwald et al. [148], causing us to ignore problems that do not fit into existing theories, models and frameworks or hindering us from seeing known problems in new ways. Theorizing about implementation should therefore not be an abstract academic exercise unconnected with the real world of implementation practice. In the words of Immanuel Kant, “Experience without theory is blind, but theory without experience is mere intellectual play”.

Acknowledgements

I am grateful to Bianca Albers, Susanne Bernhardsson (special thanks), Dean L. Fixsen, Karen Grimmer, Ursula Reichenpfader and Kerstin Roback for constructive comments on drafts of this article. Also, thanks are due to Margit Neher, Justin Presseau and Jeanette Wassar Kirk for their input.

Footnotes

Competing interests

The author declares that he has no competing interests.

References

- 1. Eccles M, Grimshaw J, Walker A, Johnston M, Pitts N. Changing the behavior of healthcare professionals: the use of theory in promoting the uptake of research findings. J Clin Epidemiol. 2005;58:107–12. doi: 10.1016/j.jclinepi.2004.09.002.

- 2. Davies P, Walker A, Grimshaw J. Theories of behavior change in studies of guideline implementation. Proc Br Psychol Soc. 2003;11:120.

- 3. Michie S, Johnston M, Abraham C, Lawton R, Parker D, Walker A. Making psychological theory useful for implementing evidence based practice: a consensus approach. Qual Saf Health Care. 2005;14:26–33. doi: 10.1136/qshc.2004.011155.

- 4. Sales A, Smith J, Curran G, Kochevar L. Models, strategies, and tools: theory in implementing evidence-based findings into health care practice. J Gen Intern Med. 2006;21:S43–9. doi: 10.1111/j.1525-1497.2006.00362.x.

- 5. Kitson AL, Harvey G, McCormack B. Enabling the implementation of evidence-based practice: a conceptual framework. Qual Health Care. 1998;7:149–58. doi: 10.1136/qshc.7.3.149.

- 6. ICEBeRG. Designing theoretically-informed implementation interventions. Implement Sci. 2006;1:4. doi: 10.1186/1748-5908-1-4.

- 7. Godin G, Bélanger-Gravel A, Eccles M, Grimshaw J. Healthcare professionals’ intentions and behaviours: a systematic review of studies based on social cognitive theories. Implement Sci. 2008;3:36. doi: 10.1186/1748-5908-3-36.

- 8. Mitchell SA, Fisher CA, Hastings CE, Silverman LB, Wallen GR. A thematic analysis of theoretical models for translating science in nursing: mapping the field. Nurs Outlook. 2010;58:287–300. doi: 10.1016/j.outlook.2010.07.001.

- 9. Rycroft-Malone J, Bucknall T. Theory, Frameworks, and Models: Laying Down the Groundwork. In: Rycroft-Malone J, Bucknall T, editors. Models and Frameworks for Implementing Evidence-Based Practice: Linking Evidence to Action. Oxford: Wiley-Blackwell; 2010. pp. 23–50.

- 10. Cane J, O’Connor D, Michie S. Validation of the theoretical domains framework for use in behaviour change and implementation research. Implement Sci. 2012;7:37. doi: 10.1186/1748-5908-7-37.

- 11. Martinez RG, Lewis CC, Weiner BJ. Instrumentation issues in implementation science. Implement Sci. 2014;9:118. doi: 10.1186/s13012-014-0118-8.

- 12. Eccles MP, Mittman BS. Welcome to implementation science. Implement Sci. 2006;1:1.

- 13. Graham ID, Logan J, Harrison MB, Straus SE, Tetroe J, Caswell W, et al. Lost in knowledge translation: time for a map? J Contin Educ Health Prof. 2006;26:13–24. doi: 10.1002/chp.47.

- 14. Estabrooks CA, Thompson DS, Lovely JE, Hofmeyer A. A guide to knowledge translation theory. J Contin Educ Health Prof. 2006;26:25–36. doi: 10.1002/chp.48.

- 15. Wilson KM, Brady TJ, Lesesne C, on behalf of the NCCDPHP Work Group on Translation. An organizing framework for translation in public health: the knowledge to action framework. Prev Chronic Dis. 2011;8:A46.

- 16. Rabin BA, Brownson RC. Developing the Terminology for Dissemination and Implementation Research. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and Implementation Research in Health. New York: Oxford University Press; 2012. pp. 23–51.

- 17. Greenhalgh T, Robert G, Bate P, Macfarlane F, Kyriakidou O. Diffusion of Innovations in Service Organisations: A Systematic Literature Review. Malden, MA: Blackwell Publishing; 2005.

- 18. Jones K. Mission drift in qualitative research, or moving toward a systematic review of qualitative studies, moving back to a more systematic narrative review. Qual Rep. 2004;9:95–112.

- 19. Cronin P, Ryan F, Coughlan M. Undertaking a literature review: a step-by-step approach. Br J Nurs. 2008;17:38–43. doi: 10.12968/bjon.2008.17.1.28059.

- 20. Rycroft-Malone J, Bucknall T. Models and Frameworks for Implementing Evidence-Based Practice: Linking Evidence to Action. Oxford: Wiley-Blackwell; 2010.

- 21. Nutley SM, Walter I, Davies HTO. Using evidence: how research can inform public services. Bristol: The Policy Press; 2007.

- 22. Grol R, Wensing M, Eccles M. Improving Patient Care: The Implementation of Change in Clinical Practice. Edinburgh: Elsevier; 2005.

- 23. Straus S, Tetroe J, Graham ID. Knowledge Translation in Health Care. Chichester: John Wiley & Sons; 2009.

- 24. Brownson RC, Colditz GA, Proctor EK. Dissemination and Implementation Research in Health. New York: Oxford University Press; 2012.

- 25. Graham ID, Tetroe J. Some theoretical underpinnings of knowledge translation. Acad Emerg Med. 2007;14:936–41. doi: 10.1197/j.aem.2007.07.004.

- 26. Flottorp SA, Oxman AD, Krause J, Musila NR, Wensing M, Godycki-Cwirko M, et al. A checklist for identifying determinants of practice: a systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice. Implement Sci. 2013;8:35. doi: 10.1186/1748-5908-8-35.

- 27. Meyers DC, Durlak JA, Wandersman A. The Quality Implementation Framework: a synthesis of critical steps in the implementation process. Am J Community Psychol. 2012;50:462–80. doi: 10.1007/s10464-012-9522-x.

- 28. Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. 2012;43:337–50. doi: 10.1016/j.amepre.2012.05.024.

- 29. Frankfort-Nachmias C, Nachmias D. Research Methods in the Social Sciences. London: Arnold; 1996.

- 30. Wacker JG. A definition of theory: research guidelines for different theory-building research methods in operations management. J Oper Manag. 1998;16:361–85.

- 31. Carpiano RM, Daley DM. A guide and glossary on postpositivist theory building for population health. J Epidemiol Community Health. 2006;60:564–70. doi: 10.1136/jech.2004.031534.

- 32. Bunge M. Scientific Research 1: The Search for System. New York: Springer; 1967.

- 33. Reynolds PD. A Primer in Theory Construction. Indianapolis, IN: Bobbs-Merrill Educational Publishing; 1971.

- 34. Dubin R. Theory Building. New York: Free Press; 1978.

- 35. Hunt SD. Modern Marketing Theory: Critical Issues in the Philosophy of Marketing Science. Cincinnati, OH: Southwestern Publishing; 1991.

- 36. Bluedorn AC, Evered RD. Middle Range Theory and the Strategies of Theory Construction. In: Pinder CC, Moore LF, editors. Middle Range Theory and The Study of Organizations. Boston, MA: Martinus Nijhoff; 1980. pp. 19–32.

- 37. Cairney P. Understanding Public Policy: Theories and Issues. Basingstoke, UK: Palgrave Macmillan; 2012.

- 38. Sabatier PA. Theories of the Policy Process. 2nd ed. Boulder, CO: Westview Press; 2007.

- 39. Kitson AL, Rycroft-Malone J, Harvey G, McCormack B, Seers K, Titchen A. Evaluating the successful implementation of evidence into practice using the PARiHS framework: theoretical and practical challenges. Implement Sci. 2008;3:1. doi: 10.1186/1748-5908-3-1.

- 40. Huberman M. Research utilization: the state of the art. Knowl Policy. 1994;7:13–33.

- 41. Landry R, Amara N, Lamari M. Climbing the ladder of research utilization: evidence from social science. Sci Commun. 2001;22:396–422.

- 42. Canadian Institutes of Health Research (CIHR). About knowledge translation. [http://www.cihr-irsc.gc.ca/e/29418.html]. Retrieved 18 December 2014.

- 43. Davis SM, Peterson JC, Helfrich CD, Cunningham-Sabo L. Introduction and conceptual model for utilization of prevention research. Am J Prev Med. 2007;33:1S. doi: 10.1016/j.amepre.2007.04.004.

- 44. Majdzadeh R, Sadighi J, Nejat S, Mahani AS, Ghdlami J. Knowledge translation for research utilization: design of a knowledge translation model at Teheran University of Medical Science. J Contin Educ Health Prof. 2008;28:270–7. doi: 10.1002/chp.193.

- 45. Graham ID, Tetroe J, KT Theories Group. Planned Action Theories. In: Straus S, Tetroe J, Graham ID, editors. Knowledge Translation in Health Care. Oxford: Blackwell Publishing; 2009. pp. 185–95.

- 46. Rycroft-Malone J, Bucknall T. Analysis and Synthesis of Models and Frameworks. In: Rycroft-Malone J, Bucknall T, editors. Models and Frameworks for Implementing Evidence-Based Practice: Linking Evidence to Action. Oxford: Wiley-Blackwell; 2010. pp. 223–45.

- 47. Stetler CB. Stetler model. In: Rycroft-Malone J, Bucknall T, editors. Models and Frameworks for Implementing Evidence-Based Practice: Linking Evidence to Action. Oxford: Wiley-Blackwell; 2010. pp. 51–82.

- 48. Stevens KR. The impact of evidence-based practice in nursing and the next big ideas. Online J Issues Nurs. 2013;18(2):4.

- 49. Titler MG, Kleiber C, Steelman V, Goode C, Rakel B, Barry-Walker J, et al. Infusing research into practice to promote quality care. Nurs Res. 1995;43:307–13.

- 50. Titler MG, Kleiber C, Steelman VJ, Rakel BA, Budreau G, Everett LQ, et al. The Iowa Model of evidence-based practice to promote quality care. Crit Care Nurs Clin North Am. 2001;13:497–509.

- 51. Logan J, Graham I. Toward a comprehensive interdisciplinary model of health care research use. Sci Commun. 1998;20:227–46.

- 52. Logan J, Graham I. The Ottawa Model of Research Use. In: Rycroft-Malone J, Bucknall T, editors. Models and Frameworks for Implementing Evidence-Based Practice: Linking Evidence to Action. Oxford: Wiley-Blackwell; 2010. pp. 83–108.

- 53. Grol R, Wensing M. What drives change? Barriers to and incentives for achieving evidence-based practice. Med J Aust. 2004;180:S57–60. doi: 10.5694/j.1326-5377.2004.tb05948.x.

- 54. Pronovost PJ, Berenholtz SM, Needham DM. Translating evidence into practice: a model for large scale knowledge translation. BMJ. 2008;337:a1714. doi: 10.1136/bmj.a1714.

- 55. Field B, Booth A, Ilott I, Gerrish K. Using the Knowledge to Action Framework in practice: a citation analysis and systematic review. Implement Sci. 2014;9:172. doi: 10.1186/s13012-014-0172-2.

- 56. Stetler C. Refinement of the Stetler/Marram Model for application of research findings to practice. Nurs Outlook. 1994;42:15–25. doi: 10.1016/0029-6554(94)90067-1.

- 57. Durlak JA, DuPre EP. Implementation matters: a review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol. 2008;41:327–50. doi: 10.1007/s10464-008-9165-0.

- 58. Gurses AP, Marsteller JA, Ozok AA, Xiao Y, Owens S, Pronovost PJ. Using an interdisciplinary approach to identify factors that affect clinicians’ compliance with evidence-based guidelines. Crit Care Med. 2010;38(8 Suppl):S282–91. doi: 10.1097/CCM.0b013e3181e69e02.

- 59. Cochrane LJ, Olson CA, Murray S, Dupuis M, Tooman T, Hayes S. Gaps between knowing and doing: understanding and assessing the barriers to optimal health care. J Contin Educ Health Prof. 2007;27:94–102. doi: 10.1002/chp.106.

- 60. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- 61. Ferlie E, Shortell SM. Improving the quality of health care in the United Kingdom and the United States: a framework for change. Milbank Q. 2001;79:281–315. doi: 10.1111/1468-0009.00206.

- 62. Jacobson N, Butterill D, Goering P. Development of a framework for knowledge translation: understanding user context. J Health Serv Res Policy. 2003;8:94–9. doi: 10.1258/135581903321466067.

- 63. Blase KA, Van Dyke M, Fixsen DL, Bailey FW. Implementation Science: Key Concepts, Themes and Evidence for Practitioners in Educational Psychology. In: Kelly B, Perkins DF, editors. Handbook of Implementation Science for Psychology in Education. Cambridge: Cambridge University Press; 2012. pp. 13–34.

- 64. Rycroft-Malone J. Promoting Action on Research Implementation in Health Services (PARIHS). In: Rycroft-Malone J, Bucknall T, editors. Models and Frameworks for Implementing Evidence-Based Practice: Linking Evidence to Action. Oxford: Wiley-Blackwell; 2010. pp. 109–36.

- 65. Helfrich CD, Damschroder LJ, Hagedorn HJ, Daggett GS, Sahay A, Ritchie M, et al. A critical synthesis of literature on the Promoting Action on Research Implementation in Health Services (PARIHS) framework. Implement Sci. 2010;5:82. doi: 10.1186/1748-5908-5-82.

- 66. Michie S, Atkins L, West R. A guide to using the Behaviour Change Wheel. London: Silverback Publishing; 2014.

- 67. Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation Research: A Synthesis of the Literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute; 2005.

- 68. Holmes BJ, Finegood DT, Riley BL, Best A. Systems Thinking in Dissemination and Implementation Research. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and Implementation Research in Health. New York: Oxford University Press; 2012. pp. 192–212.

- 69. Légaré F, Ratté S, Gravel K, Graham ID. Barriers and facilitators to implementing shared decision-making in clinical practice. Update of a systematic review of health professionals’ perceptions. Patient Educ Couns. 2008;73:526–35. doi: 10.1016/j.pec.2008.07.018.

- 70. Johnson M, Jackson R, Guillaume L, Meier P, Goyder E. Barriers and facilitators to implementing screening and brief intervention for alcohol misuse: a systematic review of qualitative evidence. J Public Health. 2011;33:412–21. doi: 10.1093/pubmed/fdq095.

- 71.Verweij LM, Proper KI, Leffelaar ER, Weel ANH, Nauta AP, Hulshof CTJ, et al. Barriers and facilitators to implementation of an occupational health guideline aimed at preventing weight gain among employees in the Netherlands. J Occup Environ Med. 2012;54:954–60. doi: 10.1097/JOM.0b013e3182511c9f. [DOI] [PubMed] [Google Scholar]

- 72.Broyles LM, Rodriguez KL, Kraemer KL, Sevick MA, Price PA, Gordon AJ. A qualitative study of anticipated barriers and facilitators to the implementation of nurse-delivered alcohol screening, brief intervention, and referral to treatment for hospitalized patients in a Veterans Affairs medical centre. Addiction Sci Clin Pract. 2012;7:7. doi: 10.1186/1940-0640-7-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Dopson S, Fitzgerald L. The Active Role of Context. In: Dopson S, Fitzgerald L, editors. Knowledge to Action? Evidence-Based Health Care in Context. Oxford: Oxford University Press; 2005. pp. 79–103. [Google Scholar]

- 74.Ashton CM, Khan MM, Johnson ML, Walder A, Stanberry E, Beyth RJ, et al. A quasi-experimental test of an intervention to increase the use of thiazide-based treatment regimens for people with hypertension. Implement Sci. 2007;2:5. doi: 10.1186/1748-5908-2-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Mohr DC, VanDeusen LC, Meterko M. Predicting healthcare employees’ participation in an office redesign program: attitudes, norms and behavioral control. Implement Sci. 2008;3:47. doi: 10.1186/1748-5908-3-47. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Scott SD, Plotnikoff RC, Karunamuni N, Bize R, Rodgers W. Factors influencing the adoption of an innovation: an examination of the uptake of the Canadian Heart Health Kit (HHK) Implement Sci. 2008;3:41. doi: 10.1186/1748-5908-3-41. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77.Zardo P, Collie A. Predicting research use in a public health policy environment: results of a logistic regression analysis. Implement Sci. 2014;9:142. doi: 10.1186/s13012-014-0142-8.

- 78.Gabbay J, Le May A. Evidence based guidelines or collectively constructed “mindlines”? Ethnographic study of knowledge management in primary care. BMJ. 2004;329:1013. doi: 10.1136/bmj.329.7473.1013.

- 79.Fishbein M, Ajzen I. Belief, Attitude, Intention, and Behavior. Reading, MA: Addison-Wesley; 1975.

- 80.Bandura A. Self-efficacy: towards a unifying theory of behavioural change. Psychol Rev. 1977;84:191–215. doi: 10.1037//0033-295x.84.2.191.

- 81.Bandura A. Social Foundations of Thought and Action: A Social Cognitive Theory. Englewood Cliffs, NJ: Prentice-Hall; 1986.

- 82.Triandis HC. Values, Attitudes, and Interpersonal Behaviour. In: Nebraska Symposium on Motivation 1979: Beliefs, Attitudes, and Values. Lincoln, NE: University of Nebraska Press; 1979. pp. 195–259.

- 83.Ajzen I. Attitudes, Personality and Behavior. Milton Keynes: Open University Press; 1988.

- 84.Nilsen P, Roback K, Broström A, Ellström PE. Creatures of habit: accounting for the role of habit in implementation research on clinical behaviour change. Implement Sci. 2012;7:53. doi: 10.1186/1748-5908-7-53.

- 85.Hammond KR. Principles of Organization in Intuitive and Analytical Cognition. Boulder, CO: Center for Research on Judgment and Policy, University of Colorado; 1981.

- 86.Benner P. From Novice to Expert: Excellence and Power in Clinical Nursing Practice. Menlo Park, CA: Addison-Wesley Publishing; 1984.

- 87.Epstein S. Integration of the cognitive and the psychodynamic unconscious. Am Psychol. 1994;49:709–24. doi: 10.1037//0003-066x.49.8.709.

- 88.Ouellette JA, Wood W. Habit and intention in everyday life: the multiple processes by which past behaviour predicts future behaviour. Psychol Bull. 1998;124:54–74.

- 89.Verplanken B, Aarts H. Habit, attitude, and planned behaviour: is habit an empty construct or an interesting case of goal-directed automaticity? Eur Rev Soc Psychol. 1999;10:101–34.

- 90.Eccles MP, Hrisos S, Francis JJ, Steen N, Bosch M, Johnston M. Can the collective intentions of individual professionals within healthcare teams predict the team’s performance: developing methods and theory. Implement Sci. 2009;4:24. doi: 10.1186/1748-5908-4-24.

- 91.Parchman ML, Scoglio CM, Schumm P. Understanding the implementation of evidence-based care: a structural network approach. Implement Sci. 2011;6:14. doi: 10.1186/1748-5908-6-14.

- 92.Cunningham FC, Ranmuthugala G, Plumb J, Georgiou A, Westbrook JI, Braithwaite J. Health professional networks as a vector for improving healthcare quality and safety: a systematic review. BMJ Qual Saf. 2011. doi: 10.1136/bmjqs-2011-000187.

- 93.Mascia D, Cicchetti A. Physician social capital and the reported adoption of evidence-based medicine: exploring the role of structural holes. Soc Sci Med. 2011;72:798–805. doi: 10.1016/j.socscimed.2010.12.011.

- 94.Wallin L, Ewald U, Wikblad K, Scott-Findlay S, Arnetz BB. Understanding work contextual factors: a short-cut to evidence-based practice? Worldviews Evid Based Nurs. 2006;3:153–64. doi: 10.1111/j.1741-6787.2006.00067.x.

- 95.Meijers JMM, Janssen MAP, Cummings GG, Wallin L, Estabrooks CA, Halfens RYG. Assessing the relationship between contextual factors and research utilization in nursing: systematic literature review. J Adv Nurs. 2006;55:622–35. doi: 10.1111/j.1365-2648.2006.03954.x.

- 96.Wensing M, Wollersheim H, Grol R. Organizational interventions to implement improvements in patient care: a structured review of reviews. Implement Sci. 2006;1:2. doi: 10.1186/1748-5908-1-2.

- 97.Gifford W, Davies B, Edwards N, Griffin P, Lybanon V. Managerial leadership for nurses’ use of research evidence: an integrative review of the literature. Worldviews Evid Based Nurs. 2007;4:126–45. doi: 10.1111/j.1741-6787.2007.00095.x.

- 98.Yano EM. The role of organizational research in implementing evidence-based practice: QUERI series. Implement Sci. 2008;3:29. doi: 10.1186/1748-5908-3-29.

- 99.French B, Thomas LH, Baker P, Burton CR, Pennington L, Roddam H. What can management theories offer evidence-based practice? A comparative analysis of measurement tools for organizational context. Implement Sci. 2009;4:28. doi: 10.1186/1748-5908-4-28.

- 100.Parmelli E, Flodgren G, Beyer F, Baillie N, Schaafsma ME, Eccles MP. The effectiveness of strategies to change organisational culture to improve healthcare performance: a systematic review. Implement Sci. 2011;6:33. doi: 10.1186/1748-5908-6-33.

- 101.Chaudoir SR, Dugan AG, Barr CHI. Measuring factors affecting implementation of health innovations: a systematic review of structural, organizational, provider, patient, and innovation level measures. Implement Sci. 2013;8:22. doi: 10.1186/1748-5908-8-22.

- 102.Orlikowski W. Improvising organizational transformation over time: a situated change perspective. Inform Syst Res. 1996;7:63–92.

- 103.DiMaggio PJ, Powell WW. The New Institutionalism and Organizational Analysis. Chicago, IL: University of Chicago Press; 1991.

- 104.Scott WR. Institutions and Organizations. Thousand Oaks, CA: Sage; 1995.

- 105.Plsek PE, Greenhalgh T. The challenge of complexity in health care. BMJ. 2001;323:625–8. doi: 10.1136/bmj.323.7313.625.

- 106.Waldrop MM. Complexity: The Emerging Science at The Edge of Order and Chaos. London: Viking; 1992.

- 107.Rogers EM. Diffusion of Innovations. 5th ed. New York: Free Press; 2003.

- 108.Aubert BA, Hamel G. Adoption of smart cards in the medical sector: the Canadian experience. Soc Sci Med. 2001;53:879–94. doi: 10.1016/s0277-9536(00)00388-9.

- 109.Vollink T, Meertens R, Midden CJH. Innovating ‘diffusion of innovation’ theory: innovation characteristics and the intention of utility companies to adopt energy conservation interventions. J Environ Psychol. 2002;22:333–44.

- 110.Foy R, MacLennan G, Grimshaw J, Penney G, Campbell M, Grol R. Attributes of clinical recommendations that influence change in practice following audit and feedback. J Clin Epidemiol. 2002;55:717–22. doi: 10.1016/s0895-4356(02)00403-1.

- 111.Oxman AD, Thomson MA, Davis DA, Haynes RB. No magic bullets: a systematic review of 102 trials of interventions to improve professional practice. CMAJ. 1995;153:1423–31.

- 112.Grimshaw J, McAuley LM, Bero LA, Grilli R, Oxman AD, Ramsay C, et al. Systematic reviews of effectiveness of quality improvement strategies and programmes. Qual Saf Health Care. 2003;12:298–303. doi: 10.1136/qhc.12.4.298.

- 113.Walter I, Nutley SM, Davies HTO. Developing a taxonomy of interventions used to increase the impact of research. Discussion Paper 3. St Andrews: Research Unit for Research Utilisation, University of St Andrews; 2003.

- 114.Leeman J, Baernholdt M, Sandelowski M. Developing a theory-based taxonomy of methods for implementing change in practice. J Adv Nurs. 2007;58:191–200. doi: 10.1111/j.1365-2648.2006.04207.x.

- 115.Estabrooks CA, Derksen L, Winther C, Lavis JN, Scott SD, Wallin L, et al. The intellectual structure and substance of the knowledge utilization field: a longitudinal author co-citation analysis, 1945 to 2004. Implement Sci. 2008;3:49. doi: 10.1186/1748-5908-3-49.

- 116.Klein KJ, Sorra JS. The challenge of innovation implementation. Acad Manage Rev. 1996;21:1055–80.

- 117.Zahra SA, George G. Absorptive capacity: a review, reconceptualization and extension. Acad Manage Rev. 2002;27:185–203.

- 118.Weiner BJ. A theory of organizational readiness for change. Implement Sci. 2009;4:67. doi: 10.1186/1748-5908-4-67.

- 119.Michie S, van Stralen MM, West R. The behaviour change wheel: a new method for characterising and designing behaviour change interventions. Implement Sci. 2011;6:42. doi: 10.1186/1748-5908-6-42.

- 120.May C, Finch T. Implementing, embedding and integrating practices: an outline of Normalization Process Theory. Sociology. 2009;43:535.

- 121.May C, Finch T, Mair F, Ballini L, Dowrick C, Eccles M, et al. Understanding the implementation of complex interventions in health care: the normalization process model. BMC Health Serv Res. 2007;7:148. doi: 10.1186/1472-6963-7-148.

- 122.Finch TL, Rapley T, Girling M, Mair FS, Murray E, Treweek S, et al. Improving the normalization of complex interventions: measure development based on normalization process theory (NoMAD): study protocol. Implement Sci. 2013;8:43. doi: 10.1186/1748-5908-8-43.

- 123.Murray E, Treweek S, Pope C, MacFarlane A, Ballini L, Dowrick C, et al. Normalisation process theory: a framework for developing, evaluating and implementing complex interventions. BMC Med. 2010;8:63. doi: 10.1186/1741-7015-8-63.

- 124.Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89:1322–7. doi: 10.2105/ajph.89.9.1322.

- 125.Green LW, Kreuter MW. Health Program Planning: An Educational and Ecological Approach. New York: McGraw-Hill; 2005.

- 126.Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38:65–76. doi: 10.1007/s10488-010-0319-7.

- 127.Phillips CJ, Marshall AP, Chaves NJ, Lin IB, Loy CT, Rees G, et al. Experiences of using Theoretical Domains Framework across diverse clinical environments: a qualitative study. J Multidiscip Healthc. 2015;8:139–46. doi: 10.2147/JMDH.S78458.

- 128.Fleming A, Bradley C, Cullinan S, Byrne S. Antibiotic prescribing in long-term care facilities: a qualitative, multidisciplinary investigation. BMJ Open. 2014;4(11). doi: 10.1136/bmjopen-2014-006442.

- 129.McEvoy R, Ballini L, Maltoni S, O’Donnell CA, Mair FS, MacFarlane A. A qualitative systematic review of studies using the normalization process theory to research implementation processes. Implement Sci. 2014;9:2. doi: 10.1186/1748-5908-9-2.

- 130.Connell LA, McMahon NE, Redfern J, Watkins CL, Eng JJ. Development of a behaviour change intervention to increase upper limb exercise in stroke rehabilitation. Implement Sci. 2015;10:34. doi: 10.1186/s13012-015-0223-3.

- 131.Praveen D, Patel A, Raghu A, Clifford GD, Maulik PK, Abdul AM, et al. Development and field evaluation of a mobile clinical decision support system for cardiovascular diseases in rural India. JMIR mHealth uHealth. 2014;2. doi: 10.2196/mhealth.3568.

- 132.Estabrooks CA, Squires JE, Cummings GG, Birdsell JM, Norton PG. Development and assessment of the Alberta Context Tool. BMC Health Serv Res. 2009;9:234. doi: 10.1186/1472-6963-9-234.

- 133.McCormack B, McCarthy G, Wright J, Slater P, Coffey A. Development and testing of the Context Assessment Index (CAI). Worldviews Evid Based Nurs. 2009;6:27–35. doi: 10.1111/j.1741-6787.2008.00130.x.

- 134.Damschroder LJ, Lowery JC. Evaluation of a large-scale weight management program using the consolidated framework for implementation research (CFIR). Implement Sci. 2013;8:51. doi: 10.1186/1748-5908-8-51.

- 135.Dyson J, Lawton R, Jackson C, Cheater F. Development of a theory-based instrument to identify barriers and levers to best hand hygiene practice among healthcare practitioners. Implement Sci. 2013;8:111. doi: 10.1186/1748-5908-8-111.

- 136.Melnyk BM, Fineout-Overholt E, Mays MZ. The Evidence-Based Practice Beliefs and Implementation Scales: psychometric properties of two new instruments. Worldviews Evid Based Nurs. 2008;5:208–16. doi: 10.1111/j.1741-6787.2008.00126.x.

- 137.Nilsson Kajermo K, Boström A-M, Thompson DS, Hutchinson AM, Estabrooks CA, Wallin L. The BARRIERS scale—the barriers to research utilization scale: a systematic review. Implement Sci. 2010;5:32. doi: 10.1186/1748-5908-5-32.

- 138.Jacobs SR, Weiner BJ, Bunger AC. Context matters: measuring implementation climate among individuals and groups. Implement Sci. 2014;9:46. doi: 10.1186/1748-5908-9-46.