Abstract

Background

Identification of patients at high risk for readmission is a crucial step toward improving care and reducing readmissions. The adoption of electronic health records (EHRs) may prove important to strategies designed to risk stratify patients and introduce targeted interventions.

Objective

To develop and implement an automated prediction model integrated into our health system’s EHR that identifies on admission patients at high risk for readmission within 30 days of discharge.

Design

Retrospective and prospective cohort.

Setting

Healthcare system consisting of three hospitals.

Patients

All adult patients admitted from August 2009 to September 2012.

Interventions

An automated readmission risk flag integrated into the EHR.

Measures

Thirty-day all-cause and 7-day unplanned healthcare system readmissions.

Results

Using retrospective data, a single risk factor, ≥2 inpatient admissions in the past 12 months, was found to have the best balance of sensitivity (40%), positive predictive value (31%), and proportion of patients flagged (18%), with a c-statistic of 0.62. Sensitivity (39%), positive predictive value (30%), proportion of patients flagged (18%) and c-statistic (0.61) during the 12-month period after implementation of the risk flag were similar. There was no evidence for an effect of the intervention on 30-day all-cause and 7-day unplanned readmission rates in the 12-month period after implementation.

Conclusions

An automated prediction model was effectively integrated into an existing EHR and identified patients on admission who were at risk for readmission within 30 days of discharge.

Keywords: Informatics, Quality Improvement, Readmissions

Potential MeSH Terms: Electronic Health Records/statistics & numerical data, Outcome Assessment (Health Care)/statistics & numerical data, Patient Readmission/statistics & numerical data, Predictive Value of Tests, Risk Assessment/statistics & numerical data

BACKGROUND

Unplanned hospital readmissions are common, costly, and potentially avoidable. Approximately 20% of Medicare patients are readmitted within 30 days of discharge.1 Readmission rates are estimated to be similarly high in other population subgroups2–4 with approximately 80% of patients1,5,6 readmitted to the original discharging hospital. A recent systematic review suggested that 27% of readmissions may be preventable.7

Hospital readmissions have increasingly been viewed as a correctable marker of poor quality care and have been adopted by a number of organizations as quality indicators.8–10 As a result, hospitals have important internal and external motivations to address readmissions. Identification of patients at high risk for readmissions may be an important first step toward preventing them. In particular, readmission risk assessment could be used to help providers target the delivery of resource-intensive transitional care interventions11–14 to patients with the greatest needs. Such an approach is appealing because it allows hospitals to focus scarce resources where the impact may be greatest and provides a starting point for organizations struggling to develop robust models of transitional care delivery.

Electronic health records (EHRs) may prove to be an important component of strategies designed to risk stratify patients at the point of care. Algorithms integrated into the EHR that automatically generate risk predictions have the potential to (1) improve provider time efficiency by automating the prediction process, (2) improve consistency of data collection and risk score calculation, (3) increase adoption through improved usability, and (4) provide clinically important information in real-time to all healthcare team members caring for a hospitalized patient.

We thus sought to derive a predictive model for 30-day readmissions using data reliably present in our EHR at the time of admission, and integrate this predictive model into our hospital’s EHR to create an automated prediction tool that identifies on admission patients at high risk for readmission within 30 days of discharge. In addition, we prospectively validated this model using the 12-month period after implementation and examined the impact on readmissions.

METHODS

Setting

The University of Pennsylvania Health System (UPHS) includes three hospitals with a combined capacity of over 1,500 beds and 70,000 annual admissions. All hospitals currently utilize Sunrise Clinical Manager version 5.5 (Allscripts, Chicago, Illinois) as their EHR. The study sample included all adult admissions to any of the three UPHS hospitals during the study period. Admissions to short procedure, rehabilitation and hospice units were excluded. The study received expedited approval and a HIPAA waiver from the University of Pennsylvania Institutional Review Board.

Development of Predictive Model

The UPHS Center for Evidence-based Practice15,16 performed a systematic review to identify factors associated with hospital readmission within 30 days of discharge. We then examined the data available from our hospital EHR at the time of admission for those factors identified in the review. Using different threshold values and look-back periods, we developed and tested 30 candidate prediction models using these variables alone and in combination. Prediction models were evaluated using 24 months of historical data, from 8/1/2009 to 8/1/2011.

Implementation

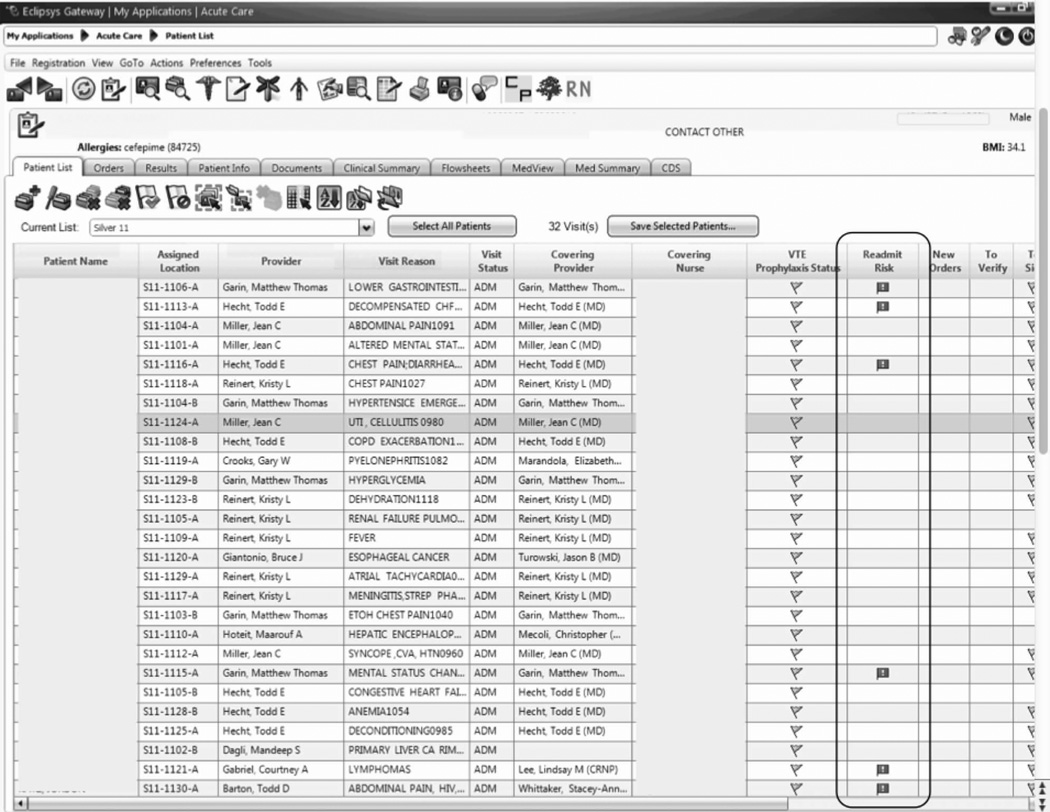

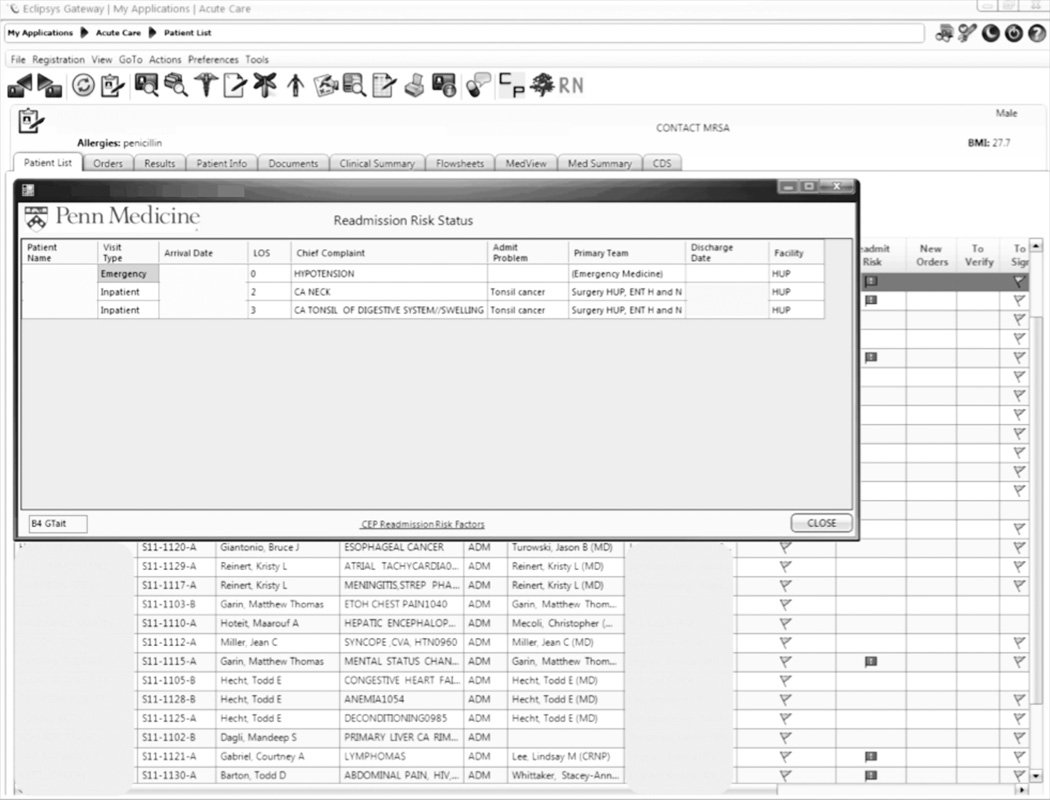

An automated readmission risk flag was then integrated into the EHR. Patients classified as being at high risk for readmission with the automated prediction model were flagged in the EHR on admission (Figure 1A). The flag can be double-clicked to display a separate screen with information relevant to discharge planning including inpatient and ED visits in the prior 12 months, as well as information about the primary team, length of stay, and admitting problem associated with those admissions (Figure 1B). The prediction model was integrated into our EHR using Arden Syntax for Medical Logic Modules.17 The readmission risk screen involved presenting the provider with a new screen and was thus developed in Microsoft .NET using C# and Windows Forms (Microsoft Corp, Redmond, Washington).

Figure 1.

The flag was visible on the patient lists of all providers who utilized the EHR. This included but was not limited to nurses, social workers, unit pharmacists, and physicians. At the time of implementation, educational events regarding the readmission risk flag were provided in forums targeting administrators, pharmacists, social workers, and housestaff. Information about the flag and recommendations for use were distributed through emails and broadcast screensaver messages disseminated throughout the inpatient units of the health system. Providers were asked to pay special attention to discharge planning for patients triggering the readmission risk flag, including medication reconciliation by pharmacists for these patients prior to discharge, and arrangement of available home services by social work.

The risk flag was one of four classes of interventions developed and endorsed by the health system in its efforts to reduce readmissions. Besides risk stratification, the other classes were interdisciplinary rounding, patient education, and discharge communication. No single intervention was expected to decrease readmissions on its own, but as all four classes of interventions were implemented and performed routinely, the expectation was that they would work in concert to reduce readmissions.

Analysis

The primary outcome was all-cause hospital readmissions in the healthcare system within 30 days of discharge. While this outcome is commonly used both in the literature and as a quality metric, significant debate persists as to the appropriateness of this metric.18 Many of the factors driving 30-day readmissions may be dependent on factors outside of the discharging hospital’s control and it has been argued that nearer-term, non-elective readmission rates may provide a more meaningful quality metric.18 Seven-day unplanned readmissions were thus used as a secondary outcome measure for this study.

Sensitivity, specificity, predictive value, c-statistic, F-score (the harmonic mean of positive predictive value and sensitivity)19 and screen-positive rate were calculated for each of the 30 prediction models evaluated using the historical data. The prediction model with the best balance of F-score and screen-positive rate was selected as the prediction model to be integrated into the EHR. Prospective validation of the selected prediction model was performed using the 12-month period following implementation of the risk flag (9/2011–9/2012).
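The test characteristics listed above can all be derived from a 2×2 table of flag status against readmission status. The following is a minimal sketch, not the code used in the study (analyses were run in Stata). The class and function names and the example counts are hypothetical. The c-statistic line assumes a single binary rule, for which the area under the ROC curve reduces to the mean of sensitivity and specificity; the source does not state how the c-statistic was computed.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Counts for one binary rule (flagged or not) against the outcome (readmitted or not)."""
    tp: int  # flagged and readmitted
    fp: int  # flagged, not readmitted
    fn: int  # not flagged, readmitted
    tn: int  # not flagged, not readmitted


def rule_metrics(c: Confusion) -> dict:
    """Return the test characteristics named in the text for one prediction rule."""
    total = c.tp + c.fp + c.fn + c.tn
    sensitivity = c.tp / (c.tp + c.fn)
    specificity = c.tn / (c.tn + c.fp)
    ppv = c.tp / (c.tp + c.fp)
    npv = c.tn / (c.tn + c.fn)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": ppv,
        "npv": npv,
        # Proportion of all patients who trigger the flag.
        "screen_positive": (c.tp + c.fp) / total,
        # Harmonic mean of positive predictive value and sensitivity.
        "f_score": 2 * ppv * sensitivity / (ppv + sensitivity),
        # Assumption: AUC of a single binary rule = (sensitivity + specificity) / 2.
        "c_statistic": (sensitivity + specificity) / 2,
    }


# Hypothetical counts for illustration only (not the study data).
example = rule_metrics(Confusion(tp=40, fp=90, fn=60, tn=810))
```

Reporting the screen-positive rate alongside the F-score reflects the trade-off described above: a rule can score well on the F-score yet flag more patients than a care team could realistically act on.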

To assess the impact of the automated prediction model on monthly readmission rate, we used the 24-month period immediately before and the 12-month period immediately after implementation of the readmission risk flag. Segmented regression analysis was performed testing for changes in level and slope of readmission rates between pre-implementation and post-implementation time periods. This quasi-experimental interrupted time series methodology20 allows us to control for secular trends in readmission rates and to assess the pre-implementation trend (secular trend), the difference in rates immediately before and after the implementation (immediate effect), and the post-implementation change over time (sustained effect). We used Cochrane-Orcutt estimation21 to correct for serial autocorrelation.
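The segmented regression described above can be sketched as an ordinary least squares fit with four terms: a baseline level, a pre-implementation slope, a level change at implementation, and a change in slope afterward. This is one common parameterization and is an assumption here, as are the function names and the synthetic series. The sketch deliberately omits the Cochrane-Orcutt correction for autocorrelation that was applied in the actual analysis.

```python
import numpy as np


def segmented_design(n_pre: int, n_post: int) -> np.ndarray:
    """Design matrix for an interrupted time series with one interruption.

    Columns: intercept, month index, post-implementation indicator,
    and months elapsed since implementation (0 before it).
    """
    n = n_pre + n_post
    time = np.arange(1, n + 1, dtype=float)
    post = (time > n_pre).astype(float)
    time_after = np.where(post == 1.0, time - n_pre, 0.0)
    return np.column_stack([np.ones(n), time, post, time_after])


def fit_segmented(rates: np.ndarray, n_pre: int) -> dict:
    """Ordinary least squares fit; no autocorrelation correction is applied."""
    design = segmented_design(n_pre, len(rates) - n_pre)
    beta, *_ = np.linalg.lstsq(design, rates, rcond=None)
    names = ["baseline", "pre_slope", "level_change", "slope_change"]
    return dict(zip(names, beta))


# Synthetic, noise-free monthly rates (hypothetical, not the study data):
# 24 months before and 12 months after implementation.
X = segmented_design(24, 12)
true_beta = np.array([14.0, 0.03, 0.3, -0.02])
synthetic_rates = X @ true_beta
fit = fit_segmented(synthetic_rates, n_pre=24)
```

In this parameterization, `pre_slope` corresponds to the secular trend, `level_change` to the immediate effect, and `pre_slope + slope_change` to the post-implementation monthly change.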

All analyses were performed using Stata 12.1 software (Stata Corp, College Station, Texas).

RESULTS

Predictors of Readmission

Our systematic review of the literature identified several patient and healthcare utilization patterns predictive of 30-day readmission risk. Utilization factors included length of stay, number of prior admissions, previous 30-day readmissions and previous emergency department visits. Patient characteristics included number of comorbidities, living alone, and payor. Evidence was inconsistent regarding threshold values for these variables.

Many variables readily available in our EHR were either found by the systematic review not to be reliably predictive of 30-day readmission (including age and gender) or were not readily or reliably available on admission (including length of stay and payor). At the time of implementation, our EHR did not include vital sign or nursing assessment variables so these were not considered for inclusion in our model.

Of the available variables, three were consistently accurate and available in the EHR at the time of patient admission: prior hospital admission, emergency department visit, and 30-day readmission within UPHS. We then developed 30 candidate prediction models using a combination of these variables, including ≥1 and ≥2 prior admissions, ED visits, and 30-day readmissions in the 6 and 12 months preceding the index visit.
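Each candidate rule of this kind amounts to counting prior events in a look-back window and comparing the count with a threshold. The sketch below illustrates that logic under stated assumptions: the function names are hypothetical, 12 months is approximated as 365 days, events are dated by a single day, and the index admission itself is not counted. The production rule was written in Arden Syntax, not Python.

```python
from datetime import date, timedelta


def count_prior(events: list[date], index_date: date, lookback_days: int) -> int:
    """Count events that fall in the look-back window before the index admission."""
    window_start = index_date - timedelta(days=lookback_days)
    # The index admission date itself is excluded from the window.
    return sum(1 for d in events if window_start <= d < index_date)


def high_risk_flag(prior_admissions: list[date], index_date: date,
                   threshold: int = 2, lookback_days: int = 365) -> bool:
    """Flag a patient when prior admissions in the window reach the threshold."""
    return count_prior(prior_admissions, index_date, lookback_days) >= threshold


# Hypothetical admission history for one patient.
history = [date(2009, 12, 25), date(2011, 1, 10), date(2011, 6, 2)]
index_admission = date(2011, 9, 15)

flag_12_months = high_risk_flag(history, index_admission)
flag_6_months = high_risk_flag(history, index_admission, lookback_days=180)
```

Varying `threshold` and `lookback_days`, and applying the same count to ED visits or prior 30-day readmissions, generates a family of single-variable rules; combined rules would require both conditions to hold.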

Development and Validation

We used 24 months of retrospective data, which included 120,396 discharges with 17,337 thirty-day readmissions (14.4% thirty-day all-cause readmission rate) to test the candidate prediction models. A single risk factor, ≥2 inpatient admissions in the past 12 months, was found to have the best balance of sensitivity (40%), positive predictive value (31%), and proportion of patients flagged (18%) (Table 1).

Table 1.

Retrospective and prospective evaluation of prediction models for 30-day all-cause readmissions

| | Sensitivity | Specificity | C-Statistic | PPV | NPV | Screen Positive | F-Score |
|---|---|---|---|---|---|---|---|
| Retrospective Evaluation of Prediction Rules | | | | | | | |
| Lookback period: 6 months | | | | | | | |
| Prior Admissions | | | | | | | |
| ≥1 | 53% | 74% | 0.640 | 26% | 91% | 30% | 0.350 |
| ≥2 | 32% | 90% | 0.610 | 35% | 89% | 13% | 0.333 |
| ≥3 | 20% | 96% | 0.578 | 44% | 88% | 7% | 0.274 |
| Prior ED Visits | | | | | | | |
| ≥1 | 31% | 81% | 0.558 | 21% | 87% | 21% | 0.252 |
| ≥2 | 13% | 93% | 0.532 | 25% | 87% | 8% | 0.172 |
| ≥3 | 7% | 97% | 0.519 | 27% | 86% | 4% | 0.111 |
| Prior 30-day Readmissions | | | | | | | |
| ≥1 | 39% | 85% | 0.623 | 31% | 89% | 18% | 0.347 |
| ≥2 | 21% | 95% | 0.582 | 43% | 88% | 7% | 0.284 |
| ≥3 | 13% | 98% | 0.555 | 53% | 87% | 4% | 0.208 |
| Combined Rules | | | | | | | |
| Admit≥1 & ED≥1 | 22% | 92% | 0.568 | 31% | 88% | 10% | 0.255 |
| Admit≥2 & ED≥1 | 15% | 96% | 0.556 | 40% | 87% | 5% | 0.217 |
| Admit≥1 & 30-day≥1 | 39% | 85% | 0.623 | 31% | 89% | 18% | 0.346 |
| Admit≥2 & 30-day≥1 | 29% | 92% | 0.603 | 37% | 89% | 11% | 0.324 |
| 30-day≥1 & ED≥1 | 17% | 95% | 0.559 | 37% | 87% | 6% | 0.229 |
| 30-day≥1 & ED≥2 | 8% | 98% | 0.527 | 40% | 86% | 3% | 0.132 |
| Lookback period: 12 months | | | | | | | |
| Prior Admission | | | | | | | |
| ≥1 | 60% | 68% | 0.593 | 24% | 91% | 36% | 0.340 |
| ≥2* | 40% | 85% | 0.624 | 31% | 89% | 18% | 0.361 |
| ≥3 | 28% | 92% | 0.600 | 37% | 88% | 11% | 0.318 |
| Prior ED Visit | | | | | | | |
| ≥1 | 38% | 74% | 0.560 | 20% | 88% | 28% | 0.260 |
| ≥2 | 20% | 88% | 0.544 | 23% | 87% | 13% | 0.215 |
| ≥3 | 8% | 96% | 0.523 | 27% | 86% | 4% | 0.126 |
| Prior 30-day Readmission | | | | | | | |
| ≥1 | 43% | 84% | 0.630 | 30% | 90% | 20% | 0.353 |
| ≥2 | 24% | 94% | 0.592 | 41% | 88% | 9% | 0.305 |
| ≥3 | 11% | 98% | 0.548 | 54% | 87% | 3% | 0.186 |
| Combined Rules | | | | | | | |
| Admit≥1 & ED≥1 | 29% | 87% | 0.580 | 27% | 88% | 15% | 0.281 |
| Admit≥2 & ED≥1 | 22% | 93% | 0.574 | 34% | 88% | 9% | 0.266 |
| Admit≥1 & 30-day≥1 | 42% | 84% | 0.630 | 30% | 90% | 14% | 0.353 |
| Admit≥2 & 30-day≥1 | 34% | 89% | 0.615 | 34% | 89% | 14% | 0.341 |
| 30-day≥1 & ED≥1 | 21% | 93% | 0.569 | 35% | 88% | 9% | 0.261 |
| 30-day≥1 & ED≥2 | 13% | 96% | 0.545 | 37% | 87% | 5% | 0.187 |
| Prospective Evaluation of Prediction Rule | | | | | | | |
| 30-Day All-Cause | 39% | 84% | 0.614 | 30% | 89% | 18% | 0.326 |

Legend: * = optimum prediction model; Admit = inpatient hospital admission; ED = emergency department visit; 30-day = prior 30-day readmission

Prospective validation of the prediction model was performed using the 12-month period directly following readmission risk flag implementation. During this period, the 30-day all-cause readmission rate was 15.1%. Sensitivity (39%), positive predictive value (30%), and proportion of patients flagged (18%) were consistent with the values derived from the retrospective data, supporting the reproducibility and predictive stability of the chosen risk prediction model (Table 1). The c-statistic of the model was also consistent between the retrospective and prospective datasets (0.62 and 0.61 respectively).

Readmission Rates

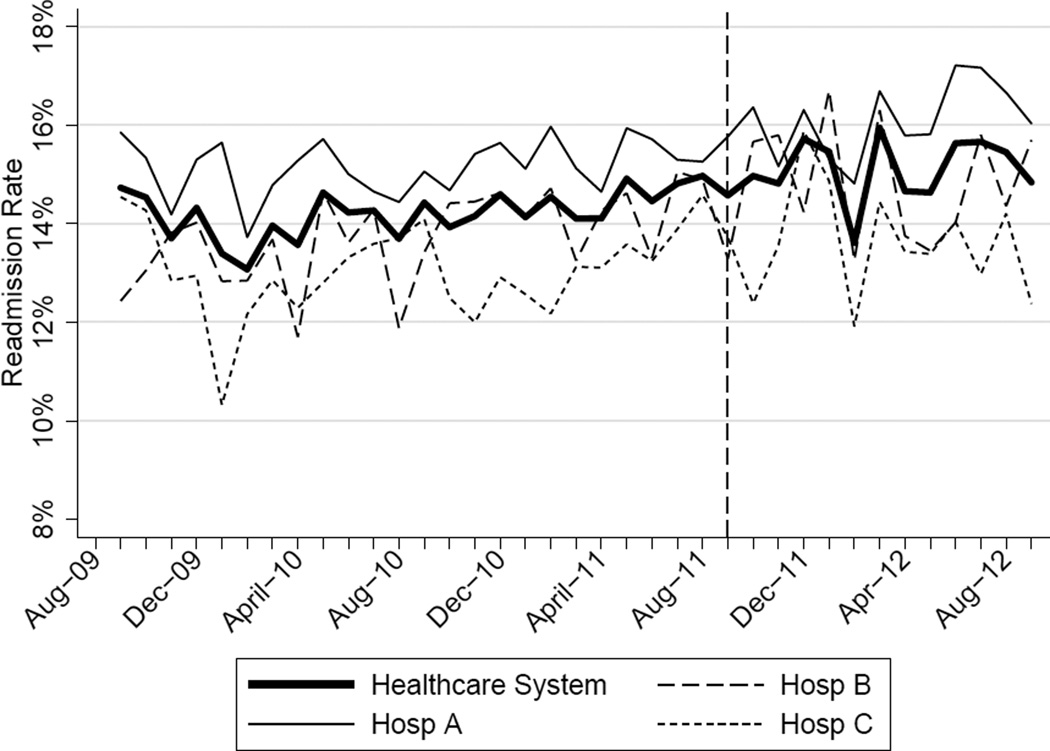

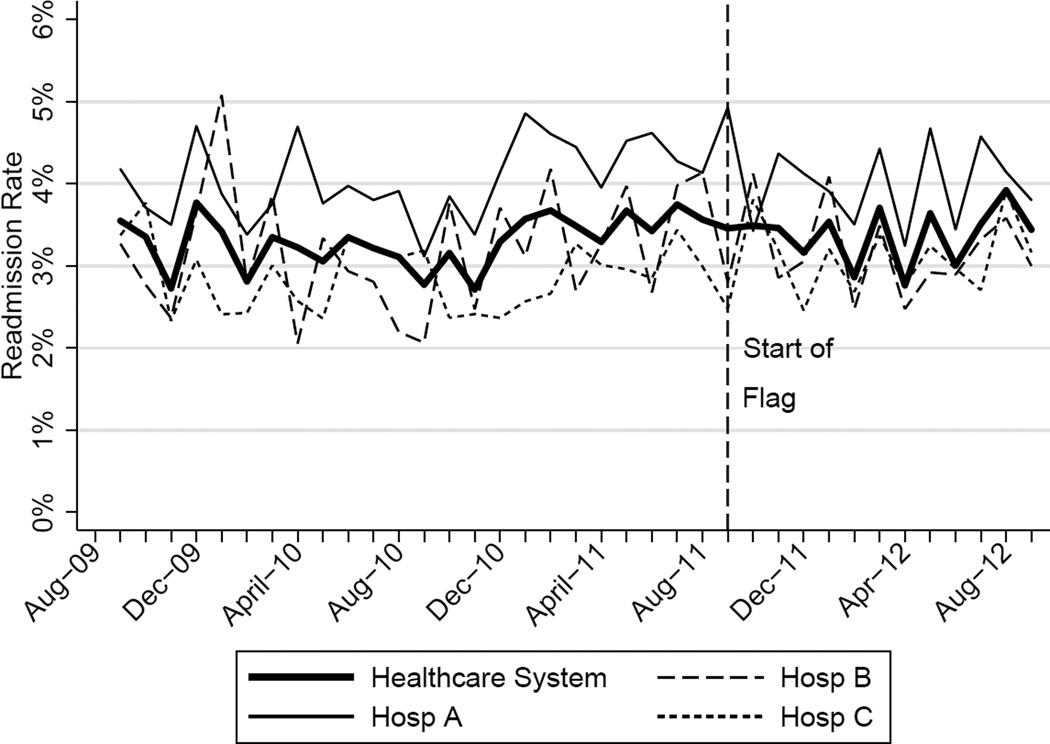

The mean 30-day all-cause readmission rate for the 24-month period prior to the intervention was 14.4%, while the mean for the 12-month period after the implementation was 15.1%. Thirty-day all-cause and 7-day unplanned monthly readmission rates do not appear to have been impacted by the intervention (Figure 2). There was no evidence for either an immediate or sustained effect (Table 2).

Figure 2.

Table 2.

Interrupted Time Series of Readmission Rates

| Hospital | Pre-implementation: monthly % change in readmission rates | Pre-implementation: trend | Pre-implementation: P value | Immediate effect: % change | Immediate effect: P value | Post-implementation: monthly % change in readmission rates | Post-implementation: trend | Post-implementation: P value | P value, change in trend* |
|---|---|---|---|---|---|---|---|---|---|
| 30-Day All-Cause Readmission Rates | | | | | | | | | |
| Hosp A | 0.023 | Stable | 0.153 | 0.480 | 0.991 | 0.100 | Increasing | 0.134 | 0.044 |
| Hosp B | 0.061 | Increasing | 0.002 | 0.492 | 0.125 | −0.060 | Stable | 0.048 | 0.296 |
| Hosp C | 0.026 | Stable | 0.413 | 0.447 | 0.585 | −0.046 | Stable | 0.476 | 0.629 |
| Health System | 0.032 | Increasing | 0.014 | 0.344 | 0.302 | 0.026 | Stable | 0.881 | 0.499 |
| 7-Day Unplanned Readmission Rates | | | | | | | | | |
| Hosp A | 0.004 | Stable | 0.642 | −0.271 | 0.417 | 0.005 | Stable | 0.967 | 0.891 |
| Hosp B | −0.012 | Stable | 0.201 | 0.298 | 0.489 | −0.038 | Stable | 0.602 | 0.429 |
| Hosp C | −0.008 | Stable | 0.213 | 0.353 | 0.204 | −0.004 | Stable | 0.899 | 0.895 |
| Health System | −0.005 | Stable | 0.358 | −0.003 | 0.990 | 0.010 | Stable | 0.583 | 0.712 |

*P value compares the pre- and post-implementation trends in readmission rates.

Regression coefficients represent the absolute change in the monthly readmission rate (percentage) per unit time (month). Models were adjusted for autocorrelation using the Cochrane-Orcutt estimator.

DISCUSSION

In this proof-of-concept study, we demonstrated the feasibility of an automated readmission risk prediction model integrated into a health system’s EHR for a mixed population of hospitalized medical and surgical patients. To our knowledge, this is the first study examining the impact of providing readmission risk assessment for a general population of hospitalized patients on readmission rates. We used a simple prediction model potentially generalizable to EHRs and healthcare populations beyond our own.

Existing risk prediction models for hospital readmission have important limitations and are difficult to implement in clinical practice.22 Prediction models for hospital readmission are often dependent on retrospective claims data, developed for specific patient populations, and not designed for use early in the course of hospitalization when transitional care interventions can be initiated.22 In addition, the time required to gather the necessary data and calculate the risk score remains a barrier to the adoption of prediction models in practice. By automating the process of readmission risk prediction, we were able to help integrate risk assessment into the health care process across many providers in a large multi-hospital healthcare organization. This has allowed us to consistently share risk assessment in real-time with all members of the inpatient team, facilitating a team-based approach to discharge planning.23

Two prior studies have developed readmission risk prediction models designed to be implemented into the EHR. Amarasingham et al24 developed and implemented25 a heart failure-specific prediction model based on the 18-item Tabak mortality score.26 Bradley et al27 studied in a broader population of medicine and surgery patients the predictive ability of a 26-item score that utilized vital sign, cardiac rhythm, and nursing assessment data. While EHRs are developing rapidly, currently the majority of EHRs do not support the use of many of the variables used in these models. In addition, both models were complex, raising concerns about generalizability to other healthcare settings and populations.

A distinctive characteristic of our model is its simplicity. We were cognizant of the realities of running a prediction model in a high-volume production environment and the diminishing returns of adding more variables. We thus favored simplicity at all stages of model development, with the associated belief that complexity could be added with future iterations once feasibility had been established. Finally, we were aware that we were constructing a medical decision support tool rather than a simple classifier.26 As such, the optimal model was not purely driven by discriminative ability, but also by our subjective assessment of the optimal trade-off between sensitivity and specificity (the ‘test-treatment’ threshold) for such a model.26 To facilitate model assessment, we thus categorized the potential predictor variables and evaluated the test characteristics of each combination of categorized variables. While the c-statistic of a model using continuous variables will be higher than one using categorical values, model performance at the chosen trade-off point is unlikely to be different.

Although the overall predictive ability of our model was fair, we found that it was associated with clinically meaningful differences in readmission rates between those triggering and not triggering the flag. The 30-day all-cause readmission rate in the 12-month prospective sample was 15.1%, yet among those flagged as being at high risk for readmission the readmission rate was 30.4%. Given resource constraints and the need to selectively apply potentially costly care transition interventions, this may in practice translate into a meaningful discriminative ability.

Readmission rates did not change significantly during the study period. A number of plausible reasons for this exist, including: (1) the current model may not exhibit sufficient predictive ability to classify those at high risk or impact the behavior of providers appropriately, (2) those patients classified as high risk of readmission may not be at high risk of readmissions that are “preventable”, (3) information provided by the model may not yet routinely be used such that it can affect care, or (4) providing readmission risk assessment alone is not sufficient to influence readmission rates, and the other interventions or organizational changes necessary to impact care of those defined as high risk have not yet been implemented or are not yet being performed routinely. If the primary reasons for our results are those outlined in numbers (3) or (4), then readmission rates should improve over time as the risk flag becomes more routinely used, and those interventions necessary to impact readmission rates of those defined as high risk are implemented and performed.

Limitations

There are several limitations of this intervention. First, the prediction model was developed using 30-day all-cause readmissions, rather than attempting to identify potentially preventable readmissions. Thirty-day readmission rates may not be a good proxy for preventable readmissions18 and, as a consequence, the ability to predict 30-day readmissions may not ensure that a prediction model is able to predict preventable readmissions. Nonetheless, 30-day readmission rates remain the most commonly used quality metric.

Second, the impact of the risk flag on provider behavior is uncertain. We did not formally assess how the readmission risk flag was used by healthcare team members. Informal assessment has, however, revealed that the readmission risk flag is gradually being adopted by different members of the care team including unit-based pharmacists who are using the flag to prioritize the delivery of medication education, social workers who are using the flag to prompt providers to consider higher level services for patients at high risk of readmission, and patient navigators, who are using the flag to prioritize follow-up phone calls. As a result, we hope that the flag will ultimately improve the processes of care for high-risk patients.

Third, we did not capture readmissions to hospitals outside of our healthcare system and have therefore underestimated the readmission rate in our population. However, our assessment of the effect of the risk flag on readmissions focused on relative readmission rates over time, and the use of the interrupted time series methodology should protect against secular changes in outside hospital readmission rates that were not associated with the intervention.

Fourth, it is possible that the prediction model implemented could be significantly improved by including additional variables or data available during the hospital stay. However, simple classification models using a single variable have repeatedly been shown to have the ability to compete favorably with state-of-the-art multivariable classification models.28

Fifth, our study was limited to a single academic health system and our experience may not be generalizable to smaller healthcare systems with limited EHR systems. However, the simplicity of our prediction model and the integration into a commercial EHR may improve the generalizability of our experience to other healthcare settings. Additionally, partly due to recent policy initiatives, the adoption of integrated EHR systems by hospitals is expected to continue at a rapid rate and become the standard of care within the near future.29

Conclusion

An automated prediction model was effectively integrated into an existing EHR and was able to identify patients on admission who are at risk for readmission within 30 days of discharge. Future work will aim to further examine the impact of the flag on readmission rates, further refine the prediction model, and gather data on how providers and care teams use the information provided by the flag.

References

- 1.Jencks SF, Williams MV, Coleman EA. Rehospitalizations among patients in the Medicare fee-for-service program. N Engl J Med. 2009;360(14):1418–1428. doi: 10.1056/NEJMsa0803563.

- 2.Benbassat J, Taragin M. Hospital readmissions as a measure of quality of health care: advantages and limitations. Arch Intern Med. 2000;160(8):1074–1081. doi: 10.1001/archinte.160.8.1074.

- 3.Weeks WB, Lee RE, Wallace AE, West AN, Bagian JP. Do older rural and urban veterans experience different rates of unplanned readmission to VA and non-VA hospitals? J Rural Health. 2009;25(1):62–69. doi: 10.1111/j.1748-0361.2009.00200.x.

- 4.Underwood MA, Danielsen B, Gilbert WM. Cost, causes and rates of rehospitalization of preterm infants. J Perinatol. 2007;27(10):614–619. doi: 10.1038/sj.jp.7211801.

- 5.Allaudeen N, Vidyarthi A, Maselli J, Auerbach A. Redefining Readmission Risk Factors for General Medicine Patients. J Hosp Med. 2011;6(2):54–60. doi: 10.1002/jhm.805.

- 6.Lanièce II, Couturier PP, Dramé MM, et al. Incidence and main factors associated with early unplanned hospital readmission among French medical inpatients aged 75 and over admitted through emergency units. Age and Ageing. 2008;37(4):416–422. doi: 10.1093/ageing/afn093.

- 7.Van Walraven C, Bennett C, Jennings A, Austin PC, Forster AJ. Proportion of hospital readmissions deemed avoidable: a systematic review. CMAJ. 2011;183(7):E391–E402. doi: 10.1503/cmaj.101860.

- 8.Hospital Quality Alliance. [Accessed March 6, 2013]; Available at: http://www.hospitalqualityalliance.org/hospitalqualityalliance/qualitymeasures/qualitymeasures.html.

- 9.Institute for Healthcare Improvement. [Accessed March 6, 2013]; Available at: http://www.ihi.org/explore/Readmissions/Pages/default.aspx.

- 10.Centers for Medicare and Medicaid Services. [Accessed March 6, 2013]; Available at: http://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HospitalQualityInits/OutcomeMeasures.html.

- 11.Naylor MD, Brooten D, Campbell R, et al. Comprehensive discharge planning and home follow-up of hospitalized elders: a randomized clinical trial. JAMA. 1999;281(7):613–620. doi: 10.1001/jama.281.7.613.

- 12.Coleman EA, Smith JD, Frank JC, Min S-J, Parry C, Kramer AM. Preparing patients and caregivers to participate in care delivered across settings: the Care Transitions Intervention. J Am Geriatr Soc. 2004;52(11):1817–1825. doi: 10.1111/j.1532-5415.2004.52504.x.

- 13.Naylor MD, Aiken LH, Kurtzman ET, Olds DM, Hirschman KB. The Importance Of Transitional Care In Achieving Health Reform. Health affairs. 2011;30(4):746–754. doi: 10.1377/hlthaff.2011.0041.

- 14.Hansen LO, Young RS, Hinami K, Leung A, Williams MV. Interventions to reduce 30-day rehospitalization: a systematic review. Ann Intern Med. 2011;155(8):520–528. doi: 10.7326/0003-4819-155-8-201110180-00008.

- 15.University of Pennsylvania Health System Center for Evidence-based Practice. http://www.uphs.upenn.edu/cep/.

- 16.Umscheid CA, Williams K, Brennan PJ. Hospital-based comparative effectiveness centers: translating research into practice to improve the quality, safety and value of patient care. J Gen Intern Med. 2010;25(12):1352–1355. doi: 10.1007/s11606-010-1476-9.

- 17.Hripcsak G. Writing Arden Syntax Medical Logic Modules. Comput Biol Med. 1994;24(5):331–363. doi: 10.1016/0010-4825(94)90002-7.

- 18.Joynt KE, Jha AK. Thirty-day readmissions--truth and consequences. N Engl J Med. 2012;366(15):1366–1369. doi: 10.1056/NEJMp1201598.

- 19.Van Rijsbergen CJ. Information Retrieval. 2nd ed. Butterworth-Heinemann; 1979.

- 20.Wagner AK, Soumerai SB, Zhang F, Ross-Degnan D. Segmented regression analysis of interrupted time series studies in medication use research. J Clin Pharm Ther. 2002;27(4):299–309. doi: 10.1046/j.1365-2710.2002.00430.x.

- 21.Cochrane D, Orcutt GH. Application of least squares regression to relationships containing auto-correlated error terms. J Am Stat Assoc. 1949.

- 22.Kansagara D, Englander H, Salanitro A, et al. Risk prediction models for hospital readmission: a systematic review. JAMA. 2011;306(15):1688–1698. doi: 10.1001/jama.2011.1515.

- 23.Mitchell P, Wynia M, Golden R, et al. [Accessed March 19, 2013];Core Principles & Values of Effective Team-Based Health Care. Available at: https://www.nationalahec.org/pdfs/VSRT-Team-Based-Care-Principles-Values.pdf.

- 24.Amarasingham R, Moore BJ, Tabak YP, et al. An automated model to identify heart failure patients at risk for 30-day readmission or death using electronic medical record data. Med Care. 2010;48(11):981–988. doi: 10.1097/MLR.0b013e3181ef60d9.

- 25.Amarasingham R, Patel PC, Toto K, et al. Allocating scarce resources in real-time to reduce heart failure readmissions: a prospective, controlled study. BMJ Qual Saf. 2013 doi: 10.1136/bmjqs-2013-001901.

- 26.Pauker SG, Kassirer JP. The threshold approach to clinical decision making. N Engl J Med. 1980;302(20):1109–1117. doi: 10.1056/NEJM198005153022003.

- 27.Bradley EH, Yakusheva O, Horwitz LI, Sipsma H, Fletcher J. Identifying patients at increased risk for unplanned readmission. Med Care. 2013;51(9):761–766. doi: 10.1097/MLR.0b013e3182a0f492.

- 28.Holte RC. Very Simple Classification Rules Perform Well on Most Commonly Used Datasets. Mach Learn. 1993;11(1):63–91.

- 29.Blumenthal D. Stimulating the adoption of health information technology. N Engl J Med. 2009;360(15):1477–1479. doi: 10.1056/NEJMp0901592.