Abstract

Stroke can cause significant upper extremity dysfunction and reduce quality of life (QoL) in survivors. In this context, novel rehabilitation approaches that combine robotic rehabilitation devices with brain-machine interfaces can help expedite functional recovery in these individuals by actively engaging the user during therapy. However, the optimal training conditions and parameters for these novel therapeutic systems are still unknown. Here, we present preliminary findings demonstrating successful detection of movement intent from scalp electroencephalography (EEG) during robotic rehabilitation with the MAHI Exo-II in an individual with hemiparesis following stroke. These findings have strong clinical implications for the development of closed-loop brain-machine interfaces to robotic rehabilitation systems.

I. Introduction

Every year about 15 million people worldwide suffer a stroke, and among survivors, about 5 million are left with chronic disability, placing a heavy economic burden on their families and on society. The disability-adjusted life years (DALYs) lost to stroke are projected to rise globally from 38 million in 1990 to 61 million in 2020 [1]. By leveraging recent advances in brain-machine interface (BMI) and robot-assisted rehabilitation technologies, it may be possible to promote functional compensation through sensorimotor adaptation and central nervous system plasticity [2] and to reduce the socio-economic burden of disability [3], [4]. To this end, rehabilitation robots and exoskeletons are being developed to improve functional motor recovery after stroke. These robots can provide movement assistance and/or resistance at different levels, ranging from fully passive (robot-controlled) to patient-triggered to fully active (patient-controlled) movements. However, current rehabilitation robots cannot monitor patient participation or engagement during the tasks. Motivation and engagement are important psychosocial factors that can strongly affect neurological rehabilitation outcomes [5]. If users can be actively engaged in their rehabilitation by using self-generated neural signals to accomplish task goals through a neurally interfaced therapeutic exoskeleton, the resulting positive feedback may further enhance neural plasticity and facilitate motor recovery.

Previous studies have shown that scalp (noninvasive) EEG recordings can be used to detect the volitional movement intention of healthy and stroke subjects, both from slow movement-related cortical potentials (MRCPs) [6] and from decreases or increases in power in the α (8-13 Hz) or β (15-30 Hz) bands [7]. MRCPs are quite versatile, since their amplitudes and time courses vary with the subject’s psychological state as well as with characteristics of the movement being performed, such as distance, speed, and precision [8], [9]. Traditionally, MRCPs have been studied by averaging over a large number of trials, because single trials show high variability resulting from background neural activity and non-neural artifacts. However, recent studies have successfully detected movement intention from single-trial MRCPs by employing optimized spatial filtering and advanced machine learning techniques [9]. These findings are promising and underscore the potential of MRCPs to serve as neural control signals for an appropriate therapeutic robotic device.

In this study, we aimed to create an MRCP-based neural interface for a novel powered exoskeleton, the MAHI Exo-II, for upper limb stroke rehabilitation (Fig. 1). It was therefore critical to identify a) the utility of MRCPs as an appropriate neural control signal that reflects user engagement, and b) the best training mode of the MAHI Exo-II (Exo). To this end, we examined changes in MRCPs in a stroke survivor, compared with healthy able-bodied participants, while they performed goal-oriented movements under different assistance modes. Further, a 2-class (Go vs. No-Go) Support Vector Machine (SVM) classifier was developed to detect the user’s movement intention from single-trial MRCPs. The overarching goal is to eventually implement this classifier in real time so that stroke survivors can control the MAHI Exo-II during rehabilitation.

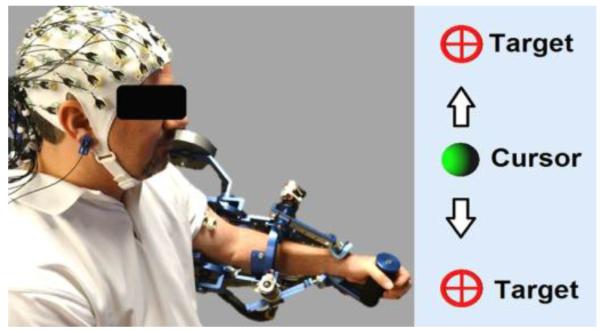

Fig. 1.

User with left-sided hemiparesis fitted with the MAHI Exo-II and the EEG-EMG sensors; the inset shows the GUI, which feeds back the current exoskeleton position to the user.

II. Methods

A. Participants

In this pilot study, three healthy, able-bodied individuals (23±1 years old, right-handed males) and one individual with left hemiparesis (45-year-old male, five months after an ischemic right posterior thalamic lesion) participated after providing voluntary, informed consent to study procedures approved by the Institutional Review Boards (IRB) at the University of Houston, Rice University, and the University of Texas. For the stroke survivor, the level of impairment was assessed using the Modified Ashworth Scale (MAS) and the Fugl-Meyer Upper Extremity (FMA-UE) assessment. The MAS score for each forearm/hand muscle group was between 0 and 2 (out of 4, i.e., maximum spasticity). The FMA-UE score was 49 (out of 66, i.e., normal function).

B. MAHI Exo-II Upper Extremity Robotic Exoskeleton

The MAHI Exo-II has four actuated degrees of freedom (DOF): elbow flexion-extension, forearm pronation-supination, wrist flexion-extension, and radial-ulnar deviation. It is equipped with high-resolution encoders that provide accurate position and velocity measurement for implementation of various closed-loop protocols. For a detailed description of MAHI Exo-II, see [10].

Training Modes

The MAHI Exo-II can be operated in three training modes: Passive, Triggered, and Active [11]. In the “Passive” mode, no volitional movement is required from the user; the Exo automatically moves the user’s hand from one position to the next along a fixed trajectory. In the “Triggered” mode, the user self-initiates the movement, after which the robot completes it. To detect the user’s self-initiation (movement onset), we determined a joint velocity threshold before the experiment by having users practice the task in an unconstrained environment. Finally, the “Active” mode is a resistance-training mode in which the user must move their limb through an adjustable virtual viscous field without any assistance from the robot. In this study, we modified the “Active” mode by turning off the motors to eliminate the virtual damping; we refer to this modified mode as “Backdrive” mode. We also added a fourth mode, “Observation” mode, in which the subject only observed an experimenter operate the Exo in “Triggered” mode. Additionally, in the “Passive” and “Observation” modes the subjects were specifically asked to imagine moving the Exo during trials. We hypothesized that observation coupled with motor imagery would also activate the underlying movement-related cortical networks and could be particularly useful for calibrating the neural signals of stroke survivors who have limited voluntary control over their upper limb. As shown in Fig. 1, the GUI provided feedback of the Exo/arm movement to the user. Lastly, the Exo’s software allowed the range of motion of each DOF to be specified independently for each user.
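The velocity-threshold onset rule used in “Triggered” mode can be illustrated with a short sketch. It is not the Exo’s runtime code: the text does not say how the threshold was derived from the practice trials, so the calibration rule below (a fixed fraction of the median peak joint speed) and both function names are hypothetical.

```python
import numpy as np

def calibrate_threshold(practice_velocities, fraction=0.1):
    """Hypothetical calibration rule: a fixed fraction of the median peak
    joint speed observed across unconstrained practice movements."""
    peaks = [np.max(np.abs(v)) for v in practice_velocities]
    return fraction * float(np.median(peaks))

def detect_onset(velocity, fs, threshold):
    """Return the time (s) of the first sample whose absolute joint velocity
    exceeds `threshold`, or None if the threshold is never crossed.
    Taking the absolute value covers both flexion and extension."""
    above = np.abs(np.asarray(velocity)) > threshold
    if not above.any():
        return None
    return int(np.argmax(above)) / fs  # argmax returns the first True index

# Example: elbow velocity sampled at 1000 Hz that starts moving at 0.3 s
fs = 1000
v = np.zeros(1000)
v[300:] = -0.5  # extension (negative velocity); units assumed rad/s
thr = calibrate_threshold([np.full(10, 1.0), np.full(10, 2.0), np.full(10, 3.0)])
print(thr, detect_onset(v, fs, thr))
```

A first-crossing rule like this fires a single onset per movement, which is also the event that the trigger at t = 0 s marks in the later analysis.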

C. Experimental Protocol

Task

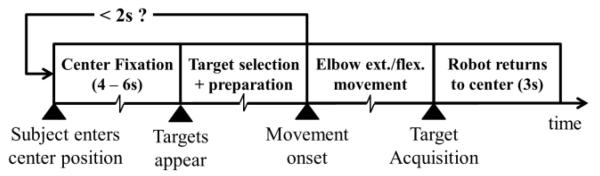

For this study, we chose a single DOF, namely elbow flexion/extension, of the MAHI Exo-II. The Exo was operated in the four modes described earlier: Passive, Triggered (velocity-triggered), Backdrive, and Observation. Each mode consisted of 80 successful movements split into four blocks of 20, and the order of the modes was randomized for each subject. Breaks were given between blocks and modes to minimize user fatigue. The sequence of each trial is shown in Fig. 2.

Fig. 2.

Sequence of each trial. Each trial starts when the subject enters the center position and a fixation cross is displayed for 4 to 6s. Two targets (Up & Down) appear on the screen, at which time the subject selects a target, prepares and later executes the movement. Unknown to the subject, target selection and preparation times of less than 2s result in an aborted trial, which can be restarted by re-entering the center position. In a successful trial, the subject performs elbow flexion/extension to hit the target, following which the robot automatically returns the subject’s hand to the center.
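The trial timing rules in Fig. 2 can be expressed as a small sketch. The uniform draw of the 4 to 6 s fixation period and the function names are assumptions; only the duration range and the 2 s abort rule come from the caption.

```python
import random

FIXATION_RANGE_S = (4.0, 6.0)  # fixation cross duration stated in Fig. 2
MIN_PREP_S = 2.0               # selection + preparation shorter than this aborts

def fixation_duration(rng):
    """Draw a fixation duration; a uniform draw over 4-6 s is an assumption."""
    return rng.uniform(*FIXATION_RANGE_S)

def trial_outcome(t_targets_shown, t_movement_onset):
    """Classify a trial from event times (s): 'aborted' if the movement
    began less than MIN_PREP_S after the targets appeared, else 'valid'."""
    if t_movement_onset - t_targets_shown < MIN_PREP_S:
        return "aborted"  # subject re-enters the center position to restart
    return "valid"

rng = random.Random(0)
t_fix = fixation_duration(rng)            # targets appear when fixation ends
print(trial_outcome(t_fix, t_fix + 1.2))  # onset too early
print(trial_outcome(t_fix, t_fix + 2.6))  # onset late enough
```

Assuming the targets appear as soon as fixation ends, these rules place movement onset at least 4 + 2 = 6 s after the subject enters the center position, so a pre-onset epoch starting at −3.5 s begins at least 2.5 s into the trial.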

Data Acquisition

EEG, electromyography (EMG), and movement kinematics were recorded simultaneously (sampling frequency = 1000 Hz) during the experiment. EEG signals were recorded using a 64-channel active electrode system from Brain Products GmbH (Morrisville, NC). Additionally, 4 active electrodes (2 differential pairs) were used to record EMG signals over the biceps brachii and triceps brachii muscles. Because EEG and EMG were recorded by the same system, they were automatically synchronized. The MAHI Exo-II recorded joint kinematics (i.e., position and velocity) and generated +5V TTL trigger pulses to synchronize the kinematic recordings with the EEG-EMG acquisition. Triggers were generated at the beginning and end of each trial as well as at movement initiation within a trial. Movement initiation, or onset, was determined at runtime using a velocity threshold (as explained in Sec. II.B). Note that muscle activity (EMG) was most prominent in “Backdrive” mode and mostly absent in “Observation” mode, whereas the EMG signal-to-noise ratio was very low in the “Passive” and “Triggered” modes. This was caused by mechanical vibrations of the exoskeleton during the “Passive” and “Triggered” modes, which interfered with the nearby EMG sensors. Hence, in this study we used EMG signals only from “Backdrive” mode.
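If the Exo’s +5V TTL pulses are available as a sampled voltage trace (many acquisition setups instead log them directly as event markers), trigger times can be recovered by rising-edge detection. The following sketch assumes exactly three pulses per recorded trial (start, movement onset, end, in the order given above); how aborted trials appear in the trigger stream is not stated, and both function names are hypothetical.

```python
import numpy as np

def rising_edges(ttl, fs, level=2.5):
    """Times (s) at which a TTL voltage trace crosses `level` volts upward.
    2.5 V lies midway between the 0 V and +5 V logic levels."""
    high = np.asarray(ttl) > level
    # A rising edge is a low sample immediately followed by a high sample.
    idx = np.flatnonzero(~high[:-1] & high[1:]) + 1
    return idx / fs

def group_trials(edge_times):
    """Hypothetical parser: assumes every trial emits exactly three pulses,
    (trial start, movement onset, trial end); returns an (n_trials, 3) array."""
    edge_times = np.asarray(edge_times)
    if edge_times.size % 3:
        raise ValueError("pulse count is not a multiple of 3; check for aborted trials")
    return edge_times.reshape(-1, 3)

fs = 1000
ttl = np.zeros(3000)
for start in (100, 1400, 2600):  # start, onset and end pulses of one trial
    ttl[start:start + 10] = 5.0
events = group_trials(rising_edges(ttl, fs))
print(events)  # columns: trial start, movement onset, trial end (s)
```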

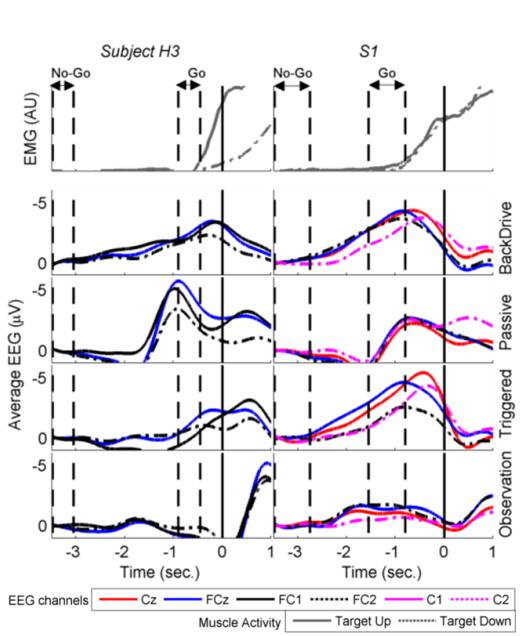

D. Signal Processing

All data analysis was performed offline using MATLAB (Release 2012b, The MathWorks, Inc., Natick, MA) and EEGLAB [12]. For each training mode, raw EEG signals from the four trial blocks (80 trials in total) were first concatenated and zero-phase band-pass filtered (0.1-1 Hz) using a 4th-order Butterworth filter, then spatially filtered using a large Laplacian reference, and finally downsampled to 200 Hz. Noisy EEG channels were replaced by spherical interpolation. EEG signals were segmented into epochs from −3.5 s to +1 s relative to the movement onset triggers (t = 0 s), yielding a total of 320 epochs (80 per mode) for each subject. All epochs were visually inspected for eye blinks or movement artifacts, and corrupted epochs were rejected (on average 8% of all epochs). The remaining epochs were then averaged across trials to compute the grand-average MRCP for each channel. Additionally, raw EMG signals were band-pass filtered (20-100 Hz), full-wave rectified, and low-pass filtered (1 Hz) to obtain linear EMG envelopes, which were then segmented into epochs. Fig. 3 shows sample traces of EMG envelopes and grand-average MRCPs for EEG channels over the primary motor cortex (M1) and supplementary motor area (SMA). Subsequently, the EEG channels with the strongest MRCPs were identified and used for single-trial detection of movement intent.
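The EEG preprocessing chain can be sketched in Python with SciPy as a stand-in for the MATLAB/EEGLAB pipeline actually used. Several points are assumptions: the large-Laplacian neighbour map depends on the electrode montage and is passed in as a hypothetical dictionary; SciPy’s `butter(4, ..., btype="bandpass")` places four poles at each band edge, and whether the text’s “4th order” follows this convention is not stated; spherical interpolation and visual artifact rejection are left out (the `keep` mask stands in for the rejection decisions).

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS_RAW, FS_OUT = 1000, 200  # Hz: acquisition rate and rate after downsampling

def bandpass_mrcp(eeg, fs=FS_RAW, band=(0.1, 1.0), order=4):
    """Zero-phase (forward-backward) Butterworth band-pass along the time axis.
    eeg: array of shape (n_channels, n_samples) or a single 1-D channel."""
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)

def large_laplacian(eeg, neighbors):
    """Re-reference each listed channel to the mean of its large-Laplacian
    neighbours. `neighbors` maps a channel index to neighbour indices
    (hypothetical, montage-specific); unlisted channels are left unchanged."""
    out = eeg.copy()
    for ch, nb in neighbors.items():
        out[ch] = eeg[ch] - eeg[nb].mean(axis=0)
    return out

def downsample(eeg, fs_in=FS_RAW, fs_out=FS_OUT):
    """Keep every (fs_in // fs_out)-th sample. Plain decimation is safe here only
    because the data were already low-passed at 1 Hz, far below the new 100 Hz Nyquist."""
    return eeg[..., :: fs_in // fs_out]

def epoch(eeg, onsets, fs=FS_OUT, tmin=-3.5, tmax=1.0):
    """Cut [tmin, tmax) windows around onset sample indices (given at `fs`).
    Returns an array of shape (n_epochs, n_channels, n_times)."""
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    return np.stack([eeg[:, s + start : s + stop] for s in onsets])

def grand_average(epochs, keep):
    """Average the epochs flagged as clean (boolean mask) -> (n_channels, n_times)."""
    return epochs[np.asarray(keep)].mean(axis=0)
```

Onset trigger sample indices recorded at 1000 Hz must be divided by 5 before calling `epoch` on the 200 Hz data; each epoch then spans 900 samples (4.5 s).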

Fig. 3.

The top row shows a segment of the median EMG envelope during Up/Down movements for the stroke participant (S1) and one healthy participant (H3). The bottom four rows show the baseline-corrected grand-average MRCP during the four training modes with the MAHI Exo-II for six relevant frontal and central channels. Time t = 0 s corresponds to movement onset, when the joint velocity threshold was exceeded. Dashed vertical lines indicate the ‘Go’ and ‘No-Go’ windows optimally selected for each subject.

E. Detection of Movement Intent

To detect movement intention in single trials, we extracted equal-length ‘Go’ and ‘No-Go’ windows from the pre-movement-onset EEG epoch [−3.5 s to 0 s]. Here, the ‘Go’ window represents a time interval in which the subjects intended (or prepared) to perform the movement, whereas the ‘No-Go’ window represents an interval that lacked such intention. The length and the trailing/leading edges of the ‘Go’ and ‘No-Go’ windows were optimally chosen for each subject, as described below.

Optimization of ‘Go’/‘No-Go’ windows

First, EMG epochs in “Backdrive” mode were used to estimate each subject’s EMG onset time (median value over all trials). To reduce variation in EMG onset time among single trials, the EMG onset threshold was set to 10% of the median (across trials) of the maximum value of each EMG envelope. Second, assuming that the subjects were motionless before the median EMG onset time, we fixed that time as the leading (later) edge of the ‘Go’ window. Likewise, the trailing (earlier) edge of the ‘No-Go’ window was fixed at −3.5 s. Lastly, our algorithm iteratively increased the window length in 50 msec increments (up to 1 s) by shifting the trailing edge of the ‘Go’ window (and, correspondingly, the leading edge of the ‘No-Go’ window). In each iteration, the performance of a classifier trained on trials from “Backdrive” mode was determined, and the window length with the maximum area under the ROC curve was chosen as the optimal length for both the ‘Go’ and ‘No-Go’ windows. For each subject, the optimal window length was kept the same across all training modes, to simplify comparison of the classifiers developed for each mode. Across all subjects, the mean (± S.D.) EMG onset time (relative to t = 0 s) and optimal window length were −650 (± 165) msec and 725 (± 190) msec, respectively.
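The window-selection procedure can be sketched as follows. The EMG filter order and zero-phase filtering are assumptions (the text gives only the cut-off frequencies), and `auc_for_length` is a hypothetical callable standing in for “train a Backdrive-mode classifier with this window length and return its ROC AUC”.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def emg_envelope(emg, fs=1000, order=4):
    """Linear envelope: band-pass 20-100 Hz, full-wave rectify, low-pass 1 Hz.
    Filter order and zero-phase filtering are assumptions."""
    bp = butter(order, [20, 100], btype="bandpass", fs=fs, output="sos")
    lp = butter(order, 1, btype="lowpass", fs=fs, output="sos")
    return sosfiltfilt(lp, np.abs(sosfiltfilt(bp, emg, axis=-1)), axis=-1)

def median_emg_onset(env_epochs, times, frac=0.10):
    """env_epochs: (n_trials, n_times) envelopes; times: s relative to movement onset.
    Threshold = frac x (median over trials of each trial's peak envelope value);
    returns the median over trials of the first threshold crossing."""
    thr = frac * np.median(env_epochs.max(axis=1))
    above = env_epochs > thr
    onsets = [times[np.argmax(row)] for row in above if row.any()]
    return float(np.median(onsets))

def go_nogo_windows(t_emg, length, t_start=-3.5):
    """'Go' ends at the median EMG onset; 'No-Go' starts at the epoch start."""
    return (t_emg - length, t_emg), (t_start, t_start + length)

def optimise_length(auc_for_length, step=0.05, max_len=1.0):
    """Grow the window in `step` increments up to `max_len` and keep the length
    with the highest AUC (`auc_for_length` is a hypothetical callable)."""
    lengths = step * np.arange(1, int(round(max_len / step)) + 1)
    aucs = [auc_for_length(L) for L in lengths]
    return float(lengths[int(np.argmax(aucs))])
```

As a worked check with the across-subject means reported above (EMG onset −650 msec, window length 725 msec), `go_nogo_windows(-0.65, 0.725)` gives a ‘Go’ window of [−1.375, −0.65] s and a ‘No-Go’ window of [−3.5, −2.775] s. Individual subjects’ windows differ.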

Feature Extraction & Classifier Training

For feature extraction, we first computed the spatial average $X(t) = \frac{1}{K}\sum_{k=1}^{K} V_k(t)$ of the $K$ EEG channels $V_k(t)$ that showed the strongest grand-average MRCP; e.g., for the stroke subject, $V_k(t)$ ranges over [FCz, FC2, Cz, C2], so $K = 4$ (see Fig. 3). Next, for each trial, the amplitude of X(t) over the ‘Go’ and ‘No-Go’ windows was used to create 4-D feature vectors for the respective ‘Go’ and ‘No-Go’ classes. The elements of each feature vector were the slope, peak negative amplitude, area under the curve (AUC), and Mahalanobis distance of X(t) within the ‘Go’/‘No-Go’ window. To detect movement intention, a 2-class SVM classifier was trained on these features and tested using 10-fold cross-validation, with the LIBSVM library for MATLAB [13]. The feature vectors were transformed using a radial basis function (RBF) kernel and then linearly scaled to [0, 1]. The cost C and kernel parameter γ of the SVM models were optimized by grid search over C ∈ {10, 100, 1000} and γ ∈ {0.2, 0.5, 0.8, 1}. Classification accuracy was calculated as the ratio of correctly predicted trials to the total number of trials.
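The feature extraction and classifier training can be sketched with scikit-learn, whose `SVC` is built on LIBSVM and uses the same RBF form exp(−γ‖x − x′‖²). This is a sketch under several assumptions, not the authors’ code: the slope is taken as a least-squares fit and the AUC as a trapezoidal integral; the text does not say what the Mahalanobis distance is measured to, so the hypothetical `MahalanobisToGo` step measures each trial’s other three features against the ‘Go’ centroid of the training fold; features are scaled to [0, 1] before the kernel is applied, as is usual with LIBSVM; and the grid search is nested inside the 10 outer folds so the reported accuracy is not tuned on the test folds, which the text does not specify.

```python
import numpy as np
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.model_selection import GridSearchCV, StratifiedKFold, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler
from sklearn.svm import SVC

def window_features(x, fs=200):
    """Slope, peak negative amplitude and trapezoidal AUC of X(t) in one window."""
    t = np.arange(x.size) / fs
    slope = np.polyfit(t, x, 1)[0]           # least-squares slope (assumed definition)
    peak_neg = x.min()                       # most negative amplitude
    auc = np.sum(x[:-1] + x[1:]) / (2 * fs)  # trapezoidal area under the curve
    return np.array([slope, peak_neg, auc])

def build_dataset(epochs, times, chans, go_win, nogo_win, fs=200):
    """epochs: (n_trials, n_channels, n_times). Returns features and labels (Go=1)."""
    x_t = epochs[:, chans, :].mean(axis=1)   # spatial average X(t) for each trial
    rows, labels = [], []
    for (lo, hi), label in ((go_win, 1), (nogo_win, 0)):
        mask = (times >= lo) & (times < hi)
        rows += [window_features(x[mask], fs) for x in x_t]
        labels += [label] * len(x_t)
    return np.array(rows), np.array(labels)

class MahalanobisToGo(TransformerMixin, BaseEstimator):
    """Hypothetical 4th feature: Mahalanobis distance of each feature row to the
    'Go' centroid, with mean and covariance fitted on the training fold only."""
    def fit(self, X, y):
        go = np.asarray(X)[np.asarray(y) == 1]
        self.mean_ = go.mean(axis=0)
        self.icov_ = np.linalg.pinv(np.cov(go, rowvar=False))
        return self

    def transform(self, X):
        d = np.asarray(X) - self.mean_
        q = np.einsum("ij,jk,ik->i", d, self.icov_, d)  # quadratic form per row
        return np.column_stack([X, np.sqrt(np.maximum(q, 0.0))])

def nested_cv_accuracy(X, y, seed=0):
    """Grid search over C and gamma inside each of 10 outer folds."""
    pipe = Pipeline([("maha", MahalanobisToGo()),
                     ("scale", MinMaxScaler()),     # linear scaling to [0, 1]
                     ("svm", SVC(kernel="rbf"))])
    grid = {"svm__C": [10, 100, 1000], "svm__gamma": [0.2, 0.5, 0.8, 1.0]}
    inner = StratifiedKFold(5, shuffle=True, random_state=seed)
    outer = StratifiedKFold(10, shuffle=True, random_state=seed)
    search = GridSearchCV(pipe, grid, cv=inner, scoring="accuracy")
    return cross_val_score(search, X, y, cv=outer, scoring="accuracy")
```

`nested_cv_accuracy` returns one accuracy per outer fold, i.e., the fraction of correctly predicted trials in that fold.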

III. Results

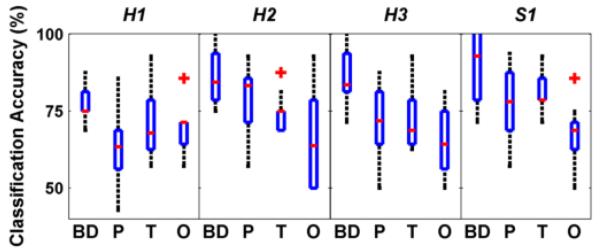

In this first demonstration of an integrated EEG-based neural interface with the MAHI Exo-II, we successfully measured MRCPs in a stroke survivor. Specifically, MRCPs were identified during three training modes of the Exo: Backdrive, Passive, and Triggered. However, we did not observe any MRCP during “Observation” mode in either the healthy participants or the stroke survivor. Next, we used the SVM to classify single trials within each training mode based on the features described previously. Interestingly, we found relatively high classification accuracy (medians around 75%) across all training modes (see Fig. 4), and classification appeared most successful in the Triggered and Backdrive modes across all subjects. A nonparametric Kruskal-Wallis test (p<0.001) with Bonferroni-corrected post-hoc comparisons showed that accuracies were significantly higher in the “Backdrive” and “Triggered” modes than in “Observation” mode (p<0.05). A one-sided Wilcoxon signed-rank test showed that accuracies were significantly higher than chance level (50%) for both the healthy and stroke participants (p<0.001; α-level adjusted using Bonferroni correction for multiple comparisons: 0.0062).
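The Bonferroni-adjusted level quoted above is consistent with eight comparisons: 0.05 / 8 = 0.00625, reported as 0.0062. Which eight comparisons were made is not stated, so the 4 modes × 2 participant groups split below is an assumption. The sketch maps the two named tests onto SciPy; the post-hoc pairwise procedure is not specified and is omitted, and the accuracy values are synthetic placeholders, not data from this study.

```python
import numpy as np
from scipy.stats import kruskal, wilcoxon

N_COMPARISONS = 8             # assumed: 4 training modes x 2 participant groups
ALPHA = 0.05 / N_COMPARISONS  # Bonferroni-adjusted level = 0.00625

def modes_differ(acc_by_mode):
    """Kruskal-Wallis test across training modes; acc_by_mode maps a mode
    name to a sequence of accuracies (e.g. one per cross-validation fold)."""
    return kruskal(*acc_by_mode.values()).pvalue

def above_chance(acc, chance=0.5):
    """One-sided Wilcoxon signed-rank test that accuracies exceed chance."""
    return wilcoxon(np.asarray(acc) - chance, alternative="greater").pvalue

# Synthetic fold accuracies for illustration only
acc = np.array([0.70, 0.75, 0.80, 0.72, 0.78, 0.74, 0.76, 0.71, 0.79, 0.73])
p = above_chance(acc)
print(p, p < ALPHA)
```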

Fig. 4.

Boxplots showing median classification accuracies across 4 training modes for all participants (interquartile and full ranges shown along with outliers as ‘+’). BD: Backdrive; P: Passive; T: Triggered; O: Observation.

IV. Discussion

In this study, we successfully integrated a noninvasive EEG-based neural interface with the MAHI Exo-II, and validated the system in a stroke survivor. Further, we were able to identify MRCP in three training modes using the Exo, which helped differentiate movement intention in participants compared to rest with relatively high accuracy. In this context, the “Triggered” training mode was of greatest relevance for stroke participants, as it can be implemented in participants with relatively low voluntary upper limb control. Therefore, it is very promising to note a median classification accuracy of around 75% for the stroke participant in this mode. This provides initial evidence for the potential applicability of MRCP as a neural control signal for a brain-machine interface to robotic systems in stroke survivors.

Surprisingly, we also found a reasonably strong MRCP in the “Passive” mode, which the SVM therefore classified with relatively high accuracy. It appears that the “Passive” training mode in our system recruited the underlying cortical networks related to movement intention, plausibly by engaging the user with the task through a) observation of the user’s arm being moved by the Exo, b) kinesthetic feedback provided by the Exo, and c) visual feedback from the GUI. This is encouraging, as it further highlights the advantages of combining neural and robotic interventions to increase active patient engagement during rehabilitation. Additionally, this mode can be applied in stroke participants who may be unable to actively initiate movement in the “Triggered” mode, thereby enlarging the target population for this and related interventions. However, this will need to be validated by testing additional participants with stroke. Interestingly, we did not find any discernible MRCP waveform during “Observation” of an actor’s limb movement. This may be because neural processes related to action observation, which typically modulate oscillatory cortical dynamics in the α or β bands, may not be adequately represented in the relatively low-frequency, amplitude-modulated MRCP studied here. It is also possible that user engagement in this mode was considerably lower than in the other three modes, which could have further contributed to the absence of an observable MRCP.

A potential limitation of our proposed algorithm is that the ‘Go’/‘No-Go’ windows are kept the same across all training modes. We expect that optimally selecting the ‘Go’/‘No-Go’ windows for each mode will improve classifier performance; this will be pursued in future studies. We are also currently working on eliminating interference from Exo motor vibrations on the EMG signals during the “Passive” and “Triggered” modes, which will allow us to determine the optimal window lengths for these training modes more accurately.

In summary, we provide initial clinical validation of an integrated EEG-based neural interface to the MAHI Exo-II for upper-limb stroke rehabilitation, based on movement intent detection while the Exo is operated in the Triggered as well as the Passive training modes. In subsequent studies, we plan to implement and test this system as a closed-loop, real-time BMI, with the aim of ensuring patient engagement during therapy sessions and maximizing functional outcomes.

Acknowledgments

This work was supported by NIH Grant R01NS081854-02 (National Robotics Initiative)

Contributor Information

Nikunj A. Bhagat, Dept. of Electrical & Computer Engineering, University of Houston, Houston, TX 77004 USA (phone: 713-743-0796; fax: 713-743-4444; nabhagat@uh.edu).

James French, Dept. of Mechanical Engineering, Rice University, Houston, TX 77005 USA (jaf12@rice.edu).

Anusha Venkatakrishnan, Dept. of Electrical & Computer Engineering, University of Houston, Houston, TX 77004 USA (phone: 713-743-0796; fax: 713-743-4444; avenkatakrishnan@uh.edu).

Nuray Yozbatiran, Institute for Rehabilitation Research (TIRR) and University of Texas Health Sciences Center, Houston, TX USA (nuray.yozbatiran@uth.tmc.edu).

Gerard E. Francisco, Institute for Rehabilitation Research (TIRR) and University of Texas Health Sciences Center, Houston, TX USA (gerard.e.francisco@uth.tmc.edu).

Marcia K. O’Malley, Dept. of Mechanical Engineering, Rice University, Houston, TX 77005 USA (omalleym@rice.edu).

Jose L. Contreras-Vidal, Dept. of Electrical & Computer Engineering, University of Houston, Houston, TX 77004 USA (phone: 713-743-0796; fax: 713-743-4444; jlcontreras-vidal@uh.edu).

REFERENCES

- [1] Mendis S, Puska P, Norrving B. Global atlas on cardiovascular disease prevention and control. World Health Organization; 2011.

- [2] McDonald JW, Sadowsky CL, Stampas A. The changing field of rehabilitation: optimizing spontaneous regeneration and functional recovery. Handb. Clin. Neurol. 2012 Jan;109:317–36. doi: 10.1016/B978-0-444-52137-8.00020-6.

- [3] Dobkin BH. Brain-computer interface technology as a tool to augment plasticity and outcomes for neurological rehabilitation. J. Physiol. 2007 Mar;579(Pt 3):637–42. doi: 10.1113/jphysiol.2006.123067.

- [4] Moreno JC, Barroso F, Farina D, Gizzi L, Santos C, Molinari M, Pons JL. Effects of robotic guidance on the coordination of locomotion. J. Neuroeng. Rehabil. 2013 Jan;10:79. doi: 10.1186/1743-0003-10-79.

- [5] Umphred DA, Roller M. Neurological Rehabilitation. 6th ed. Elsevier/Mosby; 2013.

- [6] Lew E, Chavarriaga R, Silvoni S, Millán JDR. Detection of self-paced reaching movement intention from EEG signals. Front. Neuroeng. 2012;5:13. doi: 10.3389/fneng.2012.00013.

- [7] Bai O, Rathi V, Lin P, Huang D, Battapady H, Fei D-Y, Schneider L, Houdayer E, Chen X, Hallett M. Prediction of human voluntary movement before it occurs. Clin. Neurophysiol. 2011 Feb;122(2):364–72. doi: 10.1016/j.clinph.2010.07.010.

- [8] Gu Y, Dremstrup K, Farina D. Single-trial discrimination of type and speed of wrist movements from EEG recordings. Clin. Neurophysiol. 2009;120(8):1596–1600. doi: 10.1016/j.clinph.2009.05.006.

- [9] Garipelli G, Chavarriaga R, Millán JDR. Single trial analysis of slow cortical potentials: a study on anticipation related potentials. J. Neural Eng. 2013 Jun;10(3):036014. doi: 10.1088/1741-2560/10/3/036014.

- [10] Pehlivan AU, Celik O, O’Malley MK. Mechanical Design of a Distal Arm Exoskeleton for Stroke and Spinal Cord Injury Rehabilitation. IEEE Int. Conf. Rehabil. Robot. 2011. doi: 10.1109/ICORR.2011.5975428.

- [11] Gupta A, O’Malley MK, Patoglu V, Burgar C. Design, Control and Performance of RiceWrist: A Force Feedback Wrist Exoskeleton for Rehabilitation and Training. Int. J. Rob. Res. 2008 Feb;27(2):233–251.

- [12] Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods. 2004 Mar;134(1):9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- [13] Chang C-C, Lin C-J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011;2(3):27:1–27:27.