Abstract

Binocular disparity is a powerful depth cue for object perception. The computations for object vision culminate in inferior temporal cortex (IT), but the functional organization for disparity in IT is unknown. Here we addressed this question by measuring fMRI responses in alert monkeys to stimuli that appeared in front of (near), behind (far), or at the fixation plane. We discovered three regions that showed preferential responses for near and far stimuli, relative to zero-disparity stimuli at the fixation plane. These “near/far” disparity-biased regions were located within dorsal IT, as predicted by microelectrode studies, and on the posterior inferotemporal gyrus. In a second analysis, we instead compared responses to near stimuli with responses to far stimuli and discovered a separate network of “near” disparity-biased regions that extended along the crest of the superior temporal sulcus. We also measured in the same animals fMRI responses to faces, scenes, color, and checkerboard annuli at different visual field eccentricities. Disparity-biased regions defined in either analysis did not show a color bias, suggesting that disparity and color contribute to different computations within IT. Scene-biased regions responded preferentially to near and far stimuli (compared with stimuli without disparity) and had a peripheral visual field bias, whereas face patches had a marked near bias and a central visual field bias. These results support the idea that IT is organized by a coarse eccentricity map, and show that disparity likely contributes to computations associated with both central (face processing) and peripheral (scene processing) visual field biases, but likely does not contribute much to computations within IT that are implicated in processing color.

Keywords: disparity, fMRI, functional organization, monkey, vision

Introduction

Binocular disparity is a powerful cue for depth perception (Anzai and DeAngelis, 2010) and has been linked to several functions, including scene segmentation, sensorimotor transformations, and object recognition (Orban et al., 2006). In agreement with its various roles, disparity activates many brain regions in monkeys and humans (e.g., Janssen et al., 2000b; Ferraina et al., 2000; Parker, 2007; Preston et al., 2008; Georgieva et al., 2009). Studies using functional magnetic resonance imaging (fMRI) in rhesus monkeys have shown that disparity activates regions in the dorsal visual stream (Tsao et al., 2003b; Durand et al., 2007), frontal cortex (Joly et al., 2009), and early ventral stream (Tsao et al., 2003b). However, no fMRI study has described clear disparity activation in anterior parts of the ventral visual stream, including inferior temporal cortex (IT), a part of cortex thought to be important in object vision. The lack of disparity activation in IT is surprising given microelectrode recordings in monkeys showing disparity selectivity of IT neurons (Janssen et al., 1999, 2000a; Uka et al., 2005; Yamane et al., 2008), and the role of disparity in object vision (Verhoef et al., 2012). We revisited the functional organization of disparity in monkeys using a custom-built fMRI coil system that provides excellent coverage across IT (Lafer-Sousa and Conway, 2013).

Considering the importance of the ventral visual stream for disparity-defined depth perception (Cowey and Porter, 1979; Ptito et al., 1991; Verhoef et al., 2010, 2012; Shiozaki et al., 2012), we sought to document the extent to which disparity information is available to IT, and to use disparity as a tool to access the functional architecture of IT. The ventral visual stream consists of parallel, multistaged processing pathways, such as those for faces, colors, and scenes (Tsao et al., 2003b; Nasr et al., 2011; Lafer-Sousa and Conway, 2013). Deciphering the way these streams relate to the functional organization for disparity may advance our understanding of how the brain builds a unitary visual percept. Here we addressed these questions by measuring fMRI responses to random-dot disparity stimuli in the same monkeys in which we previously determined the relative functional organization for colors, shapes, places, and faces (Lafer-Sousa and Conway, 2013).

In Experiment 1, we used a stimulus that comprised a mixture of near and far disparities to identify regions that responded more to both near and far stimuli, relative to stimuli without disparity. In Experiment 2, we measured fMRI responses to separate near and far stimuli, to test for biases for either near or far disparities, and found chiefly near disparity-biased regions. We compared the organization of the different disparity-biased regions and related these spatial patterns to the functional organization of faces, colors, places, and the representation of the visual field. We found widespread activation to disparity across both central and peripheral representations in IT; the activation occurred in patches that were generally reproducible across hemispheres and subjects. The results reveal crosstalk between the disparity network and parts of the face and scene network.

Materials and Methods

fMRI.

Scanning procedures were identical to those of a previous study (Lafer-Sousa and Conway, 2013). Two male rhesus macaques (7–8 kg), Monkey M1 and Monkey M2, pair housed in a standard 12:12 light-dark cycle and given food ad libitum, were scanned at Massachusetts General Hospital Martinos Imaging Center in a 3-T Tim Trio scanner (Siemens). Magnetic resonance (MR) images were acquired with a custom-built four-channel MR coil system with AC88 gradient insert. This system increases the signal-to-noise ratio (SNR) by allowing very short echo times, providing 1 mm³ spatial resolution and good coverage of the temporal lobe (Lafer-Sousa and Conway, 2013; their Supplemental Fig. 9). Standard echo planar imaging was used (repetition time = 2 s, 96 × 96 × 50 matrix, 1 mm³ voxels; echo time = 13 ms). To further enhance SNR, data were obtained after administration of a contrast agent, monocrystalline iron oxide nanoparticle (MION, Feraheme, 8–10 mg per kg of body weight, diluted in saline, AMAG Pharmaceuticals), injected intravenously into the femoral vein just before scanning. SNR increases brought about by MION have been demonstrated previously (Lafer-Sousa and Conway, 2013; their Supplemental Fig. 1a). Decreases in MION signals correspond to increases in blood oxygen level-dependent (BOLD) response; time course traces in all figures have been vertically flipped to facilitate comparison with conventional BOLD in which upward deflections correspond to increases in neural activity. We used data from two animals and all hemispheres in the analyses. Using juice rewards, monkeys were trained to sit in a sphinx position in a custom-made chair placed inside the bore of the scanner, and to fixate a central spot presented on a screen 49 cm away. Head position was maintained using surgically implanted custom-made plastic head posts (see Surgical procedures). 
An infrared eye tracker (ISCAN) was used to monitor eye movements, and animals were only rewarded for maintaining their gaze within ∼1 degree of the central fixation target. All imaging and surgical procedures conformed to local and National Institutes of Health guidelines and were approved by the Harvard Medical School, Massachusetts General Hospital, and Wellesley College Institutional Animal Care and Use Committees.

Surgical procedures.

Details of the surgical procedures have been published previously (Lafer-Sousa and Conway, 2013). A plastic headpost was implanted using standard sterile surgical procedures. Animals were anesthetized with ketamine (15 mg/kg, intramuscular) and xylazine (2 mg/kg, intramuscular) and given atropine (0.05 mg/kg, intramuscular) to reduce salivary fluid production. Depth of anesthesia was maintained with 1%–2% isoflurane. Before surgery, animals were given a preemptive dose of buprenorphine (0.005 mg/kg, intramuscular) and flunixin (1.0 mg/kg, intramuscular) as analgesics, and a prophylactic dose of an antibiotic (Baytril, 5 mg/kg, intramuscular). Antibiotic was administered again 1.5 h into surgery; buprenorphine and flunixin were given for 48 h postoperatively. During the surgery, the animals were placed in a stereotaxic holder, and sterile techniques were used to insert ceramic screws and custom-made inverted plastic ‘T’ bolts into the skull. A head post was placed on the surface of the skull and cemented in place with dental acrylic, anchored by the screws and T-bolts. The animals recovered for 2–3 months before resuming training.

Disparity stimuli.

Visual stimuli were displayed on a screen (41° × 31°) 49 cm in front of the animal using a JVC-DLA projector (1024 × 768 pixels). All stimuli contained a small central fixation cross to engage fixation. Stimuli were random-dot stimuli with 15% dot density and a dot size of 0.08° × 0.08° presented on a black background (see Fig. 1A). Stereograms were anaglyphs and viewed through red-blue filter goggles. The luminance of the red dots was 20.42 cd/m² through the red filter and 4 cd/m² through the blue filter. The luminance of the blue dots was 20.35 cd/m² through the blue filter and 1.38 cd/m² through the red filter. Stimuli were presented in a block-design procedure. The repetition time (TR) was 2 s. Each block lasted 32 s (16 TRs). Each run encompassed 13 blocks and lasted 6 min 56 s (208 TRs). Additional experiment-dependent stimulus details are described in the next two sections.
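The display geometry above determines how visual angles map onto projector pixels. The following Python sketch works through that arithmetic under a simplifying assumption that is not stated in the text: pixels are treated as evenly spaced in visual angle across the 41° horizontal extent, so tangent-screen distortion away from fixation is ignored. The function names are illustrative only.

```python
import math

SCREEN_DEG_H = 41.0   # horizontal extent of the screen (deg), from the text
SCREEN_PX_H = 1024    # horizontal projector resolution (pixels), from the text
VIEW_DIST_CM = 49.0   # viewing distance (cm), from the text


def pixels_per_degree():
    """Approximate pixels per degree, assuming uniform spacing in angle."""
    return SCREEN_PX_H / SCREEN_DEG_H


def deg_to_pixels(deg):
    """Convert a visual angle (deg) to an approximate pixel count."""
    return deg * pixels_per_degree()


def screen_width_cm():
    """Width of a flat screen subtending SCREEN_DEG_H at VIEW_DIST_CM."""
    half_angle = math.radians(SCREEN_DEG_H / 2.0)
    return 2.0 * VIEW_DIST_CM * math.tan(half_angle)


if __name__ == "__main__":
    print("pixels per degree:", pixels_per_degree())
    print("dot size in pixels:", deg_to_pixels(0.08))
    print("screen width in cm:", screen_width_cm())
```

Under this approximation, a 0.08° dot spans about two pixels, and the largest disparity used in the experiments (0.22°) corresponds to roughly five and a half pixels.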

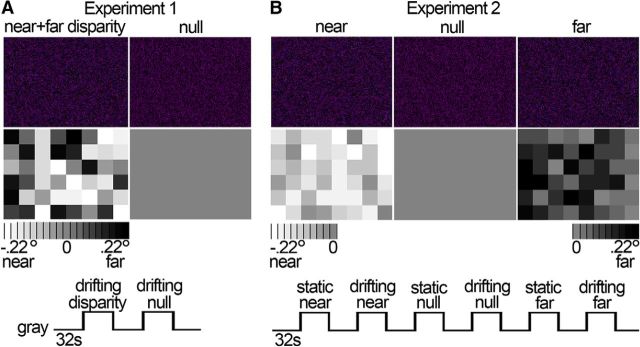

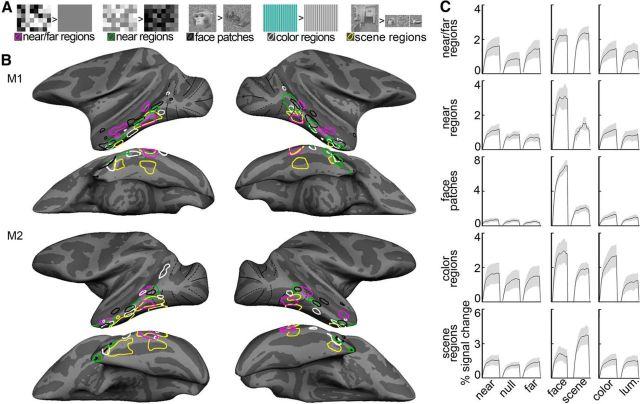

Figure 1.

Stimuli and experimental design. To examine the functional architecture for disparity, we presented stimuli with and without disparity to alert monkeys and measured fMRI responses. A, Experiment 1 served to localize near/far disparity-biased regions. Example anaglyphs depicting static random-dot stereograms are presented (first row), with mixed near and far disparity (leftmost anaglyph) and without disparity (rightmost anaglyph). The actual stimuli in Experiment 1 were drifting random-dot stereograms (see Materials and Methods). When horizontal disparity was added to the stimulus, the stimulus appeared as a checkerboard with each check located at a randomly chosen depth around null disparity (range: −0.22° to 0.22°). The disparity map below each anaglyph shows the disparity associated with each check of the example stimuli as a grayscale value: Light checks appear near (in front of the fixation plane); dark checks appear far (behind the fixation plane). Null stimuli did not contain any disparity. Blocks (32 s) with random-dot stimuli were interleaved with blocks with neutral full-field gray, and blocks with disparity stimuli alternated with blocks with no-disparity stimuli. B, Experiment 2 was used to quantify near/far selectivity in the ROIs defined in Experiment 1 and to localize position-of-depth-biased regions. Example anaglyphs and their disparity maps are presented. The near stimuli consisted of a checkerboard presented in front of the fixation plane (average disparity = −0.11°; range: −0.22° to 0°). The null stimulus contained no disparity variation (disparity = 0°). The far stimulus constituted a checkerboard presented behind the fixation plane (average disparity = 0.11°; range: 0° to 0.22°). Random-dot stimuli could be static or drifting and were presented in blocks of 32 s, interleaved with blocks maintaining constant neutral full-field gray.

Experiment 1: near/far disparity localizer.

IT regions of interest (ROIs) biased for both near and far disparities relative to stimuli without disparity, henceforth denoted as “near/far disparity-biased regions,” were obtained using a localizer experiment in which the monkeys viewed drifting random-dot stimuli with either zero disparity or mixed near and far disparities (see Fig. 1A). Stimuli contained dots moving at 2.2°/s, changing direction every 2 s. Disparity stimuli appeared as checkerboards with an average disparity of 0° and consisted of 8 × 6 checks (3.5° × 3.5° square) with a randomly chosen disparity with a range of ± 0.22° around null disparity. Blocks with random-dot stimuli were interleaved with blocks with neutral full-field gray of constant mean luminance; and random-dot stimulus blocks alternated between disparity and null blocks. We obtained a total of 10 runs for Monkey M1 (1 experimental session) and 41 runs for Monkey M2 (2 experimental sessions). The data from this experiment were used to define near/far disparity-biased ROIs; activity in these ROIs was quantified using independently acquired data obtained in Experiment 2.
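The checkerboard layout described above can be sketched as a small disparity map with one value per check. The Python sketch below assumes a uniform distribution over the stated range (the text says only that each disparity was "randomly chosen") and uses negative values for near disparities, following the sign convention of the Figure 1 legend. `make_disparity_map` and its parameters are hypothetical names, not part of the original stimulus code.

```python
import random


def make_disparity_map(n_cols=8, n_rows=6, mean=0.0, half_range=0.22, seed=None):
    """Return an n_rows x n_cols grid of per-check disparities in degrees.

    Each check is drawn uniformly from [mean - half_range, mean + half_range].
    The uniform distribution is an assumption of this sketch.
    """
    rng = random.Random(seed)
    return [
        [mean + rng.uniform(-half_range, half_range) for _ in range(n_cols)]
        for _ in range(n_rows)
    ]


if __name__ == "__main__":
    # Mixed near/far localizer stimulus: mean 0 deg, range +/- 0.22 deg.
    for row in make_disparity_map(seed=1):
        print(" ".join(f"{d:+.2f}" for d in row))
```

Setting `mean=-0.11` and `half_range=0.11` yields the near-only range of −0.22° to 0° given in the Figure 1 legend; `mean=0.11` yields the corresponding far range.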

Experiment 2: quantifying near/far disparity-biased responses, and measuring responses to near and far disparities separately.

In this experiment, we presented a total of six different blocks of random-dot stimuli (see Fig. 1B); half of the blocks were static and half were dynamic, and these alternated in the course of a single run. Stimuli appeared either as checkerboards in front of (near) or behind (far) the plane of fixation; or were uniform fields at the plane of fixation (null). Dynamic stimuli contained dots moving at 2.2°/s, changing direction every 2 s. When horizontal disparity was added to the static or dynamic random-dot stimulus, the stimulus appeared as a checkerboard consisting of 8 × 6 checks with each check being a 3.5° × 3.5° square. The mean disparity of the checkerboard was either 0.11° near (crossed) or 0.11° far (uncrossed). Checks had a randomly chosen disparity with a range of ±0.11° around the mean disparity. Blocks with random-dot stimuli were interleaved with blocks of full-field gray that maintained constant average luminance across the run. Blocks were presented in one order (gray, static near, gray, drifting near, gray, static null disparity, gray, drifting null disparity, gray, static far, gray, drifting far, gray). We obtained 23 runs for Monkey M1 and 26 runs for Monkey M2. The data from Experiment 2 served to probe disparity-biased activity in two different ways: First, by contrasting the responses to both near and far disparity stimuli with responses to stimuli without disparity (i.e., near + far vs null), using the near/far disparity-biased ROIs obtained in Experiment 1. Second, by contrasting the responses to stimuli with near and far disparity separately, which allowed us to examine potential cortical activity biases for near or far positions in depth. In all analyses, ROI definition and response quantification were conducted using independent data (see below).
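The fixed block order given above can be written out as a run schedule. The Python sketch below builds the 13-block sequence and attaches an onset time to each block, using the 2 s TR and 16-TR block length from the Disparity stimuli section. The names `STIM_ORDER` and `build_schedule` are illustrative and are not taken from the original presentation software.

```python
TR_S = 2.0           # repetition time in seconds, from the text
TRS_PER_BLOCK = 16   # block length in TRs, from the text

# Stimulus blocks in the order listed in the text; gray blocks are added
# before, between, and after them.
STIM_ORDER = [
    "static near", "drifting near",
    "static null", "drifting null",
    "static far", "drifting far",
]


def build_schedule(stim_order=STIM_ORDER):
    """Return a list of (onset_seconds, condition), one entry per block."""
    blocks = ["gray"]
    for condition in stim_order:
        blocks.extend([condition, "gray"])
    block_s = TR_S * TRS_PER_BLOCK
    return [(i * block_s, condition) for i, condition in enumerate(blocks)]


if __name__ == "__main__":
    for onset, condition in build_schedule():
        print(f"{onset:6.0f} s  {condition}")
```

Six stimulus blocks interleaved with seven gray blocks give 13 blocks, or 208 TRs, per run.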

ROI definitions.

We defined functional regions biased for colors, faces, scenes, visual field eccentricity, near/far disparity, and near disparity. Regions biased for color, face, scene, or eccentricity were defined using previously published data (Lafer-Sousa and Conway, 2013) obtained in the same animals in which we conducted the disparity experiments described presently; near disparity-biased regions were defined using Experiment 2. Full details regarding the face, place, color, and eccentricity stimuli are given in Lafer-Sousa and Conway (2013). The images of objects and scenes were static grayscale. Blocks of intact images were interleaved with blocks of scrambled versions of the same images. Color stimuli consisted of low-spatial frequency drifting color-gray or achromatic (black-and-white) gratings. Each block comprised a single color or achromatic condition; there were 12 color conditions that evenly sampled DKL color space (Derrington et al., 1984). Eccentricity stimuli consisted of discs and annuli of zero-disparity checkerboards centered on the fixation spot. The central disc had a radius of 1.5°, and the annuli were 1.5°–3.5°, 3.5°–7°, and 7°–20°. Checkerboards comprised checks of either magenta and cyan, blue and yellow, or black and white. Responses to each eccentricity were averaged across checkerboard hues. In all cases, to avoid “double-dipping” (Kriegeskorte et al., 2009), ROIs were defined in each animal individually, using half the data runs, and selectivity indices were computed in the ROIs using the other half of the data (indices are described below). Significance thresholds to define ROIs were set to yield approximately the same number of voxels in each hemisphere (left hemisphere/right hemisphere, M1: p = 10⁻⁵, 10⁻³; M2: p = 10⁻⁷, 10⁻⁵), and ROIs were defined as a set of contiguous voxels with suprathreshold significance values.
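The final step above, grouping suprathreshold voxels into contiguous sets, can be illustrated with a simple connected-components search. The Python sketch below makes several assumptions that the text does not specify: voxels live on a 3D integer grid, "contiguous" means sharing a face (6-connectivity), and "suprathreshold" means a p value below the chosen threshold. `contiguous_rois` is a hypothetical helper and is not taken from FS-FAST or any other named toolkit.

```python
from collections import deque

# Face-sharing neighbors on a 3D grid (6-connectivity, assumed).
NEIGHBOR_OFFSETS = [
    (1, 0, 0), (-1, 0, 0),
    (0, 1, 0), (0, -1, 0),
    (0, 0, 1), (0, 0, -1),
]


def contiguous_rois(pvals, threshold):
    """Group suprathreshold voxels into contiguous clusters.

    pvals: dict mapping (x, y, z) voxel coordinates to p values.
    Returns a list of sets of coordinates, one set per cluster.
    """
    supra = {voxel for voxel, p in pvals.items() if p < threshold}
    seen = set()
    rois = []
    for start in sorted(supra):
        if start in seen:
            continue
        cluster = set()
        queue = deque([start])
        seen.add(start)
        while queue:  # breadth-first flood fill from the start voxel
            x, y, z = queue.popleft()
            cluster.add((x, y, z))
            for dx, dy, dz in NEIGHBOR_OFFSETS:
                nb = (x + dx, y + dy, z + dz)
                if nb in supra and nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        rois.append(cluster)
    return rois
```

Using a different threshold per hemisphere, as described above, would amount to calling the function once per hemisphere with that hemisphere's voxels and threshold.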

Near/far disparity-biased ROIs were defined by contrasting the responses to stimuli with mixed near and far disparity with responses to stimuli without disparity, using data obtained in Experiment 1. Near disparity-biased regions were defined by contrasting the responses to stimuli with near disparity with responses to stimuli with far disparity, using data obtained in Experiment 2. The near disparity-biased regions were defined using even-numbered runs and quantified using odd-numbered runs obtained in the same scan sessions (similar results were obtained using other data partitions). Face patches were defined by comparing the responses to achromatic images of faces with the responses to achromatic images of bodies. Color-biased ROIs were defined by comparing the responses to achromatic gratings with the responses to equiluminant chromatic gratings. Scene-biased regions were defined in a conjunctive way as those brain regions that were activated significantly more in response to achromatic images of scenes than to achromatic images of faces, achromatic images of objects, as well as scrambled scenes (Lafer-Sousa and Conway, 2013). The conjunction analysis required voxels to significantly increase their response to images of scenes in all three considered contrasts (i.e., scenes vs faces, scenes vs objects, and scenes vs scrambled scenes). Finally, visual field biased regions were defined as those that showed differential responses to the foveal/central annuli (radius 3.5°) compared with the peripheral annuli (radius 7° + 20°).
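Two steps from the paragraph above lend themselves to a short illustration: splitting runs by parity so that ROI definition and quantification use independent data, and the conjunction rule for scene-biased voxels. The Python sketch below assumes runs are numbered from 1 and that each contrast map stores a signed effect together with its p value; both the data layout and the function names are hypothetical.

```python
def split_runs_by_parity(run_ids):
    """Return (even_runs, odd_runs), e.g. for ROI definition vs quantification."""
    even = [r for r in run_ids if r % 2 == 0]
    odd = [r for r in run_ids if r % 2 == 1]
    return even, odd


def conjunction_mask(contrast_maps, threshold):
    """Voxels with a significant positive effect in every contrast.

    contrast_maps: list of dicts mapping voxel id -> (effect, p_value),
    where a positive effect means a stronger response to the first
    condition of the contrast (e.g. scenes in "scenes vs faces").
    """
    common = set.intersection(*(set(m) for m in contrast_maps))
    return {
        voxel
        for voxel in common
        if all(m[voxel][0] > 0 and m[voxel][1] < threshold for m in contrast_maps)
    }
```

For the scene-biased regions, `contrast_maps` would hold the three maps named in the text (scenes vs faces, scenes vs objects, and scenes vs scrambled scenes); a voxel that fails any one of them is excluded.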

fMRI data processing.

High-resolution anatomical scans (0.35 × 0.35 × 0.35 mm³ voxels) were obtained for each animal while it was lightly sedated. Significance maps generated from the functional data were rendered on inflated surfaces of each animal's anatomical volume. Data analysis was performed using FREESURFER and FS-FAST software (http://surfer.nmr.mgh.harvard.edu/), the custom “jip” toolkit provided by J. Mandeville (http://www.nitrc.org/projects/jip/), and custom scripts written in MATLAB (MathWorks). The surfaces of the high-resolution structural volumes were reconstructed and inflated using FREESURFER; functional data were motion corrected with the AFNI motion correction algorithm (Cox and Hyde, 1997), spatially smoothed with a Gaussian kernel (full-width at half maximum = 2 mm), and registered to each animal's own anatomical volume using jip. The fMRI images were processed using standard alert monkey fMRI processing techniques: images were first normalized to correct for signal intensity changes and temporal drift, and t tests uncorrected for multiple comparisons were performed to construct statistical activation maps based on a general linear model (Tsao et al., 2003b; Op de Beeck et al., 2008; Lafer-Sousa and Conway, 2013). Activation was thresholded at significance levels indicated in the figures by a color scale bar. Activation maps were then projected on high-resolution anatomical volumes and surfaces. Time courses were calculated by first detrending the fMRI response. The temporal drift often associated with fMRI signals was modeled by a second-order polynomial as follows:

$$x(t) = S(t) + at^2 + bt + c$$

where x(t) is the raw fMRI signal and S(t) is the detrended signal. The coefficients a–c were calculated using the MATLAB function polyfit. The percentage deviation of the fMRI signal, s′(t), reported as the y-axis values in the time course traces, was calculated by s′(t) = 100 × (s(t) − s̄)/s̄, where s(t) = smoothed(S(t) + c), t = 1, 2, …, N, s̄ is the mean of s(t), and N is the number of repetition times in the experiment. The constant c was added to S(t) to avoid division-by-zero errors. Smoothing was performed using the MATLAB function smooth with a moving average of 3 TRs.
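The detrending and normalization steps described above can be sketched in plain Python. This is not the authors' MATLAB code. It assumes that the drift model is a least-squares quadratic a·t² + b·t + c fitted to the raw signal, that S(t) + c is the raw signal minus the a·t² and b·t terms, and that the 3-TR moving average is centered and shrinks symmetrically at the two ends of the series. Time is rescaled to (0, 1] purely for numerical conditioning, which leaves the intercept c unchanged. All function names are illustrative.

```python
def fit_quadratic(x):
    """Least-squares fit x[i] ~ a*u**2 + b*u + c, with u = (i + 1) / N."""
    n = len(x)
    u = [(i + 1) / n for i in range(n)]
    cols = [[v * v for v in u], u, [1.0] * n]
    # Augmented normal equations: (A^T A | A^T x) for columns [u^2, u, 1].
    m = []
    for ci in cols:
        row = [sum(p * q for p, q in zip(ci, cj)) for cj in cols]
        row.append(sum(p * q for p, q in zip(ci, x)))
        m.append(row)
    # Gaussian elimination with partial pivoting.
    for k in range(3):
        pivot = max(range(k, 3), key=lambda r: abs(m[r][k]))
        m[k], m[pivot] = m[pivot], m[k]
        for r in range(k + 1, 3):
            f = m[r][k] / m[k][k]
            m[r] = [vr - f * vk for vr, vk in zip(m[r], m[k])]
    # Back substitution.
    coef = [0.0, 0.0, 0.0]
    for k in (2, 1, 0):
        tail = sum(m[k][j] * coef[j] for j in range(k + 1, 3))
        coef[k] = (m[k][3] - tail) / m[k][k]
    return coef, u  # coef = [a, b, c]


def smooth3(y):
    """Centered 3-sample moving average; end points are left unsmoothed."""
    n = len(y)
    out = []
    for i in range(n):
        h = min(1, i, n - 1 - i)  # half-width shrinks to 0 at the ends
        window = y[i - h:i + h + 1]
        out.append(sum(window) / len(window))
    return out


def percent_deviation(x):
    """Return s'(t) = 100 * (s(t) - mean(s)) / mean(s) for a raw series x."""
    (a, b, _c), u = fit_quadratic(x)
    # S(t) + c equals the raw signal minus the quadratic and linear drift terms.
    s = smooth3([xi - a * ui * ui - b * ui for xi, ui in zip(x, u)])
    mean_s = sum(s) / len(s)
    return [100.0 * (si - mean_s) / mean_s for si in s]
```

A series that consists only of slow quadratic drift should come out close to zero everywhere, which is a convenient sanity check for the fit.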

The signal, averaged across all voxels associated with a given ROI, was first detrended and smoothed as described above. The response during a given stimulus block was then calculated using the average of the last seven repetition times of the stimulus block to avoid confounds introduced by the hemodynamic delay. Suppose that the stimulus occurred during the kth block in a run, the block immediately preceding and immediately following this stimulus block would be gray. We denote the response to the stimulus block as Rk, which is the mean of the response during the ninth to the 16th samples, and the response to the neighboring gray blocks as Rk−1 and Rk+1. The percentage signal change due to the stimulus block was then defined by the following:

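$$\text{Percentage signal change} = 100 \times \frac{R_k - \frac{1}{2}\left(R_{k-1} + R_{k+1}\right)}{\frac{1}{2}\left(R_{k-1} + R_{k+1}\right)}$$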
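As a hedged illustration of the block-wise quantification, the Python sketch below averages a window of samples within each 16-TR block and expresses the stimulus response relative to the mean of the two neighboring gray blocks. Normalizing by the gray-block mean is an assumption of this sketch, as is applying the same averaging window to gray and stimulus blocks. The window is left as a parameter, with defaults that follow the "ninth to the 16th samples" wording. Function names are hypothetical.

```python
def block_mean(signal, block_index, trs_per_block=16, first=9, last=16):
    """Mean of samples first..last (1-based, inclusive) within one block.

    block_index is 0-based: block 0 covers samples 0..trs_per_block - 1.
    """
    start = block_index * trs_per_block
    window = signal[start + first - 1:start + last]
    return sum(window) / len(window)


def percent_signal_change(signal, k, **window):
    """Response in block k relative to the neighboring gray blocks k-1 and k+1.

    Assumes 1 <= k and that block k + 1 lies within the signal.
    """
    r_k = block_mean(signal, k, **window)
    r_prev = block_mean(signal, k - 1, **window)
    r_next = block_mean(signal, k + 1, **window)
    baseline = 0.5 * (r_prev + r_next)
    return 100.0 * (r_k - baseline) / baseline
```

Because every stimulus block in the design is flanked by gray blocks, the function can be applied to each stimulus block of a run in turn.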

Selectivity indices were computed as Selectivity = (R1 − R2)/(R1 + R2), in which R1 and R2 are the responses to two different stimulus conditions for a given ROI (e.g., disparity vs no disparity; see Fig. 5). Responses (averaged over the last seven repetition times in a stimulus block) were calculated against the response to the intervening gray block as described above. If any of the responses that make up R1 or R2 were negative, a value was added to these responses to raise them such that they were zero or positive (Simmons et al., 2007). Mean selectivity indices are presented in the bar plots of Figures 5, 7, and 11 and were determined by averaging the selectivity indices of the functionally defined regions in all four hemispheres. The selectivity indices associated with the ROIs in all four hemispheres were used to compute standard errors of the mean (SEM; see bar plots) and perform nonparametric statistical tests.
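A minimal Python sketch of the selectivity computation follows, assuming the index takes the contrast form (R1 − R2)/(R1 + R2) used in the figure legends. The text does not specify how large the added value was when a response was negative; this sketch assumes the smallest shift that makes both responses non-negative. Returning 0 when both shifted responses are zero is a choice made here to avoid division by zero and is not taken from the source.

```python
def selectivity_index(r1, r2):
    """Contrast index (r1 - r2) / (r1 + r2) with a non-negativity shift.

    If either response is negative, both are raised by the same amount so
    that the smaller one becomes zero (assumed minimal shift).
    """
    shift = max(0.0, -min(r1, r2))
    r1, r2 = r1 + shift, r2 + shift
    if r1 + r2 == 0:
        return 0.0  # both responses are zero: no preference (sketch convention)
    return (r1 - r2) / (r1 + r2)
```

With this shift the index is bounded between −1 and 1, which keeps values comparable across ROIs whose responses differ in sign.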

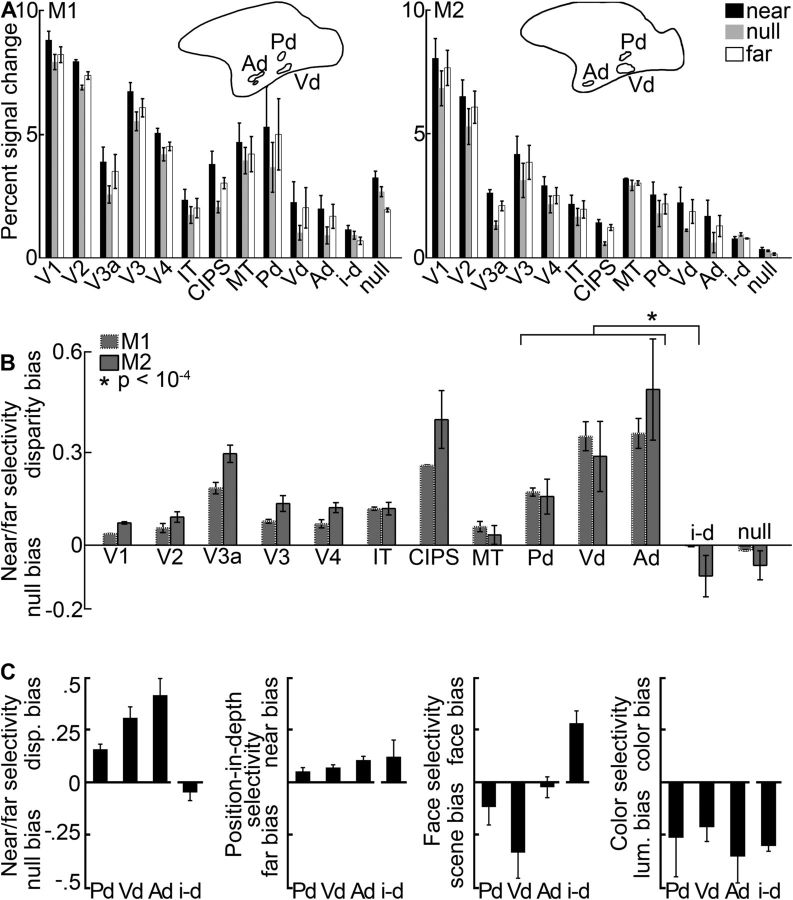

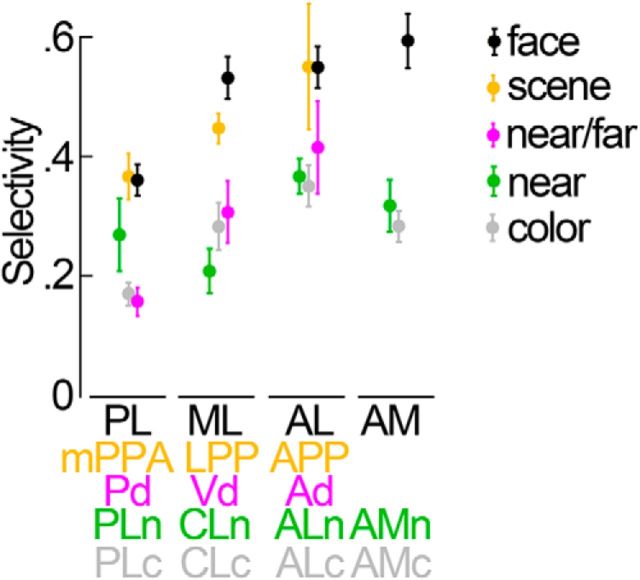

Figure 5.

Quantification of the disparity-biased activity in an independent dataset. Quantification is based on the data from Experiment 2 in ROIs defined in Experiment 1. A, Average percentage signal change to stimuli with near disparity (black), far disparity (white), and without disparity (gray) in regions in and outside of IT. Left, Monkey M1. Right, Monkey M2. Pictograms indicate the location of IT's near/far disparity-biased regions in each subject. B, Average selectivity per area and monkey. The near/far disparity selectivity index was defined as (near + far disparity − null)/(near + far disparity + null). Positive values correspond to increased responses to stimuli with both near and far disparity (disparity bias); negative values indicate stronger responses to stimuli without disparity (null bias). The average disparity selectivity in the interstitial disparity patches (i-d) was significantly smaller than that in the disparity regions (p = 10⁻⁴, unpaired permutation t test comparing the disparity selectivity indices from all hemispheres' near/far disparity-biased regions [N = 12] with all hemispheres' i-d regions [N = 4]; see asterisk). C, From left to right, Near/far selectivity, position-in-depth selectivity, face/scene selectivity, and color selectivity in the interstitial- and disparity-biased regions in IT. Error bars indicate SEM. Pd, Posterior; Vd, ventral; Ad, anterior near/far disparity-biased region; i-d, interstitial region; null, null-biased voxels.

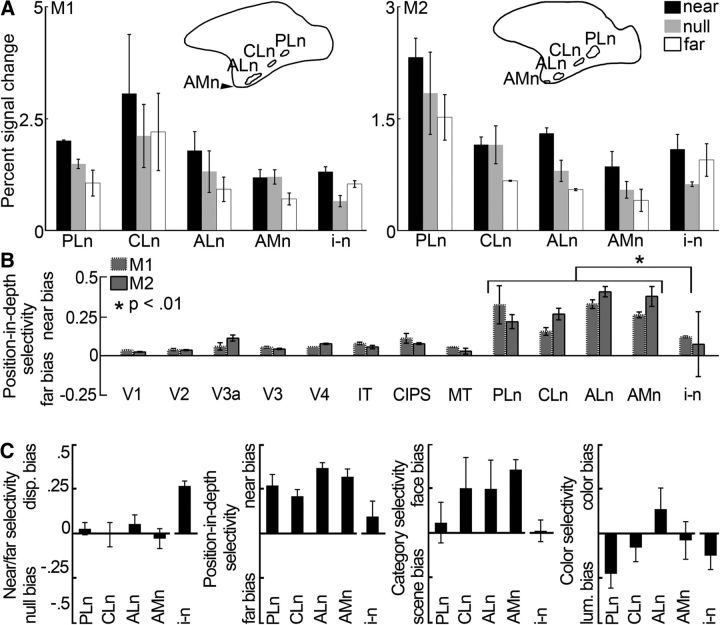

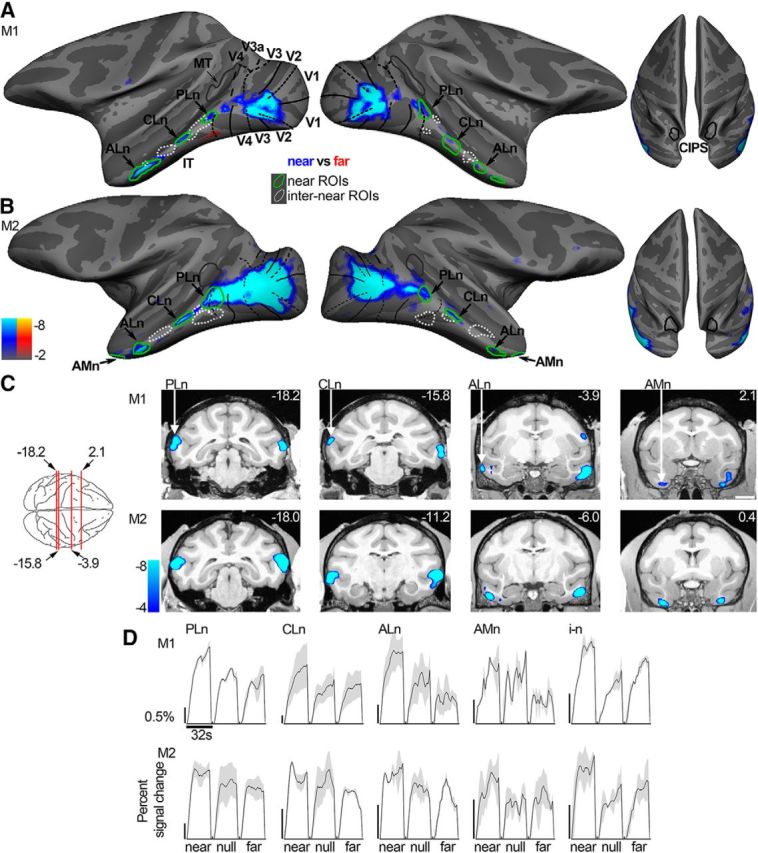

Figure 7.

Quantification of the near disparity-biased activity in an independent dataset. Quantification was performed on half of the data from Experiment 2. ROIs were defined using the other half of the data from Experiment 2. A, Average percentage signal change to stimuli with near disparity (black), far disparity (white), and without disparity (gray) in the near disparity-biased ROIs in IT. PLn, Posterolateral; CLn, centrolateral; ALn, anterolateral; AMn, anteromedial; i-n, interstitial near disparity-biased region. Left, Monkey M1. Right, Monkey M2. Pictograms represent the location of IT's near disparity-biased regions for each monkey. B, Average position-in-depth selectivity, defined as the responses to (near − far)/(near + far) (see Materials and Methods), per area and monkey. Positive values correspond to increased responses to near stimuli (near bias); negative values indicate stronger responses to far stimuli (far bias). Some near disparity-biased activity was observed in all examined areas. Responses in the three near/far disparity-biased regions Pd, Vd, and Ad were near biased (p < 0.001 bootstrap t test on the near-selectivity indices associated with the near/far disparity-biased regions of all four hemispheres, N = 12, N = 3 per hemisphere), although less pronounced than in the near disparity-biased regions (p < 10⁻⁶, unpaired permutation t test on the position-in-depth selectivity indices of the near [N = 16] and near/far disparity-biased [N = 12] regions). The average near bias in the interstitial IT region i-n was smaller than that in the near disparity-biased IT regions (see asterisk; p = 0.007, unpaired permutation t test comparing the position-in-depth selectivity indices from all four hemispheres' near disparity-biased regions [N = 16] with those of the interstitial regions of all four hemispheres [N = 4]). 
C, From left to right, Near/far selectivity, position-in-depth selectivity, face/scene selectivity, and color selectivity in the interstitial- and near disparity-biased regions in IT. Error bars indicate SEM.

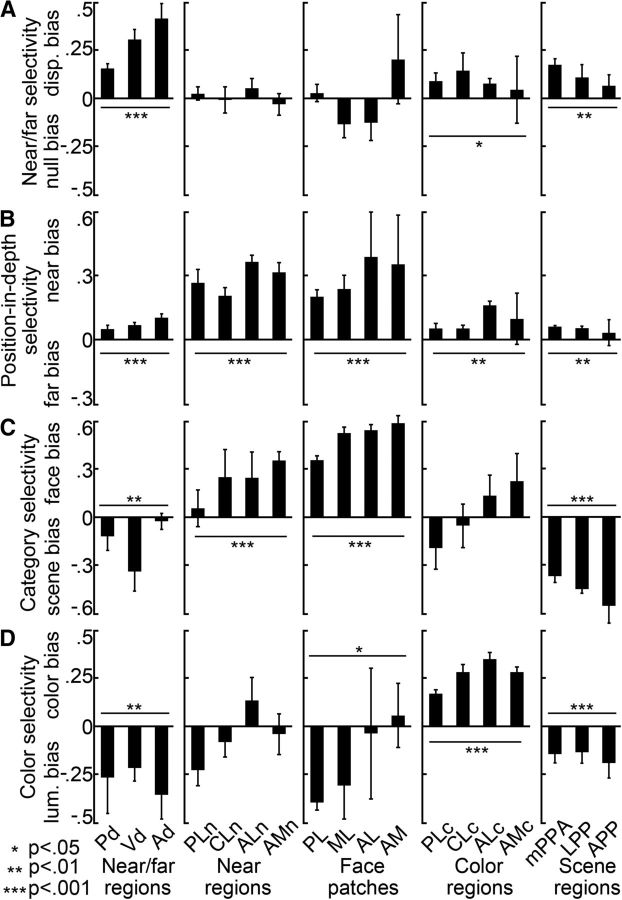

Figure 11.

Quantification of near/far, position-in-depth, face, scene, and color selectivity in IT in independent datasets. Selectivity indices were computed using half of the data. The selectivity indices for the near/far disparity-biased regions in IT are shown in the first column. Second column, Near disparity-biased regions in IT. Third column, Face patches in IT. Fourth column, Color-biased regions in IT. Fifth column, Scene-biased regions in IT. A, Near/far selectivity (near + far disparity − null)/(near + far disparity + null), in the different IT patches. Activity in the color- and scene-biased regions was near/far disparity biased (p = 0.02 on the near/far selectivity indices of the N = 16 color regions of all four hemispheres; p = 0.003 for the N = 12 scene-biased regions; bootstrap t test), but less selective than the IT's near/far disparity-biased regions (p = 0.003 for the color regions; p = 0.004 for the scene-biased regions; unpaired permutation t test). B, Position-in-depth selectivity (near disparity − far disparity)/(near disparity + far disparity), in the different IT patches. All patches, regardless of their definition (i.e., face, color, scene, or stereo), demonstrated a preference for nearer stimuli (p < 0.01 for face, color, scene, and disparity patches; bootstrap t test). The near bias in the color-, scene-, and near/far disparity-biased regions was small compared with that in the near disparity-biased regions (p < 10⁻⁵, unpaired permutation t test). The preference for near stimuli was comparable in the face- and near disparity-biased regions (p = 0.9, unpaired permutation t test). C, Face/scene category selectivity in the different IT patches. Face/scene selectivity was defined as the responses to (faces − scenes)/(faces + scenes), with positive values corresponding to a preference for faces and negative values corresponding to a preference for scenes. 
Responses in near disparity-biased regions were face selective (p < 10⁻⁴, bootstrap t test), but not as face selective as the responses in the face-biased regions of IT (p = 3 × 10⁻⁴, unpaired permutation t test on the face-selectivity indices corresponding to all near biased, N = 16, and all face-biased, N = 16, regions of all hemispheres). Responses in the near/far disparity-biased regions were on average scene-biased (p < 0.01). D, Color selectivity in the different IT regions. Color selectivity was defined as the responses to (weakest color − luminance)/(weakest color + luminance), with positive values corresponding to a preference for colors. Color selectivity was highest in the color-biased brain regions (p < 2 × 10⁻⁴ for the comparisons between the color-selectivity indices of the near/far disparity-, near disparity-, face-, scene-biased regions, and those of the color-biased regions; unpaired permutation t test). The near disparity-, near/far disparity-, face-, and scene-biased regions were generally more strongly activated by achromatic stimuli than by colored stimuli (p < 0.05 for the near/far disparity-, face-, and scene-biased regions; p > 0.05 for the near disparity-biased regions; bootstrap t test). Asterisks refer to the results of a statistical test comparing the average selectivity within an area to zero (bootstrap t test): *p < 0.05, **p < 0.01, ***p < 0.001. Error bars indicate SEM. Same ROI-naming conventions as before.
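The figure legends above report unpaired permutation tests on selectivity indices from two sets of regions. The text does not give implementation details, so the Python sketch below is only one plausible form: a Monte Carlo permutation test that uses the absolute difference of group means as its statistic. For a fixed pooled sample, the pooled-variance t statistic is a monotone function of that difference, so the two statistics should rank permutations identically, but this substitution is a simplification made here and not the authors' stated method. The number of permutations, the seed, and the function name are all assumptions.

```python
import random


def permutation_test(a, b, n_perm=2000, seed=0):
    """Two-sided Monte Carlo permutation test on the difference of means.

    Returns an estimated p value, (count + 1) / (n_perm + 1), where count is
    the number of shuffles whose absolute mean difference is at least as
    large as the observed one.
    """
    rng = random.Random(seed)

    def mean(values):
        return sum(values) / len(values)

    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    n_a = len(a)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed - 1e-12:  # small tolerance for floating-point ties
            count += 1
    return (count + 1) / (n_perm + 1)
```

With group sizes such as 12 and 4 there are only 1820 distinct ways to split the pooled indices, so the smallest p value an exact test could return is bounded by that count.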

Results

The ventral visual stream consists of a set of brain regions that starts in V1 and ends in anterior IT. We used fMRI to determine the functional architecture for disparity processing along the ventral visual stream of alert rhesus monkeys while they maintained fixation during passive exposure to full-field stimuli with different disparities. The stimuli were random-dot stereograms, in which the dot pattern seen by one eye is horizontally displaced relative to the pattern seen by the fellow eye (Julesz, 1964). Depending on the direction of displacement, the depth percept is either of a surface appearing in front of the fixation plane (“near”) or behind it (“far”) (Fig. 1). We ran two sets of disparity experiments: the first experiment (Fig. 1A) was used as a localizer to define ROIs that responded more to both near and far disparities relative to stimuli without disparity, denoted as “near/far” disparity-biased regions. The localizer comprised stimulus blocks of drifting null-disparity random-dot stimuli and blocks of drifting random-dot disparity checkerboards (the checks in a given random-dot stimulus were randomly assigned to be of near or far disparity; Fig. 1A; see Materials and Methods). The second experiment was used to quantify responses within the near/far disparity-biased ROIs and to test for biases in the representation of near versus far disparities. We conducted these additional tests, prompted by psychophysical and neurophysiological observations suggesting that under some circumstances “near” disparities are privileged (Landers and Cormack, 1997; Tanabe et al., 2005). As described below, the results from Experiment 2 showed a strong bias for near disparities in certain portions of IT; the data in Experiment 2 were therefore used to define “near disparity-biased” regions. In the second experiment, we measured responses to checkerboards that comprised either all near-disparity checks or all far disparity checks (and were either drifting or static) (Fig. 
1B; see Materials and Methods). The stimuli used in the two sets of experiments were chosen for comparison with a previous fMRI study that examined disparity processing in the occipitoparietal cortex of humans and monkeys (Tsao et al., 2003b). Stimuli were presented in 32 s blocks that were interleaved with blocks of neutral full-field gray stimuli maintaining constant mean luminance. To delineate the retinotopic areas encompassing the ventral pathway, we used fMRI to map the visual meridian representations and computationally inflated the brain to show the cortical activity buried in sulci. The retinotopic data have been reported in a previous study and are repeated here for convenience (Lafer-Sousa et al., 2012; Lafer-Sousa and Conway, 2013).

Functional architecture for disparity: near/far disparity-biased regions

We first defined disparity-biased regions as those parts of cortex that showed stronger activation to random-dot stimuli with near and far disparity than to random-dot stimuli without disparity (Experiment 1; “near/far vs null”). Figure 2A shows data on computationally inflated surfaces for one animal. The locations of the near/far disparity-biased regions in Experiment 1 were consistent with those identified in Experiment 2, in which we compared the average response to the near and far conditions against the responses to the null (zero) disparity condition (Fig. 2B). Experiments 1 and 2 were conducted ∼6 months apart; the consistency in the activation patterns to the disparity stimuli obtained in these two experiments shows that the organization for disparity is stable over time. To provide the clearest possible representation of the functional organization for disparity-biased regions, Figure 2C, D shows the pattern of disparity-biased activity for each animal averaged over the two sets of experiments. The locations of the near/far disparity-biased regions relative to major anatomical landmarks were generally similar across the two monkeys and the four hemispheres, but the activity was somewhat stronger in the left hemisphere of each monkey (Fig. 2, left column of panels). In agreement with previous fMRI studies (Tsao et al., 2003b; Joly et al., 2009; Hubel et al., 2015), we observed strong disparity activation in regions of occipital and posterior-parietal cortex, including areas V3a and caudal intraparietal sulcus (CIPS) (Fig. 2, solid arrowheads), and in parts of prefrontal cortex between the posterior part of the principal sulcus and the inferior ramus of the arcuate sulcus. V1, V2, and V3 showed more variable responses to the disparity stimulus across the two animals, in agreement with previous reports (Tsao et al., 2003b).

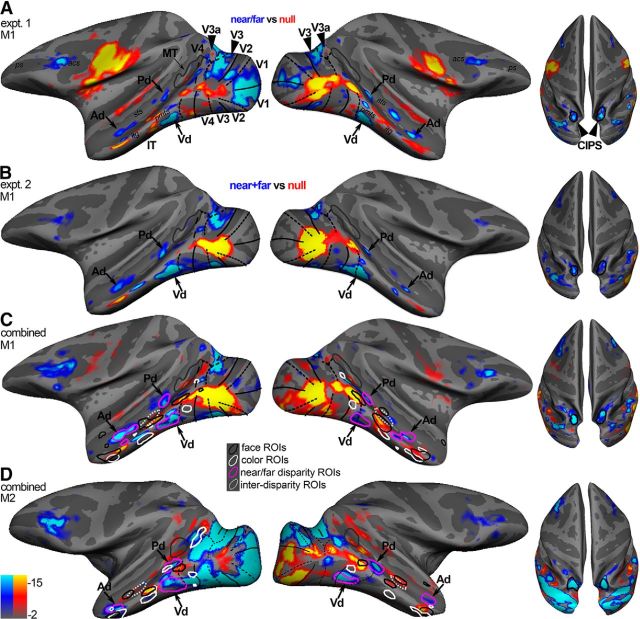

Figure 2.

Near/far disparity-biased activity in the ventral visual stream. A, Near/far disparity-biased activity in Monkey M1 in Experiment 1. The computationally inflated surfaces show the near/far disparity-biased activity for Monkey M1 in blue/cyan and the “null-biased” activity (i.e., stronger responses to stimuli without disparity) in yellow/red. The disparity-biased activity of Experiment 1 was used to define the three near/far disparity-biased regions in IT: Pd, Vd, and Ad near/far disparity-biased region. Black solid lines indicate horizontal meridians. Black dotted lines indicate vertical meridians. Gray outlines indicate MT. Left, Lateral views of each hemisphere. Right, Top views of the brain showing the activity in CIPS (black outlines). B, Near/far disparity-biased activity in Monkey M1 in Experiment 2. The inflated surfaces show those voxels that responded more to disparity stimuli (i.e., near and far stimuli) in blue/cyan (near/far disparity-biased) and voxels that responded more to stimuli without disparity in yellow/red (null-biased). The locations of the near/far disparity-biased IT regions in Experiment 2 agreed well with those of Experiment 1. The activity in Experiment 2 was used to quantify near/far disparity-biased activity in the near/far disparity-biased ROIs derived in Experiment 1. C, Surfaces display the combined activity from Experiment 1 and Experiment 2 for Monkey M1. Magenta closed contours represent the near/far disparity-biased regions, as defined in Experiment 1, in each hemisphere. White dotted closed contours represent the interstitial disparity regions (i-d) located on the STS in between the near/far disparity regions. The locations of the color-biased regions (white contours) and face patches (black contours), measured in the same animals, are given to provide functional landmarks. D, Surfaces display the combined activity from Experiment 1 and Experiment 2 for Monkey M2. 
Acs, Arcuate sulcus; itg, inferior temporal gyrus; pmts, posterior middle temporal sulcus; ps, principal sulcus; sts, superior temporal sulcus.

Consistent disparity-biased activation was also found in portions of IT, anterior to area V4. In all four hemispheres, disparity-biased activity was seen just anterior to ventral V4 on the inferotemporal gyrus, within posterior IT near the posterior middle temporal sulcus (PMTS) (“Vd,” Fig. 2). More dorsally, disparity-biased activity was found in parts of the fundus of the superior temporal sulcus and the ventral bank of the superior temporal sulcus (“Pd” and “Ad,” Fig. 2); the near/far disparity-biased regions (Fig. 2C,D, magenta contours) were separated by an interstitial “i-d” region that did not show an obvious near/far disparity bias (Fig. 2C,D, dotted ROI). In addition to anatomical landmarks, Figure 2C, D also shows functional landmarks: the color-biased regions (white contours) and face patches (black contours), measured in the same animals. The relationship between the various activation patterns will be described in a subsequent section.

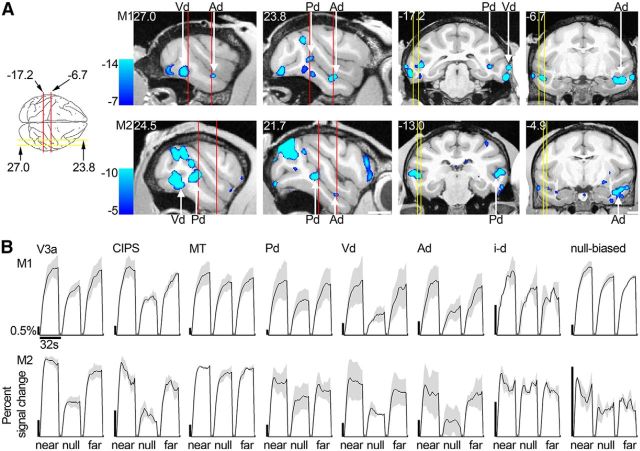

Figure 3A shows the disparity-biased activation used to generate Figure 2C, D on sagittal (left four panels) and coronal (right four panels) slices (M1, top row; and M2, bottom row). The average time courses of the responses obtained in Experiment 2 in the three IT disparity patches (Pd, Vd, and Ad, defined using data from Experiment 1) are given in Figure 3B and show higher activity for near and far stimuli than for stimuli without disparity. For comparison, Figure 3B also shows the time course of activation in V3a, CIPS, and middle temporal area (MT).

Figure 3.

Near/far disparity-biased activity: location and time course. A, Left four brain sections represent near/far disparity-biased activation in sagittal slices at locations indicated by the yellow lines on the top-down view of the brain schematic (or nearby for Monkey M2). Right four panels represent this disparity-biased activation in coronal sections corresponding to the red lines of the brain schematic (or nearby for M2). First row, Monkey M1. Second row, Monkey M2. Slices are given in Talairach coordinates (mm). Functional activation in these slices is based on the combined data from Experiments 1 and 2 and superimposed on a high-resolution anatomical scan. B, Time course of the average percentage signal change in V3a, CIPS, MT, the near/far disparity-biased regions Pd, Vd, Ad, the interstitial disparity patch i-d, and the null-biased voxels. Time courses are given for the near, null, and far stimuli presented in Experiment 2. The average time courses of the different regions are scaled to be of approximately the same height for proper comparison. Black scale bars on the left of each panel represent the 0.5% signal change; note the different lengths. First row, Monkey M1. Second row, Monkey M2. Blocks lasted 32 s. Areas V3a, CIPS, and the near/far disparity-biased regions exhibited increased activity to near and far stimuli compared with stimuli without disparity. Area MT and the interstitial STS regions in between the anterior and posterior near/far disparity regions (i-d) did not show this activation pattern. The null-biased voxels increased their activity predominantly in response to near stimuli, relative to stimuli without disparity. Shading over the lines indicates SEM.

Apart from regions with increased activity to disparity stimuli, some voxels showed higher responses to stimuli with no disparity compared with their responses to near/far stimuli (Fig. 2A–D, yellow/red regions). These seemingly “null-disparity-biased” voxels were predominantly located near the foveal confluence of V1, V2, V3, and V4, and at the lip of the superior temporal sulcus (STS) and the middle temporal gyrus. The time course for the “null-biased” voxels is shown in Figure 3B; these voxels were defined in Experiment 1, and the responses within them were quantified using data obtained in Experiment 2. A closer inspection of the data shows that these voxels were, indeed, modulated by different disparities. Specifically, the voxels showed greatest responses to near stimuli and lowest responses to null and far stimuli; this pattern of responses is different from the one found in the near/far disparity-biased regions (Pd, Vd, and Ad), where voxels showed relatively comparable responses to near and far stimuli, and relatively lower responses to null stimuli. In the next section, we turn our attention to the pattern of responses biased for near (or far) disparities. But first we address whether the patchiness in any of the maps could be an artifact, and quantify the results of the near/far disparity-biased regions identified in Experiment 1.

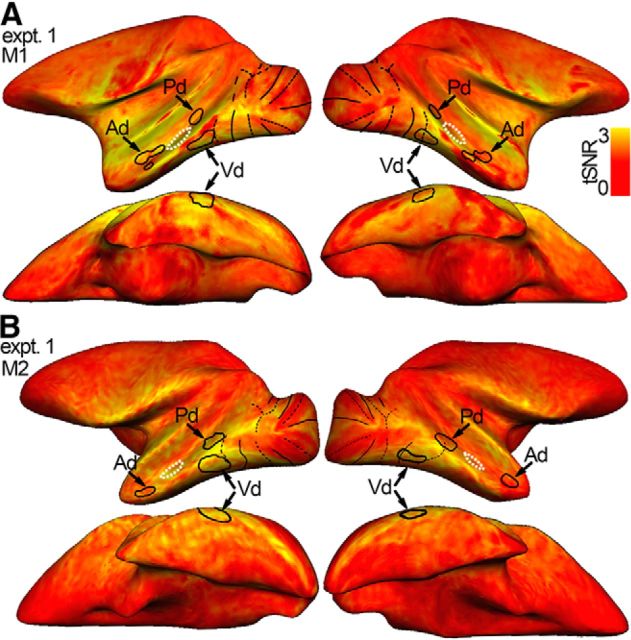

The patchiness in the functional maps could arise even if the underlying functional organization were uniform, if there were inhomogeneity (e.g., signal drop out) in the fMRI signal. The temporal SNR (tSNR) for the two animals is given in Figure 4 and shows that this explanation is unlikely: reliable signal is found throughout the temporal lobe, and the slight variance in SNR is not obviously correlated with the disparity-biased ROIs.

Figure 4.

Patchiness of the disparity-biased IT regions cannot be attributed to patchiness in SNR. Each voxel's tSNR is indicated by color. tSNR is a measure of the SNR of a voxel and is used here to examine potential spatial inhomogeneities (i.e., “signal drops”) of the fMRI signal. Yellowish colors represent voxels with strong SNR. tSNR is defined as each voxel's mean signal, averaged across all time points of the first run of each day, divided by its SD over all time points. The tSNR is subsequently normalized by the average tSNR of the white matter so that values >1 correspond to improved SNR with respect to the white matter. tSNR maps are given for Experiment 1, the disparity localizer experiment. Black closed contours represent the near/far disparity-biased patches (Pd, Vd, and Ad) in each hemisphere. White dotted closed contours represent the interstitial disparity regions (i-d), located on the STS in between the disparity patches. Black solid lines indicate horizontal meridians. Black dotted lines indicate vertical meridians. A, tSNR for Monkey M1 in Experiment 1 in the left and right hemisphere: first row, lateral view; second row, bottom view. B, tSNR for Monkey M2 in Experiment 1. Reliable signal is found throughout the temporal lobe, and the slight spatial variability in SNR is not obviously correlated with the ROIs, providing evidence for the patchiness of the disparity-biased regions.
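The tSNR definition in the caption above can be illustrated with a minimal sketch. The function names and the toy time series are hypothetical, and the use of the population SD is an assumption, because the caption does not state which SD estimator was used.

```python
from statistics import mean, pstdev


def tsnr(timeseries):
    """Temporal SNR of one voxel: mean over time divided by SD over time.

    Assumption: population SD (pstdev); the source does not specify the estimator.
    """
    sd = pstdev(timeseries)
    if sd == 0:
        raise ValueError("constant time series has undefined tSNR")
    return mean(timeseries) / sd


def normalized_tsnr(voxel_series, white_matter_series):
    """Divide each voxel's tSNR by the average tSNR of the white-matter voxels.

    Values > 1 then indicate better SNR than the white-matter reference.
    """
    wm_reference = mean(tsnr(ts) for ts in white_matter_series)
    return [tsnr(ts) / wm_reference for ts in voxel_series]


# Hypothetical toy data: one cortical voxel and one white-matter voxel.
cortex = [[100, 102, 98, 100]]
white_matter = [[50, 52, 48, 50]]
print(normalized_tsnr(cortex, white_matter))
```

In this toy case the cortical voxel has twice the mean signal and the same temporal SD as the white-matter voxel, so its normalized tSNR is 2.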

Figure 5A shows the average percentage signal change obtained in Experiment 2 for retinotopically defined areas (V1, V2, V3a, V3, V4, MT), CIPS, and four ROIs within IT (Pd, Vd, Ad and the interstitial region, i-d, located between Ad and Pd). Black bars represent responses to near stimuli; white bars represent responses to far stimuli; and gray bars represent responses to the null-disparity stimulus. All regions were defined with independent datasets (i.e., defined using data from Experiment 1 and quantified using data from Experiment 2). These areas and regions span the full extent of the ventral visual pathway. The most posterior areas (V1 and V2), representing areas closest to the retinal input to the cortex, showed the strongest activation to both disparity and null conditions; the most anterior regions (IT and its component parts), implicated in the highest levels of object processing, showed lower modulation to the stimuli. The declining sensitivity of more anterior regions is not surprising and is documented in previous reports (e.g., Tsao et al., 2003b; Lafer-Sousa and Conway, 2013): the stimulus was a simple checkerboard, lacking the image complexity typically associated with neural tuning properties within IT. Compared with the responses elicited by the null-disparity stimulus, the disparity stimuli brought about modestly higher average responses in V1, V2, V3, and V4 for both monkeys (p < 10−7, bootstrap test comparing the disparity-selectivity indices of V1, V2, V3, and V4 of all four hemispheres, N = 16, to zero); substantially higher responses in V3a and CIPS (p < 10−4, bootstrap test, N = 8); and markedly higher responses in the three ROIs defined in IT (Pd, Vd, and Ad; p < 10−5, bootstrap test, N = 12) (Fig. 5B). Selectivity for near and far disparities, that is, near/far selectivity (near + far vs null), in V3a and CIPS was significantly higher than near/far selectivity in V1, V2, V3, and V4 (p < 10−7, unpaired permutation t test). 
In addition, selectivity in the near/far disparity-biased regions was significantly higher than selectivity in V1, V2, V3, and V4 (10−6, unpaired permutation t test). Selectivity in the near/far disparity-biased regions of IT was significantly higher than the selectivity in area MT (p = 0.005, unpaired permutation t test). The average disparity selectivity in the interstitial region i-d differed significantly from that in the near/far disparity-biased ROIs of IT (p = 10−4, unpaired permutation t test on the disparity selectivity indices; Fig. 5C, left). Figure 5C also shows the preference of the near/far disparity-biased regions for near stimuli, face stimuli, and color stimuli. These findings will be discussed in the subsequent sections.
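As a rough illustration of a bootstrap test that compares a set of selectivity indices to zero, the sketch below resamples the indices with replacement and counts how often the resampled mean falls at or below zero. This is not the authors' implementation: the one-sided form, the add-one correction, the function name, and the example index values are all assumptions, and the index itself is defined in Materials and Methods rather than here.

```python
import random
from statistics import mean


def bootstrap_p_vs_zero(indices, n_boot=10000, seed=0):
    """One-sided bootstrap p-value for the mean index being greater than zero.

    Assumption: resample the indices with replacement and count resampled
    means <= 0; an add-one correction keeps the p-value above zero.
    """
    rng = random.Random(seed)
    n = len(indices)
    at_or_below_zero = 0
    for _ in range(n_boot):
        sample = [indices[rng.randrange(n)] for _ in range(n)]
        if mean(sample) <= 0:
            at_or_below_zero += 1
    return (at_or_below_zero + 1) / (n_boot + 1)


# Hypothetical selectivity indices, one per ROI and hemisphere.
example_indices = [0.12, 0.08, 0.15, 0.10, 0.09, 0.14]
print(bootstrap_p_vs_zero(example_indices, n_boot=999))
```

With this scheme the smallest attainable p-value is 1 / (n_boot + 1), so very small reported p-values require correspondingly many resamples.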

Together, these analyses confirm the spatial discreteness of near/far disparity-biased regions. However, as suggested by the time course of the responses within the apparently “null-biased” voxels (Fig. 3B, right), the pattern of functional organization for disparity likely depends on the contrast used to define it.

Functional architecture for disparity: near disparity-biased activity

Some psychophysical and neurophysiological evidence suggests that the brain preferentially exploits near disparities (Landers and Cormack, 1997; Tanabe et al., 2005). In Experiment 2, we tested this hypothesis by asking to what extent voxels were more strongly activated by near or far stimuli. Figure 5C (second panel) shows that all the near/far disparity-biased ROIs defined by Experiment 1, and the interstitial i-d region that showed no overt near/far disparity bias for the mixed near-far disparity stimulus used in Experiment 1, had a slight bias for near stimuli (p < 0.001, bootstrap t test on all position-in-depth selectivity indices of all hemispheres, N = 16).

The near-bias observed in Figure 5C (second panel) (which was also observed in the “null-biased” regions shown in Fig. 2, time courses shown in Fig. 3B, right), prompted us to examine the spatial organization of the near (vs far) bias across the visual cortex (Fig. 6A,B). The increased activity for near stimuli was evident around the foveal confluence of V1, V2, V3, and V4 and further extended into IT in all four hemispheres. The near disparity-biased activity in IT was spatially organized, with clusters of near-preferring activity located along the crest of the STS, spanning the posterior–anterior axis of IT. In both hemispheres of both animals, we identified four near disparity-biased regions on the basis of half of the data obtained in Experiment 2 (Fig. 6A,B, green contours). We named these near disparity-biased regions, guided by their anatomical location: posterolateral (PLn), centrolateral (CLn), anterolateral (ALn), and anteromedial (AMn). PLn and CLn were located on the lip of the ventral bank of the STS and the transition into the inferior temporal gyrus; ALn was situated on the inferior temporal gyrus; AMn was located on the most anterior part of the inferior temporal gyrus near the entorhinal area. In addition, we defined interstitial regions between the near disparity-biased regions (“i-n,” Fig. 6A,B, dashed contours), which were used in analyses described below. Figure 6C shows coronal slices through these near disparity-biased regions in both monkeys (M1, top row; M2, bottom row). The time course of the average activity in each near disparity-biased region and the interstitial i-n regions, obtained using independently acquired data from those used to define the ROIs, is given in Figure 6D. The near disparity-biased regions showed a stereotyped ordinal activity pattern: highest activity to the near-disparity condition, lower activity for the null condition, and lowest activity to the far disparity condition.

Figure 6.

Near disparity-biased activity. Computationally inflated surfaces for Monkey M1 (A) and Monkey M2 (B) showing those voxels that responded more to near stimuli in blue/cyan (near disparity-biased) and those voxels that responded more to far stimuli in yellow/red (none visible). The surface activity is based on half the data from Experiment 2; the other half was used to define the near disparity-biased disparity regions. Left, Lateral views of each hemisphere. Right, Top views of the brain. Black solid lines indicate horizontal meridians. Black dotted lines indicate vertical meridians. Green closed contours represent the near disparity-biased disparity patches in each hemisphere. PLn, Posterolateral; CLn, centrolateral; ALn, anterolateral; AMn, anteromedial near disparity-biased region. White dotted closed contours represent the interstitial regions (i–n), located adjacent to the near disparity-biased regions. C, Panels showing near disparity-biased activation in coronal slices at locations indicated by the red lines on the top-down view of the brain schematic (or nearby for M2). First row, Monkey M1. Second row, Monkey M2. Slices are given in Talairach coordinates (mm). Functional activity on the slices is based on all data from Experiment 2 and superimposed on a high-resolution anatomical scan. D, Time course of the average percentage signal change in the PLn, CLn, ALn, AMn, and i-n regions for the near, null, and far stimuli based on half the data from Experiment 2. The average time courses of the different regions are scaled to be of approximately the same height for proper comparison. Black scale bar on the left of each panel corresponds to 0.5% signal change; note the different lengths. Blocks lasted 32 s. Shading over the lines indicates SEM.

The percentage signal changes in the near disparity-biased ROIs are given in Figure 7A. Again, the ROI definitions and quantification were conducted with independent data. Figure 7B shows selectivity-index measures for the near disparity-biased ROIs, and for retinotopic areas, along with CIPS and MT. The position-in-depth selectivity index compares responses to near and far stimuli, averaged across static and drifting stimulus conditions (see Materials and Methods). This differs slightly from Hubel et al. (2015), in which near-selectivity indices were based solely on drifting stimulus conditions. Regions outside of IT generally showed a subtle near bias (p < 10−9, bootstrap test on the N = 28 position-in-depth selectivity indices in all four hemispheres). Figure 7C shows the selectivity-index values for the near disparity-biased IT regions averaged across monkeys; the near bias was significantly greater than the near bias we found in the near/far disparity-biased regions defined in Experiment 1 (compare Fig. 7C, second panel, with Fig. 5C, second panel; p < 10−6, unpaired permutation t test on the near-selectivity indices of the near disparity-biased [N = 16] and near/far disparity-biased [N = 12] regions). Moreover, unlike the near/far disparity-biased regions defined in Experiment 1, the near disparity-biased regions did not show a significantly higher average response to both near and far disparity stimuli compared with null stimuli (p = 0.32, bootstrap test on the N = 16 near/far-selectivity indices of all near disparity-biased regions; compare Fig. 7C, leftmost panel; and Fig. 5C, leftmost panel). This explains why the disparity-related activity in the near disparity-biased regions was undetected with the “near + far disparity vs null” contrast used in Experiment 1. The interstitial i-n regions showed a strong near/far disparity bias (Fig. 7C, leftmost panel) but gave a significantly weaker near-biased response compared with the near disparity-biased regions (Fig. 7C, second panel; p = 0.007, unpaired permutation t test). Figure 7C also shows the preference of the near disparity-biased IT regions for face stimuli and color stimuli. These findings will be discussed in the next section.
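The logic of an unpaired permutation comparison between two sets of selectivity indices can be sketched as follows. This is a simplified stand-in and not the authors' procedure: it uses the absolute difference in group means as the test statistic, whereas the text refers to a permutation t test, and the function name, group values, and two-sided form are assumptions.

```python
import random
from statistics import mean


def permutation_p_unpaired(group_a, group_b, n_perm=10000, seed=0):
    """Two-sided unpaired permutation p-value for a difference in group means.

    Assumption: the statistic is |mean(a) - mean(b)|; group labels are
    shuffled across the pooled indices to build the null distribution.
    """
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    as_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            as_extreme += 1
    return (as_extreme + 1) / (n_perm + 1)


# Hypothetical near-selectivity indices for two types of ROI.
near_rois = [10, 11, 12, 13, 14]
near_far_rois = [0, 1, 2, 3, 4]
print(permutation_p_unpaired(near_rois, near_far_rois, n_perm=2000))
```

Because the group labels are exchanged freely between the two sets, this scheme suits comparisons between different ROI types; the paired comparisons reported elsewhere in the text would instead permute within pairs.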

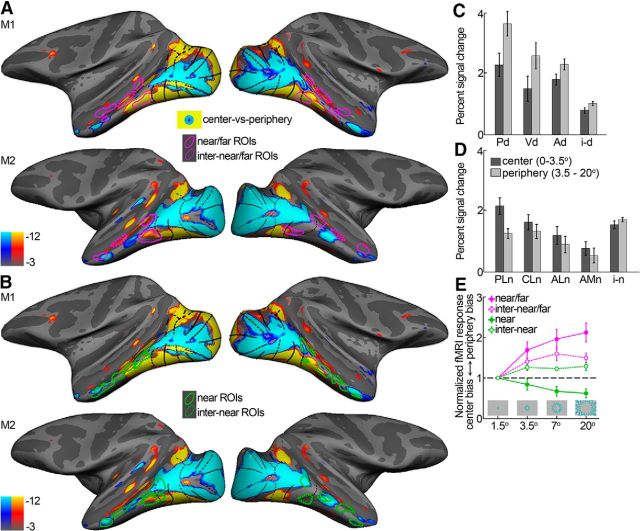

Data in humans (Hasson et al., 2003) and monkeys (Lafer-Sousa and Conway, 2013) suggest that a coarse map of the visual field underlies the global organizational plan of IT. We therefore examined the relationship between the organization for disparity and visual field preference. Figure 8A, B shows eccentricity surface maps with the near/far disparity-biased regions (Fig. 8A; magenta), near disparity-biased regions (Fig. 8B; green), and the corresponding interstitial regions (dotted) overlaid. Near/far disparity-biased regions responded more to more peripheral stimuli (Fig. 8C,E; p < 0.001, paired permutation t test), whereas the near disparity-biased disparity regions were activated more strongly by central stimuli (Fig. 8D,E; p = 0.005). These findings show that the disparities used in this study elicited activation within both centrally biased and peripherally biased regions. The interstitial regions showed visual field preferences intermediate to those of the disparity-biased regions, with some preference for more eccentric visual field locations (p = 0.02; Fig. 8C–E). Hence, near/far disparity-biased regions and near disparity-biased regions not only differed in the pattern of their disparity sensitivity, but also in their retinotopic sensitivity.

Figure 8.

Eccentricity maps. A, Eccentricity mapping with the location of the near/far disparity-biased regions (magenta) and their interstitial regions (magenta dotted) overlaid. Blue/cyan represents regions that were more responsive to a central flickering checkered stimulus (0°–3.5°; no disparity). Yellow/red represents regions that activated more to an annulus of flickering checkers (radius = 3.5°–20°; no disparity). B, Eccentricity mapping with the location of the near disparity-biased regions (green) and their interstitial regions (green dotted) overlaid. C, Average percentage signal change of the near/far disparity-biased regions to central (0°–3.5°) and peripheral (3.5°–20°) stimulation. D, Same for the near disparity-biased regions in IT. E, Normalized average percentage signal change to checkered annuli at different eccentricities for the different types of IT ROIs. Percentage signal change is normalized to that observed with the 1.5° checkered disc, so that values >1 indicate a peripheral bias, whereas values <1 indicate a center bias. Activity was averaged across all regions in all four hemispheres for the near/far disparity-biased regions (near/far; magenta), their interstitial regions (inter-near/far; dotted magenta), the near disparity-biased regions (near; green), and the near disparity-biased interstitial regions (inter-near; dotted green). Error bars indicate SEM.
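The normalization described for panel E can be illustrated with a short sketch. The function names, the dictionary layout, and the response values are hypothetical; only the 1.5° reference disc and the interpretation of values above and below 1 come from the caption.

```python
def normalize_to_central(responses, reference_key=1.5):
    """Divide each percentage signal change by the response to the 1.5 deg disc.

    `responses` maps a stimulus eccentricity (deg) to percentage signal change.
    """
    reference = responses[reference_key]
    if reference == 0:
        raise ValueError("reference response is zero; ratio is undefined")
    return {ecc: value / reference for ecc, value in responses.items()}


def bias_label(normalized_value):
    """Interpret a normalized response as described in the caption."""
    if normalized_value > 1:
        return "peripheral bias"
    if normalized_value < 1:
        return "center bias"
    return "no bias"


# Hypothetical ROI responses (percentage signal change) at three eccentricities.
roi = {1.5: 0.4, 3.5: 0.8, 7.0: 0.2}
normalized = normalize_to_central(roi)
print({ecc: bias_label(value) for ecc, value in normalized.items()})
```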

In sum, we observed that much of the ventral visual pathway responded to disparity stimuli; some disparity-biased regions were detected using a mixed near/far disparity stimulus (Experiment 1) and responded preferentially to peripheral stimuli. Other disparity-biased regions were detected only by comparing responses to near and far stimuli separately (Experiment 2); these regions showed a bias for near compared with far stimuli. The near disparity-biased regions were spaced at somewhat regular intervals along the posterior–anterior axis of the temporal lobe, were activated preferentially to centrally presented stimuli, and were separated by regions with a smaller near bias.

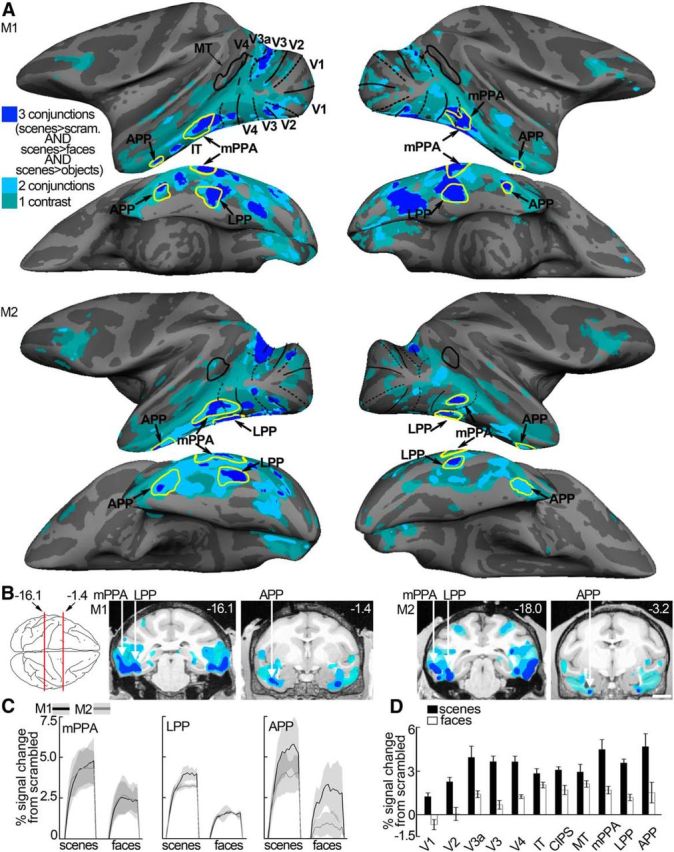

Relationships of the functional architecture for disparity, color, scenes, and faces

We compared the functional organization for disparity processing in IT with that for scenes, colors, and faces. Scene-biased regions were defined by comparing the responses to scenes with the responses to faces, objects, and scrambled scenes using a conjunction analysis (Fig. 9A; see Materials and Methods). Using any single contrast, we found considerably more scene-biased activity along the ventral belly of the temporal lobe than has been previously reported (Nasr et al., 2011; Kornblith et al., 2013). The conjunction analysis is conservative and most likely reveals brain activity that responds specifically to scenes rather than to other low-level differences between the scene and nonscene images. A scene-biased region was found near the PMTS on the inferior temporal gyrus and corresponds to the macaque putative homolog of the human parahippocampal place area (mPPA) (Nasr et al., 2011); another scene-biased region was found near the posterior part of the occipitotemporal sulcus and corresponds to the previously identified lateral place patch (LPP) (Kornblith et al., 2013); a third scene-biased region was found adjacent to the anterior part of the occipitotemporal sulcus. We tentatively define this region as the anterior place patch (APP) (Fig. 9A,B). Scene-biased activation corresponding to APP has also been observed in previous studies, albeit variably (Nasr et al., 2011; Kornblith et al., 2013). Some hemispheres also showed an additional region between mPPA and APP (e.g., upper left hemisphere, Fig. 9A); we do not analyze this region further because it was not found in all hemispheres, but its existence is consistent with models of IT that comprise three main stages within each parallel-processing pathway along the posterior–anterior extent of IT (e.g., Lafer-Sousa and Conway, 2013). The time course of the average scene-biased activity in each scene region is shown in Figure 9C; the average percentage signal change to scenes and faces, in regions in and outside of IT, is shown in Figure 9D.

Figure 9.

Scene-biased activity. A, Computationally inflated surfaces for Monkey M1 (first two rows) and Monkey M2 (third and fourth row) showing those voxels that responded more to scene stimuli in cobalt blue (scene-biased). Scene-biased regions were defined by comparing the responses to scenes with the responses to faces, objects, and scrambled scenes using a conjunction analysis. A conjunction analysis was used to define scene-biased regions because it is more likely to detect brain responses to scenes rather than other low-level differences between the scene and nonscene images. Individual scene-localizer contrasts (i.e., scenes vs faces, scenes vs objects, or scenes vs scrambled scenes) led to more “scene”-related activity as indicated by the green and light blue coloring on the surfaces in A and B. Here, color represents whether a voxel activated more strongly to scenes (p < 10−3) in one of three considered contrasts (green), two of three contrasts (light blue), or all three contrasts (cobalt blue). The scene-biased ROIs are constructed using half of the available data and are indicated by the yellow closed contours (LPP, mPPA, and APP) in each hemisphere. The surface activity is based on the other half of the data. Lateral (first and third row) and bottom views (second and fourth row) of each hemisphere are presented. Black solid lines indicate horizontal meridians. Black dotted lines indicate vertical meridians. B, Panels represent scene-biased activation in coronal slices at locations indicated by the red lines on the top-down view of the brain schematic (or nearby for M2). Left two panels, Monkey M1. Right two panels, Monkey M2. Slices are given in Talairach coordinates (mm). Colored regions on the slices are based on all data and superimposed on a high-resolution anatomical scan (same color conventions as in A). 
C, Time course of the average percentage signal change to images of scenes and faces (relative to scrambled images of scenes and faces respectively) in the scene-biased patches mPPA, LPP, and APP, based on half of the data. Black lines indicate Monkey M1. Gray lines indicate Monkey M2. Shading over the lines indicates SEM. D, Average percentage signal change in response to images of scenes and faces in the scene-biased regions and regions outside of IT. Error bars indicate SEM.
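The color coding of the conjunction analysis in Figure 9 can be expressed as a simple count of the contrasts in which a voxel passes threshold. The sketch below is illustrative only: the function name, argument layout, and example p-values are hypothetical, while the threshold (p < 10−3), the three contrasts, and the color assignments follow the caption.

```python
# Colors from the Figure 9 caption, keyed by the number of contrasts passed.
COLORS = {0: None, 1: "green", 2: "light blue", 3: "cobalt blue"}


def conjunction_color(p_values, scene_greater, alpha=1e-3):
    """Return the surface color for one voxel.

    p_values: p-values for scenes vs faces, scenes vs objects, and scenes vs
        scrambled scenes (in that order).
    scene_greater: for each contrast, True if the scene response was the
        larger of the two.
    """
    passed = sum(
        1 for p, greater in zip(p_values, scene_greater) if greater and p < alpha
    )
    return COLORS[passed]


# Hypothetical voxel that passes two of the three contrasts.
print(conjunction_color([1e-4, 1e-5, 0.2], [True, True, True]))
```

Only voxels that pass all three contrasts (cobalt blue) satisfy the conjunction used to define the scene-biased regions.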

Color-biased regions were identified in these animals on the basis of previous data (Lafer-Sousa and Conway, 2013); these regions were defined as those that showed stronger activation to drifting equiluminant colored gratings than to achromatic gratings (posterolateral, centrolateral, anterolateral, and anteromedial; Figure 10A,B). Face patches were also previously identified in the animals studied presently and were defined as those regions with stronger activation to achromatic images of faces compared with achromatic images of body parts (posterolateral (PL), mediolateral (ML), anterolateral (AL), and anteromedial (AM), Figure 10A,B). A detailed examination of the functional architecture for colors and faces has been presented previously (Lafer-Sousa and Conway, 2013). Color-biased regions and face patches were mostly nonoverlapping but showed a small, progressive increase in overlap at more anterior regions of IT (Lafer-Sousa and Conway, 2013; their Fig. 3A). Here we focus on their relationship with IT's disparity architecture.

Figure 10.

Comparison between the functional architecture for disparity, faces, scenes, and color in IT. A, Example stimuli from each experiment. Example disparity maps are shown for the two disparity experiments (same conventions as in Fig. 1A,B). Near/far disparity-biased regions correspond to brain regions with higher responses to stimuli with mixed near and far disparity compared with stimuli without disparity. Near disparity-biased regions respond more to near compared with far stimuli. Face patches are defined as regions with higher responses to faces compared with body parts. Color regions are defined as regions with higher responses to colored grating compared with achromatic gratings. Scene-biased regions were defined by comparing the responses to scenes with the responses to faces, objects, and scrambled scenes (see Materials and Methods). B, Inflated surfaces depicting the near/far disparity-biased regions in magenta, the near disparity-biased regions in green, the face patches in black, color-biased regions in white, and the scene-biased regions in yellow. First row, Lateral view of each hemisphere for Monkey M1. Second row, Bottom view of each hemisphere of Monkey M1. Bottom 2 rows, Same for Monkey M2. C, Time course of the average percentage signal change in the near/far disparity-biased regions (first row), the near disparity-biased regions (second row), the face patches (third row), the color-biased regions (fourth row), and the scene-biased regions (fifth row). Time courses are given for the near, null, and far stimuli presented in Experiment 2 (first column), the face and scene stimuli (second column), and the color and achromatic gratings (third column). There are different scales for each row. Shading over the lines indicates SEM.

The spatial relationship of the disparity-biased regions, color-biased regions, scene-biased regions, and face patches is shown in Figure 10B (color-biased regions in white; face patches in black; scene patches in yellow; near/far disparity-biased disparity regions in magenta; near disparity-biased disparity regions in green). In the posterior part of IT, we found relatively little consistent overlap between the color-biased regions and any of the other functionally defined regions. In contrast, the near disparity-biased regions overlapped with face patches. The overlap of near disparity-biased regions and face patches was evident along the length of the temporal lobe, although the correspondence between near disparity-biased regions and face patches was incomplete: the most dorsal face patches, within the STS, showed no near bias. The near disparity-biased regions showed almost no overlap with scene-biased regions. And perhaps most strikingly, the near/far disparity-biased regions were almost entirely exclusive of the face patches, the color-biased regions, and the scene-biased regions. There was one prominent exception: the ventral near/far disparity-biased region (Vd) overlapped with mPPA, located near the PMTS. Close inspection of the data also shows other regions of overlap; for example, in the left hemisphere of M2, the most anterior near/far disparity-biased region (Ad) overlapped with a portion of a color-biased region. And in most hemispheres, the most anterior near disparity-biased region overlapped a color-biased region. Figure 10C shows the time courses of the average responses to disparity stimuli (first column), faces and scenes (second column), and color and achromatic luminance (third column) for the near/far disparity-biased regions (first row), near disparity-biased regions (second row), face patches (third row), color patches (fourth row), and the scene-biased regions (fifth row).

Next, we quantified the cross-stimulus selectivity in each area. As in all other analyses, the quantification was conducted with data that were independent of those used to define the ROIs (Fig. 11). Not surprisingly, near/far disparity selectivity was high in the near/far disparity-biased regions (Fig. 11A, left). But as predicted from the sparse overlap of the near/far disparity-biased regions and the near disparity-biased regions (Fig. 10), near/far disparity selectivity was insignificant in the near disparity-biased regions (and face-patches; Fig. 11A, second and third panels); near/far disparity-selectivity in the color-biased regions (Fig. 11A, fourth panel) was significant (p = 0.02, bootstrap t test), but weaker than found in the near/far disparity-biased regions (p = 0.003, unpaired permutation t test). Outside of the near/far disparity-biased regions, near/far disparity-selectivity was most significant in the scene-biased regions (Fig. 11A, right; p = 0.003, bootstrap t test; not as strong as in the near/far disparity-biased regions, p = 0.004, unpaired permutation t test).

Figure 11B shows position-in-depth selectivity for the five functionally defined regions and confirms that almost all regions, regardless of their definition (i.e., face, color, scene, or disparity), showed a preference for near stimuli (p < 0.01 for face, color, scene, and disparity patches; bootstrap t test). In agreement with their anatomical separation from the near disparity-biased regions, the near preference in color-biased, scene-biased, and near/far disparity-biased regions was relatively modest and significantly lower than the near bias in the near disparity-biased regions (p < 10⁻⁵, unpaired permutation t test for the comparisons between the near/far disparity-biased regions, face patches, scene-biased regions, color-biased regions, and the near disparity-biased regions). On the other hand, and as predicted by the anatomical overlap between them, the bias for near stimuli was comparable in the face patches and near disparity-biased regions (p = 0.9, unpaired permutation t test; compare Fig. 11B, second and third panels). These findings show that activity in the face patches was significantly modulated by disparity, providing the first fMRI evidence for a contribution of disparity to face processing. But these two systems were not completely overlapping: the near disparity-biased regions showed significant face selectivity (p < 10⁻⁴, bootstrap t test), although it was considerably weaker than the face selectivity in the face patches (Fig. 11C; p = 3 × 10⁻⁴, unpaired permutation t test).
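
The between-region comparisons reported above rely on unpaired permutation tests. The sketch below illustrates the general logic in Python, assuming two independent sets of selectivity values. It is not the code used in this study: the function name `permutation_test_unpaired`, the number of permutations, and the example values are hypothetical, and the test statistic is simplified to a difference in means rather than a t statistic.

```python
import numpy as np

def permutation_test_unpaired(a, b, n_perm=10000, seed=0):
    """Two-sided unpaired permutation test on the difference in means (sketch)."""
    rng = np.random.default_rng(seed)
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    pooled = np.concatenate([a, b])
    observed = a.mean() - b.mean()

    count = 0
    for _ in range(n_perm):
        # Randomly reassign the pooled values to two groups of the original sizes.
        shuffled = rng.permutation(pooled)
        diff = shuffled[:a.size].mean() - shuffled[a.size:].mean()
        # Small tolerance so that ties are not lost to floating-point rounding.
        if abs(diff) >= abs(observed) - 1e-12:
            count += 1

    # Add one to numerator and denominator so that p is never exactly zero.
    p = (count + 1) / (n_perm + 1)
    return observed, p

# Hypothetical near-bias indices for two sets of ROIs (made-up numbers).
group_a = [0.52, 0.48, 0.55, 0.50, 0.47, 0.53, 0.49, 0.51]
group_b = [0.12, 0.09, 0.15, 0.11, 0.08, 0.14, 0.10, 0.13]
diff, p_value = permutation_test_unpaired(group_a, group_b)
```

Because group labels are shuffled without regard to pairing, this form of the test suits comparisons between different sets of regions, as opposed to repeated measurements of the same region.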

Figure 11C confirms that the ventral near/far disparity-biased region (Vd) responded more strongly to images of scenes compared with images of faces, as anticipated from its overlap with scene region mPPA. Finally, Figure 11D shows the color selectivity for each region. As expected, color selectivity was highest in the color-biased regions (p < 2 × 10⁻⁴ for the comparisons between the near/far disparity-biased regions, near disparity-biased regions, face patches, scene-biased regions, and the color-biased regions; unpaired permutation t test). Furthermore, the near disparity-biased regions, near/far disparity-biased regions, face patches, and scene-biased regions were usually more strongly activated by achromatic stimuli than by colored stimuli (p < 0.05 for the near/far disparity-biased regions, face patches, and scene-biased regions; p > 0.05 for the near disparity-biased regions; bootstrap t test).

Together, these findings suggest that the ventral visual pathway comprises distinct networks for color, faces, and scenes and that disparity information contributes to the processing of information in each of these channels to different degrees: color-biased regions appeared to be poorly modulated by disparity, whereas scene-biased regions (especially in posterior IT) were strongly modulated by both near and far stimuli, and face patches were modulated more strongly by near stimuli than by far stimuli. More anterior regions appear to have higher selectivity than posterior regions within each stream (Fig. 12), not inconsistent with a hierarchical processing model (Freiwald and Tsao, 2010; DiCarlo et al., 2012).

Figure 12.

Summary of the selectivity in the different ROIs in IT. Mean face selectivity is given in black for the different face patches (PL, ML, AL, and AM). Mean scene selectivity is given in orange for the different scene-biased areas (mPPA, LPP, and APP). Mean near/far disparity selectivity is given in magenta for the three near/far disparity-biased areas (Pd, Vd, and Ad). Average near selectivity is shown in green for the four near disparity-biased areas (PLn, CLn, ALn, and AMn). The average color selectivity for the four color-biased regions is shown in gray: PLc, posterolateral; CLc, centrolateral; ALc, anterolateral; AMc, anteromedial. Error bars indicate SEM.

Discussion

We determined the functional architecture for disparity in the ventral visual pathway of monkeys, and show how this architecture relates to that for color, faces, and places. “Near/far” disparity-biased regions were defined as those showing strong activation to both near and far stimuli, compared with zero-disparity stimuli. A near/far disparity bias was observed in V1, V2, V3, and V4 and in three consistent regions in IT: two near the fundus and lower bank of the superior temporal sulcus (“Pd,” “Ad”), and one on the posterior inferior temporal gyrus (“Vd”). These disparity-biased regions generally did not coincide with color-biased regions or face patches; Vd overlapped with a scene-biased region, mPPA, but none of the other near/far disparity-biased regions showed a prominent scene bias. We also measured responses to near and far stimuli separately and discovered a network of “near” disparity-biased regions that preferred near over far stimuli; the pattern of these regions was distinct from that for near/far disparity-biased regions: it originated around the foveal confluence of V1/V2 and tracked the crest of the superior temporal sulcus through IT. Near disparity-biased regions largely overlapped face patches, but generally not color-biased regions or scene-biased regions. Near/far disparity-biased regions responded more strongly to peripheral stimuli, whereas near disparity-biased regions preferred central stimuli. Together, these results are consistent with the idea that IT in macaque monkeys is organized by a coarse eccentricity map (Lafer-Sousa and Conway, 2013), and show that disparity likely contributes to computations associated with both central (face processing) and peripheral (scene processing) visual field biases.

The lack of color selectivity in the disparity-biased regions suggests that disparity and color contribute to different computations within IT, and may reflect the propagation of the segregation of color and disparity processing found in the thin and thick stripes of area V2 (Hubel and Livingstone, 1987; Gegenfurtner, 2003). This segregation could account for impaired stereoscopic shape perception with equiluminant colored random-dot stereograms (Gregory, 1979).

Previous monkey fMRI studies have described disparity-driven activity in occipitoparietal and prefrontal areas (Tsao et al., 2003b; Durand et al., 2007; Joly et al., 2009) but little or no disparity activity in IT. The detection of disparity activity in IT described here may be attributed to improved signal sensitivity, resulting from targeted coil placement and technological advances that provide excellent coverage of IT. Nonetheless, we may not have detected all parts of IT that are modulated by disparity because the stimulus we used was relatively simple. Despite this limitation, the disparity-modulated regions that we observed span much of IT, consistent with neurophysiological studies, which together used a wide range of stimuli (Janssen et al., 2000b; Yamane et al., 2008; Vaziri et al., 2014).

Microelectrode studies report strong disparity selectivity for many IT neurons. The majority of disparity-selective cells reside in clusters (Uka et al., 2000; Verhoef et al., 2012) at locations corresponding to the disparity-biased regions described here (Janssen et al., 1999, 2000a; Uka et al., 2000, 2005; Yamane et al., 2008). Thus both fMRI and microelectrode results support the hypothesis that the functional organization for disparity in IT is patchy.

Near stimuli evoked more activity than far stimuli in most, if not all, visually responsive portions of the temporal lobe. Previous studies have shown that many neurons are biased for crossed/near disparities in several areas of the monkey brain, including V1 (Cumming, 2002), V3 (Adams and Zeki, 2001; Hubel et al., 2015), V4 (Watanabe et al., 2002; Hinkle and Connor, 2005; Tanabe et al., 2005), and MT (DeAngelis and Uka, 2003). A similar near bias has been observed with fMRI in human striate and extrastriate cortex (Cottereau et al., 2011). The near bias may underlie the salience of stimuli presented in front of the fixation plane (Manning et al., 1987; Finlay et al., 1989; Patterson et al., 1995; Landers and Cormack, 1997; Jansen et al., 2009).

The face patches tended to overlap with near disparity-biased regions, providing the first evidence that disparity contributes to the representation of faces in IT. IT is implicated in the computation of transformation-tolerant visual representations of real-world objects, such as faces (DiCarlo et al., 2012). Disparity can help in attaining tolerance to spatial transformation (Edelman and Bülthoff, 1992; Bennett and Vuong, 2006; Lee and Saunders, 2011) and improves viewpoint-dependent face recognition in humans (Burke et al., 2007). Our findings provide a neural substrate for these perceptual findings and suggest that near/crossed disparities are especially important in building the representation of faces. This could be related to two observations: focusing one's gaze near the eyes, as primates often do (Guo et al., 2003), places many informative face features, such as the nose, mouth, and eyebrows, at or in front of the fixation plane, particularly in monkeys; and in free viewing, the faces that capture attention are those that appear in front of the fixation plane. That near disparity-biased regions (and near/far disparity-biased regions) extended well beyond the face patches additionally suggests that other object representations in IT make use of disparity.

Scene-biased regions were found along the posterior–anterior extent of the inferotemporal belly. The near/far disparity-biased region “Vd” overlapped with the posterior scene-biased patch (mPPA) (Nasr et al., 2011). This overlap suggests a role for disparity in the perception of scenes: disparity may, among other possibilities, act as a cue for identifying and discriminating objects that can be used for scene discrimination or as landmarks for navigational purposes. This hypothesis is consistent with human fMRI studies showing that the PPA responds to objects in addition to scenes (Janzen and van Turennout, 2004; Troiani et al., 2014).

IT occupies a large swath of cortex, yet it is unclear whether IT comprises a single area or multiple areas (DiCarlo et al., 2012). Many studies have sought to address the rules that govern IT (Sayres and Grill-Spector, 2008; Harada et al., 2009; Naselaris et al., 2009; Kanwisher, 2010; Op de Beeck and Baker, 2010; Winawer et al., 2010; Rajimehr et al., 2014; Vanduffel et al., 2014). The stimuli we used provide a way of disentangling the functional organization of IT. We refer to disparity-biased regions as “biased”, not “selective”, because it seems unlikely that these regions participate solely in disparity processing. Rather, we consider disparity to be another useful visual feature, like color, that can be exploited to reveal the architecture of IT. Mounting evidence suggests that IT comprises parallel-processing streams. Different streams have been identified by a bias for some aspect of the visual world, such as faces, color, or environmental layout (Van Essen et al., 1990; Tsao et al., 2003a; Nasr et al., 2011; Kornblith et al., 2013; Kravitz et al., 2013; Lafer-Sousa and Conway, 2013; Vaziri et al., 2014). The current results are consistent with this view, showing that the architecture for disparity is patchy, and largely independent of that for color.

But is there a deeper principle that determines the development and organization of IT? Imaging results in humans (Hasson et al., 2003) and monkeys (Lafer-Sousa and Conway, 2013) suggest one possibility: that extrastriate cortex is arranged according to a coarse representation of the visual field, an “eccentricity map”. Activation to foveal stimulation is found at or near face patches, and activation of the peripheral visual field coincides with scene-biased regions. Here we show that the near/far disparity-biased regions and the near disparity-biased regions occupy peripheral and foveal locations of the extrastriate eccentricity map, respectively. A further clue to the deeper rules that carve up IT may be given by the intersection of the processes that handle face, near-disparity, and foveal stimuli. Their junction is not predicted by similarity in low-level visual features. Instead, the stimuli, and by extension the processes that handle them, are joined by their ability to attract attention, suggesting that it is a commonality in behavioral relevance (Kourtzi and DiCarlo, 2006; Konkle and Oliva, 2012) that drives the organization and development of IT.

Depth cues, and disparity in particular, appear to play a central role in how IT neurons encode objects in monkeys (Yamane et al., 2008). Furthermore, IT seems to play an essential role in 3D-shape perception (Verhoef et al., 2010, 2012). Given the proposed hierarchical organization of IT (DiCarlo et al., 2012), it remains an open question how 3D-object representations are built through serial and parallel processing in subsequent stages of the ventral visual pathway and how 3D-object perception emerges. The functional organization for disparity and its relationship to the functional architecture for face, scene, color, and visual-field processing may serve as a blueprint to understand how the perceived world receives its third dimension.

Footnotes