Abstract

The illusory subjective experience of looking at one's own face while in fact looking at another person's face can surprisingly be induced by simple synchronized visuotactile stimulation of the two faces. A recent study (Apps MA, Tajadura-Jiménez A, Sereno M, Blanke O, Tsakiris M. Cereb Cortex. First published August 20, 2013; doi:10.1093/cercor/bht199) investigated for the first time the role of visual unimodal and temporoparietal multimodal brain areas in the enfacement illusion and suggested a model in which multisensory mechanisms are crucial to construct and update self-face representation.

Keywords: enfacement illusion, multisensory integration, self-identity, self-face recognition, temporoparietal junction, posterior parietal cortex

The topic of how self-representation is built, maintained, and constantly updated in the human brain has recently become a highly debated issue in cognitive neuroscience. At a very basic level the sense of self is built upon the sense of bodily self, for which the face holds special importance, being the most distinctive feature of one's own physical appearance. A coherent visual representation of one's own face is formed and maintained by matching felt and observed sensorimotor experiences in the mirror. This process ultimately allows self-face mirror recognition, an ability that ontogenetically precedes higher forms of self-consciousness and social behavior (Tsakiris 2010).

Because of its importance, it was classically held that self-face representation is fundamentally stable and can rarely be disrupted, except in severe neurological degenerative or psychiatric disorders (Feinberg and Keenan 2005). However, recent evidence shows that self-other distinction can be easily blurred by synchronous interpersonal visuotactile stimulation of one's own and another person's face, an effect we named the enfacement illusion (Sforza et al. 2010). Participants incorporate facial features of the other into the self-face representation and, at a phenomenological level, report feeling the tactile stimulus observed on another person's face and looking at themselves in the mirror.

Apps and colleagues (2013) were the first to investigate the neural correlates of this illusory feeling of “I felt I was looking at my face” while instead looking at another person's face, induced by interpersonal multisensory stimulation (IMS). Participants' brain activity was recorded via fMRI while they were stimulated on their upper cheek and concomitantly observed matching or mismatching stimulation on another person's face. Mismatch between felt and observed stimulation was spatial (incongruent stimulation position, e.g., the chin) and/or temporal (1-s asynchrony) and gave rise to four experimental conditions: synchronous-congruent (SC), synchronous-incongruent (SI), asynchronous-congruent (AC), and asynchronous-incongruent (AI).

After each block, participants rated how much they felt they were looking at their own face. At the behavioral level, the results show that participants felt they were looking at their own face more during the SC condition and progressively less in the other conditions, i.e., SI, AC, and AI. The authors, unfortunately, do not report whether these conditions differed from one another, which would have allowed one to test if temporally synchronous but spatially incongruent IMS is sufficient to generate the illusion, even if to a weaker extent.

At a neural level, the right inferior occipital gyrus (rIOG), right intraparietal sulcus (rIPS), and right temporoparietal junction (rTPJ) showed a significant interaction between congruency and synchronicity in both whole brain factorial and small volume correction analyses, the latter performed around the coordinates of the same areas previously found to be involved in self-face visual recognition and in bodily illusions (Apps et al. 2013). Activity in these regions was also found to vary parametrically with the phenomenological experience of the illusion (“looking at my face”), regardless of IMS condition.
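To make the logic of a congruency × synchronicity interaction concrete, the following minimal sketch computes the interaction contrast of a 2 × 2 design from per-condition estimates. The function name is hypothetical and the numbers are placeholder values chosen for illustration only; they are not data from Apps et al. (2013).

```python
# Illustrative sketch only: the beta values below are made-up placeholders,
# not results reported by Apps et al. (2013).

def interaction_contrast(betas):
    """Return the 2 x 2 interaction contrast (SC - SI) - (AC - AI).

    A nonzero value means that the effect of spatial congruency differs
    between synchronous and asynchronous stimulation.
    """
    congruency_effect_sync = betas["SC"] - betas["SI"]
    congruency_effect_async = betas["AC"] - betas["AI"]
    return congruency_effect_sync - congruency_effect_async


# Hypothetical per-condition estimates for one region (arbitrary units).
example_betas = {"SC": 1.0, "SI": 0.5, "AC": 0.25, "AI": 0.25}

print(interaction_contrast(example_betas))
```

Note that a significant interaction contrast of this kind does not by itself indicate which condition drives the effect; that requires pairwise comparisons between conditions.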

Thus the authors proposed that the interplay between unimodal and multimodal brain areas drives dynamic self-identification with a face whose sensations match one's own. Very interestingly, Apps and colleagues (2013) argue that the processes of constructing and updating a mental representation of one's own face may conform to the principles of predictive coding within the free-energy theoretical model. The authors explain the enfacement illusion accordingly and suggest that congruency between observed and felt touch initially generates surprise, since participants cannot move to prove to themselves that the observed face is not theirs, and then that the brain attempts to minimize surprise by changing self-face representation to include the other person's facial features. In particular, the authors propose that rIPS processes “multisensory driven predictions about upcoming somatosensory input” and that rTPJ responds as a “function of the extent to which, self or other, perspectives are being processed.”

We find the interpretation provided by the authors appealing; however, at times it does not seem to closely describe their results. We therefore raise a few controversial points and attempt to suggest a more comprehensive interpretation of the neural correlates subserving the “looking at my face” illusory experience.

Although rIPS and rTPJ regions show an overall dissimilar pattern of modulations relative to the different IMS conditions (see histograms of beta values resulting from factorial analysis reported in Fig. 2 of Apps et al. 2013), they both correlate with the perceived strength of the illusion. Even if it is difficult to interpret beta values as deactivations or activations due to the absence of an active baseline condition, and to know if they differ between conditions due to the absence of post hoc comparisons, by looking at the graph it seems that IPS is less deactivated in the SC condition, where participants report a stronger illusion, and progressively more deactivated in those conditions (SI, AC, AI) where participants report a progressively weaker illusion. In contrast to rIPS, rTPJ activity maximally differs between SC and AC, with AI and SI having similar and intermediate activation levels. Still, the parametric analysis shows a linear correlation between rTPJ activity and the strength of illusory feeling. Surprisingly, although factorial and parametric analyses are coherent for rIPS but not for rTPJ, no clear interpretation of the correlation between rIPS activation and illusion strength is provided by Apps and colleagues (2013), whereas they discuss the role of TPJ as crucial for the illusion.

IPS is a large and multifaceted region involved in a wide range of diverse cognitive processes. From our point of view, it is interesting to note that IPS, and in particular its ventral part, integrates multisensory body-related signals (Bremmer et al. 2001), since it receives afferent sensory projections from somatosensory and visual areas and forms with the putamen a visual-somesthetic network that processes the space on and near the body (Graziano and Botvinick 2002). Because of its anatomic and functional properties, IPS activity is crucial to maintain a coherent body representation during conflicts that arise from incongruent multisensory stimuli coming from one's own body (caudomedial IPS: Hagura et al. 2007; inferior parietal lobule: Bufalari et al. 2014) or from congruent multisensory signals coming from one's own and another's body (ventral and medial IPS: Ehrsson et al. 2004; Petkova et al. 2011). This second scenario is apparent, for example, when spatially and temporally congruent tactile signals are delivered to one's own unseen hand and to a rubber hand spatially congruent with respect to one's own arm. The initial conflict between seen and felt position of the hand was found to be resolved mostly by anterior left IPS, which recalibrates (aligns) the peri-hand space representation toward that of the seen rubber hand so that tactile, visual, and proprioceptive signals fuse in a single coherent percept (Brozzoli et al. 2012). Accordingly, IPS is more active in the temporal window associated with this remapping process, which usually precedes the illusory sense of ownership (Ehrsson et al. 2004).

Analogously, we suggest that during the enfacement illusion, ventral IPS could integrate multisensory congruent stimuli and remap the space around the face (as seen in a mirror). Such a role is also supported by a recent study showing that ventral IPS is involved in remapping visual information about touch applied to another person's face onto the processing of tactile stimuli concomitantly applied to the self-face (Cardini et al. 2011). This remapping of the space around the self-face may result from a central process that detects conflicts between tactile afferences and visual signals that, although temporally and spatially congruent with self-percept, instead originate from another person's face. Thus the spatial remapping process suppresses such conflict and prompts the emergence of the illusory perceptual experience of looking at one's own face. Following this line of reasoning, we believe that only once the illusion is at play (and thus just one identity is represented) can the multisensory integrative nature of IPS subserve the function, as proposed by Apps and colleagues (2013), of predicting upcoming congruent somatosensory stimuli (such as when looking at oneself in the mirror). We further suggest that the brain area responsible for informing IPS about such mismatch between self and other tactile sensations could be rTPJ.

This interpretation seems congruent with the study by Ionta et al. (2011) in which synchronous IMS to participants in the fMRI scanner and to a virtual body resulted in perceived shifts of self-location toward the virtual body. Interestingly, TPJ activity was differentially modulated in those participants who perceived the virtual body as floating over their real body in a spatially congruent manner, compared with those participants who experienced looking down on the virtual body, which implied a spatial relocation of the self, i.e., feeling as if lying prone on the scanner bed. It seems, therefore, that TPJ activity is related to the presence/absence of a mismatch between real afferent proprioceptive information and illusion-induced feelings (e.g., perceiving the virtual body floating over or under oneself) (Aglioti and Candidi 2011).

The trend of TPJ activations in the different IMS conditions as found in the present study also fits well with this interpretation of TPJ's role. As reported above, rTPJ beta values show unexpectedly similar activations during SI and AI, whereas rTPJ activity maximally differs between SC and AC. This activation pattern, not discussed by Apps and colleagues (2013), might relate to the fact that participants were always touched on the same location. Congruency was then just determined by the observed touch location, which could be inferred from the hand's trajectory before the touch occurred. Although rTPJ response might not be needed to differentiate spatially incongruent conditions because they both imply immediate self/other difference (due to different touch locations), a differential rTPJ response might be needed to code for self/other difference when the other face is being touched in the same location (as predicted from the approaching hand's direction), and therefore it may become crucial to detect temporal a/synchrony.

Also, Apps and colleagues (2013) found peak activation in the anterior subdivision of rTPJ, part of the ventral attentional and not of the social cognition network (Mars et al. 2012), which is in line with this interpretation of the rTPJ role in the enfacement illusion, given that attentional reorienting could play a role to compare mismatching internal (self) and external (other) stimuli such as those present in the current study.

Additional brain areas might as well follow the free-energy principle and show attenuated responses linked to multisensory processing of visual and tactile information when only one face (the self-face) is represented (namely, during the illusory experience), as opposed to when, in the control conditions, separate visual and tactile information needs to be processed about one's own and another person's face. We would expect, in line with studies showing reduced activity in somatosensory cortices during predictable self-produced tactile stimulation versus externally unpredictable stimulation (Blakemore et al. 2000), to find a modulation of activity also in early somatosensory and visual areas once, in the illusion condition, the stimulation has become predictable. Interestingly, the authors report an interaction effect in the right parietal operculum (secondary somatosensory cortex), but unfortunately we do not know which IMS condition drives this effect, since post hoc tests and plots of signal change in this region are not reported.

Along this line of reasoning based on the initial findings of Apps and colleagues (2013), we suggest that to provide further insights into the dynamic process inducing the illusion, future studies should compare activity in unimodal and multimodal brain regions before and after the appearance of the illusory feeling of looking at one's own face. Indeed, Apps and colleagues (2013) reported that this illusory feeling arises with great intersubject variability (a mean delay of 13 s, with a range between 5.56 and 32.65 s); however, they analyzed brain activity during each kind of IMS independently of participants' perceptual experience. Furthermore, peri-personal space remapping processes could be at play in the time window preceding the onset of the illusory sensation of “looking at me in the mirror,” and thus measuring the subjective experience of referral of touch would provide additional fruitful information. Unfortunately, participants were not asked to rate mislocalization of their tactile experience (e.g., “It seemed as though the touch I felt was caused by the paintbrush touching the other's face.”), although it is a significant component of the enfacement illusion (Sforza et al. 2010) and predicts the hand's objective mislocalization in the rubber hand illusion (Longo et al. 2008).
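As a rough illustration of the before/after comparison suggested above, the sketch below splits the samples of one stimulation block at a participant-specific illusion onset. It is only a schematic under stated assumptions: the function name, the 2-s sampling interval, and the signal values are hypothetical placeholders, and the onset of 5.56 s is simply the shortest delay in the range quoted above, used here as an example.

```python
from statistics import mean

# Illustrative sketch only: the signal values and the sampling interval are
# hypothetical placeholders, not data from any study.

def split_by_onset(signal, tr_s, onset_s):
    """Split one block's samples into those acquired before and after
    the participant-specific illusion onset (both in seconds)."""
    pre, post = [], []
    for i, value in enumerate(signal):
        sample_time_s = i * tr_s  # acquisition time of sample i
        if sample_time_s < onset_s:
            pre.append(value)
        else:
            post.append(value)
    return pre, post


# Placeholder regional signal for one block, sampled every 2 s (assumed).
block_signal = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
pre, post = split_by_onset(block_signal, tr_s=2.0, onset_s=5.56)

print(mean(pre), mean(post))
```

Because the onset differs widely between participants, the split point would have to be set individually for each participant and block rather than fixed across the group.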

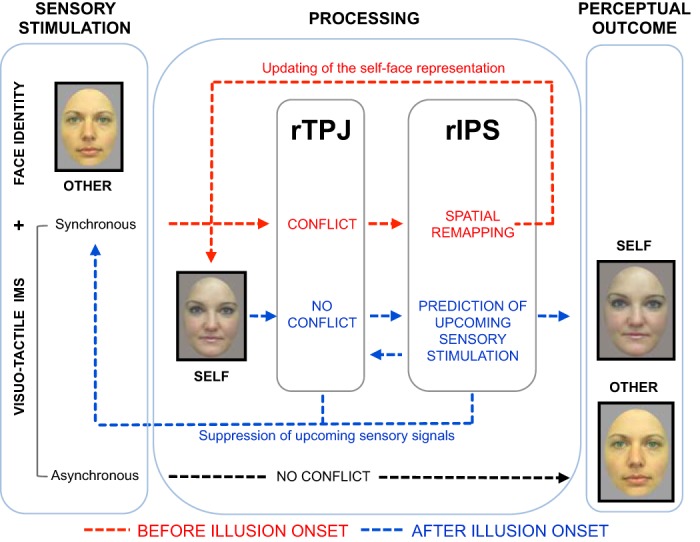

As a result of the aforementioned analyses, we would expect (Fig. 1) IPS to be involved in integrating multisensory input and resolving multimodal conflict before the illusion onset, and analogous with the rubber hand studies, we would expect its activity to correlate with the subjective experience of referral of touch. Only once the illusory sensation of looking at oneself is established would we expect IPS to inform TPJ of the likelihood of upcoming somatosensory stimulation, since at that point just one face (the self-face) is represented. In addition, we would expect TPJ to predict and monitor the congruency of the upcoming multisensory stimulation and, probably conjointly with IPS, to modulate the activity of low-level unimodal brain structures (i.e., somatosensory and visual cortices). Finally, we believe that approaches that allow investigation of effective and functional connectivity between brain areas (e.g., Granger causality and dynamic causal modeling; Friston et al. 2013) would prove very useful to help determine the time-relevant role of multimodal brain areas in the enfacement illusion.

Fig. 1. Proposed model, based on the findings of Apps et al. (2013), of the cognitive and neural temporal dynamics of the enfacement illusion. Participants see the face of another person while receiving either synchronous or asynchronous interpersonal multisensory stimulation (IMS). In the case of asynchronous stimulation (black line), no conflict arises and the other face is perceived as other. In the case of synchronous stimulation, conflict initially arises (red line, before the illusion onset) between tactile afferences and spatially and temporally congruent visual signals coming from the other person's face. Temporoparietal junction (TPJ) detects the conflict while intraparietal sulcus (IPS) integrates multisensory congruent stimuli and remaps the space around the face (as seen in the mirror). This finally results in updating of the self-face representation to include facial features of the synchronously stimulated other. Once the self-face representation is updated, the illusion (i.e., the perceptual experience of looking at one's own face) emerges (blue line). TPJ now detects less conflict, and IPS can predict (and inform TPJ of) the likelihood of upcoming sensory tactile stimuli on the self-face based on those observed on the other's face. Concomitantly, TPJ and IPS modulate the activity of low-level unimodal brain structures (i.e., somatosensory and visual cortices), which has an effect on the perceived multisensory stimuli. The end result of synchronous IMS is therefore a biased perception of the other's face as self.

GRANTS

I. Bufalari and G. Porciello were funded by Progetto di Avvio alla Ricerca 2013 University of Rome “Sapienza” C26N135X7H, C26N139L7N, and C26N13TMFT. I. Minio-Paluello was supported by the Italian Ministry of Health, Giovani Ricercatori GR-2009-1607360.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

I.B., G.P., M.S., and I.M.-P. drafted manuscript; I.B., G.P., M.S., and I.M.-P. edited and revised manuscript; I.B., G.P., M.S., and I.M.-P. approved final version of manuscript.

REFERENCES

- Aglioti SM, Candidi M. Out-of-place bodies, out-of-body selves. Neuron 70: 173–175, 2011.

- Apps MA, Tajadura-Jiménez A, Sereno M, Blanke O, Tsakiris M. Plasticity in unimodal and multimodal brain areas reflects multisensory changes in self-face identification. Cereb Cortex. First published August 20, 2013. doi: 10.1093/cercor/bht199.

- Blakemore SJ, Wolpert D, Frith C. Why can't you tickle yourself? Neuroreport 11: R11–R16, 2000.

- Bremmer F, Schlack A, Shah NJ, Zafiris O, Kubischik M, Hoffmann K, Zilles K, Fink GR. Polymodal motion processing in posterior parietal and premotor cortex: a human fMRI study strongly implies equivalencies between humans and monkeys. Neuron 29: 287–296, 2001.

- Brozzoli C, Gentile G, Ehrsson HH. That's near my hand! Parietal and premotor coding of hand-centered space contributes to localization and self-attribution of the hand. J Neurosci 32: 14573–14582, 2012.

- Bufalari I, Di Russo F, Aglioti SM. Illusory and veridical mapping of tactile objects in the primary somatosensory and posterior parietal cortex. Cereb Cortex 24: 1867–1878, 2014.

- Cardini F, Costantini M, Galati G, Romani GL, Làdavas E, Serino A. Viewing one's own face being touched modulates tactile perception: an fMRI study. J Cogn Neurosci 23: 503–513, 2011.

- Ehrsson HH, Spence C, Passingham RE. That's my hand! Activity in premotor cortex reflects feeling of ownership of a limb. Science 305: 875–877, 2004.

- Feinberg TE, Keenan JP. The Lost Self: Pathologies of the Brain and Identity. Oxford: Oxford University Press, 2005.

- Friston K, Moran R, Seth AK. Analysing connectivity with Granger causality and dynamic causal modelling. Curr Opin Neurobiol 23: 172–178, 2013.

- Graziano MS, Botvinick MM. How the brain represents the body: insights from neurophysiology and psychology. In: Common Mechanisms in Perception and Action: Attention and Performance XIX, edited by Prinz W, Hommel B. Oxford: Oxford University Press, 2002, p. 136–157.

- Hagura N, Takei T, Hirose S, Aramaki Y, Matsumura M, Sadato N, Naito E. Activity in the posterior parietal cortex mediates visual dominance over kinesthesia. J Neurosci 27: 7047–7053, 2007.

- Ionta S, Heydrich L, Lenggenhager B, Mouthon M, Fornari E, Chapuis D, Gassert R, Blanke O. Multisensory mechanisms in temporo-parietal cortex support self-location and first-person perspective. Neuron 70: 363–374, 2011.

- Longo MR, Schüür F, Kammers MP, Tsakiris M, Haggard P. What is embodiment? A psychometric approach. Cognition 107: 978–998, 2008.

- Mars RB, Sallet J, Schüffelgen U, Jbabdi S, Toni I, Rushworth MF. Connectivity-based subdivisions of the human right “temporoparietal junction area”: evidence for different areas participating in different cortical networks. Cereb Cortex 22: 1894–1903, 2012.

- Petkova VI, Björnsdotter M, Gentile G, Jonsson T, Li TQ, Ehrsson HH. From part- to whole-body ownership in the multisensory brain. Curr Biol 21: 1118–1122, 2011.

- Sforza A, Bufalari I, Haggard P, Aglioti SM. My face in yours: visuo-tactile facial stimulation influences sense of identity. Soc Neurosci 5: 148–162, 2010.

- Tsakiris M. My body in the brain: a neurocognitive model of body-ownership. Neuropsychologia 48: 703–712, 2010.