Abstract

Background

To make appropriate choices, organisms must weigh the costs and benefits of potentially valuable outcomes, a process known to involve the nucleus accumbens (NAc) and its dopaminergic input. However, it is currently unknown whether dopamine dynamically tracks alterations in expected reward value 'on-line' as behavioral preferences change and, if so, whether it is causally linked to specific components of value such as reward magnitude and/or delay to reinforcement.

Methods

Electrochemical methods were used to measure subsecond NAc dopamine release during a delay discounting task where magnitude was fixed but delay varied across blocks (n=7 rats). Next, to assess whether this dopamine signaling was causally related to specific components of choice behavior, we employed selective optogenetic stimulation of dopamine terminals in the NAc using a modified delay discounting task in which both delay and magnitude varied independently (n=23 rats).

Results

Cues predictive of available choices evoked dopamine release that scaled with the rats' preferred choices and shifted dynamically as the delay to reinforcement for the large reward increased. In the second experiment, dopamine signaling was causally related to features of decision making: optogenetically enhanced dopamine release within the NAc during predictive cue presentation was sufficient to alter subsequent value-related choices. Importantly, this dopamine-mediated shift in choice was limited to delay-based decisions and did not occur for magnitude-based decisions.

Conclusions

These findings indicate that NAc dopamine dynamically tracks delay discounting and establish a causal role for this signaling in a subset of value-based associative strategies.

Keywords: dopamine, decision making, nucleus accumbens, optogenetics, reward, value

Introduction

Behaviors aimed at obtaining desirable rewards require a complex assessment that compares the costs of actions (such as effort, delay to reinforcement and risk) with the benefits of the outcome. Typically, these factors vary independently, requiring a calculation of subjective tolerance to various behavioral costs versus the benefit of anticipated rewards. Integral to this decision-making process is a neural circuit that includes the nucleus accumbens (NAc) and its dopaminergic input (1–3). Dopamine neurons encode reward prediction, displaying increased activation to cues that reliably predict reward delivery (4–7), and this activity is reflected in dopamine release in the NAc (3, 8–10). Mesolimbic dopamine has also been implicated in the cost-benefit calculations necessary for value-based decision making. Patterned firing of dopamine neurons tracks choice behaviors related to effort, delay, risk and probability of reward (7, 11–14), and these signals are reflected in phasic dopamine release in the NAc (3, 10). Indeed, perturbations of mesolimbic dopamine circuitry, including the NAc, result in maladaptive decision making, such that animals cannot adjust their behavior appropriately as the value of rewards changes (15–21). Thus, the mesolimbic dopamine system not only encodes predictions of reward value but is also necessary for dynamically evaluating costs and adjusting behaviors to maximize resources.

Value-based decision making may be separable into related but distinct components. Value assessment appears to involve both outcome-based features of the association (e.g., reward magnitude) and subjective components that may vary more across individuals (e.g., impulsivity or willingness to engage in risky behaviors). Recent evidence suggests that these components may be dissociable at the neural level: dopamine signaling tracks subjective cost calculations such as effort and delay (8, 10), and disruption of the dopamine signal profoundly alters choice behaviors that vary along these subjective dimensions (20). In contrast, alterations of dopamine signaling typically have no effect on choice behavior when rats discriminate rewards of different magnitude (16, 20, 21). However, little is known about precisely how rapid dopamine signaling encodes, and may be causally linked to, subjective factors compared with outcome-based features underlying value-based decision making.

Here, we used complementary approaches to address these issues. First, fast-scan cyclic voltammetry (FSCV) in behaving rats was used to evaluate how rapid dopamine signaling in the NAc encodes dynamic changes in anticipated reward value during a task that varied subjective costs (delay to reward) across the session while keeping outcome features (reward magnitude) fixed. Rats chose between a small reward (1 pellet) available immediately after a response and a large reward (3 pellets) that was available after either no delay (0s), a short delay (10s) or a long delay (20s). We found that rapid dopamine signaling dynamically tracks this subjective decline in outcome value: dopamine release evoked by cues signaling the large reward decreased as the delay to reinforcement increased. To assess whether discrete aspects of this signaling were sufficient to support choice behavior, we next used optogenetic tools to selectively activate the NAc terminals of VTA dopamine neurons during a modified version of our task. Optogenetic stimulation of dopamine fibers in the NAc was sufficient to shift choice behavior when options varied by delay to reinforcement, but not when they varied by magnitude. These findings reveal a causal relationship between phasic dopamine release and value-based decision making under subjective, but not outcome-based, features.

Materials and Methods

Behavior

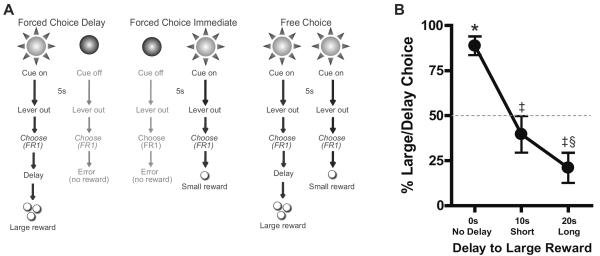

Detailed methods are described in the Supplemental Materials. Briefly, male Sprague Dawley rats (n=7; Harlan, Indianapolis, IN) were trained on a delay discounting task comprising three trial types. On forced choice delay trials (Fig. 1A, left), a cue light was illuminated for 5s, followed by extension of two levers. A single press on the lever positioned below that cue light resulted in a large reward (3 sucrose pellets) delivered after a delay. During forced choice immediate trials (Fig. 1A, middle), the other cue light was illuminated for 5s, signaling that responses on its associated lever resulted in a small (1 sucrose pellet) immediate reward. During free choice trials (Fig. 1A, right), both cue lights were illuminated for 5s, signaling that either response would be rewarded according to the contingency of the lever chosen. Importantly, each behavioral session consisted of three blocks of trials. In the first block, the large reward was delivered immediately (No-Delay block); in the second block, it was delivered 10s after the lever press (Short-Delay block); and in the last block, it was delivered after a 20s delay (Long-Delay block). Rats performed 30 trials per block: 20 forced choice trials (10 of each type) and 10 free choice trials. Voltammetric recordings were made on the last day of training.
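The block and trial structure described above can be summarized as a session schedule. The sketch below is a minimal illustration, not the authors' task code: the names `Trial`, `BLOCKS` and `build_session` are hypothetical, and it assumes that forced and free trials were shuffled within each block, which the text does not specify.

```python
import random
from dataclasses import dataclass

# Block order and delay (s) to the large reward, as described in the text.
BLOCKS = [("No-Delay", 0), ("Short-Delay", 10), ("Long-Delay", 20)]
TRIALS_PER_TYPE = 10  # 10 forced delay + 10 forced immediate + 10 free choice


@dataclass(frozen=True)
class Trial:
    block: str           # "No-Delay", "Short-Delay" or "Long-Delay"
    trial_type: str      # "forced_delay" (large-reward lever), "forced_immediate" or "free_choice"
    large_delay_s: int   # delay to the large reward in this block
    large_pellets: int = 3
    small_pellets: int = 1
    cue_s: int = 5       # cue light duration before the levers extend


def build_session(seed=None):
    """Return one 90-trial session (3 blocks x 30 trials) as Trial records.

    ASSUMPTION: trial order is shuffled within each block; the text does not
    say how forced and free trials were interleaved.
    """
    rng = random.Random(seed)
    session = []
    for block, delay in BLOCKS:
        types = (["forced_delay"] * TRIALS_PER_TYPE
                 + ["forced_immediate"] * TRIALS_PER_TYPE
                 + ["free_choice"] * TRIALS_PER_TYPE)
        rng.shuffle(types)
        session.extend(Trial(block, t, delay) for t in types)
    return session
```

Passing a fixed `seed` makes a schedule reproducible; the block order itself is fixed, matching the No-Delay, Short-Delay, Long-Delay sequence in the text.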

Figure 1.

Delay discounting task and behavior. (A) Schematic representation of the task. On Forced Choice Delay trials (left), a 5s cue predicted the opportunity to press a lever for a large reward after a period of delay. On Forced Choice Immediate trials (middle) the other cue light predicted the opportunity to respond for a small reward delivered immediately. On Free Choice trials (right) both cue lights predicted the opportunity to choose between large delayed or small immediate rewards. (B) Free choice behavior during the delay discounting task. Responses for the large reward decreased as delay to reward increased. *p<0.001 delay option chosen greater than chance; ‡p<0.05 delay option chosen less than in No Delay block; §p<0.01, delay option chosen less than chance. Data are mean ± SEM.

For the optogenetics experiment, we used a modified version of the task in a different set of animals (n=23; see Supplemental Methods for details). Here, we trained rats with a variety of delays and magnitudes across sessions, but on test days we used optogenetics to manipulate each feature of the delay discounting task independently of the other. This was accomplished with a 'delay' test day (two delays with the same magnitude) and a 'magnitude' test day (two magnitudes with the same delay). This approach was critical because an optogenetic manipulation in the traditional delay discounting task, in which the larger reward is also the delayed one, would confound the contribution of magnitude with that of delay rather than isolating either factor.

On test days, 473nm light (5s, 20Hz, 20mW, 5ms pulse width) was delivered to the NAc during the 5s cue for the less valuable option on forced choice trials (delay condition: stimulation during the forced delay cue; magnitude condition: stimulation during the forced small reward cue). No stimulation was delivered during free choice cues. This allowed us to isolate the contribution of each factor (delay or magnitude) by assessing whether increased dopamine signaling during specific forced trial types would bias preference for that option when the rat was subsequently given a choice. Critically, because stimulation was restricted to forced choice trials, free choice behavior served as a measure of learned preference for the different options, independent of any nonspecific effects of stimulation during the choice itself. All rats were tested over 3 sessions while connected to the laser: no light was delivered in the first and last sessions, and light was delivered in the middle session. Thus, each animal served as its own behavioral control for the presence and absence of stimulation.
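The stimulation parameters imply a few quantities worth making explicit: at 20Hz each pulse period is 50ms, so a 5s train contains 100 pulses of 5ms, and the light is on for 10% of the cue (0.5s in total). The sketch below computes these values; the helper names are hypothetical, and the energy estimate assumes that 20mW is the light power during each pulse, which the text does not state explicitly.

```python
def pulse_train(duration_s=5.0, freq_hz=20.0, width_ms=5.0):
    """Return (onset, offset) times in seconds for a square-pulse train."""
    period = 1.0 / freq_hz
    n_pulses = round(duration_s * freq_hz)
    width = width_ms / 1000.0
    return [(i * period, i * period + width) for i in range(n_pulses)]


def summarize(train, duration_s=5.0, power_mw=20.0):
    """Pulse count, duty cycle and delivered energy for one train.

    ASSUMPTION: power_mw is the power during each pulse, so energy in mJ is
    power (mW) multiplied by total light-on time (s).
    """
    light_on_s = sum(off - on for on, off in train)
    return {
        "n_pulses": len(train),
        "duty_cycle": light_on_s / duration_s,
        "energy_mj": power_mw * light_on_s,
    }
```

With the default arguments, `summarize(pulse_train())` reports 100 pulses, a duty cycle of 0.1 and about 10mJ per cue under the stated power assumption.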

FSCV

Rapid dopamine release was measured in the NAc core (see Fig. S2 for histology) using established procedures (10) during the task in well-trained rats. See supplemental materials for details.

Optogenetics

Male Long-Evans rats (n=23; bred in house) were infused in the VTA with a Cre-dependent adeno-associated viral construct encoding ChR2 with EYFP (AAV5-DIO-ChR2-EYFP; see Supplemental Materials for details). Optical fibers (200 μm core diameter) coupled to ferrules were chronically implanted bilaterally above the NAc. At least 8 weeks were allowed for the virus to be expressed and transported to terminals in the NAc before behavioral experiments began (22).

Results

Dopamine signaling tracks associative cue value related to subjective choice preferences

During the delay discounting task used with FSCV, rats discriminated the different reward options on both forced and free choice trials. On forced choice trials, rats responded accurately (89.6% correct), and this responding was modulated by delay costs (Fig. S1A–D). On free choice trials, rats' initial preference for the large-reward lever decreased as the delay to that outcome increased across blocks (Fig. 1B; F(2,7)=21.68, p<0.001). In the No-Delay block, rats strongly preferred the large (immediate) option, choosing it significantly more often than chance (t(7)=7.519, p<0.001). In the Short-Delay block (delay to the large reward increased to 10s), preference for the large-delay lever fell to a level that did not differ from chance, so rats chose it about as often as the small-immediate option (t(7)=1.097, p=0.31). In the Long-Delay block (large reward delay: 20s), rats strongly preferred the small-immediate option, selecting it well above chance (t(7)=3.742, p<0.01).
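The comparisons against chance above are one-sample tests of per-rat free-choice preference against 50%. A minimal sketch of that computation follows; the function names are hypothetical, it assumes each rat contributes one percentage per block, and it returns only the t statistic and its degrees of freedom (n − 1 for n rats).

```python
import math
import statistics


def percent_chosen(choices, option):
    """Percent of one rat's free-choice trials on which `option` was chosen."""
    if not choices:
        raise ValueError("no free-choice trials")
    return 100.0 * sum(c == option for c in choices) / len(choices)


def one_sample_t(values, chance=50.0):
    """t statistic and degrees of freedom for H0: mean(values) == chance.

    `values` holds one preference percentage per rat for a single block.
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two rats")
    standard_error = statistics.stdev(values) / math.sqrt(n)
    return (statistics.mean(values) - chance) / standard_error, n - 1
```

A p-value can then be obtained from the t distribution, for example with `scipy.stats.ttest_1samp(values, 50.0)`, which performs the same test.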

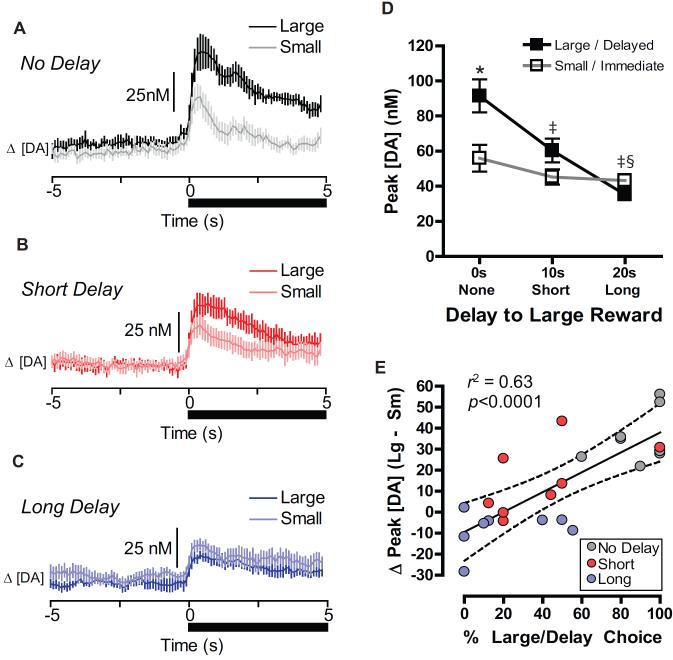

Reward-predictive cues evoke phasic increases in NAc core dopamine that scale with the predicted value of outcomes (3, 8, 10). On forced choice trials, phasic dopamine release followed presentation of reward-predictive cues in each of the delay blocks. However, the peak amplitude (greatest concentration within 1s following cue onset) of cue-evoked dopamine release varied systematically with both reward magnitude (small versus large; F(1,7)=13.62, p<0.01) and delay block (No-Delay, Short-Delay, Long-Delay; F(2,14)=13.33, p<0.001) (Fig. 2A–C). Importantly, a significant interaction between magnitude and delay, F(2,14)=47.6, p<0.0001, indicated that dopamine signaled the relative value of each cue type as it changed with increasing delays (Fig. 2D). During the No-Delay block, rats displayed both a strong behavioral preference for the large reward (Fig. 1B) and significantly greater peak cue-evoked dopamine for that option on forced choice trials (Tukey: p<0.001; Fig. 2A,D). Similarly, in the Short-Delay block, the large-delayed cue evoked more dopamine than the small-immediate cue (Tukey: p<0.01; Fig. 2B,D). However, in the Long-Delay block, rats shifted to a strong preference for the small immediate reward (Fig. 1B), and dopamine release during the large-delayed cue was significantly lower than during the small-immediate cue (t(7)=2.447, p=0.04). Indeed, cue-evoked dopamine amplitude was significantly correlated with subsequent behavioral preference on free choice trials (r²=0.63, p<0.001), indicating that NAc dopamine encoded, and rapidly updated, information that tracked subsequent choice behavior (Fig. 2E).
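The peak-amplitude measure defined above (greatest concentration within 1s of cue onset) can be sketched as follows. This is an illustration under stated assumptions, not the authors' analysis pipeline: it takes one trial's background-subtracted concentration trace with matching sample times, treats both window edges as inclusive, and the function names are hypothetical.

```python
def peak_after_cue(concentration, times, cue_onset=0.0, window_s=1.0):
    """Greatest concentration from cue onset up to `window_s` seconds after it.

    `concentration` and `times` are parallel sequences for one trial, with
    times in seconds on the same clock as `cue_onset`.
    ASSUMPTION: both window edges are inclusive.
    """
    in_window = [c for c, t in zip(concentration, times)
                 if cue_onset <= t <= cue_onset + window_s]
    if not in_window:
        raise ValueError("no samples in the post-cue window")
    return max(in_window)


def mean_peak(trials, times, **kwargs):
    """Average the per-trial peaks for one cue type (e.g. forced Large/Delay)."""
    peaks = [peak_after_cue(trial, times, **kwargs) for trial in trials]
    return sum(peaks) / len(peaks)
```

Samples before cue onset and after the 1s window are ignored, so a later rise (for example around the lever press) does not contaminate the cue-evoked peak.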

Figure 2.

Dopamine release encodes the relative value of cue presentations during delay discounting. Dopamine concentration aligned to cue onset (black bar, time 0 s) on forced large versus forced small reward trials during the (A) No Delay, (B) Short Delay, and (C) Long Delay blocks. (D) Peak dopamine concentration following cue onset for forced Large/Delay cues (black squares) and forced Small/Immediate cues (open squares) across each reward delay block. Dopamine release was greatest for the Large option when delivered immediately, but decreased as delays to reinforcement increased. *p<0.05 Large/Delay greater than Small/Immediate. ‡p<0.05 Large/Delay option less than Large/Delay (No Delay block). §p<0.05 Small/Immediate greater than Large/Delay. Data are mean ± SEM. (E) Correlation of behavioral preference (% Large/Delay option chosen within a block) with dopamine difference score (peak dopamine for the Large/Delay option minus peak dopamine for the Small/Immediate option within a block) for each animal during each of the three blocks of the task.
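The Fig. 2E analysis pairs, for each animal in each block, a dopamine difference score (peak Large/Delay minus peak Small/Immediate) with the percentage of Large/Delay choices, so 7 rats × 3 blocks gives 21 points. A minimal sketch of the score and of the correlation follows, assuming an ordinary Pearson correlation; the function names are hypothetical.

```python
import math


def difference_score(peak_large_delay, peak_small_immediate):
    """Dopamine difference score for one rat in one block (same units as peaks)."""
    return peak_large_delay - peak_small_immediate


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

r² is then `pearson_r(scores, pct_large) ** 2`, where each index pairs one rat's difference score in a block with its % Large/Delay choices in the same block.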

Phasic dopamine signaling encodes relative values during behavioral choices

Dopamine release during cues may signal either the value of the best available option or the specific action selected; these are conflated on forced choice trials (8, 10). To dissociate them, we evaluated cue-evoked dopamine release during free choice trials. Free choice cues evoked significant increases in dopamine (Fig. S3A) that decreased across trial blocks as the temporal delay reduced the subjective value of expected rewards, F(2,14)=9.75, p=0.002 (Fig. S3B). Notably, this dopamine release did not differ significantly from that evoked by the behaviorally preferred option on forced choice trials (all p>0.05), consistent with previous FSCV work (8, 10) and recordings of VTA neural activity (7, 23).

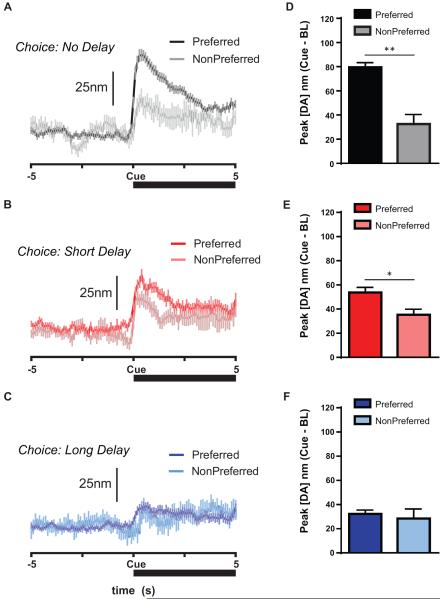

To examine whether dopamine also reflected the expected value of the chosen option, we analyzed trial-by-trial dopamine traces during free choice cues according to whether each subject subsequently chose its preferred or non-preferred option within that block. A significant main effect of Preference, F(1,213)=21.32, p<0.0001, indicated that individual preferences influenced dopamine signaling during the free choice cue (Fig. 3A–F), with greater peak dopamine when rats subsequently chose their preferred option. However, as on forced choice trials, a significant Delay Block X Preference interaction (F(2,213)=5.51, p<0.005) qualified this effect: the free choice cue evoked more dopamine when the rat subsequently chose its preferred rather than its non-preferred option in both the No-Delay block (Kolmogorov-Smirnov [K-S] D=0.65, p<0.005; Fig. 3B) and the Short-Delay block (K-S D=0.42, p<0.02; Fig. 3D), but this difference did not persist in the Long-Delay block (p=0.75; Fig. 3F). Peak dopamine release decreased across blocks when the rat chose its preferred option (No-Delay versus Short-Delay, K-S D=0.36, p<0.005; Short-Delay versus Long-Delay, K-S D=0.40, p<0.001) but did not change across delay blocks when it chose the non-preferred option (K-S: all p>0.50). Indeed, for each subject's preferred option within a delay block, peak dopamine for the preferred forced choice cue correlated significantly with cue-evoked dopamine on free choice trials when the rat subsequently chose its preferred option (r=0.64, F(1,22)=15.35, p<0.001), but not when it chose its non-preferred option (p=0.43; Fig. S4). Finally, this predictive scaling of dopamine signaling was largely limited to the cue: there were no preference-related differences during the lever press (Fig. S5A), although there was a modest decrease after the press that was related not to reward size but to the long delay for the larger option (Fig. S5B). Thus, dopamine signaled information about the relative value of options within a block, and this information was selective to predictive cues.
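The preference split and the K-S comparisons above can be sketched in a few lines. In this illustration (hypothetical function names, not the authors' code), the preferred option for one rat in one block is the option chosen on most of its free-choice trials, following the 50% criterion in the Fig. 3 legend; an exact 50/50 split is treated as no preference, which is an assumption because the text does not say how ties were handled. `ks_d` returns only the two-sample Kolmogorov-Smirnov D statistic, the largest gap between the two empirical distribution functions.

```python
from bisect import bisect_right
from collections import Counter


def preferred_option(choices):
    """Option chosen on most of one block's free-choice trials for one rat.

    ASSUMPTION: an exact tie returns None (no preferred option).
    """
    ranked = Counter(choices).most_common()
    if not ranked:
        raise ValueError("no free-choice trials")
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]


def split_by_preference(choices, peaks):
    """Split per-trial cue peaks by whether the preferred option was chosen."""
    pref = preferred_option(choices)
    if pref is None:
        raise ValueError("no preferred option in this block")
    preferred = [p for c, p in zip(choices, peaks) if c == pref]
    non_preferred = [p for c, p in zip(choices, peaks) if c != pref]
    return preferred, non_preferred


def ks_d(a, b):
    """Two-sample Kolmogorov-Smirnov D statistic."""
    a, b = sorted(a), sorted(b)
    d = 0.0
    # Both empirical CDFs are step functions that jump only at sample values,
    # so the largest gap occurs at one of the pooled sample points.
    for x in a + b:
        gap = abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b))
        d = max(d, gap)
    return d
```

`scipy.stats.ks_2samp(a, b)` returns the same D statistic together with a p-value.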

Optical stimulation of terminal dopamine during cues modulates choice behavior

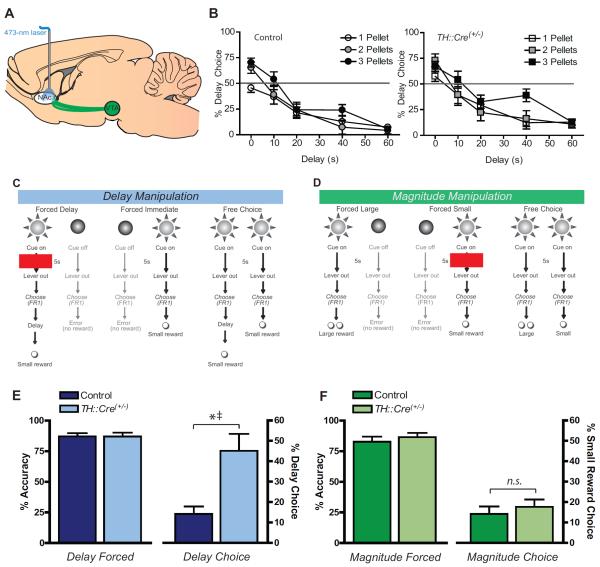

While the above findings indicate a role for dopamine signaling in encoding predicted value, they do not establish a causal relationship between relative dopamine signaling and choice behavior. To test whether dopamine signaling is sufficient to bias the value encoding that guides choice behavior, we used a transgenic line of rats expressing Cre recombinase in tyrosine hydroxylase (TH) neurons (TH∷Cre(+/−)) (22) and their non-transgenic littermate controls. All rats were injected in the VTA with a Cre-dependent virus to induce ChR2 expression selectively in dopamine neurons (Fig. S6A). Optical fibers placed in the NAc allowed selective activation of dopamine terminals to induce release (Fig. 4A). Optical stimulation parameters (473nm laser; 5s, 20Hz, 20mW, 5ms pulsewidth) were chosen on the basis of in vivo FSCV measurements of dopamine release evoked by different patterns of laser light delivered to dopamine cells (see Supplemental Methods, Fig. S6). As in previous reports (22, 24, 25), the behavioral efficacy of these settings was confirmed by TH∷Cre(+/−) rats' willingness to self-administer light to the NAc (Fig. S7).

Figure 4.

Optical stimulation of dopamine terminals modulates delay-based but not magnitude-based decision making. (A) Schematic representation of the optogenetic technique. A Cre-dependent ChR2 virus was injected bilaterally into the VTA of both TH∷Cre(+/−) and control rats, but ChR2 was expressed only in the TH∷Cre(+/−) subjects. In all subjects, bilateral optical fibers were aimed at the NAc. (B) Discounting behavior for control (left) and TH∷Cre(+/−) animals (right), showing the percentage of presses on the large delayed lever plotted against the delay to reward. Animals discriminated between reward magnitudes, pressing for the larger reward significantly more often than chance when no delay was imposed, and their preference for the larger option decreased as delays increased. There were no differences between groups in delay discounting behavior. (C) Schematic of the task design for the optogenetic delay manipulation. Presses on one lever (Immediate) resulted in a small reward delivered immediately, whereas presses on the other lever (Delay) resulted in a small reward delivered after a 10s delay. All rats received optical stimulation of dopamine afferents in the NAc during the cue on forced choice delay trials, but not during the cue on forced immediate or free choice trials. (D) Schematic of the task design for the optogenetic magnitude manipulation. Presses on one lever (Small) resulted in one pellet delivered immediately, whereas presses on the other lever (Large) resulted in two pellets delivered immediately. All rats received optical stimulation of dopamine afferents in the NAc during the cue on forced choice small trials, but not during the cue on forced large or free choice trials. (E) Left: Percent accuracy on forced trials in control (n=12; dark blue) and TH∷Cre(+/−) rats (n=11; pale blue) during Delay Test decision making sessions. Laser stimulation during the Forced Choice Delay cue had no effect on forced choice accuracy in either group. Right: No laser stimulation was delivered during Free Choice trials, so choice behavior was determined by value learned during forced choice trials. In sessions in which rats received laser stimulation during the Forced Choice Delay cue, TH∷Cre(+/−) rats subsequently showed a significant increase in preference for the Delay lever compared with both stimulated control animals and their own no-stimulation sessions. *p<0.05, greater than stimulated controls; ‡p<0.05, greater than no-stimulation condition. (F) Left: Percent accuracy on forced trials in control (n=9; dark green) and TH∷Cre(+/−) (n=7; pale green) animals during Magnitude Test decision making sessions. Laser stimulation during the Forced Choice Small cue had no effect on either group's accuracy. Right: Subsequent preference for the Small reward option on Free Choice trials was similar for control and TH∷Cre(+/−) subjects, as both groups preferred the Large option equally. Data shown are mean ± SEM.

Next, we coupled this optogenetic approach with a modified version of our delay discounting task, designed to allow the delay (range: 0–60s) and magnitude (range: 1–3 pellets) components to vary independently across sessions (see Supplemental Methods for details). During this task, accuracy on forced choice trials did not differ between transgenic groups (TH∷Cre(+/−): 83.3% versus controls: 82.9%; Group: F(1,45)=0.034, p=0.85). On free choice trials, all rats preferred larger rewards (Magnitude: F(2,62)=4.03, p=0.022) and showed a strong bias toward immediate reinforcement (Delay: F(4,248)=115.6, p<0.0001), although the preference for larger rewards dissipated as delays to reinforcement increased (Magnitude X Delay: F(8,248)=3.32, p=0.0012; Fig. 4B). There was no main effect of Group, F(1,62)=2.07, p=0.155, and no interaction of Group with Magnitude or Delay (all interactions, p>0.30).

We then tested whether optogenetically enhanced dopamine signaling was sufficient to alter subsequent choice behavior. In our FSCV experiment, cue-evoked dopamine signaling scaled with the rats' subjective preferences. If dopamine amplitude contributes to expected value encoding, then enhancing dopamine signaling during cues for the less preferred option should bias animals toward that option when they are subsequently given a choice. To test this hypothesis, rats received optical stimulation (5s, 20Hz, 20mW, 5ms pulsewidth) of dopaminergic terminals in the NAc selectively during the forced choice cue associated with the lower-value option (Fig. 4C–D), and their behavior was compared with their performance on the same discrimination without stimulation. Importantly, no stimulation was given during free choice cues, so these choice trials measured learned preference for one option over the other rather than any nonspecific effects of stimulation. Optical fiber tip locations within the NAc for the delay and magnitude tests are shown in Figs. S8 and S9.

The first experiment (Delay Test) used a delay manipulation (Fig. 4C) in which rats discriminated an Immediate option (1 pellet, 0s delay) from a Delay option (1 pellet, 10s delay). During these sessions, 5s optical stimulation delivered only during the Delay cue of forced choice trials had no effect on accuracy on those trials, either between groups (Fig. 4E, left) or relative to no-stimulation sessions (Fig. S10A; Group: F(1,21)=0.55, p=0.47; Stimulation: F(1,21)=1.39, p=0.26; Group X Stimulation: F(1,21)=1.06, p=0.31). In contrast, optical stimulation during the forced Delay cue biased TH∷Cre(+/−) rats, but not controls, toward choosing this option on subsequent free choice trials (Group X Stimulation, F(1,21)=7.95, p=0.010; Fig. 4E, right). Specifically, TH∷Cre(+/−) rats responded significantly more for the Delay option than controls did (Tukey: p<0.001) and than they themselves did in the no-stimulation session (Tukey: p<0.02). Controls showed no difference in choice behavior between stimulation and no-stimulation sessions (Tukey: p=0.21). Further, TH∷Cre(+/−) and control rats did not differ from each other in sessions in which no light was delivered (Tukey: p=0.22; Fig. S10A).
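Because each rat was tested in a no-light, light, no-light sequence, the stimulation effect in the Delay Test can be summarized per rat as a change in free-choice preference for the Delay option, and the Group X Stimulation interaction corresponds to the difference between the groups' mean changes. The sketch below computes these descriptive quantities only (it is not the ANOVA reported above); the function names are hypothetical, and using the mean of the two no-light sessions as each rat's baseline is an assumption, since the text does not say which no-light sessions entered the comparison.

```python
from statistics import mean


def stim_shift(pct_no_light_first, pct_light, pct_no_light_last):
    """Change in % Delay choices on free-choice trials under stimulation.

    ASSUMPTION: the baseline is the mean of the two no-light sessions.
    """
    baseline = (pct_no_light_first + pct_no_light_last) / 2
    return pct_light - baseline


def interaction_contrast(cre_shifts, control_shifts):
    """Difference of group-mean shifts: the 2 x 2 Group X Stimulation contrast.

    A positive value means stimulation moved TH::Cre(+/-) rats toward the
    stimulated option more than it moved controls.
    """
    return mean(cre_shifts) - mean(control_shifts)
```

The same two functions apply unchanged to the Magnitude Test, with % Small choices in place of % Delay choices.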

In a second experiment (Magnitude Test), we manipulated reward size while keeping the delay to reinforcement fixed (Fig. 4D). Here, rats chose between a Small (1 pellet) and a Large (2 pellet) reward, both delivered immediately after the lever press (0s delay). As in the Delay Test, stimulation had no effect on forced choice accuracy in either stimulation (Fig. 4F, left) or no-stimulation (Fig. S10B) sessions (ANOVA Group, F(1,14)=0.05, p=0.82; Stimulation, F(1,14)=0.71, p=0.41; Group X Stimulation, F(1,14)=2.56, p=0.13). In contrast with the Delay Test, stimulation during Magnitude sessions had no discernible effect on subsequent choice behavior (Fig. 4F, right): there was no effect of Group, F(1,14)=0.24, p=0.63, Stimulation, F(1,14)=1.02, p=0.33, or Group X Stimulation, F(1,14)=0.01, p=0.92. Regardless of group or stimulation, all subjects preferred the Large reward option on free choice trials (all Tukey comparisons Small versus Large, p<0.001; Fig. S5B). Thus, optogenetically enhanced dopamine signaling during cues was sufficient to alter value-based decisions when options differed in delay, but not when they differed only in reward magnitude.

Discussion

The present findings reveal a role for dopaminergic input to the NAc in mediating discrete aspects of value-based decision making. Using FSCV in a delay discounting task in which reward magnitudes were constant but delays to reinforcement shifted, we found that dopamine release during predictive cues differentiated between options of different value, reflected the rats' preferred responses on forced choice trials, tracked the value of the preferred choices, and was dynamically modulated by delay costs. Moreover, cue-evoked dopamine signaling during delay discounting was not merely correlated with value-based preferences. Using a modified version of the delay discounting task that allowed us to manipulate delay costs and reward magnitude independently, we found that optogenetically enhanced dopamine release within the NAc during predictive cues was sufficient to shift subsequent free choice responses. This effect was specific: enhanced dopamine shifted choice behavior in delay-based discriminations but not in magnitude-based discriminations. These findings show a critical role for dopamine signaling in value-based decision making and reveal a differential role for this signal in distinct aspects of value encoding.

The importance of dopaminergic signaling in tracking subjective costs has been demonstrated for delay, effort and risk-taking behaviors (17, 18, 20, 26), although it was not previously known which features of the dopamine signal were critical for these processes (27). Here, using a delay discounting task, we show that dopamine signaling tracks two important features of predicted value necessary for subsequent choice selection. First, dopamine was relatively greater for cues associated with the preferred option within a given block, indicating that this signaling is important for biasing animals toward favorable outcomes within a given context. Second, dopamine did not follow a "winner-take-all" pattern in which preferred options evoked maximal dopamine and non-preferred options evoked minimal dopamine. Instead, dopamine signals provided a graded and dynamic prediction of subjective value that shifted rapidly as delay costs increased across blocks. Specifically, while peak dopamine for the Small/Immediate option did not vary across blocks, dopamine for the Large/Delay option was sensitive to delay costs and decreased across blocks accordingly. This provides further evidence that dopamine signaling serves as a consistent neural currency reflecting not only the relative value of options within a context but perhaps also the additional value to be gained from the more advantageous option (23).

We also show that phasic dopamine release within the NAc is modulated by choice behavior. Previous work has suggested that dopamine signals encode the best available option regardless of the action chosen (7, 8, 10). Here, however, dopamine signaling during the choice cue reflected a dynamic prediction of subjective value that was weighted by preference for the subsequent choice. Thus, phasic dopamine during the cue signaled the best available option, but only when this information was integrated into selection of the preferred action. This observation differs substantially from previous reports (in particular, 7), although several important differences may account for this discrepancy. First, terminal dopamine release within the NAc may diverge from the impulse activity of recorded VTA neurons, either because the recorded neurons did not project to the NAc core or because other striatal inputs additionally regulate dopamine terminals within the NAc. In both cases, VTA activity and the resulting dopamine release within the NAc may differ in subtle but important ways. Second, earlier tasks (7, 8) used choices in which one option was clearly superior to the other (e.g., less versus more effort for the same reward, or a large versus a small reward). In contrast, the present delay discounting tasks are more ambiguous, as the cost-benefit weighting varied considerably between individuals for the same parameters. This ambiguity may have made preference-dependent signals easier to detect. In support, we have recently shown that NAc neural activity in both core and shell tracks information about subjective preferences during choice cues in a similar risk-based decision task (28).

We also report that optogenetic enhancement of the dopamine signal was sufficient to bias later choices in a value-based decision-making task. However, enhanced dopamine signaling shifted preferences for only a subset of choice discriminations. When rats chose between rewards of the same magnitude (1 pellet) delivered at different delays (immediately versus after 10s), enhanced dopamine release during the delayed cue was sufficient to shift preference toward that option. In contrast, when rats chose between rewards of different magnitude (1 versus 2 pellets) delivered at the same delay (both immediate), optically enhanced dopamine signaling during the smaller reward cue failed to shift preference toward that option. Thus, dopamine signaling was sufficient to modulate choice behavior for some (delay costs), but not all (magnitude), assessments of expected value. Compellingly, a recent study showed that brief inhibition of dopamine signaling during decision making shifts rats away from advantageous choices (21); together with the present findings, this argues for both a necessary and a sufficient role for dopamine in decision making.

In naturalistic settings, delays to reinforcement entail lost opportunities to obtain other food and can increase the risk of predation, although waiting may yield superior rewards. Conversely, an impulsive strategy of choosing smaller but more immediate rewards accrues less total reward but limits exposure to risk. Thus, weighing these costs and benefits often does not produce an obvious solution. Dopamine signaling appears to bias animals' subjective strategies for resource maximization under these uncertain conditions. In contrast, magnitude is a more intrinsic feature of reward value: assuming equal time and effort to obtain two rewards of different size, animals will reliably prefer the larger option. Additional dopamine signaling had no appreciable effect on subsequent magnitude-based decisions, consistent with related findings (16, 20, 21), reinforcing the idea that dopamine modulates subjective factors of value during decision making.

Value-based differences in NAc dopamine signaling have been reported under multiple subjective cost conditions. For example, when rats had to expend more effort to obtain reinforcement, cues predicting the effortful option evoked less dopamine release than cues predicting the easier option, even though reward magnitude was the same for each choice (8). Similarly, in a risk-based decision task in which two options had equal expected value but differed in reward probability, rats typically expressed a strong preference for one of the choices (10). Dopamine release in this task tracked subjective preference rather than expected value, and NAc neurons likewise showed differential firing patterns that reflected these preferences during choice behavior (29). More recently, transient inhibition of dopamine signaling during a risk-based task was sufficient to shift probability-based, but not magnitude-based, decisions (21), while evidence from humans similarly indicates that cues predicting high-value options based on reward probability or delay (but not reward size) recruit greater ventral striatal activity (30, 31).
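The distinction between expected value and subjective preference in the risk-based task can be illustrated with a short sketch. The option values (1 pellet with certainty versus 2 pellets with probability 0.5) are hypothetical choices that give matched expected values, and the power utility function u(x) = x^alpha is an assumed textbook model of risk attitude, not the analysis used in (10). With expected value held equal, the predicted choice depends entirely on the assumed risk-attitude parameter.

```python
def expected_value(pellets, probability):
    """Objective expected value: reward magnitude times probability of delivery."""
    return pellets * probability


def expected_utility(pellets, probability, alpha):
    """Subjective value under an assumed power utility u(x) = x ** alpha.

    alpha < 1 models risk aversion, alpha > 1 models risk seeking.
    """
    return probability * pellets ** alpha


SAFE = (1, 1.0)   # hypothetical: 1 pellet delivered on every choice
RISKY = (2, 0.5)  # hypothetical: 2 pellets delivered on half of choices


def choice(alpha):
    """Option with the higher expected utility (ties go to the safe option)."""
    safe_u = expected_utility(*SAFE, alpha)
    risky_u = expected_utility(*RISKY, alpha)
    return "safe" if safe_u >= risky_u else "risky"


print(expected_value(*SAFE), expected_value(*RISKY))  # equal expected values
print(choice(0.5), choice(1.5))                       # risk-averse vs risk-seeking
```

Here choice(0.5) returns "safe" and choice(1.5) returns "risky", even though both options have an expected value of 1 pellet; a preference in such a task therefore reflects something other than expected value alone.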

In conclusion, we have shown that the dopamine signal within the NAc core plays a central role in encoding value-based associative information that guides subsequent behavior. Not only is this signal highly dynamic, changing rapidly as conditions shift, but it is also sufficient to bias decisions based on delay, but not on magnitude. Thus, the present data suggest a direct causal link between dopamine signaling and components of subjective value-based decision making.

Supplementary Material

Figure 3.

During free-choice trials, dopamine release during choice cue presentation scaled with both subsequent choice and subjective preference. (A–C) Average trial-by-trial dopamine signals aligned to choice cue onset (Cue), separated by whether the animal subsequently chose its Preferred option (chosen on at least 50% of trials within that block; dark colors) or its Non-Preferred option (light colors). (D–F) Mean peak dopamine signal following choice cue onset was greater when rats chose their Preferred option than when they chose their Non-Preferred option; this effect was strongest in the No Delay block (D; **p<0.01), moderate in the Short Delay block (E; *p<0.05), and absent in the Long Delay block (F). All data are mean ± SEM.
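The Preferred/Non-Preferred split described in this caption can be sketched as follows. The trial record format, key names and peak values are hypothetical (arbitrary units, not data from this study), and the function only reproduces the grouping and averaging step, not the statistical comparison. With two options per block, the most frequently chosen option is the one selected on at least 50% of trials; an exact 50/50 tie is resolved toward the option chosen first, which is an assumed rule because the caption does not specify one.

```python
from collections import Counter, defaultdict
from statistics import mean


def split_by_preference(trials):
    """Average peak dopamine per block for Preferred vs Non-Preferred choices.

    `trials` is a list of dicts with hypothetical keys:
      'block'  - block label, e.g. 'No Delay', 'Short Delay', 'Long Delay'
      'choice' - the option chosen on that free-choice trial
      'peak'   - peak dopamine signal after choice cue onset
    """
    by_block = defaultdict(list)
    for trial in trials:
        by_block[trial["block"]].append(trial)

    summary = {}
    for block, block_trials in by_block.items():
        counts = Counter(t["choice"] for t in block_trials)
        # With two options, the most common one is chosen on >= 50% of trials.
        # most_common() orders equal counts by first appearance (assumed tie rule).
        preferred = counts.most_common(1)[0][0]
        pref = [t["peak"] for t in block_trials if t["choice"] == preferred]
        nonpref = [t["peak"] for t in block_trials if t["choice"] != preferred]
        summary[block] = {
            "preferred": preferred,
            "mean_peak_preferred": mean(pref),
            "mean_peak_nonpreferred": mean(nonpref) if nonpref else None,
        }
    return summary


# Synthetic illustration only (arbitrary units).
trials = [
    {"block": "No Delay", "choice": "large", "peak": 4.0},
    {"block": "No Delay", "choice": "large", "peak": 2.0},
    {"block": "No Delay", "choice": "small", "peak": 1.0},
    {"block": "Long Delay", "choice": "small", "peak": 2.0},
    {"block": "Long Delay", "choice": "small", "peak": 3.0},
    {"block": "Long Delay", "choice": "large", "peak": 2.0},
]
print(split_by_preference(trials))
```

Grouping by block first matters because, as in the caption, the Preferred option can differ between blocks as the delay to the large reward changes.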

Acknowledgements

We are grateful for outstanding technical support from Xuefei Wang. This research was supported by DA034021 to RMC, DA030307 to JAS and DA035322 to MPS.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

The authors report no biomedical financial interests or potential conflicts of interest.

References

- 1. Fields HL, Hjelmstad GO, Margolis EB, Nicola SM. Ventral tegmental area neurons in learned appetitive behavior and positive reinforcement. Annu Rev Neurosci. 2007;30:289–316. doi: 10.1146/annurev.neuro.30.051606.094341.

- 2. Clark JJ, Hollon NG, Phillips PE. Pavlovian valuation systems in learning and decision making. Curr Opin Neurobiol. 2012;22:1054–1061. doi: 10.1016/j.conb.2012.06.004.

- 3. Day JJ, Roitman MF, Wightman RM, Carelli RM. Associative learning mediates dynamic shifts in dopamine signaling in the nucleus accumbens. Nat Neurosci. 2007;10:1020–1028. doi: 10.1038/nn1923.

- 4. Pan WX, Schmidt R, Wickens JR, Hyland BI. Dopamine cells respond to predicted events during classical conditioning: Evidence for eligibility traces in the reward-learning network. J Neurosci. 2005;25:6235–6242. doi: 10.1523/JNEUROSCI.1478-05.2005.

- 5. Waelti P, Dickinson A, Schultz W. Dopamine responses comply with basic assumptions of formal learning theory. Nature. 2001;412:43–48. doi: 10.1038/35083500.

- 6. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- 7. Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci. 2007;10:1615–1624. doi: 10.1038/nn2013.

- 8. Day JJ, Jones JL, Wightman RM, Carelli RM. Phasic nucleus accumbens dopamine release encodes effort- and delay-related costs. Biol Psychiatry. 2010;68:306–309. doi: 10.1016/j.biopsych.2010.03.026.

- 9. Jones JL, Day JJ, Aragona BJ, Wheeler RA, Wightman RM, Carelli RM. Basolateral amygdala modulates terminal dopamine release in the nucleus accumbens and conditioned responding. Biol Psychiatry. 2010;67:737–744. doi: 10.1016/j.biopsych.2009.11.006.

- 10. Sugam JA, Day JJ, Wightman RM, Carelli RM. Phasic nucleus accumbens dopamine encodes risk-based decision-making behavior. Biol Psychiatry. 2012;71:199–205. doi: 10.1016/j.biopsych.2011.09.029.

- 11. Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299:1898–1902. doi: 10.1126/science.1077349.

- 12. Tobler PN, Fiorillo CD, Schultz W. Adaptive coding of reward value by dopamine neurons. Science. 2005;307:1642–1645. doi: 10.1126/science.1105370.

- 13. Kobayashi S, Schultz W. Influence of reward delays on responses of dopamine neurons. J Neurosci. 2008;28:7837–7846. doi: 10.1523/JNEUROSCI.1600-08.2008.

- 14. Fiorillo CD. Transient activation of midbrain dopamine neurons by reward risk. Neuroscience. 2011;197:162–171. doi: 10.1016/j.neuroscience.2011.09.037.

- 15. Cardinal RN, Pennicott DR, Sugapathala SL, Robbins TW, Everitt BJ. Impulsive choice induced in rats by lesions of the nucleus accumbens core. Science. 2001;292:2499–2501. doi: 10.1126/science.1060818.

- 16. Ghods-Sharifi S, Floresco SB. Differential effects on effort discounting induced by inactivations of the nucleus accumbens core or shell. Behav Neurosci. 2010;124:179–191. doi: 10.1037/a0018932.

- 17. St Onge JR, Chiu YC, Floresco SB. Differential effects of dopaminergic manipulations on risky choice. Psychopharmacology (Berl). 2010;211:209–221. doi: 10.1007/s00213-010-1883-y.

- 18. St Onge JR, Floresco SB. Dopaminergic modulation of risk-based decision making. Neuropsychopharmacology. 2009;34:681–697. doi: 10.1038/npp.2008.121.

- 19. Salamone JD, Arizzi MN, Sandoval MD, Cervone KM, Aberman JE. Dopamine antagonists alter response allocation but do not suppress appetite for food in rats: Contrast between the effects of SKF 83566, raclopride, and fenfluramine on a concurrent choice task. Psychopharmacology (Berl). 2002;160:371–380. doi: 10.1007/s00213-001-0994-x.

- 20. Stopper CM, Khayambashi S, Floresco SB. Receptor-specific modulation of risk-based decision making by nucleus accumbens dopamine. Neuropsychopharmacology. 2013;38:715–728. doi: 10.1038/npp.2012.240.

- 21. Stopper CM, Tse MT, Montes DR, Wiedman CR, Floresco SB. Overriding phasic dopamine signals redirects action selection during risk/reward decision making. Neuron. 2014. doi: 10.1016/j.neuron.2014.08.033.

- 22. Witten IB, Steinberg EE, Lee SY, Davidson TJ, Zalocusky KA, Brodsky M, et al. Recombinase-driver rat lines: Tools, techniques, and optogenetic application to dopamine-mediated reinforcement. Neuron. 2011;72:721–733. doi: 10.1016/j.neuron.2011.10.028.

- 23. Lak A, Stauffer WR, Schultz W. Dopamine prediction error responses integrate subjective value from different reward dimensions. Proc Natl Acad Sci U S A. 2014;111:2343–2348. doi: 10.1073/pnas.1321596111.

- 24. Steinberg EE, Boivin JR, Saunders BT, Witten IB, Deisseroth K, Janak PH. Positive reinforcement mediated by midbrain dopamine neurons requires D1 and D2 receptor activation in the nucleus accumbens. PLoS One. 2014;9:e94771. doi: 10.1371/journal.pone.0094771.

- 25. Steinberg EE, Keiflin R, Boivin JR, Witten IB, Deisseroth K, Janak PH. A causal link between prediction errors, dopamine neurons and learning. Nat Neurosci. 2013;16:966–973. doi: 10.1038/nn.3413.

- 26. Floresco SB, Tse MT, Ghods-Sharifi S. Dopaminergic and glutamatergic regulation of effort- and delay-based decision making. Neuropsychopharmacology. 2008;33:1966–1979. doi: 10.1038/sj.npp.1301565.

- 27. Winstanley CA, Theobald DE, Dalley JW, Robbins TW. Interactions between serotonin and dopamine in the control of impulsive choice in rats: Therapeutic implications for impulse control disorders. Neuropsychopharmacology. 2005;30:669–682. doi: 10.1038/sj.npp.1300610.

- 28. Sugam JA, Saddoris MP, Carelli RM. Nucleus accumbens neurons track behavioral preferences and reward outcomes during risky decision making. Biol Psychiatry. 2014;75:807–816. doi: 10.1016/j.biopsych.2013.09.010.

- 29. Sugam JA, Saddoris MP, Carelli RM. Nucleus accumbens neurons track behavioral preferences and reward outcomes during risky decision making. Biol Psychiatry. 2013. doi: 10.1016/j.biopsych.2013.09.010.

- 30. Kable JW, Glimcher PW. The neural correlates of subjective value during intertemporal choice. Nat Neurosci. 2007;10:1625–1633. doi: 10.1038/nn2007.

- 31. Tobler PN, O'Doherty JP, Dolan RJ, Schultz W. Reward value coding distinct from risk attitude-related uncertainty coding in human reward systems. J Neurophysiol. 2007;97:1621–1632. doi: 10.1152/jn.00745.2006.
