Abstract

Introduction

The aims of this article were to introduce the capability to view and interact with 3-dimensional (3D) surface models in online publications, and to describe how to prepare surface models for such online 3D visualizations.

Methods

Three-dimensional image analysis methods include image acquisition, construction of surface models, registration in a common coordinate system, visualization of overlays, and quantification of changes. Cone-beam computed tomography scans were acquired as volumetric images that can be visualized as 3D projected images or used to construct polygonal meshes or surfaces of specific anatomic structures of interest. The anatomic structures of interest in the scans can be labeled with color (3D volumetric label maps), and then the scans are registered in a common coordinate system using a target region as the reference. The registered 3D volumetric label maps can be saved in .obj, .ply, .stl, or .vtk file formats and used for overlays, quantification of differences in each of the 3 planes of space, or color-coded graphic displays of 3D surface distances.

Results

All registered 3D surface models in this study were saved in .vtk file format and loaded in the Elsevier 3D viewer. In this study, we describe possible ways to visualize the surface models constructed from cone-beam computed tomography images using 2D and 3D figures. The 3D surface models are available in the article’s online version for viewing and downloading using the reader’s software of choice. These 3D graphic displays are represented in the print version as 2D snapshots. Overlays and color-coded distance maps can be displayed using the reader’s software of choice, allowing graphic assessment of the location and direction of changes or morphologic differences relative to the structure of reference. The interpretation of 3D overlays and quantitative color-coded maps requires basic knowledge of 3D image analysis.

Conclusions

When submitting manuscripts, authors can now upload 3D models that will allow readers to interact with or download them. Such interaction with 3D models in online articles now will give readers and authors better understanding and visualization of the results.

More effective and rational clinical decision making for orthodontic and orthognathic surgery patients requires careful 3-dimensional (3D) image-analysis techniques. Advanced applications of 3D imaging techniques, including virtual models from cone-beam computed tomography (CBCT), stereo-photometry, and intraoral or indirect scanners, can be used for applications in dentistry that now allow superimpositions for populational and individual longitudinal assessments. The 3D superimpositions provide assessments of growth, changes with treatment, stability evaluations, improved diagnoses of asymmetry, tooth morphologies and relative positions, quantitative and qualitative analyses of skeletal and tooth displacements, and temporomandibular joint evaluations, among other applications.1 Interpretation of the superimposition results depends on the structure of reference used for registration. It is important that the clinician be prepared and familiar with the technology to prevent misunderstandings and incorrect interpretations of the images.

Advances in our field have relied on 3D data for new discoveries. However, when publishing those new discoveries, articles were up to now limited to featuring 2-dimensional (2D) projected images of the 3D models. Since September 2014, thanks to a collaborative effort between Elsevier and Kitware SAS (Lyon, France), the American Journal of Orthodontics and Dentofacial Orthopedics has given readers the exciting capability to view and interact with publications’ 3D models on a variety of devices, including mobile phones, tablets, laptops, and desktops. The 3D interactivity in online Elsevier articles empowers authors to optimally showcase their research and enables readers to more deeply and efficiently understand the findings presented.2 Readers can see data sets in all views in 3D space by panning, rotating, and zooming in or out on models of interest, applying color settings, and downloading the 3D data sets.

This article introduces the capability to view and interact with publications’ 3D volumetric images and surface models and describes the steps to prepare the surface models for such online 3D visualizations. Specifically, we present image analysis procedures for 3D visualization and quantification of populational and longitudinal changes.

MATERIAL AND METHODS

Three-dimensional image analysis procedures for orthodontic, craniofacial, and maxillofacial surgery applications include (1) image acquisition, (2) construction of 3D volumetric files, (3) image registration, and (4) visual analytics with graphic display of 3D morphological variability and changes. Once these procedures are performed, the 3D files can be saved and uploaded for 3D visualization in online publications.

For acquisition of 3D diagnostic records, diagnosis of maxillomandibular discrepancies is based on data coming from different sources: clinical examinations, 3D photographs, CBCT, CT, magnetic resonance images, and digital dental models. Systems for computer-assisted diagnosis must integrate different records to characterize the diagnosis and formulate the treatment plan.3 Images from CBCT, CT, and magnetic resonance imaging are acquired as volumetric 3D images (saved in file formats such as DICOM, gipl, or nrrd). These volumetric images can be visualized as cross-sectional slices or 3D projected images (3D renderings) or can be used to construct polygonal meshes or surfaces of specific anatomic structures of interest. The Elsevier 3D viewer includes capabilities to visualize the 3D images as cross-sectional slices, 3D renderings, 3D polygonal meshes, or solid surfaces. To simplify the description of image analysis procedures, we will specifically describe 3D analysis of images acquired with CBCT, since it is now widely used in our field. The same image analysis procedures are applicable and can be generalized for images acquired with any 3D imaging modality.

For construction of 3D volumetric files that label with color the anatomic structures of interest, the CBCT scans (DICOM files) can be opened and visualized in any 3D image analysis software of choice. Examples are 3DMDvultus (3DMD, Atlanta, Ga),4 Maxilim (Medicim, Mechelen, Belgium),5 Dolphin Imaging (Dolphin Imaging & Management Solutions, Chatsworth, Calif),6 In-Vivo Dental (Anatomage, San Jose, Calif),7 SimPlant OMS or Mimics (Materialise, Leuven, Belgium),8 or open-source tools such as TurtleSeg,9 ITK-SNAP,10 and 3D Slicer.11 In a procedure known as image segmentation, we identify and delineate the anatomic structures of interest in the CBCT scan to obtain a 3D representation of the hard and soft tissues (3D volumetric label map files). Even though image segmentation has been a field of active research for many decades, it remains one of the most time-consuming steps in image processing. A major challenge with segmentation is that hard and soft tissues from CBCT images have no corresponding Hounsfield units. One CBCT image taken from a subject may have different intensity levels for bone, cartilage, and soft tissues. No standard segmentation method can be expected to work equally well for all tasks. Many commercial software packages incorporate an intensity thresholding algorithm for their segmentation. Although this often works well for thick and dense bones such as the mandible, it often fails for thin bones such as the condyles and the labial surfaces of the teeth. The morphology and position of the condyles and the internal surfaces of the ramus and maxilla are critical for careful diagnosis; therefore, precise segmentation and representation of these anatomic regions are important. To best capture the facial anatomy, our method of choice for the segmentation procedures is ITK-SNAP10 software, which has received continuous support from the National Institutes of Health (NIH), Bethesda, Maryland, for further open-source software development.

ITK-SNAP was developed, based on the NIH Visualization Tool Kit (VTK) and Insight Tool Kit (ITK), as part of the NIH Roadmap Initiative for National Centers of Biomedical Computing. The semiautomatic segmentation procedures in ITK-SNAP use active contour methods to compute feature images based on the CBCT images’ gray level intensities and boundaries. ITK-SNAP is more versatile than other open-source and commercial software packages because it allows the adjustment of the parameters for automatic detection of intensities and boundaries and allows user interactive editing of contours. For example, on a laptop computer equipped with a 2.5 GHz processor and a 1 GB graphic card, the initial segmentation step typically takes about 15 minutes. Manual postprocessing of the segmentation usually takes longer, up to several hours (separation of the maxillary and mandibular teeth can be particularly tedious).
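The simple intensity thresholding mentioned above can be illustrated with a minimal sketch. This is not the active contour method used in ITK-SNAP; the function name, the toy intensity values, and the threshold range are all hypothetical and chosen only to show why thin, low-intensity bone can be missed.

```python
def threshold_label_map(volume, lower, upper, label=1):
    """Label every voxel whose gray level lies in [lower, upper]; 0 elsewhere.

    `volume` is a nested list indexed as volume[slice][row][column].
    """
    return [
        [
            [label if lower <= voxel <= upper else 0 for voxel in row]
            for row in slice_
        ]
        for slice_ in volume
    ]


# Hypothetical gray levels: the last column mimics thick cortical bone,
# the middle column mimics thin bone with lower intensity.
toy_volume = [
    [[120, 900, 1500], [80, 450, 1300]],
    [[60, 700, 1600], [95, 300, 1250]],
]

# Assumed threshold range for "bone" in this toy example only.
bone_labels = threshold_label_map(toy_volume, lower=1000, upper=3000)
```

In this toy volume the middle column falls below the assumed lower threshold and is left unlabeled, which mirrors the failure on thin bones described in the text.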

After segmentation, for an image acquired at a single time point, the 3D volumetric label map can be saved as a 3D triangular or polygonal mesh (3D surface model), in .obj, .ply, .vtk, or .U3D file format. Then it can be uploaded in an online publication and visualized in the Elsevier 3D viewer.
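As a rough illustration of what a saved surface model contains, the sketch below serializes a triangular mesh to the plain-text .obj layout (vertex lines starting with `v`, face lines starting with `f` with 1-based indices). The function name and the single-triangle mesh are hypothetical; in practice the export is done by the image analysis software.

```python
def mesh_to_obj(vertices, triangles):
    """Return the text of a minimal .obj file for a triangular mesh.

    vertices:  list of (x, y, z) coordinates
    triangles: list of (a, b, c) zero-based vertex indices
    """
    lines = ["# illustrative surface model"]
    for x, y, z in vertices:
        lines.append(f"v {x:.6f} {y:.6f} {z:.6f}")
    for a, b, c in triangles:
        # The .obj format counts vertices from 1, not 0.
        lines.append(f"f {a + 1} {b + 1} {c + 1}")
    return "\n".join(lines) + "\n"


# Hypothetical one-triangle mesh.
obj_text = mesh_to_obj(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    triangles=[(0, 1, 2)],
)
```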

For longitudinal CBCT scans or scans of a group of patients, further image analysis procedures are required and described below.

The next image analysis procedure consists of registering the scans and their respective 3D volumetric label maps in a common coordinate system using a target region as the reference. Different types of registrations will lead to different interpretations of the results. The registration procedures can use, as options for reference, landmarks, surface models, or voxel gray intensity. Currently, both commercial and open-source software packages allow these different options for craniomaxillofacial registration (https://sites.google.com/a/umich.edu/dentistry-image-computing).12 The process of registration involves computing transformations. A transformation is a mathematical operation that applies a matrix to move the points of a 3D image or surface model in multiple planes and degrees of freedom in 3D space. Longitudinal CBCT scans acquired at different times can be registered by computing the differences of the head position relative to a stable anatomic structure of reference. The image registration procedure that computes the translational (anteroposterior, transverse, and vertical) and rotational displacements (pitch, roll, and yaw) is known as rigid registration.13 There are also image registration procedures that compute differences in scale (size changes with growth or treatment, known as affine registration) or shape (nonrigid registration). The challenges of using nonrigid registration for clinical studies are that shape integrity is not preserved, and the 3D models can be deformed.14 To prevent distorting or morphing of the images, nonrigid registration can be used to compute transformations by considering scale and shape differences, and then applying only the rigid movements (rotation and translation) to preserve the actual scale and shape features.15,16
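The idea of a rigid transformation as a matrix applied to points can be sketched as follows. This is a minimal illustration, not the registration code of any package named here: the function names are hypothetical, only a rotation about the z axis is shown, and calling that rotation "yaw" assumes a particular axis convention.

```python
import math


def rotation_z(angle_deg):
    """3x3 rotation matrix about the z axis (assumed here to represent yaw)."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rigid_matrix(rotation, translation):
    """Combine a 3x3 rotation and a 3-vector translation into a 4x4 matrix."""
    matrix = [list(rotation[r]) + [translation[r]] for r in range(3)]
    matrix.append([0.0, 0.0, 0.0, 1.0])
    return matrix


def apply_transform(matrix, point):
    """Move one (x, y, z) point with a 4x4 homogeneous transformation."""
    h = (point[0], point[1], point[2], 1.0)
    return tuple(sum(matrix[r][c] * h[c] for c in range(4)) for r in range(3))


# Rotate 90 degrees about z, then shift 5 units along z (arbitrary values).
transform = rigid_matrix(rotation_z(90.0), (0.0, 0.0, 5.0))
moved = apply_transform(transform, (1.0, 0.0, 0.0))
```

Because only rotation and translation are involved, the distance between any two points is unchanged after the transformation, which is the property that distinguishes rigid from affine or nonrigid registration.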

The image registration procedures that our research group has found to provide the most reliable results consist of 2 steps.

Establishing a common coordinate system across subjects, not based on a single line or plane, is essential to allow group comparisons and consistent measurements across subjects. Quantifications of the anteroposterior, vertical, and transverse directions of changes require consistent orientations of the heads of all subjects and image acquisitions using natural head position, intracranial reference planes,17 or minisensors for recording the 3D head position.18

For voxel-based registrations for longitudinal assessments, the major strength of this method is that registration does not depend on how precisely the 3D volumetric label maps represent the anatomic truth or on the location of a limited number of landmarks. For voxel-based registration methods that use the 3D volumetric label maps as the input of the region of reference, these multiplanar label maps should be extended slightly (1–2 voxels) beyond the actual bone boundaries to provide the complex gray-level information needed for the automatic detection of the bone boundaries during the voxel-based registration. Importantly, the 3D segmentations of the anatomic structures of reference for the registration are not “clean” 3D surface models and are used only as references to mask anatomic structures that change with growth and treatment. Voxel-based registration methods actually compare voxel by voxel the gray-level values in 2 CBCT images to calculate the rotation and translation parameters between them. Not all voxel-based registrations are similar in methodology and accuracy, because they can use different structures of reference and different numbers of iterations (eg, the software can run for 1 minute and compute 100 attempts of best voxel match, or run for 10 minutes and compute 1000 comparisons of best fit among thousands of voxels). After registration, the 3D volumetric label maps should be further edited for finer definitions of the patient’s actual bony anatomic contours.
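Two ideas from this paragraph, extending the reference label map by 1 to 2 voxels and comparing gray levels voxel by voxel inside that mask, can be sketched as below. The function names are hypothetical, and the mean squared difference is used only as one simple example of a similarity measure; the text does not state which measure the registration software optimizes.

```python
def dilate_mask(mask_voxels, shape, iterations=1):
    """Grow a set of (i, j, k) voxel indices by `iterations` voxels.

    Uses face neighbors only (6-connectivity) and stays inside `shape`.
    """
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    current = set(mask_voxels)
    for _ in range(iterations):
        grown = set(current)
        for i, j, k in current:
            for di, dj, dk in offsets:
                neighbor = (i + di, j + dj, k + dk)
                if all(0 <= c < s for c, s in zip(neighbor, shape)):
                    grown.add(neighbor)
        current = grown
    return current


def masked_mean_squared_difference(fixed, moving, mask_voxels):
    """Average squared gray-level difference over the masked voxels only."""
    total = 0.0
    for i, j, k in mask_voxels:
        diff = fixed[i][j][k] - moving[i][j][k]
        total += diff * diff
    return total / len(mask_voxels)


# Toy 3x3x3 volumes with constant gray levels (hypothetical values).
shape = (3, 3, 3)
fixed = [[[0.0] * 3 for _ in range(3)] for _ in range(3)]
moving = [[[2.0] * 3 for _ in range(3)] for _ in range(3)]

reference_mask = dilate_mask({(1, 1, 1)}, shape, iterations=1)
mismatch = masked_mean_squared_difference(fixed, moving, reference_mask)
```

A registration routine would repeatedly adjust the rotation and translation of the moving image and re-evaluate such a measure inside the mask, keeping the parameters that give the best match.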

It is also important to understand that the clinical implications that can be derived from 3D registrations and superimpositions depend on the structures selected as references for registration. Registration on different regions of reference will lead to different interpretations of the results. We have developed a novel sequence of fully automated voxel-wise rigid registrations at the cranial base (for overall facial assessments relative to cranial structures that complete their growth early in childhood)16 and regionally (to assess maxillary and mandibular bone remodeling).19,20 All image registration procedures described above can be performed in the Transforms and CMF registration modules in Slicer open-source software (www.slicer.org,11; video tutorials 1 to 3 available at http://www.youtube.com/user/DCBIA).21 They were initially developed as part of the National Alliance of Medical Image Computing (NIH Roadmap for Medical Research) and have been widely used internationally.

For visual analytics with graphic display of 3D morphologic variability and changes over time, after registration, the registered 3D volumetric label maps can then be saved as 3D triangular or polygonal meshes (3D surface models) in .obj, .ply, .vtk, .stl, or .U3D file formats and can be used for (1) overlays with contrasting opaque or semitransparent colors, (2) 2D linear distances between landmarks, and (3) 3D linear distances graphically displayed with color-coded maps computed using closest or corresponding surface points. The overlays provide visual qualitative assessment of the location and direction of changes or morphologic differences. Quantitative assessments can provide distances and angles between landmarks and planes in the surface models or color-coded surface distance maps graphically displayed on the surface models.

Landmark-based measurements can cause errors related to landmark identification.22 Locating 3D landmarks on complex curving structures is not a trivial problem for representation of the components of the craniofacial form.23 As Bookstein24 noted, there is a lack of literature about suitable operational definitions for the landmarks in the 3 planes of space (coronal, sagittal, and axial).

Three-dimensional surface distances computed at the vertices of the triangular meshes can be computed as closest points between noncorrespondent surface meshes or as homologous or correspondent points between correspondent surface meshes. The computation of the surface distances can be stored as color-coded distances in the .obj, .ply, or .vtk file format that can be uploaded in the online publications. Currently, the visualization of the color-coded maps on the Elsevier 3D viewer appears faded, and users cannot adjust the range of the colors in the color-coded map yet, but readers can download the surface models that store the color-coded maps and then upload those models in another software of choice, such as Paraview (http://www.paraview.org)25 or the ShapePopulation-Viewer module in Slicer 4.411 (tutorial available at http://www.youtube.com/user/DCBIA).21
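To make concrete how computed distances can be stored with a surface model, the sketch below writes a triangular mesh and one distance value per vertex in the legacy ASCII .vtk polydata layout. The function name, the scalar array name `distance_mm`, and the values are hypothetical; the files in this study were produced with the software described in the text, not with this code.

```python
def polydata_to_vtk(vertices, triangles, scalars, scalar_name="distance_mm"):
    """Return legacy ASCII .vtk text for a mesh with one scalar per vertex."""
    if len(scalars) != len(vertices):
        raise ValueError("one scalar value is required per vertex")
    lines = [
        "# vtk DataFile Version 3.0",
        "surface model with per-vertex distances (illustrative)",
        "ASCII",
        "DATASET POLYDATA",
        f"POINTS {len(vertices)} float",
    ]
    lines += [f"{x} {y} {z}" for x, y, z in vertices]
    # Each triangle row holds 4 integers: the vertex count (3) and 3 indices.
    lines.append(f"POLYGONS {len(triangles)} {4 * len(triangles)}")
    lines += [f"3 {a} {b} {c}" for a, b, c in triangles]
    lines.append(f"POINT_DATA {len(vertices)}")
    lines.append(f"SCALARS {scalar_name} float 1")
    lines.append("LOOKUP_TABLE default")
    lines += [str(value) for value in scalars]
    return "\n".join(lines) + "\n"


# Hypothetical one-triangle mesh with made-up distance values.
vtk_text = polydata_to_vtk(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    triangles=[(0, 1, 2)],
    scalars=[0.5, 1.25, 2.0],
)
```

Viewers that read the scalar array can then map the stored values to a color range chosen by the reader.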

Closest-point distances measure the closest distances between the vertices of the triangular meshes in 2 surfaces, not corresponding distances between anatomic points on 2 or more longitudinally obtained models. This standard analysis is currently used by most commercial and academic software packages but does not map corresponding surfaces based on anatomic geometry and usually underestimates large rotational and translational movements. Closest-point distances can be displayed with color-coded maps, as proposed by Gerig et al.26
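A minimal brute-force sketch of the closest-point computation is given below, assuming each surface is reduced to a list of vertex coordinates. The function name and coordinates are hypothetical, and real software typically uses spatial search structures rather than comparing every pair of vertices.

```python
import math


def closest_point_distances(source_vertices, target_vertices):
    """For each source vertex, return the distance to its nearest target vertex."""
    return [
        min(math.dist(source, target) for target in target_vertices)
        for source in source_vertices
    ]


# Hypothetical vertices of a T1 and a T2 surface (arbitrary units).
t1_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
t2_vertices = [(0.0, 0.0, 3.0), (1.0, 0.0, 4.0), (5.0, 0.0, 0.0)]

closest = closest_point_distances(t1_vertices, t2_vertices)
```

Note that the nearest T2 vertex is chosen purely by geometry, so it need not be the anatomic counterpart of the T1 vertex.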

Shape correspondence, as computed with the SPHARM-PDM module,27 was developed as part of the National Alliance of Medical Image Computing (NIH Roadmap for Medical Research) and has been adapted for use with CBCT images of the craniofacial complex.28–32 The SPHARM-PDM software (version 1.12; open source, http://www.nitrc.org/projects/spharm-pdm) computes point-based surface models, where all models have the same numbers of triangular meshes and vertices in corresponding (homologous) locations.33 This software is now distributed as the SPHARM-PDM extension module in Slicer 4.4.11 Corresponding surface distances and vectors can then be calculated with the “model to model distance” extension module and graphically displayed in the Slicer “shape population viewer” extension module (http://www.youtube.com/user/DCBIA; video 5).21
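Once two surface models share the same number of vertices in homologous order, corresponding distances and vectors reduce to a vertex-by-vertex subtraction. The sketch below illustrates only that final step with hypothetical names and coordinates; it does not reproduce the SPHARM-PDM correspondence computation or the Slicer extension modules.

```python
import math


def corresponding_point_vectors(t1_vertices, t2_vertices):
    """Return (vectors, distances) between vertices with the same index."""
    if len(t1_vertices) != len(t2_vertices):
        raise ValueError("corresponding meshes must have the same vertex count")
    vectors = [
        tuple(b - a for a, b in zip(p1, p2))
        for p1, p2 in zip(t1_vertices, t2_vertices)
    ]
    distances = [math.hypot(*vector) for vector in vectors]
    return vectors, distances


# Hypothetical homologous vertices at T1 and T2 (arbitrary units).
t1_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
t2_points = [(3.0, 4.0, 0.0), (1.0, 0.0, 2.0)]

displacement_vectors, displacement_lengths = corresponding_point_vectors(
    t1_points, t2_points
)
```

The vectors carry the direction of change as well as its magnitude, which is what allows growth direction to be displayed in addition to a color-coded distance map.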

Once the 3D image analysis procedures have been completed, the 3D registered surface models can be saved as .obj, .ply, .U3D, or .vtk files and uploaded by authors as they submit their manuscripts on the AJO-DO Elsevier Web site. Authors can submit each 3D model in 3D figures as separate zipped files that are uploaded for online articles via the “3D models” submission category. The current Elsevier submission system recommends a 3D model size of 50 to 100 MB before zipping. Authors can indicate in their cover letters whether the manuscript should also be considered as an original article for the printed publication, in which all the 2D figures can be printed and in which the figure legends and descriptions for the 3D figures can refer the readers to the online versions. The 3D visualization provided by the Elsevier 3D viewer in the online articles combines local (WebGL) and remote (ParaViewWeb) rendering techniques. The Web browser and the size of the 3D data are analyzed to ensure that users are given optimal support and can interact with the 3D models in real time. The remote rendering option is based on ParaViewWeb, a collaborative Web interface for 3D interaction built on the ParaView open-source client/server architecture.25 With this technology, 3D rendering is performed remotely to enable users with limited computing resources to interact with large scenes. For local rendering, the WebGL viewer calls upon the client’s graphics processing unit to render 3D scenes directly in a Web browser. The resulting hybrid viewer enables Elsevier subscribers to surpass the limitations of current digital publications and interact with data in a meaningful way.
Readers can see data sets in 3 views; can pan, rotate, and zoom in or out on models of interest; can apply different settings such as color; can load multiple registered files (that can be best visualized in the current Elsevier 3D viewer one at a time); can download the data set; and can visualize semitransparent overlays and analytical color maps in their image analysis software of choice.

RESULTS

Assessments of 3D changes with visual analytic graphics are displayed and presented as illustrations in this article in 2 formats (2D figures and 3D files available online) to facilitate the reader’s understanding.

For online versions, all registered 3D surface models were saved as multiple models in .vtk file format; they are designed to be loaded in the Elsevier 3D viewer and are now available for readers to interact with them. The 2D figures show snapshots or perspective views of the different visualizations that readers can now obtain from the 3D figures when they load the 3D files in the Elsevier 3D viewer or download the 3D files to visualize them in their software of choice. The visualization of 3D superimpositions in the current Web-based Elsevier 3D viewer is hampered by the inability to adjust opacity when 2 or more 3D models are loaded simultaneously, and the color-coded maps appear faded in 1 tone because the color-coded surface distances stored in the 3D file cannot be displayed with different ranges of colors. This makes it difficult for readers to interpret and understand the results of the superimpositions using the current Elsevier 3D viewer. The Elsevier 3D viewer does allow readers to download the 3D surface models for visualization in the reader’s image analysis software of choice.11,21

Figures 1 through 5 and Supplementary Figures 1 through 4 show the 3D surface models of a patient who was followed during his growth spurt before treatment at 2 time points, 1 year 6 months apart (T1, 11.5 years; T2, 13 years). Figure 6 and Supplementary Figure 5 show 3D surface models before and after an adult patient’s surgical correction with bimaxillary advancement, mandibular counterclockwise rotation, and genioplasty.

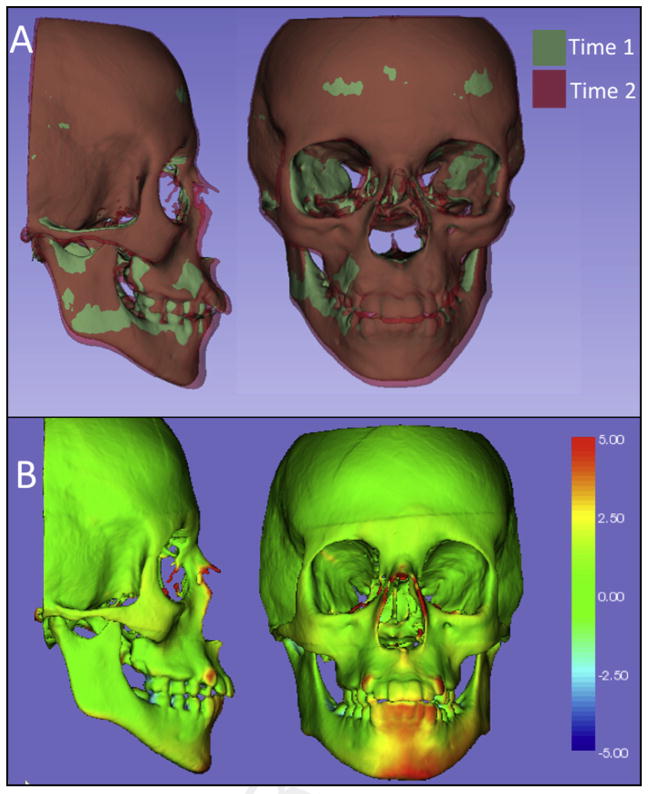

Fig 1.

Two-dimensional figure showing the results of the cranial base superimposition at T1 and T2 of the surface models of a growing patient: A, semitransparent overlays; B, closest-point color-coded surface distance maps quantifying the mandibular displacement or bone remodeling relative to the cranial base registration.

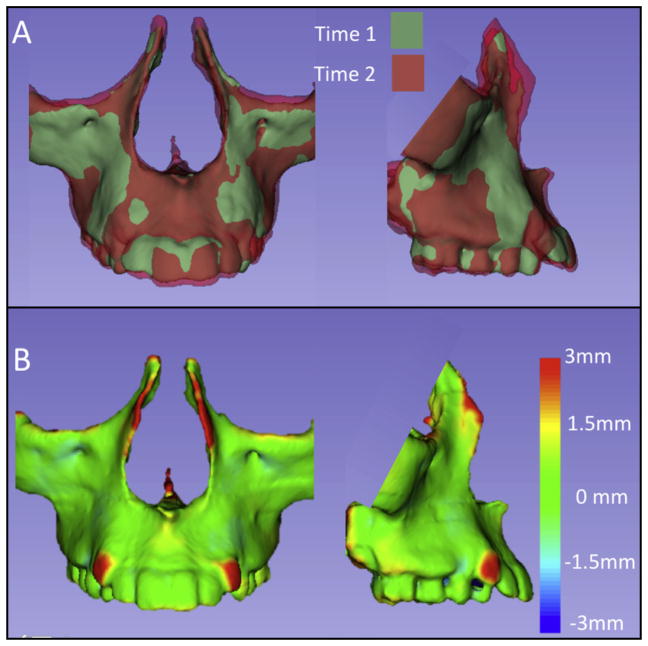

Fig 5.

Two-dimensional figure showing the maxillary surface models, where the T2 model was registered on T1 using the maxilla as the reference: A, semitransparent overlays; B, closest-point surface distances color-coded maps between T1 and T2.

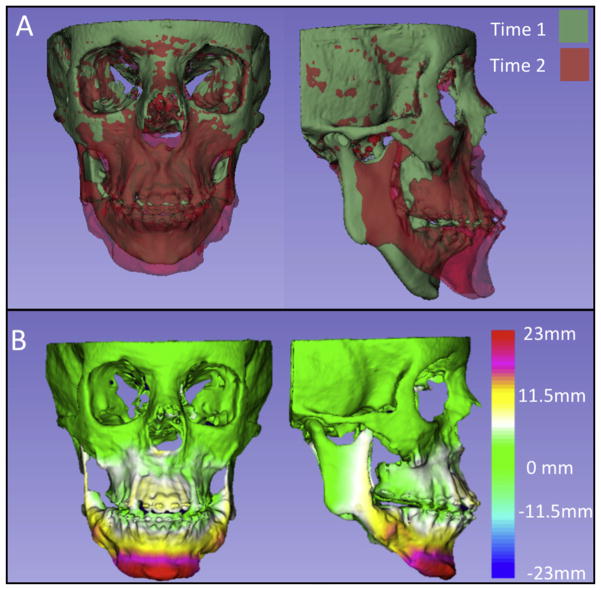

Fig 6.

Two-dimensional figure showing the surface models at 2 times of a patient who received surgical correction. The T2 model was registered over T1 using the cranial base as the reference. A, Semi-transparent overlays; B, closest-point surface distances color-coded map between T1 and T2, shown with 7 colors to better define the regional measurements.

Figures 1 through 6 are 2D snapshot examples of the 3D Supplementary Figures 1 through 5. Each 3D figure includes multiple files that can be visualized in the Elsevier 3D viewer or downloaded for visualization in your image analysis software of choice. Your image analysis software may have more image analysis functionalities than the current Elsevier 3D viewer, as shown in the 2D figures taken in open-source software (3D Slicer).11 The Elsevier 3D viewer currently allows the reader to adjust files A and B in each 3D illustration, changing colors for visualization. In addition, readers will be able to rotate, pan, and zoom the models, facilitating the visualization and interpretation of the image analysis results. For 3D illustrations C and D (color-coded maps), the reader can analyze the differences between the times, but in the Elsevier 3D viewer currently available, readers cannot modify the color-coded ranges yet.

Figure 1 in 2 dimensions and Supplementary Figure 1 in 3 dimensions show the results of the cranial base registration of a growing patient. The 2D figure (Fig 1) shows snapshots of the semitransparent overlays and the closest point color-coded maps. The 3D figure (Supplementary Fig 1) contains 3 files: A and B are the T1 and T2 surface models, and C is a file that stores the computed color-coded surface distances.

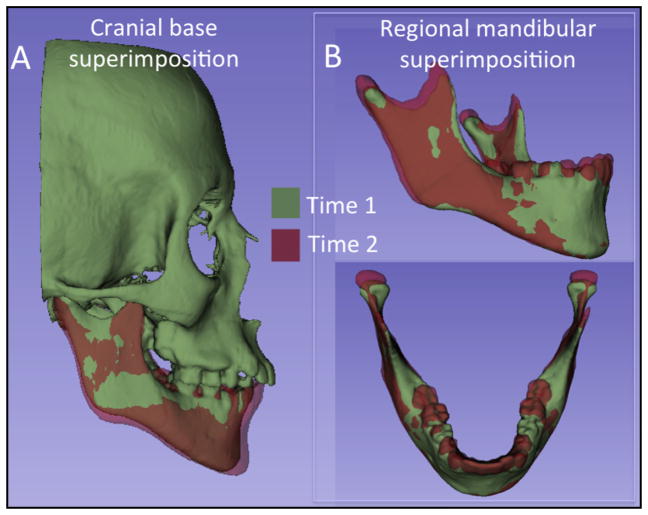

Figure 2 in 2 dimensions and Supplementary Figure 2 in 3 dimensions show the results of the mandibular regional registration of a growing patient. The 2D figure (Fig 2) shows snapshots of the semitransparent overlays, and Figure 2, A, shows the cranial base overlay just for comparison with the changes observed with the mandibular regional registration in Figure 2, B. The 3D figure (Supplementary Fig 2) contains 3 files: A and B are the T1 and T2 surface models, and C is a file that stores the computed color-coded surface distances.

Fig 2.

Two-dimensional figure showing the semitransparent overlays of 2 time points of a patient during the growth spurt: A, mandibular anterior inferior displacement relative to the cranial base; B, mandibular registration, with the T2 model registered on T1 using the mandible as the reference, showing posterior and superior condylar growth.

Figures 3 and 4 are 2D snapshots of different quantitative methods of mandibular ramus and condylar growth with the 3D surface distances computed at the vertices of the triangular meshes. Figure 3 shows how distances between points located on the model surfaces can be automatically calculated by different methods, and Figure 4 shows the color-coded surface maps of those measured point-to-point distances. Supplementary Figure 3 is a 3D figure that contains 4 models: A and B are the T1 and T2 surface models, C is a file that stores the computed closest point color-coded surface distances, and D is a surface model that stores the computed corresponding point color-coded surface distances.

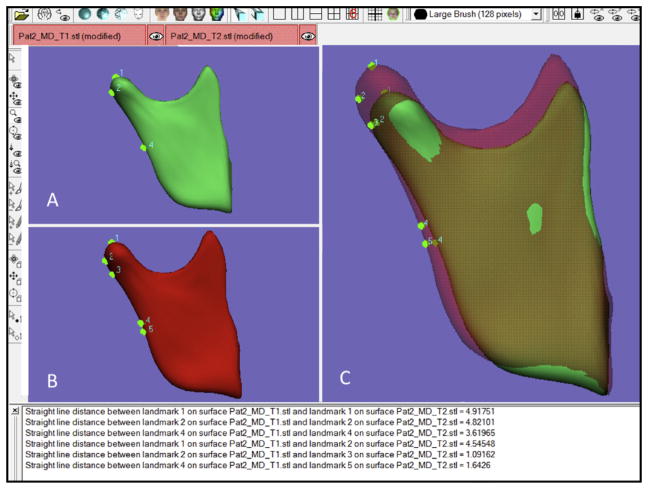

Fig 3.

Two-dimensional figure showing the distances between landmarks placed in the T1 and T2 surface models registered using the mandible as the reference. The distances between landmarks can be automatically calculated by different methods: closest point and corresponding (homologous) point. The distances between landmarks are displayed. Points 1 (most superior point in the condyle), 2 (most posterior point in the condyle), and 4 (point in the mid-distance between gonion and point 1) located on the T1 surface model (green) have corresponding points in the T2 surface model (red). Note in the overlay that the closest points for points 1, 2, and 4 in the T1 surface model are points 2, 3, and 5 placed over the T2 surface. Comparing the distances between corresponding points (1–1, 4.92 mm; 2–2, 4.82 mm; 4–4, 3.62 mm) to the closest points (1–2, 4.54 mm; 2–3, 1.09 mm; 4–5, 1.64 mm), it is notable that the distance between the 2 closest points placed over the 2 models is often smaller than the corresponding points’ distance.

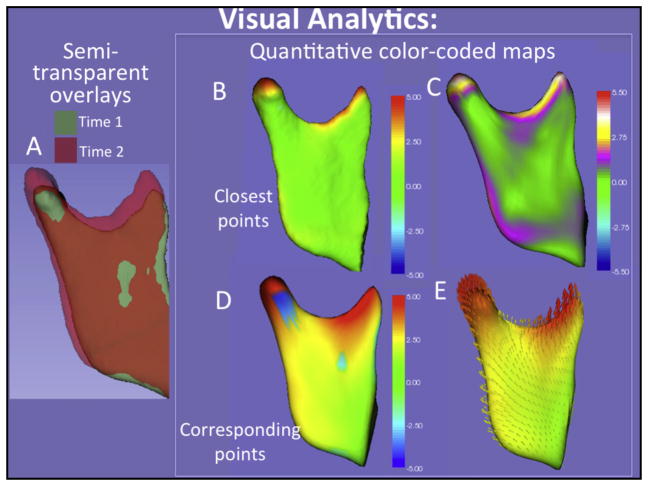

Fig 4.

Two-dimensional figure showing: A, the right mandibular ramus and condyle semitransparent overlay, and B–E, quantitative color-coded maps of the distances measured in Figure 3. In B–E, 2 types of color-coded maps are shown: the closest distances between surface points are shown with 5 colors in B and with 7 colors in C to better define the regional measurements; the distances between corresponding points in the surface models are shown in D, and the vectors of the growth direction are shown in E.

Figure 5 (2D) and Supplementary Figure 4 (3D) exemplify the regional registration with the maxilla as the reference, and Figure 6 (2D) and Supplementary Figure 5 (3D) show an adult surgical correction.

DISCUSSION

We describe new capabilities for publishing 3D data and displaying the 3D assessments in an online publication. Although 2D figures of the 3D skeletal and dental changes with growth and treatment provide perspective views, 2D figures are oversimplified representations of the 3D morphology. Three-dimensional models are “flattened” into static 2D images, significantly reducing the value of the author’s analysis and the reader’s ability to interact with the content. Since 3D models are built for 3D evaluations and the virtual generation calls for innovation, there is no reason not to use them for publications. Elsevier authors now can show their 3D data, and any ScienceDirect users or readers can view and interact with these author-provided 3D data sets on many devices with no additional plug-in required. These devices include smart phones, tablets, laptops, and desktop computers.2

For the online article versions, the saved 3D files (eg, .vtk files, which were originally designed to be loaded in Slicer11 or Paraview25 software) will then be available for readers to interact with in the Elsevier 3D viewer software. For scientific publications, we define unambiguously the 3D content being published and strict subsets of the formats that give good expressibility of the 3D results and findings; these are easy to implement with various software packages.

Interpretation of the 3D morphology changes depends on the structure of reference used for registration, and it is important that clinicians be prepared and familiar with the technology to prevent misunderstandings and incorrect interpretations of the 3D images. We expect that readers will have enough background in 3D evaluation to load and understand the results from the superimposition of the registered files when they download them for visualization in their software of choice. During this transitional phase, while authors and readers become familiar with the Elsevier 3D viewer, authors should consider having both illustrations (3D and 2D), keeping 3D models available for readers and 2D figures taken from the corresponding 3D models, or mixing 3D and 2D images to make sure that all information regarding a study is properly shared, and regions and landmarks of importance are highlighted.

Authors and readers must also understand how to benefit from the current 3D viewer provided by Elsevier and how to handle 3D file formats, particularly if they contain 2 or more surface models or store computed distances between them. One key point that deserves exploration is the challenge of a large number of 3D file formats that are not standardized. Whereas the Elsevier viewer currently supports .obj, .ply, .U3D, and .vtk files, different software packages might not handle the valid variations of these file formats consistently. When authors submitting a manuscript upload a 3D figure, it is helpful for readers and for the printed version of the article that they also include screenshots and conventional 2D figures of their 3D visualizations as standard figures. This is important to ensure that the 3D visualizations deliver the information the authors present in their methods and results.

CONCLUSIONS

When submitting manuscripts, Elsevier authors can now upload 3D models that will allow readers to interact with and download them. Interaction with 3D models in online articles will give readers improved understanding and visualization of the results. Such interactions with 3D models require that readers know how to interpret the 3D superimposition information, which is relative to the areas of reference used for registration.

Acknowledgments

Supported by the National Institute of Dental & Craniofacial Research and the National Institute of Biomedical Imaging and Bioengineering of the National Institutes of Health (award number R01DE024450).

Footnotes

All authors have completed and submitted the ICMJE Form for Disclosure of Potential Conflicts of Interest, and none were reported.

Supplementary data associated with this article can be found, in the online version, at http://dx.doi.org/10.1016/j.ajodo.2015.02.002.

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- 1. American Academy of Oral and Maxillofacial Radiology. Clinical recommendations regarding use of cone beam computed tomography in orthodontic treatment. Position statement by the American Academy of Oral and Maxillofacial Radiology. Oral Surg Oral Med Oral Pathol Oral Radiol. 2013;116:238–57. doi: 10.1016/j.oooo.2013.06.002.

- 2. [Accessed January 14, 2015]; Available at: http://www.elsevier.com/connect/bringing-3d-visualization-to-online-research-articles.

- 3.Cevidanes LHC, Tucker S, Styner M, Kim H, Chapuis J, Reyes M, et al. Three-dimensional surgical simulation. Am J Orthod Dento-facial Orthop. 2010;138:361–71. doi: 10.1016/j.ajodo.2009.08.026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. [Accessed January 14, 2015]; Available at: http://www.3dmd.com/3dmd-software.

- 5. [Accessed January 14, 2015]; Available at: http://www.medicim.com/en/products.

- 6. [Accessed January 14, 2015]; Available at: http://www.dolphinimaging.com.

- 7. [Accessed January 14, 2015]; Available at: http://dental.anatomage.com/specialty/orthodontist.

- 8. [Accessed January 14, 2015]; Available at: http://www.materialisedental.com/dental.

- 9. [Accessed January 14, 2015]; Available at: http://www.turtleseg.org.

- 10. [Accessed January 14, 2015]; Available at: http://www.itksnap.org.

- 11. [Accessed January 14, 2015]; Available at: http://www.slicer.org.

- 12. [Accessed January 14, 2015]; Available at: https://sites.google.com/a/umich.edu/dentistry-image-computing/

- 13.Thompson PM, MacDonald D, Mega MS, Holmes CJ, Evans AC, Toga AW. Detection and mapping of abnormal brain structure with a probabilistic atlas of cortical surfaces. J Comput Assist Tomogr. 1997;21:567–81. doi: 10.1097/00004728-199707000-00008. [DOI] [PubMed] [Google Scholar]

- 14.Maes F, Collignon A, Vandermeulen D, Marchal G, Suetens P. Multimodality image registration by maximization of mutual information. IEEE Trans Med Imaging. 1997;16:187–98. doi: 10.1109/42.563664. [DOI] [PubMed] [Google Scholar]

- 15.Cevidanes LHS, Styner MA, Proffit WR. Image analysis and superimposition of 3-dimensional cone-beam computed tomography models. Am J Orthod Dentofacial Orthop. 2006;129:611–8. doi: 10.1016/j.ajodo.2005.12.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Cevidanes LH, Heymann G, Cornelis MA, DeClerck HJ, Tulloch JF. Superimposition of 3-dimensional cone-beam computed tomography models of growing patients. Am J Orthod Dentofacial Orthop. 2009;136:94–9. doi: 10.1016/j.ajodo.2009.01.018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Cevidanes L, Oliveira AE, Motta A, Phillips C, Burke B, Tyndall D. Head orientation in CBCT-generated cephalograms. Angle Orthod. 2009;79:971–7. doi: 10.2319/090208-460.1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.de Paula LK, Ackerman JL, de Carvalho FA, Eidson L, Cevidanes LH. Digital live-tracking 3-dimensional minisensors for recording head orientation during image acquisition. Am J Orthod Dentofacial Orthop. 2012;141:116–23. doi: 10.1016/j.ajodo.2011.07.016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Nguyen T, Cevidanes L, George W. Validation of 3D mandibular regional superimposition methods for growing patients. J Dent Res. 2014;93(Spec Iss A):784. [Google Scholar]

- 20.Ruellas AC, Huanca L, Gomes M, Danesi C, Lione R, Nguyen T, et al. Comparison and reproducibility of two maxillary regional registration methods. J Dent Res. 2015;94(Spec Iss A):2121679. [Google Scholar]

- 21. [Accessed January 14, 2015]; Available at: http://www.youtube.com/user/DCBIA.

- 22.Rohr K. Computational imaging and vision series. Vol. 21. Dordrecht, Netherlands: Kluwer Academic Publishers; 2001. Landmark-based image analysis: using geometric and intensity models. [Google Scholar]

- 23.Dean D, Hans MG, Bookstein FL, Subramanyan K. Three-dimensional Bolton-Brush Growth Study landmark data: ontogeny and sexual dimorphism of the Bolton Standards cohort. Cleft Palate Craniofac J. 2000;37:145–56. doi: 10.1597/1545-1569_2000_037_0145_tdbbgs_2.3.co_2. [DOI] [PubMed] [Google Scholar]

- 24.Bookstein FL. Morphometric tools for landmark data. 1. Cambridge, United Kingdom: Cambridge University Press; 1991. p. 435. [Google Scholar]

- 25. [Accessed January 14, 2015]; Available at: http://www.paraview.org.

- 26.Gerig G, Jomier M, Chakos M. In: Niessen W, Viergever M, editors. Valmet: a new validation tool for assessing and improving 3D object segmentation; Proceedings of the International Society and Conference Series on Medical Image Computing and Computer-Assisted Intervention; 2001 Oct 14–17; Utrecht, Netherlands. Berlin, Germany: Springer; 2001. pp. 516–28. [Google Scholar]

- 27.Styner M, Oguz I, Xu S, Brechbuhler C, Pantazis D, Levitt J, et al. Framework for the statistical shape analysis of brain structures using Spharm-PDM:Special edition open science workshop at MIC-CAI. Insight J. 2006:1–7. Available at: http://hdl.handle.net/1926/215. [PMC free article] [PubMed]

- 28.Gerig G, Styner M, Jones D, Weinberger D, Lieberman J. Shape analysis of brain ventricles using Spharm. MMBIA Proceedings; IEEE; 2001. pp. 171–8. [Google Scholar]

- 29.Paniagua B, Cevidanes L, Walker D, Zhu H, Guo R, Styner M. Clinical application of SPHARM-PDM to quantify temporomandibular joint arthritis. Comput Med Imaging Graph. 2011;35:345–52. doi: 10.1016/j.compmedimag.2010.11.012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Paniagua B, Cevidanes L, Zhu H, Styner M. Outcome quantification using SPHARM-PDM toolbox in orthognathic surgery. Int J Comput Assist Radiol Surg. 2011;6:617–26. doi: 10.1007/s11548-010-0539-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.de Paula LK, Ruellas AC, Paniagua B, Styner M, Turvey T, Zhu H, et al. One-year assessment of surgical outcomes in Class III patients using cone beam computed tomography. Int J Oral Maxillofac Surg. 2013;42:780–9. doi: 10.1016/j.ijom.2013.01.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Nguyen T, Cevidanes L, Paniagua B, Zhu H, Koerich L, De Clerck H. Use of shape correspondence analysis to quantify skeletal changes associated with bone-anchored Class III correction. Angle Orthod. 2014;84:329–36. doi: 10.2319/041513-288.1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33. [Accessed January 14, 2015]; Available at: http://www.nitrc.org/projects/spharm-pdm.
