1. Introduction

1.1 Study Overview

To design information technology systems that are usable and support effective and efficient performance, it is necessary to iteratively develop and test such systems. We present a simulator that can be used to evaluate patient tracking system displays as they are being developed. The methodology combines a software simulation of emergency department (ED) events with clinical information to create simulated patients and information about their evolving condition and care. The simulator consists of underlying software, desktop and large-screen displays, a phone call/pager system, and typical tasks that enhance the realism of the simulation experience. The displays contain features typical of ED electronic patient tracking displays. Additionally, the simulator supports a variety of usability-oriented measures (e.g., the level of situation awareness). The simulation has been implemented in a controlled laboratory environment so that the impact of display parameters and ED operations on user performance can be evaluated. The methodology can provide a model for the development of other healthcare IT simulations that combine clinical and operational data.

1.2 Research Background

1.2.1 Patient Tracking Systems

The shift from paper-based activities to computer-based processing and storage of healthcare information is considered one of the most important transformations in the healthcare domain.1 Information technology has been suggested as a means to improve patient safety, organizational efficiency and patient satisfaction.2 ED patient tracking systems are shifting from a manual format (typically, large dry-erase boards) to electronic systems. Patient tracking systems display demographic information (patient name, age and gender), patient location, assigned caregivers, clinical information (chief complaints, orders, dispositions), indications of workflow, and key alerts such as those for patients with similar names or allergies.3-5 Patient tracking systems are essential ED tools which are not simply lists of patients and their locations: they allow patient care and ED processes to be communicated and coordinated across caregivers and other ED staff.5,6

Although some studies of electronic patient tracking systems and healthcare technologies indicate potential benefits7-9 such as improvements in accuracy, efficiency and ease of reporting data,10,11 other studies have indicated a mixed or negative impact.5,6,12-18 Understanding the impact (both positive and negative) of a new system before actual implementation can avert safety hazards and user rejection, and promote sustained use. User-centered design processes19 can be used to ensure that new systems are easily usable by practitioners, contain appropriate information, and meet the needs of care providers. Evaluating new systems in an operational ED is difficult because caregivers are busy and involved in safety-critical tasks, and unanticipated hazards have the potential to impact patients. However, simulation can be used for test and evaluation to avoid these challenges. Human workload, the usability of technology and types of errors can be recorded in a laboratory environment by integrating simulation with human factors based measures.

1.2.2 Simulation for Computer Interface Evaluation

Simulations of objects and environments can vary widely, from realistic physical representations (e.g., mannequin simulators20-23), to computer-generated displays (either desktop or immersive virtual reality-type displays), to software programs that include mathematical representations of processes and events.24 Software simulations are used regularly in transportation,25 manufacturing,26 and service environments to assess and improve system operations. In healthcare, software simulations have included models of emergency department operations,27,28 patient flow, and caregiver workflow.29,30 However, no simulators to test and develop patient tracking systems have been described. One type of simulator combines a simulation of a human-computer interface display with a software simulation of the underlying system the interface is controlling. For instance, flight simulators used for training pilots combine a realistic flight deck (where crew members can view displays and manipulate controls) with a software simulation of aircraft dynamics and flight events. In human factors research, different human-computer interfaces are often tested using simulations of this type. Alternative display screens are combined with the underlying system simulation (e.g., of an aircraft, a military situation, or a process control plant) to allow the effects of different displays on human performance to be compared.31-37 In our research, we created such a simulator.

1.3 Research Objective

The objective of this research was to create a patient tracking system simulator to study human performance with such systems. This paper describes the methodology for creating the simulation, components of the simulated system, and aspects of the simulation that can be used for evaluating electronic patient tracking system displays. The methodology combines a discrete event software simulation of ED processes with a user interface. The simulator can support studies in which people interact with the patient tracking system display. System variables (e.g., display configurations, ED load conditions) can be manipulated while measuring aspects of human performance.

2. Electronic Patient Tracking System Simulator

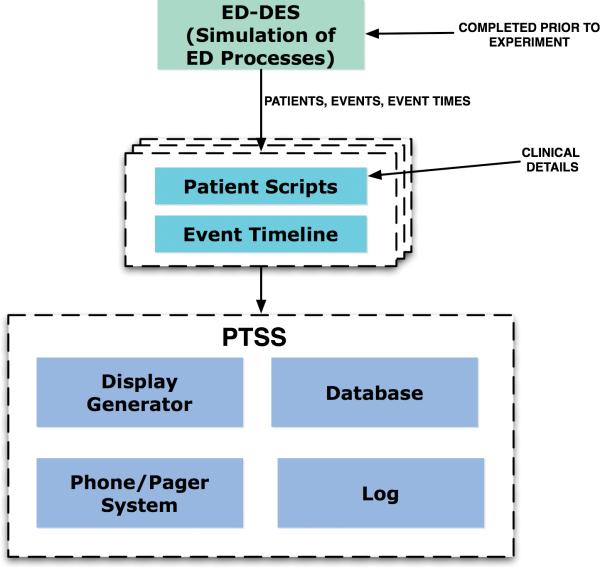

Figure 1 provides an overview of the simulator components. These components are described in detail in the following sections.

Figure 1.

Simulator Components

2.1 The Base ED Simulation Model (ED-DES)

The simulator is based on a model called the Emergency Department Discrete Event Simulation (ED-DES). Discrete event models are computer-based representations of processes and events that mimic the behavior of stochastic processes in real systems. For instance, for an ED, triage may be modeled as a process with associated times drawn from a probability distribution that has been derived from collected data. Patient type (in terms of the medical severity of the patient's condition) and arrival rates can be modeled based on the distribution of chief complaint acuities from actual patient arrival data.
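As a minimal sketch of the discrete event idea described above, the following Python fragment models triage as a single-server queue. It assumes one triage nurse and exponentially distributed inter-arrival and triage times with illustrative means; the function name `simulate_triage` and all parameter values are hypothetical. ED-DES itself was built in ProModel with distributions derived from hospital data, so this is an illustration of the technique rather than the model used in the study.

```python
import random


def simulate_triage(n_patients, mean_interarrival_min, mean_triage_min, seed=0):
    """Simulate a first-come, first-served triage desk with one nurse.

    Returns one record per patient with arrival, triage start/end, and wait.
    All times are in minutes from the start of the run (an assumption).
    """
    rng = random.Random(seed)
    clock = 0.0          # arrival time of the current patient
    nurse_free_at = 0.0  # time at which the triage nurse next becomes free
    records = []
    for patient_id in range(1, n_patients + 1):
        # Next arrival: exponential inter-arrival time with the given mean.
        clock += rng.expovariate(1.0 / mean_interarrival_min)
        # Triage starts when both the patient and the nurse are available.
        start = max(clock, nurse_free_at)
        nurse_free_at = start + rng.expovariate(1.0 / mean_triage_min)
        records.append({
            "id": patient_id,
            "arrival": clock,
            "triage_start": start,
            "triage_end": nurse_free_at,
            "wait": start - clock,
        })
    return records


if __name__ == "__main__":
    for record in simulate_triage(5, mean_interarrival_min=5.0, mean_triage_min=4.0):
        print(record)
```

The waiting time before triage emerges from resource availability rather than being sampled directly, which is the property that makes this kind of model useful for generating realistic event times.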

ED-DES (developed using the ProModel™ simulation software39) was based on a simulation developed during a previous study, which modeled the emergency department of a large urban hospital.40 Model parameters were captured from hospital records and observation. The original simulation model used animated simulation and statistical analyses to identify, evaluate and recommend potential measures to improve ED patient throughput.40 Implementation of the recommended changes, including adding an emergency physician at certain hours, resulted in shorter patient lengths of stay.

The original ED simulation included the following components: triage, registration, ED beds and rooms, labs and radiology, nurses (triage and ED), and physicians. Some ED patients were admitted to the hospital and the remainder were discharged. The processes of patient triage, registration, waiting for ED beds, being seen by nurses and physicians, lab tests and radiology imaging, observation, and discharge were all modeled by the simulation. Wait time depended on the availability of resources, such as ED beds, nurses, physicians, and lab and radiology facilities. The model also captured bed assignment time for admitted patients. A screen from the animated simulation is shown in figure 2.

Figure 2.

Sample screen from the ED discrete event simulation

2.2 Scaling the ED-DES Parameters

2.2.1 Patient Arrival Rate

The rate at which patients arrived to the simulated ED was based on hospital records40 as noted in section 2.1. To more closely mimic the ED environment where we planned to recruit participants for the simulation studies, we increased the arrival rate (equivalently, shortened the mean inter-arrival time) by the ratio of ED beds between the desired size and the initially simulated hospital (27 beds, including several temporary beds/chairs in the hallway, versus 18 beds). Additionally, we adjusted the simulation to produce both high and low patient traffic conditions. The high traffic arrival rate was set at 150% of the size-scaled rate, and the low traffic arrival rate at 75% of the size-scaled rate. The mean of each exponential inter-arrival distribution is the reciprocal of the corresponding arrival rate. The calculations below show how the exponential inter-arrival distributions were scaled:

Scaling Ratio = 27 beds / 18 beds = 1.5

Initial Arrival Rate = [ED Main Arrival Rate + Fast Track Arrival Rate] = 5.25 + 6.25 = 11.5 arrivals per hour

Simulation Scaled High Traffic Arrival Rate = (11.5 × 1.5 × 1.5) = 25.875 arrivals per hour

High Traffic Inter-Arrival Distribution = expo(60 / 25.875 min) = expo(2.32 min)

Simulation Scaled Low Traffic Arrival Rate = (11.5 × 1.5 × 0.75) = 12.9375 arrivals per hour

Low Traffic Inter-Arrival Distribution = expo(60 / 12.9375 min) = expo(4.64 min)
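The arithmetic above can be checked with a short Python sketch. The function name `scaled_arrival` is hypothetical; the input values (5.25 and 6.25 arrivals per hour, 18 and 27 beds, and the 1.5 and 0.75 traffic factors) are taken from the text, and the conversion assumes that the mean of an exponential inter-arrival distribution in minutes is 60 divided by the hourly arrival rate.

```python
def scaled_arrival(base_rate_per_hr, bed_ratio, traffic_factor):
    """Return (arrival rate per hour, mean inter-arrival time in minutes)."""
    rate = base_rate_per_hr * bed_ratio * traffic_factor
    return rate, 60.0 / rate


base_rate = 5.25 + 6.25   # ED main + fast track arrivals per hour
bed_ratio = 27 / 18       # desired ED size relative to the original model

high_rate, high_mean = scaled_arrival(base_rate, bed_ratio, 1.5)
low_rate, low_mean = scaled_arrival(base_rate, bed_ratio, 0.75)

print(f"high: {high_rate} arrivals/h -> expo({high_mean:.2f} min)")
print(f"low:  {low_rate} arrivals/h -> expo({low_mean:.2f} min)")
# high: 25.875 arrivals/h -> expo(2.32 min)
# low:  12.9375 arrivals/h -> expo(4.64 min)
```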

2.2.2 Patient Severity Level

The distribution of patient types (in terms of the medical severity of the patient's condition) was determined using the Emergency Severity Index (ESI) triage score40 originally assigned by the ED, as follows:

Severity 1 = 1.8% (Most severe)

Severity 2 = 13.2%

Severity 3 = 56.1%

Severity 4 = 24.8%

Severity 5 = 4.1% (Least severe)
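One way such a distribution could be used to assign severity levels to simulated patients is weighted random sampling. The sketch below is illustrative only: the names `ESI_SHARES` and `sample_severities` are hypothetical, and the sampling mechanism is an assumption rather than a description of how ED-DES assigns patient types. The percentages are those listed above.

```python
import random

# Share of patients at each ESI level, in percent (values from the list above).
ESI_SHARES = {1: 1.8, 2: 13.2, 3: 56.1, 4: 24.8, 5: 4.1}


def sample_severities(n_patients, seed=0):
    """Draw an ESI severity level for each of n_patients simulated patients."""
    rng = random.Random(seed)
    levels = list(ESI_SHARES)
    weights = list(ESI_SHARES.values())
    return rng.choices(levels, weights=weights, k=n_patients)


if __name__ == "__main__":
    draws = sample_severities(1000)
    for level in ESI_SHARES:
        print(level, draws.count(level) / len(draws))
```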

2.2.3 Simulated ED Events

The ED simulation was used to generate a set of events based on the amount of time taken for each step of a patient's visit to the ED. The various time based events included:

Waiting Time Before Triage

Triage Time

Waiting Time Before Registration

Registration Time

Waiting Time to be Assigned an ER Bed

Waiting Time for a Nurse and Physician to Arrive

Time with Physician

Time with Nurse

Lab and Radiology Turn-around Time (if required)

Waiting Time for a Nurse and Physician to Arrive with Lab and Radiology Results (if required)

Time with Physician with Lab and Radiology Results (if required)

Time with Nurse with Lab and Radiology Results (if required)

Time to Order Patient to be Admitted (if required)

Waiting Time to be Discharged from ED to a Hospital Bed (if required)

Waiting Time to be Discharged from ED to Home (if required)

2.3 Patient Script and Event Timeline Development

2.3.1 Patient Name Database

Simulated patient names were created by randomly selecting names from a local phone book. Four separate random number lists were used to select two sets of first names (male and female) and two sets of last names, which were combined to create patient names.
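The name-generation step can be sketched as follows. This is an assumption-laden illustration: the function `make_patient_names` and the name pools are placeholders (the study drew from a local phone book), and the four independent random draws stand in for the four random number lists mentioned above. Gender is attached to each name according to the first-name set it was drawn from.

```python
import random


def make_patient_names(male_first, female_first, last_names, n_each, seed=0):
    """Combine randomly selected first and last names into patient names.

    Four independent draws are made: male first names, female first names,
    and one set of last names for each group.
    """
    rng = random.Random(seed)
    males = rng.sample(male_first, n_each)
    females = rng.sample(female_first, n_each)
    male_last = rng.sample(last_names, n_each)
    female_last = rng.sample(last_names, n_each)
    patients = [(f"{last}, {first}", "M") for first, last in zip(males, male_last)]
    patients += [(f"{last}, {first}", "F") for first, last in zip(females, female_last)]
    return patients


if __name__ == "__main__":
    # Placeholder pools for illustration only.
    names = make_patient_names(
        ["Edward", "John", "Luis"],
        ["Lynn", "Valerie", "Ana"],
        ["Swan", "Scarpello", "Davidson", "Klosowski"],
        n_each=2,
    )
    print(names)
```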

2.3.2 Patient Gender, Chief Complaint, and Age

Patient gender was assigned corresponding to the random name assigned to the patient. Chief complaints and ages were assigned to each simulated patient based on the severity levels assigned by the simulation. Chief complaint and age were obtained from a de-identified database of actual ED patients. It was necessary to link age and chief complaint because the severity assigned to a patient with a given chief complaint could vary due to the age of the patient.

2.3.3 Patient Script Development

Emergency physicians on our research team created clinical information corresponding to the simulation-generated events. This information was integrated with the simulation events and times to create a script for each patient (see table 1). The information was appropriate for the chief complaint, age and gender of each simulated patient and included clinical information such as laboratory and medicine orders, treatment plans, and dispositions. The scripts included information that would typically appear in the comments or disposition column of a manual whiteboard.

Table 1.

Example Clinical Patient Script

| Patient ID | Severity Type | Comments for Whiteboard (ED-DES) | Description of Events | Time |
|---|---|---|---|---|
| 13 | 4 | | Time of Arrival | 52.88 |
| 13 | 4 | | Triage Start | 53.25 |
| 13 | 4 | | Registration Start | 57.61 |
| 13 | 4 | | Registration End | 63.65 |
| 13 | 4 | | Assigned ER Bed | 67.66 |
| 13 | 4 | | ER Nurse and Doctor Arrive | 88.51 |
| 13 | 4 | | ER Doctor Leaves | 114.9 |
| 13 | 4 | | Orders | 120.9 |
| 13 | 4 | | ER Nurse Leaves | 144.51 |
| 13 | 4 | D/C | Patient Disposition | 144.51 |
| 13 | 4 | Hskp | Patient Leaves ER | 152.14 |

| Chief Complaint | First Name | Last Name | Age | Gender |
|---|---|---|---|---|
| Fever, runny nose | Edward | Swan | 20 | M |
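A patient script of this kind can be represented as a list of timestamped records, from which intervals such as registration time or total length of stay follow by subtraction. The sketch below is a hypothetical representation, not the format used by the authors: the `ScriptEvent` class and `elapsed` helper are invented for illustration, the event names and times are those shown for patient 13 in Table 1, and the time unit is assumed to be minutes of simulation time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScriptEvent:
    patient_id: int
    severity: int
    description: str
    time: float                   # assumed: minutes of simulation time
    whiteboard_comment: str = ""  # text shown in the comments column, if any


# Events for patient 13, copied from Table 1.
SCRIPT_13 = [
    ScriptEvent(13, 4, "Time of Arrival", 52.88),
    ScriptEvent(13, 4, "Triage Start", 53.25),
    ScriptEvent(13, 4, "Registration Start", 57.61),
    ScriptEvent(13, 4, "Registration End", 63.65),
    ScriptEvent(13, 4, "Assigned ER Bed", 67.66),
    ScriptEvent(13, 4, "ER Nurse and Doctor Arrive", 88.51),
    ScriptEvent(13, 4, "ER Doctor Leaves", 114.9),
    ScriptEvent(13, 4, "Orders", 120.9),
    ScriptEvent(13, 4, "ER Nurse Leaves", 144.51),
    ScriptEvent(13, 4, "Patient Disposition", 144.51, "D/C"),
    ScriptEvent(13, 4, "Patient Leaves ER", 152.14, "Hskp"),
]


def elapsed(script, start_description, end_description):
    """Time between two named events in one patient's script."""
    times = {event.description: event.time for event in script}
    return times[end_description] - times[start_description]


if __name__ == "__main__":
    print(round(elapsed(SCRIPT_13, "Registration Start", "Registration End"), 2))
    print(round(elapsed(SCRIPT_13, "Time of Arrival", "Patient Leaves ER"), 2))
```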

2.3.4 Event Timeline Development

Patient scripts were integrated to create an “event timeline” (table 2). The event timeline consists of all events, for all patients, organized by time. The event timeline contains information such as the location where a patient will be at a particular time point (waiting room or ED), bed number that will be assigned once the patients are in the ED, and nurses and physicians who will be caring for the patient. For instance, at time point ‘3’ (3 minutes into a scenario), three patients could be at triage, two at registration, and five in the waiting room (see table 2). The event timeline is used to populate areas of the Patient Tracking System Display Simulation (PTSS) and to update displayed information over time, as the events occur.

Table 2.

Sample Event Timeline

| ID | Time of Event | Arrival Time | Room | Bed Number | Patient Name | Age | Gender | Nurse | MD | Chief Complaint | Orders | Disposition | Patient ID | Event |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 220 | 12:00 | WR | | | | | | | | | | 38 | Arrival |
| 2 | 221 | 12:00 | WR | | Scarpello, Lynn | 24 | F | | | | | | 38 | Start Triage |
| 3 | 227 | 12:06 | WR | | | | | | | | | | 39 | Arrival |
| 4 | 227 | 12:00 | WR | 1 | Scarpello, Lynn | 24 | F | | | Constipation | | | 38 | End Triage |
| 5 | 228 | 12:07 | WR | | | | | | | | | | 40 | Arrival |
| 6 | 228 | 12:00 | WR | 1 | Scarpello, Lynn | 24 | F | | | Constipation | | | 38 | Start Registration |
| 7 | 228 | 12:07 | WR | | Davidson, Valerie | 79 | F | | | | | | 39 | Start Triage |
| 8 | 232 | 12:00 | WR | 1 | Scarpello, Lynn | 24 | F | | | Constipation | | | 38 | End Registration |
| 9 | 232 | 12:11 | WR | | | | | | | | | | 41 | Arrival |
| 10 | 232 | 12:00 | FT | 1 | Scarpello, Lynn | 24 | F | | | | | | 38 | Assigned Bed |
| 11 | 232 | 12:06 | WR | | Klosowski, John | 21 | M | | | | | | 40 | Start Triage |
| 12 | 235 | 12:14 | WR | | | | | | | | | | 42 | Arrival |
| 13 | 236 | 12:15 | WR | | | | | | | | | | 43 | Arrival |
| 14 | 238 | 12:16 | WR | | | | | | | | | | 44 | Arrival |
| 15 | 239 | 12:06 | WR | 1 | Davidson, Valerie | 79 | F | | | Bladder, Urinary Problem | | | 39 | End Triage |
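Merging per-patient scripts into a single event timeline amounts to flattening the scripts and sorting by event time. The following sketch shows one way this could be done; the function `build_timeline` and the dictionary layout are assumptions for illustration, and the sample events are a subset of those in Table 2 (patients 38, 39 and 40). A stable sort is used so that events of the same patient that share a timestamp keep their scripted order.

```python
def build_timeline(scripts):
    """Merge per-patient scripts into one list of (time, patient_id, event).

    `scripts` maps a patient ID to that patient's events, each a
    (time, event) pair already in scripted order.
    """
    flat = [
        (time, patient_id, event)
        for patient_id, events in scripts.items()
        for time, event in events
    ]
    # sorted() is stable, so ties on time preserve within-patient order.
    return sorted(flat, key=lambda row: row[0])


# Subset of the events in Table 2.
SCRIPTS = {
    38: [(220, "Arrival"), (221, "Start Triage"), (227, "End Triage"),
         (228, "Start Registration"), (232, "End Registration"),
         (232, "Assigned Bed")],
    39: [(227, "Arrival"), (228, "Start Triage"), (239, "End Triage")],
    40: [(228, "Arrival"), (232, "Start Triage")],
}

if __name__ == "__main__":
    for row in build_timeline(SCRIPTS):
        print(row)
```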

2.4 Patient Tracking System Display Simulation (PTSS)

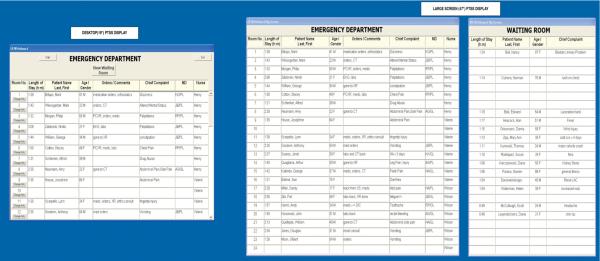

The PTSS is the front-end graphical user interface which simulates an electronic patient tracking system interface. The PTSS program consists of four components: a database developed in Microsoft Access 2007, a display generator developed in Microsoft Visual Basic 6.0, a phone/pager system and a log file generator. The database contains one or more event timelines (representing different scenarios). The display generator creates displays according to selected configuration parameters, retrieves information from a specified event timeline in the database, and inserts the information on the interface as the program runs (as shown in Figure 3). Dynamic updates of patient information, events, and evaluative probes (described in section 2.5) are triggered by the event timeline. Participants’ interactions with the interface are recorded in structured text in the log files for further analysis.

Figure 3.

PTSS screenshot

The PTSS has been designed modularly so components can be adapted for different studies. The software application can be modified to support different interface features (e.g. color coding based on various ED parameters), and different types of interaction (e.g. discharging patients). The database could be expanded to include new scenarios, in the form of new event timelines.

2.4.1 PTSS Display Generator

The PTSS display was designed to show information commonly found on manual and electronic whiteboards, rather than to mimic any particular commercially available system. The PTSS has separate views for the ED and the waiting room. Twenty-four rows (representing 24 available treatment area spaces for patients) are provided (figure 3). Each row shows room number; length of stay; demographic information such as patient name, age and gender; clinical information such as chief complaints and orders; and provider information such as attending physician and resident initials and nurse name. Nurses are assigned in zones, with each nurse handling six beds. The waiting room displays the patient's name, age, gender and chief complaint as each enters the PTSS system. The waiting room patients are automatically transferred to the ED bed screen based on the “ER bed assignment time” in the event timeline. Both the ED and the waiting room screens are updated as the simulator runs based on information in the event timeline.
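The update logic described above (patients appear in the waiting room on arrival, move to the ED view when a bed is assigned, and nurses cover zones of six beds) can be sketched as a replay of the event timeline up to the current simulation time. This is not the PTSS implementation, which was written in Visual Basic; the functions `census_at` and `nurse_zone`, the tuple layout, and the event labels used as triggers are assumptions based on the labels shown in Tables 1 and 2.

```python
def census_at(timeline, now):
    """Replay a time-ordered timeline and return (waiting_room, ed) patient IDs.

    `timeline` is a list of (time, patient_id, event) tuples sorted by time.
    """
    waiting, in_ed = set(), set()
    for time, patient_id, event in timeline:
        if time > now:
            break  # later events have not happened yet
        if event == "Arrival":
            waiting.add(patient_id)
        elif event == "Assigned Bed":
            waiting.discard(patient_id)
            in_ed.add(patient_id)
        elif event == "Patient Leaves ER":
            in_ed.discard(patient_id)
    return sorted(waiting), sorted(in_ed)


def nurse_zone(bed_number, beds_per_nurse=6):
    """Map a 1-based bed number to a 1-based nurse zone of six beds."""
    return (bed_number - 1) // beds_per_nurse + 1


if __name__ == "__main__":
    demo = [(220, 38, "Arrival"), (227, 39, "Arrival"),
            (232, 38, "Assigned Bed"), (300, 38, "Patient Leaves ER")]
    print(census_at(demo, 232))
    print([nurse_zone(bed) for bed in (1, 6, 7, 24)])
```

With 24 rows and six beds per nurse, this mapping yields four zones.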

The PTSS is designed to support two screen configurations for evaluation. The display can be shown either on both a standard desktop monitor and a large-screen (e.g., 47-inch) display, or on a desktop monitor alone. These configurations were chosen because they are consistent with ED IT system displays that are currently being implemented. As with typically implemented systems, users can only interact with the small (desktop) display. Results of any changes are shown on both displays. The large-screen display shows the entire census of the ED and waiting room. The small screen shows either the ED or the waiting room, and shows only a subset of patients. Users toggle between the ED and waiting room views and scroll to view all patients.

The simulator allows users to change patient information and add same name alerts to patients, using a “change info” button. From the “change info” screen, users are able to see patient name, age, gender, chief complaints, current orders, and assigned caregivers.

2.4.2 Phone/Pager System

Additional interactions with the PTSS are initiated through a phone-pager system, which runs synchronously with the simulation. The phone-pager system emulates typical phone calls and pages that users might receive. Twenty-four prompts were designed for each forty-minute scenario, and are based on the patient information appearing on the PTSS at different times throughout the scenario. The phone calls and pages require users to respond by (a) checking the PTSS to obtain information about a specific patient; (b) referring to a list of physician/nurse phone numbers; (c) changing information about a specific patient in the PTSS, based on the information provided by the prompt; or (d) transferring information about a specific patient from the screen to a form. Example prompts are to “find the location of a patient,” or “change the lab value for a patient.” Responses to the prompts are recorded so that their accuracy and completeness can be analyzed.

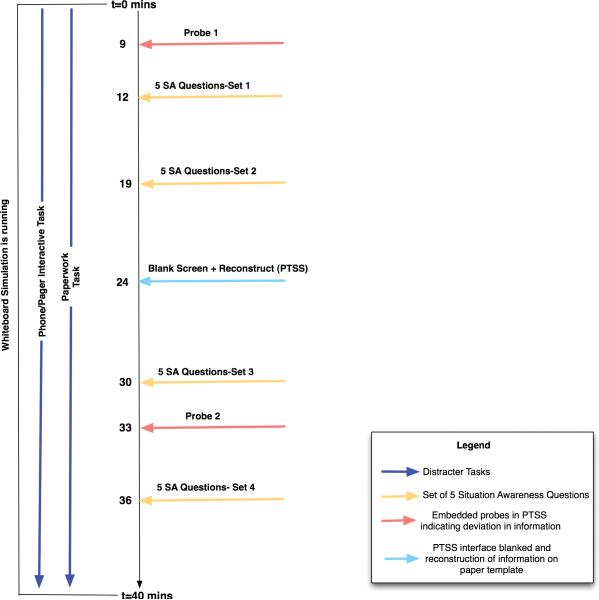

2.5 Simulator Measurement Capabilities

The simulator can measure several human factors engineering and usability variables (Figure 4). Endsley's simulation freeze technique (Situation Awareness Global Assessment Technique or SAGAT41) was implemented to allow assessment of situation awareness. In this technique, the simulation is stopped (“frozen”) and the information on the screen hidden, while various questions regarding the current and projected states of information and entities in the simulation are asked of the user. Scores are based on the accuracy of the responses. The simulator can also mimic a critical information system failure by blanking out the PTSS screens. Users are then asked to reconstruct the PTSS screen on a paper template, with scores based on the correctness and completeness of the data recall. Thus, user response and adaptation in the event of critical information system failures can be measured. The completeness and correctness of the data recalled on the paper form also indicate the salience of the information represented on the PTSS.
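The two kinds of score described above can be sketched in a few lines of Python. The exact scoring rules used in the study are not given in the text, so the definitions here are assumptions: the SAGAT score is taken as the fraction of questions answered correctly, completeness as the fraction of display cells the participant filled in, and correctness as the fraction of filled-in cells that match the display. The function names and the dictionary layout are hypothetical.

```python
def sagat_score(answers, answer_key):
    """Fraction of freeze-probe questions answered correctly."""
    if not answer_key:
        return 0.0
    correct = sum(1 for q, expected in answer_key.items() if answers.get(q) == expected)
    return correct / len(answer_key)


def score_recall(truth, recalled):
    """Score a paper reconstruction of the display.

    Both arguments map (row, field) to a value. Returns
    (completeness, correctness) under the assumed definitions above.
    """
    filled = {cell: value for cell, value in recalled.items()
              if cell in truth and value not in (None, "")}
    correct = sum(1 for cell, value in filled.items() if value == truth[cell])
    completeness = len(filled) / len(truth) if truth else 0.0
    correctness = correct / len(filled) if filled else 0.0
    return completeness, correctness


if __name__ == "__main__":
    truth = {(1, "name"): "Scarpello, Lynn", (1, "age"): "24",
             (2, "name"): "Davidson, Valerie", (2, "age"): "79"}
    recalled = {(1, "name"): "Scarpello, Lynn", (1, "age"): "42",
                (2, "name"): "Davidson, Valerie"}
    print(score_recall(truth, recalled))
```

Separating completeness from correctness distinguishes a participant who recalls little but accurately from one who fills in many cells with errors.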

Figure 4.

Timeline for the experiment

Scenarios can also be developed to include embedded task ‘probes’, which are deviations from the normal state of the ED. For example, a ‘hair x-ray’ order could be scripted to appear in the orders column to determine whether users are able to identify an abnormality in the information represented on the PTSS. Users can respond to the probes by verbal or physical acknowledgement, or by adding “alert” information in the PTSS.

Finally, along with the above measures, the simulator logs all the changes and additions that users make in the PTSS. This log is useful in providing a retrospective record of the caregiver interactions with the PTSS system, for use in analyzing the usability of the PTSS.

3. Simulator Applications

The simulator can be used to assess aspects of performance across ED operating parameters or display configurations. As described in sections 2.1 and 2.2, the underlying ED simulation can be scaled to create events and times for EDs of different sizes, across different scenario lengths, with different patient arrival rates, or with different distributions of more and less severe patient conditions. Scenarios can be tested across different display configurations: a small screen, and a small-screen + large screen configuration. For example, we have used this methodology to create four different forty-minute scenarios based on four different event timelines. Two scenarios were high traffic, and two were low traffic (as described in section 2.2.1). Twenty situation awareness (SA) questions were created and included for each scenario. Embedded probes were included in each scenario, and a system failure was embedded in one of the scenarios. The simulator and scenarios have been used to support a laboratory study comparing the small screen and small screen + large screen configurations across the high and low traffic conditions, for two user groups: ED nurses and ED secretaries.42

Because the PTSS program is modular, it is possible to change the format of the information display while using the same scenarios. Thus, the existing scenarios could be used in additional studies to test different display conditions. We are creating an additional display format, using color to highlight critical information. Additionally, because the scenarios were based on event types and times from a real emergency department, and enhanced with relevant clinical details, the scenarios should be applicable across a range of user groups. As needed, the language in the event timeline could be edited to conform to vocabulary or notations used by specific user groups (e.g., if physicians in one ED denote a urine pregnancy test as a “UPT” while those in another use “ICON”). Different scenarios could also be developed by running the underlying ED simulation with different parameters. Patient scripts could be created using the methodology described in section 2.3 and assembled into a new event timeline.

4. Simulator Fidelity

Simulators can vary in terms of the level of fidelity with which they represent real world objects, environments, and situations. Our goals were to incorporate realistic patient related events, times, and characteristics (e.g., arrival rates, severity of conditions, treatment events while in the ED), as well as realistic clinical information, into the simulator. Additionally, we wanted to develop a computer interface that had features found on most manual and electronic patient tracking systems. We did not attempt to create a multi-room ED environment where patient care or team interaction could be simulated, nor validate our system for this or other purposes beyond those of this research. Instead, our approach to validation was tied to our specific research goals.

Simulator fidelity was closely controlled and evaluated. First, the events and event times were based on an actual ED, and validated during an earlier simulation by Paul and Lin.40 Second, four emergency physicians on the research team evaluated the set of events associated with each simulated patient. The clinical details developed for each patient were screened for realism, and carefully selected to match the severity level associated with the patient's condition, as well as the events and event times associated with the patient. The patient tracking system interface was also developed based on the expertise of the research team, who had both research and clinical experience with both manual and electronic patient tracking systems at a number of hospitals. The particular columns and medical notation on the display were reviewed by the team to ensure they matched those of a manual whiteboard at a local hospital where we planned to recruit participants. Third, before we used the simulator as part of an experimental study, we piloted the system and asked people with relevant experience (e.g., an ED secretary, an attending physician) to assess the realism of the experimental tasks using the simulator (e.g., the questions generated by the phone system, the need to look up information in the system). Their feedback was used to enhance the tasks (e.g., to add more phone interruptions, or slightly change the nature of the questions and interactions to better match people's real world tasks). Fourth, during the experiments we conducted using the simulator, participants made informal comments regarding the patient tracking displays that indicated they were comfortable interacting with the display and that the simulator display was similar to what they would expect. 
Thus, while we did not conduct a formal evaluation of the simulator realism, both our simulator development methodology and informal assessments associated with our experiments suggest that the patient tracking system simulator provides a realistic display of events and clinical information that would be typical of an ED system.

5. Limitations and Future Work

This research did not develop an immersive, simulated “ED suite” in which teams of trainees or experimental participants role-play complex interactions representative of ED work and experience realistic workload demands. Instead, measures collected based on the simulator we developed could be used to make comparisons among different patient tracking screen designs or screen size configurations in terms of usability and support for situation awareness. For instance, we have simulated typical paper tasks (e.g., scheduling nurses for a shift and filling in patient departure forms) to support and supplement interaction with the PTSS as part of an experimental protocol. Performance measures based on these tasks indicate the degree to which different patient loads, or display configurations, impact overall workload.43 Future studies could consider how to incorporate the simulator into an immersive, physical simulation of an ED environment where caregiver workload and awareness of information on the displays could be assessed more realistically. Additionally, in an immersive setting, more aspects of team interaction with the patient tracking system simulator could be measured.44

The discrete event simulation created patient arrivals based on stochastic arrival processes with different volumes for various times. In a real ED, there could be instances where there is a sudden extreme surge in the patient volume, such as in the case of a mass casualty incident. Our simulation does not consider sudden extreme surges in patient volumes. Additional scenarios could be created, using our methodology, in which transient processes for patient surge arrivals would be included to capture the dynamic capacity changes in the hospital. Such scenarios may be valuable in assessing IT system performance under conditions of disaster emergencies.45 It is also possible that the simulator could support ED training sessions. Scenarios could be developed which include incidents of interest (e.g., mass casualties) or situations which could lead to errors (e.g., patients with similar names), and used within a more immersive simulated ED setting for training.

6. Conclusions

An increasing number of EDs are transitioning to computerized solutions to support work processes. Unexpected consequences may ensue from these technological solutions if they fail to support caregivers' work activities, with negative effects on performance and potentially on patient safety. The methodology described here employs simulation to iteratively design and test IT systems and allows relevant variables to be measured in a controlled environment. The methodology exploits the benefits of discrete event simulation to design an electronic patient tracking simulator. Studies using this simulator will help generate specifications for a patient-tracking interface that will best support ED caregivers’ work and enhance patient care and safety.

Acknowledgements

This study was supported by grant 1 U18 HS016672 from the Agency for Healthcare Research and Quality. We would like to acknowledge Theresa Moehle for her work on the initial setup of the simulation environment.

Footnotes

1 The authors are willing to share the software with researchers interested in using or adapting the methodology for their own needs. Please email the corresponding author for such requests.

References

- 1.Haux R. Health information systems - past, present, future. International Journal of Medical Informatics. 2006;75(3-4):268–81. doi: 10.1016/j.ijmedinf.2005.08.002. [DOI] [PubMed] [Google Scholar]

- 2.Poon E, Jha A, Christino M, et al. Assessing the level of healthcare information technology adoption in the United States: a snapshot. BMC Medical Informatics and Decision Making. 2006;6(1) doi: 10.1186/1472-6947-6-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Pennathur PR, Bisantz AM, Fairbanks RJ, Perry SJ, Zwemer F, Wears RL. Assessing the impact of computerization on work practice: information technology in emergency departments.. Proceedings of the Human Factors and Ergonomics Society 51st Annual Meeting; Baltimore, Maryland. 2007. [Google Scholar]

- 4.Xiao Y, Schenkel S, Faraj S, Mackenzie CF, Moss J. What whiteboards in a trauma center operating suite can teach us about emergency department communication. Ann Emerg Med. 2007;50(4):387–95. doi: 10.1016/j.annemergmed.2007.03.027. [DOI] [PubMed] [Google Scholar]

- 5.Wears R, Perry S, Wilson S, Galliers J, Fone J. Emergency department status boards: user-evolved artefacts for inter- and intra-group coordination. Cognition Technology and Work. 2007;9(3):163–70. [Google Scholar]

- 6.Wears RL, Perry SJ. Status boards in accident and emergency departments: Support for shared cognition. Theoretical Issues in Ergonomics Science. 2007;8(5):371–80. [Google Scholar]

- 7.Laxmisan A, Hakimzada F, Sayan O, Green R, Patel Z. The multitasking clinician: decision-making and cognitive demand during and after team handoffs in emergency care. Int J Med Inform. 2007;76(11-12):801–11. doi: 10.1016/j.ijmedinf.2006.09.019. [DOI] [PubMed] [Google Scholar]

- 8.Vest N, Rudge N, Holder G. ED whiteboard: An electronic patient tracking and communication system. J Emerg Nurs. 2006;32(1):8. [Google Scholar]

- 9.France D, Levin S, Hemphill R, et al. Emergency physicians' behaviors and workload in the presence of an electronic whiteboard. Int J Med Inform. 2005;74:827–37. doi: 10.1016/j.ijmedinf.2005.03.015. [DOI] [PubMed] [Google Scholar]

- 10.Kohli S, Waldron J, Feng K, et al. Utilizing the electronic emergency whiteboard to track and manage emergency patients. Medinfo. 2004:1688. [Google Scholar]

- 11.Jensen J. United hospital increases capacity usage, efficiency with patient-flow management system. J Healthc Inf Manag. 2004;18(3):26–31. [PubMed] [Google Scholar]

- 12.Mackay WE. Is paper safer? The role of paper flight strips in air traffic control. ACM Transactions on Computer-Human Interaction. 1999;6(4):311–40.

- 13.Ash J, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004;11:104–12. doi: 10.1197/jamia.M1471.

- 14.Koppel R, Metlay J, Cohen A, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA. 2005;293(10):1197–203. doi: 10.1001/jama.293.10.1197.

- 15.Garg A, Adhikari N, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005;293(10):1223–38. doi: 10.1001/jama.293.10.1223.

- 16.Heath C, Luff P. Technology in Action. Cambridge University Press; New York, NY: 2000.

- 17.Wiener E. Beyond the sterile cockpit. Hum Factors. 1985;27(1):75–90.

- 18.Wears RL, Perry SJ, Shapiro MJ, Beach C, Croskerry P, Behara R. A comparison of manual and electronic status boards in the emergency department: What's gained and what's lost? Proceedings of the Human Factors and Ergonomics Society 47th Annual Meeting; Denver, Colorado. 2003. pp. 1415–9.

- 19.Gould JD, Lewis C. Designing for usability: Key principles and what designers think. In: Baecker RM, editor. Human-computer Interaction: A Multidisciplinary Approach. Morgan Kaufmann Publishers Inc.; San Francisco, CA: 1987.

- 20.Hunt E, Walker A, Shaffner D, Miller M, Pronovost P. Simulation of in-hospital pediatric medical emergencies and cardiopulmonary arrests: highlighting the importance of the first 5 minutes. Pediatrics. 2008;121(1):34–43. doi: 10.1542/peds.2007-0029.

- 21.Lighthall G, Barr J. The use of clinical simulation systems to train critical care physicians. Journal of Intensive Care Medicine. 2007;22(5):257–69. doi: 10.1177/0885066607304273.

- 22.Gaba D. The future vision of simulation in health care. Quality and Safety in Health Care. 2004;13:2–10. doi: 10.1136/qshc.2004.009878.

- 23.Gaba D, Deanda A. A comprehensive anesthesia simulation environment: re-creating the operating room for research and training. Anesthesiology. 1988;69(3):387–94.

- 24.Anderson JG, Jay SJ, Anderson M, Hunt TJ. Evaluating the capability of information technology to prevent adverse drug events: A computer simulation approach. Journal of the American Medical Informatics Association. 2002;9(5):479–90. doi: 10.1197/jamia.M1099.

- 25.Lee J, McGehee D, Brown T, Reyes M. Collision warning timing, driver distraction, and driver response to imminent rear-end collisions in a high fidelity driving simulator. Human Factors. 2002;44. doi: 10.1518/0018720024497844.

- 26.Iwata K, Onosato M, Teramoto K, Osaki S. A modeling and simulation architecture for virtual manufacturing systems. CIRP Annals-Manufacturing Technology. 1995;44(1):399–402.

- 27.Duguay C, Chetouane F. Modeling and improving emergency department systems using discrete event simulation. Simulation. 2007;83(4):311–20.

- 28.Gunal M, Pidd M. Understanding accident and emergency department performance using simulation. Proceedings of the 38th Conference on Winter Simulation; Monterey, California. 2006. pp. 446–52.

- 29.Kuwata S, Kushniruk A, Borycki E, Watanabe H. Using simulation methods to analyze and predict changes in workflow and potential problems in the use of a bar-coding medication order entry system. AMIA Annual Symposium Proceedings; 2006. p. 994.

- 30.Borycki ER, Kushniruk A, Kuwata S. Use of simulation approaches in the study of clinician workflow. AMIA Annual Symposium Proceedings; 2006. pp. 61–5.

- 31.Sarter N, Woods DD. Team play with a powerful and independent agent: A full-mission simulation study. Human Factors. 2000;42(3):390–402. doi: 10.1518/001872000779698178.

- 32.Longridge T, Bürki-Cohen J, Go T, Kendra A. Simulator fidelity considerations for training and evaluation of today's airline pilots. Proceedings of the 11th International Symposium on Aviation Psychology; Columbus, OH. 2001.

- 33.Menendez R, Bernard J. Flight simulation in synthetic environments. Digital Avionics Systems Conferences; Philadelphia, PA. 2000.

- 34.Davis PK. Distributed interactive simulation in the evolution of DoD warfare modeling and simulation. Proceedings of the IEEE. 1995;83(8):1138–55.

- 35.Walton G, Patton R, Parsons D. Usage testing of military simulation systems. Proceedings of the 33rd Conference on Winter Simulation; Arlington, Virginia. 2001.

- 36.Burns CM, Skraaning G, Jamieson GA, et al. Evaluation of Ecological Interface Design for Nuclear Process Control: Situation Awareness Effects. Human Factors: The Journal of the Human Factors and Ergonomics Society. 2008 Aug 1;50(4):663. doi: 10.1518/001872008X312305.

- 37.Jamieson G. Ecological Interface Design for Petrochemical Process Control: An Empirical Assessment. IEEE Transactions on Systems, Man and Cybernetics, Part A. 2007;37(6):906–20.

- 38.Triggs TJ. The ergonomics of decision-making in large scale systems: information displays and expert knowledge elicitation. Ergonomics. 1988;31(5):711–9.

- 39.Harrell CR, Ghosh BK, Bowden RO. Simulation Using Promodel. 2nd ed. McGraw-Hill Professional; New York: 2004.

- 40.Paul JA, Lin L. Simulation and parametric models for improving patient throughput in hospitals. Society for Health Systems Conference; New Orleans, LA. 2007.

- 41.Endsley M. Measurement of situation awareness in dynamic systems. Human Factors. 1995;37(1):65–84.

- 42.Pennathur P, Cao D, Sui R, et al. Evaluating Emergency Department Information Technology Using a Simulation-based Approach. Proceedings of the Human Factors and Ergonomics Society 53rd Annual Meeting; 2009. To appear.

- 43.Meshkati N, Hancock PA, Rahimi M, Dawes SM. Techniques in mental workload assessment. In: Wilson JR, Corlett NE, editors. Evaluation of Human Work. Taylor and Francis; Bristol, PA: 1995.

- 44.Shapiro MJ, Morey JC, Small SD, et al. Simulation based teamwork training for emergency department staff: does it improve clinical team performance when added to an existing didactic teamwork curriculum? Quality and Safety in Health Care. 2004;13:417–21. doi: 10.1136/qshc.2003.005447.

- 45.Paul JA, George SK, Yi P, Lin L. Transient modeling in hospital operations for emergency response on patient waiting times. Prehosp Disaster Med. 2006;21(4):223–36. doi: 10.1017/s1049023x00003757.