Abstract

Background

Clinical decision support (CDS) is associated with improvement in quality and efficiency in healthcare delivery. The appropriate way to evaluate its effectiveness remains uncertain.

Methods

We analyzed data from our electronic health record (EHR) measuring the display frequency of eight reminders for coronary artery disease and type 2 diabetes and their associated performance according to a predefined methodology. We propose two key performance indicators, the reminder performance (RP) and the number needed to remind (NNR), to evaluate the impact that CDS reminders have on adherence to guideline-derived interventions, both across the entire patient population and for the individual providers receiving the interventions.

Results

Data were available for 116,027 patients and a total of 1,982,735 reminders were displayed to a subset of 65,516 patients during the study period from January 1 to December 31, 2010. The evaluation framework assessed provider acknowledgement of the CDS intervention, and the presence of the expected performance event while accounting for patients’ exposure to the CDS reminders. The total RP was 2.7% while the average NNR was 3.1 for all the reminders under study.

Conclusions

The proposed framework to assess CDS performance provides a novel approach to improve the design and evaluation of CDS interventions. The application of this methodology provides an indicator for understanding the impact of CDS interventions and subsequent patient outcomes. Further research is required to evaluate the impact of these systems on the quality of care.

1 Introduction

Meaningful use of health information technology is viewed as essential for effecting change in healthcare delivery.1 Effective use of clinical decision support (CDS) is one of the components that help physicians and other health care providers treat patients according to evidence-based guidelines for care.2–5

Reminders are one common type of CDS, usually triggered by patient data or information entered by the user. They are intended to prompt the healthcare provider about appropriate interventions, or to avoid certain actions, in order to improve the individual patient's care.6 In the literature, reminders have been found to improve some preventive practices and compliance with clinical guidelines in regard to medication selection and diagnostic testing.7

However, some research has found that reminders achieve modest or no improvement in care.6,8–10 In addition, current research has suggested that an overabundance of reminders may counteract their effectiveness and lead to user dissatisfaction.11,12 Our approach focuses on the expected actions in preventive care that can be attributed to the reminder being displayed and acted upon, from a population perspective. Existing evidence on the topic is conflicting, and the topic remains understudied.13 In research conducted by the CDS Consortium,14,15 we developed a CDS Dashboard to inform end users about their use of decision support and compliance with CDS recommendations, and implemented it at the Partners Healthcare System. The CDS Dashboard also provides feedback to the research team about CDS performance characteristics, including usage and compliance rates and user performance for key metrics, compared to other users or reference benchmarks.

The purpose of this paper is to provide a better understanding of population-based CDS performance measurement, to identify best practices for designing and implementing CDS, and to introduce two new quality measures, Reminder Performance (RP) and the Number Needed to Remind (NNR), for evaluating the effectiveness of clinical reminders in the context of the CDS Dashboards.

2 Methods

2.1 Study Setting

The CDS Consortium was funded by the Agency for Healthcare Research and Quality (AHRQ) to address the challenge of documenting, generalizing, and translating the CDS adoption experience at advanced sites to broader community settings.14 The Consortium was created when investigators from Partners HealthCare (PHS) Information Systems (IS) formed an alliance with several other institutions intimately involved in creating and providing CDS tools and services in EHRs. Furthermore, a CDS rules service has been implemented to enable sharing of CDS on an advanced rules engine platform among consortium members.16

Within the AHRQ CDSC project, eight reminders were studied (Table 1) from January 1, 2010 to December 31, 2010. All were synchronous passive reminders triggered by opening the patient's electronic chart. For each reminder, we obtained data from the Longitudinal Medical Record (LMR) including: patients who were eligible for the measure (the denominator), patients who had already or subsequently received the recommended action (the numerator), the reminders displayed (when and to whom), the provider acknowledgement of the reminder, and a coded response. These data were loaded into the Partners Quality Data Warehouse (QDW) and dashboards were constructed in Report Central17 using Crystal Reports™.

Table 1.

Reminders

| Condition | Reminder | Measure | Reminder text |
|---|---|---|---|
| CAD | CAD and no Aspirin | Patient has CAD and aspirin is on the medication list | Patient has CAD equivalent on problem list and aspirin is not on the medication list. Recommend aspirin. |
| Diabetes | Diabetic overdue for HbA1c | Diabetes, HbA1c completed in the past 6 months | Patient with Diabetes Mellitus overdue for HbA1C |
| Diabetes | Diabetic almost due for HbA1C | Diabetes, HbA1c completed in the past 6 months | Patient with DM is due for HbA1C by <mm/dd/yyyy> |
| Diabetes | Diabetes overdue for Microalbumin/creatinine ratio | Diabetes, Microalbumin completed in the past year | Patient with diabetes overdue for urine Microalbumin/creatinine ratio |
| Diabetes | Diabetes almost due for Microalbumin/creatinine ratio | Diabetes, Microalbumin completed in the past year | Patient with diabetes almost due for urine Microalbumin/creatinine ratio by <mm/dd/yyyy> |
| Diabetes | Diabetic overdue for ophthalmology exam | Diabetes, Ophthalmology exam completed in past year | Patient with diabetes mellitus overdue for ophthalmology exam |
| Diabetes | Diabetic almost due for ophthalmology exam | Diabetes, Ophthalmology exam completed in past year | Patient with diabetes mellitus almost due for ophthalmology exam |
| Diabetes with Renal Disease | Diabetes Mellitus and Microalbumin/creatinine ratio >30 | Diabetes, Microalbumin/creatinine ratio >30, and on ACE-inhibitor, ARB | Patient with diabetes mellitus, Microalbumin/creatinine ratio >30 and not on ACE-inhibitor, ARB. Recommend ACE-inhibitor or ARB |

Detailed list of CDS reminders by condition.

2.2 Dashboard Design and Development

Two separate dashboards were built to target clinicians and CDS implementers (knowledge engineers). To accomplish this, the thoughts and requests of each type of user were considered and incorporated in the design process. Iterative designs were prototyped incorporating feedback from the CDS Dashboard Team, which was comprised of senior medical informaticians, senior knowledge engineers, and the principal investigator. It was determined that the clinician dashboard would present information regarding clinical performance for patients determined by the reminder logic (Table 2), which would then be organized by condition and compared to their peers. In comparison, the designer view was created to address reminder performance.

Table 2.

CDS Reminder Performance logic per measure

| Measure | Performance measurement |
|---|---|
| Patient has CAD and Aspirin is on the medication list | Patient has CAD and aspirin is on the medication list before the period start date and there is no stop date on the med entry that is before the period end date. |
| Diabetes, HbA1c completed in the past 6 months | Patient has an HbA1C entry < the period end date and > 6 months before the period start date. |
| Diabetes, Microalbumin completed in the past year | Patient has a MALCR entry entered < the period end date and > 12 months before the period start date. |
| Diabetes, Ophthalmology exam completed in past year | Patient has an Ophthalmologic exam entry entered < the period end date and > 12 months before the period start date. |
| Diabetes, microalbumin/creatinine ratio >30, and on ACEI/ARB | Patient has an ACEI/ARB on their medication list before the period start date and there is no stop date on the medication entry that is before the period start date. |

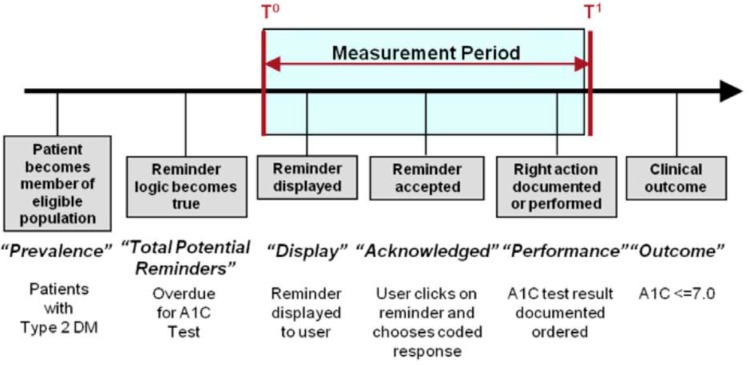

The designer view displays the level of acknowledgement of reminders, reminder performance by patient group, and specific data related to reminder effectiveness, including: reminder prevalence, reminder presentation, reminder acknowledgement defined as “the formal declaration of a reminder being received and acted upon” (Figure 1), CDS performance, and the NNR. Within the QDW, there are two ways of looking at each reminder – the first looks at the number of displays, defining a display event as the reminder being shown on the screen to an LMR user for a particular patient, where display events are counted by provider-patient-month. The second approach defines a reminder event at the patient level for the entire study period, i.e. the reminder being shown on a screen to any LMR user for a particular patient, treating each patient-year as one display event, while considering the patient compliant when the performance action was recorded within the following month from the reminder being displayed.
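The two counting approaches can be illustrated with a minimal sketch. The log layout, identifiers, and function names below are hypothetical and are not taken from the QDW; the sketch only assumes that each display is recorded with a provider, a patient, and a date.

```python
from datetime import date

# Hypothetical display log: (provider_id, patient_id, date shown).
# The real QDW schema is not described in the text; this layout is an assumption.
display_log = [
    ("dr_a", "p1", date(2010, 1, 5)),
    ("dr_a", "p1", date(2010, 1, 20)),  # same provider-patient-month as above
    ("dr_b", "p1", date(2010, 1, 22)),  # different provider, same patient and month
    ("dr_a", "p1", date(2010, 3, 2)),   # same pair, later month
    ("dr_a", "p2", date(2010, 3, 2)),
]


def count_display_events(log):
    """First approach: one display event per distinct provider-patient-month."""
    return len({(prov, pat, d.year, d.month) for prov, pat, d in log})


def count_reminded_patients(log):
    """Second approach: one event per patient shown the reminder in the period."""
    return len({pat for _, pat, _ in log})


display_events = count_display_events(display_log)
reminded_patients = count_reminded_patients(display_log)
print(display_events, reminded_patients)
```

Under these assumptions the same log yields a larger count under the first approach than under the second, which is why the two views feed different measures (displays for RP, patients for NNR).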

Figure 1.

Timeline detailing the reminder lifecycle.

2.3 Proposed Measurement Framework

A measurement framework was developed to consider the lifecycle of an ambulatory EHR reminder (Figure 1). This lifecycle suggests the kind of events, actions and outcomes that can be used to define rates and measures to assess CDS effectiveness. Each stage of the lifecycle is associated with a particular measure: prevalence, logic, display, acknowledged, performance, or outcome. For each reminder, we defined the numerator and denominator for clinical performance. This refers to whether or not a patient who is part of the eligible population received the recommended action, independent of whether a reminder was displayed or not. Ultimately, the key to reminder effectiveness is the contribution the reminder makes to overall clinical performance, i.e. when the reminder is displayed, how often the recommended action is subsequently taken. This can be expressed in a measure we call “CDS Reminder Performance” (RP) (Table 3).

Table 3.

CDS Reminder Performance for all patients’ total reminders per month

| Rule Name | Reminders with Performance | Total Reminders | CDS Reminder Performance (%) |
|---|---|---|---|
| CAD and no Aspirin on medication list | 2,589 | 131,721 | 2.0% |
| Diabetes Mellitus and microalbumin/creatinine ratio >30 and no ACEI/ARB on medication list | 1,668 | 57,678 | 2.9% |
| Diabetes overdue for Microalbumin/creatinine ratio | 2,927 | 215,626 | 1.4% |
| Diabetes almost due for Microalbumin/creatinine ratio | 11,864 | 82,385 | 14.4% |
| Diabetic overdue for HbA1c | 16,549 | 355,361 | 4.7% |
| Diabetic almost due for HbA1c | 13,751 | 70,024 | 19.6% |
| Diabetic overdue for ophthalmology exam | 2,863 | 1,048,478 | 0.3% |
| Diabetic almost due for ophthalmology exam | 1,947 | 21,462 | 9.1% |
| Total | 54,158 | 1,982,735 | 2.7% |
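As a check on the arithmetic, the RP column can be recomputed from the per-rule counts in Table 3. This is a minimal sketch; the function and variable names are illustrative and not part of the dashboard implementation.

```python
# (reminders with performance, total reminders) per rule, copied from Table 3.
table3 = {
    "CAD and no Aspirin on medication list": (2589, 131721),
    "DM, microalbumin/creatinine ratio >30, no ACEI/ARB": (1668, 57678),
    "Diabetes overdue for Microalbumin/creatinine ratio": (2927, 215626),
    "Diabetes almost due for Microalbumin/creatinine ratio": (11864, 82385),
    "Diabetic overdue for HbA1c": (16549, 355361),
    "Diabetic almost due for HbA1c": (13751, 70024),
    "Diabetic overdue for ophthalmology exam": (2863, 1048478),
    "Diabetic almost due for ophthalmology exam": (1947, 21462),
}


def reminder_performance(with_performance, total_reminders):
    """RP as a percentage: performance events per 100 reminders displayed."""
    if total_reminders == 0:
        raise ValueError("no reminders displayed")
    return 100.0 * with_performance / total_reminders


rp_by_rule = {rule: reminder_performance(*counts) for rule, counts in table3.items()}

# Pooled RP: sum the numerators and denominators rather than averaging rule RPs.
total_with_perf = sum(n for n, _ in table3.values())
total_reminders = sum(d for _, d in table3.values())
total_rp = reminder_performance(total_with_perf, total_reminders)

for rule, rp in rp_by_rule.items():
    print(f"{rule}: {rp:.1f}%")
print(f"Total: {total_rp:.1f}%")
```

The pooled figure is computed from the summed counts, so rules with many displays (such as the overdue ophthalmology reminder) weigh more heavily than they would in a simple average of the per-rule percentages.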

2.4 Number Needed to Remind (NNR)

In 1988, Laupacis et al. proposed a measure of clinical benefit intended to capture the value of certain clinical interventions, the number needed to treat (NNT),18–20 calculated as the inverse of the absolute risk reduction. Similarly, the Number Needed to Harm (NNH) reflects the number of patients that have to be exposed in order to harm one patient who otherwise would not have been harmed. We applied the same approach in an analogous fashion to reminders and proposed a new measure of reminder effectiveness called the “Number Needed to Remind” (NNR). Consistent with the NNT, the ideal NNR is 1, reflecting that every reminded patient will benefit from the reminder being displayed to one or more of their care providers.

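The NNR corresponds to the number of patients who must be reached by the reminder for one recommended action to be taken, or:

$$\mathrm{NNR} = \frac{\text{patients with reminders displayed}}{\text{patients with a reminder followed by the performance event}}$$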

The denominator represents the number of patients to whom a reminder was followed by the appropriate performance event within 30 days of the end of the reporting period. The numerator corresponds to the cumulative count of patients with reminders displayed in each measurement period.
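The ratio described here can be written as a small helper. This is a sketch under the definition given in the text; the function name and the choice to return infinity when no reminded patient has a performance event are assumptions made for illustration. The example counts are the per-rule figures reported in Table 4.

```python
def number_needed_to_remind(patients_reminded, patients_with_performance):
    """NNR: patients with reminders displayed per patient whose reminder
    was followed by the performance event."""
    if patients_with_performance == 0:
        # No reminded patient performed: the NNR is unbounded (assumed convention).
        return float("inf")
    return patients_reminded / patients_with_performance


# Counts taken from Table 4 (patients with reminders displayed, with performance).
nnr_hba1c_overdue = number_needed_to_remind(40745, 16549)
nnr_eye_overdue = number_needed_to_remind(57889, 2863)
print(round(nnr_hba1c_overdue, 1), round(nnr_eye_overdue, 1))
```

An NNR close to 1 means nearly every reminded patient went on to receive the recommended action; larger values mean more patients had to be reminded for each performance event.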

We used the performance data, including the NNR, to assess the effectiveness of each reminder and were able to gain some potential insights into how well they function in the clinical setting. The logic used to calculate the performance for each of the measures under study is explained in detail in Table 2.

3 Results

During the study period, 1,982,735 reminders were triggered for the rules described in Table 1 and were displayed to 12,327 different providers. Of the cohort of 116,027 patients included in the study, 65,516 were exposed to one or more of the selected reminders during the study period.

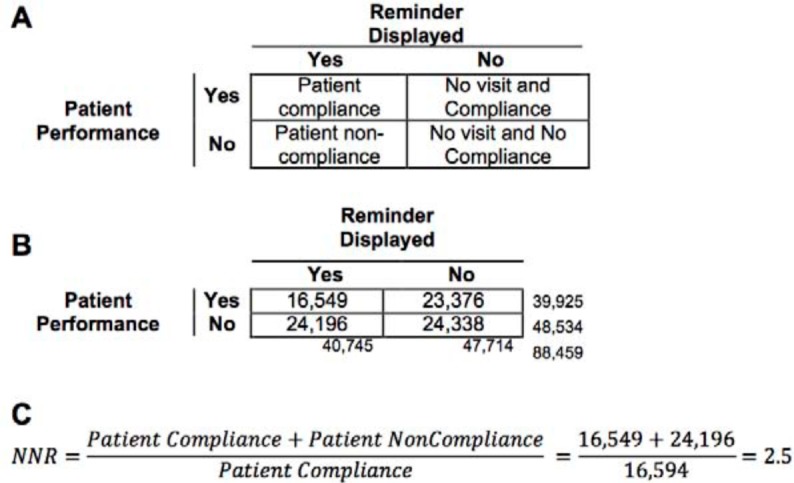

As a validation through example, we evaluated one reminder for diabetic patients who were overdue for their HbA1c after 6 months. During the study period the reminder was displayed 355,361 times (Table 3) to a total of 40,745 different patients (Table 4). Of those patients, 16,549 (40.6%) had a performance event, defined as an HbA1c present in the laboratory test results database (Table 2) during the 30 days following the reminder being displayed. The RP for this rule is the ratio of patients with performance to the total number of reminders displayed; for this example, the RP for the overdue HbA1c reminder equals 4.7 patients in performance per 100 reminders displayed. This indicator reflects the effectiveness of each reminder displayed on the expected performance. In contrast, when we calculate the inverse of the patient performance we obtain an NNR of 2.5, reflecting the number of patients who must receive the reminder for one patient to comply with the defined performance measure (Figure 2).
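Written out with the counts reported for the overdue HbA1c reminder, the arithmetic behind these three figures is as follows (the label "patient performance" is used here only as shorthand for the share of reminded patients with a performance event):

```latex
\begin{aligned}
\mathrm{RP} &= \frac{16{,}549}{355{,}361} \approx 0.047 = 4.7\% \\[4pt]
\text{patient performance} &= \frac{16{,}549}{40{,}745} \approx 0.406 = 40.6\% \\[4pt]
\mathrm{NNR} &= \frac{1}{\text{patient performance}} = \frac{40{,}745}{16{,}549} \approx 2.5
\end{aligned}
```

The RP divides by displays while the NNR divides by patients, so the same 16,549 performance events yield a low RP and a comparatively favorable NNR when each patient is shown the reminder many times.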

Table 4.

Reminders with Performance for displayed patient reminders per month: NNR (the Number of patients needed to remind).

| Rule | Reminders with Performance | Patients with Reminders Displayed | Number Needed to Remind (NNR) |
|---|---|---|---|
| CAD and no Aspirin on medication list | 2,589 | 7,051 | 2.7 |
| Diabetes Mellitus and microalbumin/creatinine ratio >30 and no ACEI/ARB on medication list | 1,668 | 2,949 | 1.8 |
| Diabetes overdue for Microalbumin/creatinine ratio | 2,927 | 21,466 | 7.3 |
| Diabetes almost due for Microalbumin/creatinine ratio | 11,864 | 15,227 | 1.3 |
| Diabetic overdue for HbA1c | 16,549 | 40,745 | 2.5 |
| Diabetic almost due for HbA1c | 13,751 | 18,403 | 1.3 |
| Diabetic overdue for ophthalmology exam | 2,863 | 57,889 | 20.2 |
| Diabetic almost due for ophthalmology exam | 1,947 | 5,020 | 2.6 |
| Total | 54,158 | 168,750 | 3.1 |

Figure 2.

CDS performance evaluation framework for passive reminders for HbA1c to be completed every six months to the entire population of diabetic patients found in the Partners Healthcare System. (A) Distribution of all the active diabetic patients depending if they have received a reminder and whether they are in compliance with the performance measure. (B) HbA1c compliance among diabetic patients in Partners population. (C) Computation of the Number Needed to Remind based on CDS performance framework.

Table 3 shows the number of reminded patients for whom the relevant performance action was found and the total number of reminders displayed. The RP is the percentage of patients with reminder and performance over the total number of reminders displayed. Table 4 shows, for each rule, the patients with reminder and performance, the patients with reminders displayed, and the resulting NNR.

Evaluating CDS reminder performance by differentiating four scenarios in decision support performance, as explained in Figure 2, distinguishes patients in compliance with the performance measure from noncompliant patients, while accounting for those patients affected by the reminder. Of all eligible patients (the prevalence of the evaluated condition), only a fraction has a healthcare provider visit during a specified time frame. A patient who has no recent performance event (e.g., is overdue for HbA1c) and no visit therefore has no reminder displayed, which produces the group of patients without reminders and without performance.

The opposite scenario occurs when a patient is in compliance although no reminder was triggered. These patients could be in performance most commonly because of a proactive care team.
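The four scenarios can be sketched as a two-by-two classification of patients by whether a reminder was displayed and whether the performance event was found. The patient records below are invented purely for illustration, and the cell labels and function name are assumptions rather than terms used in the dashboards.

```python
from collections import Counter


def classify(reminded, performed):
    """Assign a patient to one of the four reminder/performance scenarios."""
    if reminded and performed:
        return "reminder, performance"
    if reminded:
        return "reminder, no performance"
    if performed:
        return "no reminder, performance"
    return "no reminder, no performance"


# Hypothetical patients as (reminder displayed, performance event found).
patients = [
    (True, True),
    (True, False),
    (True, False),
    (False, True),   # e.g. in compliance thanks to a proactive care team
    (False, False),  # e.g. overdue but no visit, so no reminder was shown
]

cells = Counter(classify(r, p) for r, p in patients)

# Only the two "reminder" cells enter the NNR.
reminded_total = cells["reminder, performance"] + cells["reminder, no performance"]
nnr = reminded_total / cells["reminder, performance"]
print(dict(cells), nnr)
```

Keeping the two unreminded cells separate makes it visible how much of overall clinical performance occurs without any reminder, which the NNR alone does not show.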

4 Discussion

Using routinely collected data in our EHR, we were able to examine the effectiveness of ambulatory care reminders for diabetes mellitus and coronary artery disease. Specifically, we measured the number of eligible patients that were receiving recommended care (clinical performance) and the effectiveness of the reminders displayed toward the healthcare providers (reminder performance). By identifying the patients with reminders displayed and those that received a suggested action, we calculated the Number Needed to Remind, i.e. the number of patients required to be reached by CDS reminders that are associated with one additional patient receiving recommended care. This measure, the NNR, may be useful for monitoring and differentiating the relative effectiveness of CDS rules.

The NNR measure clarifies the difference between the effectiveness of the reminder (RP, Reminder Performance) as an isolated entity from the Reminder effect on the patient level, and furthermore highlights the subtle differences in the reminder logic or documentation requirements that are associated with dissimilar performance. For example, looking at the almost due and overdue reminders for eye exam and HbA1c (Table 4), the “Overdue” reminder had a notably higher NNR than the “Almost Due” reminder. We speculate that this may be attributable to a number of factors that create a bias in the patient population that receives each reminder.

This unintended patient selection bias could be explained by the fact that to trigger an “Almost Due” reminder, a prior performance event needs to be present in the database and, more importantly, needs to be properly documented in a previous episode of care including a computable time stamp. Further, the “Almost Due” temporal logic allows for a higher degree of tolerance meaning that the reminder may fire in advance of a strict due date. In this case, the reminder will work better in those sites where providers document at the point of care and use the features in the EHR appropriately.

Advanced CDS may fail to achieve ideal care if it relies on incomplete or unstructured information that is difficult to acquire and maintain for the appropriate display of reminders and other forms of decision support. Here we can differentiate two scenarios. In the first, a lack of structured information prevents the reminder from being displayed, or causes it to be displayed in the wrong clinical scenario. The second occurs when the performance event is not documented appropriately and the reminder does not stop triggering. The ophthalmologic exam for diabetic patients (Table 1) is usually not documented as discrete data in the LMR; it is often free text in patient notes. Consequently, even though the performance action has been taken, the reminder keeps triggering and the corresponding performance cannot be reliably measured.

Evaluating the performance of CDS knowledge artifacts and comparing them across EHR interventions would enable a comparative effectiveness analysis of reminders implemented in different EHRs, locations, or implementations. Evaluating the same rule to assess whether one implementation had a better NNR than another, or assessing the variation in the NNR across EHR systems using the same reminders, would be revealing and could allow discovery of the appropriate design patterns that EHR and CDS interventions should follow.

In a recent study, a framework to evaluate the appropriateness of clinical decision support was proposed.21 In contrast, our study examines the analytical component of the quality of care within the institution. Our approach seeks to examine the effectiveness of the knowledge artifacts as isolated entities as well as from the patient perspective, and to provide tools that help elucidate the reasons behind the poor performance that clinical decision support interventions may have. We believe that both approaches are complementary, since the first event to be scrutinized in the clinical performance of decision support is whether the reminder is being displayed appropriately. We broadened the scope in this study and determined that improvement efforts should be driven by clinical performance.

Evaluation of clinical decision support systems is becoming increasingly important in those institutions that have chosen to use these systems to improve quality and safety. The development of accurate performance indicators will allow comparison among different institutions, playing a key role in standardizing care in the near future. By establishing common ground and comparing the effects of different reminder rules, we will begin to discover features and functionalities that can solve the issues we confront on a daily basis.

5 Conclusion

Healthcare information technology is changing the way that we treat our patients, and CDS can play an important role in improving the quality of care that we deliver. We identified different measures that might be helpful to assess the performance of CDS, and described their potential interpretation in context. More research is required to develop a comprehensive CDS assessment framework and to further evaluate these measurements. New indicators that accurately reflect the clinical performance of a CDS reminder are required in order to concentrate improvement strategies on specific aspects throughout the knowledge artifact lifecycle. This study reinforces the importance of clinical performance evaluation as a key function in the CDS lifecycle that could increase effectiveness and ultimately improve care.

Acknowledgments

This publication is derived from work supported under a contract with the Agency for Healthcare Research and Quality (AHRQ) Contract # HHSA290200810010.

Footnotes

Disclosures

The findings and conclusions in this document are those of the author(s), who are responsible for its content, and do not necessarily represent the views of AHRQ. No statement in this report should be construed as an official position of AHRQ or of the U.S. Department of Health and Human Services.

References

- 1. Blumenthal D, Glaser JP. Information technology comes to medicine. N Engl J Med. 2007;356(24):2527–2534. doi: 10.1056/NEJMhpr066212.

- 2. Bates DW, Gawande AA. Improving safety with information technology. N Engl J Med. 2003;348(25):2526–2534. doi: 10.1056/NEJMsa020847.

- 3. Osheroff JA, Teich JM, Middleton B, Steen EB, Wright A, Detmer DE. A roadmap for national action on clinical decision support. J Am Med Inform Assoc. 2007;14(2):141–145. doi: 10.1197/jamia.M2334.

- 4. Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144(10):742–752. doi: 10.7326/0003-4819-144-10-200605160-00125.

- 5. Berner E. AHRQ White Paper: Clinical Decision Support Systems: State of the Art. 2009. [Accessed November 7, 2011]. Available at: http://healthit.ahrq.gov/images/jun09cdsreview/09_0069_ef.html.

- 6. Shojania KG, Jennings A, Mayhew A, Ramsay CR, Eccles MP, Grimshaw J. The effects of on-screen, point of care computer reminders on processes and outcomes of care. Cochrane Database Syst Rev. 2009;(3):CD001096. doi: 10.1002/14651858.CD001096.pub2.

- 7. Bright TJ, Wong A, Dhurjati R, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012;157(1):29–43. doi: 10.7326/0003-4819-157-1-201207030-00450.

- 8. Heselmans A, Van de Velde S, Donceel P, Aertgeerts B, Ramaekers D. Effectiveness of electronic guideline-based implementation systems in ambulatory care settings - a systematic review. Implement Sci. 2009;4:82. doi: 10.1186/1748-5908-4-82.

- 9. Patterson ES, Nguyen AD, Halloran JP, Asch SM. Human factors barriers to the effective use of ten HIV clinical reminders. J Am Med Inform Assoc. 2004;11(1):50–59. doi: 10.1197/jamia.M1364.

- 10. Dexheimer JW, Talbot TR, Sanders DL, Rosenbloom ST, Aronsky D. Prompting clinicians about preventive care measures: a systematic review of randomized controlled trials. J Am Med Inform Assoc. 2008;15(3):311–320. doi: 10.1197/jamia.M2555.

- 11. Sittig DF, Wright A, Osheroff JA, et al. Grand challenges in clinical decision support. J Biomed Inform. 2008;41(2):387–392. doi: 10.1016/j.jbi.2007.09.003.

- 12. Cash JJ. Alert fatigue. Am J Health Syst Pharm. 2009;66(23):2098–2101. doi: 10.2146/ajhp090181.

- 13. Schedlbauer A, Prasad V, Mulvaney C, et al. What evidence supports the use of computerized alerts and prompts to improve clinicians’ prescribing behavior? J Am Med Inform Assoc. 2009;16(4):531–538. doi: 10.1197/jamia.M2910.

- 14. Middleton B. The clinical decision support consortium. Stud Health Technol Inform. 2009;150:26–30.

- 15. Clinical Decision Support (CDS) Consortium. Available at: http://www.partners.org/cird/cdsc/

- 16. Dixon BE, Simonaitis L, Goldberg HS, et al. A pilot study of distributed knowledge management and clinical decision support in the cloud. Artif Intell Med. 2013. doi: 10.1016/j.artmed.2013.03.004.

- 17. Jung E, Li Q, Mangalampalli A, et al. Report Central: quality reporting tool in an electronic health record. AMIA Annu Symp Proc. 2006:971.

- 18. Laupacis A, Sackett DL, Roberts RS. An assessment of clinically useful measures of the consequences of treatment. N Engl J Med. 1988;318(26):1728–1733. doi: 10.1056/NEJM198806303182605.

- 19. Cook RJ, Sackett DL. The number needed to treat: a clinically useful measure of treatment effect. BMJ. 1995;310(6977):452–454. doi: 10.1136/bmj.310.6977.452.

- 20. McQuay HJ, Moore RA. Using numerical results from systematic reviews in clinical practice. Ann Intern Med. 1997;126(9):712–720. doi: 10.7326/0003-4819-126-9-199705010-00007.

- 21. McCoy AB, Waitman LR, Lewis JB, et al. A framework for evaluating the appropriateness of clinical decision support alerts and responses. J Am Med Inform Assoc. 2011. doi: 10.1136/amiajnl-2011-000185.