Abstract

Ontologies underpin methods throughout biomedicine and biomedical informatics. However, as ontologies increase in size and complexity, so does the likelihood that they contain errors. Effective methods that identify errors are typically manual and expert-driven; however, automated methods are essential given the size of modern biomedical ontologies. The effect of ontology errors on their application is unclear, creating a challenge in differentiating salient, relevant errors from those that have no discernible effect. As a first step in understanding the challenge of identifying salient, common errors at a large scale, we asked 5 experts to verify a random subset of complex relations in the SNOMED CT CORE Problem List Subset. The experts found 39 errors that followed several common patterns. Initially, the experts disagreed about errors almost entirely, indicating that ontology verification is very difficult and requires many eyes on the task. It is clear that additional empirically based, application-focused ontology verification method development is necessary. Toward that end, we developed a taxonomy that can serve as a checklist to consult during ontology quality assurance.

Ontologies and Errors

Ontologies enable researchers to describe and reason about data and knowledge in a computable fashion using a common vocabulary. Because of these properties, ontologies are key to many biomedical tasks ranging from decision support and data integration to search and billing.1 As a sign of the widespread use of ontologies, the National Center for Biomedical Ontology’s BioPortal contains over 350 ontologies and terminologies describing almost any biomedical subdomain.2 The U.S. government has required the use of terminologies such as SNOMED CT and LOINC in Electronic Health Records (EHR) as part of meaningful use.3 Ontologies also play an essential role in biomedical research, helping to combat the data deluge. Researchers use ontologies to perform pharmacovigilance using unstructured clinical text, to analyze gene modules, and to integrate biosimulation models.4–6 In the life sciences, the Gene Ontology is central to many methods (e.g., micro-array enrichment analysis), and has over 10,000 citations.7 These examples all demonstrate the importance and diverse uses of biomedical ontologies.

Unfortunately, the same biomedical ontologies we rely on contain errors. Researchers have published reports about critical domain-specific errors in two widely used biomedical ontologies, the National Cancer Institute Thesaurus (NCIt)8 and SNOMED CT9–13. For example, Rector and colleagues manually explored SNOMED CT and identified errors such as “The foot is-a-part of the pelvis”.10 As ontologies increase in size, scale, and number, these domain-specific errors become even more difficult to identify or prevent. Current methods used to identify these errors are either human based or computationally based. In human-based methods, domain experts manually browse an ontology searching for errors. This approach is costly and cannot scale to the size of recent biomedical ontologies. For instance, it is likely impossible for a human to check the nearly 400,000 stated concepts in SNOMED CT, let alone the millions of logical entailments. Computational methods rely on inconsistencies within ontology syntax, structure, or semantics to find errors.14–17 These methods scale more easily but do not accurately detect domain-specific errors. Considering the limitations of current methods, additional research is still needed to achieve scalable and accurate ontology verification.

Adding to these challenges of ontology verification, the real-world impact and true extent of errors are unknown. For example, it is unclear what costs are associated with an EHR that incorrectly classifies a patient due to errors in SNOMED CT. By understanding error patterns in ontologies, one can begin to identify the real-world cost of a certain pattern and prevent it. Using these patterns as a reference, engineers can develop best practices that prevent the creation of errors in the first place. In addition, these patterns can direct additional research efforts focused on the automated, scalable methods that we now require. In this work, we perform an empirical error analysis on a subset of the SNOMED CT hierarchy.

Specifically, we make the following contributions:

A logic-based heuristic to identify subsets of an ontology likely to contain errors.

An expert-curated and validated set of relations in a subset of SNOMED CT.

A taxonomy describing the common types of errors. This taxonomy can serve as a checklist during verification.

Methods

To characterize the common error patterns in SNOMED CT, we first selected a subset of SNOMED CT to verify using a logic-based heuristic and the CORE Problem List Subset, a listing of the terms used most frequently in multiple hospitals across the US, provided by the National Library of Medicine.18 Next, we asked 5 experts with both ontology and medical expertise to verify the subset of relations independently. With expert votes and comments collected, we then asked the experts to update their responses after reviewing the responses of the other experts in the study. The updated responses provided a final enumeration of errors from which we developed a taxonomy of common error patterns. We describe each step in detail below.

Logic-Based Heuristic Filtering

To obtain a reasonably sized set of relations for verification, we elected to filter the entire set of relations in SNOMED CT by frequency and complexity. In doing so, we made two key assumptions: (1) real-world term frequency is a proxy for importance, and (2) complex relations are more likely to contain errors. We began with the January 2013 version of the SNOMED CT ontology. We then extracted an ontology module from SNOMED CT using the CORE Problem List Subset as the signature.10,18,19 The CORE Problem List Subset serves as the proxy for “importance”. We used Snorocket, an OWL reasoner designed for SNOMED CT, to compute the concept hierarchy of this module.20 To further narrow the set to relations likely to have errors, we selected complex SubClass (is-a) entailments from the classified module. A complex entailment has the following characteristics:

non-asserted – not explicitly stated during ontology design but a logical conclusion of stated axioms (e.g., the relations “Hypertension is-a Cardiovascular Disorder” and “Cardiovascular Disorder is-a Disorder” entail the non-asserted axiom “Hypertension is-a Disorder”)

non-trivial – the justification for that entailment contained at least 2 axioms (e.g., the non-asserted axiom “Hypertension is-a Disorder” from the above example is also non-trivial because it requires the interplay of 2 axioms)

direct – there exists no concept in the inferred SubClass hierarchy between the two concepts in the relation; this removes subsumption entailments that are non-trivial but still rather basic (e.g., “Hypertension is-a Disorder” is indirect because “Cardiovascular Disorder” lies between “Hypertension” and “Disorder” in the inferred hierarchy)

This process selects ~35,000 SubClass relations. We focus solely on SubClass relations in this work because they are the most common type of relation in biomedical ontologies.21 To further reduce the set of relations, we required that both parent and child be explicitly in the CORE Problem List Subset, not just contained in the module, leaving ~1000 relations. From this set, we randomly sampled 200 relations for verification.
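The filtering steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the implementation used in the study: the function `select_complex_relations`, its parameters, the concept names `X` and `Y`, and all justification sizes are hypothetical, and the inferred hierarchy and justification sizes are assumed to have been computed beforehand by a reasoner and an explanation tool.

```python
import random


def select_complex_relations(asserted, inferred, justification_size,
                             core_subset, sample_size, seed=0):
    """Pick a random sample of complex is-a relations (hypothetical helper).

    asserted / inferred: sets of (child, parent) pairs; `inferred` is assumed
    to hold every entailed is-a pair produced by a reasoner.
    justification_size: dict mapping a pair to the number of axioms in its
    justification (assumed to be precomputed).
    """
    # Index the inferred parents of each concept for the directness check.
    parents = {}
    for child, parent in inferred:
        parents.setdefault(child, set()).add(parent)

    def is_direct(child, parent):
        # Direct: no other concept sits between child and parent.
        return not any(
            parent in parents.get(mid, set())
            for mid in parents[child]
            if mid not in (child, parent)
        )

    complex_relations = [
        (child, parent)
        for child, parent in sorted(inferred)
        if (child, parent) not in asserted                    # non-asserted
        and justification_size.get((child, parent), 0) >= 2   # non-trivial
        and is_direct(child, parent)                          # direct
    ]
    # Keep relations whose child and parent are both in the frequency subset.
    in_core = [(c, p) for c, p in complex_relations
               if c in core_subset and p in core_subset]
    rng = random.Random(seed)
    return rng.sample(in_core, min(sample_size, len(in_core)))


# Toy data: X, Y, and every justification size below are made up.
asserted = {("Hypertension", "Cardiovascular Disorder"),
            ("Cardiovascular Disorder", "Disorder")}
inferred = asserted | {("Hypertension", "Disorder"), ("X", "Y")}
justification_size = {("Hypertension", "Disorder"): 2, ("X", "Y"): 3}
core_subset = {"Hypertension", "Disorder", "X", "Y"}

selected = select_complex_relations(asserted, inferred, justification_size,
                                    core_subset, sample_size=200)
print(selected)
```

In this toy input, “Hypertension is-a Disorder” is non-asserted and non-trivial but is dropped as indirect, so only the made-up relation between `X` and `Y` survives the filter.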

Expert Verification and Delphi

We asked 5 domain experts, known directly by the authors and having expertise in both medicine and ontology, to verify the filtered set of 200 relations. Specifically, we presented each expert with an online survey of randomly ordered relations reformulated as natural language questions (e.g., “Diabetes is a kind of Disorder of the Abdomen. True or False?”) (Fig. 1). In addition, we provided concept definitions when available from the UMLS.22 In previous work, we developed the optimal format in which to ask the verification question in natural language (e.g., “is a” vs. “is a kind of”) and to provide appropriate context through definitions.21,23,24 Experts marked each relation as “True” or “False” and also justified their decision. After each expert independently completed the survey, we performed one round of the Delphi method on relations for which the experts did not reach full agreement (i.e., they did not all give the same response). In Delphi, experts saw their responses and justifications along with those of other experts in an anonymized fashion.25 The experts then had an option to update their response in light of other expert comments and votes.

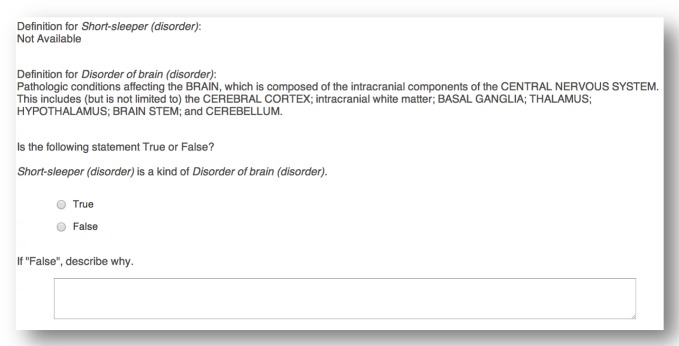

Figure 1.

Screenshot of the question form that experts completed to verify relations in SNOMED CT. All 200 questions were randomly ordered per expert.
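The question format and the rule for sending a relation to the Delphi round can be sketched as follows. This is an illustrative sketch only: the helper names `to_question` and `needs_delphi` are hypothetical, the survey software used in the study is not described here, and the vote lists are made up.

```python
def to_question(child, parent):
    """Phrase an is-a relation as a true/false question."""
    return f"{child} is a kind of {parent}. True or False?"


def needs_delphi(votes):
    """A relation enters the Delphi round unless all experts gave the same response."""
    return len(set(votes)) > 1


question = to_question("Diabetes", "Disorder of the Abdomen")
print(question)

# Made-up votes from 5 experts (True = relation judged correct).
print(needs_delphi([True, True, False, True, True]))   # experts disagree
print(needs_delphi([False, False, False, False, False]))  # unanimous
```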

Analysis and error classification

With the expert verification complete, we determined which relations were truly in error by supermajority voting after the Delphi round (i.e., at least 4 of the experts agreed). In addition, we calculated expert agreement using a free-marginal kappa statistic (κ).26 The free-marginal kappa does not assume a fixed number of true or false relations, making it appropriate for our application. Finally, the authors qualitatively reviewed the errors and identified common characteristics among them in an ad-hoc fashion. From these common characteristics, we developed a taxonomy of errors.
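The two computations in this step can be sketched as below. This is a minimal sketch, assuming the usual definition of the free-marginal multirater kappa, κ = (P_o − 1/k) / (1 − 1/k), where P_o is the mean proportion of agreeing rater pairs per relation and k is the number of response categories (2 here). The function names and the toy votes are hypothetical and are not the study data.

```python
def free_marginal_kappa(ratings, n_categories=2):
    """Free-marginal multirater kappa for items rated by the same number of raters."""
    n_raters = len(ratings[0])
    pairs = n_raters * (n_raters - 1)
    observed = 0.0
    for item in ratings:
        # Count how many raters chose each label for this item.
        counts = {}
        for label in item:
            counts[label] = counts.get(label, 0) + 1
        # Proportion of rater pairs that agree on this item.
        observed += sum(c * (c - 1) for c in counts.values()) / pairs
    observed /= len(ratings)
    chance = 1.0 / n_categories  # chance agreement with free marginals
    return (observed - chance) / (1.0 - chance)


def classify(votes, threshold=4):
    """Label a relation by supermajority; True means 'relation is correct'."""
    n_false = sum(1 for v in votes if not v)
    n_true = len(votes) - n_false
    if n_false >= threshold:
        return "error"
    if n_true >= threshold:
        return "correct"
    return "no consensus"


# Toy votes from 5 raters on 3 relations (made up for illustration).
toy = [
    [True, True, True, True, True],
    [False, False, False, False, True],
    [True, True, True, False, False],
]
kappa = free_marginal_kappa(toy)
labels = [classify(v) for v in toy]
print(round(kappa, 2), labels)
```

With 5 raters and a threshold of 4, a 3-to-2 split yields neither an error nor a correct relation, which corresponds to the relations on which experts reached no consensus.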

Results

The experts identified 9 errors in the 200 relations before the Delphi round by supermajority. After the Delphi round, the experts flagged 30 additional relations by supermajority. Table 2 presents all 39 errors. Most errors fall into the categories of anatomical confusion or issues related to cause and effect.

Table 2.

Summary and classification of 39 errors identified in 200 relations from the SNOMED CT CORE Problem List Subset by 5 domain experts. For presentation and readability, fully specified names are shortened and all lack semantic tags (e.g., “(disorder)”). Complete, unabridged list available at request.

| Child | Parent | Term Ambiguity | Naming Convention | Modeling | Cause and Effect | Disorder vs. Symptom | Disorder vs. Sequelae | Multiple Etiology | Anatomic Confusion |

|---|---|---|---|---|---|---|---|---|---|

| Anterior shin splints | Disorder of bone | ✓ | ✓ | ||||||

| Short-sleeper | Disorder of brain | ✓ | ✓ | ||||||

| Frontal headache (finding) | Pain in face (finding) | ✓ | ✓ | ||||||

| Local infection of wound | Wound | ✓ | ✓ | ✓ | |||||

| Anal and rectal polyp | Rectal polyp | ✓ | ✓ | ✓ | ✓ | ||||

| Malignant neoplasm of brain | Malignant tumor of head and/or neck | ✓ | ✓ | ||||||

| Diabetic autonomic neuropathy associated with type 1 diabetes mellitus | Diabetic peripheral neuropathy | ✓ | ✓ | ||||||

| Placental abruption | Bleeding (finding) | ✓ | ✓ | ✓ | |||||

| Impairment level: blindness, one eye - low vision other eye | Disorder of eye proper | ✓ | ✓ | ✓ | |||||

| Gastroenteritis | Disorder of intestine | ✓ | ✓ | ||||||

| Microcephalus | Disorder of brain | ✓ | ✓ | ||||||

| Thrombotic thrombocytopenic purpura | Disorder of hematopoietic structure | ✓ | |||||||

| Fibromyositis | Myositis | ✓ | ✓ | ||||||

| Lumbar radiculopathy | Spinal cord disorder | ✓ | ✓ | ||||||

| Vascular dementia | Cerebral infarction | ✓ | ✓ | ✓ | |||||

| Chronic tophaceous gout | Tophus | ✓ | ✓ | ✓ | |||||

| Full thickness rotator cuff tear | Arthropathy | ✓ | ✓ | ||||||

| Disorder of joint of shoulder region | Arthropathy | ✓ | ✓ | ||||||

| Injury of ulnar nerve | Injury of brachial plexus | ✓ | ✓ | ||||||

| Basal cell carcinoma of ear | Basal cell carcinoma of face | ✓ | ✓ | ||||||

| Bronchiolitis | Bronchitis | ✓ | ✓ | ||||||

| Migraine variants | Disorder of brain | ✓ | ✓ | ||||||

| Gingivitis | Inflammatory disorder of jaw | ✓ | ✓ | ||||||

| Septic shock | Soft tissue infection | ✓ | ✓ | ✓ | |||||

| Cellulitis of external ear | Otitis externa | ✓ | ✓ | ||||||

| Inguinal pain (finding) | Pain in pelvis (finding) | ✓ | ✓ | ||||||

| Disorder of tendon of biceps | Disorder of tendon of shoulder region | ✓ | ✓ | ||||||

| Pain of breast (finding) | Chest pain (finding) | ✓ | ✓ | ||||||

| Injury of ulnar nerve | Ulnar neuropathy | ✓ | ✓ | ✓ | |||||

| Injury of back | Traumatic injury | ✓ | ✓ | ✓ | |||||

| Achalasia of esophagus | Disorder of stomach | ✓ | ✓ | ||||||

| Pneumonia due to respiratory syncytial virus | Interstitial lung disease | ✓ | ✓ | ||||||

| Sensory hearing loss | Labyrinthine disorder | ✓ | ✓ | ✓ | |||||

| Degeneration of intervertebral disc | Osteoarthritis | ✓ | ✓ | ||||||

| Disorder of sacrum | Disorder of bone | ✓ | ✓ | ✓ | |||||

| Peptic ulcer without hemorrhage, without perforation AND without obstruction | Gastric ulcer | ✓ | ✓ | ✓ | |||||

| Diabetic autonomic neuropathy | Peripheral nerve disease | ✓ | ✓ | ||||||

| Cyst and pseudocyst of pancreas | Cyst of pancreas | ✓ | ✓ | ||||||

| Calculus of kidney and ureter | Ureteric stone | ✓ | ✓ | ✓ | ✓ | ||||

After the experts identified the errors, we developed a taxonomy that specifies the patterns of errors they encountered. Table 1 lists this taxonomy with error descriptions and expert agreement on errors in that category. Table 2 indicates where a specific error falls in this taxonomy. Before Delphi, expert agreement on errors was low (κ=−0.5); agreement increased after Delphi by a marked margin (κ =0.69). For comparison, expert κ was 0.73 before Delphi and 0.9 after Delphi on 148 correct relations (by supermajority). Experts were unable to reach consensus on 13 relations.

Table 1.

Error taxonomy constructed by observing common characteristics among 39 errors in SNOMED CT. The categories are not disjoint, and thus errors may be members of multiple classes. The κ describes expert agreement before and after Delphi and ranges between complete disagreement (−1) and complete agreement (1). For reference, on the 148 correct relations, κ was 0.73 Pre-Delphi and 0.9 Post-Delphi. (Error type names shortened for presentation.)

| Error Type | Description | κ Pre-Delphi | κ Post-Delphi | Error Count |
|---|---|---|---|---|
| Term Ambiguity | Term representing a concept has an unclear meaning | −0.16 | 0.73 | 9 |
| Naming Convention | Convention causes interpretation difficulty (e.g., use of a conjunction within a term) | −0.07 | 1 | 3 |
| Modeling | Incorrect domain representation | −0.03 | 0.69 | 36 |
| Cause and Effect | Conflation of root disorder and its effects | 0.1 | 0.7 | 8 |
| Disorder vs. Symptom | Confusion of a disorder and its symptoms | 0.07 | 0.73 | 3 |
| Disorder vs. Sequelae | Confusion of a disorder and its potential consequences | 0.2 | 0.8 | 4 |
| Multiple Etiology | Ignoring alternate etiologies of disorder | 0.04 | 1 | 5 |
| Anatomic Confusion | Improper anatomic location (e.g., regions vs. structure) | −0.1 | 0.69 | 28 |

Discussion

We identified and classified 39 errors (19.5% of 200 relations) in SNOMED CT into a taxonomy of error patterns. At a high level, the errors were due to ambiguous concept terms, anatomic confusion (the most common), and mix-ups of cause and effect. Such errors include “Septic Shock is-a Soft Tissue infection” and “Frontal Headache is-a Pain in face”. The former error is due to cause (infection) and effect (sepsis) while the latter is due to anatomic confusion (face vs. head/skull). These results are surprising; in summary, they suggest that:

the extent of errors in SNOMED CT, particularly in complex relations, is quite large

errors are very subtle – in the first round of verification, the majority of experts missed 20% (8/39) of the errors

it is therefore essential to develop quality assurance with many eyes checking for errors

These results empirically support the findings of previous exploratory studies by Rector and colleagues, who identified similar errors in SNOMED CT.10 In contrast to the work by Rector et al., this study used a panel of experts to verify a pre-defined set of relations in multiple stages, allowing us to measure expert disagreement. Furthermore, it confirmed the subtlety and difficulty of ontology verification through an empirical, systematic experiment.

A checklist to focus ontology engineering and verification

This work provides three elements to help future verification and method development. First, it presents a taxonomy of errors. The taxonomy should serve as a checklist during ontology verification (i.e., during verification, an expert should consult the list to ensure that none of these types of errors occur). Recent literature describes the importance of checklists when working with complex domains and complex tasks.27 Please note that our taxonomy enumerates some of the kinds of errors that can occur, but not the causes, of which there are many. The taxonomy does not serve as a solution manual to ontology bugs, but instead helps us find them. Second, this paper presents an expert-curated and validated gold standard set of relations from the CORE Problem List Subset against which researchers can now compare methods. While the standard we developed cannot capture or represent the entirety of relations in biomedical ontologies, it serves as a “first stab” at a gold standard, of which there is currently none of any useful size. Finally, we developed a generalized logic-based heuristic to concentrate verification on error-prone parts of an ontology of interest (i.e., the heuristic selects relations that are likely to be in error).
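One way to make the checklist machine-readable is sketched below. This is only an illustration, not part of the study: the class and function names are hypothetical, and each example relation records only the category named for it in the Discussion, even though Table 2 may assign it additional categories. A flag-style enumeration is used because the taxonomy categories are not disjoint.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Flag, auto


class ErrorType(Flag):
    """The eight error types of the taxonomy; members can be combined with |."""
    TERM_AMBIGUITY = auto()
    NAMING_CONVENTION = auto()
    MODELING = auto()
    CAUSE_AND_EFFECT = auto()
    DISORDER_VS_SYMPTOM = auto()
    DISORDER_VS_SEQUELAE = auto()
    MULTIPLE_ETIOLOGY = auto()
    ANATOMIC_CONFUSION = auto()


@dataclass
class ReviewedRelation:
    child: str
    parent: str
    errors: ErrorType = ErrorType(0)  # empty flag: no error type recorded


def count_by_type(reviews):
    """Tally how many reviewed relations fall under each error type."""
    counts = Counter()
    for review in reviews:
        for error_type in ErrorType:
            if error_type in review.errors:
                counts[error_type.name] += 1
    return counts


reviews = [
    ReviewedRelation("Septic shock", "Soft tissue infection",
                     ErrorType.CAUSE_AND_EFFECT),
    ReviewedRelation("Frontal headache", "Pain in face",
                     ErrorType.ANATOMIC_CONFUSION),
    # Hypothetical relation showing that categories can overlap.
    ReviewedRelation("X", "Y",
                     ErrorType.MODELING | ErrorType.ANATOMIC_CONFUSION),
]
counts = count_by_type(reviews)
print(dict(counts))
```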

The difficulty of identifying ontology errors

Our two-step Delphi approach to verification, wherein 5 domain experts initially did not reach agreement, highlights the difficulty of identifying ontology errors. It appears that having more eyes on the problem is essential. However, we believe that the next challenge in identifying errors is finding those that are salient (i.e., that impact a system that uses an ontology). To do so, it is necessary to understand the ontology in the context of the system that uses it. In this experiment, part of the difficulty was reaching consensus not only about errors but also about context. In certain contexts, errors may have no significant impact or instead have a positive impact. For example, the incorrect relation “Local Infection of wound is-a Wound” may in fact be helpful in retrieval tasks but not with clinical decision support. The taxonomy we developed can guide researchers as they begin to focus on finding errors in the context of ontology applications in a scalable fashion. For example, one might find that cause and effect errors are particularly important to catch in the context of clinical decision support. With an understanding of context and common error patterns, researchers and developers can develop best practices to avoid and repair salient errors. Finally, these results highlight that ontologies are generally tied to particular contexts of use. We suggest that ontology developers become more explicit in how they represent and communicate the context and appropriateness of an ontology for a given application.

Conclusion

Reinforcing results in the literature, experts identified 39 errors in a set of 200 relations from SNOMED CT. We found this task was rather difficult for domain experts, underlining the need for multiple eyes when error-checking and the need for additional method development. We developed a taxonomy of common error patterns. This taxonomy can focus future development of scalable, automated ontology verification methods, giving researchers a pointer toward salient, common patterns of errors. More importantly, ontology engineers can view the taxonomy as a checklist to consult when performing ontology quality assurance. Such efforts are imperative, given the prevalence of biomedical ontologies and our reliance upon them.

Acknowledgments

The authors thank Alan L. Rector, Timothy E. Sweeney, Evan P. Minty, and Michael Januszyk for serving as domain experts. This work has been supported in part by Grant GM086587 from the National Institute of General Medical Sciences and by The National Center for Biomedical Ontology, supported by grant HG004028 from the National Human Genome Research Institute, the National Heart, Lung, and Blood Institute, and the National Institutes of Health Common Fund. JMM is supported by National Library of Medicine Informatics Training Grant LM007033.

References

- 1. Bodenreider O, Stevens R. Bio-ontologies: current trends and future directions. Brief Bioinform. 2006;7(3):256–74. doi: 10.1093/bib/bbl027.

- 2. Whetzel PL, Noy NF, Shah NH, Alexander PR, Nyulas CI, Tudorache T, et al. BioPortal: enhanced functionality via new web services from the National Center for Biomedical Ontology to access and use ontologies in software applications. Nucleic Acids Res. 2011;39(Web Server issue):W541–5. doi: 10.1093/nar/gkr469.

- 3. Blumenthal D, Tavenner M. The “meaningful use” regulation for electronic health records. N Engl J Med. 2010;363(6):501–4. doi: 10.1056/NEJMp1006114.

- 4. LePendu P, Iyer SV, Bauer-Mehren A, Harpaz R, Mortensen JM, Podchiyska T, et al. Pharmacovigilance using clinical notes. Clin Pharmacol Ther. 2013;93(6):547–55. doi: 10.1038/clpt.2013.47.

- 5. Segal E, Shapira M, Regev A, Pe’er D, Botstein D, Koller D, et al. Module networks: identifying regulatory modules and their condition-specific regulators from gene expression data. Nat Genet. 2003;34(2):166–76. doi: 10.1038/ng1165.

- 6. Hoehndorf R, Dumontier M, Gennari JH, Wimalaratne S, de Bono B, Cook DL, et al. Integrating systems biology models and biomedical ontologies. BMC Syst Biol. 2011;5(1):124. doi: 10.1186/1752-0509-5-124.

- 7. Ashburner M, Ball CA, Blake JA, Botstein D, Butler H, Cherry JM, et al. Gene Ontology: tool for the unification of biology. Nat Genet. 2000;25(1):25–9. doi: 10.1038/75556.

- 8. Golbeck J, Fragoso G, Hartel F, Hendler J, Oberthaler J, Parsia B. The National Cancer Institute’s thesaurus and ontology. J Web Semant. 2003;1(1):75–80.

- 9. Stearns MQ, Price C, Spackman KA, Wang AY. SNOMED clinical terms: overview of the development process and project status. Proc AMIA Symp. 2001:662.

- 10. Rector AL, Brandt S, Schneider T. Getting the foot out of the pelvis: modeling problems affecting use of SNOMED CT hierarchies in practical applications. J Am Med Inform Assoc. 2011;18(4):432–40. doi: 10.1136/amiajnl-2010-000045.

- 11. Rector A, Iannone L. Lexically suggest, logically define: Quality assurance of the use of qualifiers and expected results of post-coordination in SNOMED CT. J Biomed Inform. 2012;45:199–209. doi: 10.1016/j.jbi.2011.10.002.

- 12. Ceusters W, Smith B, Goldberg L. A terminological and ontological analysis of the NCI Thesaurus. Methods Inf Med. 2005;44(4):498.

- 13. Ceusters W. Applying evolutionary terminology auditing to SNOMED CT. AMIA Annu Symp Proc. 2010;2010:96–100.

- 14. Zhu X, Fan JW, Baorto DM, Weng C, Cimino JJ. A review of auditing methods applied to the content of controlled biomedical terminologies. J Biomed Inform. 2009;42:413–25. doi: 10.1016/j.jbi.2009.03.003.

- 15. Ochs C, Perl Y, Geller J, Halper M, Gu H, Chen Y, et al. Scalability of abstraction-network-based quality assurance to large SNOMED hierarchies. AMIA Annu Symp Proc. 2013:1–10.

- 16. Geller J, Ochs C, Perl Y, Xu J. New abstraction networks and a new visualization tool in support of auditing the SNOMED CT content. AMIA Annu Symp Proc. 2012:237–46.

- 17. Vrandečić D. Ontology evaluation. In: Handbook on Ontologies. Berlin, Heidelberg: Springer; 2009. pp. 293–313.

- 18. The CORE Problem List Subset of SNOMED CT® [Internet]. Available from: http://www.nlm.nih.gov/research/umls/Snomed/core_subset.html.

- 19. Doran P, Tamma V, Iannone L. Ontology module extraction for ontology reuse: an ontology engineering perspective. Proc 16th ACM Conf Inf Knowl Manag. 2007:61–70.

- 20. Lawley MJ, Bousquet C. Fast classification in Protégé: Snorocket as an OWL 2 EL reasoner. Proc 6th Australas Ontol Work (IAOA’10). Conf Res Pract Inf Technol; 2010. pp. 45–9.

- 21. Noy NF, Mortensen JM, Alexander PR, Musen MA. Mechanical Turk as an ontology engineer? Using microtasks as a component of an ontology engineering workflow. Web Sci. 2013.

- 22. Bodenreider O. The Unified Medical Language System (UMLS): integrating biomedical terminology. Nucleic Acids Res. 2004;32(suppl 1):D267–D270. doi: 10.1093/nar/gkh061.

- 23. Mortensen JM, Noy NF, Musen MA, Alexander PR. Crowdsourcing ontology verification. Int Conf Biomed Ontol. 2013.

- 24. Mortensen JM, Musen MA, Noy NF. Crowdsourcing the verification of relationships in biomedical ontologies. AMIA Annu Symp. 2013.

- 25. Linstone HA, Turoff M. The Delphi method: techniques and applications. Reading: Addison-Wesley; 1975.

- 26. Randolph JJ, Thanks A, Bednarik R, Myller N. Free-marginal multirater kappa (multirater κfree): an alternative to Fleiss’ fixed-marginal multirater kappa. Joensuu Learn Instr Symp. 2005.

- 27. Gawande A. The checklist manifesto: how to get things right. New York: Metropolitan Books; 2010.