Abstract

Data fragmentation within electronic health records causes gaps in the information readily available to clinicians. We investigated the information needs of emergency medicine clinicians in order to design an electronic dashboard to fill information gaps in the emergency department. An online survey was distributed to all emergency medicine physicians at a large, urban academic medical center. The survey response rate was 48% (52/109). The clinical information items reported to be most helpful while caring for patients in the emergency department were vital signs, electrocardiogram (ECG) reports, previous discharge summaries, and previous lab results. Brief structured interviews were also conducted with 18 clinicians during their shifts in the emergency department. From the interviews, three themes emerged: 1) difficulty accessing vital signs, 2) difficulty accessing point-of-care tests, and 3) difficulty comparing the current ECG with the previous ECG. An emergency medicine clinical dashboard was developed to address these difficulties.

Introduction

Fragmentation of patient information is a common problem in healthcare. Health information is seldom shared among competing healthcare delivery organizations, and even within a single organization, it is common for data to be isolated within various information ‘silos.’[1] Adoption of electronic health records (EHRs) may help address some aspects of information fragmentation, but EHR systems themselves are fragmented, making it difficult for clinicians to easily review data that “belong together.” For example, in our commercial EHR, laboratory results and medication orders are accessed through different modules that are not visible on the computer screen at the same time, even though reviewing these two types of data together makes clinical sense. This fragmented model for displaying patient information requires increased cognitive effort to obtain a holistic understanding of a patient. In turn, increased cognitive effort can lead to a higher rate of medical error.[2]

Related to the challenge of information fragmentation is a growing awareness of gaps in the information available to clinicians. Stiell and colleagues found that physicians reported information gaps for one-third of patients presenting to the emergency department.[3] Half of these gaps were judged to be very important or essential to patient care; gaps were more common in sicker patients and were independently associated with a prolonged length of stay in the emergency department. Historical information (e.g., previous visits, past medical history) was the most common type of information gap among the emergency physicians studied.

Even when historical information is available to emergency physicians, they do not always access it,[4] especially if it is difficult or time-consuming to find.[5] Effective cognitive support—providing information in an optimal format to clinicians when and where it is needed—should be a foundational principle for designing any clinical information system. The importance of cognitive support was highlighted in the 2009 report of the U.S. National Research Council, “Computational Technology for Effective Health Care: Immediate Steps and Strategic Directions.”[6] The report noted that current healthcare information systems force clinicians to “devote precious cognitive resources to the details of data,” explaining that “without an underlying representation of a conceptual model for the patient showing how data fit together and which are important,…understanding of the patient can be lost.”[6]

Dashboards have been defined as “a visual display of the most important information needed to achieve one or more objectives that has been consolidated on a single computer screen so it can be monitored at a glance.”[7] Dashboards have been employed in a wide range of medical settings, including emergency medicine, otolaryngology, nursing care, and maternity care.[8–11] While clinical dashboards are typically used to summarize the status of a cohort of patients (such as an emergency department tracking board), we believe there is a pressing need for dashboards to organize and efficiently display data for individual patients. We hypothesized that emergency medicine clinicians have specific information needs, that these needs are not adequately addressed by our existing electronic health record, and that a clinical dashboard could be created to fill the information gaps.

Methods

This IRB-approved study was performed at the Columbia University Medical Center emergency department, which has 126,000 annual visits and serves an urban, low-income population. A survey was used to elicit the general information needs of emergency medicine clinicians. Structured interviews were conducted to supplement the survey data and to identify situation-specific information gaps.

Survey

After a review of the survey methodology literature, a preliminary survey was developed. This online instrument adhered to the tenets of proper survey design, including limiting forced responses and allowing for free-text answer choices.[12] The survey questions were designed to clarify the clinical information items most important to emergency medicine clinicians. Questions were answered via a slider that could be moved continuously from 0 to a maximum score of 100; the default location of the sliders was set to 50.
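The Results tables report an average score for each survey item. The sketch below shows one way that aggregation could work. It assumes each reported score is the arithmetic mean of respondents' slider positions, and that an untouched slider is recorded at its default of 50. The paper does not say how untouched sliders were handled. The response format and the function name `average_scores` are hypothetical.

```python
from statistics import mean

# Slider bounds and default position described in the Methods.
SLIDER_MIN, SLIDER_MAX, SLIDER_DEFAULT = 0, 100, 50


def average_scores(responses: list[dict[str, float]]) -> list[tuple[str, float]]:
    """Rank survey items by mean slider score, most helpful first.

    Each response maps item name -> slider position. An item missing from a
    response is assumed (hypothetically) to have been left at the default, 50.
    """
    items = {item for response in responses for item in response}
    ranked = []
    for item in items:
        scores = [response.get(item, SLIDER_DEFAULT) for response in responses]
        if any(not SLIDER_MIN <= s <= SLIDER_MAX for s in scores):
            raise ValueError(f"slider score out of range for {item!r}")
        ranked.append((item, round(mean(scores), 2)))
    # Highest mean first; ties broken alphabetically so the order is stable.
    ranked.sort(key=lambda pair: (-pair[1], pair[0]))
    return ranked
```

Counting untouched sliders as 50 pulls every item toward the midpoint. If each slider also recorded whether it had been moved, those responses could be excluded instead.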

The preliminary survey was iteratively refined based on feedback from an advisory committee composed of emergency medicine physicians and experts in qualitative research methods. The result of this process was a six-question survey instrument that was emailed to all emergency department attending physicians, fellows, and resident physicians using the Qualtrics Survey software (Qualtrics LLC, Provo, UT). A subsequent reminder email was sent approximately two weeks after the initial survey email.

The survey asked clinicians to consider their information needs while caring for a typical patient in the emergency department. The survey also inquired about which patient data items should be included in a clinical dashboard. To orient the survey respondents to the definition of a “dashboard” and the layout of a potential emergency medicine dashboard at our institution, a sample image of an ambulatory medicine dashboard was included in the survey. To avoid biasing respondents, the sample dashboard included information such as preventive care recommendations that were not especially relevant to the emergency environment.

Interviews

Structured interviews were conducted with emergency department faculty, residents, and midlevel practitioners while they were working in the emergency department. The interviewer (JS) followed an interview script that focused the conversation on information gaps that were present in the emergency department, specifically with respect to the hospital’s information systems. Clinicians were asked about their “pain points” during an average shift and how the EHR could be modified to reduce frustration and improve efficiency. The interviews were coded using the grounded theory method, an inductive approach in which interview and observation data are coded and then organized into themes.[13] Data collection and analysis were performed concurrently; the interviews were conducted until theme saturation was achieved.

Dashboard Development

Based on the results of the survey and interviews, a dashboard display was designed and created. Our institution uses a commercial EHR product, Allscripts Sunrise (Allscripts Corp., Chicago, IL), in the emergency and acute care settings. A locally developed system called iNYP integrates with the EHR and provides advanced data review capabilities. iNYP is a Java-based service-oriented web application that builds on Columbia University’s 25-year history of clinical information system innovation.[14–16] iNYP is available as a custom tab within the commercial EHR (supplementing the native results review capabilities) and can also be accessed from a web browser or a mobile device. At the time of the study, approximately 8,000 clinicians used iNYP alongside the commercial EHR each month. During the study, a new architecture based on HTML5 and JavaScript was added to iNYP to enable the creation of clinical dashboards. The dashboard architecture facilitated the display of data originating from disparate EHRs and from different locations within the same EHR. A comprehensive evaluation of the clinical use of the dashboard was outside the scope of the current study.
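iNYP itself is a Java service layer with an HTML5/JavaScript front end, and its internal interfaces are not described here. The Python sketch below only illustrates the aggregation idea in the paragraph above. It pulls normalized observations from several hypothetical sources (for example, different EHR modules) and groups them into tiles by category. `Observation`, `Source`, and `build_dashboard` are invented names, not iNYP APIs.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable


@dataclass(frozen=True)
class Observation:
    category: str       # tile it belongs to, e.g. "vital", "ecg", "note"
    name: str           # e.g. "heart rate"
    value: str          # display value, already formatted by the source
    observed_at: datetime
    source: str         # originating system or EHR module


# A source is any function that returns normalized observations for a patient.
Source = Callable[[str], list[Observation]]


def build_dashboard(patient_id: str, sources: list[Source]) -> dict[str, list[Observation]]:
    """Merge observations from every source into per-category tiles."""
    tiles: dict[str, list[Observation]] = {}
    for fetch in sources:
        for obs in fetch(patient_id):
            tiles.setdefault(obs.category, []).append(obs)
    # Within each tile, show the most recent data first.
    for items in tiles.values():
        items.sort(key=lambda o: o.observed_at, reverse=True)
    return tiles
```

Normalizing data where each source enters the system keeps every tile independent of where its data came from. This is one way to show data from disparate EHRs side by side.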

Results

Survey

Of 109 emergency department attending physicians, fellows, and resident physicians, 52 (48%) completed the online survey. The majority of respondents (62%) were attending physicians.

The clinical information items reported to be most helpful while caring for patients in the emergency department were vital signs, electrocardiogram (ECG) reports, previous discharge summaries, and previous laboratory test results, as shown in Table 1.

Table 1. Helpfulness while seeing the average patient in the ED

| Survey Item | Average Score (0–100) |
|---|---|
| Vital signs | 94.35 |
| Previous ECG | 92.04 |
| Prior discharge summary | 89.59 |
| Prior lab results | 84.73 |
| Prior ED note | 84.16 |
| Something else* | 74.06 |
| Triage note | 69.75 |
| Guidelines | 60.85 |
| Immunizations | 26.93 |

*Something else (free-text responses):

- Medication list: 4 respondents
- Imaging results: 3 respondents
- PMD phone #: 3 respondents
- Ambulance note: 2 respondents
- Allergies: 2 respondents
- ED visits: 2 respondents
- Clinic notes: 1 respondent

A patient’s medication list was the most frequently requested “Something else” free-text item. Survey respondents rated triage notes, evidence-based guidelines, and immunization histories as less important.

After respondents were shown an image of a sample ambulatory medicine dashboard, they were asked to identify the clinical information items that would be most helpful in an emergency medicine dashboard. Among historical clinical information items (Table 2), the results suggested that the previous ECG, past medical history, most recent inpatient discharge summary, and prior lab results would be most helpful.

Table 2. Helpfulness of historical data in the dashboard

| Survey Item | Average Score (0–100) |
|---|---|
| Something else* | 92.2 |
| Previous ECG | 91.8 |
| Past medical history | 89.8 |
| Prior discharge summary | 87 |
| Prior lab results | 85 |
| Prior ED note | 79.3 |
| Prior imaging | 78.6 |
| Immunizations | 32 |

*Something else (free-text responses):

- Medication list: 3 respondents
- Vital signs: 2 respondents
- Lab results: 1 respondent
- Microbiology results: 1 respondent

For active clinical information items (i.e., from the current visit), respondents reported that vital signs, lab results, and imaging results would be most helpful in the dashboard (Table 3). In contrast, items such as the private medical doctor’s (PMD’s) contact information, the triage note, and reference material were rated as less helpful. In the “Something else” free-text component of the survey, one respondent requested that lab results not be shown in the dashboard because they could already be found elsewhere.

Table 3. Helpfulness of active data in the dashboard

| Survey Item | Average Score (0–100) |
|---|---|
| Vital signs | 94.35 |
| Lab results | 92.04 |
| Current imaging results | 89.59 |
| Something else* | 84.73 |
| PMD phone # | 84.16 |
| Triage note | 74.06 |
| Reference material | 69.75 |

*Something else (free-text responses):

- Don’t show labs: 1 respondent
- Meds given in ED: 1 respondent
- Triage level: 1 respondent
- Pending results: 1 respondent
- Medication list: 1 respondent
- Ambulance note: 1 respondent

The final question asked respondents to name, in free text, the single feature they would most want added to the existing EHR. Most answers echoed items from other parts of the survey (e.g., medication list, previous results). However, there were two novel answers: 1) a patient photograph and 2) a means for outside physicians who refer patients to the emergency department to communicate referral information directly into the EHR.

Interviews

Of the 18 interviews conducted, 8 were with attending physicians, 7 were with resident physicians, and 3 were with midlevel providers. The interviews lasted approximately 10–20 minutes. There were three key themes that emerged: 1) difficulty accessing current vital signs, 2) difficulty accessing current point-of-care tests, and 3) difficulty comparing the current ECG with the previous ECG.

The interviewees noted that although vital signs were very important to emergency medicine clinicians, their display in the EHR was tedious to access and in a format that was time-consuming to comprehend. Clinicians expressed a similar view about the results of point-of-care tests, such as urine pregnancy tests. Because nurses enter these results by hand, they are found in a section separate from other laboratory test results. Clinicians complained that these results were “buried at the bottom of a long flowsheet,” which made them cumbersome and time-consuming to access.

Although all ECGs were accessible in the EHR, it was not possible to display two ECGs on the screen at the same time. Instead, clinicians had to print one ECG and compare it with the other on the screen or, more often, print both ECGs and compare them side by side.

Dashboard Development

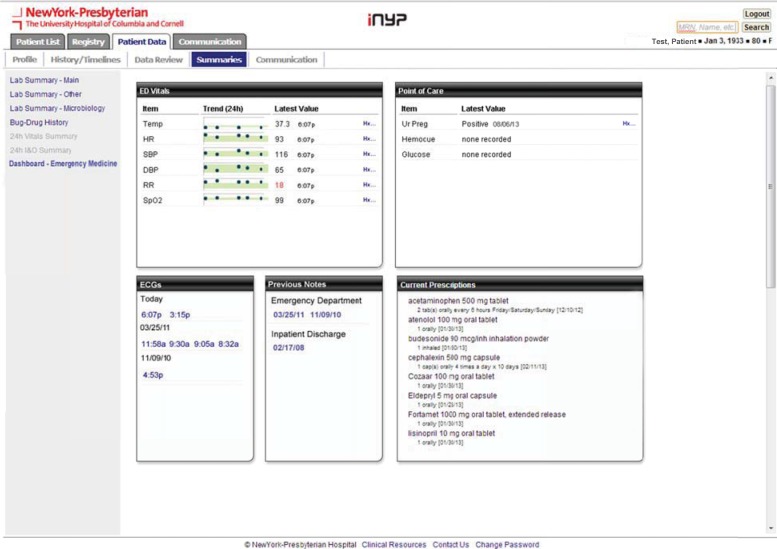

Based on the knowledge obtained from the survey and interviews, we designed a dashboard to address the information gaps of the emergency medicine clinicians. The dashboard provided summary “tiles” of five of the most-requested clinical information items: vital signs, point-of-care tests, ECGs, previous notes/discharge summaries, and medications. In designing the tiles, we considered the best practices of information visualization, such as adhering to location-based emphasis (i.e., placing the most important information in the upper left) and minimizing the amount of non-data pixel use.[17]

The dashboard display is shown in Figure 1. The “ED Vitals” tile presents the patient’s vital signs in graphical and tabular formats. The sparkline graphic provides a quick way to identify trends during a patient’s stay in the emergency department. The shaded band indicates the normal range; data points that are abnormal (and therefore fall outside the band) are readily apparent. Alternatively, a more precise display of the same data can be found by reviewing the “Latest Value” column and clicking the history (“Hx”) link, which pops up a display of the previous vital sign measurements. Abnormal values are marked in red font.

Figure 1. Screenshot of the emergency medicine dashboard
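The sketch below shows the flagging logic behind the ED Vitals tile described above. It is a minimal illustration, not the iNYP implementation. The normal ranges are placeholder adult values chosen only for this example; a real system would use institutional, age-appropriate reference ranges. `Reading` and `summarize_vital` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime

# Placeholder adult ranges for illustration only, not clinical guidance.
NORMAL_RANGES = {
    "heart_rate": (60.0, 100.0),
    "systolic_bp": (90.0, 140.0),
    "resp_rate": (12.0, 20.0),
}


@dataclass
class Reading:
    taken_at: datetime
    value: float


def is_abnormal(vital: str, value: float) -> bool:
    """True if the value falls outside the shaded normal band."""
    low, high = NORMAL_RANGES[vital]
    return value < low or value > high


def summarize_vital(vital: str, readings: list[Reading]) -> dict:
    """Data for one tile row: latest value, red-font flag, sparkline, Hx list."""
    history = sorted(readings, key=lambda r: r.taken_at)
    latest = history[-1] if history else None
    return {
        "vital": vital,
        "latest": latest.value if latest is not None else None,
        # Drives the red font in the "Latest Value" column.
        "abnormal": latest is not None and is_abnormal(vital, latest.value),
        # Times of points plotted outside the band on the sparkline.
        "out_of_band": [r.taken_at for r in history if is_abnormal(vital, r.value)],
        # Full series for the sparkline and the "Hx" pop-up, oldest first.
        "history": [(r.taken_at, r.value) for r in history],
    }
```

Both views use the same band. The sparkline makes out-of-range trends visible at a glance, and the red font marks the exact latest value.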

The “Point-of-Care” tile shows the results of the point-of-care tests performed at our institution: urine pregnancy, urine dipstick, finger-stick glucose, and finger-stick hemoglobin. If no results are available, “none recorded” is displayed. As in the ED Vitals tile, the history (“Hx”) link displays any earlier point-of-care test results. This display is useful when clinicians need to follow a patient’s finger-stick hemoglobin or glucose values during the emergency department stay.
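The sketch below models the behavior of the Point-of-Care tile. For each of the four tests it shows the latest result, the “none recorded” placeholder when there is no result, and the earlier results behind the “Hx” link. The `PocResult` record and the `poc_tile` function are assumptions made for this example.

```python
from datetime import datetime
from typing import NamedTuple

# The four point-of-care tests named in the text.
POC_TESTS = (
    "urine pregnancy",
    "urine dipstick",
    "finger-stick glucose",
    "finger-stick hemoglobin",
)


class PocResult(NamedTuple):
    test: str
    recorded_at: datetime
    result: str          # as hand-entered by nursing, e.g. "negative"


def poc_tile(results: list[PocResult]) -> dict[str, dict]:
    """Latest result per test plus earlier results for the "Hx" pop-up."""
    tile = {}
    for test in POC_TESTS:
        matches = sorted((r for r in results if r.test == test),
                         key=lambda r: r.recorded_at)
        if not matches:
            tile[test] = {"latest": "none recorded", "history": []}
        else:
            tile[test] = {
                "latest": matches[-1].result,
                # Earlier results, oldest first, e.g. serial glucose checks.
                "history": [(r.recorded_at, r.result) for r in matches[:-1]],
            }
    return tile
```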

The “ECG” tile lists electrocardiograms by date. Clicking a hyperlink launches a pop-up ECG viewer, which displays the tracing as a PDF along with the cardiologist’s report (if available). Multiple ECGs can be viewed simultaneously by clicking additional hyperlinks.

Similarly, the “Previous Notes” tile organizes discharge summaries and previous emergency department visit notes by date. Clicking the associated hyperlink launches a note viewer. Finally, the “Current Prescriptions” tile shows the name, dosage, and dosing schedule for the patient’s home medications.
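Both the ECG and Previous Notes tiles organize documents by date. The sketch below shows that grouping. It assumes newest-first ordering, because the text does not specify the sort direction. `Document`, `group_by_date`, and the `viewer_url` field are hypothetical.

```python
from collections import defaultdict
from datetime import date, datetime
from typing import NamedTuple


class Document(NamedTuple):
    kind: str            # e.g. "ECG", "Discharge summary", "ED note"
    created: datetime
    viewer_url: str      # hypothetical link that opens the pop-up viewer


def group_by_date(docs: list[Document]) -> list[tuple[date, list[Document]]]:
    """Group documents by calendar day; newest day and newest document first."""
    by_day: dict[date, list[Document]] = defaultdict(list)
    for doc in docs:
        by_day[doc.created.date()].append(doc)
    return [
        (day, sorted(by_day[day], key=lambda d: d.created, reverse=True))
        for day in sorted(by_day, reverse=True)
    ]
```

Each link opens its own viewer. A clinician can therefore open today's ECG and the most recent prior one from an earlier date group and compare them on screen, which addresses the comparison problem raised in the interviews.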

Discussion

In order to design clinical information systems that minimize data fragmentation, it is necessary to know which clinical information items matter most to the users of the system. Clinicians’ information needs do not follow a “one-size-fits-all” model: clinicians in one specialty may use information systems quite differently from colleagues who work in a different setting or specialty. Once the information needs of a specific group are known, extra care can be taken in designing clinical information systems so that their workflows are supported and data are presented in a way that makes “clinical sense.”

Our qualitative investigation identified both the general information needs of emergency medicine clinicians and institution-specific information gaps in our EHR system. We found that vital signs, current and previous ECGs, previous discharge summaries, lab results, and the medication list were particularly important; this finding probably applies to most emergency care settings. Our investigation also showed that, at our institution, information gaps arose from the inefficient display of these clinical information items.

Our institution is not unique; many (if not all) clinical information systems have been designed with inadequate usability testing and an apparent lack of clinical input.[18] Our study provides a methodology for assessing the information needs of a specialty-specific group of clinicians. In turn, this assessment can inform the development of specialty-specific dashboards that fill information gaps.

The clinical dashboard we created was based on feedback from clinicians who used the information systems regularly. The identified information gaps related to vital signs, lab results, ECGs, and discharge summaries, all of which clinicians rated among the most important clinical information items. To fill these gaps, individual tiles were created within the clinical dashboard to enable at-a-glance monitoring of and access to these items.

In the future, we envision “smart dashboards” that change dynamically based on the patient’s chief complaint. For example, for a patient who presents to the emergency department with a chief complaint of “laceration,” the dashboard would display tetanus immunization status, whereas different information might be surfaced for a patient presenting with chest pain. This approach might in turn inform the development of universal rules for clinical data display. For example, a patient’s creatinine level and pregnancy status (if appropriate) should always be shown when a computed tomography (CT) scan is ordered. Similarly, a previous ECG, when available, should always be shown next to the current one. We believe that improved information displays will better support the cognitive tasks of clinicians.
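The “smart dashboard” idea can be expressed as simple display rules. The sketch below is hypothetical throughout. The rule tables, the `tiles_for` function, and the chest-pain mapping are illustrative assumptions; the text leaves the chest-pain content unspecified. Only the laceration, CT, and previous-ECG examples come from the paragraph above.

```python
# The five tiles of the current dashboard.
BASE_TILES = ["ED Vitals", "Point-of-Care", "ECG", "Previous Notes", "Current Prescriptions"]

# Hypothetical complaint-driven rules.
COMPLAINT_RULES = {
    "laceration": ["Tetanus immunization status"],
    "chest pain": ["Current ECG beside previous ECG"],  # assumed example
}

# Hypothetical order-driven rules: data to show whenever an order is placed.
ORDER_RULES = {
    "CT scan": ["Creatinine"],
}


def tiles_for(chief_complaint: str, orders: list[str], pregnancy_relevant: bool) -> list[str]:
    """Return base tiles plus extra items triggered by complaint and orders."""
    # Copy so that extending never mutates the shared rule tables.
    extras = list(COMPLAINT_RULES.get(chief_complaint.strip().lower(), []))
    for order in orders:
        extras.extend(ORDER_RULES.get(order, []))
        # Pregnancy status is shown for CT only "if appropriate".
        if order == "CT scan" and pregnancy_relevant:
            extras.append("Pregnancy status")
    tiles = list(BASE_TILES)
    for item in extras:
        if item not in tiles:   # avoid duplicate tiles
            tiles.append(item)
    return tiles
```

Keeping the rules in plain lookup tables separate from the display code means new complaint or order rules can be added without changing the tile logic.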

Our study has several limitations. First, the survey response rate was 48%, so nonresponse bias is possible if clinicians who completed the survey differed from those who did not. That said, the respondents represent nearly half of the eligible clinicians. Second, a single investigator conducted the structured interviews. Although an interview script was used, the way in which questions were asked may have biased the respondents’ answers. Third, because our study relied on survey and interview data, it is subject to recall bias; observation could be used in future studies to confirm the survey and interview results. Fourth, we have not yet implemented the dashboard in the emergency department. Fifth, the study was performed at a single emergency department using a single electronic health record, so our findings may not generalize to other environments or EHR systems. Nevertheless, we believe our results can help inform EHR vendors about the information needs of their users and encourage the investigation of clinical information needs in diverse settings.

Conclusion

Electronic health records suffer from data fragmentation, which adversely affects patient care. Our study presented a methodology by which the information needs of a specialty cohort of clinicians can be studied. We demonstrated how a better understanding of clinicians’ information needs can inform the development of specialty-specific clinical dashboards that provide cognitive support and improve efficiency.

Acknowledgments

Dr. Cimino was supported in part by research funds from the National Library of Medicine and the NIH Clinical Center.

References

1. Mohler MJ. Collaboration across clinical silos. Frontiers of Health Services Management. 2013;29(4):36–44.

2. Horsky J, Allen MB, Wilcox AR, Pollard SE, Neri P, Pallin DJ, et al. Analysis of user behavior in accessing electronic medical record systems in emergency departments. AMIA Annual Symposium Proceedings. 2010;2010:311–5.

3. Stiell A, Forster AJ, Stiell IG, van Walraven C. Prevalence of information gaps in the emergency department and the effect on patient outcomes. CMAJ. 2003;169(10):1023–8.

4. Shapiro JS, Kuperman G, Kushniruk AW, Kannry J. Survey of emergency physicians to determine requirements for a regional health information exchange network. AMIA Spring Congress. 2006 May:16–18.

5. Hripcsak G, Sengupta S, Wilcox A, Green RA. Emergency department access to a longitudinal medical record. Journal of the American Medical Informatics Association. 2007;14(2):235–8. doi: 10.1197/jamia.M2206.

6. Stead WW, Lin HS, editors. Computational Technology for Effective Health Care: Immediate Steps and Strategic Directions. Washington (DC); 2009. (The National Academies Collection: Reports funded by National Institutes of Health).

7. Few S. Information dashboard design: the effective visual communication of data. 1st ed. Beijing; Cambridge, MA: O’Reilly; 2006. viii, 211 p.

8. Khemani S, Patel P, Singh A, Kalan A, Cumberworth V. Clinical dashboards in otolaryngology. Clinical Otolaryngology. 2010;35(3):251–3. doi: 10.1111/j.1749-4486.2010.02143.x.

9. Stone-Griffith S, Englebright JD, Cheung D, Korwek KM, Perlin JB. Data-driven process and operational improvement in the emergency department: the ED Dashboard and Reporting Application. Journal of Healthcare Management. 2012;57(3):167–80; discussion 180–1.

10. Simms RA, Ping H, Yelland A, Beringer AJ, Fox R, Draycott TJ. Development of maternity dashboards across a UK health region; current practice, continuing problems. European Journal of Obstetrics, Gynecology, and Reproductive Biology. 2013. doi: 10.1016/j.ejogrb.2013.06.003.

11. Tan YM, Hii J, Chan K, Sardual R, Mah B. An electronic dashboard to improve nursing care. Studies in Health Technology and Informatics. 2013;192:190–4.

12. Schleyer TK, Forrest JL. Methods for the design and administration of web-based surveys. Journal of the American Medical Informatics Association. 2000;7(4):416–25. doi: 10.1136/jamia.2000.0070416.

13. Kaplan B, Maxwell JA. Qualitative research methods for evaluating computer information systems. In: Evaluating the Organizational Impact of Healthcare Information Systems. 2005:30–55.

14. Hripcsak G, Cimino JJ, Sengupta S. WebCIS: large scale deployment of a Web-based clinical information system. Proceedings of the AMIA Symposium. 1999:804–8.

15. Hendrickson G, Anderson RK, Clayton PD, Cimino J, Hripcsak GM, Johnson SB, et al. The integrated academic information management system at Columbia-Presbyterian Medical Center. MD Computing. 1992;9(1):35–42.

16. Johnson S, Friedman C, Cimino JJ, Clark T, Hripcsak G, Clayton PD. Conceptual data model for a central patient database. Proceedings of the Annual Symposium on Computer Applications in Medical Care. 1991:381–5.

17. Tufte ER. The visual display of quantitative information. 2nd ed. Cheshire, CT: Graphics Press; 2001. 197 p.

18. Middleton B, Bloomrosen M, Dente MA, Hashmat B, Koppel R, Overhage JM, et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. Journal of the American Medical Informatics Association. 2013;20(e1):e2–8. doi: 10.1136/amiajnl-2012-001458.