Abstract

Wrong patient selection errors are a major issue for patient safety; from ordering medication to performing surgery, the stakes are high. Widespread adoption of Electronic Health Record (EHR) and Computerized Provider Order Entry (CPOE) systems makes patient selection using a computer screen a frequent task for clinicians. Careful design of the user interface can help mitigate the problem by helping providers recall their patients’ identities, accurately select their names, and spot errors before orders are submitted. We propose a catalog of twenty-seven distinct user interface techniques, organized according to a task analysis. An associated video demonstrates eighteen of those techniques. EHR designers who consider a wider range of human-computer interaction techniques could reduce selection errors, but verification of efficacy is still needed.

Introduction

Patient selection errors can be defined as actions (orders or documentation) that are performed for one patient but were intended for another patient1. Such wasteful and potentially life-threatening errors are a well-documented problem. Koppel et al. categorized 22 types of scenarios where CPOE increased the probability of prescription errors, including wrong patient selection2. The wrong patient can be selected when referring to patient profiles, lab results, or medication administration records3. According to a study by Hyman et al., placing orders in the incorrect patient’s chart comprised 24% of the reported errors4. Case reports from the Veterans Health Administration showed that 39% of their “laboratory medicine adverse events” were caused by wrong patient order entry and 8% of these were due to reporting back the results to the wrong patient medical record5. Lambert et al.6 projected that 14247 cases of wrong drug errors happen every day in the USA, and many of them were caused by a wrong patient selection error. Two studies7,8 estimated that about 50 per 100,000 electronic notes are entered in the wrong patient record. We believe that providing cognitive support through interface design for patient selection can reduce the frequency of harmful outcomes, thereby improving performance and safety.

Method

We reviewed sources of user interface selection errors from academic literature, interviews with clinicians, and inspection of existing EHR interfaces. Then, guided by a task analysis (ranging from recall of patient identity to error recovery or reporting of errors), we propose 27 user interface techniques. Eighteen techniques are illustrated in a prototype and available on video. Finally, the techniques were tagged with an estimated level of implementation difficulty and estimated payoff, based on past findings of Human-Computer Interaction research9. Verification of efficacy in clinical environments is still needed.

Prior Work

Sengstack10 provides a 46-item checklist for CPOE system designers to follow, categorized into clinical decision support, order form configuration, human factor configuration, and work flow configuration. Like Sengstack, we found many descriptions of the safety problems associated with patient selection but very little prior work describing empirical evaluation of user interface design guidelines. The scope of the suggested techniques was limited and many proposed solutions had shortcomings. For example, Adelman et al.7 showed that ID-reentry (i.e. keying the patient information twice) could reduce wrong patient selection errors, but this technique takes a substantial amount of additional time and therefore is likely to cause significant user frustration. Lane et al.11 list ‘Wrong Selection’ as a type of error in their taxonomy of errors in hospital environments, with wrong patient selection being only one of the errors mentioned. Most of those errors were attributed to poorly designed systems for patient selection7. More general work by Reason12 categorized human errors as violations, mistakes and slips. Norman13 asserts that the dividing line is the intention: it is a slip when the intention is correct but mechanical factors lead to error, while it is a mistake when the intention itself is wrong. Wrong patient selection can be a result of either a slip or a mistake. It is a slip if the clinician accidentally selects the patient in an adjacent row or hits the wrong number key when entering a patient ID number14. Slips are more frequent when the text is hard to read or small buttons are hard to select. Mistakes are more frequent when two patients are listed with the same first and last name15 or with inconsistent Medical Record Numbers (from different data sources).

Various human factors such as visual perception or short-term memory can lead to confused intentions. For example, when names are sorted alphabetically, similar names can coalesce visually and lead to intentional selection of the wrong one. Hospitals often have shared computers where clinicians need to log in to work and then log off. Failure to log off can leave the next clinician’s memory being cued by the wrong list. To minimize confusion between patients, it is possible to display other identifiers such as date of birth, room number, gender, admission date, or attending physician name3. Clinicians often identify their patient by room number or location16. The Joint Commission now mandates that at least two patient identifiers be used17. Unfortunately, clinicians do not routinely verify patient identity after selection18, 19. They may be interrupted in the midst of selecting a patient or toggle between the records of two patients15.

The general literature on errors can also inform better design of EHR systems13. Slips can be reduced by providing feedback (e.g. highlighting the item before the selection occurs). Mistakes can be reduced by providing memory aids (e.g. providing pictures to remind the clinician of the patient), especially when interruptions or distractions occur between the time of the intent and the time the task is performed. Visual attention guidelines can also be useful. For example, animation, if used appropriately, can focus users’ attention20, 21. Schlienger et al.22 presented empirical evidence that use of animation and sound improves perception and comprehension of a change. One recent example of the use of animation is TwinList, which was designed to assist the medication reconciliation process23.

Task Analysis

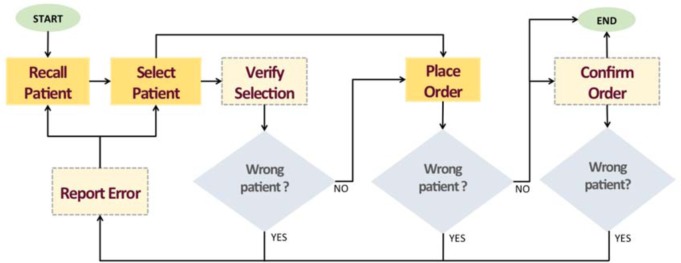

While a detailed clinician task analysis depends on a particular system and its user interface, we focus on general steps for CPOE, namely patient selection, verification, order entry and confirmation (Figure 1). In an ideal hospital, order entry would occur at the patient’s bedside, assuring identity confirmation, or, if not at the bedside, using proper documentation. Unfortunately, in reality, interruptions are frequent, documentation is incomplete, and human nature tends toward shortcuts. Inevitably, recall will be used16, so it seems prudent to engineer EHR interactions to maximize the odds of correct patient selection from recall. We therefore include the following tasks:

Recall patient: Clinicians must first recall the patient’s identity (e.g. John Smith, or the heart failure patient in Room 201, or the patient whose ID# is on this sheet of paper). They may not recall the correct patient name.

Select patient: This is done either by typing a name or record ID number or by selecting in a list of patients.

Verify selection: After selecting a patient, clinicians may or may not verify whether they selected the correct patient. Unfortunately the patient name may be invisible (e.g. scrolled away from view). Verification may require checking the age, chief complaint, or room number, some or all of which may not be displayed at that time. Clinicians may not recognize the error, and continue working with the wrong patient’s record.

Place order: One or more orders are entered for the patient. This can be a long and complex task which can be interrupted multiple times, sometimes to work on other patient records.

Confirm order: A confirmation dialogue may be displayed before or after order submission. This is likely to be the last chance to stop a wrong patient order.

Report error: If clinicians realize later on (minutes or days later) that they made an error after submitting an order, they should be able to cancel the wrong order and then place the same order for the correct patient. They should also be able to report the error and notify stakeholders.

Figure 1:

Task analysis of selecting a patient and placing an order, shown as a flow diagram. Lighter colored dotted rectangles represent tasks that may not be taken.

Proposed User Interface Techniques to Reduce Wrong Patient Selection Errors

Based on the error types reported in the prior work and the task analysis, we provide a design space of 27 potentially helpful user interface techniques (see Table 2). We grouped the techniques by the task for which they would be most useful. Some of the techniques have been successfully implemented, either in some EHR systems or in other non-medical applications, but most have not. Eighteen techniques with comparatively higher payoffs and lower implementation costs were implemented in a prototype, refined based on clinician feedback, and recorded on video (see http://www.cs.umd.edu/hcil/WPE). The prototype has a control panel that allows designers to combine techniques or check them individually. The prototype was developed in JavaScript and HTML. The animated features use the JavaScript libraries D3 and jQuery. For user interface and layout, we used the Bootstrap library from Twitter and jQueryUI. Source code is available.

Table 2:

Techniques to reduce wrong patient selection, with our estimates of the level of effort and safety impact (TBD = to be determined). Techniques with high estimated safety impact and low estimated effort are highlighted with bold text. The In-demo checkmarks (x) indicate which techniques are demonstrated in the prototype or video.

| Task | User Interface Technique | Estimated Effort | Estimated Safety Impact | In demo? |
|---|---|---|---|---|
| Recall patient | Use patients’ photos and other information | HIGH | HIGH | x |
| | Show the floor plan | medium | medium | x |
| | Provide a personalized list of patients | low | medium | |
| | Allow sorting | low | low | x |
| | Allow filtering | low | low | x |
| | Allow categorical grouping | low | low | x |
| Select patient | Provide clues that similar names exist | HIGH | HIGH | x |
| | Use RFID technology | HIGH | HIGH | |
| | **Always show patient’s full name** | low | HIGH | x |
| | Consider ID reentry | low | medium | |
| | Include buffer space between rows | low | medium | x |
| | Increase row height | low | medium | x |
| | **Highlight row under cursor** | low | HIGH | x |
| | Consider using a 2D grid instead of a list | medium | low | |
| Verify selection | **Highlight on departure** | low | HIGH | x |
| | Highlight on arrival | low | medium | x |
| | Use animated transition | low | medium | x |
| | Use a visual summary of the patient history | medium | TBD | |
| Place order | Keep the patient header visible at all times | low | medium | x |
| | Maintain consistency between screens | low | low | x |
| | Use clinical decision support | HIGH | HIGH | |
| | Consider side-by-side display of detailed patient information and order information | medium | TBD | |
| Confirm order | **Include the identity of the patient in Submit button** | low | HIGH | x |
| | Consider placing the submit button near the patient information | low | medium | x |
| Report error | Report errors with proper feedback | medium | TBD | |
| | Speed up placing the same order for the correct patient | HIGH | low | |

During Task 1: Recall patient

* Use patients’ photos and other information. Patients’ photos can be used along with the patients’ information both in the patient list and in the header of the order screen. Photos likely aid the recall and verification step28. More contextual information, like the location, admission date, attending clinician’s name, chief complaint, etc. may also help clinicians recall the patient correctly. Color coding patient age and using visual clues indicating how long each patient has been in the hospital can also help clinicians identify the correct patient. Providing more information helps resolve confounding cases where two patients have similar names. Usually room number is shown in the patient selection list. But if the room numbers do not provide any contextual information, they will not be helpful.

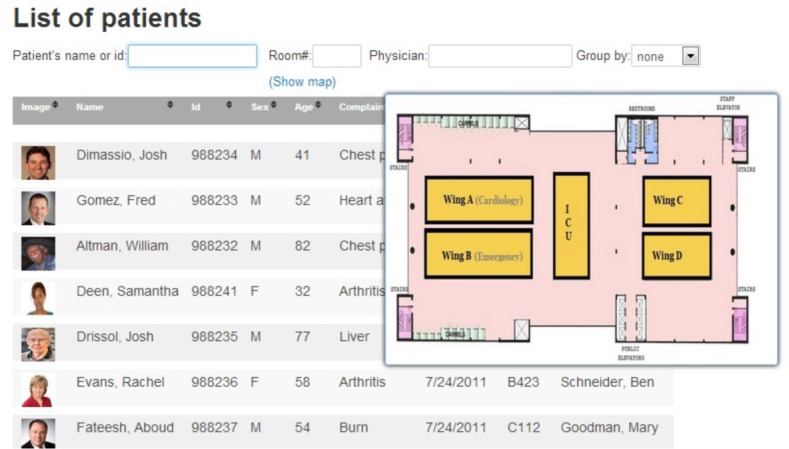

* Show the floor plan. Patients are often recalled by the location where they were seen. Clinicians dealing with a new patient and unable to remember the patient name after returning to their desk should be allowed to select from a floor plan to narrow the list of patients to only see the patients at that location. In small facilities the patient information might appear directly on the map, while in large facilities the map can be used to filter patients by ward or room (Figure 2).

Figure 2:

As clinicians click on a section of the map, the patient list is filtered to show only patients in that section. In small facilities individual rooms might be selected.

Other techniques can be used to facilitate recall as well as selection by narrowing the list of patients, so when clinicians only recall that a patient had a French name they can more easily scan the list to recall the exact name. In general users find their targets faster in smaller lists, and are more likely to miss a target in a large list24.

* Provide a personalized list of patients. Showing only the patients assigned to the currently logged-in clinician is preferable. Different clinicians may use the same computer to log in, so showing the clinician’s name in the selection screen is critical. Further visual differentiation is likely to be needed to help clinicians recognize that someone else is logged in – especially when they do not expect it, or have been interrupted. Overlaying the clinician’s name in large characters over the screen after a brief period of inactivity might be useful. A distinct background color or border style could be recommended to each user during initial setup, which could then be applied to all their screens.

* Allow sorting. Sorting patient lists by attributes such as date of birth, date of admission, name of provider, etc. can help match the list to the clinician’s mental organization of their care. For example, clinicians often think of their patients in terms of age (young or elderly).

* Allow filtering. Repetitively scanning long lists takes time, and clinicians also need to be able to recall patients’ identity based on attributes other than name. They should be able to use filters to narrow down the list of patients. For example, if clinicians remember that a patient was in cardiology, they can filter the list to see only the patients from that department. Our prototype provides three examples of filters (Figure 3): by patient name or ID, room number, and attending clinician name (which may be relevant for a nursing station). Filters need to be rapid, incremental and reversible. For example, typing a string of letters should progressively filter down the list to only show names that include the string. Menus and controls should be optimized for rapid selection9.

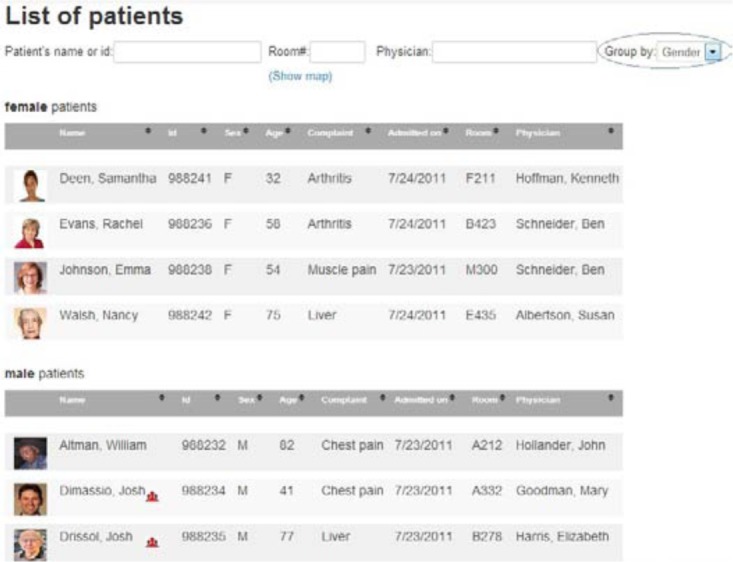

* Allow categorical grouping. Meaningful groups also reduce search time25. The patient list can be grouped into smaller lists based on categories, such as gender, floor, room number, service, department, attending physician name, etc. We show three examples of grouping in our prototype: gender, clinician and ward.

Figure 3:

A list of patients with pictures and examples of controls for searching (by name, ID, room or physician) and grouping (here by gender). Each row includes a patient’s photo, room number, date of birth, gender and the name of the attending clinician.
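The filtering and grouping behavior described above can be sketched in a few lines of JavaScript. This is a minimal illustration, not the prototype's actual code: the record fields (`name`, `id`, `room`, `gender`, `clinician`), the sample data, and the function names `filterPatients` and `groupPatients` are all assumptions made for the example.

```javascript
// Hypothetical patient records; field names and values are illustrative only.
const patients = [
  { name: "John Smith", id: "1001", room: "201", gender: "M", clinician: "Dr. Lee" },
  { name: "Joan Smythe", id: "1002", room: "305", gender: "F", clinician: "Dr. Lee" },
  { name: "Maria Lopez", id: "1003", room: "201", gender: "F", clinician: "Dr. Patel" },
];

// Keep only patients whose name or ID contains the typed string
// (case-insensitive). Calling this on every keystroke narrows the list
// incrementally; an empty query returns the full list, so the filter
// is reversible.
function filterPatients(list, query) {
  const q = query.trim().toLowerCase();
  if (q === "") return list.slice();
  return list.filter(
    (p) => p.name.toLowerCase().includes(q) || p.id.toLowerCase().includes(q)
  );
}

// Group patients by a categorical attribute such as "gender" or "clinician".
// Returns a Map from each attribute value to the patients that share it.
function groupPatients(list, key) {
  const groups = new Map();
  for (const p of list) {
    const value = p[key];
    if (!groups.has(value)) groups.set(value, []);
    groups.get(value).push(p);
  }
  return groups;
}
```

Typing "sm" and then "smi" would narrow the sample list from two patients to one, and deleting the letters restores the full list without any extra action.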

During Task 2: Select patient

After clinicians have recalled the identity of the patient whose record they want, they need to easily select that record. Several user interface techniques can help reduce errors.

* Provide clues that similar names exist. The system can alert clinicians of the existence of similar names. Imagine that a clinician recalls the name Adam Davis, then sees David Adams at the top of the list. Those names will not sort together. Clinicians may never realize that there are two patients with similar names. Both orthographic (similar spelling) and phonological (similar sounding) similarity are detectable. Our prototype and video illustrate an example technique to notify clinicians about this similarity. It shows a small icon next to the name if there are other patients with similar names. Hovering the cursor over the icon reveals the list of similar names and additional information about those patients. The prototype also allows users to highlight the similar names in the list, or to group the rows of the patients with a similar name close together to facilitate comparison (Figure 4).

* Use RFID technology. Some hospitals give patients wristbands that carry their ID and demographic information. An RFID (radio frequency identification) scanner can be used to read the information. Although the technology is still costly and has its technical limitations, it has the potential to help reduce patient misidentification. RFID tags might also be used to read the location of the patient (e.g. a room) or the clinician ID.

* Always show patient’s full name. Some tabular lists crop patients’ names to fit them in the limited horizontal space. This will lead to confusion among similar names. The column displaying the patient names should be automatically resized to be large enough to show the entire names. Even omitting titles or suffixes can lead to a wrong selection. If a name in the list is abnormally long (to the point of not leaving room for the other columns) it should be wrapped inside the cell using a double-height row.

* Consider ID reentry. Re-entering the patient’s ID a second time on the order screen has been shown to be helpful7. Nevertheless, we believe that the additional time it takes is likely to produce a lot of user frustration.

Figure 4:

Notification about similar names: here, a small red icon is placed next to names that are similar to other names. The cursor is hovering over Josh Drissol, showing that the name was found similar to Josh Dimassio.
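One possible way to detect orthographic similarity is an edit-distance comparison, sketched below. The paper does not specify which similarity algorithm the prototype uses, so everything here is an assumption made for illustration: the choice of Levenshtein distance, the token sorting that lets "Adam Davis" and "David Adams" be compared regardless of word order, the 0.75 threshold, and the function names. Phonological similarity would need a separate phonetic algorithm and is not covered.

```javascript
// Classic dynamic-programming edit distance: the minimum number of
// single-character insertions, deletions and substitutions turning a into b.
function levenshtein(a, b) {
  let prev = Array.from({ length: b.length + 1 }, (_, j) => j);
  for (let i = 1; i <= a.length; i++) {
    const curr = [i];
    for (let j = 1; j <= b.length; j++) {
      const cost = a[i - 1] === b[j - 1] ? 0 : 1;
      curr[j] = Math.min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost);
    }
    prev = curr;
  }
  return prev[b.length];
}

// Lowercase the name and sort its words so that word order is ignored.
function normalizeName(name) {
  return name.toLowerCase().split(/\s+/).filter(Boolean).sort().join(" ");
}

// Similarity score between 0 (nothing in common) and 1 (identical).
function nameSimilarity(a, b) {
  const x = normalizeName(a);
  const y = normalizeName(b);
  const longest = Math.max(x.length, y.length);
  return longest === 0 ? 1 : 1 - levenshtein(x, y) / longest;
}

// For each name, list the other names whose similarity reaches the threshold.
// The 0.75 default is an arbitrary value that would need tuning on real data.
// Names are assumed unique here; a real list would key on a patient ID.
function findSimilarNames(names, threshold = 0.75) {
  const similar = new Map(names.map((n) => [n, []]));
  for (let i = 0; i < names.length; i++) {
    for (let j = i + 1; j < names.length; j++) {
      if (nameSimilarity(names[i], names[j]) >= threshold) {
        similar.get(names[i]).push(names[j]);
        similar.get(names[j]).push(names[i]);
      }
    }
  }
  return similar;
}
```

A row would show the warning icon whenever its entry in the returned map is non-empty, and the hover list would be populated from that entry.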

Other design techniques can be used to facilitate the mechanical aspects of target selection in lists:

* Include buffer space between rows. If there is “dead” space between the rows and a mouse click occurs there, nothing will be selected. This reduces the chance of selecting the wrong row by mistake. This could be accomplished by adding white space between rows (e.g. in Figures 2–4) or by only accepting clicks in the center of the row. A proper balance should be found: when the spacing becomes too large, more scrolling is needed, and if the clickable area is too small, selection becomes more difficult.

* Increase row height. If the row height is big enough, there is less chance of clicking the adjacent rows, as we tend to click in the middle of a target. In general, target selection performance follows Fitts’ law, i.e. both speed and accuracy decrease as the smallest dimension of the target area shrinks26 (in this case, the height of the selectable area). Again, if each row takes more vertical space, the screen can contain fewer rows.

* Increase font size. Readability can be an issue while working under pressure and older or tired users with even mild vision impairments may have trouble. Users should be allowed to increase the font size easily so that the text is always clearly readable. In our prototype, users can use a keyboard shortcut to customize the font-size.

* Highlight row under cursor. This is a standard technique for list selection which is sometimes omitted. Highlighting the row under the cursor draws attention to the impending selection. It also makes an inadvertent slip to an adjacent row more apparent. In our prototype, the row under the cursor is highlighted by changing the background color to yellow and changing the font to a darker gray (see Figure 4).

* Consider using a 2D grid instead of a list. Instead of listing patients with one patient per row, the interface can use a 2-dimensional grid with all the patient information (name, room, age etc.) presented in a single taller and narrower cell. Square targets are easier to select than long skinny ones, which would reduce slip errors. The drawback is that it may be less obvious how the patients are sorted, and harder to compare particular attributes (e.g. age).
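The buffer-space technique in the list above amounts to a hit test that ignores clicks falling between rows. The sketch below assumes a simple layout in which every row has the same height and is followed by a gap of fixed size; the function name `rowAtY` and the pixel values in the usage note are hypothetical.

```javascript
// Return the index of the row hit by a click at vertical offset y (pixels
// from the top of the list), or null when the click lands in the "dead"
// space between rows, in which case nothing should be selected.
function rowAtY(y, rowHeight, gap) {
  if (y < 0) return null;
  const pitch = rowHeight + gap; // distance between the tops of adjacent rows
  const index = Math.floor(y / pitch);
  const offsetInPitch = y - index * pitch;
  return offsetInPitch < rowHeight ? index : null;
}
```

With 40-pixel rows and an 8-pixel gap, a click at offset 10 selects row 0, a click at offset 44 selects nothing, and a click at offset 48 selects row 1. Widening `gap` trades fewer adjacent-row slips for more scrolling, which is the balance discussed above.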

During Task 3: Verify selection

Clinicians should be given opportunities to detect selection errors before order entry.

* Highlight on departure. Highlighting the selected name or row while departing from the patient list can help users verify that the selection was correct28. In our prototype, when a user selects a patient row with a mouse click, all the other rows fade out in 500 milliseconds, leaving only the selected row visible on the screen (Figure 5).

* Highlight on arrival. The ordering screen can also display the patient information header first so the clinician can verify that the correct record has been opened. The temporary highlight only takes a fraction of a second so it does not slow down the interaction or require additional action. In our prototype, the patient name appears in the top-left corner of the screen (where readers usually start scanning or reading a screen), then the rest of the patient identification information appears next to the name. After one second, the rest of the interface becomes visible, allowing clinicians to place the order. Our prototype allows adjustment of all animation delays, and informal testing suggests that keeping all delays below a second is preferable. This is in contrast with techniques such as ID reentry, which might require 10–15 additional seconds for each patient selection.

* Use animated transition. Clinicians’ attention can be drawn to the patient name during the entire transition between screens. For example, an animated transition can smoothly glide the selected name from its position in the patient list to its final position in the header of the order screen. Again, the animation should be fast enough not to hamper the workflow, and slow enough to serve its purpose (i.e. attract attention to the selected name, and teach the final location of the name in the header of the order screen to new users). An adaptive approach might be helpful. For example, the system could detect the probability of a slip error (e.g. when the mouse click was very near the edge of a row) and slow the transition a bit more to increase the chance of error detection.

* Use a visual summary of the patient history. A thumbnail of the timeline of the patient history could be displayed next to the patient’s photo. A visual summary is faster to “read” than a textual description of the patient’s history. Instead of the full history the last two or three significant events in the time line might help clinicians distinguish between patients.

Figure 5:

Highlight on departure (mock-up)
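The adaptive idea mentioned under "Use animated transition" can be sketched as a function that lengthens the transition when the click landed near the edge of a row. The linear mapping, the function name, and the 500 and 900 millisecond bounds are assumptions chosen for illustration; they stay under the one-second limit suggested by the informal testing reported above, but appropriate values would need user testing.

```javascript
// Illustrative bounds in milliseconds (assumed values, not from the prototype).
const BASE_DURATION_MS = 500; // used when the click is at the center of the row
const MAX_DURATION_MS = 900;  // used when the click is on the edge of the row

// Compute the transition duration from where the click landed inside the row.
// clickY and rowTop are vertical pixel coordinates in the same reference frame.
function transitionDuration(clickY, rowTop, rowHeight) {
  const center = rowTop + rowHeight / 2;
  // 0 when the click is dead center, 1 when it is on (or beyond) the edge.
  const edgeProximity = Math.min(1, Math.abs(clickY - center) / (rowHeight / 2));
  return Math.round(
    BASE_DURATION_MS + edgeProximity * (MAX_DURATION_MS - BASE_DURATION_MS)
  );
}
```

The returned value could be passed as the duration of whatever animation mechanism the interface already uses, so that likely slips get slightly more time to be noticed while well-centered clicks are not slowed down.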

During Task 4: Place order

* Keep the patient header visible at all times. The header should not be allowed to scroll out of view.

* Maintain consistency between screens. When switching from the patient list to the order screen, font-type, capitalization and color used to display the patient’s basic information should not change abruptly. Such changes are visually distracting and make it harder to perceive differences between the selection screen and the order screen.

* Use clinical decision support. For example, Galanter et al. demonstrated a reduction of wrong-patient medication errors after implementing a clinical decision support system to prompt clinicians for indications when certain medications were ordered without an appropriately coded indication on the problem list27.

* Consider side-by-side display of detailed patient information and order information. For example, a patient’s medication history is useful for clinicians ordering a new medication, and that medication history will be fairly unique to each patient so may help recognize selection errors.

During Task 5: Confirm order

The goal here is to remind users of the patient identity before they finish their work:

* Include the identity of the patient in the Submit button. This simple technique increases the chances that clinicians will pay attention to the name or photo as they place the order. If there is no space to include the patient information on the submit button, a tooltip can be used, but this is less desirable and it should be carefully designed to pop up consistently as the mouse approaches the submit button. A confirmation dialog box can serve the same purpose, but would require an additional reorientation and action from the user. It seems preferable to leave the dialog box option for situations where Decision Support can draw attention to a specific potential error (such as ordering a Pap smear for a male patient).

* Consider placing the submit button near the patient information. Alternatively the submit button can be positioned near the header and the patient information so clinicians are more likely to glance at the patient’s name and photo before the order submission. This may require changing button placement guidelines for the entire application.
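As a small illustration of the first technique in this list, the Submit button's label can be generated from the selected patient's record. The field names (`fullName`, `dateOfBirth`, `room`) and the exact wording are assumptions; the example includes the name plus two other identifiers as one possible choice.

```javascript
// Build the text of the Submit button from the currently selected patient.
// Field names and label wording are hypothetical.
function submitLabel(patient) {
  return `Submit order for ${patient.fullName} (DOB ${patient.dateOfBirth}, Room ${patient.room})`;
}
```

For a patient record with `fullName` "John Smith", `dateOfBirth` "1950-03-14" and `room` "201", the button would read "Submit order for John Smith (DOB 1950-03-14, Room 201)".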

During Task 6: Report error

* Allow placing the same order for the correct patient without starting entirely from scratch. Correcting the error is another aspect of the problem. Canceling or invalidating the order and re-entering a new order from scratch takes time, so we recommend that if clinicians recognize that an error was made, they be able to keep at least some of the order information and assign it to the correct patient. This technique will not only speed up the creation of the new order but will also make it easier to track the occurrence of patient selection errors.

* Report errors with proper feedback. When an error has been made, allow reporting of the error. If a clinician simply cancels an order then the reason for the error is not clear. It can be that the wrong patient was selected, or a wrong medication, or simply that the situation has changed. The cancellation dialog box can be augmented to include a list of possible reasons for the cancellation. Collecting reasons for cancellation will provide a basis for requesting improvements based on what types of errors are most common for that system. For example, if most of the errors were due to selecting an adjacent row, then the interface can be improved by inserting gaps between the rows or increasing the row height. If a majority of errors are caused by similar names, then adding similarity algorithms and warnings about similar names will be most helpful. Most of the techniques proposed here have implementation costs, so error reports will be most useful to guide the selection of improvements to be made.
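The cancellation reasons collected this way can be tallied to see which kind of error dominates. The sketch below is only illustrative: the list of reasons is taken from the examples in the text plus an assumed "other" category, and the record shape (`{ reason: ... }`) and function names are hypothetical.

```javascript
// Reasons offered in the (hypothetical) cancellation dialog box.
const CANCELLATION_REASONS = [
  "wrong patient",
  "wrong medication",
  "situation changed",
  "other",
];

// Count how many cancellations were recorded for each reason.
// Unrecognized reasons are counted under "other".
function tallyReasons(cancellations) {
  const counts = Object.fromEntries(CANCELLATION_REASONS.map((r) => [r, 0]));
  for (const c of cancellations) {
    const reason = CANCELLATION_REASONS.includes(c.reason) ? c.reason : "other";
    counts[reason] += 1;
  }
  return counts;
}

// Return the reason with the highest count (ties go to the reason listed first).
function mostCommonReason(cancellations) {
  const counts = tallyReasons(cancellations);
  return Object.keys(counts).reduce((best, r) => (counts[r] > counts[best] ? r : best));
}
```

A finer-grained list (for example, separating "adjacent row" from "similar name" within wrong-patient cancellations) would map more directly onto the interface improvements discussed above.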

Limitations and Evaluation Challenges

This study has several limitations. We catalog many possible user interface techniques and discuss why they are likely to be useful, but the new techniques we propose have not been tested in clinical situations. Some of the techniques are very easy to implement and have obvious payoffs (e.g. putting patients’ names on the Submit button, or not truncating names), so we are very confident they can be implemented immediately without extensive evaluation. Other techniques require more complex implementation and we cannot predict the exact payoff (e.g. calculating similarity of names), so clinical evaluations may be needed to measure the benefits, but they can be safely deployed after adequate interface usability testing. The benefits of other techniques such as animated transitions or list filtering are fairly well documented in Human-Computer Interaction research (see prior work section) and these techniques are used extensively in modern interfaces such as mobile devices. Those techniques may not require formal evaluations in clinical settings before they can be deployed widely, but will require careful usability evaluations (e.g. animation needs some user testing to adjust transition speed). On the other hand, long-term monitoring and evaluations in clinical settings will be beneficial to measure whether clinicians’ attention is still attracted to the patient identity after months of animation or highlighting use.

The main challenge faced by Human-Computer Interaction researchers is that it is difficult to simulate a hospital environment. Spontaneous errors are rare and unlikely to occur in laboratory settings. One approach is to “plant” errors and measure how the interface helps users to notice and recover from those errors18, 28. Another possible technique might be to simulate extremely disrupted situations (e.g. very high levels of interruptions or dual parallel tasks) so errors are more likely to be made and experiments can measure which techniques help reduce the effect of interruption. None of the laboratory methods can adequately evaluate the long-term effect of the proposed techniques.

Long-term in-situ evaluations will require both accurate detection of the errors and safety monitoring (as recommended by Sittig et al.29) and participation of vendors to build novel techniques into their user interfaces. Both Wilcox8 and Adelman7 used self-corrections to estimate the number of errors made (i.e. orders placed that were retracted within 10 min, and then reordered by the same provider on a different patient within 10 min of retraction). Wrong patient errors are often only detected when reported by pharmacists or patients, sometimes after the patients have suffered the consequences of the errors. Elder et al.30 showed that the perceived benefit of improving the patient care system can encourage clinicians to report errors. A voluntary error reporting and tracking system established in Duke University’s Department of Community and Family Medicine succeeded in increasing the rate of error reporting by encouraging the clinicians to improve their system, involving them in the care improvement process, and keeping their identity confidential31. Instead of blaming the clinicians, they directed their focus towards improving the overall health care system.
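The self-correction measure described above (an order retracted within 10 minutes, then reordered by the same provider on a different patient within 10 minutes of the retraction) can be expressed as a simple scan over an order log. This is a sketch under stated assumptions, not the method used in the cited studies: the record fields (`id`, `providerId`, `patientId`, `item`, `placedAt`, `retractedAt`, with times in milliseconds) are hypothetical, and matching on an identical `item` is one assumed interpretation of "reordered".

```javascript
const TEN_MINUTES_MS = 10 * 60 * 1000;

// Find pairs (retracted order, reorder) that match the retract-and-reorder
// pattern. Each order is assumed to look like:
//   { id, providerId, patientId, item, placedAt, retractedAt }
// where retractedAt is null for orders that were never retracted.
function findRetractAndReorder(orders, windowMs = TEN_MINUTES_MS) {
  const events = [];
  for (const retracted of orders) {
    if (retracted.retractedAt == null) continue;
    // The order must have been retracted within the window after placement.
    if (retracted.retractedAt - retracted.placedAt > windowMs) continue;
    for (const reorder of orders) {
      const delay = reorder.placedAt - retracted.retractedAt;
      if (
        reorder !== retracted &&
        reorder.providerId === retracted.providerId &&
        reorder.item === retracted.item &&
        reorder.patientId !== retracted.patientId &&
        delay >= 0 &&
        delay <= windowMs
      ) {
        events.push({ retractedId: retracted.id, reorderId: reorder.id });
      }
    }
  }
  return events;
}
```

The nested loop is quadratic in the number of orders, which is acceptable for a sketch; a production log would more likely be indexed by provider and time.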

A/B testing32 is a new method now commonly used to improve e-commerce websites. It is a type of between-subject test where participants are presented with one of two versions of an interface while doing their work. With adequate measurement of the error rates, conducting long-term A/B testing might then become possible within operational EHR systems, and lead to evaluations in real situations. With adequate IRB review, each novel technique presented here could be tested by presenting the original version A to one group of clinicians and version B (with the suggested techniques) to another group of clinicians.
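A between-subject design requires that each clinician consistently sees the same version across sessions. One simple way to achieve this, sketched below, is to derive the assignment deterministically from a clinician identifier. The hashing scheme, the function names, and the per-100,000 rate helper are illustrative assumptions and not part of the paper; a real study would use a randomization procedure approved in its protocol.

```javascript
// Deterministically assign a clinician to version "A" or "B" from their ID,
// so the same clinician always sees the same interface version.
function assignArm(clinicianId) {
  let hash = 0;
  for (const ch of clinicianId) {
    // Simple polynomial string hash, kept as an unsigned 32-bit integer.
    hash = (hash * 31 + ch.codePointAt(0)) >>> 0;
  }
  return hash % 2 === 0 ? "A" : "B";
}

// Express an error count as a rate per 100,000 orders, the unit used for
// the wrong-record estimates quoted in the introduction.
function ratePer100k(errorCount, orderCount) {
  return (errorCount * 100000) / orderCount;
}
```

Comparing `ratePer100k` between the two arms (for example, using the retract-and-reorder counts as the error measure) would then give a first indication of whether version B reduces wrong patient selections.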

Conclusion

After reviewing the prior work on wrong patient selection and applying our expertise in Human-Computer Interaction design, we cataloged 27 user interface techniques that have the potential to reduce the occurrence of costly and potentially life-threatening errors. Multiple techniques are available at every task of the process of recall, selection, verification, order entry, confirmation and reporting. Eighteen of those techniques were implemented and demonstrated. Feedback from clinicians and user interface designers enabled us to refine our designs, but scientific evaluation of those techniques remains a challenge. While some techniques may be evaluated in laboratory settings, more rapid progress will be made when hospitals and clinics put in place adequate incentives for reporting errors or near misses, and researchers have agreements with vendors to assist in the testing of the proposed techniques. Nevertheless, many techniques have low implementation costs, low risk and high probable impact, and we believe they do not require long-term evaluation before they can be implemented. One such example we hope to see implemented in all EHR systems is the inclusion of the name of the patient on Submit buttons. Drawing attention to and collecting accurate data about medical care errors is exactly what advocates of the Learning Health System promote to accelerate continuous improvement. In addition, these same techniques could have payoffs for many consumer and business applications, in which selections of people (passengers, college applicants, etc.), products (books, films, etc.), or services (flights, shipping, etc.) are made.

Acknowledgments

This work was partially supported by Grant No. 10510592 for Patient-Centered Cognitive Support under the Strategic Health IT Advanced Research Projects Program (SHARP) from the Office of the National Coordinator for Health Information Technology. We also want to thank Zach Hettinger, Meirav Taieb-Maimon, and all our SHARP project colleagues who helped along the way by providing feedback on the prototype and paper.

Footnotes

Project webpage with video demonstration: http://www.cs.umd.edu/hcil/WPE/

References

- 1. Schumacher RM, Patterson ES, North R, Zhang J, Lowry SZ, Quinn MT, Ramaiah M. Technical Evaluation, Testing and Validation of the Usability of Electronic Health Records. Baltimore, MD: National Institute of Standards and Technology; 2011. (Report No. NISTIR 7804).

- 2. Koppel R, Metlay JP, Cohen A, Abaluck B, Localio AR, Kimmel SE, Strom BL. Role of computerized physician order entry systems in facilitating medication errors. JAMA: The Journal of the American Medical Association. 2005;293(10):1197–1203. doi: 10.1001/jama.293.10.1197.

- 3. Grissinger M. Oops, sorry, wrong patient!: Applying the joint commission’s “two-identifier” rule goes beyond the patient’s room. Pharmacy and Therapeutics: a peer-reviewed journal for formulary management. 2008;33:625–651. 11.

- 4. Hyman D, Laire M, Redmond D, Kaplan DW. The use of patient pictures and verification screens to reduce computerized provider order entry errors. Pediatrics. 2012;130(1):e211–e219. doi: 10.1542/peds.2011-2984.

- 5. Dunn EJ, Moga PJ. Patient misidentification in laboratory medicine: A qualitative analysis of 227 root cause analysis reports in the veterans health administration. Archives of Pathology and Laboratory Medicine. 2010. doi: 10.5858/134.2.244.

- 6. Lambert BL, Dickey LW, Fisher WM, Gibbons RD, Lin S-J, Luce PA, McLennan CT, Senders JW, Yu CT. Listen carefully: The risk of error in spoken medication orders. Social Science and Medicine. 2010;70(10):1599–1608. doi: 10.1016/j.socscimed.2010.01.042.

- 7. Adelman JS, Kalkut GE, Schechter CB, Weiss JM, Berger MA, Reissman SH, Cohen HW, Lorenzen SJ, Burack DA, Southern WN. Journal of the American Medical Informatics Association. 2012. doi: 10.1136/amiajnl-2012-001055.

- 8. Wilcox AB, Chen YH, Hripcsak G. Minimizing electronic health record patient-note mismatches. J Am Med Inform Assoc. 2011;18:511–14. doi: 10.1136/amiajnl-2010-000068.

- 9. Shneiderman B, Plaisant C. Designing the User Interface. Addison Wesley; 2010.

- 10. Sengstack P. CPOE configuration to reduce medication errors. Journal of Healthcare Information Management. 2010;24(4).

- 11. Lane R, Stanton NA, Harrison D. Applying hierarchical task analysis to medication administration errors. Applied Ergonomics. 2006;37(5):669–679. doi: 10.1016/j.apergo.2005.08.001.

- 12. Reason J. Human Error. Cambridge University Press; 1990.

- 13. Norman DA. Design rules based on analyses of human error. Commun ACM. 1983 Apr;26(4):254–258.

- 14. Thimbleby H, Cairns P. Reducing number entry errors: solving a widespread, serious problem. Journal of The Royal Society Interface. 2010;7(51):1429–1439. doi: 10.1098/rsif.2010.0112.

- 15. McCoy AB, Wright A, Kahn MG, Shapiro JS, Bernstam EV, Sittig DF. Matching identifiers in electronic health records: implications for duplicate records and patient safety. BMJ Quality & Safety. 2013;22(3):219–224. doi: 10.1136/bmjqs-2012-001419.

- 16. Phipps E, Turkel M, Mackenzie ER, Urrea C. He thought the lady in the door was the lady in the window: A qualitative study of patient identification practices. Joint Commission Journal on Quality and Patient Safety. 2012;38(3):127–134. doi: 10.1016/s1553-7250(12)38017-3.

- 17. The Joint Commission National Patient Safety Goals. Available at: http://www.jointcommission.org/PatientSafety/NationalPatientSafetyGoals/ (Accessed 20 December 2012).

- 18. Hettinger AZ, Fairbanks RJT. Recognition of patient selection errors in a simulated computerized provider order entry system. Proceedings of the Human Factors and Ergonomics Society Annual Meeting. 2012;56(1):1743–1747.

- 19. Henneman PL, Fisher DL, Henneman EA, et al. Providers do not verify patient identity during computer order entry. Acad Emerg Med. 2008;15:641–8. doi: 10.1111/j.1553-2712.2008.00148.x.

- 20. Novick D, Rhodes J, Wert W. The communicative functions of animation in user interfaces. Proceedings of the 29th ACM international conference on Design of communication, SIGDOC ’11, pages 1–8; New York, NY, USA. ACM; 2011.

- 21. Tversky B. Visualizing Thought. Topics in Cognitive Science. 2011;3:499–535. doi: 10.1111/j.1756-8765.2010.01113.x.

- 22. Schlienger C, Conversy S, Chatty S, Anquetil M, Mertz C. Improving users’ comprehension of changes with animation and sound: An empirical assessment. Proc. Human-Computer Interaction INTERACT 2007, volume 4662 of Lecture Notes in Computer Science, pages 207–220; Heidelberg: Springer Berlin; 2007.

- 23. Plaisant C, Chao T, Wu J, Hettinger A, Herskovic J, Johnson T, Bernstam E, Markowitz E, Powsner S, Shneiderman B. Twinlist: Novel User Interface Designs for Medication Reconciliation. Proceedings of AMIA Annual Symposium. 2013:1150–1159.

- 24. Halverson T, Hornof AJ. Local density guides visual search: Sparse groups are first and faster. Proceedings of the Human Factors and Ergonomics Society Annual Meeting. 2004;48(16):1860–1864.

- 25. Marian A, Dexter F, Tucker P, Todd M. Comparison of alphabetical versus categorical display format for medication order entry in a simulated touch screen anesthesia information management system: an experiment in clinician-computer interaction in anesthesia. BMC Medical Informatics and Decision Making. 2012;12(1):46. doi: 10.1186/1472-6947-12-46.

- 26. Wobbrock JO, Cutrell E, Harada S, MacKenzie IS. An error model for pointing based on Fitts’ law. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’08, pages 1613–1622; 2008.

- 27. Galanter W, Falck S, Burns M, Laragh M, Lambert BL. Indication-based prescribing prevents wrong-patient medication errors in computerized provider order entry (CPOE). J Am Med Inform Assoc. 2013 May 1;20(3):477–81. doi: 10.1136/amiajnl-2012-001555.

- 28. Taieb-Maimon M, Plaisant C, Hettinger Z, Shneiderman B. Increasing recognition of wrong patient errors while using a computerized provider order entry system: an experiment. Under review; contact authors. 2013.

- 29. Sittig DF, Singh H. Electronic health records and national patient-safety goals. N Engl J Med. 2012;367(19):1854–1860. doi: 10.1056/NEJMsb1205420.

- 30. Elder NC, Graham D, Brandt E, Hickner J. Barriers and motivators for making error reports from family medicine offices: A report from the American Academy of Family Physicians National Research Network (AAFP NRN). The Journal of the American Board of Family Medicine. 2007 March-April;20(2):115–123. doi: 10.3122/jabfm.2007.02.060081.

- 31. Kaprielian V, Østbye T, Warburton S, Sangvai D, Michener L, et al. A system to describe and reduce medical errors in primary care. Advances in Patient Safety: New Directions and Alternative Approaches. 2008;1.

- 32. Kohavi R, Longbotham R, Sommerfield D, Henne R. Controlled experiments on the web: survey and practical guide. Data Mining and Knowledge Discovery. 2009;18:140–181.