Abstract

Background

A coordinated fitting of a cochlear implant (CI) and contralateral hearing aid (HA) for bimodal device use should emphasize balanced audibility and loudness across devices. However, guidelines for allocating frequency information to the CI and HA are not well established for the growing population of bimodal recipients.

Purpose

The aim of this study was to compare the effects of three different HA frequency responses on speech recognition and localization in children and young adults fitted with a CI and an HA for bimodal use. The three frequency responses (wideband, restricted high frequency, and nonlinear frequency compression [NLFC]) were compared using measures of word recognition in quiet, sentence recognition in noise, talker discrimination, and sound localization.

Research Design

The HA frequency responses were evaluated using an A B1 A B2 test design: wideband frequency response (baseline-A), restricted high-frequency response (experimental-B1), and NLFC-activated (experimental-B2). All participants were allowed 3–4 weeks between each test session for acclimatization to each new HA setting. Bimodal benefit was determined by comparing the bimodal score to the CI-alone score.

Study Sample

Participants were 14 children and young adults (ages 7–21 yr) who were experienced users of bimodal devices. All had been unilaterally implanted with a Nucleus CI24 internal system and used either a Freedom or CP810 speech processor. All received a Phonak Naida IX UP behind-the-ear HA at the beginning of the study.

Data Collection and Analysis

Group results for the three bimodal conditions (HA frequency response with wideband, restricted high frequency, and NLFC) on each outcome measure were analyzed using a repeated measures analysis of variance. Group results using the individual “best bimodal” score were analyzed and confirmed using a resampling procedure. Correlation analyses examined the effects of audibility (aided and unaided hearing) in each bimodal condition for each outcome measure. Individual data were analyzed for word recognition in quiet, sentence recognition in noise, and localization. Individual preference for the three bimodal conditions was also assessed.

Results

Group data revealed no significant difference between the three bimodal conditions for word recognition in quiet, sentence recognition in noise, and talker discrimination. However, group data for the localization measure revealed that both wideband and NLFC resulted in significantly improved bimodal performance. The condition that yielded the “best bimodal” score varied across participants. Because of this individual variability, the “best bimodal” score was selected for each participant to reassess group data for word recognition in quiet, sentence recognition in noise, and talker discrimination. This method revealed a bimodal benefit for word recognition in quiet, which was confirmed with a randomization test. The majority of the participants preferred NLFC at the conclusion of the study, although a few preferred a restricted high-frequency response or reported no preference.

Conclusions

These results support consideration of restricted high-frequency and NLFC HA responses in addition to traditional wideband response for bimodal device users.

Keywords: Pediatric, bimodal fittings, speech perception

INTRODUCTION

The use of a cochlear implant (CI) in one ear and a hearing aid (HA) in the nonimplanted ear is referred to as bimodal device use. An increasing number of individuals are potential bimodal device recipients as candidacy guidelines for cochlear implantation expand to include individuals with more residual hearing. For example, many adults and children with asymmetric hearing loss may present with hearing threshold levels that are within the severe to profound range at one ear with less severe hearing loss at the opposite ear (Firszt et al, 2012; Cadieux et al, 2013). The rationale for fitting bimodal devices is that in addition to providing potential access to binaural cues that enhance listening in everyday environments, the acoustic information from the HA may improve overall speech recognition and perception of music. Specifically, low-frequency acoustic information from the HA may provide phonetic cues related to consonant voicing and manner that complement the CI and improve overall recognition of speech (Ching et al, 2011). Furthermore, these low-frequency acoustic cues may transmit pitch cues that improve perception of music (McDermott, 2011). The ability to localize sound, hear in the presence of background noise, and perceive music are among the outcomes that may be improved when listening bimodally compared to a unilateral CI (Ching, Hill, et al, 2005; Kong et al, 2005; Ching et al, 2007; Gifford et al, 2007a; Dorman et al, 2008; Uchanski et al, 2009). In addition, some period of bimodal device use prior to children receiving bilateral sequential CIs may have benefits for language development (Nittrouer and Chapman, 2009) and localization abilities (Grieco-Calub and Litovsky, 2010). Given that the normal auditory system is highly reliant on input from both ears, children listening through only one ear must develop speech and language via a system that is not only impaired, but also receives unilateral input. 
A coordinated fitting of an HA and CI for bimodal use should emphasize balanced audibility and loudness across the two ears and devices (Blamey et al, 2000; Ching et al, 2001; Ching, van Wanrooy, et al, 2005; Keilmann et al, 2009). However, guidelines for allocating frequency information to the CI and HA are less well established for this population. Some investigators have varied the degree of frequency overlap between the CI and HA, assigning low-frequency information to the HA for acoustic delivery and high-frequency information to the CI for electric delivery (Vermeire et al, 2008b). Other studies have specifically limited the frequency range of the CI program for recipients with traditional bimodal fittings (CI + HA) and with hybrid or electroacoustic stimulation (Kiefer et al, 2005; Simpson et al, 2009b). Results have been inconclusive: some studies supported programming the devices so that the frequency ranges delivered acoustically and electrically overlap to some degree (Kiefer et al, 2005; Vermeire et al, 2008b), others supported programming the devices with no overlap (Gantz and Turner, 2004; James et al, 2006), and still others have shown no significant difference between the two approaches (Simpson et al, 2009a). Vermeire et al (2008a) emphasized that for bimodal fittings, the HA fitting protocol should depend on the degree and configuration of the acoustic thresholds and the extent to which acoustic gain can be applied.

Although the mechanisms underlying bimodal benefit are not completely understood, the low-frequency acoustic cues from the HA are thought to be the primary source of the benefit observed in speech perception (Dorman et al, 2008; Zhang et al, 2010). In many cases, the unaided low- to mid-frequency thresholds (~125–1000 Hz) fall in the mild to moderate range, and the high-frequency thresholds (1500 Hz and above) fall in the severe to profound range. As such, the HA frequency response may be optimal when gain and output are confined primarily to the low-frequency region, or at least to the frequency region where an individual has usable residual hearing. In 14 adult CI recipients, bimodal benefit (CI + HA versus CI alone) for speech perception correlated with poorer aided HA thresholds in the mid- to high-frequency range (Mok et al, 2006). Similarly, in nine pediatric bimodal users, greater bimodal benefit correlated with better aided HA thresholds at 250–500 Hz and poorer HA thresholds at 4000 Hz (Mok et al, 2010). The authors suggested that high-frequency gain provided by the HA may interfere with the information provided by the CI and ultimately reduce bimodal benefit. In contrast, another study with 19 adult bimodal users found that better aided HA thresholds at 1500 and 2000 Hz were related to better localization of speech in the bimodal condition (Potts et al, 2009). More recently, Neuman and Svirsky (2013) systematically varied the HA frequency bandwidth for 14 adult bimodal users to examine the effects of frequency response on bimodal benefit for speech recognition in quiet and noise. The HA gain and output for the maximum (i.e., widest) bandwidth were set using the National Acoustic Laboratories-Revised Profound (NAL-RP) prescriptive targets (Byrne et al, 1991).
Gain and output were then modified from these prescriptive targets to create increasingly restricted bandwidths with cutoff frequencies of 2000, 1000, and 500 Hz. Restricting the bandwidth to frequencies below 1000 Hz did not provide significantly greater speech recognition benefit; the best performance was observed when amplification was provided across all frequencies with usable hearing.

Another option for the HA frequency response for bimodal recipients is frequency-lowering technology, which is available in some HAs. In this case, high-frequency information is shifted to a lower frequency region, although the terminology and technology vary across devices (Glista et al, 2009). Candidates for this technology are individuals who cannot achieve high-frequency audibility because of severe high-frequency hearing loss combined with the limits of HA gain and acoustic feedback in this region. This has important implications for children, who require a wider frequency bandwidth, and thus more high-frequency information, than adults for optimal speech perception (Pittman and Stelmachowicz, 2000; Stelmachowicz et al, 2002; Pittman et al, 2005). High-frequency audibility plays a critical role in children's linguistic development, not only because consonants are important for overall speech intelligibility (Miller and Nicely, 1955), but also because they are important for speech, grammar, and vocabulary development; for example, the English phonemes /s/ and /z/ mark plurality and possession (Moeller et al, 2007a,b; Stelmachowicz et al, 2007).

Frequency-lowering technology has generally produced positive benefits for children with moderate to severe hearing loss who use HAs, especially for recognition of high-frequency phonemes (McCreery et al, 2012). For bimodal recipients, however, nonlinear frequency compression (NLFC), in which information above a specified cutoff frequency is compressed by a specified ratio while lower frequency regions are left unchanged, has met with mixed results. Consonant perception in quiet and sentence recognition in noise did not differ for adult bimodal recipients with NLFC activated versus deactivated; however, the participants readily accepted the use of NLFC in the HA (McDermott and Henshall, 2010). The effects of NLFC on spondee recognition in noise, sound localization, and self-report questionnaires were examined for 10 adult bimodal recipients (Perreau et al, 2013) who alternated daily between two HA responses (NLFC activated versus a conventional, NLFC-inactive setting). Localization results revealed no significant differences between the two HA settings, suggesting that NLFC offered no additional benefit over the conventional setting. Furthermore, bimodal benefit in noise (CI + HA versus CI alone) occurred only for the conventional HA setting, and many of the participants rated the NLFC setting as “distorted” or “harsh.” Word and consonant recognition in quiet and sentence recognition in noise did not differ for 11 pediatric bimodal recipients who wore HAs with and without NLFC (Park et al, 2012). In contrast to the adults in the Perreau et al (2013) study, however, some of these pediatric users preferred NLFC. As CI recipients present with greater degrees of residual hearing in the nonimplanted ear, clinicians must carefully consider frequency response options and available technology for the HA.

The aim of this study was to compare the effects of three different HA frequency responses (wideband, restricted high frequency, and NLFC) when fitting a CI and an HA for bimodal use in children and young adults. Group and individual results were compared across fitting conditions for word recognition in quiet, sentence recognition in noise, talker discrimination, and localization.

METHODS

Participants

Fourteen children and young adults (eight male, six female) ranging in age from 7 to 21 yr (mean age at testing, 12 yr) participated in this study. Participants had been unilaterally implanted (11 in the left ear; 4 in the right ear) with a Nucleus CI24 internal system at least 2.5 yr before the study, used either a Freedom or CP810 speech processor, and had a Consonant-Nucleus-Consonant (CNC; Peterson and Lehiste, 1962) word recognition score in quiet of at least 40%. Mean age at implantation was 7.6 yr, which is older than typical; this reflects the participants' greater residual hearing and the expansion of cochlear implantation criteria. Participants were experienced bimodal users and were fit with a Phonak Naida IX UP behind-the-ear HA at the beginning of the study; each was allowed to keep the HA at the completion of the study. No noise processing or directional microphones were active in the HA. The mean unaided pure-tone average (PTA; 500, 1000, and 2000 Hz) at the HA ear was 84.8 dB HL (range, ~32–104 dB HL). Table 1 lists demographic and audiological information, including CI information, for each participant. All participants had mean aided CI thresholds ≤30 dB HL from 250 to 6000 Hz. The study was approved by the Human Research Protection Office of Washington University in St. Louis.

Table 1.

Participant Characteristics

| Participant | Age at Test (yr) | Age at First HA Fit (yr) | Age at CI (yr) | Internal CI | PTA HA Ear Unaided (dB HL) | PTA HA Ear Aided (dB HL) | Pre-CI PTA Unaided (dB HL) |
|---|---|---|---|---|---|---|---|
| P01 | 15 | 1 | 11 | CI24RE (CA) | 104.3 | 64.7 | 110.0 |
| P02 | 21 | 2 | 20 | CI24RE (CA) | 96.7 | 30.0 | 100.0 |
| P03 | 9 | 0.5 | 4 | CI24RE (CA) | 101.7 | 48.3 | 91.7 |
| P04 | 12 | 4 | 7 | CI24RE (CA) | 86.7 | 24.7 | 88.3 |
| P05 | 15 | 1 | 8 | CI24R (ST) | 90.0 | 31.3 | 80.0 |
| P06 | 7 | 3 | 4 | CI24RE (CA) | 101.7 | 24.0 | 101.7 |
| P07 | 7 | 2 | 3 | CI24RE (CA) | 80.0 | 24.7 | 100.0 |
| P08 | 14 | 2 | 6 | CI24R (CS) | 100.0 | 28.7 | 95.0 |
| P09 | 8 | 1.5 | 5 | CI24RE (CA) | 86.7 | 28.0 | 86.7 |
| P10 | 15 | 5 | 6 | CI24M | 78.3 | 31.3 | 78.3 |
| P11 | 7 | 0.5 | 3 | CI24RE (CA) | 73.3 | 20.0 | 115.0 |
| P12 | 10 | 2 | 5 | CI24RE (CA) | 31.7 | 20.7 | 83.3 |
| P13 | 17 | 3 | 11 | CI24RE (CA) | 76.7 | 29.3 | 86.7 |
| P14 | 13 | 1 | 9 | CI24RE (CA) | 80.0 | 29.3 | 76.7 |

Notes: PTA = pure-tone average at 500, 1000, and 2000 Hz in dB HL.

CI24RE (CA) = Nucleus Freedom Contour Advance; CI24R (CS) = Nucleus 24 Contour; CI24R (ST) = Nucleus 24; CI24M = Nucleus 24.
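The PTA used throughout Table 1 is the mean threshold at 500, 1000, and 2000 Hz. The short Python sketch below, an illustration rather than the authors' analysis code, computes a three-frequency PTA and reproduces the group summary reported under Participants (mean unaided PTA at the HA ear of 84.8 dB HL, range ~32–104 dB HL) from the Table 1 column. The function name and the example audiogram are hypothetical.

```python
from statistics import mean

def pure_tone_average(thresholds_db_hl: dict[int, float]) -> float:
    """Three-frequency PTA: mean threshold at 500, 1000, and 2000 Hz (dB HL)."""
    return mean(thresholds_db_hl[f] for f in (500, 1000, 2000))

# Unaided PTA of the HA ear for P01-P14, copied from Table 1 (dB HL).
unaided_pta_ha_ear = [104.3, 96.7, 101.7, 86.7, 90.0, 101.7, 80.0,
                      100.0, 86.7, 78.3, 73.3, 31.7, 76.7, 80.0]

group_mean = mean(unaided_pta_ha_ear)
low, high = min(unaided_pta_ha_ear), max(unaided_pta_ha_ear)
print(f"mean {group_mean:.1f} dB HL, range {low}-{high} dB HL")
# prints: mean 84.8 dB HL, range 31.7-104.3 dB HL

# Hypothetical audiogram, for illustration only (250 and 4000 Hz are ignored).
print(pure_tone_average({250: 60, 500: 80, 1000: 90, 2000: 100, 4000: 110}))  # 90
```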

Study Design

The three HA frequency responses were evaluated using an A B1 A B2 test design: wideband frequency response (baseline-A), restricted high-frequency response (experimental-B1), and NLFC activated (experimental-B2). All participants were allowed 3–4 weeks between sessions to acclimatize to each new HA setting. All participants were consistent users of bimodal devices and reported using their CI and HA for the majority of the day; however, HA wear time was not confirmed using data logging. The total duration of the study was ~16–20 weeks. Participant preference was assessed at the conclusion of the study by allowing participants to listen to each HA setting and choose the setting they preferred to continue using on a daily basis. The baseline HA response was the wideband setting, in which the HA output was matched to Desired Sensation Level (DSL v5.0; Scollie et al, 2005) targets from 250 to 6000 Hz whenever possible. HA output was verified using the Audioscan Verifit system. Real-ear-to-coupler differences (RECDs) were obtained for each child and applied to simulated real-ear measures (SREM) to assess HA output for various stimuli. Verification stimuli included calibrated speech (i.e., male-talker speech maps) at three input levels (50, 60, and 70 dB SPL) and a sweep of tone bursts presented at 90 dB SPL to assess maximum power output. Both the restricted high-frequency and NLFC settings were optimized for each participant based on unaided thresholds, aided thresholds, and SREM output for average conversational speech (speech map at 60 dB SPL).
The cutoff frequency for limiting gain and output in the restricted high-frequency response was the lowest frequency at which both (1) the unaided hearing threshold was ≥90 dB HL and (2) the root mean square (RMS) average of the aided speech map at 60 dB SPL fell below the unaided threshold. When the HA bandwidth was restricted, some participants reported reduced loudness during balancing of the CI and HA for audibility and loudness; for these participants, the low-frequency gain of the HA was increased to compensate. The NLFC cutoff frequency was determined individually based on three factors: (1) HA output relative to the DSL targets (derived from unaided thresholds) for an average conversational input (60 dB SPL), (2) recognition of the Ling 6 sounds (ah, oo, ee, m, sh, s), and (3) aided sound-field thresholds obtained with frequency-modulated tones. The chosen cutoff frequency provided maximum audibility, as measured on the Audioscan Verifit and with aided sound-field thresholds, with minimal Ling 6 sound confusions. Once the optimal cutoff frequency was selected, the frequency compression ratio was assigned by the manufacturer's fitting software. Table 2 shows each participant's cutoff for the restricted high-frequency response and the cutoff frequency and compression ratio for the NLFC setting.
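The rule for the restricted cutoff can be stated as a search over the audiogram frequencies. The sketch below is a hypothetical illustration, not the clinical procedure or software used in the study: it assumes the unaided thresholds are available both in dB HL (for the ≥90 dB HL criterion) and converted to ear-canal dB SPL (for comparison with the speech-map RMS, as on an SPL-based verification display), and all example values are invented.

```python
from typing import Optional, Sequence

def restricted_cutoff_hz(
    freqs_hz: Sequence[int],
    unaided_hl: Sequence[float],      # unaided thresholds, dB HL
    unaided_spl: Sequence[float],     # same thresholds in ear-canal dB SPL (assumed available)
    speech_rms_spl: Sequence[float],  # RMS of the aided 60 dB SPL speech map, dB SPL
    hl_limit: float = 90.0,
) -> Optional[int]:
    """Return the lowest frequency meeting BOTH criteria, or None if none does:
    (1) unaided threshold >= hl_limit dB HL, and
    (2) aided speech RMS falls below the unaided threshold."""
    rows = sorted(zip(freqs_hz, unaided_hl, unaided_spl, speech_rms_spl))
    for freq, thr_hl, thr_spl, rms in rows:
        if thr_hl >= hl_limit and rms < thr_spl:
            return freq
    return None

# Hypothetical fitting data (not from any participant).
freqs = [250, 500, 1000, 1500, 2000, 3000, 4000]
thr_hl = [50, 60, 75, 90, 95, 100, 105]
thr_spl = [65, 72, 82, 98, 103, 110, 115]
speech_rms = [80, 85, 88, 100, 90, 85, 80]

print(restricted_cutoff_hz(freqs, thr_hl, thr_spl, speech_rms))  # 2000
```

In this invented example, 1500 Hz meets the 90 dB HL criterion but the aided speech is still above threshold there, so the rule moves on to 2000 Hz.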

Table 2.

HA Settings

| Participant | NLFC Ratio | NLFC Cutoff (Hz) | Restricted Cutoff (Hz) |
|---|---|---|---|
| P01 | 4:1 | 1600 | 1000 |
| P02 | 4:1 | 1600 | 1000 |
| P03 | 4:1 | 1500 | 1000 |
| P04 | 2.2:1 | 2400 | 1500 |
| P05 | 4:1 | 1500 | 1000 |
| P06 | 4:1 | 2800 | 2000 |
| P07 | 4:1 | 2100 | 1500 |
| P08 | 2.8:1 | 3300 | 1500 |
| P09 | 3.8:1 | 3000 | 1000 |
| P10 | 4:1 | 1800 | 1000 |
| P11 | 3.7:1 | 3100 | 2000 |
| P12 | 2.3:1 | 6000 | 3000 |
| P13 | 2.2:1 | 2400 | 500 |
| P14 | 1.5:1 | 3400 | 1500 |
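To make the Table 2 settings concrete, the sketch below maps input frequencies to output frequencies under one commonly described NLFC formulation, in which frequencies above the cutoff are compressed on a logarithmic frequency axis by the stated ratio and frequencies below the cutoff pass unchanged. This mapping is an assumption for illustration; the source does not give the manufacturer's exact input-output function, and the function name is hypothetical.

```python
def nlfc_output_hz(f_in_hz: float, cutoff_hz: float, ratio: float) -> float:
    """Output frequency for an input frequency under an ASSUMED log-domain NLFC rule."""
    if f_in_hz <= cutoff_hz:
        return f_in_hz  # below the cutoff, frequencies are left unchanged
    # Above the cutoff, the log-frequency distance from the cutoff is divided by the ratio.
    return cutoff_hz * (f_in_hz / cutoff_hz) ** (1.0 / ratio)

# Ratio and cutoff (Hz) copied from Table 2 for a few participants.
table2 = {"P01": (4.0, 1600), "P08": (2.8, 3300), "P12": (2.3, 6000), "P14": (1.5, 3400)}

for pid, (ratio, cutoff) in table2.items():
    print(pid, [round(nlfc_output_hz(f, cutoff, ratio)) for f in (1000, 4000, 6000)])
```

Under this assumption, P01's setting (4:1, 1600 Hz) would place a 6000-Hz input near 2230 Hz, whereas P12's 6000-Hz cutoff would leave frequencies up to 6000 Hz uncompressed.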

Test Measures

Test measures were administered in each condition (A, B1, A, B2). Whenever possible, all tests were conducted in the CI-alone, HA-alone, and bimodal conditions. All speech perception measures used recorded stimuli presented in the sound field via an audiometer in a sound-treated booth. All test stimuli were calibrated using a type 2 sound level meter (A weighting) placed at the position of the participant's device. Participants were seated at 0° azimuth, 3 ft from the loudspeaker, for all test measures except localization. Testing was completed by experienced pediatric audiologists, and participant responses were judged by a single examiner.

The CNC monosyllabic word lists (50 words per list) were presented in quiet at 60 dB SPL and scored as percentage of words correct. The Bamford-Kowal-Bench Speech-in-Noise (BKB-SIN) Test (Killion et al, 2004) was presented in four-talker babble with 16–20 sentences per list. The target sentences and the noise were presented from the same loudspeaker. List pairs that yield equivalent signal-to-noise ratios (SNRs) were used for each condition. Results were expressed as the SNR at which 50% of the words were repeated correctly (SNR-50). Verbal responses were obtained for both tests.
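Descending-SNR sentence tests are commonly scored with the Spearman–Kärber equation, which converts the total number of key words correct into an SNR-50. The sketch below shows that general equation, assuming a fixed step size and an equal number of key words per step; the starting SNR, step size, and word counts in the example are hypothetical, and the BKB-SIN manual's own scoring constants should be used in practice.

```python
def snr50_spearman_karber(start_snr_db: float, step_db: float,
                          words_per_step: int, total_correct: int) -> float:
    """Spearman-Kärber SNR-50 for a list whose SNR falls by step_db after each sentence.

    Assumes every sentence (step) carries the same number of scored key words.
    """
    return start_snr_db + step_db / 2 - step_db * total_correct / words_per_step

# Hypothetical list: starts at +21 dB SNR, 3-dB steps, 3 key words per sentence.
list_a = snr50_spearman_karber(21, 3, 3, total_correct=20)
list_b = snr50_spearman_karber(21, 3, 3, total_correct=17)
print(list_a, list_b, (list_a + list_b) / 2)  # 2.5 5.5 4.0 (per-list values and pair mean)
```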

Localization was evaluated using CNC words presented from an array of 15 loudspeakers at 60 dB SPL (±3 dB roving). Ten words were presented from each of ten active loudspeakers (100 words), located at ±70°, ±50°, ±30°, ±20°, and ±10° azimuth. The five inactive loudspeakers were located at ±60°, ±40°, and 0° azimuth. The word “ready” was presented before each CNC word. Participants were instructed to face the center loudspeaker (0° azimuth) until they heard “ready,” after which they could turn their heads. They reported the loudspeaker location by number (1–15) and then returned their gaze to the center loudspeaker. Results were calculated as an RMS error based on the difference between the actual loudspeaker location and the location identified by the participant. Lower values indicate better performance.
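The RMS error metric can be sketched as follows. The source does not state how loudspeaker numbers map to azimuths; the sketch assumes, hypothetically, that loudspeakers 1–15 run from −70° to +70° in 10° steps, which reproduces the active and inactive positions listed above. Function names are illustrative.

```python
import math
from typing import Sequence

def speaker_to_azimuth(speaker: int) -> int:
    """HYPOTHETICAL numbering: loudspeaker 1 at -70 deg ... loudspeaker 15 at +70 deg."""
    if not 1 <= speaker <= 15:
        raise ValueError("loudspeaker number must be between 1 and 15")
    return -70 + 10 * (speaker - 1)

def rms_error_deg(targets: Sequence[int], responses: Sequence[int]) -> float:
    """RMS of (response azimuth - target azimuth) over all trials, in degrees."""
    if len(targets) != len(responses) or not targets:
        raise ValueError("need one response per target")
    errors = [speaker_to_azimuth(r) - speaker_to_azimuth(t) for t, r in zip(targets, responses)]
    return math.sqrt(sum(e * e for e in errors) / len(errors))

# Active loudspeakers under the assumed numbering: +/-70, 50, 30, 20, 10 deg.
ACTIVE = (1, 3, 5, 6, 7, 9, 10, 11, 13, 15)
print(sorted(speaker_to_azimuth(s) for s in ACTIVE))
print(round(rms_error_deg([1, 5, 9, 13], [2, 5, 8, 13]), 2))  # 7.07
```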

Sentence stimuli from the Harvard IEEE corpus (IEEE, 1969) and the Indiana Multi-Talker Speech Database (Karl and Pisoni, 1994) were used to assess talker discrimination within male and within female talkers. Sentences spoken by eight female and eight male talkers were presented at 60 dB SPL using the APEX 3 program developed at ExpORL (Laneau et al, 2005; Francart et al, 2008). In a two-interval, two-alternative forced-choice paradigm, the listener responded by pointing to or clicking on one of two cartoon images corresponding to “same person” or “different people.” On every trial, the two intervals contained different sentences; however, the listener did not need to understand the words to respond.

Unaided thresholds at audiometric frequencies from 125 to 8000 Hz were obtained with insert earphones for each ear. Aided sound-field detection thresholds were obtained at each test session in three conditions (HA alone, CI alone, and bimodal HA + CI) using frequency-modulated tones at audiometric frequencies from 125 to 6000 Hz. The participant was seated ~1 m from the loudspeaker at 0° azimuth, and thresholds were obtained in 2-dB steps. As an additional measure of aided audibility, a Speech Intelligibility Index (SII) value was calculated from the SREM responses for the HA at each of the three input levels (50, 60, and 70 dB SPL) for each child. The Audioscan Verifit system calculated the SII using the one-third octave band method, including level distortion effects.
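The SII weights the audibility of speech in each frequency band by that band's importance. The sketch below is a simplified version of the band calculation in ANSI S3.5-1997, kept to its core terms (band audibility over a 30-dB dynamic range and a level distortion factor). It omits spread of masking and other corrections, is not the Verifit's implementation, and uses hypothetical band levels and equal importance weights rather than the standard's band-importance function.

```python
from typing import Optional, Sequence

def simplified_sii(
    speech_db: Sequence[float],        # speech level in each band
    disturbance_db: Sequence[float],   # max(noise, threshold-based internal noise) per band
    importance: Sequence[float],       # band-importance weights, summing to 1
    standard_speech_db: Optional[Sequence[float]] = None,  # normal-effort speech, for level distortion
) -> float:
    """SII = sum_i I_i * L_i * K_i, a simplified form of the ANSI S3.5-1997 calculation."""
    sii = 0.0
    for i, (e, d, w) in enumerate(zip(speech_db, disturbance_db, importance)):
        # Band audibility: 0 when speech is 15 dB below the disturbance, 1 when 15 dB above.
        k = min(max((e - d + 15.0) / 30.0, 0.0), 1.0)
        level_factor = 1.0
        if standard_speech_db is not None:
            # Level distortion: penalize speech more than 10 dB above normal vocal effort.
            level_factor = min(max(1.0 - (e - standard_speech_db[i] - 10.0) / 160.0, 0.0), 1.0)
        sii += w * level_factor * k
    return sii

# Four hypothetical bands with equal (hypothetical) importance weights.
print(round(simplified_sii([60, 55, 50, 40], [40, 45, 50, 60], [0.25] * 4), 3))  # 0.583
```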

Data Analysis

Group bimodal benefit was first examined by comparing each of the three bimodal conditions with the CI-alone condition using a repeated measures analysis of variance (ANOVA). The CI-alone score was used for this comparison because all participants considered their CI to be the dominant device. Based on past studies evaluating multiple conditions (McDermott and Henshall, 2010; Park et al, 2012), we anticipated substantial individual variability in which condition yielded the “best bimodal” score for a particular outcome measure. Because of this variability, an ANOVA with condition as the main effect was expected to show minimal or no group bimodal benefit for any particular condition. Applying the same standard analysis to the “best bimodal” score (i.e., the largest difference between the CI alone and any of the three bimodal conditions) was also problematic, because the critical value required to reject the null hypothesis was unknown. Because each participant has three opportunities (bimodal conditions) to show a bimodal advantage, the mean of these best differences may appear significantly different from zero even if the bimodal conditions had no real effect. For this kind of problem, the theoretical sampling distribution is unknown, and a randomization test can be used to assess significance.
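The bias described above can be illustrated with a small simulation. Under a hypothetical null model in which all four conditions share the same true score and differ only by independent, normally distributed measurement error, choosing the largest of three bimodal-minus-CI differences yields a mean gain well above zero. The noise model, parameter values, and function name are assumptions for illustration only.

```python
import math
import random
from statistics import mean

def null_mean_best_gain(n_participants: int = 14, n_bimodal: int = 3,
                        n_sims: int = 2000, noise_sd: float = 1.0, seed: int = 1) -> float:
    """Mean 'best bimodal minus CI alone' gain when the HA truly adds nothing.

    Under this hypothetical null model every condition has the same true score,
    so each observed score is that true score plus independent noise; the true
    score cancels in the difference and is omitted.
    """
    rng = random.Random(seed)
    sim_means = []
    for _ in range(n_sims):
        gains = []
        for _ in range(n_participants):
            ci_alone = rng.gauss(0.0, noise_sd)
            best_bimodal = max(rng.gauss(0.0, noise_sd) for _ in range(n_bimodal))
            gains.append(best_bimodal - ci_alone)  # keep only the "best bimodal" difference
        sim_means.append(mean(gains))
    return mean(sim_means)

# Theory: E[max of 3 standard normals] = 3 / (2 * sqrt(pi)), about 0.846 noise SDs.
print(round(null_mean_best_gain(), 3), round(3 / (2 * math.sqrt(math.pi)), 3))
```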

For the randomization test, the choice of a target condition (the CI-alone condition in the original comparison) was treated as arbitrary, as were any differences between that target condition and the remaining conditions. This provided a basis for determining what would be expected under the null hypothesis. In this procedure, the target condition (CI alone in the original data) and the comparison conditions (the three bimodal conditions in the original data) were randomly assigned for each participant. The largest difference between the target and the comparison conditions was then selected for each participant, and these differences were averaged across participants. This procedure was repeated a large number of times (1,000,000 in this study) to create a sampling distribution of mean differences expected under a random generating model, that is, the null hypothesis. The location of the original mean difference in this randomization distribution was then determined. If it was a rare event in that distribution (i.e., occurring with probability <0.05), the random generating process (the null hypothesis) was rejected.
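One reading of this procedure is sketched below in Python: for each participant, one of the four conditions is drawn at random as the target, the other three serve as comparisons, and the largest improvement over the target is kept. It is a minimal sketch, not the authors' code. It defaults to 10,000 iterations rather than the 1,000,000 used in the study, counts ties as "at least as large" (a conservative choice that is our assumption), and flips the sign for measures where lower is better (BKB-SIN SNR-50, RMS error). Function names and the example data are hypothetical.

```python
import random
from statistics import mean
from typing import Sequence

def best_difference(scores: Sequence[float], target: int, higher_is_better: bool) -> float:
    """Largest improvement of any other condition over the target condition."""
    sign = 1.0 if higher_is_better else -1.0  # flip for SNR-50 or RMS error
    return max(sign * (s - scores[target]) for i, s in enumerate(scores) if i != target)

def randomization_test(data: Sequence[Sequence[float]], higher_is_better: bool = True,
                       n_iter: int = 10_000, seed: int = 0) -> tuple[float, float]:
    """data: one row per participant, column 0 = CI alone, columns 1-3 = bimodal.

    Returns (observed mean best difference, proportion of randomizations whose
    mean best difference is at least as large as the observed one).
    """
    observed = mean(best_difference(row, 0, higher_is_better) for row in data)
    rng = random.Random(seed)
    n_at_least = 0
    for _ in range(n_iter):
        # Under the null, which condition is the "target" is arbitrary: draw it per participant.
        sim = mean(best_difference(row, rng.randrange(len(row)), higher_is_better)
                   for row in data)
        if sim >= observed:
            n_at_least += 1
    return observed, n_at_least / n_iter

# Hypothetical CNC percent-correct rows: [CI alone, wideband, restricted, NLFC].
rows = [[40, 70, 72, 74]] * 14
print(randomization_test(rows, n_iter=2000))
```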

Correlation analysis was used to determine whether unaided thresholds, aided thresholds, or SII indices were associated with performance on a particular outcome measure.

Significance at the individual level was determined for the CNC word lists at the 0.05 level based on the binomial model (Carney and Schlauch, 2007) and the 95% confidence interval (>3.1 dB) for the BKB-SIN based on adult CI users with two list pairs (Killion et al, 2004). Individual differences for the localization test were determined by calculating the mean and standard deviation for individual responses for each speaker location using an ordinary least squares regression. Correction of standard errors for unequal variance between conditions was used to compare the slopes of the fitted lines. This method accounted for differences in variance as well as differences in slope.
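The binomial model rests on the idea that the variability of a 50-word score depends on the proportion correct. The sketch below illustrates that idea with an exact comparison of two list scores under a pooled-proportion binomial null; it is not the published critical-difference table (Carney and Schlauch, 2007), which should be consulted in practice. The BKB-SIN helper simply applies the >3.1 dB criterion stated above. Function names and example scores are hypothetical.

```python
from math import comb

def binomial_pmf(k: int, n: int, p: float) -> float:
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def word_score_difference_p(correct_a: int, correct_b: int, n_words: int = 50) -> float:
    """Two-sided p value for a difference between two n-word list scores.

    Null model (an assumption of this sketch): both scores are independent
    Binomial(n_words, p) draws, with p estimated from the pooled score.
    """
    p = (correct_a + correct_b) / (2 * n_words)
    pmf = [binomial_pmf(k, n_words, p) for k in range(n_words + 1)]
    observed = abs(correct_a - correct_b)
    return sum(pa * pb
               for a, pa in enumerate(pmf)
               for b, pb in enumerate(pmf)
               if abs(a - b) >= observed)

def bkb_sin_difference_significant(snr50_a: float, snr50_b: float) -> bool:
    """Apply the >3.1 dB 95% critical difference cited above for two BKB-SIN list pairs."""
    return abs(snr50_a - snr50_b) > 3.1

# Hypothetical CNC scores out of 50 words: 50% vs 80%, then 50% vs 54%.
print(word_score_difference_p(25, 40) < 0.05, word_score_difference_p(25, 27) < 0.05)  # True False
```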

The effects of learning and maturation over the duration of the study were examined by comparing the CI-alone scores for each outcome measure across the last three test sessions (or any three if they were missing a CI-alone test score) using a repeated measures ANOVA. The majority of participants completed testing in the CI-alone condition at each of the four test sessions; however, occasionally CI-alone testing was not conducted due to time constraints or participant fatigue. In addition, scores for the two baseline bimodal conditions (wideband bimodal at sessions 1 and 3) for each outcome measure were compared using paired t tests.

RESULTS

Repeated measures ANOVA for the CI-alone conditions across the three test sessions indicated no significant differences for any of the outcome measures: CNC-F(2,24) = 0.9, p = 0.43; BKB-F(2,24) = 0.57, p = 0.57; Localization-F(2,24) = 1.83, p = 0.18; Talker Discrimination female-F(2,24) = 1.45, p = 0.25; Talker Discrimination male-F(2,22) = 0.79, p = 0.47. Paired t tests revealed no significant differences between the two baseline bimodal conditions for any of the outcome measures: CNC-t(12) = 0.39, p = 0.70; BKB-t(12) = 1.35, p = 0.31; Localization-t(9) = 1.06, p = 0.32; Talker Discrimination female-t(12) = 1.07, p = 0.31; Talker Discrimination male-t(12) = 1.16, p = 0.27. On the basis of these results, the average of the three CI-alone scores and the average of the two baseline (wideband) bimodal scores were used in the following analyses to represent CI alone and baseline bimodal, respectively.

Unaided and Aided Thresholds

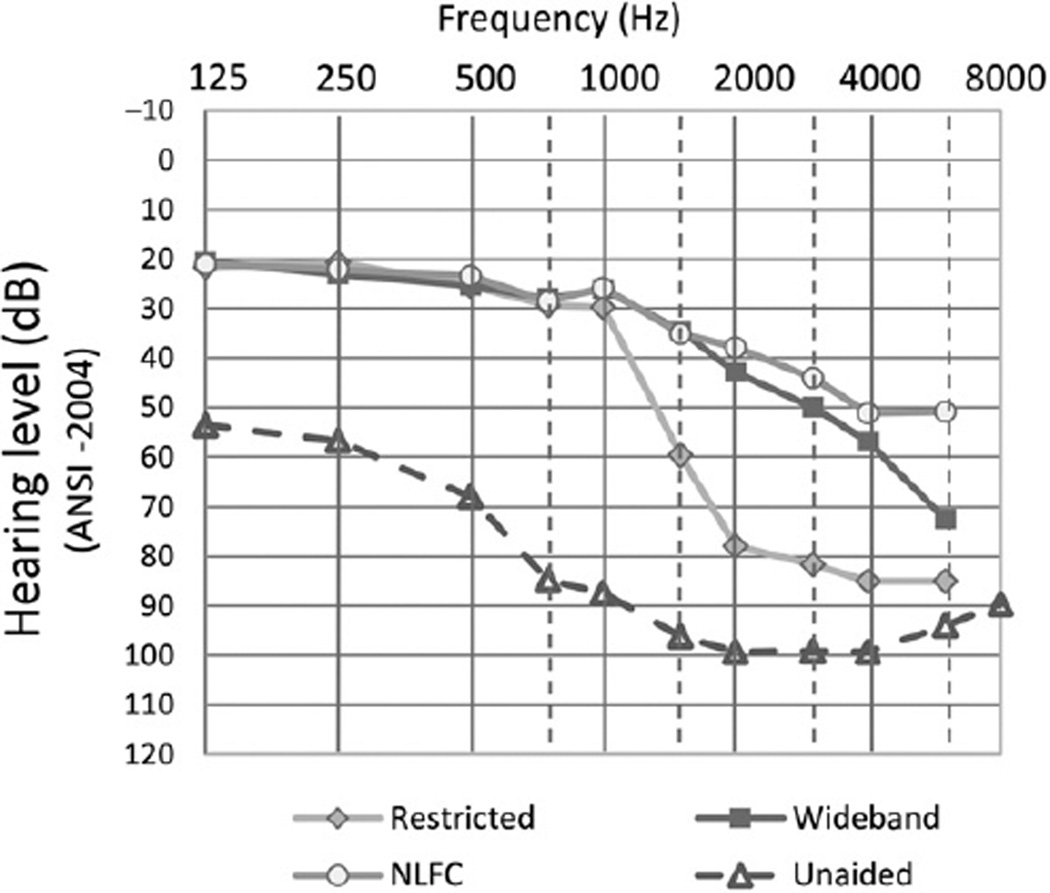

Figure 1 shows the mean unaided thresholds (open triangles) from 125 to 8000 Hz and the mean HA-alone thresholds for the three different HA frequency responses. On average, the aided thresholds for NLFC (open circles) and wideband (filled squares) were similar except for the highest frequency of 6000 Hz. The restricted high-frequency thresholds (filled diamonds) were higher (poorer) at 1500 Hz and above compared to the NLFC and wideband responses.

Figure 1.

Group mean audiometric threshold levels as a function of frequency. Unaided thresholds are shown as open triangles and dashed lines. Aided thresholds are shown in three conditions: restricted high frequency as filled diamonds and gray lines, wideband frequency as filled squares and dark gray lines, and frequency compression as open circles and gray lines.

CNC Words

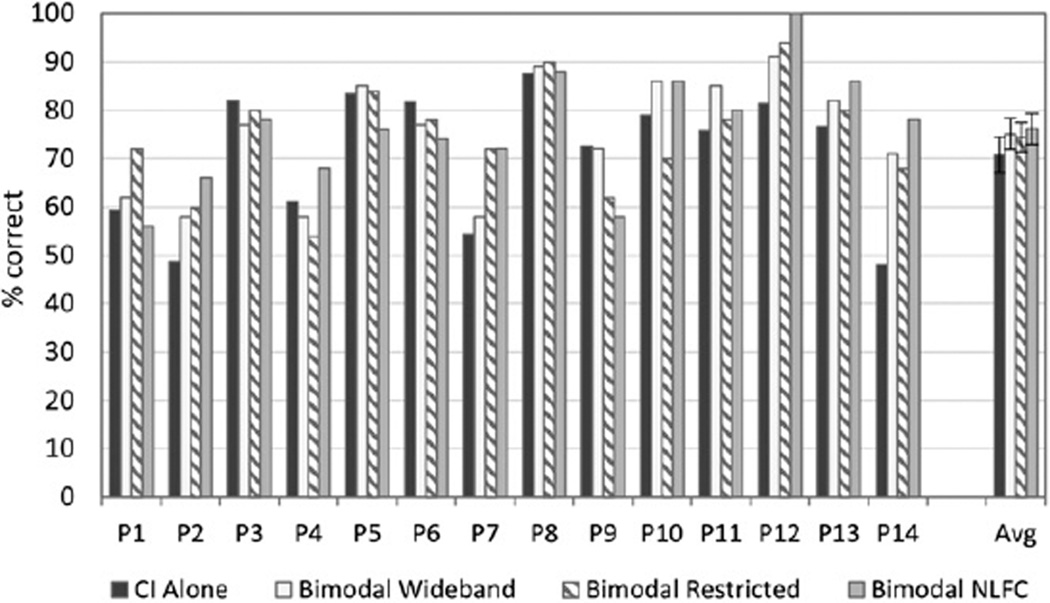

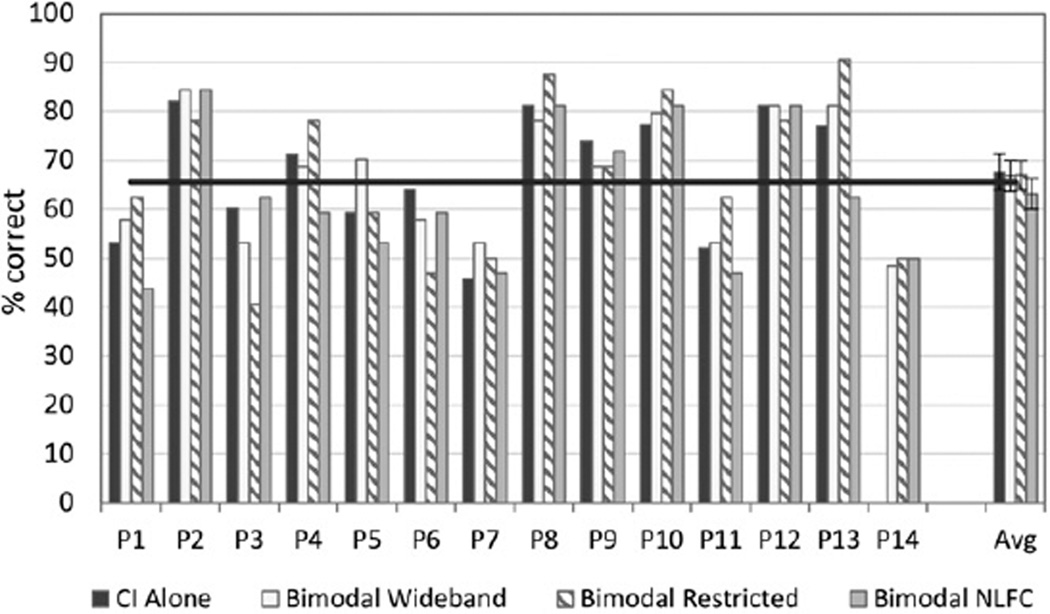

Figure 2 shows individual and group CNC scores for the CI-alone condition and the three bimodal conditions: wideband, restricted high frequency, and NLFC. The condition that yielded the “best bimodal” score relative to the CI alone varied considerably across participants. Group data (far right) revealed no significant benefit for any of the three bimodal conditions relative to the CI alone. However, when the bimodal score with the greatest difference from the CI alone (the “best bimodal” score) was used, a significant bimodal benefit was found [F(1, 13) = 12.69, p = 0.003, d = 0.077].

Figure 2.

Individual and group data are shown for speech recognition of CNC words presented in quiet. For each participant shown along the x axis, percent correct scores are displayed in dark gray for the CI-alone condition, and in three bimodal conditions: bimodal wideband (white), bimodal restricted (bars with angled stripes), and bimodal frequency compression (bars in light gray). Group mean and standard error results for each condition are shown to the far right.

This result was confirmed by the randomization procedure: randomly generated differences exceeded the original difference only 2.27% of the time (p < 0.05). Two participants (P12 and P14) had at least one bimodal score that was significantly better than the CI-alone score; P12 did best with either NLFC or the restricted response, and P14 did best with any of the three frequency responses. Other participants had higher scores in a bimodal condition, but these differences did not reach statistical significance. No participant scored significantly worse in a bimodal condition than in the CI-alone condition.

BKB-SIN

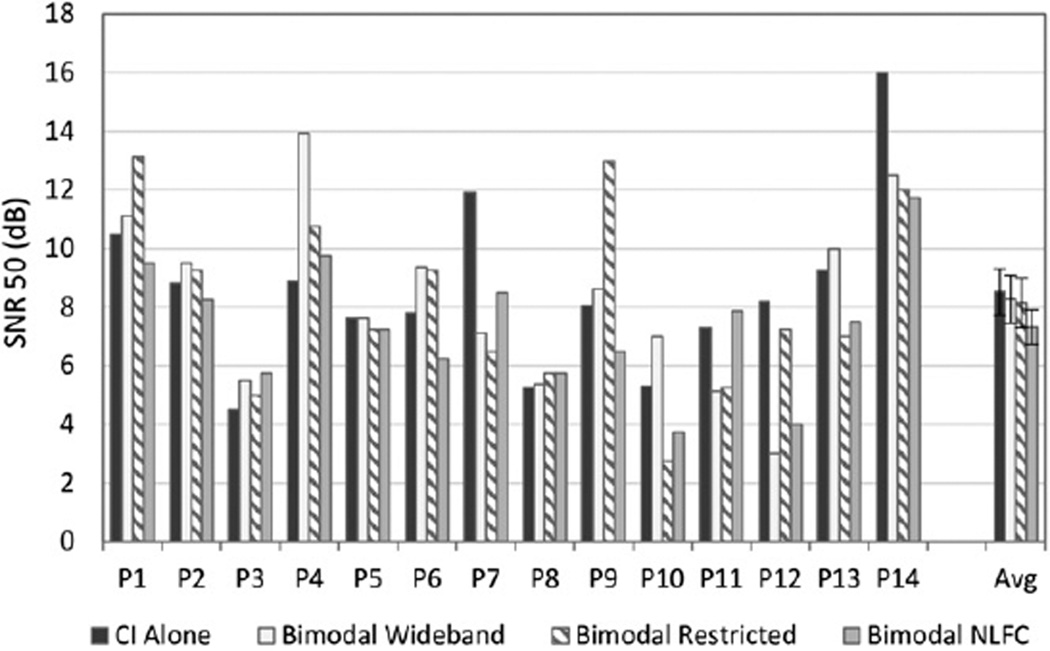

Figure 3 shows individual and group SNR-50 scores on the BKB-SIN for the CI-alone condition and the three bimodal conditions: wideband, restricted high frequency, and NLFC. Lower SNRs reflect better performance. Results varied considerably across participants, as did the bimodal condition that yielded the best score. Group data revealed no significant benefit for any of the three bimodal conditions relative to the CI alone. Although group data using the “best bimodal” score revealed a significant bimodal benefit [F(1, 13) = 11.54, p = 0.005], this result was not confirmed by the randomization procedure: randomly generated differences exceeded the original difference 13.02% of the time.

Figure 3.

Individual and group data are shown for speech recognition in noise on the BKB-SIN. For each participant shown along the x axis, SNR-50 (dB) scores are displayed in dark gray for the CI-alone condition, and in three bimodal conditions: bimodal wideband (white), bimodal restricted (bars with angled stripes), and bimodal frequency compression (bars in light gray). Group mean and standard error results for each condition are shown to the far right.

Three participants (P7, P12, and P14) had at least one bimodal score that was significantly lower (better) than the CI-alone score. For two participants (P7 and P14), all three bimodal scores were better than the CI alone, with no significant differences among the three bimodal conditions. One participant (P12) did best with the wideband and NLFC bimodal conditions. Two participants had one bimodal score that was significantly worse (higher) than the CI alone: P4 with the wideband bimodal condition and P9 with the restricted high-frequency bimodal condition.

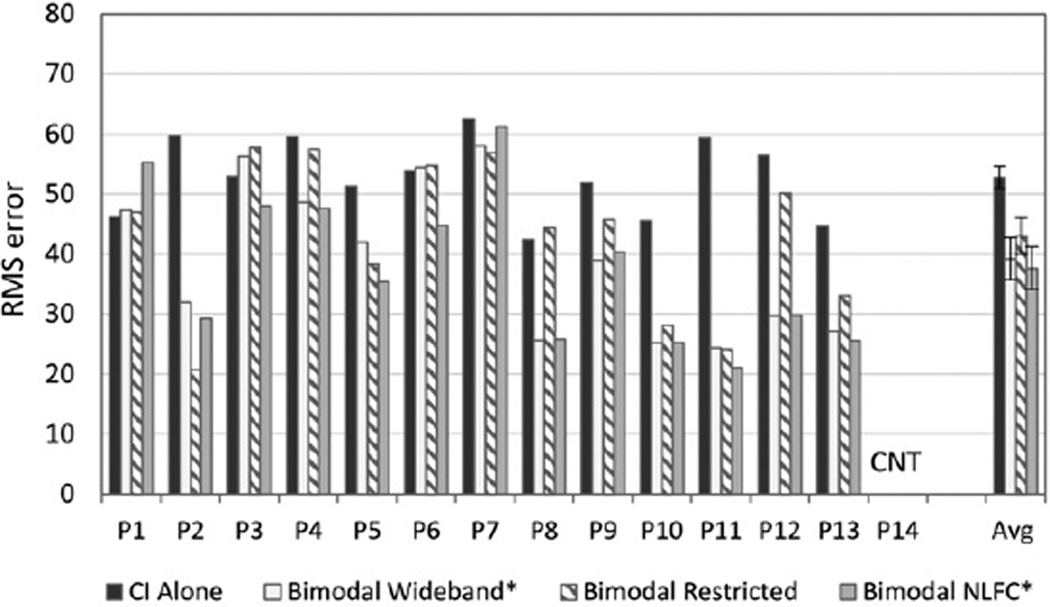

Localization

Figure 4 shows the individual and group mean RMS error results for the localization task. All participants except P14 were able to complete the localization task. Lower RMS error scores reflect better performance. Analysis of group data revealed significantly better scores in the bimodal conditions compared with the CI alone [F(1, 12) = 13.939, p = 0.003]. Post hoc comparisons showed that both the wideband and NLFC HA settings were significantly better than the CI alone (p = 0.01, d = −1.3 and p = 0.006, d = −1.5, respectively). The “best bimodal” condition was also significantly better than the CI alone [F(1, 12) = 25.47, p < 0.001], and this was confirmed using the randomization procedure: the original difference was exceeded by randomly generated differences only 0.00% of the time (p < 0.01).
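RMS error summarizes localization accuracy as the root-mean-square difference, in degrees azimuth, between the loudspeaker that presented each stimulus and the loudspeaker the listener chose; a listener who always picks the correct loudspeaker scores 0°. The minimal Python sketch below computes it from paired trial lists. The function name and the four trials are hypothetical illustrations, not data from this study.

```python
import math

def rms_error(targets_deg, responses_deg):
    """Root-mean-square localization error in degrees azimuth.

    targets_deg: azimuth of the loudspeaker that presented each stimulus.
    responses_deg: azimuth of the loudspeaker the listener selected on that trial.
    """
    if len(targets_deg) != len(responses_deg) or not targets_deg:
        raise ValueError("need equal-length, non-empty trial lists")
    squared = [(r - t) ** 2 for t, r in zip(targets_deg, responses_deg)]
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical trials: two responses off by 20 degrees, two correct.
targets = [-60.0, -20.0, 20.0, 60.0]
responses = [-40.0, -20.0, 40.0, 60.0]
print(round(rms_error(targets, responses), 2))  # prints: 14.14
```

Because errors are squared before averaging, a few large confusions (e.g., across the midline) raise the RMS error more than many small ones.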

Figure 4.

Individual and group data are shown for localization. For each participant shown along the x axis, RMS error scores are displayed in dark gray for the CI-alone condition, and in three bimodal conditions: bimodal wideband (white), bimodal restricted (bars with angled stripes), and bimodal frequency compression (bars in light gray). Group mean and standard error results for each condition are shown to the far right.

CNT = could not test.

* Significant bimodal improvement compared to CI alone, p = 0.01.

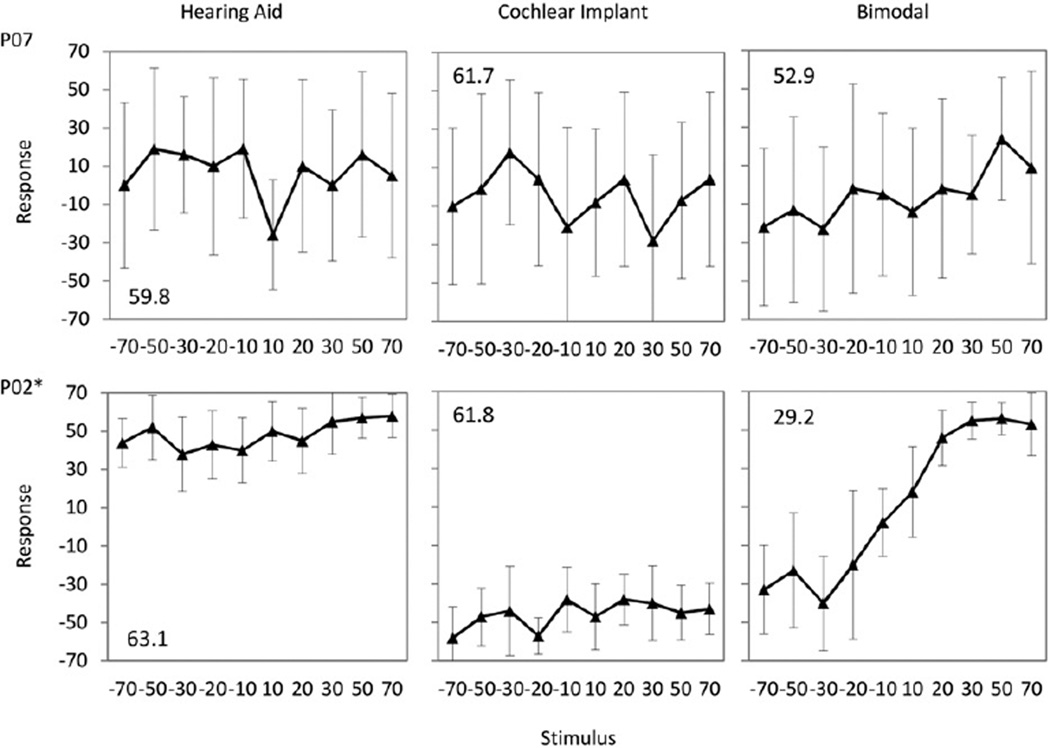

Individual localization plots are shown in Figure 5 to illustrate cases showing no bimodal benefit (P7) and bimodal benefit (P2) over the CI alone. The mean and standard deviation of the individual’s responses and the actual speaker location of the stimuli are shown in degrees azimuth for each of the ten active loudspeakers used. The location of the stimulus is shown along the x axis, and the response along the y axis. Perfect localization responses would fall on a diagonal line from the lower left-hand corner to the upper right-hand corner. The HA-alone, CI-alone, and bimodal conditions are displayed in the left, middle, and right panels, respectively. The bimodal plot reflects the “best bimodal” score. Results of ordinary least squares regression with standard errors corrected for unequal variance indicated that the slopes of the lines across the four conditions (CI alone, bimodal wideband, bimodal restricted high frequency, and bimodal NLFC) were significantly different for all but two participants (P6 and P7). Individual F and p values are included in Appendix 1. Post hoc analyses using a corrected alpha of 0.008 compared the three bimodal conditions to the CI-alone condition. Only four participants (P1, P3, P6, and P7) failed to have at least one bimodal condition that was significantly better than the CI-alone condition. The bimodal NLFC condition was significantly better than the CI-alone condition for nine participants, the wideband condition for eight participants, and the restricted high-frequency condition for six participants.
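The slope comparison described above can be sketched as one ordinary least squares model with a separate intercept and slope (response azimuth regressed on target azimuth) for each listening condition, a heteroskedasticity-robust sandwich covariance, and a Wald F statistic on the slope differences. This is a minimal sketch under stated assumptions, not the authors' analysis code: the section does not say which robust correction was used (HC1 here is an assumption), and the function name, loudspeaker azimuths, and synthetic responses are hypothetical. With four conditions the model has eight coefficients, so the denominator degrees of freedom equal the number of trials minus 8; 400 trials would give the 392 shown in Appendix 1. Requires NumPy.

```python
import numpy as np

def robust_slope_f_test(target, response, condition, contrasts):
    """Wald F test of equal localization slopes across listening conditions.

    Fits response = a_c + b_c * target for every condition c in one OLS design,
    uses HC1 heteroskedasticity-robust standard errors (an assumed choice), and
    tests the slope differences named in `contrasts` (pairs of condition labels).
    Returns (F, (numerator df, denominator df)).
    """
    target = np.asarray(target, dtype=float)
    response = np.asarray(response, dtype=float)
    condition = np.asarray(condition)
    levels = list(dict.fromkeys(condition.tolist()))  # labels in first-seen order
    n, k = response.size, 2 * len(levels)

    # Design matrix: one intercept column and one slope column per condition.
    X = np.zeros((n, k))
    for j, level in enumerate(levels):
        rows = condition == level
        X[rows, 2 * j] = 1.0
        X[rows, 2 * j + 1] = target[rows]

    xtx_inv = np.linalg.inv(X.T @ X)
    beta = xtx_inv @ X.T @ response
    resid = response - X @ beta

    # HC1 sandwich: (X'X)^-1 X' diag(e^2) X (X'X)^-1, scaled by n / (n - k).
    meat = X.T @ (X * resid[:, None] ** 2)
    cov = xtx_inv @ meat @ xtx_inv * n / (n - k)

    # Each contrast row selects slope(a) - slope(b).
    R = np.zeros((len(contrasts), k))
    for i, (a, b) in enumerate(contrasts):
        R[i, 2 * levels.index(a) + 1] = 1.0
        R[i, 2 * levels.index(b) + 1] = -1.0

    diff = R @ beta
    q = R.shape[0]
    F = float(diff @ np.linalg.solve(R @ cov @ R.T, diff)) / q
    return F, (q, n - k)

# Synthetic participant: 10 hypothetical loudspeaker azimuths x 10 presentations.
azimuths = np.linspace(-70.0, 70.0, 10)
x = np.tile(azimuths, 10)
scatter = 5.0 * np.sin(np.arange(x.size))  # deterministic stand-in for response scatter
slopes = {"CI": 0.3, "BW": 0.9, "BR": 0.8, "BFC": 0.9}  # hypothetical true slopes

target = np.concatenate([x for _ in slopes])
response = np.concatenate([b * x + scatter for b in slopes.values()])
condition = np.repeat(list(slopes), x.size)

overall = robust_slope_f_test(target, response, condition,
                              [("BFC", "CI"), ("BR", "CI"), ("BW", "CI")])
pairwise = robust_slope_f_test(target, response, condition, [("BFC", "CI")])
print(f"overall F{overall[1]} = {overall[0]:.1f}")
print(f"BFC vs. CI F{pairwise[1]} = {pairwise[0]:.1f}")
```

Three independent contrasts against one reference condition span the hypothesis that all four slopes are equal, so the first call corresponds to the overall test and single-contrast calls to the pairwise columns. A p value can then be read from the F distribution with the returned degrees of freedom (for example, `scipy.stats.f.sf`).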

Figure 5.

Example localization results for participants P7 and P2, shown as a row of three plots per participant for the HA ear alone, CI ear alone, and bimodal conditions. Numeric values in the upper- and lower-left corners of each plot represent the RMS error for each participant and condition.

*Significant bimodal improvement compared to CI alone, p < 0.001.

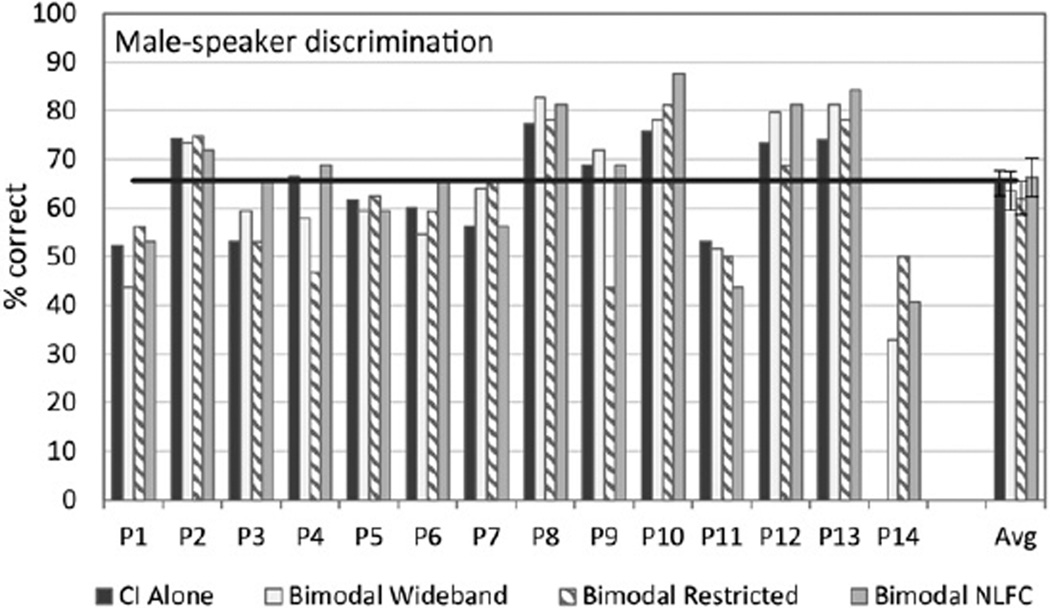

Talker Discrimination

Figures 6 and 7 show the individual and group data for the female- and male-talker tasks, respectively. Participant 14 did not complete the female- and male-talker tasks in the CI-alone condition; therefore, these data are not included in the group analyses. Group data using the “best bimodal” score revealed a significant bimodal benefit for both the female-talker task [F(1, 12) = 12.33, p = 0.004] and the male-talker task [F(1, 12) = 19.61, p = 0.001]. The significance of these results was not confirmed using the randomization procedure: the original difference was exceeded by randomly generated differences 47.42% and 26.62% of the time, respectively. Note that a score >65.6% (shown by the black horizontal line) was significantly above chance; only 8 of 14 participants scored >65.6% on the female-talker task. Results were similar for the male-talker task: 7 of 14 participants scored >65.5%. Participants who scored above chance on one task were the same participants who did so on the other (i.e., female versus male talker).
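Whether a talker-discrimination score is “significantly above chance” can be checked with a one-tailed binomial test. The minimal Python sketch below assumes each trial is a two-alternative judgment with a 50% guessing rate and a one-tailed alpha of 0.05. This section states neither the number of trials nor how the 65.6% criterion was derived, so the trial count in the example is hypothetical, as is the function name.

```python
from math import comb

def min_correct_above_chance(n_trials, p_chance=0.5, alpha=0.05):
    """Smallest number correct whose one-tailed binomial p value is below alpha.

    Returns None when even a perfect score is not significant.
    """
    for k in range(n_trials + 1):
        # P(X >= k) for a listener who is guessing with probability p_chance per trial
        tail = sum(comb(n_trials, j) * p_chance ** j * (1 - p_chance) ** (n_trials - j)
                   for j in range(k, n_trials + 1))
        if tail < alpha:
            return k
    return None

n = 32  # hypothetical trial count, not taken from the study
k = min_correct_above_chance(n)
print(k, f"{100 * k / n:.2f}%")  # prints: 22 68.75%
```

Because scores come in whole trials, the criterion is a step function of the number of trials; a listener's percent correct must exceed the largest non-significant score, not a fixed 65% line.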

Figure 6.

Individual and group data are shown for female-talker discrimination. For each participant shown along the x axis, percent correct scores are displayed in dark gray for the CI-alone condition, and in three bimodal conditions: bimodal wideband (white), bimodal restricted (bars with angled stripes), and bimodal frequency compression (bars in light gray). Group mean and standard error results for each condition are shown to the far right. The horizontal black line at 65% represents the level at which scores fall significantly above chance. One participant was not tested in the CI-alone condition.

Figure 7.

Individual and group data are shown for male-talker discrimination. For each participant shown along the x axis, percent correct scores are displayed in dark gray for the CI-alone condition, and in three bimodal conditions: bimodal wideband (white), bimodal restricted (bars with angled stripes), and bimodal frequency compression (bars in light gray). Group mean and standard error results for each condition are shown to the far right. The horizontal black line at 65% represents the level at which scores fall significantly above chance. One participant was not tested in the CI-alone condition.

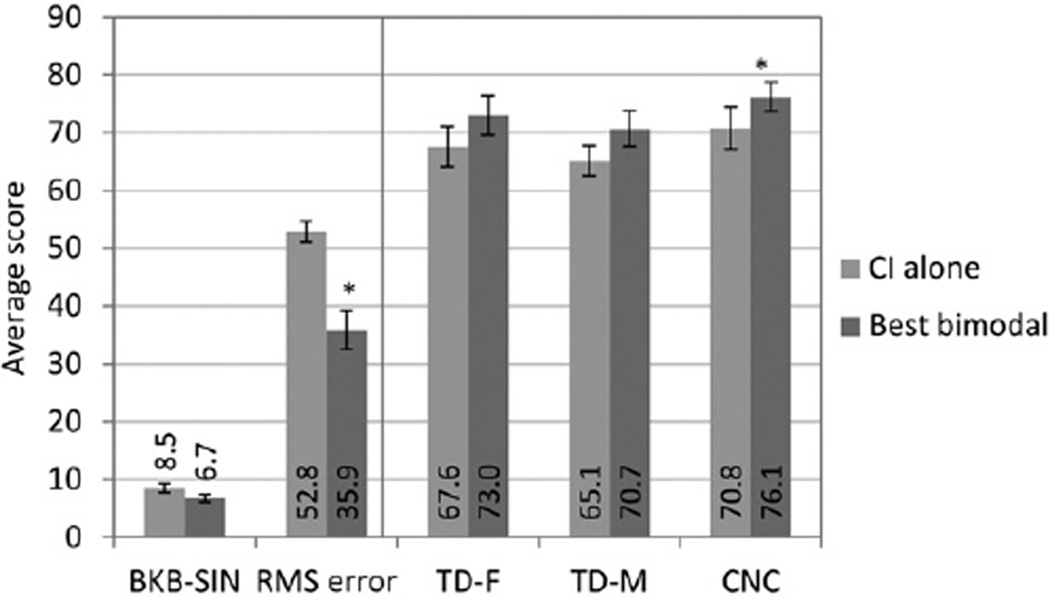

Figure 8 shows the group data for each of the above outcome measures in the CI-alone condition and the “best bimodal” condition. As discussed above, the analysis showed that bimodal benefit over the CI-alone condition was confirmed by the resampling procedure for the CNC and localization tasks.

Figure 8.

Group data are shown for each outcome measure for the CI-alone score (light gray) and the “best bimodal” score (dark gray). For each outcome measure shown along the x axis, the average score (units vary) is displayed on the y axis. For scores in the left panel, lower scores denote better performance; for scores in the right panel, higher scores denote better performance.

*Significant difference between CI-alone and best bimodal condition, p < 0.01 and p < 0.05, respectively.

Correlation Analyses

Correlation coefficients were obtained between individual outcome measures and measures of residual hearing (unaided thresholds) and audibility (aided thresholds and SII). There were no significant correlations between any of the outcome measures in the three bimodal conditions and measures of aided audibility. This included pure-tone averages calculated using various combinations of low, mid, and high frequencies for aided thresholds, individual frequency thresholds, and the SII at 60 dB SPL. The same was true for the unaided thresholds, including the slope of the low-frequency loss (the difference between unaided thresholds at 500 and 250 Hz).
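The correlation analysis pairs a per-participant hearing summary with a per-participant outcome. The minimal Python sketch below shows one way to form a pure-tone average over a chosen set of frequencies, a low-frequency slope taken as the 500 Hz threshold minus the 250 Hz threshold (our reading of the text), and a Pearson correlation with bimodal benefit (bimodal minus CI-alone score). The section does not state which correlation coefficient was used, so Pearson's r is an assumption; the function names, thresholds, and scores are invented for illustration.

```python
from math import sqrt

def pure_tone_average(thresholds_db, freqs_hz):
    """Mean threshold (dB HL) across the selected audiometric frequencies."""
    return sum(thresholds_db[f] for f in freqs_hz) / len(freqs_hz)

def low_frequency_slope(thresholds_db):
    """Unaided threshold at 500 Hz minus threshold at 250 Hz, in dB."""
    return thresholds_db[500] - thresholds_db[250]

def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)

# Invented unaided thresholds (dB HL) and CNC scores (% correct).
participants = [
    {"unaided": {250: 60, 500: 75, 1000: 90}, "ci": 70, "bimodal": 76},
    {"unaided": {250: 80, 500: 85, 1000: 95}, "ci": 82, "bimodal": 82},
    {"unaided": {250: 55, 500: 65, 1000: 85}, "ci": 64, "bimodal": 72},
    {"unaided": {250: 70, 500: 90, 1000: 100}, "ci": 75, "bimodal": 77},
]
pta = [pure_tone_average(p["unaided"], (250, 500, 1000)) for p in participants]
slope = [low_frequency_slope(p["unaided"]) for p in participants]
benefit = [p["bimodal"] - p["ci"] for p in participants]
print("r(PTA, benefit) =", round(pearson_r(pta, benefit), 3))
print("r(slope, benefit) =", round(pearson_r(slope, benefit), 3))
```

With 14 participants and many candidate audibility measures, each coefficient would still need a significance test and some control for multiple comparisons; the sketch stops at the coefficient itself.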

Preference Data

Preference among the three HA frequency response settings (wideband, restricted high frequency, and NLFC) was assessed at the completion of the study. The majority of the participants (n = 10) reported a preference for NLFC, two participants reported a preference for the restricted high frequency, and two reported no preference.

DISCUSSION

A primary goal of the clinical audiologist is to determine the device characteristics that provide patients with the best opportunity to perceive speech in a variety of environments. As such, the goal of a bimodal device fitting is to determine how best to program the CI and HA for coordinated benefit. For example, should low frequencies be delivered to the HA and high frequencies to the CI? Should both devices provide the widest frequency range possible (Vermeire et al, 2008b; Simpson, 2009)? Should HA gain be restricted to regions that have more residual hearing (Mok et al, 2006; Mok et al, 2010)? Finally, should NLFC HA settings be considered for bimodal fittings (McDermott and Henshall, 2010; Park et al, 2012)? The primary aim of this study was to evaluate three different HA response settings for bimodal device users without changing the CI program. All three frequency response settings (wideband, restricted high frequency, and NLFC) were determined based on each individual’s residual hearing and the aided output provided by the HA. As such, the optimal frequency response and HA characteristics varied across individuals, reflecting the routine clinical practice of individualizing device settings.

Bimodal benefit for this study was defined as a bimodal score that was significantly better than the CI-alone score for a given outcome measure. Not unexpectedly, there was considerable variability across participants in which HA setting yielded the “best bimodal” score. Therefore, when results were analyzed using group data, there were no significant bimodal benefits for any of the three bimodal conditions on the CNC, BKB-SIN, and talker discrimination measures. To account for this variability, the “best bimodal” score was identified for each participant and used to determine overall bimodal benefit in the group data. This “best bimodal” group average was compared with the group CI-alone score for each measure; when a randomization test was used to confirm significance, there was a bimodal benefit for CNC words, but not for the BKB-SIN or talker discrimination. Although the localization data also varied as to which HA condition led to the “best bimodal” score, bimodal listening was generally favorable compared with the CI alone. Group data for the RMS error values on the localization measure revealed that both the wideband and NLFC settings resulted in significantly better performance than the CI alone. Individual results for each outcome measure also varied as to the best (if any) bimodal condition.
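The exact resampling scheme is not described in this section. One way to test a “best bimodal” advantage without inheriting the upward bias of always picking each participant's maximum is to shuffle each participant's four scores among the four condition labels, recompute the mean best-bimodal minus CI-alone difference, and report how often the shuffled difference equals or exceeds the observed one. The minimal Python sketch below assumes that scheme and assumes higher scores are better (negate SNR-50 or RMS error first); the function names and scores are hypothetical.

```python
import random

def best_bimodal_advantage(rows):
    """Mean over participants of (best bimodal score - CI-alone score).

    Each row is [ci_alone, wideband, restricted, nlfc]; higher scores are better.
    """
    return sum(max(row[1:]) - row[0] for row in rows) / len(rows)

def randomization_p(rows, n_iter=10000, seed=1):
    """Fraction of within-participant label shuffles whose advantage >= observed."""
    rng = random.Random(seed)
    observed = best_bimodal_advantage(rows)
    at_least_as_large = 0
    for _ in range(n_iter):
        # Under the null hypothesis the four condition labels are exchangeable,
        # so each participant's scores are permuted independently.
        shuffled = [rng.sample(row, len(row)) for row in rows]
        if best_bimodal_advantage(shuffled) >= observed:
            at_least_as_large += 1
    return observed, at_least_as_large / n_iter

# Made-up CNC percent-correct scores for four hypothetical participants.
scores = [
    [64, 72, 70, 74],
    [80, 82, 84, 80],
    [48, 60, 56, 58],
    [71, 70, 76, 78],
]
observed, p = randomization_p(scores)
print(f"observed advantage = {observed:.2f} points, randomization p = {p:.4f}")
```

Because every shuffled data set also takes a best-of-three maximum, the observed advantage is compared against a null distribution that already contains the selection bias. This is why a best-bimodal F test can be significant while the randomization check is not.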

CNC

Note that the two participants showing a significant bimodal benefit for CNC words (Figure 2) scored best in at least two of the three bimodal conditions, and there were no significant differences across the three bimodal conditions. The fact that so few participants showed a bimodal benefit for CNC words may also be related to high performance in the CI-alone condition. The group average CI-alone CNC word score for these participants was 71% (range, 48–87%). This mean score is higher than those of some adult studies reporting group average CNC scores between 40 and 62% for the CI-alone condition (Firszt et al, 2004; Mok et al, 2006; Dorman et al, 2008; Dorman and Gifford, 2010; Holden et al, 2013), and more comparable to a reported average bimodal score of 73% for a group of adults (Dorman and Gifford, 2010). It is important to note that for this outcome measure none of the bimodal conditions for individual participants were significantly worse than the CI-alone condition. That is, none of the different HA settings degraded speech perception in the bimodal condition when compared with the CI alone.

BKB-SIN

Three participants showed a bimodal benefit (>3.1 dB SNR-50) for the BKB-SIN (Figure 3). Individual results on this measure were consistent with the CNC results in that at least two of the three bimodal conditions were significantly better than the CI alone, and the three bimodal conditions did not differ significantly from one another. However, two participants showed a decrement with one of the bimodal settings: one with the wideband setting and one with the restricted high-frequency setting. For participants with an improved SNR-50 in at least one bimodal condition, the improvement ranged from as little as 0.5 dB to as much as 5 dB. These individual improvements are relatively consistent with reported group average SNR improvements (~2–4 dB SNR) from other studies in which the speech and noise originate from the same loudspeaker (van Hoesel, 2012).
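The individual decisions above compare each bimodal SNR-50 with the CI-alone SNR-50 against a 3.1 dB critical difference, where a lower SNR-50 is better. A minimal Python sketch of that decision rule follows. The function name and example values are hypothetical, and a change of exactly 3.1 dB is treated as not significant because the text uses “>3.1 dB”.

```python
CRITICAL_DIFFERENCE_DB = 3.1  # BKB-SIN critical difference cited in the text

def classify_bimodal_change(ci_alone_snr50, bimodal_snr50,
                            critical_db=CRITICAL_DIFFERENCE_DB):
    """Compare one bimodal SNR-50 (dB) with the CI-alone SNR-50; lower is better."""
    improvement = ci_alone_snr50 - bimodal_snr50  # positive = bimodal needs a lower SNR
    if improvement > critical_db:
        return "significantly better"
    if improvement < -critical_db:
        return "significantly worse"
    return "no significant difference"

# Hypothetical participant: CI-alone SNR-50 of 9.0 dB and three bimodal settings.
ci_alone = 9.0
for setting, snr50 in {"wideband": 5.5, "restricted": 8.5, "NLFC": 12.5}.items():
    print(setting, classify_bimodal_change(ci_alone, snr50))
```

Under this rule an improvement such as the 0.5 dB at the low end of the reported range would count as no significant difference, while the 5 dB at the high end would exceed the criterion.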

Localization

Individual data comparing the slope for the CI alone with that for the bimodal conditions revealed that all but four participants were better in at least one of the bimodal conditions. In general, the NLFC and wideband bimodal conditions were better for the majority of those receiving some bimodal benefit. It appears that for many of these participants, having a wider frequency range delivered via traditional acoustic amplification or NLFC enabled better performance on the localization task compared with using the CI alone. The overall results from this study of pediatric/young adult participants are similar to other pediatric and adult studies showing improved localization when using bimodal devices compared with either device (HA or CI) alone (Ching, van Wanrooy, et al, 2005; Dunn et al, 2005; Potts et al, 2009; Firszt et al, 2012). Two of these adult studies used identical localization tasks, allowing for a direct comparison of bimodal benefit (Potts et al, 2009; Firszt et al, 2012). Group performance using the “best bimodal” score (RMS error, 39.9°) for this study was very similar to the bimodal performance of both of these groups (~35 to 40° RMS error). The participants in this study did not have the directional microphones on the HA activated before, during, or after the study. Because the CNC words for the localization task were not presented in the presence of competing talkers or background noise, it is unlikely that activating the directional microphones would have affected results. However, it is not clear whether this would be the case in highly reverberant and noisy everyday environments. In these instances, localization may be compromised if the target speech and noise both come from a direction in the null area of the microphone. In general, the use of a directional microphone for the HA is only recommended for older children who are able to report issues with sound quality.

Talker Discrimination

We expected that bimodal benefits would be particularly evident on the talker discrimination task because the majority of the participants had better residual hearing in the low- to mid-frequency regions at the HA ear. Therefore, the acoustic cues of voice pitch and formant frequencies might be better transmitted in the bimodal condition than with the CI alone (Chang et al, 2006; Dorman et al, 2008; Dorman and Gifford, 2010). The majority of the participants, however, had great difficulty on the talker discrimination measures (male and female talkers). Eight of the children did not score significantly above chance in the CI-alone condition or in any of the three bimodal conditions (Figures 6 and 7). This is in contrast to a group of children ranging in age from 8 to 11 yr with normal hearing sensitivity who scored between 80 and 100% on this task (Geers et al, 2013). For the children who scored above chance on this task, there appeared to be no trend for better performance in any of the three bimodal conditions compared with the CI alone. Similarly, Dorman and colleagues did not find a bimodal benefit over CI alone for adult CI participants on a talker discrimination task (Dorman et al, 2008). They suggested that the lack of bimodal benefit may have been due to poor spectral resolution at the HA ear.

CONCLUSIONS

There were no clear trends for group data to support a specific HA frequency response setting over another for bimodal fittings, other than for the localization task. For localization, unlike the wideband and NLFC settings, restricting the high-frequency gain and output failed to produce a significant bimodal group benefit. In contrast to a recent study of adult bimodal and bilateral HA users, results did not show a detriment when activating NLFC (Perreau et al, 2013). Instead, these results are similar to other studies that have shown equivalent performance whether NLFC is activated or not when applied to a wideband frequency response setting (McDermott and Henshall, 2010; Park et al, 2012). Unlike other studies where some participants reported poor sound quality or where group performance was poorer (Gifford et al, 2007b; Perreau et al, 2013), many of the participants in this study preferred using NLFC.

Similar to a recent adult study comparing a restricted high-frequency response to a wideband HA response, group data did not reveal significant benefits when restricting the high-frequency gain (Neuman and Svirsky, 2013). This data set revealed that the restricted response in the bimodal condition was among the conditions producing benefit over the CI-alone condition for some individual participants on various outcome measures (although the benefits were not necessarily greater than those of the other bimodal conditions, nor were they consistent for a particular individual across outcome measures). Some differences in methodology between this study and other studies should be noted. Other studies systematically varied the low-frequency cutoff (e.g., 125, 500, and 1000 Hz) for the acoustic stimuli delivered to the HA (Zhang et al, 2010; Neuman and Svirsky, 2013; Sheffield and Gifford, 2014) for the bimodal conditions. For this study, the cutoff frequency was based on each individual’s residual hearing and the aided output of the HA; therefore, the cutoff frequency for the HA response in the bimodal condition varied across participants. In comparison to the aforementioned studies, some of the participants in this study had poorer residual hearing in the low- to mid-frequency range (thresholds ≥85 dB HL from ~125 to 1000 Hz). Therefore, differences between the restricted and wideband frequency responses may have been less apparent. There were no consistent correlations between unaided or aided audibility and bimodal benefit in the three conditions. The literature has been inconsistent when attempting to predict bimodal performance from unaided or aided hearing thresholds; some studies have shown correlations (Mok et al, 2006; Potts et al, 2009; Mok et al, 2010) while others have not (Ching, Hill, et al, 2005; Ching et al, 2004).

Individual data varied as to the “best bimodal” condition for a given outcome measure, although with few exceptions none of the participants performed significantly worse with a given bimodal setting. The majority of the participants preferred NLFC at the conclusion of the study, although some did prefer restricted high frequencies. It should be noted that NLFC was the last condition implemented before preference was assessed; therefore, it is possible that participants were influenced by experience with the most recent condition. However, the participants were given the opportunity to listen to all three conditions at the time preference was assessed. Comparison of individual results across outcome measures with reported preference revealed only a moderate degree of consistency between preference and actual performance. The majority of participants reporting a preference for NLFC had at least two outcome measures where the “best bimodal” score was obtained with NLFC activated; this was especially true for the localization task. Those reporting a preference for the restricted high-frequency setting also had at least two outcome measures where the restricted high-frequency response produced the “best bimodal” score, or at least tied for the best score. It should be stressed, however, that the many factors contributing to preference (e.g., sound quality, reduction of acoustic feedback) may not contribute to better overall performance on a given outcome measure. Anecdotal comments from some participants who preferred NLFC were that they could hear more with their HA. Those who preferred the restricted setting reported better sound quality. Nonetheless, these results support clinicians in considering alternate frequency responses, including NLFC, for patients if bimodal benefits are not realized with more traditional settings.
Even if no apparent benefit is seen on a given outcome measure, patient preference and comfort for a particular HA response setting may facilitate more consistent HA use (i.e., bimodal use). In addition, the use of NLFC or restricted high-frequency gains may eliminate acoustic feedback that can also be a detriment to consistent bimodal use.

In this study, the HA and CI were balanced for audibility and comfort for all three bimodal settings. A coordinated fitting for bimodal devices should be part of routine clinical care, despite the many psychophysical and commercial device mismatches that are present when combining an HA and CI (Francart and McDermott, 2013). Given the trend toward increasing bilateral implantation in the pediatric population (Peters et al, 2010), clinicians should evaluate whether bilateral CIs should be considered over bimodal devices. The decision to proceed with bilateral CIs must take into account the need for surgery as well as the likelihood that the acoustic benefits of bimodal stimulation (complementary phonetic cues and music perception) may not be retained. Notably, some of the participants in this study were considering a second CI based on a lack of bimodal benefit across outcome measures. If various outcome measures fail to show a bimodal benefit despite the clinician’s best attempt at optimizing the bimodal fitting, consideration of bilateral CIs may be advisable. Although some of the outcome measures used in this study are not readily available for clinical use, a comprehensive evaluation that includes parents’ and therapists’ reports, patient preference, and functional outcome measures, in addition to a variety of clinical outcome measures (e.g., speech perception in quiet and noise), should be used.

Acknowledgments

This work was supported by NIH/NIDCD K23DC008294, R01DC012778, and R01DC009010, a St. Louis Children’s Hospital Collaborative-Faculty Research Grant, Cochlear Americas, and Phonak Corporation.

The authors thank all the participants in this study as well as their families for their time and effort. We also thank the following colleagues who assisted with data collection: Kristin Gravel, Rose Wright, Janet Vance, Mary Rice, Emily Finley, and Lauren McCoole. We thank Michael Strube and Dorina Kallogjeri for statistical support.

Abbreviations

- ANOVA: analysis of variance

- BKB-SIN: Bamford-Kowal-Bench Speech-In-Noise Test

- CI: cochlear implant

- CNC: Consonant Nucleus Consonant

- DSL: Desired Sensation Level

- HA: hearing aid

- NLFC: nonlinear frequency compression

- RECDs: real-ear-to-coupler differences

- RMS: root mean square

- SNR: signal-to-noise ratio

- SREM: simulated real-ear measures

- SII: Speech Intelligibility Index

Appendix 1. Within-Participant Comparisons of Localization across Conditions

| Participant | Overall F(3, 392) | p value* | BFC vs. BR F(1, 392) | p** | BFC vs. BW F(1, 392) | p** | BFC vs. CI F(1, 392) | p** | BR vs. BW F(1, 392) | p** | BR vs. CI F(1, 392) | p** | BW vs. CI F(1, 392) | p** |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 3.37 | 0.0185 | 7.19 | 0.0076 | 7.4 | 0.007 | 5.96 | 0.015 | 0 | 0.99 | 1.08 | 0.298 | 1.15 | 0.28 |
| 2 | 176.99 | <0.001 | 5.06 | 0.025 | 2.16 | 0.14 | 112.12 | <0.001 | 0.88 | 0.35 | 340.69 | <0.001 | 272.9 | <0.001 |
| 3 | 2.72 | 0.044 | 5.69 | 0.0175 | 5.78 | 0.017 | 2.08 | 0.15 | 0.17 | 0.68 | 2.39 | 0.12 | 2.22 | 0.14 |
| 4 | 10.81 | <0.001 | 7.91 | 0.005 | 0.19 | 0.66 | 15.88 | 0.0001 | 8.61 | 0.003 | 0.21 | 0.65 | 23.65 | <0.001 |
| 5 | 31 | <0.001 | 0.95 | 0.33 | 49.37 | <0.001 | 51.61 | <0.001 | 39.84 | <0.001 | 37.33 | <0.001 | 6.47 | 0.01 |
| 6 | 1.57 | 0.196 | 0.02 | 0.89 | 0.25 | 0.615 | 3.16 | 0.076 | 0.28 | 0.596 | 2.09 | 0.15 | 1.59 | 0.21 |
| 7 | 1.43 | 0.233 | 1.11 | 0.29 | 1.17 | 0.28 | 0.09 | 0.76 | 0.02 | 0.90 | 2.42 | 0.12 | 3.05 | 0.08 |
| 8 | 12.6 | <0.001 | 16.29 | 0.0001 | 0.42 | 0.52 | 24.32 | <0.001 | 12.82 | 0.0004 | 0.47 | 0.49 | 18.16 | <0.001 |
| 9 | 13.16 | <0.001 | 1.8 | 0.18 | 0.23 | 0.63 | 16.06 | 0.0001 | 3.92 | 0.048 | 4.58 | 0.033 | 32.9 | <0.001 |
| 10 | 44.78 | <0.001 | 0.92 | 0.337 | 0.01 | 0.91 | 81.04 | <0.001 | 0.75 | 0.39 | 55.25 | <0.001 | 80.81 | <0.001 |
| 11 | 41.91 | <0.001 | 0.55 | 0.458 | 1.38 | 0.24 | 113.34 | <0.001 | 0.16 | 0.69 | 98.33 | <0.001 | 95.2 | <0.001 |
| 12 | 61.85 | <0.001 | 16.27 | 0.0001 | 1.1 | 0.295 | 85.46 | <0.001 | 27.36 | <0.001 | 7.19 | 0.008 | 161.72 | <0.001 |
| 13 | 9.8 | <0.001 | 1.07 | 0.302 | 0.38 | 0.54 | 24.15 | <0.001 | 0.23 | 0.63 | 10.64 | 0.001 | 17.49 | <0.001 |

Note: Values in italic indicate an overall significant difference between the bimodal and CI-alone conditions, which are indicated in later columns.

BFC = bimodal frequency compression; BW = bimodal wideband; BR = bimodal restricted; CI = cochlear implant only.

*Evaluated at alpha level of 0.05.

**Evaluated at alpha level of 0.008.

Footnotes

Portions of this work were presented at the 2013 Phonak Sound Foundations Pediatric Conference in Chicago, IL, December 8–11, 2013; the 2013 Annual Meeting of the Association for Research in Otolaryngology in Baltimore, MD, February 16–20, 2013; and the 2012 Annual Meeting of the American Auditory Society in Scottsdale, AZ, March 8–10, 2012.

REFERENCES

- Blamey PJ, Dooley GJ, James CJ, Parisi ES. Monaural and binaural loudness measures in cochlear implant users with contralateral residual hearing. Ear Hear. 2000;21(1):6–17. doi: 10.1097/00003446-200002000-00004.

- Byrne D, Parkinson A, Newall P. Modified hearing aid selection procedures for severe/profound hearing losses. In: Studebaker GA, Bess FH, Beck L, editors. Vanderbilt hearing aid report II. Parkton, MD: York Press; 1991. pp. 295–300.

- Cadieux JH, Firszt JB, Reeder RM. Cochlear implantation in nontraditional candidates: preliminary results in adolescents with asymmetric hearing loss. Otol Neurotol. 2013;34(3):408–415. doi: 10.1097/MAO.0b013e31827850b8.

- Carney E, Schlauch RS. Critical difference table for word recognition testing derived using computer simulation. J Speech Lang Hear Res. 2007;50(5):1203–1209. doi: 10.1044/1092-4388(2007/084).

- Chang JE, Bai JY, Zeng FG. Unintelligible low-frequency sound enhances simulated cochlear-implant speech recognition in noise. IEEE Trans Biomed Eng. 2006;53(12):2598–2601. doi: 10.1109/TBME.2006.883793.

- Ching TYC. Acoustic Cues for Consonant Perception with Combined Acoustic and Electric Hearing in Children. Semin Hear. 2011;32(01):032–041.

- Ching TY, Hill M, Brew J, et al. The effect of auditory experience on speech perception, localization, and functional performance of children who use a cochlear implant and a hearing aid in opposite ears. Int J Audiol. 2005;44(12):677–690. doi: 10.1080/00222930500271630.

- Ching TY, Incerti P, Hill M. Binaural benefits for adults who use hearing aids and cochlear implants in opposite ears. Ear Hear. 2004;25(1):9–21. doi: 10.1097/01.AUD.0000111261.84611.C8.

- Ching TY, Psarros C, Hill M, Dillon H, Incerti P. Should children who use cochlear implants wear hearing aids in the opposite ear? Ear Hear. 2001;22(5):365–380. doi: 10.1097/00003446-200110000-00002.

- Ching TY, van Wanrooy E, Dillon H. Binaural-bimodal fitting or bilateral implantation for managing severe to profound deafness: a review. Trends Amplif. 2007;11(3):161–192. doi: 10.1177/1084713807304357.

- Ching TYC, van Wanrooy E, Hill M, Dillon H. Binaural redundancy and inter-aural time difference cues for patients wearing a cochlear implant and a hearing aid in opposite ears. Int J Audiol. 2005;44(9):513–521. doi: 10.1080/14992020500190003.

- Dorman MF, Gifford RH. Combining acoustic and electric stimulation in the service of speech recognition. Int J Audiol. 2010;49(12):912–919. doi: 10.3109/14992027.2010.509113.

- Dorman MF, Gifford RH, Spahr AJ, McKarns SA. The benefits of combining acoustic and electric stimulation for the recognition of speech, voice and melodies. Audiol Neurootol. 2008;13(2):105–112. doi: 10.1159/000111782.

- Dunn CC, Tyler RS, Witt SA. Benefit of wearing a hearing aid on the unimplanted ear in adult users of a cochlear implant. J Speech Lang Hear Res. 2005;48(3):668–680. doi: 10.1044/1092-4388(2005/046).

- Firszt JB, Holden LK, Reeder RM, Cowdrey L, King S. Cochlear implantation in adults with asymmetric hearing loss. Ear Hear. 2012;33(4):521–533. doi: 10.1097/AUD.0b013e31824b9dfc.

- Firszt JB, Holden LK, Skinner MW, et al. Recognition of speech presented at soft to loud levels by adult cochlear implant recipients of three cochlear implant systems. Ear Hear. 2004;25(4):375–387. doi: 10.1097/01.aud.0000134552.22205.ee.

- Francart T, McDermott HJ. Psychophysics, fitting, and signal processing for combined hearing aid and cochlear implant stimulation. Ear Hear. 2013;34(6):685–700. doi: 10.1097/AUD.0b013e31829d14cb.

- Francart T, van Wieringen A, Wouters J. APEX 3: a multipurpose test platform for auditory psychophysical experiments. J Neurosci Methods. 2008;172(2):283–293. doi: 10.1016/j.jneumeth.2008.04.020.

- Gantz BJ, Turner C. Combining acoustic and electrical speech processing: Iowa/Nucleus hybrid implant. Acta Otolaryngol. 2004;124(4):344–347. doi: 10.1080/00016480410016423.

- Geers AE, Davidson LS, Uchanski RM, Nicholas JG. Interdependence of linguistic and indexical speech perception skills in school-age children with early cochlear implantation. Ear Hear. 2013;34(5):562–574. doi: 10.1097/AUD.0b013e31828d2bd6.

- Gifford RH, Dorman MF, McKarns SA, Spahr AJ. Combined electric and contralateral acoustic hearing: word and sentence recognition with bimodal hearing. J Speech Lang Hear Res. 2007a;50(4):835–843. doi: 10.1044/1092-4388(2007/058).

- Gifford RH, Dorman MF, Spahr AJ, McKarns SA. Effect of digital frequency compression (DFC) on speech recognition in candidates for combined electric and acoustic stimulation (EAS). J Speech Lang Hear Res. 2007b;50(5):1194–1202. doi: 10.1044/1092-4388(2007/083).

- Glista D, Scollie S, Bagatto M, Seewald R, Parsa V, Johnson A. Evaluation of nonlinear frequency compression: clinical outcomes. Int J Audiol. 2009;48(9):632–644. doi: 10.1080/14992020902971349.

- Grieco-Calub TM, Litovsky RY. Sound localization skills in children who use bilateral cochlear implants and in children with normal acoustic hearing. Ear Hear. 2010;31(5):645–656. doi: 10.1097/AUD.0b013e3181e50a1d.

- Holden LK, Finley CC, Firszt JB, et al. Factors affecting open-set word recognition in adults with cochlear implants. Ear Hear. 2013;34(3):342–360. doi: 10.1097/AUD.0b013e3182741aa7.

- IEEE. Recommended practice for speech quality measurements. IEEE Trans on Audio and Electroacoustics. 1969;17(3):225–246.

- James CJ, Fraysse B, Deguine O, et al. Combined electro-acoustic stimulation in conventional candidates for cochlear implantation. Audiol Neurootol. 2006;11(Suppl 1):57–62. doi: 10.1159/000095615. [DOI] [PubMed] [Google Scholar]

- Karl JR, Pisoni DB. The role of talker-specific information in memory for spoken sentences. J Acoust Soc Am. 1994;95(5):2873.

- Keilmann AM, Bohnert AM, Gosepath J, Mann WJ. Cochlear implant and hearing aid: a new approach to optimizing the fitting in this bimodal situation. Eur Arch Otorhinolaryngol. 2009;266(12):1879–1884. doi: 10.1007/s00405-009-0993-9.

- Kiefer J, Pok M, Adunka O, et al. Combined electric and acoustic stimulation of the auditory system: results of a clinical study. Audiol Neurootol. 2005;10(3):134–144. doi: 10.1159/000084023.

- Killion MC, Niquette PA, Gudmundsen GI, Revit LJ, Banerjee S. Development of a quick speech-in-noise test for measuring signal-to-noise ratio loss in normal-hearing and hearing-impaired listeners. J Acoust Soc Am. 2004;116(4):2395–2405. doi: 10.1121/1.1784440.

- Kong YY, Stickney GS, Zeng FG. Speech and melody recognition in binaurally combined acoustic and electric hearing. J Acoust Soc Am. 2005;117(3):1351–1361. doi: 10.1121/1.1857526.

- Laneau J, Boets B, Moonen M, van Wieringen A, Wouters J. A flexible auditory research platform using acoustic or electric stimuli for adults and young children. J Neurosci Methods. 2005;142(1):131–136. doi: 10.1016/j.jneumeth.2004.08.015.

- McCreery RW, Venediktov RA, Coleman JJ, Leech HM. An evidence-based systematic review of frequency lowering in hearing aids for school-age children with hearing loss. Am J Audiol. 2012;21(2):313–328. doi: 10.1044/1059-0889(2012/12-0015).

- McDermott H. Benefits of combined acoustic and electric hearing for music and pitch perception. Semin Hear. 2011;32(1):103–114.

- McDermott H, Henshall K. The use of frequency compression by cochlear implant recipients with postoperative acoustic hearing. J Am Acad Audiol. 2010;21(6):380–389. doi: 10.3766/jaaa.21.6.3.

- Miller GA, Nicely PE. An analysis of perceptual confusions among some English consonants. J Acoust Soc Am. 1955;27(2):338–352.

- Moeller MP, Hoover B, Putman C, et al. Vocalizations of infants with hearing loss compared with infants with normal hearing: Part II—transition to words. Ear Hear. 2007a;28(5):628–642. doi: 10.1097/AUD.0b013e31812564c9.

- Moeller MP, Hoover B, Putman C, et al. Vocalizations of infants with hearing loss compared with infants with normal hearing: Part I—phonetic development. Ear Hear. 2007b;28(5):605–627. doi: 10.1097/AUD.0b013e31812564ab.

- Mok M, Galvin KL, Dowell RC, McKay CM. Speech perception benefit for children with a cochlear implant and a hearing aid in opposite ears and children with bilateral cochlear implants. Audiol Neurootol. 2010;15(1):44–56. doi: 10.1159/000219487.

- Mok M, Grayden D, Dowell RC, Lawrence D. Speech perception for adults who use hearing aids in conjunction with cochlear implants in opposite ears. J Speech Lang Hear Res. 2006;49(2):338–351. doi: 10.1044/1092-4388(2006/027).

- Neuman AC, Svirsky MA. Effect of hearing aid bandwidth on speech recognition performance of listeners using a cochlear implant and contralateral hearing aid (bimodal hearing). Ear Hear. 2013;34(5):553–561. doi: 10.1097/AUD.0b013e31828e86e8.

- Nittrouer S, Chapman C. The effects of bilateral electric and bimodal electric—acoustic stimulation on language development. Trends Amplif. 2009;13(3):190–205. doi: 10.1177/1084713809346160.

- Park LR, Teagle HF, Buss E, Roush PA, Buchman CA. Effects of frequency compression hearing aids for unilaterally implanted children with acoustically amplified residual hearing in the nonimplanted ear. Ear Hear. 2012;33(4):e1–e12. doi: 10.1097/AUD.0b013e31824a3b97.

- Perreau AE, Bentler RA, Tyler RS. The contribution of a frequency-compression hearing aid to contralateral cochlear implant performance. J Am Acad Audiol. 2013;24(2):105–120. doi: 10.3766/jaaa.24.2.4.

- Peters BR, Wyss J, Manrique M. Worldwide trends in bilateral cochlear implantation. Laryngoscope. 2010;120(Suppl 2):S17–S44. doi: 10.1002/lary.20859.

- Peterson GE, Lehiste I. Revised CNC lists for auditory tests. J Speech Hear Disord. 1962;27:62–70. doi: 10.1044/jshd.2701.62.

- Pittman AL, Lewis DE, Hoover BM, Stelmachowicz PG. Rapid word-learning in normal-hearing and hearing-impaired children: effects of age, receptive vocabulary, and high-frequency amplification. Ear Hear. 2005;26(6):619–629. doi: 10.1097/01.aud.0000189921.34322.68.

- Pittman AL, Stelmachowicz PG. Perception of voiceless fricatives by normal-hearing and hearing-impaired children and adults. J Speech Lang Hear Res. 2000;43(6):1389–1401. doi: 10.1044/jslhr.4306.1389.

- Potts LG, Skinner MW, Litovsky RA, Strube MJ, Kuk F. Recognition and localization of speech by adult cochlear implant recipients wearing a digital hearing aid in the nonimplanted ear (bimodal hearing). J Am Acad Audiol. 2009;20(6):353–373. doi: 10.3766/jaaa.20.6.4.

- Scollie S, Seewald R, Cornelisse L, et al. The Desired Sensation Level multistage input/output algorithm. Trends Amplif. 2005;9(4):159–197. doi: 10.1177/108471380500900403.

- Sheffield SW, Gifford RH. The benefits of bimodal hearing: effect of frequency region and acoustic bandwidth. Audiol Neurootol. 2014;19(3):151–163. doi: 10.1159/000357588.

- Simpson A. Frequency-lowering devices for managing high-frequency hearing loss: a review. Trends Amplif. 2009;13(2):87–106. doi: 10.1177/1084713809336421.

- Simpson A, McDermott HJ, Dowell RC, Sucher C, Briggs RJ. Comparison of two frequency-to-electrode maps for acoustic-electric stimulation. Int J Audiol. 2009a;48(2):63–73. doi: 10.1080/14992020802452184.

- Stelmachowicz PG, Lewis DE, Choi S, Hoover B. Effect of stimulus bandwidth on auditory skills in normal-hearing and hearing-impaired children. Ear Hear. 2007;28(4):483–494. doi: 10.1097/AUD.0b013e31806dc265.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Aided perception of /s/ and /z/ by hearing-impaired children. Ear Hear. 2002;23(4):316–324. doi: 10.1097/00003446-200208000-00007.

- Uchanski RM, Davidson LS, Quadrizius S, et al. Two ears and two (or more?) devices: a pediatric case study of bilateral profound hearing loss. Trends Amplif. 2009;13(2):107–123. doi: 10.1177/1084713809336423.

- van Hoesel RJM. Contrasting benefits from contralateral implants and hearing aids in cochlear implant users. Hear Res. 2012;288(1–2):100–113. doi: 10.1016/j.heares.2011.11.014.

- Vermeire K, Anderson I, Flynn M, Van de Heyning P. The influence of different speech processor and hearing aid settings on speech perception outcomes in electric acoustic stimulation patients. Ear Hear. 2008a;29(1):76–86. doi: 10.1097/AUD.0b013e31815d6326.

- Zhang T, Spahr AJ, Dorman MF. Frequency overlap between electric and acoustic stimulation and speech-perception benefit in patients with combined electric and acoustic stimulation. Ear Hear. 2010;31(2):195–201. doi: 10.1097/AUD.0b013e3181c4758d.