Abstract

The fusiform face area (FFA) is one of several areas in occipito-temporal cortex whose activity is correlated with perceptual expertise for objects. Here, we investigate the robustness of expertise effects in FFA and other areas to a strong task manipulation that increases both perceptual and attentional demands. With high-resolution fMRI at 7 Tesla, we measured responses to images of cars, faces and a category globally visually similar to cars (sofas) in 26 subjects who varied in expertise with cars, in (a) a low load 1-back task with a single object category and (b) a high load task in which objects from two categories rapidly alternated and attention was required to both categories. The low load condition revealed several areas more active as a function of expertise, including both posterior and anterior portions of FFA bilaterally (FFA1/FFA2 respectively). Under high load, fewer areas were positively correlated with expertise and several areas were even negatively correlated, but the expertise effect in face-selective voxels in the anterior portion of FFA (FFA2) remained robust. Finally, we found that behavioral car expertise also predicted increased responses to sofa images but no behavioral advantages in sofa discrimination, suggesting that global shape similarity to a category of expertise is enough to elicit a response in FFA and other areas sensitive to experience, even when the category itself is not of special interest. The robustness of expertise effects in right FFA2 and the expertise effects driven by visual similarity both argue against attention being the sole determinant of expertise effects in extrastriate areas.

Introduction

If faces are ‘special’ because of our expertise with them, other categories of expertise may recruit similar face-like neural substrates. Expertise effects for non-face objects in face-selective regions have been reported at standard resolution (SR) (Bilalic et al., 2011; Gauthier et al., 2000; Harel et al., 2010; James & James, 2013; Xu 2005; Harley et al., 2009), but their interpretation has been controversial. Some high-resolution fMRI (HR-fMRI) and neurophysiological studies found no reliable selectivity for objects in face-selective areas (Grill-Spector et al., 2006; Tsao et al., 2006), suggesting that object responses obtained at lower resolution are due to spatial blurring from adjacent non-face selective areas. However, we recently documented expertise effects with HR-fMRI in FFA within the most face-selective voxels in the 25mm2 peak of the FFA (McGugin et al., 2012a). When we analyzed separately posterior and anterior portions of FFA (FFA1/FFA2; Pinsk et al., 2009; Weiner et al., 2010), car expertise predicted neural selectivity to cars in both sub-regions. As in prior studies using this parcellation, FFA1 and FFA2 were not functionally different (Julian et al., 2012; Pinsk et al. 2009; Weiner et al. 2010). This nonetheless begs the question: are some visual areas more critical than others for expert perception? Indeed, FFA is only one of several areas recruited in expertise, leading some to question the specificity of the effects (Harel et al., 2010). Beyond FFA, the right occipital face area (OFA) and parts of the anterior temporal lobe (aIT) and the parahippocampal gyrus (PHG) have reportedly been engaged by expertise (Gauthier et al., 1999; Gauthier et al., 2000; Xu 2005). Harel et al. (2010) suggested that recruitment of FFA in expertise may be explained to a large extent by a general attentional effect: they found that car selectivity in car experts depended on explicit attention to cars (relative to planes, also present in the task). 
Attention to cars in car experts in that study led to activity in many areas including early visual cortex (putative area V1). However, cars may be especially difficult for car experts to ignore. Indeed, response times in the Harel study were longer for experts than novices especially when asked to ignore cars. Car experts may also experience difficulty ignoring planes, as expertise for cars and for planes are sometimes associated (McGugin et al., 2012a; 2012b).

While we would expect attentional manipulations to influence activity across different visual tasks, we also have reasons to expect a stable relationship between the response to objects in FFA and individual differences in expertise. Several studies have found a linear correlation between behavioral performance in matching or recognition tests for a given category and activity in FFA for this category. This is demonstrated in conditions when the specific objects shown in the scanner are different than in behavioral measures, when the fMRI task (e.g., in identity or location judgments; in block or event-related design) or image format (e.g., high or low spatial frequencies filtered images) are different, and sometimes when the behavioral data are acquired several months after the fMRI data (Gauthier et al., 2000; 2005; Xu, 2005; McGugin et al., 2012). Such results suggest that while the specifics of the experiment may influence overall levels of activity or degree of selectivity for a category, the rank ordering of responses to a category could represent a relatively stable indicator of perceptual expertise.

Here, we employed HR imaging to examine the fine-grained stability of expertise effects in clusters within the FFA, combined with a large field of view allowing us to examine effects across early visual and ventral temporal cortices. We directly test the robustness of expertise effects in and outside FFA in the face of a strong task manipulation, one strong enough to influence category-selective responses in visual areas and to affect expertise effects in several extrastriate regions. Note that our approach differs from Harel et al. (2010) in that we did not ask experts to ignore objects of expertise, because we assumed it would be particularly difficult for experts to ignore an object of expertise, especially when there is nothing else on the screen. Rather, we varied the task load by simultaneously increasing the number of categories presented and attended. In a high load condition, we chose to show cars and faces amongst images of sofas, which were also to be attended. Sofas were selected since they are visually similar to profiles of cars, making the task challenging, but they were unlikely to be a special category of interest for car experts. Their similarity to cars led to an unexpected car expertise effect for sofas in the FFA (more activity to sofas in car experts than car novices), even though car experts did not show a behavioral advantage for sofas. We report and discuss this unexpected result below; it differs from what has been observed in the past for other control categories (e.g., planes or butterflies). Fortunately, this did not prevent us from studying the effect of load on car expertise effects, because we were able to use faces as a baseline, as in prior work (Gauthier et al. 2005; Xu 2005; Furl et al. 2011).

Our load manipulation impacted selective responses across the visual system, allowing us to examine how car expertise effects were influenced in this context. As load was increased, we predicted that expertise effects may drop in several areas, but that FFA, especially on the right, would remain a robust predictor of car expertise. Whether the recruitment of FFA1 and FFA2 in expertise would depend differentially upon attention was of interest, although we had no specific prediction.

Materials and Methods

Subjects

A meta-analysis of all published fMRI studies of expertise with cars by 2012 (Gauthier et al., 2000; Gauthier et al., 2005; Gauthier et al., 2005; Xu 2005; McGugin et al., 2012; McGugin et al., 2012; Van Gulick et al., 2012; Harel 2010) using the correlation between the percent signal change (PSC) to cars (relative to a high-level baseline) and a behavioral measure of car expertise yielded a value of r=.54, 95% CI [.40; .65]. 26 healthy adults (6 females, ages 22 to 41 with an average of 27) participated for monetary compensation, yielding a power of .87 to find an effect of this size with alpha =.05. Written informed consent was obtained from each participant in accordance with guidelines of the institutional review board of Vanderbilt University and Vanderbilt University Medical Center. All subjects were right-handed (based on a laterality quotient from the Edinburgh Handedness Inventory) and had normal or corrected-to-normal visual acuity.
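
The power figure above can be approximated with a short calculation. The sketch below is an illustration only: it assumes the Fisher z approximation for a correlation test, which may not be the method used to obtain the reported value of .87, so its output can differ somewhat from that figure. The function name `correlation_power` is hypothetical.

```python
from math import atanh, sqrt
from statistics import NormalDist


def correlation_power(r, n, alpha=0.05, two_tailed=True):
    """Approximate power to detect a population correlation r with n subjects.

    Assumption: Fisher z approximation, where atanh(r) * sqrt(n - 3)
    is treated as a normally distributed test statistic.
    """
    norm = NormalDist()
    z_effect = atanh(r) * sqrt(n - 3)  # expected test statistic if r is the true effect
    if two_tailed:
        z_crit = norm.inv_cdf(1 - alpha / 2)
        # Probability of exceeding the critical value in either tail
        return (1 - norm.cdf(z_crit - z_effect)) + norm.cdf(-z_crit - z_effect)
    z_crit = norm.inv_cdf(1 - alpha)
    return 1 - norm.cdf(z_crit - z_effect)


# Effect size and sample size taken from the text (r = .54, N = 26)
power_two = correlation_power(0.54, 26)
power_one = correlation_power(0.54, 26, two_tailed=False)
print(round(power_two, 2), round(power_one, 2))
```

Under this approximation, the one-tailed and two-tailed values fall on either side of the reported power, which is consistent with the reported figure having been computed by a slightly different (for example, exact) method.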

Note that the subjects are the same as in McGugin et al. (2012a) Experiment 2, where results for only the low load condition, in only the FFA1 and FFA2, were reported in a different analysis with the neural effects for cars compared to the average of faces and sofas and behavioral expertise for cars was estimated using only car d’. While there is no overlap in the actual analyses reported in the two papers, the finding of a car expertise effect in FFA1 and FFA2 in the low load condition should not be considered an independent replication of McGugin et al. (2012a). All data from the high load runs, and all other analyses, especially those pertaining to the effect of load, have never been reported.

MRI acquisition

All imaging was performed on a Philips 7-Tesla (7T) Achieva human magnetic resonance scanner with a volume quadrature transmit coil and a 32 channel parallel receive array coil (Nova). First, high-resolution (HR) T1-weighted anatomical volumes were acquired using a 3D TFE (Turbo Field Echo) acquisition sequence with sensitivity encoding (SENSE) (FOV = 246 mm, minimum TE, TR=3.152ms, matrix size=352×352) to obtain 249 slices of 0.7mm3 isometric voxels. Then, a single-run standard resolution (SR) functional localizer (30 SR coronal slices, 2.3×2.3×2.5mm) was acquired for real-time localization of FFA and optimal positioning of HR slices using an on-line analysis performed with a standard GLM procedure implemented on the scanner. The SR run was collected using a fast T2*-sensitive radiofrequency-spoiled 3D PRESTO-EPI sequence (FOV=211.2mm, TEeff=28ms, TR=21.93ms, volume repetition time=2500ms, flip angle=12°, matrix size=96×96, EPI-factor=11, SENSERL=3.1). We used the 'IView-Bold' analysis package produced by Philips to adjust the t-threshold and minimum cluster size for each individual until we could subjectively isolate clusters of face-selective activation in the fusiform gyrus, saving these activation maps as images to be used for the geometric planning of subsequent, HR scans. Immediately following the SR scan, we acquired a series of HR runs. Each HR run contained 24 oblique slices acquired using a radiofrequency-spoiled 3D FFE-EPI acquisition sequence with SENSE (FOV=160mm, TE=21ms, TR=32.26ms, volume repetition time=4000ms, flip angle=10°, matrix size=128×128, EPI-factor=11, SENSE=3) to obtain 1.25mm isotropic voxels.

fMRI stimuli and task

Subjects completed one SR face-object localizer run, three low load HR runs and six high load HR runs. All images were presented with an Apple Macintosh computer running Matlab (MathWorks, Natick, MA) with the Psychophysics Toolbox extension (Brainard 1997; Pelli 1997). Images were displayed on a screen mounted behind the subject’s head and viewed via a mirror mounted in front of the subject’s eyes, allowing for vision outside the coil. The SR localizer scan used 72 grayscale images (36 faces, 36 objects) in a 1-back detection task with 14 blocks of alternating faces and objects (20 images shown for 1s; 7 blocks with 1 image repeat and 7 blocks with 2 image repeats) with a 2.5s fixation at the beginning and end. Sensitivity did not differ for Face and Object blocks: (hit rate, false alarm rate) Face (0.89, 0.01), Object (0.88, 0.01).

Immediately following real-time positioning of the HR slices based on SR data, subjects completed three runs with 102 grayscale images each of faces, cars, sofas and scrambled matrices, shown in low load blocks using a 1-back detection task (Figure 1). Each run contained 14 blocks (4 each of faces, cars and sofas, and 2 scrambled) of 16s duration each (16 images sequentially presented for 1s; 8 blocks/run with 1 image repeat and 6 blocks/run with 2 image repeats), with 4s fixation at the beginning and end. A 1-way ANOVA revealed a main effect of category (F3,75=105.74, p<0.0001), explained by significantly poorer detection during Scrambled blocks relative to the intact-object blocks (Scheffé test pairwise comparisons, p<0.0001), with no difference between Faces, Cars and Sofas: (hit rate, false alarm rate) Face (0.93, 0.007), Car (0.93, 0.005), Sofa (0.91, 0.01), Scrambled (0.24, 0.03).

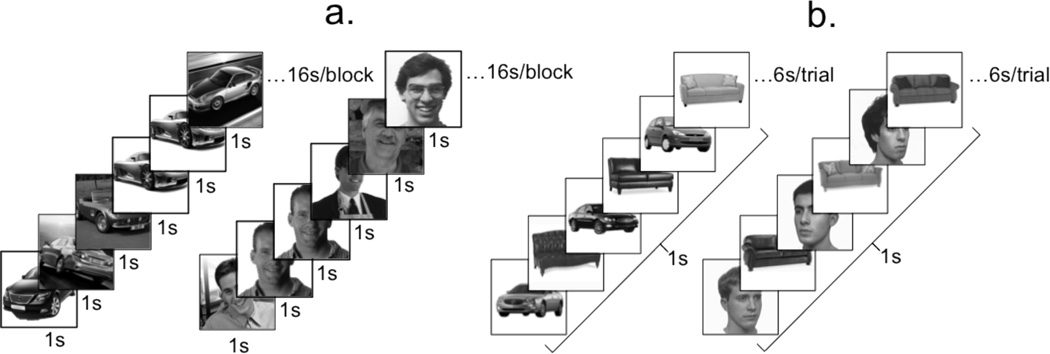

Figure 1.

Example trials from the low and high load high-resolution fMRI runs. (a) In the low load runs, participants looked for an immediate image repeat in blocks of cars, faces, sofas and scrambled matrices (example car and face blocks are shown). Car and sofa images were collected from the Internet. Face images were obtained from Kalanit Grill-Spector (Grill-Spector, Sayres & Ress, 2006). Scrambled images were created on-line during the scan by parsing a randomly selected image into 64 equally sized squares, then rearranging these squares to form a novel display of scrambled pieces. All stimuli were presented in the center of the screen to subtend a visual angle of ~12.6°, ~15.1°, or ~17.6°. Stimulus size was varied from item to item (and subjects were instructed to report repeated identities regardless of size as 1-back hits) to discourage the use of low-level visual properties to perform the task. (b) In the high load runs, subjects looked for targets within a rapid serial visual presentation (RSVP) of images presented in the center of the screen subtending a 12° visual angle and always alternating between category: cars/sofas and faces/sofas (shown) and cars/faces. To avoid salient information unrelated to image identity as in previous work using very fast image presentations, our images were cropped of backgrounds (McKeeff et al., 2010).

The final six HR runs used 48 previously unseen grayscale images each of faces, cars and sofas. Sofas were chosen as a control category visually similar to but semantically unique from cars, to avoid competition between two categories of expertise. In Harel et al. (2010), car images were considerably larger than the plane images, which could explain why even car novices showed more activity for cars than planes in V1. Here, special attention was given to matching car, sofa and face images for size. Subjects looked for targets within a rapid serial visual presentation (RSVP) of images presented at a rate of 6 images/s for 6s trials and always alternating between two categories. Pilot work suggested this presentation rate corresponds to behavioral performance of ~65% correct discrimination. In three conditions (cars and faces (CF), cars and sofas (CS), and faces and sofas (FS)), subjects searched for randomly assigned targets (1 face, car and sofa), which were learned at least one day prior to beginning the experiment. One 24s block consisted of a cue (1.5s) indexing the two target images that would be relevant for the upcoming trials, then three 6s RSVP sequences each followed by a 1.5s response window. One run contained 9 blocks alternating CF, CS, and FS conditions in random order. In each trial, subjects were instructed to maintain central fixation while monitoring the RSVP sequence of 36 rapidly alternating images for one or both of the two current targets: e.g., in CS trials, subjects searched for the studied car and sofa (Figure 1). At the end of each RSVP sequence, subjects made a button response indicating one of four possible outcomes: they found (1) category one target only (9 or 10 blocks/run), (2) category two target only (10 or 9 blocks/run), (3) both category one and two targets (4 blocks/run), or (4) neither category one nor two targets (4 blocks/run). 
In target-present trials, the serial position of the target(s) was randomly determined, but a target never occurred first or last in the stream. Chance was 25%. Total accuracy rates were submitted to two 1-way ANOVAs, first using Condition (CF, CS, FS) then using Response (‘face’, ‘car’, ‘sofa’, ‘both’, ‘neither’) as the within-subject factor. In the first test, there was a main effect of Condition (F2,50=11.85, p<0.0001), which was carried by significantly poorer detection during CS blocks relative to CF or FS blocks (Scheffé test pairwise comparisons, p<0.005), with no difference between CF and FS. In the second test, there was a main effect of Response (F4,50=4.41, p=.003), showing better search performance for ‘face’ target responses relative to ‘neither’ responses (p=0.004): (average accuracy rates) CF (0.58), FS (0.53), CS (0.38).

fMRI data analysis

The HR T1-weighted anatomical scan was used to create a 3D brain for which translational and rotational transformations ensured a center on the anterior commissure and alignment with the anterior commissure–posterior commissure plane. For group analyses only, brains were normalized in Talairach space (Talairach & Tournoux, 1988). Functional data were analyzed using Brain Voyager (BV) (http://www.brainvoyager.com) and in-house Matlab scripts (http://www.themathworks.com). Data preprocessing included 3D motion correction and temporal filtering (high-pass criterion of 2.5 cycles/run) with linear trend removal. Data from HR functional runs were interpolated from 1.25mm isotropic voxels to a resolution of 1mm isotropic using sinc interpolation.

For ROI analyses, no spatial smoothing was applied. We used standard GLM analyses to compute the correlation between predictor variables and actual blood oxygenation level-dependent (BOLD) activation, yielding voxel-by-voxel activation maps for each condition. All ROIs were defined in 2-dimensional surface space. Automatic and manual segmentation techniques were applied to separate the hemispheres and precisely identify the grey matter–white matter boundary. Category-selective ROIs were defined using the face-object contrast in the SR localizer run; we identified bilateral fusiform and occipital areas that were more engaged by faces than objects, and bilateral medial parahippocampal regions that were more active for objects than faces. We localized two discrete peaks of face selectivity in the fusiform gyrus of 20 participants (FFA1 and FFA2), and another four subjects had only one of the 2 peaks (Pinsk et al. 2009; Weiner et al. 2010) (Table 1). First, the center of the ROI was identified, then surface ROIs were defined around this peak by lowering the threshold to achieve an area of roughly equivalent size across all individuals. Analyses were performed on interpolated 1mm3 voxels of a given ROI that were activated significantly more to the average of all image categories relative to the scrambled matrix baseline (p<.01). Voxels incorporated by the SR-defined ROI but failing to pass the HR-based Intact > Scrambled restriction were classified as “Scrambled” voxels. In addition, an ROI in early visual cortex (EVC) was defined as the cluster of voxels engaged by Scrambled > Intact in the posterior occipital gyrus. All HR-analyses were performed on volume time course data that were not transferred into surface format, avoiding blurring. Only SR localizer data were transferred to a surface format to define ROIs that were optimally localized around the peak of face selectivity. 
It is ideal to define ROIs on the surface and map them back to the volumetric space because the surface accounts for cortical folding, and thus properly takes into account the distance between adjacent voxels lying on opposite banks of a sulcus or gyrus.

Table 1.

For five bilateral regions, mean peak Talairach coordinates, mean size (mm3 and mm2), and mean percent signal change (PSC) difference between face and non-face responses (Face − (Car + Sofa)/2) from the low load single-category runs with results from paired t-tests on these mean differences.

| Region | Mean Talairach coordinates for peak voxel (± std dev) | Average volume in mm3 (± std dev) | Maximum area (mm2) | Mean PSC difference (Face−(Car+Sofa)/2) from single-category runs, with p-value from paired t-test comparing the two means |
|---|---|---|---|---|
| Right FFA1 (N=23) | 36, −66.3, −16.3 (6.3, 7.4, 5.4) | 685 (150) | 100 | 0.47 (p<.0001) |
| Right FFA2 (N=19) | 35.2, −50.3, −14.8 (6.4, 10.0, 9.1) | 677 (169) | 100 | 0.51 (p=.0003) |
| Right OFA (N=18) | 32.9, −82.8, −13 (10.3, 6.5, 5.3) | 388 (168) | 50 | 0.63 (p=.0045) |
| Right PHG (N=25) | 27.1, −59.8, −12.7 (5.4, 8.3, 3.9) | 1172 (496) | 200 | −0.52 (p=.0002) |
| Right EVC (N=22) | 15.5, −93.0, −1.8 (6.5, 5.8, 6.6) | 492 (202) | 100 | −0.22 (p=.0878) |
| Left FFA1 (N=19) | −38.9, −64, −17.5 (5.2, 7.9, 5.0) | 595 (204) | 100 | 0.62 (p<.0001) |
| Left FFA2 (N=18) | −39.5, −49.1, −17.4 (6.4, 7.6, 4.1) | 659 (193) | 100 | 0.72 (p<.0001) |
| Left OFA (N=14) | −37.3, −80.9, −13 (7.2, 10.1, 6.1) | 328 (81) | 50 | 0.46 (p=.069) |
| Left PHG (N=26) | −29.3, −58.8, −13 (3.8, 7.4, 4.3) | 1138 (240) | 200 | −0.31 (p=.0002) |
| Left EVC (N=23) | −18.2, −93.0, −3.0 (6.7, 5.5, 5.5) | 471 (248) | 100 | −0.2 (p=.2527) |

Response amplitudes in the low load runs were computed for each voxel as the average PSC estimate for stimulus blocks over 4–16s after trial onset, an interval selected to account for the rise and fall times of the BOLD responses. Scrambled blocks were used as baseline for intact-stimulus categories: e.g., “Face” PSC=(Face−Scrambled)/Scrambled. We then measured car expertise effects by correlating a behavioral car expertise index (see below) with the BOLD response for cars relative to faces as a high-level baseline (C–F). In addition, we used PSC computations to classify HR voxels as either face-selective or non face-selective based on the category for which they were maximally responsive (faces and cars or sofas, respectively). Expertise effects in the high load runs were computed by correlating behavioral expertise with the voxel-by-voxel differences across conditions, CS-FS (representing the contrast of Cars minus Faces when both were shown in the presence of Sofas), CF-SF and FC-SC. In this way, we can compare expertise effects for the BOLD response to cars relative to faces when all images are shown blocked by category to the BOLD response to cars relative to faces when all images are shown in the context of alternating sofas.
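
As an illustration of the percent signal change computation and the voxel classification described above, the following minimal sketch applies the stated formula to made-up signal values. The function names and the numbers are hypothetical, and the PSC is left as a proportion exactly as the formula is written in the text (no multiplication by 100 is assumed).

```python
def percent_signal_change(condition_mean, scrambled_mean):
    """PSC of a condition relative to the scrambled baseline, as a proportion."""
    if scrambled_mean == 0:
        raise ValueError("baseline signal must be non-zero")
    return (condition_mean - scrambled_mean) / scrambled_mean


def car_minus_face(car_mean, face_mean, scrambled_mean):
    """Car response relative to faces (C-F), both expressed as PSC."""
    return (percent_signal_change(car_mean, scrambled_mean)
            - percent_signal_change(face_mean, scrambled_mean))


def is_face_selective(face_psc, car_psc, sofa_psc):
    """A voxel counts as face-selective if its maximal response is to faces."""
    return face_psc > max(car_psc, sofa_psc)


# Hypothetical mean signal for one voxel, averaged over the 4-16 s window
scrambled, face, car, sofa = 1000.0, 1012.0, 1006.0, 1004.0
face_psc = percent_signal_change(face, scrambled)
car_psc = percent_signal_change(car, scrambled)
sofa_psc = percent_signal_change(sofa, scrambled)
c_minus_f = car_minus_face(car, face, scrambled)
print(face_psc, car_psc, c_minus_f, is_face_selective(face_psc, car_psc, sofa_psc))
```

The per-voxel C–F values would then be averaged within an ROI before being related to the behavioral expertise index.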

For whole field-of-view (FOV) group analyses only, 3D spatial smoothing was applied to all functional data using a Gaussian filter of 2mm FWHM, and pre-processed functional slice-based data were aligned to the Talairach-transformed brain. For all whole-FOV contrasts, an initial contrast with an uncorrected threshold of p<0.02 was applied to correct for multiple comparisons using Brain Voyager’s Cluster Threshold Estimator (Forman et al., 1995; Goebel et al., 2006), which uses a Monte Carlo simulation with iterative Gaussian filtering to calculate the cluster size that yields a corrected threshold of p<0.05.

To test whether functional patterns of response across brain regions were affected by physiological noise variations at different anatomical locations, we measured the timeseries signal to noise (tSNR) ratio of the voxels composing bilateral FFA1 and FFA2 using the mean signal intensity divided by the standard deviation of the signal time course for the scrambled condition in the three low load experimental runs. Mean tSNR for right FFA1/FFA2 was 41.5 and 38.8, respectively, and a 2-sample t-test revealed no significant difference in the noise of these regions (t=.76, n.s.). Mean tSNR for left FFA1/FFA2 was 43.5 and 44.5, respectively, again with no significant difference (t=−.21, n.s.).
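
The tSNR measure described above is the mean of a voxel's time course divided by its standard deviation. A minimal sketch follows, with hypothetical signal values; the use of the sample standard deviation and the averaging of voxel-wise tSNR within an ROI are assumptions, since the text does not specify either choice.

```python
from statistics import mean, stdev


def tsnr(timecourse):
    """Time-series signal-to-noise ratio: mean signal / standard deviation."""
    return mean(timecourse) / stdev(timecourse)  # sample SD (assumption)


def roi_tsnr(voxel_timecourses):
    """Average the voxel-wise tSNR over all voxels of an ROI (assumption)."""
    return mean(tsnr(tc) for tc in voxel_timecourses)


# Hypothetical time courses (scrambled condition) for two voxels of one ROI
voxels = [
    [100.0, 102.0, 98.0, 100.0],
    [200.0, 204.0, 196.0, 200.0],
]
print(round(roi_tsnr(voxels), 2))
```

Because tSNR is scale-invariant, the two hypothetical voxels above yield the same value even though their mean signal differs by a factor of two.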

Behavioral tests, stimuli and expertise index

All subjects completed two behavioral assessment tests following their fMRI session: a sequential matching expertise test used to quantify individual skill at matching cars (Curby et al., 2009; Gauthier et al., 2000; 2005; Grill-Spector et al., 2004; Rossion et al., 2004; Xu, 2005) and the Vanderbilt Expertise Test (VET; McGugin et al., 2012b).

In the sequential matching test, subjects made same-different judgments on greyscale images of 56 car, plane and bird images not used in the MRI portion of the experiment. Subjects performed 12 blocks of 28 sequential matching trials per category. On each trial, the first stimulus appeared for 1000ms followed by a 500ms mask. A second stimulus then appeared and remained visible until a same/different response was made or 5000ms elapsed. Subjects judged whether the two images showed cars or planes from the same make and model regardless of year, or birds of the same species. An expertise sensitivity score, d’, was calculated for cars (range 0.64–3.17), planes (range 0.59–2.73), and birds (range 0.21–2.32) for each subject. A subset of subjects (n=11) self-reported expertise with cars and outperformed the others in car matching (t=2.23, p=.039).
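
For readers unfamiliar with the sensitivity index, d’ in a same-different task is the difference between the z-transformed hit rate and false alarm rate. The sketch below is illustrative only: the log-linear correction for extreme rates is an assumption (the text does not state how rates of 0 or 1 were handled), and the trial counts are made up.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false alarm rate).

    Assumption: a log-linear correction (add 0.5 to each count) keeps the
    rates away from 0 and 1, where the z-transform is undefined.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts for one subject and one category
novice = d_prime(hits=10, misses=10, false_alarms=10, correct_rejections=10)
expert = d_prime(hits=18, misses=2, false_alarms=2, correct_rejections=18)
print(round(novice, 2), round(expert, 2))
```

A subject responding at chance obtains a d’ of zero, and values grow as hits increase and false alarms decrease.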

The Vanderbilt Expertise Test (VET; McGugin et al., 2012b) included eight object categories: leaves, owls, butterflies, wading birds, mushrooms, cars, planes and motorcycles (no overlap with the other test or the scan). For each category, target images consisted of four exemplars from six unique species/models, while distractor images showed 48 exemplars from novel species/models. Before each category block, subjects studied a display with one exemplar from each of 6 species/models. For each test trial, one of the studied exemplars (identical images for the first twelve trials, or transfer images requiring generalization across viewpoint, size and settings for the subsequent 36 trials) was presented with two distractors from another species/model in a forced-choice paradigm. The target image could occur in any of the three positions, and subjects indicated which image of the triplet was the studied target. For a complete description of the VET, see McGugin et al. (2012b). Principal component analysis has revealed that the underlying structure of this eight-category VET can be largely explained by two independent factors that map onto living and non-living objects. Therefore, here, we reduced VET performance to a Living Objects score (average of butterflies, leaves, mushrooms, owls, wading birds) and a Non-Living Objects score (average of motorcycles and planes, excluding cars). The 11 self-reported car experts outperformed the others in the car portion of the VET (t=2.82, p=.008).

An aggregate car expertise index was calculated based on the highly-correlated standardized car performance in the matching task and standardized car performance on the VETcar (r=.75). An aggregate non-car performance index was calculated based on the standardized performance for planes and birds from the matching task, as well as standardized VET Living Objects and Non-Living Objects scores (matching and memory indices were correlated, r=.38). In the following analyses, we regress out the non-car aggregate scores (SD =.63) from the car aggregate scores (SD = .93). Henceforth, all mention of car expertise refers to the car aggregate index with the non-car aggregate index applied as covariate: importantly, this measure of car expertise allows us to reveal effects that cannot be explained by domain-general performance.
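
The construction of the expertise index can be sketched as two steps: standardize and combine the car measures, then remove the variance shared with the non-car index. The code below is a minimal illustration with made-up scores for five subjects. Combining standardized scores by averaging is an assumption (the text does not say whether scores were averaged or summed), and all function names are hypothetical.

```python
from statistics import mean, stdev


def zscore(values):
    """Standardize scores to mean 0 and SD 1 across subjects."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def aggregate(*measures):
    """Average the z-scored measures subject by subject (assumption)."""
    standardized = [zscore(m) for m in measures]
    return [mean(subject) for subject in zip(*standardized)]


def residualize(y, x):
    """Residuals of y after a simple linear regression on x."""
    mx, my = mean(x), mean(y)
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    intercept = my - slope * mx
    return [b - (intercept + slope * a) for a, b in zip(x, y)]


# Hypothetical scores for five subjects
car_matching = [1.2, 2.8, 0.9, 2.1, 1.6]    # car d' from the matching test
car_vet = [0.55, 0.90, 0.50, 0.80, 0.60]    # car accuracy on the VET
non_car = [0.1, 0.4, -0.3, 0.2, -0.4]       # aggregate non-car index

car_index = aggregate(car_matching, car_vet)
car_expertise = residualize(car_index, non_car)
print([round(v, 2) for v in car_expertise])
```

By construction, the residualized scores are uncorrelated with the non-car index, which is what allows the measure to isolate effects not explained by domain-general performance.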

Behavioral discrimination follow-up

To test whether expertise for cars afforded a processing advantage for visually similar sofas, a behavioral discrimination follow-up experiment was conducted. A separate group of 75 healthy adults (39 males, ages 18 to 30 with an average of 21) participated for monetary compensation or course credit.

Subjects made same-different judgments on sequentially presented car, sofa and chair images in 12 blocks of 28 sequential matching trials per category. Chairs were chosen because they were semantically similar to sofas but less visually similar to cars. Unique exemplars (56) from each category were used, with six variations per exemplar varying in color, presentation size and/or horizontal rotation. These adjustments were added to increase the difficulty of matching based exclusively on low-level visual properties, effectively taking performance off ceiling on all categories. On each trial, the first stimulus appeared for 1000ms followed by a 500ms mask. A second stimulus then appeared and remained visible until a same/different response was made or 5000ms elapsed. Subjects judged whether the two images showed the exact same car, sofa or chair, regardless of color or rotation. A sensitivity score, d’, was calculated for cars (range .51–3.44), sofas (range .35–2.51), and chairs (range .77–3.31) for each subject.

These subjects also completed the sequential matching test with cars, planes and birds followed by the VET (described above).

Results

Mean category-specific BOLD responses

We obtained confirmation in independent data for the selectivity of our ROIs using the category-selective HR responses from the low load runs. For the entire group of subjects (without considering car expertise), paired t-tests performed on mean PSC values show the greatest response to faces in SR face>object regions in the fusiform and occipital gyri, to cars and/or sofas in SR object>face regions in the parahippocampal gyrus, and no category preference in early visual areas defined as scrambled>intact (Table 1).

Effect of task manipulation

The high load runs place higher demands on perceptual processing and attention, as stimuli are presented faster and the task was subjectively more difficult and led to more errors (t-test of overall accuracy in both tasks: t=10.25, p<0.0001). Our goal of assessing how robust expertise effects were in the FFA further requires that this manipulation influence visual activation as expected. Whole FOV analyses revealed significant effects of task, [(CS-FS) vs. (C-F)] in several areas, such as decreases in activity accompanying the more difficult task in several regions including areas near the average coordinates for the OFA and FFA1 bilaterally, as well as left FFA2. Interestingly, a few areas showed more activity in the high load runs, in the right inferior and middle temporal gyrus, and the right anterior fusiform (Figure 2A). The task contrast had no significant effect in any of the 6 face-selective ROIs (all ps>0.48).

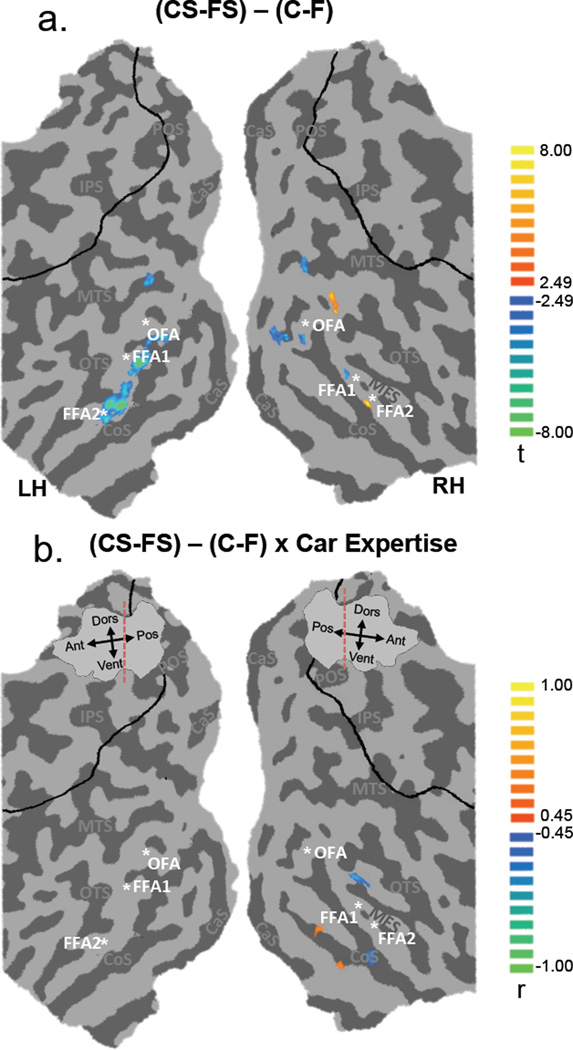

Figure 2.

(a) Group-average t-map showing the difference between the BOLD response to cars (relative to faces) in high and low load runs: (CS-FS) – (C-F). (b) Group-average r-map for the partial correlations between the BOLD response for (CS-FS) – (C-F) and behavioral car expertise regressing out non-car performance, overlaid on an individual’s flattened right and left hemisphere. The map is shown at a corrected threshold of .02 based on an alpha of .05 and a cluster threshold of 102 voxels. The black outlines depict the approximate borders of the HR field of view. See Table 3 for names, peak t- or r-values, and peak Talairach coordinates of each activation cluster. Asterisks represent the average Talairach coordinates for bilateral group FFA1, FFA2 and OFA, as reported in Table 1. Sulci labels: CaS: Calcarine Sulcus; CoS: Collateral Sulcus; MFS: Mid-Fusiform Sulcus; OTS: Occipito-Temporal Sulcus; POS: Parieto-Occipital Sulcus; IPS: Intraparietal Sulcus; MTS: Middle Temporal Sulcus.

Moreover, we looked for regions where behavioral car expertise was differentially correlated with each task. We find that car expertise effects were significantly reduced in several visual areas, including areas near the average coordinates for FFA1 and FFA2 bilaterally (Figure 2B). We consider car expertise effects in the context of the task manipulation in individually defined face-selective ROIs below, to directly address the goals of our study.

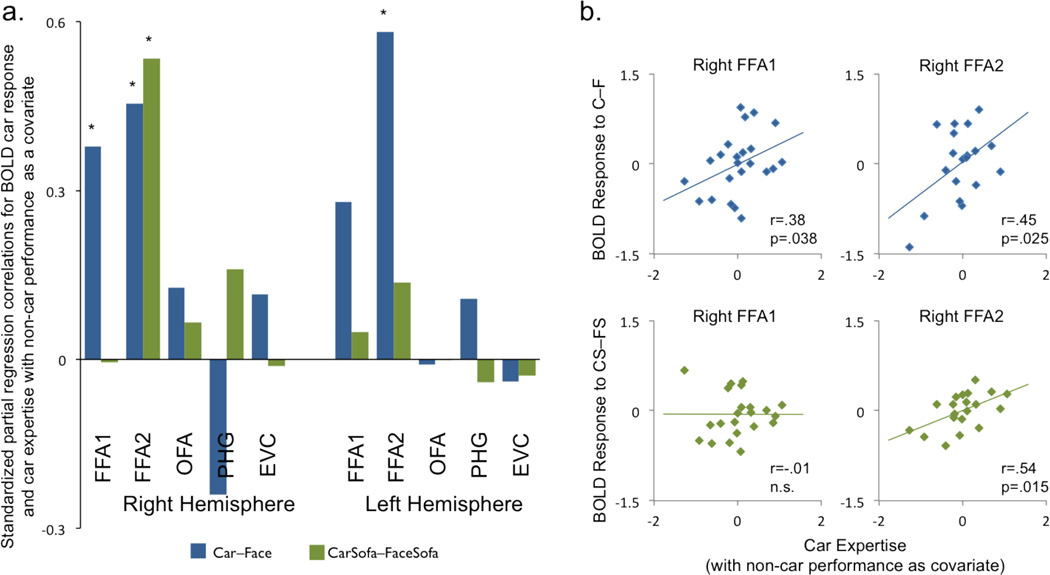

Car expertise effects

Car expertise predicted mean PSC to cars in face-selective areas when presented in isolation as in prior work (Gauthier et al., 2000; 2005; Xu 2005) and consistent with a preliminary analysis of the low load category data in McGugin et al. (2012a). Faces were used as a baseline, with the advantage that little variability in face expertise would be expected (Furl et al., 2011; Gauthier et al., 2005; Xu 2005). In each ROI, we calculated the standardized partial correlation between the behavioral car expertise index (with non-car performance as a covariate) and the BOLD response to cars vs. faces. Car expertise effects were found in right FFA1 and bilateral FFA2 (Figure 3; Table 2). Expertise effects were not observed in face-selective regions in the occipital lobe, in object-selective regions in the parahippocampal gyrus, or in early visual areas.
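
The partial correlation used in each ROI can be obtained from the three pairwise Pearson correlations among the BOLD measure, the car expertise index and the non-car covariate. The following sketch uses the standard first-order partial correlation formula with made-up data; the variable names and values are hypothetical and are not taken from the study.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def partial_correlation(x, y, covariate):
    """Correlation between x and y with the covariate partialled out of both."""
    r_xy = pearson(x, y)
    r_xz = pearson(x, covariate)
    r_yz = pearson(y, covariate)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical values for five subjects in one ROI
car_index = [1.0, 2.0, 3.0, 4.0, 5.0]       # behavioral car expertise
bold_c_minus_f = [2.0, 1.0, 4.0, 3.0, 5.0]  # BOLD response, cars minus faces
non_car_index = [1.0, -1.0, -1.0, 1.0, 0.0] # non-car performance covariate

r_partial = partial_correlation(car_index, bold_c_minus_f, non_car_index)
print(round(r_partial, 3))
```

In this toy example the covariate is unrelated to both other variables, so the partial correlation equals the simple correlation; with real data the covariate removes the portion of the relationship attributable to domain-general performance.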

Figure 3.

Standardized partial regression analyses on the relationship between the BOLD response to Car–Face or CarSofa–FaceSofa and behavioral car expertise, with non-car expertise as a covariate. All variables are shown as z-scores. (a) Bar graphs represent the magnitude of the standardized partial correlation for five regions of interest (Methods) bilaterally: rFFA1 (N=23), rFFA2 (N=19), rOFA (N=18), rPHG (N=25), rEVC (N=22), lFFA1 (N=19), lFFA2 (N=18), lOFA (N=14), lPHG (N=26), lEVC (N=23). Asterisks show significant correlations at an alpha of .05 (1-tail test for Car–Face and 2-tail test for CarSofa–FaceSofa). (b) Scatterplots corresponding to the graphed partial correlations in (a) for Car–Face (top) and CarSofa–FaceSofa (bottom) for rFFA1 and rFFA2. The extreme point in the upper left region of the rFFA1 CarSofa–FaceSofa plot did not classify as an outlier (externally studentized residual=2.55); however, even when this point is removed, the correlation is not significant (r=.28, n.s.).

Table 2.

In ten functionally-defined ROIs, the standardized partial correlations between behavioral car expertise (with non-car performance as a covariate) and the BOLD response to car–face (C-F), car–face in the context of sofas (CS-FS), sofa–face (S-F) and sofa–face in the context of cars (SC-FC). Shaded/bolded cells indicate significant correlations at an alpha of .05.

| ROI | C-F | CS-FS | S-F | SC-FC |
|---|---|---|---|---|
| Right FFA1 | 0.378 | −0.005 | 0.294 | −0.169 |
| Right FFA2 | 0.454 | 0.535 | 0.417 | 0.471 |
| Right OFA | 0.127 | 0.066 | 0.441 | −0.044 |
| Right PHG | −0.24 | 0.16 | 0.026 | −0.077 |
| Right EVC | 0.116 | −0.012 | 0.09 | 0.038 |
| Left FFA1 | 0.28 | 0.049 | 0.382 | −0.189 |
| Left FFA2 | 0.582 | 0.137 | 0.575 | 0.298 |
| Left OFA | −0.009 | −0.001 | 0.061 | 0.01 |
| Left PHG | 0.108 | −0.041 | 0.237 | 0.278 |
| Left EVC | −0.04 | −0.029 | 0.239 | −0.224 |

Next, we considered expertise effects for cars, relative to faces, during the high load runs. Again, in each ROI we computed the partial correlation between the BOLD response subtraction (cars – faces, when both categories were presented in the context of sofas) and behavioral car expertise (regressing out non-car performance). The higher demands led to a different picture. The higher load did not affect the expertise effect in right FFA2, which was the only ROI showing a significant effect of expertise (r=.54, Figure 3; Table 2). In the high load condition, no other ROI showed a correlation above r=.16, and there were marginally significant reductions in car expertise effects in the other two ROIs that had shown a car expertise effect in the low load runs, right FFA1 (Hotelling’s t=1.44, p=.08) and left FFA2 (t=1.61, p=.06). To demonstrate this reduction of expertise effects with a power of .8 at an alpha of .05, we would need 67 and 58 participants in the right FFA1 and left FFA2 analyses respectively, as opposed to our current sample sizes of 23 and 18 for these regions. It is hard to imagine a larger effect size, however: for instance, the right FFA1 shows a low load correlation with expertise of around .4, close to the average effect size of car expertise in FFA based on our meta-analysis, whereas the high load correlation with expertise is virtually 0 and, therefore, the expertise effect theoretically could not be smaller.
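The comparison of low and high load expertise effects involves two correlations that share a variable (behavioral expertise) and come from the same subjects. One common way to test such a difference is Hotelling’s t for dependent correlations, sketched below. This is an illustration under stated assumptions: the function name is hypothetical, the formula is the textbook version for simple (not partial) correlations, and the correlation between the low and high load BOLD responses (`r_low_high`) is not reported in the text, so the value used here is a placeholder. The output is therefore not expected to reproduce the reported statistics.

```python
import math

def hotelling_t(r_xy, r_xz, r_yz, n):
    """Hotelling's t for H0: corr(x, y) == corr(x, z), all measured in the same n subjects.

    Returns (t, df) with df = n - 3.
    """
    # Determinant of the 3x3 correlation matrix of x, y and z.
    det = 1 - r_xy**2 - r_xz**2 - r_yz**2 + 2 * r_xy * r_xz * r_yz
    t = (r_xy - r_xz) * math.sqrt((n - 3) * (1 + r_yz) / (2 * det))
    return t, n - 3

# Right FFA1 correlations with expertise from Table 2 (low load, high load), N = 23.
r_low, r_high, n = 0.378, -0.005, 23
r_low_high = 0.3  # placeholder: low/high load BOLD correlation, not reported in the text

t, df = hotelling_t(r_low, r_high, r_low_high, n)
print(f"t({df}) = {t:.2f}")
```

Because the test statistic grows with the square root of the sample size, a difference of this kind can remain only marginally significant in samples of about 20 subjects even when one of the two correlations is close to zero.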

Group analyses over the whole FOV confirm that expertise effects are reduced in several extrastriate regions by our task manipulation (Figure 2). In contrast, it is also clear that the car expertise effect in right FFA2 was not affected at all by this manipulation (the correlation was, if anything, numerically stronger).

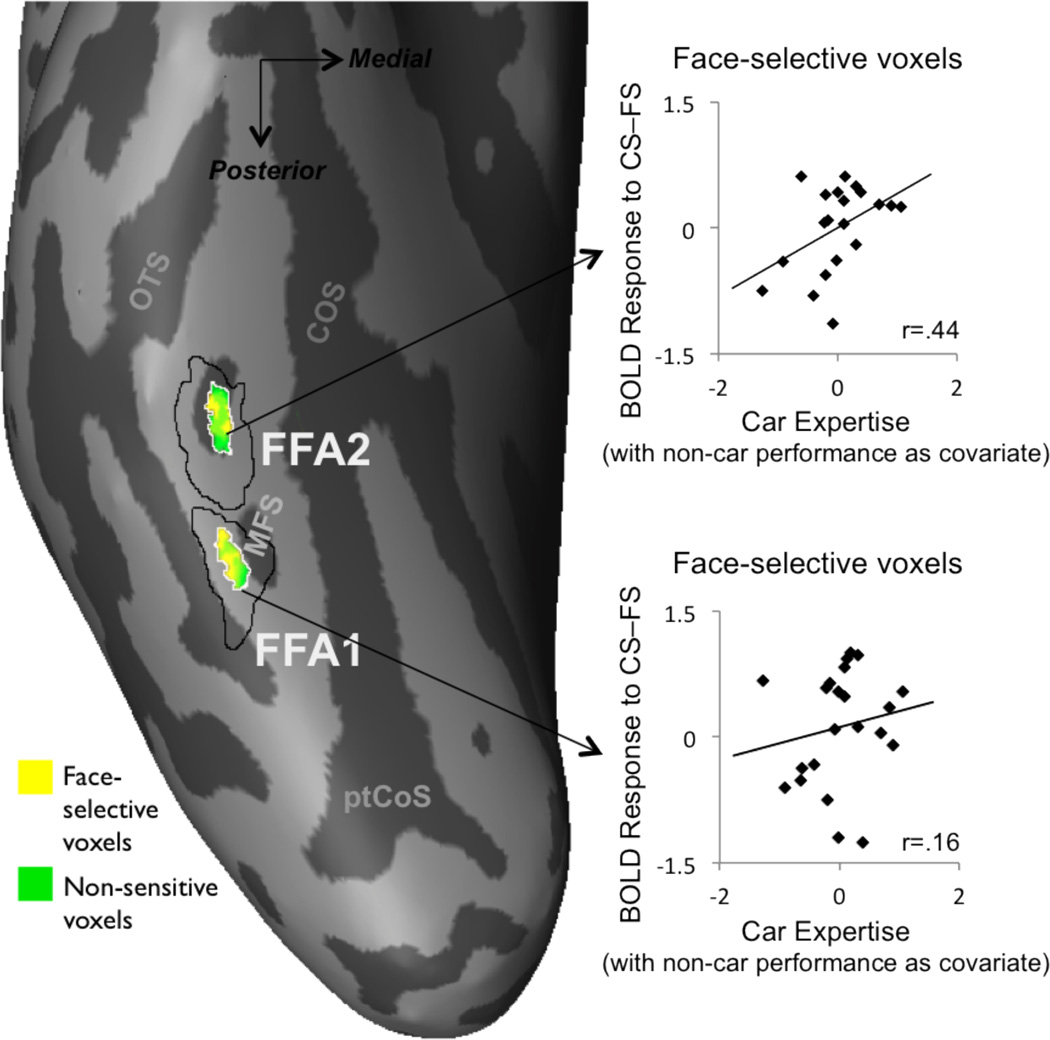

Car expertise effects in the center of FFA1/FFA2

Within each face-selective ROI defined at SR, there is a subset of HR voxels that are most strongly face selective. In McGugin et al. (2012a), we reported that car expertise effects were obtained in the most face-selective voxels of the FFA ROIs. Thus, within each fusiform ROI we raised the threshold of the SR face > object contrast map to look within the 25 mm² peak of face-selectivity. Then, using data from the low load runs, we sorted HR voxels into two groups depending on their maximal response: face-selective and non-sensitive voxels (Figure 4). Face voxels in these 25 mm² regions accounted for approximately half of the voxels in each ROI (from 47% in the rFFA2 to 55% in the lFFA2). These maximally face-selective voxels were spatially interdigitated amongst non-sensitive voxels responding maximally to cars or sofas (Figure 4). Within only these face-selective voxels in the middle of the FFAs, using independent data from the high load runs, we found a significant effect of car expertise in the right FFA2 (r=.44, p=.05), but not in any of the other ROIs (all ps>0.20). This result suggests that the robust effects of car expertise in FFA2 cannot be attributed to spatial smoothing or an ROI definition that is too liberal.
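The voxel sorting step can be illustrated with a short sketch: each voxel in the peak region is labeled by the category that evokes its largest response, and the proportion of face-preferring voxels is then computed. The data layout, function names and response values below are hypothetical. The sketch also leaves out the step in which the labels (from low load runs) are applied to independent data (high load runs) for the expertise analysis.

```python
def label_voxels(responses):
    """Label each voxel by the category with its maximal mean response.

    responses: list of dicts mapping category name -> mean response of one voxel.
    """
    labels = []
    for voxel in responses:
        preferred = max(voxel, key=voxel.get)
        labels.append("face" if preferred == "face" else "non-face")
    return labels

def fraction_face(labels):
    """Proportion of voxels whose maximal response is to faces."""
    return labels.count("face") / len(labels)

# Hypothetical mean responses for four voxels in one peak region (illustration only).
low_load = [
    {"face": 1.2, "car": 0.7, "sofa": 0.5},
    {"face": 0.4, "car": 0.9, "sofa": 0.6},
    {"face": 1.0, "car": 0.8, "sofa": 0.9},
    {"face": 0.3, "car": 0.2, "sofa": 0.6},
]

labels = label_voxels(low_load)
print(labels, fraction_face(labels))
```

Defining the voxel labels on one set of runs and testing the expertise effect on another avoids selecting voxels on the same data used for the test.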

Figure 4.

Inflated posterior portion of a representative subject’s right hemisphere. As defined from the SR face>object localizer run, the 100 mm² rFFA1 and rFFA2 are outlined in black, while the 25 mm² peak of face-selectivity for each region is outlined in white. Voxels within the 25 mm² peak were sorted by whether they responded maximally to faces or to cars/sofas (labeled face-selective and non-sensitive, respectively), based on data from the HR low load runs. (Surface vertices encompassing two voxels of different preferences (i.e., face and car/sofa) appear in a yellow-green blend.) There were HR voxels within the 25 mm² peak that responded most to cars or sofas (average in rFFA1: 48%, rFFA2: 53%), and these non-face voxels were spatially interdigitated amongst the maximally face-selective voxels (average in rFFA1: 52%, rFFA2: 47%). CoS: Collateral Sulcus; OTS: Occipito-Temporal Sulcus; MFS: Mid-Fusiform Sulcus; ptCoS: Posterior Transverse Collateral Sulcus. See Supplemental Figures 1 and 2 for additional examples of voxel distributions in FFA1 and/or FFA2 of individual subjects’ right and left hemispheres. The scatterplots to the right show the standardized partial regressions on the relationship between behavioral car expertise (with non-car performance as a covariate) and the BOLD response to CarSofa–FaceSofa from within the HR voxels maximally selective to faces from the 25 mm² hub of face-selectivity for rFFA1 and rFFA2. Even those maximally face-selective voxels show an effect of car expertise in the rFFA2 but not rFFA1 during the demanding high load runs.

Sofa effects

Since we had included sofas because of their similarity to cars in global shape, we looked at whether car expertise predicted mean PSC to sofas, relative to faces, when presented in isolation or in the context of alternating cars. In the high load condition, cars were presented both with alternating sofas (CS) and with alternating faces (CF). Surprisingly, we observed a correlation between the sofa response and behavioral car expertise in bilateral FFA2 and right OFA during low load runs and in right FFA2 during high load runs (Table 2). In general, the pattern of response relative to behavioral car expertise is very similar for sofa–face as for car–face, and particularly so in bilateral FFA2, suggesting that the responses to sofas as a function of car expertise may depend on their similarity to cars. For both cars and sofas, the only ROI that shows a significant effect of expertise in the high load condition is right FFA2.

A behavioral follow-up (Methods) found no evidence that car expertise predicts sofa discrimination. The results are qualitatively identical whether we look at all subjects or only at men, which was important because most of our fMRI subjects were men. The car expertise index predicted performance for cars (r(75)=.31, p=.004; r(49)=.41, p=.01) but not performance for sofas (r(75)=.13, p=.27; r(49)=.09, p=.57) or chairs (r(75)=.11, p=.36; r(49)=.14, p=.39). In both samples, Steiger’s Z showed the correlation with car performance to be significantly higher than that for sofas (ps=.01). These results provide no support for the idea that car expertise is generally accompanied by an advantage in the recognition of sofas, although we cannot rule out that the two abilities were associated by chance in the fMRI sample. Instead, we would argue that just as face cells have been shown to respond to apples/clocks (Tsao et al., 2006), car cells may respond to objects with a global car-like aspect. Generalization to sofa images in car experts is inconsistent with the idea that car expertise effects are due to car experts’ intrinsic interest in cars, as car experts are unlikely to attend preferentially to sofas. And since the effect for sofas is obtained in the easier low load task where the categories are blocked, it is unlikely that this effect of car expertise with sofas is due to subjects being confused about whether an image shows a car or a sofa. We therefore interpret this effect in the same way as the activation of face cells by round objects: as a bottom-up response driven by car-like features.

Finally, to test the conjecture that cars may be more similar to sofas than to control categories used in prior studies, we designed an experiment in which a new group of subjects was asked whether two sequentially presented objects were from the same category or not. We collected judgments comparing car and sofa images from the present study and comparing cars with objects from the last two control categories used in car expertise studies from our lab: planes were compared to cars in McGugin et al. (2012a), and butterflies were compared to cars in McGugin et al. (2014). See supplemental materials for methods. The results revealed a significant effect of category (F(2,99)=7.38, p=.0008), with subjects better able to discriminate cars from butterflies and planes than from sofas (mean d-primes: butterflies = 2.93, SD = .64; planes = 3.00, SD = .48; sofas = 2.80, SD = .68; Scheffé tests: sofas vs. butterflies, p = .05; sofas vs. planes, p = .001).
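For readers unfamiliar with the sensitivity measure reported above, the following sketch shows one standard way to compute d-prime from response counts. It is a minimal sketch and not the procedure documented in the supplemental materials: the function name, the mapping of responses to hits and false alarms, the example counts, and the log-linear correction for extreme rates are all assumptions made for illustration.

```python
from statistics import NormalDist

def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false alarm rate).

    A log-linear correction (add 0.5 to each count, 1 to each trial total) keeps
    the rates away from 0 and 1, where the inverse normal CDF is undefined.
    This correction is an assumption of the sketch.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)

# Hypothetical counts for one subject judging car/sofa pairs (illustration only).
print(round(d_prime(hits=45, misses=5, false_alarms=6, correct_rejections=44), 2))
```

A lower d-prime for car/sofa pairs than for car/plane or car/butterfly pairs would indicate that cars and sofas are harder to tell apart, which is the pattern reported above.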

Effect of Load on face-selectivity

The main goal of the load manipulation was to assess its influence on car expertise effects, and we found that a high load reduced expertise effects in several visual areas but not in the right FFA2. In addition, we can also ask whether load influences the magnitude of face-selectivity in FFA. In prior analyses, we verified that when looking at activity in high-resolution voxels at low load within the FFA defined with a standard resolution localizer, the FFA produced a significant face-selective response (Table 1). However, because some of our subjects have a high degree of car expertise, they actually show more activity for cars than faces on average in the FFA in the low load condition (number of these subjects in ROIs as defined in Table 1: rFFA1 = 4; rFFA2 = 2; lFFA1 = 6; lFFA2 = 5). Moreover, the average response to cars relative to faces did not change with load in the two right FFAs (rFFA1, t(22)=−.46, p=.65; rFFA2, t(18)=−.66, p=.16), while in the left FFAs the response became overall less face selective at high load (lFFA1, t(18)=−3.78, p=.001; lFFA2, t(17)=−4.25, p=.0005; see Figure S3). The results were virtually unchanged whether we looked at the larger ROIs or the smaller 25 mm² area at the peak of face-selectivity, or when the high load analysis only used the high-resolution voxels that were face-selective at low load. This suggests that these effects are not due to large ROIs including non-face selective voxels. Rather, it appears that just as car selectivity is reduced at high load in face areas (with the exception of right FFA2), face selectivity is also reduced by a high load in face-selective areas of the left hemisphere.

Discussion

Expertise with a category is likely to have important consequences for attention to objects from that category. For example, in Gauthier et al. (2000), a manipulation of attention to identity vs. location while viewing cars had a larger effect on car novices than on car experts, suggesting that car experts processed the identity of the cars more automatically than novices. Behavioral measures of expertise also take advantage of the fact that experts lose some flexibility in the manner in which they process objects: for instance, car experts process cars more holistically than car novices, evidenced by the finding that they are less able to selectively attend to a part of a car when asked to ignore other parts (Bukach, Phillips & Gauthier, 2010). Indeed, the expertise hypothesis of specialization in category-specific areas predicts that it is differences in how we process different categories (top-down effects) that eventually become automatized and stimulus-driven (Gauthier et al., 2000; Wong et al., 2009).

In this context, it may be difficult to theoretically separate effects of expertise and effects of attention, because expertise is expected to change what perceptual dimensions are attended (Goldstone, 1994; Folstein, Palmeri & Gauthier, 2013). Because attention during visual tasks is well known to increase the average level of activity in a region (Murray & Wojciulik, 2004; Wojciulik, Kanwisher, & Driver, 1998), there have been concerns that FFA may be engaged by expertise with non-face objects only as a result of a general attentional response producing a widespread increase in activity (Harel et al., 2010). Here, we sought evidence of variability in FFA selectivity across individuals that would predict perceptual expertise in a manner that is robust to attentional manipulations. We found evidence for such a robust effect of expertise for cars in the right anterior FFA (or FFA2).

Our manipulation of attention is different from that of Harel and colleagues (2010), who argued that they could not find a car expertise effect when subjects were asked to ignore cars. Because subjects always attended to cars in our tasks, it remains possible that the modulations we obtained are not sufficient to abolish expertise effects in FFA. However, we did not use instructions to ignore cars because the behavioral results in Harel et al. suggest that car experts were unable to ignore cars and may also have experienced competition from images of planes. In recent work (McGugin et al., in press), using a different task where cars were shown simultaneously on the screen with other objects (butterflies or faces), we found that car expertise effects were robust to the presence of another object (clutter) but were almost entirely abolished when cars were viewed in the context of another object of expertise, i.e. faces. Such competition effects between categories of expertise are interesting because they converge with a number of behavioral (Behrmann et al. 2005; McKeeff et al. 2010; McGugin et al. 2011) and ERP studies (Gauthier et al. 2003; Rossion et al. 2004; 2007) to suggest functional overlap between different categories of expertise, but they should not be confounded with attentional effects. Here, we demonstrated that our load manipulation was powerful enough to affect visual activity and to reduce expertise effects for cars across extrastriate areas (an analysis that was not performed by Harel et al.). Interestingly, the high load condition also reduced face-selectivity in the left FFA. Prior work suggests that an increase in perceptual load may play a more important role than an increase in working memory load in influencing FFA responses (Yi, Woodman, Widders, Marois & Chun, 2004). It remains to be explored how different kinds of manipulations may affect category selectivity and, more specifically, expertise effects; but our results suggest that the anterior right FFA may contain the most robust signals to predict perceptual expertise. They also suggest that load has a similar effect on face and car selectivity.

While there is a clear role of task-driven attention in the acquisition of expertise (Wong et al., 2009; Wong, Folstein & Gauthier, 2012), and perhaps even a role of attention in holistic processing of faces and other objects of expertise (Richler, Palmeri & Gauthier, 2012), there are other arguments suggesting that effects of expertise in FFA may not be simply attributable to attention. First, attention has been found to increase the selectivity of responses in face selective regions (greater difference between preferred and non-preferred categories; Puri et al., 2009), suggesting that if anything, an increase in attention to cars in experts relative to novices would help localize areas that are truly selective for cars. Second, at least within the fusiform gyrus, expertise effects are very tightly overlapping with those of face selectivity, and a general attentional effect is not sufficient to account for the same small peak of activity for cars and faces in car experts (McGugin et al., 2012a).

Our finding of the most robust expertise effects in a face-selective area in the right hemisphere is consistent with the suggestion that face-selective areas in the left hemisphere depend on information transferred from the right hemisphere (Verosky & Turk-Browne, 2012). The same advantage for right hemisphere processing may exist for non-face categories of expertise and, under particularly difficult conditions, cross-hemispheric processing may be reduced. In general, and not unlike face-selectivity, expertise effects have been observed bilaterally, although they are often qualitatively stronger in the right hemisphere (Gauthier et al., 1999; 2000; Harel et al., 2010; Harley et al., 2009; McGugin et al., 2012a). Some of the strongest lateralization effects in perceptual expertise were recently observed in contrasting expertise effects for cars versus planes. In McGugin et al. (2012a), individual differences in performance predicted activity in FFA for both cars and planes in the same group of subjects who varied in their self-reported expertise for cars, but not planes (very few subjects in that sample reported above-average interest for planes). While the right hemisphere showed robust expertise effects for both cars and planes, only cars led to any effect in the left hemisphere. We speculated at the time that perceptual skills, when they are not associated with semantic knowledge, may not be sufficient for recruitment of left hemisphere areas. It is possible that under a higher attentional load, expert perceptual representations are engaged, but not semantic representations. Importantly, these are speculations made on the basis of qualitative lateralization effects that we did not test statistically (and that, just like a difference between FFA1 and FFA2, would require considerable power to test). At a minimum, consistent with the current results and the body of work on expertise effects with objects in face-selective areas, when only one hemisphere is recruited by expertise it tends to be the right hemisphere (Gauthier et al., 2000; Harley et al., 2009; McGugin et al., 2012a; Xu 2005). Similarly, there is little work that could have led to predictions about relative effects of FFA1 and FFA2, and it is important to note that we do not have sufficient statistical power to dissociate their responses here. In the low load condition we find, as in prior work, significant expertise effects of comparable magnitude in both FFA1 and FFA2. Under high load, we find that the effect in rFFA2 is undiminished, and it is non-significant in FFA1, but we lack the power to show that it is significantly reduced by load in FFA1. Given the samples that would be needed to show such an effect, a functional dissociation between these two areas based on individual differences may have to await consideration using meta-analysis.

It is worth noting the absence of any expertise effect for cars in EVC, in contrast to Harel et al. (2010). While it is a null effect, it is obtained in the context of clear expertise effects in other areas, and it is consistent with a number of prior studies of expertise where early visual cortex was part of the field of view (Bilalic et al., 2011; Gauthier et al., 1999; 2000; Harley et al., 2009; Xu 2005; McGugin et al., in press). This suggests that expertise in individuating objects in homogeneous categories does not recruit V1 and that expertise effects for cars in Harel et al. (2010) may have been due to differences in size between cars and control images, as supported by their finding more activity for cars in V1 even for novices (although these effects were not statistically significant). Of course, other aspects of the Harel et al. study could have led to this effect, including the difference in the task. There is evidence that other kinds of perceptual expertise recruit EVC: for example, expertise in reading musical notation engages putative area V1 (Wong & Gauthier, 2010), as does perceptual learning involving orientation judgments (Sigman et al., 2005; Wong, Folstein & Gauthier, 2012).

Perhaps the most surprising finding in our study is that activity in response to images of sofas correlated with behavioral expertise for cars. This suggests that the representations that are engaged in experts depend to a large extent on global features, as sofas are most similar to car profiles in their coarse global shape. The car expertise effects with sofas may represent early activity dominated by coarse/low spatial frequency information (Bar et al., 2006; Morrison & Schyns, 2001; Sripati & Olson, 2009), without reflecting processing of finer details/higher spatial frequencies. Expertise effects with cars have been observed in FFA for both high and low spatial frequency images, with the effects being independent and thus argued to reflect different neuronal populations (Gauthier et al., 2005). In sofa stimuli, even if the global shape is similar to cars, the local information would be inconsistent, and it was reassuring to find that behavioral car expertise predicted car matching but not sofa matching. Indeed, the similarity between cars and sofas does not require car expertise, as evidenced by our finding that cars were harder to discriminate from sofas than from planes or butterflies in a sample of subjects not recruited for their expertise with cars. Therefore, it appears that a sofa looks similar to a car for car novices and experts alike, but this only activates car representations that are co-localized with face representations in car experts.

As an analogy, consider that a blob without internal features can, under certain contextual conditions, elicit strong activity in the FFA (Cox, Meyers & Sinha, 2004), although it would not support individuation, which is a function associated with FFA (Nestor et al., 2011). Not all face-selective activation is associated with good face recognition abilities, as demonstrated by face-selective responses in the FFA of congenital prosopagnosic patients (Avidan et al., 2005). We do not know if images of sofas would engage the FFAs of car experts if there had been no cars presented in the experiment. Most critical to our conclusions, our behavioral measure of car expertise regressed out behavioral performance with a number of non-car categories, such that the neural effects of expertise observed for both cars and sofas are specific to individuals who perform better with cars, relative to other object categories. They should not be interpreted as evidence that FFA responses to objects relate to domain-general object recognition performance.

The present results reveal that expertise effects for cars in face-selective high-resolution voxels of the right FFA2 are robust to a manipulation of attentional load. In addition, the triggering of responses in face-selective regions by sofas in people with domain-specific expertise with cars provides further evidence that responses for non-face objects in FFA are not driven by widespread attentional effects attributable to interest or arousal elicited by objects of expertise, since sofas are visually related to cars but are unlikely to be of special interest to car experts.

Supplementary Material

Table 3.

Results from group-averaged maps of the difference between the BOLD response to cars (relative to faces) in low and high load runs, and of this difference (i.e., (CS-FS) – (C-F)) correlated with behavioral car expertise (with non-car performance as a covariate). The table gives the name, peak t- or r-value, and peak Talairach coordinates for each cluster of activation.

| | (CS-FS) − (C-F) | | | | (CS-FS) − (C-F) × Car Expertise | | |
|---|---|---|---|---|---|---|---|
| # | Name | Cluster peak t-value | Mean Talairach coordinates for peak voxel | # | Name | Cluster peak r-value | Mean Talairach coordinates for peak voxel |
| 1 | L med fus gyrus | −4.54 | −28, −84, −16 | | | | |
| 2 | L med pos gyrus | −4.68 | −27, −70, −15 | | | | |
| 3 | L occ gyrus | −4.54 | −28, −84, −16 | | | | |
| 4 | L mid temp sulcus | −3.18 | −42, −80, −4 | | | | |
| 5 | R med fus gyrus | 3.52 | 35, −42, −15 | 1 | R parahipp gyrus | 1.66 | 21, −35, −10 |
| 6 | R post fus gyrus | −3.07 | 28, −56, −14 | 2 | R ent cortex | 0.94 | 19, −52, −5 |
| 7 | R occ gyrus 1 | 3.26 | 49, −73, −6 | 3 | R col sulcus | −1.2 | 35, −28, −13 |
| 8 | R mid temp sulcus | −3.04 | 32, −87, 5 | 4 | R occ temp gyrus | −2.14 | 42, −61, −10 |
| 9 | R occ gyrus 2 | −3.09 | 23, −74, −14 | | | | |
| 10 | R occ gyrus 3 | −3.53 | 11, −84, −9 | | | | |

Acknowledgements

This work has been supported by the NSF (SBE-0542013), the Vanderbilt Vision Research Center (P30-EY008126), and the National Eye Institute (R01 EY013441-06A2). We thank Benjamin Tamber-Rosenau for comments on the manuscript.

References

- Avidan G, Hasson U, Malach R, Behrmann M. Detailed exploration of face-related processing in congenital prosopagnosia: 2. Functional neuroimaging findings. Journal of Cognitive Neuroscience. 2005;17(7):1150–1167. doi: 10.1162/0898929054475145. [DOI] [PubMed] [Google Scholar]

- Bar M, et al. Top-down facilitation of visual recognition. Proc Natl Acad Sci USA. 2006;103:449–454. doi: 10.1073/pnas.0507062103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Behrmann M, Marotta J, Gauthier I, Tarr MJ, McKeeff TJ. Behavioral Change and Its Neural Correlates in Visual Agnosia After Expertise Training. J Cogn Neurosci. 2005;17:554–568. doi: 10.1162/0898929053467613. [DOI] [PubMed] [Google Scholar]

- Bilalic M, Langner R, Ulrich R, Grodd W. Many faces of expertise: Fusiform Face Area in chess experts and novices. J Neurosci. 2011;31:10206–10214. doi: 10.1523/JNEUROSCI.5727-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brainard DH. The psychophysics toolbox. Spat. Vis. 1997;10:433–436. [PubMed] [Google Scholar]

- Bukach CM, Phillips SW, Gauthier I. Limits of generalization between categories and implications for theories of category specificity. Att Percept Psycho. 2010;72:1865–1874. doi: 10.3758/APP.72.7.1865. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cox D, Meyers E, Sinha P. Contextually evoked object-specific responses in human visual cortex. Science. 2004;304:115–117. doi: 10.1126/science.1093110. [DOI] [PubMed] [Google Scholar]

- Curby KM, Glazek K, Gauthier I. A visual short-term memory advantage for objects of expertise. J Exp Psychol- Human. 2009;35:94–107. doi: 10.1037/0096-1523.35.1.94. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Folstein JR, Palmeri TJ, Gauthier I. Category learning increases discriminability of relevant object dimensions in visual cortex. Cerebral Cortex. 2013;23:814–823. doi: 10.1093/cercor/bhs067. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Forman SD, Cohen JD, Fitzgerald M, Eddy WF, Mintun MA, Noll DC. Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): use of a cluster-size threshold. Magn Reson Med. 33(5):636–647. doi: 10.1002/mrm.1910330508. [DOI] [PubMed] [Google Scholar]

- Furl N, Garrido L, Dolan RJ, Driver J, Duchaine B. Fusiform gyrus face selectivity relates to individual differences in facial recognition ability. J Cogn Neurosci. 2011;23:1723–1740. doi: 10.1162/jocn.2010.21545. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gauthier I, Curran T, Curby KM, Collins D. Perceptual interference supports a non-modular account of face processing. Nat Neurosci. 2003;6:428–432. doi: 10.1038/nn1029. [DOI] [PubMed] [Google Scholar]

- Gauthier I, Skudlarski P, Gore JC, Anderson AW. Expertise for cars and birds recruits brain areas involved in face recognition. Nat Neurosci. 2000;3:191–197. doi: 10.1038/72140. [DOI] [PubMed] [Google Scholar]

- Gauthier I, Tarr MJ, Anderson AW, Skudlarski P, Gore JC. Activation of the middle fusiform 'face area' increases with expertise in recognizing novel objects. Nat Neurosci. 1999;2:568–573. doi: 10.1038/9224. [DOI] [PubMed] [Google Scholar]

- Gauthier I, Curby KM, Skudlarski P, Epstein R. Individual differences in FFA activity suggest independent processing at different spatial scales. Cogn Affect Behav Ne. 2005;5:222–234. doi: 10.3758/cabn.5.2.222. [DOI] [PubMed] [Google Scholar]

- Goebel R, Esposito F, Formisano E. Analysis of functional image analysis contest (FIAC) data with Brainvoyager QX: From single-subject to cortically aligned group general linear model analysis and self-organizing group independent component analysis. Human Brain Mapping. 2006;27:392–401. doi: 10.1002/hbm.20249. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Goldstone RL. Influences of categorization on perceptual discrimination. J Exp Psychol Gen. 1994;123:178–200. doi: 10.1037//0096-3445.123.2.178. [DOI] [PubMed] [Google Scholar]

- Grill-Spector K, Knouf N, Kanwisher N. The FFA subserves face perception not generic within category identification. Nat Neurosci. 2004;7:555–562. doi: 10.1038/nn1224. [DOI] [PubMed] [Google Scholar]

- Grill-Spector KS, Sayres R, Ress D. High-resolution imaging reveals highly selective nonface clusters in the fusiform face area. Nat Neurosci. 2006;9:1177–1185. doi: 10.1038/nn1745. [DOI] [PubMed] [Google Scholar]

- Harel A, Gilaie-Dotan S, Malach R, Bentin S. Top-down engagement modulates the neural expressions of visual expertise. Cereb Cortex. 2010;20:2304–2318. doi: 10.1093/cercor/bhp316. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Harley EM, et al. Engagement of Fusiform Cortex and Disengagement of Lateral Occipital Cortex in the Acquisition of Radiological Expertise. Cereb Cortex. 2009;19:2746–2754. doi: 10.1093/cercor/bhp051. [DOI] [PMC free article] [PubMed] [Google Scholar]

- James TW, James KH. Expert individuation of objects increases activation in the fusiform face area of children. NeuroImage. 2013;67:182–192. doi: 10.1016/j.neuroimage.2012.11.007. [DOI] [PubMed] [Google Scholar]

- Julian JB, Fedorenko E, Webster J, Kanwisher N. An algorithmic method for functionally defining regions of interest in the ventral visual pathway. NeuroImage. 2012;60:2357–2364. doi: 10.1016/j.neuroimage.2012.02.055. [DOI] [PubMed] [Google Scholar]

- Li W, Piech V, Gilbert CD. Perceptual leaning and top-down influences in primary visual cortex. Nature Neuroscience. 2004;7:651–657. doi: 10.1038/nn1255. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McGugin RW, Gatenby JC, Gore JC, Gauthier I. High-resolution imaging of expertise reveals reliable object selectivity in the FFA related to perceptual performance. Proc Natl Acad Sci USA. 2012a;109:17063–17068. doi: 10.1073/pnas.1116333109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McGugin RW, McKeeff TJ, Tong F, Gauthier I. Irrelevant objects of expertise compete with faces during visual search. Atten Percept Psychophys. 2011;73:309–317. doi: 10.3758/s13414-010-0006-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McGugin RW, Richler JJ, Herzmann G, Speegle M, Gauthier I. The Vanderbilt Expertise Test Reveals Domain-General and Domain-Specific Sex Effects in Object Recognition. Vision Research. 2012b;69:10–22. doi: 10.1016/j.visres.2012.07.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McGugin RW, Van Gulick AE, Tamber-Rosenau BJ, Ross D, Gauthier I. Expertise effects in face-selective areas are robust to clutter and diverted attention, but not to competition. Cereb Cortex. (In Press). doi: 10.1093/cercor/bhu060.

- McKeeff TJ, McGugin RW, Tong F, Gauthier I. Expertise increases the functional overlap between face and object perception. Cognition. 2010;117:355–360. doi: 10.1016/j.cognition.2010.09.002.

- Morrison DJ, Schyns PG. Usage of spatial scales for the categorization of faces, objects, and scenes. Psychon Bull Rev. 2001;8:454–469. doi: 10.3758/bf03196180.

- Murray SO, Wojciulik E. Attention increases neural selectivity in the human lateral occipital complex. Nat Neurosci. 2004;7:70–74. doi: 10.1038/nn1161.

- Nestor A, Plaut DC, Behrmann M. Unraveling the distributed neural code of facial identity through spatiotemporal pattern analysis. Proc Natl Acad Sci USA. 2011;108:9998–10003. doi: 10.1073/pnas.1102433108.

- Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision. 1997;10:437–442.

- Pinsk MA, Arcaro M, Weiner KS, Kalkus JF, Inati SJ, Gross CG, Kastner S. Neural representations of faces and body parts in macaque and human cortex: a comparative fMRI study. J Neurophysiol. 2009;101:2581–2600. doi: 10.1152/jn.91198.2008.

- Puri AM, Wojciulik E, Ranganath C. Category expectation modulates baseline and stimulus-evoked activity in human inferotemporal cortex. Brain Res. 2009;1301:89–99. doi: 10.1016/j.brainres.2009.08.085.

- Rossion B, Collins D, Goffaux V, Curran T. Long-term expertise with artificial objects increases visual competition with early face categorization processes. J Cogn Neurosci. 2007;19:543–555. doi: 10.1162/jocn.2007.19.3.543.

- Rossion B, Kung CC, Tarr MJ. Visual expertise with nonface objects leads to competition with the early perceptual processing of faces in the human occipitotemporal cortex. Proc Natl Acad Sci USA. 2004;101:14521–14526. doi: 10.1073/pnas.0405613101.

- Sigman M, Pan H, Yan Y, Stern E, Silbersweig S, Gilbert C. Top-down reorganization of activity in the visual pathway after learning a shape identification task. Neuron. 2005;46:823–835. doi: 10.1016/j.neuron.2005.05.014.

- Sripati AP, Olson CR. Representing the forest before the trees: a global advantage effect in monkey inferotemporal cortex. J Neurosci. 2009;29:7788–7796. doi: 10.1523/JNEUROSCI.5766-08.2009.

- Talairach J, Tournoux P. Co-Planar Stereotaxic Atlas of the Human Brain. New York: Thieme Medical; 1988.

- Tsao DY, Freiwald WA, Tootell RB, Livingstone MS. A cortical region consisting entirely of face-selective cells. Science. 2006;311:670–674. doi: 10.1126/science.1119983.

- Verosky SC, Turk-Browne NB. Representations of facial identity in the left hemisphere require right hemisphere processing. J Cogn Neurosci. 2012;24:1006–1017. doi: 10.1162/jocn_a_00196.

- Weiner KS, Sayres R, Vinberg J, Grill-Spector K. fMRI-adaptation and category selectivity in human ventral temporal cortex: Regional differences across time scales. J Neurophysiol. 2010;103:3349–3365. doi: 10.1152/jn.01108.2009.

- Weiner KS, et al. The mid-fusiform sulcus: A landmark identifying both cytoarchitectonic and functional divisions of human ventral temporal cortex. NeuroImage. 2014;84:453–465. doi: 10.1016/j.neuroimage.2013.08.068.

- Wojciulik E, Kanwisher N, Driver J. Modulation of activity in the fusiform face area by covert attention: an fMRI study. J Neurophysiol. 1998;79:1574–1579. doi: 10.1152/jn.1998.79.3.1574.

- Wong YK, Folstein JR, Gauthier I. The nature of experience determines object representations in the visual system. J Exp Psychol Gen. 2012;141:682–698. doi: 10.1037/a0027822.

- Xu Y. Revisiting the role of the fusiform face area in visual expertise. Cereb Cortex. 2005;15:1234–1242. doi: 10.1093/cercor/bhi006.

- Yi D-J, Woodman GF, Widders D, Marois R, Chun MM. Neural fate of ignored stimuli: dissociable effects of perceptual and working memory load. Nat Neurosci. 2004;7:992–996. doi: 10.1038/nn1294.
