Abstract

A goal of Brain-Computer Interface (BCI) research is to develop fast and reliable means of communication for individuals with paralysis and anarthria. We evaluated the ability of an individual with incomplete locked-in syndrome enrolled in the BrainGate Neural Interface System pilot clinical trial (IDE) to communicate using neural point-and-click control. A general-purpose interface was developed to provide control of a computer cursor in tandem with one of two on-screen virtual keyboards. The novel BrainGate Radial Keyboard was compared to a standard QWERTY keyboard in a balanced copy-spelling task. The Radial Keyboard yielded a significant improvement in typing accuracy and speed – enabling typing rates over 10 correct characters per minute. The participant used this interface to communicate face-to-face with research staff by using text-to-speech conversion, and remotely using an internet chat application. This study demonstrates the first use of an intracortical BCI for neural point-and-click communication by an individual with incomplete locked-in syndrome.

Keywords: ALS, stroke, spinal cord injury, paralysis, assistive technology, text entry

Introduction

Neural Interface Systems (NISs), also termed Brain-Computer Interfaces (BCIs), are devices that connect the nervous system to an external device for the purpose of restoring mobility and communication to individuals with paralysis and anarthria (inability to speak) resulting from neurological disorders (e.g. advanced ALS, basilar thrombosis and bilateral ventral pontine infarction) or traumatic nervous system injury (e.g. cervical spinal cord injury). One goal of BCI research is to develop fast and reliable communication systems for individuals whose cognition and language capabilities remain intact but who are unable to control the muscles of speech articulation or to functionally use their limbs to control an assistive communication device. A number of BCIs for communication have been developed using scalp electroencephalography (EEG) and electrocorticography (ECoG).1–3 These systems, such as the P300 matrix speller 4–8 and the Rapid Serial Visual Presentation (RSVP) paradigm 9–11, have primarily been based on synchronous evoked potentials. Another approach has been to provide self-paced two-dimensional computer cursor control using sensory-motor rhythm modulation extracted from EEG12 and ECoG13, including one report of a 2D cursor control paradigm with a sequential, synchronous selection method, or a “point-then-triggered-click” paradigm.14

Intracortical microelectrode arrays have shown the potential to provide more information-rich control signals for BCIs, which would be especially suited for high-dimensional self-paced control.15–20 By providing at least two-dimensional (2D) neural control of a computer cursor and a parallel selection method (i.e. “clicking”), such a system could allow users not only to type self-selected characters but also to use native computer applications with a cursor, much like an able-bodied person using a mouse. Non-human primate research has shown that this technology can enable multidimensional neural ensemble control of effectors with two15 or more degrees of freedom.16,17 Our previous reports have demonstrated the ability of participants to obtain point-and-click control on a computer screen by imagining moving a computer mouse with their arm and imagining squeezing their hand to select items on the screen.18–20

With the proliferation of mobile, tablet, and other touch-screen devices, virtual (on-screen) keyboard design has become an area of increasing interest in Human-Computer Interaction. Many alternative virtual keyboards have been designed to enable more efficient text entry. These include, for example, DASHER,21 a gesture-driven text entry interface that has been tested as a BCI communication device using one-dimensional EEG-based control; Hexo-Spell,22,23 which is a circular, two-tiered speller integrated into the EEG-based Berlin BCI; the Metropolis keyboard,24 which was developed using a specialized algorithm to define the locations of keys on the keyboard in an optimized fashion; and numerous keyboard designs from MacKenzie,25,26 which are based on scan-switch control, optimal letter layouts, and ambiguous text entry – similar to T9 Word (Nuance Communications, Burlington, MA) for keypad mobile phones. Similar innovations can be applied to continuous neural point-and-click control to optimize BCI-driven communication for people with disabilities.

We developed and implemented two virtual keyboards and evaluated them under closed-loop neural point-and-click control by one participant in the BrainGate pilot clinical trial. These keyboards are part of a software package, BrainGate2 Desktop (BG2D), designed to enable users of the BrainGate Neural Interface System to use any standard PC software. The first keyboard is based upon the standard QWERTY layout found on most physical keyboards. The second keyboard is a novel interface called the BrainGate Radial Keyboard, which has been designed to improve typing performance and ease-of-use for neural point-and-click communication. To compare these two virtual keyboards, typing performance was assessed through a balanced copy-spelling task. The general-purpose utility of BG2D was also demonstrated by a real-time internet chat conversation between the participant and a remote researcher, and by an informal question and answer session in which the participant used text-to-speech conversion to communicate in person with research staff.

Methods

Participant

The participant (S3) is a woman, 58 years old at the time of this study, with more than 14 years of tetraplegia and anarthria resulting from an ischemic stroke that caused extensive bilateral pontine infarction. Due largely to the interruption of descending control of the laryngeal branches of the vagus and the hypoglossal nerves, she was able to generate only rare, barely audible vowels. She had no functional use of her arms or legs, though bilateral upper extremity flexor spasms could be generated over a limited range of motion. Extraocular movements and head rotation were largely intact. Her only dependable means of communication relied on a second person to hold a translucent eye board in front of her and to read aloud the letter she appeared to be looking at; if the letter was correct, she would look up quickly. She had tried a few commercially available assistive communication technologies, which she controlled either through a reflective marker placed on the bridge of her glasses or through a single button switch placed on her headrest. These caused her continual frustration and were often broken (the vendor's repairs took weeks to months). Despite their expense, these assistive devices were regularly abandoned.

A 96-channel intracortical microelectrode array (Blackrock Microsystems Inc., Salt Lake City, UT) was surgically implanted in the arm/hand area of her motor cortex approximately 5 years before this study. Sessions included in this study occurred on trial days 1589, 1895, 1918, 1925 and 1976. The keyboard comparison sessions were conducted on trial days 1895, 1918 and 1925; the internet chat and in-person conversation sessions were conducted on trial days 1589 and 1976, respectively. For additional information about the BrainGate2 clinical trial and participant S3, see Simeral et al.20 and Hochberg et al.27

Data Acquisition

Neuronal ensemble activity was recorded and digitized using Cerebus hardware and software (Blackrock Microsystems, Inc.), and processed in 100 ms bins using custom Simulink software (Mathworks, Natick, MA). On trial days 1895, 1918, 1925 and 1976, a zero-phase-lag (“non-causal”) band-pass filter with corners at 250–5000 Hz was applied to the data, with a 4 ms delay, before spike threshold crossings were detected and summed for each channel; these threshold crossing rates were then used for decoding.27,28 On trial day 1589, spikes from putative single neurons were manually discriminated (“spike sorting”) using Blackrock Microsystems, Inc. software, and spike counts from all single neurons were used for decoding. Channels not recording usable neural signals were turned off at the beginning of the session. See Hochberg et al.27 for detailed signal processing methods.
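To make the threshold-crossing step concrete, the following is a minimal Python sketch that counts downward threshold crossings per channel in non-overlapping 100 ms bins, assuming the signal has already been band-pass filtered. The 30 kHz sampling rate and the threshold of -4.5 times the signal RMS are assumptions for illustration; the text above does not state the threshold rule, and this is not the BrainGate Simulink implementation.

```python
import math
from typing import List

SAMPLE_RATE_HZ = 30_000      # assumption: 30 kHz sampling
BIN_MS = 100                 # bin width stated in the text
THRESHOLD_RMS_MULT = -4.5    # hypothetical threshold multiplier (not given in the text)


def rms(x: List[float]) -> float:
    """Root-mean-square amplitude of an already band-pass filtered signal."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def threshold_crossing_counts(x: List[float], threshold: float,
                              fs: int = SAMPLE_RATE_HZ,
                              bin_ms: int = BIN_MS) -> List[int]:
    """Count downward crossings of a negative threshold in consecutive bins.

    A crossing is counted only when the signal goes from above the threshold
    to at or below it, so each spike-like deflection is counted once.
    Samples after the last complete bin are ignored.
    """
    samples_per_bin = fs * bin_ms // 1000
    n_bins = len(x) // samples_per_bin
    counts = [0] * n_bins
    for i in range(1, n_bins * samples_per_bin):
        if x[i - 1] > threshold >= x[i]:
            counts[i // samples_per_bin] += 1
    return counts
```

Dividing each count by the 0.1 s bin width would convert it to the threshold crossing rate that the decoder consumes.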

Decoding

To provide continuous point-and-click control over the computer desktop cursor, we employed a parallel continuous kinematics plus discrete click state decoding architecture adapted from Simeral et al.20 and Kim et al.19 A Kalman filter was used to control cursor velocity, and a linear discriminant analysis (LDA) classifier running in parallel was used to decode clicks from the same neural signals.
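The velocity decoder can be illustrated with a textbook Kalman filter whose hidden state is 2D cursor velocity and whose observations are the binned, baseline-subtracted rates from each channel. The sketch below is a generic implementation under that assumption; the matrices `A`, `W`, `H`, `Q`, the class name, and the initial covariance are illustrative choices, not the parameters of the BrainGate decoder described in the cited references.

```python
import numpy as np


class VelocityKalmanFilter:
    """Minimal 2D cursor-velocity Kalman filter (illustrative sketch).

    State x_t = [vx, vy]; observation z_t = binned rates of N channels.
        x_t = A x_{t-1} + w,   w ~ N(0, W)
        z_t = H x_t + q,       q ~ N(0, Q)
    """

    def __init__(self, A, W, H, Q):
        self.A, self.W, self.H, self.Q = (np.asarray(m, dtype=float) for m in (A, W, H, Q))
        n = self.A.shape[0]
        self.x = np.zeros(n)   # current velocity estimate
        self.P = np.eye(n)     # covariance of the velocity estimate

    def step(self, z):
        """Incorporate one bin of (baseline-subtracted) rates; return velocity."""
        z = np.asarray(z, dtype=float)
        # Predict: propagate the velocity estimate through the dynamics model.
        x_pred = self.A @ self.x
        P_pred = self.A @ self.P @ self.A.T + self.W
        # Update: correct the prediction using the observed neural rates.
        S = self.H @ P_pred @ self.H.T + self.Q
        K = P_pred @ self.H.T @ np.linalg.inv(S)
        self.x = x_pred + K @ (z - self.H @ x_pred)
        self.P = (np.eye(len(self.x)) - K @ self.H) @ P_pred
        return self.x
```

In use, the cursor position would be advanced each 100 ms bin by the decoded velocity times the bin duration.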

The Kalman filter was initialized using a standard center-out-and-back cursor task. During initialization, the participant watched an on-screen cursor that was preprogrammed to move from target to target. She was instructed to imagine that she was controlling the cursor's movements by moving her dominant hand at the wrist. Her neural activity was recorded and regressed against the cursor kinematics that she had observed (and imagined generating). After the Kalman filter was initialized using this “open-loop” block of data, it was used for velocity cursor control and further refined in a “closed-loop calibration” paradigm.29 In this phase, the participant performed the center-out task under neural control, acquiring targets by dwelling on them for 300 milliseconds. Multiple (4 or 5) closed-loop calibration blocks were run, and the decoder was updated after each block of ~24 trials under the assumption that, at each moment during the first 3 seconds of each trial (thought to be the “ballistic” component of that trial), the neural activity reflected the participant's intention to move the cursor directly toward the target. Channels qualified for inclusion in the filter by meeting a tuning quality criterion (modulation depth normalized by residual standard deviation had to exceed 0.1).29 In the three copy-spelling sessions, 60, 18, and 18 channels, respectively, were included in the Kalman filter. For more details on the decoder calibration methods, see Hochberg et al.27 and Jarosiewicz et al.29
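The calibration logic above (regressing rates against intended velocity, assuming the intended direction points straight at the target, then keeping channels whose modulation depth divided by residual standard deviation exceeds 0.1) can be sketched as follows. This is a simplified least-squares version under stated assumptions: the unit intended speed and the function names are hypothetical, and the actual procedure is described in Jarosiewicz et al.29

```python
import numpy as np


def intended_velocity(cursor_xy, target_xy, speed=1.0):
    """Unit vector from cursor to target, scaled by a hypothetical speed.

    Encodes the calibration assumption that the user intends to move the
    cursor directly toward the target.
    """
    d = np.asarray(target_xy, dtype=float) - np.asarray(cursor_xy, dtype=float)
    norm = np.linalg.norm(d, axis=-1, keepdims=True)
    return speed * np.divide(d, norm, out=np.zeros_like(d), where=norm > 0)


def fit_tuning(rates, velocity):
    """Least-squares fit of rates[t, ch] ~ baseline[ch] + velocity[t] @ B[:, ch]."""
    X = np.column_stack([np.ones(len(velocity)), velocity])
    coef, *_ = np.linalg.lstsq(X, rates, rcond=None)
    residual = rates - X @ coef
    baseline, B = coef[0], coef[1:]
    return baseline, B, residual.std(axis=0)


def qualifying_channels(B, resid_sd, min_quality=0.1):
    """Indices of channels whose modulation depth / residual SD exceeds the criterion."""
    depth = np.linalg.norm(B, axis=0)    # rate change (Hz) per unit intended speed
    quality = depth / resid_sd
    return np.flatnonzero(quality > min_quality)
```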

Once the participant reached a satisfactory level of performance (typically > 70% target acquisition rate) in the center-out task, the LDA classifier was calibrated, enabling a parallel state switch for “click” control. For calibration of the LDA classifier, the participant was instructed to imagine controlling a cursor making pre-programmed movements to targets. Each time the cursor returned to the center target, the screen turned pink for 1.5 seconds, during which time the participant was instructed to imagine squeezing her dominant hand. The classifier was then calibrated to separate neural activity states corresponding to the intention to move the cursor vs. neural activity states corresponding to the intention to click. One or two subsequent center-out blocks were run to assess point-and-click control before moving on to a copy-spelling task (described in the next section). During the copy-spelling tasks, the tuning model of the decoders remained static within a given session; these decoders were used for 14, 20, and 22 minutes of task time for each of the 3 copy-spelling sessions, respectively. Throughout the use of a given decoder, an adaptive mean tracking algorithm was used to track changes in baseline firing rates.27
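A two-class linear discriminant of the kind described above models the “move” and “click” states as Gaussians with a shared covariance, which makes the log-likelihood ratio between the two states a linear function of the binned rates. The sketch below fits such a model; the class name and the small ridge term are assumptions for numerical stability, and the decision threshold (chosen conservatively in this study) is left to the caller.

```python
import numpy as np


class TwoClassLDA:
    """Move-vs-click classifier with a shared (pooled) covariance (sketch)."""

    def fit(self, X_move, X_click):
        X_move = np.asarray(X_move, dtype=float)
        X_click = np.asarray(X_click, dtype=float)
        self.mu_move = X_move.mean(axis=0)
        self.mu_click = X_click.mean(axis=0)
        # Pooled covariance: the shared-covariance assumption that defines LDA.
        centered = np.vstack([X_move - self.mu_move, X_click - self.mu_click])
        cov = centered.T @ centered / (len(centered) - 2)
        cov += 1e-6 * np.eye(cov.shape[0])   # small ridge for numerical stability
        self.w = np.linalg.solve(cov, self.mu_click - self.mu_move)
        self.b = -0.5 * self.w @ (self.mu_click + self.mu_move)
        return self

    def log_likelihood_ratio(self, x):
        """log p(x | click) - log p(x | move) under the fitted Gaussian model."""
        return np.asarray(x, dtype=float) @ self.w + self.b
```

Raising the threshold on this ratio above zero trades sensitivity for fewer false clicks, which is the trade-off the study made deliberately.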

Keyboard Designs

Two on-screen virtual keyboards were developed and evaluated under closed-loop neural point-and-click control. The first keyboard has a standard QWERTY layout (Figure 1b), similar to a commonly used physical desktop keyboard. In addition, it has a collection of word completion keys; candidate completion words are drawn from a conglomerate of dictionaries containing over 58,000 words. This keyboard has built-in text-to-speech and spell-check functionality, and it can send text into any standard desktop computer application.

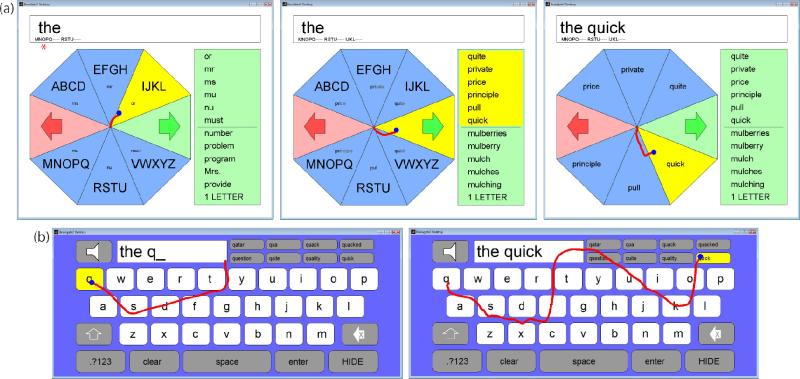

Figure 1.

Virtual keyboard designs. (a) Radial Keyboard screen capture of the participant typing the word “quick”. Left: the keys containing the “q” and “u” have already been entered, as denoted by the “MNOPQ” and “RSTU” text shown below “the” in the word editor (and above the red asterisk). The red trace (overlaid onto the screen capture, not visible during actual system use) shows the trajectory used to select the “IJKL” key to enter the “i” in quick (the blue dot shows the neurally-clicked selection location). Middle: after entering the “i”, the desired word (“quick”) appeared in the word list; the participant selected the green right-facing arrow key to bring the word completions onto the radial keys. Right: the participant selected the word “quick” from the word completions to enter the word in the editor. (b) The participant typed the word “quick” with the QWERTY keyboard. Left: the participant moved to and selected “q”. Right: the participant moved across the screen to the word completion to select “quick”.

Because the QWERTY layout was originally designed to prevent early typewriters from jamming, with its keys arranged in a way that forces the typist to alternate hands for common letter sequences, it may not be a practical layout when the access method is a single cursor that takes more time to move further across the keyboard. Thus, we developed a second virtual keyboard with a radial layout (Figure 1a) specifically for continuous point-and-click control with the investigational BrainGate Neural Interface System. The BrainGate Radial Keyboard consists of 8 keys arranged in a radial configuration with a word completion and functions/options list. The keyboard uses a variant of the ambiguous text entry method T9 Word.26 Ambiguous text entry represents the entire alphabet on a smaller subset of keys, with multiple letters on each key. The user selects the key that contains the desired letter, and repeats this for each letter of the word being typed. A dictionary-based algorithm then disambiguates the key sequence into the list of dictionary words whose letters are consistent with the sequence of keys selected. This disambiguation procedure generates a manageable subset of words, and often places the intended word near the top of the list when the dictionary is ranked by frequency of use.26 In addition, the Radial Keyboard provides word completions (predicting full words when only a subset of letters have been entered), text-to-speech functionality through a functions menu, and (like the QWERTY keyboard) the capacity to send text into native computer applications.
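The disambiguation step can be sketched in a few lines: map each word to the sequence of keys that would type it, then keep the dictionary words whose key sequence matches the keys selected. Only the groupings “IJKL”, “MNOPQ” and “RSTU” appear in Figure 1a; the other three letter groups below are hypothetical placeholders, and the dictionary is assumed to be pre-sorted by frequency of use.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical letter-to-key layout: IJKL, MNOPQ and RSTU are from Figure 1a,
# the remaining groups are placeholders chosen only to cover the alphabet.
KEYS = ["ABCD", "EFGH", "IJKL", "MNOPQ", "RSTU", "VWXYZ"]
LETTER_TO_KEY: Dict[str, int] = {
    ch.lower(): k for k, group in enumerate(KEYS) for ch in group
}


def key_sequence(word: str) -> Tuple[int, ...]:
    """Key indices a user would select to type `word` (letters only)."""
    return tuple(LETTER_TO_KEY[ch] for ch in word.lower())


def disambiguate(keys: Sequence[int], dictionary: List[str]) -> List[str]:
    """Dictionary words of the same length whose letters match the key sequence.

    `dictionary` is assumed to be ordered by frequency of use, so the most
    common candidate comes first.
    """
    target = tuple(keys)
    return [w for w in dictionary
            if len(w) == len(target) and key_sequence(w) == target]
```

For example, with this layout the keys for “the” also spell “she”, so both are offered and the more frequent word is listed first.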

The Radial Keyboard works as follows (Figure 1a, Supplemental videos). The cursor starts in the center of the keyboard. The user moves the cursor and selects the wedge containing the letter she wishes to enter. After each selection, the cursor is automatically re-centered, allowing each trajectory to the next letter selection to be very short (and therefore rapid). As the cursor re-centers, a list of disambiguated word completions updates in the right green box. The top six words are also shown inside of the radial letter keys to reduce the visual search required to view the word list. These words have the first N letters bolded, corresponding to the N keystrokes made by the user, to help the user keep track of how many letters she typed within the current word. For example, if the user is typing the word “quick” as in Figure 1a, after she selects the buttons containing “q”, “u”, and “i”, the word “quick” appears in the “IJKL” button, and the “qui” is bolded. Also, after each letter is selected, a string of text showing the name of the key that has been selected is displayed in the word editor in smaller font below the entered text (e.g. if the desired letter is “q” and the “MNOPQ” key is selected, then the text “MNOPQ” is shown in small font in the editor).
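Word completion differs from exact disambiguation in that a word qualifies when only its first N letters match the N keys selected so far. The sketch below returns the top six completions and marks the first N letters in bold (here with `**` markers), mirroring the display described above. The letter layout repeats the hypothetical one used for illustration (only “IJKL”, “MNOPQ” and “RSTU” come from Figure 1a), and the dictionary is assumed to be frequency-ordered.

```python
from typing import List, Sequence

# Hypothetical layout; only IJKL, MNOPQ and RSTU are taken from Figure 1a.
KEYS = ["ABCD", "EFGH", "IJKL", "MNOPQ", "RSTU", "VWXYZ"]
LETTER_TO_KEY = {ch.lower(): k for k, group in enumerate(KEYS) for ch in group}


def completions(keys: Sequence[int], dictionary: List[str], limit: int = 6) -> List[str]:
    """Up to `limit` words whose first len(keys) letters match the selected keys."""
    n = len(keys)
    target = tuple(keys)
    matches = [w for w in dictionary
               if len(w) >= n
               and tuple(LETTER_TO_KEY[ch] for ch in w[:n].lower()) == target]
    return matches[:limit]


def bold_prefix(word: str, n: int) -> str:
    """Mark the first n letters, one per keystroke, as shown on the radial keys."""
    return f"**{word[:n]}**{word[n:]}"
```

After the keys for “q”, “u” and “i”, for instance, “quick” would be shown as “**qui**ck”.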

When the desired word appears in the word list, the user selects the key containing the right-facing green arrow, which replaces the letter options in the wedges with words from the word list, allowing the desired word to be selected. The user then selects the key containing the desired word and it appears in the text editor at the top of the interface. To access the second set of 6 words in the word list, the green right arrow key can be selected again, acting as a toggle between the two word sets. The word list is sorted by frequency rank for the 1000 most frequent words in the 58,000+ word English dictionary, and alphabetically for the remaining words. If the user has selected the necessary keys and the desired word does not appear in the word list, the user then selects the “1 Letter” option at the bottom of the word prediction list. The keyboard then displays the individual letters from the selected series of wedges, one wedge at a time, to allow the user to manually select the desired letter from each of the previously selected wedges. Once each letter is disambiguated and the word is entered, this word is printed to the editor as before, and the word is permanently added to the software's dictionary.
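The ordering rule and the two-page toggle described above can be expressed as a short sketch. The `frequency_rank` mapping (word to rank, 0 = most frequent) and the `toggle_count` argument are hypothetical data structures chosen for illustration; the keyboard's internal representation is not described in the text.

```python
from typing import Dict, List


def rank_words(candidates: List[str], frequency_rank: Dict[str, int],
               top_n: int = 1000) -> List[str]:
    """Frequent words (rank < top_n) by rank first, then all others alphabetically."""
    frequent = sorted((w for w in candidates if frequency_rank.get(w, top_n) < top_n),
                      key=frequency_rank.__getitem__)
    rare = sorted(w for w in candidates if frequency_rank.get(w, top_n) >= top_n)
    return frequent + rare


def word_page(ranked: List[str], toggle_count: int, page_size: int = 6) -> List[str]:
    """Words shown on the radial keys; each extra arrow selection toggles the set."""
    page = toggle_count % 2
    return ranked[page * page_size:(page + 1) * page_size]
```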

When the user is not in the middle of typing a word (i.e., when there is not a partially completed word in the editor), the word list is replaced with a function/options list, which can be accessed by selecting the right arrow key and choosing an option. These options include: speak (for text to speech), enter (for sending text into other applications), clear, exit, numbers, punctuation, etc. At all times, the left-facing red arrow key is used as an “undo” button, executing a delete, navigate-back or exit command depending on the state of the keyboard.

Experimental Tasks

To directly compare the performance and usability of the QWERTY and Radial virtual keyboard interfaces under neural point-and-click control, a balanced copy-spelling task was performed by participant S3 in three research sessions (trial days 1895, 1918, and 1925). In the first task, the participant typed the word “keyboard ” (with a space at the end) twice with each keyboard in an alternating block design, with word prediction turned off. The second task was to type the phrase “the quick fox is lazy now ” (henceforth referred to as the “quick fox” task) with both keyboards in a similar alternating block design, except with word completion enabled. The trailing space at the end of this phrase was a by-product of the word completion selection automatically appending a space to the end of words. The “quick fox” phrase was adapted from the standard typing evaluation sentence “the quick brown fox jumps over the lazy dog”, which includes every letter in the English alphabet. Although the adapted phrase does not contain all letters in the alphabet, it does sufficiently sample both the QWERTY and Radial Keyboard workspaces, and its shorter length allowed for more repetitions of the task per session. In each of the three sessions, the participant typed each phrase (“keyboard” and “quick fox”) twice with each keyboard, resulting in 6 total repetitions of each typing task per keyboard.

Evaluation Metrics

To evaluate typing performance with each of the virtual keyboards, the following metrics were used:

Percent correct: ratio of correct selections to total selections made, × 100. Selecting backspace to make a correction was considered a successful selection.

Keystrokes per minute (kspm): total number of keystrokes (selections) made per unit time, regardless of whether it was correct or not.

Correct characters per minute (ccpm): the ratio of the number of correct characters entered to the amount of time (in minutes) taken to enter the correct text. If the participant made an error in either of the typing tasks, she was required to fix the error to complete the task. Note: this metric is not directly related to the number of keystrokes or a theoretical typing rate; rather, it represents an effective output metric that measures how fast the user generated the desired (error-free) text per unit time.
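The three metrics defined above can be computed from a per-block log as in the sketch below. The `BlockLog` record and its field names are hypothetical; they simply hold the counts and durations that the definitions require.

```python
from dataclasses import dataclass


@dataclass
class BlockLog:
    """Hypothetical summary of one copy-spelling block."""
    n_correct_selections: int   # includes backspaces used to fix errors
    n_total_selections: int     # every keystroke, correct or not
    n_correct_characters: int   # length of the final, error-free target text
    duration_s: float           # time taken to produce the correct text


def percent_correct(log: BlockLog) -> float:
    return 100.0 * log.n_correct_selections / log.n_total_selections


def keystrokes_per_minute(log: BlockLog) -> float:
    return log.n_total_selections / (log.duration_s / 60.0)


def correct_characters_per_minute(log: BlockLog) -> float:
    return log.n_correct_characters / (log.duration_s / 60.0)
```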

To compare metrics between keyboards, one-tailed paired t-tests were used to test the null hypothesis that the mean of each metric for the Radial Keyboard was not higher than that for the QWERTY keyboard. If the null hypothesis was rejected (p < 0.05), the Radial Keyboard was considered to show significantly higher performance than the QWERTY keyboard for that metric.
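With six paired blocks per task, the test reduces to computing the t statistic of the per-block differences and comparing it with the one-tailed critical value for 5 degrees of freedom (about 2.015 at α = 0.05). The sketch below does this with the standard library only; the hard-coded critical value is valid only for n = 6 pairs, and the sample data in any usage are hypothetical. Exact p-values could instead be obtained with `scipy.stats.ttest_rel(radial, qwerty, alternative="greater")`.

```python
import math
import statistics
from typing import Sequence

T_CRIT_ONE_TAILED_DF5 = 2.015  # tabulated t(0.95, df=5); valid only for 6 pairs


def paired_t(radial: Sequence[float], qwerty: Sequence[float]) -> float:
    """t statistic of the paired differences (Radial minus QWERTY)."""
    if len(radial) != len(qwerty) or len(radial) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [r - q for r, q in zip(radial, qwerty)]
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))  # stdev uses n - 1
    return statistics.mean(diffs) / se


def radial_significantly_higher(radial, qwerty, t_crit=T_CRIT_ONE_TAILED_DF5) -> bool:
    """Reject 'Radial mean is not higher' when t exceeds the one-tailed critical value."""
    return paired_t(radial, qwerty) > t_crit
```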

Results

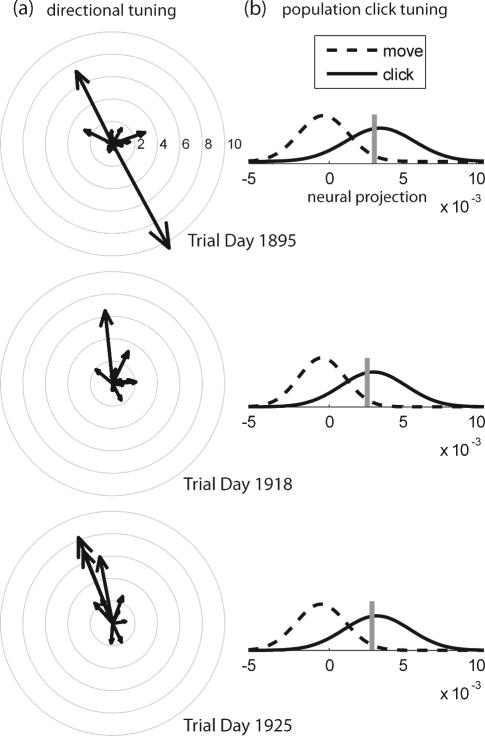

Participant S3 was able to enter text and use text-to-speech with both QWERTY and Radial virtual keyboards under neural point-and-click control using the BrainGate Neural Interface System. Directional and click tuning of the neuronal populations used for copy-spelling sessions (Figure 2) were sufficient to perform the neural point-and-click tasks. The population click classifier was robust in each session, as demonstrated by the well-separated peaks of the “move” and “click” state likelihood distributions (Figure 2b). Because of the cost of false clicks (requiring a backspace selection to correct the mistake), a less sensitive classifier threshold was selected to minimize false positives (accidental clicks) at the expense of making clicks slightly more difficult to generate. Example decoded trajectories and click locations using each of the virtual keyboards (Figure 1) highlight the beneficially short distances required to make selections with the Radial Keyboard compared to the QWERTY keyboard.

Figure 2.

Continuous (Kalman filter) and state (LDA) decoders used for neural point-and-click control in each of the 3 copy-spelling sessions. (a) Kalman filter decoders: each arrow shows the tuning of a channel; the direction represents its preferred direction, and the length represents its modulation depth in Hz. Each gray ring represents a 2 Hz increment in modulation depth. (b) State “click” decoders: LDA-modeled Gaussian distributions for movement (dashed) and click (solid) classes. The gray bar shows the decision boundary (threshold) chosen to minimize false clicks. A click is decoded when the log-likelihood exceeds the threshold for 3 consecutive samples (300 msec).
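The “3 consecutive samples” rule in the caption acts as a simple debounce on the classifier output. The sketch below fires one click when the log-likelihood has stayed above threshold for three consecutive 100 ms bins; treating a longer run as a single click (no re-firing until the value drops below threshold) is an assumption, since the caption does not say how sustained runs are handled.

```python
from typing import List, Sequence


def detect_clicks(llr_samples: Sequence[float], threshold: float,
                  n_consecutive: int = 3) -> List[int]:
    """Indices of bins at which a click is decoded.

    A click fires once the value has exceeded `threshold` for `n_consecutive`
    consecutive bins (3 x 100 ms = 300 ms); it does not fire again until the
    value falls back below threshold (an assumption of this sketch).
    """
    clicks: List[int] = []
    run = 0
    for i, value in enumerate(llr_samples):
        run = run + 1 if value > threshold else 0
        if run == n_consecutive:
            clicks.append(i)
    return clicks
```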

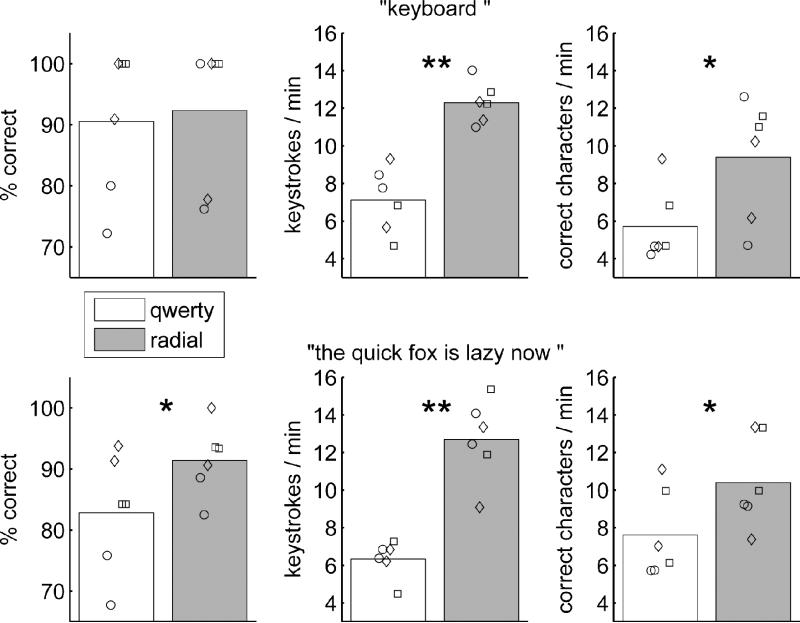

In the copy-spelling tasks, the participant made accurate selections using both keyboards (Figure 3). Her accuracy in the “quick fox” task was higher using the Radial Keyboard than the QWERTY keyboard (mean ± standard deviation, p-value; QWERTY: 82.9 ± 9.7, Radial: 91.4 ± 5.8 % correct, p = 0.047). In the “keyboard” task, accuracy was not significantly higher using the Radial Keyboard (QWERTY: 90.5 ± 12.0, Radial: 92.3 ± 11.9 % correct, p > 0.05). In the “keyboard” task, the number of keystrokes per minute (QWERTY: 7.1 ± 1.7, Radial: 12.3 ± 1.1 kspm, p < 0.001) and the number of correct characters per minute (QWERTY: 5.7 ± 2.0, Radial: 9.4 ± 3.2 ccpm, p = 0.019) were higher using the Radial Keyboard compared to the QWERTY keyboard. In the “quick fox” task, keystrokes per minute (QWERTY: 6.3 ± 1.0, Radial: 12.7 ± 2.2 kspm, p < 0.001) and correct characters per minute (QWERTY: 7.6 ± 2.3, Radial: 10.4 ± 2.4 ccpm, p = 0.035) were also higher using the Radial Keyboard. Overall, the Radial Keyboard yielded a 65% improvement in correct characters per minute in the “keyboard” task, and an improvement of 37% in the “quick fox” task. In each session, the Radial Keyboard outperformed the QWERTY keyboard in keystrokes per minute and correct characters per minute for both tasks. The Radial Keyboard also outperformed the QWERTY keyboard in accuracy, except in the “keyboard” task in the second session (see Supplemental Figure 1 for day-to-day comparisons).
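The reported percentage improvements follow directly from the mean correct-characters-per-minute values above, as the short calculation below shows (the numbers are the pooled means from the text; the function name is illustrative).

```python
def relative_improvement(qwerty: float, radial: float) -> float:
    """Percent improvement of the Radial mean over the QWERTY mean."""
    return 100.0 * (radial / qwerty - 1.0)


# Mean ccpm (QWERTY, Radial) as reported in the text.
ccpm = {"keyboard": (5.7, 9.4), "quick fox": (7.6, 10.4)}
for task, (qwerty, radial) in ccpm.items():
    print(f"{task}: {relative_improvement(qwerty, radial):.0f}% improvement")
```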

Figure 3.

Pooled performance results from the copy-spelling tasks. Top Row: performance in the “keyboard” task. Bottom Row: performance in the “quick fox” task. Left column: percent correct (accuracy). Middle column: keystrokes per minute. Right column: correct characters per minute. Bars = mean performance across the 6 blocks from all 3 sessions. Data points show individual block performance for the 6 repetitions of typing each phrase for each task across the 3 sessions. Data points are offset horizontally, representing the temporal order of their occurrence (left to right; circles, diamonds, and squares correspond to trial days 1895, 1918, and 1925, respectively). * = p < 0.05, ** = p < 0.0001 (one-tailed paired t-test).

As an additional demonstration of the general-purpose capability of the BG2D interface, the participant communicated in real time from her residence, using the QWERTY keyboard and a commonly used internet chat application (Google Chat; Google Inc., Mountain View, CA), with a researcher at his university laboratory desk. The participant opened a Google Chat window with the neurally controlled computer cursor, opened the QWERTY virtual keyboard by moving the cursor to the left edge of the screen, and entered text into the chat window to have a brief real-time conversation (Table 1). Her typing performance was comparable to the QWERTY blocks of the copy-spelling task (100% accuracy, 8.1 correct characters per minute). This demonstration of a real-time internet chat conversation by a person with incomplete locked-in syndrome using a BCI highlights the flexibility of BG2D for use with native applications for commonplace communication.

Table 1.

BrainGate-Google Chat Interface Conversation.

| Conversation | Typing Time (s) |
|---|---|
| S3: hi | 11 |
| R: Hello! How are you? | |
| S3: fine | 38 |
| R: Glad to hear that. What's it like using the BrainGate2 desktop interface? | |
| S3: exciting | 55 |

Transcript of S3's real-time internet chat conversation with a researcher (denoted “R” in the table; author SDS) at Brown University from her residence. S3 achieved 100% accuracy and a typing rate of 8.1 correct characters per minute using the QWERTY keyboard.
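The 8.1 correct characters per minute reported for this conversation can be reproduced from Table 1, assuming that only the letters of each reply are counted (no spaces or Enter keystrokes) and that all characters were correct, consistent with the reported 100% accuracy.

```python
# Typing time (s) for each of S3's replies, from Table 1.
replies = {"hi": 11, "fine": 38, "exciting": 55}

# Assumption: only the letters of each reply count as characters.
n_characters = sum(len(reply) for reply in replies)   # 2 + 4 + 8
minutes = sum(replies.values()) / 60.0                # 104 s in total
ccpm = n_characters / minutes
print(f"{ccpm:.1f} correct characters per minute")
```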

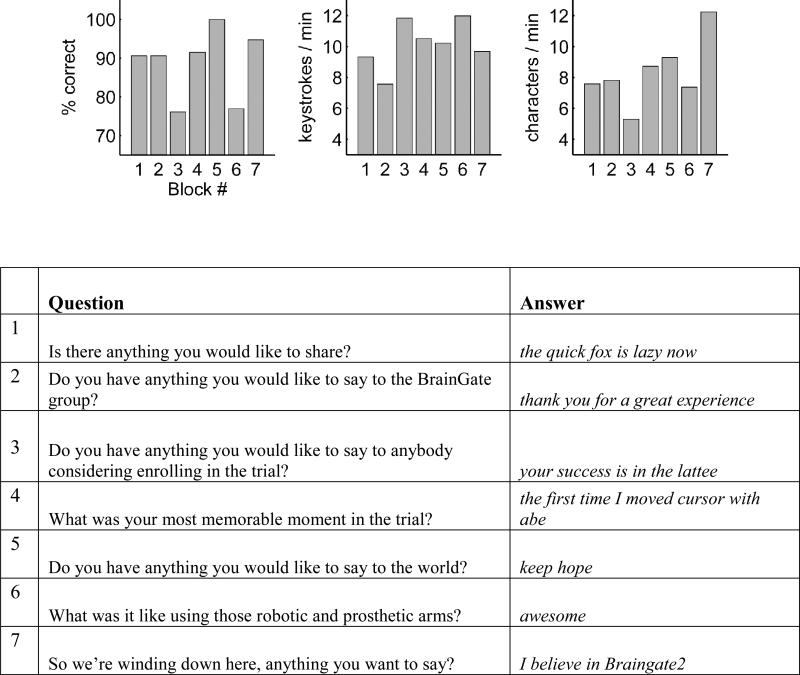

During S3's final research session in the BrainGate2 clinical trial (trial day 1976), an informal question and answer session was conducted using the Radial Keyboard interface under BrainGate neural point-and-click control as a means for her to communicate her answers (Figure 4; Supplemental videos). Spontaneous questions were asked and she replied in real time with her responses, utilizing the text-to-speech function to speak her answers to the researchers present in the room with her. Performance metrics were computed for each of her answers (Figure 4). The metrics were slightly lower during this session compared to the copy-spelling task sessions; this slight decrease is likely attributable to the time it took for her to think about her responses and enter her answers spontaneously in this real-time conversation.

Figure 4.

Question/Answer session results. Top: typing performance for each answer the participant gave. Bottom: questions asked and S3's responses. To provide some context for S3's responses: Question 1: due to her competitive nature (and great sense of humor), S3 decided to type the copy spelling task phrase one more time to test her skill. Question 3: S3's favorite beverage during sessions was a cinnamon latte. It is unknown whether lattes improve neural control. Question 4: Abe was the first clinical technician S3 worked with as a part of the clinical trial. Question 6: S3 successfully controlled an assistive robotic arm and a prosthetic arm a few days before this final research session. Question 7: A final statement from S3 using the investigational BrainGate Neural Interface System.

In order to test the potential clinical utility of an assistive device, it is also important to assess the user's subjective experience using the device. When asked for feedback regarding both the QWERTY and Radial Keyboard designs, S3 enthusiastically complimented the Radial Keyboard for being “fast” and “easier to use” than the QWERTY keyboard. S3 joked that she would “never go back” to the QWERTY keyboard after using the Radial Keyboard. She has also expressed interest in using the Radial Keyboard, driven by a head tracker, outside of the BrainGate2 clinical trial as her primary text entry system for communication and computer interaction.

Discussion

This study demonstrates, for the first time, that an individual with tetraplegia and anarthria can communicate in real time using neural point-and-click control derived from intracortical neural activity. We also show that improvements in keyboard design can enhance the utility of a BCI-driven point-and-click communications system. The participant demonstrated higher accuracy and higher typing rates (correct characters per minute) with the novel Radial Keyboard interface compared to a keyboard interface in the standard QWERTY layout. Furthermore, the participant reported strongly preferring the Radial Keyboard over the QWERTY keyboard, citing its speed and ease of use.

BCI communication performance

The participant in this study, who has incomplete locked-in syndrome, was able to type at a sustained rate of 10.4 correct characters per minute averaged across three sessions (see “quick fox” task, Figure 3). While higher typing rates have been achieved in previous studies with able-bodied participants,3 the fastest reported sustained typing rates for participants with locked-in syndrome are on the order of 1–3 characters per minute using P300 EEG systems.5,10,30 The average typing rate of over 10 correct characters per minute reported in this study therefore represents roughly a 3- to 10-fold increase over previously reported BCI communication rates by people with locked-in syndrome.
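The 3- to 10-fold range follows from dividing the sustained rate in this study by the bounds of the previously reported 1–3 characters per minute:

```latex
\frac{10.4~\text{ccpm}}{3~\text{cpm}} \approx 3.5,
\qquad
\frac{10.4~\text{ccpm}}{1~\text{cpm}} = 10.4
```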

One way to increase communication rates is to integrate language models into BCIs (e.g. the RSVP keyboard).10 We anticipate improved typing performance by making better use of sophisticated language models in our system for applications such as auto-correct, letter prediction, and improved word prediction. The word prediction algorithm used in our system was rudimentary, based on a relatively small (58,000 word) dictionary. Modern word prediction systems are built on corpora with millions of words and are adaptive, “learning” statistical patterns in word order as the user types. Integrating these improvements into BCIs is likely to further improve communication rates.
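As a small illustration of what “adaptive” prediction means here, the sketch below is a toy bigram predictor that updates word-pair counts from whatever the user types and ranks completions first by how often they followed the previous word, then by overall frequency. This is a generic technique chosen for illustration, not the prediction algorithm used in BG2D; the class and method names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List


class AdaptiveBigramPredictor:
    """Toy word predictor that learns word-order statistics as the user types."""

    def __init__(self, unigram_counts: Dict[str, int]):
        self.unigrams = Counter(unigram_counts)
        self.bigrams: Dict[str, Counter] = defaultdict(Counter)

    def learn(self, text: str) -> None:
        """Update word and word-pair counts from newly entered text."""
        words = text.lower().split()
        self.unigrams.update(words)
        for prev, nxt in zip(words, words[1:]):
            self.bigrams[prev][nxt] += 1

    def predict(self, prev_word: str, prefix: str = "", k: int = 6) -> List[str]:
        """Top-k words starting with `prefix`, favoring those seen after `prev_word`."""
        prefix = prefix.lower()
        context = self.bigrams.get(prev_word.lower(), Counter())
        candidates = [w for w in self.unigrams if w.startswith(prefix)]
        candidates.sort(key=lambda w: (-context[w], -self.unigrams[w], w))
        return candidates[:k]
```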

Radial Keyboard design considerations

Fitts’ Law31,32 provides a framework for evaluating computer pointing devices and interfaces, stating that the larger the travel distance required and the smaller the target, the longer it will take to acquire that target. The “alternating hand” design of the QWERTY keyboard implies that common letter sequences require the user to move a cursor to alternating sides of the keyboard; thus, it becomes apparent that the QWERTY layout is theoretically sub-optimal for use with a single cursor (e.g. Figure 1b). The circular layout and ambiguous text entry design of the Radial Keyboard shortens the travel distance and increases the target size, resulting in an improvement in typing rate.
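The argument can be made concrete with the Shannon formulation of Fitts' law, in which the index of difficulty is ID = log2(D/W + 1) bits and movement time is modeled as MT = a + b × ID. In the sketch below, the constants `a` and `b` and any key distances and widths are hypothetical values chosen only to show how a short, wide target (as in the Radial Keyboard) lowers the predicted movement time relative to a distant, narrow one.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance / width + 1)


def movement_time(distance: float, width: float, a: float = 0.5, b: float = 1.0) -> float:
    """MT = a + b * ID; a (s) and b (s/bit) are hypothetical device constants."""
    return a + b * index_of_difficulty(distance, width)


# Hypothetical geometry (arbitrary units): a narrow QWERTY key several key-widths
# away versus a wide radial wedge reached from the re-centered cursor.
qwerty_mt = movement_time(distance=5.0, width=1.0)
radial_mt = movement_time(distance=3.0, width=3.0)
```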

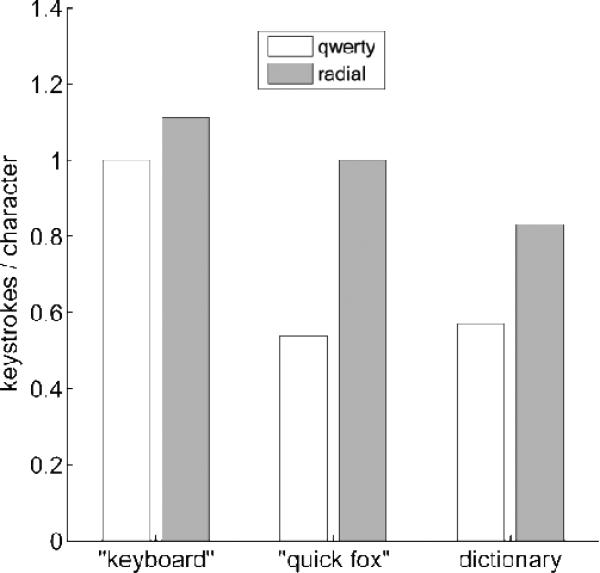

Despite this theoretical improvement in efficiency, the Radial Keyboard still requires more keystrokes per character (KSPC)33 to generate the same amount of text as the QWERTY keyboard. The higher KSPC of the Radial Keyboard (Figure 5) is a result of two factors. First, using an ambiguous text entry method requires more key selections to find the desired word completion compared to entering individual letters as in the QWERTY keyboard. Second, an extra key selection is required to bring the disambiguated words from the word list into focus in the radial keys, whereas in the QWERTY keyboard the user can directly move to the word completion keys and make a selection. Nevertheless, performance was still higher with the Radial Keyboard. To control for the efficiency differences between the two keyboards’ word completion methods, the “keyboard” portion of the copy-spelling task was dedicated to comparing typing performance of both keyboards with word completion turned off. The improvement of the Radial Keyboard over the QWERTY keyboard was especially evident in this task (see Figure 3).

Figure 5.

Keystrokes per character (KSPC). KSPC comparison between QWERTY and Radial Keyboards for the “keyboard” task, the “quick fox” task, and typing the entire software dictionary (58,000+ words). KSPC is defined as the average theoretical number of keystrokes needed to enter a single character of text, assuming 100 percent accuracy. Because of the extra keystroke needed to make word selections and the weaker predictive power inherent in ambiguous text prediction, KSPC is lower for the QWERTY keyboard. For text entry interfaces, a lower KSPC is better. In the “keyboard” task, word completion was not used, so the KSPC is 1 for QWERTY and 1.1 for Radial, owing to the extra keystroke needed to select the word “keyboard”. In the “quick fox” task, all of the words are very common, so the KSPC is well below 1 for QWERTY. For the Radial Keyboard in the “quick fox” task, the KSPC is 1: some of the extra required keystrokes are offset by being able to select words as soon as they appear in the word list, which often occurs before all characters have been typed.
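The KSPC values in the caption can be reproduced with simple arithmetic. Following the spirit of MacKenzie's definition,33 the sketch below weights each word's keystroke count by its corpus frequency and divides by the frequency-weighted number of characters. The function name and tuple format are hypothetical, and ignoring spaces and error corrections is a simplifying assumption. The usage example models the “keyboard” task with word completion off, assuming one selection per letter on both keyboards plus one word selection on the Radial Keyboard.

```python
def kspc(entries):
    """Frequency-weighted keystrokes per character.

    `entries` holds (word, keystrokes_to_enter_word, corpus_frequency) tuples.
    Spaces and error corrections are ignored (a simplifying assumption).
    """
    total_keystrokes = sum(keys * freq for _, keys, freq in entries)
    total_chars = sum(len(word) * freq for word, _, freq in entries)
    return total_keystrokes / total_chars


if __name__ == "__main__":
    # "keyboard" (8 letters) with word completion off.
    print(kspc([("keyboard", 8, 1)]))  # QWERTY: 8 letter keys -> 1.0
    print(kspc([("keyboard", 9, 1)]))  # Radial: 8 letter keys + 1 word selection -> 1.125
```

Under these assumptions the Radial Keyboard's KSPC for “keyboard” is 9/8 = 1.125, which rounds to the 1.1 shown in Figure 5.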

The “quick fox” phrase also does not necessarily represent commonplace language, and the improvement in typing speed is expected to be even larger with more typical sentences. Supporting this possibility, the difference in KSPC between the Radial Keyboard and the QWERTY keyboard was greater for the “quick fox” phrase than for the general dictionary (see Figure 5).

Also, despite having many years of experience with QWERTY-based keyboards, the participant used the Radial Keyboard only during occasional (once-weekly) experimental sessions spanning a few months, yet her performance was still better with the Radial Keyboard. Thus, typing speed with the Radial Keyboard is expected to increase further with more practice.

Conclusions

Neural point-and-click control can serve as a powerful communication method when coupled with a general-purpose interface such as the BrainGate2 Desktop, which enables direct control over a computer cursor with text entry via virtual keyboard interfaces. Communication speed is further improved with user interfaces better designed for this control modality. We combined these principles to demonstrate the feasibility of a clinically viable intracortical BCI for communication by an individual with incomplete locked-in syndrome. Future studies will focus on continuing to improve the performance, robustness, and usability of interfaces enabled by intuitive, neurally-enabled, point-and-click technologies.

Supplementary Material

Supplemental Figure 1: Day-to-day comparison of performance from the copy-spelling task. Same metrics and layout as in Figure 3, except each bar represents the average performance of the two repetitions of the same task within a session. The Radial Keyboard outperformed the QWERTY keyboard in nearly every metric in each session.

Supplemental video 1: Participant S3, Trial Day 1976, typing “the quick fox” under neural point-and-click control. This phrase was part of the sentence “the quick fox is lazy now”, which she also previously typed as part of a copy-spelling task.

Supplemental video 2: Participant S3, Trial Day 1976, typing “keep hope” in response to the question “do you have anything you would like to say to the world?” as part of her final research session in the BrainGate clinical trial.

Acknowledgements

We thank participant S3 for her active participation in the trial and her feedback during early development of the BG2D and the Radial Keyboard. We thank The Boston Home and its staff, as well as Emad Eskandar and Etsub Berhanu, for their contributions to this study. Thanks also to Laurie Barefoot, Beth Travers, and David Rosler for research support. The contents do not necessarily represent the views of the Department of Veterans Affairs or the United States Government. This work was supported by NIH: NIDCD (R01DC009899) and NICHD-NCMRR (N01HD53403, N01HD10018); Rehabilitation Research and Development Service, Office of Research and Development, Department of Veterans Affairs (Merit Review Awards B6453R and A6779I; Career Development Transition Award B6310N); Doris Duke Charitable Foundation, Craig H. Neilsen Foundation, and the MGH-Deane Institute for Integrated Research on Atrial Fibrillation and Stroke. The pilot clinical trial into which participant S3 was recruited was sponsored in part by Cyberkinetics Neurotechnology Systems (CKI). The BrainGate clinical trial is directed by Massachusetts General Hospital. CAUTION: Investigational Device. Limited by Federal Law to Investigational Use.

References

- 1. Birbaumer N, Ghanayim N, Hinterberger T, et al. A spelling device for the paralysed. Nature. 1999;398:297–298. doi:10.1038/18581.

- 2. Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clin Neurophysiol. 2002;113:767–791. doi:10.1016/S1388-2457(02)00057-3.

- 3. Brunner P, Ritaccio AL, Emrich JF, Bischof H, Schalk G. Rapid Communication with a “P300” Matrix Speller Using Electrocorticographic Signals (ECoG). Front Neurosci. 2011;5:5. doi:10.3389/fnins.2011.00005.

- 4. Farwell LA, Donchin E. Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr Clin Neurophysiol. 1988;70:510–523. doi:10.1016/0013-4694(88)90149-6.

- 5. Guger C, Daban S, Sellers E, et al. How many people are able to control a P300-based brain-computer interface (BCI)? Neurosci Lett. 2009;462:94–98. doi:10.1016/j.neulet.2009.06.045.

- 6. Sellers EW, Vaughan TM, Wolpaw JR. A brain-computer interface for long-term independent home use. Amyotroph Lateral Scler. 2010;11:449–455. doi:10.3109/17482961003777470.

- 7. Mugler EM, Ruf CA, Halder S, Bensch M, Kubler A. Design and implementation of a P300-based brain-computer interface for controlling an internet browser. IEEE Trans Neural Syst Rehabil Eng. 2010;18:599–609. doi:10.1109/TNSRE.2010.2068059.

- 8. Ryan DB, Frye GE, Townsend G, et al. Predictive Spelling With a P300-Based Brain–Computer Interface: Increasing the Rate of Communication. Int J Hum Comput Interact. 2010;27:69–84. doi:10.1080/10447318.2011.535754.

- 9. Orhan U, Hild KE, Erdogmus D, Roark B, Oken B, Fried-Oken M. RSVP Keyboard: An EEG Based Typing Interface. Proc IEEE Int Conf Acoust Speech Signal Process. 2012:645–648. doi:10.1109/ICASSP.2012.6287966.

- 10. Oken BS, Orhan U, Roark B, et al. Brain-Computer Interface With Language Model-Electroencephalography Fusion for Locked-In Syndrome. Neurorehabil Neural Repair. 2013. doi:10.1177/1545968313516867.

- 11. Acqualagna L, Blankertz B. Gaze-independent BCI-spelling using rapid serial visual presentation (RSVP). Clin Neurophysiol. 2013;124(5):901–908. doi:10.1016/j.clinph.2012.12.050.

- 12. Wolpaw JR, McFarland DJ. Control of a two-dimensional movement signal by a noninvasive brain-computer interface in humans. Proc Natl Acad Sci U S A. 2004;101:17849–17854. doi:10.1073/pnas.0403504101.

- 13. Leuthardt EC, Schalk G, Wolpaw JR, Ojemann JG, Moran DW. A brain-computer interface using electrocorticographic signals in humans. J Neural Eng. 2004;1:63–71. doi:10.1088/1741-2560/1/2/001.

- 14. McFarland DJ, Krusienski DJ, Sarnacki WA, Wolpaw JR. Emulation of computer mouse control with a noninvasive brain-computer interface. J Neural Eng. 2008;5:101–110. doi:10.1088/1741-2560/5/2/001.

- 15. Serruya MD, Hatsopoulos NG, Paninski L, Fellows MR, Donoghue JP. Instant neural control of a movement signal. Nature. 2002;416:141–142. doi:10.1038/416141a.

- 16. Taylor DM, Tillery SIH, Schwartz AB. Direct cortical control of 3D neuroprosthetic devices. Science. 2002;296:1829–1832. doi:10.1126/science.1070291.

- 17. Velliste M, Perel S, Spalding MC, Whitford AS, Schwartz AB. Cortical control of a prosthetic arm for self-feeding. Nature. 2008;453:1098–1101. doi:10.1038/nature06996.

- 18. Hochberg LR, Serruya MD, Friehs GM, et al. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006;442:164–171. doi:10.1038/nature04970.

- 19. Kim S-P, Simeral JD, Hochberg LR, Donoghue JP, Friehs GM, Black MJ. Point-and-click cursor control with an intracortical neural interface system by humans with tetraplegia. IEEE Trans Neural Syst Rehabil Eng. 2011;19:193–203. doi:10.1109/TNSRE.2011.2107750.

- 20. Simeral JD, Kim S-P, Black MJ, Donoghue JP, Hochberg LR. Neural control of cursor trajectory and click by a human with tetraplegia 1000 days after implant of an intracortical microelectrode array. J Neural Eng. 2011;8:025027. doi:10.1088/1741-2560/8/2/025027.

- 21. Wills SA, MacKay DJC. DASHER--an efficient writing system for brain-computer interfaces? IEEE Trans Neural Syst Rehabil Eng. 2006;14:244–246. doi:10.1109/TNSRE.2006.875573.

- 22. Blankertz B, Dornhege G, Krauledat M, Schröder M, Williamson J, Murray-Smith R, Muller KR. The Berlin brain-computer interface presents the novel mental typewriter hex-o-spell. Proc 3rd Int Brain-Computer Interface Work Train Course. Graz, Austria; 2006.

- 23. Treder MS, Blankertz B. (C)overt attention and visual speller design in an ERP-based brain-computer interface. Behav Brain Funct. 2010;6:28. doi:10.1186/1744-9081-6-28.

- 24. Zhai S, Kristensson P-O, Smith BA. In search of effective text input interfaces for off the desktop computing. Interact Comput. 2005;17:229–250. doi:10.1016/j.intcom.2003.12.007.

- 25. MacKenzie IS. The one-key challenge: searching for a fast one-key text entry method. Proceedings of the 11th International ACM SIGACCESS Conference on Computers and Accessibility. 2009:91–98. doi:10.1145/1639642.1639660.

- 26. MacKenzie IS. Evaluation of text entry techniques. Text Entry Systems: Mobility, Accessibility, Universality. 2007:75–101. doi:10.1016/B978-012373591-1/50004-8.

- 27. Hochberg LR, Bacher D, Jarosiewicz B, et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012;485(7398):372–375. doi:10.1038/nature11076.

- 28. Masse NY, Jarosiewicz B, Simeral JD, et al. Non-causal spike filtering improves decoding of movement intention for intracortical BCIs. J Neurosci Methods. 2014. doi:10.1016/j.jneumeth.2014.08.004.

- 29. Jarosiewicz B, Masse NY, Bacher D, et al. Advantages of closed-loop calibration in intracortical brain-computer interfaces for people with tetraplegia. J Neural Eng. 2013;10(4):046012. doi:10.1088/1741-2560/10/4/046012.

- 30. Nijboer F, Sellers EW, Mellinger J, et al. A P300-based brain-computer interface for people with amyotrophic lateral sclerosis. Clin Neurophysiol. 2008;119(8):1909–1916. doi:10.1016/j.clinph.2008.03.034.

- 31. MacKenzie IS. Fitts’ law as a research and design tool in human-computer interaction. Hum-Comput Interact. 1992;7:91–139. doi:10.1207/s15327051hci0701_3.

- 32. MacKenzie IS, Kauppinen T, Silfverberg M. Accuracy measures for evaluating computer pointing devices. CHI ’01. 2001:9–16. doi:10.1145/365024.365028.

- 33. MacKenzie IS. KSPC (Keystrokes per Character) as a Characteristic of Text Entry Techniques. Proceedings of the Fourth International Symposium on Human Computer Interaction with Mobile Devices. 2002:195–210.
