Significance

Public risk perception of hazardous events such as contagious outbreaks, terrorist attacks, and climate change is a difficult-to-anticipate social phenomenon. It is unclear how risk information will spread through social networks, how laypeople influence each other, and what social dynamics generate public opinion. We examine how messages detailing risks are transmitted from one person to another in experimental diffusion chains and how people influence each other as they propagate this information. Although the content of a message is gradually lost over repeated social transmissions, subjective perceptions of risk propagate and amplify due to social influence. These results provide quantitative insights into the public response to risk and the formation of often unnecessary fears and anxieties.

Keywords: risk perception, opinion dynamics, diffusion chains, social transmission, collective behavior

Abstract

Understanding how people form and revise their perception of risk is central to designing efficient risk communication methods, eliciting risk awareness, and avoiding unnecessary anxiety among the public. However, public responses to hazardous events such as climate change, contagious outbreaks, and terrorist threats are complex and difficult-to-anticipate phenomena. Although many psychological factors influencing risk perception have been identified in the past, it remains unclear how perceptions of risk change when propagated from one person to another and what impact the repeated social transmission of perceived risk has at the population scale. Here, we study the social dynamics of risk perception by analyzing how messages detailing the benefits and harms of a controversial antibacterial agent undergo change when passed from one person to the next in 10-subject experimental diffusion chains. Our analyses show that when messages are propagated through the diffusion chains, they tend to become shorter, gradually inaccurate, and increasingly dissimilar between chains. In contrast, the perception of risk is propagated with higher fidelity due to participants manipulating messages to fit their preconceptions, thereby influencing the judgments of subsequent participants. Computer simulations implementing this simple influence mechanism show that small judgment biases tend to become more extreme, even when the injected message contradicts preconceived risk judgments. Our results provide quantitative insights into the social amplification of risk perception, and can help policy makers better anticipate and manage the public response to emerging threats.

Public perception of risk is often polarized, difficult to anticipate, and at odds with scientific evidence (1, 2). The risks associated with nuclear energy, genetically modified food, and nanotechnologies continue to elicit strong public reaction in contrast to the assessments of many experts, and policy makers often fail to influence the public perception of the risks associated with poor nutrition, a sedentary lifestyle, and overexposure to the sun (2). The ability to communicate risks to the public, but also the ability to anticipate the public response to these risks, has a substantial impact on society and is key to alleviating unnecessary public anxiety (3).

Although research into the psychological factors that influence the formation of risk perceptions (4–7) and the communication of risks (8, 9) is relatively well developed, the study of risk perception is largely focused on the individual. However, risk judgments are formed in a social context: People frequently discuss the everyday risks they face with their friends, relatives, coworkers, and unknown people over the web. They observe and imitate the risky and self-protective behavior of their peers (10), and exchange opinions, sources of information, and behavioral recommendations through social media and online communication platforms. For example, during the H1N1 influenza outbreak in 2009, nearly half a million messages mentioning H1N1 vaccination were exchanged on Twitter, 20% of which explicitly expressed a positive or negative attitude toward vaccination (11). Indeed, a growing body of evidence suggests that people’s perception of risk is mediated by social interaction. Large-scale social network analysis has shown that social interactions influence the spread of behaviors such as smoking, food choice, and adherence to various health programs (12–14). In addition, it has been found that social proximity between individuals influences both their perception of risk (15, 16) and their emotional state (17).

These findings suggest that social interaction may lead to nonlinear amplification dynamics at the scale of the population. As illustrated by a number of studies of social contagion in relation to opinion dynamics (18), collective attention (19), cultural markets (20), and crowd behavior (21), local social influence can lead to chain reactions and amplification that yield population-scale collective patterns (22). Similarly, the dynamics of public risk perception bear the hallmarks of self-organized systems, such as opinion clustering (i.e., connected individuals share a similar judgment) and opinion polarization (i.e., opposing opinions coexist in the same population) (23). Therefore, a major challenge is to understand what role the social transmission of risk information between individuals plays in the formation of population-level dynamics. In particular, to what extent is risk perception contagious, and what behavioral patterns might this contagion yield at the macro level?

Few theorists have attempted to analyze and model these phenomena (24). Among those theorists who have, a common starting point is the social amplification of risk (SAR) framework, which attempts to combine the cultural, structural, and psychological factors that drive the formation of risk perception at the scale of society (25, 26). The SAR framework suggests that individuals may play the role of "amplification stations" by transmitting a small and often biased subset of the available information. Although the overarching perspective of the SAR framework is widely assumed in risk research, it has undergone limited empirical analysis.

In this article, we study the social transmission of risk information from an interdisciplinary perspective by combining insights from the social and cognitive sciences with the study of complex systems and nonlinear dynamics. In particular, we study how messages describing risk change in response to repeated social transmission by analyzing the relative fidelity with which two distinct aspects of a message—the message content and the message signal—propagate through human diffusion chains. What effect does the process of social transmission have on these message components, and what collective patterns of risk perception might this process generate?

To examine these questions, we analyze how information detailing the benefits and harms of the widely used but controversial antibacterial agent triclosan (27) is communicated from one individual to another in experimental diffusion chains. In a diffusion chain, a series of individuals propagate information sequentially and in turn from one individual to the next. Specifically, the information provided by the first individual is communicated to the second individual, who, in turn, communicates this information to a third individual, and so on. In our experiments, participants in the chain were instructed to communicate the risks surrounding triclosan in open, unstructured conversations. In each diffusion chain, the first participant was "seeded" with information presented in media articles detailing the benefits and harms of triclosan. In total, we examine 15 such diffusion chains, each composed of up to 10 participants. Both before and after participation in the experiment, we also assessed each participant's risk perception of triclosan. This experimental paradigm was pioneered by Frederic Bartlett over 80 y ago (28) and has since been used to study how a range of cultural entities undergo change, or cumulatively evolve, in response to repeated cultural transmission by humans and other animals (29–33). Here, we make use of the same experimental paradigm to study, for the first time to our knowledge, how risk information and, consequently, the risk perception of people change when socially transmitted. We found that subjects bias the signal of the message according to their subjective perception of risk, which influences the judgment of the receivers. Crucially, although risk perception "biases" propagate well and are typically amplified, the message content is transmitted with low fidelity and tends to become shorter, gradually inaccurate, and increasingly dissimilar between chains. 
We use computer simulations of this process to understand further the social implications of risk amplification. Put simply, although the content of messages describing risks degenerates in response to repeated social transmission, the signal of the message propagates with high fidelity, with social transmission playing the role of an amplifying process.

Results

Message Content.

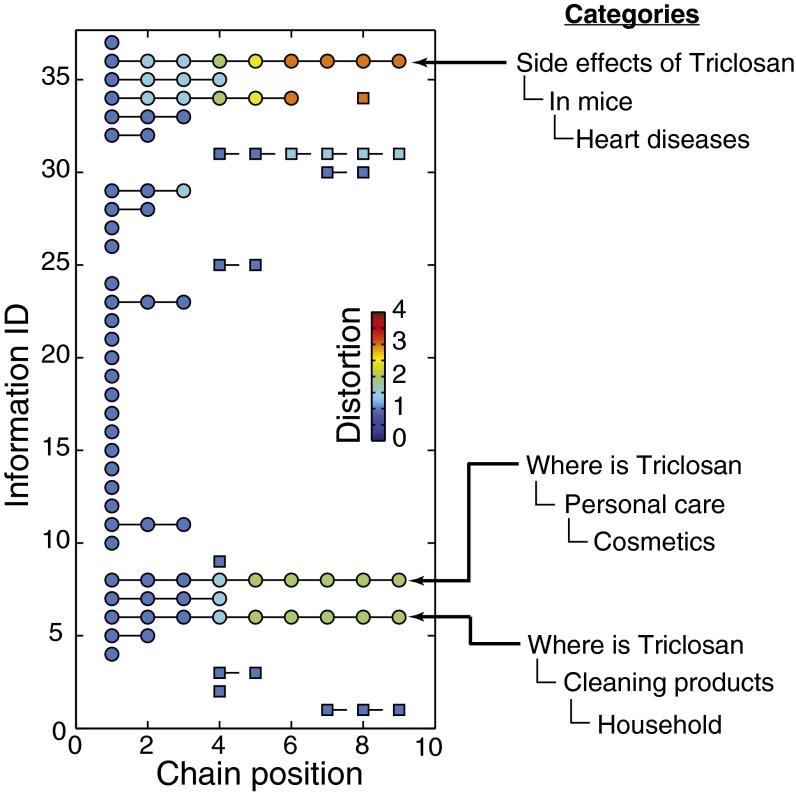

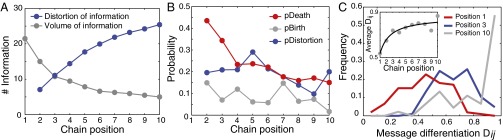

We define the content of the message communicated from one participant to the next as the set of units of information that were communicated during a conversation between these participants. We identified 61 possible units of information by analyzing all conversations that occurred in the 15 diffusion chains. Each unit of information was classified according to a three-level coding scheme (the detailed procedure is explained in Materials and Methods, with examples provided in Fig. 1) and tracked from one chain position to the next. This procedure allows us to study the propagation of information down each chain. In addition, a unit of information is labeled "distorted" if it changes when transmitted from one chain position to the next. To illustrate, Fig. 1 depicts the propagation of risk information down a typical chain. At the first chain position, a total of 30 units of information were mentioned, 13 of which were propagated to the second chain position and only three of which were propagated to the final chain position. These three surviving units were, however, distorted (as illustrated by the color coding in Fig. 1). In addition, seven new units of information were generated as the chain unfolded (represented by squares in Fig. 1), two of which were propagated to the end of the chain. Propagation maps for all 15 chains are shown in Fig. S1. As shown in Fig. 2A, most units of information disappear as the chain unfolds, whereas those that are propagated are transmitted with low fidelity and tend to become increasingly distorted. In addition, we measured the probability of a specific unit of information disappearing (pDeath) from one position to the next, as estimated from all 15 diffusion chains. As shown in Fig. 2B, pDeath has high values at the first two chain positions and reaches a relatively stable level of 0.2 afterward. 
Similarly, we estimated the probability that a specific unit of information is created (pBirth) or gets distorted (pDistortion) from one chain position to the next. As shown in Fig. 2B, these probabilities remain largely constant as a function of chain position. In addition, we found no significant differences when comparing these probabilities within each of the categories used in our coding scheme, which suggests that units of information (at least those that we encounter here) can appear, disappear, or undergo content distortion with ostensibly constant probabilities, regardless of the kind of information communicated (Fig. S2). Next, we analyzed how the messages develop among the 15 chains. Do all of the chains eventually converge to a similar set of information units, or, conversely, do these sets diverge from each other? To examine these questions, we measured the message differentiation coefficient $D_{ij}^p$, which measures the proportion of information units present in chain i at position p that are not present in the message of chain j at the same position p. Formally, the differentiation is defined as

$$D_{ij}^p = \frac{\left|M_i^p \setminus M_j^p\right|}{\left|M_i^p\right|},$$

where $M_i^p$ is the set of information units contained in the message of chain i at position p and $|M_i^p|$ is the size of that set. Therefore, $D_{ij}^p = 0$ when all the information units in chain i are also present in chain j at the same position (no differentiation), whereas $D_{ij}^p = 1$ indicates that none of them were found in chain j at this position (maximum differentiation). Fig. 2C shows the distribution of $D_{ij}^p$ for all possible pairs of messages {i, j} taken at positions p = 1, p = 3, and p = 10. The distributions tend to shift toward high differentiation values as p increases. Whereas the messages communicated at the first position have an average differentiation of 0.53, this value increases to 0.87 at the 10th position (Fig. 2C, Inset). In short, the content of the messages becomes increasingly dissimilar among chains as the messages propagate down each chain.
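The differentiation coefficient can be computed directly from sets of unit identifiers. The Python sketch below assumes each message is encoded as a set of integer unit IDs (a hypothetical encoding of the coded transcripts). Because $D_{ij}^p$ is not symmetric, the averaging helper uses ordered pairs (i, j) with i ≠ j; whether the reported averages use ordered or unordered pairs is an assumption.

```python
from itertools import permutations
from statistics import mean
from typing import Sequence, Set


def differentiation(m_i: Set[int], m_j: Set[int]) -> float:
    """D_ij^p: share of the units in message i that are absent from message j."""
    if not m_i:
        raise ValueError("message i contains no information units")
    return len(m_i - m_j) / len(m_i)


def mean_differentiation(messages: Sequence[Set[int]]) -> float:
    """Average D_ij^p over all ordered pairs (i, j), i != j, at one position p."""
    return mean(differentiation(a, b) for a, b in permutations(messages, 2))


# Hypothetical unit IDs for the messages of three chains at the same position.
messages_p = [{1, 2, 3, 4}, {1, 2, 5}, {6, 7}]
print(differentiation(messages_p[0], messages_p[1]))
print(mean_differentiation(messages_p))
```

In this toy example the first call returns 0.5, because units 3 and 4 of the first message are missing from the second.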

Fig. 1.

Topological map of information propagation in an experimental diffusion chain. Among all units of information available at chain position 1 (blue dots), only three survived to the end of the chain, and they were strongly distorted. The text on the right-hand side describes the categories of these units of information. Seven units of information were introduced by the chain (squares), two of which survived to the end of the chain. The color coding indicates the cumulated content distortion of the information. Information identifiers (y axis) are arbitrary.

Fig. 2.

Dynamics of information propagation. (A) Mean number of units of information transmitted and the mean cumulated content distortion of the information over the chains. (B) Hazard functions showing the probabilities that a piece of information disappears (red, pDeath), gets distorted (blue, pDistortion), or appears (gray, pBirth) at each chain position. (C) Distribution of the message differentiation Dij for all possible pairs of messages i and j at position 1 (red line), position 3 (blue line), and position 10 (gray line). The distributions tend to shift toward high differentiation values, indicating that the content of the message becomes increasingly different between the chains as it propagates from one person to another. (Inset) Mean value of Dij at each position of the chain (gray dots). The dark line corresponds to the fit equation with parameters p1 = 0.86 and p2 = 0.61.
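The hazard functions in Fig. 2B can be estimated by pooling position-to-position transitions across chains. The sketch below is one possible estimator, not necessarily the one used for the figure. It assumes each chain is a list (one entry per position) of dicts mapping a unit ID to a short description of that unit's content, treats any change in that description as a distortion, and, as a hypothetical normalization, divides births by the number of units present at the previous position.

```python
import math
from collections import defaultdict
from typing import Dict, Mapping, Sequence


def hazard_rates(chains: Sequence[Sequence[Mapping[int, str]]]) -> Dict[int, Dict[str, float]]:
    """Pooled pDeath, pDistortion and pBirth for each chain position p >= 2."""
    counts = defaultdict(lambda: {"prev": 0, "died": 0, "survived": 0,
                                  "distorted": 0, "born": 0})
    for chain in chains:
        seen = set(chain[0]) if chain else set()  # unit IDs already seen in this chain
        for k in range(1, len(chain)):
            prev, msg = chain[k - 1], chain[k]
            c = counts[k + 1]  # transition into chain position k + 1
            c["prev"] += len(prev)
            for uid, content in prev.items():
                if uid not in msg:
                    c["died"] += 1
                else:
                    c["survived"] += 1
                    if msg[uid] != content:  # content changed: count as distortion
                        c["distorted"] += 1
            # Units never seen earlier in this chain count as births.
            c["born"] += sum(1 for uid in msg if uid not in seen)
            seen.update(msg)

    def ratio(num: int, den: int) -> float:
        return num / den if den else math.nan

    return {
        p: {
            "pDeath": ratio(c["died"], c["prev"]),
            "pDistortion": ratio(c["distorted"], c["survived"]),
            "pBirth": ratio(c["born"], c["prev"]),
        }
        for p, c in sorted(counts.items())
    }
```

Distortion is measured here only among units that survive a transition, which keeps pDeath and pDistortion from overlapping.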

Message Signal.

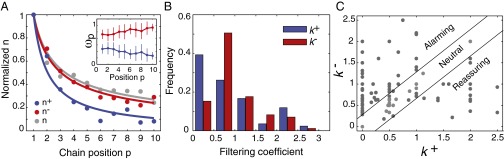

To understand further the impact of social information transmission, we analyzed the signal of the message, that is, whether the message carries a positive or negative assessment of triclosan. To quantify the signal of the message, we analyzed the transcripts of all conversations and marked each sentence as "negative," "positive," or "neutral" (Materials and Methods). Sentences are labeled negative when they express a negative assessment of triclosan (e.g., negative side effects) and are labeled positive when they express a positive assessment (e.g., that triclosan is safe). Sentences that express neither a positive nor a negative assessment are neutral. This coding scheme is independent of the coding scheme used to quantify the message content. We use $n_p^+$ and $n_p^-$ to denote the numbers of positive and negative statements found at chain position p, and $n_p$ to denote the total number of statements at position p. As shown in Fig. 3A, all three quantities decrease as a function of chain position, but $n_p^+$ decays faster than $n_p^-$, suggesting that negative statements propagate down the chain more freely than positive statements. Consequently, the relative proportion of negative statements tends to increase gradually, at the expense of the relative proportion of positive statements (Fig. 3A, Inset).

Fig. 3.

Message mutation. (A) Evolution of the normalized number of positive statements (blue), negative statements (red), and total statements (gray) over the chain. Fit lines are power functions $\propto p^{-e}$, where the exponent e equals 0.96, 0.62, and 0.57 for $n_p^+$, $n_p^-$, and $n_p$, respectively. (Inset) Average proportion of positive statements ($n_p^+/n_p$, in blue) and negative statements ($n_p^-/n_p$, in red) along the chains. (B) Distribution of the filtering coefficients $f_p^+$ and $f_p^-$ for all subjects. The distribution of $f_p^-$ is significantly higher than the distribution of $f_p^+$ (P < 0.001). (C) Individual profiles measured as the pair $\{f_p^+, f_p^-\}$. Each point represents one experimental subject. Individuals with $f_p^+ = f_p^-$ have a neutral effect on the message, whereas individuals with $f_p^- > f_p^+$ (respectively $f_p^+ > f_p^-$) tend to make the message more alarming (respectively more reassuring).

How are these aggregate trends related to the behavior of individual participants? We introduce the individual filtering coefficients $f_p^+$ and $f_p^-$, which express the degree to which the participant at chain position p modified the message (either positively or negatively) that he or she received from the participant at position p − 1. The filtering coefficients are formally defined as $f_p^+ = n_p^+/n_{p-1}^+$ and $f_p^- = n_p^-/n_{p-1}^-$. Thus, $f_p^+ < 1$ holds for participants who attenuate the positive aspect of the message, whereas $f_p^+ > 1$ holds when a participant has amplified the positive aspect of the message (for negative statements, $f_p^- < 1$ and $f_p^- > 1$, respectively). The distributions of $f_p^+$ and $f_p^-$ across all participants are shown in Fig. 3B. In line with our previous finding, $f_p^-$ is, on average, higher than $f_p^+$ (P < 0.001), which further underscores that our participants have an overall tendency to amplify negative statements and attenuate positive ones. However, significant individual differences were found (Fig. 3C): Although the majority of subjects tend to amplify the harmful aspects of triclosan ($f_p^- > f_p^+$), other participants have the opposite profile ($f_p^+ > f_p^-$) or act neutrally on the message ($f_p^+ \approx f_p^-$). Interestingly, the individual differences can be partly explained by the risk perception that subjects reported in the questionnaire completed after the experiment [the filtering profile correlates positively with post-experiment risk perception; correlation (c) = 0.25, P = 0.019]. In short, the direction of the "mutation" of the message corresponds to the opinion of the speaker: Individuals with higher risk perception tend to filter out positive statements and amplify the dangerous aspects of triclosan, whereas individuals with lower risk perception tend to have the opposite effect on the signal of the message.
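The filtering coefficients, and a rough classification of speakers into alarming, reassuring, or neutral profiles, can be sketched as follows. The inputs are hypothetical per-position statement counts, and the tolerance `tol` used to call a profile neutral is an assumption; the text does not specify one.

```python
import math
from typing import List, Sequence, Tuple


def filtering_coefficients(n_pos: Sequence[int],
                           n_neg: Sequence[int]) -> List[Tuple[float, float]]:
    """Return (f_p^+, f_p^-) for every position p >= 2.

    n_pos[k] and n_neg[k] are the numbers of positive and negative statements
    at chain position k + 1. A zero count at p - 1 gives an undefined (NaN) ratio.
    """
    coeffs = []
    for k in range(1, len(n_pos)):
        f_pos = n_pos[k] / n_pos[k - 1] if n_pos[k - 1] else math.nan
        f_neg = n_neg[k] / n_neg[k - 1] if n_neg[k - 1] else math.nan
        coeffs.append((f_pos, f_neg))
    return coeffs


def profile(f_pos: float, f_neg: float, tol: float = 0.1) -> str:
    """Label a speaker by comparing f^- with f^+ (tol is a hypothetical threshold)."""
    if math.isnan(f_pos) or math.isnan(f_neg):
        return "undefined"
    if abs(f_neg - f_pos) <= tol:
        return "neutral"
    return "alarming" if f_neg > f_pos else "reassuring"


coeffs = filtering_coefficients([10, 5, 5], [4, 4, 6])
print(coeffs)
print([profile(fp, fn) for fp, fn in coeffs])
```

Comparing the two ratios, rather than testing each against 1, matches the reading of Fig. 3C, where the relevant distinction is whether negative statements are kept more readily than positive ones.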

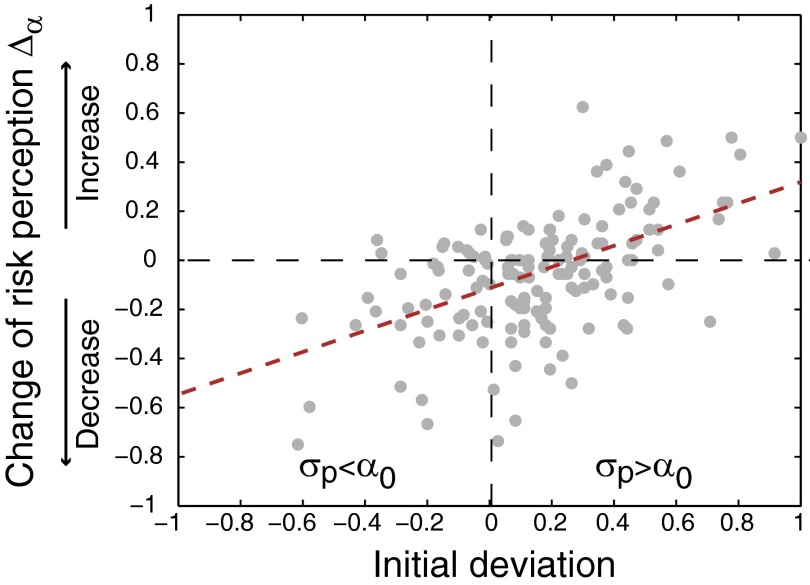

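Finally, we measured what impact a message has on the risk perception of the receiver. Overall, participants changed their risk perception by an average absolute amount $\langle|\Delta r|\rangle$ (SD = 0.17), where $\Delta r = r^{\text{after}} - r^{\text{before}}$, $r^{\text{before}}$ is the risk perception of the individual before the experiment, and $r^{\text{after}}$ is the risk perception of the same subject after the experiment. The degree of change should be interpreted relative to the signal of the message that this participant received. We define the signal of a message as the proportion of negative statements among its positive and negative statements, $S = n^-/(n^+ + n^-)$, and the deviation between the received signal and the participant's prior judgment as $\Delta S = S - r^{\text{before}}$. When the message signal confirms the prior assessment of the participant (i.e., $\Delta S = 0$), no change of opinion is expected (i.e., $\Delta r = 0$); otherwise, $\Delta r$ should vary in the same direction as $\Delta S$. As shown in Fig. 4, $\Delta r$ and $\Delta S$ correlate well (c = 0.55, P < 0.001), confirming that participants are influenced by the signal of the message they receive.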
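If the change in risk perception is proportional to the deviation, $\Delta r = s\,\Delta S$, the influence factor can be read off data like Fig. 4 as a least-squares slope through the origin. The sketch below assumes three hypothetical per-participant lists (prior risk perception, posterior risk perception, received signal); fitting without an intercept is an assumption, not necessarily how the reported fit was obtained.

```python
from typing import List, Sequence, Tuple


def deviations(r_before: Sequence[float], r_after: Sequence[float],
               signal: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Return (delta_S, delta_r) for each participant."""
    d_s = [s - rb for s, rb in zip(signal, r_before)]
    d_r = [ra - rb for ra, rb in zip(r_after, r_before)]
    return d_s, d_r


def influence_slope(d_s: Sequence[float], d_r: Sequence[float]) -> float:
    """Least-squares slope of delta_r on delta_S with no intercept term."""
    denom = sum(x * x for x in d_s)
    if denom == 0:
        raise ValueError("every received signal equals the prior judgment")
    return sum(x * y for x, y in zip(d_s, d_r)) / denom


# Synthetic participants generated with s = 0.45; the fit should recover it.
r_before = [0.2, 0.5, 0.9]
signal = [0.8, 0.5, 0.1]
r_after = [rb + 0.45 * (s - rb) for rb, s in zip(r_before, signal)]
print(influence_slope(*deviations(r_before, r_after, signal)))
```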

Fig. 4.

Social influence. The scatter plot represents the degree to which participants changed their risk perception as a function of the initial deviation between their risk perception and the signal of the message they received. Subjects receiving a message with a more negative signal than their initial perception tend to increase their risk level, whereas subjects receiving a more positive signal tend to reduce their risk level. The slope of the fit line (0.45) provides the experimental estimate of the influence factor s.

Collective Patterns.

These findings provide quantitative insights into the role of social transmission as an amplifying process. Specifically, the signal of the incoming message influences the receiver’s risk perception, which, in turn, shapes the signal of the outgoing message. In many social systems, similar feedback loops are typically associated with nonlinear dynamics and emerging collective patterns (22). In our experiment, the social amplification of the risk signal is clearly visible (Fig. 3A). However, considerable behavioral fluctuations are observed within and between chains, making it difficult to conduct a systematically controlled analysis of the amplification process. We analyzed a simple simulation model derived from our observations to study this amplification process more precisely.

In the model, each individual i in a chain has an initial risk perception $r_i$. Individuals sequentially, and in turn, receive and transmit a message made of $n^+$ positive and $n^-$ negative statements. The signal of the message is defined as above, by the equation $S = n^-/(n^+ + n^-)$. When an individual i receives the message, the risk level of that individual changes as follows:

$$r_i \leftarrow r_i + s\,(S - r_i), \tag{1}$$

where s is an influence factor with a value between 0 (no influence) and 1 (full adoption). Previous empirical estimates of influence factors lie between 0.3 and 0.5 (34, 35), which is consistent with our experimental estimate (s = 0.45; Fig. 4). In our simulation, when a message is transmitted from one person to another, it undergoes a random biased mutation dependent on the risk perception of the emitter i. For the sake of simplicity, we implement the message mutation such that $n^-$ is increased by 1 with a probability $r_i$ and is decreased by 1 with a probability $1 - r_i$. Similarly, $n^+$ is increased by 1 with a probability $1 - r_i$ and is decreased by 1 with a probability $r_i$. Based on this simple model, we implemented a series of computer simulations. Each simulation models a diffusion chain of N agents, where the ith agent receives a risk message from the (i − 1)th agent and transmits a message to the (i + 1)th agent. Each agent i has an initial risk level of $r_i$. The first agent, at chain position i = 1, receives a seeded message composed of $n_0^+$ positive statements and $n_0^-$ negative statements. Iteratively, and for each agent in turn, three operations are applied: (i) agent i updates his or her risk perception in response to the received message, as defined by Eq. 1, (ii) agent i modifies the message using probabilities $r_i$ and $1 - r_i$, and (iii) agent i transmits the modified message to agent i + 1. Three example simulations illustrating the implications of different parameter values are detailed in Fig. S3.
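The three operations (i) to (iii) can be sketched as a single loop over agents. This is a minimal Python reading of the model under stated assumptions: the counts for positive and negative statements are mutated with two independent random draws, counts are floored at zero, and a message with no positive or negative statements is treated as carrying no signal (no influence). None of these details is confirmed by the text.

```python
import random
from typing import List, Optional, Sequence, Tuple


def simulate_chain(r_init: Sequence[float], n_pos: int, n_neg: int, s: float,
                   rng: Optional[random.Random] = None) -> Tuple[List[float], List[float]]:
    """Run one diffusion chain; agent k receives the message from agent k - 1.

    Returns the signal of the message each agent transmits and each agent's
    risk perception after being influenced.
    """
    if rng is None:
        rng = random.Random()
    signals, risks = [], []
    for r in r_init:
        total = n_pos + n_neg
        # Assumption: an empty message carries no signal and exerts no influence.
        received = n_neg / total if total else r
        # (i) Eq. 1: move the agent's risk perception toward the received signal.
        r = r + s * (received - r)
        # (ii) Biased mutation: n^- rises with prob. r, n^+ rises with prob. 1 - r;
        # otherwise each count drops by one (never below zero).
        n_neg = n_neg + 1 if rng.random() < r else max(n_neg - 1, 0)
        n_pos = n_pos + 1 if rng.random() < 1 - r else max(n_pos - 1, 0)
        # (iii) Transmit the mutated message and record its signal S.
        total = n_pos + n_neg
        signals.append(n_neg / total if total else float("nan"))
        risks.append(r)
    return signals, risks


rng = random.Random(42)
# Experiment-like start: r_i ~ N(0.65, 0.22), clipped to [0, 1] (clipping is an assumption).
r_init = [min(max(rng.gauss(0.65, 0.22), 0.0), 1.0) for _ in range(10)]
# Hypothetical seeded message of 5 positive and 5 negative statements.
signals, risks = simulate_chain(r_init, n_pos=5, n_neg=5, s=0.45, rng=rng)
print([round(x, 2) for x in signals])
```

With every agent at $r_i = 1$ and s = 0, the mutation becomes deterministic: each transmission adds a negative statement and removes a positive one, so the signal climbs to 1.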

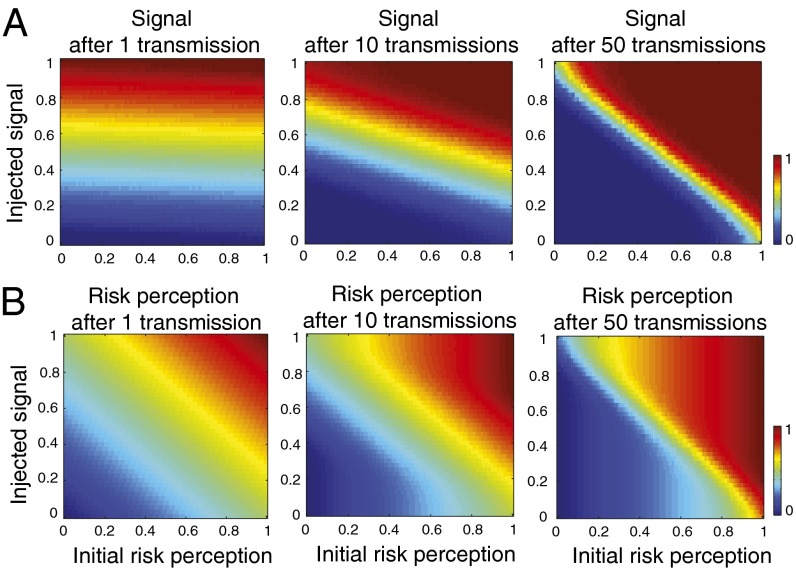

Whereas the impact of the message on the first agent in the chain is given by Eq. 1, the dynamics at subsequent chain positions are complex and result from repeated mutations of the message, combined with related changes in the judgment of the individuals. First, we conducted simulations under conditions matching those of our experiment, where there is a substantial diversity of initial risk perceptions (i.e., where each agent's initial risk perception $r_i$ is drawn from a normal distribution with a mean of 0.65 and an SD of 0.22). At chain position p = 10, the aggregate distributions of risk signals and risk perceptions correspond to the risk signals and risk perceptions we observed experimentally (Kolmogorov–Smirnov test: P = 0.57 and P = 0.08, respectively). As we observed previously, the model predicts a gradual amplification of the risk signal, whereas individuals' final risk perception exhibits considerable variability due to the diversity of initial judgments (simulation examples in Fig. S3). Under homogeneous initial conditions, where all individuals have the same initial risk perception (i.e., $r_i = r_0$ for all individuals i), the amplification process is itself amplified and easier to study. For example, our simulations show that a neutral message (i.e., $n_0^+ = n_0^-$) injected into a population of concerned individuals (i.e., a high initial $r_i$ for all individuals in the chain) tends to mutate rapidly as it propagates from one agent to another, culminating in a steady state representing the population's initial view, with no impact on the individuals' opinions in the long run (Fig. S3B). What social dynamics emerge when the initial risk perception of the group, the signal of the injected message, and the social influence factor s are varied? Fig. 5 and Fig. S4 explore the model's parameter space along these dimensions. In short, the social amplification of the risk signal is visible after 10 transmissions and continues to increase as the message propagates further (Fig. 5A). 
After 50 transmissions, the signal of the message is either strongly positive (dark blue, Fig. 5A) or strongly negative (dark red, Fig. 5A). The impact on individuals’ risk perception follows a similar trend (Fig. 5B). In most cases, the message has a polarizing effect on the population, where the biased initial judgments of the agents tend to become more extreme at the end of the chain, even when the injected message is completely neutral. Along the transition zone depicted in green (Fig. 5), the mean values of the signal and the risk perception are both 0.5, but these means have very high SDs, indicating a U-shaped distribution where the outcome can be either high or low (Fig. S5). Thus, even a neutral message injected into a neutral population can possibly have a polarizing effect on the judgment of the individuals due to the amplification of random mutations of the message (as illustrated in Fig. S3C).
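Fig. 5 averages 500 simulations per combination of initial risk perception and injected signal. A compact, self-contained sketch of that averaging for a homogeneous chain (every agent starts at $r_0$) follows. It uses the model as described in the text (Eq. 1 for the update, ±1 mutations with probabilities $r$ and $1 - r$); the seeded message size of 10 statements, the rounding of the injected signal to whole statements, the independent draws for the two counts, and the helper names `final_state` and `sweep_point` are hypothetical. Reporting the SD alongside the mean is what exposes the U-shaped outcomes along the transition zone.

```python
import math
import random
from statistics import mean, pstdev
from typing import Tuple


def final_state(r0: float, signal0: float, s: float, n_agents: int,
                size: int, rng: random.Random) -> Tuple[float, float]:
    """One homogeneous chain; return the last transmitted signal and last agent's risk."""
    n_neg = round(signal0 * size)  # hypothetical seeded message of `size` statements
    n_pos = size - n_neg
    r = r0
    for _ in range(n_agents):
        total = n_pos + n_neg
        received = n_neg / total if total else r0  # empty message: no influence
        r = r0 + s * (received - r0)  # Eq. 1 for a fresh agent with prior r0
        n_neg = n_neg + 1 if rng.random() < r else max(n_neg - 1, 0)
        n_pos = n_pos + 1 if rng.random() < 1 - r else max(n_pos - 1, 0)
    total = n_pos + n_neg
    return (n_neg / total if total else math.nan), r


def sweep_point(r0: float, signal0: float, s: float = 0.5, n_agents: int = 50,
                size: int = 10, n_runs: int = 500, seed: int = 0):
    """Mean and SD of the final signal and final risk perception over n_runs chains."""
    rng = random.Random(seed)
    runs = [final_state(r0, signal0, s, n_agents, size, rng) for _ in range(n_runs)]
    signals = [sig for sig, _ in runs if not math.isnan(sig)]
    risks = [risk for _, risk in runs]
    return mean(signals), pstdev(signals), mean(risks), pstdev(risks)


# Coarse grid in the spirit of Fig. 5 (s = 0.5, 50 transmissions, 500 runs each).
for r0 in (0.2, 0.5, 0.8):
    for signal0 in (0.2, 0.5, 0.8):
        m_sig, sd_sig, m_r, sd_r = sweep_point(r0, signal0)
        print(f"r0={r0:.1f} S0={signal0:.1f}  signal={m_sig:.2f}±{sd_sig:.2f}  "
              f"risk={m_r:.2f}±{sd_r:.2f}")
```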

Fig. 5.

Computer simulations. (A) Evolution of the risk signal at chain positions 1, 10, and 50 as a function of the initial risk perception of the individuals (x axes) and the signal of the injected message (y axes). The gradual dominance of extreme values (in dark red and dark blue) demonstrates the amplification of the risk signal. (B) Evolution of individuals’ risk perception follows a similar trend. Individuals who initially expressed extreme risk perception (x = 0 or x = 1) gradually move back to their initial view, regardless of the message signal. The social influence parameter is set to s = 0.5. Simulations varying the value of s are provided in Fig. S4. Results are averaged over 500 simulations.

Discussion

Among the factors influencing public risk perception, the impact of social transmission is arguably the least studied, despite being an everyday occurrence in an increasingly connected society. This study is the first, to our knowledge, to examine experimentally how risk information propagates when it is socially transmitted among human subjects, and how risk perceptions become amplified as a result of this propagation. Ambiguity surrounds the risks associated with triclosan, such that each message not only carries factual information about the potential benefits and harms but also carries a signal representing the subjective judgment of the communicator. Consequently, we have distinguished between "content" propagation and "signal" propagation. First, we found that the information content of a message degrades (contains fewer units of information) and becomes less accurate (undergoes content distortion) in response to being transmitted. As a consequence, different communication chains came to focus on different issues related to triclosan, including Greenpeace protests (chain 13), breastfeeding (chain 10), and environmental damage (chain 3). Participants nevertheless influence each other: Changes in risk perception are a function of the signal of the received message (whether the message is positive or negative). Thus, changes in risk perception occur less as a result of the message content and more as a result of its overarching, subjective signal. In agreement with Bartlett's experiments (28), we observed that the information passed along the chain is shaped by the preconceptions of its members. In Bartlett's experiment (28), a Native American story was gradually modified to fit the cultural perceptions of the British participants. In our experiment, a similar mechanism drives the propagation of, and modifications to, "stories" detailing the risks associated with triclosan. 
One explanation for this behavior is that when faced with conflicting information, participants are in a state of cognitive dissonance and, consistent with Festinger’s theory (36), reduce the discrepancy between their prior judgment and the received message. Thus, cognitive dissonance theory may partly explain why participants alter the strength of the risk signal.

At the aggregate level, the process of social transmission tends to amplify or attenuate the message signal. As a message propagates down the chain, it becomes distorted to fit the view of those individuals transmitting it, with the original message eventually having a negligible impact on the judgment of the participants. Ultimately, the message can have a counterintuitive polarizing effect on the population: After being transmitted several times, the message can strengthen the existing bias of the group, even though it initially supported the opposite view. As our simulations show, this social phenomenon is itself amplified in chains of like-minded individuals. According to recent theoretical and empirical studies (15, 23, 37), social interactions and information exchange frequently take place within clusters of like-minded people, suggesting that “natural” social structures may create favorable conditions for strong amplification dynamics. Investigating the development of risk patterns in naturally occurring social networks, which includes the impact of complex topologies with highly connected individuals (38) and a possibly stronger social influence (Fig. S4), therefore remains an important direction for future work. Similarly, factors influencing the frequency with which people communicate risks may also regulate their amplification. For example, new incidents typically lead to a burst of social exchange followed by a long-tailed decay of collective attention (19, 39).

More generally, our experiments demonstrate how the field of opinion dynamics can be extended to include the study of risk perception and how existing theoretical models of collective risk perception can be furnished with empirical support (37, 40). Perhaps most importantly, our findings illustrate the importance of studying patterns of risk perception as, at least in part, the outcome of a social process. From a public health perspective, the social amplification of risk can have undesirable and costly consequences, making it crucial to understand how policy makers can communicate risks in such a way as to aid their transmission through social networks. Promising lines of inquiry include simple yet effective methods for helping people assess the risks and benefits of technologies, chemicals, or medical drugs (8), and simple tabular representations and visual displays used to clarify public debates (41).

Materials and Methods

Experimental Design.

The experiment took place in April 2013. We invited 12 participants to each of the 15 experimental sessions. Due to absences, some sessions were conducted with fewer than 12 participants. In total, we collected data from four chains of length 12, two chains of length 11, four chains of length 10, three chains of length 9, one chain of length 8, and one chain of length 7 (average chain size = 10.1). Our analyses considered only the first 10 participants of diffusion chains of length greater than 10. All participants gave informed consent to the experimental procedure and received a flat fee of €15. The participants entered a waiting room and were instructed not to interact with each other. All participants completed an initial questionnaire, Q1. The first participant, p1, was then moved to the experimental room and instructed to read a collection of six media articles displayed on a computer screen. The articles presented alternative views on the use and suspected side effects of the controversial antibacterial agent triclosan (these articles are provided in SI Appendix). To present a representative selection of articles, and one that reflects those articles likely to be encountered in an everyday setting, we selected each article from the first page of results returned by Google with the search keyword "triclosan" (accessed early in 2013). The articles were presented in a random order across groups, and subject p1 had 3 min to read each of them. After the reading phase (18 min in total), the computer was shut down and participant p2 was invited to join participant p1 in the experimental room. Both participants were instructed to discuss triclosan in an open, unstructured discussion. No time limit was imposed. At the end of the discussion, the next participant, p3, was moved to the experimental room and a new discussion began between participants p2 and p3 under the same conditions. 
In the meantime, the first participant p1 was instructed to complete the second questionnaire Q2 in another room and was then free to leave. This procedure was repeated for all participants in the chain. The discussions were recorded, and the audio files were then transcribed and translated into English (from German).
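The chain bookkeeping above can be checked with a little arithmetic. The Python sketch below (our illustration; no code accompanies the original study) recomputes the average chain size from the reported chain counts, applies the 10-position cutoff, and lists the ordered discussion pairs (p1, p2), (p2, p3), and so on. The helper names `analyzed_length` and `discussion_pairs` are hypothetical.

```python
from statistics import mean

# Number of chains of each length, as reported in the text: {chain length: count}
CHAIN_COUNTS = {12: 4, 11: 2, 10: 4, 9: 3, 8: 1, 7: 1}
MAX_POSITIONS = 10  # only the first 10 participants of longer chains were analyzed
N_ARTICLES, MIN_PER_ARTICLE = 6, 3  # six articles, 3 min each

# One entry per chain (15 chains in total)
lengths = [length for length, n in CHAIN_COUNTS.items() for _ in range(n)]


def analyzed_length(length: int) -> int:
    """Hypothetical helper: number of chain positions that enter the analysis."""
    return min(length, MAX_POSITIONS)


def discussion_pairs(length: int) -> list[tuple[str, str]]:
    """Hypothetical helper: ordered discussion pairs; a chain of n people has n - 1 discussions."""
    return [(f"p{i}", f"p{i + 1}") for i in range(1, length)]


print("chains:", len(lengths))                                          # 15
print("participants:", sum(lengths))                                    # 152
print("average chain size:", round(mean(lengths), 1))                   # 10.1
print("analyzed positions:", sum(analyzed_length(n) for n in lengths))  # 142
print("reading phase (min):", N_ARTICLES * MIN_PER_ARTICLE)             # 18
print(discussion_pairs(4))  # [('p1', 'p2'), ('p2', 'p3'), ('p3', 'p4')]
```

The 152 participants over 15 chains give 10.13, matching the reported average of 10.1; truncating the longer chains leaves 142 analyzed positions.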

Measures of Risk Perception.

The risk perception of the participants toward triclosan was measured before and after the discussion by means of two questionnaires, Q1 and Q2. Questionnaire Q1 evaluated the participants’ knowledge about triclosan. Among all participants, only one reported prior knowledge of triclosan, and this participant failed to answer basic questions about it. We therefore considered all participants to be unfamiliar with triclosan. Questionnaires Q1 and Q2 also evaluated the participants’ risk perception toward triclosan, which they self-reported by placing a tick on a continuous line ranging from “Not dangerous at all” to “Extremely dangerous” (coded with values from 0 to 1, respectively). Because participants were unfamiliar with triclosan before the experiment, it was impossible to assess their level of risk perception directly. We therefore used an indirect measure, evaluating their perception of risk toward the more general issue of chemical use in food safety and cosmetics.
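The text states only that tick positions were coded between 0 and 1. A natural reading is a linear mapping of the tick's position along the line, and the Python sketch below assumes exactly that; `tick_to_score`, the clipping of ticks drawn past either end, and the `risk_change` helper (difference between the Q2 and Q1 scores) are all our hypothetical illustrations rather than procedures described in the article.

```python
def tick_to_score(tick_pos: float, left_end: float, right_end: float) -> float:
    """Map a tick on the response line to a risk score in [0, 1].

    Assumes a linear coding: the left end ("Not dangerous at all") maps to 0 and
    the right end ("Extremely dangerous") maps to 1. Ticks drawn slightly past
    either end are clipped to the nearest bound (an assumption).
    """
    if right_end <= left_end:
        raise ValueError("right_end must lie to the right of left_end")
    score = (tick_pos - left_end) / (right_end - left_end)
    return min(max(score, 0.0), 1.0)


def risk_change(q1_score: float, q2_score: float) -> float:
    """Hypothetical helper: shift in risk perception from Q1 (before) to Q2 (after)."""
    return q2_score - q1_score


# Usage with made-up numbers: a line spanning positions 0 to 100,
# with ticks at 40 (Q1) and 73 (Q2)
q1 = tick_to_score(40, 0, 100)
q2 = tick_to_score(73, 0, 100)
print(q1, q2, round(risk_change(q1, q2), 2))  # 0.4 0.73 0.33
```

Because the same line is used in both questionnaires, scores from Q1 and Q2 are on a common scale, which is what makes a simple difference meaningful under this assumption.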

Coding the Content of the Message.

We first used the transcripts of the discussions to identify every unit of information that was discussed, which yielded a list of 61 units of information. These units were classified into four high-level categories: “Side effects,” “Personal anecdotes,” “Where is triclosan,” and “Others.” Each of these high-level categories was then subdivided into subcategories and sub-subcategories. For example, the top category Side effects contains the subcategory “In mice,” which was further divided into seven classes, such as “Heart diseases” and “Cancer.” Finally, we analyzed the transcripts and tracked each unit of information from one chain position to the next until the end of each chain. In addition, a unit of information was labeled distorted if factual differences or imprecisions were detected from one chain position to the next. A content distortion was marked when (i) a numerical value changed or disappeared; (ii) a qualitative indication of volume, frequency, or probability changed or disappeared; (iii) the generality of the description provided by a unit of information changed; (iv) a previously nonexistent unit of information was added; or (v) a unit of information was factually incorrect.
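Once the transcripts have been coded by hand, the tracking step reduces to simple set operations. The Python sketch below assumes a hypothetical representation in which each chain position is a set of units written as category paths (for example "Side effects/In mice/Cancer"), plus the subset a coder flagged as distorted under criteria (i) to (v). The class `Transmission`, the helper functions, and the placeholder labels "unit A" and "unit B" are our own; the distortion judgments themselves remain manual.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Transmission:
    """Units of information conveyed at one chain position (hypothetical representation)."""
    position: int
    units: set[str]
    distorted: set[str] = field(default_factory=set)  # flagged under criteria (i)-(v)

    def __post_init__(self) -> None:
        if not self.distorted <= self.units:
            raise ValueError("distorted units must be a subset of the transmitted units")


def message_lengths(chain: list[Transmission]) -> list[int]:
    """Number of units of information at each chain position."""
    return [len(t.units) for t in chain]


def carried_over(chain: list[Transmission]) -> list[int]:
    """For each position after the first, units also present at the previous position."""
    return [len(curr.units & prev.units) for prev, curr in zip(chain, chain[1:])]


def distortion_rates(chain: list[Transmission]) -> list[float]:
    """Share of units flagged as distorted at each position."""
    return [len(t.distorted) / len(t.units) if t.units else 0.0 for t in chain]


def top_categories(t: Transmission) -> Counter:
    """Count units by high-level category (the first element of the path)."""
    return Counter(u.split("/")[0] for u in t.units)


# Made-up chain of three positions; "unit A" and "unit B" are placeholder labels
chain = [
    Transmission(1, {"Side effects/In mice/Cancer",
                     "Side effects/In mice/Heart diseases",
                     "Where is triclosan/unit A"}),
    Transmission(2, {"Side effects/In mice/Cancer", "Where is triclosan/unit A"},
                 distorted={"Side effects/In mice/Cancer"}),
    Transmission(3, {"Side effects/In mice/Cancer", "Personal anecdotes/unit B"},
                 distorted={"Personal anecdotes/unit B"}),  # newly added unit, criterion (iv)
]
print(message_lengths(chain))   # [3, 2, 2]
print(carried_over(chain))      # [2, 1]
print(distortion_rates(chain))  # [0.0, 0.5, 0.5]
```

Storing units as category paths keeps the hierarchy recoverable, so counts can be aggregated at the category, subcategory, or class level without a separate lookup table.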

Coding the Signal of the Message.

We also used the transcripts to analyze the signal of the conversation. Here, we reviewed all sentences uttered by the informed speaker at each chain position and labeled each of them as positive, negative, or neutral. Sentences that highlighted the suspected dangers of triclosan or expressed a negative judgment about it received a negative label, whereas sentences suggesting that the use of triclosan is safe or well controlled received a positive label. All remaining sentences were labeled neutral. This procedure was conducted by two independent coders, who received the same instruction sheets (SI Materials and Methods), and we used the mean of their two responses. We assessed the reliability of the coding by comparing the numbers of positive and negative statements that each coder identified in the same transmission. In 56% of the cases, the two coders reported identical numbers; in 94% of the cases, the numbers differed by at most two.
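The reliability figures above (exact agreement and agreement within two) can be computed as follows. What counts as one "case" is not spelled out in the text; this Python sketch assumes each transmission contributes two cases, its positive count and its negative count. The function names and the example counts are hypothetical.

```python
def flatten(counts: list[tuple[int, int]]) -> list[int]:
    """Turn per-transmission (positive, negative) counts into one list of cases."""
    return [c for pair in counts for c in pair]


def agreement(coder_a: list[tuple[int, int]], coder_b: list[tuple[int, int]],
              tolerance: int = 2) -> tuple[float, float]:
    """Share of cases with identical counts, and share differing by at most `tolerance`."""
    a, b = flatten(coder_a), flatten(coder_b)
    if len(a) != len(b) or not a:
        raise ValueError("both coders must rate the same, non-empty set of transmissions")
    diffs = [abs(x - y) for x, y in zip(a, b)]
    exact = sum(d == 0 for d in diffs) / len(diffs)
    within = sum(d <= tolerance for d in diffs) / len(diffs)
    return exact, within


def mean_counts(coder_a: list[tuple[int, int]],
                coder_b: list[tuple[int, int]]) -> list[tuple[float, float]]:
    """Per-transmission mean of the two coders' (positive, negative) counts."""
    return [((pa + pb) / 2, (na + nb) / 2)
            for (pa, na), (pb, nb) in zip(coder_a, coder_b)]


# Made-up counts for three transmissions: (positive, negative) per coder
coder_a = [(5, 2), (3, 4), (0, 1)]
coder_b = [(5, 3), (2, 4), (0, 4)]
exact, within = agreement(coder_a, coder_b)
print(exact, round(within, 2))        # 0.5 0.83
print(mean_counts(coder_a, coder_b))  # [(5.0, 2.5), (2.5, 4.0), (0.0, 2.5)]
```

Reporting both an exact-match rate and a tolerance-based rate, as the article does, distinguishes coders who disagree slightly from coders who disagree substantially.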


Acknowledgments

We thank Eleonora Spanudakis and Mareike Trauernicht for helpful assistance during data collection and data analyses. We are grateful to Christel Fraser for her help in transcribing and translating the audio recordings. We thank Jeanne Gouëllo, Isaac Moussaïd, Michael Mäs, Jan Lorenz, and Kenny Smith for insightful discussions. We also acknowledge the members of the Center for Adaptive Rationality and the Center for Adaptive Behavior and Cognition at the Max Planck Institute for Human Development for providing valuable feedback during the preparation of this work.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1421883112/-/DCSupplemental.

References

1. Slovic P. The Perception of Risk. Earthscan Publications; London: 2000.

2. Slovic P. Perception of risk. Science. 1987;236(4799):280–285. doi: 10.1126/science.3563507.

3. Funk S, Gilad E, Watkins C, Jansen VA. The spread of awareness and its impact on epidemic outbreaks. Proc Natl Acad Sci USA. 2009;106(16):6872–6877. doi: 10.1073/pnas.0810762106.

4. Huang L, et al. Effect of the Fukushima nuclear accident on the risk perception of residents near a nuclear power plant in China. Proc Natl Acad Sci USA. 2013;110(49):19742–19747. doi: 10.1073/pnas.1313825110.

5. Loewenstein GF, Weber EU, Hsee CK, Welch N. Risk as feelings. Psychol Bull. 2001;127(2):267–286. doi: 10.1037/0033-2909.127.2.267.

6. Pachur T, Hertwig R, Steinmann F. How do people judge risks: Availability heuristic, affect heuristic, or both? J Exp Psychol Appl. 2012;18(3):314–330. doi: 10.1037/a0028279.

7. Slovic P, Peters E. Risk perception and affect. Curr Dir Psychol Sci. 2006;15(6):322–325.

8. Fischhoff B, Brewer NT, Downs JT. Communicating Risks and Benefits: An Evidence-Based User’s Guide. US Food and Drug Administration; Silver Spring, MD: 2011.

9. Gigerenzer G, Gaissmaier W, Kurz-Milcke E, Schwartz L, Woloshin S. Helping doctors and patients make sense of health statistics. Psychol Sci Public Interest. 2007;8(2):53–96. doi: 10.1111/j.1539-6053.2008.00033.x.

10. Faria J, Krause S, Krause J. Collective behavior in road crossing pedestrians: The role of social information. Behav Ecol. 2010;21(6):1236–1242.

11. Salathé M, Vu D, Khandelwal S, Hunter D. The dynamics of health behavior sentiments on a large online social network. European Physical Journal Data Science. 2013;2:4.

12. Christakis NA, Fowler JH. The spread of obesity in a large social network over 32 years. N Engl J Med. 2007;357(4):370–379. doi: 10.1056/NEJMsa066082.

13. Christakis NA, Fowler JH. The collective dynamics of smoking in a large social network. N Engl J Med. 2008;358(21):2249–2258. doi: 10.1056/NEJMsa0706154.

14. Centola D. The spread of behavior in an online social network experiment. Science. 2010;329(5996):1194–1197. doi: 10.1126/science.1185231.

15. Scherer CW, Cho H. A social network contagion theory of risk perception. Risk Anal. 2003;23(2):261–267. doi: 10.1111/1539-6924.00306.

16. Binder AR, Scheufele DA, Brossard D, Gunther AC. Interpersonal amplification of risk? Citizen discussions and their impact on perceptions of risks and benefits of a biological research facility. Risk Anal. 2011;31(2):324–334. doi: 10.1111/j.1539-6924.2010.01516.x.

17. Kramer AD, Guillory JE, Hancock JT. Experimental evidence of massive-scale emotional contagion through social networks. Proc Natl Acad Sci USA. 2014;111(24):8788–8790. doi: 10.1073/pnas.1320040111.

18. Lorenz J, Rauhut H, Schweitzer F, Helbing D. How social influence can undermine the wisdom of crowd effect. Proc Natl Acad Sci USA. 2011;108(22):9020–9025. doi: 10.1073/pnas.1008636108.

19. Wu F, Huberman BA. Novelty and collective attention. Proc Natl Acad Sci USA. 2007;104(45):17599–17601. doi: 10.1073/pnas.0704916104.

20. Salganik MJ, Dodds PS, Watts DJ. Experimental study of inequality and unpredictability in an artificial cultural market. Science. 2006;311(5762):854–856. doi: 10.1126/science.1121066.

21. Moussaïd M, Helbing D, Theraulaz G. How simple rules determine pedestrian behavior and crowd disasters. Proc Natl Acad Sci USA. 2011;108(17):6884–6888. doi: 10.1073/pnas.1016507108.

22. Helbing D, et al. Saving human lives: What complexity science and information systems can contribute. J Stat Phys. 2014;158(3):735–781. doi: 10.1007/s10955-014-1024-9.

23. Salathé M, Khandelwal S. Assessing vaccination sentiments with online social media: Implications for infectious disease dynamics and control. PLOS Comput Biol. 2011;7(10):e1002199. doi: 10.1371/journal.pcbi.1002199.

24. Moussaïd M. Opinion formation and the collective dynamics of risk perception. PLoS ONE. 2013;8(12):e84592. doi: 10.1371/journal.pone.0084592.

25. Renn O, Burns W, Kasperson J, Kasperson R, Slovic P. The social amplification of risk: Theoretical foundations and empirical applications. J Soc Issues. 1992;48(4):137–160.

26. Kasperson R, et al. The social amplification of risk: A conceptual framework. Risk Anal. 1988;8(2):177–187.

27. Yueh M-F, et al. The commonly used antimicrobial additive triclosan is a liver tumor promoter. Proc Natl Acad Sci USA. 2014;111(48):17200–17205. doi: 10.1073/pnas.1419119111.

28. Bartlett F. Remembering: A Study in Experimental and Social Psychology. Cambridge Univ Press; Cambridge, UK: 1932.

29. Kempe M, Mesoudi A. Experimental and theoretical models of human cultural evolution. WIREs Cognitive Science. 2014;5(3):317–326. doi: 10.1002/wcs.1288.

30. Griffiths TL, Kalish ML, Lewandowsky S. Theoretical and empirical evidence for the impact of inductive biases on cultural evolution. Philos Trans R Soc Lond B Biol Sci. 2008;363(1509):3503–3514. doi: 10.1098/rstb.2008.0146.

31. Horner V, Whiten A, Flynn E, de Waal FB. Faithful replication of foraging techniques along cultural transmission chains by chimpanzees and children. Proc Natl Acad Sci USA. 2006;103(37):13878–13883. doi: 10.1073/pnas.0606015103.

32. Kirby S, Cornish H, Smith K. Cumulative cultural evolution in the laboratory: An experimental approach to the origins of structure in human language. Proc Natl Acad Sci USA. 2008;105(31):10681–10686. doi: 10.1073/pnas.0707835105.

33. Mesoudi A, Whiten A, Laland KN. Towards a unified science of cultural evolution. Behav Brain Sci. 2006;29(4):329–347, discussion 347–383. doi: 10.1017/S0140525X06009083.

34. Soll JB, Larrick RP. Strategies for revising judgment: How (and how well) people use others’ opinions. J Exp Psychol Learn Mem Cogn. 2009;35(3):780–805. doi: 10.1037/a0015145.

35. Moussaïd M, Kämmer JE, Analytis PP, Neth H. Social influence and the collective dynamics of opinion formation. PLoS ONE. 2013;8(11):e78433. doi: 10.1371/journal.pone.0078433.

36. Festinger L. A Theory of Cognitive Dissonance. Stanford Univ Press; Stanford, CA: 1957.

37. Lorenz J. Continuous opinion dynamics under bounded confidence: A survey. Int J Mod Phys C. 2007;18(12):1819–1838.

38. Watts DJ, Strogatz SH. Collective dynamics of ‘small-world’ networks. Nature. 1998;393(6684):440–442. doi: 10.1038/30918.

39. Crane R, Sornette D. Robust dynamic classes revealed by measuring the response function of a social system. Proc Natl Acad Sci USA. 2008;105(41):15649–15653. doi: 10.1073/pnas.0803685105.

40. Castellano C, Fortunato S, Loreto V. Statistical physics of social dynamics. Rev Mod Phys. 2009;81(2):591–646.

41. Arkes HR, Gaissmaier W. Psychological research and the prostate-cancer screening controversy. Psychol Sci. 2012;23(6):547–553. doi: 10.1177/0956797612437428.
