Abstract

It has been shown that injecting a chaotic sequence into the weight update during neural network training makes the chaos injection-based gradient method (CIBGM) superior to the standard backpropagation algorithm. This paper presents a theoretical convergence analysis of CIBGM for training feedforward neural networks. We consider both batch learning and online learning. Under mild conditions, we prove weak convergence, i.e., the training error tends to a constant and the gradient of the error function tends to zero. Moreover, strong convergence of CIBGM is obtained under an additional condition. The theoretical results are substantiated by a simulation example.

Keywords: Feedforward neural networks, Chaos injection-based gradient method, Batch learning, Online learning, Convergence

Introduction

The gradient method (GM) has been widely used as a training algorithm for feedforward neural networks. GM can be implemented in two practical ways: batch learning and online learning (Haykin 2008). The batch learning approach accumulates the weight correction over all the training samples before performing the update, whereas the online learning approach updates the network weights immediately after each training sample is fed. Though GM is widely used in the neural network field, it suffers from slow learning and from getting trapped in local minima. To overcome these problems, many heuristic improvements have been proposed, such as adding a penalty term to the error function (Karnin 1990), adding a momentum term to the weight update (Zhang et al. 2006), and injecting noise into the learning procedure (Sum et al. 2012a, b; Ho et al. 2010). Other nonlinear optimization algorithms such as the Newton method (Osowski et al. 1996), the conjugate-gradient method (Charalambous 1992), extended Kalman filtering (Iiguni et al. 1992), and the Levenberg–Marquardt method (Hagan and Menhaj 1994) have also been used for training neural networks. Though these algorithms converge in fewer iterations than GM, they require much more computation per pattern, which makes them less suitable, especially for online learning (Behera et al. 2006). Thus, the gradient method remains attractive because of its simplicity and ease of implementation.

As convergence is a precondition for the practical use of a learning algorithm, the convergence analysis of GM and its various modifications has attracted many researchers in the neural network field (Fine and Mukherjee 1999; Wu et al. 2005, 2011; Wang et al. 2011; Shao and Zheng 2011; Zhang et al. 2007, 2008, 2009, 2012, 2014; Fan et al. 2014; Yu and Chen 2012). Recently, Sum, Leung and Ho theoretically investigated the convergence of noise injection-based online gradient methods (NIBOGM) in Sum et al. (2012a, b) and Ho et al. (2010), where the noises are independent zero-mean Gaussian random variables. For the stability of noise-induced neural systems and the effects of noise on neural networks, we refer to Wu et al. (2013), Zheng et al. (2014) and Guo (2011). Besides independent and identically distributed (i.i.d.) noise, chaos noise is also widely used and has been shown to be effective (Ahmed et al. 2011; Uwate et al. 2004) when injected into the gradient training process of feedforward neural networks. Chaos injection enhances the resemblance to biological systems (Li and Nara 2008; Yoshida et al. 2010), and the dynamic variation that it introduces facilitates escaping from local minima and thus improves the convergence (Ahmed et al. 2011). However, as the chaos is not an i.i.d. variable, the existing convergence results and the corresponding analysis methods for noise injection-based online gradient methods cannot be directly applied to the chaos injection-based gradient methods (CIBGM).

Motivated by the above issues, this paper analyzes the convergence of CIBGM theoretically, covering both batch learning and online learning. Weak convergence and strong convergence of the algorithms are established. The online learning considered in this paper is the case where the training samples are fed into the network in a fixed sequence, which is also called cyclic learning in the literature (Heskes and Wiegerinck 1996). Thus, in contrast to the convergence results for NIBOGM (Sum et al. 2012a, b; Ho et al. 2010), where the training samples are fed into the network in a totally random sequence, our results are deterministic in nature.

The remainder of this paper is organized as follows. The network structure and CIBGM are described in “Network structure and chaos injection-based gradient method” section. “Convergence results” section presents some assumptions and our main theorems. The detailed proof of the theorems is given in “Proofs” section. In “Simulation results” section, we use a simulation example to illustrate the theoretical analysis. We conclude the paper in “Conclusion” section.

Network structure and chaos injection-based gradient method

In this section, we first introduce the network structure, which is a typical three-layer neural network. Then we describe the chaos injection-based batch gradient method and the chaos injection-based online gradient method.

Network structure

Consider a three-layer network consisting of p input nodes, q hidden nodes, and 1 output node. Let be the weight vector between all the hidden units and the output unit, and be the weight vector between all the input units and the hidden unit . To simplify the presentation, we write all the weight parameters in a compact form, i.e., and we define a matrix .

Given activation functions for the hidden layer and output layer, respectively, we define a vector function for . For an input , the output vector of the hidden layer can be written as and the final output of the network can be written as

| 1 |

where represents the inner product between the two vectors and .
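The forward pass described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the names `w0`, `V`, `g`, `f` and the choice of a logistic sigmoid for both activations are assumptions made here, since the original symbols are not shown.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid, used here only as a stand-in activation."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(w0, V, x, g=sigmoid, f=sigmoid):
    """Output of a p-q-1 network (all argument names are hypothetical).

    w0 : (q,)   weights between the hidden units and the output unit
    V  : (q, p) matrix whose rows are the input-to-hidden weight vectors
    x  : (p,)   input vector
    """
    hidden = g(V @ x)        # hidden-layer output, shape (q,)
    return f(w0 @ hidden)    # inner product followed by the output activation

rng = np.random.default_rng(0)          # arbitrary seed
p, q = 4, 3                             # small sizes chosen for illustration
w0 = rng.uniform(-0.5, 0.5, size=q)
V = rng.uniform(-0.5, 0.5, size=(q, p))
x = rng.uniform(size=p)
y = forward(w0, V, x)                   # scalar network output
```

Stacking the hidden weight vectors as rows of one matrix keeps the hidden layer a single matrix-vector product, which is the compact form the text refers to.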

Chaos injection-based batch gradient method

Suppose that is a given set of training samples. The aim of the network training is to find the appropriate network weights that can minimize the error function

| 2 |

where .

The gradient of the error function is given by

| 3 |

with

| 4a |

| 4b |

Starting from an arbitrary initial value , the chaos injection-based batch gradient method updates the weights iteratively by

| 5 |

where is the learning rate, A is a positive parameter, , and

| 6 |

is the logistic map (Verhulst equation), which is highly sensitive to the initial value and the parameter α. For suitable choices of the initial value and of α, the logistic map produces a chaotic time series.
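The sensitivity to the initial value can be seen in a short sketch. It uses the standard logistic recursion x_{k+1} = α x_k (1 − x_k); the values `alpha = 4.0` and `x0 = 0.3` are illustrative assumptions, not the values used in the paper.

```python
def logistic_map(x0, alpha, n):
    """Return [x_0, ..., x_n] with x_{k+1} = alpha * x_k * (1 - x_k)."""
    xs = [x0]
    for _ in range(n):
        xs.append(alpha * xs[-1] * (1.0 - xs[-1]))
    return xs

# Two orbits whose starting points differ by 1e-10 (assumed values).
a = logistic_map(0.3, 4.0, 50)
b = logistic_map(0.3 + 1e-10, 4.0, 50)

# Largest separation between the two orbits over the first 50 steps.
gap = max(abs(u - v) for u, v in zip(a, b))
```

For a non-chaotic parameter choice the two orbits would stay close; in the chaotic regime the tiny initial difference grows until the orbits are unrelated.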

Chaos injection-based online gradient method

The batch gradient method given in (5) updates the weights only after all the training samples have been fed into the network. This can be inefficient when the training set contains a large number of samples. In this case, the online gradient method is preferred.

We consider the case that the training samples are supplied to the network in a fixed order in the training process. Starting from an arbitrary initial value , the chaos injection-based online gradient method updates the weights iteratively by

| 7a |

| 7b |

with

| 8a |

| 8b |

for , where is the learning rate, whose value may be changed after each cycle of the training procedure, A is a positive parameter, and is defined by (6).

Remark 1

During the training process, the injected chaos should be large at the beginning in order to help the gradient method avoid being trapped in a local minimum, and should then become smaller and smaller as the iteration (cycle) proceeds in order to ensure the convergence of the algorithm to a minimum. Thus, in (5) and (8), we use to control the magnitude of the chaos injected. Here p is used to magnify the effect of the chaos in the early training stage, and [as suggested by Assumption (A2) in the next section, ] is for the purpose of diminishing the effect of the injected chaos on the convergence of the algorithm as the iteration (cycle) count increases.
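The two update schemes and the shrinking chaos magnitude can be sketched as below. This is an illustration under stated assumptions, not the update rules (5) and (7) themselves: the additive form of the injected term, the callable `scale(n)` standing in for the magnitude factor, the learning-rate schedule, and the toy least-squares error are all hypothetical choices made here.

```python
import numpy as np

def logistic_step(xi, alpha=4.0):
    """One step of the logistic map xi -> alpha * xi * (1 - xi)."""
    return alpha * xi * (1.0 - xi)

def cibgm_batch(grad_E, w, eta, scale, xi0, n_iter):
    """Batch sketch: one update per iteration using the full gradient."""
    xi = xi0
    for n in range(n_iter):
        xi = logistic_step(xi)
        # Gradient step plus a chaotic perturbation (assumed additive form;
        # the same scalar is added to every weight).
        w = w - eta(n) * grad_E(w) + scale(n) * xi
    return w

def cibgm_online(grad_j, J, w, eta, scale, xi0, n_cycles):
    """Cyclic online sketch: samples visited in the fixed order 0..J-1."""
    xi = xi0
    for n in range(n_cycles):
        for j in range(J):  # learning rate is held fixed within a cycle
            xi = logistic_step(xi)
            w = w - eta(n) * grad_j(w, j) + scale(n) * xi
    return w

# Toy least-squares problem standing in for the network error function.
X = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
o = X @ np.array([1.0, -2.0])
E = lambda w: 0.5 * np.sum((X @ w - o) ** 2)
grad_E = lambda w: X.T @ (X @ w - o)
grad_j = lambda w, j: (X[j] @ w - o[j]) * X[j]

eta = lambda n: 0.3 / (1.0 + 0.01 * n)   # assumed decaying learning rate
scale = lambda n: 1.0 * eta(n) ** 2      # assumed magnitude factor, A = 1

w_batch = cibgm_batch(grad_E, np.zeros(2), eta, scale, 0.3, 2000)
w_online = cibgm_online(grad_j, 3, np.zeros(2), eta, scale, 0.3, 2000)
```

Because the perturbation is tied to the learning rate, it is largest in the first iterations and fades as training proceeds, which is the behavior the remark asks for.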

Convergence results

In this section, we give the convergence results of the CIBGM, covering both the batch learning case (5) and the online learning case (7).

Let be the stationary point set of the error function , and be the projection of onto the (s)th coordinate axis, for . The following assumptions are needed for our convergence results.

(A1) and are Lipschitz continuous on any bounded closed interval;

(A2) ;

(A3) generated by (5) is bounded over ;

(A3′) (or simply denoted by with ) generated by (7) is bounded over ;

(A4) The set does not contain any interior point for every .

Remark 2

Assumption (A1) is satisfied by most of the activation functions, such as sigmoid functions and linear functions. Assumption (A2) is a traditional condition for the convergence analysis of the online gradient method (Sum et al. 2012a, b; Ho et al. 2010). Here we also use this condition in the convergence analysis of the chaos injection-based batch gradient method for the sake of controlling the impact of the chaos on the convergence of the algorithm. Assumption (A3) [or Assumption (A3′)] is a commonly used condition for the convergence analysis of the gradient method in the literature (Wu et al. 2011). In fact, this condition can be easily satisfied by adding a penalty term to the error function (Zhang et al. 2009, 2012). Assumption (A4) is provided to establish the strong convergence.
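As a numerical illustration of the kind of learning-rate condition referred to in Remark 2, the sketch below assumes that (A2) takes the common form used in online-gradient analyses: a positive learning rate whose partial sums diverge while the partial sums of its squares stay bounded. Both this form and the schedule `eta(n) = 1/n` are assumptions made for the example.

```python
import math

def partial_sums(eta, N):
    """Return (sum of eta(n), sum of eta(n)**2) for n = 1..N."""
    s1 = sum(eta(n) for n in range(1, N + 1))
    s2 = sum(eta(n) ** 2 for n in range(1, N + 1))
    return s1, s2

eta = lambda n: 1.0 / n   # hypothetical schedule

for N in (10**2, 10**4, 10**5):
    s1, s2 = partial_sums(eta, N)
    # s1 keeps growing (roughly like log N); s2 stays below pi**2 / 6.
    print(N, round(s1, 3), round(s2, 6))
```

A constant learning rate would fail the second requirement, while a rate such as 1/n² would fail the first, so schedules of this type sit between the two.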

Now we present our convergence results, where we use “” to denote the Euclidean norm of a vector.

Theorem 1

Suppose that the error function is given by (2) and that the weight sequence is generated by the algorithm (5) for any initial value . Assume the conditions (A1)–(A3) are valid. Then there hold the weak convergence results

| 9 |

| 10 |

Moreover, if Assumption (A4) is valid, then there holds the strong convergence, i.e., there exists a point such that

| 11 |

Theorem 2

Suppose that the conditions (A1), (A2) and (A3′) are valid. Then, starting from an arbitrary initial value , the weight sequence defined by (7) satisfies the following weak convergence

| 12 |

| 13 |

Moreover, if Assumption (A4) is valid, then there holds the strong convergence: there exists such that

| 14 |

Proofs

In this section, we first list several lemmas from the literature, and then give the proofs of Theorems 1 and 2 in the “Proof of Theorem 1” and “Proof of Theorem 2” subsections, respectively.

Lemma 1

(See Lemma 1 in Bertsekas and Tsitsiklis 2000) Let and be three sequences such that is nonnegative for all n. Assume that

and that the series is convergent. Then either or else converges to a finite value and .

Lemma 2

(See Lemma 4.2 in Wu et al. 2011) Suppose that the learning rate satisfies Assumption (A2) and that the sequence satisfies and for some positive constants β and μ. Then there holds .

Lemma 3

(See Lemma 5.3 in Wang et al. 2011) Let be continuous for a bounded closed region, and . The projection of on each coordinate axis does not contain any interior point. Let the sequence satisfy:

(i) ;

(ii) .

Then, there exists a unique such that

Proof of Theorem 1

Lemma 4

Suppose the conditions (A1) and (A3) are valid. Then satisfies a Lipschitz condition, that is, there exists a positive constant L such that

| 15 |

In particular, for , there holds

| 16 |

Proof

The proof of this lemma is similar to Lemma 2 of Zhang et al. (2012) and thus omitted.

Proof of (9)

Given that and , it is easy to see

| 17 |

By the differential mean value theorem, there exists a constant , such that

| 18 |

where the last inequality is due to (16). Considering (5) and (18), we have

| 19 |

Using (17) and the inequality , the second term on the right hand side of (19) can be evaluated

| 20 |

Using inequality , the third term on the right hand side of (19) can be evaluated

| 21 |

| 22 |

By Assumptions (A1) and (A3), there is a constant such that for all

| 23 |

Thus, there exists a positive constant , such that

| 24 |

Combining , and according to Lemma 1, we can conclude that there exists a constant such that

| 25 |

and

| 26 |

This completes the proof of (9).

Proof of (10)

Using (5), (15) and (23), we have

| 27 |

where . Thus, by (26), (27), and Lemma 2, we conclude

Proof of (11)

Obviously is a continuous function under the Assumption (A1). Using (5), (17) and (23), we have

| 28 |

Furthermore, the Assumption (A4) is valid. Thus, applying Lemma 3, there exists a unique such that .

Proof of Theorem 2

Let the sequence be generated by (7). For brevity, we introduce the following notations:

| 29a |

| 29b |

| 29c |

| 29d |

for

Lemma 5

(See Lemma 4.1 in Wu et al. 2011) Let be a function defined on a bounded closed interval such that is Lipschitz continuous with Lipschitz constant. Then, is differentiable almost everywhere in and

| 30 |

Moreover, there exists a constant such that

| 31 |

Lemma 6

Suppose the conditions (A1) and (A3′) are valid, and the sequence is generated by (7). Then there are such that

| 32 |

| 33 |

| 34 |

| 35 |

where

Proof

According to Assumption (A3′), we can define a constant . Then we have

| 36 |

Accordingly, there exist two positive constants and such that

| 37 |

Thus we have

| 38 |

and

| 39 |

Then, there is a positive constant such that

| 40 |

Using (8), (17), (29c), (38) and (40), we have

| 41 |

Similarly, for , we have

Let , then we have for .

Using (29c), (29d), (33) and the mean value theorem, we have

| 42 |

where and .

As Assumptions (A1) and (A3′) are valid, it is easy to see that there exists a constant such that for any and , there holds

| 43 |

Combining (8), (29), (32)–(34) and (43), we have

| 44 |

where . Similarly, we can show the existence of a constant such that

| 45 |

Let , then we have for .

Lemma 7

Let the sequence be generated by (7). Under assumptions (A1) and , there holds

| 46 |

where is a positive constant.

Proof

By virtue of Assumption (A1) and Lemma 5, we know that is integrable almost everywhere on . Then, using Taylor’s mean value theorem we arrive at

| 47 |

By virtue of (8), (29), (47) and Lemma 5, there is a constant such that

| 48 |

where

| 49 |

and

| 50 |

Summing (48) for from 1 to J, and noting (2), (4a, 4b), (29a), (49) and (50), we have

| 51 |

where

| 52 |

Considering (17) and (32)–(40), it is easy to see that there exists a constant such that

| 53 |

Thus, the desired estimate is deduced by combining (51) and (53).

Now we are ready to prove the convergence theorem.

Proof of (12)

According to Lemmas 1 and 7, there exists a constant such that or . Recall , then we have (12).

Proof of (13)

Using Lemmas 1 and 7, we have that

| 54 |

Similarly to Lemma 4, there exists a Lipschitz constant such that

| 55 |

where is the weight sequence generated by (7) and is a positive integer.

Using (55) and (33), for , and , we have

| 56 |

Combining (54), (56) and Lemma 2, we have

| 57 |

Since

| 58 |

we have for .

Proof of (14)

The proof is almost the same as the proof of (11) and thus it is omitted here.

Simulation results

In this section, we illustrate the convergence behavior of the CIBGM using the sonar signal classification problem.

Sonar signal classification is one of the benchmark problems in the neural network field. Our task is to train a network to discriminate between sonar returns bounced off a metal cylinder and those bounced off a roughly cylindrical rock. We obtained the data set from the UCI machine learning repository (http://archive.ics.uci.edu/ml/); it comprises 208 samples, each with 60 components. In this simulation, we randomly choose 164 samples for training and 44 samples for testing.
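The random 164/44 split can be sketched as follows. The arrays `X` and `y` are random placeholders with the stated dimensions rather than the actual sonar data, and the seed and label encoding are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(seed=0)        # arbitrary seed for reproducibility
n_samples, n_features = 208, 60

X = rng.random((n_samples, n_features))    # placeholder for the sonar features
y = rng.integers(0, 2, size=n_samples)     # placeholder labels (assumed 0 = rock, 1 = cylinder)

# Shuffle the sample indices once, then cut at 164.
perm = rng.permutation(n_samples)
train_idx, test_idx = perm[:164], perm[164:]

X_train, y_train = X[train_idx], y[train_idx]
X_test, y_test = X[test_idx], y[test_idx]
```

Splitting on a single permutation of the indices guarantees that the training and test sets are disjoint and together cover all 208 samples.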

The network used for training has a 60–25–2 structure. The activation functions for both the hidden and output layers are set to be in MATLAB, which is a commonly used sigmoid function. We choose the initial weights to be random numbers in the interval .

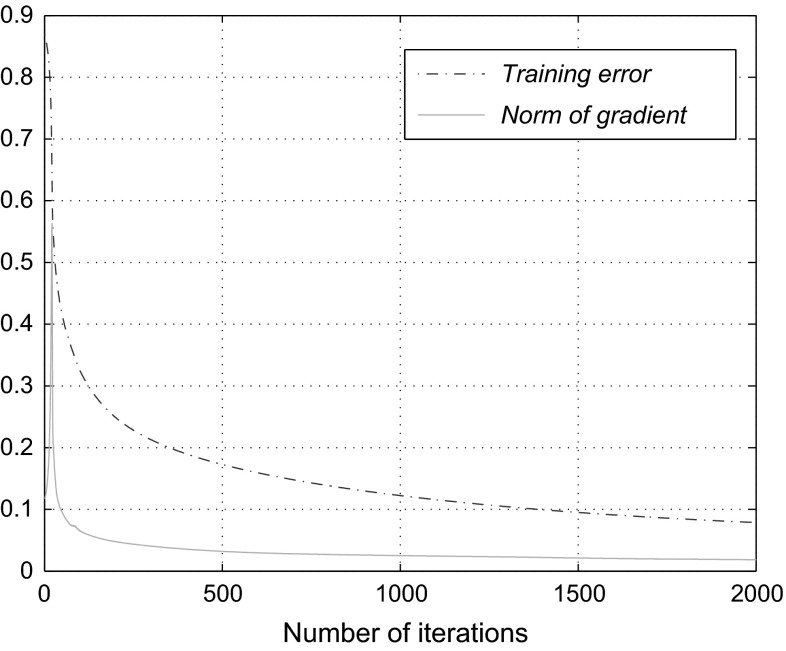

The simulation is carried out by choosing the parameters and . We set the learning rate if and if , which satisfies Assumption . Here 164 is the number of training samples. The maximum number of training iterations (cycles) is 2,000. The learning curves for the chaos injection-based batch gradient method are depicted in Fig. 1, which shows that the training error tends to a constant and the gradient of the error function tends to zero. This supports our theoretical analysis. The learning curves for the chaos injection-based online gradient method are almost the same as those in Fig. 1, the only change being that the x-axis label “Number of iterations” should be replaced with “Number of cycles”.

Fig. 1.

Learning curves for chaos injection-based batch gradient method

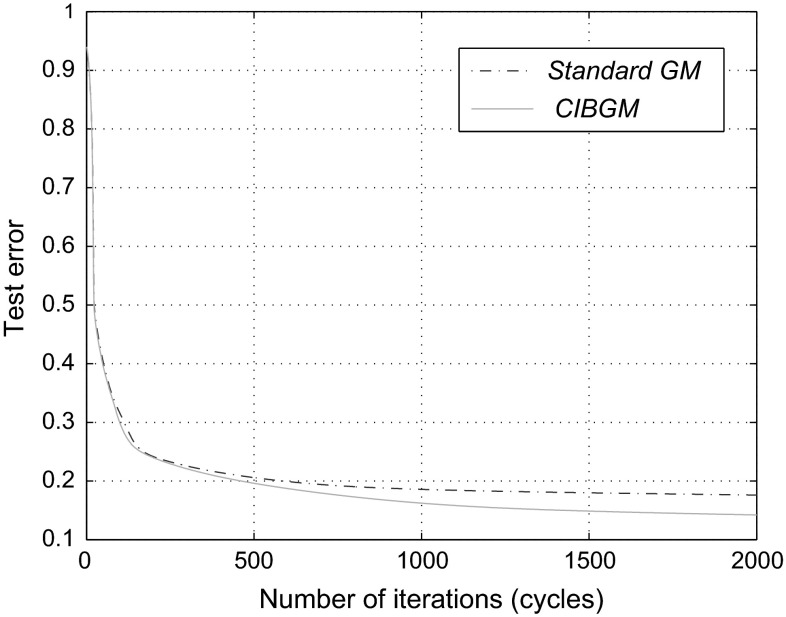

To show the effectiveness of the chaos injection method, we compare the test error curves of CIBGM and the standard GM (with no chaos injected) in Fig. 2. The test error of CIBGM converges faster and settles at a smaller value than that of the standard GM.

Fig. 2.

Test error curves for CIBGM and GM (with no chaos injected)

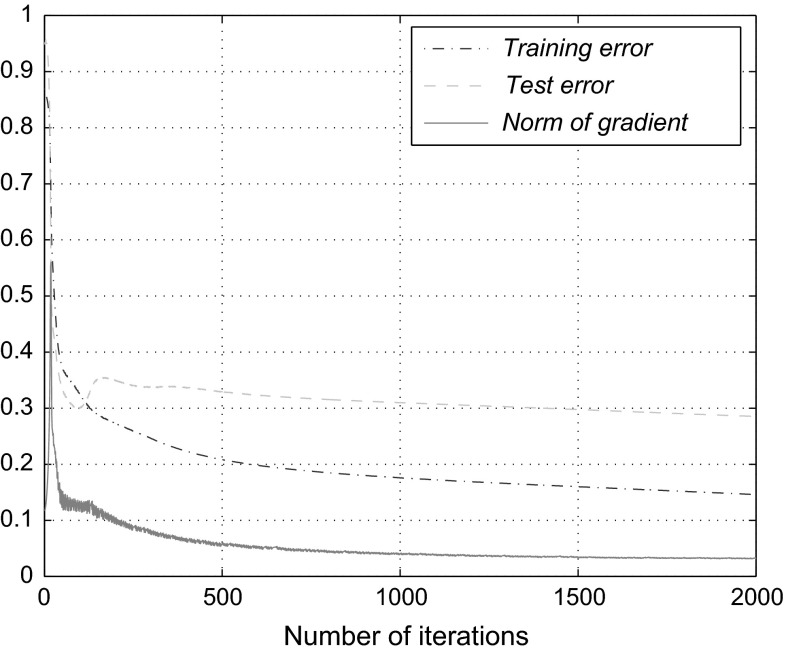

We mention that, though there is no restriction for the parameter A in Theorems 1 and 2, the choice of A is still of great importance. If A is too small, CIBGM will reduce to the standard GM. On the other hand, if A is too large, then the chaos term will dominate the update of the CIBGM especially in the early stage of the training procedure. As a result, the algorithm will converge very slowly and the performance may even be unacceptable. Figure 3 shows the results of the chaos injection-based batch gradient method for . We can find that the algorithm still converges. However, the performance is much worse than that for .

Fig. 3.

Performance for chaos injection-based batch gradient method with

Conclusion

This paper investigates the chaos injection-based gradient method (CIBGM) for feedforward neural networks. Two learning modes, batch learning and online learning, are considered. Under the conditions that the derivatives of the activation functions are Lipschitz continuous on any bounded closed interval and the learning rate is positive and satisfies and , we derive the weak convergence of the CIBGM, that is, the gradient of the error function tends to zero and the error function tends to a constant. The strong convergence is also derived under the assumption that the set does not contain any interior point. The theoretical findings and the effectiveness of the CIBGM are illustrated by a simulation example. Future research includes the study of the convergence of the chaos injection-based stochastic gradient method.

Acknowledgments

This work is partly supported by the National Natural Science Foundation of China (Nos. 61101228, 61301202, 61402071), the China Postdoctoral Science Foundation (No. 2012M520623), and the Research Fund for the Doctoral Program of Higher Education of China (No. 20122304120028).

References

- Ahmed SU, Shahjahan M, Murase K. Injecting chaos in feedforward neural networks. Neural Process Lett. 2011;34:87–100. doi: 10.1007/s11063-011-9185-x.

- Behera L, Kumar S, Patnaik A. On adaptive learning rate that guarantees convergence in feedforward networks. IEEE Trans Neural Netw. 2006;17(5):1116–1125. doi: 10.1109/TNN.2006.878121.

- Bertsekas DP, Tsitsiklis JN. Gradient convergence in gradient methods with errors. SIAM J Optim. 2000;10(3):627–642. doi: 10.1137/S1052623497331063.

- Charalambous C. Conjugate gradient algorithm for efficient training of artificial neural networks. Inst Electr Eng Proc. 1992;139:301–310.

- Fan QW, Zurada JM, Wu W. Convergence of online gradient method for feedforward neural networks with smoothing L1/2 regularization penalty. Neurocomputing. 2014;131:208–216. doi: 10.1016/j.neucom.2013.10.023.

- Fine TL, Mukherjee S. Parameter convergence and learning curves for neural networks. Neural Comput. 1999;11:747–769. doi: 10.1162/089976699300016647.

- Guo DQ. Inhibition of rhythmic spiking by colored noise in neural systems. Cogn Neurodyn. 2011;5(3):293–300. doi: 10.1007/s11571-011-9160-2.

- Hagan MT, Menhaj MB. Training feedforward networks with Marquardt algorithm. IEEE Trans Neural Netw. 1994;5(6):989–993. doi: 10.1109/72.329697.

- Haykin S. Neural networks and learning machines. New Jersey: Prentice Hall; 2008.

- Heskes T, Wiegerinck W. A theoretical comparison of batch-mode, on-line, cyclic, and almost-cyclic learning. IEEE Trans Neural Netw. 1996;7(4):919–925. doi: 10.1109/72.508935.

- Ho KI, Leung CS, Sum JP. Convergence and objective functions of some fault/noise-injection-based online learning algorithms for RBF networks. IEEE Trans Neural Netw. 2010;21(6):938–947. doi: 10.1109/TNN.2010.2046179.

- Iiguni Y, Sakai H, Tokumaru H. A real-time learning algorithm for a multilayered neural network based on extended Kalman filter. IEEE Trans Signal Process. 1992;40(4):959–966. doi: 10.1109/78.127966.

- Karnin ED. A simple procedure for pruning back-propagation trained neural networks. IEEE Trans Neural Netw. 1990;1:239–242. doi: 10.1109/72.80236.

- Li Y, Nara S. Novel tracking function of moving target using chaotic dynamics in a recurrent neural network model. Cogn Neurodyn. 2008;2(1):39–48. doi: 10.1007/s11571-007-9029-6.

- Osowski S, Bojarczak P, Stodolski M. Fast second order learning algorithm for feedforward multilayer neural network and its applications. Neural Netw. 1996;9(9):1583–1596. doi: 10.1016/S0893-6080(96)00029-9.

- Shao HM, Zheng GF. Boundedness and convergence of online gradient method with penalty and momentum. Neurocomputing. 2011;74:765–770. doi: 10.1016/j.neucom.2010.10.005.

- Sum JP, Leung CS, Ho KI. Convergence analyses on on-line weight noise injection-based training algorithms for MLPs. IEEE Trans Neural Netw Learn Syst. 2012;23(11):1827–1840. doi: 10.1109/TNNLS.2012.2210243.

- Sum JP, Leung CS, Ho KI. On-line node fault injection training algorithm for MLP networks: objective function and convergence analysis. IEEE Trans Neural Netw Learn Syst. 2012;23(2):211–222. doi: 10.1109/TNNLS.2011.2178477.

- Uwate Y, Nishio Y, Ueta T, Kawabe T, Ikeguchi T. Performance of chaos and burst noises injected to the Hopfield NN for quadratic assignment problems. IEICE Trans Fundam. 2004;E87–A(4):937–943.

- Wang J, Wu W, Zurada JM. Deterministic convergence of conjugate gradient method for feedforward neural networks. Neurocomputing. 2011;74:2368–2376. doi: 10.1016/j.neucom.2011.03.016.

- Wu W, Feng G, Li Z, Xu Y. Deterministic convergence of an online gradient method for BP neural networks. IEEE Trans Neural Netw. 2005;16:533–540. doi: 10.1109/TNN.2005.844903.

- Wu W, Wang J, Chen MS, Li ZX. Convergence analysis on online gradient method for BP neural networks. Neural Netw. 2011;24(1):91–98. doi: 10.1016/j.neunet.2010.09.007.

- Wu Y, Li JJ, Liu SB, Pang JZ, Du MM, Lin P. Noise-induced spatiotemporal patterns in Hodgkin–Huxley neuronal network. Cogn Neurodyn. 2013;7(5):431–440. doi: 10.1007/s11571-013-9245-1.

- Yoshida H, Kurata S, Li Y, Nara S. Chaotic neural network applied to two-dimensional motion control. Cogn Neurodyn. 2010;4(1):69–80. doi: 10.1007/s11571-009-9101-5.

- Yu X, Chen QF. Convergence of gradient method with penalty for Ridge Polynomial neural network. Neurocomputing. 2012;97:405–409. doi: 10.1016/j.neucom.2012.05.022.

- Zhang NM, Wu W, Zheng GF. Convergence of gradient method with momentum for two-layer feedforward neural networks. IEEE Trans Neural Netw. 2006;17(2):522–525. doi: 10.1109/TNN.2005.863460.

- Zhang C, Wu W, Xiong Y. Convergence analysis of batch gradient algorithm for three classes of sigma–pi neural networks. Neural Process Lett. 2007;26:177–180.

- Zhang C, Wu W, Chen XH, Xiong Y. Convergence of BP algorithm for product unit neural networks with exponential weights. Neurocomputing. 2008;72:513–520. doi: 10.1016/j.neucom.2007.12.004.

- Zhang HS, Wu W, Liu F, Yao MC. Boundedness and convergence of online gradient method with penalty for feedforward neural networks. IEEE Trans Neural Netw. 2009;20(6):1050–1054. doi: 10.1109/TNN.2009.2020848.

- Zhang HS, Wu W, Yao MC. Boundedness and convergence of batch back-propagation algorithm with penalty for feedforward neural networks. Neurocomputing. 2012;89:141–146. doi: 10.1016/j.neucom.2012.02.029.

- Zhang HS, Liu XD, Xu DP, Zhang Y. Convergence analysis of fully complex backpropagation algorithm based on Wirtinger calculus. Cogn Neurodyn. 2014;8(3):261–266. doi: 10.1007/s11571-013-9276-7.

- Zheng YH, Wang QY, Danca MF. Noise induced complexity: patterns and collective phenomena in a small-world neuronal network. Cogn Neurodyn. 2014;8(2):143–149. doi: 10.1007/s11571-013-9257-x.