Abstract

Response Evaluation Criteria in Solid Tumors (RECIST) is a standardized methodology for determining therapeutic response to anticancer therapy using changes in lesion appearance on imaging studies. Many radiologists are now using RECIST in their routine clinical workflow, as part of consultative arrangements, or within dedicated imaging core laboratories. Although basic RECIST methodology is well described in published articles and online resources, inexperienced readers may encounter difficulties with certain nuances and subtleties of RECIST. This article illustrates a set of pitfalls in RECIST assessment considered to be “beyond the basics.” These pitfalls were uncovered during a quality improvement review of a recently established cancer imaging core laboratory staffed by radiologists with limited prior RECIST experience. Pitfalls are presented in four categories: (1) baseline selection of lesions, (2) reassessment of target lesions, (3) reassessment of nontarget lesions, and (4) identification of new lesions. Educational and operational strategies for addressing these pitfalls are suggested. Attention to these pitfalls and strategies may improve the overall quality of RECIST assessments performed by radiologists.

Keywords: Oncology, solid malignancies, antitumor efficacy, RECIST, tumor response assessment

The Response Evaluation Criteria in Solid Tumors (RECIST) (1,2) have become broadly accepted by the oncology community, industry sponsors, and regulatory bodies for assessing the therapeutic efficacy of new anticancer agents. Most clinical trials for solid malignancies use RECIST for response assessment. RECIST guidelines, maintained by the European Organization for Research and Treatment of Cancer, specify how to identify and measure lesions, how to evaluate disease burden at follow-up imaging, and how to place patients into response categories at successive time points during a trial. Trial-level composite end points derived from RECIST, including overall response rate, time to progression, and progression-free survival (PFS), form the basic vocabulary for reporting the efficacy of investigational new agents and for comparing the efficacy of different treatment regimens.

Although RECIST was developed in the oncology community, many radiologists are now involved with performing RECIST assessments, especially in the academic setting (3,4). Radiologists may use RECIST in different contexts, including within their routine clinical workflow, as part of consultative arrangements with local research colleagues or industry sponsors, or within dedicated imaging core laboratories. A key objective when using RECIST is to apply the technique in a standardized fashion, adhering as closely as possible to established methodology and thus minimizing interreader variability that can lead to suboptimal reproducibility of results.

Although basic RECIST methodology is described in published articles and online resources (1,2,5), certain nuances and subtleties of the technique may be problematic for inexperienced readers. The purpose of this article is to illustrate a set of RECIST pitfalls considered to be “beyond the basics.” Examples were drawn from a 6-month quality improvement review of a cancer imaging core laboratory established at our institution in 2012; the radiologists staffing this new core laboratory were board-certified and subspecialty trained, but had little to no prior dedicated experience with RECIST methodology. (Please see Appendix for a description of our core laboratory, an overview of our quality improvement review methods, and a downloadable version of our educational materials.) Pitfalls are presented in four categories: (1) baseline selection of lesions, (2) reassessment of target lesions, (3) reassessment of nontarget lesions, and (4) identification of new lesions. These categories are intended to replicate the major evaluative steps established by RECIST for an individual patient on a clinical trial. For each pitfall, we suggest educational or operational support strategies for minimizing or preventing errors.

Baseline Selection of Lesions

Pitfalls in this category include (1) inappropriate selection of a target lesion when it is not unequivocally a metastasis, (2) selection of too many target lesions at baseline, (3) inappropriate selection of a small lesion as a target lesion, and (4) inappropriate selection of a target lesion from within a radiation field.

Inappropriate Selection of Target Lesion When Not Unequivocally a Metastasis

Because inadvertently selecting a benign lesion as a target lesion can lead to a false assessment of response or stability over time, it is crucial that only unequivocally metastatic lesions be chosen as target lesions (Fig 1). Educational materials presented to new RECIST readers should emphasize this point. Prior imaging studies may help confirm interval growth of an otherwise indeterminate lesion, although such studies are not always available (especially in a centralized review setting). Review of clinic notes, operative reports, or surgical pathology reports may help confirm sites of recent biopsy or surgery, thus preventing the inadvertent selection of a postoperative seroma or granulation tissue as a target lesion.

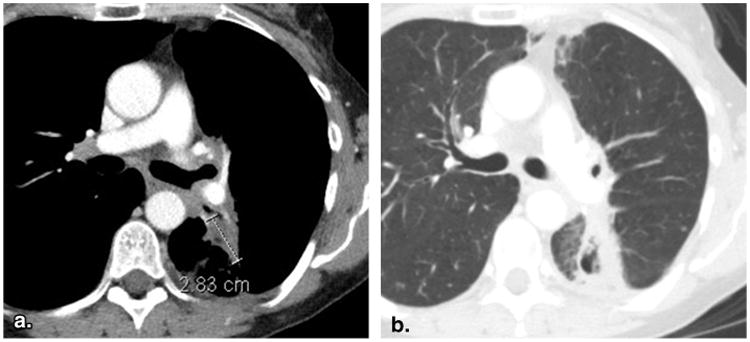

Figure 1.

Selection of a target lesion when not unequivocally a metastasis (61-year-old female with breast cancer). Contrast-enhanced computed tomography at baseline (a) depicts a low-attenuation lesion in the right breast that was designated as a target lesion, yet represented postsurgical granulation tissue/scarring rather than a metastasis. Lesion shrinkage on 8-week (time point #2) follow-up imaging (b) therefore represented a false-positive “response.”

Selection of Too Many Target Lesions at Baseline

RECIST 1.1 lowered the maximum number of target lesions to five, with no more than two from any given organ system. Although this rule is fairly straightforward for parenchymal lesions, it causes some confusion with regard to assessing lymph nodes. RECIST 1.1 considers all lymph nodes together as a single organ system and thus specifies a maximum total of two lymph nodes as target lesions (5). It is incorrect, therefore, to designate more than two lymph nodes as target lesions, even if the lesions are arising in different nodal basins. Educational materials should include this point and the suggestion from the European Organization for Research and Treatment of Cancer that readers consider selecting lymph nodes from two different nodal basins if there are multiple nodal basins involved. Electronic case report forms (eCRFs) may also be configured to disallow the selection of more than two lymph nodes as target lesions.
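An eCRF validation rule of the kind described above might look like the following minimal Python sketch. The `TargetLesion` record and `selection_errors` function are hypothetical names, not part of any eCRF product; the essential step is that every lymph node is mapped to a single “lymph node” organ before the per-organ limit is applied, regardless of nodal basin.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List

MAX_TARGETS_TOTAL = 5      # RECIST 1.1: at most five target lesions in total
MAX_TARGETS_PER_ORGAN = 2  # RECIST 1.1: at most two target lesions per organ


@dataclass
class TargetLesion:
    lesion_id: str
    site: str            # organ, or nodal basin for lymph nodes (e.g. "axillary")
    is_lymph_node: bool


def organ_key(lesion: TargetLesion) -> str:
    # All lymph nodes count as one organ system, whatever their nodal basin.
    return "lymph node" if lesion.is_lymph_node else lesion.site


def selection_errors(targets: List[TargetLesion]) -> List[str]:
    """Return rule violations for a proposed set of baseline target lesions."""
    errors = []
    if len(targets) > MAX_TARGETS_TOTAL:
        errors.append(f"{len(targets)} target lesions selected; maximum is {MAX_TARGETS_TOTAL}")
    for organ, n in sorted(Counter(organ_key(t) for t in targets).items()):
        if n > MAX_TARGETS_PER_ORGAN:
            errors.append(f"{n} target lesions in {organ}; maximum is {MAX_TARGETS_PER_ORGAN}")
    return errors


# Three nodes from three different basins still violate the two-node limit.
proposed = [
    TargetLesion("T1", "mediastinal", True),
    TargetLesion("T2", "axillary", True),
    TargetLesion("T3", "retroperitoneal", True),
    TargetLesion("T4", "liver", False),
]
print(selection_errors(proposed))  # ['3 target lesions in lymph node; maximum is 2']
```

Choosing nodes from two different basins, as the EORTC suggests, remains a reader decision; the check only enforces the hard limit.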

Inappropriate Selection of Small Lesions as Target Lesions

RECIST 1.1 requires parenchymal target lesions on computed tomography (CT) to measure at least 10 mm in longest axial dimension, assuming a scan slice thickness of 5 mm or less. For scans acquired at a slice thickness greater than 5 mm, target lesions must measure at least twice the slice thickness of the scan to avoid partial volume effects that may confound lesion measurement. In addition, RECIST 1.1 requires a target lesion lymph node to measure at least 15 mm in short axis. These rules should be clearly presented in educational materials for new readers. eCRFs may also be built to disallow target lesion measurements that do not satisfy these criteria.
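The size thresholds above reduce to a simple rule that an eCRF could apply at data entry. The sketch below is illustrative only; the function names are hypothetical, and `size_mm` is assumed to be the short axis for lymph nodes and the longest axial diameter for all other lesions.

```python
LYMPH_NODE_MIN_SHORT_AXIS_MM = 15.0


def min_parenchymal_size_mm(slice_thickness_mm: float) -> float:
    """Minimum longest axial diameter for a non-nodal CT target lesion."""
    # 10 mm when slices are 5 mm or thinner; otherwise twice the slice thickness.
    return 10.0 if slice_thickness_mm <= 5.0 else 2.0 * slice_thickness_mm


def qualifies_as_target(size_mm: float, slice_thickness_mm: float, is_lymph_node: bool) -> bool:
    """size_mm is the short axis for lymph nodes and the longest axial diameter otherwise."""
    if is_lymph_node:
        return size_mm >= LYMPH_NODE_MIN_SHORT_AXIS_MM
    return size_mm >= min_parenchymal_size_mm(slice_thickness_mm)


print(qualifies_as_target(12.0, 5.0, False))  # True
print(qualifies_as_target(12.0, 7.5, False))  # False: 15 mm needed at 7.5 mm slices
print(qualifies_as_target(14.0, 5.0, True))   # False: node short axis below 15 mm
```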

Inappropriate Selection of Target Lesion from within a Radiation Field

RECIST guidelines specify that a previously irradiated lesion should not be designated as a target lesion, because stability of such a lesion over time may be attributable to prior radiation rather than to the agent under investigation (Fig 2). The exception to this rule is a previously irradiated lesion in which progression has since been demonstrated. It is important to note that indirect signs of prior radiation (such as surrounding pulmonary fibrosis with sharp linear margins) may or may not be evident on the images. This pitfall may therefore be best addressed in joint readout sessions with the treating physician in attendance. Such joint readout sessions are difficult to arrange, however, because of scheduling and workflow considerations, and if conducted they risk introducing clinical bias from the physician investigator into the imaging response assessment process. A compromise process might involve the referring physician investigator submitting a list of previously irradiated sites for each patient to the radiologist reader before RECIST data extraction.
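If the referring investigator does supply such a list, a simple cross-check before data extraction could flag proposed target lesions at listed sites. The sketch below assumes, hypothetically, that investigator and reader code anatomic sites with the same vocabulary, so that exact string matching is meaningful; matching sites in practice would likely need more care.

```python
from typing import Dict, List, Set


def flag_irradiated_targets(
    targets: Dict[str, str],
    irradiated_sites: Set[str],
    progressed_after_radiation: Set[str],
) -> List[str]:
    """Return IDs of proposed target lesions that lie at a previously irradiated site.

    targets maps lesion ID -> anatomic site code (hypothetical shared vocabulary).
    Lesions with documented progression after radiation are not flagged.
    """
    return sorted(
        lesion_id
        for lesion_id, site in targets.items()
        if site in irradiated_sites and lesion_id not in progressed_after_radiation
    )


proposed = {"T1": "left upper lobe", "T2": "liver segment 7"}
print(flag_irradiated_targets(proposed, {"left upper lobe"}, set()))  # ['T1']
```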

Figure 2.

Inappropriate selection of a target lesion from within a radiation field (53-year-old female with non-small cell lung cancer). Baseline contrast-enhanced computed tomography of the chest viewed at soft tissue window settings (a) reveals a lesion in the left lung that was designated as a target lesion. However, the same slice viewed at lung window settings (b) demonstrates surrounding linear parenchymal fibrosis characteristic of previous radiation. The previous radiation would disqualify this lesion as a target lesion, unless unequivocal progression after radiation had been previously demonstrated.

Reassessment of Target Lesions

Pitfalls in this category include (1) remeasurement of lesions in a different phase of contrast than at baseline and (2) failure to change the measurement axis with changes in lesion orientation.

Remeasurement of Lesions in a Different Phase of Contrast than Baseline

To ensure accurate comparison over time, lesions should be measured at each follow-up assessment in the same phase of contrast as at baseline, that is, with the same timing of image acquisition relative to the injection of intravenous contrast media (Fig 3). This consideration is especially important given the widespread use of multiphase CT and magnetic resonance imaging protocols, in which images are successively acquired in different phases of contrast distribution. Educational materials should illustrate how performing follow-up measurements in a different phase of contrast than the baseline measurement can produce inaccurate comparisons.

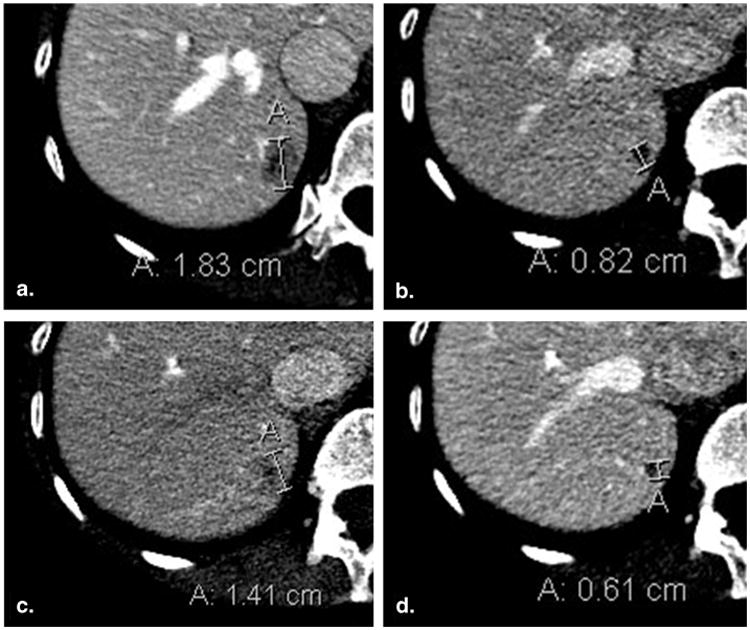

Figure 3.

Lesion remeasurement in a different phase of contrast than baseline (50-year-old female with breast cancer). Baseline contrast-enhanced computed tomography of the abdomen acquired in the portal venous phase (a) demonstrates an 18 mm metastasis in the right hemi-liver. Portal venous phase computed tomography at 8-week (time point #2) follow-up imaging (b) shows that lesion size has decreased to 8 mm. At 16 weeks (time point #3), the lesion was incorrectly evaluated in the arterial phase (c), leading to a measurement of 14 mm and a false assessment of progressive disease. Reevaluation in the portal venous phase (d) yielded a measurement of 6 mm.
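Recording the contrast phase alongside each measurement would let a data-entry tool refuse comparisons across phases. The minimal sketch below uses the measurements from Figure 3 and, as a simplification, treats the liver metastasis as if it were the only target lesion; the `Measurement` type and `percent_change` helper are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measurement:
    size_mm: float
    phase: str  # contrast phase label, e.g. "portal venous" or "arterial"


def percent_change(current: Measurement, reference: Measurement) -> float:
    """Percent change from reference to current, refusing cross-phase comparisons."""
    if current.phase != reference.phase:
        raise ValueError(f"phase mismatch: {current.phase!r} vs {reference.phase!r}")
    return 100.0 * (current.size_mm - reference.size_mm) / reference.size_mm


nadir = Measurement(8.0, "portal venous")   # 8-week follow-up (Fig 3b)
arterial = Measurement(14.0, "arterial")    # 16 weeks, wrong phase (Fig 3c)
portal = Measurement(6.0, "portal venous")  # 16 weeks, correct phase (Fig 3d)

print(percent_change(portal, nadir))  # -25.0
try:
    percent_change(arterial, nadir)
except ValueError as err:
    print(err)  # phase mismatch: 'arterial' vs 'portal venous'
```

Had the arterial-phase 14 mm value been accepted, the change from the 8 mm nadir would have been +75%, the false progression described in the caption.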

Failure to Change Measurement Axis with Changes in Lesion Orientation

The true long axis should be used for target lesion measurement at every time point, even if the spatial orientation of the lesion changes such that there is a shift in the lesion's long axis. Inexperienced readers, in a well-intended but incorrect effort to preserve a “standardized” measurement, may maintain the original measurement axis at successive time points despite changes in lesion orientation (Fig 4). The need to measure in the true long axis should be emphasized in educational materials for new RECIST readers.
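The size of this error can be illustrated with a simple geometric model. The sketch below assumes, purely for illustration, that the lesion is an ellipse on the axial image, so that a caliper placed through its center along a fixed direction measures the chord computed below. The dimensions are hypothetical and are not taken from Figure 4.

```python
import math


def chord_through_center_mm(semi_major_mm: float, semi_minor_mm: float,
                            lesion_angle_deg: float, caliper_angle_deg: float) -> float:
    """Length of a caliper line through the center of an elliptical lesion.

    lesion_angle_deg is the orientation of the ellipse's long axis;
    caliper_angle_deg is the direction along which the reader measures.
    """
    delta = math.radians(caliper_angle_deg - lesion_angle_deg)
    return 2.0 / math.sqrt((math.cos(delta) / semi_major_mm) ** 2
                           + (math.sin(delta) / semi_minor_mm) ** 2)


# Baseline: 20 x 10 mm lesion, long axis at 0 degrees, measured along 0 degrees.
baseline = chord_through_center_mm(10.0, 5.0, 0.0, 0.0)
# Follow-up: lesion has grown to 24 x 10 mm and its long axis now lies at 60 degrees.
true_long_axis = chord_through_center_mm(12.0, 5.0, 60.0, 60.0)
original_axis = chord_through_center_mm(12.0, 5.0, 60.0, 0.0)

print(f"{baseline:.1f} {true_long_axis:.1f} {original_axis:.1f}")  # 20.0 24.0 11.2
```

In this hypothetical case the lesion has grown by 20% (20 to 24 mm), yet keeping the baseline caliper direction yields about 11.2 mm, an apparent decrease of roughly 44%.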

Figure 4.

Failure to change measurement axis with changes in lesion orientation (51-year-old male with metastatic adenoid cystic carcinoma of the tongue base). Baseline contrast-enhanced computed tomography of the chest viewed at lung window settings (a) demonstrates a mass in the right lung, correctly measured along its long axis. At 8-week (time point #2) follow-up imaging (b), the original measurement axis was incorrectly maintained, resulting in the underestimation of true lesion size. Shifting the measurement to the new long axis (c) correctly captures the interval lesion growth.

Reassessment of Nontarget Lesions

Pitfalls in this category include (1) premature assignment of progressive disease (PD) for nontarget lesions, (2) incorrect designation of partial response (PR) for nontarget lesions, (3) comparison to the incorrect prior scan, and (4) failure to assign a designation of complete response (CR) for nontarget lymph nodes falling less than 10 mm.

Premature Assignment of PD for Nontarget Lesions

Assignment of PD for nontarget lesions requires unequivocal worsening; as a general rule of thumb, if a change in a nontarget lesion could conceivably be due to something other than disease progression (eg, a bone lesion becoming more sclerotic because of a treatment response), it should not be designated as PD (Fig 5). This determination should ideally take into account the nature of the tumor and the type of therapy, although the latter information may not be available to a blinded reviewer.

Figure 5.

Incorrect assignment of progressive disease for a nontarget lesion (60-year-old female with breast cancer). Contrast-enhanced computed tomography of the chest viewed at bone window settings demonstrates a vertebral body metastasis that becomes progressively more sclerotic from baseline (a) to 8 weeks (time point #2) (b) and 16 weeks (time point #3) (c). Because this could represent a treatment response rather than worsening disease, it would be inappropriate to assign progressive disease to this lesion.

More problematic is determining when a growing nontarget lesion has advanced to the point that it should be designated as PD (Fig 6). RECIST 1.1 recognizes the subjective nature of this determination and provides guidance for patients with measurable disease and for patients with nontarget lesions only. In both cases, an assignment of nontarget lesion PD requires an increase in overall tumor burden sufficient to merit discontinuation of therapy. (For patients with nontarget lesions only, this increase in overall disease burden would be comparable to a 20% increase in the diameter of a measurable lesion.) These important considerations should be incorporated into educational materials with the understanding that reassessment of nontarget lesions remains somewhat contentious and observer-dependent despite the additional guidance provided in RECIST 1.1.
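The 20% diameter benchmark can be translated into volume, which some readers find easier to judge for nontarget disease. Assuming, for illustration, a roughly spherical lesion whose volume $V$ scales with the cube of its diameter $d$:

$$
\frac{V_1}{V_0} = \left(\frac{d_1}{d_0}\right)^{3} = (1.20)^{3} = 1.728
$$

A 20% increase in diameter therefore corresponds to roughly a 73% increase in volume, which is how RECIST 1.1 itself describes the magnitude of this threshold.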

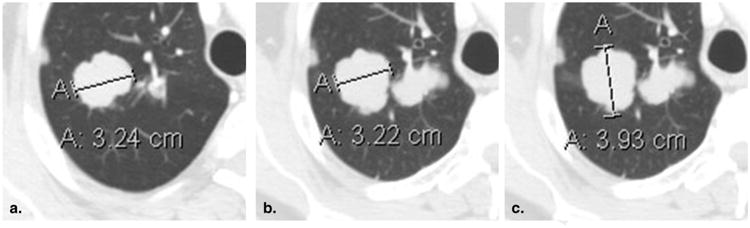

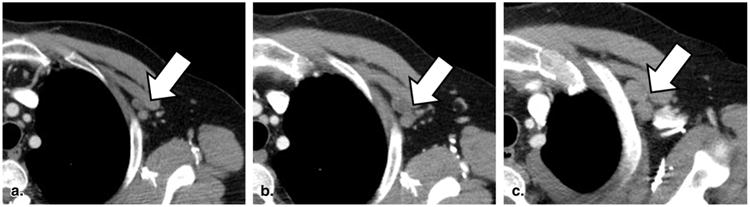

Figure 6.

Equivocal progressive disease for a nontarget lesion (60-year-old female with non-small cell lung cancer). Contrast-enhanced computed tomography of the chest reveals a cluster of left subpectoral lymph nodes that are slowly growing over time (a–c). According to Response Evaluation Criteria in Solid Tumors, an assignment of progressive disease for these nodes must be considered with reference to overall disease burden on the rest of the scan.

Incorrect Designation of PR for Nontarget Lesions

Inexperienced RECIST readers may mistakenly assign a designation of PR to shrinking nontarget lesions. The only acceptable follow-up categorizations for nontarget lesions are CR, PD, and non-CR/non-PD. A shrinking but still visible nontarget lesion should therefore be designated as non-CR/non-PD, with the exception of a nontarget lymph node shrinking to less than 10 mm short axis, which may be designated as CR (see subsequently). eCRFs may be configured such that a designation of PR is prohibited for nontarget lesions.
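An eCRF restriction of this kind can be sketched as an enumeration of the permitted categories, so that an entry such as “PR” is rejected at input. The category labels and function name below are hypothetical.

```python
from enum import Enum


class NonTargetResponse(Enum):
    """The only follow-up categories permitted for nontarget lesions."""
    CR = "CR"
    NON_CR_NON_PD = "Non-CR/Non-PD"
    PD = "PD"


def parse_nontarget_response(entry: str) -> NonTargetResponse:
    """Validate a reader's entry; anything else, including "PR", is rejected."""
    try:
        return NonTargetResponse(entry.strip())
    except ValueError:
        allowed = ", ".join(r.value for r in NonTargetResponse)
        raise ValueError(f"{entry!r} is not a nontarget category; use one of: {allowed}") from None


print(parse_nontarget_response("Non-CR/Non-PD"))  # NonTargetResponse.NON_CR_NON_PD
```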

Comparison to the Incorrect Prior Scan

For growing lesions, both target and nontarget, RECIST stipulates comparison to the scan at which lesion measurements were at their nadir. Slowly worsening disease can be missed if comparisons are always made to the most recent prior scan (Fig 7). Although eCRFs can be configured to calculate percent change in target lesion measurements using the correct comparison time point, readers must themselves select the correct comparison scan when reassessing nontarget lesions. A good rule of thumb is to display the current and nadir images (rather than the current and most recent prior images) side-by-side when performing RECIST data extractions.
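The difference between comparing with the most recent prior scan and comparing with the nadir is easy to show numerically. The sums below are hypothetical (sum of target lesion diameters, in mm), and the nadir is taken as the smallest sum recorded before the current scan, baseline included.

```python
from typing import List


def percent_change(current_mm: float, reference_mm: float) -> float:
    return 100.0 * (current_mm - reference_mm) / reference_mm


def nadir(prior_sums_mm: List[float]) -> float:
    """Smallest sum recorded so far, including the baseline."""
    return min(prior_sums_mm)


prior_sums = [50.0, 40.0, 44.0]  # hypothetical: baseline, 8 weeks, 16 weeks
current = 49.0                   # hypothetical: 24 weeks

print(f"vs baseline:    {percent_change(current, prior_sums[0]):+.1f}%")      # -2.0%
print(f"vs most recent: {percent_change(current, prior_sums[-1]):+.1f}%")     # +11.4%
print(f"vs nadir:       {percent_change(current, nadir(prior_sums)):+.1f}%")  # +22.5%
```

Only the nadir comparison reaches the 20% threshold (and, at 9 mm, the 5 mm absolute threshold) for target lesion PD; the other two comparisons would suggest stability.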

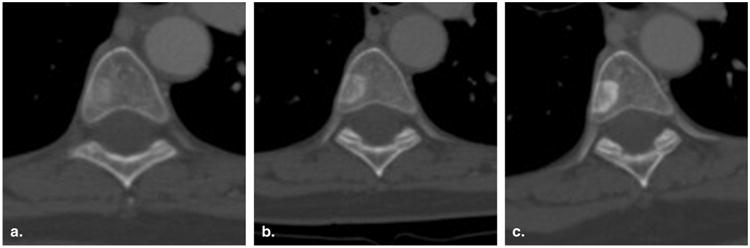

Figure 7.

Comparison to the incorrect prior scan (46-year-old female with non-small cell lung cancer). Baseline contrast-enhanced computed tomography of the chest viewed at lung window settings (a) reveals a small pleural-parenchymal nodule at the left lung apex. The lesion steadily grew at successive follow-up time points (b, c) but was repeatedly designated as stable because comparisons were made to the most recent prior scan. The worsening disease is more evident when comparison is made to the baseline scan. (Measurements provided for reference.)

Failure to Assign CR for Nontarget Lymph Nodes Falling Less than 10 mm

Nontarget lymph nodes shrinking to less than 10 mm in short axis should be designated as CR. This is the exception to the aforementioned rule that shrinking but still visible nontarget lesions should be designated as non-CR/non-PD. Educational materials should address this rule, which is difficult to incorporate into eCRFs because quantitative measurements are typically not entered for nontarget lesions.
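If short-axis measurements were recorded for nontarget nodes anyway (an assumption, since, as noted, such measurements are usually not entered), the rule could be applied mechanically. In the hypothetical sketch below, nontarget CR requires that every non-nodal nontarget lesion has disappeared and every nontarget node measures less than 10 mm in short axis; unequivocal progression remains a reader judgment passed in as a flag. RECIST 1.1 also requires normalization of tumor marker levels for CR, which this sketch omits.

```python
from typing import List

NODE_NORMAL_SHORT_AXIS_MM = 10.0


def nontarget_category(visible_nonnodal_lesions: int,
                       node_short_axes_mm: List[float],
                       unequivocal_progression: bool) -> str:
    """Nontarget category at one time point (simplified; tumor markers ignored)."""
    if unequivocal_progression:  # reader's judgment, not derived from measurements
        return "PD"
    nodes_normal = all(s < NODE_NORMAL_SHORT_AXIS_MM for s in node_short_axes_mm)
    if visible_nonnodal_lesions == 0 and nodes_normal:
        return "CR"  # nodes still visible but < 10 mm short axis count toward CR
    return "Non-CR/Non-PD"


print(nontarget_category(0, [8.0, 9.5], False))   # CR
print(nontarget_category(0, [8.0, 11.0], False))  # Non-CR/Non-PD
```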

Identification of New Lesions

Pitfalls in this category include (1) premature assessment of new disease on anatomic imaging and (2) premature assessment of new disease on 18-F-fluorodeoxyglucose positron emission tomography (FDG-PET) studies.

Premature Assessment of New Disease on Anatomic Imaging

As with selection of target lesions on the baseline scan, assessment of PD on the basis of a new lesion requires that the new lesion be unequivocal (6). Equivocal new lesions may represent true metastases or may arise because of slight differences in scanning technique or changes in imaging modality (eg, from CT to magnetic resonance imaging). When an equivocal new lesion arises (Fig 8), RECIST 1.1 recommends that readers document the new lesion, thus flagging the lesion for close scrutiny at the subsequent time point. If the lesion grows and becomes an unequivocal new metastasis in the future, PD may be assigned retroactively to the time point at which the lesion first appeared. Otherwise the lesion can continue to be followed (Fig 9).
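The flag-then-backdate workflow can be sketched for a single candidate lesion as follows. The status labels and dates are hypothetical, and resetting the flag when an equivocal finding resolves is an assumption made for this sketch rather than a rule stated above.

```python
from datetime import date
from typing import List, Optional, Tuple


def new_lesion_pd_date(observations: List[Tuple[date, str]]) -> Optional[date]:
    """Return the date to which PD is assigned for one candidate new lesion, or None.

    observations: chronological (scan date, status) pairs, where status is
    "absent", "equivocal", or "unequivocal" (hypothetical labels).
    """
    first_seen: Optional[date] = None
    for scan_date, status in observations:
        if status == "absent":
            first_seen = None        # assumption: a resolved equivocal finding is dropped
            continue
        if first_seen is None:
            first_seen = scan_date   # document and flag the lesion when first seen
        if status == "unequivocal":
            return first_seen        # PD assigned retroactively to first appearance
    return None                      # still equivocal: keep following


history = [
    (date(2014, 1, 6), "absent"),        # baseline
    (date(2014, 3, 3), "equivocal"),     # small new nodule flagged
    (date(2014, 4, 28), "unequivocal"),  # nodule has grown
]
print(new_lesion_pd_date(history))  # 2014-03-03
```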

Figure 8.

Premature assessment of new disease before it is unequivocal (79-year-old male with metastatic melanoma). Baseline contrast-enhanced computed tomography of the chest viewed at lung windows (a) reveals no suspicious finding. At follow-up imaging (b), there is a small right lower lobe pulmonary nodule (arrow). Given only these two images, it is unclear whether the nodule is a new metastasis or a lesion that was present at baseline and not visualized because of slice placement. As such, this lesion should not yet be designated as progressive disease, but rather flagged for close attention at the next time point. (On subsequent imaging, not shown, the lesion did continue to grow; a designation of progressive disease was therefore applied retroactively to the time point at which the nodule was first visualized.)

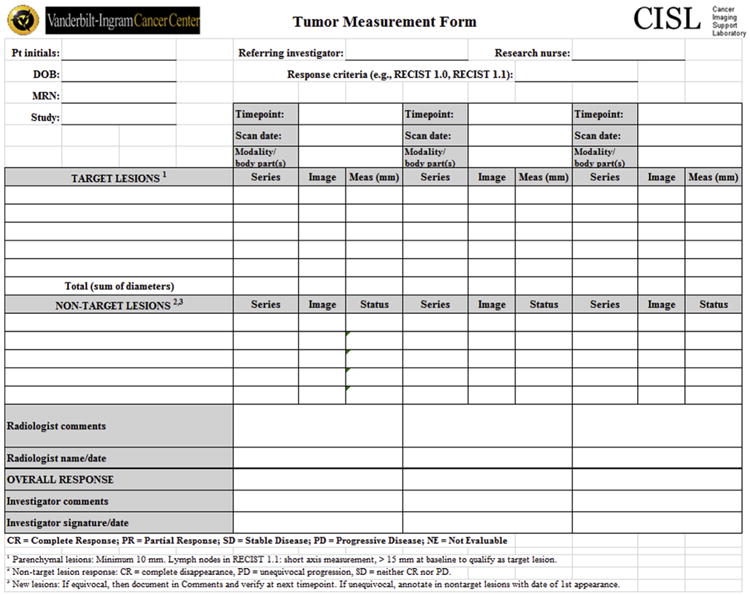

Figure 9.

Standard CISL tumor measurement form for Response Evaluation Criteria in Solid Tumor data extractions.

Premature Assessment of New Disease on FDG-PET Studies

Although FDG-PET may be important in determining best overall response (7), RECIST takes a conservative approach to the use of FDG-PET for response evaluation. FDG-PET may be used to identify a new lesion (with uptake greater than twice that of surrounding tissue suggested as the threshold for lesion positivity), but only if (1) a baseline FDG-PET scan is negative for disease at the site of the new lesion or (2) the positive FDG-PET finding corresponds to a new site of disease visualized on CT. If there is no baseline FDG-PET scan and a new positive FDG-PET finding is not confirmed by anatomic imaging, the patient should remain on trial until the new lesion is confirmed by CT, at which point the designation of PD may be assigned retrospectively to the date of the positive FDG-PET scan.
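These conditions can be summarized as a small decision function. The sketch below is illustrative, with hypothetical names and return labels. One branch goes beyond the text: a site that was already FDG-avid at baseline is treated here, as an assumption, as pre-existing disease rather than a new lesion.

```python
from typing import Optional

PET_POSITIVITY_RATIO = 2.0  # uptake must exceed twice that of surrounding tissue


def fdg_pet_new_lesion(uptake_ratio: float,
                       baseline_pet_positive_at_site: Optional[bool],
                       new_site_on_ct: bool) -> str:
    """Classify a follow-up FDG-PET finding under the FDG-PET rules in the text.

    uptake_ratio: lesion uptake divided by surrounding-tissue uptake.
    baseline_pet_positive_at_site: None if there was no baseline FDG-PET.
    new_site_on_ct: True if CT shows a new site of disease at this location.
    """
    if uptake_ratio <= PET_POSITIVITY_RATIO:
        return "not positive"
    if baseline_pet_positive_at_site:
        return "not new: site already positive at baseline"  # assumption, see lead-in
    if baseline_pet_positive_at_site is False or new_site_on_ct:
        return "PD: new lesion"
    # No baseline FDG-PET and no CT correlate yet.
    return "pending: confirm on CT, then date PD to this FDG-PET scan"


print(fdg_pet_new_lesion(3.1, None, False))  # pending: confirm on CT, then date PD to this FDG-PET scan
```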

Discussion

Although RECIST methodology is intended to promote objective and standardized response assessment, previous studies have revealed evidence of suboptimal reproducibility for both patient-level and trial-level results. In their study of repeated measurements of lung tumors on CT scans, Erasmus et al. (8) found PD misclassification rates of 10% for intraobserver measurements and 30% for interobserver measurements. Dodd et al. (9) presented data showing a 39% discrepancy rate between local and centrally determined PFS times in a phase II trial of bevacizumab in renal cell carcinoma and illustrated how informative censoring can bias trial results toward more favorable PFS. Borradaile et al. (10) analyzed blinded independent central review data from 40 oncology clinical trials and found a 23% discordance rate between two readers when determining best overall response and a 31% discordance rate when determining date of progression. In a subset analysis of breast cancer clinical trials, 77% of discordant cases were thought to be the result of justifiable differences between the two readers (including selection of different target lesions), whereas 23% were attributed to an interpretive error made by one of the readers.

This article seeks to improve the reproducibility of RECIST assessments performed by radiologists by illustrating certain pitfalls that were problematic for inexperienced readers in the first 6 months of our new cancer imaging core laboratory. (Table 1 summarizes and translates these pitfalls into a set of RECIST data extraction “pearls.”) We propose that errors in RECIST assessments are best avoided through a combination of educational and operational support strategies, both of which deserve attention from radiologists who are adding RECIST assessments to their workflow or embarking on new consultative or core laboratory arrangements at their institutions. Educational and feedback interventions have been shown to improve performance with RECIST assessments (11), as would be predicted for a learnable skill requiring both didactic and experiential training. Adherence to best practices may be further enhanced by workflow tools including “smart” eCRFs configured to calculate measurement totals and disallow inappropriate entries, as well as by next-generation informatic applications for lesion tracking and annotation (12) (Table 2).

Table 1. Response Evaluation Criteria in Solid Tumor Data Extraction “Pearls”.

Baseline selection of lesions


CR, complete response; CT, computed tomography; FDG-PET, 18-F-fluorodeoxyglucose positron emission tomography; PD, progressive disease; PR, partial response.

Table 2. Errors in Response Evaluation Criteria in Solid Tumor Methodology Discovered at Quality Improvement Review.

| Error Category | 1st 6 Months n (%) | 2nd 6 Months n (%) | P Value for Change |
|---|---|---|---|
| Baseline selection of lesions | | | |
| Selection of target lesion when not unequivocally a metastasis | 2 (3.8) | 0 (0) | .15 |
| Selection of too many target lesions at baseline | 3 (5.7) | 1 (1.2) | .30 |
| Inappropriate selection of small lesions as target lesions | 3 (5.7) | 1 (1.2) | .30 |
| Inappropriate selection of target lesion from within radiation field | 2 (3.8) | 0 (0) | .15 |
| Reassessment of target lesions | | | |
| Remeasurement of lesions in a different phase of contrast than baseline | 2 (3.9) | 0 (0) | .05 |
| Failure to change measurement axis with changes in lesion orientation | 1 (2.0) | 0 (0) | .23 |
| Reassessment of nontarget lesions | | | |
| Premature assignment of PD for nontarget lesions | 3 (5.9) | 2 (1.2) | .08 |
| Incorrect designation of PR for nontarget lesions | 2 (3.9) | 0 (0) | .05 |
| Comparison to the incorrect prior scan | 2 (3.9) | 0 (0) | .05 |
| Failure to assign CR for nontarget lymph nodes falling less than 10 mm | 1 (2.0) | 1 (0.6) | .40 |
| Identification of new lesions | | | |
| Premature assessment of new disease on anatomic imaging | 2 (3.9) | 0 (0) | .05 |
| Premature assessment of new disease on FDG-PET studies | 1 (2.0) | 0 (0) | .23 |

CR, complete response; FDG-PET, 18-F-fluorodeoxyglucose positron emission tomography; PD, progressive disease; PR, partial response.

Note: Error frequencies for the first category are reported as percentages of baseline assessments. Error frequencies for the second through fourth categories are reported as percentages of follow-up assessments. Differences in error rates were evaluated with Fisher's exact test in Stata 13 (StataCorp LP, College Station, TX).
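For readers who want to check comparisons like those in Table 2 without Stata, the two-sided Fisher's exact test can be sketched in standard-library Python. This is a minimal implementation, not the analysis code used for the table. Because Table 2 reports percentages rather than denominators, the counts in the usage line (about 51 follow-up assessments in the first period and 166 in the second) are back-calculated estimates and should be treated as assumptions.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows are periods (first, second); columns are (errors, error-free assessments).
    """
    row1, col1, n = a + b, a + c, a + b + c + d

    def prob(x: int) -> float:
        # Hypergeometric probability that the top-left cell equals x, margins fixed.
        return comb(col1, x) * comb(n - col1, row1 - x) / comb(n, row1)

    p_observed = prob(a)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    # Sum the probabilities of all tables no more likely than the observed one;
    # the small relative tolerance guards against floating-point ties.
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_observed * (1 + 1e-7))


# 2 errors in ~51 assessments vs 0 errors in ~166 (denominators assumed, see above)
print(round(fisher_exact_two_sided(2, 49, 0, 166), 2))  # 0.05
```

With estimated denominators this is only a consistency check against the .05 reported for several rows, not a reproduction of the original analysis.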

This article also illustrates several difficulties with RECIST that may challenge even experienced RECIST readers. First, despite the emphasis of RECIST on objectivity and standardization, the technique still retains an element of subjectivity, especially for assigning PD to nontarget lesions. RECIST 1.1 provides additional guidance meant to minimize this subjectivity (ie, PD for nontarget lesions requires an increase in overall disease burden sufficient to discontinue therapy, comparable to the 20% increase in the sum of the diameters that would be required for target lesion PD), yet our audit and anecdotal experience both suggest considerable observer dependency for making these determinations.

Second, RECIST methodology may have variable usefulness across different tumor types and different therapeutic agents. This observation has led to the evolution of several modifications to RECIST, intended to tailor the technique to response assessment in specific situations (13–16). Certain elements of standard RECIST methodology may misrepresent the overall clinical picture, for example, with new, small, slow-growing pulmonary nodules arising in a lung cancer patient despite dramatic initial response to a novel tyrosine kinase inhibitor (17). What is the radiologist to do if he or she suspects that RECIST methodology is yielding misleading results, or that a different response assessment technique may be more appropriate? We suggest that when conducting RECIST assessments, the radiologist should strive to apply standardized methodology as objectively as possible. However, the radiologist also has a duty to communicate with the physician investigator and discuss any suspicion that tumor measurement data may be yielding false-positive or false-negative results when taken in a broader clinical context. More generally, radiologists need to become more involved with clinical and research colleagues in designing response assessment methodology for clinical trials, interpreting results in clinical context, and validating and advancing new response assessment techniques.

The examples presented in this article were drawn from a quality improvement review of our cancer imaging core laboratory. Our methodology was limited in the sense that the error categories were not chosen a priori, nor was our study prospectively powered to demonstrate improvement in error rates after the quality improvement audit. Also, because our core laboratory's workflow relies on the physician investigator to make the final overall response assessment at each time point, we were not able to illustrate examples of errors in assigning overall response. Potential pitfalls in assigning overall response include failure to observe the new RECIST 1.1 requirement that a 5 mm absolute increase in the sum of target lesion diameters be observed (in addition to a 20% increase) to assign PD, and failure to observe predefined rules regarding confirmation of response (especially in clinical trials where response is the primary end point).
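The dual PD threshold can be made concrete for target lesions alone. The sketch below uses the RECIST 1.1 thresholds for the sum of target lesion diameters: PR is a decrease of at least 30% from the baseline sum, and PD requires both a 20% increase over the nadir sum and an absolute increase of at least 5 mm. It deliberately ignores nontarget lesions, new lesions, not-evaluable lesions, and confirmation requirements, all of which feed into overall response; the function name is hypothetical.

```python
def target_lesion_response(baseline_sum_mm: float, nadir_sum_mm: float,
                           current_sum_mm: float, all_targets_resolved: bool) -> str:
    """Target-lesion response category at one time point (simplified sketch).

    nadir_sum_mm: smallest sum recorded before this time point, baseline included.
    all_targets_resolved: every non-nodal target has disappeared and every
    target node measures < 10 mm in short axis.
    """
    increase_mm = current_sum_mm - nadir_sum_mm
    if increase_mm >= 0.20 * nadir_sum_mm and increase_mm >= 5.0:
        return "PD"
    if all_targets_resolved:
        return "CR"
    if current_sum_mm <= 0.70 * baseline_sum_mm:
        return "PR"
    return "SD"


# A 22.5% increase from a 20 mm nadir is only 4.5 mm, so it is not PD.
print(target_lesion_response(20.0, 20.0, 24.5, False))  # SD
print(target_lesion_response(50.0, 40.0, 49.0, False))  # PD (+22.5%, +9 mm)
```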

Despite advancements in medical imaging technology including the rapid development of new functional and molecular imaging methods (18), conventional gross lesion size measurement remains the mainstay of clinical response assessment. RECIST is the primary method for assessing antitumor efficacy in solid malignancies and will likely remain so for the foreseeable future. Familiarity with the pitfalls outlined in this article should be useful to inexperienced RECIST readers, including radiologists seeking to respond to oncologists' growing expectations for quantitative tumor measurements as a value-added service (19), and may contribute toward increased standardization and decreased interpretive error in assessing the antitumor efficacy of new agents.

Supplementary Material

Acknowledgments

Financial support: NCI SPORE in Breast Cancer P50 CA098131, NCI Cancer Center Support Grant P30 CA068485, and AUR-GE Radiology Research Academic Fellowship (R.G.A.)

Footnotes

Supplementary Data: Supplementary data related to this article can be found online at http://dx.doi.org/10.1016/j.acra.2015.01.015.

References

1. Eisenhauer EA, Therasse P, Bogaerts J, et al. New response evaluation criteria in solid tumours: revised RECIST guideline (version 1.1). Eur J Cancer. 2009;45:228–247. doi: 10.1016/j.ejca.2008.10.026.

2. Therasse P, Arbuck SG, Eisenhauer EA, et al. New guidelines to evaluate the response to treatment in solid tumors. European Organization for Research and Treatment of Cancer, National Cancer Institute of the United States, National Cancer Institute of Canada. J Natl Cancer Inst. 2000;92:205–216. doi: 10.1093/jnci/92.3.205.

3. Jaffe TA, Wickersham NW, Sullivan DC. Quantitative imaging in oncology patients: Part 1, radiology practice patterns at major U.S. cancer centers. AJR Am J Roentgenol. 2010;195:101–106. doi: 10.2214/AJR.09.2850.

4. Nishino M, Jagannathan JP, Ramaiya NH, et al. Revised RECIST guideline version 1.1: what oncologists want to know and what radiologists need to know. AJR Am J Roentgenol. 2010;195:281–289. doi: 10.2214/AJR.09.4110.

5. EORTC. RECIST 1.1 questions and clarifications. Available at: http://www.eortc.org. Accessed January 6, 2015.

6. Suzuki C, Jacobsson H, Hatschek T, et al. Radiologic measurements of tumor response to treatment: practical approaches and limitations. Radiographics. 2008;28:329–344. doi: 10.1148/rg.282075068.

7. Nishino M, Jackman DM, Hatabu H, et al. New Response Evaluation Criteria in Solid Tumors (RECIST) guidelines for advanced non-small cell lung cancer: comparison with original RECIST and impact on assessment of tumor response to targeted therapy. AJR Am J Roentgenol. 2010;195:W221–W228. doi: 10.2214/AJR.09.3928.

8. Erasmus JJ, Gladish GW, Broemeling L, et al. Interobserver and intraobserver variability in measurement of non-small-cell carcinoma lung lesions: implications for assessment of tumor response. J Clin Oncol. 2003;21:2574–2582. doi: 10.1200/JCO.2003.01.144.

9. Dodd LE, Korn EL, Freidlin B, et al. Blinded independent central review of progression-free survival in phase III clinical trials: important design element or unnecessary expense? J Clin Oncol. 2008;26:3791–3796. doi: 10.1200/JCO.2008.16.1711.

10. Borradaile K, Ford R, O'Neal M, et al. Discordance between BICR readers: understanding the causes and implementing processes to mitigate sources of discordance. 2010.

11. Andoh H, McNulty NJ, Lewis PJ. Improving accuracy in reporting CT scans of oncology patients: assessing the effect of education and feedback interventions on the application of the Response Evaluation Criteria in Solid Tumors (RECIST) criteria. Acad Radiol. 2013;20:351–357. doi: 10.1016/j.acra.2012.12.002.

12. Abajian AC, Levy M, Rubin DL. Informatics in radiology: improving clinical work flow through an AIM database: a sample web-based lesion tracking application. Radiographics. 2012;32:1543–1552. doi: 10.1148/rg.325115752.

13. Byrne MJ, Nowak AK. Modified RECIST criteria for assessment of response in malignant pleural mesothelioma. Ann Oncol. 2004;15:257–260. doi: 10.1093/annonc/mdh059.

14. Choi H, Charnsangavej C, Faria SC, et al. Correlation of computed tomography and positron emission tomography in patients with metastatic gastrointestinal stromal tumor treated at a single institution with imatinib mesylate: proposal of new computed tomography response criteria. J Clin Oncol. 2007;25:1753–1759. doi: 10.1200/JCO.2006.07.3049.

15. Wolchok JD, Hoos A, O'Day S, et al. Guidelines for the evaluation of immune therapy activity in solid tumors: immune-related response criteria. Clin Cancer Res. 2009;15:7412–7420. doi: 10.1158/1078-0432.CCR-09-1624.

16. Lencioni R, Llovet JM. Modified RECIST (mRECIST) assessment for hepatocellular carcinoma. Semin Liver Dis. 2010;30:52–60. doi: 10.1055/s-0030-1247132.

17. Mok TS. Living with imperfection. J Clin Oncol. 2010;28:191–192. doi: 10.1200/JCO.2009.25.8574.

18. Yankeelov TE, Gore JC. Has quantitative multimodal imaging of treatment response arrived? Clin Cancer Res. 2009;15:6473–6475. doi: 10.1158/1078-0432.CCR-09-2257.

19. Jaffe TA, Wickersham NW, Sullivan DC. Quantitative imaging in oncology patients: Part 2, oncologists' opinions and expectations at major U.S. cancer centers. AJR Am J Roentgenol. 2010;195:W19–W30. doi: 10.2214/AJR.09.3541.
