Abstract

Many animals rely on visual figure–ground discrimination to aid in navigation, and to draw attention to salient features like conspecifics or predators. Even figures that are similar in pattern and luminance to the visual surroundings can be distinguished by the optical disparity generated by their relative motion against the ground, and yet the neural mechanisms underlying these visual discriminations are not well understood. We show in flies that a diverse array of figure–ground stimuli containing a motion-defined edge elicit statistically similar behavioral responses to one another, and statistically distinct behavioral responses from ground motion alone. From studies in larger flies and other insect species, we hypothesized that the circuitry of the lobula (one of the four primary neuropiles of the fly optic lobe) performs this visual discrimination. Using calcium imaging of input dendrites, we then show that information encoded in cells projecting from the lobula to discrete optic glomeruli in the central brain groups these sets of figure–ground stimuli in a manner homologous to the behavior; “figure-like” stimuli are encoded similarly to one another and “ground-like” stimuli are encoded differently. One cell class responds to the leading edge of a figure and is suppressed by ground motion. Two other classes cluster any figure-like stimuli, including a figure moving opposite the ground, distinctly from ground alone. This evidence demonstrates that lobula outputs provide a diverse basis set encoding visual features necessary for figure detection.

Keywords: feature detection, figure–ground discrimination, optomotor

Introduction

Sensory discrimination is one of the oldest subjects of study in behavioral neuroscience (Weber, 1846; Fechner, 1860). The discrimination of visual figures that differ in some quality from the background is an everyday phenomenon and yet the neuronal mechanisms remain largely enigmatic. The study of figure–ground discrimination in humans, and in particular, the study of the discrimination of motion-defined figures and contours, or edges, was coincident with the earliest studies of how flies respond to optic flow and elementary motion (Reichardt and Poggio, 1976; Buelthoff, 1981). Flies are capable of figure-tracking when the figure stimulus is discriminable from the ground only by its relative motion, even when it occupies <10% of the visual field, or is superimposed on a ground that moves opposite the direction of the figure, moves randomly, or flickers (Reichardt et al., 1989; Theobald et al., 2010; Fox et al., 2014). Neural mechanisms for the discrimination of vertical edges from the ground image likely evolved for navigating gaps and approaching perch sites within natural landscapes (Maimon et al., 2008; Aptekar et al., 2012).

Studying the neural mechanism of figure–ground discrimination is challenging because a figure is an abstract percept defined by difference-from-ground in an expansive set of features (Sperling et al., 1985; Theobald et al., 2008). Many qualitatively different stimuli may elicit quantifiably similar behaviors. This may be accounted for by the diverse inputs having in common core, perceptually salient features. Work across species hypothesizes that common features of figures found in nature (O'Carroll, 1993; Kimmerle and Egelhaaf, 2000a,b; Nordström and O'Carroll, 2006; Straw et al., 2008; Wiederman et al., 2008; O'Carroll and Wiederman, 2014), or the behavioral context of figure tracking for pursuing prey or avoiding predators (Olveczky et al., 2003; Baccus et al., 2008; Wiederman et al., 2008; Zhang et al., 2012) require certain properties of figure discrimination circuits, such as sensitivity to small size, luminance contrast, or special shapes.

Because a “feature” is a primitive quality that can be shared among dissimilar stimuli, a natural way to describe the preferred input to a “feature detector” is by a set of pairwise comparisons of that detector's outputs under different stimulus inputs. This set of pairwise measures is a perceptual graph (PG). A PG provides a schema for the perceptual properties of either a behavioral or cellular response without the need to hypothesize, a priori, specific parameters to which the detector is tuned, such as size, luminance, or shape. Clustering of two or more stimuli within the PG, apart from others, demonstrates the capacity for discrimination between inputs by that detector.

We use a genetically encoded calcium indicator (Akerboom et al., 2012) with two-photon excitation imaging (Clark et al., 2011) of dendrites of columnar neurons that project axons from the lobula to distinct optic glomeruli or to the lobula plate. We use PGs to test the hypothesis that the glomerular network provides parallel information processing (Strausfeld et al., 2006), simultaneous encoding of higher-order motion features by distinct anatomical loci, critical to figure–ground discrimination behavior.

Materials and Methods

Behavioral assay.

Our aim was specifically to map behavioral figure tracking to neuronal responses. Keeping the scope focused on the horizontal motion of vertical contours that drive fixation behavior, we did not investigate variables such as orientation or speed. Adult, female Drosophila melanogaster were anesthetized under cold sedation at 3°C and tethered by the dorsal thorax to tungsten pins with dental acrylic. The head was free to move. After ∼1 h of recovery in a humidified box, individuals were suspended in a light-emitting diode (LED) arena (Fig. 1B; Reiser and Dickinson, 2008). For the spatiotemporal action field (STAF) experiments (Fig. 1C), the detailed procedure was reported previously (Aptekar et al., 2012). For the single-sweep experiments (Fig. 1E), the assay was composed of three repetitions of 16 stimuli. A stimulus period consisted of 4 s of open-loop tracking during which a ground, a single edge, or a 30-degree-wide figure would traverse the azimuth of the arena at 90°/s for one full revolution. All figures, grounds, and edge stimuli consisted of randomly interspersed ON and OFF columns of pixels. As a result, figures, edges, and ground stimuli could not be discriminated at rest. Between each stimulus period, flies were provided with a 30° dark bar under closed-loop feedback control for a period of 5 s. “Open loop” refers to the animal being shown a series of images where the steering behavior of the animal does not feed back to affect the image trajectory. When feedback is integrated, this is referred to as “closed loop” control.
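To illustrate why such stimuli are indistinguishable at rest, the following is a minimal sketch of random-column patterns with a motion-defined figure. It is not the authors' stimulus code. The 96-column full-circle arena (360° divided by the 3.75° pixel spacing), the function names, and the way the ground is shifted are assumptions made for illustration.

```python
import random

N_COLS = 96      # assumed: 360 deg / 3.75 deg per pixel column
FIG_WIDTH = 8    # 30 deg figure / 3.75 deg per column


def random_columns(n, rng):
    """Return n randomly interspersed ON (1) and OFF (0) columns."""
    return [rng.randint(0, 1) for _ in range(n)]


def compose_frame(ground, figure, fig_pos, ground_shift=0):
    """Overlay the figure window at column fig_pos on a circularly shifted ground."""
    n = len(ground)
    frame = [ground[(i - ground_shift) % n] for i in range(n)]
    for k, value in enumerate(figure):
        frame[(fig_pos + k) % n] = value
    return frame


rng = random.Random(0)
ground = random_columns(N_COLS, rng)
figure = random_columns(FIG_WIDTH, rng)

# Figure on a stationary ground: window and texture step together, one column per frame.
frames_F = [compose_frame(ground, figure, pos) for pos in range(N_COLS)]

# Figure on a counter-rotating ground: the ground shifts opposite to the figure.
frames_FG = [compose_frame(ground, figure, pos, ground_shift=-pos)
             for pos in range(N_COLS)]
```

Any single frame is just a row of random ON and OFF columns, so the figure only becomes visible across frames, through its motion relative to the ground.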

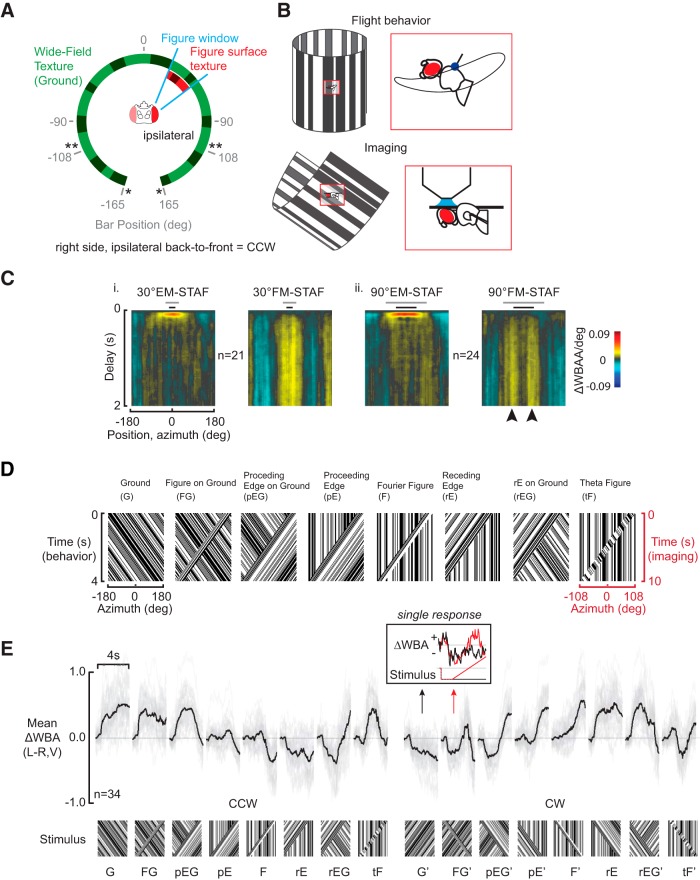

Figure 1.

Figure–ground discrimination is based on an edge-detection mechanism. A, Schematic diagram of panoramic LED arena for behavioral (*) and physiology (**) experiments. Pixel spacing is 3.75°. All patterns were composed only of vertical stripes. B, Schematic diagram of fly orientation relative to flight and imaging arena. The angle of the thorax relative to the axis of the arena is ∼35° in both cases. Due to flexion of the neck, the angle of the head relative to the arena is slightly larger in the restrained imaging preparation than in the flight arena. C, STAFs for FM and sfEM for a 30° wide (Ci) and 90° wide (Cii) figure. The gray and black bars above each STAF signify the extent of the 90° and 30° bars, respectively. The lobe separation corresponds well to the separation of the two edges of the 90° bar (black arrowheads). D, Stimuli used for both behavior and physiology studies. Mirror symmetric versions of all stimuli were also used for a total of 16 experimental conditions. E, Steering responses to visual stimuli and their mirror symmetric counterparts. Means of all animals are plotted in black. Mean responses from individual flies are plotted in light gray. Inset, Raw traces for the ground (black) and figure–ground (red); N = 34 flies.

STAF assay.

In a flight simulator (Fig. 1), two sequences of white noise were applied to a bar figure, one controlling velocity impulses of coherent space-time correlated elementary motion (EM) generated by the figure's surface, and the other controlling figure motion steps (FM) generated by displacing the window containing the figure (Fig. 1A; methods described previously in detail by Aptekar et al., 2014). We measured the EM and FM responses over the azimuth (Fig. 1B) to generate STAFs that describe the retinotopic variation of temporal dynamical responses of the two subsystems (Aptekar et al., 2012).

Fly lines.

All Gal4 fly lines were identified through the Janelia Farm online imaging database and obtained from the Bloomington FlyBase (ID):

LC9 (ID 26438, http://flweb.janelia.org/cgi-bin/view_flew_imagery.cgi?line=R14A11)

LC12 (ID 31156, http://flweb.janelia.org/cgi-bin/view_flew_imagery.cgi?line=R65B05)

T5 (ID 32427, http://flweb.janelia.org/cgi-bin/view_flew_imagery.cgi?line=R79D04)

LC10a (ID 32564, http://flweb.janelia.org/cgi-bin/view_flew_imagery.cgi?line=R80G09).

The UAS-GCamp6m line was from the Bloomington FlyBase (Akerboom et al., 2012), FlyBaseID: FBti0151346. For stochastic labeling of single cells, we used a FRT-STOP-FRTmCD8::GFP line that was a gift from Larry Zipursky (UCLA Department of Biological Chemistry, Los Angeles, CA).

We note that LC10a, a subset of the LC10 cell type that is limited to the medial-most portion of the optic tubercle, has been described previously (Otsuna and Ito, 2006), but never before imaged. Additionally, LC9 was colabeled in the R14A11 line with both LC11 and LC13, along with an unnamed lobula-intrinsic neuron. However, both gross and specific labeling verified that the vast majority of the lobular neurites labeled within this line belong to LC9.

Anatomical single-photon excitation confocal imaging.

Gal4 expression was visualized by crossing R79D04, R65B05, R80G09, and R14A11-Gal4 to UAS-mCD8::GFP (5137). For single-cell analysis, hs-flp; UAS-FRT-STOP-FRTmCD8::GFP flies were crossed to R79D04-Gal4 (40034), R65B05-Gal4 (49610), and R14A11-Gal4 (48595) and heat shock (37°C) was applied for 15–25 min to 3- to 5-d-old larvae. Two to 7-d-old female flies were then dissected in 1× PBS. Brains were fixed in 4% paraformaldehyde and washed 3× for 15 min with 0.3% PBST (Triton X-100). Brains were then blocked in 5% goat serum diluted in 0.3% PBST for 30 min and incubated in primary antibody for 2 d at 4°C. Then, brains were washed 3× for 15 min in PBST and incubated in secondary antibody for 2 d at 4°C. Finally, brains were mounted in Vectashield (Vector Laboratories) on a microscope slide. Images were taken using a Zeiss 710 confocal microscope and analyzed with ImageJ (NIH). The following primary and secondary antibodies were used: mouse anti-nc82 (1:10, Developmental Studies Hybridoma Bank), rabbit anti-GFP (1:1000, Invitrogen, A11122), goat anti-rabbit AlexaFluor 488 (1:200, Invitrogen, A11034), and goat anti-mouse AlexaFluor 568 (1:200, Invitrogen, A11031).

Two-photon excitation calcium imaging.

Briefly, the preparation procedure was adapted from prior work (Seelig et al., 2010). Adult female D. melanogaster expressing the genetically encoded calcium indicator GCamp6m under one of the four Gal4 drivers were anesthetized under cold sedation. Once anesthetized, flies were wedged dorsal side up into a 1/32 inch slit cut into 0.001-inch-thick stainless steel shim (304 Stainless Shim, Trinity Brand Industries) in a custom-built acetal stage. The cervical connective was flexed downward to bring the posterior surface of the head capsule flush with the shim, and the rim of the cuticle was fixed in place with dental acrylic (Seelig et al., 2010). The proboscis and antennae were fixed to prevent motion. The posterior cuticle was cut out and removed with a pair of sharpened Dumont no. 5 forceps (Fine Science Tools). Overlying tissues were removed to provide clear access to the optic lobe.

During imaging, a perfusing solution was flowed over the imaging site at a rate of ∼1 ml/min with a gravity drip, regulated by an in-line valve, after passing through an in-line temperature regulator (Warner Instruments) set at 19°C, resulting in a well temperature of 21–23°C throughout the experiment. The perfusate saline solution was based on Wilson et al. (2004).

Imaging was performed in a two-photon excitation scanning microscope (Intelligent Imaging Innovations). We used a 20×/NA = 1.0 water-immersion objective lens (Carl Zeiss). Laser power was regulated to 10–20 mW measured at the focus of the objective lens. Images were collected at 8–11 Hz and 300–500 nm/pixel on a side. Temporal registration with input stimuli was achieved by recording a voltage pulse at the completion of each frame that was output from the stimulus controller to a data acquisition device (National Instruments). Visual stimuli were produced by a 12–20 panel arena that subtended 105° of visual azimuth and 120° of elevation on the retina (IO Rodeo) using open-source MATLAB packages (flypanels.org; Reiser and Dickinson, 2008). Stimulus and data acquisition were controlled by custom-built software in MATLAB (MathWorks).

Eight stimulus classes in each of two horizontal directions were presented in a randomized block design. One of eight arena display patterns was chosen at random for each trial to eliminate correlation between neuronal responses and local elements within the random spatial pattern. Every stimulus type was repeated three times to complete one trial. If a preparation was stable and did not appear to bleach, we would complete multiple imaging trials per animal. Each stimulus period had the following structure: a control period consisting of 5 s of clockwise, wide-field motion followed by turning off the arena for 2 s. The arena was then turned back on to the randomly selected test pattern. Test pattern was held stationary for 10 s, run continuously for 10 s, and then stopped. The arena was turned off, and the cycle was repeated.
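The trial structure described above can be sketched as a small scheduling routine. This is an illustrative reading, not the authors' MATLAB software: it assumes each of the three repetitions is one shuffled block containing all 16 conditions, and the function and phase names are hypothetical.

```python
import random

STIMULI = ["G", "FG", "rEG", "rE", "F", "pE", "pEG", "tF"]
DIRECTIONS = ["cw", "ccw"]
N_REPEATS = 3     # every stimulus type is repeated three times per trial
N_PATTERNS = 8    # one of eight arena display patterns per trial

# (phase name, duration in seconds) for one stimulus period, as stated in the text
PHASES = [
    ("control_cw_widefield", 5),
    ("arena_off", 2),
    ("test_stationary", 10),
    ("test_moving", 10),
]


def build_trial(rng):
    """Return one trial: three shuffled blocks of the 16 stimulus conditions."""
    pattern = rng.randrange(N_PATTERNS)  # chosen at random once per trial
    conditions = [(s, d) for s in STIMULI for d in DIRECTIONS]
    schedule = []
    for block in range(N_REPEATS):
        order = conditions[:]
        rng.shuffle(order)  # assumed: randomization within each block
        for stim, direction in order:
            schedule.append({"block": block, "stimulus": stim,
                             "direction": direction, "pattern": pattern})
    return schedule


def period_duration():
    """Total duration in seconds of the phases listed for one stimulus period."""
    return sum(duration for _, duration in PHASES)


schedule = build_trial(random.Random(0))
```

Under these assumptions a trial contains 48 stimulus periods, each with 27 s of listed phases, not counting the final arena-off interval, whose duration is not stated.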

Image processing.

Movie frames were aligned spatially to a single reference image (usually the first frame of the movie) with a two-dimensional correlation algorithm built into the Slidebook software (Intelligent Imaging Innovations). Following alignment, an image of the mean fluorescence intensity over time was exported to MATLAB. We used a custom-built algorithm to recursively parcellate this image into a number of isoluminant rectangular cells. The image frame was broken into quadrants recursively until each subdivision (ROI) contained <50 pixels with luminance greater than the mean luminance value for the entire image stack. This has the effect of drawing larger ROIs over less-active dim regions of the image and smaller ROIs over more-active bright regions. This parcellated mask was then reimported into Slidebook and a matrix containing the sum of the intensity values within each ROI of the mask at each frame of the movie was exported to MATLAB. This resulted in >50× compression in the image data, while preserving variability in the signal within each mask cell across the image, as well as the spatial reference to the anatomical profile of the neurons.
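The recursive subdivision can be sketched as a quadtree-style split. This is a minimal illustration under stated assumptions, not the authors' custom MATLAB algorithm: the mean of the single input image stands in for the mean luminance of the entire stack, and the function name and stopping rule for very thin rectangles are hypothetical.

```python
def parcellate(image, max_bright=50):
    """Split an image into rectangular ROIs, each with < max_bright bright pixels.

    image is a list of rows; ROIs are returned as (row0, row1, col0, col1)
    with half-open bounds.
    """
    rows, cols = len(image), len(image[0])
    # Assumed stand-in for the mean luminance of the whole image stack.
    mean_lum = sum(map(sum, image)) / (rows * cols)

    def bright_count(r0, r1, c0, c1):
        return sum(1 for r in range(r0, r1) for c in range(c0, c1)
                   if image[r][c] > mean_lum)

    def split(r0, r1, c0, c1):
        too_small = (r1 - r0) < 2 or (c1 - c0) < 2
        if too_small or bright_count(r0, r1, c0, c1) < max_bright:
            return [(r0, r1, c0, c1)]
        rm, cm = (r0 + r1) // 2, (c0 + c1) // 2
        rois = []
        for ra, rb in ((r0, rm), (rm, r1)):
            for ca, cb in ((c0, cm), (cm, c1)):
                rois.extend(split(ra, rb, ca, cb))
        return rois

    return split(0, rows, 0, cols)


# Toy 32 x 32 image: a bright 16 x 16 patch in the top-left corner, dark elsewhere.
image = [[10 if r < 16 and c < 16 else 0 for c in range(32)] for r in range(32)]
rois = parcellate(image)
```

In this toy image the bright patch is divided into small 4 × 4 ROIs while each dark quadrant remains a single 16 × 16 ROI, which mirrors the behavior described above.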

Within the mask, the signal from each ROI was averaged over the three instances of each stimulus type. ΔF/F was calculated by dividing the signal by the mean intensity over the 2 s preceding stimulus onset (with arena ON) and subtracting 1. All data were then down-sampled to 10 Hz and collated.
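As a worked illustration of this normalization, the sketch below averages three repeats of one ROI trace and divides by the 2 s pre-onset baseline. The function name, argument names, and toy numbers are assumptions for illustration only.

```python
def delta_f_over_f(repeats, frame_rate_hz, onset_index, baseline_s=2.0):
    """Average repeats of one ROI trace, then normalize to the pre-onset baseline."""
    n_frames = len(repeats[0])
    mean_trace = [sum(r[i] for r in repeats) / len(repeats)
                  for i in range(n_frames)]
    n_base = int(round(baseline_s * frame_rate_hz))
    if onset_index < n_base:
        raise ValueError("not enough frames before stimulus onset for the baseline")
    baseline = mean_trace[onset_index - n_base:onset_index]
    f0 = sum(baseline) / len(baseline)
    # Dividing by the baseline and subtracting 1 gives the fractional change.
    return [f / f0 - 1.0 for f in mean_trace]


# Toy data: three repeats at 10 Hz, stimulus onset at frame 20.
repeats = [
    [90.0] * 20 + [135.0] * 10,
    [100.0] * 20 + [150.0] * 10,
    [110.0] * 20 + [165.0] * 10,
]
dff = delta_f_over_f(repeats, frame_rate_hz=10, onset_index=20)
```

Here the mean baseline is 100 and the mean response is 150, so the normalized trace is 0 before onset and 0.5 afterward.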

To minimize the effect of different ROIs having slightly different receptive fields within an animal and across animals, data within each experiment and each ROI were time-shifted by the difference in timing between the peak responses to the two Fourier figures, one in each direction. This has the effect of centering each ROI about the center of its receptive field. Time shifts were on the order of 2 s, and the effect of this time-shifted alignment on the overall results is negligible (data not shown), but it allows for direct comparison of responses across ROIs and between animals.

Whereas we qualitatively matched the stimulus conditions between the behavioral and imaging experiments, we restrained the wings and reduced the stimulus velocity to ensure reliable fluorescence signals. Faster stimulus dynamics and flying behavioral mode could be expected to modulate the activity of these cells (Maimon et al., 2010).

Covariance network analysis.

We reasoned that two dynamic processes are similar if they covary. However, we knew from previous behavioral work that figure and figure–ground stimuli only evoke similar responses within a limited range of the visual field (Aptekar et al., 2012; Fox et al., 2014). This means that to measure the extent to which two responses are similar by covariance, we needed to calculate the covariance between responses over a limited time (space)-window. We defined our interval as the average full-width at half-maximum for the response to the Fourier figure and theta figure in both directions. This interval was 2.2 s.

The pairwise covariance was calculated between all pairs within each group of eight stimuli for the mean response of all aligned and pruned ROIs, and repeated for clockwise and counterclockwise stimulus directions. This yields two covariance matrices for each time point. To determine the distribution of covariance values that would arise by chance for any particular cell type, we ran 19,200 simulations in which, rather than using the aligned analysis window described above, we calculated the pairwise covariance of a cell's responses to two test stimuli over two randomly chosen time intervals.
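The windowed covariance and its chance distribution can be sketched as follows. This is one plausible reading of the procedure, not the authors' code: the sample covariance estimator, the one-sided p value, and all function names are assumptions. At 10 Hz, the 2.2 s interval corresponds to a window of 22 samples.

```python
import random


def covariance(x, y):
    """Sample covariance of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)


def windowed_covariance_matrix(responses, start, width):
    """Pairwise covariance of mean responses within one analysis window."""
    names = list(responses)
    return {(a, b): covariance(responses[a][start:start + width],
                               responses[b][start:start + width])
            for a in names for b in names}


def null_covariances(responses, width, n_sim, rng):
    """Chance covariances: two stimuli, each over a randomly chosen interval."""
    names = list(responses)
    n_t = len(responses[names[0]])
    null = []
    for _ in range(n_sim):
        a, b = rng.sample(names, 2)
        i = rng.randrange(n_t - width + 1)
        j = rng.randrange(n_t - width + 1)
        null.append(covariance(responses[a][i:i + width],
                               responses[b][j:j + width]))
    return null


def p_value(observed, null):
    """Assumed one-sided test: fraction of chance values at or above observed."""
    return sum(1 for v in null if v >= observed) / len(null)
```

With real data, `responses` would map each of the eight stimulus labels to its temporally aligned mean ΔF/F trace, and `n_sim` would be 19,200.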

The time-varying sequence of covariance matrices for each stimulus direction were then drawn as graphs. We drew only those edges whose weights were significant at a level of p < 0.01. To validate this method of analysis, we performed a test of persistent topology across graph representations by calculating the number of disjoint clusters of stimuli within each perceptual graph as a function of this threshold; as we varied the significance threshold, effectively pruning more or fewer edges, we looked to see whether the number of distinct clusters within the PG changed. The results of this analysis, as well as the chosen significance level are depicted in Figure 6. Note that across all cell types the passage of the leading edge of the stimulus through the receptive field of the ensemble of ROIs results in a singular clustering event that persists across a range of significance values. This indicates that our choice of a pruning threshold at p < 0.01 has little effect on the results reported. This procedure is similar to strategies that transform continuous measures of nearness or similarity into discrete ones to understand the topology of a neural code (Singh et al., 2008; Malmersjö et al., 2013). The graph layout as shown is calculated by the Fruchterman–Reingold algorithm (Fruchterman and Reingold, 1991). Per the “Covariance Time Series” heatmaps in Figures 6 and 7, note that only one clustering event predominates, and that clustering event corresponds to the time when the edge discontinuity is contained within the receptive field of these cells, but not when they contain wide field motion only.
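The persistence test described above amounts to counting connected components of the thresholded graph at several significance levels. The sketch below uses a union-find structure for that count. It assumes that stimuli with no significant edge lie outside the PG and are not counted as clusters, and the stimulus labels and p values are placeholders, not data from this study.

```python
def clusters(nodes, pvals, alpha):
    """Return connected components with at least two nodes, given edge p values.

    pvals maps a pair (a, b) to the p value of its covariance; an edge is
    kept only if p < alpha.
    """
    parent = {n: n for n in nodes}

    def find(n):
        while parent[n] != n:
            parent[n] = parent[parent[n]]  # path halving
            n = parent[n]
        return n

    for (a, b), p in pvals.items():
        if p < alpha:
            parent[find(a)] = find(b)

    groups = {}
    for n in nodes:
        groups.setdefault(find(n), set()).add(n)
    # Assumed: isolated stimuli are outside the PG and not counted.
    return [g for g in groups.values() if len(g) > 1]


# Placeholder labels and p values for illustration only.
nodes = ["s1", "s2", "s3", "s4", "s5", "s6", "s7", "s8"]
pvals = {
    ("s1", "s2"): 0.001, ("s2", "s3"): 0.002, ("s3", "s4"): 0.004,
    ("s4", "s5"): 0.008, ("s6", "s7"): 0.03, ("s7", "s8"): 0.4,
}
counts = {alpha: len(clusters(nodes, pvals, alpha))
          for alpha in (0.001, 0.01, 0.05)}
```

A cluster count that stays constant over a range of thresholds indicates that the graph topology does not depend strongly on the exact pruning level.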

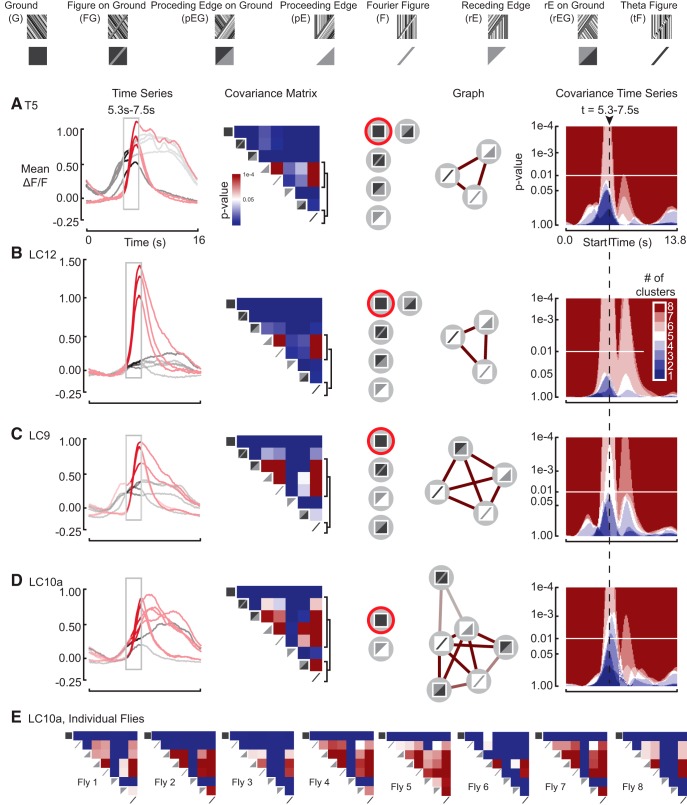

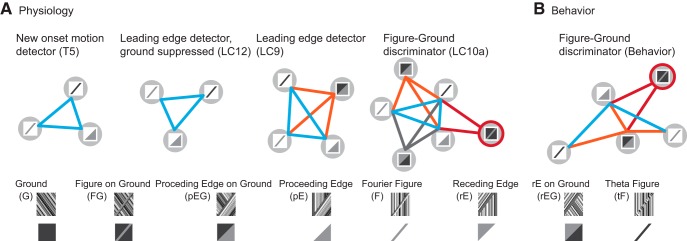

Figure 6.

PG construction for back-to-front response to the leading edges of figures and edge-type stimuli across all cell classes. A, T5 neurons are local motion detectors. Time series shows temporally aligned mean responses from Figure 4E for the eight front-to-back stimulus conditions. The highlighted window corresponds to the analysis interval, which in turn corresponds to the highlighted time point on the covariance matrix. The red trajectories correspond to the five stimuli that compose the linked elements at this time point. For the covariance matrix at the specified time point, a p value of 0.01 is white. Brackets correspond to rows of the matrix that contain non-zero entries and correspond to stimuli in the perceptual graph. Graph shows the PG for the LPN responses of the fly. Edges are drawn only for covariance values that are significant at a threshold set by p < 0.01. Edge color corresponds to the covariance values from the covariance matrix. Covariance time series shows the number of discrete clusters in the PG as a function of the starting position of the time window. The two peaks evident in each heatmap correspond to clustering events elicited by passage of the leading and trailing edges of a figure through the receptive field of each ROI. B, LC12 neurons are ground-suppressed edge detectors. Analogous subfigure to A. C, LC9 neurons are figure–ground discriminators. Analogous subfigure to A. D, LC10a neurons are figure–ground discriminators. Analogous subfigure to A. E, Representative example of the covariance matrices at the specified time point across individual flies for the LC10a neuron subclass. The topology of the mean PG is largely preserved, even at the level of individual flies.

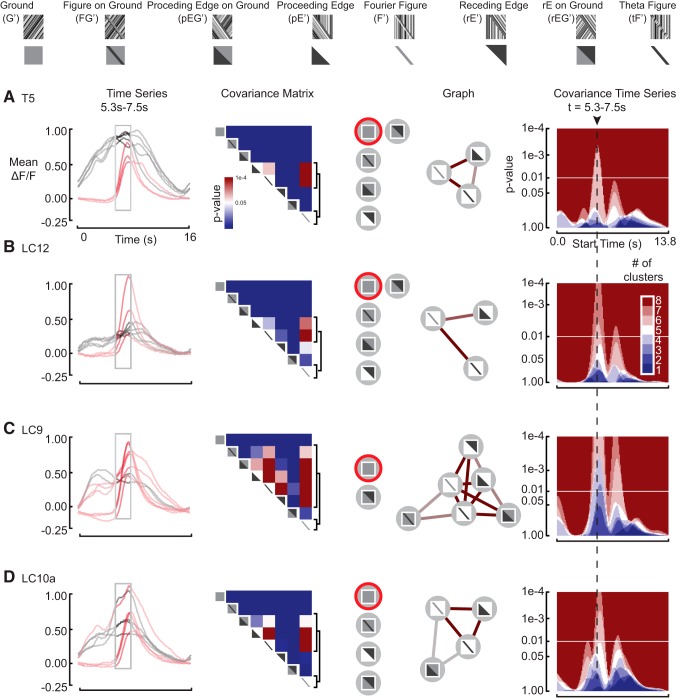

Figure 7.

PG construction for front-to-back response to the leading edges of figures and edge-type stimuli across all cell classes. A, T5 neurons are local motion detectors. Analogous subfigure to Figure 6A. B, LC12 neurons are ground-suppressed edge detectors. Analogous subfigure to Figure 6A. C, LC9 neurons are figure–ground discriminators. Analogous subfigure to Figure 6A. D, LC10a neurons are figure–ground discriminators. Analogous subfigure to Figure 6A.

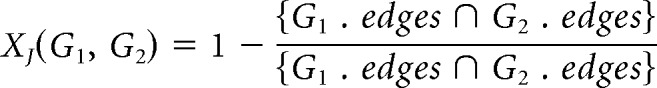

To rigorously characterize the degree of topological similarity for LC10a between individual animals, we use a Jaccard metric (XJ) to compare PGs by edges (Jaccard, 1901, 1912; Albatineh and Niewiadomska-Bugaj, 2011). It is equal to one minus the magnitude of the set intersection of the edges in two graphs divided by the magnitude of their set union. For two graphs, G1 and G2, with edge sets E(G1) and E(G2), it is as follows:

$$X_J(G_1, G_2) = 1 - \frac{|E(G_1) \cap E(G_2)|}{|E(G_1) \cup E(G_2)|}$$

Smaller Jaccard distances exist between graphs with a higher proportion of identical edges. By thresholding the covariance matrices of individual flies at p < 0.01, as described above, we construct PGs for each individual and then calculate the pairwise similarity between individual flies for each cell type; an example of the covariance matrices used to construct individual PGs over the specified time period in LC10a is shown in Figure 6E. Then, by randomly permuting the edges between all available stimuli for each individual, preserving the numerosity of edges, we generate a distribution of Jaccard distances that arise at chance for each cell type. We then produce p values for the measured, mean Jaccard distances within individuals for each cell type. This allows us to compare the measured similarity of the individual PGs to the similarity that would arise at chance.
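The comparison between individuals can be sketched as a Jaccard distance on edge sets followed by an edge-permutation test. This is a minimal illustration rather than the authors' implementation: it assumes undirected edges without duplicates, a one-sided p value (the fraction of permuted graph sets at least as similar as the observed set), and hypothetical function names.

```python
import random
from itertools import combinations


def jaccard_distance(edges1, edges2):
    """One minus |intersection| / |union| of two undirected edge sets."""
    e1 = {frozenset(e) for e in edges1}
    e2 = {frozenset(e) for e in edges2}
    union = e1 | e2
    if not union:
        return 0.0  # two empty graphs are treated as identical
    return 1.0 - len(e1 & e2) / len(union)


def mean_pairwise_distance(graphs):
    """Mean Jaccard distance over all pairs of individual graphs (needs >= 2)."""
    pairs = list(combinations(graphs, 2))
    return sum(jaccard_distance(a, b) for a, b in pairs) / len(pairs)


def permutation_p_value(graphs, nodes, n_perm, rng):
    """Compare the observed mean distance with edge-permuted graphs.

    Each permuted graph keeps the same number of edges as the original,
    drawn at random from all possible stimulus pairs.
    """
    all_edges = list(combinations(nodes, 2))
    observed = mean_pairwise_distance(graphs)
    hits = 0
    for _ in range(n_perm):
        shuffled = [rng.sample(all_edges, len(g)) for g in graphs]
        if mean_pairwise_distance(shuffled) <= observed:
            hits += 1
    return observed, hits / n_perm
```

For example, the edge sets {a–b, b–c} and {a–b, c–d} share one of three distinct edges, giving a distance of 2/3.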

Results

Figure-tracking on the edge

Flies fixate a vertical bar under closed-loop feedback conditions (Götz, 1968). For a wide bar, fixation is bistable, with two peaks in the fixation histogram corresponding to the position of the bar's edges; the salient component of the figure is its edge rather than center of mass (Wehner and Flatt, 1972; Heisenberg and Wolf, 1984; Maimon et al., 2010; van Breugel and Dickinson, 2012). We extended those studies with a white-noise systems identification approach, the STAF, to analyze figure-tracking behavior (Aptekar et al., 2012). Comparing the STAFs reveals that the EM and FM responses are affected in a qualitatively different manner by figure width (Fig. 1C). Consistent with EM detection originating from the sum of local motion detector inputs (Single and Borst, 1998; Haag et al., 2004; Maisak et al., 2013), the EM STAF broadens with the wider figure that generates more motion energy (Fig. 1Ci,Cii). However, the FM STAF shows two prominent azimuthal peaks separated by the figure's width (Fig. 1Cii, arrowheads), indicating that the strongest steering effort to FM cues is produced when the edge is on midline. This supports the hypothesis that flies track the edge of a figure (Reichardt and Poggio, 1979), and goes substantially further to indicate that the salient cue for an edge is not provided by first-order Fourier motion energy, whose spatial average is maximal at the center of the figure, but rather by higher-order cues, like the edges or seams between flow fields.

Figure detection operates on a single edge (Fig. 1C), varies over the visual field (Theobald et al., 2008; Reiser and Dickinson, 2010; Aptekar et al., 2012; Bahl et al., 2013; Fox and Frye, 2014; Fox et al., 2014), and interacts with ground stabilization (Fei et al., 2010; Fox and Frye, 2014). We generated a stimulus set to examine the perceptual similarity of figures defined by one or both edges, and figures on moving grounds (Fig. 1D). We restricted our analysis to the challenging set of figures defined by relative motion, such that if the figure stops moving it is indistinguishable from the ground. For each experiment, we revolve a stimulus around the LED arena at constant velocity (Reiser and Dickinson, 2010; Bahl et al., 2013). We displayed a narrow figure (F), a proceeding edge (pE), and a receding edge (rE) on a stationary background, and also each on a counter-rotating ground (FG, pEG, and rEG). We included a ground-alone stimulus (G) and a theta figure (tF); an important stimulus for assessing the differential influence of the FM and small-field EM (sfEM) systems to figure-tracking (Zanker, 1996; Theobald et al., 2008; Aptekar et al., 2012). These eight stimuli (G, FG, rEG, rE, F, pE, pEG, tF) in two directions composed our 16 experiments (Fig. 1D). Our behavioral variable was the measured difference between left and right wing beat amplitude, ΔWBA (Götz, 1987), which is proportional to yaw torque generated by a tethered fly (Tammero et al., 2004).

We found that ground motion (G) elicits a canonical optomotor response (Fig. 1E; compare G and G′ conditions). A bar subtending <10% of the visual field sweeping across the ground in the opposite direction evokes a full reversal in steering as the animal turns to pursue the figure (Fig. 1E; compare G′ and FG′ conditions, and inset). Some very different stimuli, such as the pE and the Fourier figure (F), produce qualitatively similar behavioral responses (Fig. 1E; compare pE, F and pE′, F′ conditions).

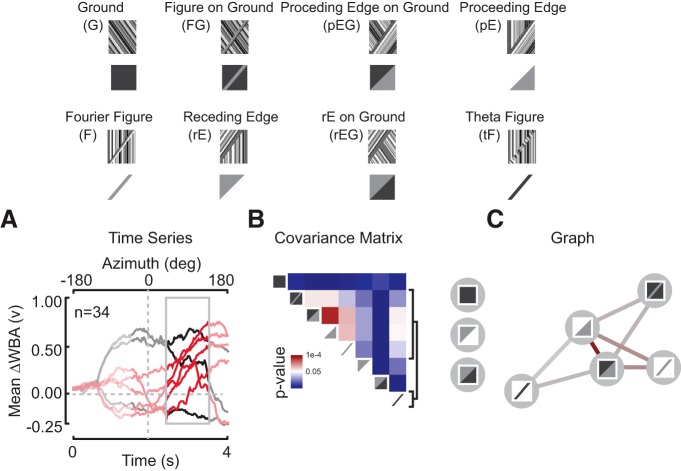

Behavioral responses define a visual feature space

To explore how dissimilar inputs map to similar behavioral responses, we measured the covariance across all steering responses within a one second sliding window over the stimulus interval (Fig. 2A). This provided a measure of behavioral response similarity across the different stimulus inputs, i.e., responses to perceptually indistinguishable stimuli covary significantly (p < 0.05; Fig. 2B, red), and responses to perceptually distinct inputs do not (p < 0.05; Fig. 2B, blue). To visualize the relationships across the stimulus set, we display the covariance matrix as a perceptual graph, PG (Fig. 2C; see Materials and Methods for details). We use the Fruchterman–Reingold algorithm (Fruchterman and Reingold, 1991) to anneal the PG and reveal clusters of behavioral responses (Fig. 2C). The PG reveals a window of time, defined by an edge passing through the frontal visual field (Fig. 2A, gray box), when the steering trajectories for many figure-like stimuli covary at a significant level and do not covary with the ground-alone condition (Fig. 2B,C). We find significant covariance between the F, tF, FG, pE, and pEG stimuli (Fig. 2B, red). These perceptually clustered stimuli all contain the leading edge of a figure. Receding edge (rE, rEG) stimuli did not elicit covariant steering responses with the FG stimulus. These analyses using simple constant velocity stimuli corroborate our white-noise STAF work (Fig. 1C; Aptekar et al., 2012, 2014; Fox et al., 2014): regardless of the presence or absence of a moving ground, flies perceptually cluster and track figures by a mechanism that detects the leading edge of a figure.

Figure 2.

Perceptual graph for the optomotor response of tethered Drosophila to figure–ground stimuli. A, Temporally aligned mean trajectories from Figure 1E for the eight counterclockwise stimulus conditions. The highlighted window corresponds to the analysis interval. The red trajectories correspond to the five stimuli that compose the linked elements of the PG at this time point. B, The covariance matrix at the specified time point. A p value of 0.05 is white. Brackets correspond to rows of the matrix that contain non-zero entries and correspond to stimuli in the perceptual graph. C, The PG for the wing steering behavior of the fly. Edges are drawn only for covariance values from B that are significant at a threshold set by p < 0.05. Edge color corresponds to the covariance values from B. Stimuli that reside outside the PG are indicated in a vertical stack.

Projection neurons of the fly lobula

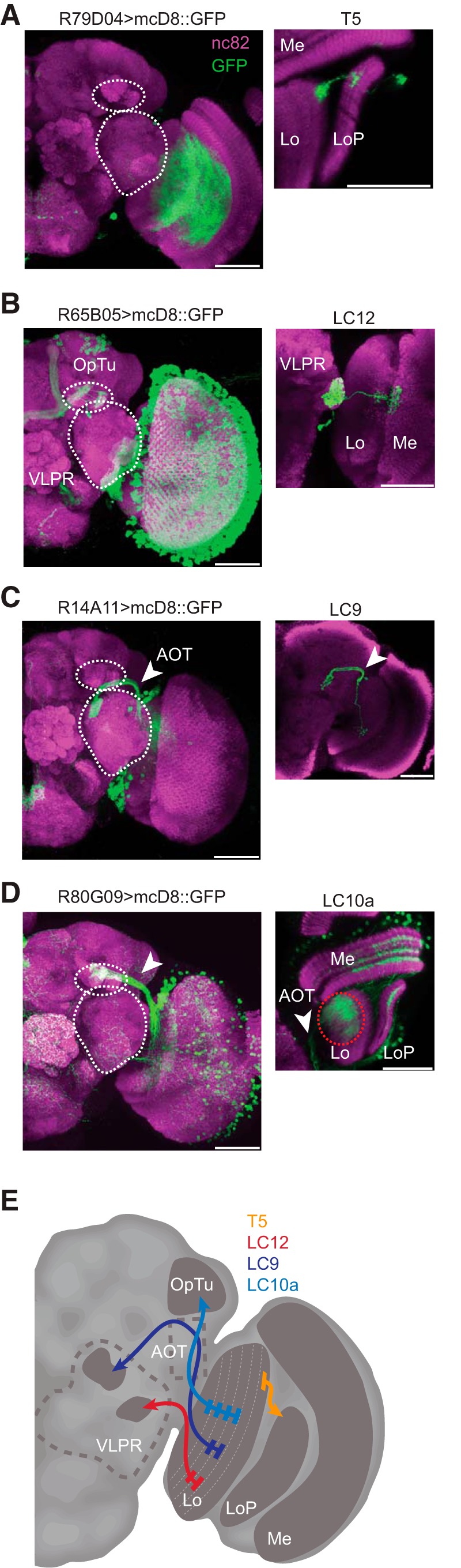

Within the lobula, a putative figure-coding region of the fly optic lobe, we recorded calcium responses from the dendrites of three cell classes projecting to distinct optic glomeruli (Otsuna and Ito, 2006; Strausfeld et al., 2007). LC12 is a columnar-type neuron that projects to the lateral portion of the ventrolateral protocerebrum (VLPR; Fig. 3B). LC9 is a columnar-type neuron that projects through the anterior optic tract (AOT) to the medial portion of the VLPR (Fig. 3C). LC10a is a columnar-type neuron that projects through the AOT to the medial portion of the optic tubercle (Fig. 3D). We include a schematic diagram illustrating the layer- and glomerular-specificity of each of these cell types (Fig. 3E).

Figure 3.

Anatomy of lobula projection neurons. All confocal images indicate nc82 neuropil staining in magenta and GFP in green. Scale bars, 50 μm. A, Whole-hemisphere labeling for R79D04 Gal4 line and single-cell example (inset) of a T5 neuron. B, Whole-hemisphere labeling for R65B05 Gal4 line and single-cell example (inset) of an LC12 neuron (the glomerular region shown contains projections from multiple cells, but only one of them is shown); white dashed circles bound the optic tubercle (OpTu) and VLPR. C, Whole-hemisphere labeling for R14A11 Gal4 line and single-cell example (inset) of an LC9 neuron (the glomerular region shown contains projections from multiple cells, but only one of them is shown). D, Whole-hemisphere labeling for R80G09 Gal4 line. We were unable to achieve single-cell labeling in this line, yet identified the LC10a subtype based on the axonal projections being confined to the medial part of the optic tubercle (arrowhead). E, A schematic diagram depicting the input layers of the lobula and the projection targets of each cell type imaged (Mu et al., 2012).

To facilitate comparison between figure detection and canonical wide-field motion vision, we imaged the dendrites of columnar T5 projections to the lobula plate (Fischbach and Dittrich, 1989; Joesch et al., 2010; Fig. 3A). T5 is selective for OFF edge-motion and its small-field columnar outputs are collated by wide-field lobula plate tangential cells (Buchner et al., 1984; Maisak et al., 2013).

Each set of cells (T5, LC12, LC10, and LC9/LC13) was genetically targeted with GAL4 lines (Jenett et al., 2012) to express GCaMP6m (Akerboom et al., 2012). In some cases the driver lines labeled additional cell types (see Materials and Methods), but the specificity of the driver within the lobula enabled us to use anatomical landmarks to record from individual cell types (Fig. 3).

Neural coding properties do not vary retinotopically for T5, LC12, LC9, or LC10a

Imaging preparations were shown a perspective-matched version of the stimulus from our behavioral assays (Fig. 1A,B; see Materials and Methods). The imaging region contained many local dendritic processes belonging to multiple individual columnar neurons (Fig. 4A). To test for neuronal responses that could underlie the figure–ground discrimination computations that we know flies make (Fig. 1E), we first recorded responses to a 30° bar sweeping across the azimuth of the LED display. The bar activates sequential retinotopic columnar elements as it sweeps across the azimuth (Fig. 1A, indicated with pseudocolor mask). We recorded from multiple ROIs, shown arranged in order along the nasal–temporal (n–t) axis (Fig. 4B, top). The duration of the GCaMP excitatory response corresponds with the width of the bar (30°). We did not identify any significant response variation along the n–t axis. We therefore collapsed the spatial dimension to pool responses across ROIs in time (Fig. 4B, bottom). All four classes of lobula projection neurons showed similar retinotopic homogeneity (Fig. 4); i.e., each columnar element of T5 responds to the passage of a Fourier bar in a similar way.

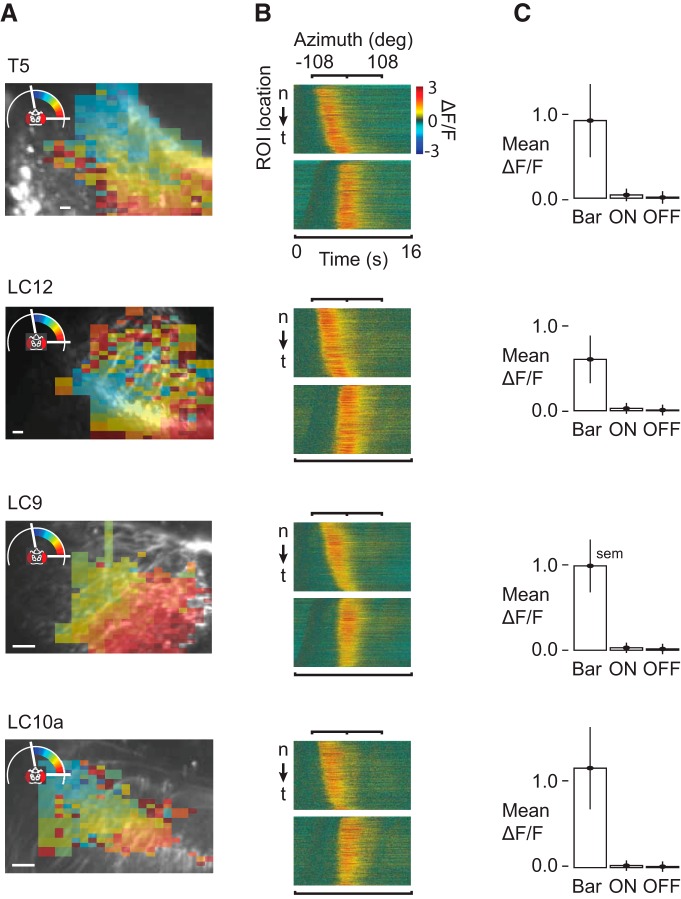

Figure 4.

Initial physiological classification. Each cell type tiles the visual field. Responses do not vary by retinotopic location. ON and OFF responses are very small compared with figure-motion responses. Cell types are T5, LC12, LC9, and LC10a (top-to-bottom). A, Raw image of dendritic arbors from an imaging trial for each cell type. Scale bar, 10 μm. Receptive field map of pruned ROIs from the trial depicted in the raw image with the receptive field center mapped to the color of the ROI and superimposed on the raw image. The pruned ROI mask was computed by recursively subdividing the image according to the mean luminance of each pixel (see Materials and Methods, Image processing). Average pixel size was 400–500 nm on a side. Minimum ROI size was set at 50 px, or ∼4 μm on a side; n = 5 flies (T5), n = 6 flies (LC12), n = 8 flies (LC9), n = 6 flies (LC10a). B, For each cell type, the top shows the raw timing of the response of each ROI to a front-to-back Fourier bar on a stationary ground (F stimulus). The bottom for each cell type shows the same responses following temporal alignment. All data were temporally aligned before undergoing further analysis. C, Each cell type shows a large response to the onset of motion within its receptive field relative to the response to lights-ON or lights-OFF. These cell types are configured to detect motion stimuli preferentially.

Throughout, we confined our measurements to the dendritic arbors of these cells. Although we did attempt some measurements from axonal terminals, there were no distinct responses. This may represent preaxonal filtering of the dendritic responses. However, the dendritic responses are likely important not only for axonal feedforward processing into the AOT and optic glomeruli of the central brain, but also for local dendrodendritic processing.

Lobula projection neurons do not respond with transient ON and OFF responses

We compared the mean ΔF/F response to switching the entire LED arena ON and OFF with the response to the onset of motion (Fig. 4C). The response amplitudes within lobula dendritic arbors to full-field ON and OFF were very small in each cell type (Fig. 4C), indicating that lobula projection neurons are strongly tuned to motion and other motion-derived features rather than transient flicker.
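For readers less familiar with the measure, ΔF/F expresses fluorescence as a fractional change from a baseline F0. The sketch below computes it for two made-up traces and compares peak amplitudes. The baseline window (the first four samples), the function names, and the numbers are hypothetical and chosen only to illustrate the comparison; they are not taken from the recordings.

```python
def delta_f_over_f(trace, baseline_samples):
    """Convert raw fluorescence to dF/F using a pre-stimulus baseline F0."""
    f0 = sum(trace[:baseline_samples]) / baseline_samples
    return [(f - f0) / f0 for f in trace]

def peak_response(trace, baseline_samples):
    """Largest absolute dF/F excursion after the baseline window."""
    dff = delta_f_over_f(trace, baseline_samples)
    return max(abs(v) for v in dff[baseline_samples:])

# Hypothetical raw traces (arbitrary units); the first 4 samples are baseline.
flicker_trace = [100, 100, 100, 100, 104, 102, 100, 100]  # full-field ON/OFF
motion_trace = [100, 100, 100, 100, 150, 180, 140, 100]   # motion onset

flicker_peak = peak_response(flicker_trace, 4)
motion_peak = peak_response(motion_trace, 4)
ratio = flicker_peak / motion_peak  # small ratio: flicker response is weak
```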

Calcium imaging reveals distinct figure–ground response profiles by lobula projection systems

We presented the eight stimuli in each of the two azimuthal directions in random order (Fig. 1E). The trajectory of average fluorescence signals demonstrates by visual inspection that each of the four lobula cell projections responds differently to the ensemble of 16 stimuli (Fig. 5A–D). For example, all four columnar cell types respond in a similar manner to the four bar figures on static ground, regardless of the direction of figure motion or the direction of coherent pattern motion within the figure window (Fig. 5A–D; F, F′, tF, tF′). By contrast, each cell type differentially filters figure–ground stimuli. For example, LC12 appears to be strongly selective for figures on static ground (Fig. 5B; F, tF, pE), whereas LC9 and LC10a each show strong transients to a passing edge regardless of background movement (Fig. 5C,D; pEG, rE, pE, and rEG).

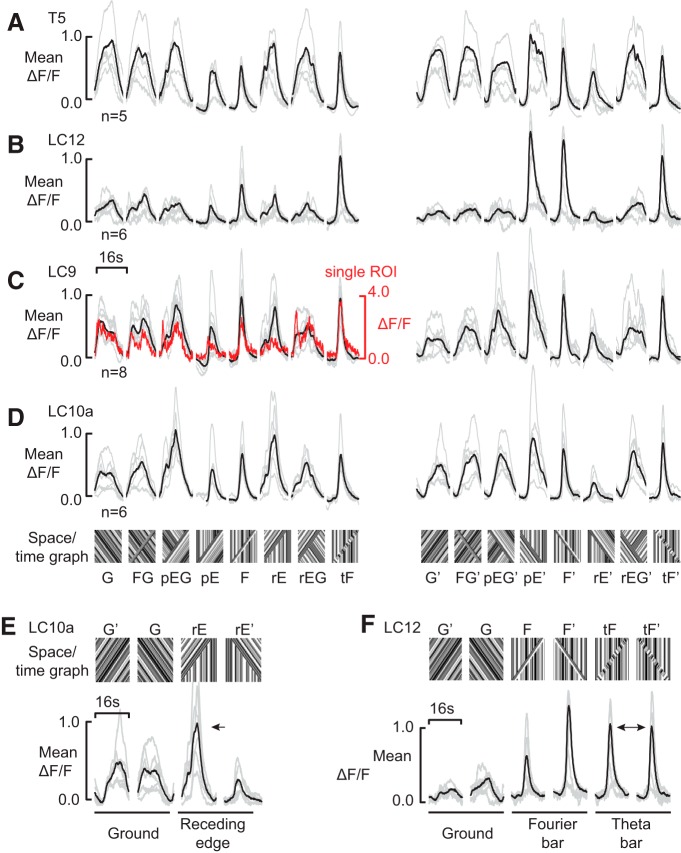

Figure 5.

Calcium imaging in LPNs reveals a diverse code for edge- and figure-motion. A, Mean of mean response by individual from the T5 line in black. Mean of individuals in gray. B, Mean of mean response by individual from the LC12 line in black. Mean of individuals in gray. C, Mean of mean response by individual from LC9 in black. Mean of individuals in gray. Trajectory from one exemplar ROI in red (99th percentile, ranked by the strength of the first principal component, where principal component analysis is performed on the response trajectory across all 16 stimulus conditions). D, Mean of mean response by individual from LC10a. Mean of individuals in gray. E, LC10a shows no differential activation in response to opposite directions of ground motion, but dramatic differential activation in response to opposite directions of the motion of a single receding edge. F, LC12 shows little differential activation for the two directions of ground motion, yet shows marked directional tuning to the two directions of passage of a Fourier bar, indicating a preference for back-to-front figure motion. It shows a near equal activation to the two directions of theta motion, for which the direction of motion of the figure window and the internal texture oppose.

For each neural subtype, there is substantial variation in the responses to our battery of stimuli. Within any single cell type, the parameterization of visual motion coding along the axes of figure or flicker coding is idiosyncratic. For example, LC10a shows no difference in response to the two directions of the ground-alone stimulus, but shows a very different response to the two receding-edge stimuli (Fig. 5E). This demonstrates that LC10a has a strong predilection for the non-EM components of the stimulus.

LC12 shows nearly identical responses to ground motion in each direction, whereas the two directions of Fourier bar motion elicit markedly different responses (Fig. 5F). In contrast, the theta bar responses are nearly identical. In combination, these results suggest a mechanism whereby the contributions of FM direction, EM direction, and size tuning interact directly.

Graph analysis of neural responses

These examples demonstrate the difficulty of either systematically evaluating pairwise responses within a particular neural subtype across stimulus-conditions or producing conceptual models for the underlying coding filters that these cells implement. The PG provides a method to consider all such possible pairwise comparisons between responses. We therefore repeated the behavioral time-varying covariance analysis (Fig. 2) for the responses of each cell type, treating each ROI as an independent sample to determine which cellular responses are homotopic to behavioral figure tracking.
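The general idea of building a graph from pairwise response similarity can be sketched in a few lines of Python. This is a simplified stand-in and not the analysis used in this study: it assumes one mean trace per stimulus, uses a windowed Pearson correlation with an arbitrary threshold of 0.9 in place of the time-varying covariance statistic defined in Materials and Methods, and all function names and traces are hypothetical.

```python
from itertools import combinations
from statistics import mean

def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is flat)."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / (vx * vy) ** 0.5

def window_graph(responses, start, width, threshold):
    """Edges between stimuli whose responses covary within one time window."""
    edges = set()
    for a, b in combinations(sorted(responses), 2):
        xa = responses[a][start:start + width]
        xb = responses[b][start:start + width]
        if pearson(xa, xb) >= threshold:
            edges.add((a, b))
    return edges

def clusters(labels, edges):
    """Connected components of the graph, as sorted lists of labels."""
    group = {label: {label} for label in labels}
    for a, b in edges:
        if group[a] is not group[b]:
            merged = group[a] | group[b]
            for member in merged:
                group[member] = merged
    unique = {frozenset(g) for g in group.values()}
    return sorted(sorted(g) for g in unique)

# Toy mean traces: three figure-like stimuli share a transient,
# whereas the ground-alone stimulus (G) does not.
responses = {
    "F":  [0, 0, 0, 1, 3, 1, 0, 0],
    "tF": [0, 0, 0, 1, 2, 1, 0, 0],
    "pE": [0, 0, 0, 2, 4, 2, 0, 0],
    "G":  [2, 2, 2, 2, 1, 2, 2, 2],
}
edges = window_graph(responses, start=2, width=5, threshold=0.9)
groups = clusters(list(responses), edges)
```

In this toy example F, tF, and pE form one cluster and G remains a singleton, which is the kind of grouping the PG is meant to expose. Sliding `start` along the trace would give one graph per time window.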

T5 is a local motion-detector

Consistent with the model that T5 composes a columnar array of motion-sensitive neurons (Buchner et al., 1984; Maisak et al., 2013), each with a finite receptive field, we found that T5 responded similarly to the entire stimulus set, and in particular was little perturbed by the passage of an edge or figure superimposed on a moving ground (Fig. 5A). Accordingly, the only significant clustering event that we observed was for the leading edge of tF, F, and pE stimuli (Figs. 6A, 7A), each of which is characterized by a delayed onset of motion through the receptive field, in contradistinction to the wide-field G, FG, pEG, and rEG stimuli that drive the receptive field continuously.

Note that although we are measuring responses from T5, and these cells have been shown to have directionally tuned responses at their axon terminals in the lobula plate, we measure these responses in the dendritic compartments of T5 within the lobula. Maisak et al. (2013) demonstrated that signals delivered from the dendrites of T4 and T5 cells are already directionally selective. However, directional selectivity cannot be imaged en masse because the directionally tuned dendrites are mixed within strata of the lobula.

LC12 is a ground-suppressed edge-detector

LC12 clusters figure stimuli on a stationary ground regardless of the direction of internal EM or figure direction (Figs. 6B, 7B). In contrast to a cell that shows size-selectivity via inhibition by wide-field ground motion, e.g., figure detecting (FD) neurons of the fly lobula plate (Warzecha et al., 1992), LC12 exhibits its largest amplitude response to a single edge, pE-type stimulus. Therefore LC12 is not size-tuned per se, but rather is more broadly sensitive to the onset of motion generated by an edge (Figs. 6B, 7B).

AOT neurons encode high-order optical disparities and figure-on-ground

Consistent with tuning to higher-order edge features of the visual scene that drive behavioral responses (Fig. 2), two cell groups that project through the AOT (LC9 and LC10a) show weak directional preference, yet strong responses to figures on both stationary ground and counter-rotating ground (Figs. 6C,D, 7C,D). Similar to LC12, both LC9 and LC10a demonstrated strong responses to the single-edge stimuli and thus are not size tuned per se, but rather respond to an approaching edge (Fig. 4). However, unlike LC12, both LC9 and LC10a respond to the edge progressing across a counter-rotating ground, and are not suppressed by ground motion (Fig. 5C,D).

The graph analysis demonstrates that both cell classes show more robust figure–ground selectivity than any known cell class in the fly motion pathway. Both LC9 (Figs. 6C, 7C) and LC10a (Figs. 6D, 7D) cluster the figure–ground stimulus along with the other figure-like stimuli, yet apart from the ground-alone stimulus. Although the LC9 and LC10a cell classes seem to respond to the entire stimulus ensemble, the cellular responses to any motion discontinuity generated by a leading edge, regardless of ground motion in either direction, covary with figure-alone stimuli, but not with ground-alone motion. Furthermore, sensitivity to the figure–ground stimulus is coupled with sensitivity to the receding edge (Figs. 6C, in LC9, and 7D, LC10a in the opposite direction), or the receding edge on counter-rotating ground (Figs. 6D, in LC10a, and 7C, in LC9). This suggests that the ability to distinguish figure–ground from ground-alone imparts sensitivity to a broad class of discontinuities in optic flow.

Figure–ground discrimination emerges from a hierarchy of conserved feature-detection motifs

We also note that the graph topology, meaning the set of edges between like stimuli, is conserved across individual flies (Fig. 6E). In the example from LC10a, several features predominate: across all individuals, the top row of the covariance matrix is generally empty, highlighting the very low level of covariance between the G condition and the others. A similar, highly conserved motif exists for the rEG stimulus across individuals. Per the Jaccard similarity metric described in Materials and Methods, we found that the PGs calculated for a single cell-type, calculated across individuals, were much closer to one another than would be expected at chance. For LC10a, p = 0.0009; LC12, p = 0.0067; T5, p = 0.0044; and LC9, p = 0.0290.
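To make the comparison concrete, the sketch below computes the Jaccard similarity between the edge sets of two graphs and estimates how often a similarity at least that large arises when stimulus labels are shuffled. The label-shuffling null model, the function names, and the two example graphs are assumptions for illustration only; the metric and statistical procedure actually used, including any correction for chance agreement, are those given in Materials and Methods.

```python
import random

def jaccard(edges_a, edges_b):
    """Jaccard similarity of two edge sets: |A & B| / |A | B|."""
    union = edges_a | edges_b
    if not union:
        return 1.0  # two empty graphs are treated as identical
    return len(edges_a & edges_b) / len(union)

def relabel(edges, mapping):
    """Apply a node relabeling to every edge of a graph."""
    return {frozenset(mapping[node] for node in edge) for edge in edges}

def permutation_p(edges_a, edges_b, labels, n_perm=2000, seed=0):
    """Fraction of label shuffles giving similarity >= the observed value."""
    rng = random.Random(seed)
    observed = jaccard(edges_a, edges_b)
    hits = 0
    for _ in range(n_perm):
        shuffled = labels[:]
        rng.shuffle(shuffled)
        mapping = dict(zip(labels, shuffled))
        if jaccard(edges_a, relabel(edges_b, mapping)) >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly positive.
    return (hits + 1) / (n_perm + 1)

def edge(a, b):
    return frozenset((a, b))

labels = ["G", "F", "tF", "pE", "rE", "FG", "pEG", "rEG"]
triangle = {edge("F", "tF"), edge("F", "pE"), edge("tF", "pE")}
fly_1 = triangle | {edge("pE", "pEG")}   # hypothetical PG, individual 1
fly_2 = triangle | {edge("F", "pEG")}    # hypothetical PG, individual 2

similarity = jaccard(fly_1, fly_2)       # 3 shared edges out of 5 in total
p_value = permutation_p(fly_1, fly_2, labels)
```

Jaccard distance is one minus this similarity, so graphs that share most of their edges are "near" one another.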

With respect to the Jaccard similarity between different cell types, we did not expect to find stark statistical differences between individual neurons of each cell type, because our hypothesis was that each cell type encodes figure-like stimuli in a similar, but progressively more complex way. As expected, the average pairwise distances between each cell type were uniformly shorter than chance with p = 0.01. However, for T5, LC9, and LC10a, the mean Jaccard distance for PGs from individual cells of a single type was shorter (nearer) than the mean Jaccard distance to PGs derived from any other type. For LC12, its nearest neighbor was the LC9 cell type, with which its response shares many similar features (Figs. 6, 7). These results demonstrate that while PGs are consistent across measurements within a single cell type, the PG of a given cell cannot be used in isolation to determine its identity relative to the other cell types imaged.

Discussion

We demonstrate that the optical disparity generated by the edge of a motion-defined figure elicits robust figure-tracking steering responses in tethered flight, regardless of opposing ground motion (Fig. 1). We then employ a novel analytical construct called a perceptual graph to visualize the flies' perceptual classification of diverse figure stimuli, and demonstrate that figure-like stimuli elicit behavioral responses similar to one another and distinct from ground alone (Fig. 2). Choosing from a set of distinct glomerular output targets from the lobula, a putative figure-sensitive region of the fly brain, we study four target cell types with distinct projection patterns (Fig. 3). Using calcium imaging (Figs. 4, 5) and the perceptual graph method, we compared the response patterns of these cell types to whole animal behavior (Figs. 6, 7). We found that passage of the leading edge through these cells' receptive fields elicited a single, marked clustering event in the PG. We found that this PG is highly conserved across individual recordings from different animals (Fig. 6); the topology of figure–ground discrimination in these LPNs is a characteristic property of each cell type. We found a set of conserved motifs in both behavior and neurophysiology demonstrating that figure–ground discrimination relies primarily on the detection and response to the edge of a figure (Fig. 8).

Figure 8.

Topological classification of cell types and behavior. A, Back-to-front, leading edge PGs (singleton clusters excluded) across surveyed cell types. Homologous subnetworks are colored to highlight persistence across PGs at higher levels of complexity. The figure–ground stimulus is highlighted in red. Note that the red edges are not necessarily between homologous stimuli. B, PG for behavior.

Feature detection persists over ground dynamics

The dynamics of figure and ground motion affect steering behavior in a spatial, visual-field-dependent manner (Theobald et al., 2008; Aptekar et al., 2012; Fox et al., 2014), and are particularly robust to the interference of uncorrelated ground motion (Fox et al., 2014). A figure occupying <10% of the total visual field fully reverses the steering response of the animal to counter-rotating ground (Fig. 1E; Buelthoff, 1981; Fox et al., 2014).

Behavioral responses to opposing figure–ground stimuli (Fig. 1E) therefore cannot be attributed to the responses of T5, which responds to ground motion and is little perturbed by a small counter-rotating figure (Fig. 5A), consistent with its role as a local columnar retinotopic elementary motion detector supplying the wide-field collating neurons of the lobula plate (Schnell et al., 2012). By contrast, the LPNs (LC12, LC9, and LC10a) demonstrate complex figure-selective responses despite superimposed ground motion (Fig. 5), consistent with the hypothesis that these lobula projection systems support higher-order feature detection demonstrated behaviorally (Mu et al., 2012). The segregation of each output to separate regions of the lateral protocerebrum suggests that this complexity, and diversity, of the response profiles from each of these higher-order detectors may play a separate, or parallel, role in behavior.

Figure–ground discrimination emerges from a hierarchy of conserved feature-detection motifs

Each cell type generated a unique PG upon entry of the leading figure edge into the receptive field (Figs. 6, 7). The subset of stimuli that are indistinguishable (perceptually clustered) is distinct for each of the four cell classes. By extension, each cell type encodes different features of the stimulus set.

Several motifs become apparent in the PGs across cell type. For both T5 and LC12, the PG produced a triangular simplex of covariance between the F, tF, and pE stimuli (Fig. 8A, blue links). This topological feature was then conserved upon the addition of the proceeding edge on ground stimulus to the PG of LC9 and LC12 to produce a tetrahedral PG (Fig. 8A, orange edges). This tetrahedral PG is represented within the clusters that add the figure–ground stimulus to LC9 and LC10a (Fig. 8A, red edges). The figure–ground detector cluster also displays sensitivity to the receding edge (LC9) or receding edge on ground (LC10a) stimuli (Fig. 8A, dark gray edges).
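In graph terms, the triangular and tetrahedral motifs are cliques of three and four mutually connected stimuli. The short sketch below enumerates such cliques by brute force, which is practical for a graph of only eight stimuli. The function name `cliques` and the example edge set are hypothetical and serve only to illustrate how a triangle can persist inside a tetrahedron; they do not reproduce the PG of any cell type.

```python
from itertools import combinations

def cliques(nodes, edges, size):
    """All fully connected subsets of `size` nodes, i.e. (size - 1)-simplices."""
    found = []
    for subset in combinations(sorted(nodes), size):
        if all(frozenset(pair) in edges for pair in combinations(subset, 2)):
            found.append(subset)
    return found

def edge(a, b):
    return frozenset((a, b))

nodes = ["G", "F", "tF", "pE", "pEG"]
# Triangle among F, tF, and pE ...
graph = {edge("F", "tF"), edge("F", "pE"), edge("tF", "pE")}
triangles_before = cliques(nodes, graph, 3)

# ... extended to a tetrahedron when pEG covaries with all three.
graph |= {edge("pEG", "F"), edge("pEG", "tF"), edge("pEG", "pE")}
triangles_after = cliques(nodes, graph, 3)
tetrahedra = cliques(nodes, graph, 4)
```

The original F, tF, pE triangle is still present among the four faces of the tetrahedron, which is the sense in which a lower-order motif is conserved within a higher-order one.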

This apparent “inheritance” of topology represents a modular and hierarchical construction of figure detection from feature primitives. First, the individual stimulus types are not treated independently by these cells. For each of these cell types, the responses to tF, F, and pE are essentially indistinguishable at the leading edge and therefore represent an indivisible perceptual unit for this set of cells. That topology emerges at the lowest levels, T5 and LC12, and is present at the higher levels, LC9 and LC10a. This persistence of primitives across cell types is consistent with a hierarchical organization of feature detectors underlying figure–ground discrimination. We do not intend to argue for common inputs to T5, LC12, LC9, and LC10a or serial connections per se, but rather that the information processing architecture of the lobula shows a characteristic and fundamental sensitivity to leading edges. This progression leads to a final clustering topology that is very similar to the behavioral clustering (Fig. 8B). In both behavior and lobula physiology, the encoding of a leading edge seems to be a computational prerequisite or, at the very least, an intrinsic component of figure–ground discrimination.

Edge-tracking may arise from an EMD-like mechanism

Motion discrimination of two directions of motion is encoded in at least several of the LPNs we surveyed, as well as in expansion-sensitive neurons in the lobula plate (De Vries and Clandinin, 2012). This challenges the hypothesis that figure detection arose from a single, ancient neural mechanism that is very brittle and could be easily perturbed or lost. Rather, we argue that figure–ground discrimination is an emergent property of the visual system's propensity to detect spatiotemporally coherent patterns of disparity. The elementary motion detector (EMD) is simply a special case of sensitivity to coherence in luminance disparity (increments or decrements in light input). Figure-detection may arise from a similar mechanism that takes as its input motion disparity; i.e., an edge where the direction of motion reverses. A future challenge will be to identify the equivalent of a reverse-phi-type stimulus for an edge motion detector to validate whether this edge-tracking mechanism in fact follows the computational structure of the EMD, or whether it is fundamentally different.

Emerging cellular models of feature detection

FD cells of the lobula plate identified in blowflies have narrow receptive fields, but are inhibited by wide-field motion (Egelhaaf, 1985; Warzecha et al., 1992, 1993; Liang et al., 2008) and so would not serve behavioral responses that track a figure moving across a counter-rotating ground (Fig. 1E). Furthermore, genetic silencing experiments have shown that animals with nonfunctional columnar T5 neurons show strongly compromised wide-field responses to OFF-edge motion, but essentially intact responses to a dark vertical bar on a uniform white ground, suggesting that the lobula plate is dispensable for basic figure detection behavior in Drosophila (Bahl et al., 2013; Maisak et al., 2013). However, the lobula plate circuitry of hoverflies is equipped for high performance feature detection, perhaps as an adaptation to use natural vertical contours to stabilize gaze to maintain a precise hovering posture between bouts of conspecific pursuit (Wiederman et al., 2008; O'Carroll et al., 2011; Lee and Nordström, 2012).

A growing body of research on the lobula from dragonflies (O'Carroll, 1993; Olberg et al., 2000) and hoverflies (Nordström and O'Carroll, 2006; Nordström et al., 2006) has described classes of intrinsic and projection neurons within the lobula specialized for detecting very small targets with marked luminance difference from the surround. These neurons are useful for models that describe prey capture or conspecific pursuit, but do not capture the phenomenology of figure–ground based edge detection or active visual fixation of a prominent vertical contour observed here (Fig. 1), which likely serve for navigation around plant stalks and gaps in the landscape.

Other lobula cell systems map retinotopic inputs recombined into glomerular outputs, an anatomical convergence suggesting that the lobula forms the nexus between sensation and feature coding (Strausfeld et al., 2006; Strausfeld and Okamura, 2007). Feature primitives have been found to be represented by the electrophysiological responses of glomerular projection neurons in Drosophila (Mu et al., 2012), and neurogenetic silencing of these cell classes produces defects in higher-order figure tracking behavior by tethered flies (Zhang et al., 2013).

The AOT is a prominent anatomical feature in many insects that projects from the lobula complex to the anterior portion of the lateral protocerebrum (Fischbach and Heisenberg, 1981; Fischbach and Lyly-Hünerberg, 1983). This study shows for the first time in Drosophila that the AOT is strongly implicated in higher-order feature coding, of the sort necessary for figure–ground discrimination (Figs. 6, 7). The congruence of this finding with work in other insects (Collett, 1971, 1972) argues that this architecture is both ancient and fundamental. We note also that the presence of a multiplicity of other similar outputs from the lobula (Fig. 8) may explain why substantial lesions to subsets of this neuropil do not result in profound optomotor deficits (Fischbach and Heisenberg, 1981). Parallel pathways of visual information allow for the sensitivity of flies to higher-order features and constitute a robust framework that cannot be easily perturbed by lesions. Future work should focus on refinement of stimuli to probe narrow sets of these filters, as well as measurement of cellular responses in animals that are actively engaged in figure-tracking behavior (Seelig et al., 2010; Weir et al., 2014).

Footnotes

M.A.F. is a Howard Hughes Medical Institute Early Career Scientist. This work was also supported by the US Air Force Office of Scientific Research (FA9550-12-1-0034) and the Edith Hyde Fellowship. J.W.A. is supported by the UCLA-Caltech Medical Scientist Training Program (NIH T32MH019384). We thank J. Seelig, V. Jayaraman, and T. Clandinin for technical advice on two-photon imaging.

The authors declare no competing financial interests.

References

- Akerboom J, Chen TW, Wardill TJ, Tian L, Marvin JS, Mutlu S, Calderón NC, Esposti F, Borghuis BG, Sun XR, Gordus A, Orger MB, Portugues R, Engert F, Macklin JJ, Filosa A, Aggarwal A, Kerr RA, Takagi R, Kracun S, et al. Optimization of a GCaMP calcium indicator for neural activity imaging. J Neurosci. 2012;32:13819–13840. doi: 10.1523/JNEUROSCI.2601-12.2012.

- Albatineh AN, Niewiadomska-Bugaj M. Correcting Jaccard and other similarity indices for chance agreement in cluster analysis. Adv Data Anal Classif. 2011;5:179–200. doi: 10.1007/s11634-011-0090-y.

- Aptekar JW, Shoemaker PA, Frye MA. Figure tracking by flies is supported by parallel visual streams. Curr Biol. 2012;22:482–487. doi: 10.1016/j.cub.2012.01.044.

- Aptekar JW, Keles MF, Mongeau JM, Lu PM, Frye MA, Shoemaker PA. Method and software for using m-sequences to characterize parallel components of higher-order visual tracking in Drosophila. Front Neural Circuits. 2014;8:130. doi: 10.3389/fncir.2014.00130.

- Baccus SA, Olveczky BP, Manu M, Meister M. A retinal circuit that computes object motion. J Neurosci. 2008;28:6807–6817. doi: 10.1523/JNEUROSCI.4206-07.2008.

- Bahl A, Ammer G, Schilling T, Borst A. Object tracking in motion-blind flies. Nat Neurosci. 2013;16:730–738. doi: 10.1038/nn.3386.

- Buchner E, Buchner S, Bülthoff I. Deoxyglucose mapping of nervous activity induced in Drosophila brain by visual movement. J Comp Physiol A Neuroethol Sens Neural Behav Physiol. 1984;155:471–483. doi: 10.1007/BF00611912.

- Buelthoff H. Figure-ground discrimination in the visual system of Drosophila melanogaster. Biol Cybern. 1981;41:139–145. doi: 10.1007/BF00335368.

- Clark DA, Bursztyn L, Horowitz MA, Schnitzer MJ, Clandinin TR. Defining the computational structure of the motion detector in Drosophila. Neuron. 2011;70:1165–1177. doi: 10.1016/j.neuron.2011.05.023.

- Collett T. Visual neurones for tracking moving targets. Nature. 1971;232:127–130. doi: 10.1038/232127a0.

- Collett TS. Visual neurones in the anterior optic tract of the privet hawk moth. J Comp Physiol A. 1972;78:396–433. doi: 10.1007/BF01417943.

- de Vries SEJ, Clandinin TR. Loom-sensitive neurons link computation to action in the Drosophila visual system. Curr Biol. 2012;22:353–362. doi: 10.1016/j.cub.2012.01.007.

- Egelhaaf M. On the neuronal basis of figure–ground discrimination by relative motion in the visual system of the fly. Biol Cybern. 1985;52:123–140. doi: 10.1007/BF00364003.

- Fechner GT. Elemente der psychophysik. In: Adler HE, translator. Reprint. New York: Holt, Rinehart and Winston, 1966; 1860. Elements of psychophysics.

- Fei H, Chow DM, Chen A, Romero-Calderón R, Ong WS, Ackerson LC, Maidment NT, Simpson JH, Frye MA, Krantz DE. Mutation of the Drosophila vesicular GABA transporter disrupts visual figure detection. J Exp Biol. 2010;213:1717–1730. doi: 10.1242/jeb.036053.

- Fischbach KF, Dittrich AP. The optic lobe of Drosophila melanogaster: I. A Golgi analysis of wild-type structure. Cell Tissue Res. 1989;256:441–475.

- Fischbach KF, Heisenberg M. Structural brain mutant of Drosophila melanogaster with reduced cell number in the medulla cortex and with normal optomotor yaw response. Proc Natl Acad Sci U S A. 1981;78:1105–1109. doi: 10.1073/pnas.78.2.1105.

- Fischbach KF, Lyly-Hünerberg I. Genetic dissection of the anterior optic tract of Drosophila melanogaster. Cell Tissue Res. 1983;231:551–563. doi: 10.1007/BF00218113.

- Fox JL, Frye MA. Figure-ground discrimination behavior in Drosophila: II. Visual influences on head movement behavior. J Exp Biol. 2014;217:570–579. doi: 10.1242/jeb.080192.

- Fox JL, Aptekar JW, Zolotova NM, Shoemaker PA, Frye MA. Figure-ground discrimination behavior in Drosophila: I. Spatial organization of wing-steering responses. J Exp Biol. 2014;217:558–569. doi: 10.1242/jeb.097220.

- Fruchterman T, Reingold E. Graph drawing by force directed placement. Softw Pract Exp. 1991;21:1129–1164. doi: 10.1002/spe.4380211102.

- Götz KG. Flight control in Drosophila by visual perception of motion. Kybernetik. 1968;4:199–208. doi: 10.1007/BF00272517.

- Götz KG. Course-control, metabolism and wing interference during ultralong tethered flight in Drosophila melanogaster. J Exp Biol. 1987;128:35–46.

- Haag J, Denk W, Borst A. Fly motion vision is based on Reichardt detectors regardless of the signal-to-noise ratio. Proc Natl Acad Sci U S A. 2004;101:16333–16338. doi: 10.1073/pnas.0407368101.

- Heisenberg M, Wolf R. Vision in Drosophila. Berlin: Springer; 1984.

- Jaccard P. Étude comparative de la distribution florale dans une portion des Alpes et des Jura. Bull la Société Vaudoise des Sci Nat. 1901;37:547–579.

- Jaccard P. The distribution of the flora in the alpine zone. New Phytol. 1912;11:37–50. doi: 10.1111/j.1469-8137.1912.tb05611.x.

- Jenett A, Rubin GM, Ngo TT, Shepherd D, Murphy C, Dionne H, Pfeiffer BD, Cavallaro A, Hall D, Jeter J, Iyer N, Fetter D, Hausenfluck JH, Peng H, Trautman ET, Svirskas RR, Myers EW, Iwinski ZR, Aso Y, DePasquale GM, et al. A GAL4-driver line resource for Drosophila neurobiology. Cell Rep. 2012;2:991–1001. doi: 10.1016/j.celrep.2012.09.011.

- Joesch M, Schnell B, Raghu SV, Reiff DF, Borst A. ON and OFF pathways in Drosophila motion vision. Nature. 2010;468:300–304. doi: 10.1038/nature09545.

- Kimmerle B, Egelhaaf M. Performance of fly visual interneurons during object fixation. J Neurosci. 2000a;20:6256–6266. doi: 10.1523/JNEUROSCI.20-16-06256.2000.

- Kimmerle B, Egelhaaf M. Detection of object motion by a fly neuron during simulated flight. J Comp Physiol A. 2000b;186:21–31. doi: 10.1007/s003590050003.

- Lee YJ, Nordström K. Higher-order motion sensitivity in fly visual circuits. Proc Natl Acad Sci U S A. 2012;109:8758–8763. doi: 10.1073/pnas.1203081109.

- Liang P, Kern R, Egelhaaf M. Motion adaptation enhances object-induced neural activity in three-dimensional virtual environment. J Neurosci. 2008;28:11328–11332. doi: 10.1523/JNEUROSCI.0203-08.2008.

- Maimon G, Straw AD, Dickinson MH. A simple vision-based algorithm for decision making in flying Drosophila. Curr Biol. 2008;18:464–470. doi: 10.1016/j.cub.2008.02.054.

- Maimon G, Straw AD, Dickinson MH. Active flight increases the gain of visual motion processing in Drosophila. Nat Neurosci. 2010;13:393–399. doi: 10.1038/nn.2492.

- Maisak MS, Haag J, Ammer G, Serbe E, Meier M, Leonhardt A, Schilling T, Bahl A, Rubin GM, Nern A, Dickson BJ, Reiff DF, Hopp E, Borst A. A directional tuning map of Drosophila elementary motion detectors. Nature. 2013;500:212–216. doi: 10.1038/nature12320.

- Malmersjö S, Rebellato P, Smedler E, Planert H, Kanatani S, Liste I, Nanou E, Sunner H, Abdelhady S, Zhang S, Andäng M, El Manira A, Silberberg G, Arenas E, Uhlén P. Neural progenitors organize in small-world networks to promote cell proliferation. Proc Natl Acad Sci U S A. 2013;110:E1524–E1532. doi: 10.1073/pnas.1220179110.

- Mu L, Ito K, Bacon JP, Strausfeld NJ. Optic glomeruli and their inputs in Drosophila share an organizational ground pattern with the antennal lobes. J Neurosci. 2012;32:6061–6071. doi: 10.1523/JNEUROSCI.0221-12.2012.

- Nordström K, O'Carroll DC. Small object detection neurons in female hoverflies. Proc Biol Sci. 2006;273:1211–1216. doi: 10.1098/rspb.2005.3424.

- Nordström K, Barnett PD, O'Carroll DC. Insect detection of small targets moving in visual clutter. PLoS Biol. 2006;4:e54. doi: 10.1371/journal.pbio.0040054.

- O'Carroll D. Feature-detecting neurons in dragonflies. Nature. 1993;362:541–543. doi: 10.1038/362541a0.

- O'Carroll DC, Wiederman SD. Contrast sensitivity and the detection of moving patterns and features. Philos Trans R Soc Lond B Biol Sci. 2014;369:20130043. doi: 10.1098/rstb.2013.0043.

- O'Carroll DC, Barnett PD, Nordström K. Local and global responses of insect motion detectors to the spatial structure of natural scenes. J Vis. 2011;11(14):20, 1–17. doi: 10.1167/11.14.20.

- Olberg RM, Worthington AH, Venator KR. Prey pursuit and interception in dragonflies. J Comp Physiol A. 2000;186:155–162. doi: 10.1007/s003590050015.

- Olveczky BP, Baccus SA, Meister M. Segregation of object and background motion in the retina. Nature. 2003;423:401–408. doi: 10.1038/nature01652.

- Otsuna H, Ito K. Systematic analysis of the visual projection neurons of Drosophila melanogaster: I. Lobula-specific pathways. J Comp Neurol. 2006;497:928–958. doi: 10.1002/cne.21015.

- Reichardt W, Poggio T. Visual control of orientation behavior in the fly. Q Rev Biophys. 1976;9:311–375. doi: 10.1017/S0033583500002523.

- Reichardt W, Poggio T. Figure-ground discrimination by relative movement in the visual system of the fly. Biol Cybern. 1979;35:81–100. doi: 10.1007/BF00337434.

- Reichardt W, Egelhaaf M, Guo A. Processing of figure and background motion in the visual system of the fly. Biol Cybern. 1989;61:327–345. doi: 10.1007/BF00200799.

- Reiser MB, Dickinson MH. A modular display system for insect behavioral neuroscience. J Neurosci Methods. 2008;167:127–139. doi: 10.1016/j.jneumeth.2007.07.019.

- Reiser MB, Dickinson MH. Drosophila fly straight by fixating objects in the face of expanding optic flow. J Exp Biol. 2010;213:1771–1781. doi: 10.1242/jeb.035147.

- Schnell B, Raghu SV, Nern A, Borst A. Columnar cells necessary for motion responses of wide-field visual interneurons in Drosophila. J Comp Physiol A Neuroethol Sens Neural Behav Physiol. 2012;198:389–395. doi: 10.1007/s00359-012-0716-3.

- Seelig JD, Chiappe ME, Lott GK, Dutta A, Osborne JE, Reiser MB, Jayaraman V. Two-photon calcium imaging from head-fixed Drosophila during optomotor walking behavior. Nat Methods. 2010;7:535–540. doi: 10.1038/nmeth.1468.

- Singh G, Memoli F, Ishkhanov T, Sapiro G, Carlsson G, Ringach DL. Topological analysis of population activity in visual cortex. J Vis. 2008;8(8):11, 1–18. doi: 10.1167/8.8.11.

- Single S, Borst A. Dendritic integration and its role in computing image velocity. Science. 1998;281:1848–1850. doi: 10.1126/science.281.5384.1848.

- Sperling G, van Santen JP, Burt PJ. Three theories of stroboscopic motion detection. Spat Vis. 1985;1:47–56. doi: 10.1163/156856885X00071.

- Strausfeld NJ, Okamura JY. Visual system of calliphorid flies: organization of optic glomeruli and their lobula complex efferents. J Comp Neurol. 2007;500:166–188. doi: 10.1002/cne.21196.

- Strausfeld NJ, Sinakevitch I, Okamura JY. Organization of local interneurons in optic glomeruli of the dipterous visual system and comparisons with the antennal lobes. Dev Neurobiol. 2007;67:1267–1288. doi: 10.1002/dneu.20396.

- Strausfeld NJ, Douglass JK, Campbell H, Higgins. Parallel processing in the optic lobes of flies and the occurrence of motion computing circuits. In: Warrant E, Nilsson DE, editors. Invertebrate vision. Cambridge, UK: Cambridge UP; 2006. pp. 349–398.

- Straw AD, Rainsford T, O'Carroll DC. Contrast sensitivity of insect motion detectors to natural images. J Vis. 2008;8(3):32, 1–9. doi: 10.1167/8.3.32.

- Tammero LF, Frye MA, Dickinson MH. Spatial organization of visuomotor reflexes in Drosophila. J Exp Biol. 2004;207:113–122. doi: 10.1242/jeb.00724.

- Theobald JC, Duistermars BJ, Ringach DL, Frye MA. Flies see second-order motion. Curr Biol. 2008;18:R464–R465. doi: 10.1016/j.cub.2008.03.050.

- Theobald JC, Shoemaker PA, Ringach DL, Frye MA. Theta motion processing in fruit flies. Front Behav Neurosci. 2010;4:35. doi: 10.3389/fnbeh.2010.00035.

- van Breugel F, Dickinson MH. The visual control of landing and obstacle avoidance in the fruit fly Drosophila melanogaster. J Exp Biol. 2012;215:1783–1798. doi: 10.1242/jeb.066498.

- Warzecha AK, Borst A, Egelhaaf M. Photoablation of single neurons in the fly visual-system reveals neural circuit for the detection of small moving-objects. Neurosci Lett. 1992;141:119–122. doi: 10.1016/0304-3940(92)90348-B.

- Warzecha AK, Egelhaaf M, Borst A. Neural circuit tuning fly visual interneurons to motion of small objects: I. Dissection of the circuit by pharmacological and photoinactivation techniques. J Neurophysiol. 1993;69:329–339. doi: 10.1152/jn.1993.69.2.329.

- Weber EH. Tastsinn und Gemeingefühl [The sense of touch and common feeling]. Handwörterbuch der Physiologie mit Rücksicht auf physiologische Pathologie. 1846:481–588. Band 3. Teil 2.

- Wehner R, Flatt I. The visual orientation of desert ants, Cataglyphis bicolor, by means of terrestrial cues. In: Information processing in the visual systems of arthropods. Berlin/Heidelberg: Springer; 1972. pp. 295–302.

- Weir PT, Schnell B, Dickinson MH. Central complex neurons exhibit behaviorally gated responses to visual motion in Drosophila. J Neurophysiol. 2014;111:62–71. doi: 10.1152/jn.00593.2013.

- Wiederman SD, Shoemaker PA, O'Carroll DC. A model for the detection of moving targets in visual clutter inspired by insect physiology. PLoS One. 2008;3:e2784. doi: 10.1371/journal.pone.0002784.

- Wilson RI, Turner GC, Laurent G. Transformation of olfactory representations in the Drosophila antennal lobe. Science. 2004;303:366–370. doi: 10.1126/science.1090782.

- Zanker JM. On the elementary mechanism underlying secondary motion processing. Philos Trans R Soc Lond B Biol Sci. 1996;351:1725–1736. doi: 10.1098/rstb.1996.0154.

- Zhang Y, Kim IJ, Sanes JR, Meister M. The most numerous ganglion cell type of the mouse retina is a selective feature detector. Proc Natl Acad Sci U S A. 2012;109:E2391–E2398. doi: 10.1073/pnas.1211547109.

- Zhang X, Liu H, Lei Z, Wu Z, Guo A. Lobula-specific visual projection neurons are involved in perception of motion-defined second-order motion in Drosophila. J Exp Biol. 2013;216:524–534. doi: 10.1242/jeb.079095.