Abstract

Introduction:

The collection of patient-reported outcomes (PROs) draws attention to issues of importance to patients, such as physical function and quality of life. The integration of PRO data into clinical decisions and discussions with patients requires thoughtful design of user-friendly interfaces that consider user experience and present data in personalized ways to enhance patient care. Whereas most prior work on PROs focuses on capturing data from patients, little research details how to design effective user interfaces that facilitate use of this data in clinical practice. We share lessons learned from engaging health care professionals to inform the design of visual dashboards, an emerging type of health information technology (HIT).

Methods:

We employed human-centered design (HCD) methods to create visual displays of PROs to support patient care and quality improvement. HCD aims to optimize the design of interactive systems through iterative input from representative users who are likely to use the system in the future. Through three major steps, we engaged health care professionals in targeted, iterative design activities to inform the development of a PRO Dashboard that visually displays patient-reported pain and disability outcomes following spine surgery.

Findings:

Design activities to engage health care administrators, providers, and staff guided our work from design concept to specifications for dashboard implementation. Stakeholder feedback from these health care professionals shaped user interface design features, including predefined overviews that illustrate at-a-glance trends and quarterly snapshots, granular data filters that enable users to dive into detailed PRO analytics, and user-defined views to share and reuse. Feedback also revealed important considerations for quality indicators and privacy-preserving sharing and use of PROs.

Conclusion:

Our work illustrates a range of engagement methods guided by human-centered principles and design recommendations for optimizing PRO Dashboards for patient care and quality improvement. Engaging health care professionals as stakeholders is a critical step toward the design of user-friendly HIT that is accepted, usable, and has the potential to enhance quality of care and patient outcomes.

Keywords: Human-centered design, Human Computer Interaction (HCI), Engagement, Visualization, Health Information Technology, Data Use and Quality, Information display, Formative evaluation, Patient-reported outcomes, Learning Health System, Patient-Centered Outcomes Research (PCOR)

Introduction

Incorporating the patient perspective into health care systems by capturing patient-reported outcomes (PROs) offers considerable potential to enhance patient outcomes and quality of care.1–3 PROs complement traditional clinical data by representing a patient’s assessment of symptoms, physical function, and health-related quality of life without interpretation from clinicians or caregivers.4–5 Growing evidence demonstrates that collecting patient-reported quality of life increases health care provider awareness of patients’ concerns,3,6 reduces symptom distress, and enhances patient-practitioner communication without increasing visit length.7–8 When patients share PROs with their health care team, patient satisfaction, perceptions of quality of life, and clinical outcomes improve.9–11 PROs are also important for learning health care systems and patient-centered outcomes research aimed at evaluating the effectiveness of treatments.12–13

Integrating PROs into health information technology (HIT) enhances health care delivery and research by monitoring longitudinal patient experience through electronic health records (EHR), patient portals, and other technological advances.14–16 Although considerable progress has been made in the electronic measurement and collection of PROs, far less attention has been paid to designing user-friendly interfaces that enable meaningful interaction with PROs once collected.17 With increased demand to incorporate patient-generated health data into HIT,18 effective user interfaces are necessary to present PROs in ways that empower health care professionals to use this data to improve health care quality and patient outcomes. Yet most prior work is limited to static reports for viewing,8,19–20 rather than interactive HIT that can scale to growing PRO data sets and allow a personalized view of data. We address this gap by engaging health care professionals through our statewide Surgical Care and Outcomes Assessment Program (SCOAP)21 in the development of a PRO Dashboard for spine care.

Washington state’s Comparative Effectiveness Research and Translation Network (CERTAIN) is a patient-centered outcomes research initiative that emerged from SCOAP, a clinician-led performance surveillance and quality improvement (QI) initiative.16,22–23 CERTAIN is Washington state’s learning health care system, a network of over 60 diverse health care provider organizations improving patient care through continuous evaluation of health care delivery, generation of evidence through research, and learning.16,23 CERTAIN is a suite of projects and programs that tracks quality, benchmarks best practices, drives improvement, and allows all health care stakeholders to have their input on system improvements heard. A key component of CERTAIN’s mission is to solicit and incorporate such input to ensure that CERTAIN projects and initiatives are meeting the needs of the real-world health care community. Since its inception, CERTAIN has built a stakeholder network and engagement infrastructure to solicit input from a variety of health care stakeholders in meaningful ways.16

Currently, CERTAIN is collaborating with Spine SCOAP, a module developed in 2011 that focuses on spine surgery performance.21 Spine SCOAP operates in 19 hospitals and captures clinical outcomes and PROs up to five years after surgery. PROs collected from patients undergoing lumbar or cervical spine surgery include the Numeric Pain Rating Scale (NRS),24 a general measure of pain, and two spine-specific measures of function: the Oswestry Disability Index (ODI)25 and the Neck Disability Index (NDI).26 The NRS rates pain intensity on a scale from 0 to 10, with higher scores reflecting greater pain. The ODI measures disability due to low back pain across 10 activities of daily living (e.g., personal care, lifting, sleeping) and is reported as a percentage, with higher percentages reflecting greater disability. The NDI is adapted from the ODI to assess disability due to neck pain.
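As a minimal sketch of the percentage scoring described above, the following Python function assumes the common convention that each of the 10 ODI items is scored from 0 to 5 and that the total is expressed as a percentage of the maximum possible score for the items actually answered. The function name and the handling of skipped items are illustrative assumptions, not Spine SCOAP's scoring code; applying it to the NDI assumes the NDI is also reported as a percentage.

```python
from typing import Optional, Sequence


def disability_index_percent(item_scores: Sequence[Optional[int]]) -> float:
    """Score a 10-item questionnaire (each item 0 to 5) as a percentage.

    Assumption: unanswered items are passed as None and are dropped from
    both the numerator and the maximum possible score.
    """
    answered = [s for s in item_scores if s is not None]
    if not answered:
        raise ValueError("no items answered")
    if any(not 0 <= s <= 5 for s in answered):
        raise ValueError("item scores must be between 0 and 5")
    max_possible = 5 * len(answered)  # 50 when all 10 items are answered
    return 100.0 * sum(answered) / max_possible


# A patient with item scores summing to 20 of 50 scores 40% (moderate disability).
print(disability_index_percent([2, 3, 1, 0, 4, 2, 3, 1, 2, 2]))
```

Higher percentages indicate greater disability, matching the direction of the NRS.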

As a framework for engaging representative users in the design of interactive HIT, we employed human-centered design (HCD)27 to optimize PRO Dashboards that leverage this growing data set. With stakeholder engagement from CERTAIN, this work expands upon prior research19 by examining the design of interactive visualizations. In this paper, we describe the HCD framework, illustrate our application of HCD to guide participatory development of PRO Dashboards with users, and share lessons learned and recommendations for future efforts to engage health care professionals in the design of user-friendly HIT.

Human-Centered Design (HCD)

HCD is a human factors framework that aims to make interactive systems more usable by focusing on use and usability through direct input from users (i.e., individuals who will use the system in the future).27 This participatory process tailors the design of interactive systems to the needs of users through iterative phases. In our work, “users” include health care administrators, providers, and clinical staff, and the “interactive system” is the PRO Dashboard. In general, we first apply formative methods, such as interviews and surveys, to understand the context of use, including the characteristics of users, their tasks, and the environment in which the system is used. This understanding informs the “user requirements” that the system must support to meet users’ needs. Based on those requirements, we then build prototype systems to test with users and determine how well they meet user requirements. It is common to iterate back to earlier phases to refine designs based on new information gathered through user input. Thus, HCD helps ensure that the developed system will be acceptable, usable, and responsive to users’ needs. HCD is not only highly iterative but also highly flexible, allowing selection of the methods most responsive to the refinements that emerge from user engagement. Prior work describes a range of methods that apply HCD in health care settings.28–31

HCD is an international standard27 with an established history of application in human factors, information science, and computer science research. Over the past decade, HCD has been increasingly applied to the development of HIT targeting both clinicians and patients. For example, human-centered principles have guided innovations in patient health records28 and other patient-centered technologies,29 user interfaces for clinical technology such as clinical decision support (CDS) systems and EHRs,30 and collaborative tools for shared decision-making, such as PRO Dashboards for prostate cancer.17 Johnson and colleagues present methods for iterative evaluation and design refinement of HIT interfaces, ranging from heuristic evaluation and cognitive walkthroughs to usability testing.31 HCD is vital in health care, where design flaws can limit HIT adoption29 and suboptimal interfaces can impede life-critical work.30,32 Thus, improving EHR usability33 and CDS interface design34 are recognized as key challenges. Human-computer interaction and related areas of user experience research are essential to presenting the complex data sets used in patient-centered research.35 Effective interface design is just as critical for emerging HIT, including PRO Dashboards.

Methods

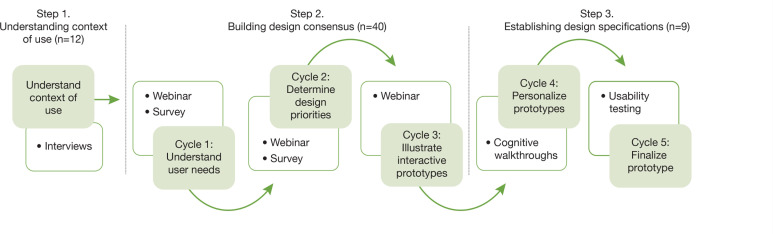

To optimize PRO Dashboards to the needs of users, we engaged a group of health care administrators, providers, and staff from health care sites that collaborate with CERTAIN as stakeholders in HCD activities over 15 months. We carried out this work in three major steps: (1) stakeholder interviews to understand the context of PRO Dashboard use, (2) group-based iterative design to build consensus on the design concept of PRO Dashboards, and (3) iterative design with individual users to establish design specifications for PRO Dashboard implementation (Figure 1). Through this process we examined stakeholder perceptions about integrating PROs into clinical practice and elicited their design preferences for dashboards that facilitate use of PROs in patient care and QI activities. The outcome of these steps is a functional prototype for implementation of PRO Dashboards designed in collaboration with stakeholders to meet user requirements. This research was approved by the University of Washington Institutional Review Board.

Figure 1.

Process for Engaging Stakeholders in the Human-Centered Design (HCD) of PRO Dashboards

Stakeholder Interviews (Step 1)

To understand the context in which PRO Dashboards will be used, we invited a convenience sample of health care administrators, providers, and staff to participate in semistructured interviews eliciting perceptions about incorporating PROs into clinical practice and QI initiatives. Site coordinators conducted outreach to request stakeholder participation. Interviews lasted approximately one hour and focused on perceived benefits and barriers of leveraging PRO data to inform patient care and QI. Specifically, stakeholders were asked to respond to questions including “What do you see as the promise or potential benefit from leveraging PROs in the future?” and “What barriers or risks to your practice do you see from collecting or leveraging PRO data?” They were also asked to describe any tools they already use that incorporate PROs and professional decisions they encounter in which PROs would be useful. Recruitment continued until thematic saturation was reached. We audio recorded and transcribed interviews for qualitative analysis.36 In this paper, we report emergent themes that ground our HCD to establish the context of use for PRO Dashboards.

Group-Based Iterative Design (Step 2)

We conducted three cycles of group-based iterative design to build design consensus on PRO Dashboards. Each cycle involved specifying user requirements through use case scenarios, developing prototype PRO Dashboards based on those requirements, and evaluating prototypes with stakeholders. In the first cycle, we sought to understand user needs by evaluating scenarios and prototypes with stakeholders through an online webinar and follow-up survey. We designed brief use case scenarios that were general enough for participants to identify with and that distinguished use of PROs for patient care from use for QI activities. We then designed prototypes to illustrate functionality supporting these different roles and tasks.

Evaluations elicited stakeholder interest in using PROs, expectations for effective PRO reports, including data views (e.g., trending PROs over time versus comparing PROs at specific time points within and across clinics), and key functionality (e.g., support for patient care versus QI). In subsequent cycles, we used stakeholder feedback to refine the scenarios and prototypes to establish design priorities (cycle 2), which we illustrated through interactive prototypes (cycle 3). Because stakeholders are distributed across Washington state, we chose online methods that enable remote participation, including hour-long webinars (i.e., online web conferences with content presentation and collaborative discussion) conducted with “Adobe Connect,”37 and follow-up surveys conducted with the “Catalyst WebQ” online survey platform.38

Iterative Design with Individual Users (Step 3)

To establish design specifications for implementation, we engaged individual stakeholders from CERTAIN in two more design iterations. Our goal was to operationalize design input obtained in Steps 1 and 2 into a functional prototype that we refined through cognitive walkthroughs (cycle 4) and usability testing (cycle 5). We used cognitive walkthroughs31,39 to examine PRO Dashboard ease of use and small-scale usability testing31 to evaluate prototype performance on representative tasks. In our cognitive walkthroughs, we asked participants to step through a personalized PRO Dashboard, framed as a “progress report,” that displayed PROs collected from their own patients. Participants then described how easy they found the reports to use and how the design of the report could be improved. We then incorporated stakeholder feedback into a functional prototype for usability testing, in which participants completed four tasks: (1) review PROs across a panel of patients, (2) evaluate treatment options for a new patient based on outcomes reported by other patients, (3) estimate the quality of PRO data, and (4) share PROs with team members, including patients.

We recruited providers with patient care and QI experience to take part in individual sessions. We met with some participants in person and met remotely with others through “Adobe Connect.”37 In the interactive webinars (Step 2), the research team acted as presenter in “Adobe Connect,” controlling the content that participants viewed and responded to verbally. In the remote usability sessions, by contrast, participants were assigned the presenter role so that the research team could observe their use of the prototype. The outcome of Step 3 was a final design specification for implementation of a tested functional prototype.

Participants

We conducted HCD activities with a convenience sample of health care professional stakeholders from February 2013 through May 2014. In Step 1, we conducted 12 semistructured interviews with stakeholders from different health care sites that collaborate with CERTAIN. Participants included health care administrative staff (n = 6) and health care providers (n = 6). These stakeholders were drawn from small independent practices, community hospitals, large academic medical centers, and networked health systems that use different EHRs, so that we could incorporate perspectives on scaling PRO Dashboards across diverse technical, organizational, and physical environments. In Step 2, we conducted three cycles of group-based iterative design with a total of 40 health care administrators, providers, and staff taking part in webinars and surveys. The majority of these participants had not taken part in Step 1. We obtained stakeholder feedback during each iterative cycle, which drove increasing detail and complexity, from static to interactive prototype design. Finally, in Step 3, we engaged nine provider stakeholders in two additional cycles of iterative design to finalize design specifications. The majority of these providers had not taken part in Step 2. We obtained input through cognitive walkthroughs with personalized prototypes and usability testing with an interactive functional prototype.

Results

We describe findings for each iterative step of our HCD process to understand the context of PRO dashboard use from interviews (Step 1), to build consensus on PRO Dashboard design from design groups (Step 2), and to establish design specifications for a functional PRO dashboard prototype from individual user testing (Step 3).

Understanding the Context of PRO Dashboard Use (Step 1)

Stakeholder interviews revealed contextual factors for design, including characteristics of target users, tasks, and environment for PRO Dashboards. Based on discussion of perceived benefits and barriers of PRO use in patient care and QI activities, two primary groups were evident as key target users of PRO Dashboards: health care providers and administrative staff. We describe three broad categories that emerged from thematic analysis to guide design of PRO Dashboards below: PRO data needs, obstacles to PRO use, and opportunities for integration of PROs into practice.

PRO Data Needs

Stakeholders reported active attempts to leverage PROs in professional practice and expressed strong interest in the development of tools for capturing, managing, and reporting PRO data. They perceived that long-term collection of PROs is necessary to achieve value, but that existing EHR infrastructure is ill equipped for this because systems are constantly updated and replaced. Continuity of information, with access regardless of EHR system, emerged as an important need. With increasing expectations to incorporate PROs into practice, stakeholders supported building such tools into practice workflow. In addition, stakeholders thought a secondary, and perhaps larger, challenge would involve learning how to use tool outputs to change behavior and practice. For example, one health care provider told us, “With other colleagues, some will have more flexibility than others with adopting new tools. Some have no allotment for new things. Others are very open with the use of new tools. There’s going to be a lot of variation correlated with ‘openness’ to other areas of their practice.” (Participant 6)

Thus, tools for integrating PROs into practice, such as PRO Dashboards, should be not only accessible regardless of EHR but also usable across a range of users. In particular, PRO data needs appear to differ for health care providers and administrative staff as key target users. Health care providers expressed the need for PRO data to support patient care activities, such as patient counseling, decision support, and analysis outside patient visits. For example, one health care provider envisioned viewing his patients’ PROs alongside regional or national data sets “for follow-ups where everything is measured and compared, just like Zillow.com” (Participant 5). In contrast, health care professionals with QI duties, including both administrative staff and providers, expressed the need for PRO data to prioritize provider, staff, and physical resources, for example by mapping aggregate PROs to clinical outcomes, utilization, and financial data. For example, one health care administrator told us that she wanted to use PROs for “analysis in aggregate” but that the PRO data currently collected was used “only for patient care.” (Participant 10)

Obstacles to Integration of PRO Data

Although stakeholders found value in integrating PROs into practice, they reported concerns about barriers to effectively using PRO data for patient care, including fragmented tools that slow or impede workflow, misaligned incentives, and change management concerns. In particular, tools that slow clinical workflow were thought to result in limited adoption and minimal incorporation into practice. Even if PRO tools were adopted, using them to improve and monitor practice, and ensuring transparency about data quality, were thought to present major hurdles.

Several participants were wary of what the data might show and how their practice might change. For example, one participant commented on the need for a “safe harbor environment…clinicians and administrators are starting to get nervous about how the information will get shared publicly…the walls have started going up… and this may prevent the good that can come from the overall effort.” (Participant 11) Another participant told us, “The big part we are missing is having an action plan to act with the data, it does not come with a script. The institution is left to interpret and define corrective measures.” (Participant 5) Yet, value was found in developing meaningful ways to report on this new source of data: “Seeing the report is going to be the driver of change whether you are an underperformer or middle of the pack.” (Participant 7) These perspectives highlight the importance of providing guidance on the interpretability and use of PROs in ways that are meaningful and supportive to both patient care and QI initiatives within an organization.

Opportunities for Integration of PROs into Practice

Despite anticipated obstacles, stakeholders voiced enthusiasm for a number of opportunities for PROs to enhance health care practice, outcomes, and health care system marketing. For instance, a health care administrator told us, “One thing we need to do in the future is educate staff on how to communicate to patients the value of this information and collections—what is the benefit/value to them.” (Participant 10) Access to data quality indicators was also thought to be important for future adoption of PRO systems. Interactive interfaces that scale to transparently illustrate data trends and quality metrics could spur PRO use. Stakeholders also perceived a number of clinical targets that PROs could help improve. For instance, one health care provider told us that “Obesity, smoking, and diabetes will have the greatest immediate impact.” (Participant 8) Lessons learned from these clinical targets could help inform the use of PROs in other areas.

Findings from Step 1 established our understanding of the context and uses of PROs. These findings grounded our focus on supporting the needs of health care administrators and providers, in particular for (1) patient care and (2) QI efforts. We learned that our design solution must scale for accessibility across diverse clinical settings regardless of EHR and be usable by individuals with varied workflows and experience. Providing guidance through transparent and intuitive interfaces was seen as particularly important for adoption of tools like PRO Dashboards. Based on this foundation, we next moved to Step 2 to build consensus on PRO Dashboard design through design groups.

Building Consensus on PRO Dashboard Design (Step 2)

We obtained stakeholder feedback through three iterations of group-based design. This feedback drove design refinements that increased detail and complexity of prototypes from sample wireframes (cycle 1), to static mock-ups (cycle 2), to interactive dashboards (cycle 3). We summarize key design recommendations that emerged from each cycle in Table 1.

Table 1.

Key Recommendations for Designing PRO Dashboards from Design Cycles in Step 2

| | Patient Care Functionality | QI Functionality |
|---|---|---|
| Key support | Use PROs to assess patient progress, counsel patients, and understand treatment effectiveness. | Use PROs to enhance patient satisfaction and establish benchmarks for care quality. |
| Cycle 1. Sample wireframes | Provide patient-level views to monitor individual PROs. Provide provider-level views to monitor PROs for panels of patients. | Provide clinic-level views to compare aggregate PROs over time. Provide institution-level views to compare aggregate PROs across sites. |
| Cycle 2. Static mock-ups | Provide predefined timelines that chart patient progress to counsel patients during visits. Provide interactive analytics to dynamically explore individual patient data outside visits. | Provide predefined snapshots of aggregated patient data at various endpoints to monitor care quality. Provide support for interactive analytics to dynamically explore aggregate patient data. |
| Cycle 3. Interactive prototypes | Provide dynamic patient- and provider-level data for deep interaction. Provide printable PRO reports to share with individual patients. | Provide dynamic clinic- and institution-level data views for deep interaction. Provide quarterly PRO snapshots of aggregate patient data. |

Design Cycle 1: Sample Wireframes

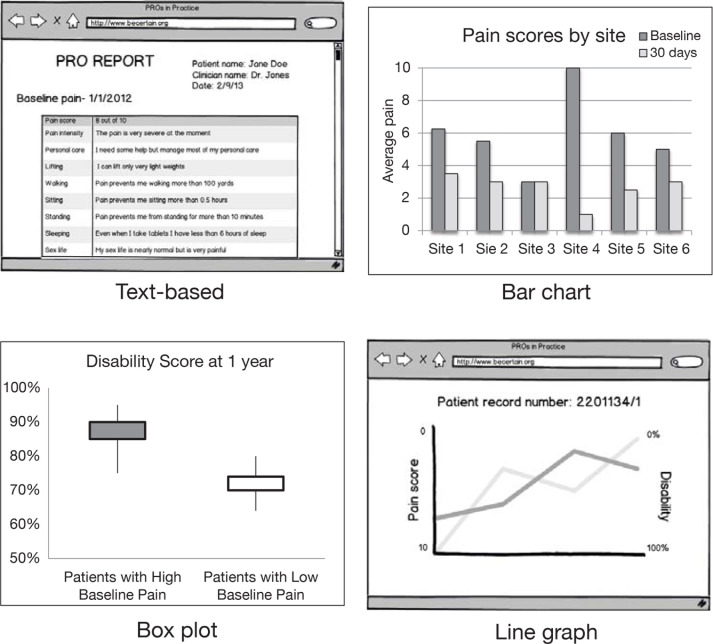

To understand user needs for PRO Dashboards, we created two use case scenarios, one to represent patient care tasks and one for QI tasks (Table 2). Based on these scenarios, we mocked up sample wireframes, which are simple black and white drawings of possible user interfaces to support the tasks illustrated in the scenarios (Figure 2). The wireframes span different visual approaches for presenting spine surgery PROs (i.e., NRS, ODI, NDI).

Table 2.

Use Case Scenarios

| ‘Patient Care’ Use Case Scenario | ‘Quality Improvement’ (QI) Use Case Scenario |
|---|---|
| Dr. Jones is seeing a patient for a 12-month surgery follow-up. The patient has reported pain and disability outcomes prior to surgery and, following surgery, at 30 days, 6 months, and 1 year. Dr. Jones wants to share this data with the patient during the follow-up visit to discuss changes in outcomes since surgery. | John is a hospital administrator who wants to compare outcomes of patients who had different levels of pain before surgery. He has available PROs that include scores for low back pain at baseline before surgery, and at 30 days, 6 months, and 1 year following surgery for all patients who had surgery at his hospital for the past 5 years. |

Figure 2.

Wireframes for Design Cycle 1

Notes: Wireframes for design cycle 1 illustrate different approaches for displaying PROs in user interfaces, including a text-based PRO report that lists an individual patient’s selections for items making up their overall pain score (upper left), a bar chart that compares average pain scores for patients across six health care sites (upper right), a box plot that compares disability scores for patients with high and low baseline pain (lower left), and a line graph that illustrates trends in a patient’s pain and disability scores over time (lower right).
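To illustrate the comparison behind the box plot wireframe (lower left) and the QI scenario in Table 2, the sketch below splits patients by baseline NRS and computes, for 1-year ODI in each group, the five values a box plot draws. The record layout, the cutoff of 7 for “high” baseline pain, and the names `PatientOutcome` and `compare_by_baseline_pain` are hypothetical choices for illustration, not part of the prototypes.

```python
import statistics
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


@dataclass
class PatientOutcome:
    patient_id: str
    baseline_nrs: int          # pain before surgery, 0 to 10
    odi_1yr: Optional[float]   # ODI percentage at 1 year; None if not reported


def five_number_summary(values: List[float]) -> Dict[str, float]:
    """Minimum, quartiles, and maximum: the statistics a box plot draws."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {"min": min(values), "q1": q1, "median": median, "q3": q3, "max": max(values)}


def compare_by_baseline_pain(records: Iterable[PatientOutcome],
                             high_pain_cutoff: int = 7) -> Dict[str, Dict[str, float]]:
    """Split patients by baseline NRS (hypothetical cutoff) and summarize 1-year ODI."""
    groups: Dict[str, List[float]] = {"low baseline pain": [], "high baseline pain": []}
    for r in records:
        if r.odi_1yr is None:
            continue  # no 1-year report: excluded from the comparison
        key = "high baseline pain" if r.baseline_nrs >= high_pain_cutoff else "low baseline pain"
        groups[key].append(r.odi_1yr)
    # quantiles() needs at least two values, so very small groups are omitted
    return {k: five_number_summary(v) for k, v in groups.items() if len(v) >= 2}
```

Omitting tiny groups, rather than plotting them, anticipates the minimum sample size concern stakeholders raised in later design cycles.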

Feedback from the webinar and survey began to build consensus on design features for effective reporting of PROs to support both patient care and QI needs. Stakeholder interest in using PROs aligned with their professional role, supporting differentiated needs according to context of use. For example, when asked what interests them most in using PROs, one provider told us, “to accurately measure the severity of pain and disability among my patients,” whereas an RN abstractor told us, “We would use this for quality improvement…we want to know why patients return to the hospital after their initial surgery.” Thus, stakeholders envisioned different data views to support patient care and QI functionality. First, providers expressed interest in data views for patient care that illustrate PROs for individual patients (“patient level”) and for panels of patients (“provider level”) to help them monitor their personal practice and engage in shared decision-making with patients. Stakeholders recommended that patient care functionality help providers assess patient progress, counsel patients, and understand treatment effectiveness in the patients they care for.

In contrast, stakeholders expressed interest in data views for QI that illustrate PROs aggregated across patients to help administrators and staff examine performance indicators for spine surgery at the clinic level and institution level (e.g., hospitals, medical centers, networked health systems). Recommended QI functionality included support for enhancing patient satisfaction and establishing benchmarks to compare surgery types, surgeons, and institutions. Overall, interest was high for viewing key comparisons, such as aggregate PROs over time (i.e., pre-op versus post-op) by provider, clinic, or institution. Thus, we examined different visual formats for illustrating these types of data views in the next cycle.

Design Cycle 2: Static Mock-ups

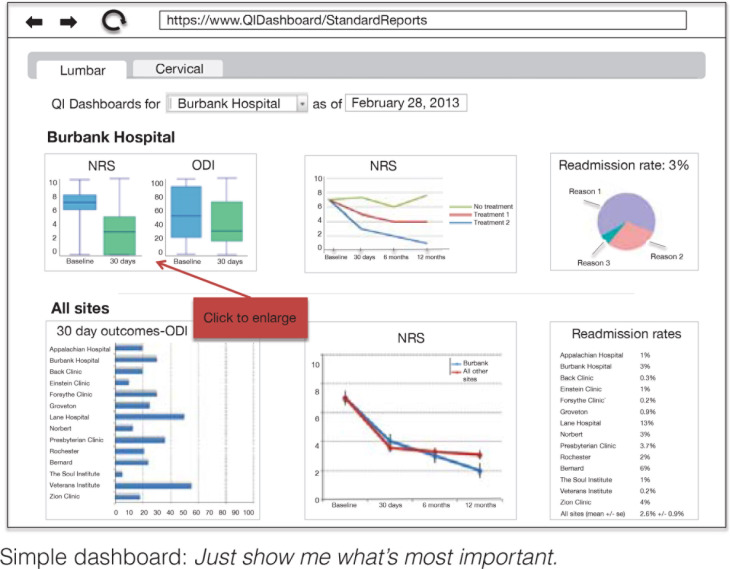

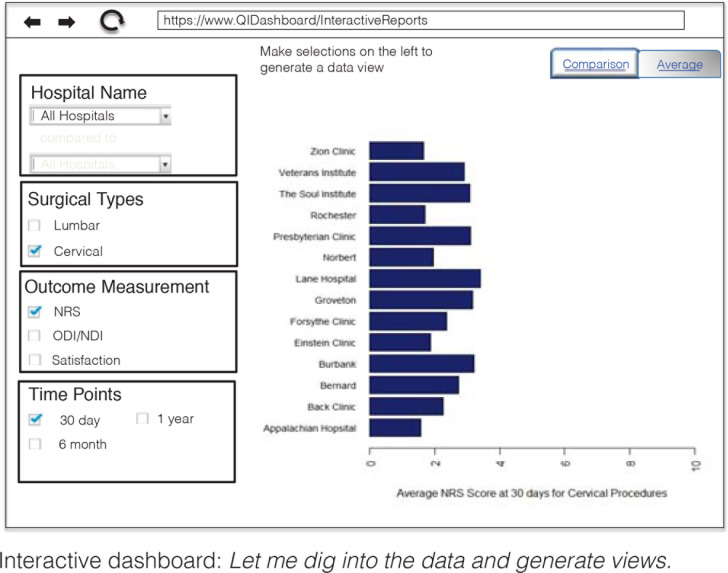

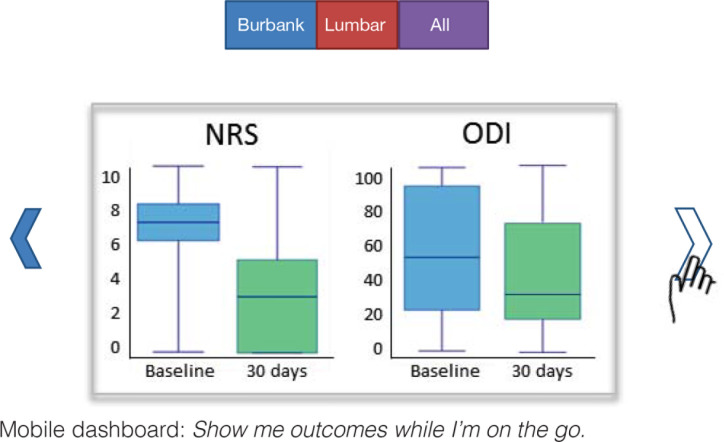

To establish design priorities for PRO Dashboards, we used the feedback obtained in design cycle 1 to refine our prototypes with data views of interest to stakeholders. We were particularly interested in how much granularity was desired (e.g., a quick glance at predefined views or a deep dive to build customized views) and where dashboards would be accessed (e.g., in a web browser while seated in an office, or on a mobile device in the flow of patient visits or meetings). Thus, we prepared mock-ups as static images to illustrate three alternative dashboard styles: (1) simple dashboard, (2) interactive dashboard, and (3) mobile dashboard (Appendix A). The simple dashboard has limited interactivity but allows for quick predefined overviews at a glance with minimal user effort. Users select a procedure of interest (i.e., cervical or lumbar surgery), institution, and date to view premade graphs of associated PROs. In contrast, the interactive dashboard enables the user to “dive into” PRO analysis with granular data filters to dynamically generate custom-tailored views. Compared to these web-based prototypes, the mobile dashboard is designed for users to access PROs on smartphones or tablets while on the go. In our webinar and survey, we sought feedback on stakeholder preferences and perceptions about the fit of each dashboard style to the patient care and QI contexts represented in our use case scenarios.
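The difference between the simple and interactive styles can be sketched as a single filter function: a simple dashboard would expose only procedure, institution, and date, while an interactive dashboard would expose further, more granular filters. The `ProRecord` layout, the smoking-status field, and the function name below are hypothetical; this is a sketch of the idea, not the prototypes' implementation.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class ProRecord:
    """One patient-reported score (hypothetical record layout)."""
    patient_id: str
    procedure: str                 # "lumbar" or "cervical"
    institution: str
    surgery_date: date
    measure: str                   # "NRS", "ODI", or "NDI"
    timepoint: str                 # e.g., "baseline", "30d", "6mo", "1yr"
    score: float
    smoker: Optional[bool] = None  # example clinical parameter


def filter_records(
    records: Iterable[ProRecord],
    procedure: Optional[str] = None,
    institution: Optional[str] = None,
    start: Optional[date] = None,
    end: Optional[date] = None,
    measure: Optional[str] = None,
    smoker: Optional[bool] = None,
) -> List[ProRecord]:
    """Keep records matching every filter that is set (None means 'any').

    Simple style: procedure, institution, start/end only.
    Interactive style: any combination, including measure and smoking status.
    """
    selected = []
    for r in records:
        if procedure is not None and r.procedure != procedure:
            continue
        if institution is not None and r.institution != institution:
            continue
        if start is not None and r.surgery_date < start:
            continue
        if end is not None and r.surgery_date > end:
            continue
        if measure is not None and r.measure != measure:
            continue
        if smoker is not None and r.smoker != smoker:
            continue
        selected.append(r)
    return selected
```

Keeping both styles on one filter function means the predefined views are simply fixed combinations of the same filters that power users set freely.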

Feedback from the webinar and survey revealed preferences for dashboard style, as well as for parameters of data views, including quality indicators, data comparisons, and unit of PRO change over time. Most stakeholders could not envision the need for mobile access for either patient care or QI contexts, and thus preferred the web-based dashboards. They agreed that the predefined views in the simple prototype could meet the needs of most users, whereas the interactive prototype would be of most use to “power users” who wish to dynamically explore the data. For example, one provider thought that a simple predefined timeline of individual-level PROs could facilitate patient counseling following surgery (i.e., patient care scenario), and an administrator thought that predefined quarterly snapshots of PROs aggregated by clinic or institution could help with regular monitoring of quality of care (QI scenario). In contrast, stakeholders thought that interactive analytics were better suited for providers and administrators to examine practice trends to better understand unusual patient cases (e.g., patient care scenario) or to inform institutional decisions on target areas for improvement (QI scenario).
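A predefined quarterly snapshot, as suggested for the QI scenario, amounts to grouping scores by clinic and calendar quarter. The sketch below assumes rows already restricted to one outcome measure and one endpoint, assigns each row to the quarter in which it was reported, and keeps the sample size next to each mean; the function names and row layout are hypothetical.

```python
import statistics
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, List, Tuple


def quarter_label(d: date) -> str:
    """Calendar quarter for a date, e.g., date(2013, 5, 2) -> '2013-Q2'."""
    return f"{d.year}-Q{(d.month - 1) // 3 + 1}"


def quarterly_snapshot(
    rows: Iterable[Tuple[str, date, float]],
) -> Dict[Tuple[str, str], Tuple[int, float]]:
    """Group (clinic, report_date, score) rows by clinic and quarter.

    Returns {(clinic, quarter): (n, mean_score)} so each snapshot carries
    its sample size alongside the aggregate score.
    """
    groups: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for clinic, report_date, score in rows:
        groups[(clinic, quarter_label(report_date))].append(score)
    return {key: (len(scores), statistics.mean(scores))
            for key, scores in sorted(groups.items())}
```

Grouping by report date rather than surgery date is one design choice among several; a snapshot keyed to surgery quarter would instead compare cohorts.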

Stakeholders found completion rates and sample sizes critical for interpreting PROs and judging the quality of PRO data. Examples of quality metrics included the proportion of patients reporting outcomes both before and after surgery and a minimum sample-size threshold that individual providers must meet before their data are included in the dashboard. Stakeholders continued to show the greatest interest in comparing individual changes in PROs over time for patient care and comparing PROs by clinic and institution for QI, but raised confidentiality concerns about comparing patient outcomes by provider. Finally, stakeholders preferred viewing changes in raw scores over time, percentage of change, and quarterly “snapshots” for different endpoints (e.g., 30 days, 1 year, 2 years).
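The quality metrics and change measures stakeholders described can be made concrete with a minimal sketch. It assumes a hypothetical per-patient record holding a provider identifier, a baseline (presurgery) score, and a score at one follow-up endpoint; the names `PatientRecord`, `completion_rate`, `percent_change`, and `provider_summary` are illustrative, and the threshold of 10 is an arbitrary placeholder because no specific value was reported. This is not the CERTAIN or PRO Dashboard implementation, only one plausible way to compute a completion rate, suppress providers below a minimum sample size, and report raw and percentage change from group means.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PatientRecord:
    provider: str               # hypothetical provider identifier
    baseline: Optional[float]   # presurgery score (e.g., ODI or NRS); None if not reported
    followup: Optional[float]   # score at one endpoint (e.g., 1 year); None if not reported


# Placeholder threshold (assumption); the study did not report a specific value.
MIN_SAMPLE_SIZE = 10


def completion_rate(records: List[PatientRecord]) -> float:
    """Share of patients who reported outcomes both before and after surgery."""
    if not records:
        return 0.0
    complete = sum(1 for r in records if r.baseline is not None and r.followup is not None)
    return complete / len(records)


def percent_change(baseline: float, followup: float) -> Optional[float]:
    """Percentage change from baseline; None when baseline is 0 (undefined)."""
    if baseline == 0:
        return None
    return (followup - baseline) / baseline * 100


def provider_summary(records: List[PatientRecord], provider: str,
                     min_n: int = MIN_SAMPLE_SIZE) -> Optional[dict]:
    """Summarize one provider's PROs, or return None if too few complete pairs exist."""
    mine = [r for r in records if r.provider == provider]
    paired = [r for r in mine if r.baseline is not None and r.followup is not None]
    if len(paired) < min_n:
        return None  # below threshold: withhold from the dashboard
    mean_base = sum(r.baseline for r in paired) / len(paired)
    mean_follow = sum(r.followup for r in paired) / len(paired)
    return {
        "n": len(paired),
        "completion_rate": completion_rate(mine),
        "mean_change": mean_follow - mean_base,  # raw score change
        "percent_change": percent_change(mean_base, mean_follow),
    }
```

For ODI and NRS, lower scores mean less disability or pain, so a negative change here would indicate improvement. Returning `None` below the threshold, rather than a flagged value, is one design choice among several; a dashboard could instead show the value greyed out with its sample size.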

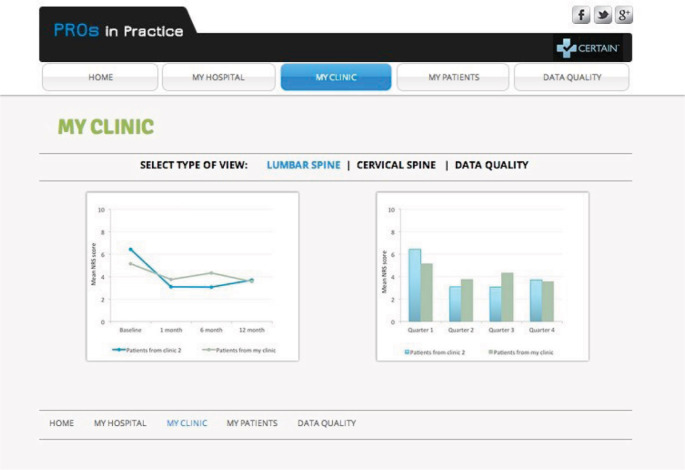

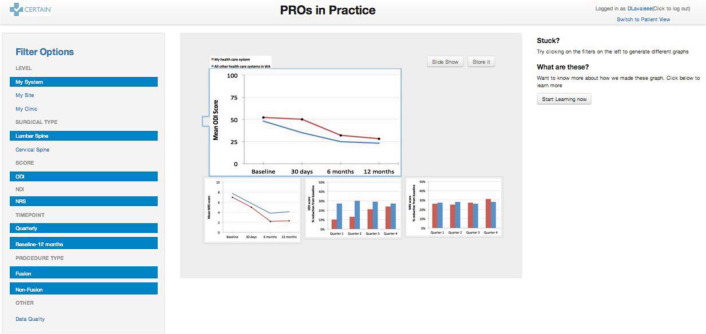

Design Cycle 3: Interactive Prototypes

Based on design priorities from cycle 2, we refined our prototypes to build stakeholder consensus on design options through three alternative illustrative prototypes: (1) paged dashboard, (2) workflow dashboard, and (3) power dashboard (Appendix B). The prototypes contrast in level of interactivity (i.e., static versus dynamic views), organization of content (i.e., by context of use, data level, or outcome measure), and granularity of detail (i.e., minimal scores alone versus inclusion of quality indicators, clinical parameters, and other data associated with PROs). The paged dashboard provides simple static views organized by data level (i.e., patient-, provider-, clinic-, and institution-level) without much granular detail on data quality or clinical parameters. The workflow dashboard provides a mix of predefined and dynamic user-defined views organized by context, with one interface presenting aggregate PROs for QI and another presenting individual PROs for patient care. In both the QI and patient care workflows, users can dynamically filter PROs into custom views and add quality indicators and clinical parameters, such as smoking status. The power dashboard is highly interactive, offering a single page on which users dynamically filter PROs into user-defined views with granular detail organized by outcome measure.

Stakeholders agreed that simpler static dashboards were better suited for talking with patients during busy clinic visits (i.e., patient care scenario), whereas interactive dashboards were better suited for deeper analytics that support QI tasks and for “talk with colleagues” about unusual patient cases. They recommended integrating the patient care and QI workflows into a single tool that grants access to functionality through role-based permissions. There was no clear preference for content organization. Although providers found patient-level views with detailed data granularity useful for examining difficult patient cases (i.e., patient care scenario), stakeholders expressed concern about confidentiality. Health care administrators and staff were primarily interested in analyzing aggregate PROs at the clinic and institution levels for QI tasks, whereas providers were primarily interested in analyzing PROs of their own patients to reflect on their practice and inform patient care, such as treatment decisions. Overall, the greatest shared interest was in moving forward with a dynamic “data view” for deep analytics that embeds functionality to print static PRO reports for use in patient care during clinic visits.

Findings from Step 2 built stakeholder consensus on the design of PRO Dashboards. In particular, stakeholders led us through a number of design considerations, from priority data views and integration of quality indicators to preserving confidentiality. Although this critical design input helped establish consensus on PRO Dashboard design, we confronted several methodological challenges that led us to individualize stakeholder engagement in Step 3. Most notably, the remote group-based methods, while convenient, may have limited the extent to which busy health care professionals participated; for example, participation diminished with each design cycle. Thus, in Step 3, we shifted our approach to engage individual providers as “champions” through personalized prototypes that illustrate PROs their own patients contributed to CERTAIN. We anticipated that champions could speak to the utility of prototypes in both patient care and QI contexts.

Establishing Design Specifications (Step 3)

Through two cycles of iterative design with individual stakeholders, we finalized design specifications for implementation of PRO Dashboards. In design cycle 4 we obtained feedback using personalized prototypes. Then in design cycle 5 we tested the usability of our design specifications in a functional PRO dashboard prototype.

Design Cycle 4: Personalized Prototypes

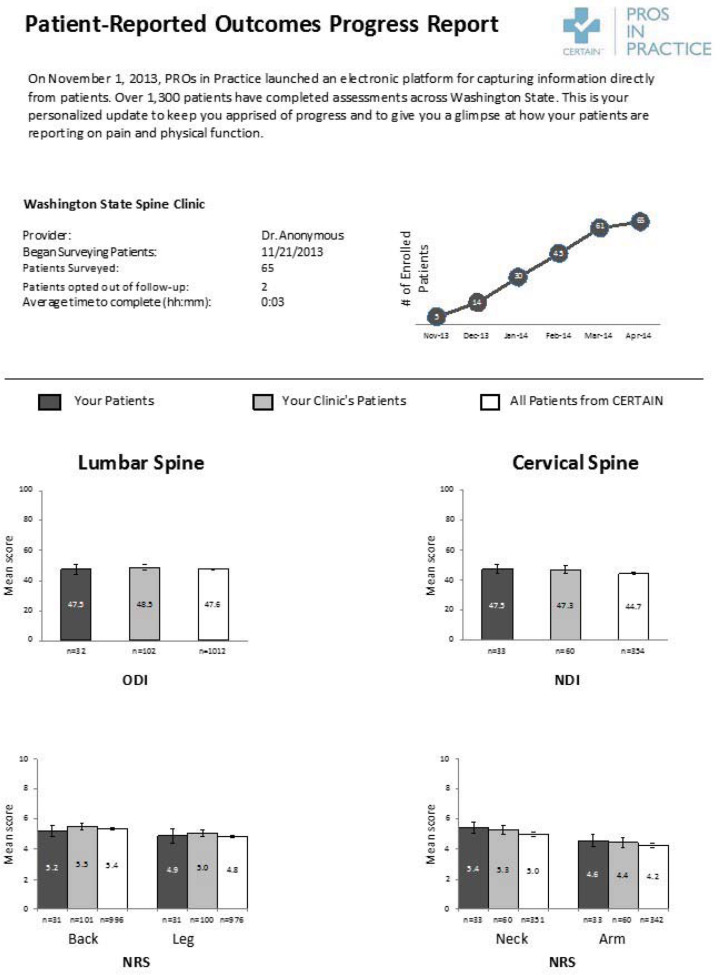

We engaged providers to solicit feedback on personalized prototypes that compare aggregate PROs collected from their own patients, their clinic, and all patients in CERTAIN. We designed the personalized prototypes to support aspects of both patient care (i.e., reflecting on trends in one’s own practice) and QI (i.e., reflecting on trends at clinic and institution levels). We first created PRO reports individualized for each provider with aggregated patient data collected through CERTAIN. The two-page personalized prototype report, framed as a PRO progress report, uses bar charts to illustrate the number of patients the provider enrolled in CERTAIN over time and their average pain (NRS) and disability (ODI/NDI) scores for lumbar spine and cervical spine (Appendix C). The report compares PROs for the provider’s patients with PROs for other patients from the provider’s clinic and all patients participating in CERTAIN. Because we were most interested in feedback on content and function, we limited the use of color and graphic design. We used these personalized prototypes in cognitive walkthroughs with five providers, all of them surgeons, to examine ease of use and potential improvements to content, format, and interactions.

Provider feedback pointed to several refinements to improve ease of use and dashboard utility. Building on our group-based design work, providers expressed interest in a detailed data view that serves as a data analysis tool. For instance, they envisioned analyses to reflect on their own patient care practice, such as assessing improvements in their patients’ PROs over time (i.e., patient care scenario). They valued the ability to easily compare trends across CERTAIN, which could inform targets for improving care quality, such as addressing deficits in pain or disability among patients at their institution. They expressed interest in more refined comparisons of PROs for patients who underwent different surgical procedures. They also wanted to incorporate clinical parameters, such as smoking status, return to work, opioid use, and postoperative complications, into detailed analyses organized by benchmarking groups. A quick overview showing simple trends at a glance was also of interest. Data quality concerns surfaced, indicating the need to illustrate patient attrition, clinician participation in CERTAIN, and data characteristics, such as sample size at various time points for PROs. Data transparency also surfaced as an important design consideration for promoting a collaborative culture. Although providers felt comfortable disclosing PROs for their own patients to dashboard users within their own clinic or hospital, there was consensus about preserving anonymity beyond their institution.

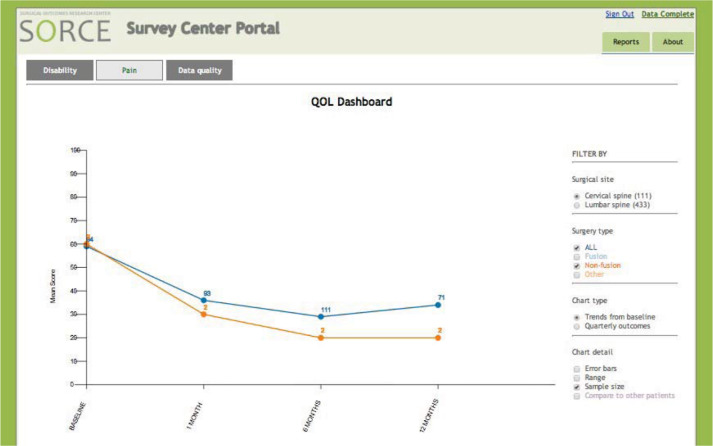

Design Cycle 5: Final Design Specification for PRO Dashboard

We consolidated the input received throughout stakeholder design activities into a functional prototype implemented in Axure.40 The functional prototype comprises three primary components: (1) an “At a glance” screen providing a simple overview of PROs collected to date, (2) an “Analyze” screen providing a data view the user can filter (e.g., by surgical site, procedure type, patient parameters, and date range), and (3) a “Data quality” screen (Figure 3). Based on prior design cycles, we designed the prototype to support both patient care and QI contexts. For patient care, we designed functionality specifically for providers, such as data filters to compare disability and pain outcomes by procedure type for patients in their own practice, in their clinic, and across CERTAIN, and then save these analyses to print or email to others (“Analyze” screen). We also designed functionality specifically for health care administrators, providers, and staff involved in QI efforts. For instance, providers can view up-to-date pain and disability scores for their patients 60 days, 1 year, and 2 years following surgery (“At a glance” screen) and filter participant retention and response rates by date for patients in their own practice, in their clinic, and across CERTAIN (“Data quality” screen).
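The filter-then-compare behavior of the “Analyze” screen can be sketched in a few lines. This is an illustrative sketch, not the Axure prototype’s logic: the record fields (`procedure`, `smoker`, `odi_1yr`) and the functions `apply_filters` and `compare_groups` are hypothetical names chosen for the example. It filters patient records by optional parameters and then reports the mean score, paired with its sample size, for the provider’s own patients, their clinic, and all CERTAIN patients, reflecting stakeholders’ emphasis on showing sample size alongside PROs.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional, Tuple


@dataclass
class PRORecord:
    provider: str     # hypothetical provider identifier
    clinic: str       # hypothetical clinic identifier
    procedure: str    # e.g., "fusion" or "nonfusion" (illustrative labels)
    smoker: bool      # example patient parameter used as a filter
    odi_1yr: float    # disability score at a 1-year endpoint (hypothetical field)


def apply_filters(records: List[PRORecord], procedure: Optional[str] = None,
                  smoker: Optional[bool] = None) -> List[PRORecord]:
    """Keep records matching every selected filter; None means 'no filter'."""
    out = []
    for r in records:
        if procedure is not None and r.procedure != procedure:
            continue
        if smoker is not None and r.smoker != smoker:
            continue
        out.append(r)
    return out


def compare_groups(records: List[PRORecord], provider: str, clinic: str,
                   **filters) -> Dict[str, Tuple[Optional[float], int]]:
    """Mean 1-year ODI and sample size for own patients, own clinic, and all patients."""
    subset = apply_filters(records, **filters)
    groups = {
        "my_patients": [r.odi_1yr for r in subset if r.provider == provider],
        "my_clinic": [r.odi_1yr for r in subset if r.clinic == clinic],
        "all_certain": [r.odi_1yr for r in subset],
    }
    # An empty group yields (None, 0) rather than raising an error.
    return {name: (mean(vals) if vals else None, len(vals)) for name, vals in groups.items()}
```

For example, `compare_groups(records, "A", "C1", procedure="fusion")` would return the three comparison groups restricted to fusion patients. Returning the sample size with every mean makes it straightforward to surface n in context-sensitive hovers or to suppress small groups.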

Figure 3.

Functional PRO Dashboard Prototype

Data Quality Screen

We evaluated the prototype with four participants using a mock PRO data set for a fictitious spine surgeon. Usability testing covered four task scenarios: using the prototype (1) to get an overview of PRO performance for the surgeon’s patients (i.e., QI scenario), (2) to evaluate treatment options for a new patient based on outcomes reported by other patients (i.e., patient care scenario), (3) to estimate the quality of PRO data (i.e., QI scenario), and (4) to share the surgeon’s PROs with his clinical team, including patients (i.e., patient care scenario).

Overall, participants found that the prototype presented a clear user interface that did not overload them with information. Although several areas for improvement were identified, one participant told us that “The UI is clear and concise and nicely laid out with no information overload. It is also clear what the different entities are and easy to navigate.” Participants liked viewing trends over time and found that the views provided a useful basis for comparison. They recommended streamlining the “At a glance” screen to focus on two or three key clinical outcomes. For instance, programmatic outcomes (e.g., quarterly patient enrollment in CERTAIN) were perceived as less meaningful than key clinical outcomes, such as pain and disability differentiated by a surgical procedure of personal interest (e.g., fusion versus nonfusion treatment). They also wanted to use the “Analyze” screen to generate the tailored views they care about most and save them as defaults. Granular data was deemed important for both patient care and QI tasks, which raised the need for data privacy solutions that address confidentiality concerns in our future work. Indeed, a common thread throughout our HCD work was concern about data confidentiality, both for health care professionals and for patients. Users must feel safe engaging with PRO data, so privacy-preserving solutions are warranted. Although our participants favored keeping granular data secure, they also expressed interest in sharing data with colleagues for practice improvement. One proposed solution was an online forum to anonymously share aggregate PRO trends and tactics for improvement.

Observing participants as they completed usability tasks identified further refinements to solidify design specifications. For example, in addition to the information buttons provided on “how to interpret this chart,” clear labels, such as chart axis labels, are needed. Participants suggested adding definitions for the patient parameter filters on the “Analyze” screen (e.g., smoking status) and associating quality indicators on the “Data quality” screen with specific data points, such as showing sample size in context-sensitive hovers. Participants also wanted to share PROs with patients and colleagues by collecting a selection of charts that “tell a story” and can be emailed or printed.

Findings from Step 3 established concrete design specifications for PRO Dashboards. Through cognitive walkthroughs of personalized PRO progress reports, we identified several potential refinements, from incorporating additional data quality metrics (e.g., sample size, attrition rates) to adding “at a glance” overviews.

After we implemented a number of these improvements in our functional prototype, usability testing indicated good task performance and acceptability and revealed a number of additional refinements for future work (e.g., information buttons and other context-sensitive help, privacy-preserving measures).

Discussion

PROs are a valuable source of health information that can add patient experience to clinical data. Although substantial prior work has focused on capturing PROs, relatively little attention has been paid to facilitating the interpretation and use of this data through visual displays in clinical practice. To address this gap, we engaged health care professionals as stakeholders in the design of PRO dashboards to support patient care and QI initiatives. Our findings provide a first step toward the design of user-friendly HIT that is accepted, usable, and has the potential to enhance health care. Further, our functional prototype aligns with more general dashboard classifications, including strategic dashboards that provide quick overviews (i.e., At a glance screen), analytical dashboards for drilling down into data detail (i.e., Analyze screen), and operational dashboards for monitoring program or business activities (i.e., Data quality screen).41 Further work is needed to understand best practices for integrating such displays into practice workflow and how PROs can best support quality care.

Our multistep project illustrates a range of engagement methods guided by human-centered principles for optimizing the design of PRO Dashboards with input from users. We contribute an initial set of design recommendations detailing priority features for PRO Dashboards that can support patient care and QI activities (Table 1) and a concrete implementation of such features in a functional prototype (Figure 3). In particular, we uncovered caveats for designing PRO Dashboards for patient care and QI contexts. First, presentation of patient-level and provider-level PROs appears to provide the best support for patient care activities, whereas clinic-level and institution-level PROs aggregated across patients better support QI activities. However, patient-level and provider-level PRO Dashboards raised significant concerns about privacy and confidentiality, and future work is needed to design privacy-preserving dashboards for patient care. Second, simple at-a-glance views of PROs were valued in both patient care and QI contexts. However, which outcomes are most meaningful in these quick views may depend on the specific user. One solution that emerged from our work is designing PRO Dashboards that enable users to tailor views with the outcomes they care about most. Finally, data quality emerged as a critical theme for supporting accurate interpretation of PROs. Many stakeholders asked for dashboards to represent data beyond disability and pain scores, such as sample size and patient parameters (e.g., smoking status, comorbidities, opioid use). Future work is needed to explore intuitive interfaces that contextualize PROs with important quality indicators.

Although the importance of capturing PROs is recognized, many health care professionals remain uncertain about incorporating this data into practice and need opportunities to lend their input and expertise.42 HCD guided our collaborative work with these stakeholders from PRO Dashboard design concept to prototype specification for implementation. The range of design activities not only led to design specifications for implementation but also brought to light a number of design features that shaped our prototypes. For instance, stakeholders expressed interest in viewing PROs at the patient and provider levels for patient care and at the clinic and institution levels for QI. For both patient care and QI contexts, stakeholders sought a mix of simple predefined views at a glance and interactive views to dynamically filter and deeply analyze PROs on the fly. The most meaningful views illustrated temporal trends in patients’ PROs (e.g., pretreatment and 30 days, 1 year, and 2 years following treatment) and aggregate PROs at specific endpoints (e.g., “quarterly snapshots” of reported pain 1 year following surgery). Such features are concretely illustrated in several of our early prototypes, which we refined with stakeholder feedback into our final functional prototype. Although stakeholders expressed overall enthusiasm about the potential of PRO Dashboards, concerns remain about how to interpret PRO data and ensure appropriate privacy protections for both patients and providers.

This work represents a case example of a range of flexible methods for engaging target users in the design of user-friendly HIT, from qualitative interviews and iterative design groups to cognitive walkthroughs and usability testing. Despite these contributions, we learned several important lessons through the challenges we experienced in employing HCD with health care professionals. First, health care professionals can be challenging to engage in time-consuming design work. Members of this committed group maintain busy schedules and have pressing responsibilities, and some are unsure of the value that PROs could offer their future practice. Yet their design input is essential. We adapted our methods by scheduling time-limited activities outside of work hours (e.g., early morning webinars) and by building in alternative methods for engagement at participants’ convenience, such as online surveys. As our work progressed, we found it productive to engage key champions face to face. Although we experienced some attrition in stakeholder participation over time, we believe that establishing this trusted leadership helped preserve a core stream of critical design input.43–44 We anticipate that the continued leadership of these champions will be important for encouraging later adoption and sustainability.45

Another challenge common to HCD is that target users can have difficulty envisioning the design of a new interactive system unlike any they have used before. Because integration of PROs into practice is new, some stakeholders found it challenging to envision and articulate how they might use the data. Although our intent was to encourage participants to consider how they might use PROs through general use case scenarios they could identify with, design choices in those scenarios, such as gender, could have introduced some bias. When presented with different design concepts for displaying PROs, stakeholders often asked for a mix of the concepts or agreed that all the concepts would work well. Although providing multiple prototypes helped us explore the design space, providing concrete tools for interaction in lifelike scenarios, such as mock patient visits, may have helped to crystallize design input.

The HCD methods we employed are largely qualitative and involve small samples of participants, which raises questions about the generalizability of findings to other contexts and settings. We utilized a convenience sampling approach to obtain in-depth input from health care administrators, providers, and staff interested in advancing the use of PRO data for clinical care and QI initiatives. These stakeholders were drawn from diverse practice settings within CERTAIN, including small independent practices, community hospitals, large academic medical centers, and networked health systems. Although a convenience sample may introduce bias, since those most interested in advancing the capture and reporting of PRO data may be more likely to make time for engagement, it did provide the advantage of obtaining feedback from the individuals most likely to utilize and integrate such data into their own work, a critical perspective in early development.

As development of PRO Dashboards proceeds, others can make use of our work by leveraging our HCD methods to examine the fit and extension of PRO Dashboard design recommendations to meet the needs and preferences of new stakeholder groups. The design specifications for our PRO Dashboard prototype may be locally tailored to the particular PROs and patient data collected through CERTAIN, and thus not generalize to other groups. Data collection in other large networks may differ to enable different types of functionality and PRO presentation. Smaller sites with limited resources for a vast PRO reporting infrastructure can still make use of initiatives like PROMIS4 to guide PRO collection and then apply our design recommendations for representing patient-level and provider-level PROs with simple spreadsheets and static charts. Grounded in an understanding of user needs, our work provides a solid foundation for efforts with similar PROs to expand upon. For example, hospitals that support both patient care and QI activities can follow our methods to distinguish dashboard functionality to support those different needs. Future work could examine the needs of a broader range of potential users, such as patients or public health professionals. Such work can follow our step-by-step approach using well-established, human-centered methods to understand the context of system use through formative work followed by specification of user requirements, prototyping, and user testing to ensure the resulting system meets the needs of users.27 As efforts to integrate patient-generated health data into clinical care advance,18,46 representational standards for interoperability and exchange of PROs (e.g., HL7) could enable local preferences to drive specific data presentation techniques.

Our work illustrates solid design thinking around the multiple ways to ask users for input on the design of HIT. HCD is certainly not limited to presenting a single design concept for feedback, and stakeholders should be presented with multiple concepts to elicit meaningful feedback that drives design choices. We often presented stakeholders with several alternative designs,47 and through their responses arrived at a deeper understanding of their needs and preferences. We also engaged stakeholders in a range of group-based and individually targeted design activities to enhance the breadth of guidance we received. Other techniques, such as participatory design,48 can be applied to encourage users themselves to generate designs rather than solely respond to prototypes generated by designers. Our participants preferred familiar bar charts and line graphs, yet advancing information visualization and visual analytics techniques could provide busy professionals with remarkable tools for interpreting and employing PROs in practice.49,50 Although we focused on meeting the needs of health care professionals, HCD methods can also contribute to the design of patient-facing tools that facilitate conversations about PROs that are important to patients.17

Conclusion

Integrating PROs into practice through innovative HIT has the potential to improve both health care outcomes and quality. We applied HCD to engage health care professionals from diverse practice settings in collaborative development of PRO Dashboards that visually display patient-reported pain and disability outcomes following spine surgery. By partnering with these HIT users throughout iterative and targeted design activities, we specified, refined, and tested an evolving PRO Dashboard from design concept to implementation. We gained critical insights into the PRO reporting needs and preferences of health care professionals, including appropriate data level (patient, provider, clinic, or institution), priority views (e.g., temporal timelines and periodic snapshots), and level of interactivity (e.g., at-a-glance views versus filtered views for deeper analytics) for patient care and QI contexts.

Lessons we learned about meeting these needs through HIT are reflected in our design recommendations for PRO Dashboards with priority features for each context (Table 1). Stakeholders provided further design considerations on the content and interaction of user-friendly HIT, as well as social and ethical considerations around data sharing that will continue to shape our ongoing work. Examples include supporting custom-tailored views, integrating quality indicators, and developing privacy-preserving interfaces. Although engaging health care professionals as stakeholders is a critical step toward the design of user-friendly HIT, our experience illustrates the need for new methods of effective engagement that respect the busy schedules of health care professionals. In the future, we plan to implement PRO Dashboards for spine surgery across practices in CERTAIN. We plan to continue stakeholder engagement throughout this implementation using human-centered principles to ensure that PRO Dashboards are usable and user-friendly, and are tools that health care professionals will embrace to improve the quality and outcomes of patient care.

Acknowledgments

We wish to thank PROs in Practice stakeholders who participated in this work. Special thanks go to Andrew Buhayar and Henrik Holm Christensen for data collection, analysis, and prototyping; Melissa Heckman for stakeholder engagement; and Amy Harper for project management. This work was supported by the National Library of Medicine Biomedical and Health Informatics Training Grant #T15LM007442, the Agency for Healthcare Research and Quality Grant #R01HS020025, and the Washington State Life Sciences Discovery Fund Grant #4593311. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality or the Washington State Life Sciences Discovery Fund. The Surgical Care and Outcomes Assessment Program (SCOAP) is a Coordinated Quality Improvement Program of the Foundation for Health Care Quality. CERTAIN is a program of the University of Washington, the academic research and development partner of SCOAP.

Acronyms

- CERTAIN: Comparative Effectiveness Research and Translation Network
- CDS: Clinical Decision Support
- EHR: Electronic Health Record
- HCD: Human-Centered Design
- HIT: Health Information Technology
- NDI: Neck Disability Index
- NRS: Numerical Pain Rating Scale
- ODI: Oswestry Disability Index
- PROs: Patient-Reported Outcomes
- QI: Quality Improvement
- SCOAP: Surgical Care and Outcomes Assessment Program

Appendix A. Three Static Mock-Ups

Figure A1.

Simple Dashboard

The simple dashboard summarizes key comparisons in predefined views designed to show data deemed most important. Users select a procedure of interest (i.e., cervical or lumbar surgery), institution, and date to view premade graphs of PROs. While limited in interactivity, this dashboard allows for quick data overviews with minimal user effort.

Figure A2.

Interactive Dashboard

The interactive dashboard provides several filters, allowing the user to dynamically generate custom-tailored views for in-depth analysis. This view was designed to enable the user to “dive into the data” to create their own views. Although this design requires more user effort than the simple dashboard, it allows for more customized views tailored to the user’s specific needs.

Figure A3.

Mobile Dashboard

The mobile dashboard is designed for accessing PROs while on the go. This mock-up mimics a tablet or smartphone touch interface for users to select data categories and then swipe through a slideshow of predefined views.

Appendix B. Three Interactive Prototypes

Paged Dashboard

The paged dashboard provides simple static views organized by data level (i.e., patient-, provider-, clinic-, and institution-level) without much granular detail on data quality or clinical parameters. The My Hospital view (institution level) is geared toward QI, the My Patients view toward patient care, and the My Clinic view toward both contexts of use. Like the simple dashboard from cycle 2, this prototype requires minimal interaction, presenting static graphs on pages the user navigates with a horizontal menu. For example, navigating to the My Clinic page shows graphs that compare PROs for patients from the user’s clinic with those from other clinics. Quality indicators (e.g., completion rates) are not associated with individual graphs but are viewed in simple aggregate on the Data Quality page.

Figure B1.

Paged Dashboard with Low Interactivity, Multiple Pages Organized by Data Level, and Predefined Static Graphs

Workflow Dashboard

The workflow dashboard provides a mix of predefined and dynamic user-defined views organized by context of use—one interface with aggregate PROs for QI tasks and one interface with individual PROs for patient care. The QI interface is highly interactive with multiple filters for dynamic creation of user-defined views by level (i.e., clinic, hospital, or health care system), type of surgery, outcome, time point, and procedure. For example, ODI scores are shown for lumbar spine surgeries performed across the health care system from baseline to 12 months. Users can add quality indicators and clinical data (e.g., smoking status) to graphs in the center pane. The user can store each graph, and then display the series in a slide show. The patient care interface allows users to view PRO snapshots at the individual patient level. For example, a provider can use filters to construct a patient’s graph to review their PRO history, possibly during a patient visit. This interface includes a summary of the patient history, patient-generated narratives, and detailed user-defined views.

Figure B2.

Workflow Dashboard with High Interactivity, Multiple Pages, and Filters for Adjusting Outcomes on User-Defined Graphs

Power Dashboard

The power dashboard is highly interactive with a single page for users to dynamically filter PROs into user-defined views by surgical site and type. Content is organized by outcome measure, including disability (i.e., ODI, NDI), pain (NRS), and data quality. Users filter the data for an outcome measure with check boxes to generate specific views, one at a time, for either QI or patient care. Users can view PROs quarterly or over time from baseline. Users add quality indicators (i.e., sample size, error bars, range) with check boxes. For example, sample sizes for data points are shown to compare the change in NRS pain scores from baseline to 12 months for patients with different types of surgery.

Figure B3.

Power Dashboard Organized on One Page with Filters for Adjusting Outcomes on User-Defined Graphs

Appendix C. Personalized Prototype

Figure C1.


References

- 1. Chen J, Ou L, Hollis SJ. A systematic review of the impact of routine collection of patient reported outcome measures on patients, providers and health organisations in an oncologic setting. BMC Health Serv Res. 2013;13(1):211. doi: 10.1186/1472-6963-13-211.

- 2. Devlin NJ, Appleby J. Getting the most out of PROMs: putting health outcomes at the heart of NHS decision making. London: King’s Fund; 2010.

- 3. Greenhalgh J, Meadows K. The effectiveness of the use of patient-based measures of health in routine practice in improving the process and outcomes of patient care: a literature review. J Eval Clin Pract. 1999;5(4):401–16. doi: 10.1046/j.1365-2753.1999.00209.x.

- 4. Cella D, Yount S, Rothrock N, et al. The Patient-Reported Outcomes Measurement Information System (PROMIS): progress of an NIH Roadmap Cooperative Group during its first two years. Med Care. 2007;45:S3–S11. doi: 10.1097/01.mlr.0000258615.42478.55.

- 5. Lipscomb J, Reeve BB, Clauser SB, et al. Patient-reported outcomes assessment in cancer trials: taking stock, moving forward. J Clin Oncol. 2007;25(32):5133–40. doi: 10.1200/JCO.2007.12.4644.

- 6. Marshall S, Haywood K, Fitzpatrick R. Impact of patient-reported outcome measures on routine practice: a structured review. J Eval Clin Pract. 2006;12(5):559–68. doi: 10.1111/j.1365-2753.2006.00650.x.

- 7. Berry DL, Hong F, Halpenny B, et al. Electronic self-report assessment for cancer and self-care support: results of a multicenter randomized trial. J Clin Oncol. 2014;32(3):199–205. doi: 10.1200/JCO.2013.48.6662.

- 8. Berry DL, Blumenstein BA, Halpenny B, et al. Enhancing patient-provider communication with the electronic self-report assessment for cancer: a randomized trial. J Clin Oncol. 2011;29(8):1029–35. doi: 10.1200/JCO.2010.30.3909.

- 9. Davison BJ, Degner LF. Empowerment of men newly diagnosed with prostate cancer. Cancer Nurs. 1997;20(3):187–96. doi: 10.1097/00002820-199706000-00004.

- 10. Nelson EC, Landgraf JM, Hays RD, Wasson JH, Kirk JW. The functional status of patients: how can it be measured in physicians’ offices? Med Care. 1990;28(12):1111–26.

- 11. Rubenstein LV, McCoy JM, Cope DW, Barrett PA, Hirsch SH, Messer KS, et al. Improving patient quality of life with feedback to physicians about functional status. J Gen Intern Med. 1995;10(11):607–14. doi: 10.1007/BF02602744.

- 12. Abernethy AP, Ahmad A, Zafar SY, Wheeler JL, Reese JB, Lyerly HK. Electronic patient-reported data capture as a foundation of rapid learning cancer care. Med Care. 2010;48(6):S32–8. doi: 10.1097/MLR.0b013e3181db53a4.

- 13. Kwon S, Florence M, Grigas P, Horton M, Horvath K, Johnson M, et al. Creating a learning healthcare system in surgery: Washington State’s Surgical Care and Outcomes Assessment Program (SCOAP) at 5 years. Surgery. 2012;151(2):146–52. doi: 10.1016/j.surg.2011.08.015.

- 14. Broderick JE, Morgan DeWitt E, Rothrock N, Crane PK, Forrest CB. Advances in patient-reported outcomes: the NIH PROMIS measures. eGEMs (Generating Evidence & Methods to improve patient outcomes). 2013;1(1):Article 12. doi: 10.13063/2327-9214.1015.

- 15. Wu AW, Kharrazi H, Boulware LE, et al. Measure once, cut twice--adding patient-reported outcome measures to the electronic health record for comparative effectiveness research. J Clin Epidemiol. 2013;66(8):S12–S20. doi: 10.1016/j.jclinepi.2013.04.005.

- 16. Devine EB, Alfonso-Cristancho R, Devlin A, Edwards TC, Farrokhi ET, Kessler L, et al. A model for incorporating patient and stakeholder voices in a learning health care network: Washington State’s Comparative Effectiveness Research Translation Network. J Clin Epidemiol. 2013;66(8):S122–9. doi: 10.1016/j.jclinepi.2013.04.007.

- 17. Izard J, Hartzler A, Avery DI, Shih C, Dalkin BL, Gore JL. User-centered design of quality of life reports for clinical care of patients with prostate cancer. Surgery. 2014;155(5):789–96. doi: 10.1016/j.surg.2013.12.007.

- 18. Deering MJ. Patient-generated health data and health IT. ONC issue brief. Office of the National Coordinator for Health Information Technology; 2013. Accessed Feb 19, 2015 from http://www.healthit.gov/sites/default/files/pghd_brief_final122013.pdf.

- 19. Weinstein JN, Brown PW, Hanscom B, et al. Designing an ambulatory clinical practice for outcomes improvement: from vision to reality--the Spine Center at Dartmouth-Hitchcock, year one. Qual Manag Health Care. 2000;8(2):1–20. doi: 10.1097/00019514-200008020-00003.

- 20. Abernethy AP, Herndon JE, Wheeler JL, Patwardhan M, Shaw H, Lyerly HK, Weinfurt K. Improving health care efficiency and quality using tablet personal computers to collect research-quality, patient-reported data. Health Serv Res. 2008;43(6):1975–91. doi: 10.1111/j.1475-6773.2008.00887.x.

- 21. Spine SCOAP. Accessed Feb 19, 2015 from: http://www.becertain.org/patients/spine/spine_scoap.

- 22. Flum DR, Fisher N, Thompson J, Marcus-Smith M, Florence M, Pellegrini CA. Washington State’s approach to surgical variability in surgical processes/outcomes: Surgical Clinical Outcomes Assessment Program (SCOAP). Surgery. 2005;138(5):821–8. doi: 10.1016/j.surg.2005.07.026.

- 23. Flum DR, Alfonso-Cristancho R, Devine EB, Devlin A, Farrokhi E, Tarczy-Hornoch P, Kessler L, et al. Implementation of a “real-world” learning health care system: Washington State’s Comparative Effectiveness Research Translation Network (CERTAIN). Surgery. 2014;155(5):860–6. doi: 10.1016/j.surg.2014.01.004.

- 24. Bijur PE, Latimer CT, Gallagher EJ. Validation of a verbally administered numerical rating scale of acute pain for use in the emergency department. Acad Emerg Med. 2003;10(4):390–2. doi: 10.1111/j.1553-2712.2003.tb01355.x.

- 25. Fairbank JC, Pynsent PB. The Oswestry Disability Index. Spine. 2000;25(22):2940–52. doi: 10.1097/00007632-200011150-00017.

- 26. Vernon H, Mior S. The Neck Disability Index: a study of reliability and validity. J Manipulative Physiol Ther. 1991;14(7):409–15.

- 27. Ergonomics of human-system interaction -- Part 210: Human-centred design for interactive systems. ISO 9241-210:2010. Retrieved Feb 19, 2015 from: http://www.iso.org/iso/catalogue_detail.htm?csnumber=52075.

- 28. Brennan PF, Downs S, Casper G. Project HealthDesign: rethinking the power and potential of personal health records. J Biomed Inform. 2010;43(5):S3–5. doi: 10.1016/j.jbi.2010.09.001.

- 29. Wolpin S, Stewart M. A deliberate and rigorous approach to development of patient-centered technologies. Semin Oncol Nurs. 2011;27(3):183–91. doi: 10.1016/j.soncn.2011.04.003.

- 30. Alexander G, Staggers N. A systematic review of the designs of clinical technology: findings and recommendations for future research. ANS Adv Nurs Sci. 2009;32(3):252–79. doi: 10.1097/ANS.0b013e3181b0d737.

- 31. Johnson CM, Johnson TR, Zhang J. A user-centered framework for redesigning health care interfaces. J Biomed Inform. 2005;38(1):75–87. doi: 10.1016/j.jbi.2004.11.005.

- 32. Koppel R, Metlay JP, Cohen A, Abaluck B, Localio AR, Kimmel SE, Strom BL. Role of CPOE systems in facilitating medication errors. JAMA. 2005;293(10):1197–203. doi: 10.1001/jama.293.10.1197.

- 33. Middleton B, Bloomrosen M, Dente MA, Hashmat B, Koppel R, Overhage JM, et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc. 2013;(e1):32–8. doi: 10.1136/amiajnl-2012-001458.

- 34. Sittig DF, Wright A, Osheroff JA, Middleton B, Teich JM, Ash JS, et al. Grand challenges in clinical decision support. J Biomed Inform. 2008;41(2):387–92. doi: 10.1016/j.jbi.2007.09.003.

- 35. Payne P. Advancing user experience research to facilitate and enable patient centered research: current state and future directions. eGEMs (Generating Evidence & Methods to improve patient outcomes). 2013;1(1):Article 10. doi: 10.13063/2327-9214.1026.

- 36. Strauss A, Corbin J. Basics of qualitative research: grounded theory procedures and techniques. Newbury Park: Sage Publications; 1990.

- 37. Adobe Connect. Retrieved Feb 19, 2015 from: http://www.adobe.com/products/adobeconnect.html.

- 38. Catalyst WebQ. Retrieved Feb 19, 2015 from: https://catalyst.uw.edu.

- 39. Wharton C, Bradford J, Jeffries J, Franzke M. Applying cognitive walkthroughs to more complex user interfaces: experiences, issues and recommendations. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI’92); 1992:381–388.

- 40. Axure. Retrieved Feb 19, 2015 from: http://www.axure.com/

- 41. Few S. Information dashboard design. Sebastopol, CA: O’Reilly Media Inc; 2006.

- 42. Jagsi R, Chiang A, Polite BN, Medeiros BC, McNiff K, Abernethy A, et al. Qualitative analysis of practicing oncologists’ attitudes and experiences regarding collection of patient-reported outcomes. J Oncol Pract. 2013;9(6):e290–7. doi: 10.1200/JOP.2012.000823.

- 43. Lorenzi NM, Kouroubali A, Detmer DE, Bloomrosen M. How to successfully select and implement electronic health records (EHR) in small ambulatory practice settings. BMC Med Inform Decis Mak. 2009;9:15. doi: 10.1186/1472-6947-9-15.

- 44. Ash JS, Sittig DF, Seshadri V, Dykstra RH, Carpenter JD, Stavri PZ. Adding insight: a qualitative cross-site study of physician order entry. Int J Med Inform. 2005;74(7–8):623–8. doi: 10.1016/j.ijmedinf.2005.05.005.

- 45. Bates DW, Gawande AA. Improving safety with information technology. N Engl J Med. 2003;348(25):2526–34. doi: 10.1056/NEJMsa020847.

- 46. Adler NE, Stead WW. Patients in context--EHR capture of social and behavioral determinants of health. N Engl J Med. 2015;372:698–701. doi: 10.1056/NEJMp1413945.

- 47. Nielsen J, Faber JM. Improving system usability through parallel design. IEEE Computer. 1996;29(2):29–35.

- 48. Muller MJ, Kuhn S. Participatory design. Commun ACM. 1993;36(6):24–8.

- 49. Zhang J. A representational analysis of relational information displays. Int J Hum Comput Stud. 1996;45(1):59–74.

- 50. Shneiderman B, Plaisant C, Hesse B. Improving health and healthcare with interactive visualization tools. IEEE Computer. 2013;46(5):58–66.