Abstract

Objective. Patients with type 2 diabetes often fail to achieve self-management goals. This study tested the impact on glycemic control of a two-way text messaging program that provided behavioral coaching, education, and testing reminders to enrolled individuals with type 2 diabetes in the context of a clinic-based quality improvement initiative. The secondary aim examined patient interaction and satisfaction with the program.

Methods. Ninety-three adult patients with poorly controlled type 2 diabetes (A1C >8%) were recruited from 18 primary care clinics in three counties for a 6-month study. Patients were randomized by a computer to one of two arms. Patients in both groups continued with their usual care; patients assigned to the intervention arm also received from one to seven diabetes-related text messages per day depending on the choices they made at enrollment. At 90 and 180 days, A1C data were obtained from the electronic health record and analyzed to determine changes from baseline for both groups. An exit survey was used to assess satisfaction. Enrollment behavior and interaction data were pulled from a Web-based administrative portal maintained by the technology vendor.

Results. Patients used the program in a variety of ways. Twenty-nine percent of program users demonstrated frequent engagement (texting responses at least three times per week) for a period of ≥90 days. Survey results indicate very high satisfaction with the program. Both groups’ average A1C decreased from baseline, possibly reflecting a broader quality improvement effort underway in participating clinics. At 90 and 180 days, there was no statistically significant difference between the intervention and control groups in terms of change in A1C (P >0.05).

Conclusions. This study demonstrated a practical approach to implementing and monitoring a mobile health intervention for self-management support across a wide range of independent clinic practices.

The Utah Beacon Community is one of 17 regional partnerships funded by the Office of the National Coordinator for Health Information Technology to build and strengthen local health information technology (IT) infrastructure and test innovative approaches to improving care delivery. During the 3-year program, Utah Beacon sought to improve outcomes for patients with type 2 diabetes in a three-county region by working with 60 primary care practices on clinical process improvement and electronic health record (EHR) implementation. The Utah Beacon program employed quality improvement coaches and health IT specialists to provide technical assistance to these 60 physician practices.

One year into the effort, the Utah Beacon team realized that addressing behaviors in the clinic setting alone would not be enough to achieve the effort’s health improvement targets. Diabetes is a largely self-managed disease, and primary care visits are typically limited to 15-minute, quarterly encounters. Furthermore, a focus on self-management support was clearly needed given that studies have consistently shown that patients with type 2 diabetes have low adherence to recommended self-management activities (1,2). Recognizing that the majority of care occurs beyond the clinic doors, a patient engagement component was added to the suite of existing interventions.

The number of American adults diagnosed with diabetes is expected to increase dramatically in the next 30 years, creating ever greater demands on care delivery systems and a pressing need for lower-cost innovations to support patient self-management (3). Because a key focus of Utah Beacon was to identify strategies that could improve care while decreasing costs, the program chose patient engagement interventions that had the potential to offer a lower-cost alternative to existing options (e.g., nurse case management) and that could be deployed across a large patient population.

Mobile health technology (mHealth) is one promising strategy that may meet these criteria. The World Health Organization defines mobile health as “medical and public health practice supported by mobile devices, such as mobile phones, patient monitoring devices, personal digital assistants (PDAs), and other wireless devices” (4). This new approach has several key advantages. Mobile phone use is widespread across patient populations, and text messaging is one of the most utilized mobile phone features; 85% of American adults own a mobile phone, and 80% of those who do use them to send and receive text messages (5). Moreover, because cell phone ownership is slightly higher among African Americans and Hispanics than among whites, mHealth interventions hold the promise to address health disparities (6).

Recent research suggests that mobile phone interventions may be particularly useful for improving diabetes self-management behaviors (7–9). Given that most mobile phone owners always keep their devices on hand, and almost half do so even during sleep (10), text message–based behavioral support programs allow for the possibility of reaching patients en masse and in real time with health messages that support behavior change. Furthermore, text messages are inexpensive to deliver compared to verbal phone or face-to-face communication. However, few studies have tested the effectiveness of text message–based education and behavioral support as an adjunct to care provided in the context of the primary care clinic environment.

The aim of this study was to assess the feasibility of deploying a novel two-way text-message education and behavioral support program within a diverse group of primary care clinics and to test the effectiveness of the program in improving glycemic control in adult patients with type 2 diabetes. The study used a two-group, randomized, controlled trial design to evaluate the impact of the program on glycemic control. It also examined patient interaction and satisfaction with the program using a validated survey instrument and usage data stored in the mHealth program’s Web-based administrative portal.

Design and Methods

Study Design

This study employed a pragmatic design that relied on existing strategies for identifying patients and measuring outcomes within participating clinics. The design was a randomized, controlled trial with two arms: usual care or an intervention consisting of usual care plus the text-message program (Care4Life).

Sites, Eligibility, and Participants

Study participants were adult patients with type 2 diabetes treated at one of 18 primary care clinics in the Salt Lake Metropolitan Statistical Area, which includes Salt Lake, Summit, and Tooele counties in Utah. Each of these clinics was already participating in the Utah Beacon Community program and had been working with an external clinic coach to achieve diabetes care and EHR implementation goals. Clinics used a variety of EHR systems (11 in total) and demonstrated varying proficiency using their EHRs to manage patients and record care processes. Participating clinics committed to identifying patients who met the study’s screening criteria using their EHRs. Clinic size ranged from 1 to 16 physicians, and all clinics were unaffiliated with larger, integrated health systems. There was considerable variation in the size and characteristics of the patient populations served at the clinics (Table 1). The 18 practices identified a total of 2,327 patients who met the following inclusion criteria: nonpregnant adults (aged >18 years) with a diagnosis of type 2 diabetes, an A1C of >8% in the past year (indicating inadequate glycemic control and high risk for complications), and Spanish or English as their primary language. Patients were excluded for any of the following reasons: they were no longer managed by study physicians, were pregnant, were visually impaired, had no cell phone access, or had limited or no English or Spanish language proficiency. The study received approval from the Western Institutional Review Board, an independent board based in Seattle, Wash.

TABLE 1.

Characteristics and Number of Eligible and Enrolled Patients from Participating Clinics

| Clinic | Physicians (n) | Median Income of Clinic Zip Code ($) | Characteristics/Patient Mix | Eligible Patients (n) | Enrolled Patients (n) | Eligible Patients Enrolled (%) |
| --- | --- | --- | --- | --- | --- | --- |
| A | 8 | 30,259 | Two of eight providers have concierge practices | 46 | 1 | 2 |
| B | 16 | 55,032 | Emphasis on charity care; staffed primarily by resident trainees | 87 | 11 | 13 |
| C | 7 | 49,807 | Low-income, largely Hispanic, uninsured patients; designated as a safety-net provider by the state | 669 (Clinics C, D, and E were part of a system that shared a single EMR) | 24 | 4 |
| D | 5 | 35,133 | Low-income, largely Hispanic, uninsured patients; designated as a safety-net provider by the state | | | |
| E | 4 | 22,219 | Low-income, largely Hispanic, uninsured patients; designated as a safety-net provider by the state | | | |
| F | 3 | 52,000 | Two-site, rural/frontier practice | 112 | 1 | 1 |
| G | 11 | 74,590 | Large, multispecialty practice; designated as a safety-net provider by the state | 90 | 9 | 10 |
| H | 3 | 22,219 | Concierge practice near downtown serving higher-income patients | 37 | 7 | 19 |
| I | 9 | 42,832 | Large, two-site, multispecialty clinic with a staff certified diabetes educator; low-income population | 338 | 31 | 9 |
| J | 12 | 63,849 | Large, two-site, multispecialty practice; mostly commercially insured patients | 317 | 27 | 9 |
| K | 3 | 56,557 | Lower-income patients, many Hispanic | 40 | 4 | 10 |
| L | 1 | 42,831 | Largely commercially insured, but lower-income patients | 45 | 2 | 4 |
| M | 6 | 51,263 | Mix of commercially insured and Medicare/Medicaid patients | 119 | 10 | 8 |
| N | 3 | 64,006 | Primarily suburban, commercially insured patients | 80 | 6 | 8 |
| O | 12 | 55,032 | Large practice with a diabetes-focused nurse practitioner on staff | 247 | 17 | 7 |
| P | 1 | 50,613 | 80% of patients are Vietnamese immigrants | 35 | 3 | 9 |
| Q | 6 | 35,133 | Safety-net provider serving only Medicaid and uninsured patients; volunteer physicians | 40 | 2 | 5 |
| R | 1 | 77,233 | Solo practice; higher-income, commercially insured patients | 25 | 1 | 4 |
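The percentages in the last column of Table 1 follow directly from the eligible and enrolled counts. As a minimal sketch of that arithmetic, the Python snippet below transcribes the counts from Table 1 and recomputes the per-clinic rates and the overall totals. The names `TABLE_1` and `enrollment_pct` are illustrative only and are not part of the study's tooling.

```python
# (eligible, enrolled) counts transcribed from Table 1. Clinics C, D, and E
# are reported as one combined row because they shared a single EMR.
TABLE_1 = {
    "A": (46, 1), "B": (87, 11), "C-E": (669, 24), "F": (112, 1),
    "G": (90, 9), "H": (37, 7), "I": (338, 31), "J": (317, 27),
    "K": (40, 4), "L": (45, 2), "M": (119, 10), "N": (80, 6),
    "O": (247, 17), "P": (35, 3), "Q": (40, 2), "R": (25, 1),
}


def enrollment_pct(eligible: int, enrolled: int) -> float:
    """Percentage of eligible patients who enrolled."""
    return 100.0 * enrolled / eligible


# Totals across all participating clinics.
total_eligible = sum(eligible for eligible, _ in TABLE_1.values())
total_enrolled = sum(enrolled for _, enrolled in TABLE_1.values())

if __name__ == "__main__":
    for clinic, (eligible, enrolled) in TABLE_1.items():
        print(f"Clinic {clinic}: {enrollment_pct(eligible, enrolled):.0f}%")
    print(f"Eligible: {total_eligible}, enrolled: {total_enrolled}")
```

The summed counts reproduce the 2,327 eligible patients and 156 enrolled patients reported in the text.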

Recruitment and Enrollment

Invitation letters were mailed to patients identified through a query of each clinic’s EHR. The letter, printed on each clinic’s letterhead, directed patients to a Web-based consent form developed by the research team that was programmed to automatically randomize patients to the intervention (66%) or control arm (33%) after they gave consent. More patients were purposefully randomized to the intervention arm to increase the number of patients exposed to the program and to obtain more information about the usability and acceptability of this new approach to self-management support.
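The unequal allocation described above can be illustrated with a short Python sketch. It assumes simple randomization, in which each consenting patient is assigned independently with probability 2/3 to the intervention arm. The article does not state whether the consent form used simple or blocked randomization, so this is an assumption, and the function names `assign_arm` and `simulate` are hypothetical.

```python
import random


def assign_arm(rng: random.Random, p_intervention: float = 2 / 3) -> str:
    """Assign one patient to an arm (assumed simple, independent draws)."""
    return "intervention" if rng.random() < p_intervention else "control"


def simulate(n_patients: int, seed: int = 0) -> dict:
    """Count arm assignments for n_patients simulated enrollments."""
    rng = random.Random(seed)  # seeded so the simulation is reproducible
    counts = {"intervention": 0, "control": 0}
    for _ in range(n_patients):
        counts[assign_arm(rng)] += 1
    return counts


if __name__ == "__main__":
    print(simulate(156))
```

With simple randomization, the realized split in a sample of this size can drift from an exact 2:1 ratio. Blocked randomization would keep the ratio tighter.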

After providing consent, individuals randomized to the intervention arm were immediately redirected to the Web-based enrollment form for the text messaging program Care4Life. The form required patients to answer up to 26 questions related to their contact information, health and behaviors, and preferences regarding message frequency and intensity. After patients completed the enrollment form, they received a welcome text message from Care4Life that required a reply response to trigger program activation. After providing consent, patients randomized to the control arm received a message thanking them for joining the study and notifying them that they were not selected to use the text message tool.

Patients who did not respond to the initial mailed letter were contacted by phone or e-mail (if an e-mail address was listed in the EHR). Both approaches yielded few additional enrollments; follow-up calls were later abandoned because of the low return given the staff time required. Access to technology appears to have been a barrier for many patients; the research team reached 104 invited patients during follow-up calls, and approximately one-third (n = 31) declined participation because they did not own a cell phone.

Using these approaches, 156 patients were enrolled out of approximately 1,921 potential participants who received the invitation (8%). However, 17 patients randomized to the intervention arm could not complete the Web-based enrollment form or text message confirmation requirement. Four patients were determined to be ineligible because they did not qualify based on the screening criteria. Eleven patients were lost to follow-up because they changed clinics, moved away, or could not be located. Another 32 (20% of the total enrolled) were not included in the analysis of the primary outcome because they had not had an A1C test performed since baseline. Ultimately, 93 patients were included in the analysis, 58 in the intervention arm, and 35 in the control arm.

Based on data from a follow-up survey (n = 20) and the EHR, most of these patients (86%) had received a diabetes diagnosis >1 year before their enrollment in the study. The Care4Life program was initially designed to meet the needs of newly diagnosed patients, but this was not a requirement for participation in the study. Although messages were available in both English and Spanish, assessing the number of Spanish-speaking patients enrolled in the study was difficult because race, ethnicity, and language data were poorly documented in the EHRs; 16% of intervention patients had no race/ethnicity data, and 28% of control patients were missing such information.

Description of Intervention

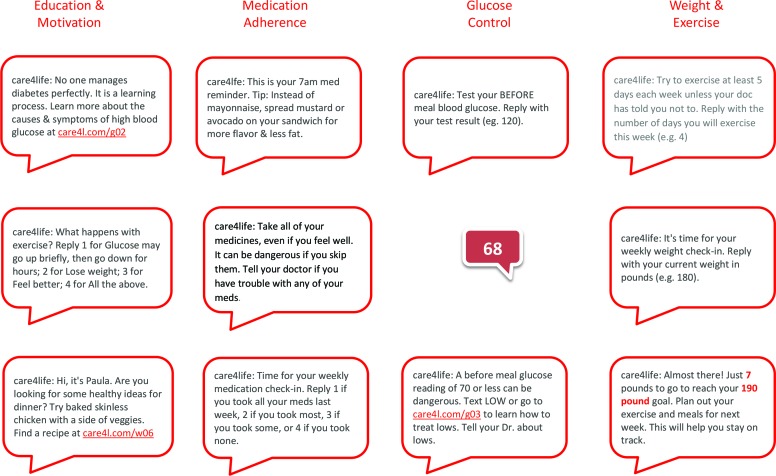

Patients assigned to the Care4Life intervention arm received between one and seven diabetes-related text messages per day. Message content was reviewed by a panel of certified diabetes educators, compliant with American Diabetes Association clinical practice guidelines, and written at the fifth-grade reading level (11). A key feature of the program was that patients had control over the types and frequency of the messages they received. Importantly, users could turn off the program at any time by texting the word “stop.” This customizable, patient-centered aspect of the program was supported by previous research showing that interventions that allow for targeting, tailoring, and patient control over the frequency of text messages have the greatest efficacy (12).

The core message protocol consisted of one text message per day related to diabetes education and health improvement. For example, two such messages read, “Have you had your blood tested in the last 6 months? An A1C test accurately measures your blood glucose, but you need this done at your doctor’s office,” and, “How are you? Feeling stressed about diabetes is normal. Getting support will help you feel better and control glucose. Ask for help when you need it.”

Patients could activate additional message protocols depending on the information they provided during the enrollment process. For example, if patients answered “yes” to the question “Has your doctor recommended that you check your blood pressure?” they would be asked if they wanted to receive a weekly reminder to check their blood pressure. If they again answered “yes,” they could then select the day and time for the weekly reminder to be sent. Patients could enroll in six message protocols in addition to the core educational message stream (Figure 1). These included:

- Medication reminders and adherence surveys

- Glucose testing reminders, prompts for testing results, and customized coaching depending on the glucose results texted to the system

- Blood pressure monitoring reminders and feedback

- Tracking and encouragement toward self-entered weight loss and exercise goals with weekly prompts for weight and exercise activity

FIGURE 1.

Sample messages by protocol option.

After replying to the initial welcome message, patients were encouraged (but not required) to text back responses to the requests for biometric and behavioral information. Their numerical responses were stored in a Web-based portal where patients could view their trends over time, make associations between their behaviors and test results, and print out their data to bring to visits with their clinical care team.

Primary Outcome

The primary outcome of the study was change in A1C from baseline to 90 and 180 days after enrollment. Because EHRs served as the surveillance tool for this study, the availability of A1C data for analysis depended on the testing behavior of patients and clinics. At 6 months, the team queried the EHRs of participating clinics to pull baseline, 90-day, and 180-day A1C data for patients who had given consent.

Secondary Outcomes

The secondary outcome measures were patient interaction and satisfaction with the program. Usage data were obtained from the Care4Life administrative portal, where patient enrollment choices and interactions with the system were stored. Satisfaction was assessed via two surveys. Patients in the intervention arm received a short, five-question text message survey meant to assess their satisfaction 90 and 180 days into the Care4Life program. In addition, an exit questionnaire, the 8-question Client Satisfaction Questionnaire (CSQ-8), was administered to patients in the intervention arm at the completion of the study and was available in either an online or print format (13). The CSQ-8 is a well-validated, widely used questionnaire consisting of eight questions measuring subjects’ opinions of a service; each question is rated on a four-point scale, and the ratings can be converted into a simple overall score for quantitative comparison. This generic instrument was selected because it can be used to generate a unified measure of general satisfaction that can be compared across different health technology interventions (13,14).

Statistical Analysis

A two-sample t test and one-way analysis of variance were used to contrast the difference in A1C from baseline to 90 days and from baseline to 180 days for the intervention and control groups. Baseline A1C was also adjusted for as a covariate. The CSQ-8 respondent data were analyzed using an unweighted summation of both individual question ratings and the total single-dimension patient satisfaction score. Statistical significance was defined as P <0.05 or a 95% CI that excluded 0.
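To make the between-group comparison concrete, the sketch below computes a two-sample t statistic on per-patient change scores (follow-up A1C minus baseline A1C). It assumes the Welch (unequal-variance) form of the test, because the article does not say whether a pooled or unequal-variance test was used, and it omits the covariate adjustment for baseline A1C. The change scores shown are hypothetical values for illustration only and are not study data.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Return (t, df) for Welch's unequal-variance two-sample t test."""
    # Squared standard error contributed by each group.
    se2_a = variance(a) / len(a)
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df


if __name__ == "__main__":
    # Hypothetical A1C change scores (percentage points), NOT study data.
    intervention_change = [-1.2, -0.4, 0.3, -0.9, -0.1, -0.6]
    control_change = [-0.8, -0.2, 0.1, -0.5, -0.7]
    t, df = welch_t(intervention_change, control_change)
    print(f"t = {t:.3f}, df = {df:.1f}")
```

The resulting t statistic would be compared against a t distribution with the computed degrees of freedom to obtain the P value. A statistics package would normally handle that step.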

Study Results

At follow-up, 47 patients had 90-day A1C test results (defined as 45–135 days after initiating the program), and 52 patients had 180-day A1C test results (defined as 135–225 days after initiating the program). Six patients had all three measurements (baseline, 90 days, and 180 days). Baseline characteristics of the two groups were comparable in terms of age, sex, and baseline A1C (Table 2).
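The follow-up windows defined above can be expressed as a small classification rule. The Python sketch below is illustrative only. Because the two stated windows share day 135, it assumes that a test on day 135 counts toward the 180-day window, and the function name `a1c_window` is hypothetical. The final lines check that the reported counts are consistent with 93 analyzed patients, on the assumption that every analyzed patient had at least one follow-up result.

```python
from typing import Optional


def a1c_window(days_since_start: int) -> Optional[str]:
    """Map days since program start to a follow-up window, if any."""
    if 45 <= days_since_start < 135:
        return "90-day"
    # Assumption: the shared boundary (day 135) goes to the later window.
    if 135 <= days_since_start <= 225:
        return "180-day"
    return None  # outside both follow-up windows


# Counts reported in the text.
n_90_day = 47
n_180_day = 52
n_both = 6  # patients with baseline, 90-day, and 180-day results

# Patients with at least one follow-up result (inclusion-exclusion).
n_any_follow_up = n_90_day + n_180_day - n_both

if __name__ == "__main__":
    print(a1c_window(100), a1c_window(200), n_any_follow_up)
```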

TABLE 2.

Baseline Characteristics of Study Participants

| Baseline Characteristics | Intervention Group | | | Control Group | | |
| --- | --- | --- | --- | --- | --- | --- |
| | n | Mean | SD | n | Mean | SD |
| A1C (%) | 58 | 9.3 | 2.1 | 35 | 8.8 | 1.8 |
| >10% (high) | 16 | 12.2 | 1.1 | 7 | 11.8 | 1.4 |
| <10% (low) | 42 | 8.2 | 1.0 | 28 | 8.1 | 0.9 |
| Age (years) | 58 | 52.0 | 11.2 | 31 | 54.5 | 10.7 |
| | n | Percent | | n | Percent | |
| Race/ethnicity | | | | | | |
| White | 44 | 76 | | 21 | 60 | |
| Hispanic | 2 | 3 | | 1 | 3 | |
| Black | 2 | 3 | | 0 | 0 | |
| Asian | 1 | 2 | | 2 | 6 | |
| Pacific Islander | 0 | 0 | | 1 | 3 | |
| Other/unknown | 9 | 16 | | 10 | 28 | |
| Sex | | | | | | |
| Male | 23 | 40 | | 11 | 31 | |
| Female | 35 | 60 | | 22 | 63 | |
| Unknown | 0 | 0 | | 2 | 6 | |

Post hoc analysis of patient participation was used to determine whether study attrition could have affected outcome measures. Patients assigned to the intervention arm were no more likely than patients in the control group to drop out of the study, which suggests that sample selection bias was not driving the results. In addition, patients with high initial A1C values were no more or less likely to drop out of the program. Analysis of study attrition did show that women were more likely than men to drop out of the study at 180 days, but this attrition was similar in the control and intervention arms.

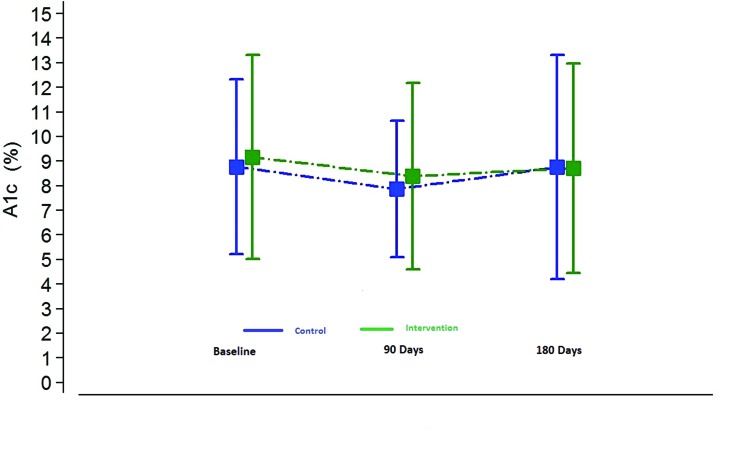

At 90 and 180 days, there were no statistically significant differences between the intervention and control groups in terms of change in A1C (P >0.05). Interestingly, both groups showed improvement, which may reflect the various clinical process improvement initiatives occurring in participating clinics at the time under the auspices of the Utah Beacon program. However, regression to the mean is an equally plausible explanation (Figure 2). There were no statistically significant relationships between duration in the program or intensity of use (interactions with the program) and A1C reduction.

FIGURE 2.

Change in A1C from baseline at 90 and 180 days.

Patient Interaction

A total of 76 patients enrolled in the Care4Life program. Fourteen individuals were unable to overcome the first hurdle of replying to the welcome message. Data from the administrative portal suggest that patients who identified their first language as Spanish had particular difficulty with this aspect of the enrollment process. Six patients randomized to the intervention group enrolled in the Spanish-language version of Care4Life through the Web-based portal, but only two of them ever received Care4Life program messages. Four of the six (67%) did not reply to the initial text message from Care4Life, which was a requirement to demonstrate assent to join the program. In contrast, just 12% (10) of users who enrolled in the English version of the program never replied to the welcome message.

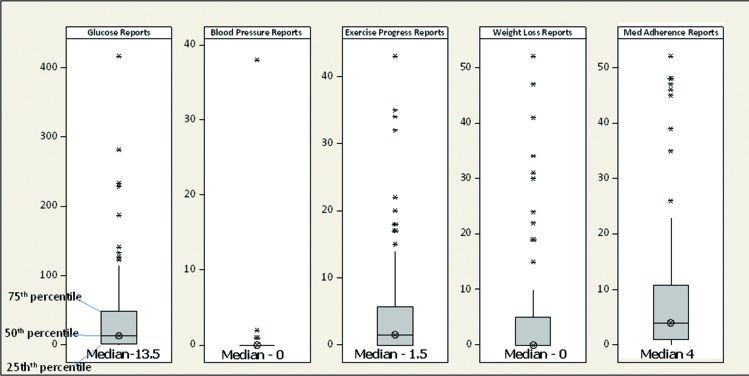

Few patients used the Web portal function to track their responses; only eight users (11%) logged into their accounts, and only two did so more than once. Enrollment in and engagement with the different protocol options varied (Figure 3). Twenty-two patients (29%) were frequent users; they stayed in the program for at least 90 days and sent in responses to prompts at least three times per week. Eight users (11%) never replied to a message request for information and used Care4Life as a one-way reminder and education program. Although users could turn the program off at any time, all users kept the program on for at least 30 days, 78% remained enrolled at 90 days, and 62% remained enrolled at 180 days. Surprisingly, 38 patients (50%) never stopped the program and continued to receive messages well beyond the 180-day end of the study.
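The definition of a frequent user given above can be written as a simple rule. The sketch below is one possible reading, not the study's actual analysis code. It assumes that "at least three times per week" means an average of at least three responses per week over the enrollment period, and the function name `is_frequent_user` and its parameters are hypothetical.

```python
def is_frequent_user(days_enrolled: int, total_responses: int) -> bool:
    """Apply the frequent-user rule: >=90 days and >=3 responses per week."""
    if days_enrolled < 90:
        return False
    weeks_enrolled = days_enrolled / 7
    # Assumption: the weekly threshold is applied to the average rate.
    return total_responses / weeks_enrolled >= 3


def pct(count: int, total: int) -> int:
    """Whole-number percentage, as reported in the text."""
    return round(100 * count / total)


if __name__ == "__main__":
    print(is_frequent_user(days_enrolled=120, total_responses=60))
    print(pct(22, 76))  # share of the 76 enrolled users who were frequent users
```

A stricter reading would require at least three responses in every individual week, which would classify fewer patients as frequent users.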

FIGURE 3.

Boxplots of report counts per patient. Boxplots indicate median, first-quartile, and third-quartile values for frequency of patient reports from different Care4Life prompts. Asterisks represent outliers (defined as values more than 1.5 times the interquartile range beyond the first or third quartile). Glucose reporting occurred frequently (median 13.5 replies), whereas blood pressure and weight loss prompts received few replies (median 0). Med, medication.
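The outlier rule referenced in the Figure 3 caption can be sketched as follows. This assumes the conventional boxplot definition, in which a value is an outlier if it lies more than 1.5 times the interquartile range below the first quartile or above the third quartile. The quartile interpolation method is also an assumption, and the reply counts in the example are hypothetical rather than study data.

```python
from statistics import quantiles


def boxplot_outliers(values):
    """Return values lying beyond 1.5 x IQR from the quartiles."""
    # Quartile method is an assumption; plotting software may differ.
    q1, _median, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower_fence = q1 - 1.5 * iqr
    upper_fence = q3 + 1.5 * iqr
    return [v for v in values if v < lower_fence or v > upper_fence]


if __name__ == "__main__":
    # Hypothetical per-patient reply counts, NOT study data.
    reply_counts = [0, 1, 2, 3, 4, 5, 6, 7, 40]
    print(boxplot_outliers(reply_counts))
```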

Patient Satisfaction

A total of 57 CSQ-8 questionnaires were sent to patients in the intervention group, and 33 were returned by the end of the data collection period (58%). Three questionnaires were excluded because they were submitted by individuals not enrolled in the intervention. This resulted in a total of 30 questionnaires (19 Web-based forms and 11 paper forms) being included in the analysis, representing 53% of the patients in the intervention group.

Patients reported high satisfaction with the Care4Life program, with individual questions all scoring above 3 (on a 4-point scale), and a mean total satisfaction score of 27.77 out of 32. The mean and standard deviation results for each question and for total satisfaction are summarized in Table 3. The mean scores for individual questions suggest a high level of satisfaction with the Care4Life program, as well as an endorsement of the modality and its perceived benefits for other patients with diabetes.

TABLE 3.

CSQ-8 Results at 6-Month Follow-Up*

| CSQ-8 Item | Mean Score | SD |
| --- | --- | --- |
| 1. Quality of service | 3.63 | 0.56 |
| 2. Kind of service desired | 3.50 | 0.63 |
| 3. Met needs | 3.23 | 0.63 |
| 4. Would recommend to a friend | 3.60 | 0.56 |
| 5. Satisfaction with help received | 3.17 | 0.87 |
| 6. Helped in dealing more effectively with problems | 3.47 | 0.57 |
| 7. Overall satisfaction | 3.60 | 0.62 |
| 8. Would use it again in the future | 3.57 | 0.50 |
| Total Satisfaction | 27.77 | 3.85 |

*The CSQ-8 was used with permission of Tamalpais Matrix Systems, LLC.
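The unweighted summation used to score the CSQ-8 can be sketched in a few lines of Python. Each of the eight items is rated from 1 to 4, so a respondent's total ranges from 8 to 32. The function name `csq8_total` is hypothetical, and the item means below are transcribed from Table 3. If every respondent answered all eight items, the mean total equals the sum of the item means.

```python
def csq8_total(ratings):
    """Unweighted total for one respondent: eight items, each rated 1-4."""
    if len(ratings) != 8:
        raise ValueError("CSQ-8 requires exactly eight item ratings")
    if any(r not in (1, 2, 3, 4) for r in ratings):
        raise ValueError("each rating must be an integer from 1 to 4")
    return sum(ratings)


# Item means transcribed from Table 3 (items 1 through 8).
TABLE_3_ITEM_MEANS = [3.63, 3.50, 3.23, 3.60, 3.17, 3.47, 3.60, 3.57]

# Sum of item means, to compare with the Total Satisfaction row of Table 3.
sum_of_item_means = sum(TABLE_3_ITEM_MEANS)

if __name__ == "__main__":
    print(csq8_total([4, 4, 3, 4, 3, 3, 4, 4]))
    print(f"{sum_of_item_means:.2f}")
```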

The high satisfaction reported in the CSQ-8 survey at exit was similar to the text-message survey responses reported at 90 days. Forty-five percent (n = 26) of enrolled patients answered the mid-point survey, and 85% answered “yes” to the question: “Did Care4Life improve your knowledge of diabetes and how to manage it?” Similarly, 94% of respondents answered “yes” to the question: “Would you recommend Care4Life to other patients with diabetes?”

Discussion and Conclusions

There was no significant difference in the primary outcome between the intervention and control groups at 90 or 180 days. Enrollment and interaction data indicate that patients used the program in a variety of ways, and at least half of them valued the program enough to continue using it beyond the scope of the study. Some patients engaged with the program more frequently and for a longer period of time than others. Fourteen individuals were unable to overcome the first hurdle of replying to the welcome message, perhaps reflecting unfamiliarity with text messaging functionality. Future research should examine what factors predict engagement with digital tools for self-management such as the one tested here.

The high satisfaction reported by patients in the intervention arm of the study may reflect the unmet need for disease management and behavioral support for diabetes. This finding was surprising given that the screening criterion of an A1C >8% in the past year would have selected longer-term diabetes patients who had been managing their condition for some time, whereas the program was primarily designed for newly diagnosed patients. Despite this, the program appears to have provided needed (and perhaps new) information about how to manage diabetes.

Contrary to previous surveys (15), medical providers in this study expressed enthusiasm for the intervention and an eagerness to try new approaches that may assist patients with the difficult task of managing diabetes outside of the clinic setting. The participating primary care practices included clinics that were already amenable to change and improvement (by virtue of the fact that they had joined the Utah Beacon initiative), and they readily embraced this mHealth intervention.

Recruiting patients to enroll in this intervention was difficult. Many patients were unfamiliar with texting, and those with lower technology proficiency typically required 15–30 minutes of one-on-one support to fully understand and enroll in the program. The cost efficiencies anticipated for mHealth technologies such as this one may be tempered by the significant front-end labor required to recruit and enroll patients given that both the format and the technology may be new to many patients with chronic diseases, particularly older patients.

Future efforts to integrate mHealth tools into the primary care clinic environment may need to address the cost and time barriers that currently hamper broader adoption. Emerging payment paradigms such as Medicare’s Accountable Care Organization model (16) may alleviate some of the front-end enrollment burden that would be shouldered by providers who offered this support program as part of their care management services. Furthermore, some mHealth programs are now seeking U.S. Food and Drug Administration approval and securing reimbursement as a diabetes therapy from insurance payers (17); these developments could also speed provider adoption.

Although this program included an option for patients to share their results with their provider, few actually did so. However, this could be attributable to the design of our intervention, which did not require a strong connection between the text message program and the care team. Future strategies could explore integrating the tool into the clinical process, and, ideally, using the self-reported data available through the program to inform a shared care plan. Such a strategy may allow for a richer view of patient behavior beyond the clinical encounter and could reinforce the role of patients as the drivers of health behavior change and key partners in improving health outcomes.

Study Limitations

This study employed a pragmatic, community-based research design that relied on the EHRs of participating medical practices as surveillance tools. Furthermore, the study targeted patients with poorly controlled diabetes who were likely more difficult to recall to the clinic for regular A1C testing. This is reflected in the large proportion of patients enrolled in the study who did not receive A1C tests at recommended intervals, which created power issues for the analysis. Unfortunately, the data do not provide insight as to why this was the case (e.g., patient refusal, lapses in care management on the part of the clinic, or gaps in documentation in the EHR), but, clearly, a more robust effort was needed to recall patients for testing for this data collection approach to yield the anticipated results.

Our ability to obtain meaningful results was also limited by the wide variation in the timing of baseline A1C measures relative to the study onset; one-third of the subjects (n = 26) ultimately included in the evaluation had baseline data that preceded the commencement of the intervention by >6 months, limiting our ability to measure the effect of the intervention. However, the small gain in blood glucose control observed here is consistent with similar studies that examined change in A1C for programs that delivered messages to patients by mobile phone (18).

Our primary outcome, change in A1C, is difficult to affect in a short timeframe (6 months), and our sample size was small. Furthermore, the research occurred in the context of a broader quality improvement effort and was concurrent with a variety of other initiatives focused on diabetes care that may have affected our results. The study design did not allow for controlling for these other interventions.

Acknowledgments

The authors acknowledge the funders for this project, the Office of the National Coordinator for Health Information Technology and the Center for Technology and Aging, and the 18 primary care practices that participated in it. They thank Utah Beacon colleagues Mike Silver and Gary Berg for their work on the project and Nicholas H. Wolfinger, PhD, Yue Zhang, PhD, Angela Presson, PhD, and Sharif Taha, PhD, of the University of Utah and Abigail Schachter, Alison Rein, and Raj Sabharwal of AcademyHealth for their valuable insights and contributions.

Duality of Interest

No potential conflicts of interest relevant to this article were reported.

References

- 1. Renders CM, Valk GD, Griffin S, Wagner EH, Eijk JT, Assendelft WJ. Review: interventions to improve the management of diabetes mellitus in primary care, outpatient, and community settings. Cochrane Database Syst Rev 2001;1:CD001481

- 2. Kurtz MS. Adherence to diabetes regimens: empirical status and clinical applications. Diabetes Educ 1990;16:50–56

- 3. Centers for Disease Control and Prevention. Number of Americans with diabetes projected to double or triple by 2050. Available from http://www.cdc.gov/media/pressrel/2010/r101022.html. Accessed 3 June 2013

- 4. World Health Organization. mHealth: new horizons for health through mobile technologies. Global Observatory for eHealth series, Vol. 3, 2011. Available from http://www.who.int/goe/publications/ehealth_series_vol3/en. Accessed 15 May 2013

- 5. Duggan M, Rainie L. Cell phone activities: 2012. Pew Internet and American Life Project, 25 November 2012. Available from http://pewinternet.org/Reports/2012/Cell-Activities.aspx. Accessed 12 February 2013

- 6. Lenhart A. Cell phones and American adults, 2010. Pew Internet and American Life Project. Available from http://pewinternet.org/~/media//Files/Reports/2010/PIP_Adults_Cellphones_Report_2010.pdf. Accessed 3 June 2013

- 7. Liang X, Wang Q, Yang X, Cao J, Chen J, Mo X. Effect of mobile phone intervention for diabetes on glycaemic control: a meta-analysis. Diabet Med 2011;28:455–463

- 8. Fjeldsoe BS, Marshall AL, Miller YD. Behavior change interventions delivered by mobile telephone short-message service. Am J Prev Med 2009;36:165–173

- 9. Cole-Lewis H, Kershaw T. Text messaging as a tool for behavior change in disease prevention and management. Epidemiol Rev 2010;32:56–69

- 10. Smith A. The best (and worst) of mobile connectivity. Pew Internet and American Life Project. 30 November 2012. Available from http://www.pewinternet.org/PPF/r/213/report_display.asp. Accessed 12 November 2014

- 11. American Diabetes Association. Clinical practice recommendations, 2014. Available from http://professional.diabetes.org/ResourcesForProfessionals.aspx?cid=84160. Accessed 3 September 2014

- 12. Head KJ, Noar SM, Iannarino NT, Grant Harrington N. Efficacy of text messaging–based interventions for health promotion: a meta-analysis. Soc Sci Med 2013;97:41–48

- 13. Larsen DL, Attkisson CC, Hargreaves WA, Nguyen TD. Assessment of client/patient satisfaction: development of a general scale. Eval Program Plann 1979;2:197–207

- 14. Nguyen TD, Attkisson CC, Stegner BL. Assessment of patient satisfaction: development and refinement of a service evaluation questionnaire. Eval Program Plann 1983;6:299–313

- 15. PricewaterhouseCoopers. Emerging mHealth: paths for growth. Available from http://www.pwc.com/en_GX/gx/healthcare/mhealth/assets/pwc-emerging-mhealth-full.pdf. Accessed 4 June 2013

- 16. Centers for Medicare & Medicaid Services. Accountable care organizations (ACO). Available from http://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/ACO/index.html?redirect=/ACO. Accessed 3 September 2014

- 17. Dolan B. WellDoc’s BlueStar secures first mobile health reimbursement. Available from http://mobihealthnews.com/23026/welldocs-bluestar-secures-first-mobile-health-reimbursement. Accessed 4 June 2013

- 18. Pal K, Eastwood SV, Michie S, et al. Computer-based diabetes self-management interventions for adults with type 2 diabetes mellitus. Cochrane Database Syst Rev 2013;1:CD008776