Abstract

Background and objective The clinical note documents the clinician's information collection, problem assessment, and clinical management, and it is used for administrative purposes. Electronic health records (EHRs) are being implemented in clinical practices throughout the USA, yet it is not known whether they improve the quality of clinical notes. The goal of this study was to determine whether EHRs improve the quality of outpatient clinical notes.

Materials and methods A five-and-a-half-year longitudinal retrospective multicenter quantitative study compared the quality of handwritten and electronic outpatient clinical visit notes for 100 patients with type 2 diabetes at three time points: 6 months before the introduction of the EHR (before-EHR), 6 months after the introduction of the EHR (after-EHR), and 5 years after the introduction of the EHR (5-year-EHR). QNOTE, a validated quantitative instrument, was used to assess the quality of outpatient clinical notes; its scores range from 0 (lowest) to 100 (highest). Sixteen primary care physicians with active practices used QNOTE to rate the quality of the 300 patient notes.

Results The before-EHR, after-EHR, and 5-year-EHR grand mean scores (SD) were 52.0 (18.4), 61.2 (16.3), and 80.4 (8.9), respectively, and the changes in scores from before-EHR to after-EHR and from before-EHR to 5-year-EHR were 18% (p<0.0001) and 55% (p<0.0001), respectively. All the element and grand mean quality scores significantly improved over the 5-year time interval.

Conclusions The EHR significantly improved the overall quality of the outpatient clinical note and the quality of all its elements, including the core and non-core elements. To our knowledge, this is the first study to demonstrate that the EHR significantly improves the quality of clinical notes.

Keywords: QNOTE, electronic health record, clinical quality, clinical note, note quality

INTRODUCTION

The clinical note1,2 documents the physician's information collection,3–7 problem assessment,3–7 and clinical management.3–8 In addition to its clinical uses, it is important for patient safety,5,6,9–11 quality assurance,5,12,13 legal proceedings,4,5,14 billing justification,4,6,14,15 and medical education.3,16–18 EHRs are being implemented in clinical practices throughout the USA, yet the basic functions of clinical notes have not changed despite this transition from a paper to an electronic format. The benefits of EHRs include the instantaneous availability of medical records6,9,16 and the elimination of illegible notes.6,16,19,20 It is not known whether EHRs improve the quality of clinical notes.

We developed a quantitative instrument, QNOTE, to measure clinical note quality. A validation study found QNOTE to be a valid and reliable measure of clinical note quality.2 We used QNOTE to assess the quality of the outpatient primary care clinical notes for patients with type 2 diabetes who were seen in clinic at three successive time points: approximately 6 months before EHR implementation (before-EHR), approximately 6 months after EHR implementation (after-EHR), and approximately 5 years after EHR implementation (5-year-EHR). We hypothesized that the implementation of an EHR would improve the quality of outpatient clinical visit notes.

METHODS

This is a five-and-a-half-year longitudinal retrospective multicenter study. QNOTE is a validated quantitative instrument that assesses the quality of the clinical note in terms of 12 clinical elements: chief complaint, history of present illness (HPI), problem list, past medical history, medications, adverse drug reactions and allergies, social and family history, review of systems, physical findings, assessment, plan of care, and follow-up information. The seven components used to assess the elements are clear, complete, concise, current, organized, prioritized, and sufficient information. The external and internal validations of QNOTE have been described previously, and the format of the note was shown not to affect the quality ratings.2

Briefly, we used the outpatient physician notes of patients with type 2 diabetes (who could have comorbid conditions) who had been seen in clinic on at least three occasions. These notes were also used in the prior instrument validation study. The three occasions were approximately 6 months before EHR adoption (before-EHR), approximately 6 months after EHR adoption (after-EHR), and approximately 5 years after EHR adoption (5-year-EHR). This yielded three outpatient clinical visit notes per patient. One-third of the notes were handwritten (before-EHR) and two-thirds were electronic (after-EHR and 5-year-EHR). The before-EHR visit notes were free text, and the after-EHR and 5-year-EHR visit notes were electronic templates structured by the physicians. QNOTE has been shown to be equally reliable for assessing handwritten and electronic notes.2 From a pool of 537 patients, 100 study patients were randomly selected, resulting in 300 study notes: 100 before-EHR, 100 after-EHR, and 100 5-year-EHR.

To rate the visit notes, we recruited 8 general internal medicine and 8 family medicine physicians from the Military Health System (MHS) in the District of Columbia metropolitan area; 10 were military physicians and 6 were civilian physicians, and none had prior experience assessing the quality of clinical notes. Before starting their reviews, the raters were shown the QNOTE instrument and instructed on how to fill it out online. They were told to read each note and score it using QNOTE; they received no further training. The notes and raters were each independently randomized before the notes were given to the raters. Raters scored the components of each element as fully acceptable, partially acceptable, or unacceptable. Not all of the components were used to evaluate every element, but every element was evaluated using at least one component. After the raters completed their ratings of the elements’ components, each component rating was converted to a score: fully acceptable (100), partially acceptable (50), and unacceptable (0). The score for an element was the average of its component scores. The reviewers were not contacted during the review process, and they were not compensated for participating in the study. The Uniformed Services University of the Health Sciences Institutional Review Board approved the study.
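The element scoring rule described above can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the QNOTE software itself: the function name `element_score` and the example ratings are hypothetical, and the set of components attached to the example element is chosen for illustration only, because the component-to-element mapping is not given here.

```python
from statistics import mean

# Rating-to-score mapping as stated in the Methods.
RATING_SCORES = {
    "fully acceptable": 100,
    "partially acceptable": 50,
    "unacceptable": 0,
}


def element_score(component_ratings):
    """Return one element's score: the mean of its component scores.

    component_ratings maps a component name (e.g. "clear") to a rating
    string; only the components used for this element are included.
    """
    if not component_ratings:
        # Every element is evaluated with at least one component.
        raise ValueError("an element needs at least one rated component")
    return mean(RATING_SCORES[rating] for rating in component_ratings.values())


# Hypothetical ratings of one note's HPI (illustrative component set).
hpi_ratings = {
    "clear": "fully acceptable",
    "complete": "partially acceptable",
    "concise": "fully acceptable",
    "organized": "unacceptable",
}
print(element_score(hpi_ratings))  # 62.5
```

Averaging over only the rated components keeps elements with different numbers of applicable components on the same 0 to 100 scale.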

QNOTE scores are reported as means (range 0 to 100) and SDs. The element scores were compared with their grand mean scores using Student's t-test. The changes in the element scores and grand mean scores across the time intervals were calculated as percentages and compared using Student's paired t-test. Pearson's correlation coefficient was used to compare elements over time. The F-test was used to test for equality of variance. All calculations were performed using SAS 9.7 (Cary, North Carolina, USA).
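As a check on the percent-change calculation, the sketch below recomputes a few Table 2 entries from the rounded element means in Table 1. It assumes percent change is defined as (later score − before-EHR score) / before-EHR score × 100; the helper name `percent_change` is hypothetical. It does not reproduce the paired t-tests, which require the per-note scores.

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, expressed in percent."""
    return (after - before) / before * 100


# (before-EHR, after-EHR, 5-year-EHR) means copied from Table 1.
TABLE1_MEANS = {
    "Chief complaint": (62.5, 70.9, 78.2),
    "History of present illness": (63.2, 60.5, 84.7),
    "Grand mean": (52.0, 61.2, 80.4),
}

for name, (before, after, five_year) in TABLE1_MEANS.items():
    print(
        f"{name}: before vs after {percent_change(before, after):.0f}%, "
        f"before vs 5-year {percent_change(before, five_year):.0f}%"
    )
```

For these three rows the rounded results (13% and 25%, −4% and 34%, 18% and 55%) agree with Table 2.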

RESULTS

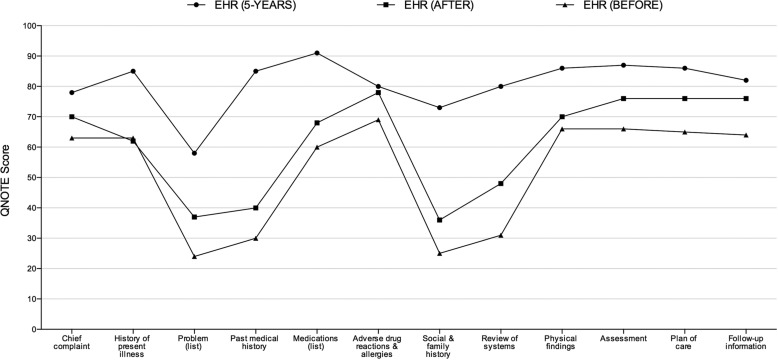

The before-EHR, after-EHR, and 5-year-EHR grand mean scores (SDs) were 52.0 (18.4), 61.2 (16.3), and 80.4 (8.9), respectively (table 1), and the changes in scores from before-EHR to after-EHR and from before-EHR to 5-year-EHR were 18% (p<0.0001) and 55% (p<0.0001), respectively (table 2). All the element and grand mean quality scores significantly improved over the 5-year time interval. The improvement in scores was associated with increasing similarity of the element scores (figure 1). The correlation between element scores was r = 0.96 (p<0.0001) for before-EHR versus after-EHR and r = 0.61 (p = 0.035) for before-EHR versus 5-year-EHR. Over time, the element scores became less correlated with the before-EHR scores.
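The reported correlations appear consistent with Pearson's r computed across the 12 element means in Table 1; that unit of analysis is an assumption, since the Methods do not state it. The sketch below computes r from those means using only the Python standard library; the helper name `pearson_r` is hypothetical, and p-values are not computed.

```python
from math import sqrt

# Element means from Table 1, in table order (chief complaint ... follow-up).
BEFORE = [62.5, 63.2, 24.0, 29.4, 59.0, 69.1, 25.4, 30.7, 66.3, 65.5, 65.4, 63.5]
AFTER = [70.9, 60.5, 38.6, 39.4, 67.1, 77.3, 35.4, 48.1, 70.3, 75.8, 75.7, 75.7]
FIVE_YEAR = [78.2, 84.7, 59.6, 84.7, 90.8, 79.3, 72.7, 80.4, 85.8, 86.6, 85.3, 81.7]


def pearson_r(x, y):
    """Pearson's correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sums of cross-products and of squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


print(f"before vs after:  r = {pearson_r(BEFORE, AFTER):.2f}")      # r = 0.96
print(f"before vs 5-year: r = {pearson_r(BEFORE, FIVE_YEAR):.2f}")  # r = 0.61
```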

Table 1:

QNOTE mean element scores (SD) at each time point and each element's mean score compared with its grand mean score

| Elements | Before-EHR | After-EHR | 5-year-EHR |
|---|---|---|---|
| Chief complaint | 62.5 (28.3) | 70.9 (26.9) | 78.2 (23.7) |
| History of present illness | 63.2 (26.1) | 60.5 (33.8) | 84.7 (18.3) |
| Problem list | 24.0 (28.9)* | 38.6 (31.6)* | 59.6 (31.2)* |
| Past medical history | 29.4 (36.4)* | 39.4 (35.1)* | 84.7 (24.0) |
| Medications | 59.0 (39.0) | 67.1 (32.3) | 90.8 (17.7) |
| Adverse drug reactions and allergies | 69.1 (33.7)† | 77.3 (30.4)† | 79.3 (29.4) |
| Social and family history | 25.4 (27.9)* | 35.4 (30.9)* | 72.7 (26.0)* |
| Review of systems | 30.7 (33.3)* | 48.1 (38.7)* | 80.4 (23.8) |
| Physical findings | 66.3 (27.6)† | 70.3 (32.9) | 85.8 (16.5) |
| Assessment | 65.5 (24.4)† | 75.8 (21.4)† | 86.6 (14.5)† |
| Plan of care | 65.4 (24.6)† | 75.7 (21.7)† | 85.3 (15.3) |
| Follow-up information | 63.5 (27.0) | 75.7 (22.3)† | 81.7 (20.5) |
| Grand mean | 52.0 (18.4) | 61.2 (16.3) | 80.4 (8.9) |

*Element score is significantly below the grand mean (p<0.05). †Element score is significantly above the grand mean (p<0.05).

EHR, electronic health record.

Table 2:

Comparison of percent change in QNOTE element scores over time (p values)

| Elements | Before-EHR vs after-EHR | Before-EHR vs 5-year-EHR |
|---|---|---|
| Chief complaint | 13% (<0.05) | 25% (<0.0001) |
| History of present illness | −4% (NS) | 34% (<0.0001) |
| Problem list | 61% (<0.002) | 144% (<0.0001) |
| Past medical history | 34% (NS) | 188% (<0.0001) |
| Medications | 14% (NS) | 54% (<0.0001) |
| Adverse drug reactions and allergies | 12% (NS) | 15% (<0.05) |
| Social and family history | 39% (<0.05) | 186% (<0.0001) |
| Review of systems | 57% (<0.0005) | 162% (<0.0001) |
| Physical findings | 6% (NS) | 29% (<0.0001) |
| Assessment | 16% (<0.002) | 32% (<0.0001) |
| Plan of care | 16% (<0.002) | 31% (<0.0001) |
| Follow-up information | 19% (<0.0005) | 29% (<0.0001) |
| Grand mean | 18% (<0.0001) | 55% (<0.0001) |

EHR, electronic health record; NS, not significant.

Figure 1:

The shape of QNOTE element scores at the three time points.

The significant shift to higher quality scores was accompanied by a significant reduction in the grand mean SD from before-EHR to 5-year-EHR (18.4 to 8.9, p = 0.015). Except for the problem list, whose variance did not change significantly over time, the variances of all the elements decreased from before-EHR to 5-year-EHR.
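For the grand mean SDs in Table 1 (18.4 before-EHR and 8.9 at 5-year-EHR), the F statistic for equality of variances is the ratio of the larger variance to the smaller. The sketch below computes only that ratio; the helper name `variance_ratio` is hypothetical. A p-value additionally requires the degrees of freedom (one less than the number of observations behind each SD), which are not stated here and would have to be assumed.

```python
# Grand mean SDs from Table 1.
SD_BEFORE = 18.4
SD_FIVE_YEAR = 8.9


def variance_ratio(sd_a, sd_b):
    """F statistic for equality of variances: larger variance over smaller."""
    var_a, var_b = sd_a ** 2, sd_b ** 2
    return max(var_a, var_b) / min(var_a, var_b)


f_stat = variance_ratio(SD_BEFORE, SD_FIVE_YEAR)
print(f"F = {f_stat:.2f}")  # F = 4.27
```

With SciPy available and the degrees of freedom known, `scipy.stats.f.sf(f_stat, dfn, dfd)` would give the upper-tail probability for this statistic.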

At the before-EHR time point, four elements (problem list, past medical history, social and family history, and review of systems) were significantly below the before-EHR grand mean (table 1). These four elements’ scores showed the largest improvements over time. Also at the before-EHR time point, four elements (adverse drug reactions and allergies, physical findings, assessment, and plan of care) were significantly above the before-EHR grand mean. These elements’ scores also improved significantly, but less so than those of the four elements that had been below the grand mean.

DISCUSSION

The introduction of the EHR significantly improved the quality of the outpatient clinical notes. All the element and grand mean quality scores significantly improved, some within 6 months and all by the end of 5 years. This suggests that it took the physicians some time to learn how to use the capabilities of the EHR effectively and that its structural design caused them to write more detailed and comprehensive notes. The decline in the correlation between the before-EHR element scores and the later (after-EHR and 5-year-EHR) element scores reflects changes in the elements' relationships due to the introduction of the EHR. The reduction in element score variances by the end of 5 years demonstrates that the improvement in scores occurred across physicians, rather than in a subset of physicians.

The ‘core’ elements that physicians focus on during the patient visit are the chief complaint, HPI, physical findings, assessment, plan of care, and follow-up. At the before-EHR time point, all the core elements had quality scores greater than 60, which suggests that the physicians were focusing on them during the patient visit before the advent of the EHR. The core elements’ average score increased from 64.4 (before-EHR) to 83.7 (5-year-EHR) (p<0.001), a significant 30% increase in quality. Because the core elements are not produced by autopopulation or other automated systems, it is reasonable to conclude that the EHR's effect on physician performance is responsible for the observed 30% improvement in core note quality.
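The core-element averages quoted above can be recomputed from the Table 1 element means. This sketch assumes the core average is the unweighted mean of the six core element scores, an assumption that reproduces the reported 64.4 and 83.7; the variable names are hypothetical, and the p-value is not reproduced because it requires the per-note data.

```python
from statistics import mean

# (before-EHR, 5-year-EHR) means of the six core elements, from Table 1.
CORE_ELEMENT_MEANS = {
    "Chief complaint": (62.5, 78.2),
    "History of present illness": (63.2, 84.7),
    "Physical findings": (66.3, 85.8),
    "Assessment": (65.5, 86.6),
    "Plan of care": (65.4, 85.3),
    "Follow-up information": (63.5, 81.7),
}

# Unweighted mean across the six core elements at each time point.
core_before = mean(before for before, _ in CORE_ELEMENT_MEANS.values())
core_five_year = mean(five_year for _, five_year in CORE_ELEMENT_MEANS.values())
pct_increase = (core_five_year - core_before) / core_before * 100

print(f"{core_before:.1f} -> {core_five_year:.1f} ({pct_increase:.0f}% increase)")
# 64.4 -> 83.7 (30% increase)
```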

Many EHRs include check boxes, presumably to make it easier for physicians to complete a detailed and comprehensive note. But check boxes have two unintended pernicious consequences: they provide an opportunity for physicians to gloss over important aspects of the note, and they reduce our ability to assess the quality of the note. EHRs also create larger boxes within which specific information is entered. The problem with this approach is that the information associated with QNOTE's elements may be scattered throughout the note. For example, the HPI is a chronological story that explores the patient's chief complaint.21 The HPI contains all the information relevant to the chief complaint, and it can include information from many parts of the note. Furthermore, complex patients can generate complex notes that do not easily fit into discrete boxes. Finally, a recent survey of physicians found that onscreen boxes make it more difficult to practice medicine, turning face time into screen time and thus into physician frustration.22 It would be better to assist physicians with the non-core elements of their notes but allow them to write their core elements themselves. We can then use QNOTE, in conjunction with natural language processing, to assess the core elements and note quality.

There has been a great deal of interest in the copying and pasting of information from previous notes into the current note.23,24 Physicians have been copying from previous notes since long before the introduction of the EHR. It was a time-consuming but necessary activity to carry forward and incorporate relevant clinical information into the daily note. What is different today is that, because little effort is required to cut and paste material in an EHR, indiscriminate copying and pasting is occurring, resulting in bloated and confusing notes that contain redundant, outdated, and even incorrect information. Eliminating the ability of physicians to cut and paste is not a viable option because there are many occasions when important past information needs to be included in the current note. QNOTE is sensitive to inappropriate cutting and pasting; when it occurs, the QNOTE score suffers. Thus, one way to end this practice is to evaluate clinicians’ notes and provide them with feedback regarding note quality.

The clinical note is the basis for medical coding and billing; billing levels are based on the evaluation and management (E&M) information contained in the note. In recent years there has been an increase in upcoding; between 2001 and 2010, physicians significantly increased their billing for higher-level E&M codes.25 There is a proposal that the American Medical Association's proprietary Current Procedural Terminology (CPT) coding system require physicians to provide substantially more documentation of their medical decision making.26 If this is approved, more attention will have to be paid to the quality of information in the note. In addition, the new International Classification of Diseases, Tenth Revision, Clinical Modification (ICD-10-CM) requires more documentation.27 Currently, there is no standard for assessing note quality, and without a standard it is difficult to provide physicians with the systematic feedback they need to improve and maintain the quality of their notes. QNOTE can assist physicians in evaluating their notes and can help them discover and correct deficits in their documentation.

The physician–patient encounter can be analyzed in terms of at least three interrelated levels of analysis. The first level is the clinical note, which is assessed in terms of the quality of its elements, that is, clinical note quality. The second level is the clinical encounter, which is assessed in terms of the quality of the physicians’ collection of the relevant clinical information, their analysis of the information, and their clinical plan based on their analysis, that is, clinical quality. The third level is the clinical outcome, which is assessed in terms of the health of the patient, usually measured by one or more clinical endpoints, that is, clinical outcomes. In this view, a high-quality clinical note is necessary, but not sufficient, for determining the quality of the clinical encounter: if the clinical note is not of sufficient quality, the clinical quality of the encounter cannot be determined, yet a high-quality note does not guarantee a high-quality clinical encounter. Likewise, a high-quality clinical note is necessary but not sufficient for assessing patient outcomes.

We selected patients with type 2 diabetes because their visits are generalizable to all but the simplest primary care visits and require full and complete clinical notes. Type 2 diabetes is the second most common diagnosis, after hypertension, in ambulatory medicine.28 Patient visits can be for diabetes alone, which includes tests and medications, but more commonly they include the management of multiple comorbid conditions. Although we call these visits diabetic because the patients have type 2 diabetes, in reality these patients collectively have most major diseases, including cardiovascular, renal, neurological, infectious, and musculoskeletal disease, and they exhibit the full range of severity of illness.29 In a recent population-based survey of 3761 adults with type 2 diabetes, 82.4% reported one or more comorbidities, with a mean of 2.4 comorbidities,29 and the average patient with diabetes takes three or more medications.30 In other words, visits of patients with diabetes involve a wide variety of diseases representing the spectrum of clinical medicine and, because of the patient's complexity, the physician's clinical note must be detailed, accurate, and complete. By contrast, visits for acute, self-limiting illnesses usually produce notes too brief for a proper evaluation of their quality.

This study has several limitations. First, it was performed using MHS records. However, it is well established that the distribution of MHS patients is similar to that of the civilian community and that the MHS provides care equivalent to that of the civilian community.31–34 Second, although the MHS EHR is similar in most respects to commercial EHRs, these results may not be generalizable to all EHR systems. Third, we know of no MHS-wide billing or diabetes quality improvement initiatives related to the clinical note, but if there were any, they might account for some, but not most, of our findings. Fourth, we assessed primary care clinic visits; we do not know the relationship between the introduction of the EHR and specialty clinic visit note quality.

Our study has several strengths. It was a large, five-and-a-half-year longitudinal multicenter study that used the validated QNOTE instrument to rigorously evaluate the impact of the EHR on the quality of clinical notes. This is the first longitudinal quantitative assessment of the quality of outpatient clinical notes using a validated instrument.

CONCLUSIONS

The EHR significantly improved the overall quality of the outpatient clinical note and the quality of all its elements, including the core and non-core elements. To our knowledge, this is the first study to demonstrate that the EHR significantly improves the quality of clinical notes.

ACKNOWLEDGEMENTS

The authors acknowledge and appreciate the support of Patrice Waters-Worley, MBA, Michelle Helaire, EdD, and Karla Herres, RN, CPHQ of Lockheed Martin, Inc who traveled nationally to the five medical facilities participating in the study, coordinating the collection, de-identification, and indexing of clinical notes. These contributors were compensated for their efforts. Written permission was obtained from each of these contributors.

CONTRIBUTORS

All the authors provided substantial contributions to conception and design, acquisition of data, or analysis and interpretation of data; they assisted in drafting the article or revising it critically for important intellectual content; and they provided final approval of the version to be published.

FUNDING

Extramural funding was provided by the US Army Medical Research & Materials Command, cooperative agreement W81XWH-08-2-0056. All of the authors are employed by the Uniformed Services University of the Health Sciences or another entity of the US Federal Government. There are no author financial affiliations associated with this manuscript other than those related to their government employment.

DISCLAIMER

If the manuscript is accepted for publication, we are required by policy to include the following disclaimer: The views expressed in this manuscript are solely those of the authors and do not necessarily reflect the opinion or position of the Uniformed Services University of the Health Sciences, the US Army Medical Research & Materials Command, the National Library of Medicine, the US Department of Defense, or the US Department of Health and Human Services.

COMPETING INTERESTS

None.

ETHICS APPROVAL

Uniformed Services University of the Health Sciences.

DATA SHARING STATEMENT

An electronic version of QNOTE is available free of charge from the corresponding author.

PROVENANCE AND PEER REVIEW

Not commissioned; externally peer reviewed.

REFERENCES

1. Tang PC, LaRosa MP, Gorden SM. Use of computer-based records, completeness of documentation, and appropriateness of documented clinical decisions. J Am Med Inform Assoc 1999;6:245–51.
2. Burke HB, Hoang A, Becher D, et al. QNOTE: an instrument for measuring the quality of clinical notes. J Am Med Inform Assoc 2014;21:910–16.
3. Weed LL. Medical Records, Medical Education, and Patient Care: The Problem-oriented Record as a Basic Tool. Chicago: Year Book Medical Publishers, Inc, 1969.
4. Rosenbloom ST, Stead WW, Denny JC, et al. Generating clinical notes for electronic health record systems. Appl Clin Inform 2010;1:232–43.
5. Soto CM, Kleinman KP, Simon SR. Quality and correlates of medical record documentation in the ambulatory care setting. BMC Health Serv Res 2002;2:22–9.
6. Miller RH, Sim I. Physicians’ use of electronic medical records: barriers and solutions. Health Affairs 2004;23:116–26.
7. Meng F, Taira RK, Bui AAT, et al. Automatic generation of repeated patient information for tailoring clinical notes. Int J Med Inform 2005;74:663–73.
8. Pollard SE, Neri PM, Wilcox AR, et al. How physicians document outpatient visit notes in an electronic health record. Int J Med Inform 2013;82:39–46.
9. El-Kareh R, Gandhi TK, Poon EG, et al. Trends in primary care clinician perceptions of a new electronic health record. J Gen Intern Med 2009;24:464–8.
10. Johnson KB, Ravich WJ, Cowan JA Jr. Brainstorming about next-generation computer-based documentation: an AMIA clinical working group survey. Int J Med Inform 2004;73:665–74.
11. Sittig DF, Singh H. Electronic health records and National Patient Safety Goals. N Engl J Med 2012;367:1854–60.
12. Hofer TP, Asch SM, Hayward RA, et al. Profiling quality of care: is there a role for peer review? BMC Health Serv Res 2004;4:9–21.
13. Milchak JL, Shanahan RL, Kerzee JA. Implementation of a peer review process to improve documentation consistency of care process indicators in the EMR in a primary care setting. J Manag Care Pharm 2012;18:46–53.
14. Rosenbloom ST, Denny JC, Xu H, et al. Data from clinical notes: a perspective on the tension between structure and flexible documentation. J Am Med Inform Assoc 2011;18:181–6.
15. Silfen E. Documentation and coding ED patient encounters: an evaluation of the accuracy of the electronic health record. Am J Emerg Med 2006;24:664–78.
16. Embi PJ, Yackel TR, Logan JR, et al. Impacts of computerized physician documentation in a teaching hospital: perceptions of faculty and resident physicians. J Am Med Inform Assoc 2004;11:300–9.
17. Rouf E, Chumley HS, Dobbie AE. Electronic health records in outpatient clinics: perspectives of third year medical students. BMC Med Educ 2008;8:13.
18. Shaw N. Medical education & health informatics: time to join the 21st century? Stud Health Technol Inform 2010;160:567–71.
19. Staroselsky M, Volk LA, Tsurikova R, et al. An effort to improve electronic health record medication list accuracy between visits: patient's and physician's responses. Int J Med Inform 2008;77:153–60.
20. Black AD, Car J, Pagliari C, et al. The impact of eHealth on the quality and safety of health care: a systematic overview. PLoS Med 2011;8:e1000387.
21. Skeff KM. Reassessing the HPI: the chronology of present illness (CPI). J Gen Intern Med 2013;29:13–15.
22. Lowes R. EHR rankings hint at physician revolt: when it comes to electronic health records (EHR) systems, many physicians are clicked off. http://www.medscape.com/viewarticle/820101 (accessed 6 Feb 2014).
23. Hirschtick RE. Cut and paste. JAMA 2006;295:2335–6.
24. Thielke S, Hammond K, Helbig S. Copying and pasting of examinations within the electronic medical record. Int J Med Inform 2007;76(Suppl 1):S122–8.
25. Levinson DR. Coding trends of Medicare evaluation and management services. Department of Health and Human Services, Office of Inspector General, May 2012. oei-04-10-00180.
26. AMA. http://www.ama-assn.org/ama/pub/physician-resources/solutions-managing-your-practice/coding-billing-insurance/cpt.page (accessed 28 Apr 2014).
27. Gray L. ICD-10-CM documentation: capture the details of a patient encounter. Med Econ 2014;91:38–40.
28. CDC. Selected patient and provider characteristics for ambulatory care visits to physician offices and hospital outpatient and emergency departments: United States, 2009–2010. 2013. http://www.cdc.gov/nchs/fastats/diabetes.htm (accessed 22 Oct 2013).
29. Aung E, Donald M, Coll J, et al. The impact of concordant and discordant comorbidities on patient-assessed quality of diabetes care. Health Expect. Published Online First: 24 October 2013. doi:10.1111/hex.12151
30. Baillot A, Pelletier C, Dunbar P, et al. Profile of adults with type 2 diabetes and uptake of clinical care best practices: results from the 2011 Survey on Living with Chronic Diseases in Canada—Diabetes component. Diabetes Res Clin Pract 2014;103:11–19.
31. Jackson JL, Strong J, Cheng EY, et al. Patients, diagnoses, and procedures in a military internal medicine clinic: comparison with civilian practices. Mil Med 1999;164:194–7.
32. Jackson JL, O'Malley PG, Kroenke K. A psychometric comparison of military and civilian medical practices. Mil Med 1999;164:112–15.
33. Jackson JL, Cheng EY, Jones DL, et al. Comparison of discharge diagnoses and inpatient procedures between military and civilian health care systems. Mil Med 1999;164:701–4.
34. Gimbel RW, Pangaro L, Barbour G. America's undiscovered laboratory for health services research. Med Care 2010;48:751–6.