Abstract

Background The effects of electronic health records (EHRs) on doctor–patient communication are unclear.

Objective To evaluate the effects of EHR use, compared with paper chart use, on novice physicians’ communication skills.

Design Within-subjects randomized controlled trial using objective structured clinical examination methods to assess the impact of EHR use on communication.

Setting A large academic internal medicine training program.

Population First-year internal medicine residents.

Intervention Residents interviewed, diagnosed, and initiated treatment of simulated patients using a paper chart or an EHR on a laptop computer. Video recordings of interviews were rated by three trained observers using the Four Habits scale.

Results Thirty-two residents completed the study and had data available for review (61.5% of those enrolled in the residency program). In most skill areas in the Four Habits model, residents performed at least as well using the EHR and were significantly better in six of 23 skill areas (p<0.05). The overall average communication score was better when using an EHR: mean difference 0.254 (95% CI 0.05 to 0.45), p = 0.012, Cohen's d of 0.47 (a moderate effect). Residents scoring poorly (>3 average score) with paper methods (n = 8) had clinically important improvement when using the EHR.

Limitations This study was conducted in first-year residents in a training environment using simulated patients at a single institution.

Conclusions Use of an EHR on a laptop computer appears to improve the ability of first-year residents to communicate with patients relative to using a paper chart.

Keywords: electronic health records, provider-patient communication, physicians in-training, qualitative research, simulated patients

INTRODUCTION

One of the most important skills a physician can have is the ability to communicate well with his or her patients while conducting a well-organized and purposeful visit. Studies have shown that a patient's perception that their doctor listened and understood is crucial to clinical improvement, and is often more important than tests or even treatment.1,2 In this data-driven age, ‘Meaningful Use’ is pushing the transition from paper charting to electronic health record (EHR) use in the examination room. Researchers found that more than 68% of primary care doctors in the USA had adopted EHRs in 2011 and this number has continued to rise.3 Results of studies on the effects of EHRs on patient–provider communication in the examination room are mixed.4 Some studies have shown that patients view computer charting as beneficial and that satisfaction is not affected by EHR use.5–7 Other studies, using formal measurement of communication, have found that computer use has both positive and negative effects on communication. Rapid retrieval of information can build trust and confidence in the provider.8 However, screen gazing and typing appear to increase emotional distance and separation.9 Pearce et al.10 hypothesize that for some patients, the computer becomes a third party in patient–provider communication. Among clinician leaders there continues to be a concern that the use of computers in the examination room, necessary for government mandates to be met, is a distraction for both doctor and patient and inhibits effective communication.11

However, among physicians in training today, computers may not be so much a distraction as a tool. Physicians in training now were born into an era where computing is ubiquitous and access to information through computers is potentially more ‘normal’ than access through printed material. To examine the impact of the use of a computer in the exam room on the communication skills of first-year residents in internal medicine, we conducted a study using the Four Habits communication rating tool.8

METHODS

We recruited all available first-year internal medicine residents at the University of Utah School of Medicine to participate in our study as part of an educational assessment. The study was held in a large formal simulation laboratory where objective structured clinical examinations (OSCEs) are regularly conducted.

One patient was a 62-year-old man with chronic diabetes and elevated hemoglobin A1c. The other patient was a 69-year-old woman with symptomatic diastolic heart failure. Both cases were designed for the experience level of a first-year medical resident and both required residents to review the patient's medical history in order to develop the correct treatment plan. Experienced patient actors, who routinely trained residents, were selected based on their similarity to the test cases and availability. Four actors were trained to portray the male diabetes case. Six actors were trained to portray the female heart failure case. Actors received standardized instructions on how to portray the patient and before each session questions regarding the cases were discussed together. Actors received a copy of the communication rating tool and reviewed it between examinations to maintain their awareness of the issues on which the residents would be rated so they could provide feedback to the residents’ supervisors at the end of the study. Actors were not informed regarding the planned comparison of the effects of paper and EHRs.

The study was conducted using a randomized balanced crossover within-subject design with each resident treating one patient where information for care was accessed by computer chart and a second patient whose information was accessed in a well-organized typed paper chart with the same tabbed pages as the computer chart. Both the paper and EHR chart were styled after the Department of Veterans’ Affairs' computerized patient record system and were identical within pages except that the pages of the paper charts had to be turned to access older data, while the EHR required scrolling to access older data. Residents completed both examinations (computer and paper) on the same day in two sequential 30 min blocks.

Residents used a laptop computer to access the EHR, which they brought into the examination room and positioned as they saw fit. All were given brief training on use of the laptop to access the EHR system and were shown a sample paper chart before the test. Residents were given 30 min to review the health record, see each patient, and complete a note. At the start of each examination period, they were presented with a single paper page describing the patient's chief complaint and current vital signs and the electronic or paper chart. They could review the records for as long as they wanted before entering the room; however, the 30 min timer began when they received the records. Residents were encouraged to treat each examination as a ‘real’ clinical encounter but were not briefed that the test was to assess their communication skills.

The sequence of testing was carefully controlled to balance order (computer vs paper) and the type of case evaluated using the computer (chronic heart failure vs diabetes). Assignments were made using a computer-generated randomization table at the time of entry into the study. OSCE sessions were conducted on three dates, scheduled to maximize the availability of residents within working hour restrictions. Video recordings were made of each encounter for later review to evaluate the resident's communication skills. The video camera was focused on the physician so that both verbal and non-verbal cues could be captured.
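As an illustration of how such a balanced schedule could be generated, the following minimal sketch uses permuted blocks over the four combinations of chart order and EHR case. The text states only that a computer-generated randomization table was used, so the block approach, the labels, the function name `balanced_assignments`, and the seed are all assumptions made for this example.

```python
import random
from collections import Counter
from itertools import product

# Hypothetical labels: the study balanced chart order and which case was seen with the EHR.
ORDERS = ("EHR first", "paper first")
EHR_CASES = ("diabetes", "heart failure")


def balanced_assignments(n_residents, seed=0):
    """Return one (order, EHR case) pair per resident using permuted blocks of four."""
    cells = list(product(ORDERS, EHR_CASES))
    rng = random.Random(seed)          # seeded so the table can be regenerated
    schedule = []
    while len(schedule) < n_residents:
        block = cells[:]               # each block contains every cell exactly once
        rng.shuffle(block)             # random order within the block
        schedule.extend(block)
    return schedule[:n_residents]


assignments = balanced_assignments(37, seed=2012)
counts = Counter(assignments)
print(counts)
```

With blocks of four, the number of residents in any two cells never differs by more than one, whatever the final enrollment turns out to be.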

Three raters, all postgraduates, were trained to rate communication skills using the Four Habits instrument, which uses a Likert scale of 1 (highly effective) to 5 (not very effective). Raters received instruction on the communication skills commonly rated in clinical exams. Discussions were held on how to discriminate resident skill level. Raters listened to audio recordings of patient clinical exams, rated them independently and discussed differences in scoring. Finally, raters viewed video recordings of patient exams for independent scoring and discussed differences. Each rater received 20 h of training. Once training was complete, each rater viewed and independently scored each recorded clinical encounter. The independent ratings were averaged across the three raters for each patient exam, on an item-by-item basis, and the difference between EHR and paper-based records was then calculated for each resident and analyzed using mixed effects linear regression, controlling for the order of chart type used in the crossover design. In this model, the doctor was the random effect and record format (electronic vs paper) was the fixed effect, providing a matched sample analysis analogous to a paired t test. A similar analysis comparing electronic versus paper charts was performed on each doctor's average total score for the Four Habits questions. A scatter plot of each resident's EHR and paper score was generated. A t test was performed for residents who scored greater than 3 using the paper chart or computer chart to estimate the effects of switching modalities on identified potential problems.
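The scoring pipeline described above (item-by-item averaging across raters, then a within-resident comparison) can be sketched as follows. This is a simplified illustration, not the study's analysis: it uses the paired t statistic that the mixed model is described as analogous to, it does not adjust for chart order, and the ratings, resident labels, and function names are synthetic and hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

# Synthetic ratings, NOT study data: ratings[resident][chart] holds one list per rater,
# each list giving item scores on the 1 (highly effective) to 5 (not very effective) scale.
ratings = {
    "R1": {"ehr": [[2, 3], [2, 2], [3, 3]], "paper": [[3, 3], [3, 4], [3, 3]]},
    "R2": {"ehr": [[1, 2], [2, 2], [1, 1]], "paper": [[2, 2], [2, 1], [2, 2]]},
    "R3": {"ehr": [[3, 4], [3, 3], [4, 4]], "paper": [[4, 4], [4, 5], [4, 4]]},
    "R4": {"ehr": [[2, 2], [2, 3], [2, 2]], "paper": [[2, 2], [2, 2], [3, 2]]},
}


def item_means(rater_scores):
    """Average the raters' scores item by item."""
    return [mean(item) for item in zip(*rater_scores)]


def total_score(rater_scores):
    """Mean of the item-level averages, kept on the 1-5 scale."""
    return mean(item_means(rater_scores))


def paired_summary(data):
    """Mean paper-minus-EHR difference and the paired t statistic."""
    diffs = [total_score(r["paper"]) - total_score(r["ehr"]) for r in data.values()]
    mean_diff = mean(diffs)
    t_stat = mean_diff / (stdev(diffs) / sqrt(len(diffs)))
    return mean_diff, t_stat


mean_diff, t_stat = paired_summary(ratings)
print(mean_diff, t_stat)
```

Because lower scores indicate more effective communication, the difference is taken as paper minus EHR so that a positive value favors the EHR.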

The Four Habits questionnaire is a systematic approach that examines provider–patient communication at a subject matter level. It breaks up communication into four sequential behaviors (habits), based on an idealized model of how a visit should be conducted, and assesses the performance of the provider for each task. The Four Habits model reflects evidence from clinical trials that aim to improve exam room communication12 and, by and large, corresponds to the general consensus among educators on essential communication skills.13 Among other things, the Four Habits questionnaire measures a physician’s efforts to build a relationship with the patient, elicit the patient's perspective, demonstrate empathy, and share information, all complex tasks that may or may not be inhibited by simultaneous computer use. Specific activities assessed include performance in the initial phase of the visit directed at establishing rapport and shared agenda setting, followed by mutual decision making and efforts to ensure patient concerns have been addressed during the visit. While there are other scales that measure the quality of doctor–patient encounters from a communications perspective14 as well as approaches focused on micro communication and assessment of patterns that reflect good and natural two-way ‘flow’ in conversations,15 the Four Habits approach is well validated,16 is widely used clinically in physician communication training,17 and has been used in previous studies of the effects of EHRs on patient–provider communication.8 In addition to the standard Four Habits tasks, the authors created five questions to measure communication behaviors that may be specifically affected by computer use. These tasks, denoted by an asterisk (*) in figures 1 and 2, were developed in the style of the Four Habits and are based on the comments and concerns that practicing physicians have shared on-line and in the literature. These questions covered residents’ positioning of the chart or computer screen in view of the patient, skills at eye contact while reading and writing in the chart, and skills at speaking while reading and writing.

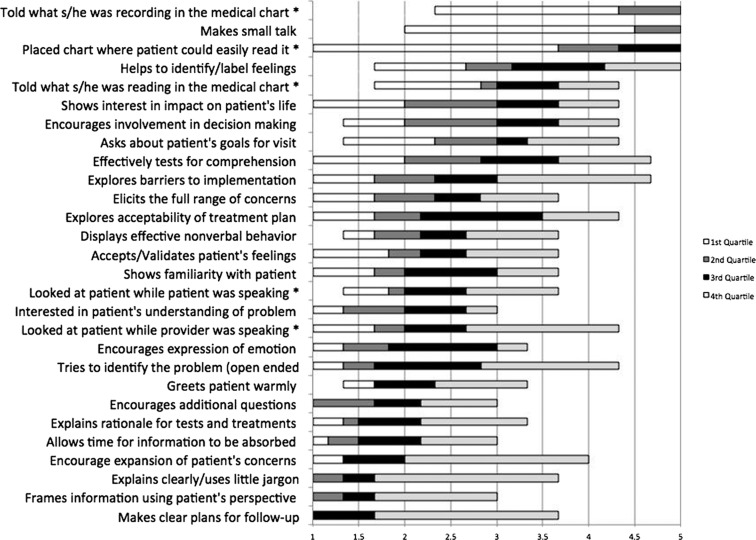

Figure 1:

Residents’ electronic health record (EHR) communication scores by task. * Indicates habits measured in addition to the Four Habits tool. A score of 1 indicates highly effective skills, while a score of 5 indicates not very effective skills.

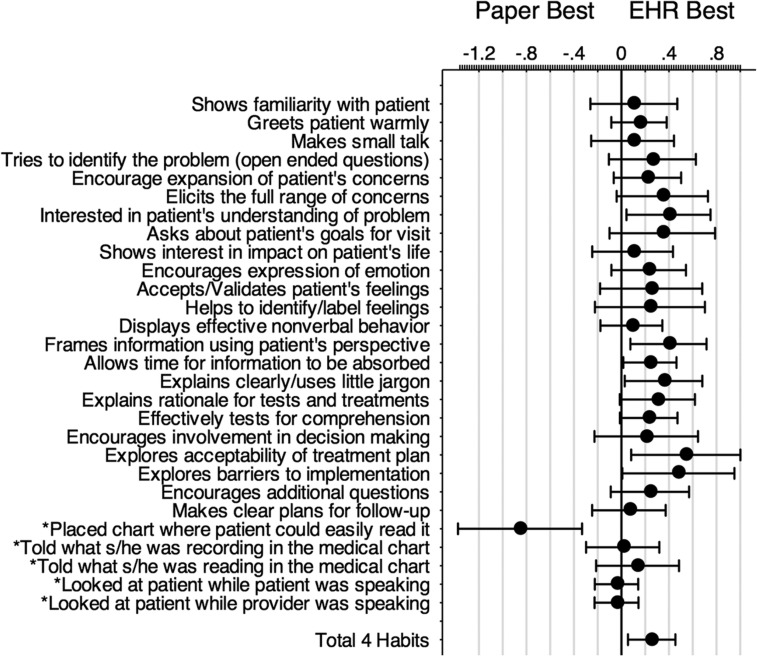

Figure 2:

Average difference between electronic health record (EHR) and paper scores for each communication skill. Positive scores indicate higher performance with the EHR. Bars show 95% CIs and dots show means. *Indicates communication skills measured in addition to the Four Habits items.

This study was reviewed by the University of Utah Human Subjects Institutional Review Board and ruled exempt because of its use of routine methods for educational assessment. The sample size was based on availability of residents as the study was designed as an educational exercise.

RESULTS

Subjects were recruited between August and October 2012. Thirty-seven of 52 first-year internal medicine residents participated in the study (71%). The remaining residents were unavailable due to clinical conflicts with scheduled OSCE sessions. The residents had an average age of 29 years and 62% were male. All had had previous experience using EHRs. They were superior touch-typists, averaging a typing speed of 53 words per minute with 98% accuracy.

All participating residents successfully completed the protocol. Video recordings of five participants were lost due to equipment failure, with losses closely balanced between groups. Thus, data were available on 32 (61.5%) residents in the first-year class. Table 1 summarizes the demographic features of these 32 residents. There was fair to good inter-rater agreement on Four Habits scale scores: paper ratings had an intra-class correlation coefficient (ICC) of 0.73 (95% CI 0.57 to 0.86), while EHR ratings had an ICC of 0.60 (95% CI 0.42 to 0.76).
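For readers unfamiliar with the ICC, the sketch below computes one common variant from a complete encounters-by-raters table. The text does not state which ICC form was used, so the choice of ICC(2,1) (two-way random effects, absolute agreement, single rater) is an assumption, and the function name `icc_2_1` and the example scores are hypothetical rather than study data.

```python
def icc_2_1(scores):
    """ICC(2,1) for a complete table: scores[i][j] is rater j's score for encounter i."""
    n, k = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Two-way ANOVA sums of squares: encounters (rows), raters (columns), residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Synthetic total scores for five encounters rated by three raters (not study data).
example = [
    [2.0, 2.5, 2.0],
    [3.0, 3.5, 3.0],
    [1.5, 2.0, 2.0],
    [4.0, 3.5, 4.0],
    [2.5, 2.5, 3.0],
]
print(round(icc_2_1(example), 2))
```

Values near 1 indicate that most of the variation in scores comes from real differences between encounters rather than from disagreement among raters.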

Table 1:

Participant demographics

| Characteristic | Value |
|---|---|
| Age, years | |
| Range | 26–38 |
| Mean | 29 |
| Gender | |
| Male | 19 (59%) |
| Female | 13 (41%) |
| Typing speed, words/min | |
| Range | 28–74 |
| Mean | 52 |
| Medical schools | 19 |
| Previous experience using paper charts* | 19 of 24 |

*Question added to protocol after first 13 subjects had completed testing.

The distributions of scores for each of the communication skills measured when using the EHR are shown in figure 1. Regardless of the type of chart used, residents performed poorly at certain communication tasks. Some of these tasks were more mechanical and were not performed effectively, for example, describing what they were doing when reading or writing/typing. Other activities requiring more thoughtful engagement also demonstrated less effective performance, for example, showing interest in the impact of the disease on the patient's life and asking questions about the patient’s goals for the visit. However, residents were highly effective at performing some tasks on average, for example, making clear plans for follow up, framing information from the patient's perspective, and avoiding jargon. There was no difference between male and female doctors in communication scores: females scored a mean of 0.01 points (95% CI −0.36 to 0.39) less on the Four Habits (p = 0.94). There was no impact of test order on total Four Habits score: mean difference 0.125 (95% CI −0.178 to 0.428) (p = 0.206).

However, for most of the 23 skills in the Four Habits set, residents performed, on average, at least as well when using an EHR as a paper chart, as shown in figure 2. Overall, performance was higher on the total Four Habits set when an EHR was used. Transformed to the same scale as individual items (1–5), the overall difference in Four Habits scores was 0.254 (95% CI 0.06 to 0.45) (p = 0.012), which is comparable to the SD of the (1–5 range transformed) total Four Habits score of 0.501 when using computers and 0.678 when using paper. The correlation observed between scores was 0.53. The effect size (Cohen's d) was estimated to be 0.476, a moderate-sized effect from a statistical perspective. No association was found between previous experience using paper records and the mean score when using paper methods: 2.45 (prior experience, n = 19) versus 2.81 (no experience, n = 5) (95% CI for difference −0.40 to 1.12).
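To show how the summary statistics above relate to a standardized effect size, the sketch below computes one common paired-design variant, the mean difference divided by the SD of the paired differences. The text does not say which standardizer was used for the reported Cohen's d, so this particular formula is an assumption and its result need not equal the reported value; the function names are hypothetical.

```python
from math import sqrt


def sd_of_difference(sd_a, sd_b, r):
    """SD of paired differences from the two condition SDs and their correlation."""
    return sqrt(sd_a**2 + sd_b**2 - 2 * r * sd_a * sd_b)


def cohens_d_z(mean_diff, sd_a, sd_b, r):
    """Standardized mean difference for paired data (the d_z convention)."""
    return mean_diff / sd_of_difference(sd_a, sd_b, r)


# Inputs taken from the reported summary statistics (EHR SD, paper SD, correlation).
d_z = cohens_d_z(mean_diff=0.254, sd_a=0.501, sd_b=0.678, r=0.53)
print(round(d_z, 3))
```

Other conventions, such as dividing by a pooled or single-condition SD, give somewhat different values, which is why the choice of standardizer should be stated alongside any effect size.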

Specific tasks in which residents performed better (p<0.05) using the EHR included: showing interest in the patient's understanding of the problem, framing information using the patient's perspective, allowing time for information to be absorbed, avoiding the use of jargon, exploring the acceptability of the treatment plan, and exploring barriers to implementation. Most of these tasks are complex, which suggests that the cognitive load for residents may have been reduced when using the EHR.

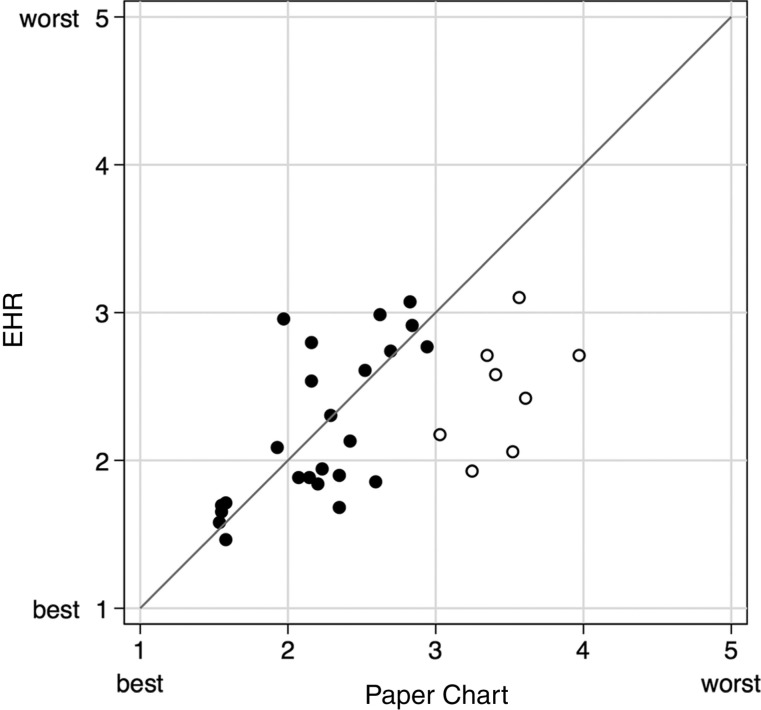

The effects of computer use were correlated with the resident's communication skills, as shown in figure 3. Residents who struggled to communicate while using a paper chart improved when using the EHR. Those residents (n = 8) who had a paper Four Habits total score greater than 3 (in the less effective range) showed communication scores that were better on average by 1 point (95% CI 0.70 to 1.30) for each skill when they used an EHR (p<0.001), a large and clinically important difference. The complementary analysis was not possible for residents with EHR scores above 3 because only two subjects met this criterion. The residents with the best communication skills did well regardless of the type of chart used, as shown by their proximity to the identity line shown in figure 3. However, some tasks associated with good communication practices, but not assessed by the Four Habits model, were performed better by residents when using a paper chart. Only one of these tasks showed a statistically significant difference: placing the chart where the patient could read it (mean −0.85; 95% CI −1.37 to −0.33, p = 0.001). There was also a trend toward higher performance in residents looking at the patient when they were speaking, while using the paper chart.

Figure 3:

Plot of each resident's total score on the Four Habits instrument, comparing electronic health record (EHR) chart use with paper chart use. Higher scores indicate less effective performance. The residents whose dots lay close to the identity line had similar scores regardless of chart type. The residents whose dots lay far from the identity line had large differences in their total Four Habits communication scores depending on the type of chart used, with those falling above the line scoring worse on EHR and those falling below the line scoring worse on paper. The hollow circles indicate residents who scored above 3 using an EHR and below 3 using paper.

DISCUSSION

This study is among the first to make a direct intra-individual comparison of physicians’ communication performance when using electronic and paper-based systems. The strength and the weakness of this study is its use of OSCE methods and standardized patients to evaluate the impact of EHRs on communication skills and its use of a randomized sequential design. This approach allowed us to carefully control the stimuli given to each participant and to systematically compare the effects of paper and EHRs over the same cases, and afforded considerable statistical power, as demonstrated in post hoc analyses.

The effect favors EHR use across almost all measures as shown in figure 2. How important are these differences? The average difference of 0.254 corresponds to an effect size (Cohen's d) of 0.47. Thus, statistically, the effect seen is of moderate size. Clinically, the minimum significant difference on average Four Habits score is not known, but it is likely to be less than 1.0 as an average difference of 1.0 (visibly worse on every task) would indicate a much worse performance overall. The results suggest that, in contrast to many established providers’ perceptions about the effects of EHRs, internal medicine residents using EHRs performed better, at least statistically and probably clinically, at communication tasks that are part of a recognized model of patient-centric primary care visits. However, we did see some evidence of potential negative impacts on communication behaviors, primarily on placing the chart in view of the patient and possibly on maintaining eye contact during the interview.

Our residents’ recent medical training, excellent typing skills, and experience using EHRs may have been related to their performance. Previous detailed studies of doctor–patient communication that were not controlled at the individual level have suggested both superior and inferior performance on some aspects of communication.8,9 One interpretation of past findings and the additional data provided by our study is that EHRs may in fact have a negative impact on the performance of some social behaviors during the clinical visit. However, EHRs do not hamper and may even support the processing and performance of complex patient-centric cognitive skills in interviews, such as framing information from the patient’s perspective and exploring the acceptability of the treatment plan. This may be due to reduced cognitive load or improved task switching. Further research on the effects of EHRs on clinical cognition is needed.

In addition, we suggest that the widespread perception that EHRs have a negative impact on providers’ communication skills may be a form of availability bias,18 where episodes of difficulty using an EHR may be remembered by physicians without complementary memories of poor communication when paper health records were poorly organized and/or incomplete.

Residents had numerous individual and group level deficiencies in communication skills, as shown in figure 1. Due to the limited number of cases seen by each resident, our results cannot be used to draw overall conclusions about a specific resident's communication skills; however, the results do identify gaps in the skill set of our subjects. Current training on computer use focuses the provider's attention on the position of the screen, and on talking while typing, reading or performing other low level tasks.19 Our results confirm the importance of training in these activities: residents often performed poorly on these tasks regardless of the type of chart used. However, our data do not support an exclusive training focus on communication behaviors such as positioning the computer screen within a patient's field of view. A comparison of the impact of training in each area (communication behaviors vs use of structured communications models similar to Four Habits) in the context of EHR use may be warranted.

Even so, it is interesting that many residents who scored poorly on communication when using the paper record improved substantially, by an average of 1.0 point across the Four Habits scale, when using an EHR. Thus our findings indicate that EHRs might be a supportive tool for communication. We suspect this effect could be enhanced by careful design of EHR interfaces. Current EHRs have been largely developed as documentation tools and have few features designed to promote doctor–patient communication. Several authors have described how EHRs could be applied to enhance communication20 and make visits more patient focused.10 We believe that EHRs also may need to incorporate conceptual visit models, such as the Four Habits or the broader Kalamazoo Consensus,13 into their design to help guide communication. Redesign efforts also might include the development of EHR interfaces that patients can see and intuitively understand during the visit. Placing a ‘documentation centric’ (as opposed to ‘discussion centric’) EHR screen in a position where patient and provider can both view it is a step forward, but is not sufficient.21

Limitations of the study

This study was conducted at a single academic institution and in a single class of internal medicine first-year residents. Residents were drawn from 19 different medical schools, suggesting some generalizability to residents nationally. However, the results of this study are probably only applicable to the next generation of providers who have strong typing skills and life-long exposure to computer use.

Both the paper chart and the EHR were new to residents but were designed to be similar. We only tested a single design for EHRs and paper records and it is possible that other designs might produce different results. Our paper chart was well organized and highly readable, potentially biasing the study in favor of paper records.

The EHR in the study was accessed on a laptop computer that was placed in the examination room as each resident saw fit. This allowed us to test whether residents positioned the EHR screen in the patient’s field of view. However, the use of a laptop might have different effects on communication than a stationary desktop; therefore, our results may not be generalizable to EHRs accessed on desktop computers in exam rooms.

This study used simulated patient encounters allowing a within-subjects testing design. While simulated encounters are an established technique in medical education, their use for evaluating the effects of computers in the exam room is relatively novel. Findings may be influenced by the realism of the scenarios and the performance of the actors. However, this approach was integral to a within-subjects design that allowed us to achieve adequate statistical power with a relatively small sample size.

CONCLUSIONS

Internal medicine residents performed modestly better at structured doctor–patient communication tasks when using an EHR than when using a similarly structured paper record. Findings were consistent across a wide range of complex exam room skills, with larger differences seen with more complex interviewing tasks and in residents with lower communication scores.

ACKNOWLEDGEMENTS

The authors wish to acknowledge the assistance of Robert Angell, Kurt Barsch, and James Lenert in conducting research studies. We thank Jonathan Nebeker for access to and assistance with programming the EHR simulator used in the study, and Dr James Hellewell for original development of the cases and patient data. We thank Robert Dunlea for assistance with application of the EHR simulator in our study. We also thank the staff of the University of Utah Learning Center and the actors who portrayed simulated patients in this study.

CONTRIBUTORS

The study was conceived of by LL and planned by TT, LL, FS, GS and CM. TT, FS, and CM were primarily responsible for the conduct of the study. TT, GS, and LL all contributed to the reporting of the work with input from other coauthors.

FUNDING

Analysis of this investigation was supported by the University of Utah Study Design and Biostatistics Center, with funding in part from the National Center for Research Resources and the National Center for Advancing Translational Sciences, National Institutes of Health, through Grant 8UL1TR000105 (formerly UL1RR025764). This work was also supported by NLM Training Grant No. T15LM007124.

COMPETING INTERESTS

None.

ETHICS APPROVAL

The University of Utah approved this study.

PROVENANCE AND PEER REVIEW

Not commissioned; externally peer reviewed.

DATA SHARING STATEMENT

Additional data from other measures of doctor–patient communication may be available to investigators on request from Dr Leslie Lenert (Lenert@MUSC.edu).

REFERENCES

- 1. Bass MJ, Buck C, Turner L, et al. The physician's actions and the outcome of illness in family practice. J Fam Pract 1986;23:43–7.

- 2. Starfield B, Wray C, Hess K, et al. The influence of patient-practitioner agreement on outcome of care. Am J Public Health 1981;71:127–31.

- 3. Xierali IM, Hsiao CJ, Puffer JC, et al. The rise of electronic health record adoption among family physicians. Ann Fam Med 2013;11:14–19.

- 4. Shachak A, Reis S. The impact of electronic medical records on patient–doctor communication during consultation: a narrative literature review. J Eval Clin Pract 2009;15:641–9.

- 5. Irani JS, Middleton JL, Marfatia R, et al. The use of electronic health records in the exam room and patient satisfaction: a systematic review. J Am Board Fam Med 2009;22:553–62.

- 6. Hsu J, Huang J, Fung V, et al. Health information technology and physician-patient interactions: impact of computers on communication during outpatient primary care visits. J Am Med Inform Assoc 2005;12:474–80.

- 7. Lelievre S, Schultz K. Does computer use in patient-physician encounters influence patient satisfaction? Can Fam Physician 2010;56:e6–12.

- 8. Frankel R, Altschuler A, George S, et al. Effects of exam-room computing on clinician-patient communication: a longitudinal qualitative study. J Gen Intern Med 2005;20:677–82.

- 9. Margalit RS, Roter D, Dunevant MA, et al. Electronic medical record use and physician-patient communication: an observational study of Israeli primary care encounters. Patient Educ Couns 2006;61:134–41.

- 10. Pearce C, Arnold M, Phillips C, et al. The patient and the computer in the primary care consultation. J Am Med Inform Assoc 2011;18:138–42.

- 11. Sinsky CA, Beasley JW. Texting while doctoring: a patient safety hazard. Ann Intern Med 2013;159:782–3.

- 12. Smith RC, Dwamena FC, Grover M, et al. Behaviorally defined patient-centered communication—a narrative review of the literature. J Gen Intern Med 2011;26:185–91.

- 13. Makoul G. Essential elements of communication in medical encounters: the Kalamazoo consensus statement. Acad Med 2001;76:390–3.

- 14. Schirmer JM, Mauksch L, Lang F, et al. Assessing communication competence: a review of current tools. Fam Med 2005;37:184–92.

- 15. Roter DL. Observations on methodological and measurement challenges in the assessment of communication during medical exchanges. Patient Educ Couns 2003;50:17–21.

- 16. Krupat E, Frankel R, Stein T, et al. The Four Habits Coding Scheme: validation of an instrument to assess clinicians’ communication behavior. Patient Educ Couns 2006;62:38–45.

- 17. Stein T, Frankel RM, Krupat E. Enhancing clinician communication skills in a large healthcare organization: a longitudinal case study. Patient Educ Couns 2005;58:4–12.

- 18. Tversky A, Kahneman D. Judgment under uncertainty: heuristics and biases. Science 1974;185:1124–31.

- 19. Saleem JJ, Flanagan ME, Russ AL, et al. You and me and the computer makes three: variations in exam room use of the electronic health record. J Am Med Inform Assoc 2013;21:e147–51.

- 20. White A, Danis M. Enhancing patient-centered communication and collaboration by using the electronic health record in the examination room. JAMA 2013;309:2327–8.

- 21. Almquist JR, Kelly C, Bromberg J, et al. Consultation room design and the clinical encounter: the space and interaction randomized trial. HERD 2009;3:41–78.