Abstract

Equilibrium sampling of biomolecules remains an unmet challenge after more than 30 years of atomistic simulation. Efforts to enhance sampling capability, which are reviewed here, range from the development of new algorithms to parallelization to novel uses of hardware. Special focus is placed on classifying algorithms — most of which are underpinned by a few key ideas — in order to understand their fundamental strengths and limitations. Although algorithms have proliferated, progress resulting from novel hardware use appears to be more clear-cut than from algorithms alone, partly due to the lack of widely used sampling measures.

Keywords: effective sample size, algorithms, efficiency, hardware, timescales

1 Introduction

1.1 Why sample?

Biomolecular behavior can be substantially characterized by the states of the system of interest — that is, by the configurational energy basins reflecting the coordinates of all constituent molecules in a system. These states might be largely internal to a single macromolecule such as a protein, or more generally involve binding partners and their relative coordinates. But regardless of the complexity of a system, the states represent key functional configurations along with potential intermediates for transitioning among the major states. The primary goal of equilibrium computer simulation is to specify configurational states and their populations. A more complete, mechanistic description would also include kinetic properties and dynamical pathways [147]. Nevertheless, even the equilibrium description provides a basic view of the range of structural motions of biomolecules, and can also serve more immediately practical purposes such as for ensemble docking [56, 65].

The basic algorithm of biomolecular simulation, molecular dynamics (MD) simulation, has not changed substantially since the first MD study of a protein more than 30 years ago [82]. Although routine explicit-solvent MD simulations are now four or five orders of magnitude longer (i.e., $10^2$–$10^3$ nsec currently), modern MD studies still appear to fall significantly short of what is needed for statistically valid equilibrium simulation (e.g., [36, 38]). Roughly speaking, one would like to run a simulation at least 10 times longer than the slowest important timescale in a system. Unfortunately, many biomolecular timescales exceed 1 msec, and in some cases by orders of magnitude (e.g., [44]).

Despite the outpouring of algorithmic ideas over the past decades, MD largely remains the tool of choice for biomolecular simulations. While this staying power partly reflects the ready availability of software packages, and perhaps some psychological inertia, it also is indicative of a simple fact: no other method can routinely and reliably “beat” MD by a significant amount.

This article will employ several points of view in considering efforts to improve sampling. First, it will attempt to define “the equilibrium sampling problem(s)” as precisely as possible, which necessarily includes discussing the quantification of sampling. Significant and substantiated progress is not possible without clear yardsticks. Secondly, a brief discussion of how sampling happens — i.e., how simulations generate ensembles and averages — will provide a basis for understanding numerous methods. Third, the article will attempt to review a fairly wide array of modern algorithms — most of which are underpinned by a surprisingly small number of key ideas. The algorithms and studies are too numerous to be reviewed on a case-by-case basis, but a bird’s eye perspective is very informative; more focused attention will be paid to apparently conflicting statements about the replica exchange approach, however. Fourth, special emphasis will be placed on novel uses of hardware — GPUs, RAM, and special CPUs; this new “front” in sampling efforts has yielded rather clean results in some cases. Using hardware in new ways necessarily involves substantial algorithm efforts.

This review is limited. At least a full volume would be required to comprehensively describe sampling methods and results for biomolecular systems. This review therefore aims primarily to catalog key ideas and principles of sampling, along with enough references for the reader to delve more deeply into areas of interest. The author apologizes for the numerous studies which have not been mentioned for reasons of space or because he was not aware of them. Other review articles (e.g., [4, 16]) and books on simulation methods [2, 32, 62, 105] are available.

2 What is the sampling problem?

While scientists actively working in the field of biomolecular simulation may assume the essential meaning of “the sampling problem” is universally accepted, a survey of the literature indicates that several somewhat different interpretations are implicitly assumed. What is meant by the sampling problem and, accordingly, success or failure in addressing the problem, surely will dictate the choice of methodology.

2.1 A simple view of the equilibrium sampling problem

Equilibrium sampling at constant temperature and fixed volume can be concisely identified with the generation of full-system configurations x distributed according to the Boltzmann-factor distribution:

$$\rho(x) \propto \exp\left[-U(x)/k_B T\right] \tag{1}$$

where ρ is the probability density function, U is the potential energy function, kB is Boltzmann’s constant, and T is the absolute temperature in Kelvin units. The coordinates x refer (in the classical picture assumed here) to the coordinates of every atom in the system, including solute and solvent. Note that other thermodynamic conditions, such as constant pressure or constant chemical potential, require additional energy-like terms in the Boltzmann factor [147], but here we shall consider only the distribution (1) for clarity and simplicity.

Performing equilibrium sampling requires access to all regions of configuration space, or at least to those regions with significant populations, and also requires that configurations have the correct relative probabilities. This point will be elaborated on, below.
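As a toy illustration of generating configurations distributed according to Eq. (1), the following minimal Python sketch runs a single-chain Metropolis Monte Carlo walk on a one-dimensional double-well potential. The potential `double_well`, the step size, the value β = 1/kBT = 1, and all function names are assumptions chosen for illustration; nothing here is specific to biomolecules.

```python
import math
import random


def double_well(x: float) -> float:
    """Hypothetical 1D potential U(x) = (x^2 - 1)^2 with minima at x = -1 and x = +1."""
    return (x * x - 1.0) ** 2


def metropolis(potential, beta, n_steps, step_size=1.0, x0=-1.0, seed=0):
    """Return a correlated chain whose stationary density is proportional to exp(-beta * U)."""
    rng = random.Random(seed)
    x, u = x0, potential(x0)
    chain = []
    for _ in range(n_steps):
        x_trial = x + rng.uniform(-step_size, step_size)
        u_trial = potential(x_trial)
        # Metropolis rule: accept with probability min(1, exp(-beta * (U_trial - U))).
        if u_trial <= u or rng.random() < math.exp(-beta * (u_trial - u)):
            x, u = x_trial, u_trial
        chain.append(x)  # a rejected move repeats the current configuration
    return chain


if __name__ == "__main__":
    chain = metropolis(double_well, beta=1.0, n_steps=50_000)
    frac_right = sum(1 for x in chain if x > 0) / len(chain)
    print(f"fraction of samples in the right-hand well: {frac_right:.3f}")
```

Because the two wells are symmetric, both should be visited with roughly equal frequency once the chain has crossed the barrier many times; with a higher barrier (larger β) the same code would need far more steps to show this.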

2.2 Ideal sampling as a reference point

In practice, it is nearly impossible to generate completely independent and identically distributed (i.i.d.) configurations — obeying Eq. (1) — for biomolecules. Both dynamical and non-dynamical methods tend to produce correlated samples [38, 41, 73, 86, 141]. Nevertheless, such ideal sampling is a highly useful reference point for disentangling complications arising in practical simulation methods which generate correlated configurations.

Consider a hypothetical example where an equilibrium ensemble of N i.i.d. configurations has been generated according to Eq. (1) for a biomolecule with a complex landscape. Regions of configuration space with probability p ≳ 1/N are likely to be represented in the ensemble, and this is true regardless of kinetic barriers among states. Conversely, regions with p ≲ 1/N are not likely to be represented, regardless of whether the region is “interesting.” For instance, the unfolded state might or might not be an appropriate part of a size N ensemble, depending on the system (i.e., on the free energy difference between folded and unfolded states) and on N. See Fig. 1 and Sec. 2.3.1, below.
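The p ≳ 1/N rule of thumb can be made concrete with elementary probability: for N i.i.d. configurations, a region of total probability p is represented at least once with probability 1 − (1 − p)^N. The short Python sketch below evaluates this expression; the function name and the example values of p and N are illustrative choices.

```python
def prob_represented(p: float, n: int) -> float:
    """Probability that a region of weight p appears at least once among n i.i.d. samples."""
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    n = 100
    for p in (0.1, 0.01, 0.001):
        print(f"p = {p:g}, N = {n}: P(represented) = {prob_represented(p, n):.3f}")
```

For N = 100, a region with p = 0.1 is almost certainly represented, one with p = 1/N is represented somewhat more often than not, and one with p = 0.001 is usually absent.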

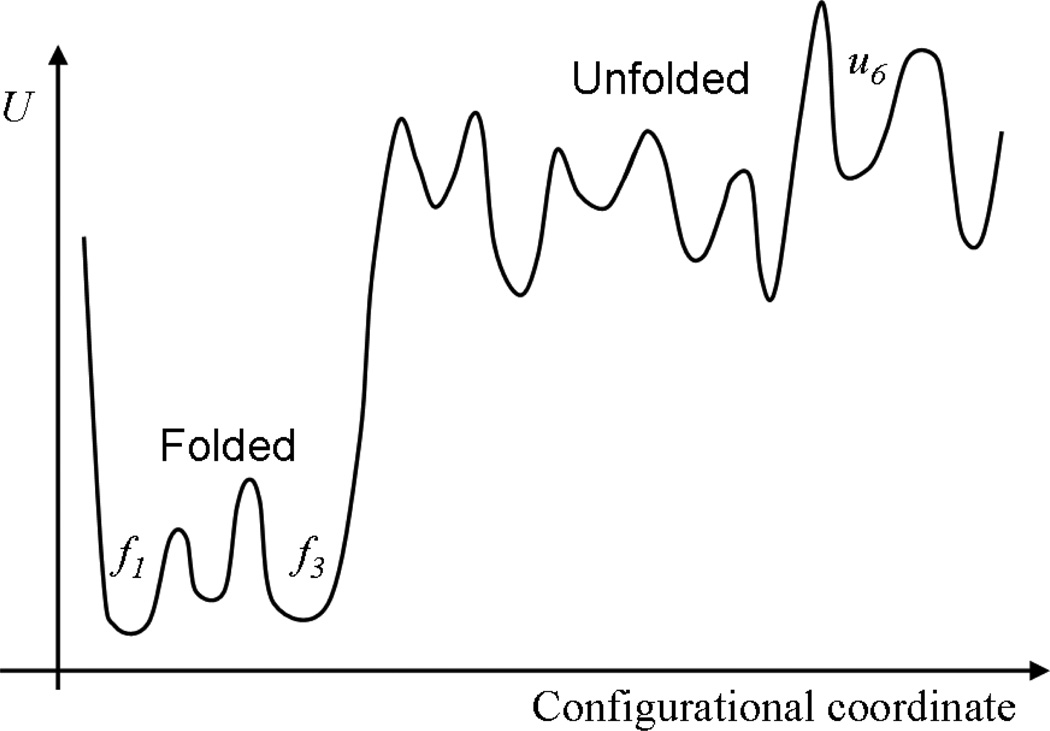

Figure 1.

A schematic energy landscape of a protein. Both the folded states and unfolded states generally can be expected to consist of multiple sub-states, $f_i$ and $u_i$, respectively. Transitions among sub-states themselves could be slow, compared to simulation timescales. The “sampling problem” is sometimes construed to involve generating configurations from both folded and unfolded states: the text discusses when this view is justified.

2.3 Goals and ambiguities in equilibrium sampling

The question, “What should be achieved in equilibrium sampling?” can be divided into at least three questions. First, for a given problem (defined by the specific system and a specified initial condition), there is the question of defining success: when is sampling sufficient? Usually, the goal of equilibrium simulation is to calculate observables of interest to a level of precision sufficient to draw physical conclusions. Yet the variables “of interest” will vary from study to study. It therefore seems more reasonable to set a goal of generating an ensemble of configurations from which arbitrary observables can be calculated. In rough terms, let us therefore assume that the goal is to generate Neff effectively independent configurations, where presumably it is desirable to achieve Neff > 10. Larger values may often be necessary, however, because fractional uncertainty will vary as 1/√Neff for slower-to-converge properties such as state populations. This issue will be addressed further below.

The other questions are probably the most pressing, starting with, “What type of system is to be sampled?” A basic distinction that seems to appear implicitly in the literature is whether the unfolded state is important in the system or not. In this context, “importance” would usually be defined by whether or not a system exhibits a significant equilibrium unfolded fraction — say, roughly equal probabilities p (folded) ~ p (unfolded). Indeed, some evaluations of sampling methods have specifically targeted such a balance [48, 102], e.g., by adjusting the temperature.

A third question about initial conditions is addressed separately, below, in Sec. 2.3.1. These questions arise in the first place because all available practical sampling methods for biomolecules are “imperfect” in the sense of producing correlated configurations. In ideal equilibrium sampling (Sec. 2.2), by contrast, the sufficiency of sampling is fully quantified in terms of the number N of independent configurations, and can be assessed objectively in terms of observables of interest. The notion of an initial condition is irrelevant in ideal sampling since there are no correlations. Also, the values of p(folded) and p (unfolded) have no effect on sampling quality, which is fully embodied in N; instead, it is N which determines what states are represented in the ensemble and the appropriate frequencies of occurrence.

2.3.1 Should initial conditions matter? Issues surrounding the unfolded state

A typical simulation of a protein can be described as one intended to sample the folded state, which may consist of a number of substates as in Fig. 1. So long as the simulation is initiated in or near one of these substates, the resources required to sample it will not depend significantly on the initial conditions. Rather, timescales for transitioning among substates will dominate the computing cost. In a dynamical simulation, after all, it is the transitions among substates which yield estimates for substate populations and hence the equilibrium ensemble: see Sec. 3.1.

Sensitivity to initial conditions can become important when the unfolded state is involved. Even when the goal is to sample the equilibrium ensemble of an overwhelmingly folded protein, the initial condition can come into play if it introduces an anomalously long timescale. Consider beginning an equilibrium simulation of the landscape of Fig. 1 in the unfolded substate labeled $u_6$. Depending on details of the system, the time required to find the folded state could easily exceed the time for sampling folded substates. Thus, such a simulation first has to solve a search problem before sampling can begin.

We can set our discussion in the context of the folding free energy, defined based on the ratio of probabilities for unfolded and folded states:

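$$\Delta G_{\mathrm{fold}} = -k_B T \ln\left[\frac{p(\mathrm{folded})}{p(\mathrm{unfolded})}\right] \tag{2}$$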

Consider a value ΔGfold ~ −3 kcal/mol ~ −5 kBT, which implies the unfolded state is occupied 1% of the time or less. In ensembles with fewer than 100 independent configurations (Neff < 100), such an unfolded state typically should not be represented. In practical terms, because key folded-state timescales often exceed µsec or even msec, it is unlikely that a simulation of a protein in folding conditions in the current era will contain a sufficient number of independent configurations for the unfolded state to be represented.
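The arithmetic behind this estimate can be checked with a few lines of Python, assuming a strict two-state (folded/unfolded) picture and a temperature of 300 K; both are assumptions of this sketch, as are the function and constant names.

```python
import math

K_B_KCAL = 0.0019872041  # Boltzmann constant in kcal/(mol K)


def unfolded_fraction(dg_fold_kcal: float, temperature: float = 300.0) -> float:
    """Two-state unfolded population implied by dG_fold = -kB T ln[p(folded)/p(unfolded)]."""
    kt = K_B_KCAL * temperature               # thermal energy in kcal/mol
    ratio = math.exp(-dg_fold_kcal / kt)      # p(folded) / p(unfolded)
    return 1.0 / (1.0 + ratio)


if __name__ == "__main__":
    p_unf = unfolded_fraction(-3.0)
    print(f"p(unfolded) = {p_unf:.4f}")
    # Rough number of independent configurations needed before one unfolded
    # configuration is expected to appear in the ensemble.
    print(f"1 / p(unfolded) = {1.0 / p_unf:.0f}")
```

With these assumptions the unfolded population comes out below 1%, so an ensemble needs on the order of a hundred or more independent configurations before an unfolded configuration is expected at all.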

Note that we have assumed it is straightforward to distinguish folded and unfolded configurations. In reality, there may be a spectrum of disordered states in many systems, and hence ambiguity in defining folded and unfolded states.

3 Sampling basics: Mechanism, timescales, and cost

3.1 Transitions provide the key information in sampling

The problem of equilibrium sampling can be summarized as determining the metastable states and their relative populations, but how are populations actually determined by the simulation? In almost every practical algorithm, the information comes from transitions among the states. By switching back and forth between pairs of states, with dwell times between transitions acting as proxies for rate constants k, a dynamical simulation gathers information about relative populations based on the equilibrium balance relation $p_i k_{ij} = p_j k_{ji}$ [147]. With more transitions, the error in the population estimates $p_i$ decreases [102]. Without transitions, most algorithms have no information about relative populations. Consider the case of two independent simulations started from different states which exhibit no transitions: determining the populations of the states then requires more advanced analysis [136] which often may not be practical.
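The role of transitions can be illustrated with a minimal two-state sketch in Python. A discrete-time trajectory hops between states 1 and 2 with per-step probabilities `k12` and `k21` (hypothetical values chosen for illustration); rates are estimated from hop counts and dwell times, and populations follow from the balance relation. The function names are assumptions of this sketch, and the estimator is deliberately simple.

```python
import random


def simulate_two_state(k12, k21, n_steps, seed=0):
    """Discrete-time two-state trajectory; returns time spent in, and hops out of, each state."""
    rng = random.Random(seed)
    state = 1
    time_in = {1: 0, 2: 0}
    hops = {1: 0, 2: 0}
    for _ in range(n_steps):
        time_in[state] += 1
        k_out = k12 if state == 1 else k21
        if rng.random() < k_out:
            hops[state] += 1
            state = 2 if state == 1 else 1
    return time_in, hops


def populations_from_rates(time_in, hops):
    """Estimate (p1, p2) from p1 * k12 = p2 * k21 using rates inferred from the trajectory."""
    if hops[1] == 0 or hops[2] == 0:
        # No transitions observed: the trajectory carries no information
        # about the relative populations of the two states.
        raise ValueError("no transitions observed in one or both directions")
    k12_est = hops[1] / time_in[1]
    k21_est = hops[2] / time_in[2]
    p1 = k21_est / (k12_est + k21_est)
    return p1, 1.0 - p1


if __name__ == "__main__":
    time_in, hops = simulate_two_state(k12=0.01, k21=0.03, n_steps=200_000)
    p1, p2 = populations_from_rates(time_in, hops)
    print(f"transitions out of state 1: {hops[1]}, estimated p1 = {p1:.3f} (exact 0.75)")
```

Shortening the trajectory reduces the number of observed transitions and widens the scatter of the estimated populations, and a trajectory with no transitions raises an error rather than returning a number.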

It is critical to realize that most “advanced algorithms”, such as the varieties of exchange simulation (Sec. 5), also require “ordinary” transitions to gather population information. That is, transitions among states within individual continuous trajectories are required to obtain sampling, as has been discussed before [38, 102, 143]. Any algorithm that uses dynamical trajectories as a component can be expected to require transitions among metastable states in those trajectories.

It must also be pointed out that transitions themselves do not necessarily equate to good sampling. An example is when a high temperature is used to aid sampling at a lower temperature. Although transitions are necessary, they are not sufficient because of the overlap issue: the sampled configurations ultimately must be important in the targeted (e.g., lower temperature) ensemble after they are reweighted [72, 109].

3.2 Timescales of sampling

Much of the foregoing discussion can be summed up based on two key timescales. (Even if a sampling method is not fully dynamical, analogous quantities can be defined in terms of computing times.) The timescale that typically is limiting for simulations can be denoted tcorr, the longest “important” equilibrium correlation time. Roughly, this is the time required to explore all the important parts of configuration space once [73], starting from any well populated (sub)state, and it is elaborated on below. For sampling the landscape of Fig. 1 under folding conditions, this would be the time to visit all folded substates starting from any of the folded basins. However, as also discussed above, initial conditions may play an important role, and thus we can define tinit as the “equilibration” time (or “burn-in” time in Monte Carlo lingo). This is not an equilibrium timescale, but the time necessary to relax from a particular non-equilibrium initial condition to equilibrium. Thus, tinit is specific to the initial configuration of each simulation. The landscape of Fig. 1 under folding conditions suggests tinit ≪ tcorr for a simulation started from a folded substate, but it is possible to have significant values, even tinit > tcorr, when starting from an unfolded state.

3.3 Factors contributing to sampling cost

A little thought about the ingredients of sampling can help one understand the successes and failures of various efforts and, more importantly, aid in planning future research. The most important ingredient to understand is single-trajectory sampling, i.e., the class of dynamical methods discussed in Sec. 4.1 including MD, single-Markov-chain Monte Carlo (MC), or any other method that generates an ensemble as a sequentially correlated list of configurations. Single-trajectory methods tend to be the engine underlying traditional and advanced methods.

The sampling cost of a trajectory method can be divided into two intuitive factors:

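$$\text{trajectory cost} = \underbrace{(\text{number of steps required})}_{\text{landscape roughness}} \times (\text{cost per step}) \tag{3}$$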

In words, a given algorithm will require a certain number of steps to achieve good sampling (e.g., Neff ≫ 1) and the total cost of generating such a trajectory is simply the total cost per step multiplied by the required number of steps. Although this decomposition is trivial, it is very informative. For example, if one wants to use a single-trajectory algorithm (e.g., MD or similar) where a step corresponds roughly to $10^{-15}$ sec, then $10^{9}$–$10^{12}$ steps are required to reach the µsec–msec range. Therefore, unless the cost of a step can be reduced by several orders of magnitude, MD and similar methods likely cannot achieve sampling with typical current resources. In a modified landscape (e.g., different temperature or model) the roughness may be reduced, but, again, that reduction should be several orders of magnitude, or else be accompanied by a compensating reduction in the cost per step. Among noteworthy examples, increasing temperature decreases roughness but not the cost per step, whereas changing a model (i.e., modifying the potential energy function U) can affect both roughness and the step cost. Both strategies sample a different distribution, which may be nontrivial to convert to the targeted ensemble.
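The decomposition in Eq. (3) lends itself to quick back-of-the-envelope estimates. The Python sketch below counts the steps needed to reach a target timescale and converts them to wall-clock time; the wall-clock cost of 1 msec per step is a purely hypothetical figure used for illustration, not a benchmark of any real system or code.

```python
def steps_required(target_time_s: float, timestep_s: float = 1e-15) -> float:
    """Number of trajectory steps needed to cover target_time_s of simulated time."""
    return target_time_s / timestep_s


def trajectory_cost_days(n_steps: float, seconds_per_step: float) -> float:
    """Eq. (3): total cost = number of steps * cost per step, expressed in days."""
    return n_steps * seconds_per_step / 86400.0


if __name__ == "__main__":
    hypothetical_cost_per_step = 1e-3  # wall-clock seconds per step (assumed)
    for label, target in (("1 usec", 1e-6), ("1 msec", 1e-3)):
        n = steps_required(target)
        days = trajectory_cost_days(n, hypothetical_cost_per_step)
        print(f"{label}: {n:.0e} steps, about {days:.3g} days at the assumed step cost")
```

Under this assumed step cost, three orders of magnitude in target timescale separate a run of days from a run of decades, which is why a reduction in either factor of Eq. (3) must itself span orders of magnitude to matter.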

The importance of understanding dynamical trajectory methods via Eq. (3) is underscored by the fact that most multi-level (e.g., exchange) simulations require good sampling at some level [143, 148], by single continuous trajectories.

4 Quantitative assessment of sampling

Although the challenge of sampling biomolecular systems has been widely recognized for years, and although new algorithms are regularly proposed, systematic quantification of sampling has often not received sufficient attention. Most importantly, there does not seem to be a widely accepted (and widely used) “yardstick” for quantifying the effectiveness of sampling procedures. Given the lack of a broadly accepted measure, it is tempting for individual investigators to report measures which cast the most favorable light on their results.

Two key benefits would flow from a universal measure of sampling quality. As an example, assume we are able to assign an effective sample size, Neff, to any ensemble generated in a simulation; Neff would characterize the number of statistically independent configurations generated. Sampling efficiency could then be quantified by the CPU time (or number of cycles) required per independent sample. The first benefit is that we would no longer be able to fool ourselves or others as to the efficiency of a method based on qualitative evidence or perhaps the elegance of a method. Thus, the field would be pushed harder to focus on a bottom-line measure. Secondly, describing the importance of a new algorithm would be more straightforward. It would no longer be necessary to directly compare a new method to, perhaps, a competitor’s approach requiring subtle optimization. Instead, each method could be compared to a standard (e.g., molecular dynamics), removing ambiguities in the outcome. It would still be important to test a method on a number of systems (e.g., small and large, stiff and flexible, implicitly and explicitly solvated), but the results would be quantitative and readily verified by other groups. On a related note, there has been a proposal to organize a sampling contest or challenge event to allow head-to-head comparison of different methodologies (B.R. Brooks, unpublished).

Numerous ideas for assessing sampling have been proposed over the years (e.g., [36, 38, 41, 73, 86, 88, 114, 116, 141]), but it is important to divide such proposals into “absolute” and “relative” measures. Absolute measures attempt to give a binary indication of whether or not “convergence” has been achieved, whereas relative measures estimate how much sampling has been achieved, e.g., by an effective sample size Neff. The perspective of the present article is that absolute measures fail to account for the fundamental statistical picture underlying the sampling problem. To see why, note that any measured observable will have an uncertainty associated with it; roughly speaking, sampling quality is reflected in typical sizes of error bars which decrease with better sampling. There does not seem to be an unambiguous point where sampling can be considered absolutely converged (although a simulation effectively does not begin to sample equilibrium averages until tinit has been surpassed).

It is certainly valuable, nevertheless, to be able to gauge when sampling is wholly inadequate, in an absolute sense. Absolute methods which may be useful at detecting extremely poor sampling include casual use of the “ergodic measure” [86] (i.e., whether or not it approaches zero) and cluster counting [114].

4.1 Assessing dynamical sampling

The assessment of dynamical methods illustrates the key ideas behind the relative measures of sampling. For this purpose, “dynamical” will be defined to mean that trajectories consist of configurations with purely sequential correlations, i.e., where a given configuration was produced based solely on the immediately preceding configuration(s). Thus, MD, Langevin dynamics, and simple Markov-chain MC are dynamical methods, but exchange algorithms are not [147]. Correlation times or their analogs for MC can then readily be associated with any trajectory which was dynamically generated. Assuming, for the moment, that there is a fundamental correlation time for overall sampling of the system, tcorr, then an effective sample size can be calculated from the simple relation

$$N_{\mathrm{eff}} = \frac{t_{\mathrm{sim}}}{t_{\mathrm{corr}}} \tag{4}$$

where tsim is the total CPU time (or number of cycles) used to generate the trajectory. Thus, sampling quality is relative in that it should increase linearly with tsim [86]. In rough terms, the absolute lack of sampling would correspond to Neff ≲ 1; physically, this would indicate that important parts of configuration space have been visited only once, e.g., in a simulation shorter than the correlation time tcorr. (It would seem impossible to detect parts of the space never visited.)
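As a rough illustration of Eq. (4), the Python sketch below estimates a correlation time for a single scalar time series and converts it to an effective sample size. It uses the integrated autocorrelation time of one observable as a stand-in for the overall correlation time, which is an assumption of this sketch: a single observable can decorrelate much faster than the full configuration-space distribution. The synthetic AR(1) series and all function names are likewise illustrative.

```python
import random


def ar1_series(phi: float, n: int, seed: int = 0):
    """Synthetic correlated data: x[t] = phi * x[t-1] + Gaussian noise."""
    rng = random.Random(seed)
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, 1.0)
        out.append(x)
    return out


def correlation_time(series, max_lag: int = 200) -> float:
    """Integrated autocorrelation time in frames: 1 + 2 * sum_k rho(k).

    The sum is truncated at the first non-positive rho(k) or at max_lag.
    """
    n = len(series)
    mean = sum(series) / n
    dev = [s - mean for s in series]
    var = sum(d * d for d in dev) / n
    tau = 1.0
    for lag in range(1, max_lag + 1):
        rho = sum(dev[i] * dev[i + lag] for i in range(n - lag)) / ((n - lag) * var)
        if rho <= 0.0:
            break
        tau += 2.0 * rho
    return tau


def effective_sample_size(t_sim: float, t_corr: float) -> float:
    """Eq. (4): N_eff = t_sim / t_corr (both in the same units)."""
    return t_sim / t_corr


if __name__ == "__main__":
    series = ar1_series(phi=0.9, n=20_000)
    tau = correlation_time(series)
    n_eff = effective_sample_size(len(series), tau)
    print(f"correlation time = {tau:.1f} frames, N_eff = {n_eff:.0f}")
```

For this synthetic series the exact integrated autocorrelation time is (1 + φ)/(1 − φ) = 19 frames, so 20,000 frames correspond to roughly a thousand effectively independent samples.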

Recent work by Lyman and Zuckerman has suggested that a reasonable overall correlation time can be calculated from a dynamical trajectory [73]. Those authors derived their correlation time from the overall distribution in configuration space, estimating the time that must elapse between trajectory frames so that they behave as if statistically independent. The approach has the twin advantages of being based on the full configuration-space distribution (as opposed to isolated observables) and of being blindly and objectively applicable to any dynamical trajectory. Other measures meeting these criteria would also be valuable; note, for instance, the work by Hess using principal components [41].

4.2 Assessing non-dynamical sampling

If sampling is performed in a non-dynamical way, one cannot rely on sequential correlations to assess sampling as in Eq. (4). Many of the modern algorithms which attempt to enhance sampling, such as those reviewed below, are not dynamical. Replica exchange (RE) [34, 51, 118] is a typical example: if one is solely interested in the ensemble at a single temperature, a given configuration may be strongly correlated with other configurations distant in the sequence at that T and/or be uncorrelated with sequential neighbors. At the same time, RE does generate trajectories which are continuous in configuration space, if not temperature, and it may be possible to analyze these in a dynamical sense [73] but care will be required [14]. Other algorithms, for instance based on polymer growth procedures [29, 35, 126, 138–140], are explicitly non-dynamical.

Two recent papers [38, 141] have argued that non-dynamical simulations are best assessed by multiple independent runs. The lack of sequential correlations — but the presence of more complex correlations — in non-dynamical ensembles means that the list of configurations cannot be divided into nearly independent segments for blocking-based analysis. Zhang et al. suggest that multiple runs be used to assess variance in the populations of physical states [141] for two related reasons: (i) such states are defined to be separated from one another by the slowest timescales in a system; (ii) relative populations of states cannot be properly estimated without good sampling within each state. State-population variances, in turn, can be used to estimate Neff based on simple statistical arguments [141]; nevertheless, it is important that states be approximated in an automated fashion — see [13, 91, 141] — to eliminate the possibility for bias.
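One simple way to turn state-population variances into an effective sample size is sketched below in Python. It assumes binomial statistics, so that the variance of a state population estimated from Neff independent configurations is p(1 − p)/Neff; this is one plausible reading of the “simple statistical arguments” and is not claimed to reproduce the procedure of Ref. [141] in detail. The run populations in the example are made-up numbers.

```python
from statistics import mean, variance


def neff_from_runs(populations):
    """Estimate N_eff per run from one state's population in several independent runs.

    Assumes binomial statistics: var(p_hat) = p * (1 - p) / N_eff.
    """
    if len(populations) < 2:
        raise ValueError("at least two independent runs are required")
    p_bar = mean(populations)
    var = variance(populations)  # sample variance across runs
    if var == 0.0:
        raise ValueError("zero variance across runs; N_eff is undefined")
    return p_bar * (1.0 - p_bar) / var


if __name__ == "__main__":
    # Hypothetical population of a single physical state in three independent runs.
    runs = [0.4, 0.5, 0.6]
    print(f"estimated N_eff per run: {neff_from_runs(runs):.1f}")
```

With only a handful of runs the variance itself is poorly determined, so an estimate of this kind is best read as an order of magnitude.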

5 Purely algorithmic efforts to improve sampling

How can we beat MD? Despite 30 years of effort, there is no algorithm that is significantly more efficient than molecular dynamics for the full range of systems of interest. Further, although dozens of different detailed procedures have been suggested, there are a limited number of qualitatively distinct ideas. An attempt will now be made to describe some of the strategies which have been proposed.

5.1 Replica exchange and multiple temperatures

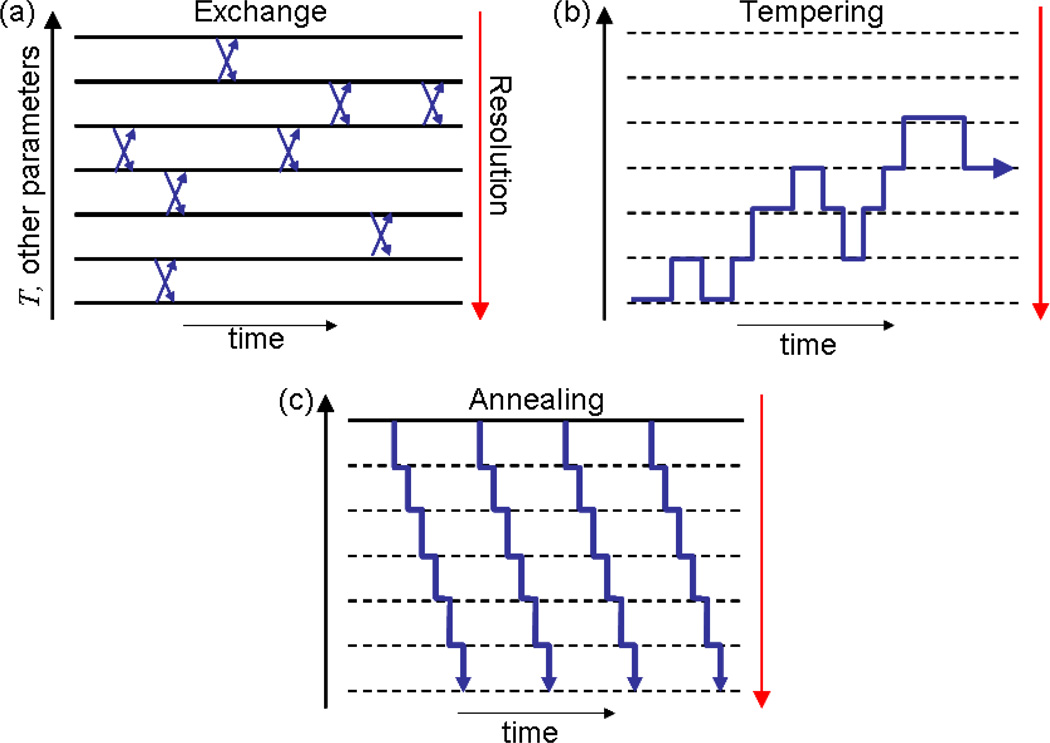

The most common strategy is to employ elevated temperature. Many variations on this strategy have been proposed, with one of the earliest suggestions being in the context of spin systems [27]. The approach that has been applied to biomolecules most often is replica exchange (RE, also called parallel tempering) in which parallel simulations wander among a set of fixed temperature values with swaps governed by a Metropolis criterion [34, 51, 118]. Many variations and optimizations have been proposed for RE (e.g., [8, 11, 26, 57, 64, 67, 93–95, 99–101, 103, 112, 121]). Closely related to RE is simulated tempering, in which a single trajectory wanders among a set of temperatures [75]; once again, optimizations have been proposed (e.g., [137]). See Fig. 2.

Figure 2.

Many ways to use many levels. Sampling algorithms are schematized in the panels: different horizontal levels represent different simulation conditions, such as varying temperature, model parameters, and/or resolution. A given ladder or set of conditions can be used in different formulations: (a) exchange, (b) tempering, or (c) annealing.
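The Metropolis criterion governing swaps in temperature replica exchange can be written compactly. In the Python sketch below, two replicas at inverse temperatures β_i and β_j hold configurations with potential energies U_i and U_j, and the swap is accepted with probability min(1, exp[(β_i − β_j)(U_i − U_j)]), which follows from the ratio of the joint Boltzmann weights after and before the exchange. Function names and the numerical values are illustrative assumptions.

```python
import math
import random


def swap_acceptance(beta_i: float, beta_j: float, u_i: float, u_j: float) -> float:
    """Metropolis probability for exchanging configurations between replicas i and j."""
    delta = (beta_i - beta_j) * (u_i - u_j)
    return 1.0 if delta >= 0.0 else math.exp(delta)


def attempt_swap(beta_i, beta_j, u_i, u_j, rng: random.Random) -> bool:
    """Return True if the proposed exchange is accepted."""
    return rng.random() < swap_acceptance(beta_i, beta_j, u_i, u_j)


if __name__ == "__main__":
    rng = random.Random(0)
    # Cold replica (larger beta) currently holds the higher-energy configuration:
    # the swap lowers the cold replica's energy and is always accepted.
    print(swap_acceptance(beta_i=1.0, beta_j=0.5, u_i=2.0, u_j=1.0))
    # Reverse situation: accepted only with probability exp(-0.5).
    print(swap_acceptance(beta_i=1.0, beta_j=0.5, u_i=1.0, u_j=2.0))
    print(attempt_swap(1.0, 0.5, 1.0, 2.0, rng))
```

The acceptance probability depends on the product of the temperature gap and the energy gap, which is why ladders for large systems, whose energy distributions are narrow relative to their means, need closely spaced temperatures.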

Less well known, but formally closely related to exchange and tempering, is “annealed importance sampling” (AIS), in which a high-temperature ensemble is annealed to lower temperatures in a weighted way that preserves canonical sampling [49, 87]. See the schematic representation in Fig. 2. AIS has been applied to biomolecules and optimizations have been suggested [72, 74]. AIS is nominally a non-equilibrium approach, but in precise analogy to the Jarzynski equality [52], it yields equilibrium ensembles. The “J-walking” approach also starts from high temperature [28]; a good discussion of several related methods is given in Ref. [9].

5.1.1 How effective is replica exchange?

Replica exchange (RE) simulation is both popular and controversial. Some authors have noted weaknesses [18, 92, 148], others have described successes (e.g., [48, 102, 104]), and some conclusions have been more ambiguous [3, 76, 96, 143]. What is the real story — how much better is RE than ordinary MD?

Examination of the various claims and studies reveals that, in fact, there is little disagreement so long as the particular sampling problem is explicitly accounted for (Sec. 2.3). In brief, when observables of interest depend significantly on states which are very rapidly accessed at high temperature, then replica exchange and related methods can be very efficient [48, 102]. A prime example would be estimating the folded fraction near the folding transition. On the other hand, when the goal is to sample an overwhelmingly folded ensemble, there does not appear to be significant evidence of replica exchange’s efficiency, so long as the initial condition is a folded configuration (i.e., in terms of the timescales of Sec. 3.2, if the equilibration time is much shorter than the equilibrium correlation time, tinit ≪ tcorr). This last qualification is important in understanding the data presented in Ref. [48].

An intuitive picture underpinning the preceding conclusions is readily gained by revisiting the idea that state populations are estimated by transitions between states in continuous trajectories (Sec. 3.1). Indeed, this same picture is the basis for discrete-state analyses [102, 143], which we can consider along with the schematic landscape of Fig. 1. Specifically, the average of any observable A can be written as $\langle A \rangle = \sum_i p_i \bar{A}_i$, where i indexes all folded and unfolded substates and $\bar{A}_i$ is the average of A within substate i. If the $p_i$ values are significant in both folded and unfolded substates, then the elevated temperatures employed in RE simulation can usefully promote transitions to sample unfolded states [102]. On the other hand, if p(unfolded) ≪ p(folded), where $p(\mathrm{folded}) = \sum_{i \in \mathrm{folded}} p_i$ is the sum of folded probabilities and p(unfolded) = 1 − p(folded), then computer resources devoted to unfolded substates can detract from estimating folded-state observables; this line of analysis echoes earlier studies [92, 143].

Regardless of whether RE is superior to MD, another key issue is whether RE can provide sufficient sampling (Neff > 10) for protein-sized systems. It is far from clear that this is the case. RE cannot provide full sampling unless some level of the ladder can be fully sampled [148] (see Sec. 3.1), and the ability for a simple dynamical trajectory to sample the large configuration space of a fully or partly unfolded state of a protein has not been demonstrated to date.

5.1.2 Energy-based sampling methods

Energy-based schemes must be seen as closely related to temperature-based strategies since the two variables are conjugate to each other in the Boltzmann factor, Eq. (1). Yet because any fixed-temperature ensemble can include arbitrary energy values, energy-based schemes must account for this. “Multi-canonical” schemes based on sampling energy values (typically uniformly) have been implemented for biomolecules [40, 85], including with the Wang-Landau device [132]; see also [55]. Nevertheless, it should be remembered that energy is not expected to be a good proxy for the configurational coordinates of primary interest — see Fig. 1.

5.2 Hamiltonian exchange and multiple models

As schematized in Fig. 2, the different temperature-based methods sketched above can readily be generalized to variations in forcefield parameters [117], and even resolution as discussed below. This is because the methods are based on the Boltzmann factor, which is a general form that can apply to different forcefields Uλ, each characterized by the set of parameters λ = {λ1, λ2,…}. Thus, the fundamental distribution Eq. (1) can be written more explicitly as:

$$\rho(x; \lambda) \propto \exp\left[-U_\lambda(x)/k_B T\right] \tag{5}$$

The different schemes for using ladders of different types (based on temperature, forcefield parameters, or resolution) are depicted in Fig. 2. One of the first proposals for using multiple models was given in Ref. [69].

The simplest way to reduce the roughness of a landscape (cf. Eq. (3)) is by maintaining the functional form of U but changing parameters — e.g., coefficients of dihedral terms. Changing parameters in parameter files of common software packages makes this route fairly straightforward. Note that unless forcefield terms are explicitly removed, the step cost in Eq. (3) remains unchanged.

Hamiltonian exchange has been applied in a number of cases, for instance using models with softened van der Waals’ interactions and hence decreased roughness [45]. The approach called “accelerated MD” employs ordinary MD on a smoothed potential energy landscape and requires reweighting to obtain canonical averages [39, 109]; it can therefore be considered a two-level Hamiltonian-changing algorithm.
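The reweighting step needed when sampling is performed on a modified potential can be sketched as follows. Configurations sampled with potential U_mod are assigned weights proportional to exp(−[U − U_mod]/kBT) to recover averages for the target potential U. The Python function below also reports the Kish effective sample size of the weights, (Σw)²/Σw², a standard diagnostic added here as an assumption of this sketch (it is not taken from the cited works) to expose poor overlap between the sampled and target ensembles.

```python
import math


def reweight(u_target, u_sampled, observable, beta):
    """Reweighted average of an observable, plus the Kish effective sample size.

    u_target[k] and u_sampled[k] are the target and sampled potential energies of
    configuration k; beta = 1 / (kB T) in matching energy units.
    """
    log_w = [-beta * (ut - us) for ut, us in zip(u_target, u_sampled)]
    shift = max(log_w)  # subtract the maximum to avoid overflow in exp
    w = [math.exp(lw - shift) for lw in log_w]
    total = sum(w)
    average = sum(wi * a for wi, a in zip(w, observable)) / total
    kish = total * total / sum(wi * wi for wi in w)
    return average, kish


if __name__ == "__main__":
    # Identical potentials: uniform weights, so all samples count fully.
    print(reweight([1.0, 2.0, 3.0], [1.0, 2.0, 3.0], [10.0, 20.0, 30.0], beta=1.0))
    # Poor overlap: one configuration dominates after reweighting.
    print(reweight([0.0, 100.0], [0.0, 0.0], [10.0, 20.0], beta=1.0))
```

When a few configurations carry nearly all the weight, the Kish value collapses toward 1 no matter how many configurations were generated on the smoothed landscape.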

5.3 Resolution exchange and multi-resolution approaches

Extending the multiple-model ideas a step further leads to the consideration of multiple resolutions. To account for varying resolution in the formulation of Eq. (5), some of the λi parameters may be considered prefactors which can eliminate detailed interactions when set to zero. Different, formally exact approaches to using multiple resolutions have been proposed [15, 68, 70, 71, 145], primarily echoing ideas in replica exchange and annealing.

Although multiple-resolution methods have not produced dramatic results for canonical sampling of large atomistic systems, they appear to hold unique potential in the context of the sampling costs embodied in Eq. (3). In particular, low-resolution models reduce cost and roughness to such an extent that some coarse models have been shown to permit good sampling for proteins (Neff > 10) with typical resources [77, 135]. It is not clear that a similar claim can be made for high-temperature or modified-potential simulations of stably folded proteins.

5.4 Multiple trajectories without multiple levels

There is a qualitatively different group of algorithms that also uses multiple trajectories, but all under the same conditions (i.e., “uni-canonically”) [6, 47, 89], in contrast to exchange and related methods. The basic idea of these methods is that when a trajectory reaches a new region of configuration space, it can spawn multiple daughter trajectories, all on the unaltered landscape. The daughter trajectories enable better sampling of regions that otherwise would receive fewer computer resources; in addition, resources can be directed away from regions which already are well sampled. In the context of Markov-state models, this strategy can be optimized to increase precision in the estimate of any observable [42]. Exact canonical sampling can also be achieved using a steady-state formulation of the “weighted ensemble” path sampling method [6]; see also related approaches [98, 125, 133].
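The bookkeeping behind spawning daughter trajectories can be sketched as follows. This is only an illustration of weight-conserving splitting and merging in the spirit of weighted-ensemble methods, not the protocol of any cited work; the `Walker` class and both function names are hypothetical, and the configuration is reduced to a single coordinate.

```python
import random
from dataclasses import dataclass


@dataclass
class Walker:
    x: float       # configuration (a single coordinate in this sketch)
    weight: float  # statistical weight carried by the trajectory


def split(walker, n_daughters):
    """Spawn daughters at the parent's configuration, sharing its weight equally."""
    share = walker.weight / n_daughters
    return [Walker(walker.x, share) for _ in range(n_daughters)]


def merge(walkers, rng):
    """Replace several walkers by one survivor chosen in proportion to weight."""
    total = sum(w.weight for w in walkers)
    survivor = rng.choices(walkers, weights=[w.weight for w in walkers], k=1)[0]
    return Walker(survivor.x, total)


# A trajectory that reached a new region is split into four daughters ...
daughters = split(Walker(0.3, 0.2), 4)
# ... while two trajectories in a well-sampled region are merged into one.
merged = merge([Walker(0.1, 0.05), Walker(0.9, 0.15)], random.Random(0))
```

Because both operations conserve total weight, resources can be shifted between regions without changing the distribution being estimated.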

5.5 Single-trajectory approaches: Dynamics, Monte Carlo, and variants

It is useful to group together a large set of approaches, including MD, simple Monte Carlo (MC), and Langevin dynamics [2, 32, 105], and to consider them as basic dynamics [147]. These methods generate a single trajectory (or Markov chain) in which configurations are strongly sequentially correlated and have no non-sequential correlations. In other words, these methods should all be expected to behave roughly like MD simulation because the motion is intrinsically constrained by the high density and landscape complexity of biomolecular systems. On the one hand, basic dynamical methods will generate physically realizable trajectories or nearly so, but on the other, they will be severely limited by physical timescales as described above.

In this section, we will explore some basic ideas of dynamical approaches and also some interesting variants.

5.5.1 Are there “real” dynamics?

Unlike many other sampling algorithms, MD also simulates the fundamental classical dynamics of a system. That is, the trajectories produced by MD are also intended to model the time-dependence of physical processes.

Should MD trajectories provide much better depictions of the underlying processes than other “basic” simulation methods — e.g., Langevin or MC simulation? The answer is not so clear. First, like other methods, MD necessarily uses an approximate forcefield (see, e.g., [31]). Second, on the long timescales ultimately of interest, roundoff errors can be expected to lead to significant deviations from the exact trajectories for the given forcefield.

Somewhat more fundamentally, there appears to be a physical inconsistency in running finite-temperature MD simulations for finite-sized systems. Thermostats of various types are used [32], some stochastic and some deterministic: is there a correct method? By definition, a finite-temperature system is not isolated but is coupled to a “bath” by some physical process, presumably molecular collisions. Because the internal degrees of freedom of a bath are not explicitly simulated, again by definition, a bath should be intrinsically stochastic. The author is unaware of a first-principles prescription for modeling the coupling to a thermal bath, although sophisticated thermostats have been proposed [32, 63]. For MD simulations with periodic boundary conditions, it is particularly difficult to imagine truly physical coupling to a bath.

The bottom line is that a finite-temperature process in a finite system is intrinsically stochastic, rendering questionable the notion that standard MD protocols produce “correct” trajectories. To consider this point another way, should we really expect that inertial effects are very important in dense aqueous and macromolecular media?

The foregoing discussion suggests, certainly, that Langevin simulations should also be considered physical, but what about Monte Carlo? Certainly, one can readily imagine unphysical MC protocols (e.g., attempting trial moves for atoms in the C-terminal domain of a protein ten times as often as for those in the N terminus). Nevertheless, so long as trial moves are small and performed in a spatially uniform way, one can expect MC “dynamics” to be an approximation to over-damped (non-inertial) Langevin simulation; see [90, 110, 146].

5.5.2 Modifying dynamics to improve sampling

Although changing temperature or model parameters automatically changes the dynamics observed in MD or LD simulation, it has been shown that the dynamics can be modified while maintaining canonical sampling on the original landscape, U (x) [84, 144]. This possibility defies the simple formulation of the second line of Eq. (3), because the landscape roughness remains unchanged while the number of required steps is reduced. The method has been applied to alkane and small-peptide systems, but its generalization to arbitrary systems has not been presented to the author’s knowledge. A better known and related approach is the use of multiple time steps, with shorter steps for faster degrees of freedom [119, 122], which can save computer time up to a limit set by resonance phenomena [106].

Another approach to modifying dynamics is to avoid revisiting regions already sampled by means of a “memory” potential which repels the trajectory from prior configurations [50, 60]. This strategy is called “metadynamics” or the local-elevation method, and typically employs pre-selection of important coordinates to be assigned repulsive terms. Although trajectories generated in a history-dependent way do not satisfy detailed balance, it is possible to correct for the bias introduced and recover canonical sampling [10]. This approach could also be considered a potential-of-mean-force method (Sec. 5.6).
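The memory-potential idea can be sketched for a single pre-selected coordinate. The example below assumes overdamped Langevin dynamics with unit friction on a toy double-well potential and deposits repulsive Gaussian “hills” at visited positions; all names and parameter values are hypothetical, and the bias correction needed to recover canonical averages [10] is deliberately left out.

```python
import math
import random


def bias(x, centers, height=0.5, width=0.2):
    """History-dependent potential: a sum of Gaussian hills at visited positions."""
    return sum(height * math.exp(-(x - c) ** 2 / (2.0 * width ** 2)) for c in centers)


def bias_force(x, centers, height=0.5, width=0.2):
    """Force from the hills (negative gradient of bias); it points away from each center."""
    return sum(
        height * (x - c) / width ** 2 * math.exp(-(x - c) ** 2 / (2.0 * width ** 2))
        for c in centers
    )


def double_well_force(x, barrier=5.0):
    """Force for the toy landscape U(x) = barrier * (x**2 - 1)**2."""
    return -4.0 * barrier * x * (x * x - 1.0)


def run(n_steps, deposit_every, seed=0, dt=1e-3, kT=1.0):
    """Overdamped Langevin trajectory; a hill is deposited every `deposit_every` steps."""
    rng = random.Random(seed)
    x, centers, trajectory = -1.0, [], []
    for step in range(n_steps):
        force = double_well_force(x) + bias_force(x, centers)
        x += force * dt + math.sqrt(2.0 * kT * dt) * rng.gauss(0.0, 1.0)
        if deposit_every and step % deposit_every == 0:
            centers.append(x)  # remember where the trajectory has been
        trajectory.append(x)
    return trajectory, centers


trajectory, centers = run(n_steps=2000, deposit_every=100)
```

Setting `deposit_every=0` switches the memory term off, which gives an unbiased reference trajectory on the same landscape for comparison.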

The potential surface and dynamics can also be modified using a strategy of raising basins while keeping barriers intact [39, 97, 129]; see also [149]. The “accelerated MD” approach is a well-known example, but achieving canonical sampling requires a reweighting procedure [109]. Such reweighting is limited by the overlap issues confronting many methods: the sampled distribution must be sufficiently similar to the targeted distribution Eq. (1) so that the data is not overwhelmed by statistical noise [72, 109].
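The overlap limitation can be made concrete with a small sketch. It assumes the boost form commonly associated with accelerated MD, in which energies below a threshold E are raised by (E − V)² / (α + E − V), and it uses the Kish formula as a stand-in measure of how many configurations effectively survive reweighting. The Kish estimate is an assumption of this sketch and is not the Neff measure discussed elsewhere in this review.

```python
import math


def boost(v, threshold, alpha):
    """Boost energy added to basins lying below the threshold; zero above it."""
    if v >= threshold:
        return 0.0
    gap = threshold - v
    return gap * gap / (alpha + gap)


def reweight(boosts, kT):
    """Normalized weights proportional to exp(boost / kT) for each sampled frame."""
    largest = max(boosts)  # subtracting the maximum avoids overflow
    raw = [math.exp((b - largest) / kT) for b in boosts]
    total = sum(raw)
    return [r / total for r in raw]


def kish_effective_size(weights):
    """Kish estimate of the number of equally weighted samples the set is worth."""
    return sum(weights) ** 2 / sum(w * w for w in weights)


# Frames with identical boosts keep their full statistical value ...
n_uniform = kish_effective_size(reweight([0.0, 0.0, 0.0, 0.0], kT=1.0))
# ... but one frame with a much larger boost dominates the reweighted average.
n_skewed = kish_effective_size(reweight([0.0, 0.0, 0.0, 5.0], kT=1.0))
```

In the second case four frames are worth barely more than one, which is the statistical noise problem that grows as the modified landscape departs further from the original.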

Qualitatively, how can we think about the methods just described? For one thing, as dynamical methods, they all gather information by transitions among states (Sec. 3.1): more transitions suggest greater statistical quality of the data. However, although methods which modify the potential surface may increase the number of transitions, the overlap between the sampled and targeted distributions generally is expected to decline with more substantial changes to the potential. In this context, the modification of dynamics without perturbing the potential [84, 144] seems particularly intriguing, even though technical challenges in implementation may remain.

5.5.3 Monte Carlo approaches

The term “Monte Carlo” can be used in many ways, so it is useful to first delimit our discussion. Here, we want to focus on single-Markov-chain Monte Carlo, in which a sequence of configurations is generated, each one based on a trial move away from the previous configuration. Typically, such MC simulations of biomolecules have a strong dynamical character because trial moves tend to be small and physically realizable in a small time increment [90, 110, 146], whereas large trial moves would tend to be rejected in any reasonably detailed model. As we shall see, however, less physical moves sometimes can be used, including move sets which do not strictly obey detailed balance but instead conform to the weaker balance condition [79]. It is also worth noting that the various exchange simulations discussed above (Secs. 5.1, 5.2, 5.3) can be considered MC simulations because “exchange” is a special kind of trial move and indeed governed by a Metropolis acceptance criterion [147].

A key advantage of single-chain MC simulation is that it can be used with any energy function, whether continuous or not, because forces do not need to be calculated [32]. MC simulation is available for use with standard forcefields in some simulation packages [46, 53] but it seems to be most commonly used in connection with simplified models (e.g., [110, 146]). The choice to use MC is usually for convenience: it readily enables the use of simple or discontinuous energy functions; furthermore, MC naturally handles constraints like fixed bond lengths and/or angles. Substantial effort has gone into developing trial moves useful for biomolecules, with a focus on moves that act fully locally (e.g., [5, 113, 123, 127, 134]). Two studies of MC employing quasi-physical trial moves have reported efficiency gains compared to MD [53, 124]. MC has also been applied to large implicitly solvated peptides [80]. Further, it is widely used in non-statistical approaches such as docking [62], and it also is used in the Rosetta folding software [17].
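A minimal single-chain Metropolis sketch shows why forces are never needed. The energy function here is a deliberately discontinuous toy square well with hard walls; the function names, the move size, and the well parameters are hypothetical choices for illustration only.

```python
import math
import random


def square_well(x):
    """Discontinuous toy energy: hard walls at |x| > 2, an attractive well for |x| < 1."""
    if abs(x) > 2.0:
        return math.inf
    return -1.0 if abs(x) < 1.0 else 0.0


def acceptance_probability(delta_u, kT):
    """Metropolis criterion: always accept downhill moves, otherwise exp(-dU/kT)."""
    return 1.0 if delta_u <= 0.0 else math.exp(-delta_u / kT)


def run_chain(energy, x0, n_steps, max_move, kT, seed=0):
    """Generate a Markov chain using small, symmetric trial moves."""
    rng = random.Random(seed)
    x, u = x0, energy(x0)
    chain, accepted = [x], 0
    for _ in range(n_steps):
        trial = x + rng.uniform(-max_move, max_move)
        u_trial = energy(trial)  # only energies are evaluated, never forces
        if rng.random() < acceptance_probability(u_trial - u, kT):
            x, u = trial, u_trial
            accepted += 1
        chain.append(x)  # a rejected move repeats the current configuration
    return chain, accepted / n_steps


chain, acceptance_rate = run_chain(square_well, 0.0, n_steps=1000, max_move=0.5, kT=1.0)
```

Moves into the walls have infinite energy and therefore zero acceptance probability, so the chain stays inside the allowed region without any special handling.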

For implicitly solvated all-atom peptides, a novel class of trial moves has proven extremely efficient. Ding et al. showed that when libraries of configurations for each amino acid were computed in advance, swap moves with library configurations provided extremely rapid motion in configuration space [19]. Efficiency gains based on measuring Neff suggested the simulations were 100 – 1,000 times faster than MD. Trial moves involving entire residues apparently were successful because subtle correlations among the atoms were accounted for in the pre-computed libraries; by comparison, internal-coordinate trial moves of a similar magnitude (e.g., twisting φ and ψ dihedrals) were rarely accepted [19].

More detailed discussion of Monte Carlo methods can be found in recent reviews [22, 128] and textbooks [2, 32].

5.6 Potential-of-mean-force methods

Many methods are designed to calculate a potential of mean force (PMF) for a specified set of coordinates χ, and such approaches implicitly are sampling methods. After all, the Boltzmann factor of the PMF is defined to be proportional to the probability distribution for the specified coordinates: ρ(χ) ∝ exp[-PMF(χ)/kBT] [147]. If the PMF has been calculated well (with sufficient sampling along the χ coordinates and orthogonal to them) then the configurations can be suitably reweighted into a canonical ensemble.
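The relation between the PMF and the probability distribution can be sketched directly: given samples of a coordinate χ, a histogram estimate of ρ(χ) is converted to a PMF via −kBT ln ρ. The function name, the binning scheme, and the choice to shift the minimum to zero are assumptions of this sketch; practical calculations generally rely on biased sampling combined with methods such as WHAM rather than a raw histogram.

```python
import math


def pmf_from_samples(samples, lo, hi, n_bins, kT):
    """Histogram estimate of PMF(chi) = -kT ln rho(chi), shifted so its minimum is zero."""
    counts = [0] * n_bins
    width = (hi - lo) / n_bins
    for s in samples:
        if lo <= s < hi:
            index = min(int((s - lo) / width), n_bins - 1)
            counts[index] += 1
    total = sum(counts)
    if total == 0:
        raise ValueError("no samples fall inside the histogram range")
    # Unvisited bins have zero estimated probability, hence infinite PMF.
    pmf = [-kT * math.log(c / total) if c > 0 else math.inf for c in counts]
    reference = min(pmf)
    return [p - reference for p in pmf]


# Four samples in the first bin and one in the second: the PMF difference is kT ln 4.
pmf = pmf_from_samples([0.1, 0.1, 0.1, 0.1, 0.6], lo=0.0, hi=1.0, n_bins=2, kT=1.0)
```

The infinite values assigned to unvisited bins make explicit that the PMF is only as good as the sampling along χ.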

There are numerous approaches geared toward calculating a PMF. Some explore the full configuration space in a single trajectory, such as lambda dynamics [58, 120], and could be categorized with modified dynamics methods, although this is just a semantic issue. A large fraction of PMF calculations employ the weighted histogram analysis method (WHAM) [59], but many alternatives have been developed (e.g., [1, 25, 81, 111, 142]).

The main advantage which PMF methods hope to attain is faster sampling along the χ coordinates than would be possible using brute-force sampling. This perspective allows several observations. First, to aid sampling, the investigator must be able to select all important slow coordinates in advance; otherwise, sampling in directions “orthogonal” to χ will be impractical. Second, the maximum advantage which can be gained over a brute-force simulation will depend on how substantial the barriers are along the χ coordinates; if sampling along a coordinate is slow because many states are separated by only small barriers, then the advantage may be modest. Last, for a PMF calculation to be successful, different values of the χ coordinates must be quantitatively related to each other: depending on the details of the method, this can be directly via numerous transitions (e.g., [25, 58, 120, 142]) echoing Sec. 3.1, or by requiring well-sampled sub-regions of overlap [59].

5.7 Non-dynamical methods

Methods which do not rely on dynamics for sampling use a variety of distinct strategies, but as there are relatively few such efforts, they are grouped together for convenience.

Recently, there have been a number of applications to biomolecules of old polymer growth ideas (e.g., [83, 131]), sometimes termed “sequential importance sampling” [66]. The basic idea is to add one monomer (e.g., amino acid) at a time to an ensemble of partially grown configurations while (i) keeping track of appropriate statistical weights and/or (ii) “resampling” the ensemble [66]. These approaches have been applied to simplified models of proteins and nucleic acids [29, 138–140], to all-atom peptides at high temperature [126], and more recently to all-atom peptides using pre-sampled libraries of amino-acid configurations [78]. The intrinsic challenge in polymer growth methods is that configurations important in the full molecule may have low statistical probability in early stages of growth; thus application to large, detailed systems likely will require biasing toward structural information known for the full molecule [78, 138].
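The weight bookkeeping in growth methods can be illustrated with the classic Rosenbluth scheme for a self-avoiding walk on a square lattice. This athermal lattice chain is only a stand-in for monomer-by-monomer growth of a biomolecule, and the function names are hypothetical. With an energy function, each factor in the weight would also carry Boltzmann factors of the candidate placements.

```python
import random

NEIGHBOR_STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def grow_chain(n_steps, rng):
    """Grow one self-avoiding chain; return its sites and its statistical weight."""
    sites = [(0, 0)]
    occupied = {(0, 0)}
    weight = 1.0
    for _ in range(n_steps):
        x, y = sites[-1]
        free = [(x + dx, y + dy) for dx, dy in NEIGHBOR_STEPS
                if (x + dx, y + dy) not in occupied]
        if not free:
            return sites, 0.0  # dead end: the chain contributes zero weight
        weight *= len(free)    # corrects for choosing only among free sites
        site = rng.choice(free)
        sites.append(site)
        occupied.add(site)
    return sites, weight


def estimate_walk_count(n_steps, n_chains, seed=0):
    """The average weight estimates the number of self-avoiding walks of this length."""
    rng = random.Random(seed)
    return sum(grow_chain(n_steps, rng)[1] for _ in range(n_chains)) / n_chains


sites, weight = grow_chain(3, random.Random(1))
```

For three steps every chain has weight 4 × 3 × 3 = 36, which equals the exact number of such walks; for longer chains the weights begin to vary from chain to chain, and that growing variance is the practical difficulty which resampling and biasing are meant to control.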

Some methods have been developed which attempt a semi-enumerative description of energy basins. For instance, the “mining minima” method uses a quasiharmonic procedure to estimate the partition function of each basin, which in turn determines the relative probability of the basin [23]; see also [7]. While such approaches have the disadvantage of requiring an exponentially large number of basins [130], they largely avoid timescale issues associated with dynamical methods because the basins are located with faster search methods.
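How basin partition functions translate into populations can be sketched under a purely harmonic assumption: each basin is characterized by its minimum energy and a set of curvatures, and its configurational partition function is the Boltzmann factor of the minimum times a Gaussian integral per mode. This is a simplified stand-in for the procedures of the cited methods, and the function names and numbers are hypothetical.

```python
import math


def basin_partition_function(e_min, curvatures, kT):
    """Harmonic estimate: exp(-E_min/kT) times sqrt(2*pi*kT/k) for each mode."""
    z = math.exp(-e_min / kT)
    for k in curvatures:
        z *= math.sqrt(2.0 * math.pi * kT / k)
    return z


def basin_probabilities(basins, kT):
    """Relative populations of basins from their estimated partition functions."""
    z_values = [basin_partition_function(e_min, curvatures, kT)
                for e_min, curvatures in basins]
    total = sum(z_values)
    return [z / total for z in z_values]


# Two basins of equal depth: the broader one (smaller curvature) is more populated.
populations = basin_probabilities([(0.0, [1.0]), (0.0, [4.0])], kT=1.0)
```

The example shows the entropic contribution explicitly: with equal minimum energies, a fourfold difference in curvature gives a twofold difference in population.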

Another class of approaches has attempted to treat the problem of estimating the free energy of a previously generated canonical ensemble [43, 54, 136]; see also [12]. In analogy to PMF methods (Sec. 5.6), knowledge of free energies for independent simulations in separate states (for which no transitions have been observed) enables the states to be combined in a full ensemble: the free energies directly imply the relative probabilities of the states. The ideas behind such methods are fairly technical and beyond the scope of this review.

6 Special hardware use for sampling

In several cases, rather impressive improvements in sampling speed have been reported based on using novel hardware — and based on novel uses of “ordinary” hardware. It is almost always the case that using new hardware also requires algorithmic development, and that component should not be minimized. Little is “plug and play” in this business.

6.0.1 Parallelization, special-purpose CPUs, and distributed computing

Perhaps the best known way to employ hardware is by parallelization. On the one hand, parallelization typically makes sampling less efficient than single-core simulation when measured by sampling per core (or per dollar) due to overhead costs. On the other, parallelization allows by far the fastest sampling for a given amount of wall-clock time (for a single system) including record-setting runs [21, 30, 108]. Recent examples of parallelization of single-trajectory MD include: a 10 µsec simulation of a small domain [30]; µsec+ simulations of membrane proteins on the BlueGene [37] and Desmond [20] platforms; and the longest reported to date, a msec simulation of a small globular protein on the Anton machine [108]; see also [24]. The Anton simulation reflects both parallelization and the use of special-purpose chips, which can be inferred to each provide ~10 or more times speedup compared to standard chips [107, 108].

Long simulations have significant value. They allow the community to study selected systems in great detail and to appreciate phenomena which could not otherwise be observed. Of equal importance, long simulations can alert the community to limitations of MD and forcefields [31, 36].

There are other parallel strategies, such as exchange simulation (see above) and distributed computing. Distributed computing employs many simultaneous independent simulations or — via repeated rounds of simulation — quasi-parallel computing with minimal communication among simulations. While distributed computing has primarily been applied to the folding problem [115], recent work has shown the value of multiple short simulations for producing Markov state models [47, 89]. Such models can be used to deduce both non-equilibrium and equilibrium information — thus canonical ensembles if desired. A distributed computing framework can also be used for multi-level simulations such as replica exchange [100] and in principle for other quasi-parallel methods [6].
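The way equilibrium information emerges from many short simulations can be sketched with a toy Markov state model. The example assumes that trajectories have already been assigned to discrete states, which is the difficult step in practice, and it uses simple power iteration for the stationary distribution; all function names are hypothetical.

```python
def count_transitions(trajectories, n_states):
    """Count observed state-to-state transitions over all short trajectories."""
    counts = [[0] * n_states for _ in range(n_states)]
    for trajectory in trajectories:
        for a, b in zip(trajectory, trajectory[1:]):
            counts[a][b] += 1
    return counts


def transition_matrix(counts):
    """Row-normalize the counts into transition probabilities."""
    rows = []
    for row in counts:
        total = sum(row)
        if total == 0:
            raise ValueError("a state has no observed outgoing transitions")
        rows.append([c / total for c in row])
    return rows


def stationary_distribution(t, n_iter=10000, tol=1e-12):
    """Power iteration: propagate a distribution until it stops changing."""
    n = len(t)
    p = [1.0 / n] * n
    for _ in range(n_iter):
        new = [sum(p[i] * t[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(new, p)) < tol:
            return new
        p = new
    return p


# Two independent short trajectories over two states.
counts = count_transitions([[0, 0, 1], [1, 0]], n_states=2)
model = transition_matrix(counts)
equilibrium = stationary_distribution([[0.9, 0.1], [0.2, 0.8]])
```

No individual trajectory needs to be long enough to equilibrate: the stationary populations follow from the transition probabilities, provided every state has well-sampled outgoing transitions.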

6.1 Use of GPUs and RAM for sampling

There are other means to exploit hardware besides parallelization. Combining a GPU (graphics processing unit) and CPU, implicit-solvent simulations of all-atom proteins can be performed hundreds of times faster than by a CPU alone [33]. Other work has exploited standard memory capacity (RAM) available on modern computers: by pre-calculating “libraries” of amino acid configurations, “library-based Monte Carlo” was shown to sample implicitly solvated peptides hundreds and sometimes > 1,000 times faster than standard simulation [19]. In a similar spirit, tabulation of a scaled form of the generalized Born implicit-solvent model was shown to lead to significant speed gain for the tabulated part [61].

7 Concluding Perspective

The main goal of this review has been to array various methodologies into qualitative groupings so as to aid the critical analysis necessary to make progress in equilibrium sampling of biomolecules. Where appropriate, an effort has been made to offer a point of view on essential strengths and weaknesses of various methods. As an example, when considering popular multi-level schemes (Secs. 5.1 – 5.3), users should be confident that some level of the “ladder” can be well sampled.

Interesting conclusions result from surveying the sampling literature. Except in small systems, purely algorithmic improvements have yet to demonstrably accelerate equilibrium sampling of biomolecules by a significant amount. Hardware-based advances have been more dramatic, however. In fairness, demonstrating the effectiveness of new hardware for MD is much more straightforward than assessing an algorithm.

To aid future progress, developing and using sampling “yardsticks” should be a key priority for the field (Sec. 4). Such measures should probe the configuration-space distribution in an objective, automatic way to measure the effective sample size. Once a small number of standard measures of sampling quality are accepted and used, efforts naturally will focus on approaches that make a significant difference. Currently, there is a proliferation of nuanced modifications of a small number of central ideas, without a good basis for distinguishing among them. Unlike in the history of theoretical physics, where elegance has sometimes served as a guide for truth, the sampling problem cries out for a “bottom line” focus on efficiency. After all, it is this efficiency which ultimately will permit addressing biophysical and biochemical questions with confidence.

In summary, there seems little choice but to express pessimism on the current state of equilibrium sampling of important biomolecular systems. Keys to moving forward would seem to be (i) exploiting hardware; (ii) quantifying sampling to determine which algorithms are more efficient than MD; and (iii) employing large-resource simulation data to provide benchmarks and guide future efforts.

Summary.

There are subtleties in defining the equilibrium sampling problem having to do with initial conditions and the targeted number of independent configurations.

Molecular dynamics simulations on typical hardware remain several orders of magnitude shorter than known biological timescales.

There are dozens of algorithmic variants available for biomolecular simulation, but none has been documented to yield an order of magnitude improvement over standard molecular dynamics in the range of systems and conditions of primary interest.

Algorithms typically have not been assessed by a standard measure, but some such measures are now available.

Isolated instances of high algorithmic efficiency (compared to molecular dynamics) have been reported in small systems and/or special conditions.

Novel uses of hardware (CPU, GPU, and memory) have yielded some of the most dramatic and demonstrable advances.

Future Issues.

Better sampling should reveal much more of the biophysics and biochemistry which motivates biomolecular simulations. We still do not know the scales of typical equilibrium fluctuations in large biomolecules.

The degree of sampling quality achieved by simulations must be assessed objectively and quantitatively.

Progress in sampling can be expected to come from the combination of novel algorithms and novel hardware use.

Although current simulations typically use all-atom models at the expense of poor sampling, it is possible that reduced or hybrid all-atom/coarse-grained models will yield a better overall picture of equilibrium fluctuations.

Acknowledgments

The author has benefitted from numerous stimulating discussions of sampling with colleagues and group members over the years. Financial support has been provided by grants from the National Science Foundation (Grant No. MCB-0643456) and the National Institutes of Health (Grant No. GM076569).

Mini-glossary

- Canonical distribution

The distribution of configurations where the probability of each is proportional to the Boltzmann factor of the energy at constant temperature, volume, and number of atoms.

- Correlation time

The time which must elapse, on average, between evaluations of a specific observable for the values to be statistically independent.

- Effective sample size

The number of statistically independent configurations to which a given ensemble of correlated configurations is equivalent.

- Forcefield

The classical potential energy function which approximates the quantum mechanical Hamiltonian and governs a simulation.

- Markov chain

A sequence of configurations where each is generated by a stochastic rule depending only on the immediately prior configuration.

- Reweighting

Assigning probabilistic values to configurations based on the ratio of the targeted distribution and the distribution actually sampled.

Acronyms

- CPU

Central processing unit

- GPU

Graphics processing unit

- MC

Monte Carlo

- MD

Molecular Dynamics

- PMF

Potential of mean force

- RE

Replica Exchange

References

- 1.Abrams JB, Tuckerman ME. Efficient and direct generation of multidimensional free energy surfaces via adiabatic dynamics without coordinate transformations. The Journal of Physical Chemistry B. 2008 Dec;112(49):15742–15757. doi: 10.1021/jp805039u. [DOI] [PubMed] [Google Scholar]

- 2.Allen MP, Tildesley DJ. Computer Simulation of Liquids. Oxford: Oxford University Press; 1987. [Google Scholar]

- 3.Beck DAC, White GWN, Daggett V. Exploring the energy landscape of protein folding using replica-exchange and conventional molecular dynamics simulations. J Struct Biol. 2007 Mar;157(3):514–523. doi: 10.1016/j.jsb.2006.10.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Berne BJ, Straub JE. Novel methods of sampling phase space in the simulation of biological systems. Curr Opin Struc Biol. 1997;7:181–189. doi: 10.1016/s0959-440x(97)80023-1. [DOI] [PubMed] [Google Scholar]

- 5.Betancourt MR. Efficient Monte Carlo trial moves for polypeptide simulations. J Chem Phys. 2005 Nov;123(17):174905. doi: 10.1063/1.2102896. [DOI] [PubMed] [Google Scholar]

- 6.Bhatt D, Zhang BW, Zuckerman DM. Steady state via weighted ensemble path sampling. Journal of Chemical Physics. 2010;133:014110. doi: 10.1063/1.3456985. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Bogdan TV, Wales DJ, Calvo F. Equilibrium thermodynamics from basin-sampling. J Chem Phys. 2006 Jan;124(4):044102. doi: 10.1063/1.2148958. [DOI] [PubMed] [Google Scholar]

- 8.Brenner P, Sweet CR, VonHandorf D, Izaguirre JA. Accelerating the replica exchange method through an efficient all-pairs exchange. J Chem Phys. 2007 Feb;126(7):074103. doi: 10.1063/1.2436872. [DOI] [PubMed] [Google Scholar]

- 9.Brown S, Head-Gordon T. Cool walking: A new Markov chain Monte Carlo sampling method. J. Comp. Chem. 2002;24:68–76. doi: 10.1002/jcc.10181. [DOI] [PubMed] [Google Scholar]

- 10.Bussi G, Laio A, Parrinello M. Equilibrium free energies from nonequilibrium metadynamics. Phys Rev Lett. 2006 Mar;96(9):090601. doi: 10.1103/PhysRevLett.96.090601. [DOI] [PubMed] [Google Scholar]

- 11.Calvo F. All-exchanges parallel tempering. J Chem Phys. 2005 Sep;123(12):124106. doi: 10.1063/1.2036969. [DOI] [PubMed] [Google Scholar]

- 12.Cheluvaraja S, Meirovitch H. Simulation method for calculating the entropy and free energy peptides and proteins. Proc. Nat. Acad. Sci. 2004;101:9241–9246. doi: 10.1073/pnas.0308201101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Chodera JD, Singhal N, Pande VS, Dill KA, Swope WC. Automatic discovery of metastable states for the construction of markov models of macromolec-ular conformational dynamics. The Journal of Chemical Physics. 2007;126(15):155101–155117. doi: 10.1063/1.2714538. [DOI] [PubMed] [Google Scholar]

- 14.Chodera JD, Swope WC, Pitera JW, Seok C, Dill KA. Use of the weighted histogram analysis method for the analysis of simulated and parallel tempering simulations. Journal of Chemical Theory and Computation. 2007;3(1):26–41. doi: 10.1021/ct0502864. [DOI] [PubMed] [Google Scholar]

- 15.Christen M, van Gunsteren WF. Multigraining: an algorithm for simultaneous fine-grained and coarse-grained simulation of molecular systems. J Chem Phys. 2006 Apr;124(15):154106. doi: 10.1063/1.2187488. [DOI] [PubMed] [Google Scholar]

- 16.Christen M, van Gunsteren WF. On searching in, sampling of, and dynamically moving through conformational space of biomolecular systems: A review. J. Comput. Chem. 2008;29(2):157–166. doi: 10.1002/jcc.20725. [DOI] [PubMed] [Google Scholar]

- 17.Das R, Baker D. Macromolecular modeling with Rosetta. Annu Rev Biochem. 2008;77:363–382. doi: 10.1146/annurev.biochem.77.062906.171838. [DOI] [PubMed] [Google Scholar]

- 18.Denschlag R, Lingenheil M, Tavan P. Efficiency reduction and pseudo-convergence in replica exchange sampling of peptide folding-unfolding equilibria. Chemical Physics Letters. 2008;458:244–248. [Google Scholar]

- 19.Ding Y, Mamonov AB, Zuckerman DM. Efficient equilibrium sampling of all-atom peptides using library-based Monte Carlo. J Phys Chem B. 2010 May;114(17):5870–5877. doi: 10.1021/jp910112d. PMCID: PMC2882875. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Dror RO, Arlow DH, Borhani DW, Jensen M, Piana S, Shaw DE. Identification of two distinct inactive conformations of the beta2-adrenergic receptor reconciles structural and biochemical observations. Proc Natl Acad Sci U S A. 2009 Mar;106(12):4689–4694. doi: 10.1073/pnas.0811065106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Duan Y, Kollman PA. Pathways to a protein folding intermediate observed in a 1-microsecond simulation in aqueous solution. Science. 1998 Oct;282(5389):740–744. doi: 10.1126/science.282.5389.740. [DOI] [PubMed] [Google Scholar]

- 22.Earl DJ, Deem MW. Monte Carlo simulations. Methods Mol Biol. 2008;443:25–36. doi: 10.1007/978-1-59745-177-2_2. [DOI] [PubMed] [Google Scholar]

- 23.en A Chang C, Chen W, Gilson MK. Ligand configurational entropy and protein binding. Proc Natl Acad Sci U S A. 2007 Jan;104(5):1534–1539. doi: 10.1073/pnas.0610494104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Ensign DL, Kasson PM, Pande VS. Heterogeneity even at the speed limit of folding: large-scale molecular dynamics study of a fast-folding variant of the villin headpiece. J Mol Biol. 2007 Nov;374(3):806–816. doi: 10.1016/j.jmb.2007.09.069. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Fasnacht M, Swendsen RH, Rosenberg JM. Adaptive integration method for Monte Carlo simulations. Phys. Rev. E. 2004;69:056704. doi: 10.1103/PhysRevE.69.056704. [DOI] [PubMed] [Google Scholar]

- 26.Fenwick MK, Escobedo FA. Expanded ensemble and replica exchange methods for simulation of protein-like systems. The Journal of Chemical Physics. 2003;119(22):11998–12010. [Google Scholar]

- 27.Ferrenberg AM, Swendsen RH. New Monte Carlo technique for studying phase transitions. Phys. Rev. Lett. 1988;61:2635–2638. doi: 10.1103/PhysRevLett.61.2635. [DOI] [PubMed] [Google Scholar]

- 28.Frantz DD, Freeman DL, Doll JD. Reducing quasi-ergodic behavior in Monte Carlo simulation by J-walking: applications to atomic clusters. J. Chem. Phys. 1990;93:2769–2784. [Google Scholar]

- 29.Frauenkron H, Bastolla U, Gerstner E, Grassberger P, Nadler W. New Monte Carlo algorithm for protein folding. Physical Review Letters. 1998;80(14):3149–3152. [PubMed] [Google Scholar]

- 30.Freddolino PL, Liu F, Gruebele M, Schulten K. Ten-microsecond molecular dynamics simulation of a fast-folding ww domain. Biophys J. 2008 May;94(10):L75–L77. doi: 10.1529/biophysj.108.131565. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Freddolino PL, Park S, Roux B, Schulten K. Force field bias in protein folding simulations. Biophys J. 2009 May;96(9):3772–3780. doi: 10.1016/j.bpj.2009.02.033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Frenkel D, Smit B. Understanding Molecular Simulation. San Diego: Academic Press; 1996. [Google Scholar]

- 33. Friedrichs MS, Eastman P, Vaidyanathan V, Houston M, Legrand S, Be-berg AL, Ensign DL, Bruns CM, Pande VS. Accelerating molecular dynamic simulation on graphics processing units. J Comput Chem. 2009 doi: 10.1002/jcc.21209. • Describes GPU-based simulation of implicitly solvated proteins which are hundreds of time faster than CPU simulation.

- 34.Geyer CJ. Markov chain Monte Carlo maximum likelihood. In: Keramidas EM, editor. Proceedings of the 23rd symposium on the interface. Computing science and statistics; 1991. [Google Scholar]

- 35.Grassberger P. Pruned-enriched rosenbluth method: Simulations of theta polymers of chain length up to 1 000 000. Physical Review E (Statistical Physics, Plasmas, Fluids, and Related Interdisciplinary Topics) 1997;56(3):3682–3693. [Google Scholar]

- 36.Grossfield A, Feller SE, Pitman MC. Convergence of molecular dynamics simulations of membrane proteins. Proteins. 2007 Apr;67(1):31–40. doi: 10.1002/prot.21308. [DOI] [PubMed] [Google Scholar]

- 37.Grossfield A, Pitman MC, Feller SE, Soubias O, Gawrisch K. Internal hydration increases during activation of the G-protein-coupled receptor rhodopsin. J Mol Biol. 2008 Aug;381(2):478–486. doi: 10.1016/j.jmb.2008.05.036. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Grossfield A, Zuckerman DM. Quantifying uncertainty and sampling quality in biomolecular simulations. Annu Rep Comput Chem. 2009 Jan;5:23–48. doi: 10.1016/S1574-1400(09)00502-7. PMCID: PMC2865156. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Hamelberg D, Mongan J, McCammon JA. Accelerated molecular dynamics: a promising and efficient simulation method for biomolecules. J Chem Phys. 2004 Jun;120(24):11919–11929. doi: 10.1063/1.1755656. [DOI] [PubMed] [Google Scholar]

- 40.Hansmann UHE, Okamoto Y. Monte Carlo simulations in generalized ensemble: Multicanonical algorithm versus simulated tempering. Phys Rev E Stat Phys Plasmas Fluids Relat Interdiscip Topics. 1996 Nov;54(5):5863–5865. doi: 10.1103/physreve.54.5863. [DOI] [PubMed] [Google Scholar]

- 41.Hess B. Convergence of sampling in protein simulations. Physical Review E. 2002;65(3):031910. doi: 10.1103/PhysRevE.65.031910. [DOI] [PubMed] [Google Scholar]

- 42.Hinrichs NS, Pande VS. Calculation of the distribution of eigenvalues and eigenvectors in Markovian state models for molecular dynamics. J Chem Phys. 2007 Jun;126(24):244101. doi: 10.1063/1.2740261. [DOI] [PubMed] [Google Scholar]

- 43.Hnizdo V, Tan J, Killian BJ, Gilson MK. Efficient calculation of configurational entropy from molecular simulations by combining the mutual-information expansion and nearest-neighbor methods. J Comput Chem. 2008 Jul;29(10):1605–1614. doi: 10.1002/jcc.20919. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Howard J. Mechanics of Motor Proteins and the Cytoskeleton. Sunderland, Mass: Sinauer Associates; 2001. [Google Scholar]

- 45.Hritz J, Oostenbrink C. Hamiltonian replica exchange molecular dynamics using soft-core interactions. J Chem Phys. 2008 Apr;128(14):144121. doi: 10.1063/1.2888998. [DOI] [PubMed] [Google Scholar]

- 46.Hu J, Ma A, Dinner AR. Monte Carlo simulations of biomolecules: The MC module in CHARMM. J. Comput. Chem. 2006;27(2):203–216. doi: 10.1002/jcc.20327. [DOI] [PubMed] [Google Scholar]

- 47.Huang X, Bowman GR, Bacallado S, Pande VS. Rapid equilibrium sampling initiated from nonequilibrium data. Proc Natl Acad Sci U S A. 2009 Nov;106(47):19765–19769. doi: 10.1073/pnas.0909088106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Huang X, Bowman GR, Pande VS. Convergence of folding free energy landscapes via application of enhanced sampling methods in a distributed computing environment. J Chem Phys. 2008 May;128(20):205106. doi: 10.1063/1.2908251. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Huber GA, McCammon JA. Weighted-ensemble simulated annealing: Faster optimization on hierarchical energy surfaces. Physical Review E. 1997;55(4):4822. [Google Scholar]

- 50.Huber T, Torda AE, van Gunsteren WF. Local elevation: a method for improving the searching properties of molecular dynamics simulation. J Comput Aided Mol Des. 1994 Dec;8(6):695–708. doi: 10.1007/BF00124016. [DOI] [PubMed] [Google Scholar]

- 51.Hukushima K, Nemoto K. Exchange Monte Carlo method and application to spin glass simulation. J. Phys. Soc. Jpn. 1996;65:1604–1608. [Google Scholar]

- 52.Jarzynski C. Nonequilibrium equality for free energy differences. Phys. Rev. Lett. 1997;78:2690–2693. [Google Scholar]

- 53.Jorgensen WL, Tirado-Rives J. Monte Carlo vs molecular dynamics for conformational sampling. J. Phys. Chem. 1996;100:14508–14513. [Google Scholar]

- 54.Killian BJ, Kravitz JY, Gilson MK. Extraction of configurational entropy from molecular simulations via an expansion approximation. J Chem Phys. 2007 Jul;127(2):024107. doi: 10.1063/1.2746329. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Kim J, Straub JE, Keyes T. Statistical-temperature Monte Carlo and molecular dynamics algorithms. Phys Rev Lett. 2006 Aug;97(5):050601. doi: 10.1103/PhysRevLett.97.050601. [DOI] [PubMed] [Google Scholar]

- 56.Knegtel RMA, Kuntz ID, Oshiro CM. Molecular docking to ensembles of protein structures. J. Mol. Biol. 1997;266:424–440. doi: 10.1006/jmbi.1996.0776. [DOI] [PubMed] [Google Scholar]

- 57.Kofke DA. On the acceptance probability of replica-exchange Monte Carlo trials. The Journal of Chemical Physics. 2002;117(15):6911–6914. [Google Scholar]

- 58.Kong X, Brooks CL. Lambda-dynamics: A new approach to free energy calculations. J. Chem. Phys. 1996;105:2414–2423. [Google Scholar]

- 59.Kumar S, Bouzida D, Swendsen RH, Kollman PA, Rosenberg JM. The weighted histogram analysis method for free-energy calculations on biomolecules. I. The method. J. Comp. Chem. 1992;13:1011–1021. [Google Scholar]

- 60.Laio A, Parrinello M. Escaping free-energy minima. Proc Natl Acad Sci U S A. 2002 Oct;99(20):12562–12566. doi: 10.1073/pnas.202427399. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Larsson P, Lindahl E. A high-performance parallel-generalized Born implementation enabled by tabulated interaction rescaling. J. Comput. Chem. 2010;31(14):2593–2600. doi: 10.1002/jcc.21552. [DOI] [PubMed] [Google Scholar]

- 62.Leach AR. Molecular Modelling: Principles and Applications. Prentice Hall; 2001. [Google Scholar]

- 63.Leimkuhler BJ, Sweet CR. The canonical ensemble via symplectic integrators using Nosé and Nosé-Poincaré chains. The Journal of Chemical Physics. 2004;121(1):108–116. doi: 10.1063/1.1740753. [DOI] [PubMed] [Google Scholar]

- 64.Li H, Li G, Berg BA, Yang W. Finite reservoir replica exchange to enhance canonical sampling in rugged energy surfaces. J Chem Phys. 2006 Oct;125(14):144902. doi: 10.1063/1.2354157. [DOI] [PubMed] [Google Scholar]

- 65.Lin J-H, Perryman AL, Schames JR, McCammon JA. Computational drug design accommodating receptor flexibility: the relaxed complex scheme. J Am Chem Soc. 2002 May;124(20):5632–5633. doi: 10.1021/ja0260162. [DOI] [PubMed] [Google Scholar]

- 66.Liu JS. Monte Carlo Strategies in Scientific Computing. New York: Springer; 2002. [Google Scholar]

- 67.Liu P, Kim B, Friesner RA, Berne BJ. Replica exchange with solute tempering: A method for sampling biological systems in explicit water. Proc. Nat. Acad. Sci. 2005;102:13749–13754. doi: 10.1073/pnas.0506346102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Liu P, Shi Q, Lyman E, Voth GA. Reconstructing atomistic detail for coarse-grained models with resolution exchange. J Chem Phys. 2008 Sep;129(11):114103. doi: 10.1063/1.2976663. [DOI] [PubMed] [Google Scholar]

- 69.Liu Z, Berne BJ. Method for accelerating chain folding and mixing. The Journal of Chemical Physics. 1993;99(8):6071–6077. [Google Scholar]

- 70.Lyman E, Ytreberg FM, Zuckerman DM. Resolution exchange simulation. Phys. Rev. Lett. 2006;96:028105. doi: 10.1103/PhysRevLett.96.028105. [DOI] [PubMed] [Google Scholar]

- 71.Lyman E, Zuckerman DM. Resolution exchange simulation with incremental coarsening. J. Chem. Theory Comp. 2006;2:656–666. doi: 10.1021/ct050337x. [DOI] [PubMed] [Google Scholar]

- 72.Lyman E, Zuckerman DM. Annealed importance sampling of peptides. The Journal of Chemical Physics. 2007;127(6):065101–065106. doi: 10.1063/1.2754267. [DOI] [PubMed] [Google Scholar]

- 73.Lyman E, Zuckerman DM. On the structural convergence of biomolecular simulations by determination of the effective sample size. J. Phys. Chem. B. 2007;111(44):12876–12882. doi: 10.1021/jp073061t. PMCID: PMC2538559. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Lyman E, Zuckerman DM. Resampling improves the efficiency of a “fast-switch” equilibrium sampling protocol. J Chem Phys. 2009 Feb;130(8):081102. doi: 10.1063/1.3081626. PMCID: PMC2671214. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Lyubartsev AP, Martsinovski AA, Shevkunov SV, Vorontsov-Velyaminov PN. New approach to Monte Carlo calculation of the free energy: Method of expanded ensembles. The Journal of Chemical Physics. 1992;96(3):1776–1783. [Google Scholar]

- 76.Machta J. Strengths and weaknesses of parallel tempering. Phys Rev E Stat Nonlin Soft Matter Phys. 2009 Nov;80(5 Pt 2):056706. doi: 10.1103/PhysRevE.80.056706. [DOI] [PubMed] [Google Scholar]

- 77.Mamonov AB, Bhatt D, Cashman DJ, Ding Y, Zuckerman DM. General library-based Monte Carlo technique enables equilibrium sampling of semi-atomistic protein models. J Phys Chem B. 2009 Aug;113(31):10891–10904. doi: 10.1021/jp901322v. PMCID: PMC2766542. • Introduces a memory-intensive approach to simulation of all-atom, coarse-grained, and hybrid models.

- 78.Mamonov AB, Zhang X, Zuckerman D. Rapid sampling of all-atom peptides using a library-based polymer-growth approach. Journal of Computational Chemistry. 2010. doi: 10.1002/jcc.21626. Accepted, in press. Preprint available: http://arxiv.org/abs/0910.2495. PMCID in process. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 79.Manousiouthakis VI, Deem MW. Strict detailed balance is unnecessary in Monte Carlo simulation. The Journal of Chemical Physics. 1999;110(6):2753–2756. [Google Scholar]

- 80.Mao AH, Crick SL, Vitalis A, Chicoine CL, Pappu RV. Net charge per residue modulates conformational ensembles of intrinsically disordered proteins. Proc Natl Acad Sci U S A. 2010 May;107(18):8183–8188. doi: 10.1073/pnas.0911107107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81.Maragliano L, Vanden-Eijnden E. Single-sweep methods for free energy calculations. J Chem Phys. 2008 May;128(18):184110. doi: 10.1063/1.2907241. [DOI] [PubMed] [Google Scholar]

- 82.McCammon JA, Gelin BR, Karplus M. Dynamics of folded proteins. Nature. 1977;267(5612):585–590. doi: 10.1038/267585a0. [DOI] [PubMed] [Google Scholar]

- 83.Meirovitch H. A new method for simulation of real chains: scanning future steps. J Phys A. 1982;15:L735–L740. [Google Scholar]

- 84.Minary P, Tuckerman ME, Martyna GJ. Long time molecular dynamics for enhanced conformational sampling in biomolecular systems. Phys Rev Lett. 2004 Oct;93(15):150201. doi: 10.1103/PhysRevLett.93.150201. [DOI] [PubMed] [Google Scholar]

- 85.Mitsutake A, Sugita Y, Okamoto Y. Generalized-ensemble algorithms for molecular simulations of biopolymers. Biopolymers. 2001;60(2):96–123. doi: 10.1002/1097-0282(2001)60:2<96::AID-BIP1007>3.0.CO;2-F. [DOI] [PubMed] [Google Scholar]

- 86.Mountain RD, Thirumalai D. Measures of effective ergodic convergence in liquids. J. Phys. Chem. 1989;93(19):6975–6979. [Google Scholar]

- 87.Neal RM. Annealed importance sampling. Statistics and Computing. 2001;11:125–139. [Google Scholar]

- 88.Neirotti JP, Freeman DL, Doll JD. Approach to ergodicity in Monte Carlo simulations. Physical Review E. 2000;62(5):7445. doi: 10.1103/physreve.62.7445. [DOI] [PubMed] [Google Scholar]

- 89.Noé F, Schütte C, Vanden-Eijnden E, Reich L, Weikl TR. Constructing the equilibrium ensemble of folding pathways from short off-equilibrium simulations. Proc Natl Acad Sci U S A. 2009 Nov;106(45):19011–19016. doi: 10.1073/pnas.0905466106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 90.Nowak U, Chantrell RW, Kennedy EC. Monte Carlo simulation with time step quantification in terms of Langevin dynamics. Phys Rev Lett. 2000 Jan;84(1):163–166. doi: 10.1103/PhysRevLett.84.163. [DOI] [PubMed] [Google Scholar]

- 91.Noé F, Horenko I, Schütte C, Smith JC. Hierarchical analysis of conformational dynamics in biomolecules: transition networks of metastable states. J Chem Phys. 2007 Apr;126(15):155102. doi: 10.1063/1.2714539. [DOI] [PubMed] [Google Scholar]

- 92.Nymeyer H. How efficient is replica exchange molecular dynamics? An analytic approach. J. Chem. Theory Comput. 2008;4(4):626–636. doi: 10.1021/ct7003337. [DOI] [PubMed] [Google Scholar]

- 93.Okur A, Roe DR, Cui G, Hornak V, Simmerling C. Improving convergence of replica-exchange simulations through coupling to a high-temperature structure reservoir. Journal of Chemical Theory and Computation. 2007;3(2):557–568. doi: 10.1021/ct600263e. [DOI] [PubMed] [Google Scholar]

- 94.Opps SB, Schofield J. Extended state-space Monte Carlo methods. Phys Rev E Stat Nonlin Soft Matter Phys. 2001 May;63(5 Pt 2):056701. doi: 10.1103/PhysRevE.63.056701. [DOI] [PubMed] [Google Scholar]

- 95.Paschek D, Garcia AE. Reversible temperature and pressure denaturation of a protein fragment: A replica-exchange molecular dynamics simulation study. Phys. Rev. Lett. 2004;93:238105. doi: 10.1103/PhysRevLett.93.238105. [DOI] [PubMed] [Google Scholar]

- 96.Periole X, Mark AE. Convergence and sampling efficiency in replica exchange simulations of peptide folding in explicit solvent. J Chem Phys. 2007 Jan;126(1):014903. doi: 10.1063/1.2404954. [DOI] [PubMed] [Google Scholar]

- 97.Rahman JA, Tully JC. Puddle-skimming: An efficient sampling of multidimensional configuration space. The Journal of Chemical Physics. 2002;116(20):8750–8760. [Google Scholar]

- 98.Raiteri P, Laio A, Gervasio FL, Micheletti C, Parrinello M. Efficient reconstruction of complex free energy landscapes by multiple walkers metadynamics. J Phys Chem B. 2006 Mar;110(8):3533–3539. doi: 10.1021/jp054359r. [DOI] [PubMed] [Google Scholar]

- 99.Rathore N, Chopra M, de Pablo JJ. Optimal allocation of replicas in parallel tempering simulations. J Chem Phys. 2005 Jan;122(2):024111. doi: 10.1063/1.1831273. [DOI] [PubMed] [Google Scholar]

- 100.Rhee YM, Pande VS. Multiplexed-replica exchange molecular dynamics method for protein folding simulation. Biophys J. 2003 Feb;84(2 Pt 1):775–786. doi: 10.1016/S0006-3495(03)74897-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 101.Rick SW. Replica exchange with dynamical scaling. J Chem Phys. 2007 Feb;126(5):054102. doi: 10.1063/1.2431807. [DOI] [PubMed] [Google Scholar]

- 102.Rosta E, Hummer G. Error and efficiency of replica exchange molecular dynamics simulations. J Chem Phys. 2009 Oct;131(16):165102. doi: 10.1063/1.3249608. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103.Ruscio JZ, Fawzi NL, Head-Gordon T. How hot? Systematic convergence of the replica exchange method using multiple reservoirs. J. Comput. Chem. 2010;31(3):620–627. doi: 10.1002/jcc.21355. [DOI] [PubMed] [Google Scholar]

- 104.Sanbonmatsu KY, García AE. Structure of Met-enkephalin in explicit aqueous solution using replica exchange molecular dynamics. Proteins. 2002 Feb;46(2):225–234. doi: 10.1002/prot.1167. [DOI] [PubMed] [Google Scholar]

- 105.Schlick T. Molecular Modeling and Simulation. Springer; 2002. [Google Scholar]

- 106.Schlick T, Mandziuk M, Skeel RD, Srinivas K. Nonlinear resonance artifacts in molecular dynamics simulations. Journal of Computational Physics. 1998 Feb;140(1):1–29. [Google Scholar]

- 107.Shaw DE, Deneroff MM, Dror RO, Kuskin JS, Larson RH, Salmon JK, Young C, Batson B, Bowers KJ, Chao JC, Eastwood MP, Gagliardo J, Grossman JP, Ho CR, Ierardi DJ, Kolossváry I, Klepeis JL, Layman T, McLeavey C, Moraes MA, Mueller R, Priest EC, Shan Y, Spengler J, Theobald M, Towles B, Wang SC. Anton, a special-purpose machine for molecular dynamics simulation. In: ISCA '07: Proceedings of the 34th annual international symposium on Computer architecture. New York, NY, USA: ACM; 2007. pp. 1–12. [Google Scholar]