Abstract

INTRODUCTION

Ultrasound-guided interventions often necessitate scanning of deep-seated anatomical structures that may be hard to visualize. Visualization can be improved using reconstructed 3D ultrasound volumes. High-resolution 3D reconstruction of a large area during clinical interventions is challenging if the region of interest is unknown. We propose a two-stage scanning method allowing the user to perform quick low-resolution scouting followed by high-resolution live volume reconstruction.

METHODS

Scout scanning is accomplished by stacking 2D tracked ultrasound images into a low-resolution volume. Then, within a region of interest defined in the scout scan, live volume reconstruction can be performed by continuous scanning until sufficient image density is achieved. We implemented the workflow as a module of the open-source 3D Slicer application, within the SlicerIGT extension and building on the PLUS toolkit.

RESULTS

Scout scanning is performed in a few seconds using 3 mm spacing to allow region of interest definition. Live reconstruction parameters are set to provide good image quality (0.5 mm spacing, hole filling enabled) and feedback is given during live scanning by regularly updated display of the reconstructed volume.

DISCUSSION

Use of scout scanning may allow the physician to identify anatomical structures. Subsequent live volume reconstruction in a region of interest may assist in procedures such as targeting needle interventions or estimating brain shift during surgery.

Keywords: ultrasound, volume reconstruction, 3D Slicer, visualization

1. INTRODUCTION

Ultrasound (US) is an imaging modality with many advantages compared to magnetic resonance imaging or computed tomography, being safe, affordable, and real-time. One of the limitations of US-guided interventions is the difficulty of scanning and interpreting deep-seated regions. The main difficulty in understanding ultrasound images is that they only show a 2-dimensional cross section of a small area of the body. Furthermore, the ultrasound image poorly characterizes tissue types due to imaging artifacts, such as absorption, scatter, and acoustic shadows. Poor spatial resolution is unavoidable when sufficient ultrasound penetration is needed to detect deep targets [1]. It is difficult to accurately visualize a needle and its tip in US images, although visualization of the needle tip is essential for successful ultrasound-guided needle insertions. Image processing algorithms can facilitate scanning of these regions of interest. US volume reconstruction allows better visualization of the target by creating a reconstructed 3D volume from the tracked 2D frames. The reconstructed volume can also be fused with the tracked pose of the needle, in order to visualize both the anatomy of the patient and the instrument in real time.

Various software tools have been developed for aiding ultrasound-guided interventions. SynchroGrab is an example which allows tracked ultrasound data acquisition and volume reconstruction [2][6]. Using Stradwin, users can calibrate 3D ultrasound probes, acquire and visualize images [3][4]. Brainlab Spinal Navigation software offers an image-guided navigation platform for spine surgery. However, none of these tools are ideal for translational research as they are either closed-source software (Stradwin, Brainlab) or not readily usable software applications (SynchroGrab).

Moreover, the workflow proposed by these existing tools is not optimal for clinical interventions. Clinical procedures usually require a general view of an anatomical region, and detailed information from only certain regions of interest. For example, in spinal injections in patients with pathological spine deformations, the target area may not be directly visible in US images. The intervertebral spaces, where the injection is targeted, are often compressed or deformed. Physicians use anatomical landmarks to localize the target, but in such spines the procedure is often unsuccessful. Furthermore, procedure time is a critical factor in operating rooms. Better visualization of the target can be achieved using 3D reconstructed ultrasound volumes, but often there is not enough time for high-resolution reconstruction of a large area during the procedure, because high-resolution reconstruction requires slow and careful scanning of the entire region. High-quality reconstruction in a limited time can only be achieved in a smaller region of interest. A high-quality scan is also easier to achieve if there is real-time feedback on the scanned ultrasound volume. However, ultrasound volume reconstruction requires a predefined region for the output image; otherwise, every new image outside the existing volume would require the entire volume to be re-created in memory, which is a slow process. A fast low-resolution scan performed before the high-resolution scan can be used to define this region.

We propose a two-stage ultrasound scanning and 3D reconstruction workflow to assist scanning of target areas in ultrasound-guided interventions, particularly in cases where the targets are not easy to localize and visualize. We implemented this workflow in the 3D Slicer¹ software, specifically in an existing module, Plus Remote, which is part of the SlicerIGT² extension. The pre-existing version of Plus Remote allowed either recording of 2D frames followed by volume reconstruction of the recorded frames, or live volume reconstruction. Live volume reconstruction allows visualization of the reconstructed volume while scanning the target area. A limitation of the previous Plus Remote module was that volume reconstruction could be performed only on the entire area scanned by the physician, using a fixed resolution value and parameters that were not accessible to the user.

2. NEW OR BREAKTHROUGH WORK TO BE PRESENTED

We propose a workflow adapted for ultrasound-guided procedures where localization of the target is a challenging task. The method is composed of two steps: low-resolution scout scanning first allows visualization of the entire region and selection of a small region of interest, then high-resolution reconstruction offers a good image quality of the target in real time. The open-source implementation as a 3D Slicer module is freely available.

3. METHODS

3.1 Setup

The generic steps required for ultrasound-guided interventions were described by Lasso et al. [4]. First, temporal and spatial calibrations of the tracked tools have to be completed, so the input data for volume reconstruction is accurate. Then the user acquires 2D tracked image data and frames are reconstructed into a 3D volume.

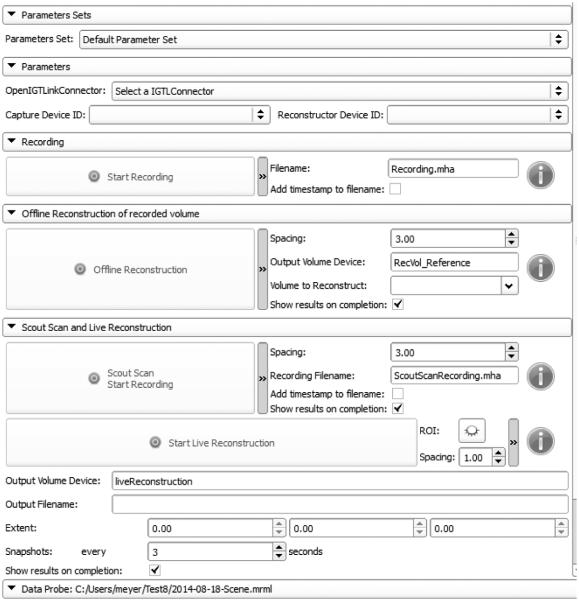

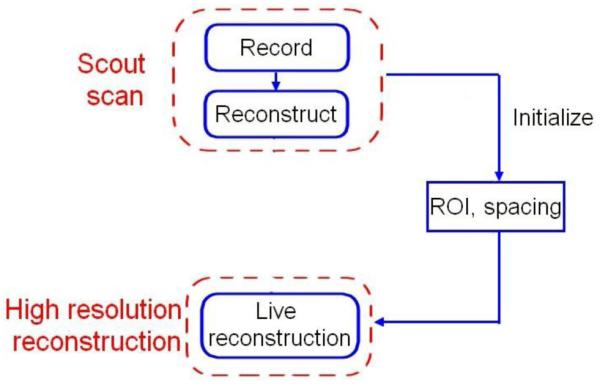

The solution we developed is a particular workflow (Figure 1) that users can easily follow step by step. The first step is a scout scan, which needs to provide a volume good enough to select the region of interest for the high-resolution volume reconstruction. 2D tracked ultrasound images are recorded and automatically reconstructed into a 3D volume. The result is then used for the live reconstruction by initializing the spacing and region of interest (ROI) values based on the scout scan. These ROI values are the borders of a box that selects the part of the image containing the surgical target or other information relevant to the procedure. The ROI values can also be modified by the user: the low-resolution reconstructed volume helps the user choose a smaller region of interest, using an interactive ROI user interface widget available in 3D Slicer. The ROI widget shows a ROI box in the 2D slice views and 3D volume rendering views of the reconstructed volume. This ROI box is composed of lines showing the region boundaries and of points that allow modifying the size and location of the box. After specifying the ROI, a high-resolution live reconstruction can be performed, using a smaller spacing value than that of the scout scan.
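The relationship between the ROI box, the spacing, and the size of the output volume can be illustrated with a minimal sketch. The ROI coordinates below are hypothetical values chosen for illustration, and the helper names `roi_to_grid` and `voxel_count` are not part of Plus Remote or PLUS; only the 3 mm and 0.5 mm spacings come from the text.

```python
import math

def roi_to_grid(roi_min_mm, roi_max_mm, spacing_mm):
    """Return (origin, dimensions) of an isotropic voxel grid covering an axis-aligned ROI box."""
    dims = tuple(
        max(1, math.ceil((hi - lo) / spacing_mm))
        for lo, hi in zip(roi_min_mm, roi_max_mm)
    )
    return tuple(roi_min_mm), dims

def voxel_count(dims):
    """Number of voxels that must be allocated for a grid of the given dimensions."""
    return dims[0] * dims[1] * dims[2]

# Hypothetical scout region (150 x 90 x 60 mm) reconstructed at 3 mm spacing.
scout_origin, scout_dims = roi_to_grid((0.0, 0.0, 0.0), (150.0, 90.0, 60.0), 3.0)

# Hypothetical smaller ROI (60 x 50 x 40 mm) reconstructed at 0.5 mm spacing.
live_origin, live_dims = roi_to_grid((40.0, 20.0, 10.0), (100.0, 70.0, 50.0), 0.5)

# The same full scout region at 0.5 mm spacing, for comparison.
_, full_dims = roi_to_grid((0.0, 0.0, 0.0), (150.0, 90.0, 60.0), 0.5)

print(scout_dims, voxel_count(scout_dims))
print(live_dims, voxel_count(live_dims))
print(full_dims, voxel_count(full_dims))
```

Reducing the spacing from 3 mm to 0.5 mm multiplies the voxel count of a fixed region by 6³ = 216, which is why restricting the high-resolution pass to a smaller ROI keeps the volume manageable.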

Figure 1. Scout imaging workflow.

3.2 Design and Implementation

3.2.1 Design

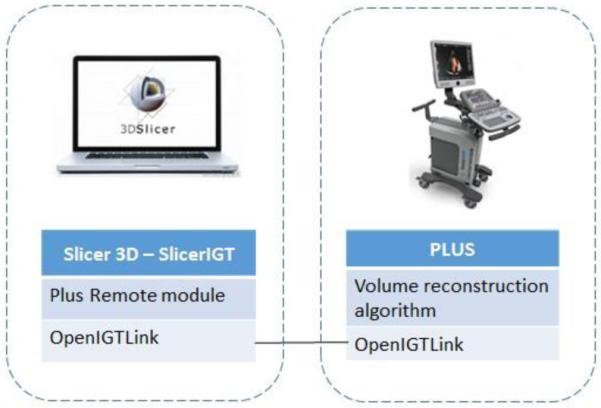

We used the 3D Slicer software application framework, the SlicerIGT extension, and the PLUS toolkit [4] to implement the workflow described above.

3D Slicer is an open-source software application for visualization and analysis of medical images. The platform is modular: it provides many commonly needed data import, processing, and visualization features and allows new features to be added. The workflow we developed is implemented in a module called Plus Remote, which is part of the SlicerIGT extension. The module is written in Python, and its first version already offered some actions for tracked ultrasound data: recording frames and reconstructing data.

The PLUS toolkit is designed for communication with tracking and ultrasound imaging hardware, and also for calibrating, processing, and fusing the acquired data and providing it to applications through the OpenIGTLink network communication protocol [4][5]. The PLUS toolkit contains algorithms for volume reconstruction and can be used for generating and replaying simulated data for testing. Plus Server is the application that runs PLUS functions and sends data to 3D Slicer. It can be remotely controlled from 3D Slicer by sending the available commands through an OpenIGTLink connection. The Plus Remote module offers a convenient user interface to remotely control Plus Server from Slicer, avoiding the use of the PLUS command prompt.

To use the live ultrasound volume reconstruction workflow, the user first needs to download the latest release of the PLUS toolkit and the 3D Slicer software, and to install the SlicerIGT extension using the extension manager in Slicer. The user interface for the proposed workflow is implemented in the Plus Remote module. 3D Slicer and PLUS do not have to run on the same computer, as OpenIGTLink allows data exchange between any computers on the same network (Figure 2). The connection is set up in the OpenIGTLinkIF module, and then Plus Remote can be used to send commands and receive data.

Figure 2. System configuration.

Plus Server allows execution of various commands with many customizable parameters. We decided to give the user access to only a selected set of parameters and to exclude others, such as those controlling the method used for volume reconstruction and hole filling. These parameters are specified in a configuration file instead. To further simplify the user interface, some parameters, such as the output filename or options for visualization, are hidden by default. The basic user interface is composed of only a few buttons for sending commands with default parameters, and advanced parameters are accessible by clicking a button.

3.2.2 Implementation

3D Slicer is built on several open-source toolkits, including the Visualization Toolkit (VTK), and the core application is written in C++. Most features are also available through a Python wrapper, therefore modules can be implemented in C++ or Python. The Plus Remote module is written in Python.

The Slicer architecture relies on MRML (Medical Reality Modeling Language), which provides data representation for complex medical image computing data sets. An MRML scene contains a set of MRML nodes, which describe all the loaded data items and how to visualize, process, and store them. Types of data items that MRML nodes can store include 3D images, models, point sets, and transforms. This MRML model is independent of the implementation of the graphical user interface and of the processing algorithms that operate on the data. This separation is reflected in the source code, which is split into user interface, processing algorithm, and data representation classes (MRML nodes). Classes in each group only communicate with other groups by modifying and observing changes in MRML nodes.
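The idea of communicating only by modifying and observing shared nodes can be illustrated with a generic observer sketch. This is not the MRML or 3D Slicer API: the `Node` class, its methods, and the `spacing_mm` attribute are hypothetical names used only to show the pattern.

```python
class Node:
    """Toy data node: stores attributes and notifies observers when one changes."""

    def __init__(self, **attributes):
        self._attributes = dict(attributes)
        self._observers = []

    def add_observer(self, callback):
        # callback(node, key) is invoked after an attribute actually changes
        self._observers.append(callback)

    def get(self, key):
        return self._attributes[key]

    def set(self, key, value):
        # Notify only on a real change, so redundant writes do not trigger updates
        if self._attributes.get(key) != value:
            self._attributes[key] = value
            for callback in list(self._observers):
                callback(self, key)


# The "user interface" side observes the node and refreshes its display text.
display = []
params = Node(spacing_mm=3.0)
params.add_observer(lambda node, key: display.append(f"{key} = {node.get(key)}"))

# The "processing" side never calls the user interface; it only modifies the node.
params.set("spacing_mm", 0.5)
params.set("spacing_mm", 0.5)  # unchanged value, so no notification is sent

print(display)
```

With this separation, the processing code and the user interface code never reference each other directly; each side only knows about the shared node.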

Plus Remote can execute multiple commands simultaneously, i.e., it does not have to wait for completion of a command before sending a new command to Plus Server. The identifier and callback method of each in-progress command are stored in a hash table. When the command is completed (or waiting for the response has timed out), the callback method is executed. Multiple commands can be easily chained together by executing a new command in a callback method. This is applied, for example, in the implementation of the scout scan function, where a “stop recording” command is immediately followed by an “offline reconstruction” command.
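The command handling described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the Plus Remote source code: the `CommandRegistry` class, the `send` transport function, the command names, and the file names are all hypothetical placeholders, and the transport is simulated by a list instead of an OpenIGTLink connection.

```python
import itertools
import time

class CommandRegistry:
    """Tracks in-progress commands in a dict (hash table) keyed by command id."""

    def __init__(self, send, timeout_sec=10.0, clock=time.monotonic):
        self._send = send            # function(command_id, name, params); assumed transport
        self._timeout = timeout_sec
        self._clock = clock
        self._pending = {}           # command id -> (callback, deadline)
        self._ids = itertools.count(1)

    def execute(self, name, callback, **params):
        """Send a command without waiting for earlier commands to complete."""
        command_id = next(self._ids)
        self._pending[command_id] = (callback, self._clock() + self._timeout)
        self._send(command_id, name, params)
        return command_id

    def on_reply(self, command_id, reply):
        """Call when a reply arrives; runs the stored callback exactly once."""
        entry = self._pending.pop(command_id, None)
        if entry is not None:
            entry[0](True, reply)

    def check_timeouts(self):
        """Call periodically; commands past their deadline get a failure callback."""
        now = self._clock()
        expired = [cid for cid, (_, deadline) in self._pending.items() if deadline <= now]
        for cid in expired:
            callback, _ = self._pending.pop(cid)
            callback(False, None)


# Simulated transport: just remember what would have been sent to the server.
sent = []
registry = CommandRegistry(send=lambda cid, name, params: sent.append((cid, name, params)))
results = []

def on_reconstructed(ok, reply):
    results.append((ok, reply))

def on_recording_stopped(ok, reply):
    # Chaining: the next command is issued from inside the previous callback.
    if ok:
        registry.execute("ReconstructVolume", on_reconstructed, input_file=reply)

stop_id = registry.execute("StopRecording", on_recording_stopped)
registry.on_reply(stop_id, "scout_recording.mha")    # simulated server reply
registry.on_reply(sent[-1][0], "scout_volume.mha")   # simulated server reply
print([name for _, name, _ in sent], results)
```

Because callbacks are looked up by identifier, replies may arrive in any order, and a command that never receives a reply is still resolved through the timeout path.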

Available commands include recording and offline reconstruction of recorded volume. The implemented workflow is composed of two steps: a scout scan command that records and performs the low resolution reconstruction of the recorded volume; and the live reconstruction command that directly performs the high resolution reconstruction while scanning the patient, without recording data (Table 1). Adding a scout scanning step to the workflow is mainly motivated by two sources. The first one is that it is desirable to have an image of a large anatomical area for reliably finding the region of interest. The other motivation is that specifying a fixed region before starting volume reconstruction greatly simplifies the reconstruction algorithm implementation, allows faster reconstruction speed, and lower and more predictable resource needs.

Table 1. Available commands through Plus Remote module.

| Commands in Plus Remote module | Buttons in user interface |
|---|---|
| Recording | Recording |
| Reconstruction of recorded volume | Offline reconstruction |
| Live volume reconstruction using scout scanning | |

Volume reconstruction is performed using the algorithm included in the PLUS package. Reconstruction is based on the method originally implemented by Gobbi et al. [6] and is performed in two steps. First, a volume grid is allocated, and then each pixel of each slice is pasted into the volume. If no region of interest is specified, the reconstruction algorithm iterates through all the frames to determine the boundaries of the volume that encloses all the image frames, allocates this volume, and then starts inserting the frames. The imaging probe is moved freehand, therefore the spacing between images may be irregular, resulting in unfilled voxels that can be removed by a hole filling algorithm. The volume reconstructor in PLUS includes a hole filling step, which can be activated immediately or once all the frames have been inserted. The reconstructor also contains an optional step that excludes image areas that remain empty because of a lack of good acoustic coupling between the imaging probe and the subject. All volume reconstruction parameters can be set through a configuration file, and most parameters can be changed at run-time by sending commands.
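The two reconstruction steps and the hole filling can be sketched in plain Python. This is a simplified illustration and not the PLUS implementation: it assumes pixel positions have already been transformed to world coordinates, uses nearest-neighbour pasting instead of interpolation, stores the grid sparsely in a dict, and fills holes with the mean of the filled 6-connected neighbours. All function names are hypothetical.

```python
import math

def bounding_box(frames):
    """Iterate through all frames to find the box that encloses every pixel."""
    xs, ys, zs = zip(*[(x, y, z) for frame in frames for (x, y, z, _) in frame])
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))

def reconstruct(frames, spacing, roi=None):
    """Allocate a grid (from the ROI, or from the frames if no ROI) and paste pixels."""
    lo, hi = roi if roi is not None else bounding_box(frames)
    dims = tuple(math.ceil((h - l) / spacing) + 1 for l, h in zip(lo, hi))
    volume = {}  # sparse grid: (i, j, k) -> intensity; a missing key is an unfilled voxel
    for frame in frames:
        for x, y, z, value in frame:
            idx = tuple(int(round((c - l) / spacing)) for c, l in zip((x, y, z), lo))
            if all(0 <= i < n for i, n in zip(idx, dims)):  # ignore pixels outside the grid
                volume[idx] = value
    return volume, dims

def fill_holes(volume, dims):
    """Fill each empty voxel with the mean of its filled 6-connected neighbours."""
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    filled = dict(volume)
    for i in range(dims[0]):
        for j in range(dims[1]):
            for k in range(dims[2]):
                if (i, j, k) in volume:
                    continue
                values = [volume[(i + a, j + b, k + c)]
                          for a, b, c in offsets
                          if (i + a, j + b, k + c) in volume]
                if values:
                    filled[(i, j, k)] = sum(values) / len(values)
    return filled

# Two hypothetical 2x2-pixel frames, 2 mm apart, reconstructed at 1 mm spacing:
# the plane between them receives no pixels and is recovered by hole filling.
frame_a = [(x, y, 0.0, 10) for x in (0.0, 1.0) for y in (0.0, 1.0)]
frame_b = [(x, y, 2.0, 30) for x in (0.0, 1.0) for y in (0.0, 1.0)]
volume, dims = reconstruct([frame_a, frame_b], spacing=1.0)
filled = fill_holes(volume, dims)
print(dims, len(volume), len(filled), filled[(0, 0, 1)])
```

When `roi` is given, the pass over all frames in `bounding_box` is skipped and the grid size is known before the first frame arrives, which is what makes inserting frames during live scanning possible.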

All server connection, volume reconstruction, and visualization parameters can be saved in the MRML scene and can be reloaded when starting a procedure. This allows optimization of the user interface for a certain clinical procedure without doing any custom software development.

The Plus Remote source code is openly accessible on the GitHub project hosting website. Development tools used for this project include Eclipse, PyDev, Qt Designer, and 3D Slicer’s Python Debugger extension. The Python Debugger is a convenient tool that allows connecting the Eclipse integrated development environment, using PyDev, for visual debugging.

3.3 Testing

Testing during development was performed by replaying previously recorded procedures using simulated hardware devices available in Plus Server. This allowed thorough testing in a well-controlled environment without the need for accessing hardware devices.

The developed software was validated in the context of spinal injection procedures. A Sonix Touch (Ultrasonix, Richmond, BC, Canada) ultrasound system and an Ascension DriveBay (NDI, Waterloo, ON, Canada) tracker device were used on a human subject and on a realistic spine phantom. Our test was approved by our institutional ethics review board, and informed consent was obtained from the human subject.

The module was also tested in the context of brain tumor resection procedures. BrainLab navigation system (BrainLab AG, Feldkirchen, Germany) was used for visualization and recording of data for post-operative analysis. Volume reconstruction in PLUS was used for quick quality assessment of the acquired tracked ultrasound data.

4. RESULTS

The Plus Remote user interface is shown in Figure 3, with all advanced parameters visible. The previously existing recording and offline volume reconstruction functions are available in the top part of the module. The scout scan and live volume reconstruction are implemented in the second part of the module. Before performing any commands, the user has to select the OpenIGTLink connector and can also select a set of saved parameters. The buttons are greyed out (Figure 3) while the OpenIGTLink connection is not set. Once the connection is set up, the buttons become enabled and commands can be performed (Figure 4). Blue icons show information about the status of the commands when the mouse is moved over them.

Figure 3. Plus Remote user interface.

Figure 4. User interface for scout scan and live reconstruction, with advanced parameters.

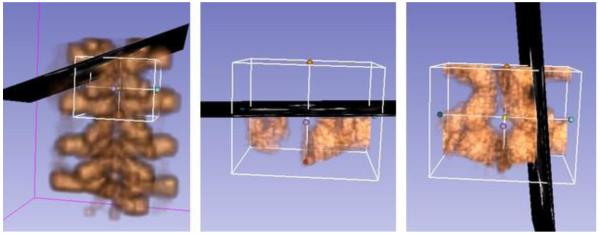

The user interface for the scout scan and live volume reconstruction workflow, shown in Figure 4, has been simplified by hiding advanced parameters. Advanced parameters can still be shown with one mouse click that expands their panel. Advanced parameters include the spacing, which sets the resolution, and display of the recorded data filename. Once low-resolution reconstruction is completed, the user can display the ROI and modify it by dragging control points using the mouse (Figure 5, left). Advanced parameters also include information about the extent and the possibility to take snapshots during live reconstruction. For both methods, the results are displayed in the 3D Slicer scene views.

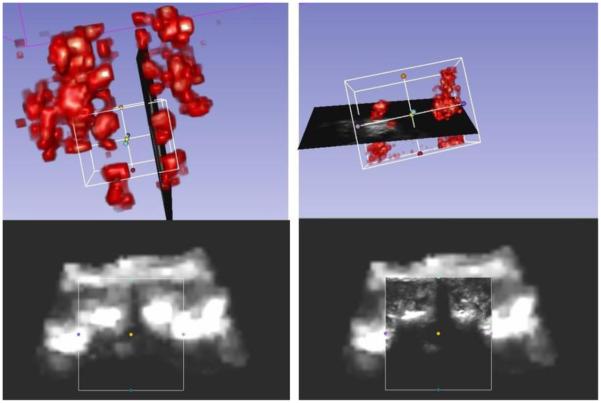

Figure 5. Completed scout scan (left), live update of reconstruction (middle) and final live reconstructed volume (right) of a spine phantom 3D-printed from the CT scan of an adult.

4.1 Spinal injection application

For testing, the procedure started by performing a first scan of the patient’s back, moving the ultrasound probe over the entire lumbar spine region. Use of the “scout scan” button offered recording of the data and low-resolution volume reconstruction. Recorded frames and low-resolution reconstructed volumes are automatically saved in separate files. The region of interest was then selected using four vertebral laminae as landmarks around the target area (intervertebral space). These anatomical landmarks are large enough to appear on the scout volume. Finally, high-resolution volume reconstruction was performed in the region of interest by scanning the patient a second time. As frames are directly inserted in the volume during live reconstruction [4], only the high-resolution reconstructed volume is saved during live reconstruction. We used the Volume Rendering module of 3D Slicer to visualize the results. High-resolution volume reconstruction was visualized in real time while scanning the patient.

Figure 5 shows the result of this workflow performed on a spine phantom. First, we obtain a low-resolution scout scan reconstruction of the larger target area, followed by a high-quality volume of a smaller region of interest around the target. The left image is the scout scan with 3 mm spacing and the region of interest set by landmarks. The middle image is the visualization of the live reconstruction volume that the user can see as the volume is filling up. The right image is the reconstructed volume, computed in the selected region of interest with a spacing of 0.5 mm. The volume reconstruction parameters can be modified in the PLUS configuration file. We used reverse tri-linear interpolation for volume reconstruction, with the maximum option for calculation as multiple slices were intersecting [6]. Compounding is used, therefore voxels are averaged when image frames overlap each other. Scout scan volume reconstruction was performed in less than 5 seconds. During live reconstruction we obtained an updated reconstructed volume every 3 seconds. This helped the operator visually confirm that enough images were recorded for a good quality volume reconstruction. Figure 6 shows the same experiment on a human subject. The scout scan provides a quick scout reconstruction of the spinal target area, which allows the clinician to identify vertebra levels and navigate the probe to the region of interest. By default, live reconstruction is performed on the whole region covered by the scout scan, but typically the clinician reduces the region of interest to one or two vertebrae to allow faster and higher-resolution reconstruction. During live volume reconstruction any number of frames can be acquired until the volume is filled up completely and the quality is visually acceptable. The acquired ultrasound images are directly added to the volume during the reconstruction (Fig 6, top row). They are not saved automatically, but the user can record them separately, if needed, by using the recording section.
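The passage above mentions two ways of combining contributions that land on the same voxel: averaging and taking the maximum. The following sketch contrasts the two as incremental updates, which suits live reconstruction because no past frames need to be kept. It is an illustration only; the function name `insert_pixel` and the mode strings are hypothetical and do not correspond to PLUS configuration values.

```python
def insert_pixel(volume, counts, idx, value, mode="mean"):
    """Combine a new pixel value with whatever is already stored at voxel idx."""
    if idx not in volume:
        volume[idx] = float(value)
        counts[idx] = 1
    elif mode == "mean":
        # Running mean: updates the average without storing earlier values
        counts[idx] += 1
        volume[idx] += (value - volume[idx]) / counts[idx]
    elif mode == "maximum":
        volume[idx] = max(volume[idx], float(value))
    else:
        raise ValueError(f"unknown mode: {mode}")

# Three hypothetical overlapping frames contribute 10, 30 and 20 to the same voxel.
mean_volume, mean_counts = {}, {}
max_volume, max_counts = {}, {}
for value in (10, 30, 20):
    insert_pixel(mean_volume, mean_counts, (0, 0, 0), value, mode="mean")
    insert_pixel(max_volume, max_counts, (0, 0, 0), value, mode="maximum")

print(mean_volume[(0, 0, 0)], max_volume[(0, 0, 0)])
```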

Figure 6. Scout scan (left) and live reconstruction (right) of an adult spine using Sonix Touch ultrasound and Ascension DriveBay tracker.

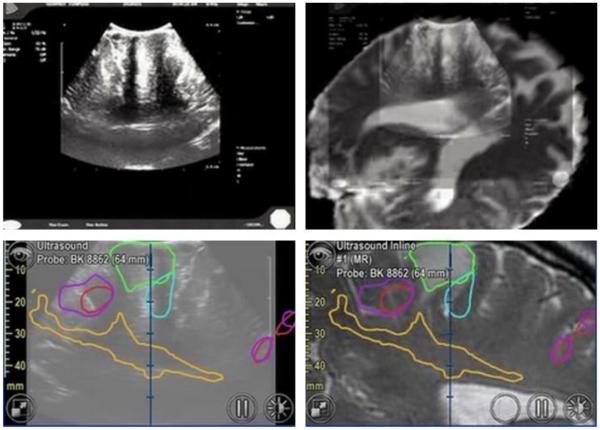

4.2 Brain tumor surgery application

The module is used clinically at the National Center for Image Guided Therapy (NCIGT) at Brigham and Women’s Hospital, for quick qualitative assessment of the tracked ultrasound data. These data are acquired during resection of brain tumors in the Advanced Multimodality Image Guided Operating (AMIGO) suite. The AMIGO suite is mainly used for patients with low grade gliomas, where the tumor is difficult to distinguish from the surrounding brain. As a consequence, it is very difficult to visualize the borders of the tumor, and inadequate resection can lead to neurologic damage [7]. Intraoperative image guidance is therefore valuable in these cases. Preoperative and intraoperative images can be obtained in the AMIGO suite to visualize the tumor and important structures in the brain. The commercial BrainLab navigation system is used for primary visualization and navigation. PLUS receives live tracking data from BrainLab through OpenIGTLink and acquires ultrasound images using a framegrabber.

Before exposure of the cortex, ultrasound scanning is performed to quickly get the initial orientation and position of blood vessels. Successful brain surgery depends on precise targeting of the tumor, so ultrasound guidance can be useful during the procedure. Use of ultrasound during surgery allows determining intraoperative shifts and deformations of the brain, which result in different locations of anatomical landmarks compared to the preoperative MRI or CT images acquired for surgery planning. Intracranial anatomy shifts are due to craniotomy, drainage, or resection of a lesion. This process is called brain shift. Intraoperative ultrasound can track brain shift, guide surgical tools, and assist in tumor resection. For this purpose, the user can fuse preoperative images and intraoperative ultrasound images (Figure 7). Continuous scanning can only be performed if the scanned region is not the surgical site, and guidance requires sufficient spatial resolution. The Plus Remote workflow can be useful to quickly check whether the acquisition is good enough. After running the ultrasound for their own needs, the clinician usually performs two orthogonal sweeps for offline and volume reconstruction purposes. In this case, users from NCIGT appreciate the Plus Remote module for the quick feedback it provides about the quality of the scan. Use of the volume reconstruction results during the procedure depends on the surgical workflow and the needs of the operator. An intraoperative transcranial ultrasound monitor is also an option for non-invasive guidance, as it avoids the need for craniotomy [8].

Figure 7. Ultrasound images displayed in 3D Slicer obtained using Plus Remote (top row) and segmented images using BrainLab (bottom row) during brain tumor surgery. Provided by Alexandra Golby (National Center for Image Guided Therapy, Brigham and Women’s Hospital, USA).

5. DISCUSSION

Scout scanning offers a convenient way for selection and quick exploration of a volume of interest, followed by live volume reconstruction providing good image quality. The workflow and its open-source software implementation may be useful adjuncts in ultrasound-guided interventions such as spinal injection and brain tumor resection interventions.

Offline volume reconstruction is usually not used in clinical procedures, because time is critical during surgery and ultrasound volume reconstruction is not indispensable for the success of the procedure. The workflow implemented in Plus Remote offers the possibility to perform ultrasound volume reconstruction in real time during the surgery, to guide the surgeon and improve the visualization of problematic targets. This may contribute to a wider usage of 3D reconstructed US in the clinical practice.

Even though not all volume reconstruction parameters are accessible through the Plus Remote interface, the user can access many of them, in particular the output spacing. The missing parameters control the volume reconstruction algorithm (interpolation mode, compounding mode, etc.) and are unlikely to be changed during surgery. With all advanced parameters hidden, the user interface is clear and easy to use, which saves time during the procedure.

To conclude, the workflow we implemented can be useful to perform quick volume reconstruction of a selected region of interest, offering a good visualization of the target in real time.

6. ACKNOWLEDGEMENTS

The authors are grateful to Alexandra Golby, Isaiah Norton, and Alireza Mehrtash (National Center for Image Guided Therapy, Brigham and Women’s Hospital, USA) for providing data and feedback on using Plus Remote during brain surgery cases.

Footnotes

1. 3D Slicer: www.slicer.org/

2. SlicerIGT: www.slicerigt.org/

REFERENCES

- [1] Ungi T, Abolmaesumi P, Jalal R, Welch M, Ayukawa I, Nagpal S, Lasso A, Jaeger M, Borschneck DP, Fichtinger G, Mousavi P. Spinal Needle Navigation by Tracked Ultrasound Snapshots. IEEE Transactions on Biomedical Engineering. 2012;59(10). doi: 10.1109/TBME.2012.2209881.

- [2] Boisvert J, Gobbi D, Vikal S, Rohling R, Fichtinger G, Abolmaesumi P. An Open-Source Solution for Interactive Acquisition, Processing and Transfer of Interventional Ultrasound Images. The MIDAS Journal – Systems and Architectures for Computer Assisted Interventions (MICCAI 2008 Workshop). 2008. pp. 1–8.

- [3] Treece GM, Gee AH, Prager RW, Cash CJC, Berman LH. High-Definition Freehand 3-D Ultrasound. Ultrasound in Med & Bio. 2003;29(4):529–546. doi: 10.1016/s0301-5629(02)00735-4.

- [4] Lasso A, Heffter T, Rankin A, Pinter C, Fichtinger G. PLUS: open-source toolkit for ultrasound-guided intervention systems. IEEE Transactions on Biomedical Engineering. 2014;61(6):1720–1728. doi: 10.1109/TBME.2014.2322864.

- [5] Tokuda J, Fischer GS, Papademetris X, Yaniv Z, Ibanez L, Cheng P, Liu H, Blevins J, Arata J, Golby AJ, Kapur T, Pieper S, Burdette EC, Fichtinger G, Tempany CM, Hata N. OpenIGTLink: an open network protocol for image-guided therapy. The International Journal of Medical Robotics and Computer Assisted Surgery. 2009;5(4):423–434. doi: 10.1002/rcs.274.

- [6] Gobbi D, Peters T. Interactive Intra-operative 3D Ultrasound Reconstruction and Visualization. 5th International Conference on Medical Image Computing and Computer-Assisted Intervention-Part II (MICCAI 2002). 2002. pp. 156–163.

- [7] Mislow JMK, Golby AJ. Origins of Intraoperative MRI. Neurosurg Clin N Am. 2009;20(2):137–146. doi: 10.1016/j.nec.2009.04.002.

- [8] White PJ, Whalen S, Tang SC, Clement GT, Jolesz F, Golby AJ. An Intraoperative Brain-shift Monitor Using Shear-mode Transcranial Ultrasound: Preliminary Results. J Ultrasound Med. 2009;28(2):191–203. doi: 10.7863/jum.2009.28.2.191.