Abstract

The contrast sensitivity function (CSF) predicts functional vision better than acuity, but long testing times prevent its psychophysical assessment in clinical and practical applications. This study presents the quick CSF (qCSF) method, a Bayesian adaptive procedure that applies a strategy developed to estimate multiple parameters of the psychometric function (A. B. Cobo-Lewis, 1996; L. L. Kontsevich & C. W. Tyler, 1999). Before each trial, a one-step-ahead search finds the grating stimulus (defined by frequency and contrast) that maximizes the expected information gain (J. V. Kujala & T. J. Lukka, 2006; L. A. Lesmes et al., 2006) about four CSF parameters. Because the method estimates CSF parameters directly, data collected at one spatial frequency improve sensitivity estimates across all frequencies. A psychophysical study validated that CSFs obtained with 100 qCSF trials (~10 min) exhibited good precision across spatial frequencies (SD < 2–3 dB) and excellent agreement with CSFs obtained independently (mean RMSE = 0.86 dB). To estimate the broad sensitivity metric provided by the area under the log CSF (AULCSF), only 25 trials were needed to achieve a coefficient of variation of 15–20%. The current study demonstrates the method’s value for basic and clinical investigations. Further studies, applying the qCSF to measure wider ranges of normal and abnormal vision, will determine how its efficiency translates to clinical assessment.

Keywords: adaptive, psychophysics, spatial frequency, forced-choice, AULCSF

Introduction

The contrast sensitivity function

The contrast sensitivity function, which describes how grating sensitivity (1/threshold) varies with spatial frequency, is fundamental to vision science. Its prominence in psychophysical and physiological studies of vision is based on several factors: (1) the critical input to most visual mechanisms is luminance contrast, not luminance itself (De Valois & De Valois, 1988; Graham, 1989; Regan, 1991a; Shapley, Kaplan, & Purpura, 1993); (2) CSFs measured in many species, from single neurons to full observers, exhibit a characteristic low- or band-pass shape (Ghim & Hodos, 2006; Keller, Strasburger, Cerutti, & Sabel, 2000; Kiorpes, Kiper, O’Keefe, Cavanaugh, & Movshon, 1998; Movshon & Kiorpes, 1988; Movshon, Thompson, & Tolhurst, 1978; Uhlrich, Essock, & Lehmkuhle, 1981); (3) linear systems analysis relates the CSF to the receptive field properties of neurons (Campbell & Robson, 1968; Enroth-Cugell & Robson, 1966); and (4) the CSF stands as the front-end filter for standard observer models in complex visual tasks (Chung, Legge, & Tjan, 2002; Watson & Ahumada, 2005).

As a clinical measure, contrast sensitivity (CS) is important because it predicts functional vision better than other visual diagnostics (Comerford, 1983; Faye, 2005; Ginsburg, 2003; Jindra & Zemon, 1989). Contrast sensitivity deficits accompany many visual neuropathologies, including amblyopia (Bradley & Freeman, 1981; Hess & Howell, 1977; Kiorpes, Tang, & Movshon, 1999), glaucoma (Hot, Dul, & Swanson, 2008; Ross, Bron, & Clarke, 1984; Stamper, 1984), optic neuritis (Trobe, Beck, Moke, & Cleary, 1996; Zimmern, Campbell, & Wilkinson, 1979), diabetic retinopathy (Della Sala, Bertoni, Somazzi, Stubbe, & Wilkins, 1985; Sokol et al., 1985), Parkinson’s disease (Bodis-Wollner et al., 1987; Bulens, 1986; Mestre, 1990), and multiple sclerosis (Plant & Hess, 1985; Regan, 1991b; Regan, Bartol, Murray, & Beverley, 1982; Regan, Raymond, Ginsburg, & Murray, 1981; Travis & Thompson, 1989); such deficits are evident even when acuity or perimetry tests appear normal (Jindra & Zemon, 1989; Woods & Wood, 1995). Contrast sensitivity is also an important outcome measure for refractive and cataract surgery (Applegate et al., 2000; Applegate, Howland, Sharp, Cottingham, & Yee, 1998; Bellucci et al., 2005; Ginsburg, 1987, 2006; McLeod, 2001), and potential rehabilitation programs for macular degeneration (Loshin & White, 1984), myopia (Tan & Fong, 2008) and amblyopia (Huang, Tao, Zhou, & Lu, 2007; Levi & Li, 2009; Li, Polat, Makous, & Bavelier, 2009; Li, Young, Hoenig, & Levi, 2005; Polat, Ma-Naim, Belkin, & Sagi, 2004; Zhou et al., 2006). Taken together, these studies convey the great value of CSF testing for detecting visual pathology and tracking its progression or remediation.

Measuring the contrast sensitivity function

For general assessment of spatial and temporal contrast sensitivities, many studies have applied adaptive procedures (Cornsweet, 1962; Levitt, 1971; von Bekesy, 1947; Watson & Pelli, 1983; Wetherill & Levitt, 1965; for reviews, see Treutwein, 1995; Leek, 2001) to measure grating acuity or the critical fusion frequency (Birch, Stager, & Wright, 1986; Harmening, Nikolay, Orlowski, & Wagner, 2009; Sokol, Moskowitz, McCormack, & Augliere, 1988; Tyler, 1985, 1991; Vassilev, Ivanov, Zlatkova, & Anderson, 2005; Vianya, Douthwaite, & Elliott, 2002; Zlatkova, Vassilev, & Anderson, 2008). These single points on the high cutoff of spatial or temporal CSFs have traditionally been measured by fixing a high grating contrast (50 or 100%), while adaptively adjusting the stimulus on the frequency dimension (von Bekesy, 1947). Thorn, Corwin, and Comerford (1986) extended this strategy to measure the entire high-frequency limb of the temporal CSF by adaptively adjusting grating frequency at four fixed grating contrast levels (3.2, 10, 32, and 100%). The current study will focus on the larger task of measuring the full CSF, which involves estimating contrast sensitivities (thresholds) over a wide range of spatial frequencies.

Measuring the full CSF in the laboratory is complicated by a basic tradeoff in experimental design: when sampling the two-dimensional space of possible grating stimuli (defined by frequency and contrast), a sufficient range is needed to capture the CSF’s global shape and a sufficient resolution is needed to capture its dynamic regions (e.g., high frequency falloff). Increasing the sampling range improves test flexibility and increasing resolution improves test precision, but these adjustments add stimulus conditions and increase testing time. To reduce testing times, CSF measurements often apply an adaptive procedure independently across a pre-specified set of spatial frequencies. Under typical designs, adding a frequency condition requires a minimum number of experimental trials (often 50–100 trials). Therefore, sampling the CSF at 5–10 spatial frequencies typically requires 500–1000 trials (30–60 min). This amount of data collection, reasonable for experiments measuring a single CSF, becomes prohibitive for measuring multiple CSFs (e.g., for different eyes), even in laboratory settings.

The difficulties of measuring the full CSF are exacerbated in the clinical setting. Due to severe testing time constraints, clinical CS tests are far more condensed (and less precise) than laboratory procedures. The most-preferred test, the Pelli–Robson chart (Pelli, Robson, & Wilkins, 1988), does not use gratings; instead, it varies the contrast of constant-size letters. Although this test detects the general CS deficits exhibited by cataract, macular degeneration, and diabetic retinopathy (Ismail & Whitaker, 1998), the broadband letter stimuli do not provide information about frequency-specific defects (Ginsburg, 2003). To isolate spatial frequency channels and identify frequency-specific deficits, alternative CS tests (e.g., Arden cards, Vistech, FACT charts) vary both the frequency and contrast of narrowband gratings (Arden & Jacobson, 1978; Ginsburg, 1984, 1996). For portability and ease of application, these tests use paper media and gratings with pre-determined frequencies and contrast levels (see Owsley, 2003 for a review). These restrictions on stimulus sampling naturally limit test flexibility and reliability (Bradley, Hook, & Haeseker, 1991; Buhren, Terzi, Bach, Wesemann, & Kohnen, 2006; Hohberger, Laemmer, Adler, Juenemann, & Horn, 2007; Pesudovs, Hazel, Doran, & Elliott, 2004; van Gaalen, Jansonius, Koopmans, Terwee, & Kooijman, 2009); as a result, there is mixed opinion concerning the suitability of these tests for clinical research and practice (Owsley, 2003).

There are several factors suggesting that clinical CS testing requires more flexibility and precision to meet emerging needs: (1) Although some disorders (e.g., anisometropic amblyopia) exhibit a stereotypic frequency-specific deficit (Hess & Howell, 1977), others can exhibit a spectrum of low, high, or intermediate deficits across subjects (Regan, 1991b). Such variability in CSF deficits, exhibited between and within visual pathologies, suggests that measuring the full CSF over a wide frequency range is clinically important. (2) Contrast sensitivity deficits exhibited at specific temporal frequencies (Plant, 1991; Plant & Hess, 1985; Tyler, 1981) suggest the importance of measuring more than the static CSF measured by cards and charts. (3) For functional validity, it is important that contrast sensitivity be tested under different conditions: for example, under day and night illumination, with and without glare (Abrahamsson & Sjöstrand, 1986). (4) For prospective vision therapeutics that will eventually treat focal retinal damage with stem cells (Bull, Johnson, & Martin, 2008; Kelley et al., 2008) or neural prosthetics (Colby, Chang, Stulting, & Lane, 2007; Dowling, 2008; Weiland, Liu, & Humayun, 2005), it will be critical to measure the progression or remediation of contrast sensitivity at isolated retinal loci. Current cards and charts are not flexible enough to adequately capture the range of normal and abnormal CSFs observed across different illumination and glare conditions, temporal frequencies, and retinal loci.

An “ideal” CSF test should be flexible enough to rapidly characterize normal and abnormal vision across different testing conditions, and precise enough to follow the progression or remediation of visual pathology. To meet these needs, a first important step is using a computer-based display, which provides the stimulus flexibility and precision to distinguish between normal and abnormal CSFs, and even between abnormal CSF subtypes. Because increasing the range and resolution for sampling the frequency and contrast of grating stimuli faces severe testing time constraints, a computerized test can exploit adaptive testing strategies that greatly improve testing efficiency without experimenter intervention. Previous CSF testing strategies that adjust grating contrast across pre-determined spatial frequency conditions are vulnerable to the inefficiency exhibited by the method of constant stimuli (Watson & Fitzhugh, 1990); namely, pre-determined sampling schemes (whether for contrast levels or spatial frequencies) that are effective for one psychophysical experiment or observer may be inappropriate for others (García-Pérez & Alcalá-Quintana, 2005; Wichmann & Hill, 2001). Therefore, one approach to improve the efficiency of CSF testing is to extend the adaptive stimulus search to both grating stimulus dimensions (frequency and contrast). A forced-choice task would be useful for avoiding the response criterion issues inherent in the simple detection of gratings (Higgins, Jaffe, Coletta, Caruso, & de Monasterio, 1984; Woods, 1996). This issue is especially important for testing the progress of visual rehabilitation: it is critical to determine that patients are improving their contrast sensitivity, and not only learning different response criteria.

The current study

To improve the measurement of the contrast sensitivity function in basic and clinical vision studies, the current study develops the quick CSF (qCSF) method, a computerized monitor-based test that provides the precision and flexibility of laboratory psychophysics, with a testing time comparable to clinical cards and charts. Relative to previous CS tests (Arden & Jacobson, 1978; Ginsburg, 2006; Owsley, 2003), the qCSF uses a much larger stimulus space that exhibits both a broad range and fine resolution for sampling grating frequency and contrast. Whereas classical adaptive methods converge to a single threshold estimate in one stimulus condition (e.g., grating spatial frequency), the qCSF concurrently estimates thresholds across the full spatial-frequency range. Before each trial, a one-step-ahead search evaluates the next trial’s possible outcomes and finds the stimulus maximizing the expected information gain (Cobo-Lewis, 1996; Kontsevich & Tyler, 1999; Kujala & Lukka, 2006; Lesmes et al., 2006) about the parameters of the particular CSF under study. In this report, demonstration and simulation of the qCSF method are followed by psychophysical validation.

The quick CSF method

The qCSF method greatly increases the efficiency of CSF testing by (1) imposing a functional form on the CSF; (2) defining a probability density function over a space of CSFs of that form; (3) updating this probability density (and parameter estimates) via Bayes Rule, given the results of previous trials; and (4) looking ahead to the possible outcomes of future trials, to find stimuli that further refine parameter estimates. Taken together, these features provide a flexible test that can efficiently sample grating stimuli from a broad stimulus space. Combining information acquired during the experiment with a priori knowledge about the CSF’s general functional form greatly accelerates its estimation. Because the CSF parameters are estimated directly, trial outcomes from a single spatial frequency condition can better inform sensitivity estimates across all frequencies.

Characterizing the contrast sensitivity function

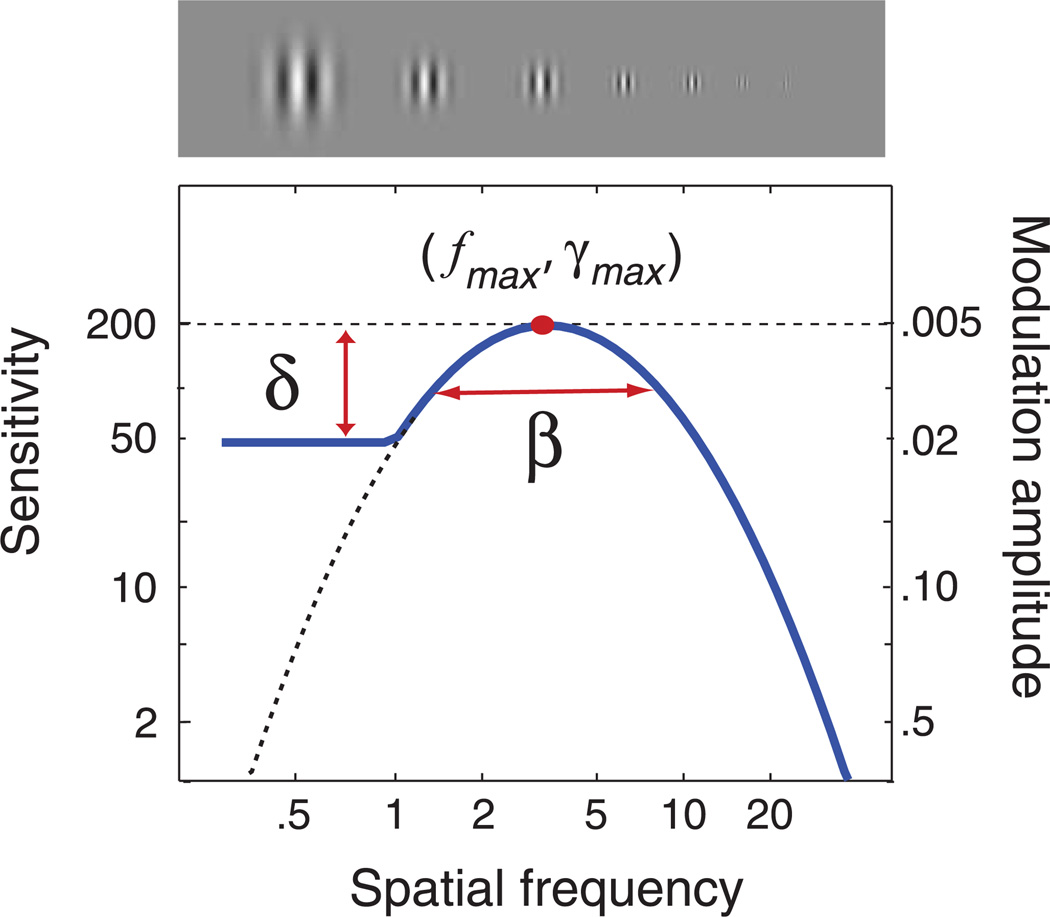

The contrast sensitivity function, S(f), represents sensitivity (1/threshold) as a function of grating frequency. Based on a review of nine parametric functions, Watson and Ahumada (2005) concluded that all provide a roughly equivalent description of the standard CSF. The qCSF method uses one form, the truncated log-parabola (see Figure 1), to describe the CSF with four parameters: (1) the peak gain (sensitivity), γmax; (2) the peak spatial frequency, fmax; (3) the bandwidth, β, which describes the function’s full-width at half-maximum (in octaves); and (4) δ, the truncation level at low spatial frequencies. Without truncation, the log-parabola, S′(f), defines (decimal log) sensitivity as

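$$S'(f) = \log_{10}(\gamma_{\max}) - \kappa \left( \frac{\log_{10}(f) - \log_{10}(f_{\max})}{\beta'/2} \right)^{2} \tag{1}$$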

where κ = log10(2) and β′ = log10(2β). Figure 1 represents a log-parabola (dotted line), which is truncated at frequencies below the peak with the parameter δ:

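$$S(f) = \begin{cases} \log_{10}(\gamma_{\max}) - \delta, & \text{if } f < f_{\max} \text{ and } S'(f) < \log_{10}(\gamma_{\max}) - \delta \\ S'(f), & \text{otherwise} \end{cases} \tag{2}$$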

This function exhibits several advantages over other parametric forms of the spatial CSF. Rohaly and Owsley (1993) noted that two classical forms defined by three parameters, the double-exponential and the (untruncated) log-parabola, were adequate for fitting aggregate CSF data but systematically misfit CSF data from individuals. The asymmetric double-exponential misfits the symmetry typically observed near the CSF’s peak, and the symmetric log-parabola misfits the plateau typically observed on the peak’s low-frequency side (Rohaly & Owsley, 1993). With an additional parameter to describe the low-frequency plateau, the truncated log-parabola can accommodate both the symmetry near the CSF’s peak and the asymmetry introduced by the plateau. Other four-parameter descriptions, such as the difference of Gaussians, provide equivalent fits to empirical CSFs, but their fitted parameters are not immediately interpretable. The interpretable parameter set provided by the truncated log-parabola will be especially useful for a potential normative CSF data set, which in turn can provide Bayesian priors for qCSF testing. The current study adopts the truncated log-parabola as the functional form of the CSF and develops an adaptive testing procedure to estimate its four parameters.
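As an illustration only (this is not the MATLAB demonstration code mentioned later in the text), Equations 1 and 2 can be sketched in Python. The function and argument names are hypothetical, and β′ = log10(2β) is taken exactly as printed in the text; the sketch assumes β > 0.5 so that β′ is positive.

```python
import math

def log_parabola(f, gain_max, f_max, beta):
    """Untruncated log-parabola S'(f): log10 sensitivity at frequency f (cpd)."""
    kappa = math.log10(2.0)
    beta_prime = math.log10(2.0 * beta)  # as printed in the text; requires beta > 0.5
    x = (math.log10(f) - math.log10(f_max)) / (beta_prime / 2.0)
    return math.log10(gain_max) - kappa * x * x

def truncated_log_parabola(f, gain_max, f_max, beta, delta):
    """Truncated log-parabola S(f): plateau at delta log units below the peak."""
    s_prime = log_parabola(f, gain_max, f_max, beta)
    plateau = math.log10(gain_max) - delta
    # Truncation applies only on the low-frequency side of the peak.
    if f < f_max and s_prime < plateau:
        return plateau
    return s_prime

# Example with the prototypical parameters used for Figure 1.
peak = truncated_log_parabola(3.5, 200.0, 3.5, 3.0, 0.6)       # log10(200) at the peak
low_freq = truncated_log_parabola(0.2, 200.0, 3.5, 3.0, 0.6)   # on the plateau
```

With these values, the sensitivity at 0.2 cpd sits on the plateau, 0.6 log units below the peak, whereas frequencies above the peak follow the untruncated parabola.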

Figure 1.

CSF parameterization. The spatial contrast sensitivity function, which describes reciprocal contrast threshold as a function of spatial frequency, can be described by four parameters: (1) the peak gain, γmax; (2) the peak frequency, fmax; (3) the bandwidth (full-width at half-maximum), β; and (4) the truncation (plateau) on the low-frequency side, δ. The qCSF method estimates the spatial CSF by using Bayesian adaptive inference to directly estimate these four parameters.

Bayesian adaptive parameter estimation

The qCSF method estimates the CSF parameters using Bayesian adaptive inference, which was first applied in the landmark development of the QUEST method (Watson & Pelli, 1983), and is now widely used in psychophysics (Alcalá-Quintana & García-Pérez, 2007; García-Pérez & Alcalá-Quintana, 2007; King-Smith, Grigsby, Vingrys, Benes, & Supowit, 1994; King-Smith & Rose, 1997; Remus & Collins, 2007, 2008; Snoeren & Puts, 1997). Whereas QUEST was designed solely to measure the psychometric threshold, subsequently developed methods estimate the threshold and steepness of the psychometric function (Cobo-Lewis, 1996; King-Smith & Rose, 1997; Kontsevich & Tyler, 1999; Remus & Collins, 2007, 2008; Snoeren & Puts, 1997; Tanner, 2008), or even more complex behavioral functions (Kujala & Lukka, 2006; Kujala, Richardson, & Lyytinen, in press; Lesmes, Jeon, Lu, & Dosher, 2006; Vul & MacLeod, 2007). We have previously applied the Bayesian adaptive framework to develop methods for estimating threshold versus external noise contrast functions (Lesmes, Jeon et al., 2006) and sensitivity thresholds and response bias(es) in detection tasks (Lesmes, Lu, Tran, Dosher, & Albright, 2006). In addition to the conceptual description of the qCSF method that follows in this section, a demonstration movie (Movie 1) is included in the next section, detailed pre- and post-trial analyses are described in Appendix A, and MATLAB code (MathWorks, Natick, MA) for the demonstration is available for download (http://lobes.usc.edu/qMethods).

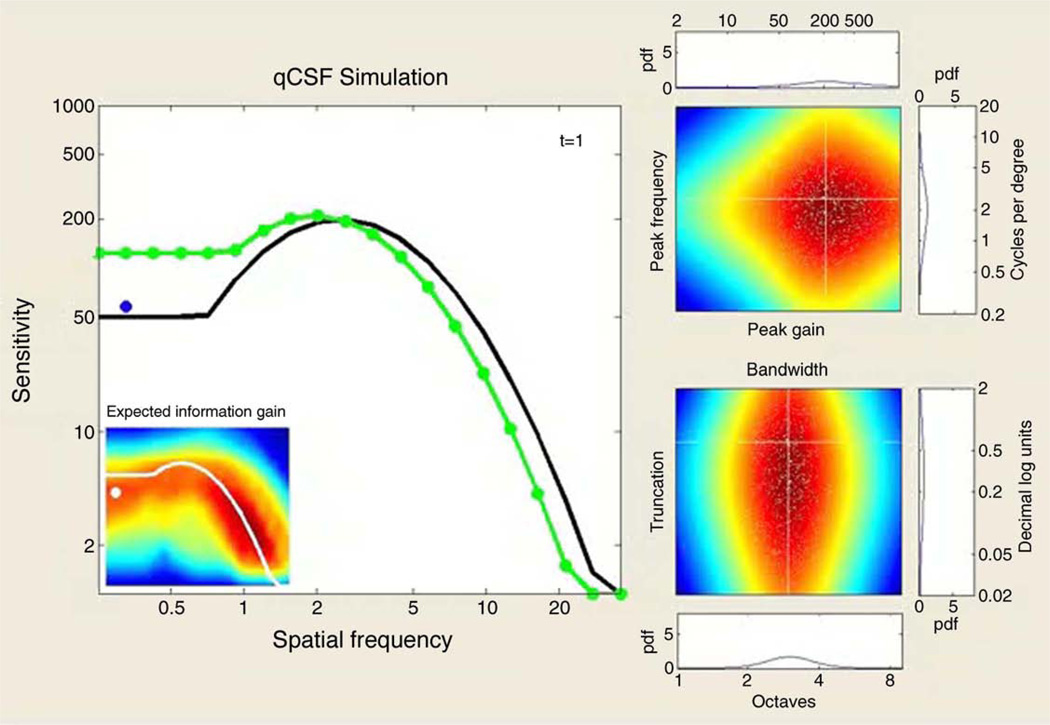

Movie 1.

The movie demonstrates a simulated 300-trial sequence of the qCSF application in a 2AFC task. In addition to the true CSF (black line), the large leftmost panel presents each trial’s outcome and the subsequently updated qCSF estimate (green line). For each simulated trial, the selected grating stimulus is presented as a dot, whose color represents a correct (green) or incorrect (red) response. The inset presents the results of the qCSF’s stimulus selection algorithm (the pre-trial calculation of expected information gain as a function of grating frequency and contrast), with the updating qCSF estimate (white) overlaid as a reference. The right-hand panels demonstrate the trial-by-trial Bayesian update of the probability density defined over four CSF parameters; in addition to a pair of 2-D marginal densities—defined by peak gain and peak frequency (top), and bandwidth and low-frequency truncation (bottom)—the 1-D marginals for each parameter are presented. The white cross-lines represent the targets of parameter estimation: the observer’s true CSF parameters. The small white dots represent Monte Carlo samples of the probability density, which accelerate the pre-trial calculations (see Appendix A). At the demo’s completion, the main plot’s inset presents the bias of AULCSF estimates (in percent) as a function of trial number for the full-simulated run. Several features of the demo providing evidence for the qCSF’s successful convergence are: (1) the overlap of the CSF estimate with the true CSF; (2) how rapidly the stimulus selection algorithm excludes large regions of the stimulus space and focuses on the region of the stimulus space corresponding to the true CSF; (3) the aggregation of probability mass in the parameter space regions corresponding to the true CSF parameters; and (4) the convergence of the AULCSF error estimate toward 0%.

Stimulus and parameter spaces

The qCSF’s application of Bayesian adaptive inference requires two basic components: (1) a probability density function, p(θ), defined over a four-dimensional space of CSF parameters, and (2) a two-dimensional space of possible grating stimuli. The method’s basic goal is to accelerate CSF estimation by efficiently searching the stimulus space for grating stimuli that improve the information gained over the CSF parameter space on each trial. For the current simulations, the ranges of possible CSF parameters were 2 to 2000 for peak gain, γmax; 0.2 to 20 cpd for peak frequency, fmax; 1 to 9 octaves for bandwidth, β; and 0.02 to 2 decimal log units for truncation level, δ. The possible ranges for stimuli were 0.1% to 100% for grating contrast and 0.2 to 36 cpd for grating frequency. The parameter and stimulus spaces are defined on log-linear grids (Kontsevich & Tyler, 1999).
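A log-linear grid is one whose points are evenly spaced in log units. The following Python sketch builds such grids for the stimulus ranges quoted above; the helper name `log_grid` is hypothetical, the 12 frequencies follow the count given for the simulations, and the number of contrast levels (46) is an assumption borrowed from the psychophysical experiment rather than a stated simulation setting.

```python
import math

def log_grid(lo, hi, n):
    """Return n values evenly spaced in log10 units between lo and hi (inclusive)."""
    step = (math.log10(hi) - math.log10(lo)) / (n - 1)
    return [10.0 ** (math.log10(lo) + i * step) for i in range(n)]

# Stimulus space: grating frequency (cpd) and contrast (as a proportion).
frequencies = log_grid(0.2, 36.0, 12)    # 0.2 to 36 cpd
contrasts = log_grid(0.001, 1.0, 46)     # 0.1% to 100%; the count is an assumption

# The full stimulus space is the Cartesian product of the two grids.
stimuli = [(f, c) for f in frequencies for c in contrasts]
```

The same helper could define each of the four parameter axes, so that the parameter space is the product of four one-dimensional log-linear grids.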

Priors

Before any data is collected, an initial prior, pt=1(θ), represents foreknowledge of the observer’s CSF parameters. For each parameter, integrating this multivariate probability density over the other three parameters gives a 1-D marginal prior density. For the current simulations, the marginal priors were relatively flat and log-symmetric around the respective parameter modes (γmax = 100, fmax = 2.5 cpd, β = 2.5 octaves, and δ = 0.25 log units), and the joint prior was their normalized product (see Appendix A for more details). An advantage of applying Bayesian methods is the use of priors (Kuss, Jäkel, & Wichmann, 2005), which can usefully influence the testing strategy, based on other vision test results (Turpin, Jankovic, & McKendrick, 2007) or demographic data.

Bayesian adaptive inference

At the conclusion of each trial t, the evidence provided by the observer’s response, rt, is used to update the knowledge about CSF parameters, i.e., pt(θ) is updated to pt+1(θ), via Bayes Rule:

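$$p_{t+1}(\theta) = p_t(\theta \mid r_t) = \frac{p(r_t \mid \theta)\, p_t(\theta)}{\sum_{\theta} p(r_t \mid \theta)\, p_t(\theta)} \tag{3}$$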

The conditional probability, p(rt|θ), representing the probability of observing rt given the CSFs comprising the parameter space, is generated via a model psychometric function (see Appendix A). This psychometric function, defined as a bivariate function of grating frequency and contrast, is translatable on log contrast (i.e., exhibits invariance of its steepness parameter across spatial frequencies). Following the Bayesian inference step, the updated estimates of CSF parameters are calculated by the marginal posterior means. The posterior, pt+1(θ), serves as the prior for the next trial.
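The update in Equation 3 can be illustrated on a toy grid. The Python sketch below is a deliberate simplification: it tracks three candidate thresholds at a single spatial frequency instead of the four-dimensional CSF parameter space, and the Weibull form of the psychometric function, together with its slope, guessing rate, and lapse rate, is an assumption made here because the model psychometric function itself is specified in Appendix A. All names are hypothetical.

```python
import math

def p_correct(contrast, threshold, slope=2.0, guess=0.5, lapse=0.04):
    """Assumed 2AFC Weibull psychometric function (not taken from Appendix A)."""
    return guess + (1.0 - guess - lapse) * (1.0 - math.exp(-(contrast / threshold) ** slope))

def bayes_update(prior, likelihoods):
    """Posterior p_{t+1}(theta) from the prior p_t(theta) and p(r_t | theta)."""
    unnormalized = [p * l for p, l in zip(prior, likelihoods)]
    total = sum(unnormalized)  # probability of the observed response
    return [u / total for u in unnormalized]

# Toy parameter space: three candidate contrast thresholds, uniform prior.
thresholds = [0.005, 0.02, 0.08]
prior = [1.0 / 3.0] * 3

# One simulated trial: a grating at 2% contrast, answered correctly.
contrast, correct = 0.02, True
likelihoods = [p_correct(contrast, t) if correct else 1.0 - p_correct(contrast, t)
               for t in thresholds]
posterior = bayes_update(prior, likelihoods)

# Point estimate: posterior mean of the log threshold.
mean_log_threshold = sum(p * math.log10(t) for p, t in zip(posterior, thresholds))
```

A correct response shifts probability mass toward the lower candidate thresholds. In the qCSF itself, each θ is a set of four CSF parameters, and the threshold at the tested frequency is the reciprocal of that CSF's sensitivity.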

Stimulus selection

To improve the quality of the evidence obtained on each trial, the qCSF selects the grating frequency and contrast for the next trial using a one-step-ahead search and a criterion of minimum expected entropy (Cobo-Lewis, 1996; Kontsevich & Tyler, 1999; Kujala & Lukka, 2006; Lesmes et al., 2006), or equivalently, maximum expected information gain (Kujala & Lukka, 2006). The entropy of p(θ),

$$H(p(\theta)) = -\sum_{\theta} p(\theta) \log p(\theta) \tag{4}$$

is maximal when p(θ) is uniform over the parameter space and minimal when the observer’s CSF is perfectly certain: p(θ) = 1 for one set of CSF parameters and 0 otherwise. Because information is defined as a difference between entropies (Cover & Thomas, 1991; Kujala & Lukka, 2006), in this case between prior and posterior entropies, a strategy that minimizes the expected entropy of p(θ) is one that maximizes the information gained about CSF parameters on a trial-to-trial basis (Kujala & Lukka, 2006). By effectively simulating the next trial for each possible stimulus, and evaluating possible stimuli for their expected effects on the posterior, the method avoids large regions of the stimulus space that are not likely to be useful to the given experiment.
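The one-step-ahead search can be sketched in the same toy setting of candidate thresholds at a single frequency. For each candidate stimulus, the sketch computes the posterior that would follow a correct and an incorrect response, weights each posterior entropy by the predicted probability of that response, and selects the stimulus with the smallest expected entropy. The Weibull psychometric function and its parameters are assumptions, all names are hypothetical, and the actual method searches over frequency and contrast jointly with the accelerations described in Appendix A.

```python
import math

def p_correct(contrast, threshold, slope=2.0, guess=0.5, lapse=0.04):
    """Assumed 2AFC Weibull psychometric function (not taken from Appendix A)."""
    return guess + (1.0 - guess - lapse) * (1.0 - math.exp(-(contrast / threshold) ** slope))

def entropy(p):
    """Entropy of a discrete density (natural log); zero-probability cells contribute 0."""
    return -sum(q * math.log(q) for q in p if q > 0.0)

def expected_entropy(prior, thresholds, contrast):
    """Expected posterior entropy after one trial at the given contrast."""
    total = 0.0
    for correct in (True, False):
        like = [p_correct(contrast, t) if correct else 1.0 - p_correct(contrast, t)
                for t in thresholds]
        p_resp = sum(p * l for p, l in zip(prior, like))   # predicted response probability
        posterior = [p * l / p_resp for p, l in zip(prior, like)]
        total += p_resp * entropy(posterior)
    return total

def select_stimulus(prior, thresholds, contrasts):
    """Pick the contrast that minimizes the expected posterior entropy."""
    return min(contrasts, key=lambda c: expected_entropy(prior, thresholds, c))

thresholds = [0.005, 0.02, 0.08]
prior = [1.0 / 3.0] * 3
best = select_stimulus(prior, thresholds, [0.001, 0.02, 1.0])
```

In this example the search favors the 2% grating: at 0.1% contrast every candidate observer is near chance, and at 100% contrast every candidate is near ceiling, so neither response would discriminate among the candidates.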

Demonstration and simulation

Figure 1 presents a prototypical spatial CSF (Watson & Ahumada, 2005), defined by four parameters: peak gain γmax = 200, peak frequency = 3.5 cpd, bandwidth (FWHM) = 3 octaves, and low-frequency truncation at 0.6 decimal log units below peak. Movie 1 demonstrates the qCSF applied to estimate this model CSF in a 2AFC task. To demonstrate how the qCSF’s estimation of CSF parameters evolves over the course of an experiment, the demo presents the trial-to-trial updating of 1-D marginal densities for each parameter, in addition to two 2-D joint densities of (1) peak gain and peak frequency, and (2) bandwidth and truncation.

To complement this demonstration, and evaluate the qCSF’s expected accuracy and precision, the same demo was repeated for 1000 iterations. Figure 2 summarizes the results. Even as few as 25–50 trials (distributed over 12 possible spatial frequencies) provide a general assessment of the CSF’s shape, although estimates obtained with so few trials are not very precise: mean variability ≈4–6 dB (where 1 dB = 0.05 decimal log units, a change of about 12.2%). With 100–300 trials of data collection, CSF estimates are unbiased and reach precision levels (2–3 dB) typical of laboratory CSF measurements.
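The decibel convention used here (1 dB = 0.05 decimal log units) can be made concrete with a short Python sketch; the function names are hypothetical.

```python
import math

def db_to_log10_units(db):
    """Convert decibels to decimal log units (1 dB = 0.05 log10 units)."""
    return 0.05 * db

def db_to_percent(db):
    """Percent change in sensitivity corresponding to a difference in dB."""
    return (10.0 ** db_to_log10_units(db) - 1.0) * 100.0

def ratio_to_db(ratio):
    """Express a sensitivity (or threshold) ratio in dB."""
    return 20.0 * math.log10(ratio)

one_db = db_to_percent(1.0)     # about 12.2%
three_db = db_to_percent(3.0)   # about 41%
```

On this scale, a precision of 2–3 dB corresponds to sensitivity estimates that vary by roughly 26–41%.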

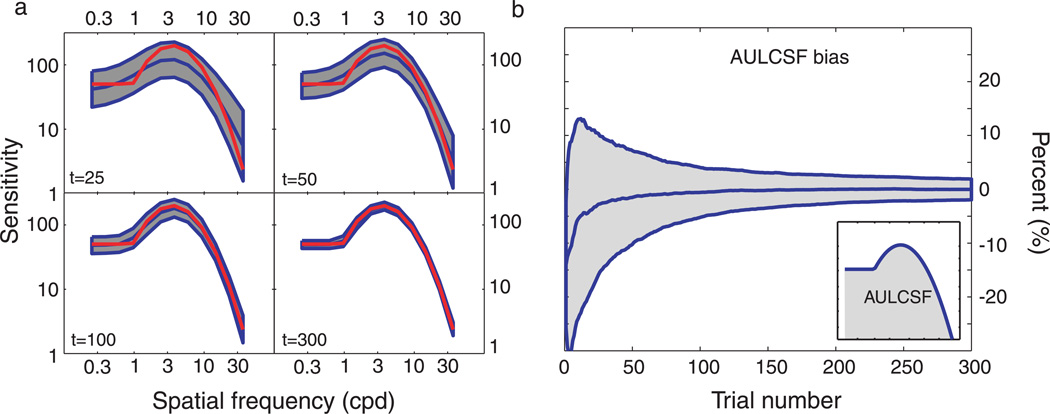

Figure 2.

Simulations. (a) CSF estimates obtained with 25, 50, 100, and 300 trials of the qCSF method. The general shape of the CSF is recovered with as few as 25 trials, but sensitivity estimates are imprecise (shaded regions reflect ±1 SD for individual frequencies). Method convergence with increasing trial numbers (50–300 trials) is supported by (1) the increasing concordance of mean qCSF estimates (red) with the true CSF (blue), and (2) the decreasing area of the error regions. (b) Expected bias of AULCSF estimates as a function of trial number. Evidence for the successful rapid estimation of the AULCSF is provided by (1) the convergence of the mean bias to zero and (2) the decreasing area of the error region (±1 standard deviation) as a function of increasing trial number.

In vision studies of special populations, specific CSF features (e.g., peak sensitivity, peak spatial frequency, or grating acuity) are often used as shorthand metrics of the full CSF (Lovegrove, Bowling, Badcock, & Blackwood, 1980; Peterzell, Werner, & Kaplan, 1995; Rogers, Bremer, & Leguire, 1987; Rohaly & Owsley, 1993). An alternative, broad contrast sensitivity metric is provided by the area under the log CSF (AULCSF; Applegate et al., 2000, 1998; Oshika, Klyce, Applegate, & Howland, 1999; Oshika, Okamoto, Samejima, Tokunaga, & Miyata, 2006; van Gaalen et al., 2009), which Campbell (1983) described as “our visual world.” Figure 2b presents the bias of AULCSF estimates (in percent), calculated as (true AULCSF − estimated AULCSF)/true AULCSF, as a function of trial number. These results demonstrate that the mean bias of AULCSF estimates decreases below 5% after 25 trials, and that AULCSF variability (evaluated via the coefficient of variation) decreases from 15% to 10% between 25 and 50 trials of data collection. With more trials, the mean and variability of the bias both decrease. Thus, although 25 qCSF trials provide only imprecise estimates of the full CSF, reasonably accurate and precise AULCSF estimates can be obtained with so few trials: bias <5% and coefficient of variation <15%.
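The AULCSF and its percent bias can be computed along the following lines. This Python sketch makes assumptions that the text does not specify: the area is obtained by trapezoidal integration of log10 sensitivity over log10 frequency, and log sensitivities below zero (sensitivity below 1) are clipped to zero. Function names are hypothetical.

```python
import math

def aulcsf(frequencies, log_sensitivities):
    """Area under the log CSF: trapezoidal rule over log10 frequency."""
    # Clipping at zero log sensitivity is an assumption of this sketch.
    points = [(math.log10(f), max(s, 0.0))
              for f, s in zip(frequencies, log_sensitivities)]
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += 0.5 * (y0 + y1) * (x1 - x0)
    return area

def aulcsf_bias_percent(true_area, estimated_area):
    """Bias in percent: (true AULCSF - estimated AULCSF) / true AULCSF."""
    return 100.0 * (true_area - estimated_area) / true_area

# Flat toy CSF: log10 sensitivity of 2 from 1 to 100 cpd gives an area of 4.
flat_area = aulcsf([1.0, 10.0, 100.0], [2.0, 2.0, 2.0])
bias = aulcsf_bias_percent(4.0, 3.8)   # an underestimate of 0.2 is a 5% bias
```

A positive bias under this definition means the estimated area falls short of the true area.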

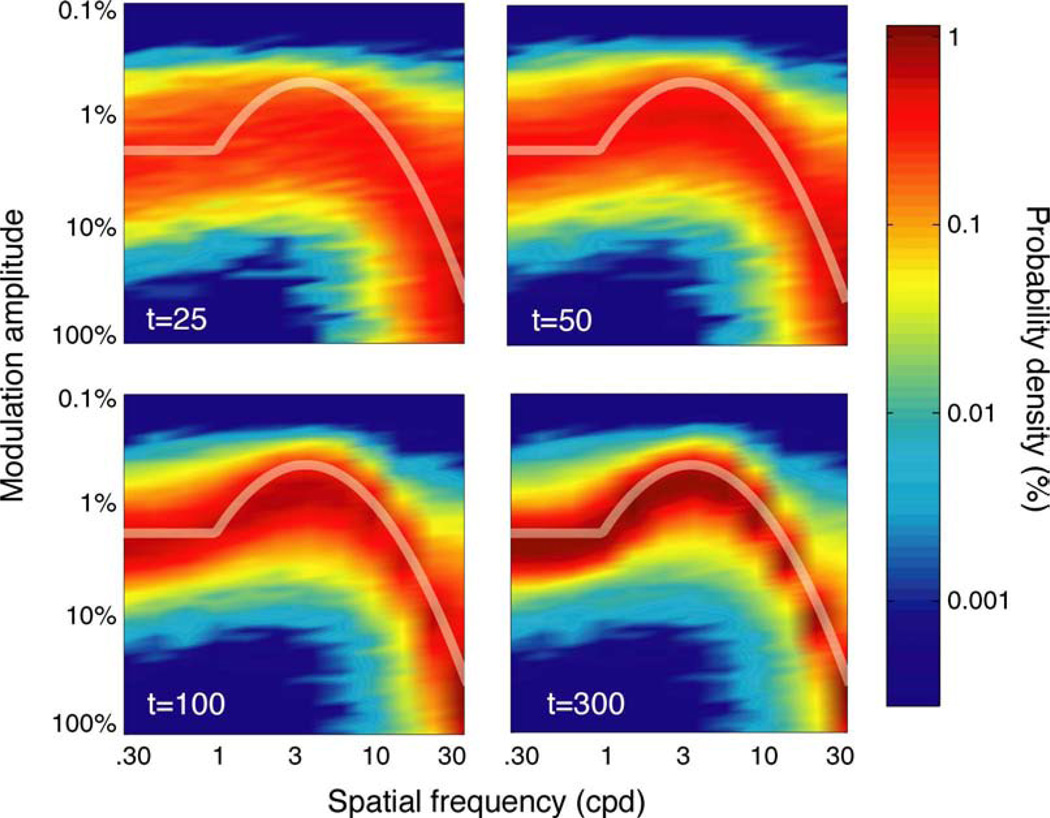

The qCSF’s pattern of stimulus sampling is summarized by the two-dimensional stimulus histograms presented in Figure 3. Each panel presents the probability (density) of stimulus presentation, as a function of grating frequency and contrast, for four endpoints of data collection: 25, 50, 100, and 300 trials. These results demonstrate how the qCSF effectively samples the large grating stimulus space and adjusts stimulus presentation to match the observer’s underlying contrast sensitivity function. Over the first 25 trials, stimulus presentation is relatively diffuse, especially over high spatial frequencies, where the procedure prospectively samples low contrasts. As the experiment progresses, and uncertainty about the observer’s sensitivity decreases, stimulus presentation focuses directly on the observer’s underlying contrast sensitivity function.

Figure 3.

Stimulus sampling. The history of the qCSF’s stimulus sampling pattern is characterized by two-dimensional probability density histograms, aggregated across simulations. Each histogram describes the probability of stimulus presentation, as a function of grating frequency and contrast, for four experimental cutoff points: t = 25, 50, 100, and 300 trials. Even with as few as 25–50 trials, testing is narrowed to a region of the grating stimulus space that correlates with observer sensitivity. For more extensive testing (100–300 trials), stimulus presentation focuses almost exclusively on the true CSF.

To summarize, simulation results support the qCSF as a promising method for rapidly estimating the contrast sensitivity function. Given a data collection rate of 10–15 trials/min, reasonably precise CSF estimates can be obtained in 10–20 min. This testing time is substantially less than the 30–60 min required of conventional laboratory CSF measurements. Moreover, far fewer trials are needed to estimate the AULCSF with the qCSF. Due to the qCSF’s high sampling resolution of spatial frequency, its AULCSF estimates will be more precise and flexible than previous measurements taken with charts (Hohberger et al., 2007).

Psychophysical validation

An orientation identification task was used for psychophysical validation of the qCSF method. We evaluated precision through test–retest comparisons and accuracy through independent CSF estimates obtained with the ψ method developed by Kontsevich and Tyler (1999).

Methods

Apparatus

The experiment was conducted on a Windows-compatible computer running PsychToolbox extensions (Brainard, 1997; Pelli, 1997). The stimuli were displayed on a Dell 17-inch color CRT monitor with an 85-Hz refresh rate. A special circuit changed the display to a monochromatic mode, with high grayscale resolution (>14 bits); luminance levels were linearized via a lookup table (Li, Lu, Xu, Jin, & Zhou, 2003). Stimuli were viewed binocularly with natural pupil at a viewing distance of approximately 175 cm in dim light.

Participants

Two naive observers (SL and JL) and one of the authors (JB) participated in the experiment. All observers had corrected-to-normal vision and were experienced in psychophysical studies.

Stimuli

The signal stimuli were Gaussian-windowed sinusoidal gratings, oriented θ = ±45 degrees from vertical. The signal stimuli were rendered on a 400 × 400 pixel grid, extending 5.6 × 5.6 deg of visual angle. The luminance profile of the Gabor stimulus is described by

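$$L(x, y) = L_0 \left\{ 1 + c \, \sin\!\left[ 2\pi f (x \cos\theta + y \sin\theta) \right] \exp\!\left( -\frac{x^2 + y^2}{2\sigma^2} \right) \right\} \tag{5}$$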

where c is the signal contrast, σ = 1.87 deg is the standard deviation of the Gaussian window (which was constant across spatial frequencies), and the background luminance L0 was set in the middle of the dynamic range of the display (Lmin = 3.1 cd/m2; Lmax = 120 cd/m2). For qCSF trials, the 11 possible grating spatial frequencies were spaced log linearly from 0.6 to 20 cpd; the 46 possible grating contrasts were spaced log linearly from 0.15% to 99%. The stimulus sequence started with the presentation of a fixation cross in the center of the screen for 500 ms, and the grating stimulus was presented for 130 ms. The target was preceded by presentation of one of three possible auditory cues—the digitally recorded words “small,” “medium,” or “large”—which conveyed the “stripe size” (spatial frequency) of the imminent grating stimulus. The cue was used to reduce stimulus uncertainty, which could affect CSF measurement, especially in the high-frequency region (Woods, 1996).
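A Gaussian-windowed grating of this kind can be sketched in Python as follows. Several details are assumptions rather than statements from the text: the carrier is taken to be in sine phase, the background luminance is taken as the arithmetic midpoint of Lmin and Lmax, and all function and constant names are hypothetical.

```python
import math

DEG_PER_PIXEL = 5.6 / 400.0   # 400 x 400 pixels spanning 5.6 x 5.6 deg
L0 = (3.1 + 120.0) / 2.0      # cd/m^2; midpoint of the display range (assumption)

def gabor_luminance(x, y, contrast, freq, theta_deg, l0=L0, sigma=1.87):
    """Luminance at (x, y) in deg for a Gabor with a sine-phase carrier (assumed)."""
    theta = math.radians(theta_deg)
    carrier = math.sin(2.0 * math.pi * freq * (x * math.cos(theta) + y * math.sin(theta)))
    envelope = math.exp(-(x * x + y * y) / (2.0 * sigma ** 2))
    return l0 * (1.0 + contrast * carrier * envelope)

def render_row(row, contrast, freq, theta_deg, size=400):
    """Luminance values for one pixel row of the stimulus grid."""
    half = size / 2.0
    y = (row - half) * DEG_PER_PIXEL
    return [gabor_luminance((col - half) * DEG_PER_PIXEL, y, contrast, freq, theta_deg)
            for col in range(size)]

# Central row of a 4 cpd grating at 50% contrast, tilted +45 degrees.
center_row = render_row(200, 0.5, 4.0, 45.0)
```

Because the envelope never exceeds 1, every rendered luminance stays within L0(1 ± c), so a contrast of 1.0 would span the assumed display range without clipping.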

Design and procedure

Observers ran four testing sessions, each lasting approximately 30–40 min. During each session, two qCSF runs, each lasting 100 trials, were applied in succession. Interleaved with qCSF runs were trials implementing another adaptive procedure (the ψ method; Kontsevich & Tyler, 1999), applied to independently measure individual contrast thresholds in 6 spatial frequency conditions. There were 30 trials in each spatial frequency condition. To summarize, for each observer, each of four test sessions consisted of 2 × 100 = 200 qCSF trials and 6 × 30 = 180 ψ trials. Over the course of the experiment, this corresponded to collecting eight total qCSF measures and four ψ–CSF measures for each observer. The priors used for qCSF parameters are presented in Appendix A.

Results

Accuracy

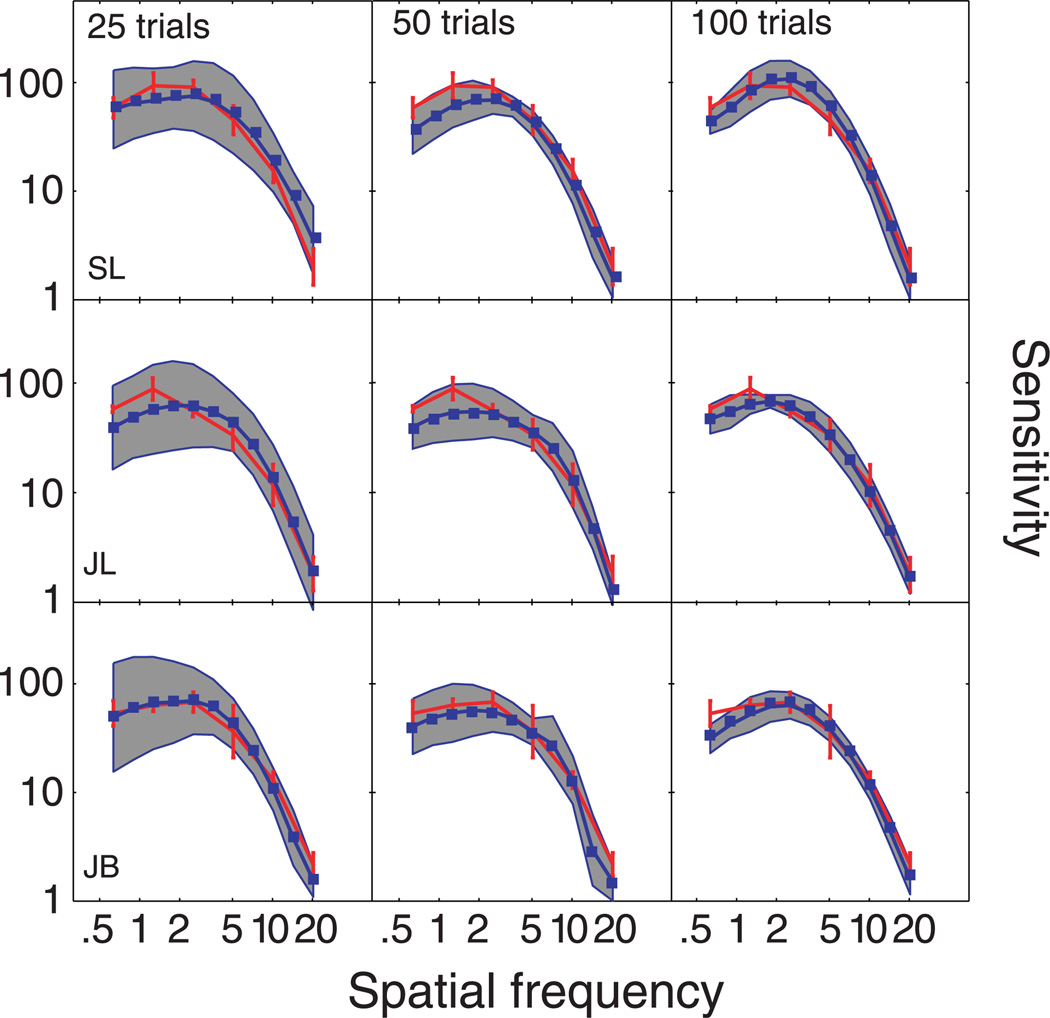

Figure 4 presents the CSFs measured with the qCSF (blue lines) and ψ methods (red lines). Each row presents CSF data from a different observer, and each column presents qCSF estimates obtained with a different number of trials: 25, 50, and 100. The error region (shaded gray) represents the qCSF variability (mean ± 1 SD) for estimating individual thresholds. For comparison, for each observer, the same ψ–CSF estimate, obtained with 180 × 4 = 720 trials, is presented across all columns; error bars represent variability (±1 SD). Initial examination of CSFs obtained with both methods suggests significant overlap. To quantify the concordance of CSF estimates, we calculated the root mean squared error (RMSE) of the mean thresholds obtained with the two methods, collapsed across all three observers (m = 3) and spatial frequency conditions (n = 6) common to both methods:

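$$\mathrm{RMSE} = \sqrt{\frac{\sum_{i=1}^{m} \sum_{j=1}^{n} \left( \tau_{ij}^{\psi} - \tau_{ij}^{\mathrm{qCSF}} \right)^{2}}{m \times n}} \tag{6}$$

where τij denotes the mean threshold estimated for observer i at spatial frequency j with the indicated method.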

The mean errors between sensitivities estimated with the ψ method and the qCSF using 25, 50, and 100 trials were 0.95, 1.09, and 0.86 dB, respectively.
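The concordance measure can be illustrated with a short sketch. The function name `rmse_db` and the threshold values are hypothetical (they are not the data of this study), and the conversion of 20 dB per log10 unit is an assumption inferred from the equivalence of 0.175 log units and 3.5 dB reported below.

```python
import math

def rmse_db(psi_log_thresholds, qcsf_log_thresholds):
    """RMSE (in dB) between two observer-by-frequency tables of mean log10 thresholds."""
    squared_errors = []
    for psi_row, qcsf_row in zip(psi_log_thresholds, qcsf_log_thresholds):
        for psi_value, qcsf_value in zip(psi_row, qcsf_row):
            # Assumed conversion: 20 dB per log10 unit of contrast.
            squared_errors.append((20.0 * (psi_value - qcsf_value)) ** 2)
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical mean log10 thresholds: 2 observers x 3 spatial frequencies.
psi = [[-2.00, -1.80, -1.20], [-1.90, -1.70, -1.10]]
qcsf = [[-1.95, -1.85, -1.25], [-1.90, -1.65, -1.15]]
error_db = rmse_db(psi, qcsf)
```

Because the errors are pooled before the square root is taken, a single large disagreement at one frequency weighs more heavily than several small ones.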

Figure 4.

Test accuracy. Spatial CSFs obtained with two independent and concurrent adaptive procedures: the qCSF method (blue) and the ψ method (red). CSF estimates obtained from different subjects are presented in different rows; estimates obtained with 25, 50, or 100 trials are presented in different columns. The gray-shaded region reflects the variability of qCSF estimates (8 runs in total), and the red error bars reflect variability of ψ estimates (4 runs in total).

Precision

Evidence for the qCSF’s convergence is provided by the decreasing variability of threshold estimates as a function of trial number. For each observer, the variability of sensitivity estimates for each spatial frequency was calculated from the standard deviation of the eight CSF estimates obtained with 25, 50, and 100 qCSF trials. For the three data cutoff points, the mean of these variability estimates—averaged across the three observers and 11 frequency conditions—was 6.44 dB (SD = 1.5), 3.99 dB (SD = 1.07), and 2.7 dB (SD = 0.63). In Figure 4, this pattern is evident in the decreasing area of the CSF error regions (gray) with increasing trial number. The corresponding variability exhibited by the ψ method was 2.25 dB (SD = 1.04). Therefore, CSF estimates obtained with the ψ method were more precise than the best qCSF estimates but also required more data collection for each CSF: 180 vs. 100 trials. As a fair comparison, for CSFs obtained with the ψ method with comparable number of trials (96 trials per CSF: 16 trials at each of the 6 spatial frequency conditions), threshold variability was 4.5 dB.

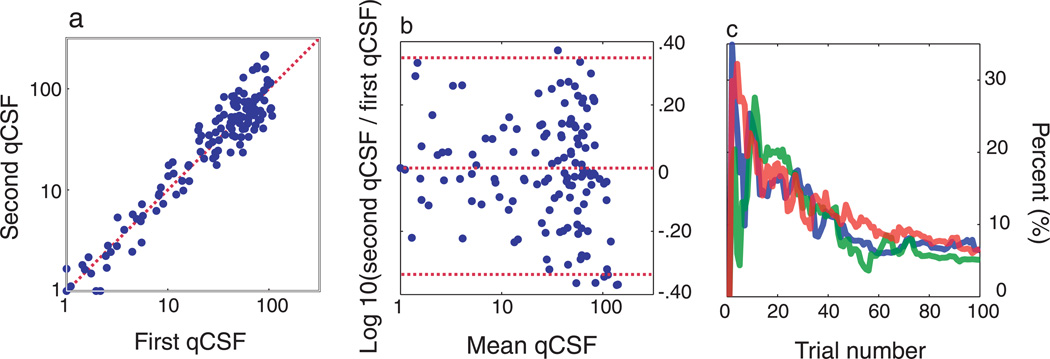

Test–retest reliability of the qCSF is assessed through analysis of the two qCSF runs completed in each session. Figure 5a plots sensitivities estimated from the second qCSF run against those from the first. The average test–retest correlations for the two CSFs estimated in each session, with 25, 50, and 100 qCSF trials, were 81.7% (SD = 21%), 88% (SD = 11%), and 96% (SD = 4%). Though test–retest correlations are widely reported as measures of test–retest reliability, they are not the most useful way to characterize method reliability or agreement (Bland & Altman, 1986). Figure 5b presents a Bland–Altman plot of the difference of same-session qCSF estimates against their mean. The mean and standard deviation of test–retest differences were 0.0075 and 0.175 log units (3.5 dB). These results signify that (1) sensitivity measures do not change systematically over the course of single testing sessions and (2) the precision of test–retest differences within sessions agrees with that estimated across single tests: compare 3.5 dB with .
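The quantities behind a Bland–Altman analysis are simple to compute. The sketch below is illustrative only: the function name `bland_altman` and the log10 sensitivity values are hypothetical, and the sample standard deviation (n − 1 denominator) is an assumption about how the spread of differences is summarized.

```python
import math

def bland_altman(first_run, second_run):
    """Return per-pair means and differences, plus the mean and SD of the differences."""
    differences = [b - a for a, b in zip(first_run, second_run)]
    means = [(a + b) / 2.0 for a, b in zip(first_run, second_run)]
    n = len(differences)
    mean_difference = sum(differences) / n
    # Sample standard deviation of the test-retest differences (assumed n - 1 denominator).
    sd_difference = math.sqrt(
        sum((d - mean_difference) ** 2 for d in differences) / (n - 1)
    )
    return means, differences, mean_difference, sd_difference

# Hypothetical log10 sensitivities from two runs in one session.
run_1 = [2.0, 1.8, 1.5, 1.0]
run_2 = [2.1, 1.7, 1.6, 0.9]
means, differences, bias, spread = bland_altman(run_1, run_2)
spread_db = 20.0 * spread  # assumed conversion: 20 dB per log10 unit
```

A mean difference near zero indicates no systematic drift between runs, while the standard deviation of the differences summarizes test–retest precision.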

Figure 5.

Test precision. (a, b) Test–retest comparisons for the two qCSF runs (of 100 trials) applied in each testing session. (a) Contrast sensitivities measured with the second qCSF run plotted against those obtained in the first run. The Pearson correlation coefficient for these comparisons averaged r = 96% (SD = 4%) across all testing sessions. (b) A Bland–Altman plot presents the differences between sensitivity estimates obtained from each qCSF run, plotted against their mean. The mean difference was <0.01 and the standard deviation of the differences was 0.175 log units. (c) Coefficient of variation (in percent) of AULCSF estimates obtained from three observers (8 runs each), as a function of trial number. AULCSF estimates converge in agreement with simulations (<15% by the completion of 25 trials).

To demonstrate the convergence of AULCSF estimates obtained with the qCSF, Figure 5c presents the coefficient of variation of AULCSF estimates as a function of trial number, for each subject. The consistent pattern, exhibited by each subject, is a decrease in variability as the trial number increases: from approximately 15% after 25 trials to 6% after 100 trials. These measures exhibit excellent agreement with those predicted by simulations.
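For reference, the coefficient of variation is the standard deviation of repeated estimates expressed as a percentage of their mean. The sketch below uses hypothetical AULCSF values for eight runs (not the data of this study) and assumes the sample standard deviation.

```python
import math

def coefficient_of_variation(values):
    """Coefficient of variation, in percent, using the sample standard deviation."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    return 100.0 * sd / mean

# Hypothetical AULCSF estimates from eight qCSF runs at one trial cutoff.
aulcsf_runs = [1.9, 2.1, 2.0, 2.2, 1.8, 2.0, 2.1, 1.9]
cv_percent = coefficient_of_variation(aulcsf_runs)
```

Repeating the calculation at each trial cutoff (for example 25, 50, and 100 trials) gives a convergence curve of the kind plotted in Figure 5c.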

Discussion

For psychophysical validation, we compared CSF estimates obtained with 25, 50, and 100 qCSF trials with those obtained with an independent adaptive method (Kontsevich & Tyler, 1999). CSF estimates obtained with the qCSF exhibited (1) excellent agreement with the ψ method and (2) increased precision with increasing test duration. As suggested by simulations, only 25 trials (≈1–2 min) were sufficient to estimate a broad CSF metric, but estimation of individual sensitivities at higher precision (<3 dB) required more trials (100 trials ≈ 10 min). Over the relatively short test duration (100 trials), the qCSF was more precise than the ψ method (2.79 vs. 4.5 dB). It should be noted that the qCSF’s precision advantage depends on the validity of the CSF’s functional form assumed by the method. In instances in which the truncated log-parabola misfits the observer’s CSF (e.g., in cases with local notches), measurements with the ψ method (which is CSF model-free) would fare better in the comparison. However, under realistic applications, with wider CSF variability, the coarse 6-point sampling scheme used by the current ψ method application would be much more vulnerable to inefficiency than the flexible adaptive sampling used by the qCSF.

General conclusion and discussion

The qCSF applies a Bayesian adaptive strategy that uses a priori knowledge about the CSF’s general functional form to accelerate the information gained about the psychophysical observer. Results from simulations and psychophysics demonstrate that 100 trials are sufficient for reasonably accurate and precise estimates of sensitivity across the full spatial frequency range. As few as 25 trials are needed to estimate the broad metric provided by the area under the contrast sensitivity function. Taken together, these results suggest that the qCSF method can meet the different needs for measuring contrast sensitivity in basic and clinical vision applications.

The qCSF will be potentially valuable for investigating comprehensive models of spatiotemporal vision (Tyler et al., 2002), which require measuring and accounting for contrast sensitivity as a function of retinal illuminance (Koenderink, Bouman, Buenodemesquita, & Slappendel, 1978c), eccentricity (Koenderink, Bouman, Buenodemesquita, & Slappendel, 1978a, 1978b), temporal frequency (Kelly, 1979; van Nes, Koenderink, Nas, & Bouman, 1967), external noise (Huang et al., 2007; Nordmann, Freeman, & Casanova, 1992), or visual pathology (Regan, 1991b; Stamper, 1984). The ability to rapidly estimate a single CSF will certainly benefit investigations that must measure many CSFs in the same observer under different conditions.

For the most basic clinical measure of contrast sensitivity, the Pelli–Robson chart may be sufficient. However, Ginsburg (1996) critically noted that the chart’s broadband letter stimuli cannot isolate spatial-frequency channels and identify frequency-specific deficits. For example, observers with recognized frequency-specific deficits to gratings (as in amblyopia or X-linked retinoschisis) can exhibit normal results when tested with letters for Snellen acuity (Huang et al., 2007) or Pelli–Robson contrast sensitivity (Alexander, Barnes, & Fishman, 2005). The need for a rapid and efficient grating test is further reinforced by the FDA’s guidelines for novel therapeutic devices for vision (Ginsburg, 2006). As a critical outcome measure for clinical trials, contrast sensitivity must be measured with and without glare at four spatial frequencies: 3, 6, 12, and 18 cpd for photopic (85 cd/m²) vision and 1.5, 3, 6, and 12 cpd for mesopic (3 cd/m²) vision. However, a recent study of contrast sensitivity outcomes of refractive and cataract surgery (Pesudovs et al., 2004) illustrates the shortcomings of two current grating charts (FACT and Vistech). The limited grating contrast range of these charts makes them vulnerable to ceiling and floor effects: applied following refractive surgery, 33% and 50% of subjects demonstrated maximum sensitivity at the two lowest spatial frequencies; conversely, up to 60% of patients screened for cataracts with the same chart exhibited minimal sensitivity. Other recent studies comparing multiple contrast sensitivity tests (Buhren et al., 2006; van Gaalen et al., 2009) likewise conclude that none adequately meet the emerging needs of contrast sensitivity testing.
To compare with the 45 grating stimuli (5 frequencies × 9 contrasts) used by the FACT charts, the qCSF can sample (at a minimum) a set of 60 contrasts × 12 spatial frequencies = 720 grating stimuli, with grating contrast sampled over a 60-dB range (with 1-dB resolution) and grating frequency sampled over a 10–20-dB range (with 3-dB resolution). With such a broad range and fine resolution for sampling grating stimuli, the qCSF needs no experimenter input to measure a wide variety of CSF phenomena. We believe that the qCSF is both flexible enough to capture large-scale changes of contrast sensitivity across testing conditions and precise enough to capture small-scale changes common to the progression or remediation of visual pathology.

Despite the promise demonstrated in the current study, the qCSF has several shortcomings. (1) It uses a forced-choice task with a high guessing rate. The possibility of improving the test’s efficiency by using a Yes–No task is tempered by the introduction of unconstrained response criteria (Klein, 2001). One potential approach to address the response bias confound is to rapidly estimate the response bias in YN tasks directly (Lesmes, Lu et al., 2006) or add a rated response to the forced-choice task (Kaernbach, 2001; Klein, 2001). (2) The spatial CSF is limited as a characterization of spatiotemporal vision. Because CSF shape depends on factors that include temporal frequency (Kelly, 1979; van Nes et al., 1967), spatial and temporal envelopes (Peli, Arend, Young, & Goldstein, 1993), and retinal illuminance (Koenderink et al., 1978c), clarifying the best qCSF clinical testing conditions requires measuring the spatiotemporal contrast sensitivity surface (Kelly, 1979), which describes contrast thresholds as a function of spatial and temporal frequencies. The practical difficulty of measuring contrast sensitivity across this 2-D surface typically restricts investigation to only one of its cross-sections: (a) a spatial CSF at constant temporal frequency (Campbell & Robson, 1968), (b) a temporal CSF at constant spatial frequency (de Lange, 1958), or (c) a constant-speed CSF at co-varying spatial and temporal frequencies (Kelly, 1979). To improve measurements of spatiotemporal vision, we have developed the quick Surface (or qSurface) method (Lesmes, Gepshtein, Lu, & Albright, 2009), which leverages multiple qCSF applications to estimate different cross-sections of the spatiotemporal contrast sensitivity surface in parallel.
Whereas the qCSF method evaluates stimuli for their contributions to a single cross-section through the spatiotemporal sensitivity surface, the qSurface method evaluates grating stimuli (defined by contrast and spatial and temporal frequencies) for the information they provide about concurrent estimates of horizontal, vertical, and diagonal cross-sections through the surface. This innovation greatly reduces the testing time for estimating the spatiotemporal sensitivity surface and even allows for the measurement of multiple surfaces in an experimental session (≈1 h). As a result, the qSurface method should be a valuable tool for finding the most useful spatiotemporal condition(s) for CSF clinical testing and for studying spatiotemporal vision in general. (3) The functional form used by the qCSF cannot accommodate notches or other local deficits. To address this shortcoming, we are currently developing adaptive CSF procedures with fewer model-based assumptions. These tests will be more flexible to detect aberrant CSF features with potential clinical importance (Tahir, Parry, Pallikaris, & Murray, 2009; Woods, Bradley, & Atchison, 1996). Forthcoming work that addresses the above-mentioned shortcomings, in combination with inevitable increases in computing power, should ultimately improve the efficiency of the next generation of qCSF methods.

The current application uses an adaptive testing strategy, maximizing the expected information gain of the Bayesian posterior (Cobo-Lewis, 1996; Kontsevich & Tyler, 1999; Kujala & Lukka, 2006; Lesmes et al., 2006), to estimate a specific functional CSF form, the truncated log-parabola. Alternative approaches are also likely to be successful; these include test strategies that apply different trial-to-trial cost functions, e.g., minimizing expected variance (Vul & MacLeod, 2007) or maximizing Fisher information (Remus & Collins, 2007, 2008), or which estimate different CSF descriptions, such as the pooled response of several frequency-specific band-pass mechanisms (Simpson & McFadden, 2005; Wilson & Gelb, 1984). One reviewer suggested defining the CSF by the intersection of two arcs from a radial center at 1 cpd and 30% amplitude. This novel CSF characterization, which uses only two parameters, provides an interesting prospect for future investigation, but the current paper does not have the space for fully exploring its development and implementation. One shortcoming of Bayesian testing strategies is their dependence on the prior; because we do not know the ground truth for the psychophysical parameters of interest, the optimization that drives the stimulus search depends on empirical estimates gained from previous trials. Therefore, local minima pose a risk for these procedures. One potential strategy to escape local minima is to perturb the stimulus search; this allows the stimulus selection algorithm to both explore and exploit diverse regions of the stimulus space (Alpaydin, 2004). A specific shortcoming of the one-step-ahead search is that the real experimental goal is to maximize the information gained over the course of the whole experiment. These current methods approximate this approach by finding the most informative stimulus for the next trial, but the two objectives (and their corresponding experimental trajectories) are not the same. 
It will be especially interesting to track the development of statistical and mathematical tools that increase the search horizon: more than one trial ahead, and perhaps even over the whole experiment (Lewi, 2009; Lewi, Butera, & Paninski, 2007, 2009). Therefore, we note that the current qCSF procedure almost certainly does not implement the optimal method for estimating the contrast sensitivity function; however, it does provide an unprecedented lower bound for the set of optimal procedures. We are optimistic that the qCSF will become even more efficient with the continuing development of sequential testing algorithms.

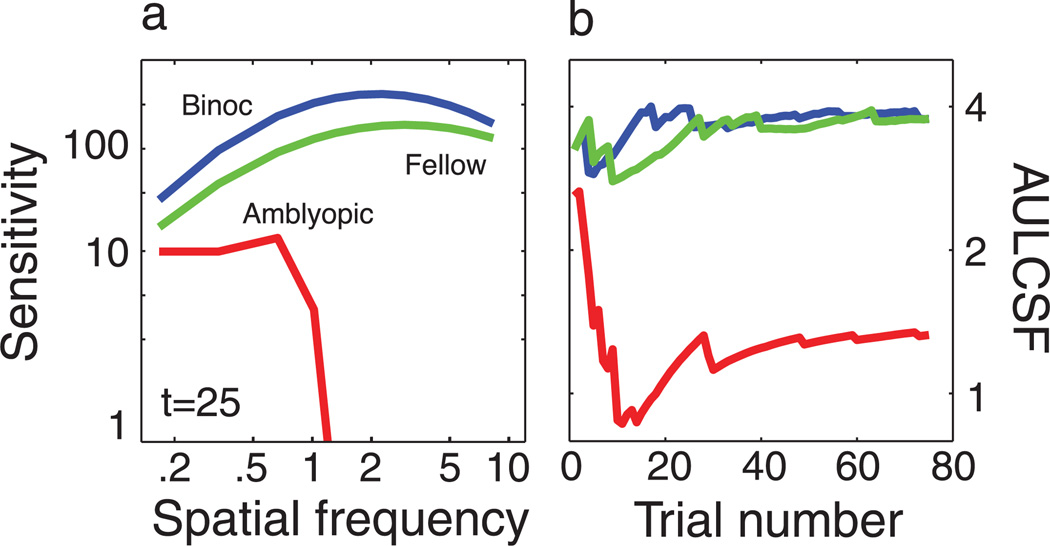

In the current study, we applied a testing strategy that rapidly characterizes the global shape of spatial contrast sensitivity functions by combining Bayesian inference and a trial-to-trial information gain strategy. The qCSF offers the “best of both worlds” for laboratory and clinical measures of contrast sensitivity. Over psychophysical testing times of 10–20 min, short by historical standards, the qCSF method can precisely measure the entire CSF over the wide range of spatial frequencies. For testing times that are short for cards and charts (<1–2 min), the qCSF is useful for estimating the area under the log CSF (AULCSF) with good precision (c.v. = 15%). Figure 6 presents the results of a preliminary clinical application that characterizes the CSF deficit of an amblyopic observer using the qCSF. The method distinguishes normal and abnormal CSFs with as few as 25 trials, with a stimulus placement strategy that minimizes the observer’s frustration level (overall performance was 84% correct for 75 trials). A more systematic investigation has successfully validated the qCSF’s identification of contrast sensitivity deficits in adults with amblyopia (Hou, Huang, Lesmes, Lu, & Zhou, unpublished data). Further investigations comparing the qCSF method with other CS tests will be important for validating the test’s potential advantage in the clinical setting. These studies, which will examine wider populations of normal and abnormal CSFs, will ultimately determine if the efficiency gains provided by the qCSF translate to improved clinical assessment of the CSF.

Figure 6.

Clinical application. The qCSF was applied to characterize contrast sensitivity functions in an amblyope. (a) Spatial CSFs were measured in three conditions: (1) one binocular CSF; (2) one monocular CSF measured in the amblyopic eye; and (3) one monocular CSF measured in the fellow eye. Spatial CSFs obtained with only 25 trials demonstrate a severe contrast sensitivity deficit, which is likewise apparent in (b), the AULCSF estimate with as few as 10 trials. AULCSF estimates are approximately stable after 25 trials. One important feature of the qCSF’s stimulus placement strategy is the high rate of evoked performance: this observer completed 75 trials with a comfortable performance level of 84% correct.

The qCSF method is part of a new generation of adaptive methods, which exploit advances in personal computer power to increase the complexity of classical Bayesian adaptive testing strategies. These methods estimate increasingly elaborate psychophysical models, which include the estimation of multi-dimensional models describing the psychometric function (Remus & Collins, 2007, 2008; Tanner, 2008), equi-detectable elliptical contours in color space (Kujala & Lukka, 2006), the features of external noise functions (Lesmes, Jeon et al., 2006), the spatiotemporal contrast sensitivity surface (Lesmes et al., 2009), neural input–output relationships (Lewi et al., 2007, 2009; Paninski, 2005), and the discrimination of memory retention models (Cavagnaro, Myung, Pitt, & Kujala, in press; Myung & Pitt, 2009). Taken together, these methods represent a powerful and versatile approach for studying phenomena previously restricted to data-intensive applications. Their computationally principled approach to data collection strategies will make them valuable in many future applications.

Acknowledgments

This research was supported by National Institutes of Health Grants F32EY016660 (LAL), EY017491 (ZL), and EY-007605 (TDA). We would like to thank two anonymous reviewers for insightful comments, Colin Clifford for providing a MATLAB implementation of Kontsevich and Tyler’s ψ method, and Barbara Dosher, William H. Swanson, Don MacLeod, Sergei Gepshtein, Adam Reeves, and Tatanya Sharpee for valuable discussions.

Appendix A

The components of a qCSF application, which include initialization and pre- and post-trial analyses, are described below and available for download in MATLAB code implementation (http://lobes.usc.edu/qMethods). To complement Movie 1, MATLAB code that generates a new demo with CSF parameters provided by the user is available.

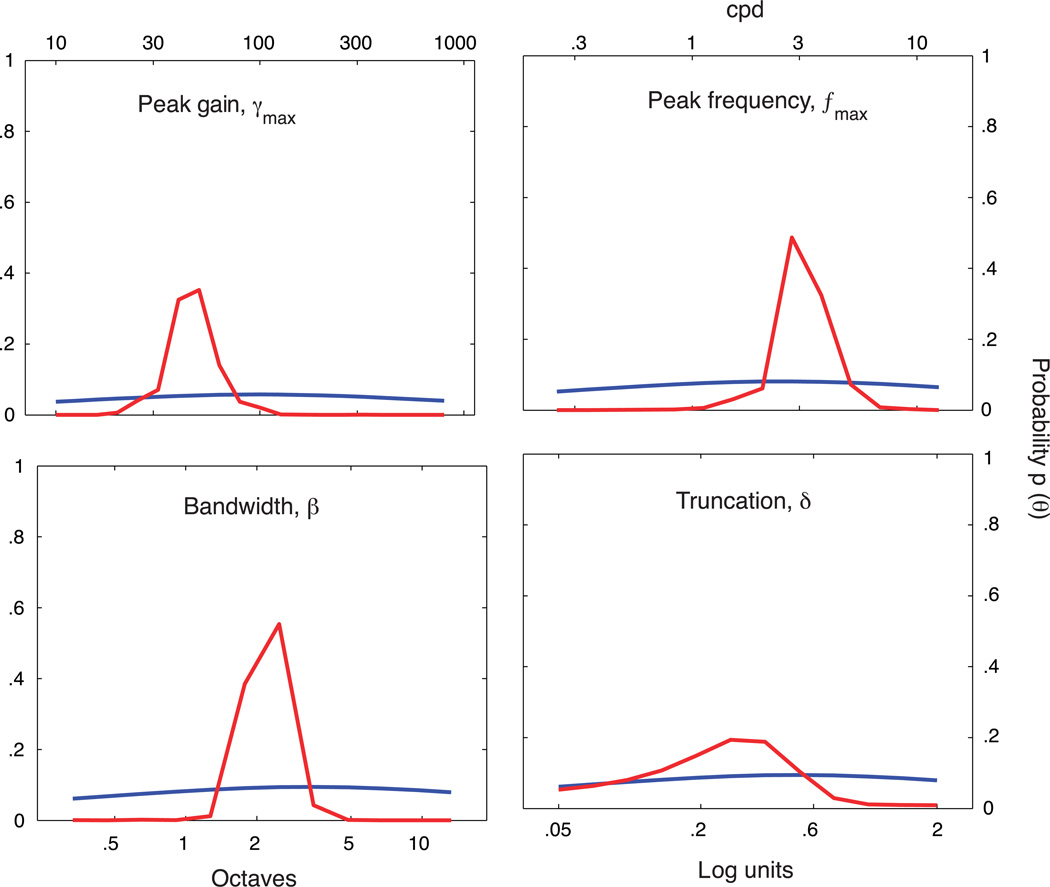

Initializing the quick CSF

To initialize the qCSF, first define a discrete gridded parameter space, Tθ, composed of four-dimensional vectors θ = (fmax, γmax, β, δ), which represent potential CSFs. Before the experiment starts, a prior probability density, p(θ), which reflects baseline knowledge about the observer’s CSF, is defined over the space, Tθ. The prior can be informed by knowledge about how CSF shape varies as a function of task or test population. For example, the gain and frequency of the CSF’s peak can vary greatly across species, but there is much less variability in its bandwidth (Ghim & Hodos, 2006; Uhlrich et al., 1981). Figure A1 presents, for each of the four CSF parameters, the priors used in the current psychophysical validation. The priors were defined by hyperbolic secant (sech) functions (King-Smith & Rose, 1997). For each CSF parameter, θi, for i = 1, 2, 3, 4, the mode of the marginal prior, p(θi), was defined by the best guess for that parameter, θguess, and the width was defined by the confidence in that guess, θconfidence:

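$$p(\theta_i) = \operatorname{sech}\left( \theta_{\mathrm{confidence}} \times \left[ \log_{10}(\theta_i) - \log_{10}(\theta_{\mathrm{guess}}) \right] \right), \quad \operatorname{sech}(z) = \frac{2}{e^{z} + e^{-z}} \tag{A1}$$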

The priors were defined to be log-symmetric around θguess, whose values for the respective parameters were: γmax = 100, fmax = 2.5 cpd, β = 3 octaves, and δ = 0.5 log units. For each parameter, setting θconfidence = 1 resulted in priors that were almost, but not completely, flat (see Figure A1). The joint prior was defined as the normalized product of the marginal priors. To show that the information contained in the priors did not over-influence the procedure, the posterior obtained from a complete run of 100 qCSF trials (observer JB) is also presented.
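A minimal sketch of such a prior follows. It assumes that the sech function is applied to the confidence-scaled log10 distance between each grid value and the guess, which matches the log-symmetric description above; the function names and the coarse five-point grids are hypothetical and much smaller than a practical parameter grid.

```python
import math

def sech(z):
    """Hyperbolic secant, 1 / cosh(z)."""
    return 1.0 / math.cosh(z)

def marginal_prior(grid, guess, confidence=1.0):
    """Normalized sech prior, log-symmetric about `guess` (assumed functional form)."""
    weights = [sech(confidence * (math.log10(v) - math.log10(guess))) for v in grid]
    total = sum(weights)
    return [w / total for w in weights]

# Hypothetical coarse grids centered on the guesses given in the text.
fmax_grid = [2.5 * 4.0 ** k for k in range(-2, 3)]             # peak frequency (cpd)
gain_grid = [100.0 * 10.0 ** (k / 2.0) for k in range(-2, 3)]  # peak sensitivity

p_fmax = marginal_prior(fmax_grid, guess=2.5)
p_gain = marginal_prior(gain_grid, guess=100.0)

# Joint prior as the product of marginals; it is already normalized
# because each marginal sums to one.
joint_prior = [[pf * pg for pg in p_gain] for pf in p_fmax]
```

Raising `confidence` narrows the prior around the guess, whereas small values approach a flat prior on the log axis.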

Figure A1.

For one subject’s completed qCSF run, comparison of the prior for trial number 1 (blue) and the posterior following trial number 100 (red) demonstrates that the priors do not dominate the CSF parameter estimates.

Stimulus selection

To choose the grating stimulus, s, defined by both spatial frequency and contrast, presented on each trial t, the qCSF method applies a strategy that minimizes the expected entropy of the Bayesian posterior defined over psychometric parameters (e.g., Kontsevich and Tyler’s ψ method). The experimental data reported in this paper were collected with the pre-trial calculations prescribed by Kontsevich and Tyler, but the provided implementation combines elements of the ψ method and an equivalent reformulation (Kujala & Lukka, 2006). Kujala and Lukka (2006) reformulated the calculation of minimum expected entropy by focusing on the equivalent task of maximizing the expected information gain (entropy change) between prior and posterior. Using a cost function based on the expectation of entropy change provides a great advantage: the expected information gain can be approximated by averaging over Monte Carlo samples of the prior. This approximation affects the precision of parameter estimates only minimally. The one-step-ahead search implemented by the qCSF is a greedy algorithm: the ultimate goal, a maximally informative experiment, is simplified as a search for the maximally informative stimulus on the next trial. This type of greedy algorithm is vulnerable to local minima, which can manifest in overrepresentation of a small subset of stimuli; to avoid this phenomenon, the qCSF does not strictly choose the stimulus that maximizes expected information gain but instead chooses uniformly at random among the top decile of stimuli. For sampling the prior, Kujala and Lukka used Markov chain Monte Carlo sampling with particle filtering (Doucet & de Freitas, 2001; Doucet, de Freitas, & Gordon, 2001), which greatly reduces computing load by forgoing explicit maintenance of the prior.
In our application, we maintain the discrete grid-defined prior; future implementations of the qCSF may maintain this approach or change to other schemes for fitting/approximating the Bayesian posteriors (Lewi et al., 2009). Before each trial, the grid-defined prior is sampled via Monte Carlo inverse sampling using the MATLAB function “discretesample.m” written by Dahua Lin, and available for download from the MATLAB Central file exchange (http://www.mathworks.com/matlabcentral/fileexchange/21912). Even on old hardware (e.g., a Titanium Powerbook G4 laptop), the pre-trial calculation takes less than 500 ms; on a Windows PC that is several years old, the computing time is reduced to less than 10 ms. The number of samples can be arbitrarily high, though simulations suggest that as few as 50–100 samples are sufficient for method convergence. As prescribed by Kujala and Lukka (2006), for each possible stimulus, the calculation of expected information gain is

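$$I_t(x) = h\left( \int p_t(\theta)\, \Psi_\theta(x)\, d\theta \right) - \int p_t(\theta)\, h\left( \Psi_\theta(x) \right) d\theta \tag{A2}$$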

where h(p) = −p log(p) − (1 − p) log(1 − p) defines the entropy of a distribution of complementary probabilities: p and 1 − p. The above calculation requires calculating Ψθ(x) over the Monte Carlo samples for each possible grating stimulus, x. Given a single sampled vector of CSF parameters, θ, the probability of a correct response for a grating of frequency, f, and contrast, c, is given by the log-Weibull psychometric function:

| (A3) |

Use of this psychometric function assumes that the steepness parameter, β = 2, does not change as a function of spatial frequency and that the observer makes stimulus-independent errors or lapses (Swanson & Birch, 1992; Wichmann & Hill, 2001) on a small proportion of trials, ε = 4%. Using a shallow assumed slope minimizes biases introduced by parameter mismatch. The assumed lapse rate will likely need to be increased for applications with naive psychophysical observers.
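The pre-trial search can be sketched as follows. This is not the authors' implementation, and several pieces are assumptions made for illustration: the exact log-Weibull form (a 50% guessing rate and performance capped at 1 − ε), the reduction of each sampled CSF to its threshold at a single spatial frequency, the hypothetical threshold grid, and all function names. Prior samples are drawn with Python's `random.choices` instead of the MATLAB routine named above.

```python
import math
import random

def log_weibull(contrast, threshold, slope=2.0, guess=0.5, lapse=0.04):
    """Assumed log-Weibull form: P(correct), capped at 1 - lapse."""
    x = slope * (math.log10(contrast) - math.log10(threshold))
    p = 1.0 - (1.0 - guess) * math.exp(-(10.0 ** x))
    return min(1.0 - lapse, p)

def entropy(p):
    """h(p) = -p log(p) - (1 - p) log(1 - p), taking 0 log 0 as 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log(p) - (1.0 - p) * math.log(1.0 - p)

def expected_information_gain(p_correct_per_sample):
    """Entropy of the mean prediction minus the mean entropy over prior samples."""
    n = len(p_correct_per_sample)
    mean_p = sum(p_correct_per_sample) / n
    mean_h = sum(entropy(p) for p in p_correct_per_sample) / n
    return entropy(mean_p) - mean_h

def choose_stimulus(gains, rng):
    """Pick uniformly at random among the top decile of stimuli by expected gain."""
    ranked = sorted(range(len(gains)), key=lambda i: gains[i], reverse=True)
    top = ranked[:max(1, len(ranked) // 10)]
    return rng.choice(top)

rng = random.Random(0)

# Hypothetical 1-D example: each candidate CSF is summarized by its threshold
# at one spatial frequency, with a flat prior over five candidate thresholds.
threshold_grid = [0.005, 0.01, 0.02, 0.04, 0.08]
prior = [0.2] * len(threshold_grid)
samples = rng.choices(threshold_grid, weights=prior, k=100)

# 46 log-spaced contrasts from 0.15% to 99%, as in the Methods.
contrasts = [0.0015 * 660.0 ** (i / 45.0) for i in range(46)]
gains = [
    expected_information_gain([log_weibull(c, t) for t in samples])
    for c in contrasts
]
chosen_index = choose_stimulus(gains, rng)
```

Stimuli on which all sampled CSFs predict the same performance (for example, very high contrasts) yield zero expected gain, so the search concentrates on contrasts where the candidate CSFs disagree.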

Response collection and Bayesian update

During the course of the experiment, the qCSF method applies Bayes Rule to reiteratively update p(θ), given the response to that trial’s grating stimulus. For the Bayesian update that follows each trial’s outcome, we use the explicit gridded prior, rather than its samples. This calculation is computationally intensive, but its impact is mitigated by (1) only calculating the update for the actual stimulus and response on each trial, not for all potential stimuli; and (2) the increases in computing power expected with each generation of personal computers. As prescribed by Kontsevich and Tyler (1999), to calculate the probability of the observed response (either correct or incorrect) to the stimulus s,

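$$p(r = \text{correct} \mid s, \theta) = \Psi_\theta(s) \tag{A4}$$

or

$$p(r = \text{incorrect} \mid s, \theta) = 1 - \Psi_\theta(s) \tag{A5}$$

first use the prior, pt(θ), to weigh the response rates defined by CSF vectors, θ, across the parameter space, Tθ:

$$p_t(r \mid s) = \sum_{\theta \in T_\theta} p_t(\theta)\, p(r \mid s, \theta) \tag{A6}$$

This normalization factor, sometimes called “the probability of the data,” is then used to update the prior pt(θ) to the posterior pt+1(θ) via Bayes Rule:

$$p_{t+1}(\theta) = p_t(\theta \mid s, r) = \frac{p_t(\theta)\, p(r \mid s, \theta)}{p_t(r \mid s)} \tag{A7}$$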

For estimating the four CSF parameters, the marginal means were calculated. The qCSF estimate of the CSF is defined by the mean parameters.
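The post-trial update and the posterior-mean estimate can be sketched on a one-dimensional grid. The function names and numbers below are hypothetical; in the full method the grid is four-dimensional and the mean of each parameter is taken over its marginal posterior.

```python
def bayes_update(prior, p_correct, correct):
    """Update a gridded prior given one response.

    prior     : probabilities over the parameter grid (sums to 1)
    p_correct : predicted P(correct) for the presented stimulus at each grid point
    correct   : True if the observed response was correct
    """
    likelihood = [p if correct else 1.0 - p for p in p_correct]
    # Normalization factor: the "probability of the data".
    evidence = sum(w * l for w, l in zip(prior, likelihood))
    posterior = [w * l / evidence for w, l in zip(prior, likelihood)]
    return posterior, evidence

def posterior_mean(grid, posterior):
    """Mean of the parameter under the gridded posterior."""
    return sum(v * w for v, w in zip(grid, posterior))

# Hypothetical grid of log10 thresholds with a flat prior. Lower thresholds
# predict better performance on the presented stimulus.
grid = [-2.0, -1.5, -1.0]
prior = [1.0 / 3.0] * 3
p_correct = [0.9, 0.7, 0.5]

posterior, evidence = bayes_update(prior, p_correct, correct=True)
estimate = posterior_mean(grid, posterior)
```

A correct response shifts probability toward grid points that predicted high performance; the posterior returned here then serves as the prior for the next trial.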

Reiteration and stop rules

After the observer finishes trial t, the updated posterior is used as the prior for trial t + 1. For a stopping criterion, the current qCSF application uses a fixed trial number. For future versions, other stopping criteria can be implemented (Alcalá-Quintana & García-Pérez, 2005).

Appendix B

The effects of priors

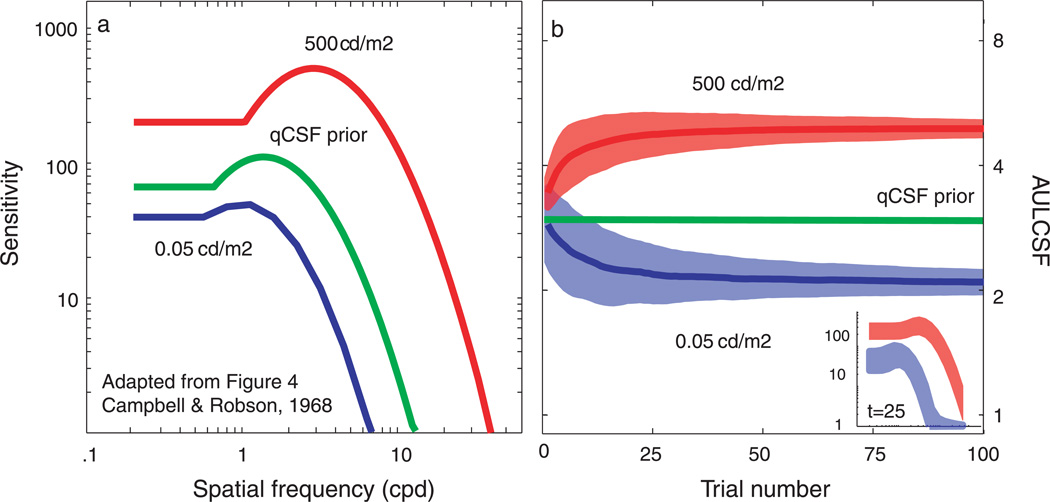

To demonstrate that the test efficiencies exhibited by the current simulation and psychophysical results were not overly determined by the initial priors (Alcalá-Quintana & García-Pérez, 2004), we simulated the qCSF measurement of widely different CSFs observed in different illumination conditions (Campbell & Robson, 1968). Figure B1a demonstrates that, for both simulated observers, the initial prior CSF poorly matches the observer’s CSFs. Figure B1b presents the simulation results: the mean and standard deviation of AULCSF estimates as a function of trial number (main plot) and the mean and standard deviation of CSF estimates obtained with only 25 trials (inset).

Figure B1.

See text.

Mean AULCSF estimates provided by the qCSF largely converge to their true value for both observers by the 25th trial; the mean bias magnitude, less than 5% for both observers, continues to decrease with more trials. Furthermore, at such short testing times, the coefficient of variation for AULCSF estimates (related to the area of the respective shaded regions) is less than 20% for both CSFs: 10 and 15% for the bright and dark conditions, respectively. The inset demonstrates that the CSF estimates obtained with only 25 trials are readily distinguishable from the initial prior CSF and from each other. However, applications attempting to precisely measure these CSFs are recommended to use more trials (>100).

Footnotes

Commercial relationships: L.A.L. and Z-L.L. have pending intellectual property interest in contrast sensitivity tests.

Contributor Information

Luis Andres Lesmes, Email: lu@salk.edu, Vision Center Laboratory, Salk Institute for Biological Studies, La Jolla, CA, USA.

Zhong-Lin Lu, Email: zhonglin@usc.edu, Laboratory of Brain Processes, University of Southern California, Los Angeles, CA, USA.

Jongsoo Baek, Email: Jongsoo.Baek@usc.edu, Laboratory of Brain Processes, University of Southern California, Los Angeles, CA, USA.

Thomas D. Albright, Email: tom@salk.edu, Vision Center Laboratory, Salk Institute for Biological Studies, La Jolla, CA, USA.

References

- Abrahamsson M, Sjöstrand J. Impairment of contrast sensitivity function (CSF) as a measure of disability glare. Investigative Ophthalmology & Visual Science. 1986;27:1131–1136. [PubMed] [Google Scholar]

- Alcalá-Quintana R, García-Pérez MA. The role of parametric assumptions in adaptive Bayesian estimation. Psychological Methods. 2004;9:250–271. doi: 10.1037/1082-989X.9.2.250. [DOI] [PubMed] [Google Scholar]

- Alcalá-Quintana R, García-Pérez MA. Stopping rules in Bayesian adaptive threshold estimation. Spatial Vision. 2005;18:347–374. doi: 10.1163/1568568054089375. [DOI] [PubMed] [Google Scholar]

- Alcalá-Quintana R, García-Pérez MA. A comparison of fixed-step-size and Bayesian staircases for sensory threshold estimation. Spatial Vision. 2007;20:197–218. doi: 10.1163/156856807780421174. [DOI] [PubMed] [Google Scholar]

- Alexander KR, Barnes CS, Fishman GA. Characteristics of contrast processing deficits in X-linked retinoschisis. Vision Research. 2005;45:2095–2107. doi: 10.1016/j.visres.2005.01.037. [DOI] [PubMed] [Google Scholar]

- Alpaydin E. Introduction to machine learning. Cambridge, MA: The MIT Press; 2004. [Google Scholar]

- Applegate RA, Hilmantel G, Howland HC, Tu EY, Starck T, Zayac EJ. Corneal first surface optical aberrations and visual performance. Journal of Refractive Surgery. 2000;16:507–514. doi: 10.3928/1081-597X-20000901-04. [DOI] [PubMed] [Google Scholar]

- Applegate RA, Howland HC, Sharp RP, Cottingham AJ, Yee RW. Corneal aberrations and visual performance after radial keratotomy. Journal of Refractive Surgery. 1998;14:397–407. doi: 10.3928/1081-597X-19980701-05. [DOI] [PubMed] [Google Scholar]

- Arden GB, Jacobson JJ. A simple grating test for contrast sensitivity: Preliminary results indicate value in screening for glaucoma. Investigative Ophthalmology & Visual Science. 1978;17:23–32. [PubMed] [Google Scholar]

- Bellucci R, Scialdone A, Buratto L, Morselli S, Chierego C, Criscuoli A, et al. Visual acuity and contrast sensitivity comparison between Tecnis and AcrySof SA60AT intraocular lenses: A multicenter randomized study. Journal of Cataract & Refractive Surgery. 2005;31:712–717. doi: 10.1016/j.jcrs.2004.08.049. [DOI] [PubMed] [Google Scholar]

- Birch EE, Stager DR, Wright WW. Grating acuity development after early surgery for congenital unilateral cataract. Archives of Ophthalmology. 1986;104:1783–1787. doi: 10.1001/archopht.1986.01050240057040. [DOI] [PubMed] [Google Scholar]

- Bland J, Altman D. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet. 1986;327:307–310. [PubMed] [Google Scholar]

- Bodis-Wollner I, Marx MS, Mitra S, Bobak P, Mylin L, Yahr M. Visual dysfunction in Parkinson’s disease: Loss in spatiotemporal contrast sensitivity. Brain. 1987;110:1675–1698. doi: 10.1093/brain/110.6.1675.

- Bradley A, Freeman RD. Contrast sensitivity in anisometropic amblyopia. Investigative Ophthalmology & Visual Science. 1981;21:467–476.

- Bradley A, Hook J, Haeseker J. A comparison of clinical acuity and contrast sensitivity charts: Effect of uncorrected myopia. Ophthalmic and Physiological Optics. 1991;11:218–226.

- Brainard DH. The Psychophysics Toolbox. Spatial Vision. 1997;10:433–436.

- Buhren J, Terzi E, Bach M, Wesemann W, Kohnen T. Measuring contrast sensitivity under different lighting conditions: Comparison of three tests. Optometry and Vision Science. 2006;83:290–298. doi: 10.1097/01.opx.0000216100.93302.2d.

- Bulens C. Contrast sensitivity in Parkinson’s disease. Neurology. 1986;36:1121–1125. doi: 10.1212/wnl.36.8.1121.

- Bull ND, Johnson TV, Martin KR. Stem cells for neuroprotection in glaucoma. Progress in Brain Research. 2008;173:511–519. doi: 10.1016/S0079-6123(08)01135-7.

- Campbell FW. Why do we measure contrast sensitivity? Behavioural Brain Research. 1983;10:87–97. doi: 10.1016/0166-4328(83)90154-7.

- Campbell FW, Robson JG. Application of Fourier analysis to the visibility of gratings. The Journal of Physiology. 1968;197:551–566. doi: 10.1113/jphysiol.1968.sp008574.

- Cavagnaro DR, Myung JI, Pitt MA, Kujala JV. Adaptive design optimization: A mutual information based approach to model discrimination in cognitive science. Neural Computation. doi: 10.1162/neco.2009.02-09-959. in press.

- Chung STL, Legge GE, Tjan BS. Spatial-frequency characteristics of letter identification in central and peripheral vision. Vision Research. 2002;42:2137–2152. doi: 10.1016/s0042-6989(02)00092-5.

- Cobo-Lewis AB. An adaptive method for estimating multiple parameters of a psychometric function. Journal of Mathematical Psychology. 1996;40:353–354.

- Colby KA, Chang DF, Stulting RD, Lane SS. Surgical placement of an optical prosthetic device for end-stage macular degeneration: The implantable miniature telescope. Archives of Ophthalmology. 2007;125:1118–1121. doi: 10.1001/archopht.125.8.1118.

- Comerford JP. Vision evaluation using contrast sensitivity functions. American Journal of Optometry and Physiological Optics. 1983;60:394–398. doi: 10.1097/00006324-198305000-00009.

- Cornsweet TN. The staircase-method in psychophysics. The American Journal of Psychology. 1962;75:485–491.

- Cover TM, Thomas JA. Elements of information theory. New York: Wiley; 1991.

- de Lange H. Research into the dynamic nature of the human fovea. Cortex systems with intermittent and modulated light. I. Attenuation characteristics with white and colored light. Journal of the Optical Society of America. 1958;48:777–783. doi: 10.1364/josa.48.000777.

- De Valois RL, De Valois KK. Spatial vision. New York: Oxford; 1988.

- Della Sala S, Bertoni G, Somazzi L, Stubbe F, Wilkins AJ. Impaired contrast sensitivity in diabetic patients with and without retinopathy: A new technique for rapid assessment. British Journal of Ophthalmology. 1985;69:136–142. doi: 10.1136/bjo.69.2.136.

- Doucet A, de Freitas N. Sequential Monte Carlo methods in practice. New York: Springer-Verlag; 2001.

- Doucet A, de Freitas N, Gordon N. An introduction to sequential Monte Carlo methods. In: Doucet A, de Freitas N, editors. Sequential Monte Carlo methods in practice. New York: Springer-Verlag; 2001. pp. 3–14.

- Dowling J. Current and future prospects for optoelectronic retinal prostheses. Eye, Advanced Online Publication. 2008. doi: 10.1038/eye.2008.385.

- Enroth-Cugell C, Robson JG. The contrast sensitivity of retinal ganglion cells of the cat. The Journal of Physiology. 1966;187:517–552. doi: 10.1113/jphysiol.1966.sp008107.

- Faye EE. Contrast sensitivity tests in predicting visual function. International Congress Series Vision 2005—Proceedings of the International Congress held between 4 and 7 April 2005 in London, UK. 2005;1282:521–524.

- García-Pérez MA, Alcalá-Quintana R. Sampling plans for fitting the psychometric function. Spanish Journal of Psychology. 2005;8:256–289. doi: 10.1017/s113874160000514x.

- García-Pérez MA, Alcalá-Quintana R. Bayesian adaptive estimation of arbitrary points on a psychometric function. British Journal of Mathematical and Statistical Psychology. 2007;60:147–174. doi: 10.1348/000711006X104596.

- Ghim MM, Hodos W. Spatial contrast sensitivity of birds. Journal of Comparative Physiology A. 2006;192:523–534. doi: 10.1007/s00359-005-0090-5.

- Ginsburg AP. A new contrast sensitivity vision test chart. American Journal of Optometry and Physiological Optics. 1984;61:403–407. doi: 10.1097/00006324-198406000-00011.

- Ginsburg AP. Contact lenses. The CLAO guide to basic science and clinical practice. Vol. 56. New York: Grune & Stratton; 1987. The evaluation of contact lenses and refractive surgery using contrast sensitivity; pp. 1–19.

- Ginsburg AP. Next generation contrast sensitivity testing. In: Rosenthal BP, Cole RG, editors. Functional assessment of low vision. St. Louis, MO: Mosby; 1996. pp. 77–88.

- Ginsburg AP. Contrast sensitivity and functional vision. International Ophthalmology Clinics. 2003;43:5–15. doi: 10.1097/00004397-200343020-00004.

- Ginsburg AP. Contrast sensitivity: Determining the visual quality and function of cataract, intraocular lenses and refractive surgery. Current Opinion in Ophthalmology. 2006;17:19–26. doi: 10.1097/01.icu.0000192520.48411.fa.

- Graham NVS. Visual pattern analyzers. New York: Oxford; 1989.

- Harmening WM, Nikolay P, Orlowski J, Wagner H. Spatial contrast sensitivity and grating acuity of barn owls. Journal of Vision. 2009;9(7):13, 1–12. doi: 10.1167/9.7.13. http://journalofvision.org/9/7/13/

- Hess RF, Howell ER. The threshold contrast sensitivity function in strabismic amblyopia: Evidence for a two type classification. Vision Research. 1977;17:1049–1055. doi: 10.1016/0042-6989(77)90009-8.

- Higgins KE, Jaffe MJ, Coletta NJ, Caruso RC, de Monasterio FM. Spatial contrast sensitivity: Importance of controlling the patient’s visibility criterion. Archives of Ophthalmology. 1984;102:1035–1041. doi: 10.1001/archopht.1984.01040030837028.

- Hohberger B, Laemmer R, Adler W, Juenemann A, Horn F. Measuring contrast sensitivity in normal subjects with OPTEC 6500: Influence of age and glare. Graefe’s Archive for Clinical and Experimental Ophthalmology. 2007;245:1805–1814. doi: 10.1007/s00417-007-0662-x.

- Hot A, Dul MW, Swanson WH. Development and evaluation of a contrast sensitivity perimetry test for patients with glaucoma. Investigative Ophthalmology & Visual Science. 2008;49:3049–3057. doi: 10.1167/iovs.07-1205.

- Hou F, Huang C-B, Lesmes LA, Feng L-X, Zhou Y-F, Lu Z-L. (unpublished data). Clinical application of the quick CSF: Efficient characterization and classification of contrast sensitivity functions in amblyopia. doi: 10.1167/iovs.10-5468.

- Huang CB, Tao LM, Zhou YF, Lu ZL. Treated amblyopes remain deficient in spatial vision: A contrast sensitivity and external noise study. Vision Research. 2007;47:22–34. doi: 10.1016/j.visres.2006.09.015.

- Ismail G, Whitaker D. Early detection of changes in visual function in diabetes mellitus. Ophthalmic and Physiological Optics. 1998;18:3–12.

- Jindra LF, Zemon V. Contrast sensitivity testing: A more complete assessment of vision. Journal of Cataract & Refractive Surgery. 1989;15:141–148. doi: 10.1016/s0886-3350(89)80002-1.

- Kaernbach C. Adaptive threshold estimation with unforced-choice tasks. Perception & Psychophysics. 2001;63:1377–1388. doi: 10.3758/bf03194549.

- Keller J, Strasburger H, Cerutti DT, Sabel BA. Assessing spatial vision—Automated measurement of the contrast-sensitivity function in the hooded rat. Journal of Neuroscience Methods. 2000;97:103–110. doi: 10.1016/s0165-0270(00)00173-4.

- Kelley MJ, Rose AY, Keller KE, Hessle H, Samples JR, Acott TS. Stem cells in the trabecular meshwork: Present and future promises. Experimental Eye Research. 2008;88:747–751. doi: 10.1016/j.exer.2008.10.024.

- Kelly DH. Motion and vision. II. Stabilized spatio-temporal threshold surface. Journal of the Optical Society of America. 1979;69:1340–1349. doi: 10.1364/josa.69.001340.

- King-Smith PE, Grigsby SS, Vingrys AJ, Benes SC, Supowit A. Efficient and unbiased modifications of the QUEST threshold method: Theory, simulations, experimental evaluation and practical implementation. Vision Research. 1994;34:885–912. doi: 10.1016/0042-6989(94)90039-6.

- King-Smith PE, Rose D. Principles of an adaptive method for measuring the slope of the psychometric function. Vision Research. 1997;37:1595–1604. doi: 10.1016/s0042-6989(96)00310-0.

- Kiorpes L, Kiper DC, O’Keefe LP, Cavanaugh JR, Movshon JA. Neuronal correlates of amblyopia in the visual cortex of macaque monkeys with experimental strabismus and anisometropia. Journal of Neuroscience. 1998;18:6411–6424. doi: 10.1523/JNEUROSCI.18-16-06411.1998.

- Kiorpes L, Tang C, Movshon JA. Factors limiting contrast sensitivity in experimentally amblyopic macaque monkeys. Vision Research. 1999;39:4152–4160. doi: 10.1016/s0042-6989(99)00130-3.

- Klein SA. Measuring, estimating, and understanding the psychometric function: A commentary. Perception & Psychophysics. 2001;63:1421–1455. doi: 10.3758/bf03194552.

- Koenderink JJ, Bouman MA, Buenodemesquita AE, Slappendel S. Perimetry of contrast detection thresholds of moving spatial sine wave patterns. I. Near peripheral visual field (eccentricity 0 degrees to 8 degrees). Journal of the Optical Society of America. 1978a;68:845–849. doi: 10.1364/josa.68.000845.

- Koenderink JJ, Bouman MA, Buenodemesquita AE, Slappendel S. Perimetry of contrast detection thresholds of moving spatial sine wave patterns. II. Far peripheral visual field (eccentricity 0 degrees to 50 degrees). Journal of the Optical Society of America. 1978b;68:850–854. doi: 10.1364/josa.68.000850.

- Koenderink JJ, Bouman MA, Buenodemesquita AE, Slappendel S. Perimetry of contrast detection thresholds of moving spatial sine wave patterns. IV. Influence of mean retinal illuminance. Journal of the Optical Society of America. 1978c;68:860–865. doi: 10.1364/josa.68.000860.

- Kontsevich LL, Tyler CW. Bayesian adaptive estimation of psychometric slope and threshold. Vision Research. 1999;39:2729–2737. doi: 10.1016/s0042-6989(98)00285-5.

- Kujala JV, Lukka TJ. Bayesian adaptive estimation: The next dimension. Journal of Mathematical Psychology. 2006;50:369–389.

- Kujala JV, Richardson U, Lyytinen H. A Bayesian-optimal principle for learner-friendly adaptation in learning games. Journal of Mathematical Psychology. in press.

- Kuss M, Jäkel F, Wichmann FA. Bayesian inference for psychometric functions. Journal of Vision. 2005;5(5):8, 478–492. doi: 10.1167/5.5.8. http://journalofvision.org/5/5/8/

- Leek MR. Adaptive procedures in psychophysical research. Perception & Psychophysics. 2001;63:1279–1292. doi: 10.3758/bf03194543.

- Lesmes LA, Gepshtein S, Lu Z-L, Albright T. Rapid estimation of the spatiotemporal contrast sensitivity surface [Abstract]. Journal of Vision. 2009;9(8):696–696a. http://journalofvision.org/9/8/696/

- Lesmes LA, Jeon ST, Lu ZL, Dosher BA. Bayesian adaptive estimation of threshold versus contrast external noise functions: The quick TvC method. Vision Research. 2006;46:3160–3176. doi: 10.1016/j.visres.2006.04.022.

- Lesmes LA, Lu Z-L, Tran NT, Dosher BA, Albright TD. An adaptive method for estimating criterion sensitivity (d′) levels in yes/no tasks [Abstract]. Journal of Vision. 2006;6(6):1097–1097a. http://journalofvision.org/6/6/1097/

- Levi DM, Li RW. Improving the performance of the amblyopic visual system. Philosophical Transactions of the Royal Society B: Biological Sciences. 2009;364:399–407. doi: 10.1098/rstb.2008.0203.

- Levitt H. Transformed up-down methods in psychoacoustics. Journal of the Acoustical Society of America. 1971;49:467–477.

- Lewi J. Sequential optimal design of neurophysiology experiments. Doctoral dissertation; Georgia Institute of Technology; Atlanta. 2009.

- Lewi J, Butera R, Paninski L. Real-time adaptive information-theoretic optimization of neurophysiology experiments. Advances in Neural Information Processing Systems. 2007;19:857.

- Lewi J, Butera R, Paninski L. Sequential optimal design of neurophysiology experiments. Neural Computation. 2009;21:619–687. doi: 10.1162/neco.2008.08-07-594.

- Li R, Polat U, Makous W, Bavelier D. Enhancing the contrast sensitivity function through action video game training. Nature Neuroscience. 2009;12:549–551. doi: 10.1038/nn.2296.

- Li RW, Young KG, Hoenig P, Levi DM. Perceptual learning improves visual performance in juvenile amblyopia. Investigative Ophthalmology & Visual Science. 2005;46:3161–3168. doi: 10.1167/iovs.05-0286.