Abstract

The past decade has evidenced the increased prevalence of irregularly spaced longitudinal data in social sciences. Clearly lacking, however, are modeling tools that allow researchers to fit dynamic models to irregularly spaced data, particularly data that show nonlinearity and heterogeneity in dynamical structures. We consider the issue of fitting multivariate nonlinear differential equation models with random effects and unknown initial conditions to irregularly spaced data. A stochastic approximation expectation–maximization algorithm is proposed and its performance is evaluated using a benchmark nonlinear dynamical systems model, namely, the Van der Pol oscillator equations. The empirical utility of the proposed technique is illustrated using a set of 24-h ambulatory cardiovascular data from 168 men and women. Pertinent methodological challenges and unresolved issues are discussed.

Keywords: differential equation, dynamic, nonlinear, stochastic EM, longitudinal

From difference scores (see e.g., Bereiter, 1963; Cronbach & Furby, 1970; Harris, 1963) to confirmatory models grounded on differential/difference equations, the study of change remains a central question of interest to researchers in the social and behavioral sciences. In the realm of nonlinear dynamic systems analysis, the last decade has evidenced a gradual shift from heavy reliance on geometrically based exploratory nonlinear analytic techniques (see e.g., Kaplan & Glass, 1995; Longstaff & Heath, 1999) to confirmatory approaches of studying nonlinear dynamic processes via model fitting (Molenaar & Newell, 2003; Ramsay, Hooker, Campbell, & Cao, 2007).

Differential equation models provide a direct representation of change processes while allowing the data to be irregularly spaced. Such data have become increasingly prevalent in studies aimed at collecting experience sampling or ecological momentary assessment data (Stone & Shiffman, 1994). Most experience sampling studies require respondents to provide assessments on relevant constructs over a specified period at specific times of the day (interval-contingent), at random times when prompted by an experimenter-invoked signal (signal-contingent), or as triggered by an event in everyday life (event-contingent; Bolger, Davis, & Rafaeli, 2003). Signal-and event-contingent data are typically irregularly spaced by nature of the study designs.

Currently, there has been a scarcity of tools for fitting models to irregularly spaced data in the psychometric literature. Standard growth curve and the related mixed effects models (Browne & du Toit, 1991; Mcardle & Hamagami, 2003; Meredith & Tisak, 1990) provide a straightforward way of handling irregularly spaced intensive repeated measures data when time appears explicitly in the fitted functions. When that is not the case, this approach cannot be used without further modifications. Differential equation models, in contrast, can be used to extend conventional growth curve models in a number of ways. First, most growth curve (e.g., linear, Gompertz and exponential, among many others) models can be viewed as the integral solutions of various differential equations. Thus, growth curve models can be conceived as special cases of differential equation models. Second, differential equation models, when compared to standard growth curve expressions, focus explicitly on representing the mechanisms of change: that is, changes in the constructs of interest appear explicitly on the left-hand-side of the equations. In this way, differential equations have greater flexibility in capturing the interdependencies among multiple change processes, especially when the fitted functions do not depend explicitly on time.

Despite the proliferation of work on ODE modeling in the econometric, engineering, and statistical literature (Ait-Sahalia, 2008; Jones, 1984; Mbalawata, Särkkä, & Haario, 2013; Beskos, Papaspiliopoulos, Roberts, & Fearnhead, 2006; Beskos, Papaspiliopoulos, & Roberts, 2009; Ramsay et al., 2007; Särkkä, 2013), much of the progress in ODE modeling in the field of psychometrics has been limited to linear ODE modeling. Notable advances include efforts to extend earlier approaches of fitting the nonlinear integral solutions of linear ordinary differential equations (ODEs) as linear structural equation models without the necessary constraints (Arminger, 1986) to alternative state-space approaches (Jones, 1984, 1993), SEM implementation of state-space approaches (Singer, 1992, 2010, 2012; Oud & Jansen, 2000; Oud & Singer, 2010), two-stage derivative estimation approaches (Boker & Graham, 1998; Boker & Nesselroade, 2002), as well as comparisons between the two-stage and other single-stage approaches (Oud, 2007). Still, these approaches were designed primarily to fit longitudinal linear ODEs and stochastic differential equations (SDEs). Generalizing standard SEM procedures (e.g., product indicator techniques or related approaches involving nonlinear constraints; Kenny & Judd, 1984; Klein & Muthén, 2007; Marsh, Wen, & Hau, 2004) to nonlinear dynamic models (e.g., nonlinear ODEs) is far from simple. Implementing such constraints in the simpler linear growth curve models has proven to be difficult (Duncan, Duncan, Strycker, Li, & Alpert, 1999; Li, Duncan, & Acock, 2000; Wen, Marsh, & Hau, 2002), not to mention other less widely tested nonlinear dynamic models.

Despite the difficulties involved, nonlinear differential equation models have distinct merits compared to linear models that make the associated efforts worth pursuing. For instance, nonlinear differential equation models are capable of predicting ongoing oscillations between different locally stable behaviors (Hale & Koçak, 1991). In this vein, Boker and Graham (1998) used a cubic oscillator model to represent adolescent substance abuse behavior as having two attractor states: substance use and non-use. Perhaps of particular interest to social and behavioral scientists is nonlinear differential equation models’ flexibility in representing the dependencies of a change process on—or in other words, how the process is moderated by—other key variables in the system. Examples of applications along this line include the use of a modified Van der Pol oscillator equation to represent human circadian rhythms (Brown & Luithardt, 1999; Brown, Luithardt, & Czeisler, 2000), and variations of the predator–prey model to represent dyadic interaction (Chow, Ferrer, & Nesselroade, 2007), human cerebral development (Thatcher, 1998), and cognitive aging (Chow & Nesselroade, 2004). Thus, differential equation models share the merits of their discrete-time counterparts (such as state-space models and the SEM-based latent difference approach; Durbin & Koopman, 2001; McArdle & Hamagami, 2001) in providing a platform to represent change mechanisms in concrete terms, while offering more flexibility in accommodating irregular time intervals.

In the present article, we present a frequentist approach to fitting nonlinear ODE models with random effects and unknown initial conditions by means of a stochastic approximation expectation–maximization (SAEM) algorithm. The proposed approach extends previous work on fitting ODEs in the statistical, biostatistical, and psychometric literature in several ways. First, contrary to other existing linear approaches (Boker & Nesselroade, 2002; Oud & Jansen, 2000; Jones, 1984), we consider the problem of fitting nonlinear ODEs to irregularly spaced data. The proposed approach can also be used with linear ODEs. Second, unlike other applications using a fully Bayesian approach (e.g., Carlin, Gelfand, & Smith, 1992; Chow, Tang, Yuan, Song, & Zhu, 2011; Durbin & Koopman, 2001; Geweke & Tanizaki, 2001;Mbalawata et al., 2013; Särkkä, 2013), we combine a Markov chain Monte Carlo (MCMC) procedure with the expectation–maximization (EM) algorithm to yield maximum likelihood (ML) point and standard error estimates of the time-invariant modeling parameters (as in Kuhn & Laviellem, 2005; Donnet & Samson, 2007). Considerable modeling flexibility is gained due to the ease with which MCMC procedures handle more complex models. Yet, we can still adopt familiar Frequentist-based statistics and approaches (e.g., confidence intervals) for inferential purposes. Third, we represent the structural parameters (i.e., parameters that govern the dynamics of a system) as composed of a series of fixed and random effects—a modeling feature not considered in other studies, including studies that utilize other Frequentist and/or simulation-based approximation approaches to fit ODEs or SDEs (e.g., Beskos et al., 2009, 2006; Chow et al., 2007; Ramsay et al., 2007; Gordon, Salmond, & Smith, 1993; Hürzeler & Künsch, 1998; Kitagawa, 1998; Mbalawata et al., 2013; Singer, 1995, 2002, 2007; Tanizaki, 1996). Finally, while the performance of the SAEM algorithm in handling mixed effects ODEs featuring manifest variables only has been evaluated elsewhere (Donnet & Samson, 2007; Kuhn & Laviellem, 2005), these researchers’ prior work did not explicitly consider the performance of the SAEM in situations where the initial conditions are unknown and differ between subjects. Thus, as distinct from the work of Kuhn and Laviellem (2005) and Donnet and Samson (2007), the proposed modeling framework contributes uniquely to the literature on ODE modeling by (1) including a factor analytic model as a measurement model to enable modeling at the latent variable level, and (2) allowing the means and interindividual differences in the initial conditions of latent variables to be estimated as modeling parameters. Additionally, a simulation study is conducted to evaluate the performance of the estimation procedures under different initial condition specifications, including scenarios where the initial conditions are misspecified and interindividual differences in initial conditions are ignored.

1. Nonlinear Ordinary Differential Equation (ODE) Models with Random Effects and Possibly Unknown Initial Conditions

We consider the problem of fitting linear and nonlinear ODEs with random effects in the structural parameters under situations in which the initial conditions of the ODEs may be unknown. Letting Dxi (t) denote the differential operator applied on xi (t), i.e., Dxi (t) = dxi (t)/dt, the nonlinear ODEs of interest take on the general form of

| (1) |

where i indexes person and t indexes time, f(․) is a vector of (possibly nonlinear) drift functions; xi (t) is an nx × 1 vector of latent variables of interest at time t; and Dxi (t) denotes the corresponding nx × 1 vector of first derivatives. Note that xi (t) may include latent derivative variables needed to define higher-order ODEs. θf,i represents a q × 1 vector of person-specific structural parameters of interest that affect the dynamic functions in Eq. (1), expressed as a function of β, a pβ × 1 vector of fixed effects parameters, and bi, a d × 1 vector of random effect; Hi and Zi are q × pβ and q × d design matrices typically seen in the linear mixed effects framework. We further assume that bi follows a multivariate normal distribution as bi ~ N(0, Σb), where θb contains all the unknown parameters in Σb.

The initial conditions for the ODEs are denoted as xi (ti, 1), and are specified to be functions of θf,i. In this way, fixed effects parameters governing the initial conditions are estimated as part of β, while individual-specific deviations in initial conditions are captured by the random effects in bi. Our illustrative application provides a concrete example of one possible way of representing unknown initial conditions across multiple subjects using this formulation.

The latent variables in xi (ti,j) at discrete time point ti,j are indicated by an ny × 1 vector of manifest observations assumed to be measured at individual-specific and possibly irregularly spaced time intervals, at t = ti,j, j = 1, …, T, with Δi,j = ti,j+1 − ti,j. The vector of manifest observations is denoted as yi (ti,j), with

| (2) |

where μ is an ny × 1 vector of intercepts, Λ is an ny × nx factor loading matrix, and εi (ti,j) denotes multivariate normally distributed measurement error processes such that E[εi (ti,j)] = 0, E[εi (ti,j)εi (ti,j)T] = Σε, εi (ti,j) and xi (ti,j) are independent, and εi (ti,j) and εi (ti,k) are independent for ti,j ≠ ti,k, with a diagonal structure for Cov(εi (ti,j)) = Σε. We also assume generally that when multiple indicators are used to identify a latent factor, at least one factor loading is fixed for identification purposes.

In continuous time, given the initial conditions of the system at any arbitrary time t0, one may define a t-advance mapping or evolution function g(t, xi (t0)) that moves an initial state to a later state at time t. The evolution function, g(t, xi (t0)), can be used to map out the trajectories of all the latent variables in xi (t).Once these values are known, the solution to the hypothesized ODE is also known. Thus, one can obtain the solution of the ODE through repeated application of the evolution function, e.g., first from time t0 to s, and subsequently to t, as g(t+s, xi (t0)) = g(t, g(s, xi (t0))). Thus, if the vector of true latent differences, defined as Δxi (ti,j) = xi (ti,j) − xi (ti,j−1), are known at at a series of discrete time points, ti,j, j = 1, …, T, one can obtain a discrete t-advance mapping as

| (3) |

With few exceptions, most nonlinear ODEs do not have analytic solutions. One common approach is to use numerical methods such as Euler’s or Runge–Kutta methods to obtain approximate t-advance mapping at discrete intervals. In other words, we approximate the vector of true latent differences, Δxi (ti,j+1) = xi (ti,j+1) − xi (ti,j) using , namely, numerical interpolations of the latent changes at the next time point based on the hypothesized ODE to yield numerical solution, x̃i (ti,j), as

| (4) |

where the numerical latent differences, , are typically obtained at an equally spaced interval, Δ*, whose magnitude is considerably smaller than the observed measurement intervals,Δi,j, to improve the accuracy of the solutions.

We define ti,j* = ti,j−1+kΔ* as a time point at which observed measurements might not be available but we are interested in “imputing” the values of the latent changes at this point, , to improve estimation accuracy. A variety of numerical solvers can be used to obtain . One such example is the second-order Heun’s method, which is implemented as

| (5) |

Conditional on bi, if the initial condition variables in xi (ti,1) are known or can be estimated, then x̃i (ti,j) is also known. This reflects the deterministic nature of ODEs. The fitted model thus becomes

| (6) |

where the notation denotes approximately distributed as, used to highlight the fact that the numerical solutions given by x̃i (ti,j) contain truncation errors, namely, errors stemming from using the ODE solver to numerically approximate the true solutions of the ODE.1 It is important to emphasize that the true latent variables, xi (ti,j), as well as the associated approximation terms, and x̃i (ti,j), are all functions of θf,i. Here, we suppress the notational dependency to ease presentation.

Our interest is in estimating the parameters in via the SAEM algorithm (Zhu & Gu, 2007). Here, θμ is a pμ × 1 (where pμ ≤ ny) vector of freed parameters in μ, θΛ is a pΛ × 1 vector containing all unknown factor loadings in Λ, θε is a pε × 1 vector containing all the unknown parameters in Σε, and θb is a pb × 1 vector containing all the unknown parameters in Σb.

1.1. Stochastic Approximation Expectation–Maximization (SAEM) Algorithm

Prior to describing the SAEM algorithm, we first introduce some key notations. Let Yi (ti,j) = {yi (ti,1), …, yi (ti,j))},Yi = {yi (ti, 1), …, yi (ti,T)}, and Y = {Y1, …, Yn} is the observed data array for all n participants; let X̃i (ti,j) = {x̃i (ti,1), …, x̃i (ti,j)}, X̃i = {x̃i (ti,1), …, x̃i (ti,T)}, and X̃ = {X̃1, …, X̃n} is the array of numerical solutions of the latent variables for all n participants. Further, we denote the augmented complete data array as Z = {Y, b}. In standard Expectation–Maximization (EM) procedures (Dempster, Laird, & Rubin, 1977), maximum likelihood estimates (MLEs) are obtained by cycling iteratively through an expectation (E)-step and a maximization (M)-step. The E-step typically involves analytically computing terms that appear in a pseudo-loglikelihood function given by the conditional expectation of the complete-data loglikelihood function with respect to the distribution p(b|Y; θ). The M-step involves updating the parameter estimates using analytic formulas that serve to maximize the pseudo-loglikelihood function. SAEM differs from conventional E–M algorithms in the use of a stochastic approximation procedure in the E-step, coupled with a gradient-type updating procedure (e.g., the Newton–Raphson and Gauss–Newton algorithms; Ortega, 1990) in the M-step. That is, while the required expectations are analytically intractable, the E-step is made possible by replacing analytic expectations with summary statistics computed using samples drawn from the conditional distribution, p(b|Y; θ), by means of Markov chain Monte Carlo (MCMC) procedures. A Newton–Raphson algorithm is then used to obtain MLEs of θ in the maximization (M)-step during which a sequence of gain constants, γ, is used to control the degree to which updates of parameter estimates are weighted in subsequent iterations.

In the present context, the complete-data probability density function is given by

However, due to the deterministic nature of the ODE, p(X̃|b; θ) is known conditional on b.2 Thus, the integration over X̃ vanishes, and the complete-data loglikelihood function reduces to

| (7) |

where the associated score vector and information matrix are denoted as sZ(θ; Z) and IZ(θ; Z). Detailed analytical forms of sZ (θ; Z) and IZ (θ; Z) are included in Appendix 1.

To use any gradient-type algorithm such as the Newton–Raphson (Ortega, 1990) requires the score vector and the information (or negative Hessian) matrix of the loglikelihood function, denoted as sY (θ; Y) and IY (θ; Y), respectively. They can be obtained as (Dempster et al., 1977; Louis, 1982)

| (8) |

where E(․|Y, θ) denotes expectation taken with respect to the conditional distribution of p(b|Y; θ). Once the score function and information matrix, sY (θ; Y) and IY (θ; Y), are available, one can then obtain updated estimates of the parameter vector, θ, at iteration m using any gradient-type algorithm, such as the Newton–Raphson as (Gu & Zhu, 2001; Zhu & Gu, 2007; Ortega, 1990)

| (9) |

While the classical EM algorithm and the Newton–Raphson procedure shown in (9) both serve to provide iterative updates of the parameter estimates to yield ML estimates for which sY (θ; Y) = 0, the Newton–Raphson algorithm and other related gradient-type algorithms typically outperform the classical EM in terms of convergence speed (Dembo & Zeitouni, 1986; Ortega, 1990; Singer, 1995).

Based on Eq. (7) and its constituent elements shown in Appendix 1, taking the expectations of sZ(θ; Z) and IZ(θ; Z) with respect to the distribution p(b|Y; θ) requires the computation of terms such as E[x̃(ti,j)|Y; θ(m−1)], , E[x̃ (ti,j)x̃i (ti,j)T|Y; θ(m−1)], and , where W* is a function involving vectors/matrices of modeling parameters such as Λ and Σε. These terms, in turn, require integration over p(b|Y; θ). Because bi is involved in the nonlinear f (․) in the computation of x̃(ti,j), such integration is analytically formidable. In the present article, we chose to perform a variation of the EM algorithm—the SAEM algorithm—for parameter estimation purposes.

E-step with stochastic approximation

In the E-step, a MCMC technique of choice is used to simulate a sequence of random draws from the conditional distribution, p(b|Y; θ). For reasons detailed in Appendix 2, p(b|Y; θ) is non-standard, and sampling from this distribution cannot be performed directly by means of Gibbs sampling. We thus adopt the Metropolis–Hasting sampling procedure described in Appendix 2 to simulate samples of bi probabilistically from an alternative proposal distribution that does have a standard form to obtain , which is then used in the E-step to compute the summary statistics

| (10) |

where sZ(θ; Z) and IZ(θZ) are defined, respectively, in Eqs. (17) and (18) of Appendix 1. These summary statistics are then used in the M-step to update the parameter estimates in θ.

M-step

In the M-step, the goal is to obtain updated parameter estimates, θ(m), for the mth iteration using a modified Newton–Raphson procedure involving and current estimates of the information matrix, , abbreviated below as . Doing so in turn requires (see Eq. 9) current estimates of the score function, sY (θ; Y)(m), abbreviated below as , current estimates of E[IZ(θ; Z)|Y, θ], denoted as , and estimates of E[sZ(θ; Z)sZ (θ; Z)T|Y, θ], denoted as . These elements are computed as

| (11) |

where γ(m) is a gain constant that controls the degree to which new estimates are weighted at iteration m in comparison with the estimates from iteration m − 1. The gain constant is a control parameter that is modified in two stages to (1) prevent the estimation algorithm from settling too quickly into local minima in earlier iterations (stage 1; for iteration m = 1, …, K1); and (2) help speed convergence toward a final set of parameter estimates during the later iterations (stage 2; for iteration m = K1+1, …, K2). The use of a gain function is the key feature that distinguishes the SAEM from the stochastic and Monte Carlo EM procedures (Diebolt & Celeux, 1993; Lee& Song, 2003).3

The SAEM algorithm alternates between the E-step and the M-step until some predefined convergence criteria have been met (Zhu & Gu, 2007).4 An offline averaging procedure is implemented concurrently as the scoring algorithm in stage 2 of the SAEM. At the conclusion of stage 1 (i.e., at the K1th iteration), the averaging procedure is initiated with and θ̃(1) = θ(K1). These offline estimates are subsequently updated as

| (13) |

Theoretically, this averaging procedure automatically leads to an optimal convergence without estimating the information matrix (Polyak, 1990; Polyak and Juditski, 1992). Under some conditions, the offline average (θ̃(m), ) converges to θ̂MLE, ŝMLE(θ; Y)) almost surely, as K1+K2 approaches infinity (Zhu&Gu, 2007). Finally, at convergence, we use the offline average, (θ̃(K2), ), as our final estimate of (θ̂, ÎY(θ; Y)), where square roots of the diagonal elements of ÎY(θ; Y) are used as the standard error (SE) estimates of the parameters.

2. Illustrative Application

To illustrate the empirical utility of the proposed approach, we reanalyzed a set of previously published data (Carels, Blumenthal, & Sherwood, 2000; Sherwood, Steffen, Blumenthal, Kuhn, & Hinderliter, 2002) involving 172 employed men and women, aged 25–45 years, who participated in the Duke Biobehavioral Investigation of Hypertension study. The sample comprised 96 participants with normal blood pressure (BP), 41 with high normal BP, and 35 with stage 1 hypertension. Clinic systolic blood pressure that was greater than 180 mmHg or diastolic blood pressure that was greater than 100 mmHg, use of cardiovascular medications, and use of tobacco products were specified as exclusion criteria.

The AccuTracker II ABP Monitor (Suntech AccuTracker II, Raleigh, NC) was worn for approximately 24 h to measure BP and heart rate noninvasively. It was programmed to take four measurements hourly at random intervals ranging from 12 to 28 minutes apart. Participants were instructed to follow their normal schedule and to complete a diary entry indicating posture, activity, location, positive affect, and negative affect at each reading. The same procedure was implemented in the evening waking hours. Sleep was defined by diary activity ratings, which included an indication of “going to sleep.” The monitor was programmed to take only two readings hourly during sleeping hours, customized to the participants’ sleep habits.

The dynamics of individuals’ ambulatory BP and other related cardiovascular measures during a typical workday were the focus of our modeling example. In the study of 24-h ambulatory BP, BP dipping, an important prognostic indicator of cardiovascular morbidity and mortality, is derived by subtracting the mean nighttime sleep BP from the mean daytime waking BP (Sherwood et al., 2001, 2002). Consequently, the intensive BP readings collected throughout the study period (which typically exceed 50 measurements per participant) are aggregated and reduced to only mean-level information (Pickering, Shimbo, & Haas, 2006). Even in the few studies where within-subject linkages between ambulatory BP and related risk factors were explored without further data aggregation (e.g., Carels, 2000), emphasis was placed almost exclusively on examining concurrent linkages between multiple processes as opposed to lagged interdependencies among change processes. In this way, within-subject correlations between successive repeated measurements may be allowed (e.g., via Generalized Estimating Equations as in Carels, 2000) but the precise mechanisms of change (e.g., interdependencies among change processes) as well as related interindividual differences therein are not modeled explicitly. In the present analysis, we used the modified Van der Pol oscillator model to evaluate individual differences in the dynamics of diurnal cardiovascular fluctuations. In particular, we examined whether negative and positive emotion ratings throughout the day would help predict individual differences in the amplification of diurnal cardiovascular fluctuations.

Brown and colleagues (Brown & Luithardt, 1999; Brown et al., 2000) adapted the well-known Van der Pol oscillator equations to model human circadian data. In the present context, constrained by the lack of sufficient time points to capture slower circadian cycles and deviations therein, we considered a modified Van der Pol oscillator model as

| (14) |

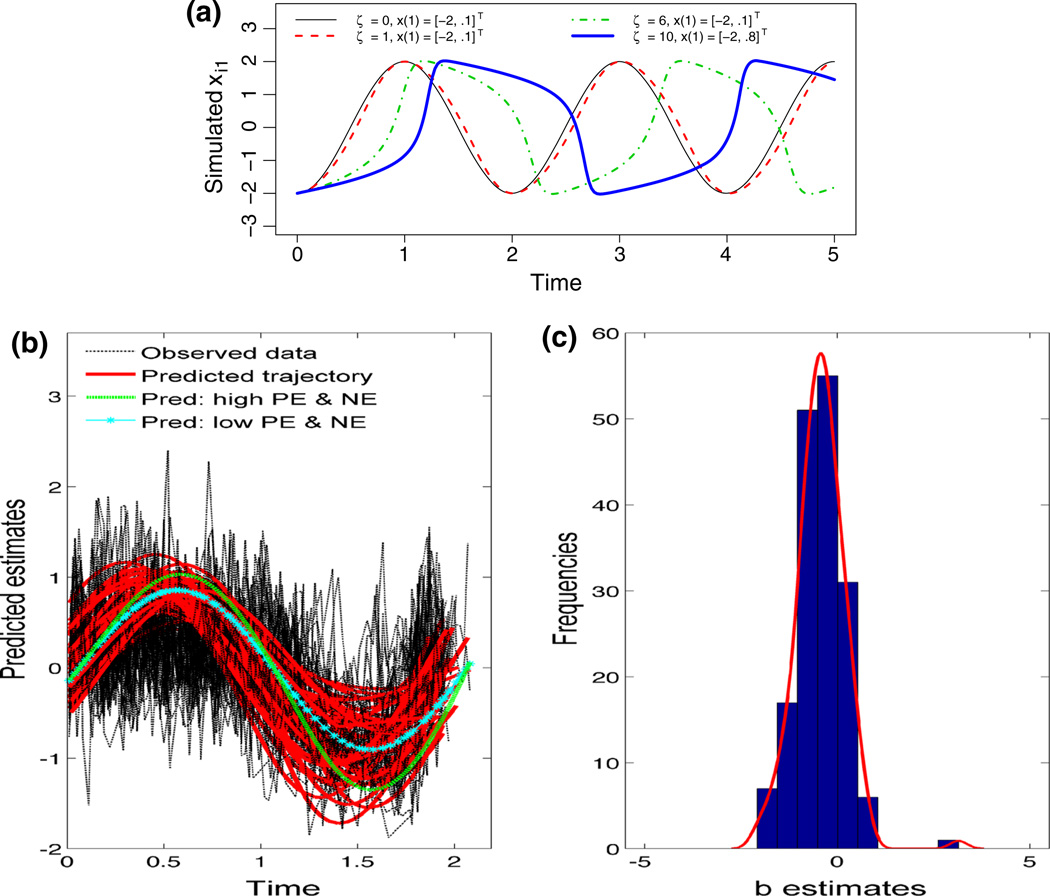

where xi1(t) represents the level of a dependent variable of interest (in our case, cardiovascular reactivity) and xi2(t) is its corresponding first derivative. In the present application, xi1(t) was indicted by three manifest variables, namely, systolic blood pressure, diastolic blood pressure, and heart rate, with μ1–μ3 as their respective intercepts. ζi is a general damping or amplification parameter that governs the oscillation amplitude of person i, and (2π/γ)2 is the squared frequency of cardiovascular reactivity in radians in the absence of damping or amplification, with γ representing the period of human circadian rhythm, fixed at 2 (i.e., corresponding to a period of 24 h) in the current context.5 The amount of damping (or amplification) is further moderated by a quadratic term in xi1(t). Formulated this way, the amplification (if ζi > 0) at small values of xi1(t) is expected to turn into massive damping at extreme values of xi1(t) due to the quadratic term, . This yields a system that is slow to rise to its peak, followed by damping (manifested as a pronounced drop in amplitude) when it hits extreme values. In contrast, if ζi is negative, this yields a system with damping dynamics at small values of xi1(t), and pronounced amplification at extreme values of xi1(t). If the initial level and rate of change of the system are extreme (i.e., far away from zero), the quadratic term, , would dominate the system’s dynamics by moderating and magnifying the system’s amplification, resulting in an explosive system—a scenario that is rare in CV dynamics. Since we expected ζi to be positive, we refer to this parameter as the amplification parameter throughout. In this illustrative example, ζi is further expressed as a function of a group intercept, ζ0, two person-specific covariates, u1i and u2i (with fixed effects parameters, ζ1 and ζ2, respectively), and bζ,i, person i’s deviation in ζi that is not accounted for by other fixed effects terms. As an example of some of the between-person heterogeneities in dynamics one may obtain from the hypothesized model, simulated trajectories of xi1(ti,j) using different values of ζ and initial conditions are shown in Figure 1a. The two trait-level covariates used in the present context to predict individual differences in the amplification parameter were (overtime) aggregate ratings of negative emotion (NE), obtained by averaging the participants’ responses over three items: stress, anger, and tense; and positive emotion (PE), obtained by averaging participants’ responses over the items happy and in control.

Figure 1.

a Simulated trajectories of xi1 (ti,j) using different values of ζ and initial conditions. The values shown in x(1) are xi1(ti,1) and xi2(ti,1), respectively. b Observed composite cardiovascular data and estimates of the latent cardiovascular reactivity obtained using the Van der Pol oscillator model. Time corresponds to time since each participant’s first measurement, as opposed to clock time. c Histogram of the random effect estimates of all participants. Observed data = composite cardiovascular measure of each participant on each occasion; Predicted trajectory = x̃i (ti,j) generated using the final θ̂ from the SAEM; Pred: high PE & NE = x̃i (ti,j) for a hypothetical individual with PE and NE that were 2 standard deviations higher than the sample average and no person-specific deviations in the amplification parameter and initial conditions; Pred: low PE & NE = x̃i (ti,j) for a hypothetical individual with PE and NE that were 2 standard deviations lower than the sample average and no person-specific deviations in the amplification parameter and initial conditions.

The present application also serves to demonstrate how the proposed modeling framework can be used to represent the unknown initial conditions at time 1, xi1(ti,1) and xi2(ti,1), as part of θf,i. The formulation adopted in (14) dictates that individual i ’s initial conditions at time 1, namely, xi1(ti,1) and xi2(ti,1), follow a multivariate normal distribution with mean vector [μx1μx2]T, and a covariance matrix composed of the lower 2 × 2 submatrix of Σb. We assume that the random effects covariance matrix conforms to the structure

| (15) |

where the person-specific deviation in ζi, bζ,i, is assumed to be uncorrelated with other person-specific deviations in initial conditions, while bx1,i and bx2,i are allowed to covary because interindividual differences in initial level and first derivative are often expected to show non-negligible associations. This particular way of structuring the initial conditions of a dynamic model as unknown parameters to be estimated has rarely been explicitly utilized in ODE modeling, but is commonly adopted in discrete-time state-space and time series models (e.g., Harvey & Souza, 1987; Chow, Ho, Hamaker, & Dolan, 2010; Du Toit & Browne, 2001), as well as growth curve-type models (Meredith & Tisak, 1990; McArdle & Hamagami, 2001). In addition, the presence of statistically significant interindividual differences in the amplification parameter and the two initial latent variables was deduced in the present context from the statistical significance of the variance parameters in Σb, namely, by means of a Wald test. A possible alternative to the Wald test will be highlighted in the Discussion section.

After removing data from individuals who contributed less than 50 readings, 168 participants were retained for model fitting purposes. Among these participants, the available measurement occasions ranged from 58 to 98 time points within each individual. Time was rescaled such that one unit of time corresponded to 12 h, with Δi,j ranging from 0.001 to 0.48. We fitted the multiple-indicator Van der Pol oscillator model to the ambulatory cardiovascular data. Several preliminary data treatment steps were performed prior to model fitting. First, we observed that substantial interindividual differences were present in the means of these indicator variables (which had to be modeled by allowing for random effects in the intercepts μ1–μ3). Because such interindividual differences were not the focus of our illustrative model, we removed these differences by subtracting each individual’s mean on each variable from the corresponding time series and used the residual scores for subsequent model fitting. Thus, the intercept parameters, while freed to be estimated as parameters in the present context, were expected to take on values that were close to zero. Second, to remove arbitrary scale differences across the three indicator variables while preserving potential interindividual differences in the amplification parameter, ζi, we standardized each individual’s time series using the group standard deviation of each indicator variable.

Third, there were substantial individual differences in when the participants took their first measurements of the day, with the first time point ranging in clock time from 7:07 am to 4.82 pm. To eliminate confounds due to individual differences in lifestyle, the participants’ dynamics were modeled in terms of time since each participant’s first measurement, as opposed to clock time. Finally, the sparse measurements at night, coupled with the dense daytime measurements, gave rise to highly irregularly spaced time intervals (with Δi,j ranging from 0.001 to 0.48). If these time intervals were used as they were, some of the larger time intervals would lead to very large approximation errors regardless of the choice of the ODE solvers, thereby jeopardizing the solvers’ numerical stability; the smallest time intervals were so much smaller by comparison that using them directly in the ODE solver would greatly increase computational costs. We adopted some strategies to strike a balance between numerical stability/modeling accuracy and computational time. That is, to improve the numerical stability of the ODE solver, missing data were inserted at the interval of Δi,j = 0.01 to avoid the need to interpolate over large time intervals. Additionally, we aggregated data that were too densely measured to yield a minimum Δi,j of 0.01. This essentially yielded a set of equally spaced data for model fitting purposes. We note that the proposed estimation framework can, in principle, handle irregularly spaced and person-specific Δi,j. While the first step is needed to improve the numerical stability of the estimation procedures, the latter step is not needed and was implemented simply to reduce computational costs. In practice, the interpolation intervals used in deriving numerical ODE solutions are almost always smaller than the crudest empirically observed measurement intervals. If computational time is not a constraint, then a smaller interpolation interval can help improve numerical accuracy under most circumstances. However, depending on the dynamics of the system, it may not be computationally efficient to always set the interpolation interval to the smallest possible time step. Here, our simulation study showed that interpolating with Δi,j = 0.01 as the smallest time step in the presence of irregularly spaced time intervals yielded reasonable estimates for the model considered in the present article.

A plot of the composite scores obtained by averaging the participants’ rescaled residual scores on the three indicator variables is shown in Figure 1b. Relatively clear diurnal trends can be seen from the observed scores, with the participants’ cardiovascular data rising to their peaks and staying at relatively high levels throughout the 12 h (i.e., from time = 0 to about 1) after their first measurements. Declines in cardiovascular levels became evident after t = 1.2, with the lowest “dipping” occurring at approximately t = 1.5 (corresponding to approximately 18 h after the first measurements).

Parameter estimates obtained from model fitting are shown in Table 1, and the predicted trajectories, x̃i (ti,j), generated using the final estimates θ̂ from the SAEM, are plotted in Figure 1b. All parameters, except for the interindividual variance in initial condition for the first derivative, , were significantly different from zero. This indicates that substantive interindividual differences were only present in the initial cardiovascular level at the first time point, but not in its first derivative. The fixed effects associated with PE and NE were both positive and statistically different from 0. The sign of these fixed effects indicated that overall, heightened PE, as well as NE, was found to increase the amplification magnitude of an individual’s diurnal cardiovascular oscillations. Such oscillations, characterized by a slow buildup followed by a sudden discharge to relax the stress accumulated during the buildup (Strogatz, 1994, p. 212), appeared to be amplified by emotions of high intensity, regardless of valence. Thus, an individual who experienced heightened PE as well as NE over the course of the study period would show greater build up as well as discharge compared to individuals who did not show high PE and/or NE during the same period. For illustrative purposes, we plotted the trajectories of a hypothetical individual with high PE and NE (defined as 2 standard deviations above the sample average) and low PE and NE (defined as 2 standard deviations below the sample average) in Figure 1b. The fixed effect coefficient for NE was slightly smaller in standardized value compared to that for PE, which is in contrast to commonly held beliefs regarding the close linkages between NE and cardiovascular health. This may be related, however, to differences in the roles of the two emotions in affecting the buildup vs. the discharge phases of cardiovascular dynamics. For instance, while heightened NE may lead to greater buildup of cardivascular activities in the daytime, it may also be associated with attenuated BP nighttime dipping. Such reversal in damping effects is not captured by the fitted version of the Van der Pol oscillator. A possible modification is to incorporate regime-switching (Chow, Grimm, Guillaume, Dolan, & McArdle, 2013; Chow & Zhang, 2013) or multiphase (Cudeck & Klebe, 2002) extensions wherein the amplification parameter is allowed to show phase-dependent relationships on the covariates.

Table 1.

Parameter estimates from fitting the modified Van der Pol Oscillator Model to empirical data.

| Parameters | Estimates (SE) | |

|---|---|---|

| ζ0 | 0.49 (0.01) | |

| ζ1 for NE | 0.08 (0.03) | |

| ζ2 for PE | 0.10 (0.02) | |

| μx1 | −0.17 (0.004) | |

| μx2 | 2.55 (0.002) | |

| μ1 | −0.11 (0.01) | |

| μ2 | −0.04 (0.01) | |

| μ3 | −0.04 (0.01) | |

| λ21 | 0.37 (0.01) | |

| λ31 | 0.44 (0.01) | |

| 0.63 (0.01) | ||

| 0.45 (0.01) | ||

| 0.476 (0.01) | ||

| 0.24 (0.02) | ||

| 0.28 (0.04) | ||

| 0.19 (0.10) | ||

| σbx1,x2 | 0.19 (0.03) |

Several other observations can be noted from the modeling results. First, substantial interindividual differences in cardiovascular amplification remained after the effects of the two covariates had been accounted for. Such differences gave rise to further deviations in the amplitude of diurnal fluctuations (see trajectories of the participants’ x̃i (ti,j) in Figure 1b, generated using the participants’ own random effect estimates as shown in Figure 1c). Second, despite the use of residual scores for model fitting purposes, the three intercept parameters were estimated to be negative and significantly different from zero. Third, the factor loadings for the second and third indicators, namely, diastolic BP and heart rate, were low compared to the loading for systolic BP, which was fixed at 1.0 for identification purposes. These latter findings suggest some possible inadequacies of the multivariate Van der Pol oscillator model in capturing the dynamics of the observed data, particularly differences in the diurnal dynamics of the three indicators.

Given the short time series lengths, the relatively small sample size, and other data-related constraints, the results reported here have to be interpreted with caution. For instance, the data collected barely spanned one complete cycle. Thus, there were insufficient time points (and importantly, number of replications in cycle) to clearly distinguish the nature of the oscillations and amplification/damping evidenced by the system over time. In particular, relatively few time points were available during sleeping hours—the time during which the heavy damping as predicted by the Van der Pol oscillator model was supposed to unfold. Nevertheless, our illustration demonstrated the potential promises of the proposed modeling approach and the ways in which future studies of diurnal cardiovascular patterns may be adapted to effectively utilize this approach.

3. Simulation Study

The performance of the SAEM algorithm in handling dynamic models featuring manifest variables only has been evaluated elsewhere (Donnet & Samson, 2007). The novel contributions of this article lie in presenting an alternative formulation that explicitly captures the dynamics of a system and interindividual differences in initial conditions at the latent level. We conducted a simulation study to specifically assess the performance of the SAEM with respect to these novel features. Unlike previous studies that assessed the performance of ODE modeling techniques using small and equally spaced time intervals (e.g., with Δi,j = 0.001 for all i and j), we used person-specific, irregularly spaced time intervals that mirror those observed in empirical studies.

We used a fourth-order Runge Kutta solver to generate the true latent differences at the population level, while the second-order Heun’s method summarized in Eq. (5) was used for model fitting purposes. Three manifest indicators were used to identify xi1(t), with Σε = 0.5I3. The first factor loading was set to unity for identification purposes. We set the period of oscillation, γ, to 0.8 (fixed and not estimated), ζ0 = 3, ζ1 = 0.5, ζ2 = 0.5, λ21 = 0.7, λ31 = 1.2, and μ1 = μ2 = μ3 = 0. The covariates u1i and u2i were both simulated from a uniform distribution over the interval [0, 5].

Two sample size configurations were considered: (1) n = 200 and T = 300, with Δi,j ranging between 0.005 and 0.07, and (2) n = 200 and T = 150, with Δ i,j ranging between 0.006 and 0.161. We also considered two variations of true initial conditions. In the first condition, the initial conditions at time ti,1 were set to the constant values, μx1 = μx2 = 1.0 for all individuals, with the true Σb specified to be

This condition is denoted as the condition with fixed initial conditions.

For the second condition (denoted as the condition with random initial conditions), we considered initial conditions that conformed to a multivariate normal distribution as follows. We assumed that the process conformed to the hypothesized ODE model both prior to, and after, the first available measurements. To implement this scenario, we began simulating data using the hypothesized ODE fifty time points prior to the first retained measurement occasion, starting at ti,1 with μx1 = μx2 = 1.0 and

| (16) |

Then, at j = 51, we centered each individual’s latent variable scores at the 51st time point using the across-individual means (thus resetting μx1 and μx2 to zero) and retained data from the 51st time point and beyond for model fitting purposes. Given the deterministic nature of the Van der Pol model, the variances and covariances among the true bi estimates in the retained data should still mirror the values shown in (16) at the population level. An alternative way of generating data with multivariate normally distributed initial conditions is to simply draw values of xi1(ti,1) and xi2(ti,1) from a multivariate normal distribution with mean vector [μx1μx2]′ and an arbitrary choice of Σb.However, doing so dictates that the dynamics of the ODE system at time ti,1 need not follow the dynamics of the system at other remaining time points. We adopted our specification to enforce the assumption of (overtime) homogeneity in dynamics.

Data generated using the two true initial conditions (fixed vs. random) were matched with two fitted initial condition specifications, namely, one where μx1 and μx2 were fixed at the constant value of 1.0 (i.e., with fixed initial conditions), and another one where μx1, μx2, , σbx1,x2, and were all estimated as modeling parameters (i.e., with random initial conditions). This yielded a total of four true–fitted initial condition specifications, with fixed–fixed, fixed–random, random–fixed, and random–random initial conditions. Among the conditions with fixed true initial condition specification, the fixed–fixed configuration was expected to yield slightly better performance (e.g., with fewer numerical problems) than the fixed–random condition, which is also able to capture the fixed initial conditions as a special case of our proposed modeling framework.

Among the conditions with random true initial condition specification, the random–random configuration was expected to yield substantially better performance than the random–fixed configuration in which μx1 and μx2 (which had a true value of 0) were fixed at the incorrect constant value of 1.0, with no interindividual differences therein. Note that this configuration is worth considering because this is a strategy often adopted by uninformed users of ODE solvers who, in the absence of knowledge concerning the true initial conditions, resort to fixing the initial conditions at arbitrary constant values. Results across the different true/fitted initial condition specifications also help provide insights into the sensitivity of modeling results to misspecification in the true initial conditions.

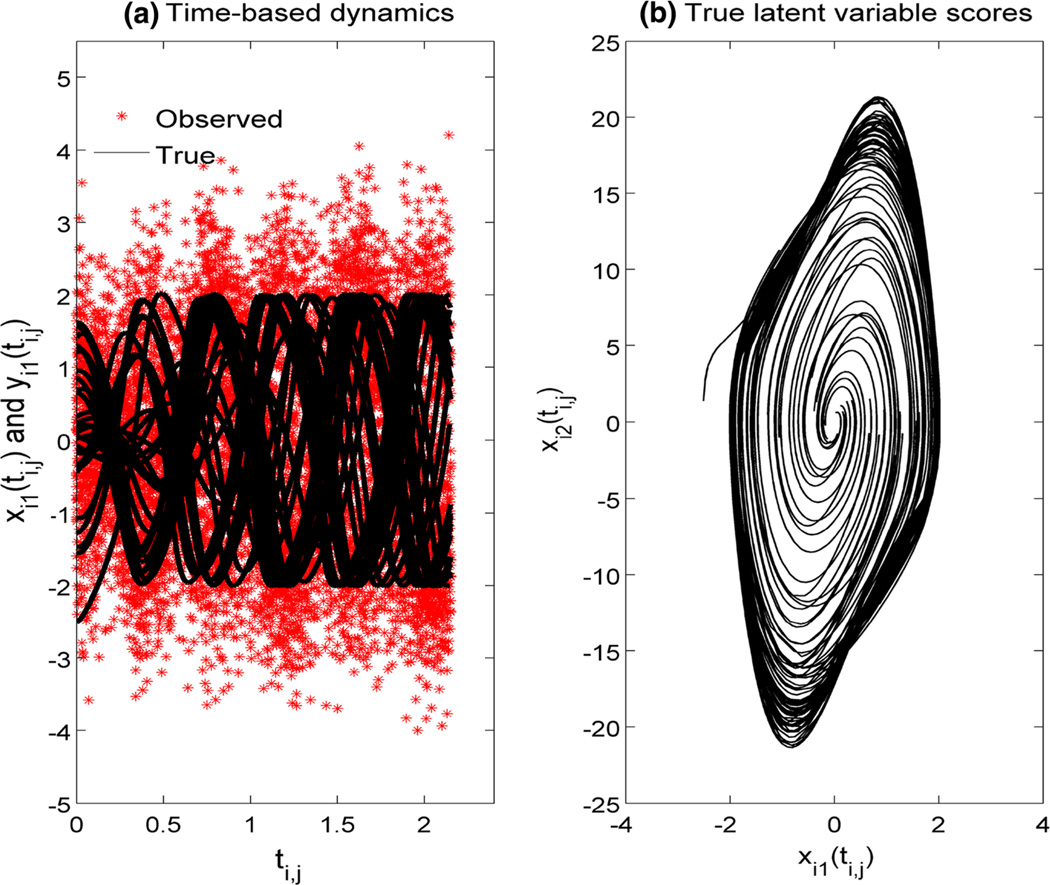

Simulated data from 50 randomly selected subjects are plotted in Figures 2a, b. In this particular parameter range, the Van der Pol oscillator model is expected to yield limit cycle behavior (Strogatz, 1994), or in other words, ongoing, isolated oscillations that either attract or repel neighboring trajectories (i.e., trajectories started out with similar values would either spiral toward or away from the cycle). Unlike cyclic oscillations that arise in linear dynamic systems where the amplitudes of oscillations are determined solely by initial conditions, the amplitudes of the oscillations in a Van der Pol system (or other similar systems) depend on latent variables in the system (see Strogatz, 1994, pp. 196–227, chap. 7). In this way, systems such as the Van der Pol model are appropriate for representing natural systems that exhibit self-sustained oscillations.

Figure 2.

a Plot of the true xi1(ti,j) and noisy observations, yi1(ti,j), of 50 randomly selected subjects from the Van der Pol model over time; b plot of two of the latent variables, xi1(ti,j) and its first derivatives, xi2(ti,j), over all time points.

To summarize, we considered 2 (sample size configurations) × 2 (true initial conditions) × 2 (fitted initial conditions) = 8 conditions in our simulation study. Statistical properties of the point and standard error (SE) estimates over 200 Monte Carlo replications, and the corresponding average correlations between the true and estimated bζ,i across the 8 conditions, are shown in Tables 2 and 9. The root mean squared errors (RMSEs) and relative biases were used to quantify the performance of the point estimates. The empirical SE of a parameter (i.e., the standard deviation of the parameter estimates across all Monte Carlo runs) was used as the “true” standard error. As a measure of the relative performance of the SE estimates, we used the average relative deviance of a SE estimate of an estimator (denoted as RDSE, namely, the difference between the average SE estimate and the true SE over the true SE). Ninety-five percent confidence intervals were constructed for each of the 200 simulation samples by adding and subtracting in each replication to the parameter estimate from the replication. We then computed power estimates6 by tallying the proportion of Monte Carlo trials in which the 95% CIs did not include zero. For parameters that had a true value of zero (i.e., μ1–μ3, and and σbx1,x2 in the fixed–random condition), this proportion can be taken as a type I error estimate, namely, the proportion of Monte Carlo trials in which the true parameter values of zero were incorrectly concluded as statistically significantly different from zero. To minimize the effects of outliers, we screened for cases manually, as well as winsorized 5%of the most extreme estimates (i.e., replacing cases that were lower or higher than the 5th and 95th percentiles by values of the 5th and 95th percentiles, respectively) before comparing the results across conditions. The percentages of retained cases used for comparison purposes are summarized in the footnotes of Tables 2 and 3.

Table 2.

Parameter estimates for the Van der Pol oscillator model with T = 150, true initial condition = fixed, fitted initial condition = fixed.

| True θ | Mean θ̂ | RMSE | rBias | MC SD | RDSE | Power/type I error | |||

|---|---|---|---|---|---|---|---|---|---|

| ζ0 | 3.00 | 3.05 | 0.05 | 0.02 | 0.077 | 0.318 | −0.76 | 1.00 | |

| ζ1 | 0.50 | 0.48 | 0.02 | −0.04 | 0.018 | 0.048 | −0.62 | 1.00 | |

| ζ2 | 0.50 | 0.49 | 0.01 | −0.01 | 0.018 | 0.058 | −0.69 | 1.00 | |

| μ1 | 0.00 | −0.00 | 0.00 | – | 0.004 | 0.006 | −0.25 | 0.16 | |

| μ2 | 0.00 | −0.00 | 0.00 | – | 0.004 | 0.005 | −0.11 | 0.12 | |

| μ3 | 0.00 | −0.00 | 0.00 | – | 0.004 | 0.007 | −0.38 | 0.21 | |

| λ21 | 0.70 | 0.70 | 0.00 | 0.00 | 0.003 | 0.004 | −0.11 | 1.00 | |

| λ31 | 1.20 | 1.20 | 0.00 | 0.00 | 0.003 | 0.005 | −0.37 | 1.00 | |

| 0.50 | 0.50 | 0.00 | −0.00 | 0.004 | 0.004 | −0.02 | 1.00 | ||

| 0.50 | 0.50 | 0.00 | −0.00 | 0.004 | 0.004 | 0.14 | 1.00 | ||

| 0.50 | 0.50 | 0.00 | −0.00 | 0.004 | 0.004 | −0.07 | 1.00 | ||

| 0.50 | 0.48 | 0.02 | −0.04 | 0.048 | 0.123 | −0.61 | 1.00 |

% of retained cases = 96%, correlation between true and estimated bζ,i = 0.87, true θ = true value of a parameter, mean , where θ̂h = estimate of θ from the hth Monte Carlo replication M RMSE , rBias relative bias = , SE standard deviation of θ̂ across Monte Carlo runs, = average standard error estimate across Monte Carlo runs, RDSE average relative deviance of , power/type I error = 1 − the proportion of 95% confidence intervals (CIs) that contain 0 across the Monte Carlo replications.

Table 9.

Parameter estimates for the Van der Pol oscillator model with T = 300, true initial condition = random, fitted initial condition = fixed.

| True θ | Mean θ̂ | RMSE | rBias | MC SD | RDSE | Power/type I error | |||

|---|---|---|---|---|---|---|---|---|---|

| ζ0 | 3.00 | 2.78 | 0.22 | −0.07 | 0.156 | 0.335 | −0.53 | 1.00 | |

| ζ1 | 0.50 | 0.88 | 0.38 | 0.76 | 0.040 | 0.081 | −0.51 | 1.00 | |

| ζ2 | 0.50 | 0.87 | 0.37 | 0.74 | 0.039 | 0.078 | −0.50 | 1.00 | |

| μ1 | 0.00 | 0.09 | 0.09 | – | 0.007 | 0.009 | −0.18 | 1.00 | |

| μ2 | 0.00 | 0.01 | 0.01 | – | 0.005 | 0.005 | −0.12 | 0.38 | |

| μ3 | 0.00 | 0.01 | 0.01 | – | 0.007 | 0.008 | −0.12 | 0.44 | |

| λ21 | 0.70 | 0.07 | 0.63 | −0.89 | 0.004 | 0.048 | −0.92 | 0.99 | |

| λ31 | 1.20 | 0.13 | 1.07 | −0.89 | 0.006 | 0.081 | −0.93 | 0.98 | |

| 0.50 | 3.33 | 2.83 | 5.67 | 0.019 | 0.214 | −0.91 | 1.00 | ||

| 0.50 | 1.29 | 0.79 | 1.58 | 0.007 | 0.019 | −0.60 | 1.00 | ||

| 0.50 | 2.82 | 2.32 | 4.64 | 0.016 | 0.052 | −0.69 | 1.00 | ||

| 0.50 | 15.69 | 15.19 | 30.39 | 1.521 | 0.474 | 2.21 | 1.00 |

% of retained cases = 100%, correlation between true and estimated bζ,i = 0.05, true θ = true value of a parameter, mean , where θ̂h = estimate of θ from the hth Monte Carlo replication, , rBias relative bias = , SE standard deviation of θ̂ across Monte Carlo runs, = average standard error estimate across Monte Carlo runs, RDSE average relative deviance of , power/type I error = 1 − the proportion of 95% confidence intervals (CIs) that contain 0 across the Monte Carlo replications.

Table 3.

Parameter estimates for the Van der Pol oscillator model with T = 300, true initial condition = fixed, fitted initial condition = fixed.

| True θ | Mean θ̂ | RMSE | rBias | MC SD | RDSE | Power/type I error | |||

|---|---|---|---|---|---|---|---|---|---|

| ζ0 | 3.00 | 2.96 | 0.04 | −0.01 | 0.056 | 0.173 | −0.68 | 1.00 | |

| ζ1 | 0.50 | 0.49 | 0.01 | −0.01 | 0.014 | 0.049 | −0.72 | 1.00 | |

| ζ2 | 0.50 | 0.49 | 0.01 | −0.01 | 0.013 | 0.042 | −0.68 | 1.00 | |

| μ1 | 0.00 | −0.00 | 0.00 | – | 0.003 | 0.004 | −0.19 | 0.13 | |

| μ2 | 0.00 | −0.00 | 0.00 | – | 0.003 | 0.003 | −0.12 | 0.09 | |

| μ3 | 0.00 | −0.00 | 0.00 | – | 0.003 | 0.004 | −0.17 | 0.12 | |

| λ21 | 0.70 | 0.70 | 0.00 | 0.00 | 0.002 | 0.002 | 0.05 | 1.00 | |

| λ31 | 1.20 | 1.20 | 0.00 | 0.00 | 0.002 | 0.003 | −0.15 | 1.00 | |

| 0.50 | 0.50 | 0.00 | −0.00 | 0.003 | 0.003 | 0.12 | 1.00 | ||

| 0.50 | 0.50 | 0.00 | 0.00 | 0.003 | 0.002 | 0.16 | 1.00 | ||

| 0.50 | 0.50 | 0.00 | −0.00 | 0.003 | 0.003 | −0.03 | 1.00 | ||

| 0.50 | 0.51 | 0.01 | 0.02 | 0.050 | 0.083 | −0.40 | 1.00 |

% of retained cases = 94%, correlation between true and estimated bζ,i = 0.92, true θ = true value of a parameter, mean , where θ̂h = estimate of θ from the hth Monte Carlo replication, , rBias relative bias = , SE standard deviation of θ̂ across Monte Carlo runs, = average standard error estimate across Monte Carlo runs, RDSE average relative deviance of , power/type I error = 1 − the proportion of 95% confidence intervals (CIs) that contain 0 across the Monte Carlo replications.

We first focus on elaborating results from the fixed–fixed conditions (i.e., with fixed true and fitted initial conditions), as this is the specification typically assumed in comparing ODE modeling methods. Results indicated that biases of the point estimates (as indicated by relative biases and RMSEs) and discrepancies in SE estimates in comparison with the MC SDs (as indicated by RDSEs) were relatively small. Biases in the point and SE estimates were both observed to decrease with increasing time points (see Tables 2, 3). Slightly large RMSEs, relative biases, and RDSEs were observed in the point and SE estimates of the fixed effects dynamic parameters, ζ0–ζ3, and the random effect variance, . This is to be expected, because a second-order ODE solver was used to approximate the trajectories generated using a fourth-order ODE solver. In other words, some approximation errors were inherently present in estimates of the latent variables, namely, x̃i (ti,j), especially with large time intervals. These biases, in turn, would likely affect estimates of the dynamic parameters in θf,i. The biases in point estimates were still within reasonable ranges given the time intervals considered in this simulation study; a larger number of time points and/or participants are likely needed to further improve properties of the point and SE estimates for all of the dynamic and random effect-related parameters.

The discrepancies in SE estimates reduced considerably from T = 150 to T = 300, with the exception of the fixed effects parameters for the two covariates, ζ1 and ζ2, which did not show clear gain in accuracy and precision from T = 150 to 300. Thus, consistent with findings concerning power issues in growth curve modeling (Raudenbush & Liu, 2001), it may be necessary to increase the number of participants to improve the estimation properties of ζ1 and ζ2 and the initial condition parameters. In addition, power estimates were generally close to 1.00 for the sample sizes considered in this simulation study, although the type I error rates associated with the three intercept parameters,μ1–μ3,whose true values were equal to zero, also appeared slightly elevated.

The performance measures just noted for the fixed–fixed conditions can be contrasted directly with the measures obtained from the fixed–random (i.e., fixed true initial conditions and random fitted initial conditions) because the same 200 sets of MC data were used to compare the performances of the two fitted initial condition specifications. Results indicated that specifying the initial conditions as conforming to a multivariate distribution with unknown mean vector and covariance matrix still led to satisfactory estimation results (see Tables 4, 5). In particular, all the measurement parameters remained unbiased and showed comparable levels of precision (in terms of MC SDs) compared to conditions with the fixed–fixed specification at equivalent sample sizes. Slight increases in biases were observed for the three fixed effects dynamic parameters, ζ0–ζ3; however, higher precision (i.e., reduced MC SDs) and smaller RDSEs were obtained for almost all of the parameters. When the initial conditions were fixed at known and correctly specified values, the algorithm was able to yield close to unbiased point estimates for ζ0–ζ3, but at the expense of lower precision, possibly due to the approximation errors. In contrast, when the initial conditions were freely estimated, even though greater biases were present in ζ0–ζ3 and the average correlation between the true and estimated bζ,i did decrease slightly (see footnotes of Tables 2, 3, 4, 5), the uncertainties in the initial conditions appeared to help compensate for some of these approximation errors and gave rise to higher precision and relatedly, smaller RSDEs.

Table 4.

Parameter estimates for the Van der Pol oscillator model with T = 150, true initial condition = fixed, fitted initial condition = random.

| True θ | Mean θ̂ | RMSE | rBias | MC SD | RDSE | Power/type I error | |||

|---|---|---|---|---|---|---|---|---|---|

| ζ0 | 3.00 | 3.23 | 0.23 | 0.08 | 0.075 | 0.133 | −0.44 | 1.00 | |

| ζ1 | 0.50 | 0.45 | 0.05 | −0.09 | 0.017 | 0.036 | −0.53 | 1.00 | |

| ζ2 | 0.50 | 0.45 | 0.05 | −0.09 | 0.017 | 0.039 | −0.56 | 1.00 | |

| μx1 | 1.00 | 1.00 | 0.00 | −0.00 | 0.008 | 0.012 | −0.38 | 1.00 | |

| μx2 | 1.00 | 1.01 | 0.01 | 0.01 | 0.021 | 0.034 | −0.39 | 1.00 | |

| μ1 | 0.00 | −0.00 | 0.00 | – | 0.004 | 0.004 | −0.05 | 0.12 | |

| μ2 | 0.00 | −0.00 | 0.00 | – | 0.004 | 0.004 | 0.03 | 0.00 | |

| μ3 | 0.00 | −0.00 | 0.00 | – | 0.004 | 0.005 | −0.13 | 0.14 | |

| λ21 | 0.70 | 0.70 | 0.00 | 0.00 | 0.003 | 0.003 | −0.09 | 1.00 | |

| λ31 | 1.20 | 1.20 | 0.00 | 0.00 | 0.003 | 0.004 | −0.23 | 1.00 | |

| 0.50 | 0.50 | 0.00 | −0.00 | 0.004 | 0.005 | −0.15 | 1.00 | ||

| 0.50 | 0.50 | 0.00 | −0.00 | 0.004 | 0.004 | 0.05 | 1.00 | ||

| 0.50 | 0.50 | 0.00 | −0.00 | 0.004 | 0.004 | 0.00 | 1.00 | ||

| 0.50 | 0.46 | 0.04 | −0.08 | 0.046 | 0.134 | −0.66 | 1.00 | ||

| 0.00 | 0.01 | 0.01 | – | 0.001 | 0.004 | −0.68 | 1.00 | ||

| 0.00 | 0.10 | 0.10 | – | 0.103 | 0.022 | 3.69 | 0.00 | ||

| σbx1,x2 | 0.00 | −0.02 | 0.02 | – | 0.010 | 0.006 | 0.54 | 0.26 |

% of retained cases = 86%, correlation between true and estimated bζ,i = 0.79, true θ = true value of a parameter, mean , where θ̂h = estimate of θ from the hth Monte Carlo replication, , rBias relative bias = , SE standard deviation of θ̂ across Monte Carlo runs, = average standard error estimate across Monte Carlo runs, RDSE average relative deviance of , power/type I error = 1 − the proportion of 95% confidence intervals (CIs) that contain 0 across the Monte Carlo replications.

Table 5.

Parameter estimates for the Van der Pol oscillator model with T = 300, true initial condition = fixed, fitted initial condition = random.

| True θ | Mean θ̂ | RMSE | rBias | MC SD | RDSE | Power/type I error | |||

|---|---|---|---|---|---|---|---|---|---|

| ζ0 | 3.00 | 3.22 | 0.22 | 0.07 | 0.054 | 0.124 | −0.56 | 1.00 | |

| ζ1 | 0.50 | 0.45 | 0.05 | −0.10 | 0.012 | 0.031 | −0.61 | 1.00 | |

| ζ2 | 0.50 | 0.45 | 0.05 | −0.10 | 0.012 | 0.030 | −0.60 | 1.00 | |

| μx1 | 1.00 | 1.00 | 0.00 | −0.00 | 0.006 | 0.009 | −0.34 | 1.00 | |

| μx2 | 1.00 | 1.01 | 0.01 | 0.01 | 0.015 | 0.024 | −0.39 | 1.00 | |

| μ1 | 0.00 | −0.00 | 0.00 | – | 0.003 | 0.003 | −0.06 | 0.08 | |

| μ2 | 0.00 | −0.00 | 0.00 | – | 0.003 | 0.003 | 0.04 | 0.00 | |

| μ3 | 0.00 | −0.00 | 0.00 | – | 0.003 | 0.003 | 0.12 | 0.05 | |

| λ21 | 0.70 | 0.70 | 0.00 | −0.00 | 0.002 | 0.002 | −0.08 | 1.00 | |

| λ31 | 1.20 | 1.20 | 0.00 | −0.00 | 0.002 | 0.003 | −0.27 | 1.00 | |

| 0.50 | 0.50 | 0.00 | −0.00 | 0.003 | 0.003 | 0.07 | 1.00 | ||

| 0.50 | 0.50 | 0.00 | 0.00 | 0.003 | 0.003 | 0.12 | 1.00 | ||

| 0.50 | 0.50 | 0.00 | −0.00 | 0.003 | 0.003 | −0.03 | 1.00 | ||

| 0.50 | 0.49 | 0.01 | −0.02 | 0.049 | 0.096 | −0.49 | 1.00 | ||

| 0.00 | 0.01 | 0.01 | – | 0.001 | 0.002 | −0.68 | 1.00 | ||

| 0.00 | 0.05 | 0.05 | – | 0.103 | 0.011 | 8.62 | 0.00 | ||

| σbx1,x2 | 0.00 | −0.01 | 0.01 | – | 0.005 | 0.003 | 0.37 | 0.41 |

% of retained cases = 98%, correlation between true and estimated bζ,i = 0.88, true θ = true value of a parameter, mean , where θ̂h = estimate of θ from the hth Monte Carlo replication, , rBias relative bias = , SE standard deviation of θ̂ across Monte Carlo runs, = average standard error estimate across Monte Carlo runs, RDSE average relative deviance of , power/type I error = 1 − the proportion of 95% confidence intervals (CIs) that contain 0 across the Monte Carlo replications.

The average initial condition parameters, μx1 and μx2, were correctly estimated in the fixed–random condition, and the associated variance–covariance parameters (including , and σbx1,x2) were estimated to be close to the correct value of zero. The SE for was clearly over-estimated, however, and the type I error rates for all of the initial condition variance–covariance parameters, including and σbx1,x2, also deviated quite substantially from the nominal value of 0.05. These findings did not arose in the random–random condition, and they may stem from the fact that in the fixed–random condition, we were performing estimation near the boundary values of the initial condition variance–covariance parameters, whose true values were equal to zero.7 A related consequence was that a higher percentage of cases with numerical problems arose in the fixed–random condition than in the fixed–fixed condition, although the percentages of replications that had converged to theoretically plausible values remained generally satisfactory (close to or above 90 %). As in the fixed–fixed condition, the fixed effects parameters for the two covariates, ζ1 and ζ2, also did not show improvement in accuracy from T = 150 to 300, verifying again the need to increase the number of subjects in future studies to improve the estimation properties of these parameters.

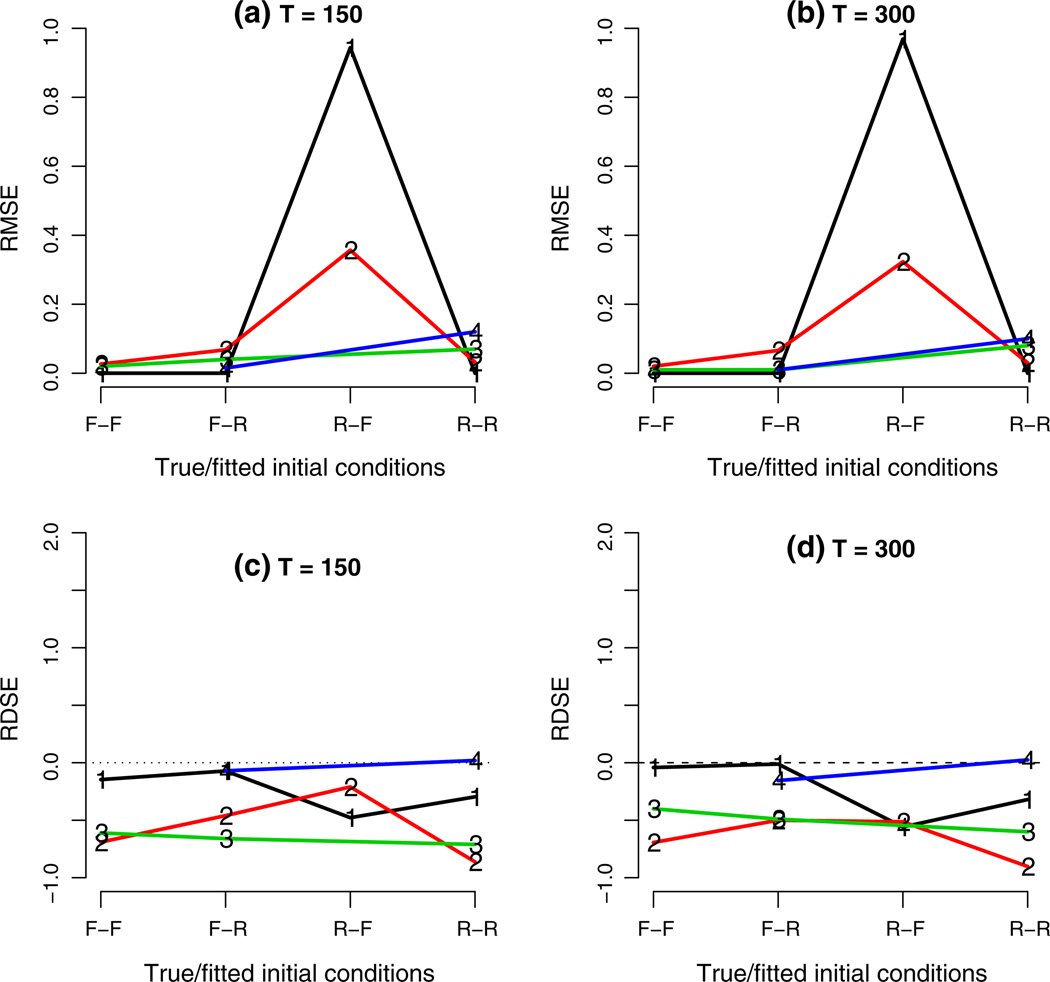

Our next set of findings concerns properties of the SAEM algorithm when the initial conditions were random, but paired with fitted initial conditions that were either correctly or incorrectly specified. Statistical properties of the point and SE estimates are summarized in Tables 6, 7, 8, and 9. To aid interpretation, we grouped the parameters into four major types and aggregated the performance measures by parameter type. Graphical summary of the RMSEs and RDSEs by initial condition specification and parameter type is shown in Figure 3a–d. The four parameter types considered were (1) fixed effects dynamic parameters, including ζ0, ζ1, ζ2, μx1, and μx2; (2) measurement parameters, including the intercept parameters μ1–μ3 and factor loadings λ21 and λ31; (3) the random effect variance for the amplification parameter, , and (4) random effect variance and covariances for the initial conditions, including , and σbx1,x2.

Table 6.

Parameter estimates for the Van der Pol oscillator model with T = 150, true initial condition = random, fitted initial condition = random.

| True θ | Mean θ̂ | RMSE | rBias | MC SD | RDSE | Power/type I error | |||

|---|---|---|---|---|---|---|---|---|---|

| ζ0 | 3.00 | 2.92 | 0.08 | −0.03 | 0.047 | 0.216 | −0.78 | 1.00 | |

| ζ1 | 0.50 | 0.46 | 0.04 | −0.08 | 0.012 | 0.066 | −0.81 | 1.00 | |

| ζ2 | 0.50 | 0.46 | 0.04 | −0.08 | 0.012 | 0.063 | −0.80 | 1.00 | |

| μx1 | 0.00 | 0.00 | 0.00 | – | 0.001 | 0.069 | −0.99 | 0.99 | |

| μx2 | 0.00 | 0.00 | 0.00 | – | 0.006 | 0.087 | −0.93 | 0.95 | |

| μ1 | 0.00 | 0.00 | 0.00 | – | 0.004 | 0.005 | −0.11 | 0.10 | |

| μ2 | 0.00 | −0.00 | 0.00 | – | 0.004 | 0.004 | −0.07 | 0.05 | |

| μ3 | 0.00 | 0.00 | 0.00 | – | 0.004 | 0.005 | −0.13 | 0.11 | |

| λ21 | 0.70 | 0.70 | 0.00 | −0.00 | 0.003 | 0.004 | −0.22 | 1.00 | |

| λ31 | 1.20 | 1.20 | 0.00 | −0.00 | 0.003 | 0.006 | −0.45 | 1.00 | |

| 0.50 | 0.50 | 0.00 | 0.00 | 0.004 | 0.008 | −0.51 | 1.00 | ||

| 0.50 | 0.50 | 0.00 | 0.00 | 0.004 | 0.005 | −0.25 | 1.00 | ||

| 0.50 | 0.50 | 0.00 | 0.00 | 0.004 | 0.011 | −0.63 | 1.00 | ||

| 0.50 | 0.57 | 0.07 | 0.13 | 0.053 | 0.186 | −0.71 | 1.00 | ||

| 1.00 | 1.10 | 0.10 | 0.10 | 0.110 | 0.116 | −0.05 | 1.00 | ||

| 1.00 | 1.23 | 0.23 | 0.23 | 0.131 | 0.184 | −0.29 | 1.00 | ||

| σbx1,x2 | 0.30 | 0.16 | 0.14 | −0.45 | 0.123 | 0.112 | 0.09 | 0.29 |

% of retained cases =93%, correlation between true and estimated bζ,i = 0.65, true θ = true value of a parameter, mean , where θ̂h = estimate of θ from the hth Monte Carlo replication, , rBias relative bias = , SE standard deviation of θ̂ across Monte Carlo runs, = average standard error estimate across Monte Carlo runs, RDSE average relative deviance of , power/type I error = 1 − the proportion of 95% confidence intervals (CIs) that contain 0 across the Monte Carlo replications.

Table 7.

Parameter estimates for the Van der Pol oscillator model with T = 300, true initial condition = random, fitted initial condition = random.

| True θ | Mean θ̂ | RMSE | rBias | MC SD | RDSE | Power/type I error | |||

|---|---|---|---|---|---|---|---|---|---|

| ζ0 | 3.00 | 3.03 | 0.03 | 0.01 | 0.032 | 0.210 | −0.85 | 1.00 | |

| ζ1 | 0.50 | 0.44 | 0.06 | −0.12 | 0.009 | 0.057 | −0.85 | 1.00 | |

| ζ2 | 0.50 | 0.45 | 0.05 | −0.10 | 0.009 | 0.065 | −0.87 | 1.00 | |

| μx1 | 0.00 | −0.00 | 0.00 | – | 0.001 | 0.068 | −0.99 | 0.98 | |

| μx2 | 0.00 | −0.01 | 0.01 | – | 0.004 | 0.076 | −0.95 | 0.92 | |

| μ1 | 0.00 | −0.00 | 0.00 | – | 0.003 | 0.003 | −0.09 | 0.08 | |

| μ2 | 0.00 | −0.00 | 0.00 | – | 0.003 | 0.003 | 0.00 | 0.04 | |

| μ3 | 0.00 | −0.00 | 0.00 | – | 0.003 | 0.003 | 0.00 | 0.05 | |

| λ21 | 0.70 | 0.70 | 0.00 | −0.00 | 0.002 | 0.005 | −0.58 | 1.00 | |

| λ31 | 1.20 | 1.20 | 0.00 | −0.00 | 0.002 | 0.009 | −0.76 | 1.00 | |

| 0.50 | 0.50 | 0.00 | 0.00 | 0.003 | 0.005 | −0.41 | 1.00 | ||

| 0.50 | 0.50 | 0.00 | 0.00 | 0.003 | 0.004 | −0.17 | 1.00 | ||

| 0.50 | 0.50 | 0.00 | 0.00 | 0.003 | 0.006 | −0.55 | 1.00 | ||

| 0.50 | 0.58 | 0.08 | 0.17 | 0.055 | 0.137 | −0.60 | 1.00 | ||

| 1.00 | 1.10 | 0.10 | 0.10 | 0.110 | 0.113 | −0.03 | 1.00 | ||

| 1.00 | 1.17 | 0.17 | 0.17 | 0.130 | 0.169 | −0.23 | 1.00 | ||

| σbx1,x2 | 0.30 | 0.20 | 0.10 | −0.34 | 0.117 | 0.108 | 0.08 | 0.45 |

% of retained cases =95%; correlation between true and estimated bζ,i = 0.78, true θ = true value of a parameter, mean , where θ̂h = estimate of θ from the hth Monte Carlo replication, , rBias relative bias = , SE standard deviation of θ̂ across Monte Carlo runs, = average standard error estimate across Monte Carlo runs, RDSE average relative deviance of , power/type I error = 1 − the proportion of 95% confidence intervals (CIs) that contain 0 across the Monte Carlo replications.

Table 8.

Parameter estimates for the Van der Pol oscillator model with T = 150, true initial condition = random, fitted initial condition = fixed.

| True θ | Mean θ̂ | RMSE | rBias | MC SD | RDSE | Power/type I error | |||

|---|---|---|---|---|---|---|---|---|---|

| ζ0 | 3.00 | 2.62 | 0.38 | −0.13 | 0.195 | 0.257 | −0.24 | 1.00 | |

| ζ1 | 0.50 | 0.85 | 0.35 | 0.70 | 0.047 | 0.056 | −0.15 | 1.00 | |

| ζ2 | 0.50 | 0.84 | 0.34 | 0.68 | 0.047 | 0.062 | −0.24 | 1.00 | |

| μ1 | 0.00 | 0.06 | 0.06 | – | 0.010 | 0.011 | −0.07 | 1.00 | |

| μ2 | 0.00 | 0.01 | 0.01 | – | 0.007 | 0.007 | −0.03 | 0.17 | |

| μ3 | 0.00 | 0.01 | 0.01 | – | 0.010 | 0.010 | −0.07 | 0.20 | |

| λ21 | 0.70 | 0.09 | 0.61 | −0.87 | 0.005 | 0.041 | −0.87 | 1.00 | |

| λ31 | 1.20 | 0.15 | 1.05 | −0.87 | 0.008 | 0.068 | −0.88 | 1.00 | |

| 0.50 | 3.22 | 2.72 | 5.44 | 0.026 | 0.195 | −0.87 | 1.00 | ||

| 0.50 | 1.28 | 0.78 | 1.57 | 0.011 | 0.020 | −0.47 | 1.00 | ||

|

|

0.50 | 2.81 | 2.31 | 4.61 | 0.023 | 0.052 | −0.56 | 1.00 | |

| 0.50 | 15.32 | 14.82 | 29.64 | 1.493 | 0.417 | 2.58 | 1.00 |

% of retained cases = 98%, correlation between true and estimated bζ,i = 0.05, true θ = true value of a parameter, mean , where θ̂h = estimate of θ from the hth Monte Carlo replication, , rBias relative bias = , SE standard deviation of θ̂ across Monte Carlo runs, = average standard error estimate across Monte Carlo runs, RDSE average relative deviance of , power/type I error = 1 − the proportion of 95% confidence intervals (CIs) that contain 0 across the Monte Carlo replications.

Figure 3.

a–d Plots of the RMSEs and RDSEs across the four true–fitted initial condition specifications and two sample size configurations. F–F Fixed–fixed, F–R fixed–random, R–F random–fixed, R–R random–random. The numbers in the plots indicate parameter type: 1 fixed effects dynamic parameters, including ζ0, ζ1, ζ2, μx1, and μx2, 2 measurement parameters, including the intercept parameters μ1–μ3 and factor loadings λ21 and λ31, 3 the random effect variance for the amplification parameter, , and 4 random effect variance and covariances for the initial conditions, including and σbx1,x2. To avoid skewing the graphical presentation of results from the remaining conditions, the high RMSEs and RDSEs for (i.e., parameter type 3) in the R–F condition were omitted from the plots.

Several key results can be noted from the tables with full results for the conditions with random true initial condition specification. First, specifying the mean and variance–covariance parameters of the initial condition distribution as part of the parameters in β and Σb led to reasonable point and SE estimates, as well estimates of bi. In contrast, misspecifying the random initial conditions as fixed, and with μx1 and μx2 fixed at incorrect values, led to high biases in the point estimates of ζ0–ζ3, the factor loadings, and all the variance parameters. Particularly high biases were observed in the point and SE estimates for the random effect variance parameter, . In fact, the RMSEs and RDSEs for the point and SE estimates of were so high for both sample size conditions that these two values were omitted from the plots in Figure 3 to avoid skewing the graphical presentation of the other conditions. Under this particular misspecification in initial conditions, the estimates of bζ,i were completely biased and showed near-zero correlations with the true bζ,i values, regardless of sample size. These results suggested that the type of misspecification in initial condition specification considered in the present simulation study can greatly compromise the quality of the estimation results—an effect that is not necessarily circumvented by increases in sample size (i.e., the number of time points).

Second, for the random–random condition, doubling the number of time points from 150 to 300 led to increases in the accuracy (in terms of relative biases and RMSEs) and precision (in terms of RMSEs and MCSDs) of most parameters, as well as an increase in the average correlation between the true and estimated values of bζ,i from 0.65 to 0.78. Third, power estimates for all of the parameters in the R–R condition were generally high, with the exception of the covariance parameter between the interindividul differences in initial level and first derivative, σbx1,x2. “Type I error” rates for the five parameters whose true values were equal to zero (μ1–μ3, μx1, and μx2) remained slightly elevated as in the conditions with fixed true initial conditions. Finally, slight decrements in performance were observed from the F–R conditions to the R–R conditions across both sample size configurations in terms of the quality of the point and SE estimates. Particularly notable was the increased variability (i.e., MC SDs) of all the point estimates, and the higher RDSEs for the fixed effects dynamic and measurement parameters (i.e., parameter types 1 and 2 in Figure 3). Despite these decrements in performance, the estimates from the R–R condition were still far better than those from the R–F conditions, whose estimation was based on the same 200 sets of MC data as the former. Even though the RDSEs for the dynamic and measurement parameters (parameter types 1 and 2) appeared reasonable for the R–F conditions, the corresponding point and bζ,i estimates were too biased to be practically useful.

4. Discussion

In the present article, we presented an SAEM algorithm for fitting linear or nonlinear dynamic models with random effects in the dynamic parameters and unknown initial conditions. Although the illustrative and simulation examples are all nonlinear in nature, the proposed algorithm is applicable to linear ODEs as special cases. Using a modified Van der Pol oscillator model as an illustrative model, we evaluated the estimation properties of the proposed technique using an empirical example and a simulation study.

Our simulation results indicated that the proposed technique yielded satisfactory point and SE estimates for most of the parameters. Further developments are needed, however, to improve the accuracy of the SE estimates of the dynamic parameters. The problem is especially pronounced in cases involving random, as opposed to fixed initial conditions. We also demonstrated the feasibility of the proposed approach in handling situations with unknown initial conditions, either with or without interindividual differences in initial conditions. In our previous work in which different initial condition specifications were compared using linear discrete-time state-space models, the proposed approach of estimating means and variance–covariance parameters of the unknown initial condition distribution as modeling parameters was found to yield reasonable estimates for a broad array of initial condition scenarios (Losardo, 2012). Here, we extended our earlier results to the case of nonlinear continuous-time models with specific parametric assumptions on the distribution of the random effect. Possible extensions may include adding covariates as predictors of the interindividual differences in initial conditions. Other alternative approaches that may be adopted in future studies include using other parametric (e.g., exponential and Laplace) and nonparametric (e.g., Chow et al., 2011) distributions for the random effects distribution, and relaxing the linear functional form of θf,i in Eq. 1.

Slight decrements in the performance of the SAEM approach were observed when the number of random effects in the model was increased from one to three. Difficulties involved in estimating multiple random effects in dynamic models are related directly to whether the structural parameters are orthogonal to each other and whether the model is empirically identifiable with multiple random effects. In our empirical example, we fixed the oscillation frequency based on the expectation of a 24-h diurnal cycle to circumvent the issue of having insufficient complete cycles of data to identify other cycles of slower frequencies. In addition, we fixed one of the factor loadings at unity to set the metrics of the latent variables. The issue of parameter and model identifiability is, however, a much more complex problem than what we have alluded to thus far. In many dynamic models, similar differences in dynamics can be attained by incorporating random effects in more than one way. Obviously, estimation issues and difficulties arise if high correlations are present among the structural parameters. To this end, techniques aimed at evaluating model identifiability and dependencies among parameters are important to consider (Miao, Xin, Perelson, & Wu, 2011).

We adopted a Frequentist approach to parameter estimation because it offers a well-understood framework for performing hypothesis testing, and explicit criteria for assessing model convergence. However, there are still some subtle differences between the proposed SAEM approach and other standard Frequentist procedures in that the random effects in bi are estimated using MCMC procedures. In this way, the proposed procedure may be regarded as a compromise between the practical advantages of the MCMC framework in handling models of high complexity and (possibly) intractable integration, and the advantages offered by the Frequentist framework in assessing the convergence of modeling parameters and performing significance tests (e.g., test of the statistical significance of the random effects variances). In our empirical example, we highlighted the possibility of using a Wald test to determine the number of random effects to be included in a model and their corresponding covariance structure. Another possibility is to perform a score test using by-products from the SAEM under a null hypothesis model that assumes no random effect in the model (Zhu & Zhang, 2006).

Compared to approaches for fitting discrete-time dynamic models, continuous-time nonlinear ODE models pose additional challenges in deriving the ODE solutions needed to obtain latent variable estimates. In the present study, we opted to use a numerical integration method of two orders lower in the estimation process than that used in the data generation process. The estimates appeared reasonable, but improved performance may be attained with the use of a higher-order numerical solver, particularly adaptive solvers that can handle stiff systems. Other approaches using splines (Cao, Huang, & Wu, 2012; Liang, Miao, & Wu, 2010; Ramsay et al., 2007) or Langevin sampling techniques (Stuart, Voss, & Wilberg, 2004; Hairer, Stuart, Voss, & Wiberg, 2005) to replace the use of numerical solvers are also viable alternatives.