Abstract

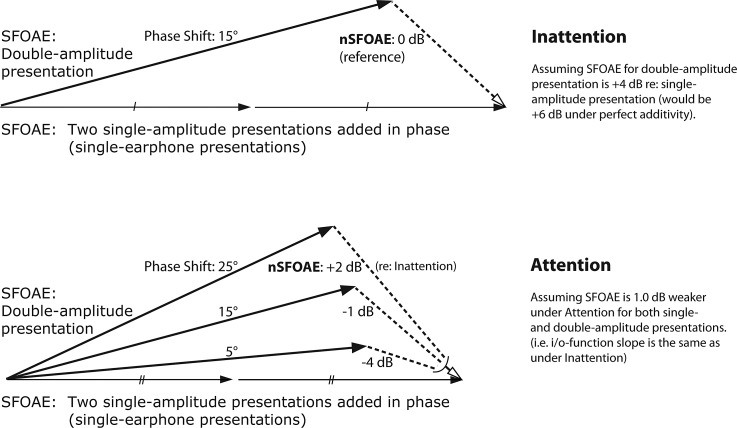

Previous studies have demonstrated that the otoacoustic emissions (OAEs) measured during behavioral tasks can have different magnitudes when subjects are attending selectively or not attending. The implication is that the cognitive and perceptual demands of a task can affect the first neural stage of auditory processing—the sensory receptors themselves. However, the directions of the reported attentional effects have been inconsistent, the magnitudes of the observed differences typically have been small, and comparisons across studies have been made difficult by significant procedural differences. In this study, a nonlinear version of the stimulus-frequency OAE (SFOAE), called the nSFOAE, was used to measure cochlear responses from human subjects while they simultaneously performed behavioral tasks requiring selective auditory attention (dichotic or diotic listening), selective visual attention, or relative inattention. Within subjects, the differences in nSFOAE magnitude between inattention and attention conditions were about 2–3 dB for both auditory and visual modalities, and the effect sizes for the differences typically were large for both nSFOAE magnitude and phase. These results reveal that the cochlear efferent reflex is differentially active during selective attention and inattention, for both auditory and visual tasks, although they do not reveal how attention is improved when efferent activity is greater.

I. INTRODUCTION

Beginning soon after the first reports on the existence of an olivo-cochlear bundle (OCB) of neurons connecting the hindbrain to the cochlea (Rasmussen, 1946, 1953), speculation began about whether this efferent circuit somehow might be involved in modulating the flow of auditory afferent information under conditions of selective attention (e.g., Hernández-Peón et al., 1956). Such speculation only increased as knowledge grew about the extensive network of efferent circuits that exists above the hindbrain (Mulders and Robertson, 2000a,b). Accordingly, numerous studies have been done over the years aimed at determining whether, and how, the efferent system behaves under conditions of selective attention. Many of these studies have used otoacoustic emissions (OAEs) as their dependent measure. OAEs have a number of advantages: They reveal response characteristics of the active cochlea, they are sensitive to changes in response due to olivo-cochlear efferent feedback, and they are a noninvasive physiological measure. A common result of these studies is an apparent increase in efferent activity during selective attention (e.g., Ferber-Viart et al., 1995; Froehlich et al., 1993; Maison et al., 2001; Puel et al., 1988; Walsh et al., 2014a,b). However, Harkrider and Bowers (2009) reported that suppression of click-evoked OAEs (CEOAEs) by a contralateral noise was reduced during selective auditory attention compared to their inattention condition.

Here we report two studies on the effects of selective attention on cochlear responses. Our primary measure was a form of stimulus-frequency otoacoustic emission (SFOAE) that we call the nonlinear SFOAE, or nSFOAE (Walsh et al., 2010a,b). The nSFOAE is a measure of how much SFOAE magnitude deviates from linear growth as stimulus level is increased. This measure was obtained during blocks of trials when subjects were, or were not, required to attend to sequences of digits presented either aurally or visually. Additional auditory stimuli known to activate the medial olivo-cochlear (MOC) component of the OCB were interleaved with the digit stimuli within each trial in order to elicit the nSFOAE response. Thus, the nSFOAE measures reported here were obtained contemporaneously with the presentation of the attended digits. For most subjects, the magnitudes of the nSFOAEs were different under attention and inattention, revealing that the MOC efferent system was differentially active under the two conditions. These results are similar to those reported previously from the same studies, using the same subjects (Walsh et al., 2014a,b): Those previous reports concentrated on the nSFOAE responses obtained during a brief silent period following each eliciting stimulus. Here we concentrate on the nSFOAE responses obtained during the presentation of the SFOAE-eliciting stimuli, what we call the perstimulatory responses.

Previous studies using OAEs to study attention typically used relatively simple behavioral tasks. For example, Puel et al. (1988) and Froehlich et al. (1990) had their subjects count the number of times a target letter appeared on a screen; Froehlich et al. (1993), Ferber-Viart et al. (1995), and Meric and Collet (1994) measured reaction times following a light flash or a tone pip; and Giard et al. (1994), Maison et al. (2001), and Harkrider and Bowers (2009) asked their subjects simply to attend to sounds (sometimes those used to evoke their OAE measure) presented to one ear. By contrast, the present studies used more demanding behavioral tasks, and also used different acoustic stimuli to evoke the OAE. All previous investigations have used transient stimuli (either brief tones or clicks) to evoke the OAE, but these stimuli appear not to have been the best choice for studying the effects of the MOC efferent system. Guinan et al. (2003) reported that the stimuli used commonly as “probes” of efferent activation (clicks, tone pips, and tone pairs) often can themselves contribute inadvertently as “elicitors” of efferent activity. This means that in those previous studies, the efferent system could have been activated to some degree whether or not subjects were attending, and whether or not a deliberate MOC-eliciting stimulus was presented simultaneously with the OAE-evoking (and perhaps also MOC-eliciting) stimulus. If so, efferent activity attributable to attention would be difficult to separate from activity elicited by the probe stimuli themselves.

In contrast, Guinan et al. (2003) reported that long-duration tones of moderate intensity, like those used to evoke stimulus-frequency OAEs (SFOAEs), are relatively ineffective elicitors of the efferent system. Accordingly, our nSFOAE measure was elicited by a long-duration tone presented both alone and with a wideband noise (which is known to activate the MOC reflex). The behavioral tasks assigned the subjects did not pertain to these stimuli but to other stimuli that were interleaved temporally with them. In the first study, subjects attended to series of spoken digits, and in the second study, they attended to series of visually presented digits. In both studies, the individual digits alternated with the nSFOAE stimuli, meaning that our measures of the state of the cochlea were contemporaneous with the processes of attention demanded of the subject. The results obtained showed that the sounds we used to evoke emissions from the cochlea were processed differentially depending on the attentional demands imposed upon the listener, and this was true whether auditory or visual attention was required.

II. METHODS

A. General

Two studies are described here, one involving attention to sequences of auditory stimuli and the other to sequences of visual stimuli. The auditory study was completed before the beginning of the visual study, and the same subjects participated in both studies (except for the loss of one subject at the end of the auditory study). The procedures for collecting and analyzing the nSFOAE responses were essentially identical for the two studies. The differences in the behavioral tasks are described below.

The Institutional Review Board at The University of Texas at Austin approved the procedures described here, all subjects provided their informed consent prior to any testing, and the subjects were paid for their participation. Supplementary materials for this article can be found online (see footnote 1).

B. Subjects

Two males (both aged 22) and six females (aged 20–25) participated in the auditory study; one female was not available to complete the visual study. All subjects had normal hearing [≤15 dB Hearing Level (HL)] at octave frequencies between 250 and 8000 Hz, and normal middle-ear and tympanic reflexes, as determined using an audiometric screening device (Auto Tymp 38, GSI/VIASYS, Inc., Madison, WI). The test of the middle-ear reflex (MER) involved individually presenting tones at four frequencies to the test ear, each at as many as three levels, while the compliance of the middle-ear system was measured. For the 0.5- and 4.0-kHz tones, the test levels were 80, 90, and 100 dB HL, and for the 1.0- and 2.0-kHz tones, the test levels were 85, 95, and 105 dB HL. All tone series began with the weakest stimulus and increased in level only if an MER was not observed. An MER was defined as a decrease in the compliance of the middle-ear ossicles of 0.05 cm³ equivalent volume or more during the presentation of the test stimulus. At 4.0 kHz, a frequency used to elicit the nSFOAE response in our studies, only three subjects had an MER in either the left or the right ear at 80 dB HL, the lowest level tested. The level of the 4.0-kHz tone used in our attention studies was only 60 dB sound-pressure level (SPL), but we cannot be certain that it was incapable of activating the MER in all subjects. No subject had a spontaneous otoacoustic emission (SOAE) stronger than −15.0 dB SPL within 600 Hz of the 4.0-kHz tone used to elicit the nSFOAE response.

Each subject was tested individually while seated in a reclining chair inside a double-walled, sound-attenuated room. Two insert earphone systems (described below) delivered sound directly to the external ear canals. A computer monitor attached to an articulating mounting arm was positioned at a comfortable viewing distance from the subject; it was used to provide instructions, stimuli, response alternatives, and trial-by-trial feedback for the auditory and visual behavioral tasks. A numeric keypad was provided to the subject for indicating his or her behavioral responses. The experimenter (author K.P.W.) sat adjacent to the subject, in front of a computer screen from which he conducted the test session.

C. Behavioral measures

1. Selective auditory-attention conditions

For the auditory study, there were two selective-attention conditions: One required dichotic listening and the other diotic listening. For the dichotic-listening condition, two competing speech streams were presented separately to the ears, and the task of the subject was to attend to one of the speech streams. The talker was female in one ear and male in the other, and the ear receiving the female talker was selected trial by trial from a closed set of random permutations. Within each block of trials, the female voice was in the right ear approximately as often as in the left ear. On each trial, the two talkers simultaneously spoke two different sequences of seven numerical digits. Each digit was selected randomly with replacement (0–9), and the digit sequence spoken by the single female talker was selected independently from that spoken by the single male talker. Digits were presented during 500-ms intervals that were separated by 330-ms interstimulus intervals (ISIs). As described more fully below, the stimulus waveforms used to elicit the nSFOAE response were presented in the six ISIs between the seven digits. At the end of each trial in those blocks requiring attention, the subject was shown two sets of five digits (identical except for one digit) and was allowed 2000 ms to indicate with an appropriate key press which set corresponded to the middle five digits spoken by the female voice. Visual trial-by-trial feedback as to the correctness of these choices was provided on the subject's computer screen. For the visual-attention study, the trial-timing sequence was the same, but the digits were presented visually, not aurally (see Sec. IV below).
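The trial structure just described can be illustrated with a short sketch. It is not the authors' LabVIEW code: the balanced-and-shuffled ear schedule, the choice of which digit differs in the foil set, and the order of the two response alternatives are all assumptions made for illustration, and the names `ear_schedule` and `make_trial` are hypothetical.

```python
import random

N_DIGITS = 7          # digits per talker per trial (from the text)
MIDDLE = slice(1, 6)  # positions 2-6, i.e., the "middle five" digits


def ear_schedule(n_trials, rng):
    """Balanced, shuffled list of ears for the female talker (assumed scheme)."""
    ears = ["left", "right"] * (n_trials // 2) + ["left"] * (n_trials % 2)
    rng.shuffle(ears)
    return ears


def make_trial(female_ear, rng):
    """Build one hypothetical dichotic trial."""
    female = [rng.randrange(10) for _ in range(N_DIGITS)]  # with replacement
    male = [rng.randrange(10) for _ in range(N_DIGITS)]    # drawn independently
    target = female[MIDDLE]
    foil = list(target)
    pos = rng.randrange(len(foil))                         # assumed: any position
    foil[pos] = rng.choice([d for d in range(10) if d != target[pos]])
    choices = [target, foil]
    rng.shuffle(choices)                                   # assumed: random order
    return {"female_ear": female_ear, "female": female, "male": male,
            "target": target, "choices": choices}


rng = random.Random(1)
block = [make_trial(ear, rng) for ear in ear_schedule(30, rng)]
```

Each entry of `block` holds the two seven-digit sequences and the two five-digit response alternatives, which differ in exactly one position.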

The diotic-listening condition was similar to the dichotic-listening condition with the exception that the male and female voices were presented simultaneously to both ears on each trial, not to separate ears. Thus, the dichotic-listening condition required attention to one of two spatial locations—the left or the right ear—whereas the diotic-listening condition required subjects to disambiguate two speech streams that seemed to originate from the same location in space, roughly in the center of the head. The timing of each diotic trial was identical to each dichotic trial.

In order to assess the effects of attention, control conditions not requiring attention were needed. Ideally, all aspects of the task and stimuli ought to be identical to those in the attention condition, the only difference being that the subject was not required to attend. The problem with such an arrangement here is that speech is a unique stimulus for humans, and it is not clear that people can truly ignore speech when they hear it. Accordingly, for our primary inattention conditions in both the auditory- and visual-attention studies, the speech waveforms were replaced with sounds having spectral and temporal characteristics like speech (called speech-shaped noises or SSNs; details are below), but which were not intelligible. The individual SSNs sounded different from each other but did not sound like the digits from which they were derived. Thus, all the attention and inattention conditions contained stimuli having similar spectral and temporal characteristics (one exception existed in the visual-attention study), so that whatever physiological mechanisms were reflexively activated in the attention conditions also were activated in the inattention conditions.

Because our primary dependent variable was a physiological measure that can be sensitive to motor activity, the inattention control condition needed a motor response like that required in the attention conditions. Accordingly, for those blocks of trials not requiring attention to the speech sounds, the subject pressed a key after the last of the seven stimuli on each trial. Thus, some attention was required of the subject (to the trial-timing sequence), but the subject did not have to attend to the content of the sounds or make a demanding discrimination at the end of each trial.

For all trials in all blocks, the time required for the subject to make the behavioral response was recorded; for the inattention blocks, this reaction time (RT) was the sole behavioral measure obtained. For all conditions, the RT clock began at the end of the seventh 500-ms epoch containing speech (or SSN) and was terminated by a key press. If no behavioral response occurred prior to the end of the 2000-ms response interval, neither the behavioral data nor the physiological data from that trial were saved. Trials with no response occurred infrequently. In the attention conditions, percent-correct values were recorded, and reaction times were averaged separately for correct and incorrect trials.

Nominally, all test trials were run in blocks of 30. However, whenever a subject failed to respond on the behavioral task, or the physiological response failed to meet pre-established criteria, that trial was discarded, and another trial was added to the end of the block (the visual display indicated this to the subject). Accordingly, the duration of a block of trials ranged between 4 and 6 min, and the behavioral measures (percent correct judgments and RT) necessarily were based on slightly different numbers of trials across conditions and subjects.

On each block of trials requiring attention to the digit strings, the percentage of correct responses was calculated automatically, and saved to disk. Those values were averaged over four to six repetitions of the same condition to yield an overall estimate of performance for that test condition. When designing these studies, the goal was to have behavioral attention tasks that were cognitively challenging for most subjects, but not too difficult for any of them. Specifically, we wanted everyone to perform above about 70% correct, but below 100% correct, and as will be seen, we were successful at this. Because there was no measure of correctness on the inattention blocks for comparison with the attention blocks, there never was any intention for these behavioral measures to be crucial to the primary question of interest (whether activation of the MOC efferent system differed between selective attention and inattention). All we needed was evidence that the subjects were attending to the digit sequences, and that evidence consisted of good performance on the discrimination tasks. Furthermore, the fact that performance was typically less than perfect led us to conclude that the attention task did require cognitive effort.

2. Speech stimuli

One female talker and one male talker were used to create the speech stimuli. Their speech was recorded using the internal microphone on an iMac computer that was running the Audacity (Sourceforge, Slashdot Media, San Francisco, CA) (audacity.sourceforge.net) software application. Each recording was the entire sequence of ten digits (0–9), spoken slowly, and several recordings were obtained from each of the two talkers. After recording, the most neutral-sounding sequence from each talker was selected. The individual digit waveforms then were cropped from the selected recordings and fitted to a 500-ms window by aligning the onset of each waveform with the onset of the window, and by adding the appropriate number of zero-amplitude samples (“zeros”) to the end of each waveform to fill the window. The recordings were made using a 50-kHz sampling rate and 16-bit resolution, and they were not filtered or processed further before being saved individually to disk.
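As a minimal sketch of the windowing step, the following pads a cropped digit waveform with trailing zeros so that it fills a 500-ms window at the stated 50-kHz sampling rate (25 000 samples). The function name `fit_to_window` is hypothetical, and raising an error for an over-long waveform is an assumption; the text does not say how such a case was handled.

```python
FS_HZ = 50_000                               # sampling rate stated in the text
WINDOW_MS = 500                              # window duration stated in the text
WINDOW_SAMPLES = FS_HZ * WINDOW_MS // 1000   # 25 000 samples


def fit_to_window(waveform, n_samples=WINDOW_SAMPLES):
    """Align the waveform onset with the window onset and zero-pad the end."""
    if len(waveform) > n_samples:
        # Assumed behavior: the source does not describe this case.
        raise ValueError("waveform is longer than the window")
    return list(waveform) + [0.0] * (n_samples - len(waveform))


padded = fit_to_window([0.5] * 100)          # toy 2-ms "digit"
```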

At the start of an experimental condition, the 20 selected digits (ten from the female talker and ten from the male talker) were read into a custom-written LabVIEW (National Instruments, Austin, TX) program once, and then held in memory for the duration of the condition. Before presentation, all waveforms were lowpass filtered at 3.0 kHz, and they were equalized in level using the following procedure. The mean root-mean-square (rms) amplitude of the ten digits spoken by the female talker was calculated, and then each of the 20 waveforms was scaled by a fixed amount such that the overall level of each waveform was about 50 dB SPL. This operation was performed on the spoken digits and SSN stimuli alike.
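The level-equalization step can be sketched as follows, assuming that a single gain, derived from the mean rms amplitude of the reference (female-talker) waveforms, is applied to every waveform. The target rms value that corresponds to about 50 dB SPL depends on the calibration of the playback chain, so `target_rms` below is a placeholder, and the function names are hypothetical.

```python
import math


def rms(waveform):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in waveform) / len(waveform))


def common_gain(reference_waveforms, target_rms):
    """One gain that brings the mean rms of the reference set to target_rms."""
    mean_rms = sum(rms(w) for w in reference_waveforms) / len(reference_waveforms)
    return target_rms / mean_rms


def apply_gain(waveform, gain):
    return [gain * s for s in waveform]


# Toy stand-ins for the recorded digits (the real ones are 500-ms waveforms).
female = [[0.1, -0.1, 0.1, -0.1], [0.3, -0.3, 0.3, -0.3]]
male = [[0.2, -0.2, 0.2, -0.2]]

gain = common_gain(female, target_rms=1.0)   # placeholder target value
scaled = [apply_gain(w, gain) for w in female + male]
```

Because the gain is common to all waveforms, level differences among individual digits are preserved; only the overall level is set.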

a. Speech-shaped noise (SSN) stimuli.

Each of the 20 speech waveforms used in the auditory-attention (and the visual-attention) studies was transformed into a corresponding SSN stimulus as follows: For each digit, a Fast Fourier Transform (FFT) was taken of the entire utterance and used to create a 500-ms sample of noise having the same long-term spectral characteristics as the spoken digit. The envelope of each spoken digit was extracted using a Hilbert transform, the envelope was lowpass filtered at 500 Hz, and the resulting envelope function was applied to the relevant sample of noise. The resulting sounds were not intelligible as speech, but the pitches of the SSNs derived from the female talker were noticeably higher than those from the male talker. When presenting strings of SSN stimuli, the same rules about sampling and sequencing were used as with the real speech sounds. Subjects were never tested on the content of the SSNs, but in some inattention conditions, they did have to attend to the timing of the SSNs in order to respond that the digit sequence was completed.
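One way to realize the SSN transformation is sketched below. The text does not specify how the spectrally matched noise was generated or which lowpass filter was used; here the noise is made by keeping each spectral magnitude and randomizing its phase, and the envelope is smoothed by zeroing DFT bins above the cutoff. Both choices are assumptions. A naive DFT is used so that the sketch needs only the standard library, which restricts it to short, even-length toy signals.

```python
import cmath
import math
import random


def dft(x):
    """Naive O(n^2) discrete Fourier transform (adequate for a short demo)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spec):
    """Naive inverse DFT; returns complex samples."""
    n = len(spec)
    return [sum(spec[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def spectrally_matched_noise(spec, rng):
    """Keep each bin's magnitude but draw a random phase (assumed method)."""
    n = len(spec)
    out = list(spec)                       # DC and Nyquist bins left unchanged
    for k in range(1, n // 2):
        phase = rng.uniform(0.0, 2.0 * math.pi)
        out[k] = abs(spec[k]) * cmath.exp(1j * phase)
        out[n - k] = out[k].conjugate()    # Hermitian symmetry gives a real signal
    return [s.real for s in idft(out)]


def hilbert_envelope(spec):
    """Magnitude of the analytic signal built from a real signal's spectrum."""
    n = len(spec)
    analytic = [0j] * n
    analytic[0] = spec[0]
    analytic[n // 2] = spec[n // 2]
    for k in range(1, n // 2):
        analytic[k] = 2 * spec[k]          # double the positive frequencies
    return [abs(s) for s in idft(analytic)]


def lowpass(x, cutoff_hz, fs_hz):
    """Brick-wall lowpass by zeroing DFT bins above the cutoff (assumed filter)."""
    n = len(x)
    spec = dft(x)
    for k in range(n):
        if min(k, n - k) * fs_hz / n > cutoff_hz:
            spec[k] = 0j
    return [s.real for s in idft(spec)]


def make_ssn(digit, fs_hz, rng, envelope_cutoff_hz=500.0):
    """Noise with the digit's magnitude spectrum, shaped by its smoothed envelope."""
    spec = dft(digit)
    noise = spectrally_matched_noise(spec, rng)
    envelope = lowpass(hilbert_envelope(spec), envelope_cutoff_hz, fs_hz)
    return [e * s for e, s in zip(envelope, noise)]


FS_HZ = 50_000
toy_digit = [math.exp(-t / 64) * math.sin(2 * math.pi * 8 * t / 256)
             for t in range(256)]          # toy stand-in for a spoken digit
toy_ssn = make_ssn(toy_digit, FS_HZ, random.Random(0))
```

For the real 25 000-sample digit waveforms, an FFT routine would replace the naive transforms.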

D. Physiological measures

As noted, the subjects were tested individually while comfortably seated in a commercial sound room. The nSFOAE-eliciting stimuli (and the speech sounds) were delivered directly to the ears by two insert earphone systems. For the right ear, two Etymotic ER-2 earphones (Etymotic, Elk Grove Village, IL) were attached to plastic sound-delivery tubes that were connected to an ER-10A microphone capsule. The microphone capsule had two sound-delivery ports that were enclosed by the foam ear-tip that was fitted in the ear canal. The nSFOAE responses were elicited by sounds presented via the ER-2 earphones and recorded using the ER-10A microphone. For the left ear, one ER-2 earphone presented the stimuli to the external ear canal via a plastic tube passing through a foam ear-tip; there was no microphone in the left ear. That is, the nSFOAE stimuli were presented to both ears simultaneously, but nSFOAE responses were recorded from the right ear only.

The acoustic stimuli (speech sounds and nSFOAE-eliciting sounds) and the nSFOAE responses all were digitized using a National Instruments sound board (PCI-MIO-16XE-10) installed in a Macintosh G4 computer, and stimulus presentation and nSFOAE recording both were implemented using custom-written LabVIEW software (National Instruments, Austin, TX). The sampling rate for both input and output was 50 kHz with 16-bit resolution. The stimulus waveforms were passed from the digital-to-analog converter in the sound board to a custom-built, low-power amplifier before being passed to the earphones for presentation. The analog output of the microphone was passed to an Etymotic preamplifier (20 dB gain), and then to a custom-built amplifier/bandpass filter (14 dB gain, filtered from 0.4 to 15.0 kHz), before being passed to the analog-to-digital converter in the sound board.

1. The nSFOAE procedure

The physiological measure used here was a nonlinear version of the stimulus-frequency otoacoustic emission (SFOAE), called the nSFOAE (Walsh et al., 2010a, 2014a). The SFOAE is a continuous tonal reflection that is evoked from the cochlea by a long-duration tone (Kemp, 1980); after a time delay, the reflection adds with the input sound in the ear canal.

Because the SFOAE is so weak, it is difficult to extract from the sound in the ear canal, dominated as it is by the stimulus tone. Our procedure for extracting the nSFOAE response from the ear-canal sound is based upon the “double-evoked” procedure described by Keefe (1998). At the heart of this procedure are three acoustic presentations (a “triplet”) using the two earphones in the right ear. For the first presentation, the SFOAE-evoking stimulus is delivered to the ear via one of the ER-2 earphones at a specified SPL. For the second presentation, the exact same stimulus is delivered via the other ER-2 earphone at that same level. For the third presentation, both earphones deliver the exact same stimuli simultaneously and in phase; accordingly, the level in the ear canal is approximately 6 dB greater than the levels in each of the first two presentations. For each presentation of each triplet, the sound in the ear canal (called the response) is recorded and saved. Then the responses from the first two presentations are summed point-for-point in time and the response to the third presentation is subtracted from that summed response. To the extent that the presentation and recording systems are linear, the remaining “difference waveform” is devoid of the original stimulus. The nSFOAE can be thought of as the ongoing magnitude of the failure of additivity (Walsh et al., 2010a). To obtain a stable estimate of the nSFOAE, the difference waveforms were summed across many triplets from the same block of trials.
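The logic of the triplet subtraction can be illustrated with a toy simulation. The two "ear" models below are hypothetical stand-ins, not models of the cochlea: one is perfectly linear, and the other adds a saturating (`tanh`) component to mimic compressive growth. The sketch shows only that the difference waveform vanishes for a linear system and is nonzero for a compressive one.

```python
import math

FS_HZ = 50_000        # sampling rate stated in the Methods
F_TONE_HZ = 4_000.0   # tone frequency stated in the Methods
N_SAMPLES = 500       # 10 ms of signal, enough for a demonstration


def tone(amplitude):
    """Samples of a sine tone with the given peak amplitude (arbitrary units)."""
    return [amplitude * math.sin(2 * math.pi * F_TONE_HZ * i / FS_HZ)
            for i in range(N_SAMPLES)]


def linear_ear(x):
    """Hypothetical linear system: the recorded sound equals the input."""
    return list(x)


def compressive_ear(x, k=0.2):
    """Hypothetical compressive system: input plus a saturating component."""
    return [s + k * math.tanh(s) for s in x]


def triplet_difference(ear, amplitude=1.0):
    """Sum the responses to presentations 1 and 2, then subtract response 3."""
    r1 = ear(tone(amplitude))       # first earphone alone
    r2 = ear(tone(amplitude))       # second earphone alone, same stimulus
    r3 = ear(tone(2 * amplitude))   # both earphones in phase: doubled amplitude
    return [a + b - c for a, b, c in zip(r1, r2, r3)]


def rms(x):
    return math.sqrt(sum(s * s for s in x) / len(x))


level_increase_db = 20 * math.log10(2)                          # about 6.02 dB
linear_residual = rms(triplet_difference(linear_ear))           # cancels fully
nonlinear_residual = rms(triplet_difference(compressive_ear))   # does not cancel
```

Doubling the amplitude corresponds to the level increase of approximately 6 dB noted in the text for the third presentation.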

If only linear processes were involved, then when the response to the third presentation is subtracted from the sum of the responses to the first two presentations, the resulting nSFOAE would be close to the noise floor of the measurement system. However, in our studies, the result always is a difference waveform whose magnitude is substantially above the noise floor. The reason is that the response to the third presentation of each triplet always is weaker than the sum of the responses to the first two presentations, in accord with the known compressive characteristic of the normal human cochlea over moderate SPLs (e.g., Cooper, 2004). As a check on the software and the calibrations, the same SFOAE-evoking stimuli were presented to a passive cavity (a 0.5-cc syringe) instead of a human ear, using exactly the same procedures and equipment as used with humans, and the result of the subtraction of responses was an essentially perfect cancellation. That difference waveform was comparable in magnitude to other estimates of the noise floor of our OAE-measurement system, at about −13.0 dB SPL (at 4.0 kHz).

The stimulus used here to elicit the nSFOAE always was a long-duration tone presented in wideband noise. The tone was 4.0 kHz, 300 ms in duration, and had a level of 60 dB SPL. The noise had a bandwidth of 0.1–6.0 kHz, was 250 ms in duration, and had an overall level of about 62.7 dB SPL (a spectrum level of about 25 dB, so the tone-to-noise ratio was about 35 dB). The onset of the tone always preceded the onset of the noise by 50 ms. The tone was gated using a 5-ms cosine-squared rise and decay, and the noise was gated using a 2-ms cosine-squared rise and decay. The same random sample of noise was used across all presentations of a triplet, across all triplets, and across all subjects for both the auditory- and visual-attention studies. (The use of a single sample of frozen noise was important for the purpose of averaging nSFOAE responses across repeated conditions, and for comparing these averaged responses across the various experimental conditions.)
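The relation between the overall noise level, the spectrum level, and the tone-to-noise ratio quoted above can be checked with a few lines of arithmetic, assuming a flat noise spectrum across the 0.1–6.0 kHz passband (variable names are illustrative).

```python
import math

overall_level_db = 62.7           # overall noise level, dB SPL (from the text)
f_low_hz, f_high_hz = 100.0, 6000.0
tone_level_db = 60.0              # tone level, dB SPL (from the text)

bandwidth_hz = f_high_hz - f_low_hz
# Spectrum level = overall level minus 10*log10(bandwidth) for a flat spectrum.
spectrum_level_db = overall_level_db - 10 * math.log10(bandwidth_hz)
tone_to_noise_db = tone_level_db - spectrum_level_db

print(round(spectrum_level_db, 1), round(tone_to_noise_db, 1))
```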

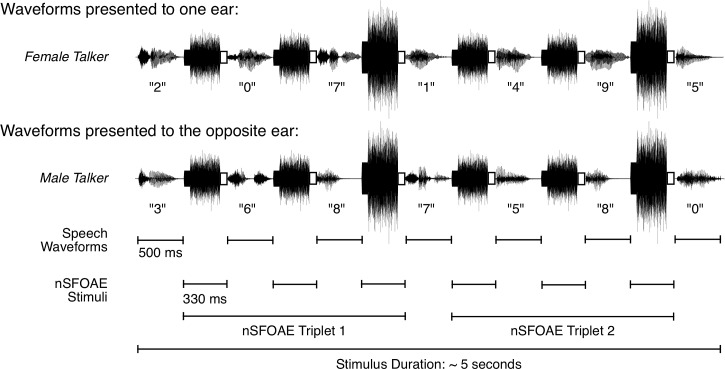

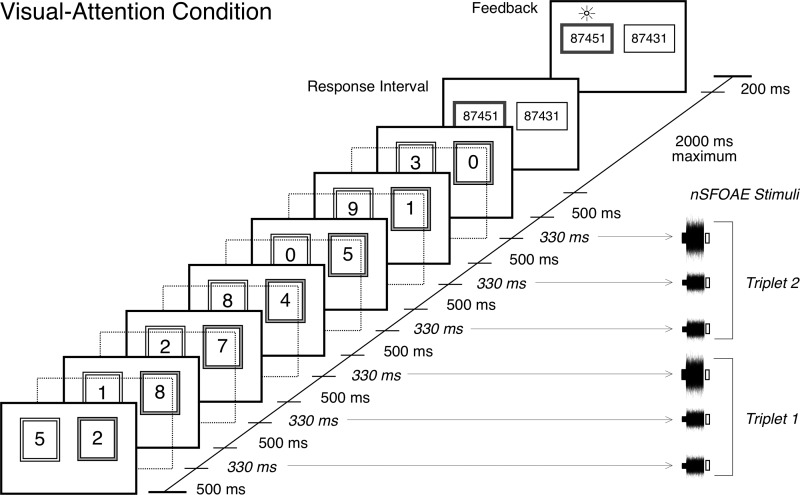

The digit stimuli and the SFOAE-eliciting stimuli were interleaved in time, as shown for a single dichotic-attention trial in Fig. 1. The two traces show the stimuli presented separately to the two ears, the female voice to one ear and the male voice to the other ear. Note that the interleaved SFOAE-eliciting stimuli were simultaneous and identical in the two ears (diotic), and the digits were simultaneous but different (dichotic). Because there were six SFOAE-eliciting presentations per trial, estimates for two triplets were obtained from each trial (the third and sixth presentations always were double amplitude, as shown). During data collection and data analysis, the estimates for the two triplets were kept separate; the responses themselves were averaged across trials within a block, and response magnitudes were averaged across blocks (see below), but no data were combined across triplets. On every trial, a silent 2000-ms response interval, and then a 200-ms feedback interval, followed the seventh digit stimulus. Thus, a single trial of the nSFOAE procedure lasted approximately 7 s. As Fig. 1 makes clear, during the auditory dichotic-attention blocks, about half of the trials produced nSFOAE responses from the right ear when attention was directed to the right ear (called the “ipsilateral” condition), and about half of the trials produced nSFOAE responses from the right ear when attention was directed to the left ear (the “contralateral” condition). These responses were kept separate initially. For the diotic-attention condition, there was no ipsilateral/contralateral distinction. Shown in Fig. 1 are small white boxes at the end of each SFOAE-eliciting stimulus. These mark the 30-ms silent period that was the topic of our previous reports (Walsh et al., 2014a,b).

FIG. 1.

Schematic showing how the speech sounds and the nSFOAE-eliciting stimuli were interleaved during one trial of the dichotic-listening condition in the auditory study. Each ear was presented with a series of seven spoken digits, one series spoken by a female talker, and the other series spoken simultaneously by a male talker. The ear receiving the female talker was selected randomly on each trial. Each digit was presented in a 500-ms temporal window. A 330-ms ISI separated consecutive digits, during which the nSFOAE-eliciting stimuli were presented. The latter always was composed of a 300-ms tone and a 250-ms frozen sample of wideband noise, and the onset of the tone always preceded the onset of the noise by the difference in their durations. A 30-ms silent period, shown here as an open rectangle, followed each nSFOAE-eliciting stimulus for the purpose of estimating the magnitude of the physiological noise in the nSFOAE recordings. The nSFOAE cancellation procedure was performed separately on each of the two triplets presented on each trial, yielding two estimates of the nSFOAE per trial. Although not shown here, a 2000-ms silent response interval and a 200-ms feedback interval completed each trial. During the response interval, the subject performed a two-alternative matching task based on the digits spoken by the female talker. For each block of trials, the physiological responses from the trials having a correct behavioral response were based on about 20 to 30 trials. [Reprinted with permission from Walsh et al. (2014a).]
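The timing relations in Fig. 1 can be tallied in a short sketch, assuming that the intervals listed are contiguous and that no other gaps occur within a trial. Under those assumptions the stated intervals add up to 7.68 s per trial, a little more than the rounded figure quoted in the text, and 35 to 50 trials would take roughly 4.5 to 6.4 min.

```python
N_DIGITS = 7
DIGIT_MS = 500
ISI_MS = 330
TONE_MS = 300
NOISE_MS = 250
SILENT_MS = 30
RESPONSE_MS = 2000
FEEDBACK_MS = 200

n_isis = N_DIGITS - 1                                # six ISIs between seven digits
noise_onset_delay_ms = TONE_MS - NOISE_MS            # tone leads the noise by 50 ms
isi_is_filled = (TONE_MS + SILENT_MS == ISI_MS)      # 300 ms + 30 ms = 330 ms
triplets_per_trial = n_isis // 3                     # two triplets per trial
trial_ms = (N_DIGITS * DIGIT_MS + n_isis * ISI_MS
            + RESPONSE_MS + FEEDBACK_MS)


def block_minutes(n_trials):
    """Duration of a block, ignoring calibration and criterion-setting time."""
    return n_trials * trial_ms / 60_000
```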

Each block of trials began with a calibration period, during which no speech stimuli were presented. For calibration, the level of a 500-Hz tone was adjusted in the right ear canal of the subject to attain 65 dB SPL. This routine was run separately for each of the ER-2 earphones. The calibration factors obtained were used to scale the amplitude of the stimulus used to elicit the nSFOAE so that the presentation levels for the two earphones were as similar as possible. This calibration routine was followed by two criterion-setting routines, and the main data-acquisition routine. The first criterion-setting routine was based on the responses obtained during the first 12 trials of each block; during those 12 trials, all nSFOAE responses were accepted (for both triplets) unless the peak amplitude of the response was greater than 45 dB SPL. (Waveforms whose amplitudes exceeded this limit typically were observed when the subject moved, swallowed, or produced some other artifactual noise.) All of the accepted nSFOAE responses collected during the first 12 trials were averaged point-by-point, and the resultant waveform served as the foundation for the accumulating nSFOAE average to be constructed during the main acquisition routine. Furthermore, the rms value of each accepted nSFOAE response was computed, and this distribution of rms values was used to evaluate subsequent responses during the main acquisition routine. The second criterion-setting routine consisted of a 20-s recording in the quiet during which no sound was presented to the ears; this was done just prior to every block. The median rms voltage from this recording was calculated, and was used as a measure of the ambient (physiological) noise level of that individual subject for that block of trials.

During actual data collection, each new nSFOAE response was compared to the responses collected during the two criterion-setting routines and was accepted into the accumulating nSFOAE average if either one of the two criteria was satisfied. First, the rms value of the new nSFOAE was compared to the distribution of rms values collected during the first criterion-setting routine. If the new rms value was less than 0.25 standard deviations above the median rms of the saved distribution, then the new nSFOAE response was added to the accumulating average. Second, each new nSFOAE response was subtracted point-for-point from the accumulating nSFOAE average. The rms of this difference waveform was computed, then converted to dB SPL. If the level of the difference waveform was less than 6.0 dB above the noise level measured earlier in the quiet, the new nSFOAE was accepted into the accumulating average. Each nSFOAE always was evaluated by both criteria, but only one criterion needed to be satisfied for the data to be accepted for averaging. The nSFOAE waveforms accepted from triplet 1 of a trial always were evaluated and averaged separately from the nSFOAEs evaluated and averaged from triplet 2 of a trial. At the end of each trial, subjects were given feedback on the computer screen as to which nSFOAE waveforms (triplet 1 or 2) were accepted. The block of trials terminated when behavioral responses had been given to at least 30 trials on which the nSFOAE response had been accepted for both triplets 1 and 2 (including trials with incorrect behavioral responses). This typically required about 35–50 trials, or about 4–6 min.
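The two acceptance criteria can be sketched as a single function. This is an illustration under assumptions, not the authors' implementation: waveforms are taken to be in pascals, the sample standard deviation is used (the text does not say which estimator was applied), and the function and parameter names are hypothetical.

```python
import math
import statistics

P_REF = 20e-6   # reference pressure for dB SPL, in pascals


def rms(x):
    return math.sqrt(sum(s * s for s in x) / len(x))


def accept(new_response, running_average, criterion_rms, noise_floor_db,
           ref=P_REF):
    """Return True if either acceptance criterion is satisfied."""
    # Criterion 1: rms below the median + 0.25 SD of the criterion distribution.
    limit = (statistics.median(criterion_rms)
             + 0.25 * statistics.stdev(criterion_rms))
    passes_rms = rms(new_response) < limit

    # Criterion 2: difference from the running average is within 6 dB
    # of the noise level measured in the quiet.
    diff = [a - b for a, b in zip(running_average, new_response)]
    diff_rms = rms(diff)
    if diff_rms == 0.0:
        passes_diff = True              # identical waveforms: trivially close
    else:
        diff_db = 20 * math.log10(diff_rms / ref)
        passes_diff = diff_db < noise_floor_db + 6.0

    return passes_rms or passes_diff


# Toy example in arbitrary units (ref=1.0 so that levels are easy to follow).
criterion = [1.0, 1.1, 0.9, 1.0]
average = [1.0, -1.0, 1.0, -1.0]
quiet_response = [1.0, -1.0, 1.0, -1.0]
noisy_response = [3.0, -3.0, 3.0, -3.0]

ok_quiet = accept(quiet_response, average, criterion, noise_floor_db=-10.0, ref=1.0)
ok_noisy = accept(noisy_response, average, criterion, noise_floor_db=-10.0, ref=1.0)
ok_noisy_lenient = accept(noisy_response, average, criterion, noise_floor_db=5.0, ref=1.0)
```

In the toy example, the quiet response passes the first criterion, whereas the noisy response passes only when the noise floor is high enough for the second criterion to be met.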

2. Subject training

As noted above, each subject was screened for normal hearing prior to participating in the studies described here. After the screening procedure, the subjects who passed were given immediate training with the simultaneous behavioral and physiological stimuli by completing several blocks of trials of the auditory-inattention listening condition. During this training, subjects became familiar with the general testing and recording procedure. They learned to remain as still as possible during the test blocks and to press the response key without head or other body movements, permitting artifact-free OAE recordings. The subjects were not given training with the selective auditory-attention conditions prior to data collection. However, the two male subjects (L01 and L05) participated in an earlier, pilot version of this experiment that used the same experimental conditions, but slightly different control conditions, so they were experienced with the auditory-attention conditions.

E. Data analysis

At the end of each block of trials for the diotic auditory-attention condition, there were four physiological measures: An averaged physiological response for all the trials having correct behavioral responses, another average for those having incorrect behavioral responses, and each of those separately for triplets 1 and 2. For the dichotic auditory-attention condition, there were eight physiological measures at the end of each block of trials because the four measures described for the diotic condition were kept separately for trials on which the female voice (or female-derived SSNs) was in the right ear (which had the recording microphone) or the left (contralateral) ear. By saving these additional averages, we were able to test the logical possibility that the amount of efferent activity differed in ears having, and not having, the targeted female voice—whether there was “ear suppression.” (As will be seen, there was no systematic difference in the physiological responses from the ipsilateral and contralateral ears, within either subjects or conditions, an unexpected result.) For the auditory inattention condition, there were no incorrect trials (by definition), but the responses were kept separately for the ipsilateral and contralateral trials and for the two triplets, meaning that there were four averaged physiological measures per block of trials. (For the visual-attention study described below, the attention blocks were conceptually similar to the dichotic blocks in the auditory-attention study; thus, there were eight physiological averages at the end of each block. For the inattention conditions in the visual-attention study, there were four physiological averages at the end of each block.)

F. Analyzing nSFOAE responses

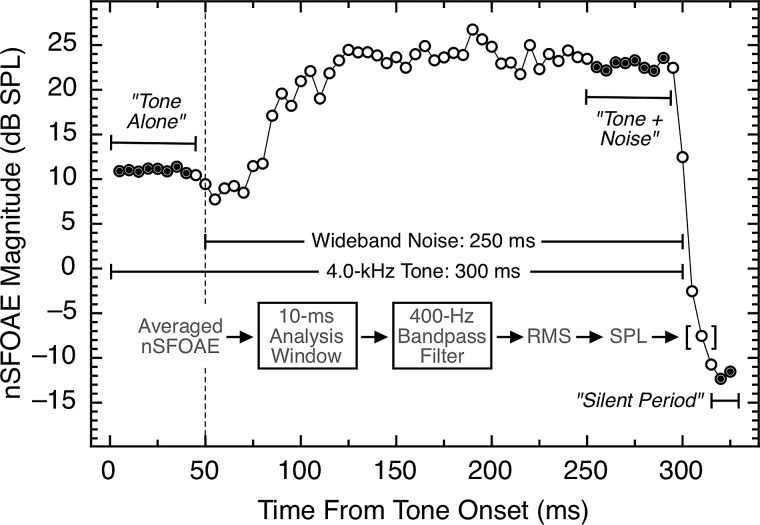

Following data collection, each averaged nSFOAE response (330 ms in duration) was analyzed offline by passing the averaged difference waveform through a succession of 10-ms rectangular analysis windows, beginning at the onset of the response, and advancing in 1-ms steps. At each step, the waveform segment was bandpass filtered between 3.8 and 4.2 kHz (the tonal signal was 4.0 kHz) using a sixth-order, digital elliptical filter. The rms voltage was computed for each 10-ms window and converted to dB SPL. Figure 2 shows an example of an nSFOAE response from subject L01, averaged from one block of trials. Time is shown along the abscissa, and nSFOAE magnitude is plotted on the ordinate. The analysis was performed in 1-ms steps, but only every fifth window is plotted in Fig. 2 to reduce data density (see footnote 2).
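The windowing step described above can be illustrated with a short sketch. It is written under stated assumptions: the sampling rate `fs_hz` and the reference `ref_rms` are hypothetical parameters, and the 3.8 to 4.2 kHz elliptic bandpass filtering of each segment is deliberately omitted so that the example needs only the standard library.

```python
import math


def sliding_levels_db(waveform, fs_hz, win_ms=10.0, step_ms=1.0, ref_rms=1.0):
    """Level (dB re ref_rms) in successive rectangular analysis windows."""
    win = int(round(win_ms * fs_hz / 1000.0))    # samples per window
    step = int(round(step_ms * fs_hz / 1000.0))  # samples per step
    levels = []
    for start in range(0, len(waveform) - win + 1, step):
        segment = waveform[start:start + win]
        # A bandpass filter around the 4.0-kHz tone would be applied to
        # `segment` here; it is left out of this sketch.
        v_rms = math.sqrt(sum(x * x for x in segment) / win)
        levels.append(20.0 * math.log10(v_rms / ref_rms)
                      if v_rms > 0 else -math.inf)
    return levels
```

For a 330-ms response, 10-ms windows advanced in 1-ms steps give 321 window positions, so successive windows overlap by 90%.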

FIG. 2.

An example of an nSFOAE response averaged from one block of trials of one condition. To summarize the data, tone-alone magnitudes were averaged from 5 to 40 ms, and tone-plus-noise magnitudes were averaged from 250 to 285 ms (thirty-five 10-ms epochs each; indicated by the filled circles). All nSFOAE responses, across all subjects and conditions, were analyzed in this same manner. The noise floor for these measurements was about −13.0 dB SPL. The data from the silent period already have been reported (Walsh et al., 2014a,b).

At the left in Fig. 2 is the nSFOAE response to the 50 ms of 4.0-kHz tone-alone, followed by the nSFOAE response to tone-plus-noise, in turn followed by the response during the 30-ms silent period. As can be seen, the response to tone-alone appears to be essentially instantaneous and essentially constant throughout the time course of the tone. By contrast, the response following the onset of the weak wideband noise was an initial dip, or “hesitation” of about 25 ms, followed by a rising dynamic response whose magnitude became essentially constant for the remainder of the tone-plus-noise stimulus. Walsh et al. (2010a) attributed the hesitation to mechanical suppression plus neural delay and attributed the rising dynamic phase to the onset of MOC activation (as described by Guinan et al., 2003). The waveform morphology shown here was typical, but there were individual differences in nearly every aspect of the subjects' nSFOAE responses (the seemingly random fluctuations in the response are in fact attributable to the amplitude fluctuations in the particular sample of noise used). (The nSFOAE response shown in Fig. 2 comes from one block of trials, but for all analyses presented here, data were combined across several blocks, as is described below.)

Here we will emphasize the segment of the nSFOAE response seen during the tone-plus-noise portion of the eliciting stimulus rather than the segment of the response seen during the tone-alone portion. The reason is that tone-plus-noise is well known for its ability to trigger the MOC reflex (Guinan et al., 2003; Walsh et al., 2010a, 2014a); a rising, dynamic response having a time constant of about 100 to 200 ms is a common result (e.g., Backus and Guinan, 2006). Tone-alone is not as effective at triggering the MOC reflex, especially a tone as weak as 60 dB SPL (Guinan et al., 2003). Evidence that our tone-alone stimulus was not triggering the MOC reflex is the absence of a rising, dynamic component to the nSFOAE response at tone onset; rather, the nSFOAE response to tone-alone had a rise time that matched that of the tonal stimulus for every subject. Accordingly, we believe that the nonlinearity underlying the tone-alone segment of our nSFOAE responses is primarily mechanical—no neural reflex is involved. Presumably the tone-plus-noise segment of our nSFOAE response involves some mechanical nonlinearity plus the additional nonlinearity associated with the MOC reflex. [We acknowledge that some of our averaged responses to tone-alone may contain some carryover of neural efferent effect from one test trial to the next (Backus and Guinan, 2006; Goodman and Keefe, 2006; Walsh et al., 2010b, 2014a,b), which we call persistence.]

1. Culling and pooling of nSFOAE waveforms

As noted, each block of trials yielded four or eight averaged difference waveforms (nSFOAE responses), each of which was similar to the response shown in Fig. 2. Not all of these waveforms were judged to be acceptable for further analysis, however. Averaged responses having clearly atypical morphologies were excluded as follows: An nSFOAE response from a block of trials was eliminated from further analyses if (1) the asymptotic level of the tone-alone or tone-plus-noise portion of the response was within 3.0 dB of the noise floor as determined for that block of trials, or (2) the average tone-plus-noise magnitude was more than 3.0 dB smaller than the average tone-alone magnitude. Using these criteria, only 18 of the 374 averaged nSFOAE responses collected across subjects, triplets, and conditions (∼5%) were culled.
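The two culling rules can be expressed as a small predicate. This is a sketch only: the function name is hypothetical, and treating "within 3.0 dB of the noise floor" as a strict inequality is an assumption, since the text does not say how a value exactly at the boundary was handled.

```python
def should_cull(tone_alone_db, tone_plus_noise_db, noise_floor_db,
                margin_db=3.0):
    """Return True if an averaged nSFOAE response should be excluded."""
    # Rule 1: either asymptotic level lies within margin_db of the noise
    # floor measured for that block (boundary handling is an assumption).
    near_floor = (tone_alone_db - noise_floor_db < margin_db
                  or tone_plus_noise_db - noise_floor_db < margin_db)

    # Rule 2: tone-plus-noise magnitude is more than margin_db smaller
    # than the tone-alone magnitude.
    reversed_growth = tone_alone_db - tone_plus_noise_db > margin_db

    return near_floor or reversed_growth
```

Applied per block and per triplet, a rule of this kind would flag only responses that sit at the noise floor or that shrink substantially when the noise is added.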

Within blocks, difference waveforms obtained from individual triplets (trials) were summed point-for-point in time with other difference waveforms to yield final responses like those in Fig. 2. By averaging this way, random fluctuations were canceled out, and the weak nSFOAE response was allowed to emerge. Across blocks, however, the difference waveforms themselves were not summed; rather, the decibel values from corresponding 10-ms analysis windows were averaged. As described above, the nSFOAE responses from individual blocks were processed with a series of 10-ms windows (in 1-ms increments), each sample was filtered around the 4.0-kHz tone, and the rms amplitude of each window was calculated and transformed into decibels SPL. These decibel values then were averaged, window-by-window, across the nSFOAE responses obtained on different blocks of trials to determine the final overall averaged nSFOAE response for a condition for a subject. To summarize the responses resulting from this procedure, we averaged the nSFOAE magnitudes (in decibels) for the thirty-five 10-ms analysis windows beginning at 5 ms and for the thirty-five windows beginning at 250 ms (90% overlap for successive 10-ms windows). Those we call the asymptotic levels for the tone-alone and tone-plus-noise segments of the response, respectively (see Fig. 2).
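The across-block pooling and the asymptotic summary described above might look like this in code. The sketch assumes that each block has already been reduced to a list of decibel values, one per analysis window, and that list index `i` corresponds to the window beginning `i * step_ms` after response onset; both function names are hypothetical.

```python
def average_across_blocks(blocks_db):
    """Window-by-window mean of decibel values across blocks of trials."""
    n_blocks = len(blocks_db)
    return [sum(values) / n_blocks for values in zip(*blocks_db)]


def asymptotic_level(levels_db, start_ms, n_windows=35, step_ms=1.0):
    """Mean level over n_windows successive windows starting at start_ms."""
    first = int(round(start_ms / step_ms))
    selected = levels_db[first:first + n_windows]
    return sum(selected) / len(selected)
```

With these helpers, `asymptotic_level(avg, 5)` would summarize the tone-alone segment and `asymptotic_level(avg, 250)` the tone-plus-noise segment. Note that the averaging is done on decibel values, not on the waveforms themselves, which is the distinction the paragraph draws between within-block and across-block pooling.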

2. Evaluating results

Numerous pairwise comparisons were of interest here, some within subjects and some across subjects. For each such comparison, an effect size (d) was calculated using the following equation:

$$d = \frac{m_1 - m_2}{\sqrt{\left(s_1^2 + s_2^2\right)/2}} \qquad (1)$$

where the numerator is the difference in the means (m) for the two conditions of interest, and the denominator is an estimate of the common standard deviation (s) for the two distributions. By convention, effect sizes larger than 0.2, 0.5, and 0.8 are considered small, medium, and large, respectively (Cohen, 1992).
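As a worked illustration of the effect-size calculation, the sketch below divides the difference in means by an estimate of the common standard deviation. The specific pooling used here (the root mean square of the two sample standard deviations) is an assumption for the example, as are the function names and the "below small" label for values not exceeding 0.2.

```python
import math
import statistics


def effect_size(cond_a, cond_b):
    """Difference in means divided by a pooled standard deviation."""
    pooled_sd = math.sqrt(
        (statistics.variance(cond_a) + statistics.variance(cond_b)) / 2.0)
    return (statistics.mean(cond_a) - statistics.mean(cond_b)) / pooled_sd


def cohen_label(d):
    """Conventional size label for an effect size (Cohen, 1992)."""
    size = abs(d)
    if size > 0.8:
        return "large"
    if size > 0.5:
        return "medium"
    if size > 0.2:
        return "small"
    return "below small"  # label chosen for this sketch only
```

For example, two conditions with block means of 4 and 3 dB and a common standard deviation of 2 dB give d = 0.5. Because d scales the difference by the variability, a 2 to 3 dB difference can correspond to a large effect when the block-to-block variability is low.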

For some comparisons, matched or unmatched t-tests also were used to evaluate possible differences between sets of data. For other comparisons, a bootstrapping procedure was implemented as a way of assessing the likelihood that the effect sizes obtained were attributable simply to chance. For each pair of conditions to be compared, all the data were pooled, a sample equal to the N of one of the conditions was drawn at random to simulate that condition, the remaining data were taken to simulate the second condition, an effect size was calculated for the two simulated conditions, and that value was compared to the effect size actually obtained in the study itself. This resampling process was repeated 20 000 times, and a tally was kept of how often the absolute value of the effect size in the resamples equaled or exceeded the effect size actually obtained in the study for that comparison. This tally, divided by 20 000, was taken as an estimate of the implied significance (p) of the actual outcome. (Our use of 20 000 resamples was a carry-over from our previous applications of this procedure, and although that number of resamples did well exceed the number of possible combinations for some of our comparisons, note that this over-sampling had no effect on our estimates of implied significance.) Also note that using the absolute values of the resampled effect sizes led to a relatively conservative (“two-tailed”) assessment of implied significance. The resampling process was accomplished using custom-written LabVIEW software.
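The resampling procedure can be sketched in a few lines. The original analysis was done in custom LabVIEW software, so this Python version is only an illustration: the function names, the seeded random generator, and the pooled form of the effect size are assumptions.

```python
import math
import random
import statistics


def effect_size(a, b):
    """Difference in means over a pooled standard deviation (assumed form)."""
    pooled_sd = math.sqrt(
        (statistics.variance(a) + statistics.variance(b)) / 2.0)
    diff = statistics.mean(a) - statistics.mean(b)
    if pooled_sd == 0.0:  # guard for degenerate resamples
        return 0.0 if diff == 0.0 else math.copysign(math.inf, diff)
    return diff / pooled_sd


def implied_significance(cond_a, cond_b, n_resamples=20000, seed=0):
    """Proportion of resamples whose |d| equals or exceeds the observed |d|."""
    rng = random.Random(seed)
    observed = abs(effect_size(cond_a, cond_b))
    pooled = list(cond_a) + list(cond_b)
    n_a = len(cond_a)
    tally = 0
    for _ in range(n_resamples):
        # Draw n_a values at random to simulate one condition; the
        # remaining values simulate the other condition.
        rng.shuffle(pooled)
        d = effect_size(pooled[:n_a], pooled[n_a:])
        if abs(d) >= observed:  # absolute value gives a two-tailed estimate
            tally += 1
    return tally / n_resamples
```

With only a few blocks per condition, the number of distinct splits is small (for example, 70 ways to divide eight values into two groups of four), so the smallest attainable estimate in that case is about 2/70, regardless of how many resamples are drawn.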

III. RESULTS: AUDITORY-ATTENTION STUDY

A. Behavioral tasks

The goal was to have a behavioral task that was neither too easy nor frustratingly difficult, and that goal was accomplished. All subjects performed above chance and below perfection at recognizing the middle five digits spoken by the female voice for both the dichotic and diotic auditory-attention conditions. Across the eight subjects, the average percent-correct performance was 86.4% and 86.6%, for the dichotic and diotic conditions, respectively. On average, each subject completed 4.7 blocks of 30 or more trials per condition. Five of the subjects performed better in the dichotic-listening condition, and three performed better in the diotic-listening condition. The two male subjects having experience with early versions of the tasks (L01 and L05) found the diotic condition consistently, if only slightly, more difficult. Only one subject improved noticeably across the two test sessions (for both attention conditions), from about 71% correct to about 84% correct.

Whenever a subject responded within the 2.0-s response interval, a reaction time (RT) was calculated between the end of the time interval containing the seventh digit presentation and the key press. The values were saved separately for correct and incorrect responses except in the inattention condition, where all key presses were scored as “correct.” Paired t-tests showed that RTs were significantly faster in the inattention condition than in the two attention conditions (p ≤ 0.025, two-tailed and adjusted for multiple comparisons). This was expected because the inattention condition required no forced-choice decision about the content of the SSN stimuli heard during the trial. For both of the auditory-attention conditions, RTs were significantly slower for incorrect trials than for correct trials. For example, across the eight subjects in the dichotic auditory-attention condition, the mean RTs were 1520 and 1290 ms for incorrect and correct trials, respectively; unequal-variance t(7) = 3.8, p < 0.01. The results were similar for the diotic condition (and for the visual-attention conditions described below). There was no significant difference between the RTs for correct trials during the dichotic and diotic auditory-attention conditions. Thus, both the memory tests and the RT results confirm that all the subjects were actively engaged in the behavioral tasks (see footnote 1).

B. Cochlear responses during attention and inattention

For all subjects, there were differences in the magnitudes of the nSFOAE responses measured under conditions of attention and inattention. Typically, the differences were 2–3 dB. Before showing those results, we need to further explain the data analysis.

1. Dichotic condition: Ipsilateral and contralateral responses

When the auditory-attention study was designed, the dichotic condition was of particular interest because it provided the ability to test for “ear suppression.” On approximately half of the trials in the dichotic condition, the attended female voice was in the right ear, the same ear as the microphone recording the nSFOAE responses. This was called the ipsilateral condition. On the other half of the trials, the female voice was in the left ear—called the contralateral condition. Given the neural circuitry of the OC efferent system (Brown, 2011), and various facts and speculations about the functioning of that system (Cooper and Guinan, 2006; Giard et al., 1994; Guinan, 2006, 2010; Harkrider and Bowers, 2009; Jennings et al., 2011; Kawase et al., 1993; Kumar and Vanaja, 2004; Lukas, 1980; Walsh et al., 2010b), we expected that there could be differences in nSFOAE magnitude on ipsilateral and contralateral trials, and accordingly, the data were saved separately for the two situations. However, for the majority of our subjects, the nSFOAE responses for the ipsilateral and contralateral conditions were essentially the same (see below), and this was true for both triplets. The absence of ear suppression, while unexpected, allowed us to pool the data obtained from the ipsilateral and contralateral trials, and thereby increase the stability of our estimates for the dichotic condition. The pooling was accomplished not by summing the difference waveforms themselves, but by averaging the decibel values in the first 10-ms window of the averaged ipsilateral response (difference waveform) from each available block with that in the first window for the averaged contralateral response from each available block, and then repeating that process for each successive set of 10-ms windows in the two 330-ms responses (1-ms increments). In this way, 4–6 ipsilateral averaged responses were pooled with a corresponding number of contralateral responses.

To be precise about the similarities between the ipsilateral and contralateral nSFOAE responses: For each subject, statistical comparisons were made between the asymptotic values estimated for the tone-alone segments for triplet 1, the tone-plus-noise segments for triplet 1, and the same for triplet 2. Only two of those 32 statistical comparisons even approached marginal significance: (1) For all four blocks that L03 completed for the dichotic condition, the asymptotic magnitude for tone-plus-noise on triplet 2 was greater for the ipsilateral than the contralateral trials; the average difference was 1.6 dB. (2) For all six blocks that L05 completed for the dichotic condition, the asymptotic magnitude for tone-alone on triplet 2 was greater for the ipsilateral than the contralateral trials; the average difference was 2.8 dB. For L03, the p-value for the comparison for tone-plus-noise for triplet 2 was 0.052, and for L05, the p-value for the comparison for tone-alone for triplet 2 was 0.047 (matched t-tests). After Bonferroni correction for the 32 comparisons, these two differences did not achieve statistical significance, and the 30 other comparisons all had smaller differences than these. Accordingly, we felt it was appropriate to pool the ipsilateral and contralateral data for all subjects (see footnote 1).
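The Bonferroni arithmetic behind this conclusion is simple enough to check directly. In the sketch below, the family-wise alpha of 0.05 is an assumption (the text does not state the level used), and the function name is hypothetical.

```python
def bonferroni_significant(p_values, n_comparisons, alpha=0.05):
    """Return the corrected threshold and a pass/fail flag per p-value."""
    threshold = alpha / n_comparisons  # per-comparison criterion
    return threshold, [p <= threshold for p in p_values]


# The two smallest p-values reported for the ipsilateral/contralateral tests.
threshold, flags = bonferroni_significant([0.052, 0.047], n_comparisons=32)
```

Under that assumed alpha, the per-comparison criterion is 0.05 / 32, or about 0.0016, so neither 0.052 nor 0.047 comes close to surviving the correction.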

For the inattention condition in the auditory-attention study, the physiological responses were averaged and stored separately depending upon whether the SSNs derived from the female voice were in the ipsilateral or contralateral ear, even though that manipulation was of no consequence for the subject's behavioral response. To obtain final averages, those ipsilateral and contralateral responses were combined as described above for the dichotic-attention condition. Matched t-tests showed no significant differences between the “ipsilateral” and “contralateral” responses in the inattention condition, and this was true for all subjects. This null outcome served as an important assessment of our procedures. Compared to the dichotic condition, where ipsilateral and contralateral differences seemed plausible, the same division of data in the inattention condition showed that such differences were unlikely to occur due to chance. (For the visual-attention study described below, the “ipsilateral” and “contralateral” averages also were combined to yield the final averaged response for each block of trials. No significant statistical differences were observed; as in the other conditions just described, the “ipsilateral” and “contralateral” responses were essentially the same within all subjects.) Accordingly, we conclude that the physiological mechanisms operating in our study were essentially equally effective in the two ears.

2. Inattention and attention conditions compared

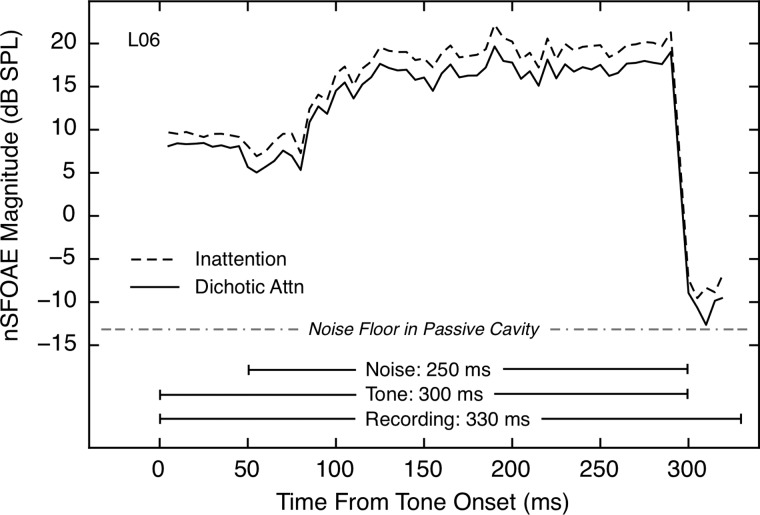

Representative examples of the nSFOAE responses obtained in this study are shown in Fig. 3. For this subject, the responses during most of the tone-alone and tone-plus-noise periods were larger during inattention than during attention. [This difference also was observed in the 30-ms silent period, as was reported and discussed by Walsh et al. (2014a,b).] For this subject, similar differences in responses for the inattention and dichotic conditions were obtained for triplet 2, and these differences were present as well when the inattention responses for both triplets were compared to those of the diotic-attention condition.

FIG. 3.

The nSFOAE responses for the full 330-ms measurement period for one subject for both the auditory inattention condition and the dichotic attention condition. The first 50 ms shows the response to tone-alone, the next 250 ms shows the response to tone-plus-noise, and the final 30 ms shows the response after the stimuli terminated. The dichotic response represents the mean of the nSFOAE averages obtained when the attended voice was either in the ear ipsilateral to the microphone or in the contralateral ear. The fine structure of the response during tone-plus-noise is not random fluctuation, but rather, it is attributable to the specific sample of noise used. Each response included only trials having correct behavioral responses and was averaged across at least four 30-trial blocks.

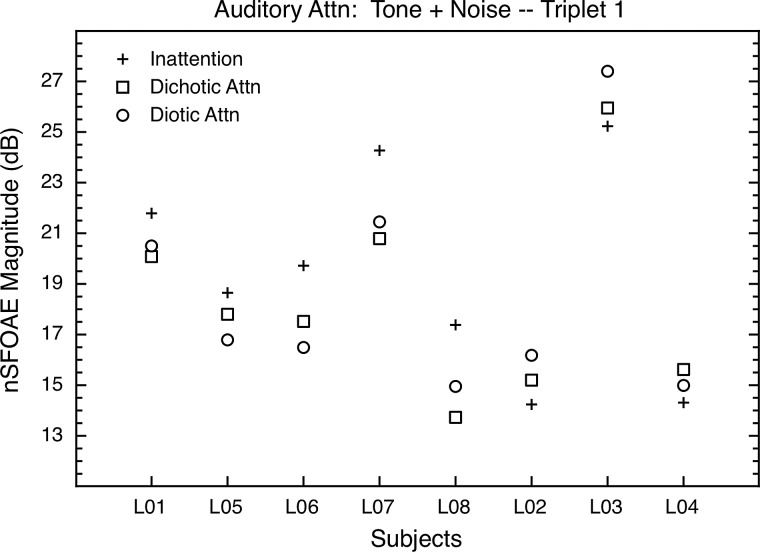

As noted above, the asymptotic nSFOAE responses for tone-alone and tone-plus-noise portions of the response were summarized by averaging the decibel values across thirty-five 10-ms windows beginning at 5 and at 250 ms, respectively (1-ms increments) from waveforms like those shown in Fig. 3. The results for the tone-plus-noise portion of the response are shown in Fig. 4. Note that for the five subjects grouped at the left in Fig. 4, the asymptotic values for tone-plus-noise were greater in the inattention condition than in either of the auditory-attention conditions, and for the three subjects at the right in Fig. 4, the asymptotic responses were smaller in the inattention condition than in either attention condition. The direction of the difference always was the same for a given subject, across multiple blocks of trials both within and across test sessions; it just was not the same across subjects. We ask the reader to ignore these individual differences in the direction of effect for the moment; a plausible explanation is provided in Sec. VII A below. For now, it is simply the existence of differences between attention and inattention that is of interest.

FIG. 4.

Auditory-attention study: The asymptotic nSFOAE responses to tone-plus-noise for triplet 1 for all subjects in the auditory study. The values shown began as means across thirty-five 10-ms analysis windows beginning at 250 ms into the 300-ms nSFOAE-eliciting stimulus within one block of trials (i.e., at response asymptote); those individual means then were averaged across at least four such blocks. The differences between inattention and attention were in one direction for the five subjects at the left of the figure, and in the other direction for the remaining three subjects. Standard errors of the mean were 0.73 dB, on average. The level of the noise floor of our measurement system at 4.0 kHz was about −13.0 dB SPL (see footnote 2).

The differences between inattention and attention in Fig. 4 are in the range of 2–3 dB, and thus are modest in size. However, these differences are substantially larger than many attentional differences reported using tone-evoked or click-evoked OAEs (Puel et al., 1988; Froehlich et al., 1990; Froehlich et al., 1993; Meric and Collet, 1992, 1994; Giard et al., 1994; Ferber-Viart et al., 1995; Maison et al., 2001; Harkrider and Bowers, 2009). Also, the decibel differences do not fully capture the magnitudes of our attentional differences within subjects. As evidence, we calculated effect sizes for the differences between the inattention and attention conditions within individual subjects. The results are shown in Table I. Effect sizes are shown separately for the dichotic and diotic conditions, each compared to the inattention condition. The top and bottom halves of the table contain the effect sizes for tone-plus-noise and tone-alone, respectively, and the left and right halves of the table pertain to the two triplets.

TABLE I.

Auditory-attention study: Effect sizes for the differences between the inattention condition and both attention conditions, shown separately for both triplets.

| Subject Number | Triplet 1: Inattention minus Dichotic | Triplet 1: Inattention minus Diotic | Triplet 2: Inattention minus Dichotic | Triplet 2: Inattention minus Diotic |
|---|---|---|---|---|
| **Tone + Noise** | | | | |
| L01 | 0.79 | 0.70 | 0.48 | −0.17 |
| L02 | −0.40 | −0.89 | −0.31 | −1.16 |
| L03 | −0.39 | −1.10 | −1.62<sup>a</sup> | −1.49<sup>b</sup> |
| L04 | −0.89 | −0.45 | −0.10 | 0.15 |
| L05 | 0.65 | 1.27<sup>b</sup> | −0.30 | −0.28 |
| L06 | 1.47<sup>b</sup> | 1.83<sup>b</sup> | 0.34 | 0.23 |
| L07 | 1.51<sup>b</sup> | 1.39 | 0.89 | 0.51 |
| L08 | 1.73<sup>a</sup> | 1.07<sup>b</sup> | 0.13 | 0.14 |
| Mean (absolute values) | 0.98 | 1.09 | 0.52 | 0.52 |
| **Tone Alone** | | | | |
| L01 | 0.32 | 0.22 | 0.38 | 0.02 |
| L02 | −0.48 | −1.10 | 0.37 | −0.55 |
| L03 | −0.66 | −1.00 | −0.34 | 0.12 |
| L04 | 0.00 | 0.19 | −0.70 | −0.97 |
| L05 | −1.67<sup>a</sup> | −0.87 | 0.27 | −0.04 |
| L06 | 0.68 | 0.86 | −0.21 | −0.24 |
| L07 | 0.95 | 0.47 | 0.93 | 0.17 |
| L08 | 1.38<sup>b</sup> | 1.09 | 0.35 | 0.17 |
| Mean (absolute values) | 0.77 | 0.73 | 0.44 | 0.29 |

<sup>a</sup> p ≤ 0.01 (Implied statistical significance computed using a bootstrapping procedure.)

<sup>b</sup> p ≤ 0.05 (Implied statistical significance computed using a bootstrapping procedure.)

As Table I reveals, the differences between attention and inattention were far more substantial for most subjects than the 2–3 dB differences of Fig. 4 suggest. Contributing to these large effect sizes is the low variability within subjects across blocks of trials. The differences in sign in Table I reflect the individual differences in direction of effect seen in Fig. 4. In accord with our intention to temporarily ignore the differences in direction of effect, the mean effect sizes shown across subjects are means of the absolute values of the individual effect sizes. Table I reveals that the differences between inattention and attention generally were smaller for triplet 2 than for triplet 1, and generally smaller for tone-alone than for tone-plus-noise.

IV. VISUAL-ATTENTION STUDY

The second study was highly similar to the first, the primary difference being that the attended stimuli were presented visually. Seven of the eight subjects from the auditory-attention study participated in the visual-attention study. Again, there were at least two 2-h test sessions conducted for every subject, and, for each condition, data were collected for at least four blocks of trials consisting of at least 30 trials each. As in the auditory-attention study, Etymotic earphones were fitted in both ear canals, and an Etymotic microphone was in the right ear only. The collection and analysis of nSFOAE responses was the same as in the auditory study.

A. Visual-attention task

The subject again was presented with two strings of seven digits on every trial, was asked to attend to one of them and, at the end of each trial, was required to recognize the middle five digits of the attended string. The two strings of digits were presented visually, side-by-side, for 500 ms each, with one sequence inside a pink box (the attended string) and one inside a blue box. The pink box was on the left side of the display on half of the trials at random, and remained on that side throughout a trial. Interleaved with the seven pairs of visual digits were the six, diotic nSFOAE-eliciting sounds, each lasting 330 ms. In some conditions, sequences of spoken digits (attention condition) or SSNs (inattention condition) were presented as additional distracters; when present, they were dichotic and simultaneous with the visual digits. The durations of the digits and eliciting sounds were the same as for the auditory-attention study. The trial-timing sequence, including the 2000-ms response interval and the 200-ms feedback interval, is shown in Fig. 5. For the inattention conditions, the subject was expected only to press a response key after the final pair of pink and blue boxes was presented. RTs were collected for all behavioral responses. The pink and blue sequences of visual digits (and the dichotic pairs of spoken digits or SSNs when they were presented) all were selected independently.

FIG. 5.

Trial-timing sequence for the visual-attention study. Sequences of seven pairs of digits were presented simultaneously for 500 ms each, one sequence inside a pink box (gray in the figure) and one inside an adjacent blue box. Interleaved with the seven pairs of visual digits were six diotic presentations of the nSFOAE-eliciting stimuli, each being 330 ms in duration. Following the last pair of visual digits was a response interval of 2000 ms, during which the subject pressed one of two response keys to indicate which of two sequences of five digits contained the middle five digits of the digit sequence in the pink box. Immediately following was a feedback interval of 200 ms, during which a small symbol was displayed over the correct five-digit choice. In the inattention conditions, the subject pressed a response key immediately after the last nSFOAE stimulus was presented (no digits were displayed). In some conditions, dichotic speech or SSNs were presented simultaneously with the visual digits. [Reprinted with permission from Walsh et al. (2014b).]

There were two attention conditions and two inattention conditions in the visual-attention study. For both inattention conditions, no visual digits were presented in the pink and blue boxes, and all the subject was required to do was press a response key immediately after the final pair of boxes was presented. For one attention and one inattention condition, the only auditory stimuli were the nSFOAE elicitors; neither auditory digits nor SSNs were presented. For the second attention condition, auditory digits were presented simultaneously with the visual digits. These presentations were dichotic (the female voice was in one ear and the male voice in the other ear, at random), and the two voices spoke strings of digits that were independent of each other and of the visual strings being presented. For the second inattention condition, SSNs were presented in place of the auditory digits.

Each subject adjusted the viewing distance to the monitor for his or her comfort. Those distances were not routinely measured, but the visual angles subtended by the digits unquestionably did vary across subjects. Under reasonable assumptions about viewing distance, the visual angle subtended was about 1.2–1.6 degrees for the height of the digits, about 2.4–3.2 degrees for the sides of the pink and blue boxes, and about 3.6–4.8 degrees for the lateral separation between the digits. Thus, the visual digits were large and well separated.

V. RESULTS: VISUAL-ATTENTION STUDY

A. Behavioral tasks

For the visual-attention study, just as for the auditory-attention study, performance on the digit-identification task during the attention conditions and the RTs during the inattention conditions revealed that all subjects were actively engaged in the behavioral tasks. Across the seven subjects, the percentage of correct decisions about the target digits was similar to that in the auditory-attention study; for the visual-attention condition without speech sounds, the average percent-correct performance was 92.0%, and for the visual-attention condition with speech sounds performance was 90.1% correct on average. Also similar to the auditory-attention study, reaction times were significantly faster for the visual-inattention conditions than for the visual-attention conditions (p ≤ 0.05, two-tailed), and RTs were significantly faster for correct versus incorrect trials (p ≤ 0.05, two-tailed; see footnote 1).

B. Cochlear responses during attention and inattention

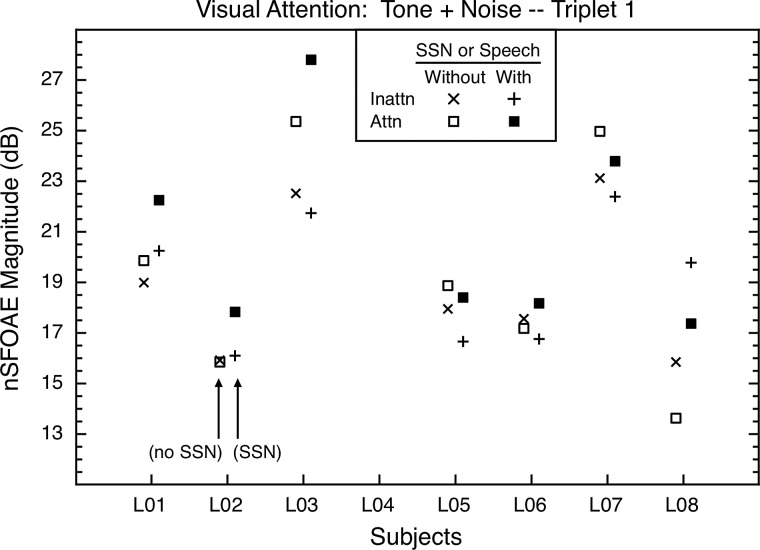

In the visual-attention study, just as in the auditory-attention study, there were differences in the magnitudes of the nSFOAE responses for the inattention and attention conditions, and this was true whether or not speech or SSNs were present as additional distracter stimuli. The asymptotic responses to tone-plus-noise for triplet 1 are shown in Fig. 6. The data from triplet 2 were similar.

FIG. 6.

Visual-attention study: The asymptotic nSFOAE responses to tone-plus-noise for triplet 1 for all subjects in the second study. One set of inattention and attention conditions (With) included spoken digits during the attention blocks and SSNs during the inattention blocks; another set included neither speech nor SSN stimuli (Without). The values shown began as means across thirty-five 10-ms analysis windows beginning at 250 ms into the 300-ms nSFOAE-eliciting stimulus within one block of trials; those individual means then were averaged across at least four such blocks. As in the auditory-attention study, the differences between inattention and attention were in different directions for the different subjects. Within subjects, standard errors of the mean were about 0.78 dB, on average.

1. Inattention and attention conditions compared

The pair of inattention and attention conditions having no spoken digits or SSNs present was the simplest test of whether the cochlea was affected during visual attention. Examination of Fig. 6 reveals that there were attentional differences between these conditions, although they generally were smaller than the attentional differences for the pair of conditions in which spoken digits and SSNs also were present as distracters. Again, the decibel differences between inattention and attention underestimated the actual attentional differences; see the within-subject effect sizes in Table II. The left and right halves of the table contain the effect sizes for the two triplets, and the top and bottom halves pertain to tone-plus-noise and tone-alone, respectively. Effect sizes are shown separately for the conditions that did, and did not, contain speech or SSNs as auditory distracters during visual attention, and as in Table I, absolute values were used when calculating the means of the effect sizes across subjects. Note in Fig. 6 and Table II that there again were individual differences in whether the attention or inattention condition produced the stronger nSFOAE, just as was true for the auditory-attention study. Furthermore, individual subjects often did not exhibit the same directionality of effect in the two studies.

TABLE II.

Visual-attention study: Effect sizes for the differences between the inattention and attention conditions, shown separately for both speech/SSN conditions and both triplets.

| Subject Number | Triplet 1: Inattention minus Attention, Without SSNs<sup>a</sup> | Triplet 1: Inattention minus Attention, With SSNs | Triplet 2: Inattention minus Attention, Without SSNs | Triplet 2: Inattention minus Attention, With SSNs |
|---|---|---|---|---|
| Tone + Noise | | | | |
| L01 | −0.33 | −0.92 | −1.14 | 1.80<sup>b</sup> |
| L02 | 0.02 | −0.84 | 0.02 | 0.42 |
| L03 | −0.51 | −1.92<sup>b</sup> | −1.15<sup>c</sup> | 2.17<sup>b</sup> |
| L04 | — | — | — | — |
| L05 | −0.78 | −0.88 | −0.38 | 1.12<sup>c</sup> |
| L06 | 0.23 | −1.63<sup>b</sup> | −0.05 | 0.38 |
| L07 | −0.94 | −0.95 | −0.62 | 0.31 |
| L08 | 1.12<sup>c</sup> | 1.30<sup>c</sup> | −0.50 | 0.25 |
| Mean (absolute values) | 0.56 | 1.21 | 0.55 | 0.92 |
| Tone Alone | | | | |
| L01 | 1.51<sup>c</sup> | −0.79 | 0.48 | 0.52 |
| L02 | 1.04 | −0.60 | 0.18 | 0.20 |
| L03 | −0.75 | −0.44 | −0.47 | −0.30 |
| L04 | — | — | — | — |
| L05 | −0.32 | −0.77 | −0.44 | −0.18 |
| L06 | 0.43 | −0.39 | 0.43 | −0.41 |
| L07 | −0.49 | −0.74 | 1.16<sup>c</sup> | −0.21 |
| L08 | 1.05<sup>c</sup> | 0.85 | −0.17 | −1.14<sup>c</sup> |
| Mean (absolute values) | 0.80 | 0.65 | 0.48 | 0.42 |

<sup>a</sup> SSN: speech-shaped noise stimuli, presented dichotically, and used as auditory distracters. When SSNs were used in the inattention condition (WITH), dichotic spoken digits were used in the corresponding attention condition.

<sup>b</sup> p ≤ 0.01 (Implied statistical significance computed using a bootstrapping procedure.)

<sup>c</sup> p ≤ 0.05 (Implied statistical significance computed using a bootstrapping procedure.)

Table II reveals that attending to visual stimuli did produce differences in the cochlea during the tone-plus-noise portion of the eliciting stimulus. In accord with intuition, additional distracting stimuli did matter for most subjects. The attentional differences generally were larger when spoken digits or SSNs were present than when they were not. For 24 of the 28 entries for tone-plus-noise in Table II, the effect size was greater in absolute value when speech or SSNs were present than when not. Moreover, when spoken digits or SSNs were present in the visual-attention study, the effect sizes were generally quite similar to those in the auditory-attention study, and that was true for both tone-plus-noise and tone-alone (compare Tables I and II). These similarities constitute a form of replication for the auditory-attention study, although the subjects were the same.
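The comparison just described can be checked directly from the tone-plus-noise rows of Table II. The following Python sketch is an illustration only, not the analysis code used in the study; the names `TRIPLET_1`, `TRIPLET_2`, `mean_abs`, and `summarize` are hypothetical, and subject L04 is omitted because the table reports no values for that subject.

```python
# Tone-plus-noise effect sizes transcribed from Table II.
# Each tuple is (without SSNs, with SSNs); L04 has no data and is omitted.
TRIPLET_1 = {
    "L01": (-0.33, -0.92),
    "L02": (0.02, -0.84),
    "L03": (-0.51, -1.92),
    "L05": (-0.78, -0.88),
    "L06": (0.23, -1.63),
    "L07": (-0.94, -0.95),
    "L08": (1.12, 1.30),
}
TRIPLET_2 = {
    "L01": (-1.14, 1.80),
    "L02": (0.02, 0.42),
    "L03": (-1.15, 2.17),
    "L05": (-0.38, 1.12),
    "L06": (-0.05, 0.38),
    "L07": (-0.62, 0.31),
    "L08": (-0.50, 0.25),
}


def mean_abs(values):
    """Mean of absolute values, so opposite-signed effects do not cancel."""
    values = list(values)
    return sum(abs(v) for v in values) / len(values)


def summarize(triplet):
    """Column means (absolute values) and the count of subjects whose
    absolute effect size was larger with speech/SSNs than without."""
    pairs = list(triplet.values())
    larger_with = sum(1 for without, with_ssn in pairs
                      if abs(with_ssn) > abs(without))
    return {
        "mean_abs_without": round(mean_abs(p[0] for p in pairs), 2),
        "mean_abs_with": round(mean_abs(p[1] for p in pairs), 2),
        "pairs_larger_with": larger_with,
        "pairs_total": len(pairs),
    }


if __name__ == "__main__":
    for name, triplet in (("Triplet 1", TRIPLET_1), ("Triplet 2", TRIPLET_2)):
        print(name, summarize(triplet))
```

With the values as transcribed, 12 of the 14 subject-by-triplet pairs (that is, 24 of the 28 individual entries) have the larger absolute effect size when speech or SSNs were present, and the rounded column means agree with the "Mean (absolute values)" row of the table.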

VI. ADDITIONAL FINDINGS AND COMMENTS

A. Time delay of the SFOAE

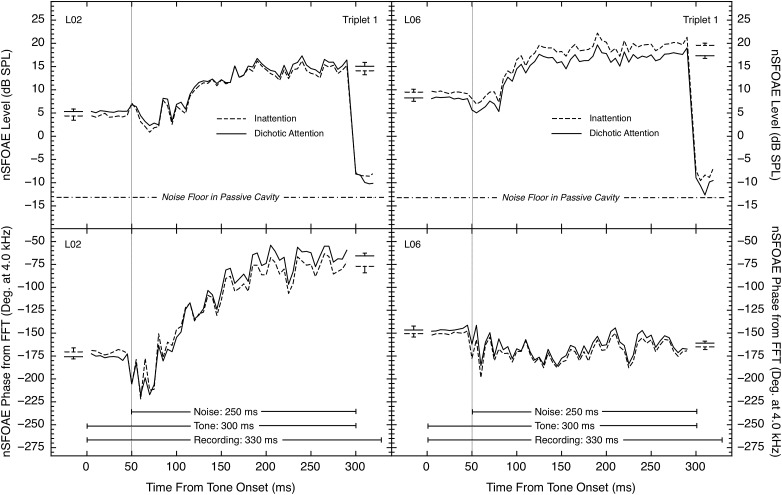

Although we have no direct measures of the time delays of the SFOAE itself, we did calculate FFTs for every 10-ms analysis window, and each of those calculations provided a phase value for the 4.0-kHz component of the nSFOAE. Figure 7 compares the level and phase data for two representative subjects, one showing a phase change of about 100 degrees from tone-alone to tone-plus-noise, and the other showing a phase change of less than 25 degrees. Three of the eight subjects showed the larger phase-change pattern seen on the left side of Fig. 7, and five showed relatively small phase changes. Each subject showed similar phase patterns in both the auditory and visual experiments. As can be seen, for some subjects, phase changed in a manner paralleling the changes in level (on the left in Fig. 7), but for other subjects phase did not show the same rising, dynamic pattern as level (on the right in Fig. 7).

FIG. 7.

Comparison of (top) level and (bottom) phase of the nSFOAE response at 4.0 kHz for triplet 1 for two subjects from the auditory-attention study. The timing lines in the bottom panels mark the tone-alone and tone-plus-noise portions of the response. Level measurements were obtained for each successive 10-ms analysis window using a filter centered at 4.0 kHz and having a bandwidth equal to 10% of its center frequency; the phase values were obtained by performing FFTs on each 10-ms analysis window. At the left and right within each panel, plus or minus one standard error is shown for the estimates of the asymptotic tone-alone and the asymptotic tone-plus-noise responses, respectively; standard errors were calculated across the multiple blocks that were averaged.
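As a rough illustration of how a phase value for the 4.0-kHz component can be read from one 10-ms analysis window, the sketch below evaluates a single DFT bin in plain Python. It is not the authors' code: the sampling rate of 50 kHz, the function names, and the synthetic test tone are all assumptions made for the example, and phase is expressed relative to a cosine that starts at the first sample of the window.

```python
import cmath
import math

SAMPLE_RATE_HZ = 50_000   # hypothetical sampling rate (not stated in this section)
WINDOW_MS = 10            # analysis-window duration given in the text
TONE_HZ = 4000            # frequency of the tone in the eliciting stimulus
N_SAMPLES = SAMPLE_RATE_HZ * WINDOW_MS // 1000  # samples per window


def phase_at_frequency_deg(window, sample_rate_hz, freq_hz):
    """Phase in degrees of one frequency component of `window`,
    computed from a single DFT bin (equivalent to one FFT output bin)."""
    n = len(window)
    k = freq_hz * n / sample_rate_hz  # bin index; whole number for whole cycles
    acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
              for i, x in enumerate(window))
    return math.degrees(cmath.phase(acc))


def synthetic_tone(phase_deg):
    """A 10-ms, 4.0-kHz cosine with a known starting phase (test signal only)."""
    phi = math.radians(phase_deg)
    return [math.cos(2 * math.pi * TONE_HZ * i / SAMPLE_RATE_HZ + phi)
            for i in range(N_SAMPLES)]


if __name__ == "__main__":
    window = synthetic_tone(-100.0)
    print(phase_at_frequency_deg(window, SAMPLE_RATE_HZ, TONE_HZ))
```

Because a 10-ms window holds exactly 40 cycles of a 4.0-kHz tone, the component falls on a single bin and the recovered phase matches the phase of the synthetic tone to within numerical error. The result is always wrapped to the range from −180 to 180 degrees.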

Note that these measures of phase are not simple because they are the phase of a “waveform” that results from subtracting the response to the single two-earphone presentation from the sum of the responses to the two single-earphone presentations. Also, the initial values (at time 0 ms) are necessarily constrained to be within a single period of the 4.0-kHz tone, meaning that a large difference in the time delay of the SFOAE (say, between the inattention and attention conditions) logically could be underestimated by one or more periods. Throughout the 300-ms interval of the recording, when the 4.0-kHz tone was present, phase values did remain within one period. Large, rapid phase changes were never observed in our succession of 10-ms analysis windows after the onset of the wideband noise. Larger rapid phase changes were occasionally seen during the initial 25 ms following noise onset, where the nSFOAE level dipped briefly, making phase measurements less reliable. As was the case for the level functions, the fine grain of the phase functions was very similar in the attention and inattention conditions, again suggesting that these fluctuations were due to the characteristics of the wideband noise stimulus, not random variability in the data.
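The period ambiguity mentioned above can be made concrete with a small calculation. The Python sketch below is illustrative only; the function names are hypothetical, and the sign convention relating phase to delay is left open because it depends on the FFT convention used. It converts a phase difference at 4.0 kHz into an equivalent time difference and lists the other time differences that would produce the same wrapped phase.

```python
TONE_HZ = 4000.0
PERIOD_MS = 1000.0 / TONE_HZ  # one period of the 4.0-kHz tone, in ms


def phase_to_delay_ms(phase_deg, freq_hz=TONE_HZ):
    """Time difference (ms) equivalent to a phase difference (degrees)."""
    return (phase_deg / 360.0) * (1000.0 / freq_hz)


def candidate_delays_ms(phase_diff_deg, max_extra_periods=2, freq_hz=TONE_HZ):
    """All time differences consistent with one wrapped phase difference,
    allowing up to `max_extra_periods` whole periods in either direction."""
    return [phase_to_delay_ms(phase_diff_deg + 360.0 * n, freq_hz)
            for n in range(-max_extra_periods, max_extra_periods + 1)]


if __name__ == "__main__":
    print(PERIOD_MS)                      # period of the tone
    print(candidate_delays_ms(90.0, 1))   # delays indistinguishable by phase alone
```

A phase difference measured within a single period therefore fixes the time difference only up to a whole number of 0.25-ms periods, which is why a large difference in SFOAE delay could be underestimated.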

For consistency with the magnitude data, the phase values for the thirty-five 10-ms analysis windows beginning at 5 ms and at 250 ms were averaged to produce estimates of the asymptotic phase values for the tone-alone and tone-plus-noise portions of the response. This was done for the nSFOAE response from each block of trials for each subject, and the resulting values were averaged across the repeated measures of that condition for that subject. Those asymptotic phase values are shown in Table III. The tone-plus-noise data are shown at the top of the table, the tone-alone data at the bottom of the table, and the data for triplets 1 and 2 are shown at left and right, respectively. Means of the absolute values of phase are shown below the data for individual subjects.
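The two-stage averaging described above (first across analysis windows within a block, then across blocks) can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's code: the function names are hypothetical, each inner list is assumed to hold the per-window values already selected for one block, and phase values are assumed to be unwrapped so that a simple arithmetic mean is meaningful.

```python
import math
import statistics


def block_asymptote(window_values):
    """Mean across the analysis windows of one block
    (thirty-five 10-ms windows in the text)."""
    return statistics.fmean(window_values)


def condition_asymptote(blocks):
    """Average the per-block asymptotes across repeated blocks.

    Returns (mean across blocks, standard error across blocks).
    Requires at least two blocks; the text reports at least four.
    """
    block_means = [block_asymptote(b) for b in blocks]
    grand_mean = statistics.fmean(block_means)
    sem = statistics.stdev(block_means) / math.sqrt(len(block_means))
    return grand_mean, sem


if __name__ == "__main__":
    # Toy numbers only, not data from the study.
    toy_blocks = [[1.0, 2.0, 3.0], [3.0, 4.0, 5.0]]
    print(condition_asymptote(toy_blocks))
```

Computing the standard error across block means, rather than across individual windows, follows the description in the caption of Fig. 7, where standard errors were calculated across the blocks that were averaged.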

TABLE III.

Auditory-attention study: Asymptotic phase values at 4.0 kHz from FFTs for inattention and attention conditions, shown separately for tone-plus-noise (top) and tone-alone (bottom), and for both triplets.

| Subject Number | Triplet 1: Inattention | Triplet 1: Dichotic | Triplet 1: Diotic | Triplet 2: Inattention | Triplet 2: Dichotic | Triplet 2: Diotic |
|---|---|---|---|---|---|---|
| Tone + Noise | | | | | | |
| L01 | −161.33 | −152.37 | −153.67 | −159.19 | −150.94 | −150.75 |
| L02 | −77.09 | −65.66 | −63.28 | −74.42 | −64.34 | −60.69 |
| L03 | −39.19 | −38.53 | −43.96 | −37.93 | −44.08 | −44.89 |
| L04 | −36.46 | −46.77 | −42.33 | −41.36 | −49.02 | −46.24 |
| L05 | 125.12 | 117.46 | 122.26 | 121.63 | 119.31 | 123.61 |
| L06 | −165.02 | −160.93 | −162.94 | −160.96 | −160.99 | −160.37 |
| L07 | −140.06 | −118.32 | −136.48 | −145.01 | −130.67 | −156.26 |
| L08 | −145.64 | −133.18 | −126.63 | −136.07 | −138.22 | −133.81 |
| Tone Alone | | | | | | |
| L01 | −194.15 | −179.30 | −181.17 | −221.86 | −202.62 | −210.12 |
| L02 | −170.55 | −175.76 | −175.45 | −203.93 | −201.18 | −211.77 |
| L03 | 26.97 | 24.40 | 17.10 | 38.97 | 43.47 | 43.65 |
| L04 | −88.88 | −92.99 | −93.08 | −151.07 | −144.21 | −154.29 |
| L05 | 117.39 | 123.75 | 127.90 | 24.04 | 10.37 | 5.13 |
| L06 | −150.55 | −146.58 | −148.62 | −86.40 | −129.23 | −123.28 |
| L07 | −147.57 | −132.34 | −135.31 | −25.72 | −33.12 | −27.33 |
| L08 | −149.61 | −141.69 | −134.76 | −132.15 | −144.47 | −142.52 |

For the purpose of comparing the differences in phase values between the inattention and attention conditions, effect sizes were calculated, just as was done for the level data (Tables I and II); those calculations are shown in Table IV. The format of the table is the same as for Table III. The effect sizes in Table IV are, on average, medium or large in value. The reason is that, just as for the level measure, the asymptotic phase values exhibited quite small variability across blocks of trials. The data in Table IV are from the auditory-attention study, but the data for the visual-attention study were quite similar.

TABLE IV.

Auditory-attention study: Effect sizes for the phase differences between the auditory inattention condition and both auditory-attention conditions, shown separately for both triplets.

| Subject Number | Triplet 1: Inattention minus Dichotic | Triplet 1: Inattention minus Diotic | Triplet 2: Inattention minus Dichotic | Triplet 2: Inattention minus Diotic |
|---|---|---|---|---|
| Tone + Noise | | | | |
| L01 | −0.97 | −1.05 | −0.92 | −1.06 |
| L02 | −0.77 | −0.85 | −0.68 | −0.80 |
| L03 | −0.09 | 0.58 | 0.78 | 0.80 |
| L04 | 0.89 | 0.42 | 0.87 | 0.50 |
| L05 | 0.62 | 0.21 | 0.19 | −0.15 |
| L06 | −0.76 | −0.33 | 0.01 | −0.11 |
| L07 | −0.77 | −0.11 | −0.51 | 0.38 |
| L08 | −1.13 | −1.25 | 0.18 | −0.15 |
| Mean (absolute values) | 0.75 | 0.60 | 0.52 | 0.49 |
| Tone Alone | | | | |
| L01 | −1.42 | −1.92 | −0.85 | −0.55 |
| L02 | 0.51 | 0.42 | 0.33 | 0.47 |
| L03 | 0.50 | 1.79 | −1.28 | −0.97 |
| L04 | 0.35 | 0.38 | −0.39 | 0.24 |
| L05 | −0.54 | −0.88 | 0.48 | 0.62 |
| L06 | −0.37 | −0.18 | 0.86 | 0.66 |
| L07 | −0.78 | −0.76 | 0.30 | 0.30 |
| L08 | −0.83 | −1.06 | 0.56 | 0.43 |
| Mean (absolute values) | 0.66 | 0.92 | 0.63 | 0.53 |
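For readers who want a concrete picture of a within-subject effect size and a bootstrapped significance estimate, the sketch below shows one conventional way to compute them from per-block asymptotic values. Both parts are assumptions made for illustration: the effect size is taken to be a standardized mean difference with a pooled standard deviation (Cohen's d), and the resampling scheme draws both conditions from the pooled blocks. The definitions actually used in the study are given outside this section and may differ. All function names and the toy numbers are hypothetical.

```python
import math
import random
import statistics


def cohens_d(a, b):
    """Standardized difference between two sets of block values,
    using a pooled standard deviation (an assumed definition)."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    diff = statistics.fmean(a) - statistics.fmean(b)
    if pooled_var == 0.0:
        # Degenerate resamples with no variability in either group.
        return 0.0 if diff == 0.0 else math.copysign(math.inf, diff)
    return diff / math.sqrt(pooled_var)


def bootstrap_p(a, b, n_resamples=2000, seed=0):
    """Proportion of resamples, drawn with replacement from the pooled
    blocks, whose |d| is at least as large as the observed |d|."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    observed = abs(cohens_d(a, b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_resamples):
        resampled_a = rng.choices(pooled, k=len(a))
        resampled_b = rng.choices(pooled, k=len(b))
        if abs(cohens_d(resampled_a, resampled_b)) >= observed:
            hits += 1
    return hits / n_resamples


if __name__ == "__main__":
    # Toy block values in dB, not data from the study.
    inattention = [10.0, 10.1, 9.9, 10.2]
    attention = [12.0, 12.1, 11.9, 12.2]
    print(cohens_d(inattention, attention))
    print(bootstrap_p(inattention, attention))
```

The sketch also shows why small variability across blocks, as noted in the text, yields medium or large effect sizes even for modest differences: the difference between condition means is divided by a small pooled standard deviation.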

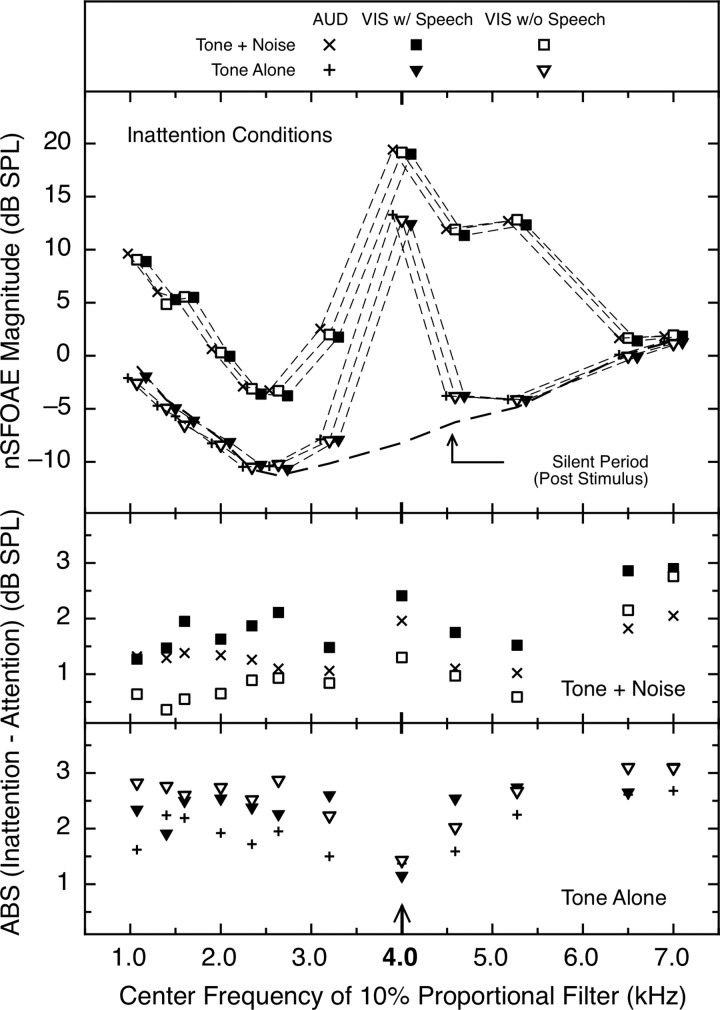

B. Frequency extent of attentional differences

All nSFOAE magnitude data presented to this point were obtained with the analysis filter centered at 4.0 kHz, the frequency of the tone in our MOC-eliciting stimulus. By selecting different center frequencies of the analysis filter, we were able to examine the magnitude of the attentional difference across the spectrum (for consistency, we kept the bandwidth of the filter at 10% of the center frequency and then corrected the data to compensate for the different rise times of those analysis filters; see Walsh et al., 2014a,b).<sup>2</sup> Once the analysis filter was off the frequency of the 4.0-kHz tone, the measurements were of the nSFOAEs to the relevant frequency components of the weak wideband noise. The data were analyzed separately for the auditory- and visual-attention studies, for the inattention and attention conditions, and for the tone-alone, tone-plus-noise, and silent periods of the acoustic stimulus. As was true for the 4.0-kHz data above, the directionality of the difference between inattention and attention could differ across frequency within a subject, it could differ across subjects at individual frequencies, and the patterns were not the same for all subjects. This fact again made simple means of the inattention/attention difference inappropriate, so again the absolute values of the differences between inattention and attention were used.
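To illustrate why a rise-time correction is needed when the bandwidth is held at 10% of the center frequency, the sketch below computes the passband for several center frequencies and the rise time of each filter relative to the 4.0-kHz filter. It rests on two assumptions that are not taken from the text: the passband is placed symmetrically about the center frequency, and rise time is taken to be inversely proportional to bandwidth, which is a general rule of thumb rather than the specific correction applied in the study. The function names are hypothetical.

```python
def analysis_band(center_hz, fractional_bandwidth=0.10):
    """Lower edge, upper edge, and bandwidth (Hz) of an analysis filter whose
    bandwidth is a fixed fraction of its center frequency.
    Assumes the band is symmetric about the center frequency."""
    bandwidth = fractional_bandwidth * center_hz
    return center_hz - bandwidth / 2, center_hz + bandwidth / 2, bandwidth


def relative_rise_time(center_hz, reference_hz=4000.0):
    """Rise time relative to the reference filter, assuming rise time is
    inversely proportional to bandwidth (so also to center frequency here)."""
    return reference_hz / center_hz


def mean_abs_difference(inattention, attention):
    """Mean of |inattention - attention| across paired values, so that
    differences of opposite sign do not cancel."""
    diffs = [abs(i - a) for i, a in zip(inattention, attention)]
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    for fc in (2000.0, 4000.0, 6000.0):  # illustrative center frequencies
        print(fc, analysis_band(fc), relative_rise_time(fc))
```

Under these assumptions a filter centered at 2.0 kHz is half as wide as the 4.0-kHz filter and responds about twice as slowly, so measurements taken at a fixed time after stimulus onset are not directly comparable across center frequencies without compensation.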

In the top panel of Fig. 8 are the mean data from only the relevant inattention conditions for the tone-plus-noise, tone-alone, and silent-period portions of the nSFOAE response as the center frequency of the analysis filter was varied. As can be seen, these data confirm two expectations: (1) the magnitudes of the nSFOAEs for tone-plus-noise were considerably stronger than those for both tone-alone and the silent period; and (2) the magnitudes of the nSFOAEs at, and close to, 4.0 kHz were considerably stronger than those at more distant frequencies (where the acoustic stimulus was much weaker). Both outcomes are in accord with past experience with nSFOAE behavior as level was varied (see Walsh et al., 2010a,b). Also evident from the top panel of Fig. 8 is that the responses from the inattention conditions of the auditory- and visual-attention studies were remarkably similar in magnitude and pattern across frequency (presumably, in part, because the same sample of wideband noise was used for all subjects in both studies). (Supplemental analyses revealed that the flat segment in the vicinity of 5.0 kHz in the tone-plus-noise data exists even when the sampling of frequency values is more dense.)

FIG. 8.