Abstract

Background

Few guidelines exist regarding authorship on manuscripts resulting from large multicenter trials. The HF-ACTION investigators devised a system to address assignment of authorship on trial publications and tested the outcomes in the course of conducting the large, multi-center, NHLBI-funded trial (n=2,331; 82 clinical sites; 3 countries). The HF-ACTION Authorship and Publication (HAP) Scoring System was designed to enhance rate of dissemination, recognize investigator contributions to the successful conduct of the trial, and harness individual expertise in manuscript generation.

Methods

The HAP score was generated by assigning points based on investigators’ participation in trial enrollment, follow-up, and adherence, as well as participation in committees and other trial activity. Overall publication rates, publication rates by author, publication rates by site, and correlation between site publication and HAP score using a Poisson regression model were examined.

Results

Fifty peer-reviewed, original manuscripts were published within 6.5 years following conclusion of study enrollment. In total, 137 different authors were named in at least 1 publication. Forty-five of the 82 sites (55%) had an author named to at least one manuscript. A Poisson regression model examining incident rate ratios revealed that a higher HAP score was associated with a higher incidence of a manuscript, with a 100-point increase in site score corresponding to an approximately 32% increase in the incidence of a published manuscript.

Conclusions

Given the success in publishing a large number of papers and widely distributing authorship, regular use of a transparent, objective authorship assignment system for publishing results from multi-center trials may be recommended to optimize fairness and dissemination of trial results.

Introduction

The recent increase in large multi-center clinical trials poses a challenge to author assignment decisions in a scientific environment that places high importance on a researcher's productivity. Little guidance exists surrounding authorship assignment processes when the number of researchers in a trial exceeds that which can be negotiated by discussion and consensus alone. The International Committee of Medical Journal Editors (ICMJE) has provided the most widely accepted criteria for authorship, including: 1) substantial contributions to conception and design, acquisition of data, or analysis and interpretation of data; 2) drafting the article or revising it critically for important intellectual content; 3) final approval of the version to be published; and 4) agreement to be accountable for all aspects of the work1. However, in a multicenter trial, such guidelines are insufficient due to the large number of investigators who could meet these criteria. Methods for assigning authorship in these cases continue to lack organization, transparency, and consistency.

When clear publication policies and procedures do not exist, co-investigators, including junior investigators and site coordinators, may experience uncertainty regarding whether they will receive academic recognition for their work. One study examining criteria for promotion in an academic setting revealed that participation in multi-center trials may already be undervalued relative to the time commitment required2. This uncertainty can in turn affect not only manuscript generation but also investigator enthusiasm, rate of enrollment, and ultimately trial efficiency. Moreover, the common practice of limiting authorship to a small number of key investigators limits the potential to engage a larger number of authors who may contribute time, energy, and expertise. This can reduce the total number and rate of publications generated from a trial, resulting in incomplete reporting of the potential findings from the study and, ultimately, in suboptimal use of a valuable resource, particularly in publicly funded trials.

Senior leadership in the HF-ACTION (Heart Failure-A Controlled Trial Investigating Outcomes of Exercise Training) trial responded to these issues by creating the HF-ACTION Authorship and Publication (HAP) Scoring System to optimize output of articles by leveraging the talents of those who made contributions to the study based on ICMJE criteria3. Study leadership aimed to widely distribute authorship assignments in a transparent manner that objectively translated investigator contributions into a numeric score. The current analysis describes the relationship between the HF-ACTION method for authorship assignment and the overall manuscript output, including site and author distribution, with the hypothesis that the implementation of the HAP Scoring System would result in a broad distribution of authorship, defined as >50% of sites with an author named to a published manuscript.

Methods

Trial Organization

The HF-ACTION study was a randomized clinical trial designed to test the efficacy of exercise training as a supplement to standard care in heart failure patients with ejection fraction ≤ 35% and New York Heart Association functional class II-IV. The HF-ACTION study enrolled 2,331 participants between April 2003 and February 2007. The study was conducted at 82 regional centers (67 in the United States, 9 in Canada, and 6 in France), the Coordinating Center, and core labs. Data collected from subsets of HF-ACTION participants at enrolling centers composed the ancillary studies on biomarkers, DNA, and nuclear imaging conducted in those respective core labs. The National Heart, Lung, and Blood Institute (NHLBI) provided funding for the study infrastructure, procedures, and publication of the trial design manuscript and the primary outcome manuscript. Additional funding for data collection, analysis, and manuscript development came from a combination of industry funding (specific ancillary studies) and individual investigators’ discretionary funds. No extramural funding was used to support this work.

HF-ACTION Publications Committee

The Executive Committee formulated a list of topics for manuscripts after initiation of enrollment but prior to the close of enrollment. The Publications Committee members, appointed by the Executive Committee, were responsible for approving all manuscripts publishing original HF-ACTION results. The committee provided expected timelines for manuscript progress, monitored compliance with these timelines, and promoted completion of manuscripts in a timely fashion.

Method for Ranking Investigator and Center

The key component of the HF-ACTION authorship assignment guidelines was the comprehensive HAP scoring system. The overall goals were to: 1) acknowledge individual investigators for their efforts in the trial; 2) take advantage of investigator interest, subject content expertise, and track record of experience to maximize the rate of dissemination as measured by acceptance of manuscripts in peer-reviewed journals; and 3) generate enthusiasm by ensuring transparency, incorporating input from all stakeholders, and utilizing merit-based criteria3.

Three pathways existed to become an author of a manuscript (see summary in Table I) and included (a) assignment to an authorship position based on a site's HAP score, (b) assignment to an article based on additional expertise or contributions not recognized through the scoring system, or (c) investigator proposal of a unique manuscript idea not captured in the list generated by the Executive Committee. These three publication pathways are described in detail below.

Table I.

HF-ACTION Authorship Pathways

| HAP Score Pathway | Individual Expertise Pathway | Manuscript Suggestion Pathway |

|---|---|---|

| 1. EC generates manuscript topics. 2. Sites select preferred topics from EC topic list in order from 1-5. 3. EC assigns topics to sites based on preferences, beginning with top-ranked sites. | 1. EC assigns key members of the core labs and ancillary studies to lead author positions as appropriate. 2. EC assigns additional authors through step 3 in HAP score pathway (i.e., based on site preferences and score). | 1. Author proposes topic to EC. 2. EC approves or rejects topic. 3. If approved, lead author submits writing group for approval. 4. EC approves or rejects writing group. |

EC = Executive Committee

Scoring system

The HAP score was generated by assigning points based on a site's performance at all phases of the trial, such as patient enrollment, follow-up, and exercise adherence, and investigator participation in committees. While the same scoring system was used for both baseline and outcomes manuscripts, more weight was given to subject enrollment in the baseline score and more emphasis was given to subject follow-up in the outcomes manuscript score. The range of scores was 56-846 for baseline and 6-957 for outcome scores. This scoring system has been described previously in greater detail3.

Members of the Coordinating Center and NHLBI representatives were not eligible to receive points based on this system because they were not affiliated with a site. These members were assigned to manuscripts based on their level of participation and their stated interest in manuscript topics.

Manuscript Topics

The manuscripts were categorized as primary, secondary, and ancillary/tertiary. The Executive Committee circulated the list of projected manuscript topics to each site's investigators, who indicated their top article interests in order from 1-5. The EC then eliminated articles with insufficient interest. Authors selecting these low interest topics were reassigned to topics they had ranked as a lower priority. Outside this list of projected manuscripts, investigators could propose topics through a manuscript suggestion process.

Method for Authorship Assignment

The method for authorship assignment integrated the site score and the investigator manuscript topic preference. The site that earned the highest number of points based on trial contribution was offered the PI's first manuscript choice and was assigned lead authorship on the PI's highest-ranked manuscript. The PI of the second-ranked site was then given his/her preference for lead authorship, and that site was then re-ranked. This process continued, with re-ranking of sites occurring each time a new authorship position was assigned. Lead author positions were filled first, followed by senior author positions (second, third, and last position), and then by contributing author positions (all other author spots). The Executive Committee discussed placing a limit on the number of authors assigned to each topic; however, no limit was set, in order to honor the requests of as many investigators as possible.
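The assignment loop described above can be sketched as a greedy procedure. This is only an illustration under stated assumptions, not the committee's actual procedure: the `Site` class, the `assign_lead_authors` function, and especially the `penalty` re-ranking rule are hypothetical, since the text states only that sites were re-ranked each time a position was assigned.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    score: float            # HAP site score
    preferences: list       # topics ranked from most to least preferred
    assigned: int = 0       # lead positions received so far


def assign_lead_authors(sites, topics, penalty=100.0):
    """Greedy sketch: the top-ranked site picks first, then sites are re-ranked.

    `penalty` is a hypothetical re-ranking rule (points deducted for each
    position a site has already received); the source does not state the rule.
    """
    open_topics = set(topics)
    leads = {}
    while open_topics:
        # Only sites that still have an open preferred topic can be offered one.
        pool = [s for s in sites if any(t in open_topics for t in s.preferences)]
        if not pool:
            break  # remaining topics drew no interest
        # Re-rank every round: effective score falls as a site receives positions.
        best = max(pool, key=lambda s: s.score - penalty * s.assigned)
        # The site PI gets the highest-ranked preference that is still open.
        topic = next(t for t in best.preferences if t in open_topics)
        leads[topic] = best.name
        open_topics.remove(topic)
        best.assigned += 1
    return leads


# Hypothetical sites, scores, and topic labels, for illustration only.
example_sites = [
    Site("A", 800, ["t1", "t2"]),
    Site("B", 750, ["t1", "t3"]),
    Site("C", 720, ["t2"]),
]
example_leads = assign_lead_authors(example_sites, ["t1", "t2", "t3"])
print(example_leads)
```

The same loop could be repeated for senior and contributing author positions. Topics that no site ranked are left unassigned, which mirrors the elimination of topics with insufficient interest.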

Assignment Based on Expertise

Certain articles were partially exempt from the scoring system and assigned instead to a specific group of authors, in order to acknowledge study members’ contributions that were not accounted for in the scoring system and due to the need for expertise regarding particular aspects of the trial. For example, the study design and rationale manuscript was authored by those investigators who were involved in the original grant proposal to NIH. The primary outcomes manuscripts were exempt from the scoring system.

Additionally, core laboratory members and individuals who secured funding for an ancillary study were assigned lead authorship positions on manuscripts pertaining to these activities. The additional authors on these manuscripts were assigned based on the scoring system. These manuscripts were also considered secondary outcomes and were coded for analysis purposes as “ancillary” manuscripts.

In recognition of their support for providing analysis and participation in the study, statisticians assumed a contributing authorship position on all manuscripts and were not factored into the scoring system. Statisticians could be listed as lead authors or senior authors if they suggested their own manuscript proposal.

Post-Assignment Manuscript Suggestion Process

Following completion of authorship assignment, individual investigators were able to submit suggestions for post hoc analyses that they wished to publish. The topic and writing group, chosen by the individual proposing the topic, were subject to Publications Committee approval. All articles assigned through this process were coded as “suggested” or “tertiary” manuscripts, depending on the level of interest generated.

Method for Analyzing Authorship System Outcomes

All published manuscripts were reviewed and compared to internal HF-ACTION documents, including proposed manuscript topics, writing group assignments, and site scores. Site affiliations were obtained primarily from Executive Committee/Publications Committee author assignment documents. When an author on a published manuscript was not listed in the original HF-ACTION assignments, the author was not included in a site's publication count. Descriptive analyses were performed evaluating the number of times an individual investigator was assigned authorship and the number of times an individual investigator participated as an author in a published manuscript. Each incidence of publication by an author was counted as an “author spot.” Thus, if a site had 2 authors listed on a single publication, the site had 2 author spots resulting from that manuscript. Site-specific descriptive statistics about authors, manuscript proposals, and publication were calculated from these data. Manuscripts were categorized as described throughout the Methods section. To determine the association of site score with publication, zero-inflated Poisson regression was used, adjusting for the number of persons and authors at each site. These analyses were initially conducted with a cut-off date of December 31, 2012 and were repeated with the end date of September 30, 2013 to update the publication list. Results reported are from the September 30, 2013 data unless otherwise noted.
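The "author spot" counting rule can be made concrete with a short sketch. The byline records, site labels, and function names below are hypothetical placeholders, not trial data; the sketch shows only the counting convention (one spot per author per publication, tallied by site).

```python
from collections import Counter

# Hypothetical byline records: one (manuscript_id, author, site) row per named author.
bylines = [
    ("ms01", "Author A", "site_101"),
    ("ms01", "Author B", "site_101"),
    ("ms01", "Author C", "site_102"),
    ("ms02", "Author A", "site_101"),
]


def author_spots_by_site(records):
    """Count author spots per site: two authors from one site on one
    manuscript contribute two spots to that site."""
    return Counter(site for _ms, _author, site in records)


def manuscripts_per_author(records):
    """Count distinct manuscripts per author (duplicate rows are ignored)."""
    unique_pairs = {(ms, author) for ms, author, _site in records}
    return Counter(author for _ms, author in unique_pairs)


spots = author_spots_by_site(bylines)
per_author = manuscripts_per_author(bylines)
sites_with_publication = len(spots)  # sites with at least one author spot
print(spots, per_author, sites_with_publication)
```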

Results

Overall Output

By September 30, 2013, 50 trial manuscripts had been published by HF-ACTION investigators. Table II details manuscript output by manuscript type. Out of a total of 22 baseline manuscript concepts that had writing groups assigned, 14 were eventually published. Two of the baseline manuscripts recommended by the Executive Committee were combined into one article for publication.

Table II.

Manuscript Type, Publication Rate, and Assignment Method

| | Baseline Manuscripts | Primary Outcomes Manuscripts | Secondary Outcomes Manuscripts | Suggested/Tertiary Outcomes Manuscripts | Review Manuscripts |

|---|---|---|---|---|---|

| Number proposed | 22 | 7 | 19 | Not available | Not available |

| Number published | 14 | 5 | 10 | 18 | 3 |

| Description | Manuscripts published using baseline data only. | Manuscripts containing analysis of primary outcomes specified in the original grants submitted to NIH | Manuscripts containing outcomes analysis not specified in original grant (includes core lab, ancillary outcomes) | Manuscripts containing outcomes analysis not suggested by EC; ideas brought forward by investigators. | Manuscripts which included HF-ACTION results in a review format |

| Author Assignment Method Used | HAP Score | EC Assignment | HAP Score/EC Assignment Combination | Self-Assignment/Lead Author Assignment with EC Approval | Self-Assignment with EC Approval |

EC = Executive Committee

There were 19 topics for secondary outcomes manuscripts allocated using the scoring system. Of the 19 topics, 10 were published by September 2013, including 7 secondary outcomes and 3 outcomes of the core laboratory work or ancillary studies. Additional outcomes manuscripts published included 5 primary outcomes manuscripts, the authorship of which was determined outside of the scoring system.

Eighteen outcomes manuscripts were published through the manuscript suggestion process. Finally, 3 review articles were written by HF-ACTION investigators and included results from the study. Among the 50 articles published, 29 articles (58%) grew out of topics suggested by the Executive Committee, and 21 (42%) were suggested by the post-assignment manuscript suggestion process.

By Author

In total, 137 different researchers were named as authors across the 50 HF-ACTION publications, resulting in 504 total author spots (Table III). In addition to the 137 published authors, 41 authors were named to a writing group but had not been included on a final publication as of the writing of this article, due to either manuscript publication delay or opting out of participation. Among the 137 authors, 75% (n=103) were authors on 3 or fewer manuscripts. Authors were most commonly (n=59, 43%) authors on one publication. Seven authors had 20 articles or more. Five of these 7 authors were members of the Executive Committee.

Table III.

Author Spots by Type of Site

| Type of Site | Number of Author Spots n (%) |

|---|---|

| Coordinating Center | 174 (35) |

| NIH | 44 (9) |

| Enrolling Sites | 281 (56) |

| Industry | 5 (1) |

| Total | 504 |

By Site

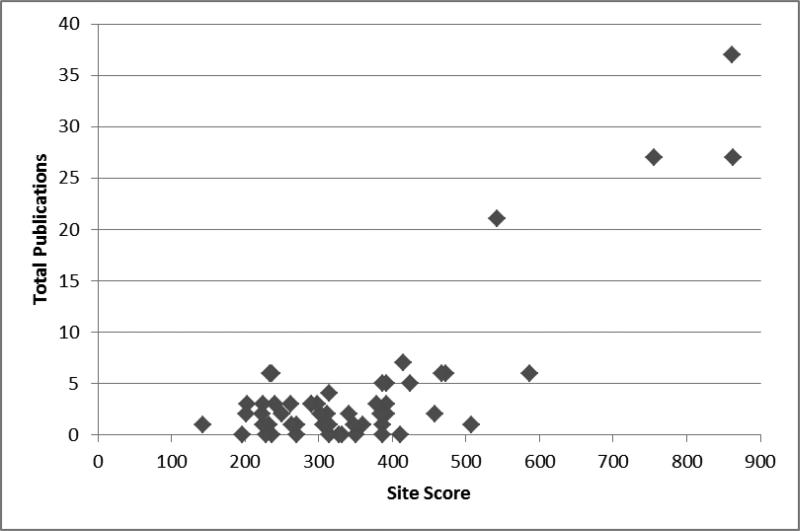

A total of 58 of the 82 sites (71%) had an author assigned to at least one manuscript. Sites that did not indicate an interest in topics put forward by the Publications Committee were not assigned to a manuscript. Forty-five of the 58 sites (78%; 55% of all sites) that were assigned to a manuscript had an author named on at least one of the published articles, leaving 13 sites which were assigned to a manuscript but later opted out of manuscript participation. The total number of publications of these 58 sites, along with the site score, is depicted in Figure I.

Figure I. Total Publications by Site Score.

Figure I shows the relationship of site score and total number of publications at each site. The figure includes only those sites which requested an authorship topic (n=58, 71% of sites).

In examining the distribution of published articles across sites, no site had more than 7% (n=37) of the 504 published authorship positions. Aside from enrolling sites, the Coordinating Center, with 35% (n=174) of authorship positions, had the greatest number of author spots (Table III).

Fidelity to Assignments

Of the 29 published articles originating from the Executive Committee, 100% of the writing groups had some change in authorship from the time of author assignment to the time of publication, and 100% of the writing groups had at least one author added to the writing group by the time of publication. Twenty-five of the 29 writing groups (86%) also had an author drop out in the time between author assignment and article publication. Sixteen of 29 articles originating from the Executive Committee (55%) had the published lead author change from the time of author assignment. Of these 16, 8 (50%) changed to a lead author who was part of the originally assigned writing group.

Association between Publication Rate and Site Score

Only HF-ACTION sites were included in the statistical analysis relating publication rate to site score, because Coordinating Center members, NHLBI officers, statisticians, and other authors not affiliated with an HF-ACTION site did not receive a score for their participation in the trial effort. Thus, the following analyses include only authors affiliated with sites and core labs who were originally given an author assignment, approximately 59% of all authors and 51% of all author spots.

Table IV presents the incident rate ratios (IRRs) of the Poisson regression models, illustrating the association between site score and number of manuscripts proposed and published, while taking the number of persons at the site into account. For every 100-point increase in average site score, the incidence of total authorship increased by about 32%. These results differed from the original analysis performed at the end of December 2012, which showed stronger statistically significant correlations for all published manuscripts, with an IRR of 1.40 for baseline manuscripts, 1.41 for outcomes manuscripts, 1.89 for suggested manuscripts, and 1.52 for the total manuscripts published (p<0.001).

Table IV.

Incident Rate Ratios for a 100 point increase in site score

| Manuscript Type | Author spots (n) | IRR (95% CI) | p-value |

|---|---|---|---|

| Baseline Proposed | 148 | 1.19 (1.04, 1.36) | 0.014 |

| Baseline Published | 139 | 1.23 (1.04, 1.46) | 0.016 |

| Outcomes Proposed | 253 | 1.25 (1.11, 1.42) | <0.001 |

| Outcomes Published | 190 | 1.29 (1.08, 1.54) | 0.005 |

| Suggested Published | 175 | 1.61 (1.38, 1.87) | <.0001 |

| Total | 504 | 1.32 (1.22, 1.43) | <.0001 |
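As a reading aid for the incident rate ratios, the worked equations below show how an IRR for a 100-point increase maps to a regression coefficient and a percent change. This is a simplified sketch under assumptions: it uses a plain log-linear Poisson mean, ignores the zero-inflation component, and the symbols $Y_i$ (author spots at site $i$), $s_i$ (site score), and $x_i$ (other covariates, such as the number of persons at the site) are notation introduced here rather than taken from the analysis.

```latex
\begin{align*}
\log \mathbb{E}[Y_i] &= \beta_0 + \beta_1 \,\frac{s_i}{100} + \gamma^{\top} x_i, \\
\mathrm{IRR}_{100} &= e^{\beta_1} = 1.32
  \quad\Longrightarrow\quad \beta_1 = \ln 1.32 \approx 0.278, \\
\text{percent change} &= \left(\mathrm{IRR}_{100} - 1\right) \times 100\% = 32\%, \\
\mathrm{IRR}_{200} &= e^{2\beta_1} = 1.32^{2} \approx 1.74 .
\end{align*}
```

Because the model is multiplicative, under these assumptions a 200-point difference in site score would correspond to roughly a 74% higher expected count, not 64%.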

Because of the high number of publications at Site 209, this site was removed from the dataset and the Poisson analysis was re-run for the September 2013 manuscripts (see Table V). After removal of Site 209, the baseline site score no longer had a significant effect on the number of baseline manuscripts proposed and published for each site, but the relationship was still positive. Similarly, the outcomes site score was not significantly associated with the number of outcomes manuscripts published. The suggested manuscripts (IRR 1.42; p<0.0001) and total number of manuscripts published (IRR 1.17; p=0.001) remained significantly associated with site score after removal of Site 209 from the analysis.

Table V.

Incident Rate Ratios for a 100 point increase in site score, Site 209 dropped

| Manuscript Type | IRR (95% CI) | p-value |

|---|---|---|

| Baseline Proposed | 1.14 (0.99, 1.32) | 0.063 |

| Baseline Published | 1.10 (0.93, 1.30) | 0.285 |

| Outcomes Proposed | 1.14 (1.00, 1.30) | 0.048 |

| Outcomes Published | 1.06 (0.93, 1.22) | 0.389 |

| Suggested Published | 1.42 (1.22, 1.66) | <.0001 |

| Total | 1.17 (1.07, 1.28) | 0.001 |

Discussion

The HF-ACTION system is not the first system developed for assigning authorship positions in multi-center trials. However, to our knowledge it is the first to attempt to quantify investigators’ contributions to the study to assign authorship4. The HAP scoring system resulted in a large number of articles and a wide spread of authorship using a highly transparent process. Across 45 sites, 137 authors were included on at least one manuscript. While there remained a core group from the Coordinating Center that had the highest participation, more than half of all author spots were held by investigators across the HF-ACTION enrolling sites. Although there were significant changes in authorship from assignment to publication, the overall goals of the HF-ACTION authorship guidelines were achieved, specifically: recognizing investigator contributions to the trial using an objective and quantifiable system, rewarding investigators fairly for these contributions, and disseminating study findings efficiently and thoroughly.

The authors recognize that a large number of publications resulting from a single trial may not serve on its own as an indicator of the success of a trial or authorship system. In fact, this split reporting of results has been criticized for its potential to lead to authorship inflation without benefiting the readers and clinicians the study was intended to inform. However, when responsibly reported, multiple publications have benefits, such as thorough analysis of the intervention's effect on relevant but overlooked sub-populations and analysis of components of the intervention which may contribute to its success or failure. Such analyses are particularly relevant in trials with complex or unique study designs.

In considering the usefulness of multiple publications, the quality of publications must also be considered. One proposed set of guidelines for appraising research success is the PQRST method, which evaluates the productivity, quality, reproducibility, data sharing, and translational influence of the research5. In examining these guidelines in reference to HF-ACTION, all publications included in this manuscript were published in peer-reviewed journals, and the impact factor for those journals, as provided in the Journal Citation Reports, is listed in Appendix 1. The average impact factor was 4.09 for baseline manuscripts and 8.03 for outcomes manuscripts. Reproducibility is difficult in a study of this size and scope; however, data from HF-ACTION is in the process of being made publicly available. Finally, the translational value of the research has become apparent as the Centers for Medicare and Medicaid Services have issued a statement that they will cover the cost of cardiac rehabilitation for certain heart failure patients, citing two of the published HF-ACTION manuscripts in the decision memo6.

To put the HF-ACTION publication experience in context, HF trials of comparable size and scope were identified, and a PubMed search was conducted to identify the number of manuscripts resulting from each trial and the number of authors on these publications. Manuscripts generated from limited data sets released from these trials were not included in this count. The Evaluation Study of Congestive Heart Failure and Pulmonary Artery Catheterization Effectiveness (ESCAPE) trial resulted in 15 publications by 71 authors since its initiation in January 2000. The Surgical Treatment for Ischemic Heart Failure (STICH) trial had 14 publications, including 115 authors, resulting from its study since it began in September 2002. The Sudden Cardiac Death in Heart Failure Trial (SCD-HEFT), initiated in 1997, included 76 different authors across its 23 publications. In contrast to these HF multi-center clinical trials, the HF-ACTION publication process was able to engage more investigators (n=137) in generating more manuscripts (n=50) in a shorter period of time.

Changes in the author group in the time between author assignment and publication prevailed as the trend across HF-ACTION articles. However, wide distribution of authorship across sites was maintained. Of particular note, all five of the manuscripts describing primary outcomes were published with the assigned lead author. The publications timeline process gave priority to primary outcomes manuscripts, allowing these authors first access to study statisticians. As publications are drafted further from the trial conclusion, authors may become distracted by other projects and may find it more difficult to comply with publication timelines.

Although the publication guidelines were in effect, the scoring system was not used for suggested manuscripts. The final year of published manuscripts included a greater proportion of suggested manuscripts and a greater proportion of authors from Site 209, the home institution for the Coordinating Center, due to easier access to the data. The change in statistical significance in the last year prior to this manuscript's writing further indicates the importance of capitalizing on investigators’ early interest in authorship involvement.

Limitations

The HF-ACTION author assignment was determined prior to the actual data analysis and writing of the article. Publication guidelines specified that the lead author would be responsible for the first manuscript draft and, along with senior authors, would offer the greatest contribution to subsequent drafts. In addition, the Publications Committee established guidelines regarding the timing of draft completion and revisions. However, these guidelines did not specify methods to settle disputes arising from failure to comply with them. While this system was largely successful, it should be noted that the establishment of an objective system does not eliminate authorship disputes, but rather provides guidelines and methods to minimize them.

This analysis of authorship scores and rates of publication was a post-hoc analysis. Therefore, it was not possible to establish a cause and effect relationship between the authorship process and publication outcomes. In addition, while a major aim of the scoring system was fairness and equitable distribution, no surveys were conducted to evaluate investigators’ perception of the fairness of the process.

The HF-ACTION trial was modest in the number of patients enrolled and the number of enrolling sites compared to international mega-trials. The ability to translate this system to a broader trial, or to one that spans multiple continents and cultures, may be more difficult. Additionally, HF-ACTION had a unique intervention which required considerably more effort on the part of participating sites than a simpler drug study might entail. However, in the current research environment, a significant amount of time and effort is required in all trials to identify patients, engage them in follow-up, and to meet regulatory and sponsor requirements. The HAP system provided a method for recognizing this commitment to a trial. While the precise components which comprised the score may depend upon the trial under consideration, the early establishment of a scoring and authorship system is one highly generalizable component which should be considered in all multi-center trials.

In addition, the HAP system had some practical limitations, including: 1) development of the system later in the trial rather than at the outset; 2) failure to include the Coordinating Center in the scoring system due to its unique role compared to enrolling sites; and 3) difficulty in funding manuscripts aside from the primary outcome manuscripts, requiring reliance on individual investigators’ discretionary funds, separate grants, or industry, where available.

Finally, the writing of this article comes as the four-year post-study termination date approaches. Although 50 articles have been published, many proposed topics remain at various stages of completion. While the publication of the raw data, as required under current NIH guidelines, has a positive effect on the speed of data dissemination, principal and site investigators also lose control over the quality of the data. Moreover, the continuation of the authorship process is prevented. As a result, study staff may not gain adequate recognition for their work, as non-study related staff are granted access to the data.

Conclusion

The HAP score emerged as a successful system to involve the majority of its investigators across many sites. The overall goal, to widely distribute authorship opportunities and promote dissemination of study results, was achieved even though the original allocation of authorship was not precisely maintained. While the system is not free of limitations, its components provide a useful framework for future studies and may serve as a starting point for authorship decisions in multi-site trials. In the future, successful implementation of similar authorship systems may prompt the NIH to require more specific, transparent, and equitable dissemination plans across its sponsored multi-center trials in order to optimize manuscript production, maximize use of public resources, and foster incentive to participate in these worthwhile ventures.

Acknowledgements

The authors would like to thank Jocelyn Andrel Sendecki, MSPH, Thomas Jefferson University, for her statistical support in the preparation of the manuscript.

The main study discussed in this manuscript was funded by the National Heart, Lung, and Blood Institute of the National Institutes of Health (HF-ACTION Main Trial, NIH/NHLBI, U01HL063747).


References

- 1. International Committee of Medical Journal Editors. Uniform requirements for manuscripts submitted to biomedical journals. Ethical Considerations in the Conduct and Reporting of Research: Authorship and Contributorship. 2013. Available at http://www.icmje.org/ethical_1author.html. Accessed June 4, 2013.

- 2. Tornetta P, 3rd, Pascual M, Godin K, Sprague S, Bhandari M. Participating in multicenter randomized controlled trials: what's the relative value? J Bone Joint Surg Am. 2012 Jul 18;94(Suppl 1):107–11. doi: 10.2106/JBJS.L.00299. PMID: 22810459.

- 3. Whellan DJ, Ellis SJ, Kraus WE, Hawthorne K, Piña IL, Keteyian SJ, Kitzman DW, Cooper L, Lee K, O'Connor CM. Method for establishing authorship in a multicenter clinical trial. Ann Intern Med. 2009 Sep 15;151(6):414–20. doi: 10.7326/0003-4819-151-6-200909150-00006. PMID: 19755366.

- 4. Dulhunty JM, Boots RJ, Paratz JD, Lipman J. Determining authorship in multicenter trials: a systematic review. Acta Anaesthesiol Scand. 2011 Oct;55(9):1037–43. doi: 10.1111/j.1399-6576.2011.02477.x. PMID: 21689076.

- 5. Ioannidis JP, Khoury MJ. Assessing value in biomedical research: the PQRST of appraisal and reward. JAMA. 2014 Aug 6;312(5):483–4. doi: 10.1001/jama.2014.6932. PMID: 24911291.

- 6. Centers for Medicare & Medicaid Services. Decision Memo for Cardiac Rehabilitation (CR) Programs - Chronic Heart Failure (CAG-00437N). 2014 Feb 18. Available at http://www.cms.gov/medicare-coverage-database/details/nca-decision-memo.aspx?NCAId=270. Accessed Nov 5, 2014.