Abstract

The ability to navigate without getting lost is an important aspect of quality of life. In five studies, we evaluated how spatial learning is affected by the increased demands of keeping oneself safe while walking with degraded vision (mobility monitoring). We proposed that safe low-vision mobility requires attentional resources, providing competition for those needed to learn a new environment. In Experiments 1 and 2 participants navigated along paths in a real-world indoor environment with simulated degraded vision or normal vision. Memory for object locations seen along the paths was better with normal compared to degraded vision. With degraded vision, memory was better when participants were guided by an experimenter (low monitoring demands) versus unguided (high monitoring demands). In Experiments 3 and 4, participants walked while performing an auditory task. Auditory task performance was superior with normal compared to degraded vision. With degraded vision, auditory task performance was better when guided compared to unguided. In Experiment 5, participants performed both the spatial learning and auditory tasks under degraded vision. Results showed that attention mediates the relationship between mobility-monitoring demands and spatial learning. These studies suggest that more attention is required and spatial learning is impaired when navigating with degraded viewing.

The ability to navigate independently and confidently through unfamiliar environments plays an important role in quality of life. Navigating and learning a new space can be a challenge for any person; however, the exploration of a novel space can be a very different experience for someone with vision loss. Architectural information is often unclear: furniture may visually blend in with the floor, and steps or ramps may be difficult to detect. Previous low vision work has evaluated the perception of objects for people with low vision (e.g., Bochsler, Legge, Gage, & Kallie, 2013; Bochsler, Legge, Kallie, & Gage, 2012) as well as performance on object avoidance and size and distance perception tasks in low vision and simulated degraded vision individuals (e.g., Kuyk, Biehl, & Fuhr, 1996; Long, Reiser, & Hill, 1990; Lovie-Kitchin, Soong, Hassan, & Woods, 2010; Marron & Bailey, 1982; Pelli, 1987; Tarampi, Creem-Regehr, & Thompson, 2010). While these are valuable questions, in the current paper we argue that concentrating efforts exclusively on the low-level perceptual aspects of life with low vision leaves a critical component unexplored: that of the cognitive demands of low-vision navigation. We conducted a series of studies to test the hypothesis that navigation with degraded viewing requires individuals to allocate additional attentional resources towards the goal of safely and efficiently walking, thereby drawing attention away from competing cognitive goals. Consequently, in the context of a spatial learning task, we predicted that with fewer available resources, learning a novel spatial environment would be impaired. Although this research was conducted in the context of navigation with low vision, the effects of cognitive demands while walking are more broadly relevant to normally sighted populations navigating in circumstances of additional cognitive load such as multi-tasking, distraction, or mobility impairment.

Spatial Navigation and the Role of Attention

Learning and understanding spatial environments is an important part of daily life. Individuals’ understanding of a space can vary from location to location, depending on their goals. For instance, one might only need to know a specific path to a target location such as a dentist’s lobby or classroom. In other, more frequently visited spaces, it may be helpful to acquire an enduring representation and store integrative information about the environment. These possibilities form an important distinction in the spatial memory literature. Route information refers to memory specific to the traveled path, often using landmark positions or direct egocentric experience to represent space. Survey-based representations, also called cognitive maps (Tolman, 1948), are a more global representation of space. They provide a comprehensive and flexible understanding of space, allowing one to take novel shortcuts and easily reorient in an environment in the event of a wrong turn.

A robust finding in the spatial learning literature, with respect to both survey and route-based information, is that the act of learning a novel spatial environment is an attention-demanding task (Albert, Rensink, & Beusmans, 1999; Lindberg & Gärling, 1982; Smyth & Kennedy, 1982). Evidence for the use of attention during spatial encoding comes from desktop and real world environments, and generalizes to multiple spatial learning strategies (Albert et al., 1999; van Asselen, Fritschy, & Postma, 2006; Lindberg & Gärling, 1982). For instance, Van Asselen, Fritschy, and Postma (2006) led participants through a hallway; half of the participants were made aware of a later memory test. The informed group made fewer errors on a spatial memory task, suggesting that spatial learning benefits from the use of controlled attention. Divided attention tasks during the encoding of new environments also have been shown to disrupt spatial learning (Albert et al., 1999; Lindberg & Gärling, 1982). While there is some evidence that knowledge or familiarity with specific landmarks can be acquired through incidental learning while walking along routes (e.g., van Asselen et al., 2006; Anooshian & Siebert, 1996), this spatial memory is limited, and much work supports the claim that learning the spatial relations required for accurate route and survey memory requires intentional and effortful processing (see Chrastil & Warren, 2012 for a review). Together, the literature provides compelling evidence to suggest that one does not automatically encode spatial environments, but must instead attend to the environment while navigating to encode spatial information.

Although it is established that walking through an environment while trying to encode spatial information requires attention, there has been little focus on the attentional requirements of simply walking. This is not entirely surprising given that walking safely from one location to another is often performed easily and without deliberate thought. However, we argue that this is not always the case. In some circumstances, the amount of attention allocated towards the goal of safe and efficient navigation might significantly draw attention away from other tasks occurring during navigation. We refer to this reallocation of attention as mobility monitoring. Many factors, both temporary and permanent, can cause an increase in mobility-monitoring demands. For instance, a healthy, young person who might normally navigate with low mobility-monitoring demands might need to attend more to their mobility when walking on slippery surfaces or crossing busy streets in unknown cities. As such, their demands for mobility monitoring temporarily increase. Those with physical disabilities, or older adults who face large consequences in the event of a fall, might experience an increase in mobility-monitoring demands for the remainder of their lives. Regardless of the duration, we argue that keeping oneself safe while navigating will take priority over other goals that might be competing for the same pool of attentional resources.

For a large portion of the population not facing short or long term challenges to navigation, mobility monitoring in a novel indoor environment is of low cognitive cost, accomplished easily using online visual information while walking. In such circumstances, it is unlikely that mobility monitoring would interfere with spatial processing or other goals within the environment. However, when the goal of safe navigation is challenged, one must utilize additional cognitive resources to meet the basic safety goal. The specific mobility-monitoring factor assessed in this research is navigation with degraded visual information, as is the case with a large and rapidly growing population of individuals with severe visual impairment. Meeting the goal of safe navigation for those with low vision might require additional visual attention to disambiguate between a shadow and a trip hazard, or a moment of distraction when walking in a crowd due to uncertainty about whether others have seen and will avoid your path. The current work supports the idea that this increase in mobility monitoring markedly raises the attention required for the act of navigation.

Low-vision perception and mobility

Low vision is defined as a chronic visual deficit that disrupts daily functioning and is not correctable with lenses. The low-vision population is comprised of individuals experiencing visual deficits of qualitatively different types and of varying severity. Typically, low vision involves some combination of deficiencies in visual acuity, contrast sensitivity, and visual field. The current work focuses specifically on deficits in contrast sensitivity and visual acuity, deficits that often occur together with vision loss. Such deficits likely affect the recovery of large-scale visual information, important in the formation of spatial representations obtained through direct experience with a novel environment. Although there is a lack of standardization in the low-vision literature, this research will address the range of acuity deficits termed profound low vision by the Visual Standards Report for the International Council of Ophthalmology, Sydney, Australia (2002). Profound low vision, most often characterized by the degree of acuity degradation, is associated with the visual acuity range from 20/500 to 20/1000. This range of vision loss is typically associated with large disruptions to mobility and inabilities to read without the aid of strong magnification (Colenbrander, 2004). However, individual differences among those with profound low vision exist. Some choose to, and are capable of, using their remaining vision to accomplish many daily life activities.

Individuals with low vision often report avoiding new spaces due to fear of getting lost (Brennan et al., 2001), although the empirical findings regarding the impact of low vision on mobility performance are mixed. The majority of the work in low-vision mobility focuses on object avoidance while individuals interact with their environment (Kuyk & Elliott, 1999; Goodrich & Ludt, 2003; Pelli, 1987; Turano et al., 2004; West et al., 2002). While West and colleagues (2002) find that even mild levels of acuity loss impair mobility through both self-report and performance measures, Pelli (1987) finds that mobility performance and obstacle avoidance are impaired only at very severe levels of vision loss (20/2000 Snellen). Navigation with low vision is consistently associated with a higher risk of falls, with chances of falling between 1.5 and 2.6 times greater, depending on the specific visual deficit (Black & Wood, 2010). Consideration of the attention costs while navigating with degraded visual information is a novel direction in low-vision navigation research. With potential increased risk of navigation-related errors, the goal of safe navigation may be more salient for an individual navigating with low compared to normal vision, providing impetus for the focus on mobility-monitoring demands.

Research assessing how individuals perceive objects and architectural features from a stationary position consistently finds little to no impact on perceptual judgments under simulated states of severely degraded viewing. This research includes empirical evaluations of ramp and step detection (Bochsler et al., 2013; Bochsler et al., 2012), distance perception to objects along the ground plane (Tarampi et al., 2010) or elevated off the ground plane (Rand, Tarampi, Creem-Regehr, & Thompson, 2012), and the role of visible horizon information in distance perception (Rand, Tarampi, Creem-Regehr, & Thompson, 2011), all under degraded-viewing conditions. Despite severe deficits to visual acuity and contrast sensitivity, perceptual abilities show little to no impairment. These findings are somewhat surprising considering that several mobility and obstacle avoidance studies have shown decreases in performance with moderate levels of vision loss. This suggests that acting in the space as opposed to simply viewing the environment may present a meaningful and distinct challenge. We suggest that attention allocated towards mobility monitoring helps to explain these different findings.

An Attentional Resource Model of Low-Vision Navigation

In the current work, we investigated the impact of degraded vision on spatial learning, specifically focusing on how degraded vision might interfere with spatial learning due to increased mobility-monitoring demands. We propose that safe low-vision mobility requires a significant amount of attentional resources, providing competition for those needed to learn a new environment. Our model suggests that attentional resources that one might otherwise allocate towards learning are instead directed toward assessing potential risks or interpreting ambiguous perspective information when navigating with degraded vision. Due to this competition for attentional resources, spatial learning is impaired. In summary, we hypothesize that increased mobility-monitoring demands during degraded-vision navigation decrease spatial learning abilities via competition for the attentional resources associated with spatial learning.

Overview of Experiments

Providing evidence for the complete account involved four experiments designed to test individual relationships within the model and a final integrative mediation-model experiment. The first two experiments evaluated whether increases to mobility-monitoring demands yielded poorer memory for a novel spatial environment. Experiment 1 tested this using the methodology adopted from previous studies of spatial learning in real-world environments (Fields & Shelton, 2006). In Experiment 1, we compared spatial learning performance for each participant when walking with normal vision and when walking with degraded vision. We predicted that participants would learn the environment better when walking with normal compared to degraded vision. Experiment 2 tested how decreases in mobility monitoring impact spatial learning when navigating with degraded vision by comparing two degraded-vision conditions which varied only in whether an experimenter guided the participant along the paths. The second set of experiments (Experiments 3 and 4) tested the association between mobility monitoring and attentional resources. Experiment 3 evaluated the attentional demands of navigating with normal vision and those of walking with degraded vision (higher mobility monitoring) using an auditory digit response task as the dependent measure. Experiment 4 tested how decreasing mobility-monitoring demands through guidance while navigating (as in Experiment 2) affects attentional demands, using the same digit monitoring task. Finally, Experiment 5 evaluated the causal relationship between the attentional demands of mobility monitoring and spatial learning performance by performing a mediation analysis on participants’ individual performance on both spatial learning and attention tasks.

In all experiments, normally sighted individuals performed navigation tasks while wearing blur goggles that severely degraded visual acuity and contrast (see also, previous work using this methodology in Rand et al., 2011; 2012). The use of simulated low vision allows for highly controlled experimental procedures. By controlling for the precise visual degradation, the manipulation reduces variability in performance between subjects, which ensures that all participants are experiencing visual deficits of the same type and severity - within the range of profound low vision. Another benefit to the blur goggles is that by inducing simulated low vision in normally sighted individuals, we can use a within-subjects design. This design allows for control over individual differences such as spatial learning ability or the ability to perform multiple tasks simultaneously. Despite the benefits of the use of simulated low vision, there remains the possibility that clinical low-vision individuals, through experience, would exhibit different behavioral patterns. This is an important question for future work.

Experiment 1

Experiment 1 evaluated the impact of degraded vision on spatial learning of a novel indoor environment. Specifically, we were interested in survey representations due to their flexibility with respect to acting in an environment. To evaluate learning performance, participants viewed six landmarks along a path and were asked to recall their locations at the completion of the path. This spatial learning task was a modified version of an established procedure used in earlier spatial learning tasks (Fields & Shelton, 2006). To indicate where the previously viewed object was located, participants used a verbal pointing method (Philbeck, Sargent, Arthur, & Dopkins, 2008). Although it is possible to use route-based knowledge to retrace one’s steps to the landmark location, this task is thought to test the acquisition of survey knowledge because of the requirement of pointing to each landmark location from a single, novel spatial location. Each participant completed one path under a simulated state of severely degraded vision and one path with normal vision. A comparison of performance on the verbal pointing method following each of the viewing conditions revealed the impact of degraded viewing on spatial learning.

In accordance with our model, we predicted that spatial memory performance, as indicated by verbal pointing accuracy, would be greater when individuals traveled the path using their normal vision compared to simulated degraded vision. This finding would be consistent with the hypothesis that when navigating with low vision, more attention is required for mobility, taking attention away from spatial learning and thereby impairing it. Alternatively, one might predict there to be no difference in memory performance as a function of vision condition. This finding would be consistent with low vision literature that has shown intact static perception of objects and distances (e.g., Tarampi et al., 2010), as well as work in the low vision obstacle avoidance literature suggesting that mobility is only impaired at very extreme levels of degradation (Pelli, 1987).

Method

Participants

Twenty-four (14 males, 10 females) undergraduate students participated in the experiment for partial course credit. Two participants were removed from the analysis due to below chance performance on the verbal pointing task. This exclusion criterion is discussed in more detail in the results section of Experiment 1.

Materials

All participants wore two sets of goggles during the experiment, one for each vision condition block: one pair of blur goggles and one control pair. Both pairs were welding goggles with the original glass lens removed. For the blur pair, the lenses of both eyes were replaced with a theatrical lighting filter (ROSCO Cinegel #3047: Light velvet frost), resulting in an average binocular acuity of logMAR 1.44, or 20/562 Snellen, and an average log contrast sensitivity of .76. No lens covered the eyes for the control pair, allowing the participants to use their normal vision with the same field of view (FOV) restrictions as the blur pair. The binocular FOV for the goggles averaged 71 degrees horizontal and 68 degrees vertical. The degradation of visual acuity, contrast sensitivity, and FOV was evaluated using a separate group of 20 participants.1 During practice and experimental trials, participants wore noise-cancelling headphones to block out the distraction of environmental noise. They listened to pink noise from an MP3 player, and the experimenter used a microphone to speak above the noise.
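The relationship between the reported logMAR acuity and the profound low-vision range cited earlier (20/500 to 20/1000 Snellen) can be made concrete with the standard definition of logMAR as the base-10 logarithm of the Snellen denominator divided by the numerator. The sketch below is illustrative only; the function names are hypothetical and are not part of the original study materials.

```python
import math

def snellen_to_logmar(denominator: float, numerator: float = 20.0) -> float:
    """Standard conversion: logMAR = log10(denominator / numerator)."""
    return math.log10(denominator / numerator)

# Bounds of the profound low-vision range cited in the text (20/500 to 20/1000).
PROFOUND_RANGE = (snellen_to_logmar(500), snellen_to_logmar(1000))

def in_profound_range(logmar: float) -> bool:
    """Check whether a logMAR acuity falls inside the cited range."""
    low, high = PROFOUND_RANGE
    return low <= logmar <= high

# The blur goggles' reported average acuity (logMAR 1.44) lies in the range,
# whereas 20/200 (logMAR 1.0) does not.
print(in_profound_range(1.44))
print(in_profound_range(snellen_to_logmar(200)))
```

Under this definition the range spans roughly logMAR 1.40 to 1.70, so the simulated deficit sits near the milder end of profound low vision.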

All participants completed the Santa Barbara Sense of Direction Questionnaire (SBSOD) upon completion of the experiment (Hegarty, Crookes, Dara-Abrams, & Shipley, 2010). We added one question to assess how familiar participants were with the indoor space in which the experiment took place. This self-report scale, commonly used in spatial learning literature, has been shown to correlate with spatial tasks requiring survey knowledge, and we predicted that higher ratings of sense of direction would correlate with spatial learning outcomes on our task. The SBSOD measure was also collected for Experiment 2, but in neither case did this predict spatial learning error. As such, it will not be discussed further. Participants also referenced the Subjective Units of Distress Scale (SUDS) when making judgments about how calm or anxious they felt while completing each trial (Bremner et al., 1998).

Two experimental paths were used during Experiment 1. An exemplar path from all experiments can be found in Figure 1. Both paths had six commonly occurring landmark objects (e.g., staircase, fire extinguisher) spaced out over a path that required approximately 5 minutes to walk. The landmarks were distinct for each path to avoid confusion or recurring objects. The paths were also carefully chosen so that no portion of experimental paths overlapped. This was the case for all five experiments. Paths were equated for distance (Path 1: 201 meters, Path 2: 198 meters), number of turns per path (8 per path), and distance traveled through wide compared to narrow hallways. The practice paths and both experimental paths were located on distinct floors of the building.

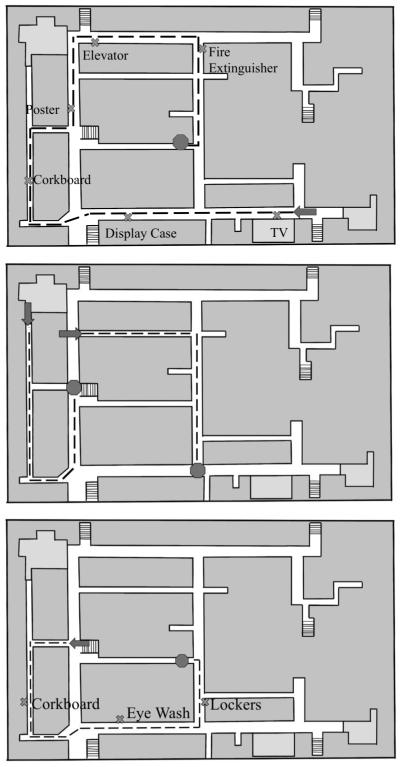

Figure 1.

Exemplar paths for Experiments 1 and 2 (top, represents 1 of 2 paths), Experiments 3 and 4 (middle, represents 2 of 8 paths), and Experiment 5 (bottom, represents 1 of 4 paths). Landmarks are marked on the maps for Experiments 1, 2, and 5 (Experiments 3 and 4 did not involve learning of landmarks).

After each trial, participants were presented with a floor plan of the building in which the experiment took place—the Merrill Engineering Building (MEB). The plan was created by taking a digital photograph of the posted floor plan on each level and superimposing the main layout of the floor on a blank document using a photo-editing program. All of the numbers and names of classrooms or landmarks on the floor were removed. Participants referred to these maps for the route-learning portion of questioning.

Design

The experiment used a 2 (vision condition) X 2 (vision order) design. Vision condition (simulated degraded vision, normal vision) was a within-subject manipulation and vision order (normal vision first, degraded vision first) was manipulated between subjects.

Procedure

Participants met two experimenters outside of a designated classroom in the Merrill Engineering Building (MEB) at the University of Utah campus. Participants first read and signed the consent form and completed a brief demographic form. Next, participants were given an overview of the experimental task and trained in the verbal pointing procedure. To assist with the training, participants stood in the center of two intersecting pieces of painter’s tape that formed a cross on the floor. Participants were then told they would be using a two-step verbal response to identify the location of landmarks they encountered in the building. The experimenter then defined the four quadrants: front right, front left, back right, and back left. Next, participants were instructed that once they had identified a quadrant, they would then provide more specific information regarding the location in the form of degrees from 0 to 90. Figure 2 shows how the 360-degree area surrounding each participant was broken down for the verbal response. The degrees were defined for each quadrant, followed by several practice trials to test for understanding of the verbal task.

Figure 2.

A depiction of the verbal pointing procedure used for Experiments 1, 2, and 5. Participants isolated a quadrant and a degree within the quadrant to indicate the precise location of each landmark object.

Participants were then led through two short practice paths to introduce the memory portion of the experiment. For practice walking with the goggles, participants wore the control goggles for the first practice path and the blur goggles for the second path. Throughout practice and experimental trials, participants were given verbal instructions while navigating the path, indicating when to stop walking, the location of landmarks, and when and which direction to turn. Participants walked along paths next to the experimenter, stopping at two landmarks along the two practice paths. The landmarks were a clock and a water fountain for the first path; in the second path the landmarks were a clock and a poster. At the end of each path, participants used the verbal pointing response to indicate where the two landmarks were located with respect to their current location and heading. Participants might, for example, respond by saying ‘back left, 45 degrees’, suggesting they thought the object to be behind them to the left. Feedback was provided during the practice trials. If needed, a third practice trial was added to ensure the participant understood the task before moving on to the experimental trials.

The participants completed two experimental paths; they completed one with the blur goggles and one with the regular goggles. Path order and vision order were both counterbalanced. After being equipped with the appropriate goggles and noise-cancelling headphones, participants were instructed to walk next to the experimenter and match their walking pace. When a landmark was reached, walking was stopped, and the experimenter identified the landmark by stating the object name and the location in the hallway (left or right). After a 5-second pause, the experimenter continued walking along the path. At the end of the path, participants remained facing the same direction and were briefly reminded of the quadrants and degrees for the verbal pointing. This end location was critical for determining the angles for the verbal pointing task, so care was taken to ensure that the participant was centered in the hallway and facing directly down the hallway in the direction they were walking prior to making judgments about the landmarks. The experimenter named one landmark at a time in a random order and instructed the participants to use the verbal pointing procedure to indicate where the landmark was located from their current heading and location. Experimenters also encouraged physical pointing so they could monitor for accidental left/right confusion. Participants were allowed as much time as needed to make each judgment. Next, participants answered a series of questions about their level of mobility-related anxiety while they were walking through the space. They referenced the SUDS scale when making the responses, which allowed participants to report how calm or anxious they felt as a numeric value from 1 to 100.
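One way the counterbalancing of path order and vision order could be laid out is sketched below. It assumes the two factors were fully crossed and that participants were assigned to the four resulting cells in rotation; the text does not state the actual assignment scheme, so the function name and the rotation rule are hypothetical.

```python
from collections import Counter
from itertools import product

VISION_ORDERS = [("normal", "degraded"), ("degraded", "normal")]
PATH_ORDERS = [("Path 1", "Path 2"), ("Path 2", "Path 1")]

def counterbalanced_schedule(n_participants: int):
    """Assign participants to the 2 x 2 order cells in rotation (assumed scheme)."""
    cells = list(product(VISION_ORDERS, PATH_ORDERS))
    schedule = []
    for i in range(n_participants):
        vision_order, path_order = cells[i % len(cells)]
        # Pair each walked path with the vision condition used on it.
        schedule.append(tuple(zip(path_order, vision_order)))
    return schedule

schedule = counterbalanced_schedule(24)
for cell, count in Counter(schedule).items():
    print(cell, count)
```

With 24 participants, this rotation places six participants in each of the four order combinations.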

Participants also answered questions about the specific route they traveled. They viewed a sketch of the floor plan of the level of the building they had just walked, and an experimenter provided orientation by indicating at which entrance to the building the participant had been greeted. They were then asked to identify the start and end location of the path they walked, and trace the path through the hallways they thought they walked. For each item, experimenters corrected the participants if they made a mistake, and tallied the total mistakes. Next, participants were asked to name the landmarks in the order in which they were seen along the path. Together, this information was scored as a single value and will subsequently be referred to as the route information score. Next, participants repeated the experimental procedure wearing the pair of goggles they did not wear in the first path. Finally, participants were thanked and debriefed.

Results and Discussion

Spatial Learning

Participants’ verbal pointing responses were first converted into a degree from 0 to 360. The difference between this pointing value and the veridical bearing was determined, and the absolute value of the non-reflex angle (the angle ≤180 degrees) of this difference was used as a measure of unsigned pointing error. Two participants were excluded because their average pointing error exceeded 90 degrees. This threshold was chosen because uniformly distributed random pointing would be expected to result in an average pointing error of 90 degrees, so exceeding this value can be interpreted as worse-than-chance performance. This definition of chance performance is consistent with Chrastil and Warren (2013).
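The scoring steps described above can be sketched as follows. The mapping from quadrant and degree to a 0 to 360 bearing is an assumption made for illustration (0 degrees on the front/back axis, 90 degrees directly to the side, bearings increasing clockwise from straight ahead); the actual convention is defined by Figure 2. All function names are hypothetical.

```python
QUADRANTS = {"front right", "front left", "back right", "back left"}

def response_to_bearing(quadrant: str, degrees: float) -> float:
    """Convert a two-step verbal response to a clockwise bearing in [0, 360).

    Assumed convention: within a quadrant, 0 lies on the front/back axis
    and 90 points directly to the participant's side.
    """
    if quadrant not in QUADRANTS:
        raise ValueError(f"unknown quadrant: {quadrant}")
    if not 0 <= degrees <= 90:
        raise ValueError("degrees must be between 0 and 90")
    if quadrant == "front right":
        bearing = degrees
    elif quadrant == "back right":
        bearing = 180 - degrees
    elif quadrant == "back left":
        bearing = 180 + degrees
    else:  # front left
        bearing = 360 - degrees
    return bearing % 360

def unsigned_pointing_error(response_bearing: float, true_bearing: float) -> float:
    """Absolute non-reflex angle (<= 180 degrees) between response and truth."""
    diff = abs(response_bearing - true_bearing) % 360
    return min(diff, 360 - diff)

def mean_error_under_random_pointing() -> float:
    """Average error if responses were spread evenly over all whole degrees."""
    offsets = range(360)
    return sum(unsigned_pointing_error(o, 0) for o in offsets) / len(offsets)

print(response_to_bearing("back left", 45))
print(unsigned_pointing_error(350, 10))
print(mean_error_under_random_pointing())
```

The last function illustrates why 90 degrees serves as the chance threshold: evenly spread responses yield unsigned errors ranging from 0 to 180, which average to 90.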

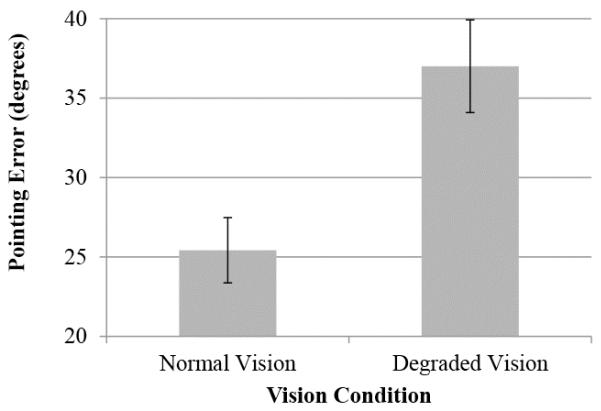

The main question of interest for Experiment 1 was whether degraded vision altered how well participants were able to learn a new environment. To assess this, we first conducted a mixed-design ANOVA on the verbal pointing task accuracy with vision condition as a within-subject variable and vision order as a between-subject variable. The analysis revealed a main effect of vision condition; pointing error in degrees was lower when completing the task in the control goggles (M = 25.42, SE = 3.0) compared to the blur goggles (M = 37.0, SE = 4.2), F(1, 22) = 8.39, p < .01, partial η² = .276 (see Figure 3). There were no other significant effects.
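The reported effect size of .276 can be cross-checked against the F statistic, because partial eta squared follows directly from F and its degrees of freedom. The short sketch below applies that standard identity to the values reported above; the function name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio: (F * df1) / (F * df1 + df2)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Main effect of vision condition: F(1, 22) = 8.39
eta_p2 = partial_eta_squared(8.39, 1, 22)
print(round(eta_p2, 3))  # 0.276
```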

Figure 3.

Mean error values for the verbal pointing task in Experiment 1, assessing spatial learning of a novel, indoor path under a severely degraded-vision condition compared to a normal-vision viewing condition. Error bars represent between-subject standard error.

As a secondary analysis, we conducted a 2 (path order) x 2 (vision condition) repeated-measures ANOVA on the combined scores from the route-learning questions. Participants received two points for correct responses on start and end location, one point for each correct turn from the path tracing, and one point for each landmark listed in the correct order, totaling 18 possible points per path. Route scores did not vary significantly between degraded vision (M = 15.63, SE = .48) and normal vision (M = 16.08, SE = .53) conditions (p = .51), likely due to ceiling effects on the route-based questions.
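The route information score can be illustrated with a small scoring function. This sketch assumes two points each for the start and end locations, which is the reading under which 8 turns and 6 landmarks sum to the stated maximum of 18; the text does not spell this out, and the function and parameter names are hypothetical.

```python
def route_score(start_correct: bool, end_correct: bool,
                correct_turns: int, landmarks_in_order: int,
                points_per_endpoint: int = 2) -> int:
    """Combine route-question results into one score (assumed weighting)."""
    endpoint_points = points_per_endpoint * (int(start_correct) + int(end_correct))
    return endpoint_points + correct_turns + landmarks_in_order

# A perfect response on a path with 8 turns and 6 landmarks.
print(route_score(True, True, correct_turns=8, landmarks_in_order=6))  # 18
```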

Anxiety Measures

To determine whether participants felt more anxious when navigating with the blur compared to normal goggles, a paired t-test was conducted on the average SUDS response following the degraded vision trials and the normal vision trials. This difference was significant, t(23) = 6.34, p < .01, suggesting that participants were less anxious walking during the normal vision (M = 17.01, SE = 1.96) compared to degraded vision trials (M = 36.49, SE = 4.04).

The findings from Experiment 1 suggest that navigating a path under simulated degraded viewing conditions disrupts the ability to learn a novel spatial environment compared to when navigating with normal vision. There are many explanations for why degraded visual information would disrupt spatial learning. It is likely that the degraded visual information itself was a factor in the differences found in Experiment 1; if people are not able to see as well, it is more difficult to recover as much information about the spatial environment. This finding may not seem surprising, but it is important to recall that previous research conducted with the same visual degradation has yielded intact perceptual judgments of objects and distances up to 20 meters, suggesting that the inability to make sense of the visual array is not a sufficient explanation of the finding on its own. We argue that there is also a cognitive cost associated with navigating with low vision that is not present when one is viewing objects from a static perspective. Consistent with the hypothesis, participants in Experiment 1 reported higher levels of anxiety about their safety when walking through the paths with degraded compared to normal vision. In Experiment 2, we aimed to test whether manipulations to mobility-related safety demands influenced spatial learning performance in both normal and degraded-vision conditions.

Experiment 2

Independent navigation, even with normal vision, requires a person to monitor the surrounding environment in order to stay safe en route to a destination. This monitoring likely occurs passively, not requiring enough attention for it to be consciously recognized. When visual input is degraded, we predicted that the need to perform this monitoring increases. Although it might not be the case that this increase in monitoring demands is consciously recognized, we predict that it interferes with the ability to perform other cognitively demanding tasks such as learning a new environment. Experiment 2 begins to assess this prediction by manipulating the need to allocate attention towards monitoring for safety hazards throughout the paths. Participants walked a path in each of two guidance conditions; they walked one path guided (holding the arm of an experimenter) and one path independently (with the experimenter following), in either the normal vision or simulated degraded vision conditions.

We predicted that when walking with a guide in the degraded-viewing condition, less attention would be needed with respect to the goal of keeping safe while walking through the environment, freeing up resources for the goal of spatial learning, and improving memory performance. We did not predict a difference in guided versus unguided trials for control subjects walking with their normal vision because their mobility-monitoring demands were likely very low even when walking independently. Alternatively, a possible outcome of Experiment 2 would be that guidance while walking with low vision would not influence spatial memory performance. This realistic possibility would suggest one of several explanations. First, it might be the case that the deficits found during degraded vision conditions in Experiment 1 were the result of a distortion of the representation of space as a result of the visual deficit, and not due to a cognitive mediating factor. For instance, it might be the case that those navigating with low vision are misperceiving the length of long hallways, and are basing their spatial representations on this erroneous information. Another alternative hypothesis would be that walking safely through an environment is a completely automatic task, so that providing a guide to assist with walking would not reduce attentional demands.

Method

Participants

Forty-eight University of Utah undergraduate students participated (20 males, 28 females; 24 normal vision, 24 degraded vision) in Experiment 2 for partial course credit. None had participated in Experiment 1. Three participants were excluded from the analysis. One was excluded because of familiarity with the MEB building, and two because of below chance performance on the verbal pointing task. Forty-five participants were included in the analysis.

Materials

The same materials from Experiment 1 were used in Experiment 2.

Design

A 2 (guidance) X 2 (vision condition) X 2 (guidance order) design was used for Experiment 2. Guidance (guided, unguided) was manipulated within-subject, and vision condition (normal vision, degraded vision) and guidance order (guided first, unguided first) were manipulated between-subjects.

Procedure

Participants in Experiment 2 were given the same consent and demographic questionnaire as those in Experiment 1. The training was almost identical as well, except that both training paths were completed with the goggles that corresponded to the participants’ condition because vision condition was manipulated between subjects. Each participant navigated one path in the guided condition and one path in the unguided condition. In the guided trial, the experimenters walked with their elbow held firmly to their side, extending their forearm forward at a 90-degree angle directly next to the participant. Participants held the wrist of the experimenter’s arm while navigating through the path. During the unguided trial, participants walked independently in front of the experimenter. Before beginning the unguided trial, participants were assured that they would be warned well in advance if it seemed as though they were approaching an unseen hazard. On both guided trials and unguided trials, identical verbal instructions for where to turn, when to stop, and where the landmarks were located were provided. Experiment 2 used the same two paths, landmarks, SUDS questionnaire, route questions, and debriefing as Experiment 1.

Results and Discussion

Spatial Learning

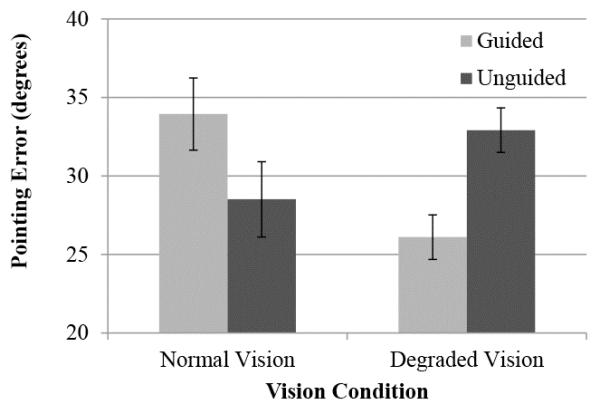

A 2 (guidance) X 2 (vision condition) X 2 (guidance order) mixed-design ANOVA was conducted, with guidance as a within-subject variable and vision condition and guidance order as between-subject variables. The analysis revealed a significant guidance X vision condition interaction, F (1, 42) = 4.87, p < .05, partial η² = .10. No other main effects or interactions reached significance. To test how guidance influenced spatial learning in each vision condition, separate mixed-design ANOVAs were conducted on verbal pointing error for each vision condition, with guidance order (guided first, unguided first) entered as a between-subject factor. For the degraded vision condition, there was a main effect of guidance in the predicted direction. Participants were less accurate, as evidenced by degrees of error, on unguided trials (Mean error = 32.0, SE = 2.1) than on guided trials (Mean error = 26.1, SE = 3.2), F (1, 22) = 5.50, p = .028, partial η² = .20 (see Figure 4). For those in the normal vision condition, the opposite pattern of results was found such that participants performed better on unguided trials (M = 28.5, SE = 3.4) compared to guided trials (M = 33.9, SE = 3.7), but this main effect was not significant, F (1, 20) = 1.23, p = .28. Therefore, the guidance X vision condition interaction was driven by a significant influence of guidance when walking with degraded vision but no significant influence when walking with normal vision. Differences in route-learning performance on guided compared to unguided trials were also not significant for participants in the degraded vision condition (guided: M = 14.7, SE = .47; unguided: M = 14.3, SE = .52), p = .29, or for participants in the normal vision condition (guided: M = 15.3, SE = .63; unguided: M = 15.3, SE = .64), p = 1.0. There were also no significant differences in route-learning scores as a function of vision condition, p = .44.

Figure 4.

Mean error values for the verbal pointing task in Experiment 2, assessing spatial learning of a novel, indoor path when walking independently (unguided) or with the assistance of an experimenter (guided), as a function of vision condition. Error bars represent between-subject standard error.

Anxiety Measures

There was a main effect for SUDS values for participants in the degraded-vision condition; SUDS values were higher in the unguided condition (M = 30, SE = 3.19) compared to the guided condition (M = 23, SE = 2.79), t (23) = 3.0, p = .006. There was no difference in SUDS values for participants in the normal-vision condition, p = .89, suggesting that the presence of a guide influenced participants when vision was degraded, but not when vision was clear. No other main effects or interactions were significant.

The aim of Experiment 2 was to determine whether allocating additional attention towards the goal of staying safe while walking contributed to the decreased spatial memory performance under simulated low-vision conditions observed in Experiment 1. The results of Experiment 2 support this hypothesis. Those participants who navigated the environment with degraded vision performed better on the spatial memory task while guided compared to when walking independently. Importantly, the visual degradation was identical in both cases, so degraded vision alone cannot explain this difference. Instead, we suggest that when navigating under severely degraded viewing conditions, the presence of a guide offloads a portion of the safety-monitoring demands, allowing a person to focus more on the spatial learning task. Results from Experiment 2 also suggest that when navigating with normal vision, the presence of a guide did not improve performance on the spatial memory task. Unlike in Experiment 1, normal-vision participants did not perform significantly better than degraded-vision participants. This is likely due to individual differences or additional variability between groups, since vision condition was manipulated between subjects in Experiment 2 instead of within subjects. Reports on the SUDS support the hypothesis. Under degraded-vision conditions, participants reported less anxiety when guided, but guidance had no effect for participants navigating with normal vision.

Experiment 3

The focus of this work so far has concentrated on the ability to learn a novel spatial environment. Experiment 1 demonstrated that navigating with degraded compared to normal vision disrupts this ability, and Experiment 2 showed that when people with degraded vision navigated with the presence of a guide, they were less anxious and better able to learn the spatial environment than when walking independently. The next step for this study was to assess the attentional demands of navigating with low compared to normal vision. In order to accomplish this goal, we instructed participants to perform an ongoing auditory attention task while walking through a novel environment. Performance on this auditory task was the main dependent variable in Experiment 3. If the results of Experiment 1 were due, in part, to allocating attentional resources towards monitoring the environment for safety when walking with degraded vision, we predict that auditory task performance would also decrease when walking with degraded vision.

Method

Participants

Twenty-one University of Utah undergraduate students who had not participated in Experiments 1 or 2 participated in Experiment 3 (11 males, 10 females) for partial course credit. Data for one participant were removed due to below-chance performance on the auditory task, that is, failing to respond to 50 percent of the possible correct responses.

Materials

Experiment 3 introduced an auditory task in which participants were required to monitor a continuous stream of auditorily presented digits. We created an automated script run on a laptop. The script allowed the experimenter to choose from 12 tracks; each track presented sound files of digits 1-9 in random order, each separated by 1 second. Each track was 1 minute and 36 seconds long. The sound files were played through wireless headphones worn by the participants. The program recorded clicks from a wireless mouse, capturing both accuracy and precise reaction time data. When the experimenter pressed a key on the laptop at the end of each trial, the program generated an output file in the corresponding participant folder.

Participants walked eight short paths throughout the experiment, four for each vision condition. We organized the eight paths into two sets of four paths each. Paths within each set were equal in length (between 75 and 80 meters), number of turns per path (1 or 2 turns), and hallway width. Specifically, each set had one path through wide hallways with one turn, one path in wide hallways with two turns, one path through wide and narrow hallways with one turn, and one path through wide and narrow hallways with two turns. The order of path set was counterbalanced. Participants also wore the same blur and control goggles used in Experiment 1.

Design

Experiment 3 used a 2 (vision condition) X 2 (trial type: walking or stationary) x 2 (vision order) design. Vision condition (degraded vision, normal vision) and trial type were manipulated within-subjects whereas vision order (degraded vision first, normal vision first) was manipulated between subjects.

Procedure

Participants arrived at the same meeting locations as in Experiments 1 and 2, and filled out the same demographics and consent forms. Next, participants read the instructions for the digit monitoring task. The instructions stated that a continuous stream of auditory digits would be played through wireless headphones, and participants were required to make responses based on what they heard by clicking a mouse. Specifically, the task goal was to click the mouse upon hearing an even number preceded by another even number, or an odd number preceded by another odd number. That is, if they heard ‘1, 4, 5, 7’, clicking after hearing 7 would be a correct response as it followed the odd number 5. After reading through the instructions, participants practiced the auditory task for 1 minute.
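
The response rule for the digit monitoring task can be stated compactly in code. The sketch below is an illustration of the rule as described in the instructions, not the script used in the experiment; the function name `target_positions` is hypothetical.

```python
def target_positions(digits):
    """Return the indices at which a mouse click would be a correct response.

    A digit is a target when it has the same parity as the digit heard
    immediately before it (even after even, or odd after odd).
    """
    return [
        i
        for i in range(1, len(digits))
        if digits[i] % 2 == digits[i - 1] % 2
    ]


# The example from the instructions: only the final 7 (index 3) is a target,
# because it follows the odd digit 5.
print(target_positions([1, 4, 5, 7]))  # [3]
```

The first digit of a stream can never be a target under this rule because nothing precedes it.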

Following practice with the auditory task, participants received instructions for the navigation portion of the task. Participants put the goggles on and were instructed to walk next to and keep pace with the experimenter. Prior to each practice and experimental trial, experimenters provided information about how many turns each path contained (either 1 or 2), and which direction the turns would be. For paths with two turns, both turns were in the same direction. Experimenters explained that they would tap the participants’ shoulders a few steps in advance of each turn to indicate the precise intersection; if the turn was to the right, they would tap the right shoulder, and if to the left, the left shoulder. In this way, no verbal instructions were required during experimental trials that might disrupt the auditory task. Participants were not asked to remember landmark locations in this experiment. After two practice trials were completed, participants performed two trials combining the walking task and the auditory task. After the training, participants performed 12 experimental trials. Eight trials were walking trials; participants performed the auditory task while walking through the hallways. Additionally, each participant performed the auditory task while standing on the side of the hallway. These four stationary trials provided a baseline measure for the auditory task performance in the absence of walking. Participants performed two counterbalanced blocks of four walking and two stationary trials, one for each vision condition. In between walking trials, participants kept the goggles on except when walking up or down stairs. Following each block, participants reported their SUDS values for that particular block. Participants were then debriefed.

Results and Discussion

Auditory Task Performance

Auditory task performance for each trial contained both error values and reaction time (RT) values to explore differences in errors between conditions, or potential speed/accuracy tradeoffs. Error values were calculated as hits minus false alarms, divided by the total number of correct responses possible. RT was averaged over the different paths for walking and stationary trials. We conducted separate 2 (vision condition) X 2 (trial type: walking or stationary) X 2 (path order: degraded vision first or normal vision first) mixed-design ANOVAs with vision condition and trial type as within-subjects variables and path order as a between-subject variable, for both RT and error values. No significant results were found for RT analysis, suggesting that vision condition did not impair the speed at which participants responded to the stimuli. This finding was not surprising, as the instructions did not emphasize speeded responding.

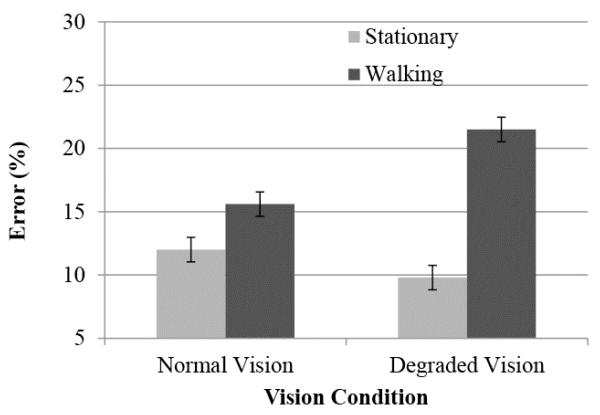

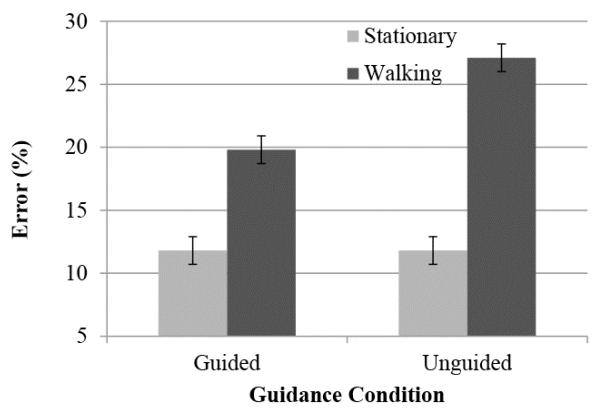

Results for the error values revealed a significant main effect of trial type such that overall, participants had lower error rates on stationary trials (M = .11, SE = .02) compared to walking trials (M = .19, SE = .02), F (1, 16) = 26.0, p < .01, partial η² = .62, and importantly, a vision condition X trial type interaction, F (1, 16) = 11.27, p < .01, partial η² = .41. No other main effects or interactions were significant. Next, to determine the direction of the interaction, separate 2 (vision condition: degraded or normal vision) X 2 (path order: degraded vision first or normal vision first) mixed-design ANOVAs were conducted on error values for walking and stationary trials. Results for the walking trials revealed a main effect of vision condition, F (1, 14) = 8.245, p = .012, partial η² = .37. Error values on the auditory task were lower in the normal vision condition (M = .16, SE = .02) than in the degraded vision condition (M = .22, SE = .02), consistent with the hypothesis (see Figure 5). In contrast, there was no significant effect of vision condition on stationary trials (p = .30), suggesting that the degraded-vision cost was specific to the act of walking with blur goggles. No other main effects or interactions were significant.

Figure 5.

Mean error values on the digit monitoring task in Experiment 3, performed while walking a path in severely degraded versus normal vision conditions, or while standing in a stationary position in each vision condition. Error bars represent between-subjects standard error.

Anxiety Measures

A paired-sample t-test was also conducted on the SUDS values compared across vision conditions. This difference was significant, with SUDS scores lower on the normal-vision block (M = 20.24, SE = 3.02), compared to the degraded-vision block (M = 46.06, SE = 4.67), t (14) = 5.77, p < .01.

Findings from Experiment 3 suggest that more attention is required to navigate with degraded vision compared to normal vision. Performance on an attention-demanding task, attempted while individuals were navigating through simple pathways in a novel space, was impaired when walking with degraded vision compared to normal vision. Although these results support the notion that increased mobility demands in the degraded-vision condition contributed to the decrease in available attention, it remains unclear from Experiment 3 whether the visual deficit alone generated the performance difference. Experiment 4 sought to evaluate the impact of mobility monitoring more directly using the guidance manipulation of Experiment 2.

Experiment 4

The results from Experiment 3 provided support for the notion that degraded-vision navigation requires more attentional resources than navigating with normal vision. Next, as a parallel to Experiment 2, we assessed whether the presence or absence of a navigation guide altered the availability of attentional resources while walking. Participants with simulated degraded vision walked along two series of paths: one series holding the arm of a guide, one series walking independently. We predicted that the addition of a guide would allow participants to free attentional resources that might otherwise be used for mobility monitoring. That is, when holding the arm of the guide, those walking with degraded vision would have more attention available to perform other tasks (in this case the digit monitoring task) because the navigation guide became responsible, in part, for the safety of the participant. Because the guidance manipulation did not alter normal-vision performance in Experiment 2, participants were not tested with normal vision in this experiment.

Method

Participants

Twenty-four University of Utah undergraduate students who had not participated in the previous experiments participated in Experiment 4 (13 males, 11 females) for partial course credit. Data from three participants were removed because of errors with the wireless headphones.

Materials

Materials from Experiment 3 were used again for Experiment 4. Participants heard a continuous stream of auditory digits through wireless headphones generated from a script. Participants also wore the same blur goggles and walked the same paths as Experiment 3.

Design

Experiment 4 used a 2 (guidance) X 2 (trial type) X 2 (guidance order) design. Guidance (guided, unguided) and trial type (walking, stationary) were manipulated within subjects and guidance order (guided first, unguided first) was manipulated between subjects.

Procedure

Participants first signed consent and demographic forms. Next, participants both read written instructions and heard verbal instructions for the digit monitoring task that was used in Experiment 3. After practice with both the auditory task and the walking task, each participant performed two blocks of trials, one guided and one unguided, in a counterbalanced order. Regardless of guidance condition, participants were given the same verbal instructions before the path. The experimenter indicated how many turns the to-be-walked path had and the direction of those turns. To indicate precisely where to turn on each trial, the experimenter tapped the shoulder of the participant a few steps prior to each turn, on both guided and unguided trials. Each block contained four paths and two stationary trials to serve as baseline performance within that block. SUDS measures were collected after each block. As was the case for Experiment 3, participants were asked to keep the goggles on in between paths so they did not view the to-be-walked path with normal vision prior to navigating with the blur goggles. All participants in Experiment 4 performed both blocks of trials with the blur goggles.

Results and Discussion

Auditory Task Performance

Performance on the digit monitoring task was the main dependent variable assessed in Experiment 4. Similar to Experiment 3, no significant results were found for reaction time (RT) data. The remaining analyses were conducted on error values.

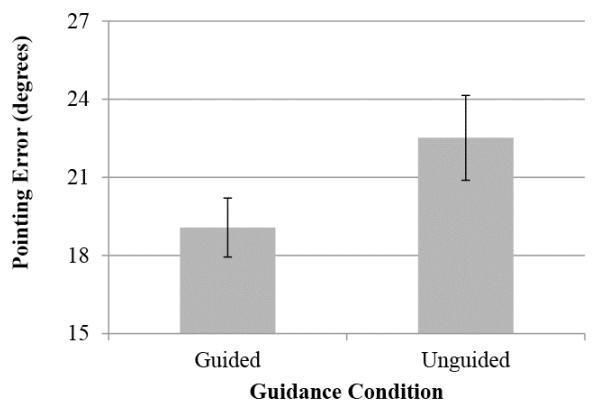

Results of a 2 (guidance) X 2 (trial type: walking or stationary) X 2 (guidance order) mixed-design ANOVA performed on the error values revealed a main effect of trial type such that overall, as expected, participants had lower error rates on stationary trials (M = .12, SE = .02) compared to walking trials (M = .23, SE = .02), F (1, 18) = 47.75, p < .01, partial η² = .73. There was also a main effect of guidance showing that error rates on unguided trials (M = .19, SE = .02) were higher than on guided trials (M = .16, SE = .02), F (1, 18) = 5.73, p < .05, partial η² = .24. These main effects were qualified by a significant guidance condition X trial type interaction, F (1, 18) = 6.90, p < .01, partial η² = .28. To further assess this interaction, a 2 (guidance) X 2 (guidance order) mixed-design ANOVA was performed on the error values, separately for walking and stationary trials. As predicted, for walking trials, lower error values resulted on guided paths (M = .20, SE = .02) compared to unguided paths (M = .27, SE = .02), F (1, 18), p < .001, partial η² = .44 (see Figure 6), but there was no significant effect of guidance in the analysis of stationary trials, F (1, 18) = .338, p = .987. The lack of a significant effect on stationary trials was not surprising (given that the conditions were essentially the same), but it indicates that participants were not simply performing better on the auditory task overall in one block of trials compared to the other. Guidance order was not a significant predictor in any of these analyses.

Figure 6.

Mean error values on the digit monitoring task in Experiment 4, performed under degraded viewing while walking a path with a guide (guided) or independently (unguided) or while standing in a stationary position. Guided and unguided stationary trials refer to the condition block in which the stationary trials were embedded, as there was no physical guidance while participants were standing in place. Error bars represent between-subjects standard error.

Anxiety Measures

SUDS values were compared using a paired-samples t-test. Unguided SUDS scores (M = 40.3, SE = 3.02) were significantly higher than guided SUDS scores (M = 23.10, SE = 33.60), t (19) = 2.41, p < .05.

Experiment 4 evaluated the impact of navigation with or without the presence of a guide on the availability of attentional resources. To test attentional resources, participants were asked to perform an auditory task while walking under two levels of guidance. Importantly, while we used auditory task performance as the main dependent variable, performance on this task served as an indicator of the amount of free attention a participant had remaining in the face of safely navigating through the environment. Results indicated that less available attention remained for those walking with degraded vision when walking independently as compared to walking with a guide. This is consistent with the proposed model in that when demands for mobility monitoring are higher, as they were in the condition of independent walking, fewer attentional resources are available to perform other goals. However, when a guide was introduced, serving to reduce the amount of attention participants needed to focus on their own safety, more attention was available to do the secondary task. Importantly, visual information alone cannot explain this finding, as the visual information on guided and unguided trials was held constant.

Experiment 5

Taken together, the first four experiments support an impact of cognitive factors on low-vision spatial learning. Navigating through an environment in the absence of a guide leads to poorer memory for the location of objects (Experiment 2) and interferes with a secondary task (Experiment 4) more so than when navigating with a guide. These findings, although compelling on their own, would benefit from a more direct assessment of the impact of attentional resources on spatial learning. Specifically, we have proposed that people navigating with a guide are better able to remember the spatial location of objects because they are allocating more of their attentional resources towards learning and away from mobility monitoring. We are therefore claiming that the redistribution of attentional resources is mediating the relationship between guidance and spatial learning. Experiment 5 sought to test this relationship using a mediation analysis.

To test for mediation, we needed to assess both attentional resources while walking and spatial learning performance for each individual. Because the memory task and attention task used for Experiments 2 and 4, respectively, were difficult tasks separately, the procedures were modified so that participants could perform the auditory task while they were learning the landmark locations. For the spatial learning task, path length and number of to-be-remembered landmarks were reduced. For the attention task, we used a reaction time task thought to be a sensitive measure of cognitive load without meaningfully decreasing performance on the primary task (Brünken, Plass, & Leutner, 2003; Verwey & Veltman, 1996). In this task, tones are presented at random time intervals, and participants are asked to respond as quickly as possible when they hear the tone. Because of the ease of the task, accuracy values in this task are extremely high, and the reaction time is the main dependent variable. Reaction times provide a measure for cognitive load such that slower reaction times indicate greater cognitive load (Verwey & Veltman, 1996). Participants in Experiment 5 were asked to perform the auditory task while navigating during spatial learning trials, some paths guided, some unguided.

Participants navigated under severely degraded viewing conditions for all trials. We were then able to examine whether the guidance cost on the attention task – guided minus unguided reaction times – predicted the guidance cost on the spatial learning task. We predicted that attention performance would fully mediate the relationship between guidance and spatial learning.

Methods

Participants

Forty-eight University of Utah undergraduate students participated in Experiment 5 (22 males, 26 females) for partial course credit. Two participants were removed from the analysis for technical difficulties with the recording of mouse clicks, and one for below chance performance on the verbal pointing task. Forty-five participants were included in the analyses.

Materials

The same wireless headphones, wireless mouse, computer, and blur goggles from Experiment 4 were used in Experiment 5. For the attention task, participants heard beep sounds occurring at random intervals between one and five seconds. The auditory presentation of beeps and recording of reaction times from mouse clicks was controlled using a script on a laptop computer. The paths for Experiment 5 were shorter than those in the previous experiments, containing only three landmarks each. Path distance was equated between floors, with overall path distance ranging from 109 to 121 meters. The number of turns on each path was also equated, with each path requiring the participants to make 4 turns. Participants performed two paths for each guidance condition, for a total of four paths.

Design

Experiment 5 used a 2 (guidance) X 2 (guidance order) design. Guidance (guided, unguided) was manipulated within subjects, and guidance order (guided first, unguided first) was manipulated between subjects.

Procedure

The experimenters greeted the participants, who signed the consent form and filled out the demographic information. Next, participants received instructions and training for the spatial learning task as in Experiments 1 and 2. Experimenters then explained that participants would also be wearing headphones during the experiment. Participants were informed that the spatial learning task was the primary goal of the experiment, but that they were also to click the mouse as quickly as possible upon hearing a beep through the headphones. Participants then practiced the auditory task from a stationary position to become accustomed to the sound and make adjustments to the volume of the headphones if needed. Participants then performed a practice trial of the spatial learning and auditory task together.

For the experimental trials, participants performed four critical trials of the spatial learning task with the auditory task, two guided and two unguided. Participants wore the blur goggles for all experimental trials. Guided and unguided trials were alternated, and whether the participant began with a guided or unguided trial was counterbalanced. Following each spatial learning trial, participants performed a stationary trial of the auditory task to serve as a baseline. On stationary trials, participants performed just the auditory task for one minute while standing in the hallway. Participants were also asked to rate their anxiety level following each path walked using the SUDS scale as a reference. Lastly, participants were thanked and debriefed.

Results and Discussion

Performance on both guided and unguided spatial learning trials and guided and unguided attention trials (auditory task performance) was recorded. For spatial learning performance, as in Experiments 1 and 2, error values (average of six pointing errors) were the main dependent variable; for attention trials, reaction times for the tone response during the walking trials were the main dependent variable. We included the guided and unguided memory error values, and guided and unguided RT values on the attention task in the analysis for each participant.

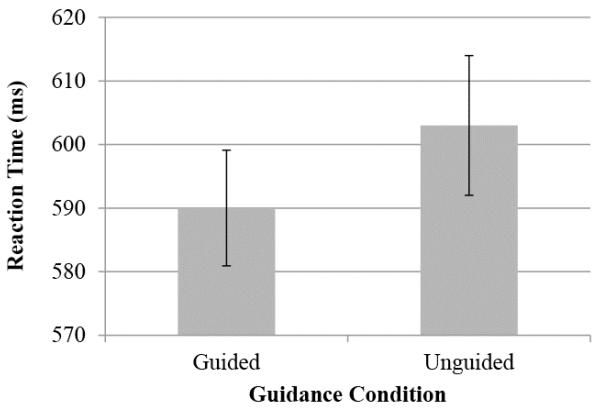

Replication Analyses

Separate 2 (guidance) X 2 (guidance order) mixed-design ANOVAs were conducted on spatial learning and attention trials to test for a replication of the findings of Experiment 2 and Experiment 4, respectively. Errors on guided spatial learning trials were significantly lower (M = 19.07, SE = 1.13) than on unguided trials (M = 22.52, SE = 1.63), F (1, 44) = 5.96, p < .05, partial η² = .12 (see Figure 7). Notably, the overall error values are lower given the reduced path length and number of landmarks to remember. No other effects or interactions were significant. This finding replicates the main finding of Experiment 2. Similar to the pattern of results from Experiment 4, reaction times on the auditory task during guided walking trials (M = 590 ms, SE = 9.1) were significantly faster than during unguided walking trials (M = 603 ms, SE = 11.0), F (1, 44) = 6.23, p < .05, partial η² = .12 (see Figure 8). No other main effects or interactions were found. As a manipulation check, we also ran a t-test comparing reaction times on the auditory task when stationary compared to an average of the walking trials, both guided and unguided. This manipulation check yielded a significant effect whereby reaction times were faster when stationary (M = 519 ms, SE = 9.6) compared to when walking (M = 596 ms, SE = 9.1), t (45) = 12.95, p < .001.

Figure 7.

Mean error values for the verbal pointing task in Experiment 5, assessing spatial learning of a novel, indoor path under severely degraded viewing in the presence of a guide (guided) or walking independently (unguided). Error bars represent between-subject standard error.

Figure 8.

Mean reaction times for the auditory task in Experiment 5, while navigating a novel, indoor path in the presence of a guide (guided) or walking independently (unguided). Error bars represent between-subject standard error.

Mediation Analysis

According to our hypothesis, and in line with the results from the first four experiments, individuals navigating with degraded vision are not able to learn the environment as well when they are not guided because they have fewer attentional resources available to allocate to the task goal of spatial learning. A mediational analysis would demonstrate this if the guided-versus-unguided difference in performance on the attention task predicted the guided-versus-unguided difference in performance on the spatial learning task. To test for mediation, cost values were first calculated for each participant on each task by subtracting performance on the unguided trials from performance on the guided trials (pointing error for the spatial learning task, reaction time for the attention task). These calculations yielded two cost values (attention cost and learning cost) that reflected a difference between guidance conditions such that a negative value indicated the predicted direction of the effect, showing poorer performance on unguided trials for both the attention task (mean cost = -12.92 ms) and the spatial learning task (mean cost = -3.52 degrees).

Given our repeated-measures design, we followed the repeated-measures mediation procedure described by Judd, Kenny, and McClelland (2001). In this approach, treatment effects (the difference in performance between the levels of the independent variable, computed separately for each task) are calculated. The treatment effect of one task is then entered into a regression to determine whether it predicts the treatment effect of the other task while controlling for overall performance on that task (average of both treatment conditions). As we had already calculated the treatment effects for spatial learning and attention (attention cost and learning cost), we next calculated an additional variable to be entered into the regression to control for overall performance on the auditory task. This value was the sum of the performance on guided attention trials and the performance on the unguided attention trials, centered at the group mean (sumattn). Both attention cost and sumattn were entered into a regression as predictors of the dependent variable, learning cost. The analysis demonstrated that attention cost significantly predicted learning cost, B = .38, t(43) = 2.47, p = .018, indicating that attentional cost is a significant mediating variable. Sumattn was not a significant predictor of learning cost, B = .11, t(43) = .75, p = .458.
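The regression step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: the function name `within_subject_mediation` and the simulated scores are hypothetical, ordinary least squares is assumed, and the coefficients returned are unstandardized, so they are not directly comparable to the reported B values if those are standardized.

```python
import numpy as np

def within_subject_mediation(learn_guided, learn_unguided, attn_guided, attn_unguided):
    """Regress learning cost on attention cost and the mean-centered attention sum."""
    learn_guided = np.asarray(learn_guided, dtype=float)
    learn_unguided = np.asarray(learn_unguided, dtype=float)
    attn_guided = np.asarray(attn_guided, dtype=float)
    attn_unguided = np.asarray(attn_unguided, dtype=float)

    # Cost values: guided minus unguided, so negative means worse when unguided.
    learning_cost = learn_guided - learn_unguided
    attention_cost = attn_guided - attn_unguided

    # Control variable: summed attention performance, centered at the group mean.
    sum_attn = attn_guided + attn_unguided
    sum_attn_centered = sum_attn - sum_attn.mean()

    # Design matrix columns: intercept, attention cost, centered sum.
    X = np.column_stack([np.ones_like(attention_cost), attention_cost, sum_attn_centered])
    coef, *_ = np.linalg.lstsq(X, learning_cost, rcond=None)

    # Standard errors and t statistics from the residual variance.
    residuals = learning_cost - X @ coef
    dof = learning_cost.size - X.shape[1]
    sigma2 = residuals @ residuals / dof
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
    return {"coef": coef, "t": coef / se, "dof": dof,
            "attention_cost": attention_cost, "learning_cost": learning_cost}

# Hypothetical scores for 46 participants; NOT the study's data.
rng = np.random.default_rng(1)
n = 46
attn_guided = rng.normal(590, 60, size=n)           # reaction time, ms
attn_unguided = attn_guided + rng.normal(13, 30, size=n)
learn_guided = rng.normal(19, 7, size=n)            # pointing error, degrees
learn_unguided = (learn_guided
                  + 0.1 * (attn_unguided - attn_guided)
                  + rng.normal(2, 5, size=n))

result = within_subject_mediation(learn_guided, learn_unguided, attn_guided, attn_unguided)
print("coefficients (intercept, attention cost, sumattn):", result["coef"])
print("t statistics:", result["t"], "with df =", result["dof"])
```

With 46 participants and three estimated parameters (intercept, attention cost, sumattn), the residual degrees of freedom are 43, which matches the t(43) values reported for this analysis.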

Experiment 5 provided compelling support for the claim that individuals are not able to learn an environment as well while navigating under high mobility-monitoring demands because they have less attention available while walking. This experiment specifically demonstrated that the effect of increasing mobility-monitoring demands on attentional resources predicts the effect of increasing mobility-monitoring demands on spatial learning. As such, the amount of available attention mediates the relationship between mobility monitoring and spatial learning. Additionally, findings from Experiment 5 strengthen the claims from Experiments 2 and 4 by replicating the findings using a different methodology.

General Discussion

Summary of Findings

The series of studies included in this paper departed from a focus common in low vision research—whether objects can be detected and avoided with degraded vision—by evaluating cognitive influences of navigating with visual impairment. In five studies, we tested the hypothesis that individuals with degraded compared to normal vision have fewer attentional resources available online while they are walking through an unfamiliar space, due to attention to the goal of safe and efficient navigation (mobility monitoring). This redistribution of attentional resources may not noticeably impact the ability to walk, but we predicted that it would impair the ability to learn information about the spatial environment.

Results from this work provide convincing support for our proposed account. First, two experiments evaluated how changes to mobility-monitoring demands influenced the ability to learn a new spatial environment. The hypothesis that navigating with degraded vision would lead to poorer spatial memory for a novel environment was supported by findings from Experiment 1; spatial learning was superior when navigating with normal vision compared to degraded vision. Experiment 2 further evaluated this hypothesis by holding visual information constant and evaluating whether decreases in mobility-monitoring demands increase spatial learning. When mobility-monitoring demands were lower, as a result of walking with a guide, memory for spatial environments that were learned under degraded-viewing conditions showed improvement. We concluded from the first series of experiments that there is a cognitive component to low-vision navigation.

Second, this work evaluated how changes in mobility-monitoring demands directly affected the availability of attentional resources while individuals walked. We hypothesized that attentional demands are greater with visual degradation than with normal vision, and that attentional demands increase as mobility-monitoring demands increase within low-vision navigation. Experiment 3 provided support for the first part of this hypothesis by demonstrating that walking with degraded vision required more attentional resources than walking with normal vision. Experiment 4 showed that more attention was available when walking with a guide (low mobility-monitoring demands) than when walking independently (high mobility-monitoring demands). Therefore, the presence of a guide freed attentional resources so that they could be used for tasks other than executing the goal of safe navigation.

A final study provided support for the claim that attentional demands mediated the relationship between mobility monitoring and spatial learning during low-vision navigation. Specifically, we found that low compared to high levels of mobility monitoring increased performance on the spatial learning task because, when mobility-monitoring demands are lowered, more attention is available for the goal of spatial learning.

Low-Vision Spatial Learning

Our findings suggest that the additional attention required to navigate with degraded vision impairs spatial learning, but also open the question of precisely how spatial learning is disrupted. Research has shown that to form a spatial representation of a novel space, individuals must not only understand the layout, but also keep track of where they are in that space. To do so, they must spatially update both direction and distance information as they navigate (Gallistel, 1990). When navigating with normal vision, individuals can easily recover visual information required for spatial updating such as the distance remaining in the hallway or landmarks that lie ahead. For those with visual impairments, access to such visual cues is limited. Importantly, research demonstrates that blind and normally sighted individuals are able to form cognitive maps of unknown spaces (Lahav & Mioduser, 2008) and navigate to the location of specified landmarks with prior knowledge of the environment (Giudice, Bakdash, & Legge, 2007), suggesting that spatial updating can be accomplished with or without vision. What is likely required in the absence of visual information is a greater reliance on path integration, or the continual monitoring of distance and direction traveled from a starting location (Gallistel, 1990; Mittelstaedt & Mittelstaedt, 1980). Should additional path integration requirements be contributing to the errors, one might predict that visual degradation would interfere with estimates of distance and direction traveled between turning and landmark locations.

Although the literature has not specifically addressed how distraction associated with mobility monitoring might influence navigation and spatial learning abilities, previous research has investigated the impact of distraction or dual-task performance when navigating blind and with normal vision. Such divided attention disrupts path integration performance and perception of the distance one has traveled (Glasauer, Schneider, Grasso, & Ivanenko, 2007; Glasauer et al., 2009; Sargent, Zacks, Philbeck, & Flores, 2013). Results largely suggest that when cognitively distracted, people underestimate the distance traveled. This line of work also suggests that environmental distractions related to the path, such as the complexity of the space or number of turns on the path, yield the opposite pattern, whereby the distance traveled is overestimated. It has also been demonstrated that when walking to a landmark location without vision, the ability to perform a secondary task is particularly impacted when very near the landmark location, but relatively unimpaired when farther from objects (Lajoie, Paquet, & Renée, 2013). Future work using our spatial learning paradigm could include distance estimations to address this question.

Implications for the Low Vision Population

In addition to laying the groundwork for the role of attentional resources in low vision spatial learning, the current findings also support the more subjective reports of low-vision individuals concerning hesitation to travel and explore new spaces (Brennan et al., 2001). However, an open question is how these findings would translate to those with clinical low vision. One possibility would be that the attention and learning costs would be reduced in a clinical population due to experience with low-vision navigation. A recent study by Bochsler and colleagues found that individuals with low vision were better able to detect steps and ramps than normally sighted individuals with simulated low vision (Bochsler et al., 2013). Although the authors posit that experience with low vision might explain this difference, more research is needed to address this complicated question.

Alternatively, it is possible that the attention and learning costs would be present or even exaggerated in a clinical population due to the age demographic in which visual deficits are most common. Low vision is very prevalent in aging populations, with 65% of the visually impaired population over the age of 50 (World Health Organization, 2011). Not only do older adults often face additional physical challenges with mobility that might further compete for attentional resources, but a robust finding in the literature suggests that older adults have a more difficult time learning large spaces (Jansen, Schmelter, & Heil, 2010; Kirasic, 1991; Moffat, Zonderman, & Resnick, 2001). It has also been shown that older adults are more impaired than younger adults when walking without vision to a previously viewed target location in the face of a dual task, suggesting that the elderly might be disproportionately impaired by multitasking while navigating. With respect to the attentional costs of navigating with low vision, cognitive resource competition is particularly important for an elderly population as aging is associated with decreases in cognitive processing speeds (Salthouse, 1988). For an older population, even without vision loss, we expect that mobility-monitoring demands increase compared to younger adults, influencing quality of life and willingness to navigate novel environments.

The findings from this work also have implications for the design of navigation aids for the visually impaired. Many of the visual aids that are currently available for the blind and visually impaired, particularly those that provide verbal information about the spatial environment, have been shown to be detrimental to spatial learning. Klatzky, Marston, Giudice, Golledge, and Loomis (2006) and Giudice, Marston, Klatzky, Loomis, and Golledge (2008) evaluated two types of navigation aids (aids providing verbal instructions and aids using non-verbal auditory instructions) on how accurately a user was able to navigate and how well they remembered the environment. Although both technologies appear to be effective at helping a user arrive at a desired location, users had a poorer memory for the environment after the task when verbal instructions were provided, presumably because of the additional cognitive processing. Similarly, Gardony, Brunye, Mahoney, and Taylor (2013) suggest that visual aids impair spatial memory specifically by dividing one’s attention between the navigation task and spatial learning while navigating. Together with the findings from the current work, this suggests that a device that offloads attentional demands, as opposed to bombarding the user with additional information to process, is the appropriate solution for a low-vision individual who is already faced with increased attention costs.

Attention and Spatial Learning