Abstract

Auditory scene analysis is a demanding computational process that is performed automatically and efficiently by the healthy brain but is vulnerable to the neurodegenerative pathology of Alzheimer's disease (AD). Here we assessed the functional neuroanatomy of auditory scene analysis in AD using the well-known ‘cocktail party effect’ as a model paradigm, whereby stored templates for auditory objects (e.g., hearing one's spoken name) are used to segregate auditory ‘foreground’ and ‘background’. Patients with typical amnestic AD (n = 13) and age-matched healthy individuals (n = 17) underwent functional MRI at 3 T using a sparse acquisition protocol with passive listening to auditory stimulus conditions comprising the participant's own name interleaved with or superimposed on multi-talker babble, and spectrally rotated (unrecognisable) analogues of these conditions. Name identification (conditions containing the participant's own name contrasted with spectrally rotated analogues) produced extensive bilateral activation involving superior temporal cortex in both the AD and healthy control groups, with no significant differences between groups. Auditory object segregation (conditions with interleaved name sounds contrasted with superimposed name sounds) produced activation of right posterior superior temporal cortex in both groups, again with no differences between groups. However, the cocktail party effect (interaction of own name identification with auditory object segregation processing) produced activation of right supramarginal gyrus in the AD group that was significantly enhanced compared with the healthy control group. The findings delineate an altered functional neuroanatomical profile of auditory scene analysis in AD that may constitute a novel computational signature of this neurodegenerative pathology.

Keywords: Auditory scene analysis, Cocktail party effect, Alzheimer's disease, fMRI

Highlights

- Auditory scene analysis is affected in Alzheimer's disease (AD) but little studied.
- We addressed this using fMRI of the ‘cocktail party effect’ in AD vs older controls.
- Relative to controls, AD patients showed enhanced activation of supramarginal gyrus.
- Auditory scene analysis is a new, informative probe of brain network function in AD.

1. Introduction

Decoding the auditory world poses a formidable problem of neural computation. Our brains normally solve this problem efficiently and automatically, but the neural basis of ‘auditory scene analysis’ remains incompletely understood. The disambiguation of sound sources within the complex mixture that generally arrives at our ears is an essential prerequisite for identification of those sources and a fundamental task of auditory scene analysis (Bregman, 1994). One of the best-known instances of this process in action is the so-called ‘cocktail party effect’, whereby our own name spoken across a noisy room captures attention and may even lead to successful tracking of the relevant conversation against the surrounding babble (Cherry, 1953; Moray, 1959). The cocktail party effect is a celebrated example of a much wider category of auditory phenomena that depend on generic computational processes that together segregate an acoustic target or ‘foreground’ sound from the acoustic ‘background’: these processes are likely to include representation of spectral and temporal regularities in the sound mixture and matching to previously stored auditory ‘templates’ (for example, specific speech or vocal sounds) prior to engagement of attentional resources (Billig et al., 2013; Griffiths and Warren, 2002; Kumar et al., 2007). Functional neuroimaging studies to define neuroanatomical substrates of auditory scene analysis in the healthy brain have implicated a distributed, dorsally directed cortical network including planum temporale and posterior superior temporal gyrus, supramarginal gyrus, intraparietal sulcus and prefrontal projection targets (Dykstra et al., 2011; Gutschalk et al., 2007; Hill and Miller, 2010; Kondo and Kashino, 2009; Kong et al., 2014; Linden et al., 1999; Overath et al., 2010; Wilson et al., 2007; Wong et al., 2009).
While frontal cortex is thought to drive top-down attentional processes (Hill and Miller, 2010; Obleser et al., 2007; Schönwiesner et al., 2007), the precise role of parietal cortex in auditory scene analysis is more contentious and might include primary labelling of salient events (Cohen, 2009; Downar et al., 2000), integration of signal representations for programming behavioural responses (Cusack, 2005; Lee et al., 2014) or attentional modulation (Hill and Miller, 2010; Nakai et al., 2005). With particular reference to the cocktail party effect, speech intelligibility has been shown to engage more ventral and anterior superior temporal cortex in the dominant hemisphere (Scott et al., 2000), although this response is influenced by the nature of the background masker (speech versus non-speech: Scott and McGettigan, 2013; Scott et al., 2009). Lexical processes may modulate auditory scene analysis, perhaps via template matching algorithms (Billig et al., 2013; Griffiths and Warren, 2002) as well as via additional parietal and prefrontal mechanisms engaged in speech-in-noise processing, particularly under conditions of increased attentional demand (Binder et al., 2004; Davis et al., 2011; Nakai et al., 2005; Scott et al., 2004; Scott and McGettigan, 2013).

On behavioural as well as neuroanatomical grounds, the computational processing required for auditory scene analysis is likely to be particularly vulnerable to the neurodegenerative disease process in Alzheimer's disease (AD). Patients with AD commonly experience difficulties in following conversations under degraded listening conditions such as a busy room or noisy telephone line. Both generic deficits of central auditory processing and specific deficits of auditory scene analysis have been demonstrated in AD (Gates et al., 1996, 2008, 2011; Golden et al., 2015; Goll et al., 2011, 2012; Golob et al., 2007, 2009; Kurylo et al., 1993; Strouse et al., 1995); these develop early in the course of disease and are likely to interact with impairments of attention and working memory (Conway et al., 2001; Goll et al., 2012; Stopford et al., 2012). Deficits of auditory scene analysis are in accord with the neuroanatomy of AD, which blights a large-scale, functionally coherent brain network linking mesial temporal lobe structures with retrosplenial, temporo-parietal and medial prefrontal cortices (Buckner et al., 2008; Greicius and Menon, 2004; Raichle et al., 2001; Seeley et al., 2009). Regional deposition of pathogenic proteins, hypometabolism and atrophy within this network in AD closely overlaps regions implicated in auditory scene analysis and speech-in-noise processing in the healthy brain, and involvement of temporo-parietal cortical junction zones is likely to be particularly pertinent (Herholz et al., 2002; Scahill et al., 2002; Warren et al., 2012). Indeed, modulation of activity in these areas has been linked to the efficiency of speech-in-noise processing even in apparently healthy older individuals (Wong et al., 2009). However, the pathophysiology of this culprit brain network in AD remains to be worked out in detail. 
While involvement of this network is relatively selective in AD, it is unlikely that the network behaves as an amorphous unit (Warren et al., 2012); moreover its core function or functions have not been defined. Although it has been designated the ‘default mode network’, showing correlated activity in the healthy ‘resting’ brain and deactivation with certain tasks (Buckner et al., 2008; Raichle et al., 2001; Shulman et al., 1997), this network has also been implicated in various ‘active’ processes including maintenance of internal sensory representations (Buckner et al., 2008; Buckner and Carroll, 2007; Spreng and Grady, 2010; Zvyagintsev et al., 2013) and more specifically in aspects of auditory scene analysis, both in the healthy brain (Salvi et al., 2002; Wong et al., 2009; Zündorf et al., 2013) and in patients with AD (Goll et al., 2012).

Here we used the cocktail party effect to delineate the functional neuroanatomy of auditory scene analysis in a cohort of patients with AD in relation to healthy older individuals. Previous work in AD has addressed psychophysical deficits of auditory scene analysis using relatively simple paradigms and structural neuroanatomical correlation (Gates et al., 2008, 2011; Goll et al., 2012). In this study we set out to use a realistic auditory scene analysis paradigm in the context of fMRI, in order to probe functional brain mechanisms directly. This paradigm was motivated by a cognitive model of the cocktail party effect according to which stored templates for auditory objects (e.g., spoken words) are used to disambiguate those objects from other sounds in the environment during parsing of the auditory scene (segregation of auditory ‘foreground’ and ‘background’: Griffiths and Warren, 2002). We used participants' own names as salient acoustic targets (Moray, 1959; Wood and Cowan, 1995) against naturalistic multi-talker babble; a sparse fMRI acquisition protocol to minimise confounding effects engendered by streaming auditory stimuli against scanner noise (Hall et al., 1999); and a passive-listening design to minimise any confounding effects from an output task in these cognitively impaired patients. Based on previous neuroanatomical work in the healthy brain and in AD, we hypothesised that patients with AD and healthy older individuals would show similar profiles of auditory cortex activation by sound and of representation of name identity per se; but that AD would have a distinct pathophysiological signature during auditory scene analysis, in temporo-parietal cortical regions separable from the more anterior superior temporal cortex engaged by name identity coding (Dykstra et al., 2011; Goll et al., 2012; Overath et al., 2010; Scott et al., 2000, 2009; Wong et al., 2009).
In particular, we hypothesised that AD would produce an altered interaction of auditory name template matching with object segregation underpinning the cocktail party effect.

2. Methods

2.1. Participants

Thirteen consecutive patients (mean (standard deviation) age 66 (5.8) years; five female) fulfilling consensus clinical criteria for early to moderately severe, typical Alzheimer's disease (AD) led by predominant episodic memory loss with additional cognitive dysfunction (Dubois et al., 2007) and 17 age-matched healthy individuals (68 (3.9) years; nine female) with no history of neurological or psychiatric illness participated in the study. All participants were right-handed, no participant had a clinical history of peripheral hearing loss, and none was a professional musician. Detailed general neuropsychological assessment in the AD group corroborated the clinical diagnosis in all cases; demographic, clinical and neuropsychological details for the experimental groups are summarised in Table 1. At the time of participation, 12 patients were receiving symptomatic treatment with an acetylcholinesterase inhibitor (one was also receiving memantine). CSF examination was undertaken in six patients with AD and revealed a total tau:beta-amyloid ratio > 1 (compatible with underlying AD pathology) in all cases. All participants gave informed consent in accordance with the Declaration of Helsinki.

Table 1.

General demographic, clinical and behavioural data for participant groups.

| Characteristics | Healthy controls | AD |
|---|---|---|
| General | | |
| No. (m:f) | 17 (8:9) | 13 (8:5) |
| Age (years) | 68.3 (3.9) | 65.7 (5.6) |
| Education (years) | 15.8 (3.0) | 13.4 (3.2)ᵃ |
| Musical training (years) | 1.7 (2.7) | 3.0 (2.7) |
| MMSE | 28.8 (0.9) | 19.7 (6.5)ᵃ |
| Symptom duration (years) | − | 4.9 (1.7) |
| Neuropsychological assessment | | |
| General intellect: IQ | | |
| WASI verbal IQ | 118.6 (8.1) | 87.1 (22.3)ᵃ |
| WASI performance IQ | 118.1 (15.1) | 83.5 (17.4)ᵃ |
| NART estimated premorbid IQ | 119.7 (5.7) | 103.9 (16.5)ᵃ |
| Episodic memory | | |
| RMT words (/50) | 46.2 (2.8) | 30.6 (6.9)ᵃ |
| RMT faces (/50) | 43.1 (4.6) | 33.5 (7.1)ᵃ |
| Executive skills | | |
| WASI block design (/71) | 42.4 (16.6) | 12.6 (13.7)ᵃ |
| WASI matrices (/32) | 29.4 (14.9) | 12.8 (9.6)ᵃ |
| WMS-R digit span forward (/12) | 8.6 (1.8) | 6.1 (2.1)ᵃ |
| WMS-R digit span backward (/12) | 6.6 (2.2) | 4.5 (2.8)ᵃ |
| D-KEFS Stroopᵇ colour (s) | 33.0 (7.1) | 53.3 (18.0)ᵃ |
| D-KEFS Stroopᵇ word (s) | 22.4 (4.5) | 41.4 (25.6)ᵃ |
| D-KEFS Stroopᵇ interference (s) | 62.2 (16.7) | 102.1 (32.9)ᵃ |
| Verbal skills | | |
| WASI vocabulary (/80) | 68.1 (4.5) | 45.2 (20.2)ᵃ |
| WASI similarities (/48) | 41.1 (9.0) | 23.1 (12.8)ᵃ |
| GNT (/30) | 24.9 (3.2) | 12.9 (8.5)ᵃ |
| BPVS (/150) | 146.8 (3.0) | 123.8 (28.8)ᵃ |
| NARTᶜ (/50) | 41.2 (4.6) | 30.2 (12.2)ᵃ |
| Posterior cortical skills | | |
| GDAᵈ (/24) | 15.6 (3.5) | 6.4 (4.9)ᵃ |
| VOSP object decision (/20) | 18.2 (1.5) | 14.8 (2.9)ᵃ |
| Post-scan behavioural tasks | | |
| Name detection (/20) | 19.9 (0.3) | 19.0 (1.5) |
| Segregation detection (/20)ᶜ | 17.1 (2.7) | 12.2 (4.1)ᵃ |

Values are mean (standard deviation) unless otherwise stated. Raw data are shown for neuropsychological tests (maximum score in parentheses); bold indicates mean raw score <5th percentile based on published norms.

AD, patient group with typical Alzheimer's disease; BPVS, British Picture Vocabulary Scale (Dunn et al., 1982); D-KEFS, Delis Kaplan Executive System (Delis et al., 2001); GDA, Graded Difficulty Arithmetic (Jackson and Warrington, 1986); GNT, Graded Naming Test (McKenna and Warrington, 1983); L, left; MMSE, Mini-Mental State Examination score; NART, National Adult Reading Test (Nelson, 1982); R, right; RMT, Recognition Memory Test (Warrington, 1984); VOSP, Visual Object and Spatial Perception Battery (Warrington and James, 1991); WASI, Wechsler Abbreviated Scale of Intelligence (Wechsler, 1999); WMS-R, Wechsler Memory Scale, Revised (Wechsler, 1987).

ᵃ Significantly different from healthy control group.

ᵇ Three patients did not complete all sub-sections of this task.

ᶜ One patient did not complete this task.

ᵈ Four patients were unable to complete this task.

2.2. Assessment of peripheral hearing

All participants underwent pure-tone audiometry using a procedure adapted from a commercial screening audiometry software package (AUDIO-CD™, http://www.digital-recordings.com/audiocd/audio.html). The test was administered via headphones from a notebook computer in a quiet room. Five frequencies (500, 1000, 2000, 3000 and 4000 Hz) were assessed: at each frequency, participants were presented with a continuous tone that slowly and linearly increased in intensity. Participants were instructed to indicate as soon as they were sure they could detect the tone; this response time was measured and stored for offline analysis. Hearing was assessed in the right ear in each participant.

2.3. Experimental design and stimuli

In designing the experimental paradigm we manipulated two key components of the cocktail party effect: separation of a particular ‘foreground’ auditory object (a spoken word) from a complex sound mixture or acoustic ‘background’; and matching of foreground object (own name) identity with a previously stored ‘template’. In order to isolate the neural processes involved in these computations, we created two closely matched auditory baseline conditions: by presenting ‘foreground’ sounds interleaved with (rather than superimposed on) the acoustic background; and by spectral rotation of participants' spoken names to generate acoustically similar but unfamiliar (and unintelligible) sound objects. Under this design, the cocktail party effect (detection of own name in a busy auditory scene) represents the interaction of processes that mediate auditory object segregation and template matching.

Stimuli were created as digital wave files and edited in MATLAB 7.0® (http://www.mathworks.co.uk); examples of stimuli are available in Supplementary Material on-line. Each participant's own first name was recorded in a sound-proof room by the same young adult female speaker using a Standard Southern English accent. Recorded name sounds were spectrally rotated using a previously described procedure that preserves spectral and temporal complexity but renders speech content unintelligible (Blesser, 1972). An acoustic ‘background’ of speech babble was created by superimposing recordings of 16 different female speakers reading passages of English from the EUROM database of English speech (Chan et al., 1995) using a previously described method (Rosen et al., 2013); no words were intelligible from the sound mixture. Babble samples were spectrally rotated in order to provide an acoustic background for the spectrally rotated name sounds that reduced any spectral ‘pop-out’ effects. The signal-to-noise ratio of names to background babble was fixed at 17 dB, corresponding to a moderately noisy (e.g., cocktail party) environment (International Telecommunication Union, 1986).
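The two stimulus-preparation steps described above (spectral rotation after Blesser, 1972, and mixing names with babble at a fixed signal-to-noise ratio) can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the MATLAB code used in the study: the 4 kHz rotation band, the brick-wall FFT filter and the helper names (`lowpass_fft`, `spectrally_rotate`, `scale_to_snr`) are assumptions, and the text does not specify over which signal segments the RMS levels entering the SNR were measured.

```python
import numpy as np

def lowpass_fft(x, fs, cutoff_hz):
    """Brick-wall low-pass filter: zero every FFT bin above cutoff_hz (a simplification)."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spectrum, n=len(x))

def spectrally_rotate(x, fs, band_hz=4000.0):
    """Invert the spectrum of x within 0..band_hz, so that frequency f maps to band_hz - f.

    The signal is band-limited, ring-modulated with a band_hz carrier (which creates
    components at band_hz - f and band_hz + f), then band-limited again so that only
    the rotated component band_hz - f survives. band_hz = 4 kHz is an assumed value.
    """
    t = np.arange(len(x)) / fs
    band_limited = lowpass_fft(x, fs, band_hz)
    modulated = 2.0 * band_limited * np.cos(2.0 * np.pi * band_hz * t)
    return lowpass_fft(modulated, fs, band_hz)

def rms(x):
    """Root-mean-square amplitude."""
    return np.sqrt(np.mean(np.square(x)))

def scale_to_snr(target, background, snr_db):
    """Rescale target so that 20 * log10(rms(target) / rms(background)) equals snr_db."""
    gain = rms(background) * 10.0 ** (snr_db / 20.0) / rms(target)
    return target * gain
```

As a quick check, a 1000 Hz tone rotated within a 4 kHz band should emerge with its energy at 3000 Hz, and `scale_to_snr(name, babble, 17.0)` would set the 17 dB ratio quoted in the text.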

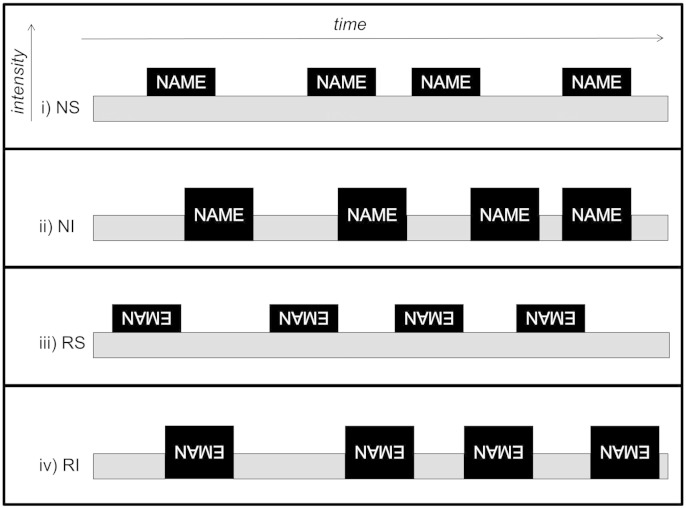

To create experimental trials, name and spectrally rotated name sounds were added to corresponding (raw or spectrally rotated) babble samples by either superimposing on or interleaving with babble; name sounds were repeated four times within a single trial and the total duration of each trial was fixed at 8 s (duration of individual name exemplars 0.6–0.9 s; experimental trials schematised in Fig. 1). Concatenated sound samples were windowed with 20 ms onset–offset temporal ramps to prevent click artefacts, and all wave files were digitally sampled at 44,100 Hz with fixed mean (root-mean-square) intensity over all trials. These procedures yielded four experimental conditions in a factorial relation: own natural name superimposed on babble, NS; own natural name interleaved with babble, NI; spectrally rotated name superimposed on (spectrally rotated) babble, RS; spectrally rotated name interleaved with (spectrally rotated) babble, RI. Twenty unique trials were created for each condition, by randomly varying the onsets of the four name sounds within the 8 s trial interval. An additional rest baseline condition comprising 8 s silent intervals was also included.
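The trial-construction step above can be sketched as follows: four copies of the name token are placed at random, non-overlapping onsets within an 8 s trial, 20 ms ramps are applied, and each trial is rescaled to a common RMS level. Several details are assumptions rather than reported facts: the raised-cosine ramp shape, modelling ‘interleaved’ as fading the babble out wherever a name token plays, the `target_rms` value of 0.05, and all helper names.

```python
import numpy as np

FS = 44_100      # sampling rate stated in the text (Hz)
TRIAL_S = 8.0    # trial duration stated in the text (s)
RAMP_S = 0.020   # onset/offset ramp duration stated in the text (s)

def ramp_window(n, fs=FS, ramp_s=RAMP_S):
    """Unit window of length n with raised-cosine onset and offset ramps (shape assumed)."""
    k = int(round(ramp_s * fs))
    win = np.ones(n)
    ramp = 0.5 * (1.0 - np.cos(np.linspace(0.0, np.pi, k)))
    win[:k] = ramp
    win[-k:] = ramp[::-1]
    return win

def random_onsets(n_tokens, token_len, total_len, rng):
    """Random onsets (in samples) for n_tokens equal-length tokens that never overlap."""
    free = total_len - n_tokens * token_len
    if free < 0:
        raise ValueError("tokens do not fit in the trial")
    # Sorted random 'slack' values plus cumulative token lengths keep tokens disjoint.
    slack = np.sort(rng.integers(0, free + 1, size=n_tokens))
    return slack + np.arange(n_tokens) * token_len

def make_trial(name, babble, mode, rng, n_tokens=4, target_rms=0.05):
    """Build one trial with the name token superimposed on or interleaved with babble."""
    total = int(TRIAL_S * FS)
    background = babble[:total] * ramp_window(total)
    token_win = ramp_window(len(name))
    token = name * token_win
    onsets = random_onsets(n_tokens, len(token), total, rng)
    foreground = np.zeros(total)
    gate = np.ones(total)  # babble gain: 1 everywhere except under name tokens
    for t0 in onsets:
        foreground[t0:t0 + len(token)] += token
        gate[t0:t0 + len(token)] -= token_win  # cross-fade babble out under each token
    if mode == "superimposed":
        trial = background + foreground
    elif mode == "interleaved":
        trial = background * gate + foreground
    else:
        raise ValueError(f"unknown mode: {mode}")
    # Fix the RMS level so that all trials have the same mean intensity.
    return trial * (target_rms / np.sqrt(np.mean(trial ** 2))), onsets
```

Drawing new onsets for each call is one way to obtain the 20 unique trials per condition described above.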

Fig. 1.

Schematic representation of fMRI stimulus conditions, showing representative trials. Dark grey boxes signify presentations of participant's own name, in either natural or spectrally rotated (inverted) form; light grey boxes represent the acoustic background (multi-talker babble). Onsets of name exemplars were varied randomly between trials; each trial was 8 s in total duration. NS, own natural name sounds superimposed on babble; NI, own natural name sounds interleaved with babble; RS, spectrally rotated name sounds superimposed on babble; RI, spectrally rotated name sounds interleaved with babble.

2.4. Experimental procedures

2.4.1. Stimulus presentation

In the fMRI session, experimental trials were presented from a notebook computer running the Cogent v1.25 extension of MATLAB (Vision Lab, University College London, UK), each triggered by the MR scanner on completion of the previous image acquisition in a ‘sparse’ acquisition protocol. Sounds were delivered binaurally via electrodynamic headphones (http://www.mr-confon.de) at a comfortable listening level (at least 70 dB) that was fixed for all participants; two identical scanning runs were administered, each comprising 20 trials for each sound condition plus 10 silence trials, yielding a total of 180 trials for the experiment. Participants were instructed to listen to the sound stimuli with their eyes open; there was no in-scanner output task and no behavioural responses were collected.
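The trial counts above (20 trials per sound condition plus 10 silence trials per run, over two runs) can be checked with a short sketch that builds each run's trial list. Randomising trial order within a run is an assumption, since the text does not state how trials were ordered; `make_run` and the seed are hypothetical.

```python
import random
from collections import Counter

CONDITIONS = ("NS", "NI", "RS", "RI")

def make_run(rng, trials_per_condition=20, n_silence=10):
    """One run: 20 trials of each sound condition plus 10 silence trials, shuffled."""
    run = [c for c in CONDITIONS for _ in range(trials_per_condition)]
    run += ["silence"] * n_silence
    rng.shuffle(run)
    return run

rng = random.Random(2015)  # hypothetical seed
runs = [make_run(rng) for _ in range(2)]
summary = Counter(runs[0])  # 20 per sound condition, 10 silence
total_trials = sum(len(r) for r in runs)  # 2 x 90 = 180
```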

2.4.2. Brain image acquisition

Brain images were acquired on a 3 Tesla TIM Trio MRI scanner (Siemens Healthcare, Erlangen, Germany) using a 12-channel RF receive head coil. For each of the two functional runs, 92 single-shot gradient-echo planar image (EPI) volumes were acquired, each with 48 oblique transverse slices covering the whole brain (slice thickness 2 mm, inter-slice gap 1 mm, in-plane resolution 3 mm, TR/TE 70/30 ms, echo spacing 0.5 ms, matrix size 64 × 64 pixels, FoV 192 × 192 mm, phase encoding (PE) direction anterior–posterior). A slice tilt of −30° (T > C), z-shim gradient moment of +0.6 mT/m ms and positive PE gradient polarity were used to minimise susceptibility-related loss of signal and blood-oxygen-level-dependent (BOLD) functional sensitivity in the temporal lobes, following optimisation procedures described previously (Weiskopf et al., 2006). Sparse-sampling EPI acquisition with repetition time 11.36 s (corresponding to an inter-scan gap of 8 s) was used to reduce any interaction between scanner acoustic noise and auditory stimulus presentations. The first two volumes in each run were acquired to allow longitudinal (T1) magnetisation to reach equilibrium and were discarded from further analysis. A B0 field map was acquired using a gradient double-echo FLASH sequence (TE1 = 10 ms, TE2 = 12.46 ms, 3 × 3 × 2 mm resolution, 1 mm gap; matrix size = 64 × 64 pixels; FoV = 192 × 192 mm) to allow post-processing correction of geometric distortions in the EPI data due to B0 field inhomogeneities.
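The sparse-acquisition timing above is internally consistent if the quoted TR of 70 ms is read as the per-slice acquisition time (our interpretation). The run duration below is an estimate that ignores any set-up time before the first volume.

```latex
\begin{aligned}
T_{\mathrm{acq}} &= 48 \times 70\ \mathrm{ms} = 3.36\ \mathrm{s} \\
\mathrm{TR} &= T_{\mathrm{acq}} + T_{\mathrm{gap}} = 3.36\ \mathrm{s} + 8\ \mathrm{s} = 11.36\ \mathrm{s} \\
T_{\mathrm{run}} &\approx 92 \times 11.36\ \mathrm{s} = 1045.12\ \mathrm{s} \approx 17.4\ \mathrm{min} \\
N_{\mathrm{analysed}} &= 92 - 2 = 90 = 4 \times 20 + 10
\end{aligned}
```

The last line shows that the 90 retained volumes per run match one volume per trial (four sound conditions with 20 trials each, plus 10 silence trials).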

Volumetric brain MR images were also obtained in each participant to allow coregistration of structural with functional neuroanatomical data. The structural acquisition was based on a multi-parameter mapping protocol (Weiskopf et al., 2011; Weiskopf and Helms, 2008), including a 3D multi-echo FLASH sequence with predominant T1 (TR 18.7 ms, flip angle 20°) weighting, six alternating gradient echoes at equidistant echo times and 1 mm isotropic voxels.

2.4.3. Behavioural assessment

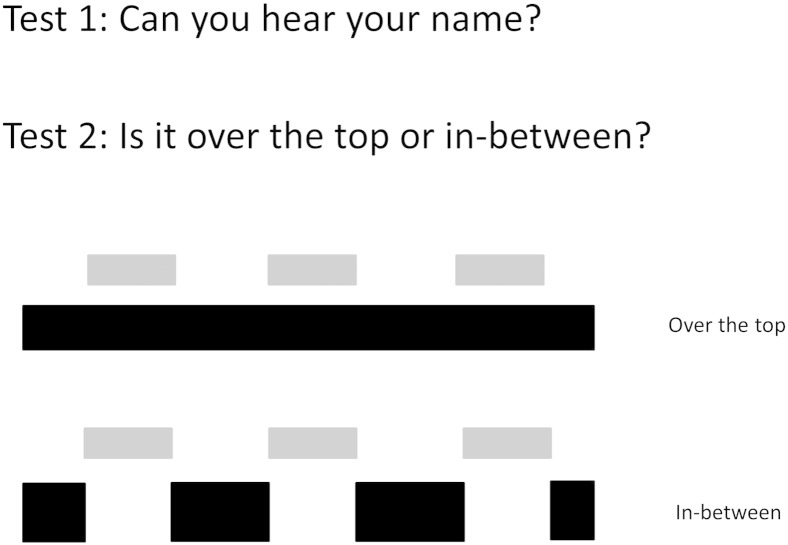

Following the scanning session, each participant's ability to perceive and discriminate the experimental conditions presented during scanning was assessed using a two-alternative forced-choice psychoacoustic procedure. Twenty auditory stimuli representing all sound conditions (five NS, five NI, five RS, five RI) were derived from trials presented in the scanner and administered in randomised order in two short tests. In the first test, the task (name detection) was to determine whether or not the participant's own name was present (discrimination of NS/NI from RS/RI conditions); in the second test, the task (segregation detection) was to determine whether the two kinds of sounds (name and babble) were superimposed or interleaved (‘Are the sounds over the top or in-between?’; discrimination of NS/RS from NI/RI conditions), assisted by a visual guide (see Fig. S1 in Supplementary Material on-line). It was established that all participants understood the tasks prior to commencing the tests; during the tests, no feedback about performance was given and no time limits were imposed. Participant responses were recorded for off-line analysis.
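The mapping from stimulus condition to the correct answer in each of the two post-scan tests described above can be written as a small lookup table. The response labels (`present`/`absent`, `over`/`between`) and the `score` helper are hypothetical.

```python
# Correct two-alternative answers per condition, following the task definitions above:
# name detection separates NS/NI from RS/RI; segregation detection separates NS/RS from NI/RI.
CORRECT = {
    "name": {"NS": "present", "NI": "present", "RS": "absent", "RI": "absent"},
    "segregation": {"NS": "over", "RS": "over", "NI": "between", "RI": "between"},
}

def score(task, trials):
    """Count correct responses; trials is a list of (condition, response) pairs."""
    key = CORRECT[task]
    return sum(1 for condition, response in trials if key[condition] == response)
```

With 20 stimuli per test, scores range from 0 to 20, as in the post-scan rows of Table 1.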

Fig. S1.

Visual guide shown to participants in post-scan behavioural testing to assess auditory segregation detection (discrimination between superimposed and interleaved sound conditions). The ‘foreground’ sound (grey) was either the participant's natural spoken name or its spectrally rotated (unintelligible) analogue; the ‘background’ sound (black) was either 16-talker babble or its spectrally rotated analogue. In this test, the task instruction on each trial was to decide whether the two kinds of sounds (‘grey’ and ‘black’) were ‘over the top’ (superimposed) or ‘in-between’ (interleaved).


2.5. Data analyses

2.5.1. fMRI data analysis

Brain imaging data were analysed using statistical parametric mapping software (SPM8; http://www.fil.ion.ucl.ac.uk/spm). In initial image pre-processing, the EPI functional series for each participant was realigned using the first image as a reference, and images were unwarped incorporating field-map distortion information (Hutton et al., 2002). The DARTEL toolbox (Ashburner, 2007) was used to spatially normalise all individual functional images to a group mean template image in Montreal Neurological Institute (MNI) standard stereotactic space. To construct this group brain template, each individual's T1-weighted MR image was first co-registered to their EPI series and segmented using the New Segment tool; the resulting tissue segments were then used to estimate a group template that was aligned to MNI space. Functional images were smoothed using a 6 mm full-width-at-half-maximum (FWHM) Gaussian smoothing kernel. For the purpose of rendering statistical parametric functional maps, a study-specific mean structural brain image template was created by warping all bias-corrected native-space whole-brain images to the final DARTEL template and averaging the warped brain images.
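Smoothing kernels are specified by their FWHM; the corresponding Gaussian standard deviation is σ = FWHM / √(8 ln 2). This minimal sketch converts the 6 mm kernel quoted above; the 1.5 mm voxel size used to express σ in voxels is a hypothetical value, since the normalised voxel size is not stated.

```python
import math

def fwhm_to_sigma(fwhm_mm):
    """Standard deviation of a Gaussian kernel with the given full width at half maximum."""
    return fwhm_mm / math.sqrt(8.0 * math.log(2.0))

sigma_mm = fwhm_to_sigma(6.0)   # 6 mm kernel stated in the text, about 2.55 mm
voxel_mm = 1.5                  # hypothetical normalised voxel size
sigma_vox = sigma_mm / voxel_mm
```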

Pre-processed functional images were entered into a first-level design matrix incorporating the five experimental conditions (NS, NI, RS, RI and the baseline silence condition) modelled as separate regressors convolved with the standard haemodynamic response function, and also including six head movement regressors generated from the realignment process. For each participant, first-level t-test contrast images were generated for the main effects of auditory stimulation [(NS + NI + RS + RI) − silence], identification of own name [(NS + NI) − (RS + RI)] and segregation of auditory foreground from background [(NS + RS) − (NI + RI)]. In the absence of a specific output task during scanning, we use ‘identification’ here to indicate specific processing of own-name identity in relation to an acoustically similar perceptual baseline. In addition, contrast images were generated for the interaction of identification and segregation processes [(NS − RS) − (NI − RI)]: we argue that this interaction captures the computational process that supports the cocktail party effect proper. Both ‘forward’ and ‘reverse’ contrasts were assessed in each case. Contrast images for each participant were entered into a second-level random-effects analysis in which effects within each experimental group and between the healthy control and AD groups were assessed using voxel-wise t-test contrasts.
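The first-level contrasts above can be written as weight vectors over the five condition regressors. This sketch assumes the column order NS, NI, RS, RI, silence; the balanced weights for the auditory-stimulation contrast (0.25 per sound condition, −1 for silence) are one common convention, since the text gives only the symbolic form. The ‘reverse’ contrast in each case is simply the negated vector.

```python
import numpy as np

# Assumed column order of the condition regressors in the first-level design.
COLUMNS = ("NS", "NI", "RS", "RI", "silence")

CONTRASTS = {
    "auditory":       np.array([0.25, 0.25, 0.25, 0.25, -1.0]),  # (NS + NI + RS + RI) - silence
    "identification": np.array([1, 1, -1, -1, 0]),               # (NS + NI) - (RS + RI)
    "segregation":    np.array([1, -1, 1, -1, 0]),               # (NS + RS) - (NI + RI)
    "interaction":    np.array([1, -1, -1, 1, 0]),               # (NS - RS) - (NI - RI)
}

def pad_for_nuisance(c, n_extra=6):
    """Append zero weights for the six head-movement regressors in the design matrix."""
    return np.concatenate([c, np.zeros(n_extra)])

def reverse(c):
    """'Reverse' contrast, e.g. (NI + RI) - (NS + RS) for segregation."""
    return -c
```

Because the identification, segregation and interaction vectors are mutually orthogonal, the interaction (cocktail party) term is estimated independently of the two main effects. For example, hypothetical betas of 3, 1, 1, 1 for NS, NI, RS and RI give an interaction value of 2, reflecting a name advantage that is present only when names are superimposed on babble.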

Contrasts were assessed at peak voxel statistical significance threshold p < 0.05 after family-wise error (FWE) correction for multiple voxel-wise comparisons in two anatomical small volumes of interest, specified by our prior hypotheses (Dykstra et al., 2011; Goll et al., 2012; Overath et al., 2010; Scott et al., 2000, 2009; Wong et al., 2009). These regional volumes were created using MRICron® (http://www.mccauslandcenter.sc.edu/mricro/mricron/) and comprised temporo-parietal junction (including superior temporal and adjacent inferior parietal cortex posterior to Heschl's gyrus and supramarginal gyrus; the putative substrate for auditory scene analysis) and superior temporal gyrus anterior and lateral to Heschl's gyrus (the putative substrate for name identity coding). For the purpose of assessing overall auditory stimulation, a combined regional volume with addition of Heschl's gyrus was used for the contrast [(NS + NI + RS + RI) − silence].

2.5.2. Voxel-based morphometry of structural MR images

Structural brain images were compared between the patient and healthy control groups in a voxel-based morphometric (VBM) analysis to obtain an AD-associated regional atrophy map: normalisation, segmentation and modulation of grey and white matter images were performed using default parameter settings in SPM8, with a Gaussian smoothing kernel of 6 mm full-width-at-half-maximum. Groups were compared using voxel-wise two-sample t-tests, including covariates of age, gender, and total intracranial volume. Statistical parametric maps of brain atrophy were thresholded leniently (p < 0.01 uncorrected over the whole brain volume) in order to capture any significant grey matter structural changes in relation to functional activation profiles from the fMRI analysis.
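At each voxel, a two-sample comparison with covariates reduces to an ordinary least-squares general linear model, with a t statistic for a contrast c of the fitted coefficients. The sketch below shows that computation for a single voxel; `glm_t` is a hypothetical helper, and it is not presented as SPM's implementation.

```python
import numpy as np

def glm_t(y, X, c):
    """t statistic and residual degrees of freedom for contrast c in y = X @ beta + error."""
    beta, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = len(y) - rank
    sigma2 = resid @ resid / dof                  # residual variance estimate
    var_c = sigma2 * c @ np.linalg.pinv(X.T @ X) @ c
    return (c @ beta) / np.sqrt(var_c), dof
```

For the VBM comparison described above, X would contain an intercept, a group indicator, age, gender and total intracranial volume, with c selecting the group column (negated to test for lower grey matter in AD than in controls). Without covariates, the same computation reproduces the pooled-variance two-sample t-test.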

2.5.3. Demographic and behavioural data analyses

Demographic data were compared between the healthy control and AD groups using two-sample t-tests (gender distributions were compared using Pearson's chi-square test); neuropsychological data were compared using non-parametric Wilcoxon rank-sum tests. Tone detection thresholds on audiometry screening and performance on post-scan behavioural tasks on experimental stimuli were analysed using linear regression models with cluster-robust standard errors, because the model residuals were not normally distributed. In the audiometry analysis, the main effect of patient group was assessed while controlling for age and frequency, and the interaction between group and frequency was also assessed.
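Cluster-robust (‘sandwich’) standard errors allow repeated measurements from the same participant to be correlated. The NumPy sketch below computes them for an ordinary least-squares fit; the small-sample factor G/(G − 1) × (N − 1)/(N − K) is a common convention (for example, Stata's `regress` with `vce(cluster)`), and both it and the helper name are assumptions, as the text does not name the software or correction used.

```python
import numpy as np

def ols_cluster_robust(y, X, clusters):
    """OLS coefficients with cluster-robust (sandwich) standard errors."""
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float)
    clusters = np.asarray(clusters)
    n, k = X.shape
    bread = np.linalg.inv(X.T @ X)
    beta = bread @ X.T @ y
    resid = y - X @ beta
    groups = np.unique(clusters)
    meat = np.zeros((k, k))
    for g in groups:
        idx = clusters == g
        score = X[idx].T @ resid[idx]   # summed score contribution of one cluster
        meat += np.outer(score, score)
    n_groups = len(groups)
    factor = n_groups / (n_groups - 1) * (n - 1) / (n - k)  # finite-sample correction
    cov = factor * bread @ meat @ bread
    return beta, np.sqrt(np.diag(cov))
```

In a design like the audiometry analysis, each row would be one participant-by-frequency measurement and `clusters` would hold participant identifiers (an assumption about the clustering variable).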

In the analysis of post-scan behavioural data, a ‘cocktail party effect’ measure was generated as the d-prime of name detection in the superimposed and interleaved conditions; the main effect of group and any interactions between test type and group were assessed for all test measures (name detection score/segregation detection score/cocktail party d-prime). In the AD group, correlations between individual post-scan test performance measures and peak effect sizes (beta estimates) for fMRI contrasts of interest were assessed using linear regression: name detection performance was correlated with peak activation in the name identification contrast; segregation detection performance with the segregation contrast; and d-prime with the cocktail party effect contrast.

For all tests, the threshold for statistical significance was p < 0.05; Wald tests were used to assess the significance of interaction effects.

3. Results

3.1. General characteristics of experimental groups

The patient and healthy control groups did not differ significantly in age (t(28) = 1.51, p = 0.14), gender distribution (χ2(1) = 0.62, p = 0.43) or years of musical training (t(27) = 1.60, p = 0.12); the healthy control group had on average significantly more years of education (t(28) = 2.08, p = 0.048), though participants in both groups overall were relatively highly educated (see Table 1). Tone detection thresholds on audiometry testing revealed that group membership did not have a significant effect on detection time in ms (beta = 3420, CI −673 to 7514, p = 0.10). There was a significant interaction between group and frequency [F(4,30) = 3.14, p = 0.03] driven by the effect of frequency type within group rather than any differences between groups.
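Several of the group comparisons above can be approximately reproduced from the summary values in Table 1. This sketch recomputes the pooled-variance t statistics for age and education and the uncorrected Pearson chi-square for gender from the m:f counts; small discrepancies from the reported values (for example, 2.11 versus 2.08 for education) are expected because the table means and standard deviations are rounded. The helper names are hypothetical.

```python
import math

def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's two-sample t (pooled variance) and its df, from summary statistics."""
    sp2 = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (m1 - m2) / math.sqrt(sp2 * (1 / n1 + 1 / n2)), n1 + n2 - 2

def chi2_2x2(a, b, c, d):
    """Pearson chi-square without continuity correction for [[a, b], [c, d]], with df = 1 p value."""
    n = a + b + c + d
    rows, cols = (a + b, c + d), (a + c, b + d)
    observed = ((a, b), (c, d))
    chi2 = sum(
        (observed[i][j] - rows[i] * cols[j] / n) ** 2 / (rows[i] * cols[j] / n)
        for i in range(2) for j in range(2)
    )
    # Survival function of chi-square with 1 df: P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return chi2, math.erfc(math.sqrt(chi2 / 2))

t_age, df_age = pooled_t(68.3, 3.9, 17, 65.7, 5.6, 13)   # about 1.50, df = 28
t_edu, _ = pooled_t(15.8, 3.0, 17, 13.4, 3.2, 13)        # about 2.11
chi2_sex, p_sex = chi2_2x2(8, 9, 8, 5)                   # about 0.62, p about 0.43
```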

3.2. Post-scan behavioural data

Group performance data for the post-scan behavioural tests are presented in Table 1. There was a significant main effect of test type (name detection/segregation detection: beta = −2.82, CI −4.24 to −1.41, p < 0.001) and a trend towards a main effect of group (beta = −0.88, CI −1.77 to 0.003, p = 0.051). There was a significant interaction between group and test type (F(1,29) = 9.29, p = 0.005), driven by poorer performance of the AD group than the healthy control group on the auditory segregation detection task (t = 3.61, p = 0.001). Wald tests also revealed significantly better performance on name detection than on segregation detection in both healthy individuals (t = 4.09, p < 0.001) and patients (t = 6.11, p < 0.001). There was no significant interaction between group and ‘cocktail party’ d-prime (F(1,29) = 2.75, p = 0.11).

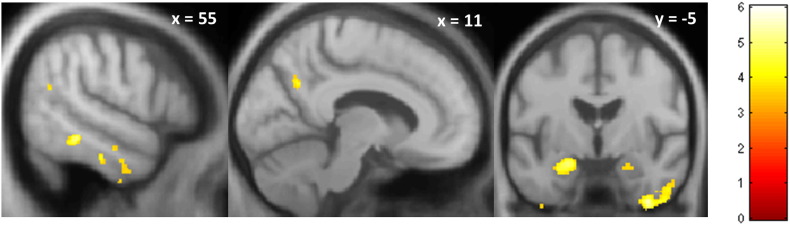

3.3. Structural neuroanatomical data

Comparison of the AD and healthy control groups in the VBM analysis revealed the anticipated profile of AD-associated regional grey matter atrophy involving hippocampi, temporal and retrosplenial cortices; statistical parametric maps are presented in Fig. S2 and significant regions of AD-associated grey matter atrophy are summarised in Table S1 in Supplementary Material on-line.

Fig. S2.

Statistical parametric maps of regional grey matter atrophy in the Alzheimer's disease group compared to the healthy control group based on a voxel-based morphometry analysis of structural brain MR images. Maps are presented on a group mean T1-weighted MR image in MNI space, thresholded leniently at p < 0.01 uncorrected for multiple comparisons over whole brain. The colour side bar codes voxel-wise t-values of grey matter change. Planes of representative sections are indicated using the corresponding MNI coordinates.

Table S1.

Regions of significant regional grey matter atrophy in the Alzheimer's disease group compared with the healthy control group in the VBM analysis. Associations shown were significant at threshold p < 0.01 uncorrected for multiple comparisons over the whole brain; all significant clusters >50 voxels are shown and peak (local maximum) coordinates are in MNI space. ITG, inferior temporal gyrus; MTG, middle temporal gyrus; PCC, posterior cingulate cortex.

| Region | Side | Cluster (voxels) | Peak x (mm) | Peak y (mm) | Peak z (mm) | t-value |
|---|---|---|---|---|---|---|
| Posterior MTG | R | 2187 | 60 | −36 | −15 | 6.03 |
| MTG | L | 566 | −65 | −21 | −23 | 4.10 |
| Hippocampus | L | 395 | −23 | −4 | −20 | 4.06 |
| ITG | L | 276 | −47 | −33 | −24 | 4.81 |
| Posterior ITG | L | 574 | −57 | −34 | −20 | 4.70 |
| PCC | R | 54 | 11 | −60 | 33 | 4.51 |
| Posterior ITG | L | 57 | −48 | −61 | −15 | 4.15 |


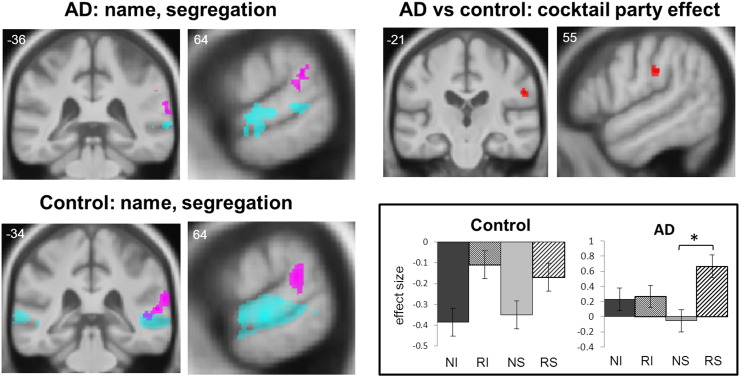

3.4. Functional neuroanatomical data

Significant neuroanatomical findings from the fMRI analysis are summarised in Table 2; statistical parametric maps and beta parameter estimates for key contrasts and conditions are presented in Fig. 2. All reported contrasts were significant at threshold p < 0.05 FWE, corrected for multiple voxel-wise comparisons within anatomical regions of interest specified by our prior experimental hypotheses. Auditory stimulation (the contrast of all sound conditions versus silence) was associated, as anticipated, with extensive bilateral activation involving the superior temporal gyri in both the AD and healthy control groups; no significant differences between groups were identified and there was no significant activation associated with the ‘reverse’ contrast. Identification of own name compared with spectrally rotated analogues produced extensive bilateral activation of superior temporal gyrus and superior temporal sulcus in both the AD and the healthy control groups; again, no significant differences between groups were identified and there were no significant areas of activation for the ‘reverse’ contrast. In the contrast assessing auditory object segregation processing, right planum temporale and posterior superior temporal gyrus were more activated in the interleaved than in the superimposed sound conditions (i.e., in the ‘reverse’ contrast [(NI + RI) − (NS + RS)]) in both the AD and the healthy control groups. Healthy individuals showed additional activation in an inferior parietal junctional area (supramarginal gyrus); however, there were no significant differences between participant groups, nor any significant activations associated with the ‘forward’ contrast. The contrast assessing the interaction of own name identification with auditory segregation processing (the cocktail party effect) produced no significant activations in the healthy control group but significant activation of right supramarginal gyrus in the AD group, and this right supramarginal gyrus activation was significantly greater in the AD group than in the healthy control group.
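The three contrasts above form a 2 × 2 factorial decomposition over the four sound conditions: name (natural vs spectrally rotated) crossed with presentation (interleaved vs superimposed), with condition labels NI, NS, RI and RS as defined in the Table 2 notes. The sketch below writes each contrast as a weight vector over the condition order (NI, NS, RI, RS), an ordering chosen here for illustration. It checks that the cocktail party (interaction) contrast is the element-wise product of the two main-effect contrasts and that all three are mutually orthogonal. This is a worked check of the contrast algebra only, not the study's SPM design matrix, which also models the silence baseline. The beta values are hypothetical and are not data from this study.

```python
# Condition order chosen for illustration: NI, NS, RI, RS
CONDITIONS = ("NI", "NS", "RI", "RS")

# Contrast weights as defined in the Table 2 notes
name_identification = (1, 1, -1, -1)  # (NS + NI) - (RS + RI)
segregation = (1, -1, 1, -1)          # (NI + RI) - (NS + RS); the 'forward' contrast is its negation
cocktail_party = (1, -1, -1, 1)       # (NI - RI) - (NS - RS)


def dot(u, v):
    """Inner product of two equal-length weight vectors."""
    return sum(a * b for a, b in zip(u, v))


def apply_contrast(weights, betas):
    """Contrast estimate: weighted sum of per-condition beta estimates."""
    return dot(weights, [betas[c] for c in CONDITIONS])


# The interaction is the element-wise product of the two main effects
assert cocktail_party == tuple(a * b for a, b in zip(name_identification, segregation))

# Each contrast sums to zero, and the three are mutually orthogonal
for weights in (name_identification, segregation, cocktail_party):
    assert sum(weights) == 0
assert dot(name_identification, segregation) == 0
assert dot(name_identification, cocktail_party) == 0
assert dot(segregation, cocktail_party) == 0

# Hypothetical beta estimates (arbitrary units), not data from this study
betas = {"NI": 1.0, "NS": 0.5, "RI": 1.0, "RS": 1.5}
print(apply_contrast(cocktail_party, betas))
```

Because the contrasts are orthogonal, each isolates a separate source of variance across the four conditions. In these hypothetical betas, a high RS value alone is enough to yield a positive interaction estimate.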

Table 2.

Summary of fMRI data for experimental contrasts of interest in participant groups.

| Group | Contrast | Region | Side | Cluster (voxels) | Peak x (mm) | Peak y (mm) | Peak z (mm) | t-value | p-value |
|---|---|---|---|---|---|---|---|---|---|
| Healthy controls | Sound versus silence | HG | L | 4344 | −44 | −21 | 4 | 12.10 | <0.001 |
| | | Mid STG | R | 4635 | 60 | −12 | −2 | 11.56 | <0.001 |
| | Name identificationᵃ | Mid STG/STS | L | 1788 | −56 | −13 | −2 | 10.31 | <0.001 |
| | | | R | 1989 | 66 | −16 | −5 | 11.38 | <0.001 |
| | | Post STG | L | 219 | −62 | −24 | 1 | 8.22 | 0.001 |
| | | | R | 35 | 65 | −18 | 6 | 5.46 | 0.039 |
| | Segregation processingᵇ | PT/SMG | R | 172 | 65 | −36 | 19 | 5.68 | 0.028 |
| AD patients | Sound versus silence | Mid STG | L | 3639 | −56 | −21 | 3 | 23.14 | <0.001 |
| | | Post STG | R | 3990 | 54 | −22 | 10 | 11.79 | <0.001 |
| | Name identificationᵃ | Ant STG/STS | L | 652 | −59 | 0 | −15 | 8.34 | 0.003 |
| | | | R | 1073 | 62 | −1 | −6 | 8.34 | 0.003 |
| | Segregation processingᵇ | Post STG/PT | R | 67 | 65 | −37 | 24 | 6.48 | 0.047 |
| | Cocktail party effectᶜ | SMG | R | 39 | 55 | −22 | 28 | 6.47 | 0.048 |
| Patients > controls | Cocktail party effectᶜ | SMG | R | 57 | 55 | −21 | 28 | 6.06 | 0.002 |

Statistical parametric data summarising regional brain activations for contrasts between experimental conditions of interest, in each participant group and between groups. All contrasts shown are thresholded at p < 0.05 FWE after correction for multiple comparisons within pre-specified anatomical small volumes.

No significant activations were identified for the ‘forward’ segregation contrast [(NS + RS) − (NI + RI)] in either participant group, for the cocktail party contrast in the healthy control group, or for auditory stimulation, name identification or segregation processing between groups. AD, Alzheimer's disease; Ant, anterior; HG, Heschl's gyrus; NI, own natural name interleaved with babble; NS, own natural name superimposed on babble; Post, posterior; PT, planum temporale; RI, spectrally rotated name interleaved with babble; RS, spectrally rotated name superimposed on babble; SMG, supramarginal gyrus; STG, superior temporal gyrus; STS, superior temporal sulcus.

ᵃ Contrast [(NS + NI) − (RS + RI)].

ᵇ Contrast [(NI + RI) − (NS + RS)].

ᶜ Contrast [(NI − RI) − (NS − RS)].

Fig. 2.

Statistical parametric maps (panels top row, bottom left) of regional brain activation for contrasts of interest in the Alzheimer's disease (AD) and healthy control groups and the between-group ‘cocktail party’ interaction; effect sizes (group mean ±1 standard error peak voxel beta parameter estimates) for each experimental condition at the right supramarginal gyrus peak from the cocktail party contrast are also shown (panel bottom right; * indicates significant difference in effect size between conditions, p < 0.01). Statistical parametric maps are rendered on coronal and sagittal sections of the study-specific group mean T1-weighted structural MR image in MNI space; the coordinate of each section plane is indicated and the right hemisphere is shown on the right in all coronal sections. Maps have been thresholded at p < 0.001 uncorrected over whole brain for display purposes; activations shown were significant at p < 0.05 after family-wise error correction for multiple comparisons over anatomical small volume of interest (see also Table 2). Contrasts were composed as follows: name identification (cyan), [(NS + NI) − (RS + RI)]; auditory object segregation processing (magenta), [(NI + RI) − (NS + RS)]; cocktail party effect (red), [(NI − RI) − (NS − RS)] where NI is own natural name interleaved with babble, NS own natural name superimposed on babble, RI spectrally rotated name interleaved with babble, RS spectrally rotated name superimposed on babble.

To further investigate this disease-associated modulation of cocktail party processing in supramarginal gyrus, we conducted an exploratory post hoc analysis of condition effects for both the AD and healthy control groups. Beta parameter estimates in each sound condition relative to the baseline silence condition were compared using pair-wise t-tests (Bonferroni corrected, significance threshold p < 0.05) at the peak voxel of activation for the cocktail party contrast. In the AD group, activation in the RS condition was significantly greater than both the NS condition (t(12) = 3.01, p = 0.03) and the RS condition in the healthy control group (t(28) = 3.47, p = 0.02); there were no other significant sound condition differences within or between groups.
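The degrees of freedom reported above fit two test types. The within-group comparison looks like a paired test in the AD group (13 − 1 = 12). The between-group comparison looks like a two-sample Student's t-test with pooled variance (13 + 17 − 2 = 28). The sketch below computes these two t statistics from per-participant peak-voxel beta estimates and applies a Bonferroni adjustment to a list of p-values. That these exact test variants were used is an assumption inferred from the degrees of freedom. The helper names are hypothetical. The sketch uses only the Python standard library, so it returns t and df but not p-values, which would need a t-distribution CDF (for example from SciPy).

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired t statistic and df, e.g. RS vs NS betas within one group."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / math.sqrt(n)), n - 1


def pooled_t(x, y):
    """Student's two-sample t (pooled variance) and df, e.g. RS betas in patients vs controls."""
    n1, n2 = len(x), len(y)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * stdev(x) ** 2 + (n2 - 1) * stdev(y) ** 2) / df
    t = (mean(x) - mean(y)) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df


def bonferroni(p_values):
    """Bonferroni-adjusted p-values: multiply by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

With the study's group sizes, `paired_t` on 13 patients gives df = 12, and `pooled_t` on 13 patients and 17 controls gives df = 28. Both match the degrees of freedom reported above.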

The correlation analysis of peak-voxel beta contrast estimates and post-scan behavioural performance in the AD group revealed no significant relation for name identification (left anterior superior temporal gyrus r = −0.23, p = 0.45; right anterior superior temporal gyrus r = 0.22, p = 0.48) but a near-significant trend for segregation processing (right posterior superior temporal gyrus r = −0.56, p = 0.06). Beta estimates for the cocktail party contrast were significantly correlated with ‘cocktail party’ d-prime (r = −0.66, p = 0.01).
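Each correlation above pairs one peak-voxel beta contrast estimate per patient with one behavioural score per patient. The sketch below computes Pearson's r and the t statistic used to test it against zero, t = r√(n − 2)/√(1 − r²) on n − 2 degrees of freedom. The use of Pearson's (rather than a rank-based) correlation is an assumption. The helper names are hypothetical, and no data from this study are included.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two equal-length samples with n >= 3")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing whether r differs from zero; requires |r| < 1."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)
```

For illustration, r = −0.66 with a hypothetical n of 13 gives t ≈ −2.91 on 11 degrees of freedom. The p-value would then come from a t-distribution CDF.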

4. Discussion

Here we have shown that the functional neuroanatomy of auditory scene analysis is altered in AD compared to healthy older individuals. This alteration was localised to inferior parietal cortex, a brain region previously implicated as playing a key part both in auditory scene analysis in the healthy brain (Dykstra et al., 2011; Kondo and Kashino, 2009; Kong et al., 2014; Linden et al., 1999) and in the pathogenesis of AD (Seeley et al., 2009; Warren et al., 2012). Our findings build on the growing body of evidence for specific and significant impairments of central auditory function in AD (Gates et al., 1996, 2008, 2011; Golden et al., 2015; Goll et al., 2011, 2012; Golob et al., 2007, 2009; Kurylo et al., 1993; Strouse et al., 1995). The findings show that processes of auditory scene analysis can delineate functional as well as structural neural network alterations in AD based on a relatively naturalistic stimulus that simulates the kind of listening conditions in which these patients commonly report difficulties in daily life. The data further suggest that AD may have a specific computational signature arising from an interaction of cognitive operations that mediate the ‘cocktail party effect’.

The activation profiles of name identification were similar in both the healthy control and AD groups and in accord with previous evidence showing that processing of intelligible speech signals engages distributed superior temporal cortical areas extending beyond auditory cortex (Davis et al., 2011; Meyer et al., 2005; Obleser et al., 2008; Scott et al., 2000). Inclusion of conditions in which the name was presented over background babble aligns the present work with previous studies of masked speech processing, which has been shown to engage bihemispheric mechanisms that analyse dynamic spectrotemporal as well as lexical properties of this complex acoustic signal (Scott and McGettigan, 2013). Both patients and healthy individuals were able reliably to discriminate their own names from spectrally rotated versions in post-scan behavioural testing, suggesting that the activation produced by this contrast here indexed name identification per se as well as more generic spectrotemporal template matching and object analysis processes (Billig et al., 2013; Davis et al., 2011; Griffiths and Warren, 2002). It should be noted that the name identification contrast here spanned a change in the spectrotemporal composition of the acoustic background (natural versus spectrally rotated babble) as well as the foreground name sounds: while the use of a spectrally rotated background was intended to reduce spectral ‘pop-out’ of rotated name sounds, future work might dissect the effects of spectral rotation per se from template-matching processes using alternative speech degradation procedures and different auditory target objects.

Auditory object segregation processing was associated with activation of more posterior superior temporal and inferior parietal cortex in both the healthy control and AD groups: again, this broadly corroborates previous work in the healthy brain (Dykstra et al., 2011; Gutschalk et al., 2007; Hill and Miller, 2010; Kondo and Kashino, 2009; Kong et al., 2014; Linden et al., 1999; Overath et al., 2010; Wilson et al., 2007; Wong et al., 2009). While the direction of this effect here might seem somewhat counter-intuitive (on the basis that segregation of superimposed sounds should require ‘more’ computational processing than resolved interleaved sounds; Deike et al., 2004, 2010; Gutschalk et al., 2007; Nakai et al., 2005; Wilson et al., 2007), it is consistent with certain previous observations (Hwang et al., 2006; Mustovic et al., 2003; Scott and McGettigan, 2013; Voisin et al., 2006). Speech in noise has been associated with reduced activation of posterior superior temporal cortex compared with clear speech (Hwang et al., 2006): this might reflect reduced intelligibility of the superimposed speech conditions (Scott and McGettigan, 2013) or (more plausibly, in the present case) enhanced engagement of the putative cortical template matching algorithm by intermittent ‘glimpses’ of the salient name sounds (Griffiths and Warren, 2002). Such ‘glimpses’ may have facilitated neural template matching by establishing expectancies over the course of a trial, a process that would be more efficient if name sounds were presented clearly (interleaved) rather than superimposed on background noise. Posterior temporal and temporo-parietal cortex may be particularly sensitive to expectancies of this kind in sound scenes (Mustovic et al., 2003; Voisin et al., 2006).
Although this study was not designed to assess lateralised cerebral processing mechanisms explicitly and apparent laterality effects should therefore be interpreted with caution, it is of interest that auditory segregation processing produced peak activation in the right hemisphere in both the healthy control and AD groups here. The trend-level correlation with behavioural performance in our AD group further suggests that activity in this region may be required for successful auditory object segregation. Taken together, these findings are consistent with previous evidence that right (non-dominant) temporo-parietal cortex may play a critical role in auditory spatial analysis (Arnott et al., 2004; Krumbholz et al., 2005; Zimmer et al., 2003). This role may be modulated by stimulus characteristics, such as the use of spectrally rotated speech here (Scott et al., 2004).

Arguably more surprising was the lack of significant neuroanatomical differences between the present AD and healthy control groups for the main effect of auditory segregation processing, particularly given that (as anticipated) the AD group showed clearly reduced ability to discriminate superimposed from interleaved sound conditions in the post-scan behavioural test. This may at least in part reflect limited power to detect effects: functional neuroanatomical differences might emerge with larger patient cohorts. However, stimulus and task factors may also be relevant. In this initial study, we set out to use a paradigm simulating relatively realistic, everyday listening conditions that expose difficulties in patients with AD relative to healthy older people. The use of a babble background is likely to have entailed elements of both energetic and informational masking of superimposed speech sounds (Scott and McGettigan, 2013): it may be that cortical computations associated with disambiguating particular maskers are differentially vulnerable in AD (and of course, in a ‘real’ cocktail party scenario the relative proportion of energetic and informational masking effects is likely to vary unpredictably). Furthermore, it is known that masker level has complex effects on brain activation profiles during auditory scene analysis, particularly in the ageing brain (Scott and McGettigan, 2013; Wong et al., 2009): use of more demanding, reduced signal-to-noise ratios might amplify any functional neuroanatomical alterations associated with AD. Moreover, as our interest here was in perceptual processing mechanisms independent of task strategy or difficulty effects, our paradigm did not employ an output task: an active segregation task requirement (as in the post-scan behavioural test here) might well reveal an AD-associated functional anatomical signature.

The interaction of template matching and object segregation in inferior parietal cortex during auditory scene analysis (the cocktail party effect) emerged as the key processing signature differentiating AD from the healthy older brain in this study. This is in line with evidence from previous work that this core computation is particularly vulnerable to cortical network dysfunction in AD (Goll et al., 2012; Warren et al., 2012). The anatomical locus of the effect in supramarginal gyrus further corroborates previous work implicating this area both in auditory scene analysis in the healthy brain and in the network pathophysiology of AD. In the healthy auditory brain, supramarginal gyrus has been linked to auditory target detection, spatial attention and streaming (Dykstra et al., 2011; Kondo and Kashino, 2009; Kong et al., 2014; Linden et al., 1999; Nakai et al., 2005; Scott and McGettigan, 2013), suggesting this region is involved in preparation of orienting and other behavioural responses to the auditory environment (Hickok and Poeppel, 2007; Warren et al., 2005). In AD, dysfunction is well documented in the temporo-parietal junction, a critical hub of the so-called ‘default mode network’ (Buckner et al., 2008; Greicius and Menon, 2004; Raichle et al., 2001; Seeley et al., 2009; Warren et al., 2012). Deconstruction of the complex ‘cocktail party’ interaction here (Fig. 2) revealed that this effect in supramarginal gyrus arose from increased differential activation in the AD group for processing spectrally rotated name versus own natural name sounds superimposed on the acoustic background: activation in the spectrally rotated superimposed condition was enhanced in the AD group compared with healthy controls. Together these profiles suggest that AD may lead to abnormally enhanced activation (or failed deactivation) of inferior parietal cortex during analysis of the incoming sound stream.
Dynamic activity shifts in inferior parietal components of the default mode network may normally act to maximise processing efficiency; such shifts might maintain sensitivity to aberrant sensory stimuli that are more difficult to match against stored templates (Chiang et al., 2013; Newman and Twieg, 2001), whereas this sensitivity may be blunted in AD. Modulation of inferior parietal cortex activity could facilitate overall network responsivity to salient auditory and other environmental events, consistent with the proposed ‘sentinel’ function of the default mode network in the healthy brain and its blighting in AD (Buckner et al., 2008; Gilbert et al., 2007).

The present paradigm employed a highly salient, self-referential stimulus (own name): the default mode network including inferior parietal cortex is likely to play a fundamental role in integrating inward representations of self with the world at large, and this process may be disrupted in AD (Molnar-Szakacs and Uddin, 2013). Hearing one's own name may therefore constitute a particularly potent probe of the default mode network and evolving network dysfunction during the development of AD. The key disease interaction here is unlikely to be simply a manifestation of the regional brain atrophy that accompanies AD. With the caveat that structural and functional neuroimaging modalities are generally difficult to compare directly, the location of the functional alteration in supramarginal gyrus lies beyond the zone of significant grey matter atrophy identified in a leniently thresholded VBM analysis on the same participant groups (see Fig. S2). It is well established that regional brain dysfunction in AD occurs early in the disease course and may lead to structural brain damage (Herholz et al., 2002; Scahill et al., 2002): while it is of course unlikely that inferior parietal cortex in the AD group here was structurally entirely normal, the functional and structural profiles together suggest that volume loss alone did not entirely account for the AD-associated functional alteration observed. The direction and selectivity of the functional effect here also speak to this issue: patients with AD showed abnormally enhanced regional cortical activation under particular auditory conditions relative to healthy individuals, rather than the uniformly attenuated activation one might anticipate were this wholly dependent on regional grey matter volume.
Detection of such aberrant activity increases is an important motivation for employing functional alongside structural neuroimaging techniques in the characterisation of AD and other neurodegenerative diseases (Warren et al., 2012).

The correlation of inferior parietal activity with a behavioural measure of successful cocktail party processing in our AD patients suggests that enhanced activation of this region may help maintain some compensatory function in AD, albeit at the expense of processing inefficiency. However, the present paradigm does not resolve the nature of any relation between activation profiles and behavioural output, since this can only be directly assessed using in-scanner behavioural tasks. The disambiguation of compensatory from aberrantly increased cerebral activity is a key issue in the interpretation of functional neuroimaging changes in neurodegenerative disease (Elman et al., 2014) and a clear priority for future work. Our focus here was to assess AD effects on computational brain mechanisms that might be regarded as obligatory, prior to any modulatory effect from task demands. Ultimately, however, direct assessment of task effects on brain activation profiles will be required both to delineate the network pathophysiology of AD and to evaluate the potential of fMRI as a disease biomarker.

This study has several limitations that suggest directions for further work. Case numbers here were relatively small; in future, it will be important to study larger patient cohorts representing a broader phenotypic spectrum of AD. This is particularly relevant to the delineation of functional profiles that may distinguish typical amnestic AD from major variant syndromes (notably posterior cortical atrophy, which is associated with disproportionately prominent impairment of spatial analysis; Warren et al., 2012) and that may separate AD from other neurodegenerative diseases. Related to this, the AD group here was relatively young: while this will have tended to minimise confounding effects from vascular and other comorbidities, and therefore to yield a purer index of functional alterations associated with AD pathology, future work should extend recruitment to include older individuals who represent the major burden of AD in the wider community. Indeed, the brain mechanisms that support auditory scene analysis even in the healthy ageing brain need to be more completely defined. The present auditory paradigm raises unresolved issues that should be investigated in more detail: these include perceptual difficulty effects on the processing of sound conditions within healthy control and patient cohorts; target, masking stimulus, and signal-to-noise effects; and the impact of explicit task requirements. The clinical relevance of functional alterations will ultimately only be established by studying patients at different disease stages and by correlating brain signatures with daily life symptoms, for which more serviceable indices of impaired auditory scene analysis are ideally also required. From a neuroanatomical perspective, in this study we have adopted a directed, region-of-interest approach to assess the neural substrates of auditory scene analysis, informed by the study of the healthy younger brain.
Larger cohorts would provide greater power to delineate neuroanatomical correlates beyond these canonical regions, both in the healthy ageing brain and in AD; this may in turn require multi-centre studies to assess the generalisability of findings. In addition, regional functional alterations occur within distributed brain networks and will only be fully defined using connectivity-based techniques, an issue of special pertinence to neurodegenerative diseases underpinned by large-scale neural network disintegration (Seeley et al., 2009; Warren et al., 2012). Acknowledging these various limitations, the present study suggests that auditory scene analysis may constitute a useful paradigm for identifying novel computational signatures of AD and provides a rationale for further systematic investigation with coordinated behavioural and neuroanatomical approaches.

Acknowledgments

We are grateful to all patients and healthy participants for their involvement. We thank the radiographers of the Wellcome Trust Centre for Neuroimaging for assistance with scan acquisition, Stephen Nevard for assistance with sound recordings, Dr Stuart Rosen for assistance with stimuli preparation, Dr Nikolas Weiskopf for assistance with scanning protocols, and Dr Gill Livingston and Dr Alberto Cifelli for referring research patients. The Dementia Research Centre is supported by Alzheimer's Research UK, the Brain Research Trust and the Wolfson Foundation. This work was funded by the UK Medical Research Council (G0801306) and the NIHR Queen Square Dementia Biomedical Research Unit (CBRC 161). HLG holds an Alzheimer's Research UK PhD Fellowship (ART-PhD2011-10). SJC is supported by an Alzheimer's Research UK Senior Research Fellowship and an ESRC/NIHR grant (ES/K006711/1). JDW holds a Wellcome Trust Senior Clinical Fellowship (grant no. 091673/Z/10/Z).

Footnotes

Supplementary data to this article can be found online at http://dx.doi.org/10.1016/j.nicl.2015.02.019.

Appendix A. Supplementary data

Exemplar stimulus: NI, own natural name sound interleaved with babble.

Exemplar stimulus: NS, own natural name sound superimposed on babble.

Exemplar stimulus: RI, spectrally rotated name sound interleaved with (spectrally rotated) babble.

Exemplar stimulus: RS, spectrally rotated name sound superimposed on (spectrally rotated) babble.

References

- Arnott S.R., Binns M.A., Grady C.L., Alain C. Assessing the auditory dual-pathway model in humans. Neuroimage. 2004;22(1):401–408. doi: 10.1016/j.neuroimage.2004.01.014. PMID: 15110033.

- Ashburner J. A fast diffeomorphic image registration algorithm. Neuroimage. 2007;38(1):95–113. doi: 10.1016/j.neuroimage.2007.07.007. PMID: 17761438.

- Billig A.J., Davis M.H., Deeks J.M., Monstrey J., Carlyon R.P. Lexical influences on auditory streaming. Curr. Biol. 2013;23(16):1585–1589. doi: 10.1016/j.cub.2013.06.042. PMID: 23891107.

- Binder J.R., Liebenthal E., Possing E.T., Medler D.A., Ward B.D. Neural correlates of sensory and decision processes in auditory object identification. Nat. Neurosci. 2004;7(3):295–301. doi: 10.1038/nn1198. PMID: 14966525.

- Blesser B. Speech perception under conditions of spectral transformation. I. Phonetic characteristics. J. Speech Hear. Res. 1972;15(1):5–41. doi: 10.1044/jshr.1501.05. PMID: 5012812.

- Bregman A.S. Auditory Scene Analysis: The Perceptual Organization of Sound. MIT Press; 1994.

- Buckner R.L., Andrews-Hanna J.R., Schacter D.L. The brain's default network: anatomy, function, and relevance to disease. Ann. N. Y. Acad. Sci. 2008;1124:1–38. doi: 10.1196/annals.1440.011. PMID: 18400922.

- Buckner R.L., Carroll D.C. Self-projection and the brain. Trends Cogn. Sci. 2007;11(2):49–57. doi: 10.1016/j.tics.2006.11.004. PMID: 17188554.

- Chan D., Fourcin A., Gibbon D., Granstrom B., Huckvale M., Kokkinakis G. EUROM—a spoken language resource for the EU. Eurospeech'95: Proceedings of the 4th European Conference on Speech Communication and Speech Technology. 1995;1:867–870.

- Cherry E.C. Some experiments on the recognition of speech, with one and with two ears. J. Acoust. Soc. Am. 1953;25(5):975–979. [Google Scholar]

- Chiang T.C., Liang K.C., Chen J.H., Hsieh C.H., Huang Y.A. Brain deactivation in the outperformance in bimodal tasks: an fMRI study. PLOS One. 2013;8(10):e77408. doi: 10.1371/journal.pone.0077408. 24155952 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cohen Y.E. Multimodal activity in the parietal cortex. Hear. Res. 2009;258(1–2):100–105. doi: 10.1016/j.heares.2009.01.011. 19450431 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Conway A.R., Cowan N., Bunting M.F. The cocktail party phenomenon revisited: the importance of working memory capacity. Psychon. Bull. Rev. 2001;8(2):331–335. doi: 10.3758/bf03196169. 11495122 [DOI] [PubMed] [Google Scholar]

- Cusack R. The intraparietal sulcus and perceptual organization. J. Cogn. Neurosci. 2005;17(4):641–651. doi: 10.1162/0898929053467541. 15829084 [DOI] [PubMed] [Google Scholar]

- Davis M.H., Ford M.A., Kherif F., Johnsrude I.S. Does semantic context benefit speech understanding through “top-down” processes? Evidence from time-resolved sparse fMRI. J. Cogn. Neurosci. 2011;23(12):3914–3932. doi: 10.1162/jocn_a_00084. 21745006 [DOI] [PubMed] [Google Scholar]

- Deike S., Gaschler-Markefski B., Brechmann A., Scheich H. Auditory stream segregation relying on timbre involves left auditory cortex. Neuroreport. 2004;15(9):1511–1514. doi: 10.1097/01.wnr.0000132919.12990.34. 15194885 [DOI] [PubMed] [Google Scholar]

- Deike S., Scheich H., Brechmann A. Active stream segregation specifically involves the left human auditory cortex. Hear. Res. 2010;265(1–2):30–37. doi: 10.1016/j.heares.2010.03.005. 20233603 [DOI] [PubMed] [Google Scholar]

- Delis D.C., Kaplan E., Kramer J.H. Delis-Kaplan Executive Function System. The Psychological Corporation; San Antonio, TX: 2001. [Google Scholar]

- Downar J., Crawley A.P., Mikulis D.J., Davis K.D. A multimodal cortical network for the detection of changes in the sensory environment. Nat. Neurosci. 2000;3(3):277–283. doi: 10.1038/72991. 10700261 [DOI] [PubMed] [Google Scholar]

- Dubois B., Feldman H.H., Jacova C., Dekosky S.T., Barberger-Gateau P., Cummings J. Research criteria for the diagnosis of Alzheimer's disease: revising the NINCDS–ADRDA criteria. The Lancet Neurology. 2007;6(8):734–746. doi: 10.1016/S1474-4422(07)70178-3. [DOI] [PubMed] [Google Scholar]

- Dunn L.M., Dunn P.Q., Whetton C. British Picture Vocabulary Scale. NFER-Nelson; Windsor: 1982. [Google Scholar]

- Dykstra A.R., Halgren E., Thesen T., Carlson C.E., Doyle W., Madsen J.R., Eskandar E.N., Cash S.S. Widespread brain areas engaged during a classical auditory streaming task revealed by intracranial EEG. Front. Hum. Neurosci. 2011;5:74. doi: 10.3389/fnhum.2011.00074. 21886615 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Elman J.A., Oh H., Madison C.M., Baker S.L., Vogel J.W., Marks S.M., Crowley S., O'Neil J.P., Jagust W.J. Neural compensation in older people with brain amyloid-β deposition. Nat. Neurosci. 2014;17(10):1316–1318. doi: 10.1038/nn.3806. 25217827 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gates G.A., Anderson M.L., Feeney M.P., McCurry S.M., Larson E.B. Central auditory dysfunction in older persons with memory impairment or Alzheimer dementia. Arch. Otolaryngol. Head Neck Surg. 2008;134(7):771–777. doi: 10.1001/archotol.134.7.771. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gates G.A., Anderson M.L., McCurry S.M., Feeney M.P., Larson E.B. Central auditory dysfunction as a harbinger of Alzheimer dementia. Arch. Otolaryngol. Head Neck Surg. 2011;137(4):390–395. doi: 10.1001/archoto.2011.28. 21502479 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gates G.A., Cobb J.L., Linn R.T., Rees T., Wolf P.A., D'Agostino R.B. Central auditory dysfunction, cognitive dysfunction, and dementia in older people. Arch. Otolaryngol. Head Neck Surg. 1996;122(2):161–167. doi: 10.1001/archotol.1996.01890140047010. 8630210 [DOI] [PubMed] [Google Scholar]

- Gilbert S.J., Dumontheil I., Simons J.S., Frith C.D., Burgess P.W. Comment on “Wandering minds: the default network and stimulus-independent thought”. Science. 2007;317(5834):43. doi: 10.1126/science.317.5834.43. 17615325 [DOI] [PubMed] [Google Scholar]

- Golden H.L., Nicholas J.M., Yong K.X., Downey L.E., Schott J.M., Mummery C.J. Auditory spatial processing in Alzheimer's disease. Brain. 2015;138(1):189–202. doi: 10.1093/brain/awu337. 25468732 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Goll J.C., Kim L.G., Hailstone J.C., Lehmann M., Buckley A., Crutch S.J., Warren J.D. Auditory object cognition in dementia. Neuropsychologia. 2011;49(9):2755–2765. doi: 10.1016/j.neuropsychologia.2011.06.004.

- Goll J.C., Kim L.G., Ridgway G.R., Hailstone J.C., Lehmann M., Buckley A.H. Impairments of auditory scene analysis in Alzheimer's disease. Brain. 2012;135(1):190–200. doi: 10.1093/brain/awr260.

- Golob E.J., Irimajiri R., Starr A. Auditory cortical activity in amnestic mild cognitive impairment: relationship to subtype and conversion to dementia. Brain. 2007;130(3):740–752. doi: 10.1093/brain/awl375.

- Golob E.J., Ringman J.M., Irimajiri R., Bright S., Schaffer B., Medina L.D., Starr A. Cortical event-related potentials in preclinical familial Alzheimer disease. Neurology. 2009;73(20):1649–1655. doi: 10.1212/WNL.0b013e3181c1de77.

- Greicius M.D., Menon V. Default-mode activity during a passive sensory task: uncoupled from deactivation but impacting activation. J. Cogn. Neurosci. 2004;16(9):1484–1492. doi: 10.1162/0898929042568532.

- Griffiths T.D., Warren J.D. The planum temporale as a computational hub. Trends Neurosci. 2002;25(7):348–353. doi: 10.1016/s0166-2236(02)02191-4.

- Gutschalk A., Oxenham A.J., Micheyl C., Wilson E.C., Melcher J.R. Human cortical activity during streaming without spectral cues suggests a general neural substrate for auditory stream segregation. J. Neurosci. 2007;27(48):13074–13081. doi: 10.1523/JNEUROSCI.2299-07.2007.

- Hall D.A., Haggard M.P., Akeroyd M.A., Palmer A.R., Summerfield A.Q., Elliott M.R. “Sparse” temporal sampling in auditory fMRI. Hum. Brain Mapp. 1999;7(3):213–223. doi: 10.1002/(SICI)1097-0193(1999)7:3<213::AID-HBM5>3.0.CO;2-N.

- Herholz K., Salmon E., Perani D., Baron J.C., Holthoff V., Frölich L. Discrimination between Alzheimer dementia and controls by automated analysis of multicenter FDG PET. Neuroimage. 2002;17(1):302–316. doi: 10.1006/nimg.2002.1208.

- Hickok G., Poeppel D. The cortical organization of speech processing. Nat. Rev. Neurosci. 2007;8(5):393–402. doi: 10.1038/nrn2113.

- Hill K.T., Miller L.M. Auditory attentional control and selection during cocktail party listening. Cereb. Cortex. 2010;20(3):583–590. doi: 10.1093/cercor/bhp124.

- Hutton C., Bork A., Josephs O., Deichmann R., Ashburner J., Turner R. Image distortion correction in fMRI: a quantitative evaluation. Neuroimage. 2002;16(1):217–240. doi: 10.1006/nimg.2001.1054.

- Hwang J.-H., Wu C.-W., Chen J.-H., Liu T.-C. The effects of masking on the activation of auditory-associated cortex during speech listening in white noise. Acta Otolaryngol. 2006;126(9):916–920. doi: 10.1080/00016480500546375.

- International Telecommunication Union. 1986. Report BS.1058-0: Minimum AF and RF signal-to-noise ratio required for broadcasting in band 7 (HF). Retrieved from http://www.itu.int/pub/R-REP-BS.1058, last accessed 16/01/2015.

- Jackson M., Warrington E.K. Arithmetic skills in patients with unilateral cerebral lesions. Cortex. 1986;22(4):611–620. doi: 10.1016/s0010-9452(86)80020-x.

- Kondo H.M., Kashino M. Involvement of the thalamocortical loop in the spontaneous switching of percepts in auditory streaming. J. Neurosci. 2009;29(40):12695–12701. doi: 10.1523/JNEUROSCI.1549-09.2009.

- Kong L., Michalka S.W., Rosen M.L., Sheremata S.L., Swisher J.D., Shinn-Cunningham B.G., Somers D.C. Auditory spatial attention representations in the human cerebral cortex. Cereb. Cortex. 2014;24(3):773–784. doi: 10.1093/cercor/bhs359.

- Krumbholz K., Schönwiesner M., von Cramon D.Y., Rübsamen R., Shah N.J., Zilles K. Representation of interaural temporal information from left and right auditory space in the human planum temporale and inferior parietal lobe. Cereb. Cortex. 2005;15(3):317–324. doi: 10.1093/cercor/bhh133.

- Kumar S., Stephan K.E., Warren J.D., Friston K.J., Griffiths T.D. Hierarchical processing of auditory objects in humans. PLoS Comput. Biol. 2007;3:e100. doi: 10.1371/journal.pcbi.0030100.

- Kurylo D.D., Corkin S., Allard T., Zatorre R.J., Growdon J.H. Auditory function in Alzheimer's disease. Neurology. 1993;43(10):1893–1899. doi: 10.1212/wnl.43.10.1893.

- Lee A.K., Larson E., Maddox R.K., Shinn-Cunningham B.G. Using neuroimaging to understand the cortical mechanisms of auditory selective attention. Hear. Res. 2014;307:111–120. doi: 10.1016/j.heares.2013.06.010.

- Linden D.E., Prvulovic D., Formisano E., Völlinger M., Zanella F.E., Goebel R., Dierks T. The functional neuroanatomy of target detection: an fMRI study of visual and auditory oddball tasks. Cereb. Cortex. 1999;9(8):815–823. doi: 10.1093/cercor/9.8.815.

- McKenna P., Warrington E. Graded Naming Test. NFER-Nelson; Windsor: 1983.

- Meyer M., Zysset S., von Cramon D.Y., Alter K. Distinct fMRI responses to laughter, speech, and sounds along the human peri-sylvian cortex. Brain Res. Cogn. Brain Res. 2005;24(2):291–306. doi: 10.1016/j.cogbrainres.2005.02.008.

- Molnar-Szakacs I., Uddin L.Q. Self-processing and the default mode network: interactions with the mirror neuron system. Front. Hum. Neurosci. 2013;7:571. doi: 10.3389/fnhum.2013.00571.

- Moray N. Attention in dichotic listening: affective cues and the influence of instructions. Q. J. Exp. Psychol. 1959;11(1):56–60.

- Mustovic H., Scheffler K., Di Salle F., Esposito F., Neuhoff J.G., Hennig J., Seifritz E. Temporal integration of sequential auditory events: silent period in sound pattern activates human planum temporale. Neuroimage. 2003;20(1):429–434. doi: 10.1016/s1053-8119(03)00293-3.

- Nakai T., Kato C., Matsuo K. An fMRI study to investigate auditory attention: a model of the cocktail party phenomenon. Magn. Reson. Med. Sci. 2005;4(2):75–82. doi: 10.2463/mrms.4.75.

- Nelson H.E. National Adult Reading Test. NFER-Nelson; Windsor: 1982.

- Newman S.D., Twieg D. Differences in auditory processing of words and pseudowords: an fMRI study. Hum. Brain Mapp. 2001;14(1):39–47. doi: 10.1002/hbm.1040.

- Obleser J., Eisner F., Kotz S.A. Bilateral speech comprehension reflects differential sensitivity to spectral and temporal features. J. Neurosci. 2008;28(32):8116–8123. doi: 10.1523/JNEUROSCI.1290-08.2008.

- Obleser J., Wise R.J., Dresner M.A., Scott S.K. Functional integration across brain regions improves speech perception under adverse listening conditions. J. Neurosci. 2007;27(9):2283–2289. doi: 10.1523/JNEUROSCI.4663-06.2007.

- Overath T., Kumar S., Stewart L., von Kriegstein K., Cusack R., Rees A., Griffiths T.D. Cortical mechanisms for the segregation and representation of acoustic textures. J. Neurosci. 2010;30(6):2070–2076. doi: 10.1523/JNEUROSCI.5378-09.2010.

- Raichle M.E., MacLeod A.M., Snyder A.Z., Powers W.J., Gusnard D.A., Shulman G.L. A default mode of brain function. Proc. Natl. Acad. Sci. U. S. A. 2001;98(2):676–682. doi: 10.1073/pnas.98.2.676.

- Rosen S., Souza P., Ekelund C., Majeed A.A. Listening to speech in a background of other talkers: effects of talker number and noise vocoding. J. Acoust. Soc. Am. 2013;133(4):2431–2443. doi: 10.1121/1.4794379.

- Salvi R.J., Lockwood A.H., Frisina R.D., Coad M.L., Wack D.S., Frisina D.R. PET imaging of the normal human auditory system: responses to speech in quiet and in background noise. Hear. Res. 2002;170(1–2):96–106. doi: 10.1016/s0378-5955(02)00386-6.

- Scahill R.I., Schott J.M., Stevens J.M., Rossor M.N., Fox N.C. Mapping the evolution of regional atrophy in Alzheimer's disease: unbiased analysis of fluid-registered serial MRI. Proc. Natl. Acad. Sci. U. S. A. 2002;99(7):4703–4707. doi: 10.1073/pnas.052587399.

- Schönwiesner M., Novitski N., Pakarinen S., Carlson S., Tervaniemi M., Näätänen R. Heschl's gyrus, posterior superior temporal gyrus, and mid-ventrolateral prefrontal cortex have different roles in the detection of acoustic changes. J. Neurophysiol. 2007;97(3):2075–2082. doi: 10.1152/jn.01083.2006.

- Scott S.K., Blank C.C., Rosen S., Wise R.J. Identification of a pathway for intelligible speech in the left temporal lobe. Brain. 2000;123(12):2400–2406. doi: 10.1093/brain/123.12.2400.

- Scott S.K., McGettigan C. The neural processing of masked speech. Hear. Res. 2013;303:58–66. doi: 10.1016/j.heares.2013.05.001.

- Scott S.K., Rosen S., Beaman C.P., Davis J.P., Wise R.J. The neural processing of masked speech: evidence for different mechanisms in the left and right temporal lobes. J. Acoust. Soc. Am. 2009;125(3):1737–1743. doi: 10.1121/1.3050255.

- Scott S.K., Rosen S., Wickham L., Wise R.J. A positron emission tomography study of the neural basis of informational and energetic masking effects in speech perception. J. Acoust. Soc. Am. 2004;115(2):813–821. doi: 10.1121/1.1639336.

- Seeley W.W., Crawford R.K., Zhou J., Miller B.L., Greicius M.D. Neurodegenerative diseases target large-scale human brain networks. Neuron. 2009;62(1):42–52. doi: 10.1016/j.neuron.2009.03.024.

- Shulman G.L., Fiez J.A., Corbetta M., Buckner R.L., Miezin F.M., Raichle M.E., Petersen S.E. Common blood flow changes across visual tasks: II. Decreases in cerebral cortex. J. Cogn. Neurosci. 1997;9(5):648–663. doi: 10.1162/jocn.1997.9.5.648.

- Spreng R.N., Grady C.L. Patterns of brain activity supporting autobiographical memory, prospection, and theory of mind, and their relationship to the default mode network. J. Cogn. Neurosci. 2010;22(6):1112–1123. doi: 10.1162/jocn.2009.21282.

- Stopford C.L., Thompson J.C., Neary D., Richardson A.M., Snowden J.S. Working memory, attention, and executive function in Alzheimer's disease and frontotemporal dementia. Cortex. 2012;48(4):429–446. doi: 10.1016/j.cortex.2010.12.002.

- Strouse A.L., Hall J.W., Burger M.C. Central auditory processing in Alzheimer's disease. Ear Hear. 1995;16(2):230–238. doi: 10.1097/00003446-199504000-00010.

- Voisin J., Bidet-Caulet A., Bertrand O., Fonlupt P. Listening in silence activates auditory areas: a functional magnetic resonance imaging study. J. Neurosci. 2006;26(1):273–278. doi: 10.1523/JNEUROSCI.2967-05.2006.

- Warren J.D., Fletcher P.D., Golden H.L. The paradox of syndromic diversity in Alzheimer disease. Nat. Rev. Neurol. 2012;8(8):451–464. doi: 10.1038/nrneurol.2012.135.

- Warren J.E., Wise R.J., Warren J.D. Sounds do-able: auditory-motor transformations and the posterior temporal plane. Trends Neurosci. 2005;28(12):636–643. doi: 10.1016/j.tins.2005.09.010.

- Warrington E.K. Recognition Memory Test. NFER-Nelson; Windsor: 1984.

- Warrington E.K., James M. The Visual Object and Space Perception Battery. Thames Valley Test Company; Bury St Edmunds: 1991.

- Wechsler D. Wechsler Memory Scale: Revised. The Psychological Corporation; San Antonio, TX: 1987.

- Wechsler D. Wechsler Abbreviated Scale of Intelligence: WASI. The Psychological Corporation, Harcourt Brace; San Antonio, TX: 1999.

- Weiskopf N., Helms G. 2008. Multi-parameter mapping of the human brain at 1 mm resolution in less than 20 minutes. Proceedings of the 16th Scientific Meeting. ISMRM, Toronto, Canada.

- Weiskopf N., Hutton C., Josephs O., Deichmann R. Optimal EPI parameters for reduction of susceptibility-induced BOLD sensitivity losses: a whole-brain analysis at 3 T and 1.5 T. Neuroimage. 2006;33(2):493–504. doi: 10.1016/j.neuroimage.2006.07.029.

- Weiskopf N., Lutti A., Helms G., Novak M., Ashburner J., Hutton C. Unified segmentation based correction of R1 brain maps for RF transmit field inhomogeneities (UNICORT). Neuroimage. 2011;54(3):2116–2124. doi: 10.1016/j.neuroimage.2010.10.023.