Abstract

Background

With improved surgical techniques and electrode design, an increasing number of cochlear implant (CI) recipients have preserved acoustic hearing in the implanted ear, thereby resulting in bilateral acoustic hearing. There are currently no guidelines, however, for clinicians with respect to audiometric criteria and the recommendation of amplification in the implanted ear. The acoustic bandwidth necessary to obtain speech perception benefit from acoustic hearing in the implanted ear is unknown. Additionally, it is important to determine if, and in which listening environments, acoustic hearing in both ears provides more benefit than hearing in just one ear, even with limited residual hearing.

Purpose

The purposes of this study were to (1) determine whether acoustic hearing in an ear with a CI provides as much speech perception benefit as an equivalent bandwidth of acoustic hearing in the nonimplanted ear, and (2) determine whether acoustic hearing in both ears provides more benefit than hearing in just one ear.

Research Design

A repeated-measures, within-participant design was used to compare performance across listening conditions.

Study Sample

Seven adults with CIs and bilateral residual acoustic hearing (hearing preservation) were recruited for the study.

Data Collection and Analysis

Consonant-nucleus-consonant word recognition was tested in four conditions: CI alone, CI + acoustic hearing in the nonimplanted ear, CI + acoustic hearing in the implanted ear, and CI + bilateral acoustic hearing. A series of low-pass filters were used to examine the effects of acoustic bandwidth through an insert earphone with amplification. Benefit was defined as the difference among conditions. The benefit of bilateral acoustic hearing was tested in both diffuse and single-source background noise. Results were analyzed using repeated-measures analysis of variance.

Results

Similar benefit was obtained for equivalent acoustic frequency bandwidth in either ear. Acoustic hearing in the nonimplanted ear provided more benefit than the implanted ear only in the wideband condition, most likely because of better audiometric thresholds (>500 Hz) in the nonimplanted ear. Bilateral acoustic hearing provided more benefit than unilateral hearing in either ear alone, but only in diffuse background noise.

Conclusions

Results support use of amplification in the implanted ear if residual hearing is present. The benefit of bilateral acoustic hearing (hearing preservation) should not be tested in quiet or with spatially coincident speech and noise, but rather in spatially separated speech and noise (e.g., diffuse background noise).

Keywords: Cochlear implants, speech perception, hearing aids, hearing preservation

Introduction

A notable byproduct of expanded cochlear implant (CI) indications is that 80% of CI candidates now have bilateral low-frequency residual hearing before surgery (Balkany et al, 2006; Gifford et al, 2007; Dorman and Gifford, 2010). Improved surgical techniques and electrode design have also made it possible to preserve acoustic hearing in the implanted ear with both long electrode arrays (16–30 mm) (e.g., Gstoettner et al, 2008; Lenarz et al, 2009; Skarzynski et al, 2012) and short electrode arrays (6–10 mm) (e.g., Gantz et al, 2009). Thus, it is becoming increasingly possible for CI recipients to make use of a hearing aid and a CI in the same ear. Speech perception benefit has been demonstrated with the addition of a hearing aid to the CI regardless of whether the hearing aid is in the implanted or the nonimplanted ear (Gifford et al, 2007; Brown and Bacon, 2009; Dorman and Gifford, 2010; Dunn et al, 2010; Gifford et al, 2013; Rader et al, 2013). The degree of speech perception benefit varies greatly, however, across CI recipients.

CI recipients with preserved hearing have the option of wearing a hearing aid in addition to their CI in either or both ears. Between 70 and 100% of participants have better speech perception performance with a hearing aid in the implanted or nonimplanted ear (e.g., Brown and Bacon, 2009). That benefit, or improvement in performance with the addition of the hearing aid in either ear, varies between 5 and 65 percentage points across studies (Dunn et al, 2005; Mok et al, 2006; Gifford et al, 2007; Brown and Bacon, 2009; Visram et al, 2012). Some studies have found correlations between the degree of hearing loss and the benefit obtained from the addition of a hearing aid (Mok et al, 2010; Sheffield and Zeng, 2012; Yoon et al, 2012). The degree of hearing loss varies greatly among CI recipients, both in the degree of audibility and in useable frequency bandwidth (Gifford et al, 2007; Gifford et al, 2014). Additionally, as a result of surgically induced trauma in the implanted ear, and the fact that the poorer hearing ear is generally implanted, hearing in the nonimplanted ear is often better. The only study to examine the benefit of a hearing aid in implanted and nonimplanted ears separately found similar benefit from either ear when the degree of hearing loss was roughly symmetrical (Brown and Bacon, 2009). In summary, most CI recipients with residual acoustic hearing in either ear gain some speech perception benefit with the addition of a hearing aid in either ear, and there is no evidence of any difference in benefit between the ears.

In addition to the degree of hearing loss, the benefit of acoustic hearing has been shown to vary with acoustic bandwidth. In studies that used earphones to provide amplification and low-pass filters to adjust the available acoustic bandwidth, a frequency bandwidth of <125 Hz for male speakers or <250 Hz for female speakers in the nonimplanted ear was sufficient to provide speech perception benefit in a bimodal hearing configuration; however, bimodal benefit (bimodal performance – CI-only performance) generally increased with increasing bandwidth (Zhang, Dorman, et al, 2010; Sheffield and Gifford, 2014). Specifically, studies have shown that individual bimodal benefit varies between 0–30 percentage points for <125 or 150 Hz, 0–35 percentage points for <250 Hz, 0–40 percentage points for <500 Hz, 0–50 percentage points for <750 Hz, and 10–60 percentage points with no low-pass filter or all available acoustic hearing (Cullington and Zeng, 2010; Zhang, Dorman, et al, 2010; Sheffield and Gifford, 2014). Mean bimodal benefit values across word and sentence recognition in quiet and in noise are between 15 and 30 percentage points for <250 Hz bandwidth in the nonimplanted ear. Thus, on average, CI recipients can be expected to gain a benefit of 15 to 30 percentage points in speech perception if they have aidable hearing at frequencies ≤250 Hz in the nonimplanted ear and wear a hearing aid. Importantly, the effect of frequency bandwidth in the implanted ear on speech perception benefit is unknown. Consequently, audiologists have no guidelines that specifically outline audiometric criteria for recommending amplification in the implanted ear.

We suggest two plausible hypotheses for differences in bandwidth effects on speech perception benefit between the ears. The first hypothesis is that equal benefit will be obtained with equivalent acoustic bandwidth regardless of the origin. In other words, the same acoustic cues are present in each ear, and listeners can equally integrate acoustic hearing in either ear with the CI signal. The second hypothesis is that information in the acoustic portion of the signal can be better integrated with the CI signal when it originates from the same ear.

The first hypothesis is consistent with results from Brown and Bacon (2009), showing benefit from acoustic hearing in either ear and consistent with the glimpsing theory described by Li and Loizou (2008). The glimpsing theory states that the speech perception benefit is obtained by “glimpsing” temporal low-frequency peaks of the signal over troughs of the noise. This theory suggests that equal speech perception benefit will be obtained when glimpsing the peaks of the signal, no matter which ear is used.

On the other hand, the second hypothesis is consistent with the segregation theory and integration of acoustic and CI signals. The segregation theory states that speech cues are contrasted or integrated across the two signals: acoustic and CI (Qin and Oxenham, 2006). Cue integration could be very different when it occurs in the same ear rather than across different ears. One distinction made in the combination of acoustic signal information with CI information is adopted from multi-sensory integration: extraction and integration (Kong and Braida, 2011; Yang and Zeng, 2013). Extraction is use of the cues available in each of the signals, but not necessarily integration of the information. Integration, on the other hand, requires the combination of cues across signals to affect performance. Extraction would only be consistent with the first hypothesis in that extraction of the cues from either ear provides equal benefit. We suggest that the second hypothesis would support better true integration of the two signals within the same ear rather than across ears.

For CI recipients with hearing preservation, it is reasonable to assume that acoustic hearing in both ears will provide more benefit than acoustic hearing in just one ear. However, significant additional benefit of bilateral acoustic hearing (hearing aids) has only been found in some listening conditions such as diffuse background noise (Dunn et al, 2010; Gifford et al, 2013; Rader et al, 2013). The additional benefit of hearing aids in both ears versus a hearing aid in one ear also varies across individuals. Between 45 and 80% of participants gained more benefit with both hearing aids than with either hearing aid alone, with an average difference of ∼2 dB SNR or 6–10 percentage points (Dunn et al, 2010; Gifford et al, 2013). Additionally, Gifford et al (2013) found no difference in average consonant-nucleus-consonant (CNC) word recognition in quiet between the combined condition with both hearing aids and the bimodal condition with just the hearing aid in the nonimplanted ear (77% versus 79%). The current study examined whether additional CNC word recognition benefit in quiet and in noise is obtained with bilateral acoustic hearing (the addition of the implanted ear) compared with unilateral acoustic hearing (the nonimplanted or implanted ear alone). Acoustic bandwidth in the implanted ear was controlled using an insert earphone and low-pass filters to determine the minimal hearing necessary to benefit from the addition of a hearing aid in the implanted ear.

We tested unilateral CI recipients with bilateral low-frequency acoustic hearing to examine the effects of frequency bandwidth on speech perception benefit. The two purposes of this study were to (a) determine whether acoustic hearing in an ear with a CI provides as much speech perception benefit as the same bandwidth of acoustic hearing in the nonimplanted ear, and (b) determine whether acoustic hearing in both ears provides more benefit than hearing in just one ear (i.e., if additional benefit is gained from the acoustic hearing in the implanted ear). We predicted that (a) the implanted and nonimplanted ears would provide equal speech perception benefit given equivalent audibility, and (b) acoustic hearing in both ears would provide more benefit than unilateral acoustic hearing.

Methods

Participants

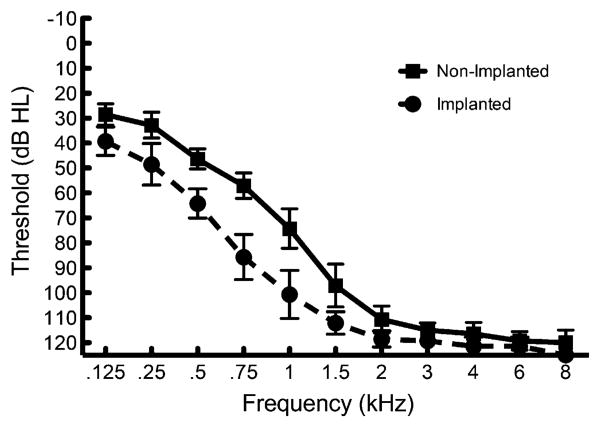

Seven adult unilateral CI recipients with bilateral low-frequency acoustic hearing participated in this study in accordance with Vanderbilt University Institutional Review Board approval. Each of the participants had at least 15 mo (mean = 46.8 mo) of listening experience with their CI and bilateral hearing aids. Each participant also had pure-tone thresholds of 80 dB HL or better at 500 Hz in both ears. We chose these criteria to ensure that each listener had aidable hearing below 500 Hz based on the half-gain rule and availability of power hearing aids in providing low-frequency gain. Mean audiometric thresholds for the implanted and nonimplanted ears are displayed in Figure 1. Demographic information for each CI listener is presented in Table 1. All of the participants used Cochlear Corporation Nucleus implants. Participants 1–5 had hybrid speech processors with a built-in acoustic component for amplification in the implanted ear. The participants with hybrid speech processors used S8 (P3), S12 (P5), or L24 (P1–2, P4) electrode arrays. The two other participants had CP810 speech processors with full-length electrode arrays and a personal hearing aid device.
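As a rough illustration of the half-gain rule mentioned above, the sketch below computes the gain that rule would prescribe for a given threshold. The function name is hypothetical, and the plain "half the threshold" form is a simplification that ignores frequency-specific corrections; it is not the fitting procedure used in this study.

```python
def half_gain_db(threshold_db_hl: float) -> float:
    """Gain (dB) under the plain half-gain rule: half of the hearing threshold.

    Simplified illustration only; no frequency-specific correction is applied.
    """
    if threshold_db_hl <= 0:
        return 0.0  # no gain prescribed for thresholds at or better than 0 dB HL
    return threshold_db_hl / 2.0


# Inclusion limit used in this study: 80 dB HL at 500 Hz.
print(half_gain_db(80.0))  # 40.0
```

Under this simplification, a threshold at the 80 dB HL inclusion limit calls for about 40 dB of gain.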

Figure 1.

Implanted and nonimplanted ear pure-tone thresholds. Error bars represent 1 standard error of the mean.

Table 1. Demographic Information for Each CI Listener.

| Participant | Age (years) | Gender | Electrode Type | Processor | Implant Ear | Months of CI Use |
|---|---|---|---|---|---|---|
| 1 | 80 | Male | L24 | Freedom Hybrid | Right | 48 |
| 2 | 60 | Female | L24 | Freedom Hybrid | Right | 70 |
| 3 | 63 | Male | S8 | Freedom Hybrid | Right | 72 |
| 4 | 52 | Female | L24 | Freedom Hybrid | Right | 48 |
| 5 | 43 | Female | S12 | Freedom Hybrid | Left | 26 |
| 6 | 76 | Male | CI24RE | CP810 | Right | 15 |
| 7 | 74 | Male | CI24RE | CP810 | Left | 33 |
| Mean | 59.6 | | | | | 44.6 |

Speech Stimuli and Test Conditions

We assessed speech recognition using CNC monosyllabic words (Peterson and Lehiste, 1962). The CNC stimuli consist of 10 phonemically balanced lists of 50 monosyllabic words recorded by a single male speaker. The mean F0 of the CNC words is 123 Hz with a standard deviation of 17 Hz (Zhang, Dorman, et al, 2010). Performance was scored as the percentage of words correctly repeated by the participant.

The recognition of CNC words was assessed in quiet and in a 20-talker babble at a +10 dB signal-to-noise ratio (SNR). Testing in both quiet and multitalker babble was completed because of differences found in the bandwidth of acoustic hearing necessary to obtain benefit in performance from the addition of the acoustic hearing (Zhang, Dorman, et al, 2010; Sheffield and Gifford, 2014).
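To make the +10 SNR condition concrete, the following sketch scales a noise signal so that the speech-to-noise RMS ratio equals a target value in dB. The function names, the 16 kHz sampling rate, and the sinusoidal toy signals are assumptions for illustration; the study does not describe how the babble level was set digitally.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))


def measure_snr_db(speech, noise):
    """SNR in dB, computed from the RMS of each signal."""
    return 20.0 * math.log10(rms(speech) / rms(noise))


def scale_noise_for_snr(speech, noise, target_snr_db):
    """Return a copy of `noise` scaled so that the speech/noise SNR hits the target."""
    target_noise_rms = rms(speech) / (10.0 ** (target_snr_db / 20.0))
    gain = target_noise_rms / rms(noise)
    return [gain * v for v in noise]


# Toy stand-ins for a CNC word and multitalker babble (assumed 16 kHz sampling).
fs = 16000
speech = [math.sin(2 * math.pi * 123 * n / fs) for n in range(fs)]
babble = [0.3 * math.sin(2 * math.pi * 400 * n / fs + 1.0) for n in range(fs)]

scaled_babble = scale_noise_for_snr(speech, babble, 10.0)
mixture = [s + b for s, b in zip(speech, scaled_babble)]
print(round(measure_snr_db(speech, scaled_babble), 6))  # 10.0
```

Scaling the noise rather than the speech keeps the speech level fixed across conditions, which is one common choice; scaling the speech instead would be equally valid for setting the ratio.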

Each participant was tested in quiet and in multitalker babble in four different listening conditions: (1) CI alone, (2) CI + implanted ear acoustic hearing (ipsilateral electric and acoustic stimulation [ipsiEAS]), (3) CI + nonimplanted ear acoustic hearing (bimodal), and (4) CI + bilateral acoustic hearing (EASall). The full-frequency bandwidth (188–7938 Hz) was delivered across all active electrodes for all conditions, with the exception of P3 and P5. For P3 and P5, who were both recipients of a Hybrid 10-mm electrode, the starting frequency for the CI in their everyday listening condition was 563 Hz and 813 Hz, respectively. For the remaining five recipients, the full-frequency bandwidth for the CI was programmed based on patient preference, as participants reported that the full spectrum delivered to the CI yielded the most “natural” sound quality even in the presence of acoustic hearing (Zhang, Spahr, et al, 2010).

To simulate differences in bandwidth of useable hearing, we applied a series of low-pass filters to the acoustic signals. The acoustic signal for the ipsiEAS and bimodal conditions was presented in an unprocessed (wideband) condition as well as in the following low-pass filter conditions: <125 Hz, <250 Hz, <500 Hz, and <750 Hz. These filter bands were chosen to include only the fundamental frequency (<125 Hz) and then increasing speech energy and cues as well as to replicate the study by Zhang, Dorman, et al (2010). The purpose of testing in the EASall condition was to examine any additional benefit afforded by preserved hearing in the implanted ear and how much preserved hearing is necessary. Thus, in the EASall condition, the acoustic bandwidth in the nonimplanted ear that provided the best performance for each participant (P1–P4 and P6: wideband; P5: <750 Hz; and P7: <500 Hz) was used, and all four filtered bands as well as the wideband condition were tested in the implanted ear.

The orders of testing for listening condition and acoustic bandwidth within each condition were counterbalanced across listeners using a modified Latin square technique. Each listener was tested in a total of 15 condition/bandwidth combinations in quiet and in multitalker babble. The 10 CNC word lists were randomly selected across condition/bandwidth combinations. Because there are only 10 CNC word lists and 32 total condition/bandwidth combinations, each list was used three or four times for each participant. This practice is not uncommon in speech recognition research with CI recipients (e.g., Balkany et al, 2007; Gantz et al, 2009), particularly given that learning effects are minimal for monosyllabic words (Wilson et al, 2003).
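The counterbalancing and list-reuse arithmetic described above can be sketched as follows. This uses a plain cyclic Latin square, not the authors' modified technique (whose details are not given), and it assumes list use is spread as evenly as possible, whereas the study selected lists randomly. Function names are hypothetical.

```python
def cyclic_latin_square(items):
    """Build a Latin square in which row r is `items` rotated left by r positions."""
    n = len(items)
    return [[items[(row + col) % n] for col in range(n)] for row in range(n)]


def balanced_list_uses(n_lists, n_combinations):
    """How often each list is used if uses are spread as evenly as possible."""
    base, extra = divmod(n_combinations, n_lists)
    return [base + 1 if i < extra else base for i in range(n_lists)]


conditions = ["CI alone", "ipsiEAS", "bimodal", "EASall"]
square = cyclic_latin_square(conditions)

# Assign each of the seven participants a row, cycling through the square.
orders = {f"P{p + 1}": square[p % len(square)] for p in range(7)}
print(orders["P2"])  # ['ipsiEAS', 'bimodal', 'EASall', 'CI alone']

# 10 CNC lists spread over 32 condition/bandwidth combinations.
uses = balanced_list_uses(10, 32)
print(uses)  # [4, 4, 3, 3, 3, 3, 3, 3, 3, 3]
```

With 32 combinations and 10 lists, an even spread uses two lists four times and eight lists three times, consistent with the "three or four times" stated above.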

Presentation of Speech Stimuli

Signals were presented to the CI via direct audio input to the speech processor in each participant's “everyday” listening program. CI volume level was adjusted to a comfortable listening level for each listener. Acoustic signals were presented to each ear using Etymotic Research 3A insert earphones at 60 dBA in quiet and 65 dBA in multitalker babble as measured when presented via the insert earphones in a Knowles Electronic Manikin for Acoustical Research.

The acoustic stimuli were filtered using MATLAB version 11.0 software with a finite impulse response filter with a 90 dB per octave roll-off in each band. After filtering, the acoustic stimuli were amplified linearly according to the frequency-gain prescription for a 60 dB SPL input dictated by National Acoustic Laboratories' nonlinear fitting procedure, version 1 (NAL-NL1; Byrne et al, 2001) to ensure audibility in each ear. The outputs of the acoustic stimuli were verified to match NAL-NL1 targets for a 60 dB SPL input, measured with probe microphone measurements in the Knowles Electronic Manikin for Acoustical Research with the insert earphones, in order to verify audibility of the stimuli for each frequency band.
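For readers unfamiliar with finite impulse response low-pass filtering, the sketch below designs a windowed-sinc filter and checks its gain in the passband and stopband. It is written in Python rather than MATLAB, and the Hamming window, 16 kHz sampling rate, 1001 taps, and function names are all assumptions; it does not reproduce the study's filters or their 90 dB per octave roll-off.

```python
import cmath
import math


def design_lowpass_fir(cutoff_hz, fs_hz, num_taps):
    """Windowed-sinc low-pass FIR (Hamming window), normalized to unity gain at DC."""
    if num_taps % 2 == 0:
        raise ValueError("num_taps must be odd for a symmetric filter centered on a tap")
    fc = cutoff_hz / fs_hz          # cutoff in cycles per sample
    mid = (num_taps - 1) // 2       # index of the center tap
    taps = []
    for n in range(num_taps):
        k = n - mid
        # Ideal low-pass impulse response (sinc), with the k == 0 limit handled explicitly.
        ideal = 2.0 * fc if k == 0 else math.sin(2.0 * math.pi * fc * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2.0 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]


def gain_db(taps, freq_hz, fs_hz):
    """Magnitude response of the FIR filter at one frequency, in dB."""
    w = 2.0 * math.pi * freq_hz / fs_hz
    response = sum(t * cmath.exp(-1j * w * n) for n, t in enumerate(taps))
    return 20.0 * math.log10(max(abs(response), 1e-12))


# Example: a <500 Hz band at an assumed 16 kHz sampling rate.
fs = 16000
taps = design_lowpass_fir(cutoff_hz=500.0, fs_hz=fs, num_taps=1001)
passband_gain = gain_db(taps, 250.0, fs)   # close to 0 dB
stopband_gain = gain_db(taps, 1000.0, fs)  # strongly attenuated
```

Evaluating the response at 250 Hz and at 1000 Hz (one octave above the cutoff) gives a quick check that energy below the cutoff passes essentially unchanged while energy an octave above is strongly attenuated.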

Analysis

Two participants (P4 and P6) were not tested in multitalker babble because of floor effects. Therefore, analyses for testing in multitalker babble were based on data obtained from five participants. We completed analyses using repeated-measures analysis of variance (ANOVA). For all analyses, the benefit of acoustic hearing was defined as the percentage-point difference between the score for a given acoustic filter band in the bimodal, ipsiEAS, or EASall condition and the CI-alone score. The additional benefit of hearing preservation in the implanted ear over the bimodal condition was defined as the score in each EASall bandwidth condition minus the best bimodal score. The Fisher least significant difference test was used for post hoc testing. Least significant difference testing does not correct for multiple comparisons but was used to maximize power because of the small sample size.
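The two benefit measures defined above reduce to simple differences in percent-correct scores. The sketch below applies them to the mean scores in quiet reported in the Results (CI alone 36.6%, wideband bimodal 65.4%); the EASall score of 70.0% is a made-up value used only to show the second calculation, and the function names are hypothetical.

```python
def acoustic_benefit(condition_score, ci_alone_score):
    """Benefit of added acoustic hearing, in percentage points."""
    return condition_score - ci_alone_score


def additional_benefit(easall_score, best_bimodal_score):
    """Extra benefit of the implanted-ear acoustic hearing over the best bimodal score."""
    return easall_score - best_bimodal_score


# Mean scores in quiet reported in the Results.
ci_alone_quiet = 36.6
bimodal_wideband_quiet = 65.4
print(round(acoustic_benefit(bimodal_wideband_quiet, ci_alone_quiet), 1))  # 28.8

# Hypothetical EASall score, for illustration only (not a reported value).
hypothetical_easall = 70.0
print(round(additional_benefit(hypothetical_easall, bimodal_wideband_quiet), 1))  # 4.6
```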

Results

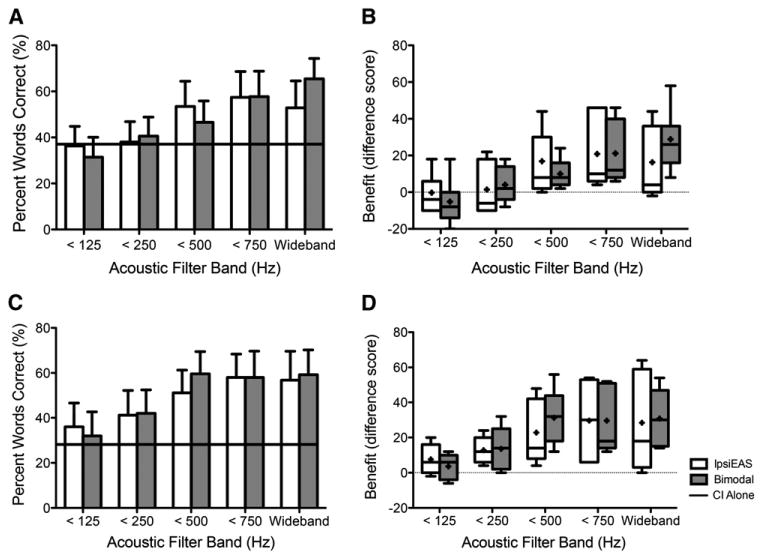

Figure 2A shows average performance in the ipsiEAS and bimodal conditions for each of the filter bands in quiet. CI-alone performance is plotted as a horizontal, solid-black line at 36.6%. Figure 2B shows individual and average benefit, percentage point improvement (e.g., bimodal – CI-alone), for each filter band in the ipsiEAS and bimodal conditions in quiet. Participants obtained similar benefit from the acoustic hearing in each ear across the filter bands, except for the wideband signal for which bimodal exceeded ipsiEAS. A two-factor (filter band, acoustic ear: bimodal or ipsiEAS) repeated-measures ANOVA on the acoustic benefit values supported similar benefit across ears with a significant main effect of filter band [F(4,24) = 12.05, p < 0.0001], indicating acoustic benefit but no main effect of the acoustic ear [F(1,24) = 0.05, p < 0.829]. There was also a significant interaction between the acoustic ear and filter band [F(4,24) = 7.75, p < 0.0004]. This interaction seems to be driven by the difference in acoustic benefit for the wideband acoustic signal between ears or the growth pattern of acoustic benefit from the <500 Hz filter band to the wideband. Post hoc analyses of the interaction effect between the acoustic ear and filter band indicated that the increase in benefit from the <500 and <750 Hz bands to the wideband signals is significantly more for the bimodal condition than the ipsiEAS condition [t(6) = 3.93, p < 0.008; t(6) = 3.35, p < 0.016]. In other words, acoustic benefit continued to increase with energy above 500 Hz in the bimodal condition, but not in the ipsiEAS condition. No other post hoc analyses with respect to the interaction were significant.

Figure 2.

Mean unilateral acoustic benefit in quiet (A) and in multitalker babble (C). Mean CI-alone performance is represented by a solid-black line (A and C). IpsiEAS is represented in unfilled bars and bimodal in gray-filled bars in all subfigures. Error bars represent 1 standard error of the mean (A and C). Individual and mean acoustic benefit (percentage-point difference, e.g., bimodal – CI alone) in quiet (B) and in multitalker babble (D). Box plots in B and D include horizontal lines at the median values and “+” signs at the mean values, with the whiskers representing the minimal and maximal benefit values. Horizontal dotted lines in B and D represent no benefit or change in performance with added acoustic hearing. N = 7 in quiet and N = 5 in multitalker babble.

Figure 2C shows average performance in the bimodal and ipsiEAS conditions for each of the filter bands in multitalker babble. CI-alone performance is again plotted as a solid-black line at 28.4%. The CI-alone performance in multitalker babble is <10 percentage points poorer than the CI-alone performance in quiet. This is because the two participants who did not complete testing in multitalker babble had poor performance in quiet, lowering the mean. The mean CI-alone performance in quiet of the five participants who completed testing in both quiet and noise was 43.2%. Figure 2D shows the same individual and average benefit scores for each filter band in the ipsiEAS and bimodal conditions as in Figure 2B but in multitalker babble. Similar to the results in quiet, participants obtained comparable benefit from the acoustic hearing in each ear. Unlike the quiet results, however, the benefit obtained from the wideband acoustic signal was similar for each ear. A two-factor (filter band, acoustic ear: bimodal or ipsiEAS) repeated-measures ANOVA confirmed similar acoustic benefit across ears with a significant effect of filter band [F(4,16) = 9.41, p < 0.0005] but no significant effect of acoustic ear [F(1,4) = 0.62, p < 0.477] and no significant interaction between acoustic ear and filter band [F(4,16) = 1.37, p < 0.288]. In summary, results in multitalker babble indicate increasing acoustic benefit as filter bandwidth increases and no difference in acoustic benefit across ears.
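The reported means allow a quick consistency check on this explanation. The sketch below back-calculates the mean CI-alone score in quiet for the two participants who were not tested in babble, using only the means reported above (36.6% for all seven, 43.2% for the five tested in both). Because the inputs are rounded, the result is approximate; the function name is hypothetical.

```python
def mean_of_excluded(mean_all, n_all, mean_subset, n_subset):
    """Mean of the participants in the full group who are not in the subset."""
    n_excluded = n_all - n_subset
    return (mean_all * n_all - mean_subset * n_subset) / n_excluded


# CI-alone means in quiet: all seven participants vs. the five also tested in babble.
excluded_mean = mean_of_excluded(36.6, 7, 43.2, 5)
print(round(excluded_mean, 1))  # 20.1

# For the five participants tested in both, CI-alone drops from quiet to babble by:
print(round(43.2 - 28.4, 1))  # 14.8
```

The two untested participants therefore averaged roughly 20% in quiet, and the like-for-like drop from quiet to babble among the other five is about 15 percentage points.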

Two participants in quiet (P6, P7) and one in multitalker babble (P7) gained limited to no benefit from the acoustic hearing in the implanted ear, regardless of filter band. Removal of their data from the analyses made no difference in the results; therefore, their data were retained in the analyses.

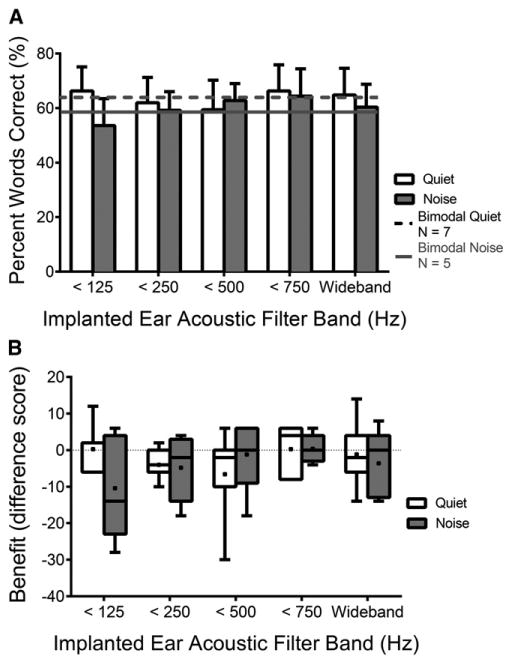

Although the acoustic hearing in each ear provides benefit in speech recognition, it is important to determine if acoustic hearing in both ears provides more benefit than acoustic hearing in just one ear. Figure 3A shows average performance for the EASall condition with each filter band in both quiet and multitalker babble. Mean bimodal performances (wideband acoustic) in quiet (65.4%) and in multitalker babble (59.2%) are plotted as a dotted-black line and a solid-gray line, respectively. Figure 3B shows individual and average acoustic benefit, percentage-point difference (EASall – bimodal), for each filter band in the implanted ear in quiet and in multitalker babble. The variability is large, and no individual benefit value from the addition of the implanted ear was >10 percentage points for any filter band in quiet or in multitalker babble, indicating limited to no additional benefit. In some cases (e.g., <125 Hz filter band), some participants appear to have experienced a decrement in performance. Statistically, however, single-factor (filter band) repeated-measures ANOVAs of the additional benefit of the acoustic hearing in the implanted ear (EASall – bimodal) revealed no significant effect of filter band in quiet or in multitalker babble [F(1,6) = 1.17, p < 0.347; F(1,6) = 1.40, p < 0.267; respectively]. In other words, acoustic benefit and performance in the EASall condition did not increase with increasing acoustic energy in the implanted ear.
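As a reference for how a single-factor repeated-measures ANOVA partitions variance, the sketch below computes the F statistic from a subjects-by-conditions table. It is a textbook, uncorrected formulation with toy data; it is not the software or any sphericity correction the authors may have used, and it does not compute a p-value. The function name and data are hypothetical.

```python
def rm_anova_oneway(scores):
    """One-way repeated-measures ANOVA.

    scores[s][c] is the score of subject s in condition c.
    Returns (F, df_condition, df_error), with no sphericity correction.
    """
    n = len(scores)        # subjects
    k = len(scores[0])     # conditions
    if any(len(row) != k for row in scores):
        raise ValueError("every subject needs one score per condition")

    grand = sum(sum(row) for row in scores) / (n * k)
    cond_means = [sum(row[c] for row in scores) / n for c in range(k)]
    subj_means = [sum(row) / k for row in scores]

    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_cond - ss_subj  # condition-by-subject residual

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f_stat = (ss_cond / df_cond) / (ss_error / df_error)
    return f_stat, df_cond, df_error


# Toy data: 3 subjects x 3 conditions (made up, not study data).
toy = [
    [1, 2, 4],
    [2, 4, 5],
    [3, 3, 6],
]
f_stat, df_cond, df_error = rm_anova_oneway(toy)
print(round(f_stat, 3), df_cond, df_error)  # 21.0 2 4
```

Removing the between-subject sum of squares from the error term is what distinguishes this from a between-groups ANOVA and is why within-participant designs can detect effects with small samples.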

Figure 3.

Mean EASall performance with each filter band is displayed for quiet and multitalker babble as unfilled and gray-filled bars, respectively (A). Mean bimodal performances in quiet and in noise are represented by the dotted-black and solid-gray lines, respectively. Error bars represent 1 standard error of the mean. Individual and mean additional benefit of the acoustic hearing in the implanted ear (EASall – bimodal for each filter band in the implanted ear) are shown using unfilled and gray-filled box plots for quiet and multitalker babble, respectively (B). Box plots in B include horizontal lines at the median values and “+” signs at the mean values, with the whiskers representing the minimal and maximal benefit values. The horizontal dotted line in B represents no benefit or change in performance with added acoustic hearing. N = 7 in quiet and N = 5 in multitalker babble.

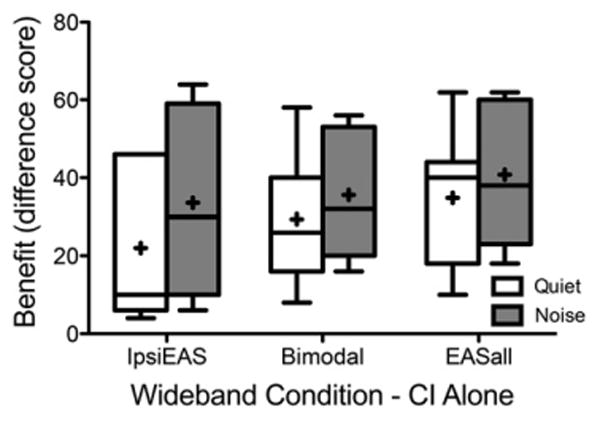

Figure 4 shows individual and average acoustic benefit in the ipsiEAS, bimodal, and EASall conditions, all with wideband acoustic signals, compared with the CI-alone performance in both quiet and multitalker babble. There was no significant difference between acoustic benefit in the bimodal and EASall conditions in quiet [t(6) = 0.88, p < 0.410], but acoustic benefit in the EASall condition was significantly greater than benefit in the ipsiEAS condition in quiet [t(6) = 2.79, p < 0.032]. There was no significant difference between the acoustic benefit in EASall and bimodal or ipsiEAS in multitalker babble [t(4) = 0.65, p < 0.550; t(4) = 0.20, p < 0.851; respectively]. At first sight, it appears that acoustic benefit was larger in multitalker babble than in quiet. When removing the two participants who only participated in quiet, however, we observed no difference between acoustic benefit in quiet and multitalker babble. In summary, these results indicate limited to no additional benefit from acoustic hearing in the implanted ear when added to the bimodal condition in quiet and in multitalker babble originating from a single loudspeaker.
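The pairwise comparisons above are reported as t statistics with n − 1 degrees of freedom (6 in quiet, 4 in babble), which is the form of a paired comparison across participants. The sketch below computes a paired t statistic on made-up scores; the study's Fisher least significant difference procedure may derive its error term differently, so this is an illustration of the general form only.

```python
import math
import statistics


def paired_t(scores_a, scores_b):
    """Paired t statistic and degrees of freedom for two within-participant conditions."""
    if len(scores_a) != len(scores_b):
        raise ValueError("both conditions need one score per participant")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1


# Made-up scores for seven participants (not study data).
easall_toy = [52, 64, 46, 74, 32, 54, 66]
bimodal_toy = [50, 60, 40, 70, 30, 50, 60]
t_stat, df = paired_t(easall_toy, bimodal_toy)
print(round(t_stat, 3), df)  # 6.481 6
```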

Figure 4.

Individual and mean acoustic benefit for the wideband filters of IpsiEAS, bimodal, and EASall in unfilled box plots for testing in quiet and gray-filled box plots for testing in multitalker babble. Box plots include horizontal lines at the median values and “+” signs at the mean values, with the whiskers representing the minimal and maximal benefit values. N = 7 in quiet and N = 5 in multitalker babble.

Discussion

Does Acoustic Hearing in the CI Ear Provide as Much Benefit as an Equivalent Acoustic Band in the Nonimplanted Ear?

Based on the results of testing in quiet and in multitalker babble, the same speech perception benefit can be obtained with equivalent acoustic hearing bands in either ear. In other words, if a CI recipient has aidable hearing (≤80 dB HL) up to 500 Hz in both ears, he or she should obtain equal speech perception benefit via acoustic amplification in either ear. The only exception to equal benefit from each ear is the wideband condition in the nonimplanted ear (bimodal) providing more benefit than the implanted ear in quiet. Statistically, the difference in acoustic benefit between the wideband conditions of ipsiEAS and bimodal approached significance in quiet [t(6) = 2.20, p < 0.071]. The most likely reason for this difference is related to audibility. Mean pure-tone thresholds at 750 and 1000 Hz in the nonimplanted ear were aidable (≤80 dB HL), whereas they were not in the implanted ear as can be seen in Figure 1.

Additionally, examination of individual data revealed that each participant obtained similar benefit from the acoustic hearing in each of the ears except for P7, who received much more benefit from the nonimplanted ear. However, P7 had the largest asymmetry in low-frequency pure-tone thresholds. This finding is not unexpected, as previous research has shown correlations between pure-tone thresholds and acoustic benefit (Mok et al, 2010; Sheffield and Zeng, 2012; Yoon et al, 2012; Yang and Zeng, 2013). Additionally, two participants in quiet (P6, P7) and one in multitalker babble (P7) gained no benefit from the acoustic hearing in the implanted ear (<6 percentage points). This finding is consistent with results in the literature showing that up to 55% of participants gain no speech perception benefit from acoustic hearing.

As stated in the Introduction, we anticipated two likely outcomes when comparing acoustic benefit obtained between ears. The first was that similar benefit would be obtained for equivalent acoustic bands in each ear. The second was that more benefit would be obtained from acoustic hearing in the implanted ear because integration of cues across the CI and acoustic signals would be easier in the same ear. The current results support the first hypothesis, that equal benefit can be obtained from each ear insofar as residual hearing is fairly symmetrical. It is possible that this result occurred because participants are only extracting the cues from the different signals or glimpsing them but not integrating cues between the two signals. Further research is necessary to determine the contributions of extraction and integration of speech cues across the CI and acoustic signals in CI recipients with hearing preservation.

Another consideration in the interpretation of the current dataset is that no attempts were made to manipulate the degree of electric and acoustic overlap. That is, all electrodes were left active with full-frequency allocation from 188–7938 Hz for five of the seven participants and P3 and P5 programmed with their everyday CI starting frequencies of 563 and 813 Hz, respectively. This decision was made based on what the patient was most accustomed to for the everyday program with respect to the degree of electric and acoustic overlap. This degree of overlap, however, may not have yielded the greatest degree of EAS benefit in the implanted ear based on conflicting results in the literature (Vermeire et al, 2008; Zhang, Spahr, et al, 2010; Karsten et al, 2013). Thus, further research is needed to determine the optimal degree of acoustic and electric overlap for maximal performance in the implanted ear.

Does Acoustic Hearing in Both Ears Provide More Benefit than Hearing in Just One Ear?

The current results indicate no additional speech perception benefit from acoustic hearing in the implanted ear (hearing preservation) when added to the bimodal condition. Previous research, however, has found significant additional speech perception benefit from the acoustic hearing in the implanted ear (Dunn et al, 2010; Rader et al, 2013; Gifford et al, 2014). That benefit was found when testing in semidiffuse noise environments with speech at 0° azimuth. Thus, it is possible that the additional benefit from the residual acoustic hearing in the implanted ear is obtained only in an environment with semidiffuse noise or separation of the signal and the noise and not when the signal and noise are spatially coincident, as was the case in this study. Examining the results of the participants of the current study in semidiffuse noise could provide strong evidence that the additional benefit requires spatial separation of signal and noise.

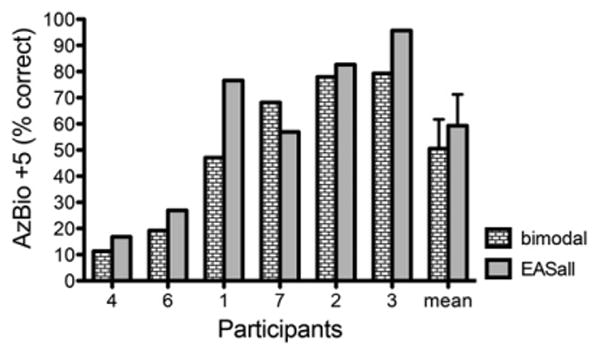

Figure 5 shows individual and mean AzBio sentence recognition performance in the EASall and bimodal conditions for six of the participants in semidiffuse restaurant noise at a +5 dB SNR. P5 did not complete this testing because of floor effects. Five of the six participants performed better in the EASall condition than in the bimodal condition, and the mean difference was 8.8 percentage points, similar to the additional benefit reported for the larger sample in Gifford et al (2013), in which the benefit was statistically significant. Given the small sample size and the fact that these data were included in the Gifford et al (2013) study, statistical analyses were not completed.

Figure 5.

Individual participant and mean performance for AzBio sentence recognition in semidiffuse restaurant noise for the bimodal and EASall conditions. Error bars represent 1 standard error of the mean.

In summary, the additional benefit of bilateral acoustic hearing reported in the literature for semidiffuse background noise was not found with spatially coincident signal and noise. It is perhaps not surprising that bilateral acoustic hearing provides no additional benefit over acoustic hearing in the nonimplanted ear alone when signal and noise are spatially coincident, as the same acoustic speech features are likely present in the acoustic hearing in each ear, as long as hearing is roughly symmetrical. Thus, the benefit of adding the acoustic hearing in the implanted ear is likely obtained through binaural cues. Dunn et al (2010) suggested that bilateral low-frequency acoustic hearing might allow for better use of interaural time difference (ITD) and interaural level difference cues to squelch the spatially separated signal and noise. Gifford et al (2013) found evidence to support this explanation by showing that the difference between EASall performance and bimodal performance in semidiffuse noise correlated with individual ITD thresholds.
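As a rough illustration of why spatially coincident signal and noise leave no ITD to exploit, the sketch below uses Woodworth's spherical-head approximation, ITD ≈ (r/c)(θ + sin θ). The formula, the head radius of 8.75 cm, the speed of sound of 343 m/s, and the function name are assumptions introduced here for illustration only; none of them is taken from this study or from the cited work.

```python
import math

HEAD_RADIUS_M = 0.0875      # assumed average head radius (illustrative value)
SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound in air


def woodworth_itd_us(azimuth_deg: float) -> float:
    """Approximate ITD in microseconds for a far-field source at 0 to 90 degrees azimuth."""
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth_deg must be between 0 and 90")
    theta = math.radians(azimuth_deg)
    # Woodworth spherical-head model: path-length difference divided by speed of sound.
    itd_s = (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))
    return itd_s * 1e6


if __name__ == "__main__":
    for az in (0, 30, 60, 90):
        print(f"{az:>2} deg azimuth: {woodworth_itd_us(az):6.1f} microseconds")
```

Under these assumptions, a source at 0° azimuth yields an ITD of zero, so speech and noise presented from the same loudspeaker in front of the listener cannot be separated on the basis of ITD, whereas a laterally displaced noise source produces an ITD of several hundred microseconds.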

Study Limitations

Several limitations of this study should be noted. First, because of the small sample size, it is difficult to generalize the data and conclusions to all CI recipients. However, none of the seven participants gained more than 10 percentage points of benefit from the addition of the acoustic hearing in the implanted ear to the bimodal configuration in any condition (EASall – bimodal). Additionally, previous research has found no additional benefit from a hearing aid in the implanted ear for CNC word recognition in quiet (Gifford et al, 2013). Thus, it is unlikely that a large portion of CI recipients will gain additional benefit for CNC word recognition in quiet from a hearing aid in the implanted ear.
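The difference score referred to above (EASall – bimodal) can be sketched as follows. This is a minimal illustration, not the analysis code used in the study: the function name is hypothetical, and the scores in the usage section are placeholder values rather than participant data.

```python
from math import sqrt
from statistics import mean, stdev


def benefit_summary(easall, bimodal):
    """Return per-participant benefit (EASall - bimodal), its mean, and its standard error."""
    if len(easall) != len(bimodal) or len(easall) < 2:
        raise ValueError("need paired scores from at least two participants")
    diffs = [e - b for e, b in zip(easall, bimodal)]
    # Standard error of the mean: sample standard deviation divided by sqrt(n).
    sem = stdev(diffs) / sqrt(len(diffs))
    return diffs, mean(diffs), sem


if __name__ == "__main__":
    # Placeholder percent-correct scores, NOT data from this study.
    easall_scores = [60.0, 70.0, 50.0]
    bimodal_scores = [50.0, 65.0, 48.0]
    diffs, avg, sem = benefit_summary(easall_scores, bimodal_scores)
    print("benefit per participant:", diffs)
    print("mean benefit:", round(avg, 1), "SEM:", round(sem, 1))
    print("any participant above 10 points:", any(d > 10 for d in diffs))
```

Working with paired differences keeps each participant as his or her own control, which matches the within-participant design of the study.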

It is also important to note that speech perception benefit was assessed only with monosyllabic words. It is possible that speech tests with more or less context (sentence recognition or phoneme recognition, respectively) would reveal different results. Additionally, the CNC word lists have not previously been shown to be equivalent in multitalker babble. Because the CNC lists are designed with equal proportions of phonemes across lists, it is possible that they are more equivalent in noise than other monosyllabic word tests (Skinner et al, 2006). To address this, we tested the equivalency of the 10 CNC word lists in six adults with normal hearing, with three tested at +10 dB SNR (as used in this study) and three at 0 dB SNR. Results revealed equivalency similar to that reported in CI recipients in quiet, with 92% of all scores falling within test-retest variability for 50-word monosyllabic lists (Thornton and Raffin, 1978; Skinner et al, 2006).
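To give a sense of the scale of test-retest variability for a 50-word list, the sketch below treats each score as a binomial variable and applies a plain normal approximation to the difference between two independent scores. This is an illustrative simplification and an assumption of this example; it does not reproduce the procedure or the critical-difference tables of Thornton and Raffin (1978), and the function name is hypothetical.

```python
from math import sqrt


def critical_difference_pct(proportion_correct: float, n_items: int = 50, z: float = 1.96) -> float:
    """Approximate 95% critical difference, in percentage points, between two list scores."""
    if not 0.0 <= proportion_correct <= 1.0:
        raise ValueError("proportion_correct must be between 0 and 1")
    if n_items <= 0:
        raise ValueError("n_items must be positive")
    # Binomial variance of one score is p(1 - p)/n; two independent scores double it.
    sd_difference = sqrt(2.0 * proportion_correct * (1.0 - proportion_correct) / n_items)
    return 100.0 * z * sd_difference


if __name__ == "__main__":
    for p in (0.2, 0.5, 0.8):
        print(f"true score {p:.0%}: +/- {critical_difference_pct(p):.1f} percentage points")
```

Under this approximation, two 50-word scores near 50% correct would need to differ by roughly 20 percentage points before the difference exceeds chance variability, and the range narrows as scores approach 0% or 100%.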

Conclusions

In conclusion, residual acoustic hearing in the implanted or nonimplanted ear provides equal speech perception benefit, provided that aided audibility is achievable. Acoustic hearing in the nonimplanted ear provided more benefit in quiet than acoustic hearing in the implanted ear in the wideband filter condition in this study, most likely because thresholds above 500 Hz were better in the nonimplanted ears than in the implanted ears. From a practical standpoint, if a unilateral CI recipient can wear only one hearing aid, the ear with the better residual acoustic hearing should be amplified. However, on the basis of previous research showing significant benefit with bilateral acoustic hearing and a CI (also see Figure 5), efforts should be made to provide acoustic amplification to both ears for frequencies with thresholds up to 80 dB HL. It is also important to consider that, when testing for speech perception benefit from adding the implanted ear acoustic hearing (hearing preservation) to the bimodal condition (EASall – bimodal), the testing environment should include spatially separated signal and noise to allow use of ITD cues, as single-loudspeaker testing will not reveal the benefits associated with hearing preservation cochlear implantation. Finally, further research on electric and acoustic hearing is warranted to thoroughly investigate this hearing configuration and to develop clinical recommendations for EAS fittings.

Acknowledgments

This research was supported by grant R01 DC009404 from the National Institute on Deafness and Other Communication Disorders, National Institutes of Health (NIH).

Data collection and management via REDCap were supported by a Vanderbilt Institute for Clinical and Translational Research grant (UL1 TR000445 from NCATS/NIH).

The senior author is a member of the Audiology Advisory Board for Advanced Bionics, Cochlear Americas, and MED-EL.

Abbreviations

- bimodal condition: CI + nonimplanted ear acoustic hearing

- CI: cochlear implant

- CNC: consonant-nucleus-consonant

- EASall: CI + bilateral acoustic hearing

- ipsiEAS: ipsilateral electric and acoustic stimulation

- ITD: interaural time difference

- NAL-NL1: National Acoustic Laboratories' nonlinear fitting procedure, version 1

- SNR: signal-to-noise ratio

Footnotes

Portions of these data were presented at the American Auditory Society meeting in Scottsdale, AZ, March 8, 2012.

References

- Balkany TJ, Connell SS, Hodges AV, et al. Conservation of residual acoustic hearing after cochlear implantation. Otol Neurotol. 2006;27(8):1083–1088. doi: 10.1097/01.mao.0000244355.34577.85.

- Balkany T, Hodges A, Menapace C, et al. Nucleus freedom North American clinical trial. Otolaryngol Head Neck Surg. 2007;136(5):757–762. doi: 10.1016/j.otohns.2007.01.006.

- Brown CA, Bacon SP. Achieving electric-acoustic benefit with a modulated tone. Ear Hear. 2009;30(5):489–493. doi: 10.1097/AUD.0b013e3181ab2b87.

- Byrne D, Dillon H, Ching T, Katsch R, Keidser G. NAL-NL1 procedure for fitting nonlinear hearing aids: characteristics and comparisons with other procedures. J Am Acad Audiol. 2001;12(1):37–51.

- Cullington HE, Zeng FG. Bimodal hearing benefit for speech recognition with competing voice in cochlear implant subject with normal hearing in contralateral ear. Ear Hear. 2010;31(1):70–73. doi: 10.1097/AUD.0b013e3181bc7722.

- Dorman MF, Gifford RH. Combining acoustic and electric stimulation in the service of speech recognition. Int J Audiol. 2010;49(12):912–919. doi: 10.3109/14992027.2010.509113.

- Dunn CC, Perreau A, Gantz B, Tyler RS. Benefits of localization and speech perception with multiple noise sources in listeners with a short-electrode cochlear implant. J Am Acad Audiol. 2010;21(1):44–51. doi: 10.3766/jaaa.21.1.6.

- Dunn CC, Tyler RS, Witt SA. Benefit of wearing a hearing aid on the unimplanted ear in adult users of a cochlear implant. J Speech Lang Hear Res. 2005;48(3):668–680. doi: 10.1044/1092-4388(2005/046).

- Gantz BJ, Hansen MR, Turner CW, Oleson JJ, Reiss LA, Parkinson AJ. Hybrid 10 clinical trial: preliminary results. Audiol Neurootol. 2009;14(Suppl 1):32–38. doi: 10.1159/000206493.

- Gifford RH, Dorman MF, McKarns SA, Spahr AJ. Combined electric and contralateral acoustic hearing: word and sentence recognition with bimodal hearing. J Speech Lang Hear Res. 2007;50(4):835–843. doi: 10.1044/1092-4388(2007/058).

- Gifford RH, Dorman MF, Sheffield SW, Teece K, Olund AP. Availability of binaural cues for bilateral implant recipients and bimodal listeners with and without preserved hearing in the implanted ear. Audiol Neurootol. 2014;19(1):57–71. doi: 10.1159/000355700.

- Gifford RH, Dorman MF, Skarzynski H, et al. Cochlear implantation with hearing preservation yields significant benefit for speech recognition in complex listening environments. Ear Hear. 2013;34(4):413–425. doi: 10.1097/AUD.0b013e31827e8163.

- Gstoettner WK, van de Heyning P, O'Connor AF, et al. Electric acoustic stimulation of the auditory system: results of a multicentre investigation. Acta Otolaryngol. 2008;128(9):968–975. doi: 10.1080/00016480701805471.

- Karsten SA, Turner CW, Brown CJ, Jeon EK, Abbas PJ, Gantz BJ. Optimizing the combination of acoustic and electric hearing in the implanted ear. Ear Hear. 2013;34(2):142–150. doi: 10.1097/AUD.0b013e318269ce87.

- Kong YY, Braida LD. Cross-frequency integration for consonant and vowel identification in bimodal hearing. J Speech Lang Hear Res. 2011;54(3):959–980. doi: 10.1044/1092-4388(2010/10-0197).

- Lenarz T, Stöver T, Buechner A, Lesinski-Schiedat A, Patrick J, Pesch J. Hearing conservation surgery using the Hybrid-L electrode. Results from the first clinical trial at the Medical University of Hannover. Audiol Neurootol. 2009;14(Suppl 1):22–31. doi: 10.1159/000206492.

- Li N, Loizou PC. A glimpsing account for the benefit of simulated combined acoustic and electric hearing. J Acoust Soc Am. 2008;123(4):2287–2294. doi: 10.1121/1.2839013.

- Mok M, Galvin KL, Dowell RC, McKay CM. Speech perception benefit for children with a cochlear implant and a hearing aid in opposite ears and children with bilateral cochlear implants. Audiol Neurootol. 2010;15(1):44–56. doi: 10.1159/000219487.

- Mok M, Grayden D, Dowell RC, Lawrence D. Speech perception for adults who use hearing aids in conjunction with cochlear implants in opposite ears. J Speech Lang Hear Res. 2006;49(2):338–351. doi: 10.1044/1092-4388(2006/027).

- Peterson GE, Lehiste I. Revised CNC lists for auditory tests. J Speech Hear Disord. 1962;27:62–70. doi: 10.1044/jshd.2701.62.

- Qin MK, Oxenham AJ. Effects of introducing unprocessed low-frequency information on the reception of envelope-vocoder processed speech. J Acoust Soc Am. 2006;119(4):2417–2426. doi: 10.1121/1.2178719.

- Rader T, Fastl H, Baumann U. Speech perception with combined electric-acoustic stimulation and bilateral cochlear implants in a multisource noise field. Ear Hear. 2013;34(3):324–332. doi: 10.1097/AUD.0b013e318272f189.

- Sheffield BM, Zeng FG. The relative phonetic contributions of a cochlear implant and residual acoustic hearing to bimodal speech perception. J Acoust Soc Am. 2012;131(1):518–530. doi: 10.1121/1.3662074.

- Sheffield SW, Gifford RH. The benefits of bimodal hearing: effect of frequency region and acoustic bandwidth. Audiol Neurootol. 2014;19(3):151–163. doi: 10.1159/000357588.

- Skarzynski H, Lorens A, Matusiak M, Porowski M, Skarzynski PH, James CJ. Partial deafness treatment with the nucleus straight research array cochlear implant. Audiol Neurootol. 2012;17(2):82–91. doi: 10.1159/000329366.

- Skinner MW, Holden LK, Fourakis MS, et al. Evaluation of equivalency in two recordings of monosyllabic words. J Am Acad Audiol. 2006;17(5):350–366. doi: 10.3766/jaaa.17.5.5.

- Thornton AR, Raffin MJ. Speech-discrimination scores modeled as a binomial variable. J Speech Hear Res. 1978;21(3):507–518. doi: 10.1044/jshr.2103.507.

- Vermeire K, Anderson I, Flynn M, Van de Heyning P. The influence of different speech processor and hearing aid settings on speech perception outcomes in electric acoustic stimulation patients. Ear Hear. 2008;29(1):76–86. doi: 10.1097/AUD.0b013e31815d6326.

- Visram AS, Azadpour M, Kluk K, McKay CM. Beneficial acoustic speech cues for cochlear implant users with residual acoustic hearing. J Acoust Soc Am. 2012;131(5):4042–4050. doi: 10.1121/1.3699191.

- Wilson RH, Bell TS, Koslowski JA. Learning effects associated with repeated word-recognition measures using sentence material. J Rehabil Res Dev. 2003;40(4):329–336. doi: 10.1682/jrrd.2003.07.0329.

- Yang HI, Zeng FG. Reduced acoustic and electric integration in concurrent-vowel recognition. Sci Rep. 2013;3:1419. doi: 10.1038/srep01419.

- Yoon YS, Li Y, Fu QJ. Speech recognition and acoustic features in combined electric and acoustic stimulation. J Speech Lang Hear Res. 2012;55(1):105–124. doi: 10.1044/1092-4388(2011/10-0325).

- Zhang T, Dorman MF, Spahr AJ. Information from the voice fundamental frequency (F0) region accounts for the majority of the benefit when acoustic stimulation is added to electric stimulation. Ear Hear. 2010;31(1):63–69. doi: 10.1097/aud.0b013e3181b7190c.

- Zhang T, Spahr AJ, Dorman MF. Frequency overlap between electric and acoustic stimulation and speech-perception benefit in patients with combined electric and acoustic stimulation. Ear Hear. 2010;31(2):195–201. doi: 10.1097/AUD.0b013e3181c4758d.