Abstract

Study Objective

We evaluated the short- and long-term impact of a computerized provider order entry (CPOE)-based patient verification intervention to reduce wrong-patient orders in five emergency departments.

Methods

A patient verification dialog appeared at the beginning of each ordering session, requiring providers to confirm the patient's identity after a mandatory 2.5-second delay. Using the retract-and-reorder technique, we estimated the rate of wrong-patient orders before and after implementation of the intervention. We conducted a quasi-experimental study with short- and long-term follow-up, using both historical and parallel controls. We also measured the time providers spent on the verification dialog and the reasons they gave for discontinuing ordering sessions as a result of the intervention.

Results

Wrong-patient orders were reduced by 30% immediately after implementation of the intervention. The reduction was similar when inpatients were used as a parallel control. After two years, the rate of wrong-patient orders remained 24.8% lower than before the intervention. The mean viewing time of the patient verification dialog was 4.2 seconds (SD = 4.0), and it was longer when providers indicated that the wrong patient had been selected (4.9 versus 4.1 seconds). Although each display of the dialog took only seconds, the large number of displays meant that approximately 1.5 hours of physician time were spent for each retract-and-reorder event prevented.

Conclusion

A CPOE-based patient verification system led to a moderate reduction in wrong-patient orders that was sustained over time. Interception of wrong-patient orders at the time of entry is an important step in reducing these errors.

Introduction

Background

Although the safety benefits of computerized provider order entry (CPOE) are well documented,1,2 the use of CPOE has been associated with additional types of errors, including order entry on the wrong patient.3–9 Before the advent of CPOE systems, paper charts offered a variety of visual and tactile cues—e.g., the thickness of the chart, the color of various forms and labels, the distinctive penmanship of consultants’ notes, and the placement of the chart near the patient’s bed—that could have subtly communicated to practitioners that they were in the correct chart. In the electronic health record (EHR), most of these cues are absent, and practitioners open charts by selecting from dynamic lists of patients that may not fit on the computer screen.

The Joint Commission highlighted the problem of patient identification in its first National Patient Safety Goal (NPSG.01.01.01), which requires healthcare providers to use at least two patient identifiers whenever taking blood samples or administering medications or blood products.10 There is no comparable requirement for patient verification when medication or other orders are placed.

The frequency of wrong-patient order entry in the emergency department (ED) is not well established. A 2013 study by Adelman et al. estimated that approximately 1 in 1000 medication orders were placed for the wrong patient.11 In a cross-sectional analysis of all ED errors reported to the voluntary online MEDMARX system between 2000 and 2004, Pham et al. found that wrong-patient errors were three times more likely in EDs using CPOE than in EDs using paper ordering.12 Other researchers have shown that medication errors are more frequent in overcrowded EDs,13 but these results may not generalize to wrong-patient orders. Little is known about the factors that may increase the frequency of wrong-patient orders.

Importance

Wrong-patient order entry can be fatal. In March 2011, the Institute for Safe Medication Practices reported a case where an ED physician used a CPOE system to order the paralytic agent vecuronium on the wrong patient. The drug was administered to a patient who was not intubated and caused the patient’s death.14

It is difficult to quantify what proportion of wrong-patient orders are intercepted before they reach the patient, and evidence is scarce on how often wrong-patient orders lead to severe outcomes, such as lethal medication errors or unnecessary radiation exposure from x-rays performed on the wrong patient. Effective automated interventions are needed to reduce the burden of identifying and eliminating wrong-patient orders in CPOE.

Goals of This Investigation

To reduce wrong-patient orders, we implemented a patient verification module in a commercial CPOE system at five EDs in New York City. A dialog box was displayed at the beginning of every ordering session, requiring providers to verify the patient for whom they were placing an order. The purpose of this study was to evaluate the short- and long-term impact of this intervention on the rate of intercepted wrong-patient orders and to assess the additional time practitioners spent placing orders as a result of the intervention.

Methods

Study Design, Settings and Selection of Participants

In this quasi-experimental study, we obtained monthly measurements of the wrong-patient order rate before and after implementation of the CPOE-based patient verification process. Five EDs were included: two adult EDs, two pediatric EDs, and one combined ED. The EDs served a socioeconomically, racially, and ethnically diverse population in New York City and had a combined annual volume of 250,000 visits. The EDs supported pediatric and emergency medicine residency programs and a pediatric emergency medicine fellowship program.

The patient verification intervention was implemented in the EDs during May 2011. A commercial EHR (Allscripts Sunrise, Allscripts Corp., Chicago, IL), including CPOE and physician documentation, had been fully deployed at the five sites before December 2010. The study sample included all orders written at these sites from January 2011 through April 2013. The pre-intervention phase (P1) included orders written from January to April 2011. We used two post-intervention periods. To assess the short-term impact of the intervention, we used orders written in the four months following the intervention (June to September 2011, P2); we excluded May 2011 from this analysis because the module was being rolled out gradually during that month. To evaluate the long-term effect of the intervention, we used orders written between January and April 2013 (P3).

In a secondary analysis, we used inpatients at the same five facilities as a control group. The patient verification module was not active in the inpatient settings during the study period. Although ED and inpatient settings differ in process, workflow, and frequency of wrong-patient orders, longitudinal data from the inpatient settings allowed a parallel-controlled before-after design that could help identify confounding secular trends, such as concurrent hospital-wide quality initiatives.

This study was conducted with approval from the institutional review boards of Columbia University Medical Center and Weill Cornell Medical College.

Intervention and Measurements

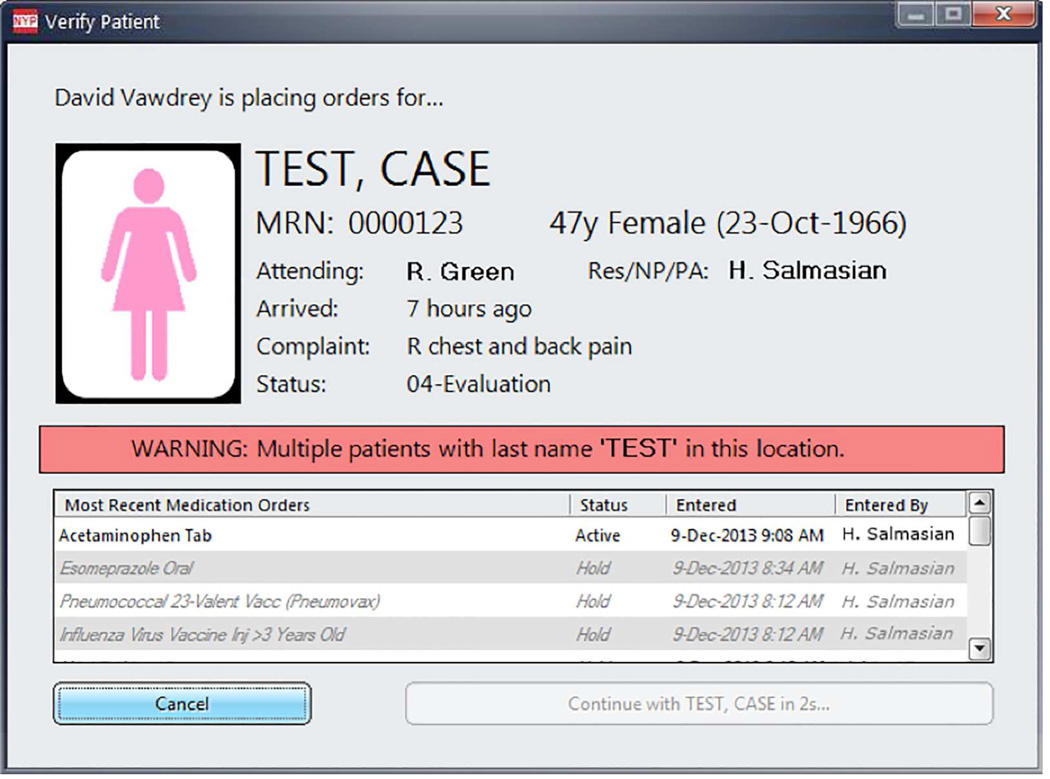

As part of a quality improvement initiative, a custom patient verification module was integrated into the CPOE system with the intent of helping practitioners intercept wrong-patient selection errors prior to order entry. A screenshot of the patient verification dialog is shown in Figure 1. From May 2011 onward, the patient verification dialog was activated whenever an order-entry session was invoked. Three patient identifiers were prominently displayed: full name, birth date, and medical record number. Additional information that could facilitate patient identification was also included, such as ED length of stay, chief complaint, bed location, and recent medication orders. Although patient photographs were not available in the patient verification dialog at the time of the study, a male or female icon was displayed according to the patient's gender. A warning message appeared if another patient in the same ED unit had the same last name.

Figure 1.

Screenshot of the patient verification dialog that appeared prior to order entry. The “Continue” button is disabled for 2.5 seconds when the dialog is displayed.

To prevent practitioners from immediately closing the patient verification dialog, the Continue button was disabled for 2.5 seconds when the form first appeared; the delay duration was selected based on feedback from clinicians in a preliminary usability study. There was no time delay for the Cancel button. If clinicians canceled out of an order-entry session attempt, they were prompted to select a reason for canceling (Supplement Figure 1).
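The gating behavior described above can be sketched as a small state check. This is a hypothetical Python illustration, not the vendor's implementation: the class name, the use of a clock measured in seconds, and every cancellation label except "Wrong Patient Selected" are assumptions.

```python
from dataclasses import dataclass

# Delay before "Continue" becomes active, as described in the text.
CONTINUE_DELAY_S = 2.5

# Reasons offered after "Cancel". Only "Wrong Patient Selected" is named in
# the text; the other three labels are hypothetical placeholders.
CANCEL_REASONS = (
    "Wrong Patient Selected",
    "Clicked order entry by accident",
    "Interrupted",
    "Other",
)


@dataclass
class VerificationDialog:
    opened_at: float  # timestamp in seconds, e.g. from time.monotonic()

    def continue_enabled(self, now: float) -> bool:
        """Continue stays disabled until 2.5 s have elapsed since opening."""
        return now - self.opened_at >= CONTINUE_DELAY_S

    def press_continue(self, now: float) -> bool:
        """Return True if the order-entry session may open at time `now`."""
        return self.continue_enabled(now)

    def press_cancel(self, reason: str) -> str:
        """Cancel has no delay, but the user must pick a reason for backing out."""
        if reason not in CANCEL_REASONS:
            raise ValueError(f"unknown cancellation reason: {reason!r}")
        return reason
```

Usage: create `VerificationDialog(opened_at=time.monotonic())` when the dialog appears and call `press_continue(time.monotonic())` on each click; a click before 2.5 s simply returns `False`. Keeping Cancel undelayed mirrors the text: only proceeding is slowed down, never backing out.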

The EHR system was fully implemented by January 2011, and all order entry at the study sites was performed electronically. A record of each order was obtained from EHR system logs, and the actions providers took within the patient verification module were also recorded electronically. We used these log data for our analysis.15

Outcome Measures

The data set included all orders placed through the CPOE system, including orders for medications, diagnostic tests, and other services such as nursing orders. Our primary outcome was the rate of intercepted wrong-patient orders (per 1000 orders), calculated using the retract-and-reorder method described by Adelman et al.11 This method identifies orders that were placed for a patient and then rapidly discontinued by the same practitioner (the retract event), and then checks whether the same provider entered an identical order for a different patient within a short period after the retract event (the reorder event). Adelman et al. evaluated the accuracy of the retract-and-reorder method by interviewing providers after a retract-and-reorder event occurred. They defined the method's positive predictive value (PPV) as the percentage of retract-and-reorder events that the interviewed providers attributed to a wrong-patient order, and estimated a PPV of 76.2% (95% CI 70.6% to 81.9%).11 We used the retract-and-reorder method to measure the rate of intercepted wrong-patient orders during the pre- and post-implementation periods.
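The detection logic described above can be sketched as follows. This is a minimal, hypothetical Python illustration, not the authors' or Adelman et al.'s code: the `Order` record and its field names are assumptions, "identical order" is reduced to matching an `item` string, and the 10-minute retract and reorder windows are assumed values standing in for the "short period of time" in the text.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Optional, Tuple


@dataclass
class Order:
    order_id: int
    provider: str
    patient: str
    item: str                                # orderable, e.g. "CBC" (assumed field)
    placed: datetime
    discontinued: Optional[datetime] = None
    discontinued_by: Optional[str] = None


def retract_and_reorder(
    orders: List[Order],
    retract_window: timedelta = timedelta(minutes=10),   # assumed threshold
    reorder_window: timedelta = timedelta(minutes=10),   # assumed threshold
) -> List[Tuple[int, int]]:
    """Return (retracted_order_id, reorder_id) pairs."""
    by_provider: Dict[str, List[Order]] = {}
    for o in orders:
        by_provider.setdefault(o.provider, []).append(o)

    events = []
    for provider, own in by_provider.items():
        own.sort(key=lambda o: o.placed)
        for o in own:
            # Retract: discontinued quickly by the same provider who placed it.
            if o.discontinued is None or o.discontinued_by != provider:
                continue
            if o.discontinued - o.placed > retract_window:
                continue
            # Reorder: same item, same provider, different patient, soon after.
            for r in own:
                if (r.item == o.item and r.patient != o.patient
                        and o.discontinued <= r.placed <= o.discontinued + reorder_window):
                    events.append((o.order_id, r.order_id))
                    break
    return events


def rate_per_1000(n_events: int, n_orders: int) -> float:
    """Retract-and-reorder events per 1000 orders."""
    return 1000.0 * n_events / n_orders
```

Grouping by provider first keeps the search local to one clinician's orders, which matches the "same provider" requirement for both the retract and the reorder.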

For the post-implementation period, we also measured the amount of time spent by practitioners viewing the patient verification module, and the rate of back-out events (i.e., when practitioners pressed the “Cancel” button in the patient verification module and the order-entry session was not activated).

Data Analysis

We used a logistic regression model to adjust for potential confounding variables. These included patient-level variables (sex, age, and race), provider role (attending physician, resident, medical student, or other), and whether the order was placed during a day or night shift. We also compared the impact of the intervention across the five study sites.

In a secondary analysis, we used the rate of wrong-patient orders in the five facilities’ inpatient settings to standardize the rate of wrong-patient orders in the ED data. Standardization was accomplished by dividing the rate of wrong-patient orders in the ED setting for each study period by the “baseline” rate of wrong-patient orders in the inpatient setting within the same period. This was done to eliminate the potential impact of secular trends, assuming that the impact of these secular trends was proportionally the same in inpatient and ED settings. The adjusted rate was then compared across study periods using the chi-squared test.
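The standardization step can be expressed in a few lines. This is a sketch under stated assumptions: it reads the inpatient "baseline" as the inpatient rate observed in the same period, and all counts are hypothetical placeholders rather than study data.

```python
def per_1000(events: int, orders: int) -> float:
    """Wrong-patient (retract-and-reorder) events per 1000 orders."""
    return 1000.0 * events / orders


def standardized_rate(ed_events: int, ed_orders: int,
                      ip_events: int, ip_orders: int) -> float:
    """ED rate divided by the inpatient rate from the same period."""
    return per_1000(ed_events, ed_orders) / per_1000(ip_events, ip_orders)


def adjusted_rr(pre: tuple, post: tuple) -> float:
    """Post/pre ratio of standardized rates.
    Each tuple is (ed_events, ed_orders, ip_events, ip_orders)."""
    return standardized_rate(*post) / standardized_rate(*pre)


# Hypothetical counts for illustration only.
pre = (202, 100_000, 150, 100_000)
post = (141, 100_000, 135, 100_000)   # inpatient rate also fell by 10%
print(round(adjusted_rr(pre, post), 3))
```

If the inpatient rate is unchanged, the adjusted ratio equals the raw ED risk ratio; here a hospital-wide 10% decline is divided out, so the raw ratio of about 0.70 becomes about 0.78. This is exactly the proportional-trend assumption stated in the text.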

We used change-point analysis to examine longitudinal trends in wrong-patient orders and to determine whether the effect of the intervention was sustained over time. Change-point analysis is a statistical method for identifying points at which the properties (e.g., the mean) of a sequence of observations change.16 We used the pruned exact linear time (PELT) method to identify the points at which there was a significant change in the average rate of wrong-patient orders.17
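The authors used the R `changepoint` package. For readers unfamiliar with PELT, here is a minimal Python sketch of the algorithm for changes in mean, using a squared-error segment cost and a fixed penalty per change point. The cost, the penalty value, and the example series are assumptions for illustration; they do not reproduce the study's settings or the package's defaults.

```python
import math
from typing import List, Sequence


def pelt_mean(y: Sequence[float], penalty: float) -> List[int]:
    """Change points (start indices of new segments) minimizing the sum of
    within-segment squared error plus `penalty` per change point."""
    n = len(y)
    # Prefix sums give each segment cost in O(1).
    cs, cs2 = [0.0], [0.0]
    for v in y:
        cs.append(cs[-1] + v)
        cs2.append(cs2[-1] + v * v)

    def cost(s: int, t: int) -> float:
        """Squared error of y[s:t] around its own mean."""
        total = cs[t] - cs[s]
        return (cs2[t] - cs2[s]) - total * total / (t - s)

    best = [-penalty] + [math.inf] * n   # best[t]: optimal penalized cost of y[:t]
    last = [0] * (n + 1)                 # last[t]: start of the final segment of y[:t]
    candidates = [0]
    for t in range(1, n + 1):
        scores = {s: best[s] + cost(s, t) + penalty for s in candidates}
        s_opt = min(scores, key=scores.get)
        best[t], last[t] = scores[s_opt], s_opt
        # Pruning: a candidate whose unpenalized score already exceeds best[t]
        # can never be the optimal last change point at a later time.
        candidates = [s for s in candidates if scores[s] - penalty <= best[t]]
        candidates.append(t)

    # Walk back through `last` to recover the segmentation.
    change_points, t = [], n
    while t > 0:
        t = last[t]
        if t > 0:
            change_points.append(t)
    return sorted(change_points)


monthly_rates = [2.0, 2.1, 1.9, 2.0, 2.1, 1.9, 1.4, 1.5, 1.5, 1.4, 1.5, 1.5]
print(pelt_mean(monthly_rates, penalty=0.1))
```

With this toy series the sketch places a single change point at index 6, where the level drops. A large penalty suppresses all change points, which shows how the penalty controls sensitivity.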

All analyses were conducted using R version 3.0.2. Changes in the rate of wrong-patient orders were reported as risk ratios (RRs) with 95% confidence intervals (CIs), and continuous values were summarized using mean and standard deviation (SD). Change-point analysis was conducted using the ‘changepoint’ package.

Results

During the entire study period (December 2010 through June 2013), a total of 3,457,342 electronic orders were recorded in the five EDs. In the same time period, a total of 5,637 retract-and-reorder events were identified, indicating an estimated average rate for wrong-patient orders of 1.63 per 1000 orders (95% CI = 1.59 to 1.67). Of all orders, 40.6% were for diagnostic procedures (of which 15% were for imaging modalities and 85% for laboratory tests), 21.1% were for medications, and 38.2% were nursing and miscellaneous orders. The majority of orders were placed by resident physicians (50.7%), followed by attending physicians (34.1%), physician assistants (12.1%) and others (3.1%).
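The overall rate and its interval can be reproduced from the counts above. The paper does not state how this CI was computed, so the normal approximation to a Poisson count used here is an assumption, although it does reproduce the reported 1.59 to 1.67.

```python
import math


def rate_per_1000_with_ci(events: int, orders: int, z: float = 1.96):
    """Rate per 1000 orders with a normal-approximation Poisson CI."""
    rate = 1000.0 * events / orders
    se = 1000.0 * math.sqrt(events) / orders   # SD of a Poisson count is sqrt(count)
    return rate, rate - z * se, rate + z * se


rate, lo, hi = rate_per_1000_with_ci(5637, 3_457_342)
print(f"{rate:.2f} per 1000 (95% CI {lo:.2f} to {hi:.2f})")
```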

In terms of short-term impact, compared with the four months preceding the intervention, there was a 30% reduction in the rate of wrong-patient orders in the four months following the intervention (2.02 versus 1.41 per 1000 orders, RR = 0.70, 95% CI = 0.63 – 0.77). Regression analysis indicated that none of the potential confounding variables had a statistically significant association with the rate of wrong-patient orders (Table 1), and the association between the intervention and the primary outcome remained significant after adjustment for these variables (odds ratio 0.72, 95% CI = 0.64 – 0.80). This reduction remained similar when we used inpatient data as a parallel control group (RR = 0.69, 95% CI = 0.62 – 0.76). In the longer-term analysis, we observed a 24.8% decline in wrong-patient orders in the four-month period two years after the intervention, compared with the pre-intervention period (1.53 per 1000 orders, RR = 0.76, 95% CI = 0.69 – 0.83).
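The paper reports rates and risk ratios but not the per-period order counts, so the sketch below uses hypothetical counts chosen only to match the reported rates (1.41 and 2.02 per 1000). The Katz log interval and the Pearson chi-squared test are standard choices assumed here; they are not necessarily the authors' exact computations, and the printed interval will not match the published one.

```python
import math


def risk_ratio(a: int, n1: int, c: int, n2: int, z: float = 1.96):
    """RR of group 1 (a events of n1) vs group 2 (c of n2), with Katz log CI."""
    rr = (a / n1) / (c / n2)
    se = math.sqrt(1 / a - 1 / n1 + 1 / c - 1 / n2)
    return rr, rr * math.exp(-z * se), rr * math.exp(z * se)


def chi2_2x2(a: int, b: int, c: int, d: int):
    """Pearson chi-squared (no continuity correction) for [[a, b], [c, d]], df = 1."""
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(stat / 2))   # upper tail of chi-squared with 1 df
    return stat, p


# Hypothetical counts, not study data.
post_events, post_orders = 423, 300_000
pre_events, pre_orders = 606, 300_000
print(risk_ratio(post_events, post_orders, pre_events, pre_orders))
print(chi2_2x2(post_events, post_orders - post_events,
               pre_events, pre_orders - pre_events))
```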

Table 1.

Results of the logistic regression analysis adjusting the effect of the intervention for potential confounding variables. The regression coefficient and odds ratio for the reference (“baseline”) category of each variable are reported as “N/A”.

| Variable | Value | Coefficient | Odds ratio (95% CI) |
|---|---|---|---|
| Study period | After | −0.333 | 0.72 (0.64 – 0.80) |
| | Before | N/A | N/A |
| Patient sex | Male | −0.0121 | 0.99 (0.89 – 1.10) |
| | Female | N/A | N/A |
| Patient race | Black | 0.137 | 1.15 (0.71 – 1.84) |
| | Hispanic | 0.214 | 1.24 (0.69 – 2.21) |
| | White | 0.155 | 1.17 (0.74 – 1.85) |
| | Other | 0.198 | 1.22 (0.77 – 1.93) |
| | Unknown | −0.003 | 1.00 (0.99 – 1.01) |
| | Asian | N/A | N/A |
| Provider role | Resident | −0.003 | 0.99 (0.89 – 1.12) |
| | Physician Assistant | 0.038 | 1.04 (0.87 – 1.24) |
| | Other | 0.024 | 1.02 (0.75 – 1.39) |
| | Attending | N/A | N/A |
| Time of order | Night shift | −0.006 | 0.99 (0.89 – 1.10) |
| | Day shift | N/A | N/A |
| Intercept | | −8.369 | N/A |
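Each odds ratio in Table 1 is the exponentiated logistic-regression coefficient, which gives a quick consistency check on the table. The sketch below applies this to a subset of rows copied from Table 1.

```python
import math

# Coefficients (log-odds scale) copied from a subset of Table 1 rows.
coefficients = {
    "Study period: After": -0.333,
    "Patient sex: Male": -0.0121,
    "Patient race: Black": 0.137,
    "Patient race: Hispanic": 0.214,
    "Provider role: Physician Assistant": 0.038,
    "Time of order: Night shift": -0.006,
}


def odds_ratio(coef: float) -> float:
    """Odds ratio relative to the reference category: exp(coefficient)."""
    return math.exp(coef)


for name, coef in coefficients.items():
    print(f"{name}: OR = {odds_ratio(coef):.2f}")
```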

Subgroup analysis of the short-term impact of the intervention showed that the rate of wrong-patient orders decreased after implementation at all five study sites. However, at three sites the sample was not large enough for the difference to reach statistical significance. Table 2 summarizes the effect of the intervention at each study site.

Table 2.

Difference in the rate of wrong-patient orders pre- and post-implementation of the intervention, expressed as an odds ratio.

| Study site | Percentage of all orders | Odds ratio (95% CI) |
|---|---|---|
| Adult ED 1 | 34.7% | 0.67 (0.56 – 0.81) |
| Adult ED 2 | 32.6% | 0.59 (0.48 – 0.73) |
| Combined ED | 13.0% | 0.86 (0.68 – 1.09) |
| Pediatric ED 1 | 14.2% | 0.82 (0.62 – 1.09) |
| Pediatric ED 2 | 5.4% | 0.77 (0.49 – 1.21) |

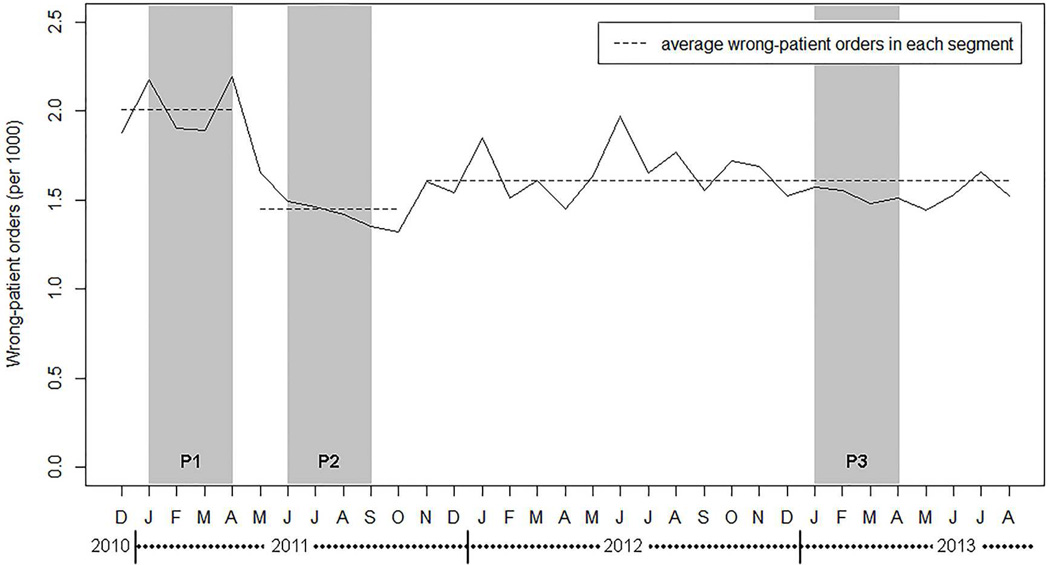

Change-point analysis identified two significant change points in the data: one in May 2011 (coinciding with the intervention) and one in November 2011 (Figure 2). The average rates of wrong-patient orders in the three resulting segments were 2.02 (95% CI = 1.88 – 2.14), 1.48 (95% CI = 1.36 – 1.58), and 1.58 (95% CI = 1.54 – 1.63) per 1000 orders, respectively, and the difference between successive segments was statistically significant in both cases. Using all data points after the implementation of the intervention, we observed a slight decline in the impact of the intervention over time, but this trend was not statistically significant (average monthly decline of 0.003 per 1000 orders, 95% CI = −0.004 – 0.010). These findings support the previous chi-squared analysis and suggest that the immediate drop in the rate of wrong-patient orders was sustained in the long term, although the reduction was slightly larger immediately after the intervention.

Figure 2.

The rate of wrong-patient orders in each month, per 1000 orders. Dashed lines show the average rate of wrong-patient orders in each segment detected in the data using change-point analysis17. Shaded areas show the study periods: pre-intervention (P1), short-term follow-up after intervention (P2), and long-term follow-up after intervention (P3).

We also analyzed back-out events within the first four months after the intervention. There were 481,858 order-entry attempts during this period, and practitioners continued past the patient verification dialog in 456,326 of them (94.7%). In 2,061 cases (0.4%), practitioners did not proceed with the order-entry session because they indicated that the wrong patient had been selected (first button in Supplement Figure 1). The remaining cases were reported as accidental clicks of the order-entry button (second button in Supplement Figure 1, 0.3%), interruptions (third button in Supplement Figure 1, 0.3%), and “Other” (4.3%).
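The back-out proportions follow directly from the reported counts. The short check below uses only numbers stated in the text, and it also expresses the wrong-patient back-outs as a "1 in N" frequency.

```python
attempts = 481_858        # order-entry attempts, first four months
continued = 456_326       # continued past the verification dialog
wrong_patient = 2_061     # backed out with "Wrong Patient Selected"


def pct(part: int, whole: int) -> float:
    return 100.0 * part / whole


other_backouts = attempts - continued - wrong_patient   # accidental, interrupted, other
print(f"continued: {pct(continued, attempts):.1f}%")
print(f"wrong patient: {pct(wrong_patient, attempts):.2f}% "
      f"(1 in {attempts / wrong_patient:.0f})")
print(f"other back-outs: {other_backouts} ({pct(other_backouts, attempts):.1f}%)")
```

The remaining 4.9% of attempts agrees with the 0.3% + 0.3% + 4.3% breakdown reported in the text.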

The mean viewing time of the patient verification module was 4.2 seconds (SD = 4.0). When “Wrong Patient Selected” was specified, the mean viewing time was 4.9 seconds, compared with 4.1 seconds when the correct patient was acknowledged. Average viewing time increased slowly over time, by about 0.1 seconds per year (linear regression coefficient = 3.16 × 10⁻⁴ seconds/day, 95% CI = 2.29 × 10⁻⁴ – 4.05 × 10⁻⁴). Practitioners launched an average of 30 order-entry sessions per provider per 12-hour shift, with a maximum of 90 sessions per shift. On average, the patient verification activity added 2.1 minutes per 12-hour shift, with a maximum of 6.3 minutes. Cumulatively, the patient verification module added 562 hours to CPOE use during the 4-month study period, or about 70 days annually for all ED physicians combined.
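The time-burden figures can be reproduced with simple arithmetic. This sketch assumes, as one plausible reading of the text, that each of the 481,858 order-entry attempts reported for the same four months incurred the mean 4.2-second view, and that "days" means 24-hour days.

```python
MEAN_VIEW_S = 4.2   # mean dialog viewing time reported in the text


def minutes_per_shift(sessions: int, mean_view_s: float = MEAN_VIEW_S) -> float:
    """Added minutes per shift for a given number of order-entry sessions."""
    return sessions * mean_view_s / 60


# Assumption: every order-entry attempt incurred the mean viewing time.
total_hours = 481_858 * MEAN_VIEW_S / 3600
annual_days = total_hours * (12 / 4) / 24   # scale 4 months to 12, in 24-hour days

print(f"{minutes_per_shift(30):.1f} min/shift on average, {minutes_per_shift(90):.1f} min at most")
print(f"{total_hours:.0f} h in 4 months, about {annual_days:.0f} days per year")
print(f"viewing-time drift: {3.16e-4 * 365:.2f} s/year")   # slope of 3.16e-4 s/day
```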

Limitations

Our study has several strengths, including the long-term follow-up period, the longitudinal analysis of trends, the multi-site design, and the use of a parallel-controlled methodology. It also has several limitations. We did not evaluate the user interface of the patient verification module, including the mandatory viewing time or the placement of elements. Although our intervention was associated with a reduction in wrong-patient orders, other module designs could lead to different results.

The retract-and-reorder method can identify only those wrong-patient orders that were noticed and corrected by the same provider within a short period of time. Wrong-patient orders that go unnoticed or are intercepted by a different clinician are not detected by this method, which may lead to underestimation of the wrong-patient order rate. However, it has been argued that intercepted wrong-patient orders (near misses) and actual accidents share similar causal pathways,18 and therefore an intervention that reduces the number of intercepted wrong-patient orders will likely have a similar effect on wrong-patient orders that remain unnoticed.

In addition, the retract-and-reorder method has an estimated positive predictive value of 76%, and this imperfection could in principle have biased our results. However, it could inflate the observed reduction only if its false-positive rate (i.e., the rate at which retract-and-reorder events occur for reasons other than a wrong-patient order) were higher in the pre-implementation period than in the post-implementation period. There is little reason to believe that our intervention had a larger effect on these false-positive events, so it is unlikely that our results merely reflect imperfections of the retract-and-reorder method. Moreover, the retract-and-reorder method does not provide direct insight into the potential for harm. A wrong-patient order that is placed accidentally and intercepted within seconds may have a different impact than one that remains uncorrected for a longer time.
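The argument about false positives can be made concrete with two hypothetical scenarios. Both borrow Adelman et al.'s PPV, which was not measured in this study, so the numbers are illustrative only. If false positives scale with true events (constant PPV), the risk ratio is unchanged. If instead false positives occur at a constant rate per 1000 orders, they dilute the observed reduction and bias it toward the null.

```python
# Reported retract-and-reorder rates per 1000 orders (P1 and P2).
observed_pre, observed_post = 2.02, 1.41
PPV = 0.762   # Adelman et al.'s estimate; assumed here, not measured in this study

observed_rr = observed_post / observed_pre

# Scenario A (hypothetical): constant PPV in both periods.
rr_constant_ppv = (PPV * observed_post) / (PPV * observed_pre)

# Scenario B (hypothetical): constant false-positive rate per 1000 orders,
# pinned to the pre-period value implied by the PPV.
fp_rate = observed_pre * (1 - PPV)
rr_constant_fp = (observed_post - fp_rate) / (observed_pre - fp_rate)

print(f"observed RR {observed_rr:.2f}, constant PPV {rr_constant_ppv:.2f}, "
      f"constant false-positive rate {rr_constant_fp:.2f}")
```

In scenario B the underlying risk ratio (about 0.60) is lower than the observed one (about 0.70), so the observed reduction would understate the true effect.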

Additionally, our study used a before-after design, and the results could be confounded by an unmeasured simultaneous intervention. A parallel control group can reduce the effect of unknown confounders, but because our control group was not matched to the study group (inpatient versus ED), we report the controlled analysis only as a secondary outcome and encourage readers to interpret it with caution. Ideally, another ED setting would serve as the control group; however, the intervention was implemented as a pragmatic initiative to reduce wrong-patient ordering in the ED, and its simultaneous deployment in all ED settings precluded a contemporaneous control group. Moreover, all five sites used the same vendor's EHR software, so our findings may not be readily generalizable to other EHR systems. Finally, although we observed a significant reduction in wrong-patient orders, the clinical significance of this change (from 2.0 to 1.5 wrong-patient orders per 1000 orders) is hard to quantify, especially considering the additional time burden on physicians. Future studies should address this with appropriate cost-benefit analyses. Future studies should also focus on identifying the underlying causes of wrong-patient orders, for example through observational studies or by contacting physicians immediately after a retract-and-reorder event to discuss the reason for the action.

Discussion

We found a significant, immediate reduction of 30% in wrong-patient orders after the implementation of the patient verification intervention, and this effect was sustained, although slightly diminished at two years post-implementation.

Although the reduction in the rate of wrong-patient orders was sustained over time, it was attenuated after the first few months post-intervention. One possible explanation is the frequently cited phenomenon of alert fatigue.19 Embi et al. analyzed the effect of alert fatigue on the response rate to alerts and reported a significant linear decline in alert effectiveness of approximately 8% per month.20 The slight decline in the impact of the patient verification module in our study was less severe and not statistically significant, and change-point analysis confirmed that the impact of the intervention remained relatively stable in the long term.

Other studies have used information technology interventions to encourage correct patient identification. Wilcox and colleagues studied the impact of an electronic alert to reduce wrong-patient note writing; they reported a patient-note mismatch rate of 0.5% and a 40% reduction in mismatches after implementing a patient verification module.21 Galanter et al. found that a mandatory “indication” field in CPOE might help providers avoid wrong-patient medication orders, and estimated that 32 wrong-patient errors were intercepted as a result of this intervention over a 6-year period.22 Finally, Adelman et al. studied two patient verification procedures prior to order entry: the ‘ID-verify alert’, a verification module showing the patient's name, age, and gender, and the more complex ‘ID-reentry function’, which required the provider to re-enter the patient's initials, gender, and age. They found that these interventions reduced wrong-patient order entry by 16% (ID-verify) and 40% (ID-reentry).11 Adelman's work is the most similar to ours, although their study was conducted in the inpatient setting and had no long-term follow-up. The patient verification module used in our study is comparable to the ‘ID-verify alert’ used by Adelman et al.,11 and, as in that study, the rate of retract-and-reorder events in our study was close to 1.5 per 1,000 orders.

We observed a larger effect from our intervention than from the similar intervention studied by Adelman et al. (30% versus 16% reduction in wrong-patient orders, respectively). One possible explanation for our more favorable outcome is a difference in the design of the intervention, particularly the mandatory 2.5-second delay in our patient verification module. This approach, which borrows from the human-computer interaction concept of polymorphic dialogs, prevents users from learning and automatically executing a fixed path through the interface, and has been shown to increase the likelihood that users take the time to understand the information presented to them on screen.23

Most cases of wrong-patient order entry are likely discovered and corrected rapidly by the ordering practitioner. The prevalence of wrong-patient orders that directly affect patients is difficult to quantify, and many of these orders are caught by the prescribing provider or another member of the health care team before the order is fulfilled. Nevertheless, relying solely on downstream mechanisms for catching wrong-patient orders, such as pharmacy review or nurse verification, can lead to tragic outcomes. A recent study in a simulated ED found that more than one-third of emergency health care workers (including nurses, clerks, and technicians) placed an identity band on the wrong patient because they failed to detect a patient identification error.24

Our study demonstrates the value of intercepting wrong-patient orders before they are placed. With the patient verification module in place, ED practitioners backed out of approximately 1 in 230 order-entry sessions (0.4%), citing “Wrong Patient Selected” as the reason for cancellation. We believe that the problem of wrong-patient ordering can be addressed most effectively by preventing these orders from ever being entered into the CPOE system.

Our patient verification intervention was successful but did not completely eliminate wrong-patient order entry; further reducing the rate of wrong-patient orders will require additional interventions. Patient photographs may be an effective means of reducing this type of error. We identified only one published study of photo identification in CPOE: a 2012 article by Hyman et al. reporting on the use of patient photographs in the EHR to reduce wrong-patient orders.25 The study, while encouraging, was limited by its small sample size and its reliance on voluntary error reporting.

Previous work has shown that medical residents and nurses, by their own admission, do not consistently perform required patient identification activities because of time pressure.26 Our approach imposed an additional time burden of at least 2.5 s (mean = 4.2 s) on practitioners each time they initiated order entry in the EHR. Interestingly, despite the mandatory 2.5-second delay, the total time added to each ordering session was lower in our study than in the study by Adelman et al. (4.2 s versus 6.6 s).

In summary, using an electronic patient verification system successfully reduced wrong-patient orders at the earliest stage of the order entry process, but did not completely eliminate them. More work is required to reduce this phenomenon in CPOE systems. Future work should also emphasize the cost-benefit tradeoff of improved safety vs. added clinician time burden.

Supplementary Material

Supplement Figure 1. Screenshot of the “Reason for Cancellation” dialog. This dialog appears when practitioners press the “Cancel” button in the patient verification dialog (Figure 1).

Acknowledgements

This study was in part supported by National Library of Medicine grants 5 T15 LM007079 and LM006910.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Bates DW, Teich JM, Lee J, Seger D, Kuperman GJ, Ma’Luf N, et al. The impact of computerized physician order entry on medication error prevention. J Am Med Inform Assoc. 1999;6(4):313–321. doi: 10.1136/jamia.1999.00660313.

- 2. Kaushal R, Shojania KG, Bates DW. Effects of computerized physician order entry and clinical decision support systems on medication safety: a systematic review. Arch Intern Med. 2003 Jun 23;163(12):1409–1416. doi: 10.1001/archinte.163.12.1409.

- 3. Ash JS, Sittig DF, Dykstra R, Campbell E, Guappone K. The unintended consequences of computerized provider order entry: findings from a mixed methods exploration. Int J Med Inform. 2009 Apr;78(Suppl 1):S69–S76. doi: 10.1016/j.ijmedinf.2008.07.015.

- 4. Georgiou A, Prgomet M, Paoloni R, Creswick N, Hordern A, Walter S, et al. The effect of computerized provider order entry systems on clinical care and work processes in emergency departments: a systematic review of the quantitative literature. Ann Emerg Med. 2013 Jun;61(6):644–653.e16. doi: 10.1016/j.annemergmed.2013.01.028.

- 5. Campbell EM, Sittig DF, Ash JS, Guappone KP, Dykstra RH. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc. 2006;13(5):547–556. doi: 10.1197/jamia.M2042.

- 6. Chassin MR, Becher EC. The wrong patient. Ann Intern Med. 2002 Jun 4;136(11):826–833. doi: 10.7326/0003-4819-136-11-200206040-00012.

- 7. Koppel R, Metlay JP, Cohen A, Abaluck B, Localio AR, Kimmel SE, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA. 2005 Mar 9;293(10):1197–1203. doi: 10.1001/jama.293.10.1197.

- 8. Villamañán E, Larrubia Y, Ruano M, Vélez M, Armada E, Herrero A, et al. Potential medication errors associated with computer prescriber order entry. Int J Clin Pharm. 2013 Aug;35(4):577–583. doi: 10.1007/s11096-013-9771-2.

- 9. Magrabi F, Ong M-S, Runciman W, Coiera E. Using FDA reports to inform a classification for health information technology safety problems. J Am Med Inform Assoc. 2012;19(1):45–53. doi: 10.1136/amiajnl-2011-000369.

- 10. The Joint Commission. The Joint Commission’s 2011 National Patient Safety Goals [Internet]. Available from: http://www.jointcommission.org/standards_information/npsgs.aspx.

- 11. Adelman JS, Kalkut GE, Schechter CB, Weiss JM, Berger MA, Reissman SH, et al. Understanding and preventing wrong-patient electronic orders: a randomized controlled trial. J Am Med Inform Assoc. 2013;20(2):305–310. doi: 10.1136/amiajnl-2012-001055.

- 12. Pham JC, Story JL, Hicks RW, Shore AD, Morlock LL, Cheung DS, et al. National study on the frequency, types, causes, and consequences of voluntarily reported emergency department medication errors. J Emerg Med. 2011 May;40(5):485–492. doi: 10.1016/j.jemermed.2008.02.059.

- 13. Kulstad EB, Sikka R, Sweis RT, Kelley KM, Rzechula KH. ED overcrowding is associated with an increased frequency of medication errors. Am J Emerg Med. 2010 Mar;28(3):304–309. doi: 10.1016/j.ajem.2008.12.014.

- 14. Oops, sorry, wrong patient! A patient verification process is needed everywhere, not just at the bedside. Alta RN. 2012 Jan;67(6):18–22.

- 15. Chen ES, Borlawsky T, Qureshi K, Li J, Lussier YA, Hripcsak G. Monitoring the function and use of a clinical decision support system. AMIA Annu Symp Proc. 2007:902.

- 16. Chen J, Gupta A. Parametric statistical change point analysis: with applications to genetics, medicine and finance. 2nd ed. Boston: Birkhäuser; 2012.

- 17. Killick R, Fearnhead P, Eckley IA. Optimal detection of changepoints with a linear computational cost. J Am Stat Assoc. 2012 Dec;107(500):1590–1598.

- 18. Wright L, van der Schaaf T. Accident versus near miss causation: a critical review of the literature, an empirical test in the UK railway domain, and their implications for other sectors. J Hazard Mater. 2004 Jul 26;111(1–3):105–110. doi: 10.1016/j.jhazmat.2004.02.049.

- 19. Ash JS, Sittig DF, Campbell EM, Guappone KP, Dykstra RH. Some unintended consequences of clinical decision support systems. AMIA Annu Symp Proc. 2007:26–30.

- 20. Embi PJ, Leonard AC. Evaluating alert fatigue over time to EHR-based clinical trial alerts: findings from a randomized controlled study. J Am Med Inform Assoc. 2012 Jun;19(e1):e145–e148. doi: 10.1136/amiajnl-2011-000743.

- 21. Wilcox AB, Chen Y-H, Hripcsak G. Minimizing electronic health record patient-note mismatches. J Am Med Inform Assoc. 2011;18(4):511–514. doi: 10.1136/amiajnl-2010-000068.

- 22. Galanter W, Falck S, Burns M, Laragh M, Lambert BL. Indication-based prescribing prevents wrong-patient medication errors in computerized provider order entry (CPOE). J Am Med Inform Assoc. 2013 May 1;20(3):477–481. doi: 10.1136/amiajnl-2012-001555.

- 23. Brustoloni JC, Villamarín-Salomón R. Improving security decisions with polymorphic and audited dialogs. In: Proceedings of the 3rd Symposium on Usable Privacy and Security (SOUPS ’07). New York, NY, USA: ACM Press; 2007. p. 76.

- 24. Henneman PL, Fisher DL, Henneman EA, Pham TA, Campbell MM, Nathanson BH. Patient identification errors are common in a simulated setting. Ann Emerg Med. 2010 Jun;55(6):503–509. doi: 10.1016/j.annemergmed.2009.11.017.

- 25. Hyman D, Laire M, Redmond D, Kaplan DW. The use of patient pictures and verification screens to reduce computerized provider order entry errors. Pediatrics. 2012 Jul;130(1):e211–e219. doi: 10.1542/peds.2011-2984.

- 26. Phipps E, Turkel M, Mackenzie ER, Urrea C. He thought the “lady in the door” was the “lady in the window”: a qualitative study of patient identification practices. Jt Comm J Qual Patient Saf. 2012 Mar;38(3):127–134. doi: 10.1016/s1553-7250(12)38017-3.

Supplementary Materials

Screenshot of the “Reason for Cancellation” dialog. This dialog appeared when practitioners pressed the “Cancel” button in the patient verification dialog (Figure 1).