Abstract

The goal of the current study is to assess the temporal dynamics of vision and action to evaluate the underlying word representations that guide infants’ responses. Sixteen-month-old infants participated in a two-alternative forced-choice word-picture matching task. We conducted a moment-by-moment analysis of looking and reaching behaviors as they occurred in tandem to assess the speed with which a prompted word was processed (visual reaction time) as a function of the type of haptic response: Target, Distractor, or No Touch. Visual reaction times (visual RTs) were significantly slower during No Touches compared to Distractor and Target Touches, which were statistically indistinguishable. The finding that visual RTs were significantly faster during Distractor Touches compared to No Touches suggests that incorrect and absent haptic responses appear to index distinct knowledge states: incorrect responses are associated with partial knowledge whereas absent responses appear to reflect a true failure to map lexical items to their target referents. Further, we found that those children who were faster at processing words were also those children who exhibited better haptic performance. This research provides a methodological clarification on knowledge measured by the visual and haptic modalities and new evidence for a continuum of word knowledge in the second year of life.

Keywords: comprehension, vocabulary, graded knowledge, behavioral dissociations, infants, toddlers

The use of visual and haptic measures to estimate underlying cognitive abilities has a rich history in research on infant development of spatial concepts, object knowledge, and early vocabulary comprehension, among others. However, it has been documented that infant competence is highly task dependent, such that infants exhibit behavioral dissociations characterized by demonstrating knowledge in one modality but not the other (Ahmed & Ruffman, 1998; Diamond, 1985; Hofstadter & Reznick, 1996; Ruffman, Garnham, Import, & Connolly, 2001; Shinskey & Munakata, 2005). One problem that remains to be addressed in such behavioral tasks is the differential interpretation of incorrect relative to absent responses. To date, there are limited empirical data to disambiguate these two response classes.

Few attempts have been made to assess early knowledge in infants by measuring the visual and haptic response modalities as they occur within the same task (Diamond, 1985; Hofstadter & Reznick, 1996; Ruffman et al., 2001; Gurteen, Horne, & Erjavec, 2011). Further, no study to date has measured the moment-by-moment relation between visual and haptic responses as measures of early knowledge. The benefits of such an examination are threefold: (1) to guide the interpretation of behavioral responses and non-responses, (2) to clarify the relation between volitional (e.g., haptic, verbal) and spontaneous (e.g., visual, orienting) responses more generally, and (3) to facilitate discussion concerning the underlying knowledge educed in paradigms employing visual and haptic response modalities.

The study of early language comprehension presents a particularly ripe area within which to investigate the broader dynamics between visual and haptic measures of early knowledge. At present there are three primary paradigms in use for the assessment of early comprehension vocabulary: parent report, visual attention, and haptic response. Most of what we currently know about visual and haptic responses as measures of early language abilities comes from studies that have been conducted in a piecemeal fashion, in which investigators selectively use either looking time (Behrend, 1988; Fernald & McRoberts, 1991; Fernald, Zangl, Portillo, & Marchman, 2008; Golinkoff, Hirsh-Pasek, Cauley, & Gordon, 1987; Hirsh-Pasek & Golinkoff, 1996; Houston-Price, Mather, & Sakkalou, 2007; Naigles & Gelman, 1995; Reznick, 1990; Robinson, Shore, Hull Smith & Martinelli, 2000; Schafer & Plunkett, 1998; Styles & Plunkett, 2009; Thomas, Campos, Shucard, Ramsay & Shucard, 1981) or haptic response (Bates et al., 1988; Snyder, Bates, & Bretherton, 1981; Woodward, Markman, & Fitzsimmons, 1994; Friend & Keplinger, 2003; Friend & Keplinger, 2008) but not both.

Micro-level measures of looking behavior that assess speed of processing and patterns of visual attention (Aslin, 2007) have gained prominence in the infant literature within the last decade and have offered interesting insights into underlying cognitive processes. The “looking-while-listening” paradigm, first outlined in Fernald, Pinto, Swingley, Weinberg, & McRoberts (1998), has evolved from the well-documented Intermodal Preferential Looking (IPL) paradigm to an on-line measure of saccades in response to speech. Eye movements are monitored by digital camcorders and saccades are coded frame-by-frame to determine infants’ speed in processing words. These continuous data yield a richer, more nuanced picture of language processing than do dichotomous measures obtained by parent report or macro-level looking time measures. Additionally, it has been shown that the speed with which words are processed and the size of children’s lexicons at 25 months are predictive of intellectual functioning and language skills at 8 years of age (Marchman & Fernald, 2008).

Researchers utilizing haptic response measures of early language have obtained findings comparable to those from visually based measures (Friend et al., 2003, 2008; Ring & Fenson, 2000; Woodward et al., 1994). Friend and colleagues conducted a series of studies investigating the psychometric properties of the Computerized Comprehension Task (CCT), a measure that uses touch responses to gauge early word comprehension. The score on the CCT (proportion of correct touches to a named visual referent) was found to be a reliable and valid measure of word comprehension in the 2nd year of life, and a significant predictor of productive language abilities in the 3rd year. Additionally, performance on the CCT was significantly correlated with parent report on the MCDI: WG (Friend & Keplinger, 2003; 2008). Despite the predictive value of this measure, it suffers from a quandary that exists for all measures that require a volitional response: should one interpret incorrect and absent responses equivalently, or do these two response types systematically index different levels of understanding?

To our knowledge, there has been only one study that has used both looking and touching as measures of early word knowledge. Using an interactive modification of the IPL paradigm, Gurteen et al. (2011) investigated 13- and 17-month-olds’ familiar and novel word comprehension. For both familiar and novel words, infants participated in two types of test trials: one requiring a looking response and one a touching response. There was no significant relation between MCDI: WG (comprehension or production) and target looking on the familiar or novel preferential looking tasks. The relation between infants’ touching responses and MCDI: WG scores was not reported. For both novel and familiar words, 13- and 17-month-olds looked significantly longer to the target referent. However, across age, infants reached toward the target at a level greater than chance only for familiar words. These discrepant findings for visual and haptic behavior when measuring familiar versus novel word knowledge bring into question whether spontaneous and volitional measures more generally should be thought of as analogous. However, in Gurteen et al. (2011) looking and touching behaviors were assessed separately; thus, the concurrent relationship between the modalities is still largely unknown.

One reason for the dearth of research on the synchronous relation between response modalities is that the task design must take into account the natural dependencies between response modalities. For example, there is evidence of cortical movement preparation in adults as early as 500 ms prior to voluntary hand movement, and the decision to respond occurs ~200 ms before execution (Trevena & Miller, 2002; Gold & Shadlen, 2007; Romo & Salinas, 1999; Schall & Thompson, 1999; Shadlen & Newsome, 1996; Libet, Gleason, Wright, & Pearl, 1983; VanRullen & Thorpe, 2001). Thus, looking to the target pre-reach onset may be influenced by neural activity related to movement anticipation. It has been shown that haptic responses can take up to ~7 secs post-stimulus to execute (Friend et al., 2012). Looking responses can, and should, be captured within ~2 secs post-stimulus; the further from stimulus onset that looks occur, the less likely they are to be influenced by stimulus parameters, and the more likely they are to reflect processes other than comprehension of the target word (Aslin, 2007; Fernald, Perfors & Marchman, 2006; Swingley & Fernald, 2002). Therefore, because the visual and haptic modalities differ in the relative timing of their responses, it is possible to acquire visual fixation data sufficiently early in the trial to minimize the effect of motor planning.

For the purpose of the present investigation, then, a traditional measure of the macrostructure of looking time (e.g., proportion looking to target) would be confounded with information processing at every level in the task, from the recognition of a word-referent relation to the preparation for and execution of the reach (Aslin, 2007). Given these considerations, we employed a micro-level measure of looking in the early post-stimulus period to maximize the stimulus-response contingency and minimize any influence of the decision to act.

The overarching goal of the current study is to assess the simultaneous moment-by-moment bidirectional relation between visual and haptic responses as measures of early word comprehension, and to evaluate the implications of our findings for the structure of early lexical-semantic knowledge. Children participated in a modified combination of the CCT (Friend et al. 2003, 2008) and the looking-while-listening procedure (Fernald et al. 1998; 2001; 2006; 2008). Participants were presented with within-category pairs of images (e.g. dog and cat) on a touch sensitive monitor and prompted to touch one of the images (e.g. “dog”; Target), while their visual behavior and haptic responses were recorded concurrently on video. We analyzed infants’ looking behavior at every 40 ms interval from image onset during the presentation of the target word on distractor-initial trials (i.e. those trials for which infants first fixated the distractor image upon hearing the target word). We calculated visual reaction time (RT) operationalized as the latency to shift from the distractor to the target image once both the target word in the first sentence prompt and the visual stimuli were presented. It is necessary to use distractor-initial trials in order to calculate the speed with which children shift fixation to the target. Visual RT calculated in this way is a measure of the efficiency of word processing and predicts subsequent development (Fernald et al. 1998; 2001; 2008). By definition, this measure cannot be obtained when children fixate on the target initially (Fernald et al., 2008). For each participant, trials were grouped by haptic response (Target, Distractor, No Touch). In the current study we ask whether there are differences in visual RT across Target, Distractor, and No Touches. There are two primary patterns of interest.

We predict visual RTs and haptic responses will converge when word knowledge is most robust (i.e., visual RTs will be fastest during Target Touches). Of particular interest is the comparison between visual RTs during Distractor Touches and No Touches. One possibility is that both incorrect and absent responses reflect weak or nonexistent lexical access. On this view, we would expect visual RTs during Distractor and No Touches to be indistinguishable from each other, and slower than those during Target Touches. Another possibility is that these two response types gauge different capabilities in lexical access. Here, we would expect visual RTs for Distractor and No Touches to differ significantly from each other and, likely, both to be slower than those for Target Touches.

Moreover, no study to date has examined the relation between haptic, visual, and parent report measures within the same cohort of infants. Therefore, a coextending goal of the current study is to examine the correlations between children’s vocabulary knowledge indexed by the haptic modality and children’s speed of lexical access indexed by visual RT, and the well-documented MCDI: WG (Fenson et al., 1993).

Method

Participants

Participants were drawn from a larger, multi-institutional longitudinal project assessing language comprehension in the 2nd year of life. Infants were identified through a database of parent volunteers recruited through birth records, internet resources, and community events in a large metropolitan area. All infants were full-term and had no diagnosed impairments in hearing or vision. Seven infants were excluded from the study because of excessive fussiness (n = 4), experimenter error (n = 1), and technical error (n = 2). The final sample included 61 monolingual English-speaking infants (33 females, 28 males), ranging in age from 15.5 to 18.2 months (M = 16.6 months). Infant language exposure was assessed using an electronic version of the Language Exposure Questionnaire (Bosch & Sebastian-Galles, 2001). Estimates of daily language exposure were derived from parent reports of the number of hours of language input by parents, relatives, and other caregivers in contact with the infant. Only those infants with at least 80% language exposure to English were included in the study.

Apparatus

The study was conducted in a sound attenuated room. A 51 cm 3M SCT3250EX touch capacitive monitor was attached to an adjustable wall mounted bracket that was hidden behind blackout curtains and between two portable partitions. Two HD video cameras were used to record participants’ visual and haptic responses. The eye-tracking camera was mounted directly above the touch monitor and recorded visual fixations through a small opening in the curtains. The haptic-tracking camera was mounted on the wall above and behind the touch monitor to capture both the infants’ haptic response and the stimulus pair presented on the touch monitor. Two audio speakers were positioned to the right and left of the touch monitor behind the blackout curtains for the presentation of auditory reinforcers to maintain interest and compliance.

Procedure and Measures

Upon entering the testing room, infants were seated on their caregiver’s lap centered at approximately 30 cm from the touch sensitive monitor with the experimenter seated just to the right. Parents wore blackout glasses and noise-cancelling headphones to mitigate parental influence during the task. The assessment followed the protocol for the Computerized Comprehension Task (CCT; Friend & Keplinger, 2003; 2008). The CCT is an experimenter-controlled assessment that uses infants’ haptic response to measure early decontextualized word knowledge. A previous attempt has been made to automate the procedure, such that verbal prompts come from the audio speakers positioned behind the touch screen instead of the experimenter seated to the right of the child. Pilot data using the automated version showed that children’s interest in the task waned to such an extent that attrition rates approached 85% (attrition rates using the experimenter-controlled CCT are between 5 – 10%; M. Friend, personal communication, June 17, 2014, P. Zesiger, personal communication, May 21, 2014). Therefore, to collect a sufficient amount of data to yield effects we used the well documented protocol of the CCT (Friend & Keplinger 2003; 2008). Previous studies have reported that the CCT has strong internal consistency (Form A α =.836; Form B α =.839), converges with parent report (partial r controlling for age = .361, p < .01), and predicts subsequent language production (Friend et al., 2012). Additionally, responses on the CCT are nonrandom (Friend & Keplinger, 2008) and this finding replicates across languages (Friend & Zesiger, 2011) and monolinguals and bilinguals (Poulin-Dubois, Bialystok, Blaye, Polonia, & Yott, 2013).

For this procedure, infants are prompted to touch images on the monitor by an experimenter seated to their right (e.g. “Where’s the dog? Touch dog!”). Target touches (e.g. touching the image of the dog) elicit congruous auditory feedback over the audio speakers (e.g., the sound of a dog barking). Infants were presented with four training trials, 41 test trials, and 13 reliability trials in a two-alternative forced-choice procedure. For a given trial, two images appeared simultaneously on the right and left side of the touch monitor. The side on which the target image appeared was presented in pseudo-random order across trials such that target images could not appear on the same side on more than two consecutive trials, and the target was presented with equal frequency on both sides of the screen (Hirsh-Pasek & Golinkoff, 1996). The item that served as the target was counterbalanced across participants such that there were two forms of the procedure. All image pairs presented during training, testing, and reliability were matched for word difficulty (easy, medium, hard) based on MCDI: WG norms (Dale & Fenson, 1996), part of speech (noun, adjective, verb), category (animal, human, object), and visual salience (color, size, luminance). The design of the study relied on participants producing Target, Distractor, and No Touches in sufficient numbers, and at roughly similar rates in order to address our hypotheses. Thus including words at varying degrees of difficulty was crucial.
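The side-assignment constraints described above (no more than two consecutive trials with the target on the same side, and roughly equal frequency on each side) can be illustrated with a short sketch. This is not the authors' stimulus-ordering code; the function names, the restart strategy, and the handling of an odd trial count (side counts may differ by one) are assumptions made for illustration.

```python
import random


def longest_run(seq):
    """Length of the longest run of identical consecutive items."""
    best = run = 0
    prev = None
    for item in seq:
        run = run + 1 if item == prev else 1
        best = max(best, run)
        prev = item
    return best


def make_side_sequence(n_trials, max_run=2, seed=None):
    """Hypothetical helper: build a list of 'L'/'R' target sides.

    Sides are balanced (counts differ by at most one) and no side
    repeats on more than `max_run` consecutive trials.
    """
    rng = random.Random(seed)
    while True:
        remaining = {"L": n_trials // 2, "R": n_trials - n_trials // 2}
        seq = []
        while len(seq) < n_trials:
            # A side is allowed if it has trials left and would not extend a full run.
            options = [
                s for s in ("L", "R")
                if remaining[s] > 0
                and not (len(seq) >= max_run
                         and all(x == s for x in seq[-max_run:]))
            ]
            if not options:
                break  # dead end: restart with a fresh sequence
            weights = [remaining[s] for s in options]
            side = rng.choices(options, weights=weights)[0]
            seq.append(side)
            remaining[side] -= 1
        if len(seq) == n_trials:
            return seq


if __name__ == "__main__":
    print("".join(make_side_sequence(41, seed=1)))
```

Weighting each choice by the number of trials remaining on that side keeps dead ends rare, so restarts are seldom needed.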

The study began with a training phase to ensure participants understood the nature of the task. During the training phase, participants were presented with early-acquired noun pairs (known by at least 80% of 16-month-olds; Dale & Fenson, 1996) and prompted by the experimenter to touch the target. If the infant failed to touch the screen after repeated prompts, the experimenter touched the target image for them. If a participant failed to touch during training, the four training trials were repeated once. Only participants who executed at least one correct touch during the training phase proceeded to the testing phase.

During testing, each trial lasted until the infant touched the screen or until seven seconds elapsed at which point the image pair disappeared. When the infant’s gaze was directed toward the touch monitor, the experimenter delivered the prompt in infant-directed speech and advanced each trial as they uttered the target word in the first sentence prompt such that the onset of the target word occurred just prior to the onset of the visual stimuli (average interval = 238 ms).

Nouns: Where is the _____? Touch _____.

Verbs: Who is _____? Touch _____.

Adjectives: Which one is _____? Touch _____.

The criterion for ending testing was a failure to touch on two consecutive trials with two attempts by the experimenter to re-engage without success. If the attempts to re-engage were unsuccessful and the child was fussy, the task was terminated and the responses up to that point were taken as the final score. However, if the child did not touch for two or more consecutive trials but was not fussy, testing continued. Those participants who remained quiet and alert for the full 41 test trials (N = 34) also participated in a reliability phase in which 13 of the test trial image pairs were re-presented in opposite left-right orientation.

Parent report of infant word comprehension was measured using the MCDI: WG, a parent report checklist of language comprehension and production developed by Fenson et al. (1993), which has demonstrated good test-retest reliability and significant convergent validity with an object selection task (Fenson et al., 1994). Of interest in the current study was the 396-item vocabulary checklist for comparison with the infants’ behavioral data.

Coding

A waveform of the experimenter’s prompts was extracted from the eye-tracking video (the camera was positioned approximately 30 cm from the experimenter) using Audacity® software (http://audacity.sourceforge.net/). Subsequently, the eye-tracking video, haptic-tracking video, and the waveform of the experimenter’s prompts were all synced using the Eudico Linguistic Annotator (ELAN) (<http://tla.mpi.nl/tools/tla-tools/elan/>, Max Planck Institute for Psycholinguistics, The Language Archive, Nijmegen, The Netherlands; Lausberg & Sloetjes, 2009). ELAN is a multi-media annotation tool specifically designed for the analysis of language. It is particularly useful for integrating coding across modalities and media sources because it allows for the synchronous playing of multiple audio tracks and videos. Only distractor-initial trials (those trials for which infants first fixated the distractor image upon hearing the target word) were included in the analyses of looking behavior.

Coders completed extensive training to identify the characteristics of speech sounds within a waveform, both in isolation and in the presence of coarticulation. Because a finite set of target words always followed the same carrier phrases (e.g., “Where is the ____”, “Who is ___”, or “Which one is ____”?), training included identifying different vowel and consonant onsets after the words “the” and “is”. Coders were also trained to demarcate the onset of vowel-initial and nasal-initial words after a vowel-final word in continuous speech, which can be difficult using acoustic waveforms in isolation.

Coders were required to practice on a set of files previously coded by the first author with supervision and then to code one video independently until correspondence with previously coded data was reached. Two coders completed each pass, each coding ~50% of the data.

Trials with short latencies (200 – 400 ms) likely reflect eye movements that were planned prior to hearing the target word (Fernald et al., 2008; Bailey & Plunkett, 2002; Ballem & Plunkett, 2005). For this reason, trials were included in subsequent analyses if the participant looked at the screen for at least 400 ms. Additionally, looking responses were coded during the first 2000 ms of each trial. As previously mentioned, looking responses that are further from the stimulus onset are less likely to be driven by stimulus parameters (Aslin, 2007; Fernald, Perfors & Marchman, 2006; Swingley & Fernald, 2002). Finally, by coding the first 2000 ms, we largely restrict our analysis to the period prior to the decision to touch.

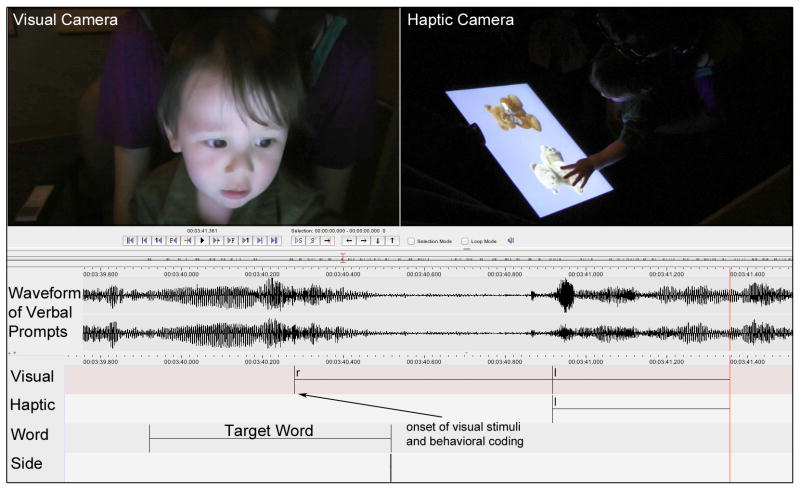

Coding occurred in two passes. Coder 1 annotated the frame onset and offset of the target word as it occurred in the first sentence prompt using the waveform of the experimenter’s speech. First, the coder listened to the audio and zoomed in on the portion of the waveform that contained the target word in the first sentence prompt (e.g., Where is the DOG?). Once that section was magnified, the coder listened to the word several times, precisely demarcating the onset and offset of speech information within the larger waveform. Coder 1 also marked the frame in which the visual stimuli appeared on the screen and the side of the target referent (note: side of the target referent was hidden from Coder 2). Coder 2 coded visual and haptic responses with no audio to ensure that she remained blind to the image that constituted the target. Coding began at image onset, roughly 238 ms after target word onset, and prior to target word offset in the first sentence prompt (see Figure 1). For the visual behavior, Coder 2 advanced the video and coded each time a change in looking behavior occurred using three event codes: right look, left look, and away look. For sustained visual fixations, Coder 2 advanced the video in 40 ms coding frames, and, because shifts in looking are crucial for deriving measures of reaction time, Coder 2 advanced the video during gaze shifts at a finer level of resolution (3 ms).

Figure 1.

Eudico Linguistic Annotator coding setup. The waveform of the experimenter’s prompt is extracted from the video camera recording the visual behavior. The waveform is then synced with the video from the visual and haptic cameras. Coding was done using four tiers. On the Visual tier, the onset of the visual stimuli was coded, and looking behavior was coded: right look (r), left look (l), or away look (a). On the Haptic tier, the onset and offset of the haptic response and the direction of the touch (r or l) were coded. On the Word tier, the onset and offset of the target word in the first sentence prompt were marked by viewing the waveform and using frame-by-frame auditory analysis. Finally, on the Side tier, the side (left or right) on which the target referent appeared was coded and hidden from view. Behavioral coding began at the onset of the visual stimulus, which occurred ~238 ms after the target word in the first sentence prompt was uttered.

Haptic responses were coded over the course of the entire trial (7 secs). Only initial haptic responses were coded. The haptic response was coded categorically: Left Touch (unambiguous touch to the left image), Right Touch (unambiguous touch to the right image) or No Touch (no haptic response executed). Identifying touches as Target or Distractor was done post-hoc, to preserve coders’ blindness to target image and location.

Inter-rater reliability coding was conducted for both visual and haptic responses by a third, reliability coder. For looking responses a random sample of 11 videos (~ 25% of the data) was selected. Because our dependent variable (visual RT) relies on millisecond precision in determining when a shift in looking behavior occurred, only those frames in which shifts occurred were considered for the reliability score. This score is more stringent than including all possible coding frames because the likelihood of the two coders agreeing is considerably higher during sustained fixations compared to gaze shifts (Fernald et al., 1998). Using this shift-specific reliability calculation, we found that on 90% of trials coders were within one frame (40 ms) of each other, and on 94% of the trials coders were within two frames (80 ms) of each other.
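The shift-specific reliability score described above can be sketched as the proportion of coded gaze shifts on which two coders' timestamps fall within a given number of 40 ms frames of each other. The function name and the example timestamps below are hypothetical and serve only to illustrate the calculation; they are not data from the study.

```python
def shift_agreement(primary_ms, reliability_ms, frame_ms=40, tolerance_frames=1):
    """Proportion of paired shift times that differ by at most `tolerance_frames` frames.

    Each list holds one timestamp (in ms) per gaze shift, in the same order
    for the primary coder and the reliability coder.
    """
    if len(primary_ms) != len(reliability_ms):
        raise ValueError("Both coders must contribute one time per shift.")
    if not primary_ms:
        raise ValueError("At least one shift is required.")
    limit = frame_ms * tolerance_frames
    within = sum(abs(a - b) <= limit for a, b in zip(primary_ms, reliability_ms))
    return within / len(primary_ms)


if __name__ == "__main__":
    # Hypothetical shift times (ms from image onset) for four shifts.
    primary = [520, 880, 1240, 640]
    reliability = [560, 880, 1360, 720]
    print(shift_agreement(primary, reliability, tolerance_frames=1))
    print(shift_agreement(primary, reliability, tolerance_frames=2))
```

Restricting the score to shift events, rather than all coding frames, avoids inflating agreement with the long stretches of sustained fixation on which coders rarely disagree.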

All haptic response coding was compared to offline coding of haptic touch location completed for the larger longitudinal project. Inter-rater agreement for the haptic responses was 95%. All haptic coding was completed blind to target image, location, and visual fixations.

Results

Calculating reaction time by including only distractor-initial trials and a narrow time window restricts the number of usable trials per condition. Consequently, not all children contributed data to all experimental conditions, and those who did not were removed from further analysis. Of the 61 infants originally included, 16 participants were excluded from subsequent analyses for not contributing data to all three haptic type conditions. The remaining 45 infants completed an average of 36 out of a possible 41 trials, and their average MCDI: WG comprehension vocabulary was 188 words out of a possible 396 and ranged from 62 to 342 words (percentile range = 1st to 91st). The average time to execute a haptic response was 3896.25 ms post image onset (< 14% of trials included a haptic response prior to 2000 ms). The average visual RT to shift to the target across haptic types was 862.43 ms, comparable to the mean visual RT found in similarly aged participants in previous research (827 ms; Fernald et al., 1998). Consistent with the literature, immediate test–retest reliability was strong for participants who completed reliability in the larger longitudinal project [r(41) = .74, p < .0001], and in the subset of data used for the current project [r(32) = .67, p < .0001]. Finally, internal consistency (Form A α = .931 and Form B α = .940) was excellent, indicating consistency within the test and between test and reliability phases.

Infants chose the target image on 11.78 trials (SD = 6.76), the distractor on 10.08 trials (SD = 4.30), and provided no haptic response on 13.03 trials (SD = 7.78). Thus, Target, Distractor, and No Touches were elicited at roughly equal rates. This pattern of findings is expected given the task design. There are equal numbers of easy (comprehension > 66%), moderately difficult (comprehension = 33–66%), and difficult words (comprehension < 33%) based on normative data at 16 months of age (Dale & Fenson, 1996). Therefore, if children complete all 41 trials and correctly identify all words that most 16-month-olds are reported by parents to know, they would earn a score of roughly 14, indicating good knowledge of the easiest items.

To test the notion that children are more likely to execute target touches for highly known words, we analyzed haptic responses for those words for which there was a high probability of a target response (proportion of 16-month-olds expected to know each item appears in parentheses): ball (96.4%), juice (91.7%), dog (90.5%), and bottle (89.3%). This subset of words (59 trials in all) elicited a total of 35 Target Touches, 15 Distractor Touches, and 9 No Touches indicating that, as expected, when children touched the screen they made a correct haptic response significantly more often than chance by Binomial Test (exact), p = .007. This, in conjunction with excellent internal consistency and strong test-retest reliability, reveals that children’s responses were nonrandom.
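As a check on the arithmetic, the exact binomial test reported above can be sketched in a few lines. The sketch assumes the test was run on the 50 trials that included a touch (35 Target, 15 Distractor), against a chance level of .5, and that it was two-sided; the source does not state these details explicitly, and the function name is hypothetical.

```python
from math import comb


def binomial_two_sided_p(successes, n, p=0.5):
    """Exact two-sided binomial p-value.

    Sums the probabilities of all outcomes that are no more likely
    than the observed count under the null hypothesis.
    """
    probs = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]
    observed = probs[successes]
    # Small relative tolerance guards against floating-point ties.
    total = sum(pr for pr in probs if pr <= observed * (1 + 1e-9))
    return min(1.0, total)


if __name__ == "__main__":
    # Assumed inputs: 35 Target Touches out of 50 trials with a touch.
    p_value = binomial_two_sided_p(35, 50)
    print(round(p_value, 3))
```

Under these assumptions the p-value rounds to .007, in agreement with the reported value.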

To assess the potential contribution of side-bias effects to performance, we conducted two-sample t-tests and found no significant difference in number of touches, t(88) = 1.8, p = .08, or amount of looking time, t(88) = 1.5, p = .13, to images presented on the left relative to the right. To summarize, given the structure of the test and the average number of trials completed, the average number of target touches reported here is in line with expectations for performance at this age and is consistent with previous reports on the CCT (Friend & Keplinger, 2008; Friend & Zesiger, 2011). In addition, both internal consistency and test-retest reliability were strong, and we found no evidence of side-bias effects. However, a two-sample t-test revealed a significant difference in visual RTs across Forms, t(88) = 4.11, p = .0002, but no difference in the number of target haptic responses. To determine whether this difference influenced our findings, we first analyzed visual RTs as a function of haptic response type by Form. The pattern of results was identical for both Forms, and we report our findings collapsed across Form below.

Concurrent Analyses of Visual and Haptic Responses

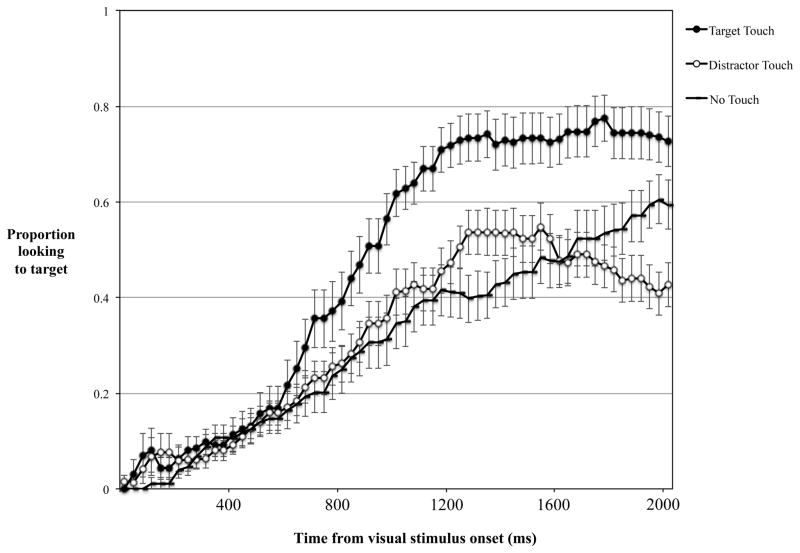

The time-course of eye movements across the different haptic types for the first 2000 ms from visual onset can be seen in the onset-contingency plot (see Figure 2). As predicted, during Target Touches, infants shifted their gaze toward the target image rapidly following the target word. In contrast, during No Touches, infants were slower to fixate the target image. Distractor Touches appear to have an intermediate rising slope; however, at roughly 1300 ms post image onset, looking to the target image plateaus and then shifts toward the distractor.

Figure 2.

Time-course analysis. Each data point represents the mean proportion looking to the target location at every 40 ms interval from the onset of the visual stimulus for each Haptic Type (Target, Distractor, No Touch); error bars show the standard error across participants.
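The time-course measure plotted in Figure 2 can be illustrated with a minimal sketch that samples gaze every 40 ms from image onset and computes the proportion of trials on which gaze is on the target. The data layout (a list of `(onset_ms, offset_ms, code)` fixations per trial), the function names, and the choice to exclude frames in which the infant looked away from both images are assumptions for illustration; the study additionally averaged within participants before plotting, which is omitted here.

```python
def gaze_at(trial, t_ms):
    """Return the gaze code in effect at time t_ms, or 'away' if none applies."""
    for onset, offset, code in trial:
        if onset <= t_ms < offset:
            return code
    return "away"


def proportion_target_by_bin(trials, window_ms=2000, bin_ms=40):
    """Proportion of on-picture trials fixating the target at each time bin.

    Returns a list of (time_ms, proportion) pairs; proportion is None
    when no trial is on either picture at that time.
    """
    curve = []
    for t in range(0, window_ms, bin_ms):
        codes = [gaze_at(trial, t) for trial in trials]
        on_picture = [c for c in codes if c in ("target", "distractor")]
        prop = on_picture.count("target") / len(on_picture) if on_picture else None
        curve.append((t, prop))
    return curve


if __name__ == "__main__":
    # Two hypothetical distractor-initial trials.
    trials = [
        [(0, 800, "distractor"), (800, 2000, "target")],
        [(0, 1200, "distractor"), (1200, 2000, "target")],
    ]
    for t, prop in proportion_target_by_bin(trials)[::10]:
        print(t, prop)
```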

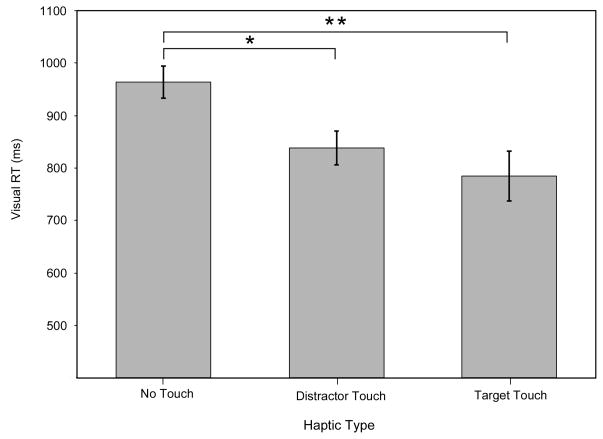

We compared speed of processing across Haptic Types (Target, Distractor, No Touch) using visual RT. Average visual RTs were calculated for distractor-initial trials in which a shift in gaze occurred between 400 – 2000 ms post-visual onset. Visual RT was averaged for each participant by Haptic Type and subjected to a one-way ANOVA. There was a main effect of Haptic Type, F(2, 43) = 6.8, p = .003 (see Figure 3). Planned pairwise comparisons using a Bonferroni correction were conducted on the three levels of Haptic Type. As expected, infants processed the target word significantly faster during Target Touches (M = 784.76, SD = 30.87) compared to No Touches (M = 964.02, SD = 38.14). Interestingly, visual RTs were also significantly faster during Distractor Touches (M = 838.50, SD = 32.72) compared to No Touches. Finally, although visual RTs were faster for Target Touches than for Distractor Touches, this difference did not reach significance. Thus, statistically, infants shifted their gaze equally rapidly on Target and Distractor Touches. To ensure this pattern of effects held for words that children this age had a high probability of knowing, and thus more closely mimic the majority of data collected using the looking-while-listening procedure (Fernald et al., 1998; 2008), we calculated the visual RT for highly familiar words (Dale & Fenson, 1996) in our task (ball, juice, dog, bottle). Consistent with the pattern observed for the full data set, visual RTs for Target Touches were fastest (M = 748 ms), followed by Distractor Touches (M = 787 ms), and finally, No Touches (M = 992 ms).

Figure 3.

Visual RT analysis. Mean visual RT to shift gaze from the distractor to the target image following the onset of the visual stimulus on distractor-initial trials.

Note. Error bars show the standard error across participants. * p < .04, ** p < .01.
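For readers who wish to reproduce this kind of analysis, the sketch below shows the standard between-groups F statistic and a Bonferroni adjustment using only the Python standard library. It treats the per-participant means within each Haptic Type as independent groups, which is an assumption about the design; the function names are hypothetical, and the p-value for F would still need to be obtained from an F distribution table or a statistics package.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a one-way between-groups ANOVA.

    groups: dict mapping a group label to a list of numeric values.
    """
    all_values = [x for values in groups.values() for x in values]
    grand_mean = mean(all_values)
    # Between-groups sum of squares: group size times squared mean deviation.
    ss_between = sum(
        len(values) * (mean(values) - grand_mean) ** 2
        for values in groups.values()
    )
    # Within-groups sum of squares: squared deviations from each group mean.
    ss_within = sum(
        (x - mean(values)) ** 2
        for values in groups.values()
        for x in values
    )
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

With three Haptic Types there are three pairwise comparisons, so each uncorrected p-value would be multiplied by three under this adjustment.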

Relation of Visual, Haptic, and Parent Report Measures

A series of Pearson’s product–moment correlations was performed to analyze the relation between each of our response measures (visual RT and haptic) and MCDI: WG comprehension scores (the parent-reported number of words understood by the child). For these analyses, the haptic measure was calculated in two ways: 1. as the number of Target Touches executed by the child, and 2. as the proportion of Target Touches (i.e., the number of Target Touches divided by the total number of trials completed). Further, the visual RT measure was calculated across haptic response type in two ways: 1. for only those words reported by parents as “known” and 2. for all words. There was no significant relation between visual RT for “known” words and MCDI comprehension, and although the relation between visual RT for all words and MCDI comprehension was in the expected negative direction, the correlation was not significant (r = −.16, p = .29). The correlations for both haptic measures and MCDI comprehension were significant: the proportion of Target Touches (r = .32, p = .03), and the number of Target Touches (r = .32, p = .03). Finally, although the correlations comparing visual RT for all words and both haptic measures were not significant, the correlations between visual RT for “known” words and each haptic measure were significant: proportion of Target Touches (r = −.41, p = .009), and number of Target Touches (r = −.40, p = .01) (see Table 1 for a summary of the correlation results).
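The two haptic measures and the correlation coefficient described above can be sketched as follows. The definitions of the haptic measures follow the text; the function names and argument names are hypothetical, and the Pearson formula is the standard textbook one rather than the output of any particular statistics package.

```python
from math import sqrt


def haptic_measures(n_target_touches, n_trials_completed):
    """Return (number, proportion) of Target Touches for one child."""
    return n_target_touches, n_target_touches / n_trials_completed


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squares about the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)
```

A negative coefficient between visual RT and a haptic measure indicates that children with shorter RTs produced more Target Touches.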

Table 1.

Correlation (r) between visual, haptic, and parent report measures

| Measure | | Visual RT: All Words | Visual RT: “Known” Words | MCDI Comprehension |
|---|---|---|---|---|
| Visual RT | All Words | | | −.16 |
| Visual RT | “Known” Words | | | .004 |
| Haptic | Number of Target Touches | −.12 | −.41** | .31* |
| Haptic | Proportion of Target Touches | −.11 | −.40** | .32* |

\* p < .05

\*\* p < .01

Discussion

In the current study we measured the dynamics of visual attention vis-à-vis haptic responses to examine the relation between two widely accepted measures of young children’s language abilities and to facilitate the interpretation of incorrect in contrast to absent volitional responses. There are several metrics (average fixation duration, total looks, proportion looking, etc.) available to operationalize infant looking behavior. For the current investigation we were interested in linking looking behavior to infants’ underlying lexical-semantic access by using a measure that is largely independent of subsequent action. Therefore, we utilized a micro-level measure of visual attention (visual RT) known to gauge the speed with which infants process word-visual referent pairings. We found that visual RTs to shift gaze from the distractor to the target image varied as a function of whether a reach was subsequently executed (Touch vs. No Touch), but not where the reach was executed (Target vs. Distractor Touch). That is, infants quickly shifted visual attention on trials on which they touched either the target or the distractor image.

Of interest are the implications of these results for the structure of early lexical-semantic knowledge, particularly with respect to whether incorrect and absent volitional responses can be collectively bundled as representing lack of knowledge, or whether each indexes different capabilities in lexical access. A useful first step in understanding potential differences between these response types is to interpret the existence of behavioral dissociations during Distractor Touches (i.e. rapid visual RTs during incorrect haptic responses), but not during No Touches (i.e. visual and haptic behavior converge: slow visual RTs and absent haptic responses).

Traditionally, discrepancies between results obtained visually and haptically have been interpreted as evidence that tasks requiring a haptic response underestimate infant knowledge. Thus, one explanation for why visual RTs are relatively quick during Distractor Touches is that visual measures are more sensitive than haptic measures and therefore more accurate at gauging what infants know. Haptic measures, on the other hand, may systematically underestimate knowledge because of the additional demands of executing an action, which may cause infants to perseverate on a prepotent response (Diamond, 1985; Baillargeon, DeVos, & Graber, 1989; Hofstadter & Reznick, 1996; Gurteen, Horne, & Erjavec, 2011).

Although several researchers have shown that haptic perseveration is common in infant participants (Clearfield, Diedrich, Smith, & Thelen, 2006; Thelen, Schöner, Scheier, & Smith, 2001; Smith, Thelen, Titzer, & McLin, 1999; Munakata, 1998), flexible, goal-directed actions do occur when the input is salient and infants can execute actions without an imposed delay (Clearfield, Dineva, Smith, Diedrich, & Thelen, 2009). Indeed, some researchers have successfully used infants’ haptic responses to gauge and predict language abilities (Friend & Keplinger, 2003, 2008; Ring & Fenson, 2000; Woodward et al., 1994), suggesting that haptic responses are a valid measure of early language.

Another interpretation of the conflicting results across modalities during Distractor Touches is based on the graded representations approach, which suggests that when two response modalities conflict, underlying knowledge may be partial (Morton & Munakata, 2002; Munakata, 1998, 2001; Munakata & McClelland, 2003). Here the notion is that word knowledge is not all-or-none, but exists on a continuum from absence of knowledge, to partial knowledge, to robust knowledge (Durso & Shore, 1991; Frishkoff, Perfetti, & Westbury, 2009; Ince & Christman, 2002; Schwanenflugel, Stahl, & McFalls, 1997; Steele, 2012; Stein & Shore, 2012; Whitmore, Shore, & Smith, 2004; Zareva, 2012). Identifying measures that can gauge word knowledge across this continuum is vital because it has been well documented that infants who demonstrate both delayed language comprehension and production are at the greatest risk for continued language delay and later developmental deficits (Karmiloff-Smith, 1992; Desmarais, Sylvestre, Meyer, Bairati, & Rouleau, 2008; Law, Boyle, Harris, Harkness, & Nye, 2000). On a graded representations account, haptic responses might be in a unique position to gauge these different levels of knowledge. Specifically, the convergence across modalities during correct touches and absent touches reveals the most and least robust levels of comprehension, respectively. On this view, behavioral dissociations emerge during Distractor Touches because knowledge is partial: knowledge is strong enough to support rapid visual RTs, but too fragile to overcome a prepotent haptic response to the first image fixated (the distractor).

Indeed, adult work suggests that incorrect responses can be a good proxy for partial knowledge. For example, in a word-learning paradigm, Yurovsky et al. (2013) found that when word-object pairs for which adults had executed an incorrect haptic response in the first block of testing were reencountered in a subsequent block, word-object identification improved dramatically compared to a group of novel word-object pairs. Thus, while adults failed to encode enough information to support a correct haptic response in the initial test, they encoded partial knowledge, which increased subsequent word learning.

The haptic modality may be particularly susceptible to incorrect responses as a result of partial knowledge because, to execute a correct haptic response, activation from alternative responses must be inhibited (Woolley, 2006). Studies have shown that increases in processing load lead to greater distractor interference when target and distractor stimuli are presented visually (Fockert, 2013; Guy, Rogers, & Cornish, 2012). In the current task, paired images were from within the same category, making competition for activation between the incorrect and correct response particularly strong. Accordingly, this may have fostered greater distractor interference when knowledge of the target word was weak.

Crucially, these two interpretations rely on the notion that quick shifts from the distractor to the target image reflect the speed with which the target word was processed. It is possible that some shifts from the distractor to the target image simply reflect random eye movements that are not guided by speech. We attempted to mitigate the influence of such spurious orienting responses by removing gaze shifts that occurred < 400 ms from coding onset. Further, although it may be argued that using both familiar and more difficult words in the same task weakens the link from visual RT to speed of lexical access, there is evidence that visual RT can measure changes in speed of lexical access in words reported by parents as unknown (Fernald et al., 2006). Specifically, Fernald and colleagues found that visual RTs significantly decreased with age (15 to 25 months) at nearly identical rates for words reported by parents as “known” and as “unknown”. Fernald et al. therefore concluded that visual RT is able to tap into children’s emerging knowledge of words that were presumed to be unknown. Indeed, in the current study we calculated visual RTs on a subset of highly known words as reported by parents and obtained the same pattern of results observed in the full stimulus set.

Finally, it should be considered that although visual RTs were slower when children failed to make a haptic response, this does not exclude the possibility that children “knew” the word, but failed to make a haptic response for reasons unknown (e.g., lack of cooperation). We tried to limit the influence of compliance by including only those children who completed the training phase and by utilizing criteria for ending the task when necessary so that we could be confident of the responses that contributed to the final dataset. If No Touches were a result of noncompliance, one must ask why participants would fail to cooperate on some trials (No Touch) but not on others (Target and Distractor Touch). This, in addition to the visual RT evidence, suggests that absent responses, on the whole, reflect a true inability to successfully discriminate the target from the distractor: all children evinced compliance during the training phase, produced some of each response type (Target, Distractor, and No Touch) during test, and were slowest to shift their gaze to the target on No Touches.

A secondary goal of the present research was to assess the relation between visual, haptic, and parent report measures of early vocabulary. We found no significant relation between comprehension on the MCDI and speed of lexical access as measured by visual RT. These findings are in line with results from Fernald et al. (2006), who found significant correlations between visual RT for “known” words and vocabulary and grammar measures at an older age (25 months), but no significant correlation between visual RT for “known” words and MCDI scores (r = −.21) with similarly aged participants (18 months). These results are consistent with a growing literature suggesting that the relation between visual and parent report measures of early language is highly variable (Fernald et al., 2006; Houston-Price et al., 2007; Marchman & Fernald, 2008; Styles & Plunkett, 2009). Consistent with previous findings from Friend and Keplinger (2008), we found a significant relation between haptic performance (proportion and number of Target Touches) and MCDI comprehension scores.

The finding that haptic performance but not visual performance was significantly correlated with parent report of early word comprehension is somewhat intuitive if we think about the information upon which parent judgments are based. It is likely that explicit kinds of behavioral responses are taken as evidence of comprehension. Indeed, it has been argued that, for this reason, parent reports more accurately estimate children’s productive lexicons (Killing & Bishop, 2008). However, the present research suggests that parents provide a reasonably accurate assessment of robust early vocabulary.

Finally, we conducted a series of comparisons between the visual and haptic measures at the child level to examine whether those children who are faster at processing words are also those children who exhibit better haptic performance. Although we did not find a significant relation between visual RT across all words and the haptic measures (proportion or number of Target Touches), visual RT for “known” words correlated significantly with both haptic measures. This suggests that although visual RT may be a more sensitive measure than haptic performance, which requires more robust understanding, the two measures potentially give us a similar picture of children’s level of lexical skill overall. This is supported by the findings that both visual RT for “known” words and haptic performance are significant predictors of later language abilities. An interesting future question, then, is which measure is more predictive.

Conclusion

The ability to recognize and access the meaning of familiar words gradually increases over the 2nd year of life. It has been suggested that learning the correct referent for a word involves the accumulation of partial knowledge across multiple exposures (Yurovsky et al., 2013). Initially, word recognition may require supporting contextual cues. Eventually stronger, more symbolic representations of word-referent pairings must develop. Consequently, the early lexicon likely consists of both weak (i.e., contextually dependent) and strong (abstract) word representations (Tomasello, 2003). To investigate language acquisition in a developmentally minded way, researchers need to tease partial from fully formed knowledge and latent from active representations. The present results suggest that by implementing testing methods that exclusively measure complete knowledge, or that lump partial with absent knowledge, we may not get the full picture of a developing lexicon. Obtaining a rich understanding of the nature of early vocabulary development may necessitate the use of multiple methodologies and modalities (Woolley, 2006). The present results help to clarify the relation between modalities in indexing early knowledge and contribute both to the literature on early language and to the broader developmental literature on cognition in the second year of life, especially with respect to the graded structure of early knowledge.

In future research, neuroimaging studies using methods such as event-related potentials (ERPs) may be valuable for exploring the strength of word representations that provoke different types of behavioral responses. An interesting question for future work is whether evidence for graded early knowledge obtains neurophysiologically. As Dale and Goodman wrote, “Advances in observational and measurement techniques have often directly stimulated theoretical advances, because they do not simply lead to more precise measurement of what is already studied, but to the observation and measurement of new entities or quantities” (Dale & Goodman, 2005). This nascent evidence for a graded structure in the developing lexicon has implications for connections between language and cognition early in life and motivates new research extending these findings more broadly both behaviorally and neurophysiologically.

Acknowledgments

We are grateful to the participant families and also to Anya Mancillas, Stephanie DeAnda, Brooke Tompkins, Tara Rutter, and Kasey Palmer for assistance in data collection and coding. This research was supported by NIH #HD068458 to the senior author and NIH Training Grant 5T32DC007361 to the first author and does not necessarily represent the views of the National Institutes of Health.

Footnotes

The content is solely the responsibility of the authors and does not necessarily represent the official views of the NICHD or the NIH.

References

- Ahmed A, Ruffman T. Why do infants make A not B errors in a search task, yet show memory for the location of hidden objects in a nonsearch task? Developmental Psychology. 1998;34(3):441. doi: 10.1037//0012-1649.34.3.441.

- Aslin RN. What’s in a look? Developmental Science. 2007;10(1):48–53. doi: 10.1111/J.1467-7687.2007.00563.X.

- Bailey TM, Plunkett K. Phonological specificity in early words. Cognitive Development. 2002;17(2):1265–1282.

- Baillargeon R, DeVos J, Graber M. Location memory in 8-month-old infants in a non-search AB task: Further evidence. Cognitive Development. 1989;4(4):345–367.

- Ballem KD, Plunkett K. Phonological specificity in children at 1; 2. Journal of Child Language. 2005;32(1):159–173. doi: 10.1017/s0305000904006567.

- Bates E, Bretherton I, Snyder L, Beeghly M, Shore C, McNew S, Carlson V, Williamson C, Garrison A, O’Connell B. From first words to grammar: Individual differences and dissociable mechanisms. New York: Cambridge University Press; 1988.

- Behrend DA. Overextensions in early language comprehension: Evidence from a signal detection approach. Journal of Child Language. 1988;15(1):63–75. doi: 10.1017/s0305000900012058.

- Bosch L, Sebastián-Gallés N. Early language differentiation in bilingual infants. Trends in bilingual acquisition. 2001;1:71.

- Clearfield MW, Diedrich FJ, Smith LB, Thelen E. Young infants reach correctly in A-not-B tasks: On the development of stability and perseveration. Infant Behavior and Development. 2006;29(3):435–444. doi: 10.1016/j.infbeh.2006.03.001.

- Clearfield MW, Dineva E, Smith LB, Diedrich FJ, Thelen E. Cue salience and infant perseverative reaching: Tests of the dynamic field theory. Developmental Science. 2009;12(1):26–40. doi: 10.1111/j.1467-7687.2008.00769.x.

- Dale PS, Fenson L. Lexical development norms for young children. Behavior Research Methods, Instruments, & Computers. 1996;28(1):125–127.

- Dale PS, Goodman JC. Commonality and individual differences in vocabulary growth. In: Tomasello M, Slobin D, editors. Beyond nature-nurture: Essays in honor of Elizabeth. 2005.

- Desmarais C, Sylvestre A, Meyer F, Bairati I, Rouleau N. Systematic review of the literature on characteristics of late-talking toddlers. International Journal of Language & Communication Disorders. 2008;43(4):361–389. doi: 10.1080/13682820701546854.

- Diamond A. Development of the ability to use recall to guide action, as indicated by infants’ performance on A B. Child Development. 1985;56:868–883.

- Durso FT, Shore WJ. Partial knowledge of word meanings. Journal of Experimental Psychology: General. 1991;120(2):190.

- Fenson L, Dale PS, Reznick JS, Bates E, Thal DJ, Pethick SJ, Tomasello M, Mervis CB, Stiles J. Variability in early communicative development. Monographs of the Society for Research in Child Development. 1994:i–185.

- Fenson L, Dale PS, Reznick JS, Thal D, Bates E, Hartung JP, Pethick S, Reilly JS. MacArthur Communicative Development Inventories: User’s guide and technical manual. San Diego, CA: Singular Publishing Group; 1993.

- Fernald A, McRoberts G, Herrara C. Prosody in early lexical comprehension. Society for Research in Child Development; Seattle, WA: 1991.

- Fernald A, McRoberts GW, Swingley D. Infants’ developing competence in understanding and recognizing words in fluent speech. In: Weissenborn J, Hoehle B, editors. Approaches to bootstrapping in early language acquisition. Amsterdam: Benjamins; 2001. pp. 97–123.

- Fernald A, Perfors A, Marchman VA. Picking up speed in understanding: Speech processing efficiency and vocabulary growth across the 2nd year. Developmental Psychology. 2006;42(1):98. doi: 10.1037/0012-1649.42.1.98.

- Fernald A, Pinto JP, Swingley D, Weinberg A, McRoberts GW. Rapid gains in speed of verbal processing by infants in the 2nd year. Psychological Science. 1998;9(3):228–231.

- Fernald A, Zangl R, Portillo AL, Marchman VA. Looking while listening: Using eye movements to monitor spoken language. Developmental psycholinguistics: On-line methods in children’s language processing. 2008;44:97.

- Fockert JW. Beyond perceptual load and dilution: a review of the role of working memory in selective attention. Frontiers in Psychology. 2013:4. doi: 10.3389/fpsyg.2013.00287.

- Friend M, Keplinger M. An infant-based assessment of early lexicon acquisition. Behavior Research Methods, Instruments, and Computers. 2003;35(2):302–309. doi: 10.3758/bf03202556.

- Friend M, Keplinger M. Reliability and validity of the Computerized Comprehension Task (CCT): Data from American English and Mexican Spanish Infants. Journal of Child Language. 2008;35:77–98. doi: 10.1017/s0305000907008264.

- Friend M, Schmitt SA, Simpson AM. Evaluating the predictive validity of the Computerized Comprehension Task: Comprehension predicts production. Developmental Psychology. 2012;48(1):136. doi: 10.1037/a0025511.

- Friend M, Zesiger P. Une réplication systématique des propriétés psychométriques du Computerized Comprehension Task dans trois langues. Enfance. 2011;2011(3):329.

- Frishkoff GA, Perfetti CA, Westbury C. ERP measures of partial semantic knowledge: Left temporal indices of skill differences and lexical quality. Biological Psychology. 2009;80(1):130–147. doi: 10.1016/j.biopsycho.2008.04.017.

- Gold JI, Shadlen MN. The neural basis of decision making. Annual Review of Neuroscience. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038.

- Golinkoff RM, Hirsh-Pasek K, Cauley KM, Gordon L. The eyes have it: Lexical and syntactic comprehension in a new paradigm. Journal of Child Language. 1987;14(1):23–45. doi: 10.1017/s030500090001271x.

- Guy J, Rogers M, Cornish K. Developmental Changes in Visual and Auditory Inhibition in Early Childhood. Infant and Child Development. 2012;21(5):521–536.

- Gurteen PM, Horne PJ, Erjavec M. Rapid word learning in 13- and 17-month-olds in a naturalistic two-word procedure: Looking versus reaching measures. Journal of Experimental Child Psychology. 2011;109(2):201–217. doi: 10.1016/j.jecp.2010.12.001.

- Hirsh-Pasek K, Golinkoff RM. The intermodal preferential looking paradigm: A window onto emerging language comprehension. In: McDaniel D, McKee C, Cairns HS, editors. Methods for assessing children’s syntax. Cambridge, MA: MIT Press; 1996. pp. 105–124.

- Hofstadter MC, Reznick JS. Response modality affects human infant delayed-response performance. Child Development. 1996;67:646–58.

- Houston-Price C, Mather E, Sakkalou E. Discrepancy between parental reports of infants’ receptive vocabulary and infants’ behaviour in a preferential looking task. Journal of Child Language. 2007;34(04):701–724. doi: 10.1017/s0305000907008124.

- Ince E, Christman SD. Semantic representations of word meanings by the cerebral hemispheres. Brain and Language. 2002;80(3):393–420. doi: 10.1006/brln.2001.2599.

- Karmiloff-Smith A. Beyond modularity: A developmental perspective on cognitive science. MIT Press; 1992.

- Killing SE, Bishop DV. Move it! Visual feedback enhances validity of preferential looking as a measure of individual differences in vocabulary in toddlers. Developmental Science. 2008;11(4):525–530. doi: 10.1111/j.1467-7687.2008.00698.x.

- Lausberg H, Sloetjes H. Coding gestural behavior with the NEUROGES-ELAN system. Behavior Research Methods. 2009;41(3):841–849. doi: 10.3758/BRM.41.3.841.

- Law J, Boyle J, Harris F, Harkness A, Nye C. Prevalence and natural history of primary speech and language delay: findings from a systematic review of the literature. International Journal of Language & Communication Disorders. 2000;35(2):165–188. doi: 10.1080/136828200247133.

- Libet B, Gleason CA, Wright EW, Pearl DK. Time of conscious intention to act in relation to onset of cerebral activity (readiness-potential) the unconscious initiation of a freely voluntary act. Brain. 1983;106(3):623–642. doi: 10.1093/brain/106.3.623.

- Marchman VA, Fernald A. Speed of word recognition and vocabulary knowledge in infancy predict cognitive and language outcomes in later childhood. Developmental Science. 2008;11(3):F9–F16. doi: 10.1111/j.1467-7687.2008.00671.x.

- Morton JB, Munakata Y. Active versus latent representations: A neural network model of perseveration, dissociation, and decalage. Developmental Psychobiology. 2002;40(3):255–265. doi: 10.1002/dev.10033.

- Munakata Y. Infant perseveration and implications for object permanence theories: A PDP model of the A-not-B task. Developmental Science. 1998;1:161–84.

- Munakata Y. Graded representations in behavioral dissociations. Trends in Cognitive Sciences. 2001;5(7):309–315. doi: 10.1016/s1364-6613(00)01682-x.

- Munakata Y, McClelland JL. Connectionist models of development. Developmental Science. 2003;6:413–429.

- Naigles LG, Gelman SA. Overextensions in comprehension and production revisited: preferential-looking in a study of dog, cat and cow. Journal of Child Language. 1995;22:19–19. doi: 10.1017/s0305000900009612.

- Poulin-Dubois D, Bialystok E, Blaye A, Polonia A, Yott J. Lexical access and vocabulary development in very young bilinguals. International Journal of Bilingualism. 2013;17(1):57–70. doi: 10.1177/1367006911431198.

- Reznick JS. Visual preference as a test of infant word comprehension. Applied Psycholinguistics. 1990;11(02):145–166.

- Ring ED, Fenson L. The correspondence between parent report and child performance for receptive and expressive vocabulary beyond infancy. First Language. 2000;20(59):141–159.

- Robinson CW, Shore WJ, Hull Smith P, Martinelli L. Developmental differences in language comprehension: What 22-month-olds know when their parents are not sure. Poster presented at the International Conference on Infant Studies; Brighton. July, 2000.

- Romo R, Salinas E. Sensing and deciding in the somatosensory system. Current Opinion in Neurobiology. 1999;9(4):487–493. doi: 10.1016/S0959-4388(99)80073-7.

- Ruffman T, Garnham W, Import A, Connolly D. Does eye gaze indicate implicit knowledge of false belief? Charting transitions in knowledge. Journal of Experimental Child Psychology. 2001;80(3):201–224. doi: 10.1006/jecp.2001.2633.

- Schafer G, Plunkett K. Rapid word learning by fifteen-month-olds under tightly controlled conditions. Child Development. 1998;69(2):309–320.

- Schall JD, Thompson KG. Neural selection and control of visually guided eye movements. Annual Review of Neuroscience. 1999;22(1):241–259. doi: 10.1146/annurev.neuro.22.1.241.

- Schwanenflugel PJ, Stahl SA, Mcfalls EL. Partial word knowledge and vocabulary growth during reading comprehension. Journal of Literacy Research. 1997;29(4):531–553.

- Shadlen MN, Newsome WT. Motion perception: seeing and deciding. Proceedings of the National Academy of Sciences. 1996;93(2):628–633. doi: 10.1073/pnas.93.2.628.

- Shinskey JL, Munakata Y. Familiarity Breeds Searching: Infants Reverse Their Novelty Preferences When Reaching for Hidden Objects. Psychological Science. 2005;16(8):596–600. doi: 10.1111/j.1467-9280.2005.01581.x.

- Smith LB, Thelen E, Titzer R, McLin D. Knowing in the context of acting: the task dynamics of the A-not-B error. Psychological Review. 1999;106(2):235. doi: 10.1037/0033-295x.106.2.235.

- Snyder LS, Bates E, Bretherton I. Content and context in early lexical development. Journal of Child Language. 1981;8(3):565–82. doi: 10.1017/s0305000900003433.

- Steele SC. Oral Definitions of Newly Learned Words: An Error Analysis. Communication Disorders Quarterly. 2012;33(3):157–168.

- Stein JM, Shore WJ. What do we know when we claim to know nothing? Partial knowledge of word meanings may be ontological, but not hierarchical. Language and Cognition. 2012;4(3):144–166.

- Styles S, Plunkett K. What is ‘word understanding’ for the parent of a one-year-old? Matching the difficulty of a lexical comprehension task to parental CDI report. Journal of Child Language. 2009;36(04):895–908. doi: 10.1017/S0305000908009264.

- Swingley D, Fernald A. Recognition of words referring to present and absent objects by 24-month-olds. Journal of Memory and Language. 2002;46(1):39–56.

- Thelen E, Schöner G, Scheier C, Smith LB. The dynamics of embodiment: A field theory of infant perseverative reaching. Behavioral and Brain Sciences. 2001;24(01):1–34. doi: 10.1017/s0140525x01003910.

- Thomas DG, Campos JJ, Shucard DW, Ramsay DS, Shucard J. Semantic comprehension in infancy: A signal detection analysis. Child Development. 1981;52:798–803.

- Tomasello M. Constructing a language: A usage-based theory of language acquisition. Cambridge, Massachusetts: Harvard University Press; 2003.

- Trevena JA, Miller J. Cortical movement preparation before and after a conscious decision to move. Consciousness and Cognition. 2002;11(2):162–190. doi: 10.1006/ccog.2002.0548.

- VanRullen R, Thorpe SJ. The time course of visual processing: from early perception to decision-making. Journal of Cognitive Neuroscience. 2001;13(4):454–461. doi: 10.1162/08989290152001880.

- Whitmore JM, Shore WJ, Smith PH. Partial knowledge of word meanings: Thematic and taxonomic representations. Journal of Psycholinguistic Research. 2004;33(2):137–164. doi: 10.1023/b:jopr.0000017224.21951.0e.

- Woodward A, Markman EM, Fitzsimmons C. Rapid word learning in 13- and 18-month-olds. Developmental Psychology. 1994;30:553–566.

- Woolley JD. Verbal–behavioral dissociations in development. Child Development. 2006;77(6):1539–1553. doi: 10.1111/j.1467-8624.2006.00956.x.

- Yurovsky D, Fricker DC, Yu C, Smith LB. The role of partial knowledge in statistical word learning. Psychonomic Bulletin & Review. 2013:1–22. doi: 10.3758/s13423-013-0443-y.

- Zareva A. Partial word knowledge: Frontier words in the L2 mental lexicon. International Review of Applied Linguistics in Language Teaching. 2012;50(4):277–301.