Abstract

Advances in information technology and the near ubiquity of the Internet have spawned novel modes of communication and unprecedented insights into human behavior via the digital footprint. Health behavior randomized controlled trials (RCTs), especially technology-based ones, can leverage these advances to improve the overall clinical trials management process and benefit from improvements at every stage, from recruitment and enrollment to engagement and retention. In this paper, we report the results for recruitment and retention of participants in the SMART study and introduce a new model for clinical trials management that is a result of interdisciplinary team science. The MARKIT model brings together best practices from information technology, marketing, and clinical research into a single framework to maximize efforts for recruitment, enrollment, engagement, and retention of participants in an RCT. These practices may have contributed to the study’s on-time recruitment that was within budget, 86% retention at 24 months, and a minimum of 57% engagement with the intervention over the 2-year RCT. Use of technology in combination with marketing practices may enable investigators to reach a larger and more diverse community of participants to take part in technology-based clinical trials, help maximize limited resources, and lead to more cost-effective and efficient clinical trial management of study participants as modes of communication evolve among the target population of participants.

Keywords: Clinical trial management, marketing, social media, technology, intervention, behavior

Introduction

The internal validity, external validity and feasibility of a clinical study are contingent upon successful recruitment, enrollment, engagement with the intervention, and retention of an appropriate sample from the population under study [1] while participant engagement with the intervention is important for evaluating the efficacy and generalizability of the program under study. Delays in participant recruitment or high rates of dropout post-randomization may lead to uncertainty in treatment effectiveness and may possibly confound results [2]. For example, in the case of a technology-based intervention, the technology may change over time if recruitment is prolonged, potentially leading to artifacts or differential effects in treatment outcomes. Moreover, meeting specified recruitment targets on time is important to ensure the trial begins and ends on time, stays within budgeted costs, and complies with any participant accrual milestones specified by the funding agency. These four elements – recruitment, enrollment, engagement, and retention – form the basis of an active clinical trial. These elements are often perceived as separate, individual parts of a randomized controlled trial (RCT). In this paper, we propose that methods employed upstream for participant recruitment and enrollment influence downstream participant behaviors such as engagement and retention in a technology-based RCT. We use the SMART study, a clinical trial of weight loss for college students, as an example to demonstrate the inter-connectedness of these RCT elements and introduce a new model as a strategy for managing all of these elements in concert.

Systematic, evidence-based methods and strategies for clinical trial management may be under-developed and under-implemented in behavioral and clinical research due to lack of resources, time, and/or personnel. Additionally, developing innovative participant recruitment, enrollment, engagement, and retention methods may benefit from expertise outside of the traditional knowledge and skills of clinical trial research teams. Research and practice-based disciplines that may provide insights into these methods include marketing science and information technology (IT) [3–5].

In clinical research, continuously changing technology has implications for both the effectiveness and efficiency of efforts to recruit, enroll, engage, and retain a target sample of individuals. Effectiveness can be improved by enabling targeted messages to have greater reach and to be delivered to specific groups. Efficiencies in resource utilization (e.g. staff, time, materials) can be derived through technologies with which participants are already familiar. Use of IT and marketing in combination can potentially lead to a shorter recruitment cycle and higher connectivity with participants while decreasing personnel and operations costs. Thus, to effectively and efficiently recruit and retain college students for a weight-loss RCT, we looked to principles and practices in marketing science and IT in combination with traditional practices.

In this paper, we introduce the Marketing and Information Technology (MARKIT) model and describe how it was used in the SMART study to provide a holistic approach to managing these key elements of an RCT. We describe the methods and results of applying the MARKIT model for recruiting, enrolling, engaging, and retaining participants in the SMART intervention. We believe that this model will not only have utility in complete clinical trials management, but will also improve outcomes for each of the aforementioned stages.

Methods

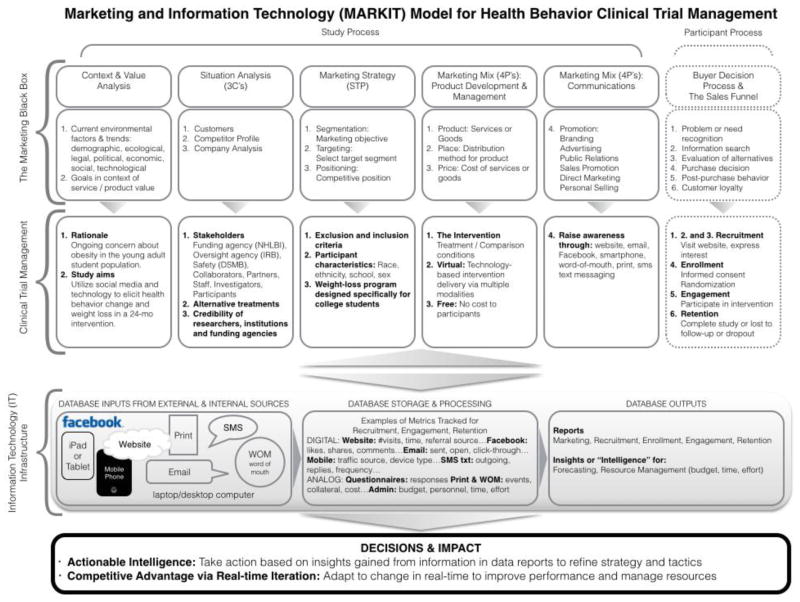

Marketing strategy is typically built by integrating five common frameworks: value and context analysis; 3C situation analysis (customer, competitor, and company analysis); segmentation, targeting, and positioning (STP) for marketing strategy; 4P marketing mix (product, place, price, and promotion); and the buying decision process [6]. The combination of these marketing frameworks is often referred to as “The Marketing Black Box” [7]. We mapped each of the marketing frameworks to activities in an RCT as shown in Figure 1 and propose a nomenclature to use in clinical trial management. We suggest five distinct stages of an RCT: Awareness, Recruitment, Enrollment, Engagement, and Retention. Awareness of a product or service (i.e. the intervention) is raised through advertising. We redefine Recruitment as being equivalent to the first three stages of a buyer decision process: problem recognition, information search, and evaluation of alternatives. It is important to view these activities as their own “stage” to better comprehend behaviors and devise appropriate strategies and messaging to influence those behaviors. Enrollment is the “purchase decision” that the participant reaches, as indicated by completing the informed consent and randomization into the study. Engagement is the participant’s “post-purchase behavior” or level of interaction with the intervention program, while Retention is analogous to the marketing concept of “customer loyalty” or length of time enrolled in the study, which can be until the end of the study or sooner if the participant is lost to follow-up or drops out altogether. In Figure 1, rows one and two describe the marketing framework and row three lists the corresponding clinical trials component. We implemented these marketing frameworks supported by an information technology infrastructure. The Marketing and Information Technology (MARKIT) model served as a “roadmap” for clinical trial management of the SMART study as shown in Figure 1.
Each part of the roadmap is briefly described below.

Figure 1.

Marketing and Information Technology (MARKIT) Model for Health Behavior RCT Management

Context, Value, and Situation Analysis Inform the Marketing Strategy

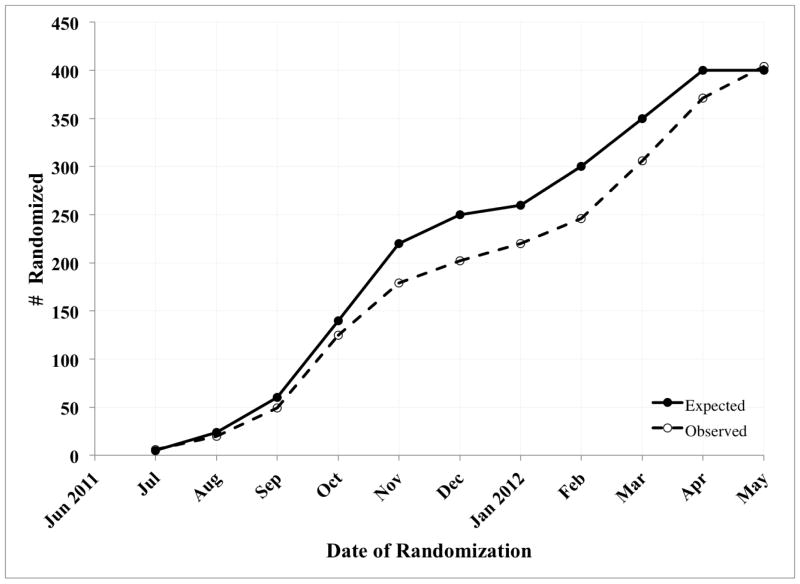

SMART is one of the seven National Institutes of Health, National Heart, Lung and Blood Institute (NIH/NHLBI) studies that comprise The EARLY Trials focused on studying the effectiveness of technology-based interventions in young adults 18–35 years old [8]. The SMART intervention was guided by principles of behavioral theory and iterative design that kept pace with current technological and popular culture trends to ensure the highest levels of engagement and efficacy of intervention with participants. The SMART study was a two-group experimental design with participants equally randomized into either group A or group B, both of which included technology-based elements [9]. The primary outcome of this study was weight-loss at 24 months from baseline measurements. The RCT study protocol was approved by the Institutional Review Board (IRB) at each of the following participating institutions: University of California at San Diego, San Diego State University, and California State University at San Marcos. The SMART study aimed to enroll 400 English-speaking college students from three Southern California universities who were between the ages of 18–35 years with a body mass index (BMI) of 25 < BMI ≤ 34.9 kg/m2, owned a laptop or desktop computer and a mobile phone, and used or were willing to start using Facebook. Additional recruitment criteria common among the EARLY Trials are described elsewhere [8]. Participants were excluded if any of these criteria were not met or if they were not available for 24 months, not able to ambulate unassisted, or non-compliant during the screening stage. A Data Safety Monitoring Board (DSMB) convened bi-annually to advise, audit and oversee participant accrual rates and safety of participants during the clinical trial. NIH accrual policy [1] guided the development of recruitment benchmarks. Accrual rates were reported monthly to the NHLBI project office and the DSMB (Figure 2).

Figure 2.

Expected (solid line) vs. Observed rate of participant accrual (dotted line).

Universities in the San Diego region were selected as the enrollment sites based on diversity of students: San Diego State University (SDSU), University of California at San Diego (UCSD), and California State University at San Marcos (CSUSM). Strategic partnerships were formed with leadership and staff of the student health centers at each of the three institutions and the study was co-branded as a joint-collaboration between the SMART study and the respective student health center. These partnerships lent credibility to the study’s recruitment efforts and allowed staff to gain access to various resources and personnel for advertising and marketing.

In terms of participant characteristics, SDSU has the largest student population and has the highest number of Hispanic students whereas UCSD has the largest Asian-American student population. To balance recruitment from each site, enrollment was stratified based on school, sex, and race/ethnicity. No more than 30% Hispanics were enrolled from SDSU while no more than 30% Asian-Americans were enrolled from UCSD. Sample size for each site was calculated as a ratio of the percent of total students enrolled per site based on 2008 enrollment. The three campuses combined represent approximately 72,000 students. We aimed to recruit 46% of the participants from SDSU, 41% from UCSD, and 13% from CSUSM. A two-, four-, or six-block randomization design was used to assign students to the treatment or comparison groups while keeping the study staff blinded during the randomization process. The study was powered to detect a minimal expected clinically meaningful between-group difference in weight loss of 3 kg, equivalent to 3.75% weight loss for an 80 kg individual. The power calculations accounted for 30% attrition of study participants by 24 months. The recruitment period spanned 12 months, from June 2011 to May 2012. Participants were enrolled on a rolling “first-come-first-served” basis until target sample sizes were met.
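The site targets and the block randomization described above can be illustrated with a minimal sketch. It assumes a single stratum, a target of 400 participants, and block sizes chosen at random from two, four, and six; the function name `permuted_block_sequence` and the seeding are hypothetical and are not taken from the SMART study software.

```python
import random

# Site shares and the 400-participant target come from the text;
# rounding to whole participants is an assumption of this sketch.
SITE_SHARE = {"SDSU": 0.46, "UCSD": 0.41, "CSUSM": 0.13}
TARGET_N = 400
site_targets = {site: round(TARGET_N * share) for site, share in SITE_SHARE.items()}


def permuted_block_sequence(n_participants, block_sizes=(2, 4, 6), seed=None):
    """Build an A/B allocation list from randomly sized, balanced blocks."""
    rng = random.Random(seed)
    sequence = []
    while len(sequence) < n_participants:
        size = rng.choice(block_sizes)  # block size is hidden from staff
        block = ["A"] * (size // 2) + ["B"] * (size // 2)
        rng.shuffle(block)  # random order within the block
        sequence.extend(block)
    # The final block may be cut short, so the arms can differ slightly.
    return sequence[:n_participants]


allocation = permuted_block_sequence(site_targets["SDSU"], seed=2011)
print(site_targets)
print(allocation[:12], allocation.count("A"), allocation.count("B"))
```

Varying the block size makes the next assignment harder to predict than a fixed block would, while each completed block still keeps the two groups balanced. A stratified design would keep one such sequence per stratum.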

Marketing Mix: The Product as the Intervention, Place, and Price

The SMART intervention was guided by principles of behavioral theory and iterative design that kept pace with current technological and popular culture trends to ensure the highest levels of engagement and efficacy of intervention with participants. The SMART study was a two-group experimental design with participants equally randomized into either the treatment group (A) or the comparison group (B), both of which included technology-based elements [9]. The primary outcome of this study was weight loss at 24 months from baseline measurements.

Marketing Mix: Promotion

Awareness of the study was achieved through application of the marketing promotion framework. A combination of traditional and contemporary methods was used to raise awareness of the study. Print materials (flyers, coasters, pens, magnets, postcards, and posters) as well as digital media (TV screens, online ads, email, and a study-specific Facebook page) included a “call to action” to visit the study website and complete an online form. Healthcare providers in the campus student health centers segmented their patient population by BMI based on electronic medical records (EMR) and sent targeted emails containing study information and a direct link to the online interest form. Technology was integrated into print media via QR codes, two-dimensional barcodes that can be read with a QR reader app on a smartphone. Scanning the barcode directed the user to the study website to complete the SMART online interest form.

In-person recruitment was coordinated with real-time monitoring of online interest form submissions. All staff members were equipped with a laptop, iPad, iTouch, or iPhone connected to the Internet via Wi-Fi during recruitment events. Instant capture of interest and data proved to be an effective method of recruitment.

Information Technology Infrastructure

The IT infrastructure formed the basis for collecting data, which provided a view into a user’s digital footprint – the trail of data left by the user by engaging with online media – which in turn provided insights on specific phases of the clinical trial such as recruitment, enrollment, engagement, and retention. We used the digital footprint to derive insights on user behavior and applied them in “real time” to refine our strategy and tactics as necessary. This “actionable intelligence” contributed to forecasting, planning, and resource management.

Thus, these promotional efforts were launched with a comprehensive information technology infrastructure in place. An integrated, flexible, and scalable IT infrastructure with an easy-to-use user interface was developed for study staff and participants. A custom-built database housed data collected from all sources of digital media used for promotion. In addition, a mobile version of the study recruitment website (SMARThealthstudy.com) and use of quick response (QR) codes enhanced the user’s recruitment phase experience to improve the decision making process. User information was captured via the Internet from commonly used mobile devices and web-browsers. The database and website were integrated with a custom-built case management system that housed all participant data and enabled secure access to participant information. These methods were utilized to capture data for all phases of the RCT. Programming code defined by If/Then logic and rules automated the recruitment monitoring of participants in various stages of the process and this integrated IT infrastructure shortened the recruitment cycle. For example, once a participant expressed interest in the study, a personalized link to an online questionnaire was sent. Study staff were able to monitor the online completion of the questionnaire in real-time and send reminders to complete a partially filled form or offer “customer service” support via email to encourage completing the enrollment forms.
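The If/Then recruitment monitoring described above can be sketched as a small rule function. This is an illustration only: the `Prospect` fields, the action names, and the three-day reminder threshold are hypothetical and do not describe the actual SMART case management system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical threshold; the study does not report its reminder timing.
REMINDER_AFTER = timedelta(days=3)


@dataclass
class Prospect:
    interest_submitted: datetime
    link_sent: bool = False
    questionnaire_started: Optional[datetime] = None
    questionnaire_completed: Optional[datetime] = None


def next_action(p: Prospect, now: datetime) -> str:
    """Apply If/Then rules in order and return the next staff or system action."""
    if not p.link_sent:
        return "send_questionnaire_link"  # interest expressed, no link yet
    if p.questionnaire_completed is not None:
        return "advance_to_next_screen"
    if (p.questionnaire_started is not None
            and now - p.questionnaire_started >= REMINDER_AFTER):
        return "send_completion_reminder"  # partially filled form has stalled
    return "wait"


now = datetime(2011, 9, 10)
stalled = Prospect(datetime(2011, 9, 1), True, datetime(2011, 9, 2))
print(next_action(stalled, now))
```

Ordering the rules from earliest to latest stage keeps each prospect in exactly one state, which is what allows the monitoring to run without manual review.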

Recruitment & Enrollment

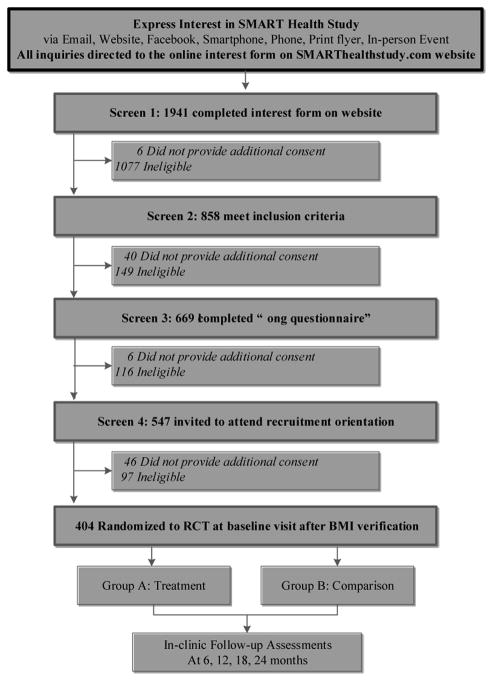

All participants followed a four-stage screening process that served two important functions: (1) to assess a participant’s familiarity with, and ability to use, technology and (2) to serve as a “run-in” to assess a participant’s future compliance in the study (Figure 3) [10, 11]. The SMARThealthstudy.com website served as the primary destination for all study and recruitment inquiries (Figure 3). The online interest form was accessible from all pages of the website, which also detailed requirements for study enrollment such as a pledge and a contract to stay enrolled in the study for 24 months. Eligible participants advanced to screen 2 to be verified as human (versus robot) participants, then completed a long questionnaire at screen 3 followed by a virtual “recruitment orientation” to address any concerns before enrolling in the study. A randomization visit was scheduled to verify self-reported physical measurements and randomize the participant to either group A or B. Informed consent was obtained at each phase of the study that required data collection. An electronic signature via an online consent form was obtained at screen 1 and screen 3. Participants were required to sign a paper consent form prior to randomization, at the baseline visit. In addition, participants were required to consent to continuous capture of their Facebook data for the duration of the study as defined by the terms of service and privacy policy of the SMART study’s custom app. Per features inherent in Facebook’s privacy policy, all participants were responsible for their own privacy on Facebook [12]. To further protect the participants’ privacy, all captured data were stored on electronically secure servers residing in locked server cages, which themselves resided in locked server rooms in buildings with secure access.

Figure 3.

Participant screening and enrollment process

Engagement

The digital interface, delivered via website, Facebook, mobile phone apps, text messaging, and email, provided multiple modes of communication and thus a high frequency of content delivered to the participant, as described elsewhere [9, 13]. Every engagement metric that could be tracked was tracked. Examples of the engagement metrics tracked include: for the website, visits, time on the website, referral source, and number of pages visited; for Facebook, likes, shares, comments, poll responses, and posts; for email, open rate and click-through rate; for SMS text, number of replies; and for apps, the app type, time, date, app-specific interaction, device type, length of time, and frequency of app usage.

Retention

Cash incentives offered to all participants who enrolled in the study and completed measurement visits served to enhance enrollment as well as retention. Incentive payments increased at each subsequent clinic visit such that participants received $20, $25, $30, $40, and $50 for completing the baseline, 6-, 12-, 18-, and 24-month visits, respectively. Although the “window” for measurement visit completion was defined as +/− 30 days from the target visit date, incentive payments were doubled for completing a visit within two weeks of the target visit date. Advance appointment scheduling, consistent follow-up through email and SMS text reminders, and flexible scheduling were part of the retention strategy and were automated via the IT infrastructure. To ensure high retention rates at 24 months, participants were asked at the 18-month visit to write reminder postcards to themselves, which were mailed out one month before the final assessment.
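As a worked example of the incentive schedule, the sketch below computes the payment for a single visit. It assumes that “within two weeks” means no more than 14 days before or after the target date and that any completed visit earns at least the base amount; neither detail is stated in the study description, and the names used are hypothetical.

```python
# Base payments in US dollars by visit month (0 = baseline), from the text.
BASE_PAYMENT_USD = {0: 20, 6: 25, 12: 30, 18: 40, 24: 50}
BONUS_WINDOW_DAYS = 14  # assumed reading of "within two weeks"


def visit_payment(visit_month: int, days_from_target: int) -> int:
    """Return the incentive for one completed visit, doubled if on time."""
    base = BASE_PAYMENT_USD[visit_month]
    if abs(days_from_target) <= BONUS_WINDOW_DAYS:
        return 2 * base
    return base


# A participant who completes every visit on time versus always late.
on_time_total = sum(visit_payment(m, 0) for m in BASE_PAYMENT_USD)
late_total = sum(visit_payment(m, 21) for m in BASE_PAYMENT_USD)
print(on_time_total, late_total)
```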

Results

The results map to the different stages of the buyer decision process: recruitment, enrollment, engagement, and retention.

Recruitment

Digital and print media were used synergistically with the primary aim of driving traffic to the study recruitment website. According to Google website analytics, a total of 11,864 page visits by 7,762 unique visitors were made to the smarthealthstudy.com website in 12 months. Of the total visits, 59% were due to direct traffic, 14% came from search engines, and 27% came from Facebook and other university websites. An estimated 15,000 emails were sent out via university listservs and 40,000 printed materials were distributed in person. The total cost of materials was less than $5,000, excluding personnel costs. Effectiveness of the EMR-targeted emails could not be tracked due to privacy restrictions. As a result of these efforts, 1,941 of the 7,762 unique visitors to the study website (25%) expressed an interest in the study by completing the online interest form. Figure 3 shows results for the remaining screens until randomization.
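The recruitment funnel can be summarized as stage-to-stage conversion rates. The sketch below uses the unique-visitor count reported above and the screen and baseline counts from the column headers of Table 1; the stage labels and the function name `step_conversion` are illustrative choices, not study terminology.

```python
# Counts taken from the text (visitors) and the Table 1 column headers.
funnel = [
    ("Unique website visitors", 7762),
    ("Screen 1 (interest form)", 1941),
    ("Screen 2", 858),
    ("Screen 3", 669),
    ("Screen 4", 547),
    ("Randomized at baseline", 404),
]


def step_conversion(stages):
    """Return (stage name, share of the previous stage that reached it)."""
    rates = []
    for (_, prev_n), (name, n) in zip(stages, stages[1:]):
        rates.append((name, n / prev_n))
    return rates


rates = step_conversion(funnel)
overall = funnel[-1][1] / funnel[0][1]  # visitors who were randomized

for name, rate in rates:
    print(f"{name}: {rate:.1%} of previous stage")
print(f"Overall: {overall:.1%} of unique visitors were randomized")
```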

Enrollment

Forecasted accrual rates varied based on assumptions made for college-based recruitment, as described by the “conditional model” for forecasting accrual rates [14]. Consequently, accrual benchmarks do not follow a linear accrual model (Figure 2). Based on the students’ academic calendar, lower accrual rates were estimated for the summer and winter breaks (Figure 2). Thus, although the recruitment period spanned 12 months, the actual active time for recruitment was eight months. The observed accrual rate closely followed the expected projections, resulting in enrollment of 404 students between May 2011 and May 2012.

A total of 404 young adults between 18–35 years of age who met the inclusion criteria without any exclusions were randomized on a rolling basis into one of the two groups (Figure 3). Actual participant enrollment by site was close to the set targets, with 152 (38%) from SDSU, 204 (50%) from UCSD, and 48 (12%) from CSUSM.

Characteristics of participants as they progressed through stages of screening until randomization into the study at baseline are shown in Table 1; there were no significant differences by condition. The mean ± SD age of the sample was 22.7 ± 4 years and 284 (70%) were female. More participants were in the overweight category than in the obese BMI category. At least 126 (31%) of the sample were of Hispanic origin. Mobile device ownership data show that 403 (99.5%) of the randomized participants owned a smartphone, with iPhone and Android being the most commonly used devices (61%). While all participants randomized to the study owned either a laptop or desktop computer, we report data for 390 participants. Data on the specific type of computer ownership for 14 participants were not captured due to technical issues. Of the 390 participants with computer ownership data, 80.3% owned a laptop and 17.2% owned both a laptop and desktop computer. There were no significant differences in device ownership between the treatment and comparison groups. Further analysis of participants at each screening stage confirmed that the sample characteristics were conserved from screen 1 through randomization with one exception: BMI. Normal-weight individuals accounted for 45% of those who initially expressed interest in the study.

Table 1.

Participant characteristics

| Variable | Screen 1 N=1941 (%) | Screen 2 N=858 (%) | Screen 3 N=669 (%) | Screen 4 N=547 (%) | Baseline N=404 (%) | At 24 Mo N=388 (%) |
|---|---|---|---|---|---|---|
| Mean Age (± SD) Years | 22.4 (4.6) | 22.0 (3.7) | 22.0 (3.7) | 22.0 (3.6) | 22.7 (3.8) | 22.6 (3.7) |
| <18 | 239 (12) | 102 (12) | 80 (12) | 67 (12) | 0 (0) | 0 (0) |
| 18–22 | 1076 (55) | 486 (57) | 372 (56) | 299 (55) | 266 (66) | 257 (66) |
| 23–27 | 399 (21) | 181 (21) | 146 (22) | 127 (23) | 92 (23) | 88 (23) |
| 28–33 | 161 (8) | 76 (9) | 60 (9) | 46 (8) | 39 (10) | 37 (10) |
| 33–35 | 30 (2) | 12 (1) | 10 (1) | 7 (1) | 6 (1) | 6 (2) |
| >35 | 34 (2) | 0 (0) | 0 (0) | 0 (0) | 0 (0) | 0 (0) |
| Sex: Female | 1447 (75) | 594 (69) | 479 (72) | 386 (71) | 284 (70) | 272 (70) |
| Ethnicity: Hispanic | 580 (30) | 278 (32) | 205 (31) | 165 (30) | 126 (31) | 121 (31) |
| Race | | | | | | |
| African Am/Black | 107 (6) | 47 (5) | 39 (6) | 26 (5) | 20 (5) | 20 (5) |
| Am Indian/Alaska/Pac Is | 91 (5) | 43 (5) | 29 (4) | 23 (4) | 19 (5) | 18 (5) |
| Asian | 493 (25) | 218 (25) | 183 (27) | 154 (28) | 109 (27) | 107 (28) |
| Caucasian/White | 884 (46) | 362 (42) | 284 (42) | 238 (44) | 182 (45) | 175 (45) |
| Other | 382 (20) | 192 (22) | 144 (22) | 109 (20) | 74 (18) | 68 (18) |
| Mean BMI, kg/m2 (±SD) | 29 (3) | | | | | |
| Normal (18.5–24.99) | 870 (45) | 22 (3) | 19 (3) | 15 (3) | 0 (0) | |
| Overweight (25–29.99) | 672 (35) | 611 (71) | 464 (69) | 380 (69) | 270 (67) | |
| Obese Class I (30–34.99) | 254 (13) | 216 (25) | 178 (27) | 144 (26) | 118 (29) | |
| Obese Class II–III (>35) | 145 (7) | 9 (1) | 8 (1) | 8 (1) | 0 (0) | |
| University | | | | | | |
| UCSD | 785 (42) | 413 (49) | 340 (51) | 284 (52) | 204 (50) | 198 (51) |
| SDSU | 899 (48) | 345 (41) | 251 (38) | 202 (37) | 152 (38) | 147 (38) |
| CSUSM | 173 (9) | 93 (11) | 73 (11) | 61 (11) | 48 (12) | 45 (12) |
| Mobile Device Ownership | | | | | | |
| Smartphone: iPhone | 558 (29) | 223 (26) | 180 (27) | 140 (26) | 104 (26) | |
| Smartphone: Android | 612 (32) | 326 (38) | 245 (37) | 186 (34) | 142 (35) | |
| Smartphone: Other/Unsure | 770 (40) | 309 (36) | 244 (36) | 221 (40) | 157 (39) | |
| iPad | 147 (8) | 69 (8) | 56 (8) | 46 (8) | 33 (8) | |
| iTouch | 455 (23) | 178 (21) | 152 (23) | 124 (23) | 91 (23) | |
| Computer Ownership | | | | | | |
| Type: Desktop | 68 (4) | 26 (3) | 22 (3) | 16 (3) | 10 (3) | |
| Type: Laptop | 1443 (74) | 633 (74) | 504 (75) | 419 (77) | 313 (80) | |
| Type: Both | 305 (16) | 128 (15) | 104 (16) | 89 (16) | 67 (17) | |

Engagement

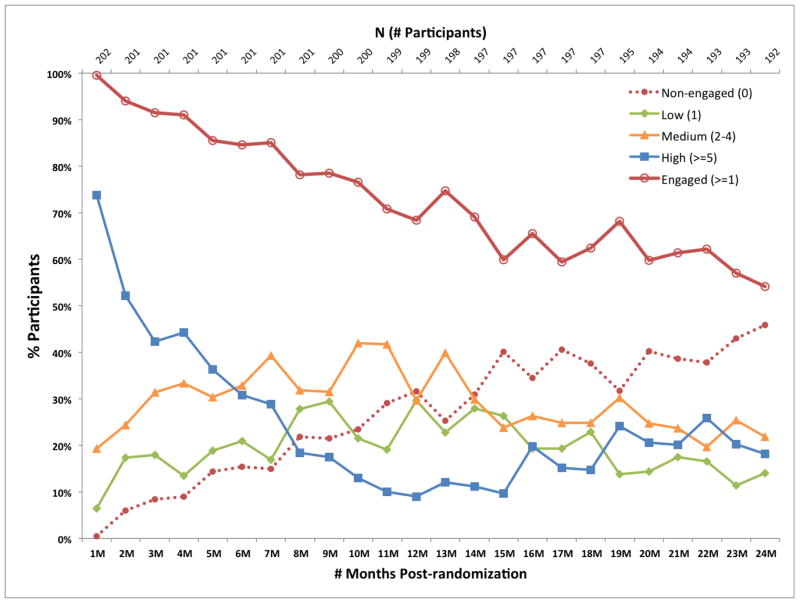

Engagement with the intervention was defined as the participant having at least one interaction with any one of the virtual channels (SMS text, Facebook, mobile or desktop apps, website, or email) in a week. Additional levels of engagement were defined to differentiate between low (at least one interaction per week), medium (2–4 interactions per week), and high (five or more interactions per week) engagers. At least 57% of the treatment group participants engaged with the intervention one or more times per week for 24 months (Figure 4). “High” engagers accounted for an average of approximately 20% of the treatment group participants throughout the course of the 24-month intervention period. The proportion of non-engaged participants increased steadily over 24 months, reaching a maximum of 46%.
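The engagement levels can be expressed as a simple classification of weekly interaction counts. In this sketch, “low” is read as exactly one interaction per week, since the medium level starts at two; that reading, the label “none” for non-engaged participants, and the function names are assumptions made for illustration.

```python
from collections import Counter

LEVELS = ("none", "low", "medium", "high")


def engagement_level(interactions_per_week: int) -> str:
    """Map a weekly interaction count across all channels to a level."""
    if interactions_per_week <= 0:
        return "none"
    if interactions_per_week == 1:  # assumed reading of the "low" level
        return "low"
    if interactions_per_week <= 4:
        return "medium"
    return "high"


def weekly_summary(counts):
    """Return the share of participants at each level for one week."""
    if not counts:
        raise ValueError("counts must contain at least one participant")
    tally = Counter(engagement_level(c) for c in counts)
    return {level: tally.get(level, 0) / len(counts) for level in LEVELS}


# Made-up counts for eight participants in a single week.
example_week = [0, 1, 3, 7, 0, 2, 5, 1]
summary = weekly_summary(example_week)
print(summary, 1 - summary["none"])
```

The weekly engaged share is one minus the “none” share, which corresponds to the quantity reported over the 24-month period.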

Figure 4.

Engagement and retention of participants in the SMART study

Retention

Retention was defined as the number of participants who completed a measurement visit either within or out of a +/− 30-day window. As summarized in Table 2, the number of completed visits was highest at six months (94.3%) and steadily declined to 86% at 24 months when weight, physical measurements, and survey data were collected on 337 participants. Pregnancies accounted for 1.5% of study terminations and voluntary withdrawal totaled 2%.

Table 2.

Retention of participants in the SMART study

| | 6 months | 12 months | 18 months | 24 months |
|---|---|---|---|---|
| Total Visits Expected | 404 | 401 | 399 | 394 |
| Total Visits Occurred | 381 (94.3%) | 377 (94.0%) | 347 (87.0%) | 337 (85.5%) |
| In Window | 367 (96.3%) | 365 (96.8%) | 326 (93.9%) | 285 (84.6%) |
| Out of Window | 14 (3.67%) | 12 (3.18%) | 21 (6.05%) | 52 (15.4%) |
| Total Visits Missed | 20 (5.0%) | 22 (5.5%) | 47 (11.8%) | 53 (13.5%) |
| Total No. Terminated | 3 (0.74%) | 2 (0.50%) | 5 (1.25%) | 4 (1.02%) |
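The percentages in Table 2 can be re-derived from the raw counts. The sketch below assumes, based on the counts themselves, that retention is visits occurred divided by visits expected, that the in-window share is taken over visits that occurred, and that expected visits equal occurred plus missed plus terminated at each time point.

```python
# Counts copied from Table 2, in order of the 6-, 12-, 18-, and 24-month visits.
months = [6, 12, 18, 24]
expected = [404, 401, 399, 394]
occurred = [381, 377, 347, 337]
in_window = [367, 365, 326, 285]
missed = [20, 22, 47, 53]
terminated = [3, 2, 5, 4]

# Retention: share of expected visits that occurred.
retention_pct = [round(100 * o / e, 1) for o, e in zip(occurred, expected)]

# In-window share: visits within +/- 30 days, among visits that occurred.
in_window_pct = [round(100 * w / o, 1) for w, o in zip(in_window, occurred)]

# Accounting check: every expected visit occurred, was missed, or was terminated.
balanced = [e == o + m + t
            for e, o, m, t in zip(expected, occurred, missed, terminated)]

for row in zip(months, retention_pct, in_window_pct, balanced):
    print(row)
```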

We conducted a satisfaction survey at the 24-month time point to assess the participants’ satisfaction with the SMART study and obtained responses from 323 participants, with 170 from the comparison group and 153 from the treatment group. In response to the question “How satisfied are you overall with the weight-loss program you received from the SMART Study?” 57.6% of the participants responded with “somewhat satisfied” compared to 13.9% who were “very satisfied” on a four-point satisfaction scale (1 - Very dissatisfied, 2 - Somewhat dissatisfied, 3 - Somewhat satisfied, 4 - Very satisfied). The proportion of “very satisfied” respondents was significantly higher in the treatment group (19%) than in the comparison group (8.8%). In response to the question “Would you recommend the weight-loss program you received from the SMART Study to others?”, 51.1% of the respondents “probably would” recommend the SMART study on a four-point recommendation scale (1 - Definitely Not, 2 - Probably Not, 3 - Probably Would, 4 - Definitely Would), and treatment group participants were more likely than comparison group participants to “definitely” recommend the program.

Discussion

This paper proposes and describes the MARKIT Model and its application to recruitment, enrollment, engagement, and retention of participants in a technology-based clinical trial. Development of this model was informed by principles of marketing science, advances in information technology, current practices in clinical trials, and behavioral science research. We identified marketing frameworks such as 3C, 4P, STP and the buyer decision process and mapped them to clinical trial processes. By integrating IT into marketing, we created a system for capturing the user’s digital footprint. We used the digital footprint in context of RCT activities to drive decisions for forecasting, resource planning, and just-in-time iteration of recruitment strategies and tactics. We also used the MARKIT Model to propose a nomenclature for recruitment as well as other processes involved in RCT management. The MARKIT model contributes to behavioral science research and existing recruitment practices because it provides a framework for recruitment, enrollment, engagement, and retention of study participants.

Marketing is the process of creating value for a customer by developing a product or service and communicating that value to the customer through various media channels. Marketing also includes deriving value from the customer for the organization through metrics and analytics [15]. There are numerous channels for communicating value such as “offline” media, which include print media (e.g. newspapers, magazines, flyers), radio, and television [2] [16] [17]. Advances in technology such as the Internet, Web 2.0, wireless communications, and mobile phones offer even more alternatives for communicating and have now created an entirely new media category called “online” media as well as the field of information technology. Information technology is defined as the application of computers and telecommunications equipment to “store, retrieve, transmit and manipulate data” [18]. As a result of decreased costs, widely available high-speed broadband access, and multiple modalities of access, the Internet provides a creative, interactive, instant, and innovative way for users to connect to information [19]. Email, websites, social networks such as Facebook, video sharing sites such as YouTube, text messaging and instant messaging via online chat on mobile phones, and search engines such as Google are a few examples of how the Internet is used to connect to information [20–23].

The concept of a “systems approach to marketing” was first introduced by Bell in 1966 [24] and defined as a group of interrelated components where (1) any elements outside the relationship are part of the environment and where (2) the system can be influenced by components inside and outside the system. Bell described marketing systems as open and dynamic. Today, the interdependence of marketing and the Internet forms the basis for the modern “systems approach to marketing”. The resulting system is dynamic because user behaviors and the information exchanged via the Internet are constantly changing elements of the system. These systems are enhanced by information technologies that enable detailed tracking of a user’s online or “digital” behavior by capturing detailed usage metrics, also known as the “digital footprint”, that are analyzed for patterns and other insights into the users’ behavior [4]. The application of these insights to make strategic and tactical decisions in order to improve an organization’s competitive position is often referred to as “actionable intelligence” [6]. Marketing literature suggests that technology-driven communications and synergy between analog (i.e. offline) and digital (i.e. online) communication channels are important because all modes of communication are consumed simultaneously, a concept known as integrated marketing communications (IMC) [25–29]. This integration of information technology with marketing has allowed marketing to be studied as a science by way of the scientific method through hypothesis testing and experimentation [30].

College students experience weight gain and obesity that result from common modifiable behaviors and increase the risk for chronic diseases [31]. Data from a Pew study suggested that 95% of adults 18–33 years old went online during the 2010 calendar year [32]. In 2012, 78% of the US population used the Internet [33], including 97% of 18–29-year-olds and 91% of 30–49-year-olds [34]. Almost 100% of college students accessed the Internet regularly in 2011 [35]. The SMART intervention leveraged social media and mobile technologies popular among young adults, such as Facebook, text messaging, and applications (apps) on mobile phones, to promote weight loss [9]. The recruitment strategy also leveraged technology, contemporary communications trends, marketing, and proven best practices in behavioral science clinical research to ensure recruitment of a representative sample for this study.

Previously published reports show that although recruitment of participants to behavioral interventions has traditionally been accomplished through offline media, use of online media is becoming more common because they are proving to be more cost-effective, scalable, interactive, personalizable, and tractable in comparison to offline media. Our data support these findings. The ubiquitous penetration and use of the Internet is significant because it makes communication interactive (via social and mobile media channels) and provides the ability to target and track individual behaviors at a granular level [36]. Marketing principles, as well as information technology, have been applied to clinical trial recruitment [3]. However, to our knowledge, there are no reports of approaches like the one described herein, in which marketing practices, integrated with information technology-based insights for decision-making, lead to immediate changes in recruitment, enrollment, engagement, and retention activities. The MARKIT Model fills this gap in the literature.

Weight-loss interventions are notorious for long recruitment phases, low enrollment, and high attrition rates [37]. Recruitment times can range from 12 to 24 months [38] [39], while attrition rates can range from 10% to 80% for weight-loss studies [40]. Weight-loss interventions also suffer from low enrollment rates, especially among the young adult population [41]. In comparison, the SMART study completed recruitment in less than 12 months, with a retention rate of 85.5% at 24 months and 60% participant engagement throughout the two-year RCT. This level of continued participation exceeds the retention and engagement previously reported for 24-month RCTs and suggests that the MARKIT model is feasible and acceptable.

The MARKIT model is not without limitations. This model was applied to recruit, enroll, engage, and retain a sample of tech-savvy young adults who were college students and constantly connected to the Internet. While the proposed marketing frameworks and the underlying technology infrastructure are generalizable, the actual communications marketing mix will vary for each population type depending on the results of the situation analysis derived from the 3C’s. As such, the marketing strategy and the tactics will vary. For example, applying the MARKIT Model to engage senior citizens or pre-adolescent children in clinical trials may rely more on the traditional offline communication strategies. Additionally, use of online social networks among different target populations varies.

A second limitation to consider is the availability of personnel with domain expertise in marketing and IT systems. Building an information technology infrastructure that is integrated with marketing requires staff with specialized skills more often found in the private sector. Hiring staff with these skills can be challenging in academic environments.

Finally, the generalizability of these findings to less technology-literate samples is unclear. Although penetration of the Internet is nearly ubiquitous, as is mobile phone adoption, actual usage of technology varies depending on knowledge, exposure, perceived comfort, and other factors.

Due to fewer opportunities for face-to-face interactions with participants, technology-related interventions impose special challenges for recruitment at the onset of a study. It is not sufficient to simply meet recruitment goals and enrollment targets because, post-enrollment, participants must engage and remain in the study. To adequately assess the effectiveness of an intervention, the participant must not only engage with the elements of the intervention as “prescribed” but also remain enrolled in the study until its conclusion. Therefore, it is imperative to consider the effect of recruitment efforts on participant engagement and retention and to recruit the appropriate participants. Recruiting participants in an engaging way may serve to keep participants interacting with the intervention over the long term. In this way, recruitment methods are related to engagement and retention.

The MARKIT Model describes the relevance of marketing to the conduct of RCTs. The attractiveness of the methods used to recruit participants may affect the intensity of their engagement and the duration of their enrollment in the study. This is a hypothesis we propose to test in future studies.

Conclusion

Health behavior research scientists interested in technology-based interventions can benefit from utilizing the MARKIT model. By combining traditional management methods with online marketing and a well-designed IT infrastructure, clinical scientists will have a scalable method for recruiting and enrolling the right participant. These early successes will translate into long-term gains because these participants will be appropriately matched to partake in a technology-based intervention. They will be more likely to engage with the various technological elements of the intervention and remain in the study. Practices from marketing such as context and situation analysis, segmentation, targeting, positioning, the marketing mix, and the consumer buying process as well as development of a strategic and tactical management plan can serve as a roadmap for recruitment, enrollment, engagement, and retention. Our proposed MARKIT model provides a new tool for overall management of participants volunteering in technology-based RCTs for future studies.

Acknowledgments

We would like to thank the following individuals and institutions for collaborating in the recruitment of student participants on their campuses: Karen Nicholson, MD MPH, Medical Director of Student Health and Counseling Services and her staff at California State University San Marcos; Maria Hanger, PhD, Director of Student Health Services and her staff at San Diego State University; Regina Fleming, MD MSPH, Director of Student Health Services and her staff at University of California, San Diego. This study was supported by NHLBI/NIH grant U01 HL096715.

References

- 1.Accrual of human subjects (milestones) policy. 2014 Available from: http://www.ncbi.nlm.nih.gov/pubmed/

- 2.Watson JM, Torgerson DJ. Increasing recruitment to randomised trials: a review of randomised controlled trials. BMC Med Res Methodol. 2006;6:34. doi: 10.1186/1471-2288-6-34.

- 3.McDonald AM, Shakur H, Free C, Knight R, Speed C, Campbell MK. Using a business model approach and marketing techniques for recruitment to clinical trials. Trials. 2011;12:74. doi: 10.1186/1745-6215-12-74.

- 4.Trainor KJ, Rapp A, Beitelspacher LS, Schillewaert N. Integrating information technology and marketing: an examination of the drivers and outcomes of e-Marketing capability. Ind Mark Manag. 2011;40:162–174.

- 5.Wang ETG, Hub H, Huc PJ. Examining the role of information technology in cultivating firms’ dynamic marketing capabilities. The 9th International Conference on Electronic Business; 2009.

- 6.Kotler P, Armstrong G. Principles of marketing. 15. Prentice Hall; 2013. p. 720.

- 7.Sandhusen RL. Marketing. 2008:624.

- 8.Lytle LA, Patrick K, Belle SH, Fernandez ID, Jakicic JM, Johnson KC, Olson CM, Tate DF, Wing R, Loria CM. The EARLY trials: a consortium of studies targeting weight control in young adults. Transl Behav Med. 2014;4(3):304–313. doi: 10.1007/s13142-014-0252-5.

- 9.Patrick K, Marshall SJ, Davila EP, Kolodziejczyk JK, Fowler JH, Calfas KJ, Huang JS, Rock CL, Griswold WG, Gupta A, Merchant G, Norman GJ, Raab F, Donohue MC, Fogg BJ, Robinson TN. Design and implementation of a randomized controlled social and mobile weight loss trial for young adults (project SMART) Contemp Clin Trials. 2014;37(1):10–18. doi: 10.1016/j.cct.2013.11.001.

- 10.Berger VW, Rezvani A, Makarewicz VA. Direct effect on validity of response run-in selection in clinical trials. Control Clin Trials. 2003;24(2):156–166. doi: 10.1016/s0197-2456(02)00316-1.

- 11.Ulmer M, Robinaugh D, Friedberg JP, Lipsitz SR, Natarajan S. Usefulness of a run-in period to reduce drop-outs in a randomized controlled trial of a behavioral intervention. Contemp Clin Trials. 2008;29(5):705–710. doi: 10.1016/j.cct.2008.04.005.

- 12.Facebook.com. Facebook privacy basics [Facebook Privacy Policy]. 2015 [cited 03/23/2015]. Available from: https://www.facebook.com/about/basics/

- 13.Merchant G, Weibel N, Patrick K, Fowler JH, Norman GJ, Gupta A, Servetas C, Calfas K, Raste K, Pina L, Donohue M, Griswold WG, Marshall S. Click “Like” to change your behavior: a mixed methods study of college students’ exposure to and engagement with Facebook content designed for weight loss. J Med Internet Res. 2014;16(6):e158. doi: 10.2196/jmir.3267. http://www.jmir.org/2014/6/e158/

- 14.Barnard KD, Dent L, Cook A. A systematic review of models to predict recruitment to multicentre clinical trials. BMC Med Res Methodol. 2010;10:63. doi: 10.1186/1471-2288-10-63.

- 15.Gundlach GTWWL. The American Marketing Association’s new definition of marketing: perspective and commentary on the 2007 revision. 2013. http://dx.doi.org/10.1509/jppm.28.2.259.

- 16.Sarkin JA, Marshall SJ, Larson KA, Calfas KJ, Sallis JF. A comparison of methods of recruitment to a health promotion program for university seniors. Prev Med. 1998;27(4):562–571. doi: 10.1006/pmed.1998.0327.

- 17.Lovato LC, Hill K, Hertert S, Hunninghake DB, Probstfield JL. Recruitment for controlled clinical trials: literature summary and annotated bibliography. Control Clin Trials. 1997;18(4):328–352. doi: 10.1016/s0197-2456(96)00236-x.

- 18.Brynjolfsson EH, Lorin M. Beyond computation: information technology, organizational transformation and business performance. J Econ Perspect. 2000;14(4):23–48.

- 19.Zickhur KSA. Digital differences. 2012.

- 20.Graham AL, Milner P, Saul JE, Pfaff L. Online advertising as a public health and recruitment tool: comparison of different media campaigns to increase demand for smoking cessation interventions. J Med Internet Res. 2008;10(5):e50. doi: 10.2196/jmir.1001.

- 21.McKay HG, Danaher BG, Seeley JR, Lichtenstein E, Gau JM. Comparing two web-based smoking cessation programs: randomized controlled trial. J Med Internet Res. 2008;10(5):e40. doi: 10.2196/jmir.993.

- 22.Fenner Y, Garland SM, Moore EE, Jayasinghe Y, Fletcher A, Tabrizi SN, Gunasekaran B, Wark JD. Web-based recruiting for health research using a social networking site: an exploratory study. J Med Internet Res. 2012;14(1):e20. doi: 10.2196/jmir.1978.

- 23.Jones RB, Goldsmith L, Williams CJ, Boulos MNK. Accuracy of geographically targeted internet advertisements on Google AdWords for recruitment in a randomized trial. J Med Internet Res. 2012;14(3):e84. doi: 10.2196/jmir.1991.

- 24.Bell ML. Marketing: concepts and strategy. Houghton Mifflin; Boston: 1966.

- 25.Schultz DE. The inevitability of integrated communications. 1996;37(3):139–146.

- 26.Ewing MT. Integrated marketing communications measurement and evaluation. 2009 http://dx.doi.org/10.1080/13527260902757514.

- 27.Naik PA, Kay P. A hierarchical marketing communications model of online and offline media synergies. J Interact Mark. 2009;23.

- 28.Schultz D, Emma M, Baines Paul. WARC Best Practice. 2012. Nov, Best practice: integrated marketing communications; 44 pp.45 pp.

- 29.Voorveld HAM, Neijens PC, Smit EG. The interacting role of media sequence and product involvement in cross-media campaigns. 2011 http://dx.doi.org/10.1080/13527266.2011.567457.

- 30.Blattberg RC, Glazer R, Little JDC. The marketing information revolution. Harvard Business School Press; 1994. p. 373.

- 31.Kang J, Ciecierski CC, Malin EL, Carroll AJ, Gidea M, Craft LL, Spring B, Hitsman B. A latent class analysis of cancer risk behaviors among U.S. college students. Prev Med. 2014;64:121–125. doi: 10.1016/j.ypmed.2014.03.023.

- 32.Zickhur K. Generations. 2010 (@pewinternet)

- 33.internetworldstats. Internet usage statistics, population and telecom reports for the Americas. 2014 Available from: http://www.internetworldstats.com/stats2.htm-americas.

- 34.Zickhur KMM. Older adults and internet use. 2012.

- 35.Smith A, RL, Zickhur K. College students and technology. 2011.

- 36.Winer RS. New communications approaches in marketing: issues and research directions. J Interact Mark. 2009;23(2).

- 37.Spring B, Sohn M, Locatelli SM, Hadi S, Kahwati L, Weaver FM. Individual, facility, and program factors affecting retention in a national weight management program. BMC Public Health. 2014;14 doi: 10.1186/1471-2458-14-363.

- 38.Jakicic JM, Tate DF, Lang W, Davis KK, Polzien K, Rickman AD, Erickson K, Neiberg RH, Finkelstein EA. Effect of a stepped-care intervention approach on weight loss in adults: a randomized clinical trial. JAMA. 2012;307(24):2617–2626. doi: 10.1001/jama.2012.6866.

- 39.Griffin HJ, O’Connor HT, Rooney KB, Steinbeck KS. Effectiveness of strategies for recruiting overweight and obese Generation Y women to a clinical weight management trial. Asia Pac J Clin Nutr. 2013;22(2):235–240. doi: 10.6133/apjcn.2013.22.2.16.

- 40.Moroshko I, Brennan L, O’Brien P. Predictors of dropout in weight loss interventions: a systematic review of the literature. Obes Rev. 2011;12(11):23. doi: 10.1111/j.1467-789X.2011.00915.x.

- 41.Gokee-LaRose J, Gorin AA, Raynor HA, Laska MN, Jeffery RW, Levy RL, Wing RR. Are standard behavioral weight loss programs effective for young adults? Int J Obes (Lond) 2009;33(12):1374–1380. doi: 10.1038/ijo.2009.185.