Abstract

The Animate Monitoring Hypothesis proposes that humans and animals were the most important categories of visual stimuli for ancestral humans to monitor, as they presented important challenges and opportunities for survival and reproduction; however, it remains unknown whether animal faces are located as efficiently as human faces. We tested this hypothesis by examining whether human, primate, and mammal faces elicit similarly efficient searches, or whether human faces are privileged. In the first three experiments, participants located a target (human, primate, or mammal face) among distractors (non-face objects). We found fixations on human faces were faster and more accurate than primate faces, even when controlling for search category specificity. A final experiment revealed that, even when task-irrelevant, human faces slowed searches for non-faces, suggesting some bottom-up processing may be responsible for the human face search efficiency advantage.

Keywords: face detection, attention, visual search, search efficiency, eye tracking, human face, animal faces

Visual Search Efficiency is Greater for Human Faces Compared to Animal Faces

Items in the environment that are of high biological significance appear to be located more efficiently than other items (e.g., Jackson, 2013; Öhman, 2007; Öhman, Flykt, & Esteves, 2001; Öhman, Soares, Juth, Lindström, & Esteves, 2012), though the proximate cause of this efficiency is debated (e.g., Horstmann & Bauland, 2006; Horstmann, Becker, Bergmann, & Burghaus, 2010; Horstmann, Lipp, & Becker, 2012; Yantis & Egeth, 1999). From an evolutionary perspective, according to the Animate Monitoring Hypothesis, human and nonhuman animals were the most important categories of visual stimuli for ancestral humans to monitor, as they presented challenges and opportunities for survival and reproduction, such as threats, mates, food, and predators (New, Cosmides, & Tooby, 2007; Orians & Heerwagen, 1992). Indeed, experimental investigations report that attention is attracted to threats, such as predators (Öhman & Mineka, 2001; 2003; Soares, 2012) and hostile humans (Öhman et al., 2012), as well as to positive items, including mates (e.g., attractive members of the opposite sex; van Hoof, Crawford, & van Vugt, 2011), foods (Nummenmaa, Hietanen, Calvo, & Hyönä, 2011), and offspring (e.g., infant faces; Brosch, Sander, & Scherer, 2007).

We tested the Animate Monitoring Hypothesis by examining whether human, primate, and mammal faces elicit similarly efficient searches. To our knowledge, the search efficiency of human faces relative to other primate and mammal faces has not been directly compared. We hypothesized that both human and animal faces may be located efficiently as they both convey a wealth of information about individuals, including their visual attention (e.g., looking at the viewer), arousal level (e.g., awake), and emotional state (e.g., angry). In humans, there appears to be a domain-specific mechanism for visually processing animals (Mormann et al., 2011), perhaps due to the importance of detecting prey or threats, or as a consequence of animal domestication. Animal faces and bodies may attract attention, even in complex natural backgrounds (Drewes, Trommershäuser, & Gegenfurtner, 2011; Kirchner & Thorpe, 2006; Lipp, Derakshan, Waters, & Logies, 2004; New et al., 2007; Rousselet, Macé, & Fabre-Thorpe, 2003), though perhaps not as quickly as human faces (Crouzet, Kirchner, & Thorpe, 2010). Moreover, faces are detected even when visually degraded (Bhatia, Lakshminarayanan, Samal, & Welland, 1995).

The present study had two primary goals: to determine whether human faces are located more efficiently than other primate or mammal faces, and to begin to examine the factors that may contribute to this human face advantage, namely, whether search category level influences search efficiency, and whether search efficiency is automatic. To these ends, we employed a visual search paradigm to measure search efficiency (speed and accuracy) as participants located face and non-face images (targets) among object images (distractors).

Search efficiency for human and animal faces

Faces are located more quickly than other targets (e.g., objects, animal bodies), in adults (Brown, Huey, & Findlay, 1997) and infants (Di Giorgio, Turati, Altoe, & Simion, 2011; Gliga, Elsabbagh, Andravizou, & Johnson, 2009). Human faces are efficiently located when presented among scrambled faces (Kuehn & Jolicoeur, 1994) and objects (Hershler & Hochstein, 2005; Rousselet et al., 2003; Langton, Law, Burton, & Schweinberger, 2008), or in natural scenes (Lewis & Edmonds, 2005; Fletcher-Watson, Findlay, Leekam, & Benson, 2008). Low-level features (e.g., Fourier amplitudes; Honey, Kirchner, & VanRullen, 2008; VanRullen, 2006) cannot account for all of the apparent search efficiency of faces (Cerf, Harel, Einhäuser, & Koch, 2008; Hershler & Hochstein, 2006). For example, human face search efficiency declines when faces are inverted (Garrido, Duchaine, & Nakayama, 2008), suggesting low-level properties, such as brightness and contrast, alone cannot explain human face search efficiency. However, it remains unclear whether faces in general are efficiently located, or whether human faces are particularly privileged.

One approach to examine the properties of faces that contribute to efficient search is to compare visual search for human and animal faces. For example, human faces are detected more efficiently than common mammal faces (Hershler & Hochstein, 2005), including dog faces (Hershler, Golan, Bentin, & Hochstein, 2010). However, phylogenetic (i.e., evolutionary) distance was not considered in previous studies; it is possible that more closely related species, such as other primates, may be identified more quickly than more distantly related, non-primate species. Moreover, the variability in the target category level (e.g., dog) was not considered, and arguably, dogs are more variable in their facial appearance than humans, making them a more difficult target for which to search (Yang & Zelinsky, 2009). It remains untested whether the greater search efficiency for faces relative to non-faces may extend to other primate faces or whether it is exclusive to human faces.

Search category level and face search efficiency

Unlike previous work, we systematically examined face search category level. Searches for a specific target are more efficient than searches for a broader category (Yang & Zelinsky, 2009). Thus, previous work that has reported human face visual search advantages (e.g., Hershler & Hochstein, 2005; Hershler et al., 2010), but did not control for category level, may not actually reflect more efficient processing of human faces; rather, specific target categories (e.g., human faces) may be located more quickly than general target categories (e.g., mammal faces). Therefore, previous studies (e.g., Tipples, Young, Quinlan, Broks, & Ellis, 2002) may have failed to find that animal faces are located as efficiently as human faces because the search instructions were too general (e.g., “find the animal”). In this way, studies directly comparing search efficiency of human and animal faces may give an unfair advantage to human face targets, which are a more specific category than animal face targets (e.g., Hershler & Hochstein, 2005; Hershler et al., 2010). Moreover, recent work has found that search times are influenced by both the number of distractor items and the number of target items held in memory (Wolfe, 2012), so searching for a particular type of item is more efficient than searching for a larger collection of items (i.e., broader category).

To test our prediction that search category level can account for human face search efficiency, we manipulated the search instructions that we gave to participants, first providing them with instructions to “Find the [insert one: human, primate, or mammal] face” (Experiment 1), then broader instructions (i.e., “Find the face,” Experiment 2), then making targets equally specific (i.e., “Find the [one specific species: human, macaque monkeys, or sheep] face”; Experiment 3). This allowed us to determine whether the apparent superiority in human face search efficiency is simply a consequence of target category.

Top-down and bottom-up contributions to face search efficiency

There are at least two intertwined processes that modulate visual attention, one or both of which may be responsible for human face search efficiency advantages. One is top-down attention, which is goal-directed and intentional. In contrast, bottom-up attention is stimulus-driven and unintentional (e.g., Corbetta & Shulman, 2002; Katsuki & Constantinidis, in press; Theeuwes, 2010). Previous work reports that task-irrelevant human faces interfere with search efficiency for non-face targets (Devue, Belopolsky, & Theeuwes, 2012; Langton et al., 2008). We were additionally interested in exploring the extent to which this face interference may be specific to one’s own species.

We predicted that human faces, even when task-irrelevant, would attract attention and thereby slow search efficiency for detecting non-face targets. We tested this prediction by having participants search for cars and butterflies in object arrays each containing a face distractor (human, primate, or mammal) and measuring their target detection speed and likelihood of fixating on the task-irrelevant faces (Experiment 4). This allowed us to determine whether any search efficiency advantages of human faces found in Experiments 1–3 are exclusively due to top-down processes, or whether bottom-up processes may also be involved.

Experiment 1

In Experiment 1, we tested whether search efficiency differs for human, primate, and mammal faces. Participants searched for a face category—human, primate, or mammal—amid an array of object distractors; they received specific instructions for each block: “Find the human face,” “Find the primate face,” or “Find the mammal face.” We also gave participants clarification of what we meant by “primates,” informing them that this included apes and monkeys.

Method

Participants

In Experiment 1, 37 undergraduate students from a large university (11 males) participated for course credit. The average age was 18.57 (SD = 0.73); 33 participants were Caucasian, three were African American, and one was Asian. Participants reported normal or corrected-to-normal vision and achieved good calibration.

Materials

In all experiments, we recorded eye movements via corneal reflection using a Tobii T60 eye tracker, a remote 43 cm monitor positioned 60 cm from participants, with integrated eye tracking technology, and a sampling rate of 60 Hertz. We used Tobii Studio software (Tobii Technology, Sweden) to collect and summarize the data. Manual responses were collected with the arrow keys of a standard keyboard.

In all experiments, participants viewed arrays of photographs, using a method employed by Hershler and Hochstein (2005), each of which included 16, 36, or 64 elements (Figure 1). All elements were colored photographs, 2.4 – 2.9 cm (width) × 2.6 – 3 cm (height), and equally spaced in all array sizes. Images of neutral non-face objects (n = 1007), primate faces (n = 829; not including macaque faces: n = 24), non-primate mammal faces (n = 902; not including sheep: n = 24), and human faces (n = 703) were collected through internet searches and were cropped and positioned into arrays using Adobe Photoshop. To ensure the novelty of the distractor images, in Experiments 1–3 each image appeared as a distractor no more than 6 times, and in Experiment 4 each image appeared as a face distractor only once. In all experiments, target images were only used once. A total of 360 arrays were created for Experiment 1, 90 of which were also used in Experiment 2, and 90 of which were modified for use in Experiment 3 (target images were altered; see details below), and 260 new arrays were created for Experiment 4.

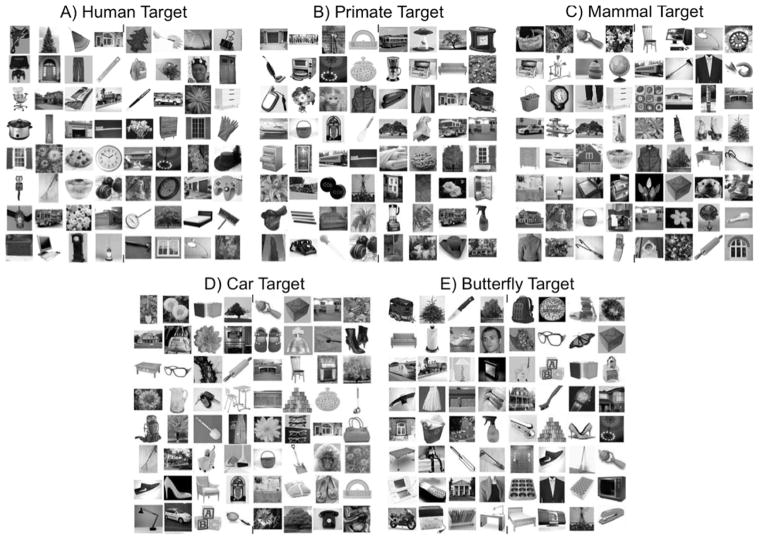

Figure 1.

Examples of arrays in which participants searched for faces among objects. Targets were (A) human faces, (B) nonhuman primate faces, (C) non-primate mammal faces, (D) cars, or (E) butterflies. Stimuli contained 16, 36, or 64 (shown) photographs. All arrays were in color. In example (D) there is a primate face distractor and in example (E) there is a human face distractor.

Distractors were photos of objects, such as common household items (e.g., furniture, clothing), natural items (e.g., trees, flowers), and other objects (e.g., vehicles, foods, toys). Photos were chosen such that they did not contain any faces, but were diverse in their colors, contrast, shapes, and backgrounds. Rather than equating the images on low-level characteristics (e.g., brightness, contrast), we instead chose stimuli (targets and distractors) that were as heterogeneous as possible (e.g., diverse backgrounds, lighting, angles), a method used previously by Hershler and Hochstein (2005, 2006) to eliminate low-level confounds.

In Experiments 1 and 2, target objects included photos of human faces, various primate faces (monkeys and apes), and various non-primate mammal faces (e.g., squirrels, elephants, horses). All face photos were confirmed to be neutral expressions in a pilot test rating with a separate group of participants (n = 45), who rated each stimulus using the following: “How emotional is this face?” on a scale of 1 (completely neutral) to 7 (very emotional). To ensure neutrality, photos rated an average of 2 or higher were not included as stimuli. In addition, to be included as stimuli, face photos had to be facing forward and have both eyes visible and open. Faces were chosen to be clear, diverse in lighting, age, gender, ethnicity, hair or fur characteristics, and backgrounds. Images were cropped to enlarge the faces using Adobe Photoshop. Faces with excessive makeup or costume were excluded. Target locations were balanced such that they appeared in all quadrants within the array an equal number of times across target types, ensuring target position was consistent across conditions.
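The neutrality screening described above can be sketched in a few lines of Python. This is only an illustration of the stated exclusion rule (mean rating of 2 or higher excluded); the function name, file names, and ratings below are hypothetical and are not taken from the study.

```python
from statistics import mean

# Exclusion rule from the text: photos with a mean rating of 2 or higher
# on the 1 (completely neutral) to 7 (very emotional) scale were dropped.
NEUTRALITY_CUTOFF = 2.0

def neutral_photos(ratings_by_photo):
    """Return the sorted names of photos whose mean rating is below the cutoff."""
    return sorted(
        photo
        for photo, ratings in ratings_by_photo.items()
        if mean(ratings) < NEUTRALITY_CUTOFF
    )

# Hypothetical pilot ratings (NOT data from the study).
pilot_ratings = {
    "face_a.jpg": [1, 1, 2, 1],  # mean 1.25, kept
    "face_b.jpg": [2, 3, 2, 2],  # mean 2.25, excluded
    "face_c.jpg": [1, 2, 2, 3],  # mean 2.00, excluded (at the cutoff)
}

kept = neutral_photos(pilot_ratings)
print(kept)
```

A photo whose mean sits exactly at the cutoff is excluded here, matching the "2 or higher" wording.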

In all experiments, 17–20% of the arrays contained no targets to determine the speed with which participants could conclude the targets were absent; this target-absent condition has been included in previous visual search studies, which report participants are faster to indicate that human face targets are absent, compared to animal face targets (Hershler & Hochstein, 2005).

Procedure

In all experiments, we used a 5-point eye-gaze calibration, followed by a practice or training task, then a visual search task in which participants searched for target photos among arrays of heterogeneous distractor object photos (Hershler & Hochstein, 2005). In all experiments, participants were told to search for a particular type of target, and to indicate with a key press if the target was on the left or right side of the screen, or not present. We required participants to report target locations so we could examine participants’ accuracy.

In Experiment 1, participants first completed a practice block in which they were asked to find the X among an array of O’s. Next, participants viewed 360 trials presented in blocks; each block contained 90 trials with only one type of target (object, human face, primate face, or mammal face). Each array contained one type of distractor (objects, human faces, primate faces, or mammal faces). Although the target type remained consistent within each block, the distractors changed from trial to trial. Participants were asked to find the “human face,” “primate face,” “mammal face,” or “object.” Only trials with images of objects as distractors (90 trials) were analyzed in the current study, as our primary goal in the present study was to examine differences in search efficiency across different target face types.

Data Analysis

Data were prepared in the same manner for all experiments. We used a clear view filter on the eye gaze RT data. Fixations were defined as a minimum of 100 ms at a 50-pixel radius. Areas of Interest (AOIs) for analysis were drawn around face targets within each array. The target AOI contained the entire face, but no distractors, and was always 3 cm × 3 cm. For each experiment we examined participants’ response speed for both gaze and manual responses; manual and gaze response times (RTs) produced the same statistically significant results (see Figure 2), so gaze RTs are reported here. Manual RTs are reported for target absent trials (because there was no gaze data for these trials). We examined participants’ accuracy for manual responses. In examinations of RTs, we only included data from trials with correct target detection. We calculated the best-fitting lines for each search category using linear regression on RT for target-present trials. We used Fisher’s Least Significant Difference (LSD) corrections and all comparisons were paired sample t tests, two-tailed.
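As an illustration of the slope calculation, the following Python sketch fits a least-squares line to RT as a function of array size. It uses the Experiment 1 group means from Table 1 as input, which is an assumption made for brevity: slopes fitted to group means need not match the slopes reported in the Results, which may have been computed differently (for example, per participant and then averaged). The function name `search_slope` is hypothetical.

```python
import numpy as np

# Array sizes used in all experiments (items per array).
set_sizes = np.array([16, 36, 64])

# Group mean gaze RTs (ms) for Experiment 1, copied from Table 1.
mean_rt_ms = {
    "human": np.array([315, 355, 652]),
    "primate": np.array([370, 740, 1053]),
    "mammal": np.array([616, 1265, 1578]),
}

def search_slope(sizes, rts):
    """Return (slope in ms/item, intercept in ms) of the least-squares line."""
    slope, intercept = np.polyfit(sizes, rts, deg=1)
    return float(slope), float(intercept)

# Slope of the best-fitting line for each target type.
slopes = {name: search_slope(set_sizes, rts)[0] for name, rts in mean_rt_ms.items()}
for name, slope in slopes.items():
    print(f"{name}: {slope:.1f} ms/item")
```

A shallower slope means that each additional item in the array adds less search time, which is the sense in which a search is called more efficient.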

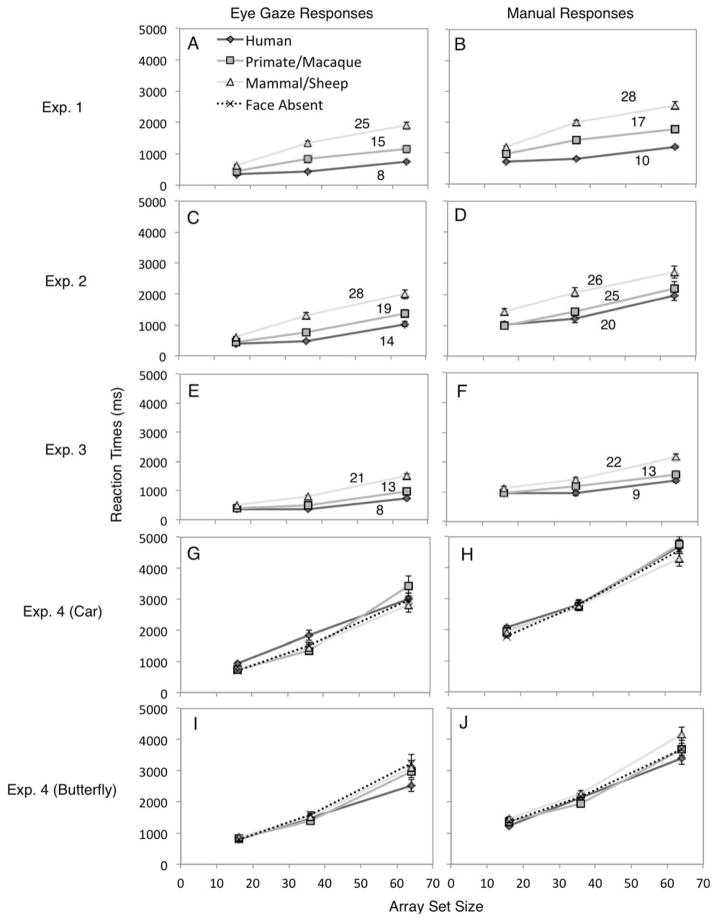

Figure 2.

Results showing the interaction between array size and target type in Experiment 1 (top row: A, B), Experiment 2 (second row: C, D), Experiment 3 (third row: E, F), and Experiment 4 (bottom two rows: G, H, I, J), for eye gaze RTs (left column) and manual RTs (right column). The darkest solid gray lines with the diamond points represent the human faces, the medium gray lines with the square points represent the primate/macaque monkey faces, the light gray lines with the triangle points represent the mammalian/sheep faces, and the dotted lines with x points represent face absent trials (i.e., no distractor; Experiment 4 only). Numbers reflect the search slopes (ms/item); these are not reported for Experiment 4 because there were no significant differences across face distractor types, ps > .10. Error bars represent within-subjects standard error of the mean. All statistical comparison results were the same for both gaze and manual response measures.

Results

Speed

In Experiment 1, no gaze was detected within the AOI in 23% of trials, either because the participant did not fixate on the AOI (may have detected the target in periphery) or because the eye tracker failed to accurately detect the gaze; however, there were no differences across target types (human vs. primate, t(23) = 1.11, p = .278; human vs. mammal, t(23) = .80, p = .434; primate vs. mammal, t(23) = .32, p = .751).

The average human face slope was 8 ms/item, the average primate face slope was 15 ms/item, and the average mammal face slope was 25 ms/item (Figure 2A). A two-way within-subjects ANOVA (Array size × Target type) on gaze RTs revealed a main effect of Array size (F (2,60) = 74.59, p < .001, ηp2 = .71), a main effect of Target type (F (2,60) = 104.33, p < .001, ηp2 = .78), and an interaction between Array size and Target type (F (4,120) = 12.33, p < .001). Within each array size, RTs were fastest to human faces, followed by primate faces, and mammal faces elicited the slowest RTs, ps < .01, and differences grew larger as array size increased (see Tables 1 and 2).

Table 1.

Eye Gaze Response Speed.

| Array Size | Species | Exp. 1 M | Exp. 1 SD | Exp. 2 M | Exp. 2 SD | Exp. 3 M | Exp. 3 SD | Exp. 4 M | Exp. 4 SD |
|---|---|---|---|---|---|---|---|---|---|
| 16 | Human | 315 | 83 | 402 | 174 | 360 | 132 | 858 | 386 |
| | Primate | 370 | 106 | 443 | 105 | 380 | 85 | 803 | 224 |
| | Mammal | 616 | 206 | 617 | 130 | 506 | 103.2 | 822 | 219 |
| 36 | Human | 355 | 105 | 484 | 146 | 368 | 98 | 1,654 | 541 |
| | Primate | 740 | 315 | 758 | 225 | 508 | 133 | 1,336 | 393 |
| | Mammal | 1,265 | 379 | 1,316 | 520 | 804 | 240 | 1,479 | 347 |
| 64 | Human | 652 | 204 | 961 | 505 | 724 | 242 | 2,800 | 866 |
| | Primate | 1,053 | 656 | 1,365 | 772 | 981 | 487 | 3,151 | 1,002 |
| | Mammal | 1,578 | 614 | 1,992 | 838 | 1,512 | 399 | 2,931 | 973 |

Note. Participants’ mean (M) and standard deviation (SD) response times (ms) to fixate their gaze on targets.

Table 2.

Paired-sample t tests for Response Speed.

| Exp. | Array Size | Face Type | Present t | Present df | Present p | Present d | Absent t | Absent df | Absent p | Absent d |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 16 | Human vs. Primate | 4.33 | 34 | < .001 | 0.73 | 0.43 | 36 | 0.669 | |
| | | Human vs. Mammal | 9.05 | 35 | < .001 | 0.17 | 4.09 | 36 | < .001 | 0.67 |
| | | Primate vs. Mammal | 6.81 | 35 | < .001 | 1.13 | 3.75 | 36 | 0.001 | 0.62 |
| | 36 | Human vs. Primate | 5.95 | 35 | < .001 | 1.01 | 1.55 | 36 | 1.55 | |
| | | Human vs. Mammal | 11.53 | 35 | < .001 | 1.95 | 5.81 | 35 | < .001 | 0.97 |
| | | Primate vs. Mammal | 4.06 | 35 | < .001 | 0.69 | 5.56 | 35 | < .001 | 0.93 |
| | 64 | Human vs. Primate | 5.66 | 36 | < .001 | 0.94 | 3.85 | 36 | < .001 | 0.63 |
| | | Human vs. Mammal | 10.88 | 36 | < .001 | 1.81 | 5.97 | 36 | < .001 | 0.98 |
| | | Primate vs. Mammal | 6.65 | 36 | < .001 | 1.11 | 1.66 | 36 | 0.105 | |
| 2 | 16 | Human vs. Primate | 1.6 | 32 | 0.119 | | 1.87 | 32 | 0.071 | |
| | | Human vs. Mammal | 6.75 | 32 | < .001 | 1.18 | 2.55 | 32 | 0.016 | 0.44 |
| | | Primate vs. Mammal | 6.71 | 32 | < .001 | 1.17 | 1.12 | 32 | 0.27 | |
| | 36 | Human vs. Primate | 8.15 | 32 | < .001 | 1.42 | 0.27 | 32 | 0.79 | |
| | | Human vs. Mammal | 9.74 | 32 | < .001 | 1.69 | 0.98 | 32 | 0.332 | |
| | | Primate vs. Mammal | 6.5 | 32 | < .001 | 1.13 | 1.48 | 32 | 0.148 | |
| | 64 | Human vs. Primate | 3.16 | 32 | 0.005 | 0.52 | 0.75 | 32 | 0.456 | |
| | | Human vs. Mammal | 7.81 | 32 | < .001 | 1.36 | 0.65 | 32 | 0.524 | |
| | | Primate vs. Mammal | 5.89 | 32 | < .001 | 1.03 | 1.58 | 32 | 0.125 | |
| 3 | 16 | Human vs. Macaque | 0.95 | 34 | 0.349 | | 4.04 | 34 | < .001 | 0.68 |
| | | Human vs. Sheep | 5.69 | 34 | < .001 | 0.96 | 1.85 | 34 | 0.073 | |
| | | Macaque vs. Sheep | 6.42 | 34 | < .001 | 1.09 | 3.01 | 34 | 0.005 | 0.51 |
| | 36 | Human vs. Macaque | 4.79 | 33 | < .001 | 0.82 | 2.97 | 34 | 0.005 | 0.5 |
| | | Human vs. Sheep | 9.57 | 33 | < .001 | 1.64 | 5.59 | 34 | < .001 | 0.95 |
| | | Macaque vs. Sheep | 7.61 | 34 | < .001 | 1.29 | 3.02 | 34 | 0.005 | 0.51 |
| | 64 | Human vs. Macaque | 2.95 | 34 | 0.006 | 0.5 | 3.71 | 34 | 0.001 | 0.63 |
| | | Human vs. Sheep | 10.34 | 34 | < .001 | 1.75 | 7.34 | 34 | < .001 | 1.24 |
| | | Macaque vs. Sheep | 6.06 | 34 | < .001 | 1.02 | 3.93 | 34 | < .001 | 0.66 |
| 4 (Car) | 16 | Human vs. Primate | 3.36 | 29 | 0.002 | 0.61 | 3.11 | 32 | 0.004 | 0.54 |
| | | Human vs. Mammal | 3.52 | 28 | 0.001 | 0.65 | 3.4 | 32 | 0.002 | 0.59 |
| | | Primate vs. Mammal | 0.31 | 29 | 0.758 | | 0.63 | 32 | 0.533 | |
| | 36 | Human vs. Primate | 2.64 | 26 | 0.014 | 0.51 | 0.65 | 32 | 0.520 | |
| | | Human vs. Mammal | 2.19 | 28 | 0.037 | 0.41 | 2.03 | 32 | 0.051 | |
| | | Primate vs. Mammal | 1.37 | 27 | 0.182 | | 0.65 | 32 | 0.520 | |
| | 64 | Human vs. Primate | 1.35 | 29 | 0.187 | | 0.98 | 32 | 0.335 | |
| | | Human vs. Mammal | 1.27 | 30 | 0.213 | | 0.56 | 32 | 0.582 | |
| | | Primate vs. Mammal | 2.01 | 29 | 0.054 | | 0.75 | 32 | 0.462 | |
| 4 (Butterfly) | 16 | Human vs. Primate | 2.94 | 31 | 0.006 | 0.52 | 1.29 | 32 | 0.207 | |
| | | Human vs. Mammal | 0.78 | 32 | 0.439 | | 4.23 | 32 | < .001 | 0.74 |
| | | Primate vs. Mammal | 0.04 | 31 | 0.966 | | 1.31 | 32 | 0.201 | |
| | 36 | Human vs. Primate | 0.62 | 31 | 0.541 | | 1.70 | 32 | 0.099 | |
| | | Human vs. Mammal | 0.14 | 30 | 0.886 | | 4.00 | 32 | < .001 | 0.7 |
| | | Primate vs. Mammal | 1.10 | 31 | 0.281 | | 1.90 | 32 | 0.067 | |
| | 64 | Human vs. Primate | 1.81 | 31 | 0.079 | | 2.44 | 32 | 0.021 | 0.42 |
| | | Human vs. Mammal | 2.38 | 31 | 0.024 | 0.42 | 3.56 | 32 | 0.001 | 0.62 |
| | | Primate vs. Mammal | 0.51 | 32 | 0.611 | | 1.62 | 32 | 0.116 | |

Note. Paired sample t tests for each of the four experiments. Target present trials represent gaze RTs to face (Exp. 1–3) or non-face (Exp. 4) targets. Target absent trials represent manual RTs to report targets as absent.

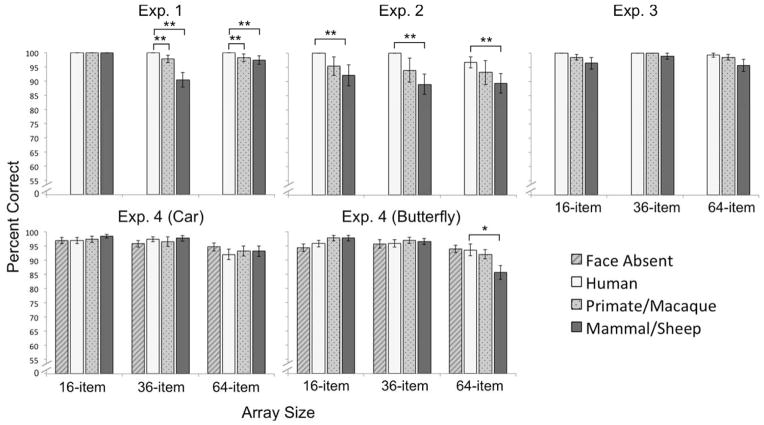

We next examined the target absent trials to see whether correct manual response times varied across Array size and Target type. One participant was excluded from this analysis for not following instructions in one condition. The average target-absent human face slope was 63 ms/item, the average primate face slope was 91 ms/item, and the average mammal face slope was 89 ms/item (Figure 3A). A 3 × 3 repeated measures ANOVA (Array size × Target type) examined manual RTs in target absent trials. There were main effects of Target type (F (2,70) = 30.01, p < .001, ηp2 = .462) and Array size (F (2,70) = 131.04, p < .001, ηp2 = .789), qualified by a Target type × Array size interaction (F (4,140) = 6.54, p < .001). As predicted, participants were the fastest to report human faces as absent (M = 3486 ms, SD = 1191) compared to primate faces (M = 4058 ms, SD = 1476), t(36) = 3.42, p = .002, d = .56, and mammal faces (M = 5041 ms, SD = 1653), t(36) = 7.60, p < .001, d = 1.25, and were faster to report primate faces as absent compared to mammal faces, t(36) = 4.67, p < .001, d = .77. Also, as found elsewhere (Hershler & Hochstein, 2005), response times increased with array size, with the smallest array eliciting the fastest target absent response (M = 2274 ms, SD = 651), compared to the medium-size array (M = 4104 ms, SD = 1474), t(36) = 9.39, p < .001, d = 1.54, and the large-size array (M = 6208 ms, SD = 2093), t(36) = 13.39, p < .001, d = 2.28. Target-absent responses were faster in the medium-size array compared to the large-size array, t(36) = 9.78, p < .001, d = 1.61. Human faces were identified as absent faster than primate faces only in the 64-item arrays, p < .001, but not the 16- or 36-item arrays, ps > .10 (Table 2). Also, human and primate faces were declared absent more quickly than mammal faces in 16- and 36-item arrays, ps ≤ .001. In the 64-item arrays, however, though human faces were identified as absent faster than mammal faces, p < .001, there was no difference between primates and mammals, p = .105.
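For readers unfamiliar with the pairwise comparisons reported here, the following Python sketch shows how a paired-samples t statistic and one common paired effect size (d_z, the mean difference divided by the standard deviation of the differences) can be computed. The text does not state which effect size formula was used, so d_z is an assumption, and the RT values below are hypothetical and are not data from the study. The sketch omits the p value, which would require a t distribution function from a statistics library.

```python
import math
from statistics import mean, stdev

def paired_t(a, b):
    """Paired-samples t test on two equal-length lists of per-participant scores.

    Returns (t, df, d_z), where d_z = mean(diff) / sd(diff).
    """
    if len(a) != len(b):
        raise ValueError("paired samples must have the same length")
    diffs = [y - x for x, y in zip(a, b)]
    n = len(diffs)
    sd = stdev(diffs)  # sample SD of the differences (n - 1 denominator)
    t = mean(diffs) / (sd / math.sqrt(n))
    d_z = mean(diffs) / sd
    return t, n - 1, d_z

# Hypothetical target-absent RTs (ms) for five participants; NOT data from the study.
human_absent = [3300, 3600, 3100, 3900, 3500]
mammal_absent = [4600, 5500, 4200, 5900, 4800]

t, df, d_z = paired_t(human_absent, mammal_absent)
print(f"t({df}) = {t:.2f}, d_z = {d_z:.2f}")
```

With this definition, t and d_z are linked by t = d_z × √n, where n is the number of paired observations.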

Figure 3.

Results showing manual response times in trials in which there was no target, for Experiment 1 (A), Experiment 2 (B), Experiment 3 (C), and Experiment 4 Car Targets (D) and Butterfly Targets (E). In Experiments 1, 3, and 4, the darkest gray lines with the diamond points represent the human faces, the medium gray lines with the square points represent the primate/macaque monkey faces, and the light gray lines with the triangle points represent the mammalian/sheep faces. In Experiment 2, the search instructions were to “find the face,” so there was no particular target-type. Numbers reflect the search slopes (ms/item); these are not reported for Experiment 4 because there were no significant differences across face distractor types, ps > .10. Error bars represent within-subjects standard error of the mean.

Accuracy

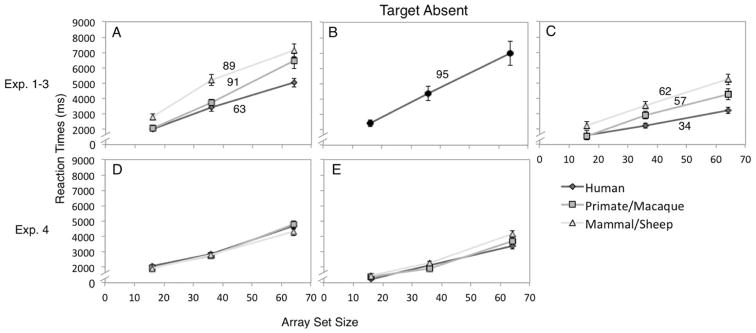

A 3 × 3 repeated measures ANOVA examined the percentage of correct manual responses across species and array size, which revealed main effects of Target type (F (2,72) = 10.61, p < .001, ηp2 = .228) and Array size (F (2,72) = 14.93, p < .001, ηp2 = .293), qualified by a Target type × Array size interaction (F (4,144) = 8.50, p = .001; Figure 4). Human face accuracy was higher (M = 99.7%, SD = .2%) than either primate faces (M = 94.4%, SD = 1.8%), t(36) = 2.80, p = .008, d = .46, or mammal faces (M = 89.5%, SD = 2.1%), t(36) = 5.02, p < .001, d = .83. There was no difference in accuracy for primate and mammal faces, t(36) = 1.84, p = .073. Accuracy was highest in the small arrays (M = 100%, SD = 0%), compared to the medium size arrays (M = 92.0%, SD = 1.1%), t(36) = 4.65, p < .001, d = .76, and large arrays (M = 94.9%, SD = 1.1%), t(36) = 2.12, p = .041, d = .35, and accuracy was higher for the large arrays compared to the medium arrays, t(36) = 3.89, p < .001, d = .64. There were no species differences in the 16-item arrays (human vs. primate: t(36) = 1.82, p = .078; human vs. mammal: t(36) = 1.97, p = .057; primate vs. mammal: t(36) = .23, p = .817), but in the 36- and 64-item arrays, accuracy was higher for humans than for primates or mammals (36-item arrays: humans > primates, t(36) = 3.13, p = .002, d = .54, and humans > mammals, t(36) = 6.57, p < .001, d = 1.08; 64-item arrays: humans > primates, t(36) = 2.31, p = .027, d = .38, and humans > mammals, t(36) = 4.14, p < .001, d = .68). Accuracy was higher for primates than for mammals only in the 36-item arrays, t(36) = 3.01, p = .005, d = .50 (64-item arrays: primates vs. mammals, t(36) = 1.31, p = .20).

Figure 4.

Manual response accuracy to locate targets in target-present condition. Error bars represent within-subjects standard error of the mean. *ps < .05, **ps < .01.

Discussion

Participants were fastest and most accurate at localizing human faces, slower and less accurate at localizing primate faces, and slowest and least accurate at localizing mammal faces. Human faces were also more quickly declared as absent in the arrays in which they were not present, compared to primate and mammal faces. Interestingly, the primate faces were processed less efficiently than human faces, but not as inefficiently as the mammals. Together, these results suggest that search efficiency—measured as both speed and accuracy—appears to vary depending on the type of face for which one is searching.

One explanation for these differences in search efficiency is that the search category levels varied: When searching for primates, participants were searching for a broader category than humans, but a more specific category than mammals. It is unclear how to interpret these results, given that searches for a specific target are more efficient than searches for a broader category (Yang & Zelinsky, 2009). This leaves open the possibility that human faces may not be privileged in the efficiency with which they are located, but rather specific target categories may be located more quickly than general target categories. Indeed, others have found that the similarity among distractors and the similarity between targets and distractors influence search efficiency (Duncan & Humphreys, 1989); likewise, the similarity among targets may also influence search efficiency. We explore this hypothesis in Experiments 2 and 3.

Experiment 2

In Experiment 2, we were interested in determining whether the specificity of the search category level affected search times. Since “human” is a specific species, but primate is an order (broader taxonomic category), and mammal is a class (even broader taxonomic category), we predicted that the search instructions in Experiment 1 might account for the different search slopes. Previous studies have found that when searching for a target, humans use a specific template (Bravo & Farid, 2009), and when told to search for a specific target, they are more efficient than when searching for a broader category (Yang & Zelinsky, 2009), perhaps due to the number of items that must be held in memory (Wolfe, 2012). In other words, search efficiency for human faces may not be privileged, but rather specific target categories may be located more quickly than general target categories. To test this possibility, we carried out a second study in which participants viewed one block with mixed targets (human, primate, and mammal) and were given a more general search instruction, “Find the face.” We clarified that these faces could be human or animal faces.

Method

Participants

Thirty-three students contributed usable data (12 males); none had participated in Experiment 1. The average age was 19.27 (SD = 3.10), and there were 27 Caucasian participants, two African American participants, two Hispanic participants, and two Asian participants.

Materials

The materials consisted of 72 target-present arrays—24 containing human face targets, 24 containing a variety of primate face targets, and 24 containing a variety of non-primate mammal face targets—and 18 target-absent arrays, for a total of 90 arrays. As in Experiment 1, there were an equal number of arrays of each size (16, 36, or 64 elements), distractors were non-face objects, and the location of the target was counter-balanced across all conditions.
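The composition of this trial set can be sketched in Python as follows. The text specifies 24 arrays per target type, 18 target-absent arrays, and an equal number of arrays of each size; the even split within each cell (8 arrays per target type per size, 6 target-absent arrays per size) is an assumption that satisfies those constraints, and `build_trials` is a hypothetical helper, not the authors' procedure.

```python
import random
from collections import Counter

ARRAY_SIZES = (16, 36, 64)
TARGET_TYPES = ("human", "primate", "mammal")

def build_trials(seed=0):
    """Return a shuffled list of 90 (target_type, array_size) trials.

    Assumes an even split across array sizes: 8 arrays per target type per
    size (24 per type) and 6 target-absent arrays per size (18 in total).
    """
    trials = [(t, s) for t in TARGET_TYPES for s in ARRAY_SIZES for _ in range(8)]
    trials += [("absent", s) for s in ARRAY_SIZES for _ in range(6)]
    random.Random(seed).shuffle(trials)  # targets are randomly mixed in one block
    return trials

trials = build_trials()
by_type = Counter(t for t, _ in trials)
by_size = Counter(s for _, s in trials)
print(len(trials), dict(by_type), dict(by_size))
```

Under these assumptions, target-absent arrays make up 18 of 90 trials (20%), which falls within the 17–20% range stated for all experiments.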

Procedure

The procedure was the same as in Experiment 1, except participants viewed one block of 90 trials, in which targets were randomly mixed (humans, primates, and mammals). Participants received a more general search instruction: to “find the face” within each array of photos, as quickly and accurately as possible, and to indicate the location of the face (left, right, or absent), as in Experiment 1.

Results

Speed

In Experiment 2, no gaze was detected within the AOI in 17% of trials, and paired sample t tests revealed that there were fewer missing trials for human targets (12%) compared to either primates (20%) or mammals (20%), t(23) = 2.76, p = .011, d = .56, t(23) = 2.53, p = .019, d = .52, respectively, but no difference between primates and mammals, t(23) = .11, p = .910.

The average human face slope was 14ms/item, the average primate face slope was 19ms/item, and the average mammal face slope was 28ms/item (Figure 2C). A two-way within-subjects ANOVA (Array size × Target type) on gaze RTs revealed main effects of Array size (F (2,64) = 78.54, p < .001, ηp2 = .71) and Target type (F (2,64) = 107.30, p < .001, ηp2 = .77), qualified by an Array size × Target type interaction (F (4,128) = 15.15, p < .001), Table 1, Figure 2C. Within the 16-item arrays, human and primate faces elicited equally fast responses, p = .119, but both were located more quickly than mammal faces, ps < .001 (See Tables 1 and 2). Within the 36- and 64-item arrays, RTs were fastest to human faces, followed by primate faces, and mammal faces elicited the slowest RTs, ps ≤ .005.
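As an illustration of what a search slope in ms/item expresses, the following minimal sketch fits a least-squares line to mean RT as a function of array size. The exact procedure used to derive the reported slopes is not described here, so this is an assumption; the function name `search_slope` and the RT values are hypothetical and are not data from the study.

```python
def search_slope(array_sizes, mean_rts_ms):
    """Least-squares slope (ms per item) of mean RT regressed on array size."""
    n = len(array_sizes)
    mean_x = sum(array_sizes) / n
    mean_y = sum(mean_rts_ms) / n
    # Covariance of size and RT, divided by the variance of size
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(array_sizes, mean_rts_ms))
    sxx = sum((x - mean_x) ** 2 for x in array_sizes)
    return sxy / sxx


# Array sizes used in the experiments
sizes = [16, 36, 64]

# Hypothetical mean gaze RTs (ms), constructed to lie exactly on a line
# with a slope of 14 ms/item. These are NOT values from the study.
hypothetical_rts = [1000, 1280, 1672]

slope = search_slope(sizes, hypothetical_rts)
print(f"{slope:.1f} ms/item")
```

A shallower slope means that each additional item in the array adds less search time, which is the sense in which one target type is located more efficiently than another.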

We next examined the target absent trials with a one-way ANOVA to see whether correct response times varied across Array size, Figure 3B. Target type was not examined because search instructions did not vary (participants were told to “find the face”). This analysis revealed a main effect of Array size (F (2,64)=56.65, p < .001, ηp2 = .639). As in Experiment 1, RTs increased with array size: faster responses to the 16-item arrays (M = 2434 ms, SD = 1015) compared to both the 36-item arrays (M = 4384 ms, SD = 2367), t(32) = 6.91, p < .001, d = 1.20, and the 64-item arrays (M = 6991 ms, SD = 4113), t(32) = 7.69, p < .001, d = 1.34 (Table 2). Responses were also faster to the 36-item than the 64-item array, t(32) = 7.45, p < .001, d = 1.30. There were no other significant differences, ps > .05.
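The effect sizes reported for these paired comparisons are consistent with the common conversion d = |t| / √n for within-subjects t tests, where n = df + 1. The formula used is not stated in the text, so the sketch below is an assumption, and the helper name `cohens_d_from_paired_t` is hypothetical.

```python
import math


def cohens_d_from_paired_t(t_value, df):
    """Cohen's d for a paired-samples t test, assuming d = |t| / sqrt(n)."""
    n = df + 1  # number of participants in a paired design
    return abs(t_value) / math.sqrt(n)


# t values and degrees of freedom as reported for the target-absent trials
d_16_vs_36 = cohens_d_from_paired_t(6.91, 32)
d_16_vs_64 = cohens_d_from_paired_t(7.69, 32)
d_36_vs_64 = cohens_d_from_paired_t(7.45, 32)

print(f"{d_16_vs_36:.2f} {d_16_vs_64:.2f} {d_36_vs_64:.2f}")
```

Under this assumption, the three conversions agree with the reported values of 1.20, 1.34, and 1.30 to two decimal places.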

Accuracy

We used a 3 × 3 repeated measures ANOVA to examine the percentage of correct responses in the target-present condition across Target type and Array size, which revealed main effects of Target type (F (2,64) = 3.40, p = .039, ηp2 = .096) and Array size (F (2,64) = 3.51, p = .036), Figure 4. Human (M = 98.9%, SD = 4.0%) and primate (M = 94.20%, SD = 22.40%) faces were detected with equal accuracy, t(32) = 1.19, p = .245, and human faces were more accurate than mammal faces (M = 90.20%, SD = 18.4%), t(32) = 2.73, p = .010, d = .47. Primate and mammal faces did not differ in their accuracy, t(32) = 1.46, p = .154. Responses were more accurate for the 16-item arrays (M = 95.9%, SD = 13.2%) than the 64-item arrays (M = 93.1%, SD = 13.2%), t(32) = 2.81, p = .008, d = .49, but the 36-item arrays did not differ from either the 16-item arrays, t(32) = 1.60, p = .119, or the 64-item arrays, t(32) = 1.03, p = .309.

Discussion

As in Experiment 1, responses were fastest to the human faces, followed by the primate faces, and slowest for the mammal faces, suggesting that search instructions alone cannot account for human face search efficiency. We found no differences in the accuracy with which human and primate faces were located, but both were located more accurately than mammal faces. We failed to find any effects of species on response speed in target absent trials, likely due to participants being unaware of the type of targets for which to search, and therefore, participants took longer to confirm that no faces were present (i.e., the task was too difficult; floor effect). In summary, these results indicate that making search instructions broader (“Find the face”) does not eliminate the human face search advantage; however, it is possible that participants may have learned to expect certain familiar face types (e.g., human faces) over the course of the trials, therefore making the present findings difficult to interpret. To more carefully test the effect of category level, we carried out a third experiment in which we trained participants to search for three specific target species, each in a separate block. This allowed us to determine whether searching for a specific target category (a species) could account for differences in search times across human and animal faces.

Experiment 3

In Experiment 2, the targets for which participants searched varied in their degree of homogeneity; that is, when searching for primate faces there was more heterogeneity (variety of facial appearance) than in humans, and even more heterogeneity in mammal faces. This is because the primate category includes more species than the human category, and the mammal category includes even more species than the primate category. Given that participants are capable of rapid learning during visual search tasks (e.g., Ahissar & Hochstein, 1996), they may have learned what to look for more easily for the human and primate targets, compared to the mammal targets. To control for this, in Experiment 3 we tested the category level effect of target types by presenting three blocks in which targets were specific species: human faces, rhesus macaque monkey (Macaca mulatta) faces (hereafter referred to as macaque), or sheep (Ovis aries) faces.

Methods

Participants

Thirty-five participants contributed usable data (14 men); none had previously participated in either Experiment 1 or 2. One participant’s gaze location could not be detected, so only manual response data is reported for that individual. The average age was 19.71 (SD = 1.15), and included 32 Caucasians, two African Americans, and one Asian American.

Materials

Materials were the same as those used in Experiments 1 and 2, except the targets were from only three species—humans, rhesus macaque monkeys (Macaca mulatta), and sheep (Ovis aries)—and the distractors were always objects. In total 90 arrays were shown, 30 of each target type, with an equal number of each array size (16, 36, 64 items), and 20% of arrays were target-absent.

Procedure

Participants completed three search blocks, in a counterbalanced order. Targets were humans, monkeys, or sheep; each block contained only one target type (not intermixed) and one distractor type (objects). Instead of completing the search task practice, as in Experiments 1 and 2 (searching for Xs among Os), participants instead completed a passive-viewing task to familiarize them with the target types: At the start of each of the three blocks (one for each target type), participants viewed three sets of sample target stimuli for one of the species; each set included four example photos, so participants were familiarized with what the targets looked like, presented for 10 seconds each. Participants were instructed to view the photos on the screen and learn what the targets looked like. After each species-training, there was a block of 30 trials per target type, which participants completed in a predetermined counter-balanced order, for a total of 90 trials. Within each block, the target was not present in 6 arrays, 12 arrays contained a target face on the left, and 12 arrays contained a target face on the right. As in Experiments 1 and 2, participants indicated the locations of the targets as quickly and accurately as possible.
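The block structure described above can be sketched as follows. The source states only the per-block counts (6 target-absent, 12 left, 12 right) and that array sizes were equally represented overall; crossing array size evenly with target location inside each block, and cycling through all six block orders by participant index, are assumptions made for illustration. All names (`build_block`, `block_order`) are hypothetical.

```python
import itertools
import random

SPECIES = ["human", "macaque", "sheep"]
ARRAY_SIZES = [16, 36, 64]


def build_block(species, rng):
    """Return 30 shuffled trials: 12 left, 12 right, 6 target-absent."""
    trials = []
    for size in ARRAY_SIZES:
        # Assumed split per array size: 4 left, 4 right, 2 absent
        for location, count in (("left", 4), ("right", 4), ("absent", 2)):
            for _ in range(count):
                trials.append({"species": species,
                               "array_size": size,
                               "target": location})
    rng.shuffle(trials)
    return trials


def block_order(participant_index):
    """Assumed counterbalancing: cycle through all 6 species orders."""
    orders = list(itertools.permutations(SPECIES))
    return orders[participant_index % len(orders)]


rng = random.Random(0)
session = [build_block(species, rng) for species in block_order(0)]
print(sum(len(block) for block in session))  # total trials in the session
```

Each simulated session contains three blocks of 30 trials, matching the 90 trials per participant described in the procedure.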

Results

Speed

In Experiment 3, no gaze was detected within the AOI in 17% of trials; however, there were no differences in the number of missing trials across target types (human vs. macaque, t(23) = .48, p = .637; human vs. sheep, t(23) = .73, p = .475; macaque vs. sheep, t(23) = .20, p = .846).

The average human face slope was 8ms/item, the average macaque monkey face slope was 13ms/item, and the average sheep face slope was 21ms/item (Figure 2E). A two-way within-subjects ANOVA (Array size × Target type) on gaze RTs revealed main effects of Array size (F (2,66) = 170.54, p < .001, ηp2 = .84) and Target type (F (2,66) = 87.63, p < .001, ηp2 = .73) qualified by an Array size × Target type interaction (F (4,132) = 17.36, p < .001, ηp2 = .34), Table 1, Figure 2E. Within the 16-item arrays, human and macaque monkey faces elicited equally fast responses, p = .349, and human and macaque monkey faces were located more quickly than sheep faces, ps < .001 (see Tables 1 and 2). Within the 36- and 64-item arrays, RTs were fastest to human faces, followed by macaque monkey faces, and sheep faces elicited the slowest RTs, ps ≤ .006.

We next examined the target absent trials to see whether correct RTs varied across Array size and Target type, Figure 3C. This analysis revealed the same results as Experiment 1: main effects of Target type (F (2,68)=21.22, p < .001, ηp2 = .384) and Array size (F (2,68)=105.57, p < .001, ηp2 = .756), qualified by a Target type × Array size interaction (F (4,136)=6.33, p < .001). Participants were the fastest to report human faces as absent (M = 2350 ms, SD = 1073), compared to macaque faces (M = 2913 ms, SD = 1383), t(34) = 1.65, p = .012, d = .45, and sheep faces (M = 3675 ms, SD = 1432), t(34) = 6.02, p < .001, d = 1.02, and were faster to report macaque faces as absent compared to sheep faces, t(34) = 4.31, p < .001, d = .73. Response times also increased with array size, with the 16-item arrays eliciting faster responses (M = 1790 ms, SD = 880), than the 36-item arrays (M = 2903 ms, SD = 1121), t(34) = 7.91, p < .001, d = 1.34, or the 64-item arrays (M = 4244 ms, SD = 1620), t(34) = 11.37, p < .001, d = 1.92, and the 36-item arrays eliciting faster responses than the 64-item arrays, t(34) = 9.63, p < .001, d = 1.62. In the 16-item arrays, human faces were reported absent more quickly than macaque faces, p < .001, but did not differ from sheep faces, p = .073, and sheep faces were reported absent more quickly than macaque faces, p = .005 (Table 2). In both the 36- and 64-item arrays, however, humans were declared absent more quickly than macaque monkeys (ps ≤ .005), and both humans and macaque monkeys were declared absent more quickly than sheep (ps ≤ .005).

Accuracy

We used a 3 × 3 repeated measures ANOVA to examine the percentage of correct responses in target-present trials across species and array size, which revealed no main effects or interactions, ps > .05. The failure to find differences in response accuracy is likely due to a ceiling effect: overall, responses were extremely accurate (Figure 4).

Discussion

As in Experiment 1, participants were fastest to locate human faces, slower to locate primate (here, monkey) faces, and even slower to locate mammal (here, sheep) faces. Across all species and array sizes, participants were very accurate at localizing targets, so no differences were found across species. Thus, specific search categories may make the task easier, in which case RTs are a more sensitive measure of search efficiency than accuracy.

The target absent condition revealed differences across species in manual RTs: human faces were reported absent more quickly than monkey faces, and monkey faces were reported absent more quickly than sheep faces. Thus, it appears that the ability to more quickly locate human faces—and even primate faces, relative to nonprimate mammal faces—is not due to search category specificity, nor is it due to participants becoming more proficient at finding particular target types over the course of the trials. Instead, it appears that humans locate human faces most efficiently, compared to primate and mammal faces.

Experiment 4

As Experiments 1–3 demonstrate, human faces are located more efficiently than animal faces. The mechanisms that underlie this differential search efficiency, however, remain unknown. There are at least two intertwined processes that modulate visual attention, one or both of which may be responsible for the human face search efficiency advantage: Top-down attention is goal-directed, intentional, and based on prior knowledge, while bottom-up attention is stimulus-driven and reflects attention capture by low-level visual features (for reviews, see Corbetta & Shulman, 2002; Katsuki & Constantinidis, in press; Theeuwes, 2010). It is possible, therefore, that in Experiments 1–3 participants were prepared to locate faces (i.e., developed a search strategy which led to the human face search advantage), whereas stimulus-driven attention capture should occur even without this preparation (Langton et al., 2008). Indeed, previous studies have found that task-irrelevant human faces interfere with search efficiency for non-face targets (Devue, Belopolsky, & Theeuwes, 2012; Langton et al., 2008; Riby, Brown, Jones, & Hanley, 2012); however, the extent to which this face interference may be specific to one’s own species remains unexplored. To test this, we examined: (a) whether task-irrelevant human and animal faces decreased non-face target detection speed, and (b) the likelihood of fixating on the task-irrelevant faces. We selected cars and butterflies as targets as these have been used in previous visual search studies (e.g., cars: Golan, Bentin, deGutis, Robertson, & Harel, in press; Hershler & Hochstein, 2005, 2006, 2010; butterflies: Devue et al., 2012; Langton et al., 2008; Riby et al., 2012), they are specific categories, they are familiar stimuli, and they allow us to examine both inanimate, evolutionarily novel (car) and animal, evolutionarily relevant (butterflies) search efficiencies.
We had two primary predictions: (1) Human faces, but not animal faces, would capture attention in a bottom-up manner, resulting in a performance decrement: slower response times to fixate gaze on non-face targets when human face distractors were present, relative to animal faces or no distractors. (2) Human faces, relative to animal faces, would be more likely to attract attention, reflected in a higher percentage of trials in which there was a fixation to the task-irrelevant face.

Methods

Participants

Thirty-three students contributed usable data (9 males); none had previously participated in Experiments 1–3. The average age was 19 years (SD = .79), and included 26 Caucasians, 3 African Americans, 1 Hispanic, 1 Asian American, and 2 of other ethnicity. Eight additional participants were tested but were excluded due to high error rates (accuracy < 90%).

Materials

Materials were the same as those used in Experiments 1–3, except the targets were cars (Figure 1D) and butterflies (Figure 1E) and the task-irrelevant distractors were human, primate, and mammal faces. A total of 216 arrays were shown, 108 in each Target type condition (car, butterfly). Within each Target type condition, 72 arrays contained both a target (car or butterfly) and a face distractor (human, primate, mammal), with an equal number of arrays at each array size (16, 36, 64 items). In addition, 27 trials contained no target, but did contain a distractor face, with an equal number containing each face distractor (human, primate, mammal) and array size (16, 36, 64). Nine arrays contained no target and no face distractor. Car and butterfly targets appeared in identical locations across arrays and were never in the same quadrant as the face distractor.

Procedure

Participants completed two search blocks, car-search (“Find the car”) and butterfly-search (“Find the butterfly”), in a counterbalanced order. Within a block, the target was always from the same category (either a car or a butterfly), and targets were unique images in each array (i.e., each image was only shown once). Among target-present trials, 92% contained a task-irrelevant face. Participants were not informed of the distractors, but were simply told to search for the target among images of other things, as quickly and accurately as possible, as in Experiments 1–3. In total, the experiment lasted approximately 15 min.

Results

We were primarily interested in the speed of locating targets (i.e., gaze RT to fixate on targets in target-present condition and manual RTs to report targets as absent in the target-absent condition), and the percentage of trials in which participants’ gaze fixated on the task-irrelevant faces. Only correct responses were included in these analyses (for accuracy see Figure 4).

Speed

In Experiment 4, no gaze was detected within the AOI in 31% of trials, and paired sample t tests revealed that there were fewer missing trials for butterfly targets (28%) compared to car targets (35%), t(23) = 5.57, p < .001, d = 1.14, but there were no differences across face distractor types (human vs. primate, t(23) = 1.01, p = .322; human vs. mammal, t(23) = 1.49, p = .151; human vs. face absent, t(23) = .081, p = .936; primate vs. mammal, t(23) = .66, p = .516; primate vs. face absent, t(23) = .59, p = .561; mammal vs. face absent, t(23) = 1.07, p = .294).

The average slopes for locating the car targets were: 43ms/item with a human face distractor, 56ms/item with a primate face distractor, 41ms/item with a mammal face distractor, and 48ms/item with no face distractor (Figure 2G). The average slopes for locating the butterfly targets were 38ms/item with a human face distractor, 44ms/item with a primate face distractor, 46ms/item with a mammal face distractor, and 51ms/item with no face distractor (Figure 2I). A within-subjects ANOVA examined Array size (16-item, 36-item, 64-item) × Target type (car, butterfly) × Distractor type (human face, primate face, mammal face, no distractor) on gaze RTs to the non-face targets, Table 1. This revealed a main effect of Array size (F (2,46) = 196.86, p < .001, ηp2 = .90), and a Target type × Distractor type interaction (F (3,69) = 2.37, p = .002). To follow up the interaction, we carried out two one-way ANOVAs, one for each target type, to examine whether there were differences in gaze RT across distractor types. For car targets, there was only a non-significant trend of a main effect of Distractor type, F(3,90) = 2.66, p = .053; however, follow up t-tests were carried out, given our a priori predictions, and these revealed slower responses when human face distractors were present (M = 1.96 sec, SD = .60) compared to mammal face distractors (M = 1.78 sec, SD = .85), t(30) = 3.30, p = .002, d = .59, and compared to when there was no face (M = 1.73 sec, SD = .62), t(30) = 2.07, p = .047, d = .37, Figure 5. There was, however, no difference between human and primate faces (M = 1.81 sec, SD = .66), t(30) = 1.35, p = .19. For butterfly targets, a one-way ANOVA on Distractor type revealed no effect of Distractor, F(3,93) = 2.16, p = .098. There were no other significant effects, ps > .05.

Figure 5.

Response times to locate targets (gaze RT in target present trials; top graphs) and to identify targets as absent (manual RT; bottom graphs) when faces are task-irrelevant and search goal is for a non-face (car or butterfly) in Experiment 4. Error bars represent within-subjects standard error of the mean. *ps < .05, **ps < .01.

We next examined manual RTs in target-absent trials with a 2 (Target type) × 4 (Distractor type) × 3 (Array size) repeated measures ANOVA, Table 2. This revealed main effects of Target type (F(1,32) = 9.44, p = .004, ηp2 = .23), in which responses were faster to butterfly targets (M = 5.89 sec, SD = 2.06) compared to car targets (M = 6.69 sec, SD = 1.92). There was also a main effect of Distractor type (F(3,96) = 2.81, p = .044, ηp2 = .08), in which responses were slower when there was no face (M = 6.52 sec, SD = 2.04), compared to when there was a human face (M = 6.21 sec, SD = 1.96), primate face (M = 6.20 sec, SD = 1.84), or mammal face (M = 6.21 sec, SD = 1.76); however, the face distractor types did not differ from one another, ps > .10. There was also a main effect of Array size (F(2,64) = 232.81, p < .001, ηp2 = .88), in which, not surprisingly, response times were longer in the 64-item arrays (M = 9.70 sec, SD = 3.12) compared to the 36-item arrays (M = 6.11 sec, SD = 1.76), and fastest in the 16-item arrays (M = 3.05 sec, SD = 0.74), ps < .001. Finally, there was a Target type × Distractor type interaction (F(3,96) = 10.06, p < .001), which we followed up by carrying out two one-way ANOVAs, one for each target type, to explore whether there were effects of distractor type, Figure 5. For the car target, there was a main effect of Distractor type, F(3,96) = 3.41, p = .021, ηp2 = .10, in which responses were slower when there was a human face present (M = 7.03 sec, SD = 2.18), compared to when there was a primate face (M = 6.59 sec, SD = 1.81), t(32) = 2.65, p = .012, d = .46, a mammal face (M = 6.69 sec, SD = 1.98), t(32) = 2.07, p = .047, d = .36, or no face (M = 6.46 sec, SD = 2.14), t(32) = 2.37, p = .024, d = .41. 
For the butterfly target, there was a main effect of Distractor type, F(3,96) = 9.45, p < .001, ηp2 = .23, in which responses were fastest when there was a human face present (M = 5.38 sec, SD = 1.84), compared to a primate face (M = 5.84 sec, SD = 2.04), t(32) = 2.71, p = .011, d = .47, mammal face (M = 6.36 sec, SD = 2.43), t(32) = 5.45, p < .001, d = .95, and no faces (M = 5.97 sec, SD = 2.29), t(32) = 3.06, p = .004, d = .53. There were no other significant differences, ps > .05.

Percentage of trials with fixations on faces

Overall, the percentage of trials in which participants looked at the task-irrelevant faces was low, Table 3. We carried out a 2 (Target type) × 3 (Distractor type) × 3 (Array size) repeated measures ANOVA on the proportion of trials in which there was a fixation to the task-irrelevant face (number of trials in which there was a fixation to the face divided by the total number of trials). This revealed a main effect of Target type (F(1,32) = 9.33, p = .005, ηp2 = .23), in which there was a higher percentage of trials in which there were fixations to faces in butterfly target trials (M = 23%, SD = 9%) than car target trials (M = 17%, SD = 9%), t(32) = 3.16, p = .003, d = .55.

Table 3.

Percentage of trials with fixations on faces.

| Array Size | Species | Car Target Present, M | Car Target Present, SD | Butterfly Target Present, M | Butterfly Target Present, SD | Car Target Absent, M | Car Target Absent, SD | Butterfly Target Absent, M | Butterfly Target Absent, SD |
|---|---|---|---|---|---|---|---|---|---|
| 16 | Human | 17% | 13% | 21% | 15% | 37% | 26% | 36% | 35% |
| 16 | Primate | 15% | 14% | 17% | 12% | 28% | 22% | 51% | 32% |
| 16 | Mammal | 13% | 14% | 25% | 18% | 32% | 28% | 56% | 32% |
| 36 | Human | 18% | 16% | 16% | 15% | 28% | 32% | 28% | 29% |
| 36 | Primate | 19% | 15% | 26% | 17% | 46% | 31% | 52% | 33% |
| 36 | Mammal | 14% | 16% | 25% | 19% | 43% | 31% | 60% | 30% |
| 64 | Human | 19% | 17% | 22% | 17% | 29% | 30% | 25% | 28% |
| 64 | Primate | 19% | 16% | 22% | 18% | 30% | 33% | 34% | 31% |
| 64 | Mammal | 17% | 16% | 23% | 13% | 41% | 33% | 47% | 28% |

Note. Participants’ mean (M) and standard deviation (SD) percentage of trials in which they fixated on the task-irrelevant face in Experiment 4.

We next looked at target-absent trials with the same 2 × 3 × 3 ANOVA, and found a main effect of Distractor type (F(2,64) = 14.05, p < .001, ηp2 = .31), in which there was a smaller percentage of trials in which participants looked at human faces (M = 31%, SD = 17%), compared to either primate (M = 40%, SD = 18%), t(32) = 3.07, p = .004, d = .53, or mammal faces (M = 47%, SD = 18%), t(32) = 5.26, p < .001, d = .91, and a lower percentage of trials in which participants looked to primate faces compared to mammal faces, t(32) = 2.16, p = .038, d = .38. There was also a main effect of Target type (F(1,32) = 7.94, p = .008, ηp2 = .20), in which there was a higher percentage of trials in which there was a fixation on faces in the butterfly target absent condition (M = 43%, SD = 17%) compared to the car target absent condition (M = 35%, SD = 17%), t(32) = 2.82, p = .008, d = .49, and a main effect of Array size (F(2,62) = 4.97, p = .010, ηp2 = .13), in which there was a higher percentage of face looks in the 16-item (M = 40%, SD = 17%) and 36-item (M = 43%, SD = 19%) arrays, compared to the 64-item arrays (M = 35%, SD = 16%), t(32) = 2.20, p = .035, d = .38, and t(32) = 3.09, p = .004, d = .54, respectively. Finally, in target absent trials, there were two interactions: a Target type × Distractor type interaction (F(2,64) = 5.78, p = .005), and a Distractor type × Array size interaction (F(4,128) = 2.95, p = .023). We carried out one-way ANOVAs on each target type to see if there were differences across distractor types. For the car targets there was no effect of Distractor type, F(2,64) = 2.37, p = .102, but for the butterfly targets, there was an effect of Distractor type, F(2,64) = 15.40, p < .001, in which there was a lower percentage of trials with face fixations for human faces (M = 30%, SD = 21%), compared to primate faces (M = 46%, SD = 24%), t(32) = 3.38, p = .002, d = .59, and mammal faces (M = 54%, SD = 21%), t(32) = 5.56, p < .001, d = .97.
No other differences were significant, ps > .05.
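The distractor-type means reported for target-absent trials can be approximately recovered by averaging the corresponding cell means in Table 3. The sketch below is only a consistency check under the assumption that cells are weighted equally; because the table entries are rounded, the results match the reported means only to within rounding. The names `TABLE3_ABSENT` and `marginal_mean` are hypothetical.

```python
# Cell means (%) for target-absent trials, copied from Table 3.
# Keys: (target type, array size) -> {distractor: % of trials with a face fixation}
TABLE3_ABSENT = {
    ("car", 16): {"human": 37, "primate": 28, "mammal": 32},
    ("car", 36): {"human": 28, "primate": 46, "mammal": 43},
    ("car", 64): {"human": 29, "primate": 30, "mammal": 41},
    ("butterfly", 16): {"human": 36, "primate": 51, "mammal": 56},
    ("butterfly", 36): {"human": 28, "primate": 52, "mammal": 60},
    ("butterfly", 64): {"human": 25, "primate": 34, "mammal": 47},
}

DISTRACTORS = ("human", "primate", "mammal")


def marginal_mean(distractor, target=None):
    """Unweighted mean of Table 3 cells for one distractor type.

    If target is given, average only over that target type's cells.
    """
    values = [cell[distractor]
              for (tgt, _size), cell in TABLE3_ABSENT.items()
              if target is None or tgt == target]
    return sum(values) / len(values)


overall = {d: marginal_mean(d) for d in DISTRACTORS}
butterfly_only = {d: marginal_mean(d, target="butterfly") for d in DISTRACTORS}

for d in DISTRACTORS:
    print(f"{d}: overall {overall[d]:.1f}%, butterfly {butterfly_only[d]:.1f}%")
```

The overall averages (about 30.5%, 40.2%, and 46.5%) and the butterfly-only averages (about 29.7%, 45.7%, and 54.3%) line up with the reported means of 31%, 40%, and 47%, and of 30%, 46%, and 54%, respectively.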

Discussion

As in Experiments 1–3, we found some evidence that human faces capture attention more than animal faces. We found support for our prediction that task-irrelevant human faces interfere with non-face search efficiency, at least in some contexts. Specifically, gaze response times to car targets were slowed when there was a human face present, compared to when there was a mammal face or no face present. Interestingly, there was no difference in response times when the distractor face was human or primate, suggesting that primate faces may capture attention, perhaps due to their shared morphology with human faces. When participants searched for absent car targets, however, they were slower when there was a human face present, compared to primate faces, mammal faces, or when there was no face, suggesting that at least in some search contexts, human faces may be more distracting than primate faces. Together, these results are consistent with reports of stimulus-driven human face attention capture (Cerf, Frady, & Koch, 2009; Devue et al., 2012; Langton et al., 2008; Riby et al., 2012), supporting the proposition that human face search efficiency may be at least partially due to bottom-up processes.

Though unexpected, we also found differences in face distractibility across target types. For example, butterflies were identified as absent more efficiently than cars. Though speculative, one explanation for these findings is that searches were more efficient for evolutionarily relevant stimuli (in this case, butterflies) relative to evolutionarily novel stimuli (cars). This proposition is consistent with reports that evolutionary relevance and animacy improve search efficiency (e.g., Jackson, 2013; Öhman et al., 2001; Öhman et al., 2012).

Even more puzzling was our finding that the human face interference effect (whereby the presence of task-irrelevant human faces slowed search RT relative to animal face distractors) was only evident when targets were cars; in contrast, when targets were butterflies we found the opposite: a human face facilitation effect (whereby the presence of task-irrelevant human faces speeded target detection relative to animal face distractors). Human faces may be particularly effective at priming vigilance to other evolutionarily relevant stimuli. For example, fearful facial expressions can prime attention to potential threats in complex visual scenes (Longin, Rautureau, Perez-Diaz, Jouvent, & Dubal, 2012; Wieser & Kiel, 2013) and can improve visual search for nonthreatening objects (Becker, 2009). The present findings, however, are the first to our knowledge that suggest even neutral human faces may prime visual attention during goal-directed visual search. Though task-irrelevant neutral faces can facilitate search when neighboring targets (e.g., Devue et al., 2012), in the present study, faces always appeared in a different quadrant within the array, away from targets; therefore, facilitation based on spatial location seems unlikely.

Though we predicted that human faces, relative to animal faces, would be more likely to attract attention, we found no differences across face types in the percentage of trials in which there was a fixation to the task-irrelevant face. However, faces can produce priming effects even when visual attention is not focused on the faces (Finkbeiner & Palermo, 2009), so this lack of differential fixation across face distractors does not undermine their differential influence on target detection efficiency. In fact, when targets were absent, we found participants were less likely to fixate on human faces relative to primate and mammal faces, and less likely to fixate on primate faces relative to mammal faces. This effect was only present when the targets were butterflies, which suggests this effect may be due to the similarity between the targets and distractors. When targets and distractors are more similar, search efficiency decreases (Duncan & Humphreys, 1989). In the present study, nonhuman face distractors may have appeared more similar to insects than human faces, and therefore, may have been more distracting. Alternatively, searches for butterflies may have primed participants to detect animate items more generally.

General Conclusions

According to the Animate Monitoring Hypothesis, human and nonhuman animals were the most important categories of visual stimuli for ancestral humans to monitor, as they presented challenges and opportunities for survival and reproduction, such as threats, mates, food, and predators (New et al., 2007; Orians & Heerwagen, 1992). We extended this hypothesis and predicted that humans may efficiently search for the faces of both human and nonhuman animals. The present study had two primary goals: determine whether human faces are located more efficiently than other primate or mammal faces, and begin to examine the factors that may contribute to this human face search advantage, namely, whether search category level and face task-relevance influence search efficiency.

We found human faces appear to be located more efficiently than either primate or mammal faces. We next sought to rule out possible explanations for this finding. First, we tested whether search category level may account for the present results, given that more specific target categories are located more efficiently than more general target categories. Even when controlling for search category level, human faces were still located the most efficiently. Last, we tested whether face task-relevance may account for search efficiency; specifically, would faces still attract attention even when they are not goal-relevant? We found that, at least in some search contexts, human faces are more likely to distract viewers relative to animal faces, suggesting some bottom-up, stimulus-driven attention capture is at least partially responsible for the human face search efficiency advantage.

Together, our results show that human faces, relative to other primate and mammal faces, are located faster and more accurately, and more quickly declared absent when not present, when embedded in visually complex arrays. Though this may seem an obvious finding in retrospect, it is the first study—to our knowledge—in which this has been systematically examined. We also report the first results that nonhuman primate faces are located faster than mammal faces. This may be due to nonhuman primates’ phylogenetic closeness to humans—both being members of the same order—or may be due to the similarity in facial structures of nonhuman primates and humans (e.g., forward-facing eyes, shortened snout). In other words, one possibility is that humans’ extensive experience with human faces may have helped in the processing of primate faces. In contrast, mammal faces—and sheep faces in particular—do not appear to be similar enough to human faces that they likewise elicit efficient searches.

Although the present study provides evidence that human faces are located more efficiently than animal faces, it does not address the question of how this occurs, or through what mechanisms. Limited-capacity perceptual systems are unable to process all incoming information. Consequently, a process of selective attention occurs whereby attention is focused on some information at the expense of other information; this bottleneck can occur early or late in the temporal sequence of information processing (Broadbent, 1958; Deutsch & Deutsch, 1963). One way of testing where the bottleneck occurs is by varying perceptual load, which is the total amount of information available in the environment. Perceptual load can be manipulated by varying the complexity of visual stimuli: larger array sizes have higher perceptual load than smaller array sizes. When load is low, there can be considerable processing prior to selection, but when load is high, early selection is mandatory to reduce the number of incoming stimuli (Lavie, 1995). Consequently, if a stimulus is efficiently located in spite of high perceptual load, this suggests the stimulus made it past an early selection bottleneck, likely due to visual features of the stimulus. Different systems may be activated for high and low perceptual load, with high-load relying on perceptual features, possibly the posterior network, and low-load relying on semantic features, possibly the anterior network (Huang-Pollock, Carr, & Nigg, 2002). In the present study, we found that increases in perceptual load more negatively affected search efficiency for animal faces relative to human faces, which suggests human face searches may rely more on perceptual features. Similarly, when faces were task-irrelevant, perceptual load appeared to have equal effects on human and animal face distractors, suggesting that human faces are equally distracting, irrespective of perceptual load.

In terms of ultimate mechanisms, the sensory bias hypothesis proposes that facial expressions evolved to exploit existing capacities of the visual system (Horstmann & Bauland, 2006). In contrast, the threat advantage hypothesis proposes that the visual system evolved sensitivities to detect evolutionarily important stimuli (for recent reviews, see LoBue & Matthews, 2013; Quinlan, 2013). Further work is necessary to test these ultimate hypotheses, perhaps through comparisons with other primate species that vary in their ecology (e.g., facial expressiveness, sociality, predation risk).

In terms of the proximate mechanism, Experiment 4 provided some evidence that the superior search efficiency for human faces found in Experiments 1–3 may be at least in part due to bottom-up, stimulus-driven effects. Human faces, and perhaps also primate faces, appear to capture visual attention even when task-irrelevant, interfering with the efficiency of non-face target detection. In related work, we have similarly found that bottom-up effects appear to drive efficient orienting to human faces during passive viewing, with faster orienting responses to human faces relative to primate and mammal faces, both in adults (Jakobsen, Simpson, Umstead, Perta, Eisenmann, & Cover, 2013) and infants (Jakobsen, Umstead, Eisenmann, & Simpson, under review).

The specific face properties that capture attention, however, remain largely unexplored. The color and shape of human faces together may aid face detection (Bindemann & Burton, 2009). Though nonhuman primate faces have facial configurations similar to those of human faces, they differ in color, so it is possible that nonhuman primate faces may be located approximately as efficiently as greyscale human faces. In addition, human face search efficiency declines when faces are inverted (Garrido, Duchaine, & Nakayama, 2008), as does the interference effect of task-irrelevant human faces (Langton et al., 2008), suggesting the basic configuration of faces (e.g., top-heavy pattern) may be critical for attracting attention. Additional studies are necessary to systematically test these possibilities with both human and animal faces.

Examining a more diverse collection of nonhuman animal faces would also be a fruitful future direction. Specifically, it would be interesting to examine whether nonhuman primate faces are located with varying degrees of efficiency as a function of their phylogenetic closeness, for example, comparing great apes to lesser apes and monkeys. Within mammalian species it would be interesting to examine whether domesticated species elicit more efficient search strategies than non-domesticated species, and also whether predatory animals, such as wild cats—and particularly those exhibiting threatening expressions—are located more efficiently by human observers compared to non-threatening species. Some work suggests that threatening animals are no more likely to be detected when presented in heterogeneous arrays than non-threatening animals (Tipples et al., 2002).

Together, these results suggest that search efficiency for conspecifics’ faces may be privileged. Faces are unquestionably one of the most important visual stimuli for humans and other vertebrates (Little, Jones, & DeBruine, 2011; New et al., 2007; Öhman, 2007; Orians & Heerwagen, 1992). Indeed, the faces and bodies of conspecifics are located more efficiently than other visual stimuli (Jakobsen et al., 2013; Stein, Sterzer, & Peelen, 2012). Human faces orient attention more strongly than non-faces (Crouzet et al., 2010), producing fast orienting (i.e., saccades 100–110 ms), which may be beyond the control of the observer, occurring even when task-irrelevant (Morand, Grosbras, Caldara, & Harvey, 2010). Interestingly, many of these own-species perceptual sensitivities appear to be present early in life (Jakobsen et al., under review; LoBue, Rakison, & DeLoache, 2010; Simpson, Suomi, & Paukner, under review). Examining the development of this specialized sensitivity to own-species faces will begin to uncover the phylogenetic and ontogenetic contributions that shape visual perception.

Acknowledgments

This research was supported by P01HD064653-01 NIH grant.

The authors thank the University of Georgia Infant Laboratory undergraduate research assistants for their help with stimulus preparation. Thanks are also due to undergraduate research assistants at James Madison University for their help with programming tasks, testing participants, and preparing data for analysis. We would also like to thank Hika Kuroshima, Klaus Rothermund, and Gernot Horstmann for helpful comments and suggestions.

Footnotes

Portions of these data were presented at the Association for Psychological Science’s 24th Annual Convention, in May of 2012.

Contributor Information

Elizabeth A. Simpson, University of Parma and Eunice Kennedy Shriver National Institute of Child Health and Human Development

Haley L. Mertins, James Madison University

Krysten Yee, James Madison University

Alison Fullerton, James Madison University

Krisztina V. Jakobsen, James Madison University

References

- Ahissar M, Hochstein S. Learning pop out detection: Specifics to stimulus characteristics. Vision Research. 1996;36:3487–3500. doi: 10.1016/0042-6989(96)00036-3.

- Becker MW. Panic search fear produces efficient visual search for nonthreatening objects. Psychological Science. 2009;20(4):435–437. doi: 10.1111/j.1467-9280.2009.02303.x.

- Bhatia SK, Lakshminarayanan V, Samal A, Welland GV. Human face perception in degraded images. Journal of Visual Communication and Image Representation. 1995;6(3):280–295.

- Bindemann M, Burton AM. The role of color in human face detection. Cognitive Science. 2009;33:1144–1156. doi: 10.1111/j.1551-6709.2009.01035.x.

- Bindemann M, Burton AM, Jenkins R. Capacity limits for face processing. Cognition. 2005;98(2):177–197. doi: 10.1016/j.cognition.2004.11.004.

- Bindemann M, Burton AM, Langton SR, Schweinberger SR, Doherty MJ. The control of attention to faces. Journal of Vision. 2007;7(10). doi: 10.1167/7.10.15.

- Bravo MJ, Farid H. The specificity of the search template. Journal of Vision. 2009;9:1–9. doi: 10.1167/9.1.34.

- Broadbent D. Perception and Communication. London: Pergamon Press; 1958.

- Brosch T, Sander D, Scherer KR. That baby caught my eye… Attention capture by infant faces. Emotion. 2007;7:685–689. doi: 10.1037/1528-3542.7.3.685.

- Brown V, Huey D, Findlay JM. Face detection in peripheral vision: Do faces pop out? Perception. 1997;26:1555–1570. doi: 10.1068/p261555.

- Cerf M, Frady EP, Koch C. Faces and text attract gaze independent of the task: Experimental data and computer model. Journal of Vision. 2009;9(12). doi: 10.1167/9.12.10.

- Cerf M, Harel J, Einhäuser W, Koch C. Predicting human gaze using low-level saliency combined with face detection. In: Platt JC, Koller D, Singer Y, Roweis S, editors. Advances in neural information processing systems. Vol. 20. Cambridge, MA: MIT Press; 2008.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience. 2002;3(3):201–215. doi: 10.1038/nrn755.

- Crouzet SM, Kirchner H, Thorpe SJ. Fast saccades toward faces: Face detection in just 100 ms. Journal of Vision. 2010;10:1–17. doi: 10.1167/10.4.16.

- Deutsch JA, Deutsch D. Attention: Some theoretical considerations. Psychological Review. 1963;70(1):80–90. doi: 10.1037/h0039515.

- Devue C, Belopolsky AV, Theeuwes J. Oculomotor guidance and capture by irrelevant faces. PLOS ONE. 2012;7(4):e34598. doi: 10.1371/journal.pone.0034598.

- Di Giorgio E, Turati C, Altoe G, Simion F. Face detection in complex visual displays: An eye-tracking study with 3- and 6-month-old infants and adults. Journal of Experimental Child Psychology. 2011 Apr;113:66–77. doi: 10.1016/j.jecp.2012.04.012.

- Drewes J, Trommershäuser J, Gegenfurtner KR. Parallel visual search and rapid animal detection in natural scenes. Journal of Vision. 2011;11:1–21. doi: 10.1167/11.2.20.

- Duncan J, Humphreys GW. Visual search and stimulus similarity. Psychological Review. 1989;96:433–458. doi: 10.1037/0033-295x.96.3.433.

- Finkbeiner M, Palermo R. The role of spatial attention in nonconscious processing: A comparison of face and nonface stimuli. Psychological Science. 2009;20(1):42–51. doi: 10.1111/j.1467-9280.2008.02256.x.

- Fletcher-Watson S, Findlay JM, Leekam SR, Benson V. Rapid detection of person information in a naturalistic scene. Perception. 2008;37:571–583. doi: 10.1068/p5705.

- Garrido L, Duchaine B, Nakayama K. Face detection in normal and prosopagnosic individuals. Journal of Neuropsychology. 2008;2(1):119–140. doi: 10.1348/174866407X246843.

- Gliga T, Elsabbagh M, Andravizou A, Johnson M. Faces attract infants’ attention in complex displays. Infancy. 2009;14:550–562. doi: 10.1080/15250000903144199.

- Golan T, Bentin S, DeGutis JM, Robertson LC, Harel A. Association and dissociation between detection and discrimination of objects of expertise: Evidence from visual search. Attention, Perception, and Psychophysics. doi: 10.3758/s13414-013-0562-6. (in press) Advanced Online Publication.

- Hershler O, Golan T, Bentin S, Hochstein S. The wide window of face detection. Journal of Vision. 2010;10:1–14. doi: 10.1167/10.10.21.

- Hershler O, Hochstein S. At first sight: A high-level pop out effect for faces. Vision Research. 2005;45:1707–1724. doi: 10.1016/j.visres.2004.12.021.

- Hershler O, Hochstein S. With a careful look: Still no low-level confound to face pop out. Vision Research. 2006;46:3028–3035. doi: 10.1016/j.visres.2006.03.023.

- Honey C, Kirchner H, VanRullen R. Faces in the cloud: Fourier power spectrum biases ultrarapid face detection. Journal of Vision. 2008;8:1–13. doi: 10.1167/8.12.9.

- Horstmann G, Bauland A. Search asymmetries with real faces: Testing the anger-superiority effect. Emotion. 2006;6(2):193–207. doi: 10.1037/1528-3542.6.2.193.

- Horstmann G, Becker SI, Bergmann S, Burghaus L. A reversal of the search asymmetry favouring negative schematic faces. Visual Cognition. 2010;18(7):981–1016. doi: 10.1080/13506280903435709.

- Horstmann G, Lipp OV, Becker SI. Of toothy grins and angry snarls: Open mouth displays contribute to efficiency gains in search for emotional faces. Journal of Vision. 2012;12(5):1–15. doi: 10.1167/12.5.7.

- Huang-Pollock CL, Carr TH, Nigg JT. Development of selective attention: Perceptual load influences early versus late attentional selection in children and adults. Developmental Psychology. 2002;38(3):363–375. doi: 10.1037//0012-1649.38.3.363.

- Jackson RE. Evolutionary relevance facilitates visual information processing. Evolutionary Psychology. 2013;11(5):1011–1026.

- Jakobsen KV, Simpson EA, Umstead L, Perta A, Eisenmann V, Cover S. Human faces capture attention more efficiently than animal faces in complex visual arrays. Poster presented at the Association for Psychological Science 25th Annual Convention; Washington, DC. 2013 May.