Abstract

Objective. To provide a computer-based learning method for pharmacy practice that is as effective as paper-based scenarios, but more engaging and less labor-intensive.

Design. We developed a flexible and customizable computer simulation of community pharmacy. Using it, the students would be able to work through scenarios which encapsulate the entirety of a patient presentation. We compared the traditional paper-based teaching method to our computer-based approach using equivalent scenarios. The paper-based group had 2 tutors while the computer group had none. Both groups were given a prescenario and postscenario clinical knowledge quiz and survey.

Assessment. Students in the computer-based group had generally greater improvements in their clinical knowledge score, and third-year students using the computer-based method also showed more improvements in history taking and counseling competencies. Third-year students also found the simulation fun and engaging.

Conclusion. Our simulation of community pharmacy provided an educational experience as effective as the paper-based alternative, despite the lack of a human tutor.

Keywords: simulation, community pharmacy, game, serious games, virtual community pharmacy

INTRODUCTION

Community pharmacists in Australia, as in many other countries, perform a broad range of activities above and beyond dispensing medications.1 One of their most valuable services is performing clinical interventions, where they identify an actual or potential drug-related problem (DRP) and take actions to resolve it.2,3 In order to detect and resolve DRPs, the pharmacist must not only have extensive clinical knowledge of the multitude of problems that can occur, but must also be sufficiently trained to detect the problems, which may require targeted communication with the patient, prescriber, and/or referral to patient records. At the same time the community pharmacist is under significant time pressure, with typically heavy workloads and waiting patients, so it is important that the pharmacist’s investigation be efficient.4,5

Pharmacy curricula in Australia are for either a 4-year bachelor of pharmacy degree or a 3-year master of pharmacy degree. Curricula are required to comprise contemporary pharmaceutical sciences, pharmacotherapeutics, and pharmacy practice and to be delivered via a combination of didactic and experiential learning activities. After graduation, pharmacy students complete an additional 12 months of supervised practice prior to undertaking registration exams and registering as a pharmacist. Students are typically presented with opportunities to practice clinical, dispensing, and counseling skills in the classroom by working through patient scenarios.6 However, because of budget limitations, students have few opportunities to interact with a patient actor or gain experience in a realistic pharmacy environment.6 At most Australian universities, pharmacy students are sent on workplace-integrated learning experiences (placements) during their studies, where they are expected to work under supervision in real pharmacy settings for short periods of time; however, there are no minimum or maximum hours of experiential practice required in the accreditation standards.7 These opportunities are an important educational experience,8 yet limited positions are available because student numbers are growing and the cost of providing placements is high. It is becoming increasingly difficult to provide an adequate number of placements of a suitable duration.6 It is therefore important to ensure that students are given supplementary training to complement and better prepare them for their practice experiences, so that they can gain maximum educational value from their limited time.

Simulated activities are seen as one potential solution to this problem and are becoming popular approaches in health care training; however, no minimum or maximum simulation-hour requirements currently exist in Australian accreditation standards.9,10 In recent years, significant focus has been on high-fidelity live simulations, where students interact with real machines in a real environment. This focus is likely attributable to the success of SimMan (Laerdal Medical Corporation, Gatesville, Texas), a simulated patient mannequin that can be programmed to play out many scenarios and respond appropriately to a wide variety of medical interventions.9-11 This form of high-fidelity live simulation is valuable to students, allowing them to practice their physical techniques and observe the consequences of their interventions. However, high development, purchasing, and maintenance costs are associated with this kind of machine, and there are limitations to a mannequin, such as its inability to move independently, that make it unsuitable for training in many areas of pharmacy practice.

Another approach is to make entirely virtual simulations, where human players use simulated systems in a synthetic environment.12,13 Historically, this method of simulation has been relatively unpopular in health care settings, perhaps because of the low detail of the virtual environments and the high cost of development. In recent years, however, with massive improvements in computational and, particularly, graphics processing power, and with the development of affordable and accessible game development engines such as Unity and the Unreal Engine, this style of virtual simulation has become an increasingly viable option.12-14 Once initial development costs are borne, virtual simulations can be cheaper and more accessible to students, since they do not require demonstrators or actors.

Our aim was to develop a virtual simulation using a modern game engine that allows pharmacy students to play through pharmacy practice scenarios in a fun, productive, and engaging way on a personal computer. Ideally, to make the software cost effective, a trained tutor or demonstrator should not be required. To ensure that scenarios are relevant, up to date, and adequately address current learning objectives, we had teachers write and edit the scenarios themselves, without assistance from a software developer or technician. In order to be considered a success, this simulation needed to provide equivalent or better learning outcomes when compared to the current practice approach of asking students to address paper-based pharmacy practice scenarios.

DESIGN

We developed a computer-based simulation of a community pharmacy, complete with a front desk, front of shop area, dispensary, dispensing computer, and telephones. This simulation was developed using the Unity3D game development environment (Unity Technologies, San Francisco, California). All a student needs to participate is access to a relatively low-end computer or laptop, or potentially a tablet device. Using a mouse-and-keyboard, first-person control scheme, which would be familiar to any student who has played a first-person computer game, student players take on the role of pharmacist in this virtual community pharmacy. They are given complete freedom to walk around all areas of the pharmacy and make context-appropriate interactions with relevant items, such as the products on the shelves, the dispensing computer, the telephone, and the patients who enter the pharmacy.

The simulation is highly detailed and customizable. Educators are free to write scenarios that cover almost any pharmacy practice issue, whether related to non-prescription or prescription medications, or just general health advice. These scenarios are written using an offline scenario-builder tool we also developed. Using this tool, the educator can enter all relevant patient details, all the dialog options that should be available in each section, and the associated scores and feedback. The educator can also select events that occur when certain medications or classes of medications are provided, or when certain score thresholds are crossed. It takes our clinical pharmacists about 4 hours to write a reasonably complex scenario.

To start a session, the student can choose one or more scenarios from a bank of prewritten scenarios. When they have selected which scenarios they want to play through, the patients will start to arrive in the pharmacy. As each patient arrives, they will walk up to the dispensary and look to the player for assistance. The student can then interact with the patient, initiating a conversation. Conversations with the patient are typically broken up into 4 phases or groups: (1) Opening questions/greetings – where the student ascertains who the patient is, the purpose of the pharmacy visit, and what he or she wants; (2) History taking – where the student can ask questions to ascertain the appropriate course of action; (3) Recommendations – where the student can indicate recommendation(s) to the patient; (4) Advice – where the student can provide additional counseling advice to the patient. In addition, there are 3 other groups of dialog options available through interaction with the telephone: (1) call the prescriber; (2) call the hospital; (3) call a nearby pharmacy.

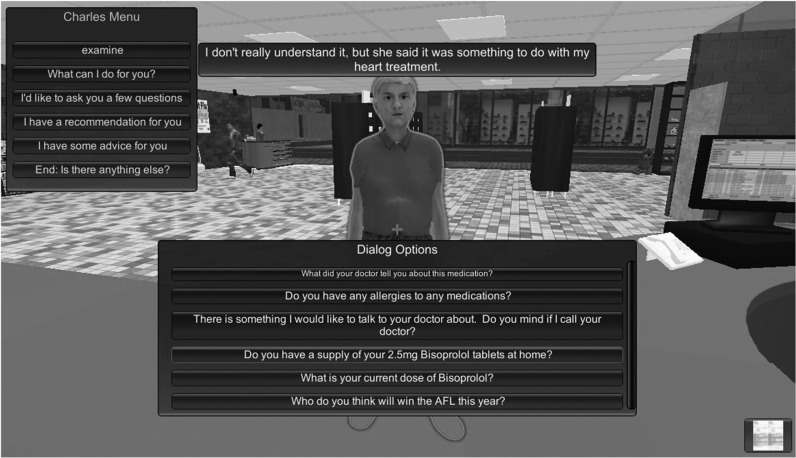

The student can select any of these conversation phases at any time. Selecting a phase brings up the list of currently available dialog options for that phase. When selected by the student, dialog options typically result in a text response from the patient, and an animation (such as nodding, head shaking, or anger, as selected by the scenario author). The scenario author can also include an optional audio or image response. For example, when dealing with a patient complaining about red eyes, the student might ask to examine the eyes and be presented with a photograph of an eye affected by conjunctivitis. A screenshot showing the dialog system in the simulation is provided in Figure 1. Dialog options can also have complex logical dependencies, allowing the scenario author to make dialog options available or not, depending on any combination of other dialog options being previously asked or not asked.

Figure 1.

A screenshot of the simulator showing the “question” dialog menu for the first patient (“Charles”) scenario.
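The dependency behavior described for dialog options can be illustrated with a small sketch. This is not the scenario-builder's actual data model, which is not described in detail here; the `DialogOption` class, its field names, and the `available_options` helper are hypothetical, and the sketch assumes dependencies are a simple conjunction of "already asked" and "not yet asked" conditions.

```python
from dataclasses import dataclass, field


@dataclass
class DialogOption:
    """One selectable dialog line (hypothetical structure, for illustration)."""
    option_id: str
    phase: str       # e.g. "opening", "history", "recommendation", "advice"
    text: str        # what the student says
    response: str    # the patient's text response
    score: int = 0
    requires_asked: set = field(default_factory=set)      # all must have been asked
    requires_not_asked: set = field(default_factory=set)  # none may have been asked


def available_options(options, phase, asked):
    """Return the options in `phase` whose dependencies are met by `asked`."""
    return [
        o for o in options
        if o.phase == phase
        and o.requires_asked <= asked
        and o.requires_not_asked.isdisjoint(asked)
    ]


# Illustrative options based on the red-eye example in the text.
options = [
    DialogOption("ask_symptoms", "history",
                 "What symptoms are you having?",
                 "My eyes are red and itchy.", score=1),
    DialogOption("examine_eyes", "history",
                 "May I have a look at your eyes?",
                 "[photograph of the affected eye]", score=2,
                 requires_asked={"ask_symptoms"}),
]

before = [o.option_id for o in available_options(options, "history", set())]
after = [o.option_id for o in available_options(options, "history", {"ask_symptoms"})]
print(before)
print(after)
```

In this sketch the examination option only becomes selectable once the symptom question has been asked; richer logic (for example, "either of two questions asked") would need a more expressive condition than two sets.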

Initially, the student asks opening questions, to determine why the patient is at the pharmacy and what they want. At this stage they will typically ascertain what the patient’s complaint is and whether they have a prescription or not. After this, the student asks questions to determine exactly what the patient needs, and whether there are any other problems that need to be addressed. Once the student determines why the patient is at the pharmacy, and what the appropriate course of action is, an appropriate recommendation can be selected from the range of options: refer the patient to their general practitioner (ie, primary care physician), refer the patient to the hospital, provide a product, or do nothing.

If the student decides to provide a product, they must retrieve the product from the store shelves. They must also go through the dispensing process at the dispensing computer for prescription products. After clicking on the computer, a dispensing interface displays on the screen, with the typical set of fields to complete. When a student types in the patient’s name, the patient record fields automatically populate with any known data about that patient, such as their dispensing history, recorded medical conditions, allergies, and date of birth. The student can then search for and select the product they wish to dispense from the product database. To help students locate the products in the crowded dispensary, the products they search for in the dispensing computer are highlighted with a glowing blue outline in the simulated environment. The student must then enter usage instructions, pack size, and the number of repeat refills prescribed. The student can check that the label being generated matches the box he or she has picked up (and prescription details if relevant) and then apply the label to the box. The student can also print off a consumer medicines information leaflet from this interface.

Once the student has finished dispensing the product, he or she can approach the patient with the items. At this stage, the student typically provides advice about how to correctly use the product and/or monitor symptoms. Once the student completes the scenario to his or her satisfaction, the scenario ends.

At this point, the scenario scorecard appears, providing feedback on why every action the student took was appropriate or not, and giving a score. The student’s total score is shown, as well as a percentage score, so the student knows how much room for improvement there is. A “Play Again” button also appears on this interface, and students who are dissatisfied with their score are encouraged to repeat the scenario.
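As a rough illustration of how such a scorecard could be assembled, the sketch below totals the points for the actions a student took and expresses the total as a percentage of the maximum achievable score. The `build_scorecard` function, the tuple layout, and the example actions and point values are assumptions made for illustration, not the simulator's actual scoring rules.

```python
def build_scorecard(actions_taken, max_score):
    """Summarize a finished scenario.

    actions_taken: list of (description, points, feedback) tuples,
                   where points may be negative for inappropriate actions.
    max_score:     the highest total achievable in the scenario.
    """
    total = sum(points for _, points, _ in actions_taken)
    percentage = 100.0 * total / max_score if max_score > 0 else 0.0
    lines = [f"{points:+d}  {description}: {feedback}"
             for description, points, feedback in actions_taken]
    return {"total": total, "max": max_score,
            "percentage": percentage, "lines": lines}


# Hypothetical actions and point values, for illustration only.
actions = [
    ("Asked about current dose", 2, "Needed to assess the dose change."),
    ("Called the prescriber", 3, "Appropriate way to resolve the problem."),
    ("Dispensed without checking history", -1, "History should be reviewed first."),
]
card = build_scorecard(actions, max_score=10)
print(card["total"], card["max"], card["percentage"])
for line in card["lines"]:
    print(line)
```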

The first prototype of the pharmacy simulator was evaluated using a randomized controlled trial, with volunteer third-year and fourth-year pharmacy students in a 4-year bachelor’s degree program at the University of Tasmania as participants. These groups were chosen because they were sufficiently advanced in their pharmacotherapeutics studies to be able to complete the scenarios successfully. Students were split into their respective year group and then randomly allocated into either the computer-based or paper-based groups to ensure an even split of third-year and fourth-year students in each group.
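The allocation described above, splitting by year group first and then randomizing within each year, is a form of stratified randomization. A minimal sketch follows; the function name, the student identifiers, and the rule for placing the odd student out are assumptions, not the procedure actually used in the study.

```python
import random


def allocate_by_year(students, seed=None):
    """Randomly allocate students to two arms, stratified by year.

    students: list of (student_id, year) tuples.
    Returns a dict mapping arm name to a list of students.
    """
    rng = random.Random(seed)
    by_year = {}
    for student in students:
        by_year.setdefault(student[1], []).append(student)

    arms = {"computer": [], "paper": []}
    for year in sorted(by_year):
        group = by_year[year][:]
        rng.shuffle(group)
        cut = len(group) // 2
        # With an odd number in a year, pick at random which arm gets the extra.
        if len(group) % 2 == 1 and rng.random() < 0.5:
            cut += 1
        arms["computer"].extend(group[:cut])
        arms["paper"].extend(group[cut:])
    return arms


# Hypothetical cohort: 8 third-year and 8 fourth-year volunteers.
students = [(f"S{i:02d}", 3) for i in range(8)] + \
           [(f"S{i:02d}", 4) for i in range(8, 16)]
arms = allocate_by_year(students, seed=1)
for arm, members in arms.items():
    print(arm, sorted(members))
```

Shuffling within each year before splitting keeps the year mix balanced across arms, which simple randomization of the whole cohort does not guarantee in small samples.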

Each group completed 2 pharmacy practice scenarios using either the traditional paper-based approach or the computer simulation. In the 2 scenarios, students considered a patient, Charles, with a history of heart failure. Charles presented in the first scenario with a new prescription from his general practitioner for bisoprolol 5mg, yet he had only been taking bisoprolol 2.5mg for a week (dose escalation too rapid based on Australian guidelines15). In the second scenario, Charles presented with back pain, and a prescription from a temporary replacement general practitioner for diclofenac (not recommended for patients with heart failure according to Australian guidelines15). The scenarios were pilot-tested by 3 PhD students to ensure they worked correctly.

The goal of the scenarios was to provide a community pharmacy setting in which students could develop and practice identifying and resolving common DRPs that can arise in patients undertaking treatment for heart failure, particularly DRPs surrounding dose escalation with bisoprolol and the use of non-steroidal, anti-inflammatory drugs. Another goal was that students would learn peripheral information by inspecting associated guidelines that they would refer to in their attempts to successfully complete the scenarios.

Both groups completed a preassessment, 9-question, clinical knowledge, multiple-choice quiz focused on their knowledge of heart failure treatment, and a self-assessment survey, which asked them to rate their own competencies in a range of important pharmacist skills. After the students had completed the scenarios, they were given the same clinical knowledge quiz, the same self-assessment survey, and an additional 8-question survey, which asked how much they enjoyed the scenarios and how much they thought they learned. Students in the computer-based group were also asked an additional 8 questions to determine how useable they thought the software was and were asked to provide feedback on how the software could be improved in the future. The preassessment and postassessment surveys and quizzes are available from the author.

Both groups of students were encouraged to look up relevant materials while they were studying the scenarios. Both groups were given an equal amount of time to complete the preassessment items (10 minutes), the scenarios (40 minutes), and the postassessment items (10 minutes) for each scenario. The paper-based group was given access to 2 demonstrators with appropriate clinical knowledge with whom they could discuss the scenarios. The computer-based group was supervised by 2 demonstrators with no clinical knowledge, who could only help the students with any technical issues.

This study was approved by the Social Sciences Human Research Ethics Committee at the University of Tasmania.

EVALUATION AND ASSESSMENT

The 33 students who participated in the study were randomly allocated to 2 groups, with 16 students in the computer-based group (8 from the third year, 8 from the fourth year), and 17 in the paper-based group (10 from the third year, 7 from the fourth year). The paper-based group had an extra third-year student because one student signed up but did not participate. All statistical analyses were performed using SPSS Statistics 20 (IBM, Armonk, New York).

Using a repeated measures ANOVA, we determined that clinical knowledge quiz scores did not significantly improve after students completed the scenarios (F(1, 29)=0.63, p=0.433), but the difference between the groups’ scores approached significance (F(1, 29)=3.85, p=0.059). The computer-based group achieved a mean change in their quiz score of 0.6 (standard error (stderr)=0.3), while the paper-based group had a mean change of -0.2 (stderr=0.3).

When looking at specific year groups, the differences in quiz scores became more apparent. Third-year students had an average change of 1.1 (stderr=0.6) in the computer-based group and -0.1 (stderr=0.5) in the paper-based group, while fourth-year students had a smaller difference between groups with average changes of 0.1 (stderr=0.2) and -0.4 (stderr=0.4), respectively. However, given the small sample sizes, these differences were not significant (third-year students, p=0.12; fourth-year students, p=0.21).
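For readers unfamiliar with the summary statistics reported above, the mean change and its standard error can be computed from paired prescenario and postscenario scores as sketched below. The scores shown are invented purely for illustration and are not the study data; the function name is also hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def change_summary(pre, post):
    """Return (mean change, standard error) for paired pre/post scores."""
    changes = [after - before for before, after in zip(pre, post)]
    # Standard error of the mean: sample standard deviation / sqrt(n).
    return mean(changes), stdev(changes) / sqrt(len(changes))


# Invented quiz scores (out of 9) for four hypothetical students.
pre_scores = [4, 5, 6, 5]
post_scores = [5, 6, 6, 7]
mean_change, stderr = change_summary(pre_scores, post_scores)
print(mean_change, round(stderr, 3))
```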

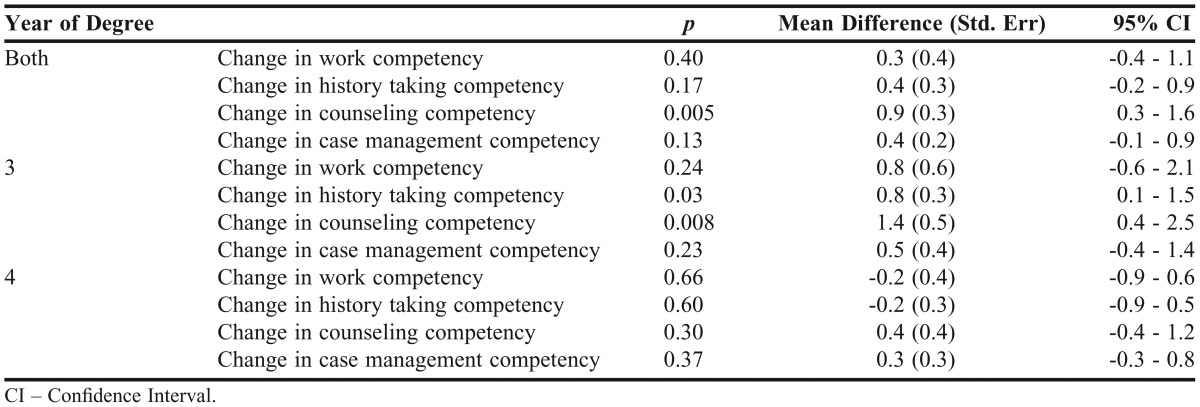

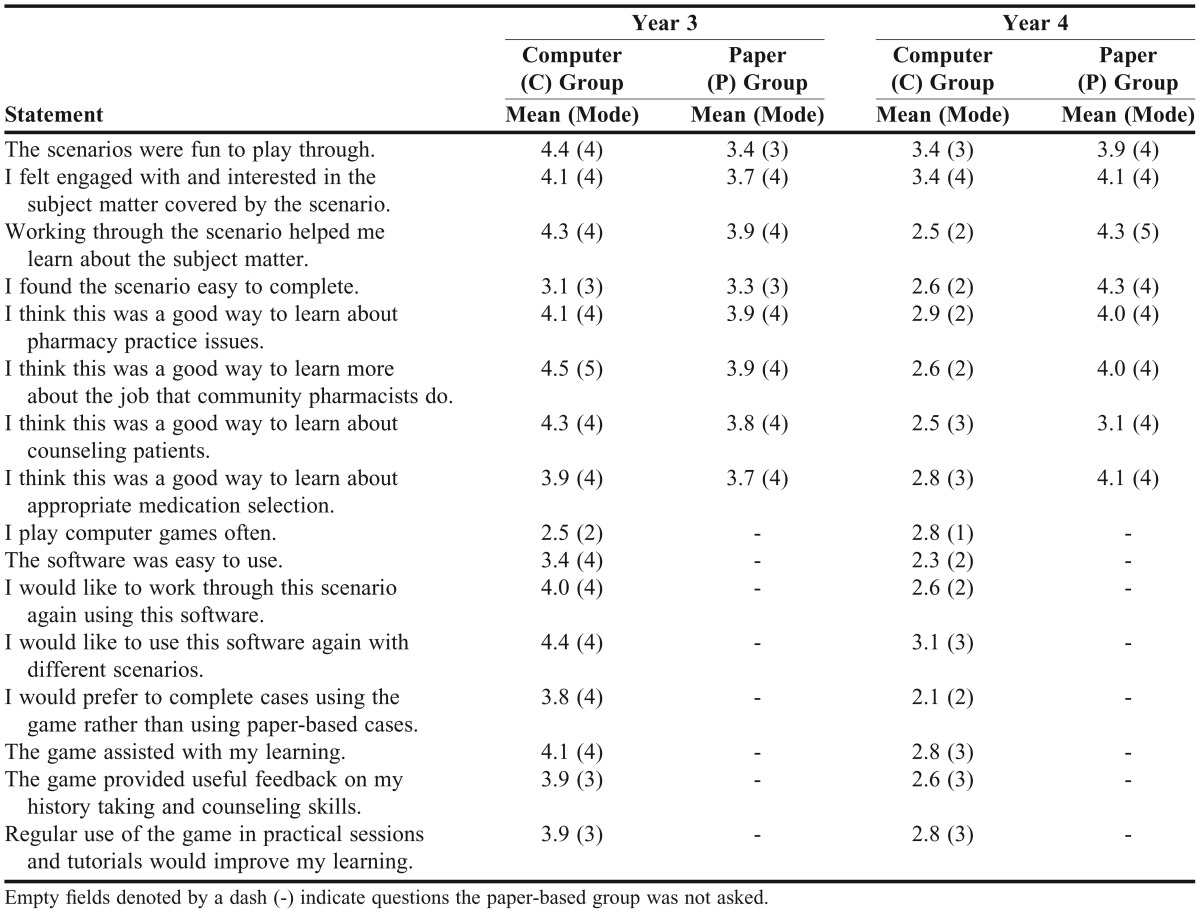

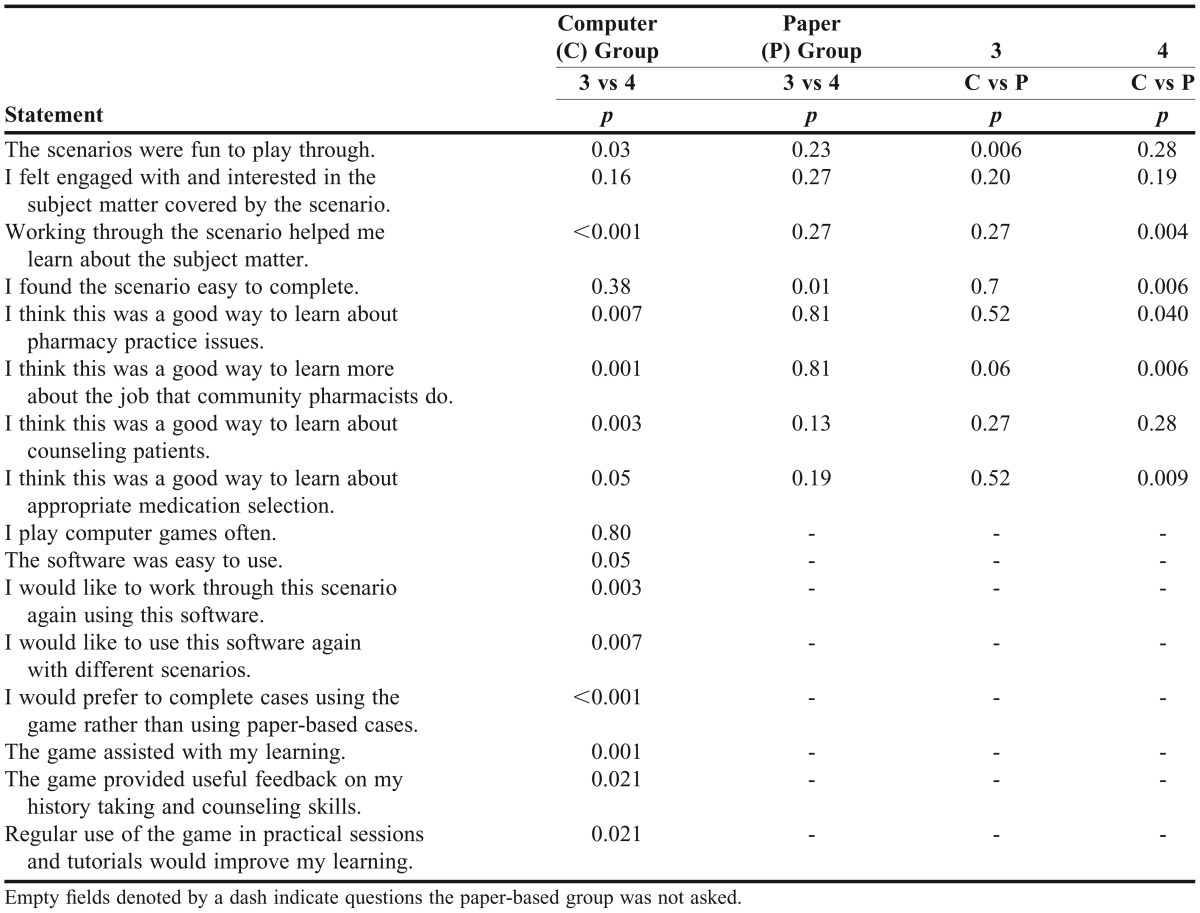

In the self-assessed competency responses, there were also noticeable, but not significant, differences in the preassessments and postassessments, with the exception of counseling competency, which did appear to have improved in the computer-based group (mean 0.6), and worsened in the paper-based group (mean -0.3), resulting in a significant difference (p=0.005), as seen in Table 1. The differences were more pronounced in third-year students than they were in the fourth-year students. Performing the same tests again, it was found that in third-year students, there was a significant improvement in self-rated history taking (p=0.029) and counseling competency (p=0.008), but there were no significant differences seen in fourth-year students for these skills, as seen in Table 1.

Student responses to the Likert-scale survey questions were compared using means and modes, as seen in Table 2, as well as Mann-Whitney U tests, shown in Table 3. The responses again showed a stark contrast between third-year and fourth-year cohorts in the computer-based group. Of the 16 survey questions asked, third-year and fourth-year student responses were significantly different in all but 3. Computer-based simulation was generally better received among third-year students than among their fourth-year counterparts. In this group, the only questions agreed upon by students from both years were about engagement, ease of the scenario, and how often they played computer games. In contrast, third-year and fourth-year students in the paper-based group provided significantly different responses on only 1 of the 8 questions that were relevant to both groups, which asked how easy they found it to complete the scenario. Not surprisingly, fourth-year students found the scenario easier than third-year students did.

Table 1.

Independent Sample t tests for the Pre- and Post-self-rated Competencies in the Computer-based vs Paper-based Groups Split Across Year of Degree

Table 2.

Summary of the Survey Responses by Group and Year of Study (rated 1=strongly disagree to 5=strongly agree)

Table 3.

Comparison of Student Responses to the Survey Questions
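The Mann-Whitney U statistic used to compare Likert-scale responses can be computed by counting, over every pair of responses drawn from the two groups, how often one group's response exceeds the other's, with ties counted as half. The sketch below shows only the statistic, not the p-value, and uses invented ratings; the analyses reported here were run in SPSS, and in Python a statistics package such as SciPy (`scipy.stats.mannwhitneyu`) would normally be used instead.

```python
def mann_whitney_u(x, y):
    """Return (U_x, U_y) for two independent samples, ties counted as 0.5."""
    u_x = 0.0
    for a in x:
        for b in y:
            if a > b:
                u_x += 1.0
            elif a == b:
                u_x += 0.5
    u_y = len(x) * len(y) - u_x
    return u_x, u_y


# Invented 5-point Likert ratings for two hypothetical groups.
group_a = [5, 4, 4, 5]
group_b = [3, 3, 4, 2]
u_a, u_b = mann_whitney_u(group_a, group_b)
print(u_a, u_b)
```

The two statistics always sum to the number of pairs; a value near zero for one group indicates that its responses are almost always lower than the other group's.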

By comparing groups across years rather than comparing years across groups, we saw that the third-year students in the computer-based group reported having significantly more fun than their counterparts in the paper-based group and perhaps thought the computer-based simulation was a better way to learn about the job that community pharmacists do (borderline p=0.055), but otherwise responded similarly in terms of level of engagement and learning outcomes (third results column in Table 3). In contrast, the fourth-year students showed differences in their responses between the 2 groups in 5 of the 8 categories, with a preference for the paper-based approach.

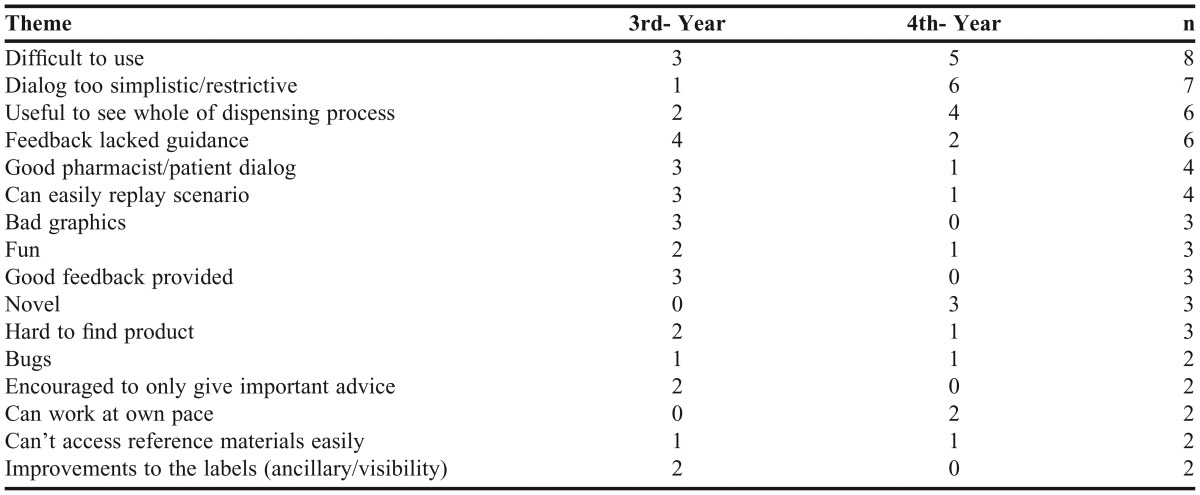

The computer-based group’s responses to the open-ended questions asking for negative comments, positive comments, and missing features were subjected to thematic analysis. From the 16 responses, 16 recurring themes were identified and are displayed with counts of incidence (that is, the number of students that identified the theme in their comments) in each year group, as well as total counts of incidence in Table 4. The most common comments concerned difficulty using the system, overly simplistic dialog options, and the value of being able to perform the whole dispensing process.

Table 4.

The Number of Students Who Mentioned Each of the 16 Themes in Their Survey Comments

DISCUSSION

Our aim was to provide a tool that, without the need for a human tutor, could provide learning outcomes as good as those resulting from traditional paper-based pharmacy practice tutorials. Our results suggest that this aim was achieved.

Third-year students showed an apparent improvement in their clinical knowledge after using the computer-based simulation compared to the paper-based group. However, this difference was not statistically significant, most likely because the small sample gave the study limited statistical power. Unfortunately, since this study was run with access to a limited pool of students and, for ethical reasons, had to be run outside of normal classroom activities, it was not possible to get a greater number of students to participate. Further evaluation activities outside of the University of Tasmania are currently underway to remedy this.

Third-year students in the computer-based group reported a significantly improved perception of their own history-taking and counseling competencies (Table 1). The patient counseling component of the simulation was criticized by some users as being too simple, since students were not required to use their own words. However, this approach could be beneficial, particularly for students earlier in their degree, as it provided examples of how to appropriately frame counseling points and also kept the student focused on providing the most appropriate and relevant counseling, rather than figuring out how to best word their questions or advice. The feedback also appeared to be of substantial value to students, allowing them to learn from both their mistakes and their successes.

Third-year students were generally in agreement with the positively themed statements, while fourth-year students were generally neutral, but leaned more toward disagreement. Perhaps most notably, the third-year students thought the computer-based scenarios were more fun than the paper-based scenarios, whereas the fourth-year students did not. Similarly, third-year students thought that the computer-based simulation was a good way to learn about community pharmacy, while fourth-year students did not. Contributing most to this difference was that the 2 scenarios were intentionally targeted at third-year students because we assumed scenarios targeted at fourth-year students would be too advanced for third-year students. As a consequence, the fourth-year students may have considered the scenarios too easy to learn from.

Overall, the biggest complaint from students was that the simulation was difficult to use. We were surprised to discover that relatively few (6 of 16) students agreed with the statement “I play computer games often,” and this is likely to have contributed to the problem, as it was clear on the day that many of the students were uncomfortable and unfamiliar with the typical mouse-and-keyboard control scheme used in first-person games. However, it was also clear that the majority of students had overcome their difficulties once they were asked to complete case 2, so we suspect that their responses in this area were influenced by their initial awkward experiences.

Many students reported appreciating that the simulation covered the whole pharmacist process, from initial greeting through to dispensing and advice giving, and that it showed this process in a well-structured way. Feedback included “It was interesting to see the whole process of dispensing and have control over that.” This highlights a significant advantage of the simulation over traditional teaching methods, which often focus on specific jobs of a pharmacist independently and without context.

A common complaint was that the computer-based simulation did not tell the student what they were missing. The feedback the simulation provided students detailed every action the student had made, including how many points it was worth and why. It did not tell them if they had failed to perform important actions. A solution to this problem might be to break down the possible scores by category, so the students know which areas they need to improve in if they want to achieve a perfect score.
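The per-category breakdown suggested above could look something like the following sketch, which reports earned versus possible points in each category without revealing the specific actions that were missed. The function name, category labels, action identifiers, and point values are all hypothetical, and the sketch assumes every scored action belongs to exactly one category.

```python
def category_breakdown(possible_actions, performed_ids):
    """Return {category: (earned, possible)} for one scenario attempt.

    possible_actions: list of dicts with "id", "category", and "points" keys,
                      covering every point-scoring action in the scenario.
    performed_ids:    set of action ids the student actually performed.
    """
    summary = {}
    for action in possible_actions:
        earned, possible = summary.get(action["category"], (0, 0))
        possible += action["points"]
        if action["id"] in performed_ids:
            earned += action["points"]
        summary[action["category"]] = (earned, possible)
    return summary


# Hypothetical scoring actions for a single scenario.
scenario_actions = [
    {"id": "ask_current_dose", "category": "history", "points": 2},
    {"id": "ask_other_medicines", "category": "history", "points": 1},
    {"id": "advise_monitor_symptoms", "category": "advice", "points": 3},
]
breakdown = category_breakdown(scenario_actions, {"ask_current_dose"})
for category, (earned, possible) in breakdown.items():
    print(f"{category}: {earned}/{possible}")
```

Showing only category totals tells the student where points were missed while still leaving them to work out which actions were needed on a repeat attempt.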

SUMMARY

The computer-based simulation was at least as effective as, and in some cases, more effective than the paper-based alternative for training third-year pharmacy students on issues of pharmacy practice, despite the absence of clinically trained tutors. The students enjoyed it more, and it is possible, although not clear, that they may have learned more. However, in its current form, the simulation did not seem appropriate for the more advanced fourth-year students, although this may have simply been because the scenarios lacked enough complexity.

Once a sufficient bank of scenarios is written, we believe this simulator will be able to augment existing teaching activities throughout the degree, not only at our school, but also nationally and internationally.

ACKNOWLEDGMENT

Partial funding for this project was made available by the Health Workforce of Australia (HWA) as an Australian Government initiative.

REFERENCES

- 1. Pharmaceutical Society of Australia. Professional Practice Standards Version 3. Canberra: Pharmaceutical Society of Australia; 2006.

- 2. Benrimoj S, Langford J, Berry G, Collins D, Lauchlan R, Stewart K. Economic impact of increased clinical intervention rates in community pharmacy: a randomised trial of the effect of education and a professional allowance. Pharmacoeconomics. 2000;18(5):459–468. doi: 10.2165/00019053-200018050-00005.

- 3. Westerlund T, Marklund B. Assessment of the clinical and economic outcomes of pharmacy interventions in drug-related problems. J Clin Pharm Ther. 2009;34(3):319–327. doi: 10.1111/j.1365-2710.2008.01017.x.

- 4. Peterson G, Tenni P, Jackson S, et al. Documenting Clinical Interventions in Community Pharmacy: PROMISe III. Hobart: University of Tasmania; 2010.

- 5. Westerlund T, Almarsdattir AB, Melander A. Factors influencing the detection rate of drug-related problems in community pharmacy. Pharm World Sci. 1999;21(6):245–250. doi: 10.1023/a:1008767406692.

- 6. Health Workforce of Australia. University of Newcastle & University of Tasmania; 2010. Use of simulation in pharmacy school curricula.

- 7. Australian Pharmacy Council. Accreditation Standards for Pharmacy Programs in Australia and New Zealand. Australian Pharmacy Council Ltd; 2012.

- 8. Choi M. Communities of practice: an alternative learning model for knowledge creation. Br J Educ Technol. 2006;37(1):143–146.

- 9. Ilgen J, Sherbino J, Cook D. Technology-enhanced simulation in emergency medicine: a systematic review and meta-analysis. Acad Emerg Med. 2013;20(2):10. doi: 10.1111/acem.12076.

- 10. Cook D, Brydges R, Zendejas B, Hamstra S, Hatala R. Mastery learning for health professionals using technology-enhanced simulation: a systematic review and meta-analysis. Acad Med. 2013;88(8):1178–1186. doi: 10.1097/ACM.0b013e31829a365d.

- 11. Fernandez R, Parker D, Kalus JS, Miller D, Compton S. Using a human patient simulation mannequin to teach interdisciplinary team skills to pharmacy students. Am J Pharm Educ. 2007;71(3):Article 51. doi: 10.5688/aj710351.

- 12. Bergeron B. Developing Serious Games (Game Development Series). Florence, KY: Thomson Delmar Learning; 2006.

- 13. Hansen MM. Versatile, immersive, creative and dynamic virtual 3-D healthcare learning environments: a review of the literature. J Med Internet Res. 2008;10(3):e26. doi: 10.2196/jmir.1051.

- 14. Susi T, Johannesson M, Backlund P. Serious Games: An Overview. Skövde: University of Skövde; 2007.

- 15. Krum H, Jelinek MV, Stewart S, Sindone A, Atherton JJ, Hawkes AL. Guidelines for the prevention, detection and management of people with chronic heart failure in Australia 2006. Med J Aust. 2006;185(10):549. doi: 10.5694/j.1326-5377.2006.tb00690.x.