Abstract

The study examined whether face-specific perceptual brain mechanisms in 9-month-old infants are differentially sensitive to changes in individual facial features (eyes vs. mouth) and whether sensitivity to such changes is related to infants’ social and communicative skills. Infants viewed photographs of a smiling unfamiliar female face. On 30% of the trials, either the eyes or the mouth of that face were replaced by corresponding parts from a different female. Visual event-related potentials (ERPs) were recorded to examine face-sensitive brain responses. Results revealed that increased competence in expressive communication and interpersonal relationships was associated with a more mature response to faces, as reflected in a larger occipito-temporal N290 with shorter latency. Both eye and mouth changes were detected, though infants derived different information from these features. Eye changes had a greater impact on the face perception mechanisms and were not correlated with social or communication development, whereas mouth changes had a minimal impact on face processing but were associated with levels of language and communication understanding.

Keywords: Face processing, ERP, infants

It is well-established that faces have a special status in perception and information processing due to the social nature of human interactions. Face processing is carried out by the brain’s social network (Johnson et al., 2005) and the development of this ability has been well documented. Certain capacity for face processing is present at birth (Easterbrook, Kisilevsky, Muir & Laplante, 1999; Walton & Bower, 1993); however, the development of more advanced skills is experience-dependent (Le Grand, Mondloch, Maurer, & Brent, 2001; Parker, Nelson, & The Bucharest Early Intervention Project Core Group, 2005). Newborns show a preference for simple face-like patterns relative to other stimuli (de Haan, Pascalis, & Johnson, 2002; Simion, Valenza, Umilta, & Dalla Barba, 1998; Valenza, Simion, Cassia, & Umilta, 1996), and at 4 days of age, infants can discriminate faces based on external features of the face/head; however, this discrimination ability disappears if the hairline is obscured (Pascalis, de Schonen, Morton, Deruelle, & Fabre-Grenet, 1995). Five-week-olds can recognize their mother from internal facial features alone (Bartrip, Morton, & de Schonen, 2001), and by 3 months, infants prefer the specific geometry of the face rather than the general top-heavy arrangement (Cassia, Kuefner, Westerlund, & Nelson, 2006). Six-month-old infants are equally good at discriminating human and monkey faces but by 9 months they lose the ability to discriminate the latter unless they have had sufficient experience (e.g., through picture books; Pascalis et al., 2005). 
Some report that 7–8-month-old infants are able to discriminate faces based on differences in distance between the features (e.g., nose and the mouth; Cohen & Cashon, 2001; Scott & Nelson, 2006; Thompson, Madrid, Westbrook, & Johnston, 2001), while others demonstrate that adult-like sensitivity to spacing of the features fully develops much later (Carey & Diamond, 1994; Chung & Thomson, 1995; Mondloch, Le Grand & Maurer, 2002).

Although many studies have examined general face perception in infants, few studies to date have investigated the contribution of individual features to the overall process. Recent reports demonstrate that even in the expert face processing of adults, featural information is utilized for face discrimination (same/different judgments; Rotshtein, Geng, Driver, & Dolan, 2007). In regard to specific features, some argue that eyes are the most salient (Maurer, 1985) and/or important facial feature (Bruce & Young, 1998), as infants as young as 3–5 weeks of age demonstrate preferential looking at the eyes, rather than mouth, of adults (Haith, Bergman, & Moore, 1977), and brain mechanisms for processing eye information mature faster than those for general face perception (Taylor, Gillian, Edmonds, McCarthy, & Allison, 2001). Recently, Bentin and colleagues (2006) used event-related potential (ERP) data to demonstrate convincingly that eyes play a special role in face processing for adults. They suggest that the information from the eye area determines whether or not the stimulus is channeled via the face-specific perceptual mechanism. For example, if a typical schematic face is presented, the stimulus is categorized as a face, eliciting a face-specific N170 response. However, when incongruent information is present at the eye location (e.g., drawings of objects), the N170 elicited by such stimuli is almost identical to the ERP elicited by single objects, despite the participants’ awareness that the drawing could be a face. In contrast, stimuli with small faces for the eyes elicited a N170 response similar to that elicited by regular schematic faces (Bentin, Golland, Flevaris, Robertson, & Moscovitch, 2006).

Existing research data also suggest that the relative importance of individual facial features can vary. For example, different feature preferences have been reported for persons with autism compared to those with typical development (Klin, Jones, Schultz, Volkmar, & Cohen, 2002). Typical children and adults tend to look more at the eyes of a face, while persons with autism often demonstrate fewer fixations on eyes and/or a relative proficiency in processing the mouth region (Dalton et al., 2005; Joseph & Tanaka, 2003; Klin et al., 2002) or focus on other facial characteristics such as chins or cheeks (Pelphrey et al., 2002). Such differences in feature preference may affect the speed of overall face processing. McPartland and colleagues reported delayed N170 latencies to faces but not to objects in adolescents and adults with autism (McPartland, Dawson, Webb, Panagiotides, & Carver, 2004).

The importance of individual features may also change in response to specific needs. For example, eye tracking data revealed that between 1.5 and 6.5 months of age, infants look predominantly at the eyes and mouth of their mother’s face (Hunnius & Geuze, 2004). However, while the relative amount of time spent fixating on each of these features is approximately equal at 3.5 months of age (48.10% vs. 44.88%), over the subsequent 3 months infants progressively increase the amount of time devoted to fixating on the mouth, and at 6.5 months they spend twice as long looking at it compared to the eyes (57.19% vs. 29.74%), possibly due to increased interest in language (Hunnius & Geuze, 2004). Changes in the distribution of fixations were also reported in adults, who tended to look more at the eyes when assessing prosody of speech and at the mouth when identifying words (Lansing & McConkie, 1999).

Thus, the existing findings suggest that processing of individual facial features may serve multiple purposes in addition to basic face perception. Moreover, the relative efficiency in processing eyes vs. mouth may be related to language and social functioning. However, such associations have not yet been investigated in typically developing infants. Doing so would contribute to the literature regarding the potential developmental importance of expertise in featural face processing.

The purpose of this study was two-fold: (1) to examine whether eye and mouth features have a different impact on the brain’s face perception mechanisms in 9-month-old infants as reflected by ERPs, and (2) to examine whether brain responses to faces and changes in their features are associated with infants’ social and communicative behaviors. The decision to focus on 9-month-olds was motivated by the fact that these participants already have sufficient expertise with human faces (Pascalis et al., 2002; Schwarzer, Zauner, & Jovanovic, 2007) but are still developing social and communicative skills.

If eyes and/or mouth features affect face-specific brain mechanisms, we expected such evidence to be present in amplitude and/or latency measures of N290 and P400 peaks (infant precursors of the adult N170; see de Haan, Johnson, & Halit, 2003, for a review) at the occipito-temporal scalp locations. The only prior study examining sensitivity of 8-month-old infants to featural changes reported delayed N290 latency for familiarized faces, and a larger P400 amplitude for altered faces in the left than right hemisphere (Scott & Nelson, 2006). However, that study did not examine responses to eye- and mouth-changes separately. Thus, due to the lack of prior studies on this topic and because amplitude and latency responses of N290 and P400 peaks vary with age (de Haan et al., 2003), we did not have specific a priori predictions regarding the direction of responses to eye or mouth changes compared to the standard face. Furthermore, if the changes in facial features do not affect the brain’s face-specific perceptual mechanisms per se but attract attention as rare novel/unfamiliar stimuli, perhaps due to subjective changes in face identity recognition, we expected to observe an increased Nc component to altered stimuli at fronto-central locations within 500–800 ms after stimulus onset. Nc has been frequently identified in infant ERP studies as sensitive to stimulus familiarity and probability (see de Haan et al., 2003 for review).

Method

Participants

Twenty infants (8 females), aged 9 months (M age = 271.5 +/− 10.4 days; 9.05 +/− .35 months), participated in the study after their parents provided written informed consent. All infants were reported to have typical development and no family history of developmental disabilities. Data from 4 additional infants were excluded due to an insufficient number of ERP trials retained after artifact detection.

Stimuli

The stimuli included three color photographs of an unfamiliar smiling female face: one represented the standard face, one represented the same face with different eyes, and one represented the same face with a different mouth (Figure 1). The novel features were obtained from a photograph of a different smiling female and introduced changes in the overall shape (including the degree of openness) of the eyes and mouth.

Figure 1.

Stimuli used in the oddball paradigm. Top = Standard face, Left = Mouth change, Right = Eyes change.

All stimulus alterations were made in Adobe Photoshop CS (v.8.0), and the novel features were placed at the corresponding locations in order to minimize changes to the original configural characteristics. The photographs subtended a visual angle of 20.93° (w) × 16.75° (h), with the eyes and mouth features occupying 5.4° × 1.43° and 3.82° × 1.43°, respectively. Thus, the on-screen stimuli were close to life-size and all facial features were clearly visible.
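As a rough check on the "close to life-size" statement, the reported visual angles can be converted to on-screen sizes. The sketch below assumes the 90 cm viewing distance given in the Procedure section and the standard relation size = 2 · d · tan(θ/2). The function names are illustrative and are not taken from the study's materials.

```python
import math

def size_from_visual_angle(angle_deg: float, distance_cm: float) -> float:
    """On-screen extent (cm) that subtends angle_deg at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

def visual_angle_from_size(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by an extent of size_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))

VIEWING_DISTANCE_CM = 90.0  # screen distance reported in the Procedure section

# Angles reported for the photograph and for the eye and mouth regions
photo_w = size_from_visual_angle(20.93, VIEWING_DISTANCE_CM)
photo_h = size_from_visual_angle(16.75, VIEWING_DISTANCE_CM)
eyes_w = size_from_visual_angle(5.4, VIEWING_DISTANCE_CM)
mouth_w = size_from_visual_angle(3.82, VIEWING_DISTANCE_CM)

print(f"photograph: {photo_w:.1f} cm wide x {photo_h:.1f} cm high")
print(f"eye region: {eyes_w:.1f} cm wide; mouth region: {mouth_w:.1f} cm wide")
```

Under these assumptions the eye region comes out at roughly 8.5 cm wide and the mouth at roughly 6 cm, which is in the range of adult facial dimensions.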

Electrodes

A high-density array net of 124 Ag/AgCl electrodes embedded in soft sponges (Geodesic Sensor Net, EGI, Inc., Eugene, OR) was used to record infant ERPs. Electrode impedance levels were adjusted to less than 40 kOhm. Data were sampled at 250 Hz with filters set to 0.1–30 Hz. During data collection, all electrodes were referenced to Cz; the data were re-referenced offline to an average reference.

Procedure

Each participant was tested while seated in the parent’s lap in a darkened sound-attenuated room. ERPs were obtained using a passive oddball paradigm with two blocks of 100 trials. The original face served as the standard stimulus in both blocks and was presented on 70% of the trials in each block. Within a block, either the eye-change or the mouth-change stimulus served as the deviant and was presented on the remaining 30% of the trials. The order of the blocks was counterbalanced across participants. Each trial began with a 500 ms fixation point (black plus sign on a white background) followed by a 1000 ms presentation of the face stimulus. The stimuli were presented against a black background in the center of the computer screen positioned 90 cm in front of the participant. The interstimulus interval varied randomly between 1100 and 1600 ms to prevent habituation to stimulus onset.
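The block structure described above can be sketched in a few lines. This is only an illustration: the text does not say how trial order was randomized or how the interstimulus interval was sampled, so the plain shuffle and the uniform integer draw below are assumptions, as are the function and label names.

```python
import random

def make_block(deviant, n_trials=100, p_deviant=0.30, rng=None):
    """Build one oddball block: 70% standard trials, 30% deviant trials.

    Assumptions (not stated in the text): trial order is a plain shuffle,
    and the interstimulus interval is drawn uniformly from 1100-1600 ms.
    """
    rng = rng or random.Random()
    n_deviant = round(n_trials * p_deviant)
    labels = ["standard"] * (n_trials - n_deviant) + [deviant] * n_deviant
    rng.shuffle(labels)
    return [
        {
            "stimulus": label,
            "fixation_ms": 500,                 # fixation cross duration
            "face_ms": 1000,                    # face presentation duration
            "isi_ms": rng.randint(1100, 1600),  # jittered interval
        }
        for label in labels
    ]

# Two blocks, one per deviant type; block order would be counterbalanced
rng = random.Random(0)
eye_block = make_block("eye_change", rng=rng)
mouth_block = make_block("mouth_change", rng=rng)
print(sum(t["stimulus"] == "standard" for t in eye_block), "standards in the eye block")
```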

Recording of the brainwaves was controlled by Net Station software (v. 4.1; EGI, Inc., Eugene, OR). Stimulus presentation was controlled by E-Prime (v. 1.1, PST, Inc., Pittsburgh, PA). During the entire test session, the infant’s electroencephalogram (EEG) and behavior were continuously monitored, and stimulus presentation occurred only when the infant was quiet and looking at the monitor. During periods of inattention and/or motor activity, stimulus presentation was suspended and a baby-friendly video (Baby Einstein series) was briefly presented on the monitor to attract the infant’s attention. The researcher present in the testing room also redirected infants to the computer screen using a wand with flashing spinning lights.

During the visit, mothers of the infants completed three subscales of the Vineland Adaptive Behavior Scales-II Parent/Caregiver Rating Form (VABS-II; Sparrow, Cicchetti, & Balla, 2005): Receptive Communication, Expressive Communication, and Interpersonal Relationships. These subscales were selected a priori because they measure constructs that are most directly related to our experimental measures of face perception. The Receptive Communication subscale included questions about how the infant listens and pays attention, as well as the words or concepts he/she understands. Questions related to the Expressive Communication focused on the sounds and gestures that the infant uses to make his/her wants known. The Interpersonal Relationships subscale assessed how the infant interacts with others, including how he/she expresses and recognizes emotions, responds to others, shows affection, and demonstrates imitation skills. These subscales yield standardized v-scores with a mean of 15 and a standard deviation of 3. All infants in the study scored in the average range (Receptive: M=15.26 +/− 2.28; Expressive: M=14.47 +/− 1.87; Interpersonal: M=14.00 +/− 1.56).

Data Analysis

Individual ERPs were derived by segmenting the ongoing EEG around each stimulus onset to include a 100-ms prestimulus baseline and a 900-ms post-stimulus interval. To avoid biasing the results due to a largely uneven number of standard and deviant trials (Thomas, Grice, Najm-Briscoe, & Miller, 2004), only the standard trials preceding a deviant stimulus were selected for the analysis. Resulting segments were screened for artifacts using Net Station tools followed by a manual review. Trials contaminated by eye or movement artifacts were excluded from the analysis. The remaining ERPs were referenced to an average reference and baseline corrected. For a data set to be included in the statistical analyses, individual condition averages had to be based on at least 10 trials. Trial retention rates were similar across stimulus conditions (M standard = 19.05 +/− 6.39, M eye change = 15.65 +/− 3.84, M mouth change = 15.55 +/− 4.29).
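The trial selection and segmentation steps can be illustrated with a minimal single-channel sketch. It assumes the 250 Hz sampling rate reported for the recording, reads "preceding a deviant" as "immediately preceding" (an interpretation, not something the text confirms), and uses hypothetical function names. The actual processing was done with Net Station tools, which this code does not reproduce.

```python
SAMPLE_RATE_HZ = 250             # sampling rate reported for the recording
BASELINE_MS, POST_MS = 100, 900  # segment limits relative to stimulus onset

def ms_to_samples(ms):
    """Convert a duration in ms to a whole number of samples."""
    return ms * SAMPLE_RATE_HZ // 1000

def standards_before_deviants(labels):
    """Indices of standard trials immediately followed by a deviant trial."""
    return [
        i for i in range(len(labels) - 1)
        if labels[i] == "standard" and labels[i + 1] != "standard"
    ]

def cut_segment(eeg, onset_index):
    """Cut one segment around onset_index and subtract the baseline mean."""
    n_base = ms_to_samples(BASELINE_MS)
    n_post = ms_to_samples(POST_MS)
    segment = eeg[onset_index - n_base : onset_index + n_post]
    baseline = sum(segment[:n_base]) / n_base
    return [value - baseline for value in segment]

# Toy data: a constant offset before onset and a step of 1 unit after it
labels = ["standard", "standard", "eye_change", "standard", "eye_change", "eye_change"]
selected = standards_before_deviants(labels)
toy_eeg = [2.0] * 25 + [3.0] * 225
segment = cut_segment(toy_eeg, onset_index=25)
print(selected, len(segment), segment[0], segment[-1])
```

With 4 ms per sample, each segment holds 25 baseline samples and 225 post-stimulus samples.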

To reduce the number of variables in the statistical analyses, data from 124 electrodes were submitted to a spatial principal components analysis (sPCA) that identified a small set of ‘virtual electrodes’ representing contiguous clusters of electrodes with similar ERP waveforms (see Spencer, Dien, & Donchin, 1999). Data from individual electrodes within a cluster were averaged together. In order to better relate the current results to existing literature, only electrode clusters corresponding to locations previously identified as the optimal sites for face-sensitive N290 and P400 peaks (occipito-temporal locations; Halit, de Haan, & Johnson, 2003; Scott & Nelson, 2006) and the novelty-sensitive Nc peak (fronto-central; de Haan & Nelson, 1997; de Haan et al., 2003) were selected for further analysis. Next, within each cluster, peak latency and mean amplitude measures were obtained for the N290 (250–350 ms), P400 (350–450 ms), and Nc (500–800 ms) peaks using the Net Station statistical extraction tool. Latency windows were determined based on examination of the grand-averaged waveform. Planned comparisons using t-tests were used to examine differences in ERP responses to the change stimuli compared to the standard face. For the N290/P400 peaks, these comparisons were performed separately for the left and right hemisphere, as prior infant studies have found the former to be potentially more sensitive to featural changes (Scott & Nelson, 2006).
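The two window measures named above, mean amplitude and peak latency, can be sketched for a single cluster-averaged waveform as follows. The sketch assumes 4 ms sampling and segments that start 100 ms before stimulus onset, treats the N290 and Nc as negative peaks and the P400 as a positive peak, and uses a hypothetical helper. It is not the Net Station extraction tool.

```python
import math

SAMPLE_MS = 4      # 250 Hz sampling: one sample every 4 ms
BASELINE_MS = 100  # each segment starts 100 ms before stimulus onset

# Latency windows from the text; the polarity labels are an assumption
WINDOWS = {
    "N290": (250, 350, "negative"),
    "P400": (350, 450, "positive"),
    "Nc": (500, 800, "negative"),
}

def window_measures(segment, start_ms, end_ms, polarity):
    """Return (mean amplitude, peak latency in ms) within a latency window."""
    first = math.ceil((start_ms + BASELINE_MS) / SAMPLE_MS)
    last = math.floor((end_ms + BASELINE_MS) / SAMPLE_MS)
    window = segment[first : last + 1]
    mean_amplitude = sum(window) / len(window)
    extreme = min(window) if polarity == "negative" else max(window)
    peak_index = first + window.index(extreme)
    return mean_amplitude, peak_index * SAMPLE_MS - BASELINE_MS

# Toy waveform: flat except for one negative deflection at 292 ms
segment = [0.0] * 250
segment[(292 + BASELINE_MS) // SAMPLE_MS] = -5.0
mean_amp, latency = window_measures(segment, *WINDOWS["N290"])
print(mean_amp, latency)
```

Because 250 ms and 350 ms fall between samples at this rate, the sketch rounds the window inward to the nearest samples that lie inside it.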

Additionally, statistically significant ERP effects were followed up with correlations between VABS-II v-scores on the Receptive Communication, Expressive Communication, and Interpersonal Relationships subscales and the amplitude and latency measures for the N290 and P400 peaks.
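Because every infant contributed data to all conditions, the planned comparisons are presumably paired tests, which is consistent with the 19 degrees of freedom reported for 20 infants. A minimal sketch of the paired t statistic follows. The input values are made up for illustration and are not data from this study, and the function name is hypothetical.

```python
import math
import statistics

def paired_t(condition_a, condition_b):
    """Paired-samples t statistic and its degrees of freedom."""
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1

# Made-up amplitudes for four hypothetical participants (illustration only)
standard = [1.0, 2.0, 3.0, 4.0]
deviant = [0.0, 0.0, 1.0, 1.0]
t_value, df = paired_t(standard, deviant)
print(f"t({df}) = {t_value:.3f}")
```

The p value would then come from a t distribution with n − 1 degrees of freedom, which the standard library does not provide.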

Results

The selected electrode clusters identified by the spatial PCA are presented in Figure 2. The left and right occipito-temporal electrode clusters overlapped locations previously used in infant face processing studies by de Haan et al. (2002). Mean amplitude and latency data are presented in Table 1.

Figure 2.

Electrode clusters identified by sPCA and used in the analyses.

Table 1.

Mean and SD of average amplitude and latency measures

| Peak | Scalp area | Average amplitude: STD | Average amplitude: E | Average amplitude: M | Latency (ms): STD | Latency (ms): E | Latency (ms): M |
|---|---|---|---|---|---|---|---|
| N290 | Left occipito-temporal | 5.05 (6.64) | 0.44 (7.11) | 3.65 (7.51) | 298.27 (26.04) | 284.30 (25.13) | 274.50 (18.05) |
| | Right occipito-temporal | −1.79 (6.80) | −0.58 (6.41) | −1.07 (8.00) | 292.24 (19.03) | 295.56 (23.36) | 289.16 (21.50) |
| P400 | Left occipito-temporal | 9.22 (6.12) | 3.33 (9.05) | 8.55 (6.30) | 409.70 (20.65) | 401.63 (24.39) | 401.63 (25.15) |
| | Right occipito-temporal | 2.43 (6.95) | 3.07 (7.31) | 4.03 (9.56) | 401.07 (22.94) | 401.67 (25.16) | 407.04 (27.27) |
| Nc | Frontal | −0.75 (5.98) | 0.74 (7.40) | 2.17 (6.89) | 591.28 (74.29) | 606.22 (82.33) | 625.69 (76.27) |
| | Central | 1.77 (4.40) | 2.35 (5.53) | 2.56 (6.24) | 596.08 (65.31) | 601.87 (80.45) | 627.39 (71.05) |

STD = standard face, E = eyes change, M = mouth change. Values are M (SD).

Face Perception (N290/P400)

Standard face

The standard face elicited an N290/P400 response with more negative amplitudes observed over the right than the left hemisphere (N290: t(19)=3.370, p=.003; d=1.04; P400: t(19)=3.079, p=.006; d=1.06).

The N290 amplitude over the left hemisphere correlated negatively with the receptive (r=−.478, p=.038) and expressive (r=−.662, p=.002) communication scores, indicating that a larger (more negative) peak was associated with higher levels of communication understanding and use. The left N290 latency correlated with the interpersonal relationships score (r=−.553, p=.014) as a faster response (shorter latency) was associated with higher levels of infants’ social interaction.

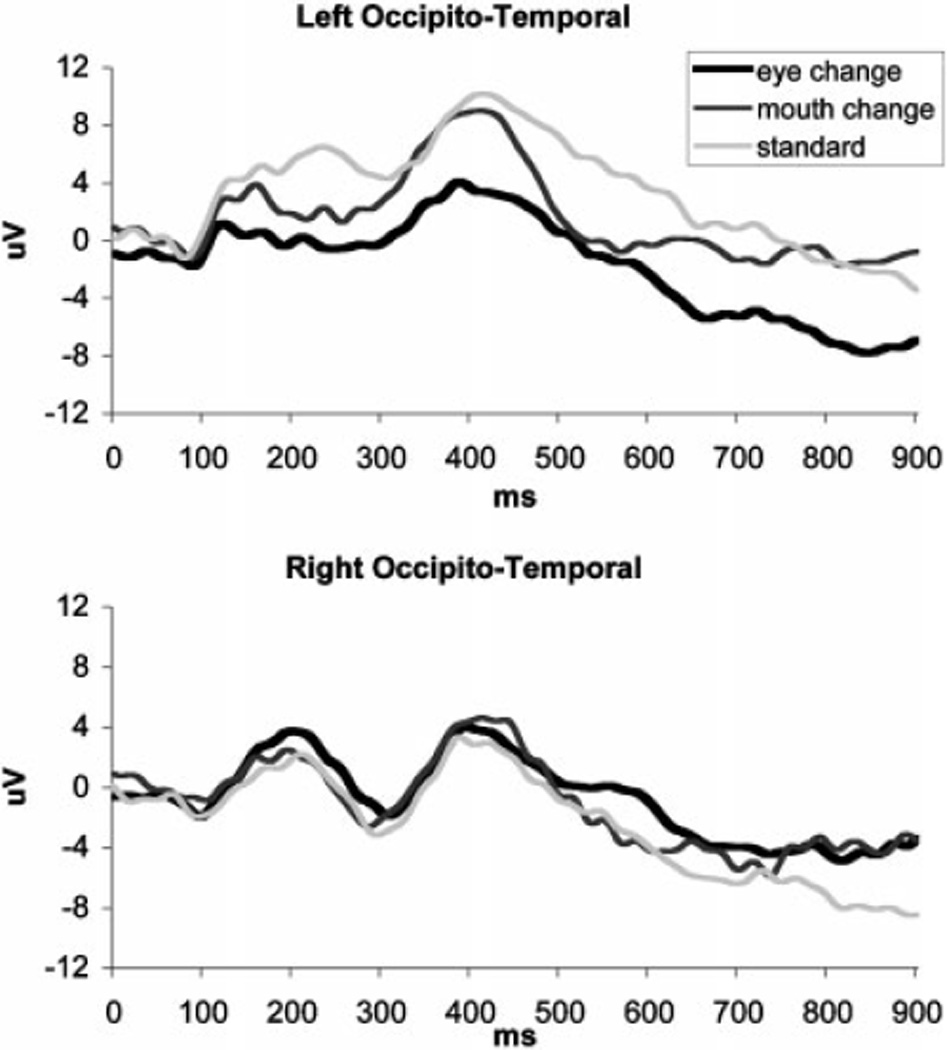

Eye change

Compared to the standard face, eye-change stimuli elicited a larger (more negative) left occipito-temporal N290 (t(19)=2.292, p=.034; d=.69) with shorter latency (t(19)=2.785, p=.012; d=.56; Figure 3). The left P400 response was also more negative (smaller amplitude) (t(19)=2.472, p=.023; d=.78). There were no significant correlations between the ERPs to eye change stimuli and behavioral scores.

Figure 3.

ERPs in response to standard and altered faces for left and right occipito-temporal clusters.

Mouth change

Relative to the standard face, mouth-change stimuli elicited an N290 with shorter latencies (t(19)=3.307, p=.004; d=1.06) over the left hemisphere, but no other differences between the two conditions reached significance. Similar to the standard face, the N290/P400 complex to the mouth change was more negative over the right than the left hemisphere (N290: t(19)=2.931, p=.027; d=.62; P400: t(19)=.221, p=.039; d=.56). The amplitude of the left N290 to the mouth change correlated with the receptive communication scores (r=−.491, p=.033).

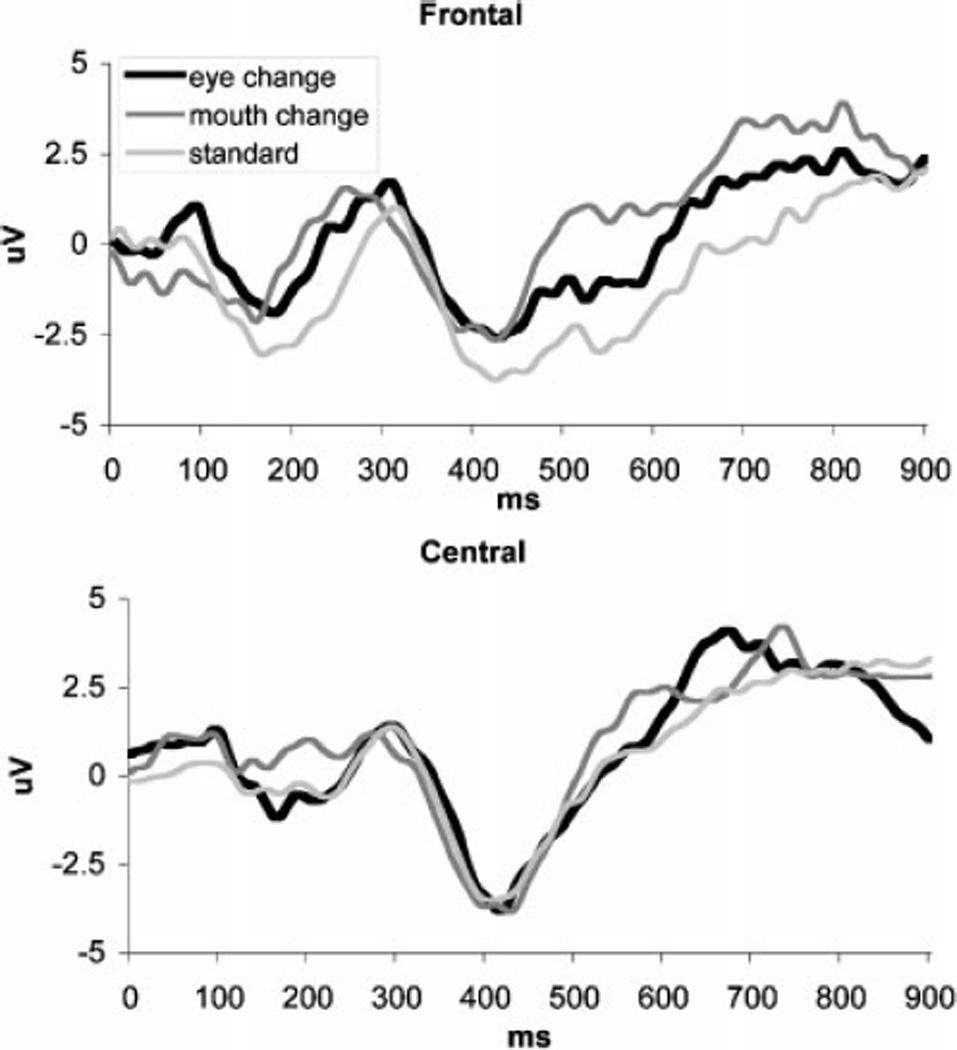

Face Recognition (fronto-central Nc)

There were no significant differences in mean amplitude or latency measures between either of the change stimuli and the standard face in the Nc time range for either frontal or central cluster (see Figure 4).

Figure 4.

ERPs in response to standard and altered faces for frontal and central clusters.

Discussion

This study examined how facial features (eyes vs. mouth) affect face perception mechanisms in 9-month-old infants as measured by ERPs and whether differences in face-sensitive brain responses are associated with infants’ social and communicative development.

Despite differences in paradigms (oddball vs. equiprobable), our findings were consistent with previous studies (de Haan et al., 2003; Nelson, 2001; Pascalis, de Haan, & Nelson, 2002) in identifying occipito-temporal N290 and P400 peaks as reflecting face perception in infants. The standard face elicited larger N290 over the right than left hemisphere. The pattern of increased negativity over the right hemisphere was also present for the P400 response, consistent with observations previously reported in 8-month-old infants (Scott & Nelson, 2006). This left-right asymmetry is similar to the hemisphere distribution frequently observed for adult N170 responses (Itier & Taylor, 2002; Bentin et al., 1996) but not consistently present in younger populations due to developmental differences (Taylor et al., 1999). A more mature left-hemisphere response to the standard face, as reflected in a larger N290 with shorter latency (i.e., more similar to the adult N170 characteristics; de Haan et al., 2003), was associated with increased competence in expressive communication and interpersonal relationships.

Compared to the standard face, eye changes were characterized by a larger N290 with shorter latency and smaller P400 amplitude over the left hemisphere sites. These effects can be interpreted as reflecting differential engagement of the face-specific perceptual mechanisms in response to the altered stimulus occasionally appearing among the frequent same-face presentations. Longer N290 latency has been reported for habituated than novel faces in 8-month-old infants (Scott & Nelson, 2006), and a smaller P400 amplitude appears to be present for novel faces in 9-month-olds (Scott, Shannon, & Nelson, 2006, Figure 3b). The left-lateralized location of the discrimination effects observed in our study is consistent with left-hemisphere advantage for featural processing reported in other infant studies (Deruelle & de Schonen, 1998; Scott & Nelson, 2006). Detection of the novel eyes would be possible only if the infants processed individual features in addition to the overall configuration of the presented faces because great care was exercised in creating the change stimuli as to minimize potential spatial layout alterations.

The increased N290 amplitude in response to eye changes, although not reported in prior studies, may further support the interpretation of more extensive face processing. Repeated exposure to faces with the same eyes (i.e., standard and mouth-change stimuli) could result in habituation of the perceptual mechanism to this facial feature and reduction of the ERP amplitude, while presentation of the novel eyes in the context of the same face led to dishabituation. Thus, our findings are consistent with the idea that information in the eye area is critical for the activation of face-specific perceptual mechanisms (Bentin et al., 2006), as reflected in amplitude modulations of the N290 and the subsequent P400 peaks.

The absence of the N290 amplitude effect in the Scott and Nelson (2006) study of 8-month-olds may be due to paradigm and analysis differences, as they presented standard faces, faces with feature changes, and novel faces equally often, and did not analyze eye- vs. mouth-change stimuli separately. Our pilot data from a group of 10 adults indicated that presenting both types of change stimuli intermixed within the same set of trials (i.e., as a multi-deviant oddball paradigm) resulted in reduced amplitudes of the ERPs for the infrequent stimuli compared to the blocked presentation.

The lack of correlations between infants’ ERP responses to eye changes and their social or communicative behaviors was surprising but may be better understood in light of Lansing and McConkie’s findings (1999) of more frequent fixations to the eyes when participants had to evaluate intonation of the spoken stimuli (e.g., happy or sad). It is possible that information in the eye region of the face assists in determining intentions or emotional states. In our stimuli, although the two sets of eyes did differ in the degree of openness and the amount of iris visible, both came from smiling female faces. Therefore, from a communicative perspective, the novel eyes carried similar affective information as the standard face. Future research is needed to examine whether changes in the expression of the eyes (in addition to or instead of the physical shape change) would be detected by infants and would correlate with social and communicative behaviors.

Mouth changes were typically processed in a similar manner as the standard face as reflected in similar patterns of hemisphere asymmetry and lack of amplitude differences for the N290 and P400 peaks. One explanation for this outcome could be that the novel mouth resulted in a lesser physical change compared to the novel eyes and therefore was not sufficiently salient. However, similar to the eye change stimuli, mouth changes elicited N290 with shorter latency than the standard face over the left hemisphere, suggesting that the infants did notice the mouth change, but it was not enough to alter the remaining steps of face processing. The mouth region may be more relevant for extracting information other than about the face itself. For example, Hunnius and Geuze (2004) suggest that the mouth may attract infants’ attention due to their developing interest in language. Klin et al. (2002) reported a positive association between increased attention to the mouth and more adaptive social functioning in adolescents with autism and proposed that it could reflect their concentration on speech as the means for understanding of the social situations. The correlations between the N290 amplitude to mouth changes and the infants’ receptive communication scores observed in our data set further support this interpretation of mouth processing as more relevant to language than to face perception.

It is interesting that despite the evident impact of the featural changes on the face perception mechanisms, neither eye- nor mouth-changes appeared to be sufficient to elicit a general non-face-specific novelty response, as there were no condition effects on the amplitude or latency of the Nc. This finding suggests that while 9-month-old infants are proficient in feature analysis, they may be already basing their overall face familiarity/novelty decisions on the configural information. Since our stimulus manipulations were designed to replicate the original placement of the individual features as much as possible, and the novel features were congruent with the general face template (e.g., we did not replace eyes with objects as in Bentin et al., 2006), the overall identity of the altered faces might have been perceived as unchanged, resulting in recognition of all faces as belonging to the same person. An alternative explanation for this finding may be that our participants did not develop sufficient familiarity with the standard face; however, this possibility is unlikely. Prior studies utilizing an oddball paradigm report that 4–7 month old infants are able to remember a frequently presented face and to discriminate it from a different face presented infrequently (Courchesne, Ganz, & Norcia, 1981). Therefore the 9-month-old participants in our study should be able to remember the standard face. Furthermore, the standard face was presented on 70% of trials in each of the two trial blocks. Thus, the accumulated looking time could be up to 140 sec. Infants in the Scott and Nelson (2006) study were considered to become familiar with the face after 20 sec accumulated looking time. Since our participants were older and had up to a 7-fold increase in the time spent looking at the standard face, we expect that they had sufficient opportunity to remember it. 
Another alternative explanation for the lack of a novelty response could be that we chose a less than optimal scalp location to examine the familiarity effect. Even 6-month old infants may utilize different face processing strategies depending on the difficulty of discrimination. When the standard and deviant faces are very similar, familiarity effects can be observed not for the fronto-central Nc response but for the posterior temporal positive slow wave (de Haan & Nelson, 1997). However, our post-hoc analyses of mean amplitudes at the posterior sites did not yield any significant differences between the standard and either eye- or mouth-change faces (p’s > .2).

Although many of our findings are consistent with the existing literature, the present study has several limitations. It is possible that our results are stimulus-specific as only one set of faces was used as the stimuli. The rationale for using a single set comes from the paradigm choice. To control for familiarity, we chose to use an unfamiliar face stimulus presented in an oddball paradigm in order to examine infants’ sensitivity to particular facial features. Using the same stimulus set ensured that the amount of change introduced by substituting eyes or mouth remained identical across all participants. However, future studies with different stimulus sets are needed to replicate our current findings. Another potential limitation may be the differences in the amount of change introduced by replacing the eyes vs. the mouth in our stimuli. The standard face and change stimuli differed in the overall shape of the eyes and mouth, but compared to the mouth, the eyes also presented a greater change in the degree of openness. It is possible that infants responded to differences in the amount of iris or pupil visibility by interpreting one stimulus to be less happy than the other. While this issue may be viewed as a potential confound, it does not contradict our interpretation that 9-month-old infants process eye information in great detail despite their general reliance on configural face processing. Nevertheless, follow-up studies will need to address the contribution of physical shape vs. affective expression of individual facial features. Finally, it is possible that the attention-getting visual stimuli (Baby Einstein videos, light-up toys, etc.) differentially affected infants’ familiarity with the experimental faces as some participants required more attention redirection than others. Our behavioral observations contradict this argument. 
Infants who experienced greater exposure to video and toy stimuli were the ones who showed greater habituation to the experimental faces – these infants were content to view novel visual stimuli (including faces from a different paradigm) but lost interest as soon as the familiarized face was presented. Furthermore, most breaks typically occurred toward the end of the first trial block, when infants have seen the standard face many times (accumulated looking time well in excess of 20 sec used as the habituation criterion by Scott & Nelson, 2006), and the oddball nature of the paradigm provided many opportunities to re-familiarize themselves with the standard stimulus following any breaks.

Overall, our results provide support for the idea that the eye region may be of special importance for face perception mechanisms while the mouth area carries information more relevant to communicative purposes. While both eye and mouth changes were detected, as reflected in changes of the N290 latency, the eye changes affected the face processing brain mechanisms to a greater extent, as reflected in larger N290 and smaller P400 amplitudes. These findings are consistent with the suggestion by Bentin et al. (2006) that eyes may serve as the basic marker of “faceness” that activates the rest of the face processing network.

An inability to process eyes may delay or alter the remaining face processing steps and have even larger implications for developmental outcomes. Infants’ ability to process specific eye information (e.g., gaze directed toward an object) has been correlated with object recognition (Reid, Striano, Kaufman, & Johnson, 2004). Future research might examine differences in featural face processing in infants at elevated risk for autism, which is defined by social and communication deficits and for which deficits in eye contact represent one of the most common early features (Baranek, 1999; Osterling, Dawson, & Munson, 2002). It is possible not only that differences in face processing strategies could be an early neurobehavioral marker for autism, but also that these deficits are amenable to intervention that results in improved social and language skills.

Acknowledgments

This work was supported in part by NICHD Grant P30 HD15052 to the Vanderbilt Kennedy Center and by a Marino Autism Research Institute (MARI) Discovery Award to Dr. Alexandra Key. We would like to thank Ms. Stephanie Bradshaw and Ms. Katie Knoedelseder for their assistance in recruiting and testing the participants, and Dr. Paul Yoder for his helpful comments on earlier versions of the manuscript.

References

- Baranek G. Autism during infancy: A retrospective video analysis of sensory-motor and social behaviors at 9–12 months of age. Journal of Autism and Developmental Disorders. 1999;29:213–224. doi: 10.1023/a:1023080005650.

- Bartrip J, Morton J, de Schonen S. Responses to mother’s face in 3-week to 5-month-old infants. British Journal of Developmental Psychology. 2001;19:219–232.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8(6):551–565. doi: 10.1162/jocn.1996.8.6.551.

- Bentin S, Golland Y, Flevaris A, Robertson C, Moscovitch M. Processing the trees and the forest during initial stages of face perception: electrophysiological evidence. Journal of Cognitive Neuroscience. 2006;18(8):1406–1421. doi: 10.1162/jocn.2006.18.8.1406.

- Bruce V, Young A. In the eye of the beholder: the science of face perception. New York: Oxford University Press; 1998.

- Carey S, Diamond R. Are faces perceived as configurations more by adults than by children? Visual Cognition. 1994;1(2/3):253–274.

- Cassia V, Kuefner D, Westerlund A, Nelson C. A behavioural and ERP investigation of 3-month-olds’ face preferences. Neuropsychologia. 2006;44:2113–2125. doi: 10.1016/j.neuropsychologia.2005.11.014.

- Chung MS, Thomson DM. Development of face recognition. British Journal of Psychology. 1995;86:55–87. doi: 10.1111/j.2044-8295.1995.tb02546.x.

- Cohen L, Cashon C. Do 7-month-old infants process independent features or facial configurations? Infant and Child Development. 2001;10:83–92.

- Courchesne E, Ganz L, Norcia AM. Event-related brain potentials to human faces in infants. Child Development. 1981;52(3):804–811.

- Dalton KM, Nacewicz BM, Johnstone T, Schaefer HS, Gernsbacher MA, Goldsmith HH, Alexander AL, Davidson RJ. Gaze fixation and the neural circuitry of face processing in autism. Nature Neuroscience. 2005;8:519–526. doi: 10.1038/nn1421.

- de Haan M, Johnson MH, Halit H. Development of face-sensitive event-related potentials during infancy: a review. International Journal of Psychophysiology. 2003;51(1):45–58. doi: 10.1016/s0167-8760(03)00152-1.

- de Haan M, Nelson CA. Recognition of the mother's face by six-month-old infants: A neurobehavioral study. Child Development. 1997;68(2):187–210.

- de Haan M, Pascalis O, Johnson MH. Specialization of neural mechanisms underlying face recognition in human infants. Journal of Cognitive Neuroscience. 2002;14(2):199–209. doi: 10.1162/089892902317236849.

- Deruelle C, de Schonen S. Do the right and left hemisphere attend to the same visuospatial information within a face in infancy? Developmental Neuropsychology. 1998;14:535–554.

- Easterbrook MA, Kisilevsky BS, Muir DW, Laplante DP. Newborns discriminate schematic faces from scrambled faces. Canadian Journal of Experimental Psychology. 1999;53(3):231–241. doi: 10.1037/h0087312.

- Haith MM, Bergman T, Moore MJ. Eye contact and face scanning in early infancy. Science. 1977;198:853–855. doi: 10.1126/science.918670.

- Halit H, de Haan M, Johnson MH. Cortical specialization for face processing: face-sensitive event-related potential components in 3- and 12-month-old infants. Neuroimage. 2003;19(3):1180–1193. doi: 10.1016/s1053-8119(03)00076-4.

- Hunnius S, Geuze R. Gaze shifting in infancy: a longitudinal study using dynamic faces and abstract stimuli. Infant Behavior and Development. 2004;27:397–416. doi: 10.1207/s15327078in0602_5.

- Itier R, Taylor M. Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: A repetition study using ERPs. Neuroimage. 2002;15:353–372. doi: 10.1006/nimg.2001.0982.

- Johnson M, Griffin R, Csibra G, Halit H, Farroni T, de Haan M, Tucker L, Baron-Cohen S, Richards J. The emergence of the social brain network: Evidence from typical and atypical development. Development and Psychopathology. 2005;17:599–619. doi: 10.1017/S0954579405050297.

- Joseph RM, Tanaka J. Holistic and part-based face recognition in children with autism. Journal of Child Psychology and Psychiatry. 2003;44:529–542. doi: 10.1111/1469-7610.00142.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry. 2002;59:809–816. doi: 10.1001/archpsyc.59.9.809.

- Lansing C, McConkie G. Attention to facial regions in segmental and prosodic visual speech perception tasks. Journal of Speech, Language, and Hearing Research. 1999;42:526–539. doi: 10.1044/jslhr.4203.526.

- Le Grand R, Mondloch C, Maurer D, Brent HP. Early visual experience and face processing. Nature. 2001;410:890. doi: 10.1038/35073749.

- Maurer D. Infants' perception of facedness. In: Field T, Fox N, editors. Social Perception in Infants. Norwood, NJ: Ablex; 1985. pp. 73–100.

- McPartland J, Dawson G, Webb SJ, Panagiotides H, Carver LJ. Event-related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. Journal of Child Psychology and Psychiatry. 2004;45(7):1235–1245. doi: 10.1111/j.1469-7610.2004.00318.x.

- Mondloch CJ, Le Grand R, Maurer D. Configural face processing develops more slowly than featural face processing. Perception. 2002;31:553–566. doi: 10.1068/p3339.

- Nelson C. The development and neural bases of face recognition. Infant and Child Development. 2001;10:3–18.

- Osterling JA, Dawson G, Munson JA. Early recognition of 1-year-old infants with autism spectrum disorder versus mental retardation. Development and Psychopathology. 2002;14:239–251. doi: 10.1017/s0954579402002031.

- Pascalis O, de Schonen S, Morton J, Deruelle C, Fabre-Grenet M. Mother’s face recognition by neonates: A replication and an extension. Infant Behavior and Development. 1995;18:79–85.

- Pascalis O, de Haan M, Nelson CA. Is face processing species-specific during the first year of life? Science. 2002;296(5571):1321–1323. doi: 10.1126/science.1070223.

- Pascalis O, Scott LS, Kelly DJ, Shannon RW, Nicholson E, Coleman M, et al. Plasticity of face processing in infancy. Proceedings of the National Academy of Sciences. 2005;102:5297–5300. doi: 10.1073/pnas.0406627102.

- Parker S, Nelson C, The Bucharest Early Intervention Project Core Group. An event-related potential study of the impact of institutional rearing on face recognition. Development and Psychopathology. 2005;17:621–639. doi: 10.1017/S0954579405050303.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. Journal of Autism and Developmental Disorders. 2002;32:249–261. doi: 10.1023/a:1016374617369.

- Reid V, Striano T, Kaufman J, Johnson M. Eye gaze cueing facilitates neural processing of objects in 4-month-old infants. Neuroreport. 2004;15:2553–2555. doi: 10.1097/00001756-200411150-00025.

- Rotshtein P, Geng J, Driver J, Dolan R. Role of features and second-order spatial relations in face discrimination, face recognition, and individual face skills: Behavioral and functional magnetic resonance imaging data. Journal of Cognitive Neuroscience. 2007;19:1435–1452. doi: 10.1162/jocn.2007.19.9.1435.

- Schwarzer G, Zauner N, Jovanovic B. Evidence of a shift from featural to configural face processing in infancy. Developmental Science. 2007;10:452–463. doi: 10.1111/j.1467-7687.2007.00599.x.

- Scott L, Nelson C. Featural and configural face processing in adults and infants: A behavioral and electrophysiological investigation. Perception. 2006;35:1107–1128. doi: 10.1068/p5493.

- Scott LS, Shannon RW, Nelson CA. Neural correlates of human and monkey face processing by 9-month-old infants. Infancy. 2006;10:171–186. doi: 10.1207/s15327078in1002_4.

- Simion F, Valenza E, Umilta C, Dalla Barba B. Preferential orienting to faces in newborns: a temporal-nasal asymmetry. Journal of Experimental Psychology: Human Perception and Performance. 1998;24(5):1399–1405. doi: 10.1037//0096-1523.24.5.1399.

- Sparrow SS, Cicchetti DV, Balla DA. Vineland Adaptive Behavior Scales, Second Edition. Circle Pines, MN: AGS Publishing; 2005.

- Spencer K, Dien J, Donchin E. A componential analysis of the ERP elicited by novel events using a dense electrode array. Psychophysiology. 1999;36:409–414. doi: 10.1017/s0048577299981180.

- Taylor M, McCarthy G, Saliba E, Degiovanni E. ERP evidence of developmental changes in processing of faces. Clinical Neurophysiology. 1999;110(5):910–915. doi: 10.1016/s1388-2457(99)00006-1.

- Taylor M, Edmonds G, McCarthy G, Allison T. Eyes First! Eye processing develops before face processing in children. NeuroReport. 2001;12:1671–1676. doi: 10.1097/00001756-200106130-00031.

- Thomas D, Grice J, Najm-Briscoe R, Miller J. The influence of unequal numbers of trials on comparisons of average event-related potentials. Developmental Neuropsychology. 2004;26:753–774. doi: 10.1207/s15326942dn2603_6.

- Thompson LA, Madrid V, Westbrook S, Johnston V. Infants attend to second-order relational properties of faces. Psychonomic Bulletin & Review. 2001;8:769–777. doi: 10.3758/bf03196216.

- Valenza E, Simion F, Cassia VM, Umilta C. Face preference at birth. Journal of Experimental Psychology: Human Perception and Performance. 1996;22(4):892–903. doi: 10.1037//0096-1523.22.4.892.

- Walton G, Bower T. Newborns form ‘prototypes’ in less than one minute. Psychological Science. 1993;4:203–205.