Abstract

Sustained and effective use of evidence-based practices in substance abuse treatment services faces both clinical and contextual challenges. Implementation approaches are reviewed that rely on variations of plan-do-study-act (PDSA) cycles, but most emphasize conceptual identification of core components for system change strategies. A 2-phase procedural approach is therefore presented based on the integration of TCU models and related resources for improving treatment process and program change. Phase 1 focuses on the dynamics of clinical services, including stages of client recovery (cross-linked with targeted assessments and interventions), as the foundations for identifying and planning appropriate innovations to improve efficiency and effectiveness. Phase 2 shifts to the operational and organizational dynamics involved in implementing and sustaining innovations (including the stages of training, adoption, implementation, and practice). A comprehensive system of TCU assessments and interventions for client and program-level needs and functioning is summarized as well, with descriptions and guidelines for applications in practical settings.

Keywords: technology transfer, strategic change, organizational assessments, client assessments, organizational development

Improving clinical practice in social and health care service settings by successfully implementing new and innovative evidence-based practices is challenging. Numerous “best practices” exist, with documented evidence of utility, so programs often need guidance in determining which ones will complement and improve their existing clinical services and meet client needs. Because of organizational and logistical barriers, however, selected innovations often do not reach the individuals for whom they are intended (Institute of Medicine, 2005; Wisdom et al., 2006). These barriers include lack of knowledge about promising new practices, being unaware of clients’ precise clinical needs, or insufficient preparedness or willingness to adopt and implement new approaches. Staff also may not understand potential benefits of the innovation or may lack the resources or expertise to initiate and sustain change, and training or management support may be inadequate. The challenge is to make available an integrated set of tools and guidelines for behavioral health programs to improve organizational functioning. Such tools are shown to enhance providers’ ability to adopt and implement new evidence-based practices and lead to more effective services.

In response to the slow pace of science influencing services, considerable emphasis and effort have been placed on increasing the capacity of programs to provide treatments based on science (Brown & Flynn, 2002). A number of scholars have described this transfer process and the organizational characteristics that are necessary for successful implementation to occur (Aarons, 2006; Fixsen, Naoom, Blasé, Friedman, & Wallace, 2005; Flynn & Simpson, 2009; Klein & Sorra, 1996; Roman & Johnson, 2002; Simpson & Flynn, 2007a). Within a broadening perspective of systems-level factors that influence treatment effectiveness, organizational functioning and readiness for utilization of evidence-based practices in particular are receiving increasing attention. For example, the National Institutes of Health calls for increased emphasis on dissemination and implementation research as a core component of its translational research agenda as a means of promoting the development and conversion of basic science findings into practical and sustainable applications in the field (Kerner & Hall, 2009). This paper begins with a summary of constructs important to implementation success and then reviews several change models currently being used in attempts to improve substance use disorders treatment in the United States. A comprehensive approach is then described for organizational development in social service agencies that will increase their capacity to change and improve service delivery. It is based on well-established models of organizational and client level change and utilizes readily available and accessible assessment tools for organizations, clinical practices, and client progress in order to make more informed decisions on where and how to intervene and make improvements.

Frameworks for Implementation

As the science of implementation has progressed and more research is appearing in the literature, several conceptual frameworks have been developed that summarize the critical domains and concepts involved in successful implementation of new evidence-based practices and processes. Fixsen and colleagues (Fixsen et al., 2005) describe an implementation framework that consists of a source or best example of the practice to be implemented, a destination or target for the implementation, a communication link or purveyors who actively work to implement the defined practice, a performance feedback mechanism, and a sphere of influence that acts directly or indirectly on the organization. Favorable outcomes are only achieved when effective programs are successfully implemented. Fixsen et al.’s model includes six core components for successful implementation - staff and practitioner selection, pre-service and in-service training, ongoing consultation and coaching, staff and program evaluation, facilitative administrative support, and systems interventions. These core components are viewed as comprising interactive and integrative processes, as strengths in one component can overcome weaknesses in another component.

A more recent comprehensive framework, presented by Damschroder and colleagues (Damschroder et al., 2009), is the Consolidated Framework for Implementation Research (CFIR). They identified five major domains derived from an examination of 19 implementation models and theories. Their approach was to identify all of the major elements in these models and consolidate similar concepts. Within each of the five domains, important constructs were described: (1) characteristics of the particular intervention being implemented; (2) the outer setting, which can include economic, political and social context; (3) the inner setting, which includes structural, political and cultural contexts in which the implementation process proceeds; (4) individuals involved with the intervention and/or implementation process; and (5) the implementation process itself. These domains are generally comparable to those described by Fixsen et al. (2005). Outer and inner settings comprise the sphere of influence; the intervention target in Fixsen’s nomenclature overlaps with the individuals involved with the intervention in CFIR terms. The CFIR also draws from a review by Greenhalgh and colleagues (Greenhalgh, Robert, Macfarlane, Bate, & Kyriakidou, 2004) which listed the innovation, individuals adopting the innovation, communication and influences, the implementation process, and the outer or inter-organizational context as critical factors.

The value of the CFIR is in bringing together ideas from a variety of implementation process models to formulate a central framework, but it does not specify the actual process of change within an organization needed for successful implementation, nor does it identify procedural steps a program can follow to help assure successful change. It is best characterized as an explanatory framework or taxonomy and is not intended as a logistical process or change model (Grol, Bosch, Hulscher, Eccles, & Wensing, 2007). As such, CFIR identifies four fundamental but generic activities that are essential in any process of implementation (planning, engaging, executing, and reflecting and evaluating). In contrast, “change models” offer more detailed, context-specific, and prescriptive guidance necessary for successful implementation. A variety of change models have been formulated and used to improve organizational functioning by identifying and implementing new organizational and clinical practices. Although they build on important elements described in the CFIR, no existing model is fully comprehensive or is applicable for all change situations. Several models commonly used in substance use disorder (SUD) treatment services will be described below, followed by a discussion of an integrated system based on the TCU Program Change and TCU Treatment Process models for identifying and implementing new evidence-based clinical practices.

Program Change Approaches

Organizational and program change approaches generally include some variant of the plan-do-study-act (PDSA) cycle, first described by Shewhart (1939) as plan-do-check-act (PDCA). This was later popularized by W. Edwards Deming (1982), who relabeled the “check” stage as “study” to better reflect its popular usage and applied it more systematically for process improvement. The model includes four steps: (1) plan – develop a plan for improvement; (2) do – implement the plan; (3) study – get feedback on the results of the plan; and (4) act – make improvements to the process based on the feedback. The cycle then repeats itself as continuing refinements are made. The PDSA approach describes a way of thinking about change more than a step-by-step generic process, and a variety of more specific tools often must be used at each step for implementation to be successful. However, PDSA is embedded intuitively in many if not most organizational change and clinical improvement models. Its importance lies in the emphasis on measurement and feedback as a critical piece of the change process.
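The four steps can be read as an iterative loop. The following minimal Python sketch only illustrates that logic and is not a procedure taken from Shewhart or Deming; the function name `run_pdsa`, the callables passed to it, the reminder-call scenario, and all numbers are hypothetical assumptions chosen for illustration.

```python
from typing import Callable, List, Tuple


def run_pdsa(
    plan: int,
    do: Callable[[int], int],
    study: Callable[[int], bool],
    act: Callable[[int, int], int],
    max_cycles: int = 10,
) -> List[Tuple[int, int]]:
    """Repeat plan-do-study-act until the studied result is acceptable.

    Returns the history of (plan, observed result) pairs, one per cycle.
    """
    history = []
    for _ in range(max_cycles):
        result = do(plan)             # do: carry out the current plan
        history.append((plan, result))
        if study(result):             # study: compare feedback with the goal
            break
        plan = act(plan, result)      # act: revise the plan for the next cycle
    return history


# Hypothetical scenario: reminder calls per client vs. no-show rate (percent).
def toy_do(calls: int) -> int:
    # Assumed effect: each reminder call lowers no-shows by 10 points.
    return max(0, 40 - 10 * calls)


history = run_pdsa(
    plan=0,
    do=toy_do,
    study=lambda rate: rate <= 20,       # assumed target: 20% or fewer no-shows
    act=lambda calls, rate: calls + 1,   # revision: add one reminder call
)
print(history)
```

The returned history keeps the measurement from every cycle, which mirrors the emphasis PDSA places on feedback rather than on any single change.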

Reflecting early recognition of the difficulty of improving SUD treatment services and the need for resources to facilitate the PDSA and other program change processes, Addiction Technology Transfer Centers (ATTC) were created by the Center for Substance Abuse Treatment (CSAT) in 1993 to conduct SUD treatment technology transfer (Brown & Flynn, 2002). A national office and 13 regional ATTCs were developed. Although the ATTCs initially focused on providing training for programs and practitioners, their focus was later broadened to include more technology transfer activities such as cultivating system change. As part of this broadened focus, the ATTC Change Book (Addiction Technology Transfer Centers, 2000, 2004) was developed to assist programs in technology transfer and present a more detailed approach to change that provides more procedural guidance to programs than does the general PDSA model. Seven change principles were described: (1) relevance of the technology to the organization; (2) timeliness of the technology in meeting current needs or those anticipated in the near future; (3) clarity of the transfer process; (4) credibility of the technologies to be transferred; (5) a multifaceted transfer process involving multiple targets of change; (6) continuous attention to the process and the new behaviors; and (7) a bi-directional process that elicits response from those being targeted for change.

With these principles in mind, The Change Book details 10 steps for effective technology transfer. The first seven involve substeps of the planning component in the PDSA cycle and include identifying the problem, organizing a change team, identifying an outcome, assessing the organization and the target audience, identifying the most appropriate approach, and designing action and maintenance plans. The next three steps directly correspond to the do-study-act components – implementing the action and maintenance plans (do), evaluating progress (study), and revising plans based on evaluation results (act).

ATTCs have also partnered with the National Institute on Drug Abuse (NIDA) Clinical Trials Network (CTN) and with the Substance Abuse and Mental Health Services Administration (SAMHSA) to develop a blending initiative to accelerate the dissemination of research-based drug abuse treatment findings into community-based practice. As described on the ATTC website (http://www.attcnetwork.org), the Blending Initiative combines three components – Regional Blending Conferences include researchers, clinical practitioners, and policy-makers to enhance bidirectional communication about scientific findings; State Agency Partnerships help identify strategies for adoption of evidence-based practices; and Blending Teams design tools or products to facilitate adoption of evidence-based interventions as soon as possible when research results are published. The teams are composed of members from ATTCs, NIDA, researchers, and community treatment providers participating in the CTN. Each of six active blending teams developed dissemination strategies and products designed to implement an evidence-based practice in the field. Products have included brochures, training materials (manuals, videos, CDs and DVDs, classroom trainings), PowerPoint presentations, frequently asked questions (FAQs), support materials, and annotated bibliographies of research articles. Although comprehensive, these blending products have not focused on the context and readiness of the organizations and programs that may attempt to implement the new practices.

The NIATx model (Network for the Improvement of Addiction Treatment; McCarty et al., 2007) uses business process improvement methods and was developed to help treatment providers reduce waiting times to start treatment, reduce no-shows for assessments, increase the number of admissions, and keep clients in treatment longer. Based on an examination of 640 organizations, five factors (from 80 studied) reportedly differentiate successful from unsuccessful organizations (Gustafson & Hundt, 1995) and the NIATx model developed five principles from them. The first focuses on understanding and involving the customer by conducting a walk-through in which staff follow the same steps a customer does in order to see how the process works from a customer’s perspective. A second principle is to fix key problems that keep the CEO awake at night, that is, to address problems that will make a difference. The third and fourth principles include choosing a powerful change leader and engaging others from both inside and outside the organization in the process. Rapid-cycle testing using a PDSA cycle is the last of the five principles in which changes are tested on a small scale, results examined, revisions made based on those results, and the cycle is repeated until satisfactory outcomes are achieved.

The NIATx approach also follows what is referred to as a Learning Collaborative Model designed to teach programs core strategies for initiating organizational change (Evans, Rieckmann, Fitzgerald, & Gustafson, 2007). It encompasses elements such as the NIATx website (www.NIATx.net), multi-day conferences known as learning sessions for members, interest circle teleconferences, a monthly electronic newsletter, weekly e-mail updates, and expert coaching. These coaches guide process improvement and work with agencies throughout the change process, relying on site visits, phone conferences, and e-mail.

A Strategic Approach to Innovation Planning and Implementation

While PDSA-guided strategies described above help identify conceptual stages and core components for successful innovation implementations, they offer few of the practical building blocks and tools needed by treatment providers and evaluators. More specifically, what can be said about how provider systems might go about deciding on innovations that fit their needs, goals, and resources? Indeed, there are many evidence-based practices to choose from, but none are appropriate for every clinical setting. Program mission, funding resources, geographic and cultural setting, and staff qualifications are some of the significant contextual considerations, along with specific treatment practices in play. The leadership and staff members should both be involved in the decision-making process, and they especially should have a common “blueprint” for how their flow of services is designed and practiced.

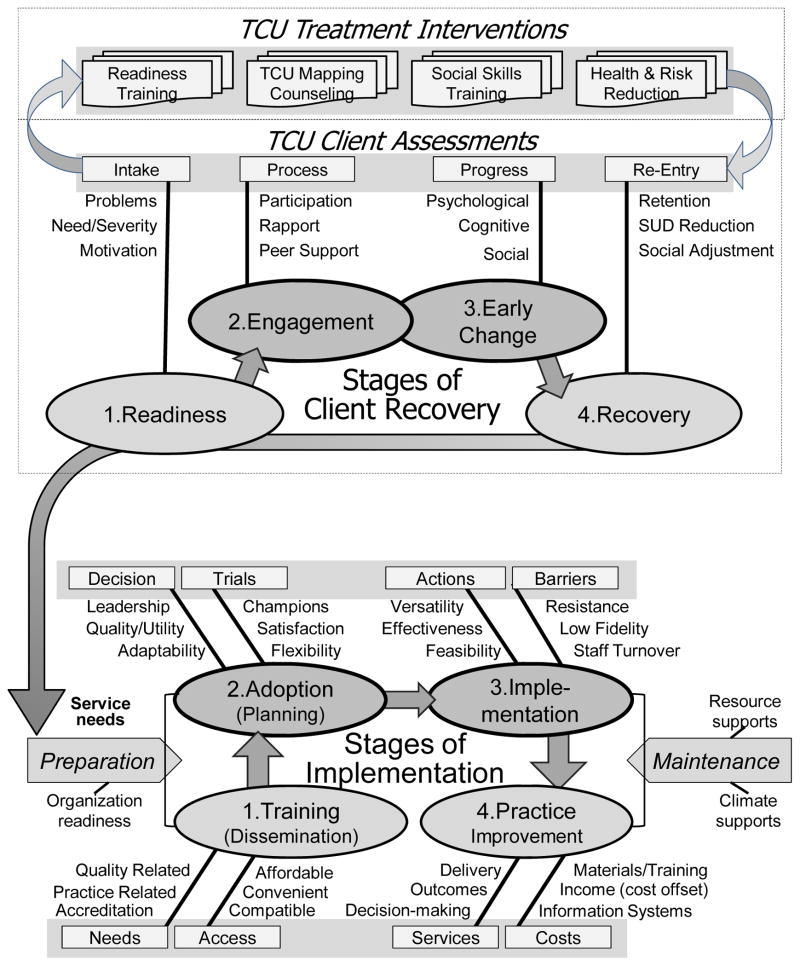

The TCU Treatment Process Model (Simpson, 2004) incorporates evidence on the sequential roles of client motivation and readiness, therapeutic engagement, and recovery changes into a common framework. Presented below as part of a prescribed 2-phase strategic approach to planning and implementing innovations (illustrated in Figure 1), this evidence-based therapeutic process model offers a foundation for reviewing critical elements of treatment preparatory to the planning for innovations. When armed with this understanding, treatment leaders and staff are better prepared collectively to identify new therapeutic interventions, counselor skills, client assessment tools, related data systems, or other operational tools that are needed. It is a prelude to the second phase, based on the TCU Program Change Model (Simpson, 2002; 2009; Simpson & Flynn, 2007a) for describing the stages of implementation and innovation maintenance over time.

Figure 1.

Integrated 2-phase TCU approach to strategic system change.

After presenting the bi-phasic conceptual framework and rationale for this innovation planning and implementation approach below, an array of empirical assessment tools to measure each of the client and program-level constructs included will be summarized along with examples of manualized interventions that are available. Finally, examples of their research applications and some key findings of completed research will be described.

Phase 1: Understanding stages of client recovery and related treatment tools

Understanding the process of program change is critical for effectively implementing new evidence-based practices to improve clinical service delivery. Understanding the process of clinical change is critical for effectively choosing the evidence-based practices that will contribute to better client performance in treatment. While the specific clinical model for service delivery may vary slightly from program to program, the elements that form the basis for any treatment process can be found in the TCU Treatment Process Model (Simpson, 2004). It serves as an evidence-based framework for describing stages of treatment and how they rely on a series of cognitive, behavioral, psychosocial, and skill-building interventions for establishing recovery-related skills. Client change increments generally tend to be sequential – admittedly with fine gradations and in directions that are not strictly linear. The sequential nature of these stages of treatment is supported by evidence (Simpson & Joe, 2004) showing that clients presenting to treatment with higher motivation are more likely to participate in treatment during the early months. Better participation during the early portion of treatment is then associated with greater rapport with counselors. Clients who report stronger therapeutic relationships with counselors show greater improvements in psychological functioning during treatment in the areas of self-esteem, depression, anxiety, social integration, and decision-making. Improved psychological functioning is then associated with favorable behavior changes (e.g., self-report and urinalysis measures of drug use).

The model also portrays how specialized interventions as well as health and social support services promote stages of recovery-oriented change. Cognitive strategies (especially those for increasing levels of treatment readiness among low-motivated clients) have proven useful for improving subsequent therapeutic relationships and retention. Assessment instruments that gauge client and program performance provide a foundation for systematic treatment monitoring and management strategies, and for tracking the evidence for using targeted interventions to improve treatment quality. Several publications are available that illustrate the impact of this work (Simpson & Flynn, 2007b; Simpson & Knight, 2007; Simpson et al., 2009).

Phase 2: Understanding stages of implementation and related preparation/maintenance challenges

Understanding the clinical process of client recovery and using an assessment system to measure and monitor client progress through the various stages help programs understand their clinical needs and thus the types of interventions that can improve clinical services and outcomes. As indicated in Figure 1, this understanding is a critical part of the preparation phase of identifying what new clinical techniques should be implemented. Transferring “evidence-based” techniques into practice, however, is a complicated task and, as indicated by the collection of papers included in the present special journal issue, is itself being given systematic scientific study. Organizational climate and readiness for change are especially important to consider, and the TCU Program Change Model (Flynn & Simpson, 2009; Simpson, 2002; Simpson & Flynn, 2007a) offers a descriptive framework and action plan to address sources of influence on this stage-based process. Once relevant new clinical practices are identified, the process of implementing them properly begins with consideration of program needs and resources, structural and functional characteristics, and general readiness to embrace innovations (Simpson, 2009). As elaborated below, guidelines for conducting agency self-evaluations and defining action strategies to make system-level changes are explained by Simpson and Dansereau (2007).

The TCU Program Change Model, shown as the second phase of the comprehensive model in Figure 1, describes organizational change as a dynamic, step-based process and has been developed for improving clinical practices. Where change approaches such as NIATx focus on business processes, the TCU Program Change Model addresses the dynamics for improving clinical processes by implementing new clinical practices in a sequential manner. Change begins with exposure to potential EBPs in workshop-based training sessions. The conceptualization of training as a first step in the change process is a unique contribution of this model. Training can occur prior to or following the identification of clinical needs, and represents an awareness of and intention to seek effective alternatives to how treatment is currently being delivered. By design, training also serves to increase individual participants’ knowledge and beliefs about the intervention and self-efficacy with regard to implementation, which are identified by CFIR as important individual characteristics. These participants tend to engage as change agents within their organization as they take new knowledge back to their organizations.

Following training, the next crucial step is adoption of the intervention (by the individual or groups of individuals within the organization), which involves decision-making and action. As recognized in the CFIR, leaders make decisions about using new tools or procedures based on intervention characteristics, such as evidence strength and quality and adaptability to the specific environment, as well as on the implementation climate, in particular the intervention’s compatibility with strategies, materials, and cultural values currently in place at the program. The implementation stage expands the adoption stage to regular use of the innovation and is dependent on factors including perceptions of effectiveness, feasibility, and sustainability given available resources. These factors are enhanced when the interventions have a close fit with clinical needs of the program as indicated by assessments of the stages of client recovery. At the organizational level, collective opinions about motivation, resources, staff attributes, and program climate play a role in long-range success, along with adequate financial support. Finally, innovations that pass these stages tend to become standard practice and presumably can result in service improvements.

The model shows that preparation for change (Simpson, 2009) is a critical feature for successful implementation and includes review of both facilities management/services and clinical model for service delivery (as shown by the top box on strategic planning for program change). A review of facilities management and services flow helps identify where innovative practices fit into the larger organizational structure to help assure that they are compatible with other organizational practices. A thorough review of the clinical model for care planning helps identify areas where improvements in clinical practices are needed. Surveys of staff needs and functioning provide diagnostic information regarding staff readiness and ability to accept the planned changes. Basically, programs need to “know” themselves well in order to successfully guide their organization toward survival and improvement. As new clinical procedures become more complex and comprehensive (e.g., involving multiple systems), the process of change becomes progressively more complicated and challenging – especially in settings where communication, cohesion, trust, and tolerance for change are lacking or must span across divisions or agencies. Organizational integrity, readiness, and resources all grow increasingly important. Planning and preparation for innovation implementation ought to identify and address organizational strengths and deficiencies before facing decisions about innovations (Flynn & Simpson, 2009). Also consistent with CFIR, it should be noted that “costs” have been included in the TCU Program Change Model to recognize the influence and importance of finances and resources on implementation of innovations.

TCU assessments of client treatment needs and progress

As discussed above, client-level assessments throughout the treatment process and aggregated at the program level provide a valuable source of data for assessing clinical needs. A comprehensive battery of client measures has been developed to assess client needs at intake and progress throughout treatment (Joe, Broome, Rowan-Szal, & Simpson, 2002; Simpson, 2004; Simpson & Knight, 2007). These include drug use and crime risk at intake (TCU Drug Screen II, Global Risk Assessment, Criminal History Risk Assessment, and Criminal Thinking Scales), client health and social risk (Physical and Mental Health Status, Mental Trauma and PTSD Screen, HIV/Hepatitis Risk Assessment, and Family and Friends Assessment), and client evaluation of self and treatment (CEST; Treatment Needs and Motivation, Psychological Functioning, Social Functioning, and Treatment Engagement). Client functioning domains included in the CEST assess client motivation and functioning at intake, and can also be used as indicators of client readiness for treatment. Repeated assessments of client functioning and engagement over time serve to monitor progress through the different stages of treatment. (All of these assessments are available free of charge from www.ibr.tcu.edu as one-page scannable forms for quick data entry and report generation.) This clinical database serves as a key element of an organizational development information system by aggregating client measures at the program level for use as indicators of program functioning. It can then be used to help target areas for new interventions and clinical improvements.
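As one way to picture this aggregation step, the following Python sketch averages client-level scale scores within each program. It is an illustration under stated assumptions rather than the TCU scoring software: the record layout, the program identifiers, the scale labels used as keys, and the score values are all hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List

# Hypothetical client-level records: one row per client scale score.
records = [
    {"program": "A", "scale": "motivation", "score": 30},
    {"program": "A", "scale": "motivation", "score": 40},
    {"program": "A", "scale": "engagement", "score": 35},
    {"program": "B", "scale": "motivation", "score": 20},
    {"program": "B", "scale": "engagement", "score": 45},
    {"program": "B", "scale": "engagement", "score": 25},
]


def program_profile(rows: List[dict]) -> Dict[str, Dict[str, float]]:
    """Average client scores by program and scale."""
    grouped = defaultdict(lambda: defaultdict(list))
    for row in rows:
        grouped[row["program"]][row["scale"]].append(row["score"])
    return {
        program: {scale: mean(scores) for scale, scores in scales.items()}
        for program, scales in grouped.items()
    }


profile = program_profile(records)
print(profile)
```

Comparing such program-level means across scales, or across repeated assessments, is one simple way a program might flag areas for new interventions.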

TCU treatment interventions

While a comprehensive review of evidence-based practices that are available to meet the clinical needs identified through assessment is beyond the scope of this paper, it should be noted that a comprehensive set of interventions has been developed that links to and addresses client progress throughout the various stages of treatment as outlined in the TCU Treatment Process Model. These manual-guided interventions address improvements in areas such as sequencing stages of treatment based on readiness and motivation, applying client assessment information to care planning and progress monitoring, behavioral techniques for improving treatment participation, therapeutic engagement strategies, emotional self-management, dealing with thinking errors (e.g., criminal), communication skills, developing healthy relationships, sexuality, parenting, HIV/AIDS awareness, and preparing for relapse risks. They can be effectively grouped into customized and stage-sensitive “clusters” relevant to the treatment process model (Roque & Lurigio, 2009). Furthermore, all interventions incorporate TCU Mapping-Enhanced Counseling, a graphical approach to organizing and communicating information within therapeutic settings.

TCU Mapping-Enhanced Counseling is an evidence-based graphic representation strategy used to visually enhance the counseling process, either between counselor and client(s) or as part of the presentation and implementation of TCU intervention manuals (Dansereau, Joe, & Simpson, 1993; Dees, Dansereau, & Simpson, 1994). It is included in SAMHSA’s National Registry of Evidence-based Programs and Practices (NREPP), and a conceptual overview of this approach is published in Professional Psychology: Research and Practice (Dansereau & Simpson, 2009). In brief, mapping is an effective strategy for increasing client motivation, engagement, participation, and retention in treatment by promoting more positive interactions with other clients and treatment staff. It facilitates communication, memory, and problem-solving during counseling sessions, and also helps document progress both within and across sessions (see Dansereau, 2005, for a review). Mapping approaches have been shown to help clients and counselors examine treatment-related issues (Dansereau & Dees, 2002; Newbern, Dansereau, Czuchry, & Simpson, 2005), and they have been incorporated into a series of effective modular interventions that cover specific counseling topics such as motivation and communication (Bartholomew, Hiller, Knight, Nucatola, & Simpson, 2000).

Further, studies of ethnically diverse adult clients and their counselors working collaboratively using mapping provide evidence for its efficacy when compared to typical counseling methods (see Dansereau & Dees, 2002; Czuchry & Dansereau, 2000; 2003). The approach has been particularly helpful for clients with less education (Pitre, Dansereau, & Joe, 1996), for African Americans and Mexican Americans (Dansereau, Joe, Dees, & Simpson, 1996), and for more difficult clients such as those with multiple drug use history and with attention problems (Czuchry, Dansereau, Dees, & Simpson, 1995; Dansereau, Joe, & Simpson, 1995; Joe, Dansereau, & Simpson, 1994), and it is particularly effective for group counseling (Dansereau, Dees, Greener, & Simpson, 1995; Knight, Dansereau, Joe, & Simpson, 1994).

TCU Assessments of organizational needs and functioning, innovation training, and adoption/implementation over time

Organizational assessments provide agencies with pertinent information about the health of their organization, identifying organizational barriers to implementation as well as specific clinical needs. A series of assessment instruments is available that measure organizational functioning (Organizational Readiness for Change [ORC]), structure (Survey of Structure and Operations [SSO]), costs (Treatment Cost Analysis Tool [TCAT]), and training evaluation (Workshop Evaluation [WEVAL]) and follow-up (Workshop Assessment and Follow-up [WAFU]). Corresponding feedback reports are recommended for participating agencies detailing strengths as well as areas in need of improvement.

Each assessment tool targets aspects of an organization that are critical in promoting change. Effective innovation implementation results depend on organizational infrastructure – the level of training, experience, and focus of staff – as indicated by ratings of program needs, clarity of mission, internal functioning, and professional attributes. Cost analysis provides critical data about available resources and potential implementation costs. Better staff knowledge about the rationale and delivery of services, along with up-to-date feedback about effectiveness of current services, provide a foundation for more educated and rational choices about potential innovations. With these elements in place, a comprehensive innovation implementation process can be more securely planned and carried out. Deploying practical innovations in an atmosphere of confidence and acceptance requires diagnostic tools such as these to identify staff perceptions that can affect each element of the innovation process. These assessments can help determine the higher-order organizational factors (measured by using aggregated staff ratings) that impact change.

Organizational Readiness for Change

The ORC survey (Greener, Joe, Simpson, Rowan-Szal, & Lehman, 2007; Lehman, Greener, & Simpson, 2002) includes 21 scales organized under four major domains: (1) program needs/pressures for change; (2) staff attributes; (3) institutional resources; and (4) organizational climate. Short descriptions of each scale including the domains are shown in Table 1. The first two domains of the ORC -- program needs/pressures for change and staff attributes -- are particularly relevant for assessing service needs and organizational readiness for implementation (preparation), and the third and fourth domains -- involving program resources and climate -- represent influences on maintenance of innovations.

Table 1.

Organizational Readiness for Change Domains and Scales

| Domain | Scale | Description |
|---|---|---|
| Program Needs/Pressures for Change | Treatment Staff Needs | Reflects valuations made by treatment staff about program needs with respect to improving client functioning. These revolve around client assessments, improving treatment services, and using innovations for change. |
| Program Needs/Pressures for Change | Program Needs | Reflects valuations made by staff about program strengths, weaknesses, and issues that need attention. These revolve around goals, performance, staff relations, and information systems. |
| Program Needs/Pressures for Change | Training Needs | Assesses perceptions of training in several technical and knowledge areas that may be needed by staff. |
| Program Needs/Pressures for Change | Pressure for Change | Reflects perceptions about pressures from internal (e.g., target constituency, staff, or leadership) or external (e.g., regulatory and funding) sources. |
| Staff Attributes | Growth | Reflects the extent to which staff members value and use opportunities for their own professional growth. |
| Staff Attributes | Efficacy | Measures staff confidence in their own professional skills and performance. |
| Staff Attributes | Influence | An index of staff interactions, sharing, and mutual support. |
| Staff Attributes | Adaptability | Refers to the ability of staff to adapt effectively to new ideas and change. |
| Staff Attributes | Satisfaction | Measures general satisfaction with one’s job and work environment. |
| Institutional Resources | Offices | Refers to the adequacy of office equipment and physical space available. |
| Institutional Resources | Staffing | Focuses on the overall adequacy of staff assigned to do the work. |
| Institutional Resources | Training Resources | Addresses emphasis and scheduling for staff training and education. |
| Institutional Resources | Equipment | Deals with adequacy and use of computerized systems and equipment. |
| Institutional Resources | Internet | Refers to staff access and use of e-mail and the internet for professional communications, networking, and obtaining work-related information. |
| Institutional Resources | Supervision | Reflects staff confidence in agency leaders and perceptions of co-involvement in the decision making process. |
| Organizational Climate | Mission | Captures staff awareness of agency mission and clarity of its goals. |
| Organizational Climate | Cohesion | Focuses on workgroup trust and cooperation. |
| Organizational Climate | Autonomy | Addresses the freedom and latitude staff members have in doing their jobs. |
| Organizational Climate | Communication | Focuses on the adequacy of information networks to keep everyone informed and having bi-directional interactions with leadership. |
| Organizational Climate | Stress | Measures perceived strain, stress, and role overload. |
| Organizational Climate | Change | Represents staff attitudes about agency openness and efforts in keeping up with changes that are needed. |

To date, the ORC has been administered to over 5,000 substance abuse treatment staff in more than 650 organizations, representing a variety of substance abuse, social, medical, and mental health settings in both community and correctional settings in the U.S., along with work in England and Italy. ORC scores have been shown to be associated with higher ratings of and satisfaction with training, greater openness to innovations (Fuller et al., 2007; Saldana, Chapman, Henggeler, & Rowland, 2007), greater utilization of innovations following training (Simpson & Flynn, 2007a), and better client functioning (Broome, Flynn, Knight, & Simpson, 2007; Greener et al., 2007; Lehman et al., 2002). Staff more likely to value growth and change, and programs with a more positive and supportive climate (e.g., a clearer mission, higher cohesion, autonomy, and communication, and lower stress), were more likely to report higher utilization of training, although these factors were not strongly related to exposure to training (e.g., more staff exposed to more training opportunities). Programs with more program resources available offered more training opportunities (Lehman, Knight, Joe, & Flynn, 2009).
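As an illustration of how aggregated staff ratings can yield program-level indicators, the following minimal sketch averages individual staff scale scores within each program and reports their spread as a rough index of staff consensus. The record layout, the program and staff data, and the convention of rescaling the item mean by 10 are assumptions made for this example; it is not the official ORC scoring procedure.

```python
from collections import defaultdict
from statistics import mean, pstdev


def scale_score(item_responses):
    """Score one staff member on one scale.

    Assumption for this sketch: items are 1-5 agreement ratings and the
    scale score is the item mean multiplied by 10.
    """
    return 10 * mean(item_responses)


def program_profile(staff_records):
    """Aggregate staff scale scores to the program level.

    staff_records: iterable of (program_id, scale_name, item_responses).
    Returns {(program_id, scale_name): {"mean", "sd", "n"}}, where a smaller
    "sd" suggests greater staff consensus on that scale.
    """
    grouped = defaultdict(list)
    for program_id, scale_name, items in staff_records:
        grouped[(program_id, scale_name)].append(scale_score(items))
    return {
        key: {"mean": mean(scores), "sd": pstdev(scores), "n": len(scores)}
        for key, scores in grouped.items()
    }


# Hypothetical responses: each tuple is one staff member's items for a scale.
records = [
    ("program_A", "Mission", [4, 5, 4]),
    ("program_A", "Mission", [3, 4, 3]),
    ("program_A", "Stress", [2, 2, 3]),
    ("program_B", "Mission", [5, 5, 5]),
]
profile = program_profile(records)
for (program, scale), stats in sorted(profile.items()):
    print(program, scale, round(stats["mean"], 1), round(stats["sd"], 1), stats["n"])
```

A feedback report could then compare each program's scale means with those of other programs, flagging scales on which a program scores low or on which staff ratings vary widely.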

Survey of Structure and Operations (SSO)

The SSO is completed by a program director or other administrator, and serves as a source of structural information about participating programs. Major topics include general program characteristics, organizational relationships, clinical assessments and practices, services provided, staff and client characteristics, and recent changes that may affect organizational operations. The questionnaire elicits information generally compatible with the National Survey of Substance Abuse Treatment Services (N-SSATS). This instrument is more descriptive than diagnostic and can provide information that adds context to the other results.

Treatment Cost Analysis Tool (TCAT)

The TCAT (Flynn et al., 2009) is a self-administered Microsoft Excel®-based workbook designed for Program Financial Officers and Directors, or other staff with sufficient information about program finances and operations. It collects, allocates, and analyzes accounting and economic costs of treatment in community-based programs (e.g., cost per counseling hour, group counseling hour, enrolled day, episode of treatment). The TCAT is designed to also be used as a planning and management tool to forecast effects of future changes in staffing, client flow, program design, and other resources. Worksheets include summaries and charts with comparative data from a national sample of non-methadone outpatient programs. Once full costs are available, estimates can be generated for changes in programming. Recognizing the importance of also providing cost estimates for implementing and using evidence-based practices, the TCAT is currently being adapted for treatment intervention developers and program personnel so that they can put a price tag on new interventions.
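The unit costs named above reduce, at their simplest, to dividing total program cost by a service volume. The sketch below shows that arithmetic and a simple "what if" forecast; the function name, the dollar amounts, and the volumes are hypothetical, and the actual TCAT workbook allocates costs across categories in far more detail than this.

```python
def unit_costs(total_cost, counseling_hours, enrolled_days, episodes):
    """Return simple unit costs for a program over one accounting period.

    All inputs are hypothetical totals for the same period; each unit cost
    is total cost divided by the corresponding service volume.
    """
    if min(counseling_hours, enrolled_days, episodes) <= 0:
        raise ValueError("service volumes must be positive")
    return {
        "per_counseling_hour": total_cost / counseling_hours,
        "per_enrolled_day": total_cost / enrolled_days,
        "per_episode": total_cost / episodes,
    }


# Hypothetical baseline year.
baseline = unit_costs(
    total_cost=500_000, counseling_hours=10_000, enrolled_days=50_000, episodes=250
)

# Hypothetical forecast: one added counselor costing 40,000 who delivers
# 1,000 additional counseling hours, with other volumes unchanged.
forecast = unit_costs(
    total_cost=540_000, counseling_hours=11_000, enrolled_days=50_000, episodes=250
)

print(baseline["per_counseling_hour"], round(forecast["per_counseling_hour"], 2))
```

Comparing the baseline and forecast figures in this way is the kind of planning question the tool is described as supporting, such as whether a staffing change raises or lowers cost per counseling hour.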

Workshop Evaluation (WEVAL) and Workshop Assessment and Follow-up (WAFU)

Two additional instruments were developed to provide training evaluation immediately following training and after a follow-up period. The Workshop Evaluation (WEVAL) form was designed to be administered directly following training and assesses relevance of the training for the participants, training engagement in terms of participant interest in obtaining further training, and program support, reflecting program resources available to participants to implement the training. The Workshop Assessment and Follow-up (WAFU) was designed to assess post-training evaluation, typically about 6 months after training, in terms of satisfaction and interest in further training, utilization of training materials, and reasons why materials may not have been used (e.g., resource and procedural barriers). Relevance of training materials, the desire for more training, and program support have been shown to be related to post-training use of materials (Bartholomew, Joe, Rowan-Szal, & Simpson, 2007). Common barriers to utilization have included lack of time and redundancy with existing materials. These results point to the importance of program readiness and a clear understanding of program clinical needs prior to training in order to make the most effective use of scarce training resources.

TCU organizational intervention

It is widely recognized that implementing evidence-based practices such as those outlined above is most successful in programs where staff and organizational practices promote and/or embrace change. And increasingly, organizational assessments are being used to determine an organization’s readiness to change. However, a major barrier exists in that many organizations do not have the tools or expertise necessary to know how to utilize organizational assessment results to address their strengths and weaknesses and thereby increase their readiness for change. Providing tools for programs to identify problem areas and guide them through the process of defining solutions and solving problems will help remove some of the barriers organizations have in adopting new technologies.

A study reported by Courtney, Joe, Rowan-Szal, and Simpson (2007) examined organizational characteristics of treatment programs that participated in an assessment and training workshop designed to improve organizational functioning. Directors and clinical supervisors from 53 community programs attended a two-day workshop entitled “TCU Model Training – Making it Real.” Workshop participants worked with their own assessment information from previously administered ORC surveys to develop treatment quality improvement plans for their organizations using the ten steps outlined in The Change Book as a guide. Programs with higher needs and pressures, fewer institutional resources, lower ratings on staff attributes and organizational climate, and greater staff consensus on organizational climate were more likely to engage in continued change. The implication of these results is that targeted feedback on inadequate organizational functioning can motivate programs to make changes for improvement, particularly when tools for making those changes are available.

Applying cognitive mapping to the transfer of evidence-based practices

In order to meet the need for available tools to assist programs in planning and implementing change, the “Mapping Organizational Change” (MOC) manual was developed utilizing cognitive mapping strategies as the underlying foundation for planning and decision-making. The MOC was designed to assist organizations in selecting, planning, and executing change in general organizational functioning (or organizational preparedness) or in clinical services, and it serves as a catalyst for change by structuring and encouraging input and buy-in among team members. Using a visual-spatial representation strategy, a series of prescribed exercises guides participants through seven steps in the change process: (1) identifying strengths and problems; (2) analyzing problems by exploring causes, consequences, and solutions; (3) selecting potential goals; (4) exploring potential consequences of change; (5) targeting and prioritizing sub-goals; (6) creating action plans; and (7) monitoring progress toward goals. Guide maps corresponding to each step provide a springboard for group discussion and structure while facilitating collaborative problem solving.

By design, MOC steps are compatible with other strategies and formative evaluation approaches developed within private industry and applied to behavioral health organizations. Mapping Organizational Change describes a logical sequence of steps similar to that presented in The Change Book (Addiction Technology Transfer Centers, 2004). In order to improve communication and memory during change, the “mapping” approach used here focuses on providing a concrete, easy-to-use set of activities to keep change visible and on track. Consequently, the two books are complementary, one providing details on implementing specific steps and the other a method for keeping the process systematic.

Together, a set of integrated assessment and intervention tools provides programs with a systems approach to addressing barriers associated with implementing change. The strength of the approach lies in its ability to inform decisions using real data, its flexibility in the types of problems to which it can be applied, and its utility as a method for structuring decision-making, encouraging cooperation, and facilitating accountability. Over the past decade, supervisors and field managers with access to the TCU assessment tools have used them successfully to obtain a snapshot of organizational health with little or no training or incentives. A minority have developed action plans targeting areas in which their organizations perform subpar, and others have applied the MOC tool in their problem-solving efforts. Managers indicate that these tools have significant potential and are helpful in facilitating communication, guiding thinking around brainstorming and problem-solving, and providing a structured, systematic approach to documentation and later evaluation of proposed plans.

Summary of comprehensive implementation research findings

The integration of a longitudinal model that incorporates many of the clinical and organizational assessment tools with a training program that utilizes organizational feedback information was demonstrated in a comprehensive set of analyses linking various elements of the program change and client process models reported by Simpson, Joe, and Rowan-Szal (2007). A two-year longitudinal study of treatment programs focused on relationships between staff ratings of innovation needs, training adoption, and implementation across time. A sample of 59 substance abuse treatment programs completed a program training needs survey and the ORC, and administered up to 100 CEST forms to clients (to assess client treatment progress), from 4 to 12 months prior to attending a training conference. The conference was based on the program training needs survey administered up to one year earlier and focused on improving therapeutic alliance. A workshop-specific WEVAL was completed by participants at the close of the workshop, and WAFUs were mailed to participants six months later to assess innovation adoption. Program training needs and ORC domains were re-assessed about one year following the training.

Analyses based on these data examined several important linkages in the program change model. Results showed that staff attitudes about their training needs and their past experiences with training were positively related to their subsequent ratings of training quality and also to later progress in adopting innovations. A positive organizational climate (e.g., clarity of mission, cohesion, openness to change) was also related to later innovation adoption. Importantly, self-ratings by samples of clients on their own engagement in treatment were positively related to greater adoption by counselors of innovations presented at the training workshops. Higher post-training satisfaction, trial adoption use, and better development of counseling skills were associated with higher scores on program resources and climate and lower levels of training barriers measured about one year after the training workshops. These results demonstrate support for many of the linkages between the elements of the model shown in Figure 1 and indicate the importance of readily available assessment tools that integrate with the model and allow programs to monitor needs, barriers, and progress as they continually attempt to improve clinical services and client outcomes.

Concluding Comments

At the National Institutes of Health (NIH), the general process of developing and converting new basic science findings into practical applications is referred to as “translational research.” As discussed by Kerner and Hall (2009), however, emphasis is growing at NIH on using implementation research to study the effective adoption and uptake of new evidence-based practices in specific settings. Neither the traditional model of comparatively passive communication about innovations known as diffusion (Rogers, 2003) nor even the more contemporary and active communication model referred to as dissemination sufficiently addresses the nuances of implementing complex interventions in clinical settings while considering their unique social, organizational, and environmental contexts. Of particular importance are clinical context and organizational factors that emerge across distinctive information processing and action stages that must be considered when promoting sustainable practice improvements (Flynn & Simpson, 2009; Simpson, 2009).

Kerner and Hall (2009) also stress the need “to develop and validate a coherent theoretical framework of practitioner and organizational behavior change to inform better selection of intervention in research and service settings” (p. 526). Barriers to effective implementation likewise must be addressed, as well as other factors that can modify the process. In accord with these recommendations, and consistent with many of the observations about core components and implementation guidelines by several researchers as reviewed earlier in this paper (e.g., Aarons, 2006; Damschroder et al., 2009; Fixsen et al., 2005; Flynn & Simpson, 2009; Simpson & Flynn, 2007a), a comprehensive conceptual and evaluation framework was presented in this paper. The model takes into account common barriers and moderating factors represented by practitioner traits, training protocols, procedural evaluations, administrative resources and support, and provider system attributes related to planning the implementation of new interventions. It offers the practical benefits of having a battery of assessments and interventions that target the concepts put forward, and research has been published to support applications in service provider settings. Hopefully, this combination of conceptual and procedural guidelines (along with the tools needed) for identifying and moving innovations into practice can help resolve some of the past problems (Brown & Flynn, 2002; Institute of Medicine, 2005) in the uptake of evidence-based practices.

Acknowledgments

The National Institute on Drug Abuse (Grant No. R01 DA013093, Grant No. R01 DA025885, and Grant No. U01 DA016190) funded the work, but interpretations and conclusions do not necessarily represent the position of NIDA or the U.S. Department of Health and Human Services.

References

- Aarons GA. Transformational and transactional leadership: Association with attitudes toward evidence-based practice. Psychiatric Services. 2006;57(8):1162–1168. doi: 10.1176/appi.ps.57.8.1162.

- Addiction Technology Transfer Centers. The change book: A blueprint for technology transfer. Rockville, MD: Center for Substance Abuse Treatment; 2000.

- Addiction Technology Transfer Centers. The Change Book: A blueprint for technology transfer. Kansas City, MO: ATTC National Office; 2004.

- Bartholomew NG, Hiller ML, Knight K, Nucatola DC, Simpson DD. Effectiveness of communication and relationship skills training for men in substance abuse treatment. Journal of Substance Abuse Treatment. 2000;18(3):217–225. doi: 10.1016/s0740-5472(99)00051-3.

- Bartholomew NG, Joe GW, Rowan-Szal GA, Simpson DD. Counselor assessments of training and adoption barriers. Journal of Substance Abuse Treatment. 2007;33(2):193–200. doi: 10.1016/j.jsat.2007.01.005.

- Broome KM, Flynn PM, Knight DK, Simpson DD. Program structure, staff perceptions, and client engagement in treatment. Journal of Substance Abuse Treatment. 2007;33(2):149–158. doi: 10.1016/j.jsat.2006.12.030.

- Brown BS, Flynn PM. The federal role in drug abuse technology transfer: A history and perspective. Journal of Substance Abuse Treatment. 2002;22(4):245–257. doi: 10.1016/s0740-5472(02)00228-3.

- Czuchry M, Dansereau DF. Drug abuse treatment in criminal justice settings: Enhancing community engagement and helpfulness. American Journal of Drug and Alcohol Abuse. 2000;26(4):537–552. doi: 10.1081/ada-100101894.

- Czuchry M, Dansereau DF. A model of the effects of node-link mapping on drug abuse counseling. Addictive Behaviors. 2003;28(3):537–549. doi: 10.1016/s0306-4603(01)00252-0.

- Czuchry M, Dansereau DF, Dees SM, Simpson DD. The use of node-link mapping in drug abuse counseling: The role of attentional factors. Journal of Psychoactive Drugs. 1995;27(2):161–166. doi: 10.1080/02791072.1995.10471685.

- Courtney KO, Joe GW, Rowan-Szal GA, Simpson DD. Using organizational assessment as a tool for program change. Journal of Substance Abuse Treatment. 2007;33(2):131–137. doi: 10.1016/j.jsat.2006.12.024.

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- Dansereau DF. Node-link mapping principles for visualizing knowledge and information. In: Tergan SO, Keller T, editors. Knowledge and information visualization: Searching for synergies (Lecture Notes in Computer Science 3426). Heidelberg: Springer Verlag; 2005. pp. 61–81.

- Dansereau DF, Dees SM. Mapping training: The transfer of a cognitive technology for improving counseling. Journal of Substance Abuse Treatment. 2002;22(4):219–230. doi: 10.1016/s0740-5472(02)00235-0.

- Dansereau DF, Dees SM, Greener JM, Simpson DD. Node-link mapping and the evaluation of drug abuse counseling sessions. Psychology of Addictive Behaviors. 1995;9(3):195–203.

- Dansereau DF, Joe GW, Dees SM, Simpson DD. Ethnicity and the effects of mapping-enhanced drug abuse counseling. Addictive Behaviors. 1996;21(3):363–376. doi: 10.1016/0306-4603(95)00067-4.

- Dansereau DF, Joe GW, Simpson DD. Node-link mapping: A visual representation strategy for enhancing drug abuse counseling. Journal of Counseling Psychology. 1993;40(4):385–395.

- Dansereau DF, Joe GW, Simpson DD. Attentional difficulties and the effectiveness of a visual representation strategy for counseling drug-addicted clients. International Journal of the Addictions. 1995;30(4):371–386. doi: 10.3109/10826089509048732.

- Dansereau DF, Simpson DD. A picture is worth a thousand words: The case for graphic representations. Professional Psychology: Research and Practice. 2009;40(1):104–110.

- Dees SM, Dansereau DF, Simpson DD. A visual representation system for drug abuse counselors. Journal of Substance Abuse Treatment. 1994;11(6):517–523. doi: 10.1016/0740-5472(94)90003-5.

- Deming WE. Out of the crisis. Cambridge, MA: MIT Center for Advanced Engineering Study; 1982.

- Evans AC, Rieckmann T, Fitzgerald MM, Gustafson DH. Teaching the NIATx model of process improvement as an evidence-based process. Journal of Teaching in the Addictions. 2007;6(2):21–37.

- Fixsen DL, Naoom SF, Blasé KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network; 2005. (FMHI Publication #231)

- Flynn PM, Broome KM, Beaston-Blaakman A, Knight DK, Horgan CM, Shepard DS. Treatment Cost Analysis Tool (TCAT) for estimating costs of outpatient treatment services. Drug and Alcohol Dependence. 2009;100:47–53. doi: 10.1016/j.drugalcdep.2008.08.015.

- Flynn PM, Simpson DD. Adoption and implementation of evidence-based treatment. In: Miller PM, editor. Evidence-based addiction treatment. San Diego, CA: Elsevier; 2009. pp. 419–437.

- Fuller BE, Rieckmann T, Nunes EV, Miller M, Arfken C, Edmundson E, McCarty D. Organizational Readiness for Change and opinions toward treatment innovations. Journal of Substance Abuse Treatment. 2007;33(2):183–192. doi: 10.1016/j.jsat.2006.12.026.

- Greener JM, Joe GW, Simpson DD, Rowan-Szal GA, Lehman WEK. Influence of organizational functioning on client engagement in treatment. Journal of Substance Abuse Treatment. 2007;33:139–148. doi: 10.1016/j.jsat.2006.12.025.

- Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: Systematic review and recommendations. The Milbank Quarterly. 2004;82(4):581–629. doi: 10.1111/j.0887-378X.2004.00325.x.

- Grol RP, Bosch MC, Hulscher ME, Eccles MP, Wensing M. Planning and studying improvement in patient care: The use of theoretical perspectives. The Milbank Quarterly. 2007;85(1):93–138. doi: 10.1111/j.1468-0009.2007.00478.x.

- Gustafson DH, Hundt AS. Findings of innovation research applies to quality management. Health Care Management Review. 1995;20:16–24.

- Institute of Medicine. Improving the quality of health care for mental and substance abuse conditions: Quality Chasm Series. Washington, DC: The National Academy Press; 2005.

- Joe GW, Broome KM, Rowan-Szal GA, Simpson DD. Measuring patient attributes and engagement in treatment. Journal of Substance Abuse Treatment. 2002;22:183–196. doi: 10.1016/s0740-5472(02)00232-5.

- Joe GW, Dansereau DF, Simpson DD. Node-link mapping for counseling cocaine users in methadone treatment. Journal of Substance Abuse. 1994;6(4):393–406. doi: 10.1016/s0899-3289(94)90320-4.

- Kerner JF, Hall KL. Research dissemination and diffusion: Translation within science and society. Research on Social Work Practice. 2009;19:519–530.

- Klein KJ, Sorra JS. The challenge of innovation implementation. Academy of Management Review. 1996;21(4):1055–1080.

- Knight DK, Dansereau DF, Joe GW, Simpson DD. The role of node-link mapping in individual and group counseling. American Journal of Drug and Alcohol Abuse. 1994;20(4):517–527. doi: 10.3109/00952999409109187.

- Lehman WEK, Greener JM, Simpson DD. Assessing organizational readiness for change. Journal of Substance Abuse Treatment. 2002;22(4):197–209. doi: 10.1016/s0740-5472(02)00233-7.

- Lehman WEK, Knight DK, Joe GW, Flynn PM. Program resources and utilization of training in outpatient substance abuse treatment programs. Poster presentation at the Addiction Health Services Research annual meeting; San Francisco, CA. October 2009.

- McCarty D, Gustafson DH, Wisdom JP, Ford J, Choi D, Molfenter T, Cotter F. The Network for the Improvement of Addiction Treatment (NIATx): Enhancing access and retention. Drug and Alcohol Dependence. 2007;88:138–145. doi: 10.1016/j.drugalcdep.2006.10.009.

- Newbern D, Dansereau DF, Czuchry M, Simpson DD. Node-link mapping in individual counseling: Treatment impact on clients with ADHD-related behaviors. Journal of Psychoactive Drugs. 2005;37(1):93–103. doi: 10.1080/02791072.2005.10399752.

- Pitre U, Dansereau DF, Joe GW. Client education levels and the effectiveness of node-link maps. Journal of Addictive Diseases. 1996;15(3):27–44. doi: 10.1300/J069v15n03_02.

- Rogers EM. Diffusion of innovations. 5th ed. New York: The Free Press; 2003.

- Roman PM, Johnson JA. Adoption and implementation of new technologies in substance abuse treatment. Journal of Substance Abuse Treatment. 2002;22(4):211–218. doi: 10.1016/s0740-5472(02)00241-6.

- Roque L, Lurigio AJ. An outcome evaluation of a treatment readiness group program for probationers with substance use problems. Journal of Offender Rehabilitation. 2009;48:744–757.

- Saldana L, Chapman JE, Henggeler SW, Rowland MD. Organizational readiness for change in adolescent programs: Criterion Validity. Journal of Substance Abuse Treatment. 2007;33(2):159–169. doi: 10.1016/j.jsat.2006.12.029.

- Shewhart WA. Statistical method from the viewpoint of quality control. Lancaster, PA: Lancaster Press; 1939.

- Simpson DD. A conceptual framework for transferring research to practice. Journal of Substance Abuse Treatment. 2002;22:171–182. doi: 10.1016/s0740-5472(02)00231-3.

- Simpson DD. A conceptual framework for drug treatment process and outcomes. Journal of Substance Abuse Treatment. 2004;27:99–121. doi: 10.1016/j.jsat.2004.06.001.

- Simpson DD. Organizational readiness for Stage-based dynamics of innovation implementation. Research on Social Work Practice. 2009;19:541–551.

- Simpson DD, Dansereau DF. Assessing organizational functioning as a step toward innovation. Science & Practice Perspectives. 2007;3(2):20–28. doi: 10.1151/spp073220.

- Simpson DD, Flynn PM. Moving innovations into treatment: A stage-based approach to program change. Journal of Substance Abuse Treatment. 2007a;33(2):111–120. doi: 10.1016/j.jsat.2006.12.023.

- Simpson DD, Flynn PM, editors. Organizational Readiness for Change. Special Section. Journal of Substance Abuse Treatment. 2007b;33(2):111–209.

- Simpson DD, Joe GW. A longitudinal evaluation of treatment engagement and recovery stages. Journal of Substance Abuse Treatment. 2004;27:89–97. doi: 10.1016/j.jsat.2004.03.001.

- Simpson DD, Joe GW, Rowan-Szal GA. Linking the elements of change: Program and client responses to innovation. Journal of Substance Abuse Treatment. 2007;33(2):201–209. doi: 10.1016/j.jsat.2006.12.022.

- Simpson DD, Knight K. Offender needs and functioning assessments from a national program. Criminal Justice and Behavior. 2007;34(9):1105–1112.

- Simpson DD, Rowan-Szal GA, Joe GW, Best D, Day E, Campbell A. Relating counselor attributes to client engagement in England. Journal of Substance Abuse Treatment. 2009;36(3):313–320. doi: 10.1016/j.jsat.2008.07.003.

- Wisdom JP, Ford JH, Hayes RA, Edmundson E, Hoffman K, McCarty D. Addiction treatment agencies’ use of data: A qualitative assessment. Journal of Behavioral Health Services & Research. 2006;33:394–407. doi: 10.1007/s11414-006-9039-x.