Abstract

Lattices abound in nature, from the crystal structure of minerals to the honeycomb organization of ommatidia in the compound eye of insects. These arrangements provide solutions for optimal packings, efficient resource distribution, and cryptographic protocols. Do lattices also play a role in how the brain represents information? We focus on higher-dimensional stimulus domains, with particular emphasis on neural representations of physical space, and derive which neuronal lattice codes maximize spatial resolution. For mammals navigating on a surface, we show that the hexagonal activity patterns of grid cells are optimal. For species that move freely in three dimensions, a face-centered cubic lattice is best. This prediction could be tested experimentally in flying bats, arboreal monkeys, or marine mammals. More generally, our theory suggests that the brain encodes higher-dimensional sensory or cognitive variables with populations of grid-cell-like neurons whose activity patterns exhibit lattice structures at multiple, nested scales.

DOI: http://dx.doi.org/10.7554/eLife.05979.001

Research organism: human, mouse, rat, other

eLife digest

The brain of a mammal has to store vast amounts of information. The ability of animals to navigate through their environment, for example, depends on a map of the space around them being encoded in the electrical activity of a finite number of neurons. In 2014 the Nobel Prize in Physiology or Medicine was awarded to neuroscientists who had provided insights into this process. Two of the winners had shown that, in experiments on rats, the neurons in a specific region of the brain ‘fired’ whenever the rat was at any one of a number of points in space. When these points were plotted in two dimensions, they made a grid of interlocking hexagons, thereby providing the rat with a map of its environment.

However, many animals, such as bats and monkeys, navigate in three dimensions rather than two, and it is not clear whether these same hexagonal patterns are also used to represent three-dimensional space. Mathis et al. have now used mathematical analysis to search for the most efficient way for the brain to represent a three-dimensional region of space. This work suggests that the neurons need to fire at points that roughly correspond to the positions that individual oranges take up when they are stacked as tightly as possible in a pile. Physicists call this arrangement a face-centered cubic lattice.

At least one group of experimental neuroscientists is currently making measurements on the firing of neurons in freely flying bats, so it should soon be possible to compare the predictions of Mathis et al. with data from experiments.

Introduction

In mammals, the neural representation of space rests on at least two classes of neurons. ‘Place cells’ discharge when an animal is near one particular location in its environment (O'Keefe and Dostrovsky, 1971). ‘Grid cells’ are active at multiple locations that span an imaginary hexagonal lattice covering the environment (Hafting et al., 2005) and have been found in rats, mice, crawling bats, and human beings (Hafting et al., 2005; Fyhn et al., 2008; Yartsev et al., 2011; Jacobs et al., 2013). These cells are believed to build a metric for space.

In these experiments, locomotion occurs on a horizontal plane. Theoretical and numerical studies suggest that the hexagonal lattice structure is best suited for representing such a two-dimensional (2D) space (Guanella and Verschure, 2007; Mathis, 2012; Wei et al., 2013). In general, however, animals move in three dimensions (3D); this is particularly true for birds, tree dwellers, and fish. Their neuronal representation of 3D space may consist of a mosaic of lower-dimensional patches (Jeffery et al., 2013), as evidenced by recordings from climbing rats (Hayman et al., 2011). Place cells in flying bats, on the other hand, represent 3D space in a uniform and nearly isotropic manner (Yartsev and Ulanovsky, 2013).

As mammalian grid cells might represent space differently in 3D than in 2D, we study grid-cell representations in arbitrarily high-dimensional spaces and measure the accuracy of such representations in a population of neurons with periodic tuning curves. We measure the accuracy by the Fisher information (FI). Even though the firing fields between cells overlap, so as to ensure uniform coverage of space, we show how resolving the population's FI can be mapped onto the problem of packing non-overlapping spheres, which also plays an important role in other coding problems and cryptography (Shannon, 1948; Conway and Sloane, 1992; Gray and Neuhoff, 1998). The optimal lattices are thus the ones with the highest packing ratio—the densest lattices represent space most accurately. This remarkably simple and straightforward answer implies that hexagonal lattices are optimal for representing 2D space. In 3D, our theory makes the experimentally testable prediction that grid cells will have firing fields positioned on a face-centered-cubic lattice or its equally dense non-lattice variant—a hexagonal close packing structure.

Unimodal tuning curves with a single preferred stimulus, which are characteristic for place cells or orientation-selective neurons in visual cortex, have been extensively studied (Paradiso, 1988; Seung and Sompolinsky, 1993; Pouget et al., 1999; Zhang and Sejnowski, 1999; Bethge et al., 2002; Eurich and Wilke, 2000; Brown and Bäcker, 2006). This is also true for multimodal tuning curves that are periodic along orthogonal stimulus axes and generate repeating hypercubic (or hyper-rectangular) activation patterns (Montemurro and Panzeri, 2006; Fiete et al., 2008; Mathis et al., 2012). Our results extend these studies by taking more general stimulus symmetries into account and lead us to hypothesize that optimal lattices not only underlie the neural representation of physical space, but will also be found in the representation of other high-dimensional sensory or cognitive spaces.

Model

Population coding model for space

We consider the D-dimensional space $\mathbb{R}^D$ in which spatial location is denoted by coordinates $x = (x_1, \ldots, x_D) \in \mathbb{R}^D$. The animal's position in this space is encoded by N neurons. The dependence of the mean firing rate of each neuron i on x is called the neuron's tuning curve and will be denoted by Ωi(x). To account for the trial-to-trial variability in neuronal firing, spikes are generated stochastically according to a probability $P_i(k_i \mid \tau\,\Omega_i(x))$ for neuron i to fire ki spikes within a fixed time window τ. While two neurons can have correlated tuning curves Ωi(x), we assume that the trial-to-trial variability of any two neurons is independent of each other. Thus, the conditional probability of the N statistically independent neurons to fire (k1,…,kN) spikes at position x summarizes the encoding model:

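$$P\big((k_1, \ldots, k_N) \mid x\big) = \prod_{i=1}^{N} P_i\big(k_i \mid \tau\,\Omega_i(x)\big). \tag{1}$$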
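As a concrete instance of Equation 1, the following minimal sketch assumes Poisson spike counts. This is only one possible choice for the per-neuron distribution; the model above leaves it general, and Poisson statistics are used later for the numerical examples. The function names `poisson_pmf` and `population_likelihood` are hypothetical and serve illustration only.

```python
import math


def poisson_pmf(k, mean):
    """Probability of observing k spikes for a Poisson neuron with the given mean count."""
    return math.exp(-mean) * mean ** k / math.factorial(k)


def population_likelihood(counts, rates, tau=1.0):
    """Probability of the spike-count vector (k_1..k_N) at one position x.

    counts: spike counts k_i of the N neurons
    rates:  tuning-curve values Omega_i(x) at the position of interest
    tau:    length of the counting window
    Independence across neurons turns the joint probability into a product.
    """
    likelihood = 1.0
    for k, rate in zip(counts, rates):
        likelihood *= poisson_pmf(k, tau * rate)
    return likelihood
```

A decoder would evaluate `population_likelihood` for candidate positions x, each with its own list of tuning-curve values, and compare the results.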

Decoding relies on inverting this conditional probability by asking: given a spike count vector K = (k1,…,kN), where is the animal? Such a position estimate will be written as $\hat{x}(K)$. How precisely the decoding can be done is assessed by calculating the mean square error of the decoder. The average squared distance between the real position of the animal x and the estimate $\hat{x}(K)$ is

$$\varepsilon(x \mid \hat{x}) = \mathrm{E}_{P(K \mid x)}\left[\lVert x - \hat{x}(K) \rVert^2\right], \tag{2}$$

given the population coding model $P(K \mid x)$. This error is called the resolution (Seung and Sompolinsky, 1993; Lehmann, 1998), whereby the term $\lVert x - \hat{x}(K) \rVert^2$ denotes the squared Euclidean distance, $\sum_{\alpha=1}^{D} (x_\alpha - \hat{x}_\alpha(K))^2$. More generally, the covariance matrix $\Sigma(x \mid \hat{x})$ with coefficients $\Sigma_{\alpha\beta} = \mathrm{E}_{P(K \mid x)}\left[(x_\alpha - \hat{x}_\alpha(K))(x_\beta - \hat{x}_\beta(K))\right]$ for spatial dimensions $\alpha, \beta \in \{1, \ldots, D\}$, measures the covariance of the different error components, so that the sum of the diagonal elements of Σ is just the resolution $\varepsilon(x \mid \hat{x})$. In principle, the resolution depends on both the specific decoder and the population coding model. However, for unbiased estimators, that is, estimators that on average decode the location x as this location, $\mathrm{E}_{P(K \mid x)}[\hat{x}(K)] = x$, the FI provides an analytical measure to assess the highest possible resolution of any such decoder (Lehmann, 1998).

Resolution and Fisher Information

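Given a response of K = (k1,…,kN) spikes across the population, we ask how accurately an ideal observer can decode the stimulus x. The FI measures how well one can discriminate nearby stimuli and depends on how P(x, K) changes with x. The greater the FI, the higher the resolution, and the lower the error $\varepsilon(x \mid \hat{x})$, as these two quantities are inversely related. More precisely, the inverse of the FI matrix J(x),

$$J_{\alpha\beta}(x) = \int \left(\frac{\partial \ln P(x, K)}{\partial x_\alpha}\right)\left(\frac{\partial \ln P(x, K)}{\partial x_\beta}\right) P(x, K)\,\mathrm{d}K, \tag{3}$$

bounds the covariance matrix $\Sigma(x \mid \hat{x})$ of the estimated coordinates x = (x1,…,xD)

$$\Sigma(x \mid \hat{x}) \geq J(x)^{-1}. \tag{4}$$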

The error of any unbiased estimator of the encoded stimulus therefore cannot be smaller than $J(x)^{-1}$; in this sense, $J(x)^{-1}$ limits the resolution that any such estimator can achieve. This is known as the Cramér-Rao bound (Lehmann, 1998). Based on this bound, we will consider the FI as a measure for the resolution of the population code. In particular, we are interested in isotropic and homogeneous representations of space. These two conditions assure that the population has the same resolution at any location and along any spatial axis. Isotropy does not entail that the (global) spatial tuning of an individual neuron, Ωi(x), has to be radially symmetric, but merely that the errors are (locally) distributed according to a radially symmetric distribution. For instance, the tuning curve of a grid cell with hexagonal tuning is not radially symmetric around the center of a field (it has three axes), but the posterior is radially symmetric around any given location for a module of such grid cells. Homogeneity requires that the FI J(x) be asymptotically independent of x (as the number of neurons N becomes large); spatial isotropy implies that all diagonal entries in the FI matrix J(x) are equal.
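To make the FI matrix tangible, the sketch below evaluates it numerically under the assumption of independent Poisson neurons. For Poisson counts with mean τΩi(x), the standard closed form is $J_{\alpha\beta}(x) = \tau \sum_i \partial_\alpha\Omega_i(x)\,\partial_\beta\Omega_i(x)/\Omega_i(x)$. The helper name `poisson_fisher_matrix` and the use of central finite differences for the gradient are illustrative choices, not part of the model.

```python
def poisson_fisher_matrix(tuning_curves, x, tau=1.0, h=1e-5):
    """FI matrix J(x) for independent Poisson neurons (an assumed noise model).

    tuning_curves: list of callables, each mapping a position (list of D floats)
                   to a non-negative mean firing rate
    x:             position at which the FI is evaluated
    h:             step of the central finite difference used for the gradient
    """
    dim = len(x)
    fisher = [[0.0] * dim for _ in range(dim)]
    for omega in tuning_curves:
        rate = omega(list(x))
        if rate <= 0.0:
            continue  # a silent cell with a smooth tuning curve contributes nothing
        gradient = []
        for a in range(dim):
            forward, backward = list(x), list(x)
            forward[a] += h
            backward[a] -= h
            gradient.append((omega(forward) - omega(backward)) / (2.0 * h))
        for a in range(dim):
            for b in range(dim):
                fisher[a][b] += tau * gradient[a] * gradient[b] / rate
    return fisher
```

The trace of the returned matrix is the quantity that the Results compare across lattices.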

Periodic tuning curves

Grid cells have periodic tuning curves: they are active at multiple locations, called firing fields, and these firing fields are hexagonally arranged in the environment (Hafting et al., 2005). Their periodic structure is given by a hexagonal lattice. The periodic structure of the tuning curve Ωi(x) reflects its symmetries, that is, the set of vectors that map the tuning curve onto itself. Since we want to understand how the periodic structure affects the resolution of the population code, we generalize the notion of a grid cell to allow periodic structures other than hexagonal. Mathematically, the symmetries of a periodic structure can be described by a lattice $\mathcal{L}$, which is constructed as follows: take a set of independent vectors (vα)1≤α≤D in D-dimensional space $\mathbb{R}^D$, and consider all possible combinations of these vectors and their integer multiples. Each such vector combination points to a node of the lattice, such that the union of these represents the lattice itself. For instance, the square lattice (Figure 1A, bottom) is given by basis vectors v1 = (1, 0) and v2 = (0, 1). Mathematically, the lattice is

$$\mathcal{L} := \left\{ \sum_{\alpha=1}^{D} k_\alpha v_\alpha \;\middle|\; k_\alpha \in \mathbb{Z} \right\}, \tag{5}$$

for which (vα)1≤α≤D is a basis of $\mathbb{R}^D$. We will not consider degenerate lattices. In this work, we follow the nomenclature from Conway and Sloane (1992). Applied fields might differ slightly in their terminology, especially regarding naming conventions for packings, which are generalizations of lattices (Whittaker, 1981; Nelson, 2002). We will address these generalizations of lattices below.
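The construction in Equation 5 can be sketched in a few lines. The function below enumerates a finite patch of the lattice by letting the integer coefficients run over a bounded range; the name `lattice_points` and the cut-off parameter `max_coeff` are assumptions made for this illustration.

```python
import itertools


def lattice_points(basis, max_coeff):
    """Nodes sum_a k_a * v_a with integer coefficients k_a in [-max_coeff, max_coeff].

    basis: list of D basis vectors, each a sequence of D floats
    Returns a list of tuples, one per lattice node in the finite patch.
    """
    dim = len(basis[0])
    coefficient_range = range(-max_coeff, max_coeff + 1)
    points = []
    for coeffs in itertools.product(coefficient_range, repeat=len(basis)):
        node = tuple(sum(k * v[d] for k, v in zip(coeffs, basis)) for d in range(dim))
        points.append(node)
    return points
```

For example, `lattice_points([(1.0, 0.0), (0.0, 1.0)], 1)` returns the nine nodes of the square lattice closest to the origin.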

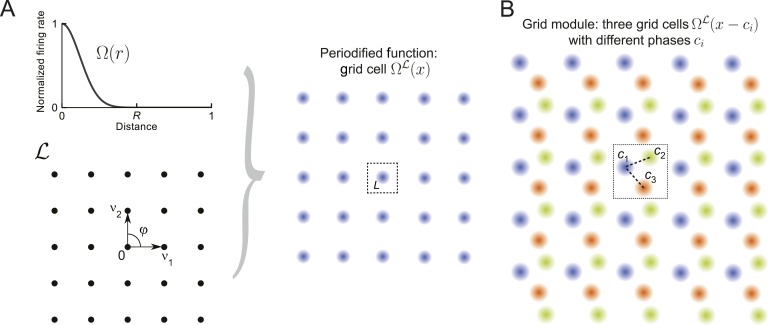

Figure 1. Grid cells and modules.

(A) Construction of a grid cell: Given a tuning shape Ω and a lattice $\mathcal{L}$, here a square lattice generated by v1 and v2 with φ = π/2, one periodifies Ω with respect to $\mathcal{L}$. One defines the value of $\Omega_{\mathcal{L}}(x)$ in the fundamental domain L as the value of Ω(r) applied to the distance from zero and then repeats this map over $\mathbb{R}^D$ as L tiles the space. This construction can be used for lattices of arbitrary dimensions (Equation 7). (B) Grid module: The firing rates of three grid cells (orange, green, and blue) are indicated by color intensity. The cells' tuning is identical (Ω and $\mathcal{L}$ are the same), yet they differ in their spatial phases ci. Together, such identically tuned cells with different spatial phases define a grid module.

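Based on such a lattice $\mathcal{L}$, we construct periodic tuning curves as illustrated in Figure 1A. We start with a lattice $\mathcal{L}$ and a tuning shape $\Omega: \mathbb{R}^{+} \to [0, 1]$ that decays from unity to zero; Ω(r) describes the firing rate of the periodified tuning curve at distance r from any lattice point and should be at least twice continuously differentiable. Each lattice point $p \in \mathcal{L}$ has a domain $V_p \subset \mathbb{R}^D$ called the Voronoi region, which is defined as

$$V_p := \left\{ x \in \mathbb{R}^D \;\middle|\; \lVert x - p \rVert < \lVert x - q \rVert \;\; \forall q \in \mathcal{L},\ q \neq p \right\}, \tag{6}$$

that contains all points x that are closer to p than to any other lattice point q. Note that Vp ∩ Vq = ∅ if p ≠ q and that for all $p, q \in \mathcal{L}$ there exists a unique vector $v \in \mathcal{L}$ with Vp = Vq + v.

The domain that contains the null (0) vector is called the fundamental domain and is denoted by L:= V0. For each $x \in \mathbb{R}^D$ there is a unique lattice point $p \in \mathcal{L}$ that maps x into the fundamental domain: $x - p \in L$. Let us call this mapping $\pi_{\mathcal{L}}: x \mapsto x - p$. With this notation one can periodify Ω onto $\mathcal{L}$ by defining a grid cell's tuning curve as $\Omega_{\mathcal{L}}$:

$$\Omega_{\mathcal{L}}(x) := f_{\max} \cdot \Omega\big(\lVert \pi_{\mathcal{L}}(x) \rVert\big), \tag{7}$$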

where fmax is the peak firing rate of the neuron. Note that throughout the paper we set fmax = τ = 1, for simplicity. As illustrated in Figure 1A, within the fundamental domain L, the tuning curve defined above is radially symmetric. This pattern is repeated along the nodes of $\mathcal{L}$, akin to ceramic tiling.
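The periodification described above can be sketched for planar lattices as follows. Two assumptions are made loudly here: the tuning shape `bump` is a generic smooth, compactly supported stand-in (it is not the bump function of Equation 26), and the nearest lattice node is found by rounding the basis coefficients and checking the neighbouring nodes, which is adequate for short bases such as the unit square and hexagonal ones. All function names are hypothetical.

```python
import math


def bump(r, R=0.4):
    """Stand-in tuning shape: 1 at r = 0, smoothly 0 for r >= R (not Equation 26)."""
    if r >= R:
        return 0.0
    return math.exp(1.0 - 1.0 / (1.0 - (r / R) ** 2))


def to_fundamental_domain(x, v1, v2):
    """Return x - p, where p is the lattice node closest to x (2D lattices only)."""
    det = v1[0] * v2[1] - v1[1] * v2[0]
    # Real-valued coefficients of x in the basis (v1, v2).
    a1 = (x[0] * v2[1] - x[1] * v2[0]) / det
    a2 = (v1[0] * x[1] - v1[1] * x[0]) / det
    k1, k2 = round(a1), round(a2)
    best = None
    # Rounding alone can miss the closest node for non-orthogonal bases,
    # so the eight surrounding nodes are checked as well.
    for i in (-1, 0, 1):
        for j in (-1, 0, 1):
            px = (k1 + i) * v1[0] + (k2 + j) * v2[0]
            py = (k1 + i) * v1[1] + (k2 + j) * v2[1]
            candidate = (x[0] - px, x[1] - py)
            if best is None or math.hypot(*candidate) < math.hypot(*best):
                best = candidate
    return best


def grid_tuning_curve(x, v1, v2, shape=bump, f_max=1.0):
    """Periodified tuning curve: the shape applied to the distance from the nearest node."""
    return f_max * shape(math.hypot(*to_fundamental_domain(x, v1, v2)))
```

Evaluating `grid_tuning_curve` at a position and at the same position shifted by any lattice vector gives the same firing rate, which is the periodicity that the text describes as tiling.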

A grid module is defined as an ensemble of M grid cells $\Omega_i$, $i \in \{1, \ldots, M\}$, with identical, but spatially shifted tuning curves, that is, $\Omega_i(x) = \Omega_{\mathcal{L}}(x - c_i)$ and spatial phases $c_i \in L$ (see Figure 1B). The various phases within a module can be summarized by their phase density $\rho(c) = \sum_{i=1}^{M} \delta(c - c_i)$. This definition is motivated by the observation of spatially shifted hexagonally tuned grid cells in the entorhinal cortex of rats (Hafting et al., 2005; Stensola et al., 2012).

Any grid module is uniquely characterized by its signature $(\Omega, \rho, \mathcal{L})$. To investigate the role of different periodic structures, we can fix the tuning shape Ω and density ρ and solely vary the lattice $\mathcal{L}$ to find the lattice that yields the highest FI.

Results

To determine how the resolution of a grid module depends on the periodic structure $\mathcal{L}$, we compute the population FI Jς(x) for a module of grid cells with signature $\varsigma = (\Omega, \rho, \mathcal{L})$, which describes the tuning shape, the density of firing fields, and the lattice. By fixing the tuning shape Ω and the number of spatial phases, we can compare the resolution for different periodic structures. (Table 1 contains a glossary of the variables.)

Table 1.

List of acronyms, variables, and terms

| Symbol | Description |
| --- | --- |
| D | Dimension of the stimulus space |
| FI | Fisher information, usually denoted by J (Equation 3) |
| $\mathcal{L}$ | Non-degenerate point lattice describing periodic structure (Equation 5) |
| L | Fundamental domain of $\mathcal{L}$, which is the Voronoi cell containing 0 (Equation 6) |
| Ω | Tuning shape |
| supp(Ω) | Support of Ω, that is, the subset where Ω does not vanish |
| $\Omega_{\mathcal{L}}$ | Periodified tuning curve on $\mathbb{R}^D$, where $\mathcal{L}$ is a D-dimensional lattice and Ω a tuning shape. Simply referred to as a ‘grid cell’ (Equation 7) |
| ρ | Phase density of grid cells' phases ci within a module |
| M | Number of phases in grid module |
| $\varsigma = (\Omega, \rho, \mathcal{L})$ | Signature defining a grid module, which is an ensemble of grid cells differing in spatial phases ci, defined by ρ and tuning curves given by $\Omega_{\mathcal{L}}$ |
| $\det(\mathcal{L})$ | Determinant of lattice $\mathcal{L}$ (equal to volume of L) |
| BR(0) | Subset of $\mathbb{R}^D$ containing all points with distance less than R from 0 |
| $\Delta(\mathcal{L})$ | Packing ratio of a lattice, that is, the volume of the largest BR(0) that fits inside L divided by $\det(\mathcal{L})$ (Equation 15) |
| $\mathcal{H}$, $\mathcal{Q}$ | Hexagonal and square planar lattice of unit node-to-node distance (Figure 2) |
| $\mathcal{FCC}$, $\mathcal{BCC}$, $\mathcal{C}$ | Face-centered, body-centered, and cubic lattice of unit node-to-node distance, respectively (Figure 4) |
| trJ | Trace of the FI, that is, the sum of diagonal elements |
| Jς | Population FI of grid module with signature ς |
| $\mathrm{tr}J_{\mathcal{L}}$, $\mathrm{tr}J_{\mathcal{H}}$, $\mathrm{tr}J_{\mathcal{Q}}$ | Trace of FI per neuron for lattice $\mathcal{L}$ ($\mathcal{H}$ and $\mathcal{Q}$, respectively) with fixed bump-like Ω defined in Equation 26 |
| $\mathrm{tr}J^{M}_{\mathcal{L}}$ | Trace of FI for lattice $\mathcal{L}$ for M randomly distributed phases in L for the same bump function |

Scaling of lattices and nested grid codes

Our grid-cell construction has one obvious degree of freedom, the length scale or grid size of the lattice $\mathcal{L}$, that is, the width of the fundamental domain L. For a module with signature $(\Omega, \rho, \mathcal{L})$ and for arbitrary scaling factor λ > 0, the rescaled construction $(\Omega(\lambda^{-1} r), \rho(\lambda^{-1} x), \lambda\mathcal{L})$ is a grid module too. The corresponding tuning curve satisfies $\Omega_{\lambda\mathcal{L}}(\lambda x) = \Omega_{\mathcal{L}}(x)$ and is thus merely a scaled version of the former. Indeed, as we show in the ‘Materials and methods’ section, the FI of the rescaled module is $\lambda^{-2} J_\varsigma(0)$. The Cramér-Rao bound (Equation 4) implies that the local resolution of an unbiased estimator could thus rapidly improve with a finer grid size, that is, decreasing λ.

However, for any grid module the posterior probability, that is, the likelihood of possible positions given a particular spike count vector K = (k1,…,kN), is also periodic. This follows from Bayes rule:

$$P(x \mid K) = \frac{P(K \mid x)\,P(x)}{P(K)}. \tag{8}$$

Since the right hand side is invariant under operations of $\mathcal{L}$ on x, so is the left hand side of this equation. Thus, the multiple firing fields of a grid cell cannot be distinguished by a decoder, so that for λ → 0 the global resolution approaches the a priori uncertainty (Mathis et al., 2012a, 2012b). By combining multiple grid modules with different spatial periods one can overcome this fundamental limitation, counteracting the ambiguity caused by periodicity and still preserving the highest resolution at the smallest scale. Thus, one arrives at nested populations of grid modules, whose spatial periods range from coarse to fine. The FI for an individual module at one scale determines the optimal length scale of the next module (Mathis et al., 2012a, 2012b). The larger the FI per module, the greater the refinement at subsequent scales can be (Mathis et al., 2012a, 2012b). This result emphasizes the importance of finding the lattice that endows a grid module with maximal FI, but also highlights that the specific scale of the lattices can be fixed for this study.

FI of a grid module with lattice $\mathcal{L}$

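We now calculate the FI for a grid module with signature $\varsigma = (\Omega, \rho, \mathcal{L})$. For cells whose firing is statistically independent (Equation 1), the joint probability factorizes; therefore, the population FI is just the sum over the individual FI contributions by each neuron, $J_\varsigma(x) = \sum_{i=1}^{M} J_{\Omega_i}(x)$. The individual neurons only differ by their spatial phase ci, thus $J_{\Omega_i}(x) = J_{\Omega_{\mathcal{L}}}(x - c_i)$. Consequently, $J_\varsigma(x) = \sum_{i=1}^{M} J_{\Omega_{\mathcal{L}}}(x - c_i)$ depends only on the function $J_{\Omega_{\mathcal{L}}}$ and the deviations x − ci, where ci is taken as the point of $c_i + \mathcal{L}$ closest to x. If the grid-cell density ρ is uniform across L, then for all $x \in \mathbb{R}^D$: Jς(x) ≈ Jς(0). It therefore suffices to only consider the FI at the origin, which, because $\Omega_{\mathcal{L}}$ is symmetric under x → −x, can be written as:

$$J_\varsigma(0) = \sum_{i=1}^{M} J_{\Omega_{\mathcal{L}}}(c_i). \tag{9}$$

For uniformly distributed spatial phases ci and increasing number of neurons M, the law of large numbers implies

$$\frac{1}{M} \sum_{i=1}^{M} J_{\Omega_{\mathcal{L}}}(c_i) \;\xrightarrow{M \to \infty}\; \frac{1}{\det(\mathcal{L})} \int_{L} J_{\Omega_{\mathcal{L}}}(c)\,\mathrm{d}c. \tag{10}$$

Here, $\det(\mathcal{L})$ denotes the volume of the fundamental domain. Thus, for large numbers of neurons we obtain

$$J_\varsigma(0) \approx \frac{M}{\det(\mathcal{L})} \int_{L} J_{\Omega_{\mathcal{L}}}(c)\,\mathrm{d}c. \tag{11}$$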

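This means that the population FI at 0 is approximately given by the average FI within the fundamental domain L times the number of neurons M. Let us now assume that supp(Ω) = [0, R] for some positive radius R. Outside of this radius, the tuning shape is zero and the firing rate vanishes. So the spatial phases of grid cells that contribute to the FI at x = 0 lie within the ball BR(0). If we now also assume that this ball is contained in the fundamental domain, $B_R(0) \subset L$, we get

$$\int_{L} J_{\Omega_{\mathcal{L}}}(c)\,\mathrm{d}c = \int_{B_R(0)} J_{\Omega_{\mathcal{L}}}(c)\,\mathrm{d}c. \tag{12}$$

This result implies that any grid code $\varsigma = (\Omega, \rho, \mathcal{L})$, with large M, supp(Ω) = [0, R], and $B_R(0) \subset L$, satisfies

$$J_\varsigma(0) \approx M \cdot \frac{\mathrm{vol}(B_R(0))}{\det(\mathcal{L})} \cdot \frac{1}{\mathrm{vol}(B_R(0))} \int_{B_R(0)} J_{\Omega_{\mathcal{L}}}(c)\,\mathrm{d}c. \tag{13}$$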

The FI at the origin is therefore approximately equal to the product of the mean FI contribution of cells within an R-ball around 0 and the number of neurons M, weighted by the ratio of the volume of the R-ball to the volume of the fundamental domain L. Due to the radial symmetry of $\Omega_{\mathcal{L}}$ within L, the FI matrix is diagonal with identical entries, guaranteeing the spatial resolution's isotropy. The error for each coordinate axis is bounded by the same value, that is, the inverse of the diagonal element 1/Jς(0)ii, for such a population. Instead of considering the FI matrix Jς(0), we can therefore consider the trace of Jς(0), which is the sum over the diagonal of Jς(0). According to Equation 4, 1/trJς(0) bounds the mean square error summed across all dimensions $\varepsilon(x \mid \hat{x})$.
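The large-M expression in Equation 13 can be checked numerically in two dimensions. The sketch below assumes Poisson noise with fmax = τ = 1, for which the trace of the single-cell FI at distance r from the field center is Ω′(r)²/Ω(r), and it uses a generic smooth bump as a stand-in for the tuning shape (not the function of Equation 26). The name `fi_trace_per_neuron` is hypothetical.

```python
import math


def fi_trace_per_neuron(det_lattice, R, steps=20000):
    """Trace of J(0) / M from Equation 13 for a planar lattice, Poisson noise assumed.

    det_lattice: area of the fundamental domain
    R:           support radius of the stand-in bump, assumed to fit inside L
    The integral over the disk B_R(0) is done in polar coordinates with the
    midpoint rule; the integrand Omega'(r)^2 / Omega(r) depends only on r.
    """
    dr = R / steps
    integral = 0.0
    for n in range(steps):
        r = (n + 0.5) * dr
        u = r / R
        s = 1.0 - u * u
        omega = math.exp(1.0 - 1.0 / s)          # stand-in bump Omega(r)
        if omega == 0.0:
            continue                             # underflow close to the edge of the support
        slope = -omega * 2.0 * u / (R * s * s)   # analytic derivative Omega'(r)
        integral += slope * slope / omega * 2.0 * math.pi * r * dr
    return integral / det_lattice
```

Because the integral over the disk does not depend on the lattice, the per-neuron FI of two lattices that both contain the disk differs only through `det_lattice`: for the unit hexagonal lattice (area √3/2) and the unit square lattice (area 1) the ratio is 2/√3.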

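For two lattices $\mathcal{L}_1$, $\mathcal{L}_2$ with BR(0) ⊂ L1∩L2 and the corresponding signatures $\varsigma_j = (\Omega, \rho, \mathcal{L}_j)$ we consequently obtain

$$\frac{\mathrm{tr}J_{\varsigma_1}(0)}{\mathrm{tr}J_{\varsigma_2}(0)} \approx \frac{\det(\mathcal{L}_2)}{\det(\mathcal{L}_1)}, \tag{14}$$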

which signifies that the FI of a grid module is inversely proportional to the volume of its fundamental domain. The periodic structure thus has a direct impact on the resolution of the grid module. This result implies that finding the maximum FI translates directly into finding the lattice with the highest packing ratio.

Packing ratio of lattices

The sphere packing problem is of general interest in mathematics (Conway and Sloane, 1992) and has wide-ranging applications from crystallography to information theory (Barlow, 1883; Shannon, 1948; Whittaker, 1981; Gray and Neuhoff, 1998; Gruber, 2004). When packing R-balls BR in $\mathbb{R}^D$ in a non-overlapping fashion, the density of the packing is defined as the fraction of the space covered by balls. For a lattice $\mathcal{L}$, it is given by

$$\Delta(\mathcal{L}) = \frac{\mathrm{vol}(B_R(0))}{\det(\mathcal{L})}, \tag{15}$$

which is known as the packing ratio of the lattice. For a given lattice, this ratio is maximized by choosing the largest possible R, known as the packing radius, which is defined as the in-radius of a Voronoi region containing the origin (Conway and Sloane, 1992). Figure 2 depicts the disks with the largest in-radius for the hexagonal and the square lattice in blue and illustrates the packing ratio.
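Equation 15 translates directly into a short computation. In the sketch below, the packing radius is taken as half the length of the shortest non-zero lattice vector, found by a brute-force search over small integer coefficients; this search range is an assumption that is adequate for short bases with unit-length vectors. The function names are hypothetical.

```python
import itertools
import math


def determinant(rows):
    """Determinant of a small square matrix (list of lists) by Laplace expansion."""
    if len(rows) == 1:
        return rows[0][0]
    total = 0.0
    for j, entry in enumerate(rows[0]):
        minor = [row[:j] + row[j + 1:] for row in rows[1:]]
        total += (-1) ** j * entry * determinant(minor)
    return total


def packing_radius(basis, max_coeff=2):
    """Half the length of the shortest non-zero lattice vector (brute-force search)."""
    dim = len(basis)
    shortest = math.inf
    for coeffs in itertools.product(range(-max_coeff, max_coeff + 1), repeat=dim):
        if any(coeffs):
            vec = [sum(k * v[d] for k, v in zip(coeffs, basis)) for d in range(dim)]
            shortest = min(shortest, math.sqrt(sum(c * c for c in vec)))
    return shortest / 2.0


def ball_volume(radius, dim):
    """Volume of a ball of the given radius in `dim` dimensions."""
    return math.pi ** (dim / 2) * radius ** dim / math.gamma(dim / 2 + 1)


def packing_ratio(basis):
    """Packing ratio: volume of the largest inscribed ball divided by det of the lattice."""
    dim = len(basis)
    return ball_volume(packing_radius(basis), dim) / abs(determinant(basis))
```

Calling `packing_ratio([[1.0, 0.0], [0.5, math.sqrt(3) / 2]])` and `packing_ratio([[1.0, 0.0], [0.0, 1.0]])` gives the packing ratios of the unit hexagonal and square lattices; the same function accepts three basis vectors for 3D lattices.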

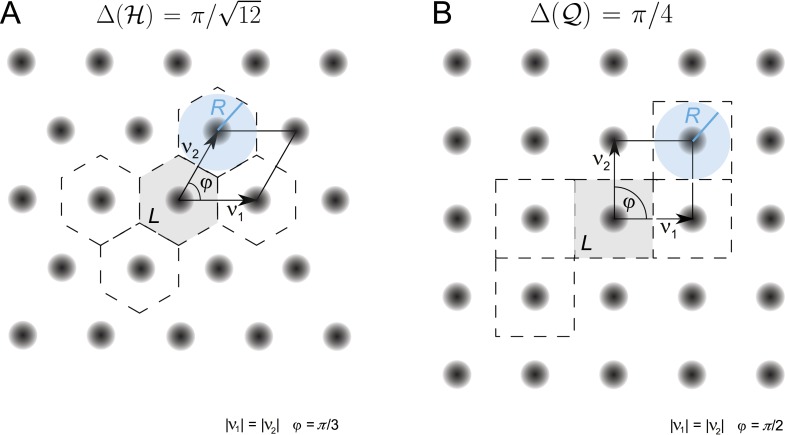

Figure 2. Periodified grid-cell tuning curve for two planar lattices, (A) the hexagonal (equilateral triangle) lattice $\mathcal{H}$ and (B) the square lattice $\mathcal{Q}$, together with the basis vectors v1 and v2.

These are π/3 apart for the hexagonal lattice and π/2 for the square lattice. The fundamental domain, that is, the Voronoi cell around 0, is shown in gray. A few other domains that have been generated according to the lattice symmetries are marked by dashed lines. The blue disk shows the disk with maximal radius R that can be inscribed in the two fundamental domains. For equal and unitary node-to-node distances, that is, $\lVert v_1 \rVert = \lVert v_2 \rVert = 1$, the maximal radius equals 1/2 for both lattices. The packing ratio Δ is $\pi/\sqrt{12}$ for the hexagonal and $\pi/4$ for the square lattice; the hexagonal lattice is approximately 15.5% denser than the square lattice.

FI and packing ratio

We now come to the main finding of this study: among grid modules with different lattices, the lattice with the highest packing ratio leads to the highest spatial resolution.

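To derive this result, let us fix a tuning shape Ω with supp(Ω) = [0, R], lattices $\mathcal{L}_1, \ldots, \mathcal{L}_K$ such that BR(0) ⊂ Lj for 1 ≤ j ≤ K, and uniform densities ρ for each fundamental domain of equal cardinality M. Any linear order on the packing ratios,

$$\Delta(\mathcal{L}_1) \leq \Delta(\mathcal{L}_2) \leq \cdots \leq \Delta(\mathcal{L}_K), \tag{16}$$

is translated by Equation 14 into the same order for the traces of the FI

$$\mathrm{tr}J_{\varsigma_1}(0) \leq \mathrm{tr}J_{\varsigma_2}(0) \leq \cdots \leq \mathrm{tr}J_{\varsigma_K}(0), \tag{17}$$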

and thus the resolution of these modules: the higher the packing ratio, the higher the FI of a grid module.

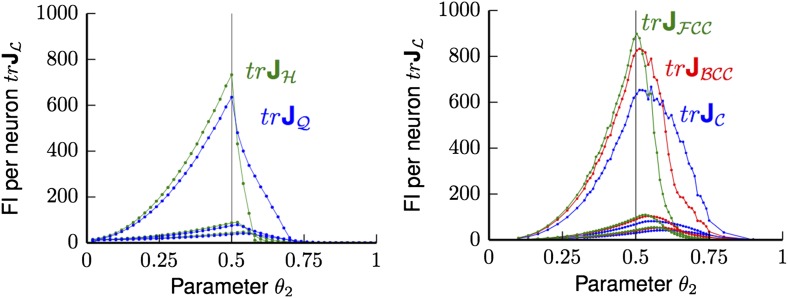

The condition supp(Ω) = [0, R] with BR(0) ⊂ L, although restrictive, is consistent with experimental observations that grid cells tend to stop firing between grid fields and that the typical ratio between field radius and spatial period is well below 1/2 (Hafting et al., 2005; Brun et al., 2008; Giocomo et al., 2011). Generally, the tuning width that maximizes the FI does not necessarily satisfy this condition; see Figures 3, 4, in which the optimal support radius of the tuning curve θ2 is greater than the in-radius R = 1/2 of L. The same observation will hold in higher dimensions (D > 2), consistent with the finding that the optimal tuning width for Gaussian tuning curves increases with the number of spatial dimensions, whether space is infinite (Zhang and Sejnowski, 1999) or finite (Brown and Bäcker, 2006). When the radius R of the support of the tuning curve exceeds the in-radius, the optimal lattice can be different from the densest one as we will show numerically for specific tuning curves and Poisson noise. However, with well separated fields, like those observed experimentally, the densest lattice provides the highest resolution for any tuning shape Ω, as we just demonstrated.

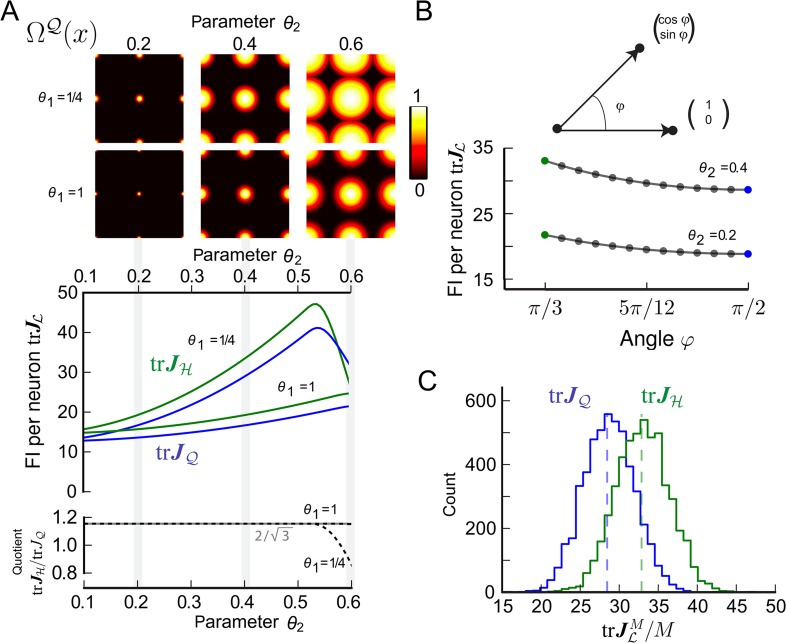

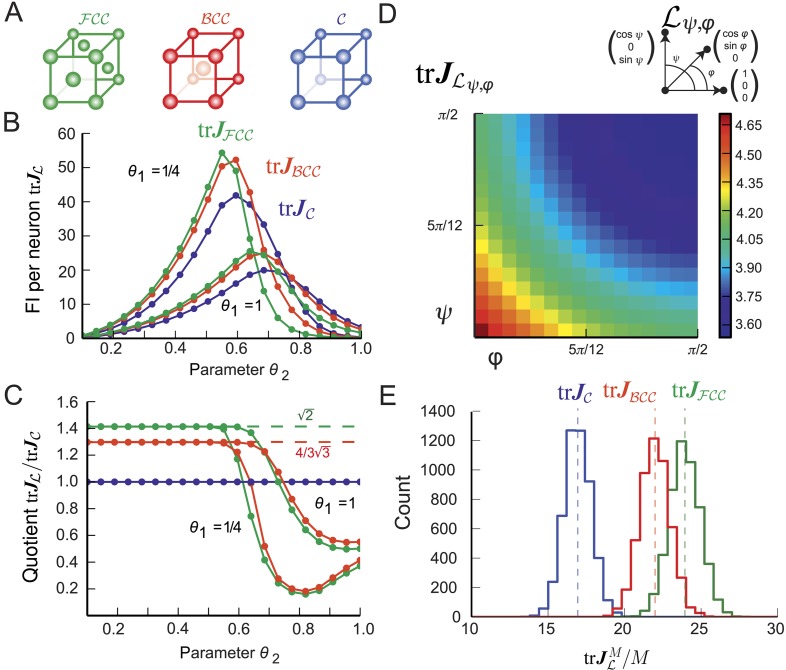

Figure 3. Fisher information for modules of two-dimensional grid cells.

(A) Top: Periodified bump-function Ω and square lattice $\mathcal{Q}$, for various parameter combinations θ1 and θ2. Here, θ1 modulates the decay and θ2 the support. Middle: Average trace of the Fisher information (FI) for uniformly distributed grid cells, $\mathrm{tr}J_{\mathcal{L}}$. Hexagonal ($\mathcal{H}$) and square ($\mathcal{Q}$) lattices are considered for different θ1 and θ2 values. The FI of the hexagonal grid cells outperforms the square grid when support is fully within the fundamental domain (θ2 < 0.5, see main text). Bottom: Ratio $\mathrm{tr}J_{\mathcal{H}}/\mathrm{tr}J_{\mathcal{Q}}$ as a function of the tuning parameter θ2. For θ2 < 0.5, the hexagonal population offers $2/\sqrt{3} \approx 1.155$ times the resolution of the square population, as predicted by the respective packing ratios. (B) Average $\mathrm{tr}J_{\mathcal{L}}$ for grid cells distributed uniformly in lattices generated by basis vectors separated by an angle φ (basis depicted above graph). $\mathrm{tr}J_{\mathcal{L}}$ behaves like 1/sin(φ) and has its maximum at π/3. (C) Distribution of 5000 realizations of $\mathrm{tr}J^{M}_{\mathcal{L}}$ at 0 for a population of M = 200 randomly distributed neurons. For both the hexagonal and square lattice, parameters are θ1 = 1/4 and θ2 = 0.4. The means closely match the average values in (A). However, due to the finite neuron number the FI varies strongly for different realizations, and in about 20% of the cases a square lattice module outperforms a hexagonal lattice.

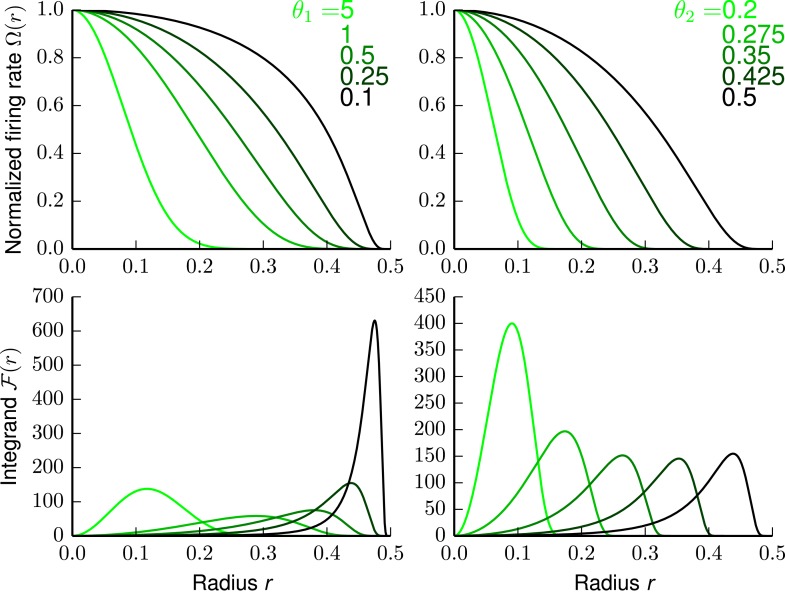

Figure 3—figure supplement 1. The firing rate and Fisher information of the bump tuning shape.

Figure 4. Fisher information for modules of 3D grid cells.

(A) The three lattices considered: face-centered cubic ($\mathcal{FCC}$), body-centered cubic ($\mathcal{BCC}$), and cubic ($\mathcal{C}$). (B) $\mathrm{tr}J_{\mathcal{L}}$ for the periodified bump-function Ω for the three lattices and various parameter combinations θ1 and θ2. The Fisher information (FI) of the $\mathcal{FCC}$ grid cells outperforms the other lattices when the support is fully within the fundamental domain (θ2 < 0.5, see main text). For larger θ2 the best lattice depends on the relation between the Voronoi cell's boundary and the tuning curve. (C) Ratios of $\mathrm{tr}J$ between the lattices as a function of θ2. For θ2 < 0.5, the $\mathcal{FCC}$ population has $\sqrt{2}$ times the resolution of the cubic population, as predicted by the packing ratios. (D) Average $\mathrm{tr}J_{\mathcal{L}}$ for uniformly distributed grid cells within a lattice generated by basis vectors separated by angles φ and ψ (as shown above; θ1 = θ2 = 1/4). $\mathrm{tr}J_{\mathcal{L}}$ behaves like 1/(sinφ⋅sinψ) and has its maximum for the lattice with the smallest volume. (E) Distribution of 5000 realizations of $\mathrm{tr}J^{M}_{\mathcal{L}}$ at 0 for a population of M = 200 randomly distributed neurons. Parameters: θ1 = 1/4, θ2 = 0.4. The means closely match the averages in (B). Due to the finite neuron number, the FI varies strongly for different realizations.

The optimal packing ratio of lattices for low-dimensional space is well known. Having established our main result, we can now draw on a rich body of literature, in particular Conway and Sloane (1992), to discuss the expected firing-field structure of grid cells in 2D and 3D environments.

Optimal 2D grid cells

With a packing ratio of $\pi/\sqrt{12}$, the hexagonal lattice $\mathcal{H}$ is the densest lattice in the plane (Lagrange, 1773). According to Equation 14, the hexagonal lattice is the optimal arrangement for grid-cell firing fields on the plane. For example, it outperforms the square lattice, which has a density of π/4, by about 15.5% (see Figure 2). Consequently, the FI of a grid module periodified along a hexagonal lattice outperforms one periodified along a square lattice by the same factor.

To provide a tangible example, we calculated the trace of the average FI per neuron for signature $\varsigma = (\Omega, \rho, \mathcal{L})$ and chose the lattice to either be the hexagonal lattice $\mathcal{H}$ or the square lattice $\mathcal{Q}$. We denote the trace of the average FI per neuron as $\mathrm{tr}J_{\mathcal{L}} := \mathrm{tr}J_\varsigma(0)/M$; $\mathrm{tr}J_{\mathcal{H}}$ and $\mathrm{tr}J_{\mathcal{Q}}$ are similarly defined. We considered Poisson spike statistics and used a bump-like tuning shape Ω (Equation 26, ‘Materials and methods’ section). The tuning shape Ω depends on two parameters θ1 and θ2, where θ1 controls the slope of the flank in Ω and θ2 defines the support radius. The periodified tuning curve $\Omega_{\mathcal{L}}$ is illustrated for different parameters in the top of Figure 3A and in Figure 3—figure supplement 1.

Figure 3A depicts $\mathrm{tr}J_{\mathcal{H}}$ and $\mathrm{tr}J_{\mathcal{Q}}$ for various values of θ1 and θ2. Quite generally, the FI is larger for grid modules with broad tuning (large θ2) and steep tuning slopes (small θ1). Figure 3A also demonstrates that as long as θ2 ≤ 1/2, $\mathrm{tr}J_{\mathcal{H}}$ consistently outperforms $\mathrm{tr}J_{\mathcal{Q}}$. But how large is this effect? As predicted by our theory, the grid module with the hexagonal lattice outperforms the square lattice by the relation of packing ratios $\Delta(\mathcal{H})/\Delta(\mathcal{Q}) = 2/\sqrt{3}$, as long as the support radius θ2 is within the fundamental domain of the hexagonal and the square lattice of unit length, that is, θ2 ≤ 1/2 (bottom of Figure 3A). As the support radius becomes larger, the FI of the hexagonal lattice is no longer necessarily greater than that of the square lattice; the specific interplay of tuning curve and boundary shape determines which lattice is better: for θ1 = 1/4, $\mathrm{tr}J_{\mathcal{H}}/\mathrm{tr}J_{\mathcal{Q}}$ drops quickly beyond θ2 = 0.5, whereas, for θ1 = 1, the ratio stays constant up to θ2 = 0.6.

Next we calculated the FI per neuron for a larger family of planar lattices generated by two unitary basis vectors with angle φ. Figure 3B displays $\mathrm{tr}J_{\mathcal{L}}$ for φ ∈ [π/3, π/2], slope parameter θ1 = 1/4, and different support radii θ2. For the node-to-node distance to remain unitary, the value φ cannot go below π/3. $\mathrm{tr}J_{\mathcal{L}}$ decays with increasing angle φ. Indeed, according to Equation 13, the FI falls like $1/\det(\mathcal{L}) = 1/\sin(\varphi)$ so that the maximum is achieved for the hexagonal lattice with φ = π/3.

The FIs are averages over all phases, under the assumption that the density of phases tends to a constant; but are these values also indicative for small neural populations? To answer this question, we calculated the FI for populations with 200 neurons, as some putative grid cells are found in patches of this size (Ray et al., 2014). For M = 200 randomly chosen phases (Figure 3C), the mean of the normalized FI over 5000 realizations is well captured by the FI per neuron calculated in Figure 3A. Because of fluctuations in the FI, however, the square lattice is better than the hexagonal lattice in about 20% of the cases.
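The finite-size comparison can be sketched with a small Monte Carlo simulation. The assumptions are stated plainly: Poisson noise with fmax = τ = 1, a generic smooth bump with support radius 0.4 standing in for the tuning shape of Equation 26, and far fewer realizations than the 5000 used above so that the example runs quickly. Numerical values obtained with this stand-in will therefore differ from those in Figure 3C. All names are hypothetical.

```python
import math
import random

R = 0.4  # support radius of the stand-in bump (an assumption, below the in-radius 1/2)


def fi_trace(distance):
    """Poisson FI trace Omega'(r)^2 / Omega(r) for a cell whose nearest field is at `distance`."""
    u = distance / R
    if u >= 1.0:
        return 0.0
    s = 1.0 - u * u
    omega = math.exp(1.0 - 1.0 / s)
    return omega * (2.0 * u / (R * s * s)) ** 2


def distance_to_nearest_node(a1, a2, v1, v2):
    """Distance from a1*v1 + a2*v2 (0 <= a1, a2 < 1) to the closest corner of the unit cell.

    Checking the four corners suffices for the unit square and hexagonal bases used here.
    """
    x, y = a1 * v1[0] + a2 * v2[0], a1 * v1[1] + a2 * v2[1]
    return min(math.hypot(x - i * v1[0] - j * v2[0], y - i * v1[1] - j * v2[1])
               for i in (0, 1) for j in (0, 1))


def module_fi(M, v1, v2, rng):
    """tr J(0) / M for M cells with phases drawn uniformly from one unit cell."""
    total = 0.0
    for _ in range(M):
        total += fi_trace(distance_to_nearest_node(rng.random(), rng.random(), v1, v2))
    return total / M


def fraction_square_wins(realizations=300, M=200, seed=0):
    """Fraction of random modules in which the square lattice has the larger FI."""
    rng = random.Random(seed)
    hex_v1, hex_v2 = (1.0, 0.0), (0.5, math.sqrt(3) / 2)
    sq_v1, sq_v2 = (1.0, 0.0), (0.0, 1.0)
    wins = 0
    for _ in range(realizations):
        if module_fi(M, sq_v1, sq_v2, rng) > module_fi(M, hex_v1, hex_v2, rng):
            wins += 1
    return wins / realizations
```

Increasing `M` in `module_fi` shrinks the fluctuations around the large-M average, while small modules leave room for a square-lattice module to outperform a hexagonal one by chance.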

Our theory implies that for radially symmetric tuning curves the hexagonal lattice provides the best resolution among all planar lattices. This conclusion agrees with earlier findings: Wei et al. considered a notion of resolution defined as the range of the population code per smallest distinguishable scale and then demonstrated that a population of nested grid cells with hexagonal tuning is optimal for both winner-take-all and Bayesian maximum likelihood decoders (Wei et al., 2013). Guanella and Verschure numerically compared hexagonal to other regular lattices based on maximum likelihood decoding (Guanella and Verschure, 2007).

Optimal lattices for 3D grid cells

Gauss proved that the packing ratio of any three-dimensional lattice is bounded by $\pi/\sqrt{18}$ and that this value is attained for the face-centered cubic ($\mathcal{FCC}$) lattice (Gauss, 1831) illustrated in Figure 4A. This implies that the optimal 3D grid-cell tuning is given by the $\mathcal{FCC}$ lattice. For comparison, we also calculated the average population FI for two other important 3D lattices: the cubic lattice ($\mathcal{C}$) and the body-centered cubic lattice ($\mathcal{BCC}$), both shown in Figure 4A.

Keeping the bump-like tuning shape Ω and independent Poisson noise, we compared the resolution of grid modules with such lattices (Figure 4B). Their averaged traces of FI are denoted by $\mathrm{tr}J_{\mathcal{FCC}}$, $\mathrm{tr}J_{\mathcal{BCC}}$, and $\mathrm{tr}J_{\mathcal{C}}$. As long as the support θ2 of Ω is smaller than 1/2, the support is a subset of the fundamental domain of all three lattices. Hence, the trace of the population FI of the $\mathcal{FCC}$ outperforms both the $\mathcal{BCC}$ and $\mathcal{C}$ lattices. As the ratio of the traces of the population FI scales with the packing ratio (Figure 4C), $\mathcal{FCC}$-grid cells provide roughly 41% more resolution for the same number of neurons than do $\mathcal{C}$-grid cells. Similarly, $\mathcal{FCC}$-grid cells provide 8.8% more FI than $\mathcal{BCC}$-grid cells.
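The percentages quoted above follow from the packing ratios alone. The short sketch below uses the standard closed-form packing ratios of the three lattices and applies Equation 14; it is valid only under the condition stated above that the support of Ω lies inside the fundamental domain of every lattice compared. The dictionary and function names are hypothetical.

```python
import math

# Standard packing ratios for unit node-to-node distance.
PACKING_RATIO = {
    "FCC": math.pi / math.sqrt(18),      # face-centered cubic
    "BCC": math.pi * math.sqrt(3) / 8,   # body-centered cubic
    "C": math.pi / 6,                    # simple cubic
}


def relative_gain(better, worse):
    """Percentage by which the FI of lattice `better` exceeds that of lattice `worse`."""
    return 100.0 * (PACKING_RATIO[better] / PACKING_RATIO[worse] - 1.0)
```

`relative_gain("FCC", "C")` corresponds to the factor √2 between the face-centered cubic and the cubic lattice, and `relative_gain("FCC", "BCC")` to the smaller advantage over the body-centered cubic lattice.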

Next we calculated the FI per neuron for a large family of three-dimensional lattices generated by three unitary basis vectors with spanning angles φ and ψ. Figure 4D displays $\mathrm{tr}J_{\mathcal{L}}$ for θ1 = θ2 = 1/4 and various φ and ψ. The resolution decays with increasing angles and has its maximum for the lattice with the smallest volume as predicted by Equation 13.

To study finite-size effects, we simulated 5000 populations of 200 grid cells with random spatial phases. Qualitatively, the results (Figure 4E) match those in 2D (Figure 3C). Despite the small module size, the FCC lattice outperformed the cubic lattice in all simulated realizations.

Equally optimal non-lattice solutions for grid-cell tuning

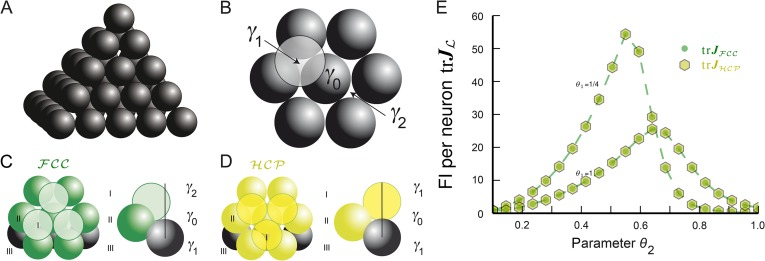

Fruit is often arranged in an FCC formation (Figure 5A). One arrives at this lattice by starting from a layer of hexagonally placed spheres. This requires two basis vectors to be specified and is the densest packing in 2D. To maximize the packing ratio in 3D, the next layer of hexagonally arranged spheres has to be stacked as tightly as possible. There are two choices for the third and final basis vector to achieve this packing, denoted as γ1 and γ2 in Figure 5B (modulo hexagonal symmetry). If one chooses γ1, then two layers below there is no sphere with its center at location γ1, but instead there is one at γ2 (and vice versa). This stacking of layers is shown in Figure 5C and generates the FCC lattice.

Figure 5. Lattice and non-lattice solutions in 3D.

(A) Stacking of spheres as in an FCC lattice. In this densest lattice in 3D, each sphere touches 12 other spheres and there are four different planar hexagonal lattices through each node. (B) Over a layer of hexagonally arranged spheres centered at γ0 (in black) one can put another hexagonal layer by starting from one of six locations, two of which are highlighted, γ1 and γ2. (C) If one arranges the hexagonal layers according to the sequence (…,γ1, γ0, γ2,…) one obtains the FCC lattice. Note that spheres in layer I are not aligned with those in layer III. (D) Arranging the hexagonal layers following the sequence (…,γ0, γ1, γ0,…) leads to the hexagonal close packing (HCP). Again, each sphere touches 12 other spheres. However, there is only one plane through each node for which the arrangement of the centers of the spheres is a regular hexagonal lattice. This packing has the same packing ratio as the FCC lattice, but is not a lattice. (E) FI for bump-function Ω on the FCC and HCP packings for various parameter combinations θ1 and θ2; θ1 modulates the decay and θ2 the support. The two packings have the same packing ratio and for this tuning curve also provide identical spatial resolution. FI: Fisher information.

One could achieve the same density by choosing γ1 for both the top layer and the layer below the basis layer. Yet as this arrangement, called hexagonal close packing (HCP), cannot be described by three vectors, it does not define a lattice (see Figure 5D), even though it is as tightly packed as the FCC lattice. Such packings, defined as an arrangement of equal non-overlapping balls (Conway and Sloane, 1992; Hales, 2012), generalize lattices.
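The layer-stacking construction can be checked numerically. The sketch below is an illustration under stated assumptions, not the authors' code: spheres have diameter 1, the in-plane basis vectors `A1` and `A2`, the coordinates chosen for the offsets γ0, γ1, γ2, and all function names are hypothetical. It counts the spheres touching the sphere at the origin and tests whether the set of touching neighbors is symmetric under point reflection, which every lattice must be.

```python
import math

A1 = (1.0, 0.0)                       # in-plane basis of a hexagonal layer
A2 = (0.5, math.sqrt(3.0) / 2.0)
GAMMA = [                             # the three possible layer offsets
    (0.0, 0.0),                       # gamma_0
    (0.5, math.sqrt(3.0) / 6.0),      # gamma_1
    (1.0, math.sqrt(3.0) / 3.0),      # gamma_2
]
LAYER_HEIGHT = math.sqrt(2.0 / 3.0)   # spacing at which adjacent layers touch

def touching_neighbors(sequence, span=3, tol=1e-9):
    """Centers at distance 1 from the sphere at the origin (layer 0).

    `sequence` is the cyclic word of offset indices; it must start with 0
    so that layer 0 uses gamma_0 and contains the origin.
    """
    found = []
    for k in (-1, 0, 1):              # more distant layers cannot touch
        gx, gy = GAMMA[sequence[k % len(sequence)]]
        for i in range(-span, span + 1):
            for j in range(-span, span + 1):
                x = gx + i * A1[0] + j * A2[0]
                y = gy + i * A1[1] + j * A2[1]
                z = k * LAYER_HEIGHT
                if abs(math.sqrt(x * x + y * y + z * z) - 1.0) < tol:
                    found.append((x, y, z))
    return found

def is_centrally_symmetric(points, tol=1e-9):
    """True if for every point q the reflected point -q is also present."""
    return all(
        any(math.dist(p, (-q[0], -q[1], -q[2])) < tol for p in points)
        for q in points
    )

fcc = touching_neighbors((0, 1, 2))       # cyclic word gamma_0, gamma_1, gamma_2
hcp = touching_neighbors((0, 1))          # cyclic word gamma_0, gamma_1
mixed = touching_neighbors((0, 1, 0, 2))  # one of the mixed stackings

print(len(fcc), is_centrally_symmetric(fcc))
print(len(hcp), is_centrally_symmetric(hcp))
print(len(mixed))
```

In this sketch both stackings give 12 touching neighbors, but only the FCC neighborhood is symmetric under point reflection, which is one way to see that the HCP arrangement is not a lattice.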

While one can define a grid module for any lattice, as we showed above, one cannot define a grid module in a meaningful way for an arbitrary packing, due to the lack of symmetry. But for any given packing P of space by balls of radius 1, one can define a ‘grid cell’ by generalizing the definition given for lattices (Equation 7). To this end, consider the Voronoi partition of space induced by the ball centers of P. For each location x there is a unique Voronoi cell Vp with node p. One defines the grid cell's tuning curve by assigning to x the firing rate Ω(r) for tuning shape Ω and distance r = ‖x − p‖. Depending on the specific packing, this tuning curve may or may not be periodic. Because a packing often has fewer symmetries than a lattice, the ‘grid cells’ in an arbitrary packing cannot generally be used to define a ‘grid module’. To explain why, consider an arbitrary packing and the unique Voronoi cell V0 that contains the point 0. Choose M uniformly distributed phases c1,…,cM within V0. Locations within V0 will then be uniformly covered by the shifted tuning curves Ωi. However, typically the different Voronoi cells will neither be congruent, nor have similar volumes. Thus, the Ωi will typically not cover each Voronoi cell with the same density and will therefore fail to define a proper grid module. This problem does not exist for lattices. Here, the equivalence classes cover each cell with the same density.
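The Voronoi-based definition of a ‘grid cell’ on a packing amounts to evaluating the tuning shape at the distance to the nearest ball center. A minimal sketch follows; the function names, the parameter defaults, and in particular the functional form of `bump` are assumptions made for illustration (a generic smooth bump with support [0, θ2)) and are not necessarily the tuning shape used in the paper.

```python
import math

def bump(r, theta1=0.25, theta2=0.4, f_max=1.0):
    """Hypothetical smooth bump: f_max at r = 0, zero for r >= theta2."""
    gap = theta2 ** 2 - r ** 2
    if r >= theta2 or gap <= 0.0:
        return 0.0
    return f_max * math.exp(theta1 / theta2 ** 2 - theta1 / gap)

def packing_tuning(x, centers, shape=bump):
    """Firing rate at location x for a 'grid cell' defined on a packing.

    The nearest center is the node of the Voronoi cell containing x, so
    the rate is the tuning shape evaluated at the distance to that node.
    """
    r = min(math.dist(x, p) for p in centers)
    return shape(r)

# Three mutually touching balls of radius 1 (centers 2 apart), as a toy packing.
centers = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (1.0, math.sqrt(3.0), 0.0)]

print(packing_tuning((0.0, 0.0, 0.0), centers))   # at a node
print(packing_tuning((0.2, 0.0, 0.0), centers))   # on the flank of a field
print(packing_tuning((1.0, 0.5, 0.0), centers))   # between fields
```

Shifting all centers by a phase vector would give the shifted tuning curves discussed above; whether these cover every Voronoi cell with equal density depends on the symmetry of the packing.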

Highly symmetric packings, on the other hand, do permit the definition of grid modules. For example, the hexagonal close packing (HCP) can be used to define a grid cell. The same symmetry argument as in Equations 9–11 then implies for the FI:

| (18) |

The maximal in-radius R for the HCP with grid size λ = 1 is equal to 1/2. As for lattices, we assume that supp(Ω) = [0, R] and BR(0) ⊂ V0. Then the integrand vanishes for distances larger than 1/2 from 0. Hence, we obtain:

| (19) |

Considering the same tuning shape Ω and number of phases M for an FCC lattice, which also has maximal in-radius 1/2, Equation 13 gives us the following expression for the FCC lattice:

| (20) |

Since both fundamental domains have the same volume and the integrands restricted to these balls are identical, we can conclude that grid modules with FCC- or HCP-like symmetries have the same FI. We also numerically calculated the trace of the average FI for HCP and FCC grid-cell modules and compared the two. For bump-like tuning curves Ω, both FIs are identical (Figure 5E), as expected from the radial symmetry of Ω. As a consequence, grid cells defined by either FCC or HCP symmetries provide optimal resolution.

Figure 5C,D shows that the cyclic sequences (γ1, γ0, γ2) and (γ0, γ1) lead to FCC and HCP, respectively. The centers γ0, γ1, and γ2 can also be used to make a final point on packings: there are infinitely many distinct packings with the same density. They can be constructed by inequivalent words, generated by finite walks through the triangle with letters γ0, γ1, and γ2 (Hales, 2012), with each letter representing one of three orientations for the layers. For instance, (γ0, γ1, γ0, γ2) describes another packing with the same density. All these packings share one feature: around each sphere there are exactly 12 spheres, arranged in either FCC or HCP fashion (Hales, 2012). These packings can also be used to define a grid module, because the density of phases will be uniform in all cells. Furthermore, as only local integration was necessary in the calculation of the FI for the FCC and HCP (Equations 18–20), such mixed packings will have the same uniform FI as the pure FCC or HCP packings.

Only in recent years has it been proven that no other arrangement has a higher packing ratio than the FCC lattice, a problem known as Kepler's conjecture (Hales, 2005, 2012). Based on these results and our comparison of FCC and HCP (Figure 5E), we predict that 3D grid cells will correspond to one of these packings. While in 3D there are non-lattice packings as dense as the densest lattice, this is not the case in 2D. Thue proved that the hexagonal lattice is unique in being the densest amongst all planar packings (Thue, 1910); grid cells in 2D should therefore possess a hexagonal lattice structure.

Discussion

Grid cells are active when an animal is near any one of multiple locations that correspond to the vertices of a planar hexagonal lattice (Hafting et al., 2005). We generalize the notion of a grid cell to arbitrary dimensions, such that a grid cell's stochastic activity is modulated in a spatially periodic manner within D-dimensional space. The periodicity is captured by the symmetry group of the underlying lattice. A grid module consists of multiple cells with equal spatial period but different spatial phases. Using information theory, we then asked which lattice offers the highest spatial resolution.

We find that the resolution of a grid module is related to the packing ratio of the underlying lattice: the lattice with the highest packing ratio corresponds to the grid module with the highest resolution. Well-known results from mathematics (Lagrange, 1773; Gauss, 1831; Conway and Sloane, 1992) then show that the hexagonal lattice is optimal for representing 2D, whereas the FCC lattice is optimal for 3D. In 3D, but not in 2D, there are also non-lattice packings with the same resolution as the densest lattice (Thue, 1910; Hales, 2012). A common feature of these highly symmetric optimal solutions in 3D is that each grid field is surrounded by 12 other grid fields, arranged in either FCC lattice or hexagonal close packing fashion. These solutions emerge from the set of all possible packings simply by maximizing the resolution, as we showed. However, resolution alone, as measured by the FI, does not distinguish between optimal packing solutions with different symmetries. Whether a realistic neuronal decoder, such as one based on population vector averages, favors one particular solution is an interesting open question.

As we have demonstrated, using the FI makes finding the optimal lattice analytically tractable for all dimensions D and singles out densest lattices as optimal tuning shapes under assumptions that are restrictive, but are consistent with experimental measurements (Hafting et al., 2005; Brun et al., 2008; Giocomo et al., 2011). The assumption that the tuning curves must have finite support within the fundamental domain of the lattice corresponds to grid cells being silent outside of the firing field. Indeed, our numerical simulations also showed that for broader tuning curves, grid modules with quadratic lattices can provide more FI than the hexagonal lattice (Figure 3A, θ2 ≈ 0.6 and θ1 = 1/4) and that grid cells with a cubic or BCC lattice can provide more FI than the FCC lattice (Figure 4B, θ2 > 0.65 and θ1 = 1/4). For the planar case, Guanella and Verschure (2007) show numerically that triangular tessellations yield lower reconstruction errors under maximum-likelihood decoding than equivalently scaled square grids. Complementing this numerical analysis, Wei et al. (2013) provide a mathematical argument that hexagonal grids are optimal. To do so, they define the spatial resolution of a single module representing 2D space as the ratio R = (λ/l)², where λ is the grid scale and l is the diameter of the circle in which one can determine the animal's location with certainty. For a fixed resolution R, the number of neurons required is N = d sin(φ) R in their analysis, where d is the number of tuning curves covering each point in space. As φ ∈ [π/3, π/2] for the lattice to have unitary length (Figure 3B), minimizing N for a fixed resolution R yields φ = π/3; thus, hexagonal lattices should be optimal. Furthermore, Wei et al. show that this result also holds when considering a Bayesian decoder (Wei et al., 2013). While Wei et al. minimize N for fixed l, we minimize l (in their notation). 
Like Wei et al., we assume that the tuning curve Ω is isotropic (notwithstanding the fact that the lattice has preferred directions); unlike these authors, we show that there are conditions under which the firing fields should be arranged in a square lattice, and not hexagonally.
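The two planar arguments can be compared side by side with a short calculation. This is a sketch under stated assumptions: the lattice is spanned by two unit-length vectors enclosing the angle φ ∈ [π/3, π/2], so the fundamental domain has area sin(φ) and touching discs have radius 1/2; the function names are illustrative only.

```python
import math

def planar_packing_ratio(phi):
    """Packing ratio of the planar lattice with unit basis vectors at angle phi."""
    disc_area = math.pi * 0.5 ** 2
    cell_area = math.sin(phi)
    return disc_area / cell_area

def relative_neuron_count(phi):
    """N / (d R) = sin(phi), from the relation N = d sin(phi) R."""
    return math.sin(phi)

hexagonal = math.pi / 3.0
square = math.pi / 2.0

# Gain in packing ratio of the hexagonal over the square lattice.
gain = planar_packing_ratio(hexagonal) / planar_packing_ratio(square) - 1.0
# Fraction of neurons saved by the hexagonal lattice at fixed resolution R.
saving = 1.0 - relative_neuron_count(hexagonal) / relative_neuron_count(square)

print(f"packing-ratio gain of hexagonal over square: {100 * gain:.1f}%")
print(f"neurons saved at fixed resolution: {100 * saving:.1f}%")
```

Both quantities are monotonic in sin(φ) on the admissible interval, so each criterion selects φ = π/3 when the tuning curves have sufficiently narrow support.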

Using the FI gives a theoretical bound for the local resolution of any unbiased estimator (Lehmann, 1998). In particular, this local resolution does not take into account the ambiguity introduced by the periodic nature of the lattice. Our analysis is restricted to resolving the animal's position within the fundamental domain. For large neuron numbers N and expected peak spike counts fmaxτ the resolution of asymptotically efficient decoders, like the maximum likelihood decoder, or the minimum mean square estimator, can indeed attain the resolution bound given by the FI (Seung and Sompolinsky, 1993; Bethge et al., 2002; Mathis et al., 2013). Thus, for these decoders and conditions the results hold. In contrast, for small neuron numbers and peak spike counts, the optimal codes could be different, just as it has been shown in the past that the optimal tuning width in these cases cannot be predicted by the FI (Bethge et al., 2002; Yaeli et al., 2010; Berens et al., 2011; Mathis et al., 2012).

Maximizing the resolution explains the observed hexagonal patterns of grid cells in 2D, and predicts an FCC lattice (or equivalent packing) for grid-cell tuning curves of mammals that can freely explore the 3D nature of their environment. Quantitatively, we demonstrated that these optimal populations provide 15.5% (2D) and about 41% (3D) more resolution than grid codes with quadratic or cubic grid cells for the same number of neurons. Although better, this might not seem substantial, at least not at the level of a single grid module. However, as medial entorhinal cortex harbors a nested grid code with at least 5 and potentially 10 or more modules (Stensola et al., 2012), this translates into a much larger overall gain in 2D and 3D, respectively (Mathis et al., 2012a, 2012b). Because aligned grid-cell lattices with perfectly periodic tuning curves imply that the posterior is periodic too (compare Equation 8), information from different scales would have to be combined to yield an unambiguous read-out. Whether the nested scales are indeed read out in this way in the brain remains to be seen (Mathis et al., 2012a, 2012b; Wei et al., 2013). An alternative hypothesis, as first suggested by Hafting et al., is that the slight, but apparently persistent irregularities in the firing fields across space (Hafting et al., 2005; Krupic et al., 2015; Stensola et al., 2015) are being used. Future experiments should tackle this key question.

We considered perfectly periodic structures (lattices) and asked which ones provide most resolution. However, the first recordings of grid cells already showed that the fields are not exactly hexagonally arranged and that different fields might have different peak firing rates (Hafting et al., 2005). More recently, deviations from hexagonal symmetry have gained considerable attention (Derdikman et al., 2009; Krupic et al., 2013, 2015; Stensola et al., 2015). Such ‘defects’ modulate the periodicity of the tuning and consequently affect the symmetry of the likelihood function. This might allow a decoder to distinguish different unit cells even given a single module, which is not possible for perfectly periodic tuning curves (compare Equation 8). The local resolution, on the other hand, is robust to small, incoherent variations as the FI is a statistical average over many tuning curves with different spatial phases. At a given location, Equation 9 becomes

where is the average of the variable tuning curves . Small variations in the peak rate and grid fields will therefore average out, unless these variations are coherent across grid cells. Thus, resolution bounded by the FI is robust with respect to minor differences in peak firing rates and hexagonality. Similar arguments hold in higher dimensions.

In this study, we focused on optimizing grid modules for an isotropic and homogeneous space, which means that the resolution should be equal everywhere and in each direction of space. From a mathematical point of view, this is the most general setting, but it is certainly not the only imaginable scenario; future studies should shed light on other geometries. Indeed, the topology of natural habitats, such as burrows or caves, can be highly complicated. Higher resolution might be required at spatial locations of behavioral relevance. Neural representations of 3D space may also be composed of multiple 1D and 2D patches (Jeffery et al., 2013). However, the mere fact that these habitats involve complicated low-dimensional geometries does not imply that an animal cannot acquire a general map for the environment. Poincaré already suggested that an isotropic and homogeneous representation for space can emerge out of non-Euclidean perceptual spaces, as one can move through physical space by learning the motion group (Poincaré, 1913). An isotropic and homogeneous representation of 3D space facilitates (mental) rotations in 3D and yields local coordinates that are independent of the environment's topology. On the other hand, the efficient-coding hypothesis (Barlow, 1959; Atick, 1992; Simoncelli and Olshausen, 2001) would argue that surface-bound animals might not need to dedicate their limited neuronal resources to acquiring a full representation of space, as flying animals might have to do, so that representations of 3D space will be species-specific (Las and Ulanovsky, 2014). Desert ants represent space only as a projection to flat space (Wohlgemuth et al., 2001; Grah et al., 2007). Likewise, experimental evidence suggests that rats do not encode 3D space in an isotropic manner (Hayman et al., 2011), but this might be a consequence of the specific anisotropic spatial navigation tasks these rats had to perform. 
Data from flying bats, on the other hand, demonstrate that, at least in this species, place cells represent 3D space in a uniform and nearly isotropic manner (Yartsev and Ulanovsky, 2013). The 3D, toroidal head-direction system in bats also suggests that they have access to the full motion group (Finkelstein et al., 2014). Our theoretical analysis assumes that the same is true for bat grid cells and that they have radially symmetric firing fields. From these assumptions, we showed that the grid cells' firing fields should be arranged on an FCC lattice or packed as HCP. Interestingly, such solutions also evolve dynamically in a self-organizing network model for 3D (Stella et al., 2013; Stella and Treves, 2015) that extends a previous 2D system which exhibits hexagonal grid patterns (Kropff and Treves, 2008). Experimentally, the effect of the arena's geometry on grid cells' tuning and anchoring has also been a question of great interest (Derdikman et al., 2009; Krupic et al., 2013, 2015; Stensola et al., 2015). First, let us note that even though the environment might be finite, the grid-cell representation need not be constrained by it. In particular, the firing fields are not required to be contained within the confines of the four walls of a box: experimental observations show that walls can intersect the firing fields (so that one measures only a part of the firing field). On the other hand, the borders clearly distort the hexagonal arrangement of nearby firing fields in 2D environments (Stensola et al., 2015), whereas central fields are more perfectly arranged. Deviations are also observed when only a few fields are present in the arena (Krupic et al., 2015). One might expect similar deviations in 3D, such as for bats flying in a confined space. Our mathematical results rely on symmetry arguments that do not cover non-periodic tuning curves. 
Given that the resolution is related to the packing ratio of a lattice, extensions of the theory to general packings might allow one to draw on the rich field of optimal finite packings (Böröczky, 2004; Toth et al., 2004), thereby providing new hypotheses to test.

Many spatially modulated cells in rat medial entorhinal cortex have hexagonal tuning curves, but some have firing fields that are spatially periodic bands (Krupic et al., 2012). The orientation of these bands tends to coincide with one of the lattice vectors of the grid cells (as the lattices for different grid cells share a common orientation), so band cells might be a layout ‘defect’. In this context, we should point out that the lattice solutions are not globally optimal. For instance, in 2D, a higher resolution can result from two systems of nested 1D grid codes, which are aligned to the x and y axes, respectively, than from a lattice solution with the same number of neurons. The 1D cells would behave like band cells (Krupic et al., 2012). Similar counterexamples can be given in higher dimensions too. The anisotropy of the spatial tuning in grid cells of climbing rats when encoding 3D space (Hayman et al., 2011) might capitalize on this gain (Jeffery et al., 2013). Radial symmetry of the tuning curve may also be non-optimal. For example, two sets of elliptically tuned 2D unimodal cells, with orthogonal short axes, typically outperform unimodal cells with radially symmetric tuning curves (Wilke and Eurich, 2002). Why experimentally observed place fields and other tuning curves seem to be isotropically tuned is an open question (O'Keefe and Dostrovsky, 1971; Yartsev and Ulanovsky, 2013).

Grid cells which represent the position of an animal (Hafting et al., 2005) have been discovered only recently. By comparison, in technical systems, it has been known since the 1950s that the optimal quantizers for 2D signals rely on hexagonal lattices (Gray and Neuhoff, 1998). In this context, we note that lattice codes are also ideally suited to cover spaces that involve sensory or cognitive variables other than location. In higher-dimensional feature spaces, the potential gain could be enormous. For instance, the optimal eight-dimensional (8D) lattice is about 16 times denser than the orthogonal 8D lattice (Conway and Sloane, 1992) and would, therefore, dramatically increase the resolution of the corresponding population code. Advances in experimental techniques, which allow one to simultaneously record from large numbers of neurons (Ahrens et al., 2013; Deisseroth and Schnitzer, 2013) and to automate stimulus delivery for dense parametric mapping (Brincat and Connor, 2004), now pave the way to search for such representations in cortex. For instance, by parameterizing 19 metric features of cartoon faces, such as hair length, iris size, or eye size, Freiwald et al. showed that face-selective cells are broadly tuned to multiple feature dimensions (Freiwald et al., 2009). Especially in higher cortical areas, such joint feature spaces should be the norm rather than the exception (Rigotti et al., 2013). While no evidence for lattice codes was found in the specific case of face-selective cells, data sets like this one will be the test-bed for checking the hypothesis that other nested grid-like neural representations exist in cortex.
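The factor of about 16 quoted for eight dimensions can be verified directly. The sketch below assumes that the optimal 8D lattice referred to is the E8 lattice, normalized to a unit-volume fundamental domain with minimal distance √2, and that the orthogonal lattice is the integer lattice with minimal distance 1; the function names are illustrative.

```python
import math

def unit_ball_volume(n):
    """Volume of the n-dimensional ball of radius 1."""
    return math.pi ** (n / 2.0) / math.gamma(n / 2.0 + 1.0)

def lattice_density(n, min_distance, cell_volume):
    """Packing ratio: balls of radius min_distance / 2, one per fundamental domain."""
    return unit_ball_volume(n) * (min_distance / 2.0) ** n / cell_volume

density_orthogonal = lattice_density(8, 1.0, 1.0)          # integer lattice Z^8
density_e8 = lattice_density(8, math.sqrt(2.0), 1.0)       # E8 (assumed normalization)

print(f"orthogonal 8D lattice: {density_orthogonal:.5f}")
print(f"E8 lattice:            {density_e8:.5f}")
print(f"ratio:                 {density_e8 / density_orthogonal:.2f}")
```

With equal cell volumes the ratio reduces to (√2)^8, so the density gain grows quickly with dimension even though the minimal distance changes only modestly.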

Materials and methods

We study population codes of neurons encoding the D-dimensional space by considering the FI J as a measure for their resolution. The population coding model, the construction to periodify a tuning shape Ω onto a lattice with center density ρ, as well as the definition of the FI, are given in the main text. In this section we give further background on the methods.

Scaling of grid cells and the effect on Jς

How is the resolution of a grid module affected by dilations? Let us assume we have a grid module with a given signature, as defined in the main text, and that λ > 0 is a scaling factor. Then the module dilated by λ is a grid module too, and the corresponding tuning curve satisfies:

| (21) |

Thus, the tuning curve of the dilated module is a scaled version of the original tuning curve. What is the relation between the FI of the initial grid module and the rescaled version? From the definition of the population information (Equation 9), we calculate

| (22) |

where in the second step we used the re-parameterization formula of the FI (Lehmann, 1998). This shows that the FI of a grid module scaled by a factor λ is the same as the FI of the initial grid module times 1/λ².
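The scaling statement can be sketched with the chain rule. The notation here is an assumption introduced for illustration: P(K | y) is the probability of the population response K at location y for the original module, the dilated module is taken to respond at x as the original one does at x/λ, and J^{(λ)} denotes its FI. This is a sketch consistent with the 1/λ² factor stated above, not a reproduction of the original equations.

```latex
\begin{align}
J^{(\lambda)}_{\alpha\beta}(x)
  &= \mathbb{E}\!\left[
       \frac{\partial \ln P(K \mid x/\lambda)}{\partial x_\alpha}\,
       \frac{\partial \ln P(K \mid x/\lambda)}{\partial x_\beta}
     \right] \\
  &= \frac{1}{\lambda^{2}}\,
     \mathbb{E}\!\left[
       \frac{\partial \ln P(K \mid y)}{\partial y_\alpha}\,
       \frac{\partial \ln P(K \mid y)}{\partial y_\beta}
     \right]_{y = x/\lambda}
   = \frac{1}{\lambda^{2}}\, J_{\alpha\beta}(x/\lambda)
\end{align}
```

Each derivative with respect to x contributes one factor 1/λ, which is where the quadratic dependence comes from: halving the grid scale quadruples the FI.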

Population FI for Poisson noise with radially symmetric tuning

In the ‘Results’ section, we give a concrete example for Poisson noise and the bump function. Here we give the necessary background. Equation 13 states that

One would like to evaluate this quantity for various tuning shapes Ω with supp(Ω) ⊆ [0, R].

Consider x ∈ L and α ∈ {1,…,D}. Then:

| (23) |

Together with the definition of the FI Equation 13, this yields

| (24) |

Note that for α ≠ β this function is odd in x. Thus, when averaging these individual contributions over a symmetric fundamental domain L, the off-diagonal entries vanish, that is, the averaged entry is zero for α ≠ β. Moreover, the diagonal entries are all identical. This also holds for any fundamental domain L when BR(0) ⊂ L, because BR(0) is symmetric.

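For Poisson spiking, the single-neuron FI has a particularly simple form: the squared derivative of the expected spike count divided by the expected spike count. The trace of the FI matrix becomes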

| (25) |

Thus, the trace only depends on the tuning shape Ω and its first derivative. In the main text, we use the following specific tuning shape:

| (26) |

This type of function is often called a ‘bump function’ in topology, as it has compact support but is smooth everywhere (i.e., infinitely often continuously differentiable). In particular, the support of this function is [0, θ2), and is therefore controlled by the parameter θ2. The other parameter θ1 controls the slope of the bump's flanks (see upper panels of Figure 3—figure supplement 1).

For the bump-function Ω and radius r the integrand for the FI is given by

| (27) |

The lower panels of Figure 3—figure supplement 1 depict the integrand of Equation 25. This function shows how much FI a cell at a particular distance contributes to the location 0. By integrating the FI over the fundamental domain L for a lattice one gets Jς(0), that is, the average FI contributions from all neurons (as shown in Figures 3, 4, 5E).
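The Poisson form of the single-neuron FI that underlies this integrand can be checked numerically. The sketch below is an illustration with hypothetical names: `mean_count` stands for the expected spike count at the current location and `d_mean_count` for its derivative with respect to one spatial coordinate. It sums the squared score function over spike counts and compares the result with the closed form.

```python
import math

def poisson_fisher_numeric(mean_count, d_mean_count, k_max=200):
    """E[(d/dx ln P(k | x))^2] for k ~ Poisson(mean_count(x)), summed over k."""
    total = 0.0
    log_p = -mean_count                       # ln P(k = 0)
    for k in range(k_max):
        if k > 0:
            log_p += math.log(mean_count) - math.log(k)    # ln P(k) from ln P(k - 1)
        score = d_mean_count * (k / mean_count - 1.0)      # d/dx ln P(k | x)
        total += math.exp(log_p) * score ** 2
    return total

def poisson_fisher_closed_form(mean_count, d_mean_count):
    """Squared derivative of the expected count divided by the expected count."""
    return d_mean_count ** 2 / mean_count

numeric = poisson_fisher_numeric(3.0, 0.7)
closed = poisson_fisher_closed_form(3.0, 0.7)
print(numeric, closed)
```

The agreement of the two values illustrates why, for Poisson noise, the FI contribution of a cell depends only on the tuning shape and its first derivative at the relevant distance.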

Acknowledgements

We thank Kenneth Blum and Mackenzie Amoroso for discussions. AM is grateful to Mackenzie Amoroso for graphics help and Ashkan Salamat for discussing the nomenclature in crystallography.

Funding Statement

The funders had no role in study design, data collection and interpretation, or the decision to submit the work for publication.

Funding Information

This paper was supported by the following grants:

Bundesministerium für Bildung und Forschung 01 GQ 1004A to Martin B Stemmler, Andreas VM Herz.

Deutsche Forschungsgemeinschaft MA 6176/1-1 to Alexander Mathis.

European Commission PIOF-GA-2013-622943 to Alexander Mathis.

Additional information

Competing interests

The authors declare that no competing interests exist.

Author contributions

AM, Conception and design, Analysis and interpretation of data, Drafting or revising the article.

MBS, Conception and design, Drafting or revising the article.

AVMH, Conception and design, Drafting or revising the article.

References

- Ahrens MB, Orger MB, Robson DN, Li JM, Keller PJ. Whole-brain functional imaging at cellular resolution using light-sheet microscopy. Nature Methods. 2013;10:413–420. doi: 10.1038/nmeth.2434.

- Atick JJ. Could information theory provide an ecological theory of sensory processing? Network Computation in Neural Systems. 1992;3:213–251. doi: 10.1088/0954-898X/3/2/009.

- Barlow W. Probable nature of the internal symmetry of crystals. Nature. 1883;29:205–207. doi: 10.1038/029205a0.

- Barlow HB. NPL Symposium on the Mechanization of Thought Process. No. 10. London: Her Majesty's Stationery Office; 1959. Sensory mechanisms, the reduction of redundancy, and intelligence; pp. 535–539.

- Berens P, Ecker AS, Gerwinn S, Tolias AS, Bethge M. Reassessing optimal neural population codes with neurometric functions. Proceedings of the National Academy of Sciences of USA. 2011;108:4423–4428. doi: 10.1073/pnas.1015904108.

- Bethge M, Rotermund D, Pawelzik K. Optimal short-term population coding: when Fisher information fails. Neural Computation. 2002;14:2317–2351. doi: 10.1162/08997660260293247.

- Böröczky K. Finite packing and covering. 2nd edition. Cambridge University Press; 2004.

- Brincat SL, Connor CE. Underlying principles of visual shape selectivity in posterior inferotemporal cortex. Nature Neuroscience. 2004;7:880–886. doi: 10.1038/nn1278.

- Brown WM, Bäcker A. Optimal neuronal tuning for finite stimulus spaces. Neural Computation. 2006;18:1511–1526. doi: 10.1162/neco.2006.18.7.1511.

- Brun VH, Solstad T, Kjelstrup KB, Fyhn M, Witter MP, Moser EI, Moser MB. Progressive increase in grid scale from dorsal to ventral medial entorhinal cortex. Hippocampus. 2008;18:1200–1212. doi: 10.1002/hipo.20504.

- Conway JH, Sloane NJA. Sphere packings, lattices and groups. 2nd edition. New York: Springer-Verlag; 1992.

- Deisseroth K, Schnitzer MJ. Engineering approaches to illuminating brain structure and dynamics. Neuron. 2013;80:568–577. doi: 10.1016/j.neuron.2013.10.032.

- Derdikman D, Whitlock JR, Tsao A, Fyhn M, Hafting T, Moser M-B, Moser EI. Fragmentation of grid cell maps in a multicompartment environment. Nature Neuroscience. 2009;12:1325–1332. doi: 10.1038/nn.2396.

- Eurich W, Wilke SD. Multidimensional Encoding Strategy of Spiking Neurons. Neural Computation. 2000;12:1519–1529. doi: 10.1162/089976600300015240.

- Fiete IR, Burak Y, Brookings T. What grid cells convey about rat location. Journal of Neuroscience. 2008;28:6858–6871. doi: 10.1523/JNEUROSCI.5684-07.2008.

- Finkelstein A, Derdikman D, Rubin A, Foerster JN, Las L, Ulanovsky N. Three-dimensional head-direction coding in the bat brain. Nature. 2014;517:159–164. doi: 10.1038/nature14031.

- Freiwald WA, Tsao DY, Livingstone MS. A face feature space in the macaque temporal lobe. Nature Neuroscience. 2009;12:1187–1196. doi: 10.1038/nn.2363.

- Fyhn M, Hafting T, Witter MP, Moser EI, Moser M-B. Grid cells in mice. Hippocampus. 2008;18:1230–1238. doi: 10.1002/hipo.20472.

- Gauss CF. Recension der ’Untersuchungen über die Eigenschaften der positiven ternären quadratischen Formen von Ludwig August Seeber‘. 1831 Göttingsche Gelehrte Anzeigen, July 9, pp. 1065; reprinted in J. Reine Angew. Math. 20 (1840)312–320.

- Giocomo LM, Hussaini SA, Zheng F, Kandel ER, Moser M-B, Moser EI. Grid cells use HCN1 channels for spatial scaling. Cell. 2011;147:1159–1170. doi: 10.1016/j.cell.2011.08.051.

- Grah G, Wehner R, Ronacher B. Desert ants do not acquire and use a three-dimensional global vector. Frontiers in Zoology. 2007;4:12. doi: 10.1186/1742-9994-4-12.

- Gray RM, Neuhoff DL. Quantization. IEEE Transactions on Information Theory. 1998;44:2325–2383. doi: 10.1109/18.720541.

- Gruber PM. Optimum quantization and its applications. Advances in Mathematics. 2004;186:456–497. doi: 10.1016/j.aim.2003.07.017.

- Guanella A, Verschure PF. Prediction of the position of an animal based on populations of grid and place cells: a comparative simulation study. Journal of Integrative Neuroscience. 2007;6:433–446. doi: 10.1142/S0219635207001556.

- Hafting T, Fyhn M, Molden S, Moser MB, Moser EI. Microstructure of a spatial map in the entorhinal cortex. Nature. 2005;436:801–806. doi: 10.1038/nature03721.

- Hales T. A proof of the Kepler conjecture. Annals of Mathematics. 2005;162:1065–1185. doi: 10.4007/annals.2005.162.1065.

- Hales T. Dense sphere packings: a blueprint for formal proofs. Cambridge: Cambridge University Press; 2012.

- Hayman R, Verriotis M, Jovalekic A, Fenton AA, Jeffery KJ. Anisotropic encoding of three-dimensional space by place cells and grid cells. Nature Neuroscience. 2011;14:1182–1188. doi: 10.1038/nn.2892.

- Jacobs J, Weidemann CT, Miller JF, Solway A, Burke JF, Wei X-X, Suthana N, Sperling MR, Sharan AD, Fried I, Kahana MJ. Direct recordings of grid-like neuronal activity in human spatial navigation. Nature Neuroscience. 2013;16:1188–1190. doi: 10.1038/nn.3466.

- Jeffery KJ, Jovalekic A, Verriotis M, Hayman R. Navigating in a three-dimensional world. The Behavioral and Brain Sciences. 2013;36:523–543. doi: 10.1017/S0140525X12002476.

- Kropff E, Treves A. The emergence of grid cells: intelligent design or just adaptation? Hippocampus. 2008;18:1256–1269. doi: 10.1002/hipo.20520. [DOI] [PubMed] [Google Scholar]

- Krupic J, Burgess N, O'Keefe J. Neural representations of location composed of spatially periodic bands. Science. 2012;337:853–857. doi: 10.1126/science.1222403. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Krupic J, Bauza M, Burton S, Barry C, O'Keefe J. Grid cell symmetry is shaped by environmental geometry. Nature. 2015;518:232–235. doi: 10.1038/nature14153.

- Krupic J, Bauza M, Burton S, Lever C, O'Keefe J. How environment geometry affects grid cell symmetry and what we can learn from it. Philosophical Transactions of the Royal Society of London B Biological Sciences. 2013;369:20130188. doi: 10.1098/rstb.2013.0188.

- Lagrange JL. Recherches d’arithmétique. Nouveaux Mémoires de l'Académie Royale des Sciences et Belles-Lettres de Berlin, Années. 1773;3:693–758. Reprinted in Oeuvres.

- Las L, Ulanovsky N. Hippocampal neurophysiology across species. In: Derdikman D, Knierim JJ, editors. Space, time and memory in the hippocampal formation. Springer Vienna; 2014. pp. 431–461.

- Lehmann EL. Theory of point estimation. 2nd edition. NY, USA: Springer-Verlag; 1998.

- Mathis A. The representation of space in mammals: resolution of stochastic place and grid codes. 2012 PhD thesis, Ludwig-Maximilians-Universität München.

- Mathis A, Herz AV, Stemmler MB. Optimal population codes for space: grid cells outperform place cells. Neural Computation. 2012a;24:2280–2317. doi: 10.1162/NECO_a_00319.

- Mathis A, Herz AV, Stemmler MB. Resolution of nested neuronal representations can be exponential in the number of neurons. Physical Review Letters. 2012b;109:018103. doi: 10.1103/PhysRevLett.109.018103.

- Mathis A, Herz AV, Stemmler MB. Multiscale codes in the nervous system: the problem of noise correlations and the ambiguity of periodic scales. Physical Review E, Statistical, Nonlinear, and Soft Matter Physics. 2013;88:022713. doi: 10.1103/PhysRevE.88.022713.

- Montemurro MA, Panzeri S. Optimal tuning widths in population coding of periodic variables. Neural Computation. 2006;18:1555–1576. doi: 10.1162/neco.2006.18.7.1555.

- Nelson DR. Defects and geometry in condensed matter physics. Cambridge University Press; 2002.

- O'Keefe J, Dostrovsky J. The hippocampus as a spatial map: preliminary evidence from unit activity in the freely-moving rat. Brain Research. 1971;34:171–175. doi: 10.1016/0006-8993(71)90358-1.

- Paradiso MA. A theory for the use of visual orientation information which exploits the columnar structure of striate cortex. Biological Cybernetics. 1988;58:35–49. doi: 10.1007/BF00363954.

- Poincaré H. The foundations of science: science and hypothesis, the value of science, science and method. Garrison, NY: The Science Press; 1913.

- Pouget A, Deneve S, Ducom JC, Latham PE. Narrow versus wide tuning curves: what's best for a population code? Neural Computation. 1999;11:85–90. doi: 10.1162/089976699300016818.

- Ray S, Naumann R, Burgalossi A, Tang Q, Schmidt H, Brecht M. Grid-layout and theta-modulation of layer 2 pyramidal neurons in medial entorhinal cortex. Science. 2014;13:987–994. doi: 10.1126/science.1243028.

- Rigotti M, Barak O, Warden MR, Wang X-J, Daw ND, Miller EK, Fusi S. The importance of mixed selectivity in complex cognitive tasks. Nature. 2013;497:585–590. doi: 10.1038/nature12160.

- Seung HS, Sompolinsky H. Simple models for reading neuronal population codes. Proceedings of the National Academy of Sciences of USA. 1993;90:10749–10753. doi: 10.1073/pnas.90.22.10749.

- Shannon CE. A mathematical theory of communication. The Bell System Technical Journal. 1948;XXVII:379–423.

- Simoncelli EP, Olshausen BA. Natural image statistics and neural representation. Annual Review of Neuroscience. 2001;24:1193–1216. doi: 10.1146/annurev.neuro.24.1.1193.

- Stella F, Si B, Kropff E, Treves A. Grid maps for spaceflight, anyone? They are for free! Behavioral and Brain Sciences. 2013;36:566–567. doi: 10.1017/S0140525X13000575.

- Stella F, Treves A. The self-organization of grid cells in 3D. eLife. 2015;4:e05913. doi: 10.7554/eLife.05913.

- Stensola H, Stensola T, Froland K, Moser M-B, Moser EI. The entorhinal grid map is discretized. Nature. 2012;492:72–78. doi: 10.1038/nature11649.

- Stensola T, Stensola H, Moser M-B, Moser EI. Shearing-induced asymmetry in entorhinal grid cells. Nature. 2015;518:207–212. doi: 10.1038/nature14151.

- Thue A. Über die dichteste Zusammenstellung von kongruenten Kreisen in einer Ebene. Norske Videnskabs-Selskabets Skrifter. 1910;1:1–9.

- Toth CD, O'Rourke J, Goodman JE. Handbook of discrete and computational geometry. 2nd edition. Discrete and combinatorial mathematics series. CRC Press; 2004.

- Wei X-X, Prentice J, Balasubramanian V. The sense of place: grid cells in the brain and the transcendental number e. 2013 arXiv:1304.0031v1:20.

- Whittaker EJ. Crystallography: an introduction for earth (and other solid state) students. 1st edition. Pergamon Press; 1981.

- Wohlgemuth S, Ronacher B, Wehner R. Ant odometry in the third dimension. Nature. 2001;411:795–798. doi: 10.1038/35081069.

- Yaeli S, Meir R. Error-based analysis of optimal tuning functions explains phenomena observed in sensory neurons. Frontiers in Computational Neuroscience. 2010;4:130. doi: 10.3389/fncom.2010.00130.

- Yartsev MM, Ulanovsky N. Representation of three-dimensional space in the hippocampus of flying bats. Science. 2013;340:367–372. doi: 10.1126/science.1235338.

- Yartsev MM, Witter MP, Ulanovsky N. Grid cells without theta oscillations in the entorhinal cortex of bats. Nature. 2011;479:103–107. doi: 10.1038/nature10583.

- Zhang K, Sejnowski TJ. Neuronal tuning: to sharpen or broaden? Neural Computation. 1999;11:75–84. doi: 10.1162/089976699300016809.