Abstract

Background

The CONSORT statement requires clinical trials to report confidence intervals, which help to assess the precision and clinical importance of the treatment effect. Conventional sample size calculations for clinical trials, however, only consider issues of statistical significance (that is, significance level and power).

Method

A more consistent approach is proposed whereby sample size planning also incorporates information on clinical significance as indicated by the boundaries of the confidence limits of the treatment effect.

Results

The probabilities of declaring a “definitive-positive” or “definitive-negative” result (as defined by Guyatt et al., CMAJ 152(2):169-173, 1995) are controlled by calculating the sample size such that the lower confidence limit under H1 and the upper confidence limit under H0 are bounded by relevant cut-offs. Adjustments to the traditional sample size can be directly derived for the comparison of two normally distributed means in a test of nonequality, while simulations are used to estimate the sample size for evaluating the hazards ratio in a proportional-hazards model.

Conclusions

This sample size planning approach allows for an assessment of the potential clinical importance and precision of the treatment effect in a clinical trial in addition to considerations of statistical power and type I error.

Keywords: clinical significance, confidence interval, sample size

Background

The importance of confidence intervals is clearly attested by journal guidelines [1-3] as they “convey information about magnitude and precision of effect simultaneously, and keep these two aspects of measurements closely linked” [4]. For clinical trials, the CONSORT statement [5] stipulates the reporting of the “estimated effect size and its precision (such as 95% confidence interval)” and “how sample size was determined,” but traditional sample size calculations for testing scientific hypotheses consider only statistical significance and power. The precision and clinical importance of the effect that can be depicted by confidence intervals is ignored. Under the usual practice, one calculates the sample size needed to declare some “clinically important difference” statistically significant at the α-level with 1 - β probability. The problem is that there is substantial subjectivity in quantifying this difference, and this can turn the sample size calculation into a moot exercise for choosing a difference to justify the number of patients the study can afford [6]. Frequently, the selected difference ends up larger than what is usual, and thus many studies may display large differences but lack the precision to make them statistically significant. Such shortcomings have led some to argue for reform of current sample size conventions in order to avoid misinterpretation of completed studies and harm to scientific research [7].

What would be helpful is a sample size estimation procedure that provides information on the confidence interval to supply users with information on the clinical significance and precision of the treatment effect in addition to power and statistical significance. Beal [8] suggested selecting sample size such that there is a high probability of the half-width of the confidence interval being less than some prescribed length, conditional on the interval containing the parameter of interest. Similarly, Liu [9] chose the sample size to yield a short confidence interval width but conditional on the rejection of the null hypothesis H0. Jiroutek et al. [10] combined the two by considering the probability of attaining a certain interval width conditional on both rejection of H0 and inclusion of the true parameter. Cesana et al. [11,12] introduced a two-step procedure by first obtaining the sample size according to power and then iteratively increasing the sample size until the probability of obtaining confidence intervals with widths less than the expected interval width under H1 exceeds a specified level.

In the above methods, the user either has to designate an interval length as reference or rely on the expected interval width, which may not be clinically relevant. A more straightforward alternative is to calculate a sample size such that the confidence limits of the parameter will be bounded by designated cut-offs. Specifically, the sample size is chosen such that according to the confidence limits the result can be deemed “definitive-positive” if there is indeed an effect or deemed “definitive-negative” if there is none. According to Guyatt et al. [13], a “definitive-positive” result implies that the lower confidence limit (LCL) of the parameter is not only larger than zero, implying a “positive” and statistically significant study, but above a relevant nonzero threshold. Conversely, a “definitive-negative” result implies that the upper confidence limit (UCL) is below some nonzero threshold. In hypothesis testing, one does not know whether H1 or H0 is true and can only control the probabilities of making a false positive or false negative error. Likewise, in this approach, we control the probabilities of declaring a “definitive-positive” or “definitive-negative” result by calculating the sample size such that LCL under H1 and UCL under H0 are bounded by fixed cut-offs. The following section demonstrates these concepts first for continuous normally distributed data and then for time-to-event data.

Methods

Normally distributed data

Consider a randomized 1:1 clinical trial comparing the mean responses between the treatment and control groups. When the response (or appropriately transformed response) can be regarded as normally distributed, the assessment of the treatment effect can be formulated as a hypothesis test of H0: μ1 - μ0 = 0 versus H1: μ1 - μ0 ≠ 0. The sample size is then given by

| 1 |

where Zγ is the γth quantile of the standard normal distribution, (μ0,σ0) and (μ1,σ1) are the means and standard deviations of the control and treatment groups, respectively, , and δ = μ1- μ0 is the clinically important difference to be detected at level α with power 1 - β.

We first examine how likely the above sample size will yield a “definitive-negative” or “definitive-positive” result by calculating, respectively, the probabilities Pr(UCL < k0δ | H0) and Pr(LCL > k1δ | H1) for k0, k1 ∈ [0,1]. Without loss of generality, assume δ > 0 and let be the sample estimate of the treatment difference. If σ is known, then

| 2 |

| 3 |

where Z is the standard normal variable. As k0, k1 vary from 0 to 1, these two probability functions are mirror images about 1/2, with Pr(LCL > δ /2 | H1) = Pr(UCL < δ /2 | H0). At the boundaries of 0 and 1, Pr(LCL > 0 | H1) = Pr(UCL < δ | H0) = 1 - β.

Based on the derivations of equations (2) and (3), it can be shown that if the sample size is increased to then Pr(UCL < k0δ | H0) = 1 - β for k0 ∈ (0,1) and if it is increased to n1 = n/(1 − k1)2 then Pr(LCL > k1δ | H1) = 1 - β for k1 ∈ (0,1). For example, with k0 = k1 = 1/2 and sample size n0 = n1 = 4n both Pr(LCL > δ /2 | H1) = Pr(UCL < δ /2 | H0) = 1 - β. Note that if k0 = k1 < 1/2 then n0 > n1 and a larger sample size is required to establish a “definitive-negative” compared to a “definitive-positive” result. Conversely, if k0 = k1 > 1/2, then n0 < n1, and a larger sample size is needed to establish a “definitive-positive” result. In general, if

| 4 |

then Pr(UCL < k0δ | H0) = Pr(LCL > k1δ | H1) = 1 - β. For example, if k0 = 2/3, k1 = 1/3 and n0 = n1 = 9n /4 then Pr(LCL > δ /3 | H1) = Pr(UCL < 2δ /3 | H0) = 1 - β.

Time-to-event data

We extend our proposed method to include time-to-event data, and use this case to show how a simulation-based approach can be used to estimate the sample size when the validity of normal approximation may be in doubt. In situations where a closed-form sample size formula is not readily available or difficult to derive, simulation provides an alternative and offers greater flexibility for adapting to more complicated analyses. Briefly, the initial sample size required to detect the clinically important difference δ at power 1 - β is first calculated and then iteratively increased until Pr(LCL > k1δ | H1) and Pr(UCL < k0δ | H0) reach desired levels. The hazard ratio Δ is chosen as the parameter of interest with its corresponding confidence limits LCL and UCL being estimated using Cox regression. In the following description, we select for simplicity and convenience a single common cut-off by letting k0 = k1 = 1/2.

Under the proportional hazards assumption, the initial total sample size N0 for detecting δ = logeΔ at level α and power 1 - β can be estimated using Schoenfeld’s [14] formula,

| 5 |

where πc is the overall censoring proportion, and P0 and P1 are the proportion of subjects in the treatment and control groups, respectively. (Another choice is to use Freedman’s [15] formula, which gives a slightly smaller sample size.)

Time-to-event data are simulated from the exponential distribution since it is most widely used to model time-to-event data under the proportional hazards assumption. Specifically, we simulate exponential survival times Ti and exponential censoring times Li for subjects i = 1, …, N0/2 in each group, and consider a subject censored whenever Ti < Li. According to Halabi and Bahadur [16], the parameters for the survival and censoring time distributions are given by

| 6 |

where λ0, λ1 are the hazard rates of the exponential survival times for the control and treatment groups, respectively, and λc is the hazard rate for the exponential censoring time. When πc = 0.5, equation (6) reduces to the simple relationship

| 7 |

We set λ0 = 1 and select four values, (1.25, 1.5, 1.75, 2.0), for the hazard ratio Δ ≡ λ1/λ0 = λ1. For each value of Δ, the procedure goes through the following steps:

With α = 0.05, β = 0.2, P0 = P1 = 0.5, πc = 0.5, and δ = loge(Δ), calculate the initial total sample size N0 using (5);

Simulate N0/2 independent samples of exponential survival and censoring times for the treatment and control groups with corresponding parameters λ0 = 1, λ1, and

Compare the survival times between the treatment and control groups using Cox regression and compute the 95% confidence interval for loge(Δ);

Repeat steps (2) and (3) for 10,000 iterations and estimate Pr(LCL > δ /2 | H1) using the proportion of iterations where LCL > δ /2;

Set Δ = 1 and repeat steps (2) and (3) 10,000 times to estimate Pr(UCL < δ /2 | H0) using the proportion of times when UCL < δ /2;

Replace N0 with a larger sample size and repeat steps (2) through (5) until the estimates for both Pr(LCL > δ /2 | H1) and Pr(UCL < δ /2 | H0) are greater than some desired level (for example, 0.8).

The above procedure was programmed using SAS 9.2, and a sample SAS program is provided in the Appendix as reference.

Results

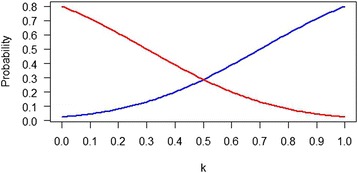

For comparing the means of normally distributed outcomes, Figure 1 shows that when α = 0.05 and power = 0.8, Pr(LCL > kδ | H1) decreases steadily from 0.8 to 0.025 while Pr(UCL < kδ | H0) increases steadily from 0.025 to 0.80 as k varies from 0 to 1. In fact, these two probability functions are mirror images about k = 1/2, where they both equal 0.288. This implies that a trial designed to detect a clinically important difference δ at the 5% significance level with 80% power will be “definitive-positive” about 29% of the time if one wants to say with 95% confidence that the treatment effect must be at least δ /2.

Figure 1.

Plot of Pr(LCL > kδ | H 1) (red curve) and Pr(UCL < kδ | H 0) (blue curve) for k ∈ [0,1], α = 0.05, β = 0.80 in a comparison of normally distributed mean responses with known σ between treatment and control groups for a 1:1 randomized clinical trial.

For time-to-event data, the initial total sample size (N0 = 1264) for detecting a hazard ratio Δ = 1.25 is almost 5/(1 - πc) or ten times larger than that (N0 = 132) for detecting Δ = 2.00 according to Schoenfeld’s [14] formula. At these initial sample sizes, the estimates of Pr(LCL > 0 | H1) ranged from 0.79 to 0.81 as expected, while Pr(UCL < δ | H0) ranged from 0.70 to 0.77, slightly less than 0.8. Similarly, estimates for Pr(LCL > δ /2 | H1) ranged from 0.27 to 0.29, close to what is expected for normally distributed data, while estimates of Pr(UCL < δ /2 | H0) are slightly lower than expected, ranging from 0.23 to 0.27. For a specific example, say Δ = 1.75, then N0 = 204 according to (5) and the estimates of α and β are 0.0485 and 0.2044, respectively. The β estimate implies that 79.6% of the samples have LCL > 0 under H1. But the mean LCL is 0.16, thus as shown in Table 1 only 27.7% of the samples have LCL > δ /2 = loge(1.75)/2 = 0.28. Correspondingly, 95.2% of the samples under H0 have confidence intervals that include zero, but since the mean UCL is 0.42 only 25.4% of the samples have UCL < 0.28.

Table 1.

Clinical significance and precision of the log-hazard ratio according to the initial and final sample sizes

| Δ | log e (Δ) | b λ c | N | Pr( LCL > δ /2 | H 1 ) | e CIW 1 | Pr( UCL < δ /2 | H 0 ) | d CIW 0 | |

|---|---|---|---|---|---|---|---|---|

| 1.25 | 0.22 | 1.12 | aInitial | 1264 | 0.2925 | 0.322 | 0.2651 | 0.314 |

| cFinal | 5402 | 0.8241 | 0.155 | 0.8016 | 0.151 | |||

| 1.50 | 0.41 | 1.22 | aInitial | 384 | 0.2759 | 0.602 | 0.2658 | 0.577 |

| cFinal | 1694 | 0.8349 | 0.285 | 0.8039 | 0.273 | |||

| 1.75 | 0.56 | 1.32 | aInitial | 204 | 0.2766 | 0.850 | 0.2536 | 0.804 |

| cFinal | 938 | 0.8496 | 0.392 | 0.8021 | 0.371 | |||

| 2.00 | 0.69 | 1.41 | aInitial | 132 | 0.2700 | 1.087 | 0.2344 | 1.018 |

| cFinal | 632 | 0.8503 | 0.487 | 0.8052 | 0.457 | |||

The ainitial N calculated using equation (5), Schoenfeld’s [14] formula, is the total sample size required to detect a hazard ratio Δ at the 5% level with 80% power, assuming equal subject allocation and a 0.5 overall censoring proportion. b λc is the hazard rate for the exponential censoring time given by equation (7), and δ. = loge(Δ). The cfinal N is the total sample size such that both Pr(LCL > δ /2 | H 1) and Pr(UCL < δ /2 | H 0) are at least 0.8 as estimated by the proportion of times LCL and UCL are bounded by δ /2 in 10,000 iterations. dCIW0 and eCIW1 are the mean width of the 95% confidence intervals under H 0 and H 1, respectively.

Table 1 suggests that sample sizes need to be larger by four to five times the initial sample size before estimates of both Pr(LCL > δ /2 | H1) and Pr(UCL < δ /2 | H0) are above 0.8. For example, with Δ = 1.75, the mean LCL for samples under H1 equals 0.38 when the sample size reaches 938 (4.6 times N0), and 85.0% of the samples then have LCL > δ /2 = 0.28. In addition, at this sample size, the mean UCL for samples under H0 equals 0.19, and 80.2% of the samples have UCL < 0.28. In terms of confidence interval width, the final sample sizes yield confidence interval widths that are between 0.4 to 0.5 times narrower than those at the initial sample sizes. For example, with Δ = 1.75 and a final sample size of 938, the mean confidence interval widths are 0.37 and 0.39 under H0 and H1, respectively, and 0.46 times narrower than the corresponding mean confidence interval widths at the initial sample size of 204.

Discussion

Many researchers realize that a traditional sample size calculation for testing H0: μ1 - μ0 = 0 versus H1: μ1 - μ0 ≠ 0 with α = 0.05 and 80% power to detect a clinically important difference δ implies that: 1) 95% of its 95% confidence intervals for μ1 - μ0 will include zero when H0 is true, and 2) 80% of the 95% confidence intervals will exclude zero when H1 (that is, μ1 - μ0 = δ) is true. However, a confidence interval with a LCL that is barely larger than zero may indicate a statistically significant treatment effect but be unconvincing to investigators who desire a “definitive-positive” result [13]. In contrast, a confidence interval that includes zero and demonstrates a “statistically nonsignificant” effect may be more convincing as a “definitive-negative” result when its UCL is small. Therefore, we propose that information on Pr(LCL > cut-off | H1) and Pr(UCL < cut-off | H0) be available to assist investigators in gauging the clinical significance of the treatment effect. For example, a plot similar to Figure 1 can be provided as a supplement to the usual sample size calculation or the investigator can directly estimate the sample size required such that LCL and UCL are bounded by relevant cut-offs with high probability. This offers a more consistent approach since the confidence interval becomes an important component in the design of clinical trials and not solely for analysis.

One question for this method concerns how a clinically relevant cut-off can be selected. Since δ, the clinically important difference, is already defined in the original sample size calculation, a convenient choice is to specify the cut-off with respect to δ. Given the uncertainty involved in quantifying δ and the tendency to inflate it [6], we set the cut-off equal to kδ for k ∈ (0,1). This bypasses the need to additionally specify a confidence interval reference width [8-10] or calculate an expected confidence interval width [11,12]. For example, δ /2 can be used as the cut-off since it gives equal consideration to the expected precision of symmetrical intervals under H0 and H1. However, it should be stressed that there is no requirement for intervals under H0 and H1 to be given equal emphasis or for the boundaries of LCL and UCL to be the same. A researcher may well choose different cut-offs corresponding to a “definitive-positive” and a “definitive-negative” result; for example, LCL > 3δ /4 and UCL < δ /4 or LCL > δ /3 and UCL < 2δ /3.

Previous considerations of sample size estimation by controlling statistical power and precision often involve complex calculations even for normally distributed or binary outcomes. The current proposal is pedagogically straightforward as it simply focuses on the position of the confidence limits in relation to clinically relevant boundaries. Greenland [17] designed a method that provides high power to discriminate between the parameter values under H0 and H1. A sample size was chosen such that the discriminatory power, min{ Pr(LCL > 0 | H1), Pr(UCL < δ | H0)}, equals a specified level. Our method also focuses on the probabilities of the lower and upper confidence limits being bounded, but the boundaries are different as Greenland was not thinking of clinically important effect sizes but the original parameter values under H0 and H1.

The condition LCL > k1δ corresponds to the alternative hypothesis for a superiority test of H0: μ1 - μ0 ≤ k1δ versus H1: μ1 - μ0 > k1δ. However, the sample size n1 to attain a “definitive-positive” result is different from the sample size for the superiority test since the former is two-sided while the latter is one-sided. For example, with α = 0.05, β = 0.2, σ2 = 2, δ = 1, and k1 = 1/2, equations (1) and (4) imply that n1 = 4×16 = 64, while the sample size for the superiority test, as given by

equals 50. More importantly, our method calculates not only the sample size involving LCL > k1δ but also that for UCL < k0δ.

Conclusions

In summary, our proposed method allows the researcher to calculate the sample size for a clinical trial not only according to the specifications of statistical significance (that is, α and β) but also in terms of clinical significance as judged by the boundaries of the confidence limits. For normally distributed data, simple formulae are available and their results serve as a reference for sample size planning when analyzing other types of data. For example, to ensure that LCL and UCL are both bounded by δ /2 the sample size needs to be increased 4-fold when comparing normally distributed means. Likewise, when evaluating the hazard ratio for time-to-event data, simulation results also suggest that sample sizes need to be 4 to 5 times larger. The results of our method indicate that sample size needs to be increased but our intention is not to mandate larger sample sizes per se. Such an effort may be futile since in practice cost constraints force clinical trials to aim for the smallest possible sample size What is important is that researchers be informed, for example by a graph similar to Figure 1, as to how their sample size will affect judgments of clinical significance using confidence intervals. In this respect, our proposal directs attention back to the importance of gauging effect sizes using confidence intervals, and is consistent with the predicted confidence intervals Goodman and Berlin [6] advocated to help investigators better understand the idea of statistical power when calculating sample size.

Acknowledgements

None. This research was not supported by any external funding resources.

Abbreviations

- CONSORT

Consolidated Standards of Reporting Trials

- LCL

lower confidence limit

- UCL

upper confidence limit

Appendix

Sample SAS program to estimate the total sample size for testing H0: Δ = 1 versus H1: Δ ≠ 1 such that Pr(LCL > δ /2 | H1) = Pr(UCL < δ /2 | H0) = 1 - β. Survival and censoring times are assumed to be exponentially distributed, and the overall censoring proportion equals 0.5. The initial sample size is estimated using Schoenfeld’s [14] formula for detecting δ = loge(Δ) with 80% power at the 5% significance level.

Footnotes

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

HSL conceived the study, performed the analyses, and drafted the manuscript. BJ participated in the analyses and drafted the manuscript. Both authors have read and approved the final manuscript.

Contributor Information

Bin Jia, Email: bjia33@its.jnj.com.

Henry S Lynn, Email: hslynn@shmu.edu.cn.

References

- 1.Simon R, Wittes RE. Methodologic guidelines for reports of clinical trials. Cancer Treat Rep. 1985;69:1–3. [PubMed] [Google Scholar]

- 2.Bailar JC, Mosteller F. Guidelines for statistical reporting in articles for medical journals. Ann Intern Med. 1988;108:266–73. doi: 10.7326/0003-4819-108-2-266. [DOI] [PubMed] [Google Scholar]

- 3.Lang T. Documenting research in scientific articles: guidelines for authors. 1. Reporting research designs and activities. Chest. 2006;130:1263–8. doi: 10.1378/chest.130.4.1263. [DOI] [PubMed] [Google Scholar]

- 4.Rothman K. Modern epidemiology. Boston: Little Brown; 1986. [Google Scholar]

- 5.Altman DG, Schulz KF, Moher D, Egger M, Davidoff F, Elbourne D, et al. The revised CONSORT statement for reporting randomized trials: explanation and elaboration. Ann Intern Med. 2001;134:663–94. doi: 10.7326/0003-4819-134-8-200104170-00012. [DOI] [PubMed] [Google Scholar]

- 6.Goodman S, Berlin J. The use of predicted confidence intervals when planning experiments and the misuse of power when interpreting results. Ann Intern Med. 1994;121:200–6. doi: 10.7326/0003-4819-121-3-199408010-00008. [DOI] [PubMed] [Google Scholar]

- 7.Bacchetti P. Current sample size conventions: flaws, harms, and alternatives. BMC Med. 2010;8 doi: 10.1186/1741-7015-8-17. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Beal SL. Sample size determination for confidence intervals on the population mean and on the difference between two population means. Biometrics. 1989;45:969–77. doi: 10.2307/2531696. [DOI] [PubMed] [Google Scholar]

- 9.Liu XS. Implications of statistical power for confidence intervals. Br J Math Stat Psychol. 2012;65:427–37. doi: 10.1111/j.2044-8317.2011.02035.x. [DOI] [PubMed] [Google Scholar]

- 10.Jiroutek MR, Muller KE, Kupper LL, Stewart PW. A new method for choosing sample size for confidence interval-based inferences. Biometrics. 2003;59:580–90. doi: 10.1111/1541-0420.00068. [DOI] [PubMed] [Google Scholar]

- 11.Cesana BM, Reina G, Marubini E. Sample size for testing a proportion in clinical trials: a ’two-step’ procedure combining power and confidence interval expected width. Am Stat. 2001;55:288–92. doi: 10.1198/000313001753272222. [DOI] [Google Scholar]

- 12.Cesana BM. Sample size for testing and estimating the difference between two paired and unpaired proportions: a ‘two-step’ procedure combining power and the probability of obtaining a precise estimate. Stat Med. 2004;23:2359–73. doi: 10.1002/sim.1827. [DOI] [PubMed] [Google Scholar]

- 13.Guyatt G, Jaeschke R, Heddle N, Cook D, Shannon H, Walter S. Basic statistics for clinicians: 2. Interpreting study results: confidence intervals. Can Med Assoc J. 1995;152:169–73. [PMC free article] [PubMed] [Google Scholar]

- 14.Schoenfeld DA. Sample-size formula for the proportional-hazards regression model. Biometrics. 1983;39:499–503. doi: 10.2307/2531021. [DOI] [PubMed] [Google Scholar]

- 15.Freedman LS. Tables of the number of patients required in clinical trials using the logrank test. Stat Med. 1982;1:121–9. doi: 10.1002/sim.4780010204. [DOI] [PubMed] [Google Scholar]

- 16.Halabi S, Bahadur S. Sample size determination for comparing several survival curves with unequal allocations. Stat Med. 2004;23:1793–815. doi: 10.1002/sim.1771. [DOI] [PubMed] [Google Scholar]

- 17.Greenland S. On sample-size and power calculations for studies using confidence intervals. Am J Epidemiol. 1988;128:231–7. doi: 10.1093/oxfordjournals.aje.a114945. [DOI] [PubMed] [Google Scholar]