Abstract

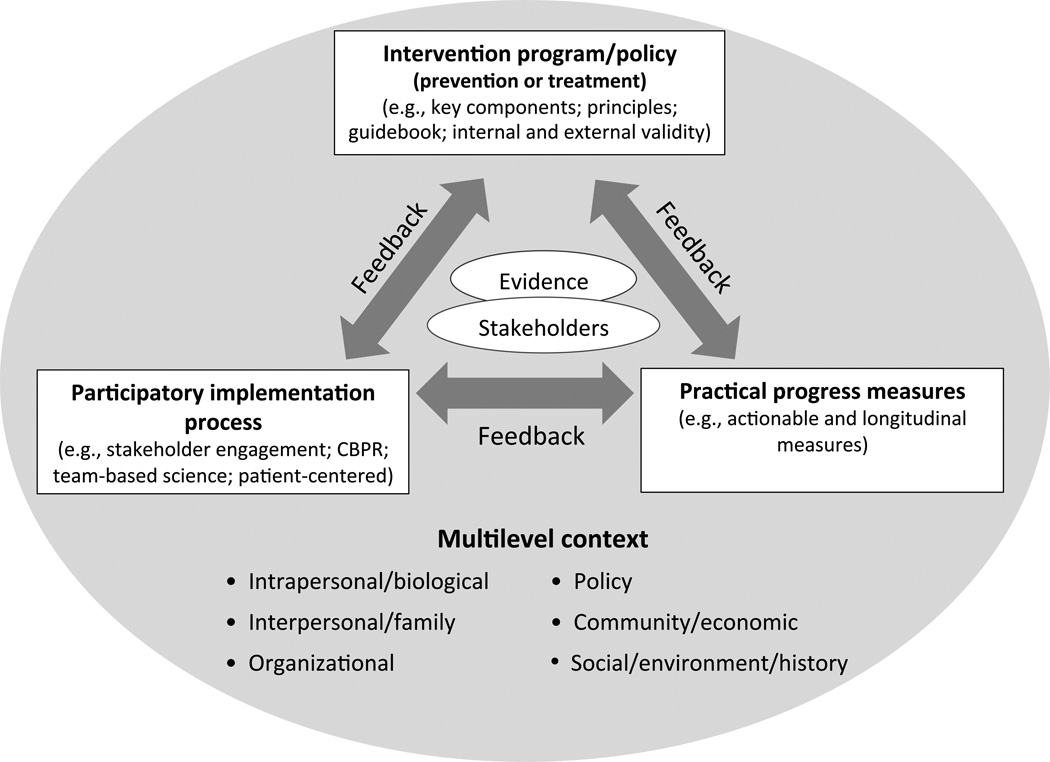

Over-reliance on decontextualized, standardized implementation of efficacy evidence has contributed to slow integration of evidence-based interventions into health policy and practice. This article describes an “evidence integration triangle” (EIT) to guide translation, implementation, prevention efforts, comparative effectiveness research, funding, and policymaking. The EIT emphasizes interactions among three related components needed for effective evidence implementation: (1) practical evidence-based interventions, (2) pragmatic, longitudinal measures of progress, and (3) participatory implementation processes. At the center of the EIT is active engagement of key stakeholders and scientific evidence, and attention to the context in which a program is implemented. The EIT model is a straightforward framework to guide practice, research and policy toward greater effectiveness, and is designed to be applicable across multiple levels—from individual-focused and patient-provider interventions, to health systems and policy-level change initiatives.

Introduction

Translation of research evidence to widespread application in practice has variously been conceptualized as a linear process—a “pipeline” or “roadmap” that unfortunately is slow, uncertain, and incomplete.1,2 The dominant conceptualizations of translation of science into practice begin with research products developed by investigators, and then go through various sequential steps to the eventual routine use by practitioners. This type of scientific evidence, however, developed in isolation from its projected users, often fits uncomfortably in the settings and populations where it is intended to be applied. The art of policy and practice involves reconciling the strength of published evidence with its relevance based on the experience of those who know, live and work with the problem that the evidence is designed to solve.3

The Roadmap for Medical Research by the NIH4 suggests a progression from T1 research (basic discovery), to T2 research (evaluation of efficacy). Recent contributions have expanded this to T3 research (evaluation of implementation in practice), and T4 research (assessing the impact on population health).5,6 T1 and T2 research, with their emphasis on bringing basic research to clinical trials, dominate biomedical funding but are not enough. The current complex health and healthcare challenges require complex, multilevel solutions tailored to the specific settings in which they are applied.7,8 The limited effect of research on population health argues for increasing the current low levels of investment in T3 and T4 investigation to enhance the success of prevention and implementation science. The research, policy and funding communities cannot keep relying on the same highly controlled efficacy research, pushed into the same unidirectional and leaky implementation pipeline, while expecting different outcomes.9,10

To increase the relevance, application and impact of scientific investigation, researchers, practitioners, community members and policymakers need a straightforward and systematic way to understand the pathway from research discovery to population health outcomes.11 Evidence, practice and policy must begin with the end goal in mind to foster adoption, implementation, adaptation, and sustainability.12,13 The traditional linear approach to research translation has been critiqued by many, including the authors, but few clear, feasible alternatives have been proposed.7,10,14,15

Several research translation models have been employed productively, but they are often found to be too complex, academic or time-consuming for clinicians, community members and health systems.16–18 This paper describes a three-pronged model called the Evidence Integration Triangle (EIT) (Figure 1) that captures essential dimensions of an effective interaction between research and its practice/policy translation. The EIT builds on, and attempts to distill, the critical elements of these important predecessor models. It is designed to be more intuitive and readily applied by stakeholders, including practitioners, policymakers, and citizens to foster high-impact knowledge implementation by research-practitioner-community partnerships.

Figure 1.

Evidence Integration Triangle (EIT) Model

CBPR, community-based participatory research

The purposes of this paper are to: (1) describe the EIT as a model to help optimize practice through research evidence and speed integration of science, policy and practice;19 (2) suggest practical actions and keys to success within and across the three domains; (3) provide examples of application of the EIT; and (4) discuss implications for researchers, practitioners, and policymakers.

The Framework

The EIT depicts in a simple framework the complex multilevel contextual factors affecting the integration of scientific knowledge into practical applications. As shown in Figure 1, bringing together evidence and relevant stakeholders is central. Interactions among the three main evidence-based components—intervention program/policy, implementation processes, and measures of progress—empower these stakeholders to use scientific evidence to maximize positive health impact and value and encourage development and sharing of new knowledge to inform future interactions.

As depicted at the bottom of Figure 1, context is pivotal to the EIT. The multilevel context (the conditions surrounding health problems and intervention opportunities in a particular place with a particular population) is a key starting point. Context also changes over time, giving a temporal and recursive aspect to the EIT, with context continually informing the other key components. The multilevel aspect of the EIT aligns it with the growing emphasis on ecologic models of organizational and community assessment, systems approaches,17,20–24 program planning and evaluation,16,17 and with reorientation of “the clinical effectiveness research paradigm” toward greater recognition of “innovation and practice-based approaches” to evidence.3 Keeping an eye on contextual factors allows evidence to be made and kept relevant. The other EIT model components are described below.

Intervention Program/Policy

Intervention programs and policies need pragmatic evidence relevant to the stakeholders who must implement them. This focus on external validity is challenging because the standards for rigor in most scientific evidence emphasize internal validity.14,18 Published recommendations from systematic reviews rely primarily on RCTs of efficacy (www.cochrane.org).25 These recommendations, however, have been slow to translate into practice and are perceived by many as lacking relevance to their setting or population.10,15 Consideration of external validity requires that research be more transparent about recruitment, context, settings, capacity, and representativeness. It also requires that the primary questions addressed for translation and implementation be of the realist variety,26,27 which take the form “which intervention factors are most effective, for which patient subgroups, when administered by what staff, under which conditions, for what outcomes.”

Public health and policy experts have advocated for expanded use of practice-based evidence and insisted that research designs should fit the question and context rather than vice versa.9,28,29 Both the IOM30 and Etheredge31 have stressed the need for “rapid learning evidence,” a medicalized portion of which is increasingly available from electronic health records. Such learning uses close to real-time data on hundreds of thousands of real-world patients experiencing interventions delivered by practicing clinicians in real-world delivery systems. Simulation modeling also has benefited from substantial advances in computing power and can be used to provide tests of concept and potential outcomes prior to investment in long, expensive trials.17,32

The types of evidence being recommended here involve marrying rigorous design focused on internal validity and theory-driven hypotheses with an increased focus on external validity, contextual considerations, and stakeholder relevance.9,33 Relevance is achieved by attending to the contexts in which interventions will be implemented.34 Context comprises multilevel factors, including the historical, political, economic, social, environmental and cultural settings in which a program is being implemented (Figure 1). Programs need to be practical and efficient so that they are capable of having a broad reach, especially to settings and people most in need or at highest risk. Whenever feasible, the ideal interventions are ones demonstrated to be generalizable across diverse settings and under diverse conditions of implementation, with minimal adaptation.35

Practical Measures for Monitoring Progress

Standardized, practical measures are needed to evaluate progress toward goals and objectives. At the national level, measuring progress toward the accomplishment of Healthy People 2020 objectives (www.healthypeople.gov) has focused efforts on programs and policies that make the greatest difference in the health of populations. Far less attention has been focused on identifying “best practical measures” that are feasible for practitioners, health systems and policymakers to assess progress on the outcomes they address. Some of the key implementation successes have come from very simple innovations such as surgical checklists and provider reminder systems, which focus attention on key implementation issues.36

Implementation success needs to be monitored and frequent feedback provided so that adjustments can be made if desired outcomes are not achieved in local implementation. Choosing the best metrics involves trade-offs to find the best balance among criteria such as those outlined in Table 1. These criteria combine traditional psychometric concerns of scientific rigor with practical considerations of relevance, feasibility, and in particular, being actionable in typical settings.

Table 1.

Recommended characteristics for practical measures and assessments

| Characteristic | Recommended Criteria |
|---|---|
| Reliable | Especially test–retest (less on internal consistency) |
| Valid | Construct validity, criterion validity, established norms |
| Sensitive to Change | Appropriate for longitudinal use, goal-attainment tracking, repeated administration |
| Feasible | Brief (generally three items or less); easy to score/interpret |
| Important to Clinicians | Indices for health conditions that are prevalent, costly, challenging |
| Public Health Relevance | To address without measures, in primary care domain, related to Healthy People 2020 goals |
| Actionable or feasibility of developing recommended clinical decision support | Realistic actions, reliable referral, immediate discussion, online resources, how easy or difficult would it be to develop a clinical response “toolkit” to act on the resulting data |
| User-friendly | Patient interpretability; face validity; meaningful to clinicians, public health officials, community members and policymakers |
| Broadly applicable | Available in relevant languages; validated in various cultures and contexts |
| Low-cost | Publicly available or very low cost to promote widespread use |
| Enhances Patient Engagement | Having this information is likely to further patient involvement in their care and decision-making |

To optimize these criteria, those who monitor implementation often face a choice between using off-the-shelf measures that have been validated but are not exactly right for a given application and developing new measures specifically for a given evaluation. Between these extremes is a middle ground that includes using the most-relevant items from previously validated measures alongside new purpose-developed measures. The aims of relevance, engagement, and ongoing learning can be met by complementing quantitative measures with qualitative assessment and analysis. Such mixed methods37 can be particularly helpful for assessing meaning from the perspective of participants, discovering new constructs, assessing unanticipated outcomes, and providing narrative meaning to numeric results.

Measures ideally should meet standards of reliability and validity, but they should also be practical, normative, sensitive to change, usable longitudinally, and available in relevant languages; they should have face validity for stakeholders and practitioners and impose only modest staff and patient/population burden. Such indices are critical because an intervention is seldom implemented in practice exactly as it was in research. Data-based adjustments are usually required. Relevant and timely information is necessary to create rapid learning healthcare systems.31,38

Partnership Implementation Process

Moving an intervention from one setting to another requires recognition that different practitioners and stakeholders hold more or less authority, varied opinions, and more or less inclination, capacity and resources to support its implementation. The most common perception practitioners hold of experimental evidence is that it was generated in a system with far more resources, and with people who were far more carefully selected, than in actual implementation settings.15 Guidelines for evidence-based practices that seem to deny or disparage professional or personal judgment, or to limit discretion in applying new methods to local situations, can arouse defensiveness or resentment. Evidence-based programs and practical measures alone are insufficient.

To succeed, interventions must be implemented with methods that engage the partners and multiple stakeholders, and that treat their varied perspectives with consideration and respect. The top-down “we are the experts” attitude has been a source of many failures. With a growing emphasis on participatory approaches,25 an increasing number of researchers and organizational leaders give lip service to egalitarian processes of evidence development, adaptation and implementation, but the participation they invite is often perfunctory and cosmetic. Much research continues to produce rather sterile, decontextualized answers to the question of “what” needs to be implemented, and little on “how” best to implement the evidence-based interventions and measures in relevant settings and populations. Needed are approaches that employ the principles of community-based participatory research39–41 and team science,42,43 that take stakeholder and local perspectives seriously and treat all collaborators as valued “experts” on their domains of interest.42

Various forms of evidence indicate that it is essential to involve stakeholders from the beginning and throughout all phases of project planning, implementation, management, and evaluation. For research to influence implementation, planners and decision makers must take these key issues into consideration in recommending evidence-based practices.44

Multilevel Context and Interactions Among Components of the Evidence Integration Triangle

Each of the individual components of the EIT (evidence-based intervention, practical longitudinal assessment, and a partnership implementation approach) is necessary, but not sufficient, for successful integration of research, practice and policy. The specific elements of the EIT require attention if research is to influence practice in ways that improve population health. This paying attention45 involves iterating between the big picture and the particulars of the multilevel context,8,24 working to assure that activities are coordinated to support each other, and are sensitive to and fit the implementation context. Applying the EIT, then, involves developing the three main components based on relevant evidence and interactions with key stakeholders, while periodically raising and lowering the gaze to pay attention to the multilevel context.46

Opportunities to Apply the Evidence Integration Triangle to Improve Prevention and Health Care

If national policymakers continue to require that state and local programs be evidence-based, even when such evidence does not exist, then the evidence to be considered must be expanded to take the implementation and partnership processes into account. Recommendations also must emphasize not just “best practices” from evidence-based reviews of controlled trials, but also “best processes” of assessing needs, joint decision-making, planning, management, and ongoing evaluation in partnership with stakeholders (Palmer VJ et al., in preparation). The EIT suggests a path to accomplish this through the iterative feedback process across the triangle components. Feedback from implementation/assessment to the evidence produces “practice-based evidence.”9

In community, regional or national implementation contexts, participatory research, both practice-based47 and community-based, is needed.48 This research strategy, which has a growing emphasis in primary health care,40 clinical trials research,49 and public health,50 has been stimulated by practice-based research networks and by research funding opportunities provided by the Agency for Healthcare Research and Quality (AHRQ), the CDC, the Kellogg Foundation, and the Robert Wood Johnson Foundation. In recent years, participatory research increasingly has been advocated in the name of transdisciplinary research and team science43 and as part of the NIH Roadmap in Clinical and Translational Science Awards.51

Rapid Learning Organizations

One important implication of the ongoing and iterative nature of the EIT is that it fosters the creation of rapid learning organizations.31,52,53 Keeping the EIT components and the larger context in view over time results in an ongoing cycle of knowledge generation, implementation and measurement.23,31 This iterative process can be entered at any point in the triangle. For example, the intervention and evaluation design considerations become modified by assessments of progress; learning about what works in the implementation process may require modifications of the intervention in collaboration with local stakeholders and those affected by the program, and the addition of new measures.54

In one ongoing investigation of the complexity of primary care practice and community settings, researchers found these settings to be dynamic adaptive systems with the capacity to “learn,”55 and then used that understanding to design tailored implementation processes resulting in sustained improvement.56 Ongoing measurement and evaluation involving both quantitative and qualitative assessment has fostered rapid cycle learning.55

For complex issues that have eluded solutions, such as the “wicked problems” (Palmer et al., in preparation) of obesity, violence, or health inequities, application of the EIT can foster a transdisciplinary approach43 in which people bring their diverse training and backgrounds to work together to make sense45 across usual boundaries46,57 and develop mutual understanding. Wicked problems “are ill-formulated, where the information is confusing, where there are many clients and decision makers with conflicting values, and where the ramifications in the whole system are thoroughly confusing.”58 The EIT can guide practical interventions that are sources of learning in real time. For example, the model could inform initiatives of the Centers for Medicare and Medicaid Services (CMS) Innovation Center, and the many natural experiments occurring as part of transformation efforts to establish patient-centered medical homes59,60 and accountable care organizations.61,62

Public Health and Policy Opportunities

After more than a decade of following the hierarchy of evidence-based medicine,63 systematic reviews of community preventive services and lifestyle interventions frequently found a relative paucity of evidence, and often an impossibility of conducting RCTs on populations, leading repeatedly to conclusions of “insufficient evidence.”64 The urgency of action needed in the face of epidemics in HIV/AIDS, H1N1 influenza virus, food-borne diseases, and obesity has forced a greater appreciation of the wide range of other types of evidence that can and must inform policy action.65

The successful tobacco control experience in reducing U.S. smoking prevalence illustrates what can be accomplished by paying attention to and working on the multiple components of the EIT. A focus on practical measures produced a renaissance in the priority given to surveillance data and analysis of population trends in systematic evaluation of the natural experiments of policy and broad program innovations. This, in turn, is having a transformative influence on public health thinking about evidence in general as a guide to public health practice. Other important advances will be driven by the dramatically increased availability of community-level data on health, health behaviors, and health determinants, as well as many other community attributes available via community health indicators and the ever-increasing GIS databases. When interpreted through “dashboards” and other applications that can clearly and compellingly display complex and interrelated data sets, these data have considerable potential to inform public health action, policies, and campaigns.

Research Applications

To provide the information needed to apply the EIT, research methods need to be more rapid, practical, transparent, and relevant to stakeholders. These suggestions are congruent with recent movements supported by AHRQ as practical trials,66 and by the CONSORT working group on pragmatic trials.67 These groups, along with the new Patient-Centered Outcomes Research Institute (www.pcori.org), emphasize research that uses practical designs to produce results relevant to real-world settings, that studies complex multimorbid patients in challenging settings, and that addresses issues such as implementation and generalizability of results.

The EIT implies that research designs and evaluations should be iterative and dynamic.24 Application of the EIT has potential to stimulate creative evaluation methods and designs that use practical measures of progress to provide rapid feedback to inform adjustments and mid-course corrections using partnership principles.68 The considerations raised by the EIT also provide opportunities for comparative effectiveness research across the prevention and disease-management spectrum,69,70 focusing on interactions that may explain substantial variance in why interventions differ in their effectiveness, as well as why the same intervention is successful in some settings and not in others.26

Example Application

One example of how the EIT can be applied is an effort to increase the frequency of evidence-based health behavior change counseling in primary care settings. This project, described in detail elsewhere,71 is an ongoing effort among the NIH and multiple professional and consumer organizations to facilitate the delivery of patient-centered approaches to health behavior and psychosocial issues.72 The EIT elements of this effort include: (1) engaging stakeholders including primary care organizations (e.g., American Association of Family Medicine, Society of General Internal Medicine, AHRQ) and consumer-focused groups (e.g., Center for the Advancement of Health and Consumers Union) throughout the process (stakeholders); (2) attending to the larger context, which includes the advent of the primary care medical home73–76 and the meaningful use of the EHR (context); (3) achieving consensus on a core set of standard, brief, patient-reported items on health behaviors and psychosocial issues that are both scientifically sound and actionable and feasible to implement longitudinally (practical process measures for monitoring progress); (4) creating decision aids to provide feedback to both patients and healthcare teams on issues for discussion and goal setting/action planning, and to connect with evidence-based health behavior change strategies recommended by the U.S. Preventive Services Task Force (evidence-based intervention program/policy);77 and (5) using an iterative process for identifying and field-testing recommended items, soliciting feedback from expert panels, numerous organizations and constituents via an interactive web-based wiki process, and pilot-testing in diverse primary care organizations that collaborated on study design (partnership implementation process).

The iterative nature of the EIT process is illustrated by the feedback provided from the common data elements (practical measures), which will inform adaptations at multiple levels. Although this project is still ongoing, it is apparent that the local context, including clinic patterns of patient flow and level of EHR integration, is critically important for implementation delivery.

Discussion

The EIT framework suggests several testable hypotheses that could inform implementation science. One key hypothesis is that programs that incorporate all three evidence-based components of: (1) an effective program collaboratively selected and adapted, (2) practical longitudinal measures for rapid feedback on progress, and (3) true partnership approaches to implementation that pay attention to contextual factors, should be superior to programs that focus on fewer components. A more subtle hypothesis is that programs that pay attention to EIT model features iteratively—that adapt initial interventions using feedback on progress, team science principles that involve transdisciplinary interactions,42 and shared decision-making among stakeholders—should perform better in the long term than those that focus predominantly on continued fidelity to an original set of intervention activities.

Because funding and research emphasis have focused predominantly on identifying evidence-based interventions, greater attention is needed to the other two components of the EIT: practical indicators of progress and the participatory implementation process.78 Research on the EIT could benefit from measurement of the extent to which the three areas of the EIT align with and support each other. This concept of “alignment” has also been discussed as a key to the success of the Chronic Care Model79 and multilevel intervention programs.24 We are not aware of such alignment measures, and at present the construct is probably best approached qualitatively.

Both the EIT and the parent field of implementation science18 could benefit from practical demonstrations and assessments of the multilevel concept of “partnership implementation approach.” To capture patient–practitioner interactions, conceptually related but lengthy measures of slightly different constructs have been developed at the individual/dyadic level for patient-centered health care.80 Also, the EIT can aid the operationalization of community-based participatory research principles.81,82 Finally, use of the EIT can inform evolving literature on the “team science” of how transdisciplinary groups from varying perspectives can best work together constructively.42

Conclusion

Many of the needs for prevention, health care, and population health solutions involve complex problems in complex community and healthcare environments, faced by complex patients, settings and cultures. These challenges demand complex interventions, which are unlikely to be immediately successful when initially applied.83 Application of the EIT, and approaching improvement efforts as complex adaptive systems,84 can help guide us toward solutions to these “wicked problems”58 (Palmer et al., in preparation).

Addressing the EIT components and interactions from the outset of research initiatives can maximize the yield of investment in science by guiding strategic decision-making about research areas to pursue and how evidence can inform health promotion and healthcare-quality research. Considerations raised by the EIT also can inform comparative effectiveness research, quality improvement interventions, evidence implementation, and policy decisions about resource allocation. In the current resource-challenged environment, society cannot afford to invest in knowledge generation that is uninformed by its evidence integration and application in context.

Acknowledgements

Dr. Stange’s time is supported by the Intergovernmental Personnel Act Mobility Program through the Division of Cancer Control and Population Sciences at the National Cancer Institute, by a Clinical Research Professorship from the American Cancer Society, and by a Clinical & Translational Science Award (CTSA) to Case Western Reserve University/Cleveland Clinic, CTSA Grant Number UL1 RR024989 from the National Center for Research Resources.

The content of this paper is solely the responsibility of the authors and does not necessarily represent the official view of the NCRR, the NCI, or the Task Force on Community Preventive Services.

Footnotes

No financial disclosures were reported by the authors of this paper.

References

- 1.IOM, Committee on Quality Health Care in America. Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academies Press; 2003. [Google Scholar]

- 2.McGlynn EA, Asch SM, Adams J, et al. The quality of health care delivered to adults in the U.S. N Eng J Med. 2003;348(26):2635–2645. doi: 10.1056/NEJMsa022615. [DOI] [PubMed] [Google Scholar]

- 3.Green LW. From research to “best practices” in other settings and populations. Am J Health Behav. 2001;25:165–178. doi: 10.5993/ajhb.25.3.2. [DOI] [PubMed] [Google Scholar]

- 4.NIH Common Fund. About the NIH Roadmap. commonfund.nih.gov/aboutroadmap.aspx.

- 5.Khoury MJ, Gwinn M, Joannidis JP. The emergence of translational epidemiology: from scientific discovery to population health impact. Am J Epidemiol. 2010;172(5):517–524. doi: 10.1093/aje/kwq211. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Westfall JM, Mold J, Fagnan L. Practice-based research--”Blue Highways” on the NIH roadmap. JAMA. 2007 Jan 24;297(4):403–406. doi: 10.1001/jama.297.4.403. [DOI] [PubMed] [Google Scholar]

- 7.Green LW. Public health asks of systems science: to advance our evidence-based practice, can you help us get more practice-based evidence? Am J Public Health. 2006 Mar;96(3):406–409. doi: 10.2105/AJPH.2005.066035. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Yano EM, Green LW, Glanz K, Ayanian JZ, Mittman BS. Implementation and spread of multi-level interventions in practice: Implications for the cancer care continuum. J Natl Cancer Inst. 2011 doi: 10.1093/jncimonographs/lgs004. In press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Green LW. Making research relevant: if it is an evidence-based practice, where's the practice-based evidence? Fam Pract. 2008 Dec;25(Suppl 1):i20–i24. doi: 10.1093/fampra/cmn055. [DOI] [PubMed] [Google Scholar]

- 10.Kessler R, Glasgow RE. A proposal to speed translation of healthcare research into practice: dramatic change is needed. Am J Prev Med. 2011 Jun;40(6):637–644. doi: 10.1016/j.amepre.2011.02.023. [DOI] [PubMed] [Google Scholar]

- 11.Tunis S. Implementing comparative effectiveness research: Priorities, methods, and impact. Washington, D.C.: Brookings Institute; 2009. Strategies to improve comparative effectiveness research methods and data infrastructure. In: Engelberg Center for Health Care Reform, Editor; pp. 35–54. [Google Scholar]

- 12.Bartholomew LK, Parcel GS, Kok G, Gottlieb NH. Intervention mapping: Designing theory and evidence-based health promotion programs. Mountain View, CA: Mayfield (now McGraw-Hill); 2001. [Google Scholar]

- 13.Klesges LM, Estabrooks PA, Glasgow RE, Dzewaltowski D. Beginning with the application in mind: Designing and planning health behavior change interventions to enhance dissemination. Ann Behav Med. 2005;(29 Suppl):66S–75S. doi: 10.1207/s15324796abm2902s_10. [DOI] [PubMed] [Google Scholar]

- 14.Green LW, Glasgow RE, Atkins D, Stange K. Making evidence from research more relevant, useful, and actionable in policy, program planning, and practice: slips “twixt cup and lip”. Am J Prev Med. 2009 Dec;37(6 Suppl 1):S187–S191. doi: 10.1016/j.amepre.2009.08.017. [DOI] [PubMed] [Google Scholar]

- 15.Rothwell PM. External validity of randomised controlled trials: To whom do the results of this trial apply? Lancet. 2005;365:82–93. doi: 10.1016/S0140-6736(04)17670-8. [DOI] [PubMed] [Google Scholar]

- 16.Green LW, Kreuter MW. Health program planning: An educational and ecological approach. 4th Ed. Mayfield Publishing Company; 2005. [Google Scholar]

- 17.Best A, Stokols D, Green LW, Leischow S, Holmes B, Buchholz K. An integrative framework for community partnering to translate theory into effective health promotion strategy. Am J Health Promot. 2003 Nov;18(2):168–176. doi: 10.4278/0890-1171-18.2.168. [DOI] [PubMed] [Google Scholar]

- 18.Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50. doi: 10.1186/1748-5908-4-50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Kottke TE, Solberg LI, Nelson AF, Belcher DW, Caplan W, Green LW, et al. Optimizing practice through research: A new perspective to solve an old problem. Ann Fam Med. 2008 Sep-Oct;6(5):459–462. doi: 10.1370/afm.862. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Scutchfield FD, Shapiro RM. Public health services and systems research: entering adolescence? Am J Prev Med. 2011 Jul;41(1):98–99. doi: 10.1016/j.amepre.2011.04.001. [DOI] [PubMed] [Google Scholar]

- 21.Merrill JA, Keeling JW, Willson RV, Chen TV. Growth of a scientific community of practice public health services and systems research. Am J Prev Med. 2011 Jul;41(1):100–104. doi: 10.1016/j.amepre.2011.03.014. [DOI] [PubMed] [Google Scholar]

- 22.Leischow SJ, Best A, Trochim WM, Clark PI, Gallagher RS, Marcus SE, et al. Systems thinking to improve the public's health. Am J Prev Med. 2008 Aug;35(2 Suppl):S196–S203. doi: 10.1016/j.amepre.2008.05.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Senge PM, Kleiner A, Roberts C, Ross R, Roth G, Smith B. The dance of change: The challenges of sustaining momentum in learning organizations. 1st Ed. New York: Currency/Doubleday; 1999. [Google Scholar]

- 24.Breslau E, Dietrich AJ, Glasgow RE, Stange KC. State-of-the-art and future directions in multilevel interventions across the Cancer Control Continuum. J Natl Cancer Inst. 2011 doi: 10.1093/jncimonographs/lgs006. In press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Mercer SL, Green LW. Federal funding and support for participatory research in public health and health care. In: Minkler M, Wallerstein N, editors. Community-based participatory research in health. 2nd ed. San Francisco: Jossey-Bass; 2008. pp. 399–406. [Google Scholar]

- 26.Pawson R, Greenhalgh T, Harvey G, Walshe K. Realist review: A new method of systematic review designed for complex policy interventions. J Health Serv Res Policy. 2005;10(S1):S21–S39. doi: 10.1258/1355819054308530. [DOI] [PubMed] [Google Scholar]

- 27.Zaza S, Briss PA, Harris KW. The Guide to Community Preventive Services. New York: Oxford University Press; 2005. Task Force on Community Preventive Services. [Google Scholar]

- 28.Brownson RC, Jones E. Bridging the gap: translating research into policy and practice. Prev Med. 2009 Oct;49(4):313–315. doi: 10.1016/j.ypmed.2009.06.008. [DOI] [PubMed] [Google Scholar]

- 29.Mercer SL, DeVinney BJ, Fine LJ, Green LW. Study designs for effectiveness and translation research: Identifying trade-offs. Am J Prev Med. 2007;33(2):139–154. doi: 10.1016/j.amepre.2007.04.005. [DOI] [PubMed] [Google Scholar]

- 30.IOM. A foundation for evidence-driven practice: A rapid learning system for cancer care. NAS Press; 2010. Workshop Summary. [PubMed] [Google Scholar]

- 31.Etheredge LM. A rapid-learning health system: What would a rapid-learning health system look like, and how might we get there? Health Affairs. 2007; Web Exclusive Collection: w107–w118. [DOI] [PubMed] [Google Scholar]

- 32.Green LW, Glasgow RE. Evaluating the relevance, generalization, and applicability of research: Issues in external validity and translation methodology. Evaluation and the Health Professions. 2006;29(1):126–153. doi: 10.1177/0163278705284445. [DOI] [PubMed] [Google Scholar]

- 33.Glasgow RE. What types of evidence are most needed to advance behavioral medicine? Ann Behav Med. 2008;35(1):19–25. doi: 10.1007/s12160-007-9008-5. [DOI] [PubMed] [Google Scholar]

- 34.Katz DL, Murimi M, Gonzalez A, Njike V, Green LW. From clinical trial to community adoption: The Multi-site Translational Community Trial (mTCT). Am J Public Health. 2011 doi: 10.2105/AJPH.2010.300104. In press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Gaglio B, Glasgow RE. Evaluation Approaches for Dissemination and Implementation Research. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and implementation research in health. New York: Oxford University Press; 2012. in press. [Google Scholar]

- 36.Gawande A. The checklist manifesto: How to get things right. New York: Metropolitan Books, Henry Holt & Company; 2009. [Google Scholar]

- 37.Office of Behavioral and Social Sciences Research, DHHS. Best Practices for Mixed Methods Research in the Health Sciences. obssr.od.nih.gov/scientific_areas/methodology/mixed_methods_research/index.aspx.

- 38.Tunis SR, Carino TV, Williams RD, Bach PB. Federal initiatives to support rapid learning about new technologies. Health Aff (Millwood) 2007 Mar;26(2):w140–w149. doi: 10.1377/hlthaff.26.2.w140. [DOI] [PubMed] [Google Scholar]

- 39.Macaulay AC, Commanda LE, Freeman WL, et al. Participatory research maximises community and lay involvement. North American Primary Care Research Group. Br Med J. 1999 Sep 18;319(7212):774–778. doi: 10.1136/bmj.319.7212.774. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Macaulay AC, Nutting PA. Moving the frontiers forward: incorporating community-based participatory research into practice-based research networks. Ann Fam Med. 2006 Jan;4(1):4–7. doi: 10.1370/afm.509. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Minkler M, Wallerstein N, editors. Community-based participatory research for health: From process to outcomes. 2nd Ed. San Francisco, CA: Jossey-Bass; 2008. [Google Scholar]

- 42.Stokols D, Hall KL, Taylor BK, Moser RP. The science of team science: overview of the field and introduction to the supplement. Am J Prev Med. 2008 Aug;35(2 Suppl):S77–S89. doi: 10.1016/j.amepre.2008.05.002. [DOI] [PubMed] [Google Scholar]

- 43.Stokols D, Misra S, Moser RP, Hall KL, Taylor BK. The ecology of team science: understanding contextual influences on transdisciplinary collaboration. Am J Prev Med. 2008 Aug;35(2 Suppl):S96–S115. doi: 10.1016/j.amepre.2008.05.003. [DOI] [PubMed] [Google Scholar]

- 44.Ottoson JM, Green LW. Reconciling concept and context: Theory of implementation. In: Ward WB, Simonds SK, Mullen PD, Becker M, editors. Advances in health education and promotion. 2nd Ed. Greenwich, CT: JAI Press; 1987. pp. 353–382. [Google Scholar]

- 45.Weick K. Sensemaking in organizations. Thousand Oaks, CA: Sage Publications; 1995. [Google Scholar]

- 46.Stange KC. Refocusing knowledge generation, application, and education: raising our gaze to promote health across boundaries. Am J Prev Med. 2011 Oct;41(4 Suppl 3):S164–S169. doi: 10.1016/j.amepre.2011.06.022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Thomas P, Griffiths F, Kai J, O'Dwyer A. Networks for research in primary health care. Br Med J. 2001 Mar 10;322(7286):588–590. doi: 10.1136/bmj.322.7286.588. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Westfall JM, VanVorst RF, Main DS, Herbert C. Community-based participatory research in practice-based research networks. Ann Fam Med. 2006;4(1):8–14. doi: 10.1370/afm.511. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.ENACCT. [Accessed 5/27/11];Communities as Partners in Cancer Clinical Trials: Changing Research, Practice and Policy. 2008 www.enacct.org/sites/default/files/CommunitiesAsPartners_Report_12_18_08_0pdf (Seattle: Community-Campus Partnerships for Health and Education Network to Advance Cancer Clinical Trials)

- 50.Green LW. The Prevention Research Centers as models of practice-based evidence: Two decades on. Am J Prev Med. 2007;33(1S):S6–S8. doi: 10.1016/j.amepre.2007.03.012. [DOI] [PubMed] [Google Scholar]

- 51.NIH. Clinical and Translational Science Awards. ncrr.nih.gov/clinical_research_resources/clinical_and_translational_science_awards.2011.

- 52.Stacey RD. Complexity and creativity in organizations. San Francisco: Berrett-Koehler Publishers; 1996. [Google Scholar]

- 53.Senge PM. The fifth discipline: The art and practice of the learning organization. New York: Currency Doubleday; 1994. [Google Scholar]

- 54.Cohen DJ, Crabtree BF, Etz RS, et al. Fidelity versus flexibility: translating evidence-based research into practice. Am J Prev Med. 2008 Nov;35(5 Suppl):S381–S389. doi: 10.1016/j.amepre.2008.08.005. [DOI] [PubMed] [Google Scholar]

- 55.Crabtree BF, Nutting PA, Miller WL, et al. Primary care practice transformation is hard work: Insights from a 15-year developmental program of research. Med Care. 2011;(Suppl):S28–S35. doi: 10.1097/MLR.0b013e3181cad65c. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Stange KC, Goodwin MR, Zyzanski SJ, Dietrich AJ. Sustainability of a practice-individualized preventive service delivery intervention. Am J Prev Med. 2003;25(4):296–300. doi: 10.1016/s0749-3797(03)00219-8. [DOI] [PubMed] [Google Scholar]

- 57.Stange KC. Raising our gaze to promote health across boundaries. Am J Prev Med. 2011 doi: 10.1016/j.amepre.2011.06.022. In press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Churchman CW. Wicked problems. Manage Sci. 1967;14(4):B141–B142. [Google Scholar]

- 59.Nutting PA, Crabtree BF, Miller WL, Stange KC, Stewart E, Jaen C. Transforming physician practices to patient-centered medical homes: lessons from the national demonstration project. Health Aff (Millwood) 2011 Mar;30(3):439–445. doi: 10.1377/hlthaff.2010.0159. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Reid RJ, Coleman K, Johnson EA, et al. The group health medical home at year two: cost savings, higher patient satisfaction, and less burnout for providers. Health Aff (Millwood) 2010 May;29(5):835–843. doi: 10.1377/hlthaff.2010.0158. [DOI] [PubMed] [Google Scholar]

- 61.McClellan M, McKethan AN, Lewis JL, Roski J, Fisher ES. A national strategy to put accountable care into practice. Health Aff (Millwood) 2010 May;29(5):982–990. doi: 10.1377/hlthaff.2010.0194. [DOI] [PubMed] [Google Scholar]

- 62.Fisher ES, McClellan MB, Bertko J, et al. Fostering accountable health care: moving forward in medicare. Health Aff (Millwood) 2009 Mar;28(2):w219–w231. doi: 10.1377/hlthaff.28.2.w219. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Harris RP, Helfand M, Woolf SH, et al. Current methods of the U.S. Preventive Services Task Force: a review of the process. Am J Prev Med. 2001 Apr;20(3 Suppl):21–35. doi: 10.1016/s0749-3797(01)00261-6. [DOI] [PubMed] [Google Scholar]

- 64.Kroke A, Boeing H, Rossnagel K, Willich SN. History of the concept of “levels of evidence” and their current status in relation to primary prevention through lifestyle interventions. Public Health Nutr. 2003;7:279–284. doi: 10.1079/PHN2003535. [DOI] [PubMed] [Google Scholar]

- 65.IOM. Bridging the evidence gap in obesity prevention: A framework to inform decision making. Washington, DC: The National Academies Press; 2010. [PubMed] [Google Scholar]

- 66.Tunis SR, Stryer DB, Clancy CM. Practical clinical trials: Increasing the value of clinical research for decision making in clinical and health policy. JAMA. 2003;290:1624–1632. doi: 10.1001/jama.290.12.1624. PMID 14506122. [DOI] [PubMed] [Google Scholar]

- 67.Zwarenstein M, Treweek S, Gagnier JJ, et al. Improving the reporting of pragmatic trials: an extension of the CONSORT statement. Br Med J. 2008 Nov 11;337:a2390. doi: 10.1136/bmj.a2390. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Glasgow RE, Eckstein ET, ElZarrad MK. Implementation science perspectives and opportunities for HIV/AIDS research: Integrating science, practice and policy. JAIDS. 2011 doi: 10.1097/QAI.0b013e3182920286. In press. [DOI] [PubMed] [Google Scholar]

- 69.Glasgow RE, Steiner JF. Comparative effectiveness research to accelerate translation: Recommendations for “CERT-T”. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and implementation research in health. 2012. In press. [Google Scholar]

- 70.Iglehart JK. Prioritizing comparative-effectiveness research--IOM recommendations. N Engl J Med. 2009 Jul 23;361(4):325–328. doi: 10.1056/NEJMp0904133. [DOI] [PubMed] [Google Scholar]

- 71.Glasgow RE, Kaplan R, Ockene J, Fisher EB, Emmons KM. Society of Behavioral Medicine Health Policy Committee. The need for practical patient-report measures of health behaviors and psychosocial issues in electronic health records. Health Aff. 2012. In press. [Google Scholar]

- 72.Fisher EB, Fitzgibbon ML, Glasgow RE, et al. Behavior matters. Am J Prev Med. 2011 May;40(5):e15–e30. doi: 10.1016/j.amepre.2010.12.031. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.American Academy of Family Physicians (AAFP), American Academy of Pediatrics (AAP), American College of Physicians (ACP), American Osteopathic Association (AOA). Joint principles of the patient-centered medical home. 2007 www.medicalhomeinfo.org/Joint%20Statementpdf.

- 74.Kilo CM, Wasson JH. Practice redesign and the patient-centered medical home: history, promises, and challenges. Health Aff (Millwood) 2010 May;29(5):773–778. doi: 10.1377/hlthaff.2010.0012. [DOI] [PubMed] [Google Scholar]

- 75.Landon BE, Gill JM, Antonelli RC, Rich EC. Prospects for rebuilding primary care using the patient-centered medical home. Health Aff (Millwood) 2010 May;29(5):827–834. doi: 10.1377/hlthaff.2010.0016. [DOI] [PubMed] [Google Scholar]

- 76.Stange KC, Miller WL, Nutting PA, Crabtree BF, Stewart EE, Jaen CR. Context for understanding the National Demonstration Project and the patient-centered medical home. Ann Fam Med. 2010;8(Suppl 1):S2–S8. doi: 10.1370/afm.1110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77.Whitlock EP, Orleans CT, Pender N, Allan J. Evaluating primary care behavioral counseling interventions: An evidence-based approach. Am J Prev Med. 2002;22:267–284. doi: 10.1016/s0749-3797(02)00415-4. [DOI] [PubMed] [Google Scholar]

- 78.Woolf SH. The meaning of translational research and why it matters. JAMA. 2008 Jan 9;299(2):211–213. doi: 10.1001/jama.2007.26. [DOI] [PubMed] [Google Scholar]

- 79.Glasgow RE, Orleans CT, Wagner EH, Curry SJ, Solberg LI. Does the chronic care model serve also as a template for improving prevention? Milbank Q. 2001;79(4):579–612. doi: 10.1111/1468-0009.00222. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 80.Epstein RM, Fiscella K, Lesser CS, Stange KC. Why the nation needs a policy push on patient-centered health care. Health Aff (Millwood) 2010 Aug;29(8):1489–1495. doi: 10.1377/hlthaff.2009.0888. [DOI] [PubMed] [Google Scholar]

- 81.Cargo M, Mercer SL. The value and challenges of participatory research: Strengthening its practice. Annu Rev Public Health. 2008;29:325–350. doi: 10.1146/annurev.publhealth.29.091307.083824. [DOI] [PubMed] [Google Scholar]

- 82.Minkler M, Blackwell AG, Thompson M, Tamir H. Community-based participatory research: Implications for public health funding. Am J Public Health. 2003 Aug;93(8):1210–1213. doi: 10.2105/ajph.93.8.1210. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Campbell NC, Murray E, Darbyshire J, et al. Designing and evaluating complex interventions to improve health care. Br Med J. 2007 Mar 3;334(7591):455–459. doi: 10.1136/bmj.39108.379965.BE. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Sturmberg JP, Martin CM. Complexity and health--yesterday's traditions, tomorrow's future. J Eval Clin Pract. 2009 Jun;15(3):543–548. doi: 10.1111/j.1365-2753.2009.01163.x. [DOI] [PubMed] [Google Scholar]