Abstract

BACKGROUND

The National Cancer Institute’s Surveillance, Epidemiology, and End Results (SEER) program collects and publishes population-based cancer incidence data from registries covering approximately 28% (seer.cancer.gov/registries/data.html) of the US population. SEER incidence rates are released annually in April from data submitted the prior November. The time needed to identify, consolidate, clean, and submit data requires the latest diagnosis year included to be 3 years before release. Approaches, opportunities, and cautions for an earlier release of data based on a February submission are described.

METHODS

First, cases submitted in February for the latest diagnosis year represented 92% to 98% of those in the following November submission. A reporting delay model was used to statistically adjust counts in recent diagnosis years for cases projected to be reported in future submissions. February submissions required larger adjustment factors than November submissions. Second, trends based on the 2 submissions were compared to assess the validity of the February-based estimates.

RESULTS

Most cancer sites had similar annual percent change (APC) trends for February and November 2013. Male colon and rectum cancer and female lung and bronchus cancer showed an acceleration in declining APC trends only in February. Average annual percent change (AAPC) trends for the 2 submissions were similar for all sites.

CONCLUSIONS

For the first time, preliminary 2012 incidence rates, based on February submissions, are provided. An accelerated decline starting in 2008 for male colon and rectum cancer rates and in 2009 for male lung cancer rates did not persist when 2012 data were added. An earlier release of SEER data is possible. Caution must be exercised when one is interpreting changing trends. Use of the more conservative AAPC is advised.

Keywords: annual percent change, average annual percent change, cancer incidence trends, delay adjustment, population-based registry data

INTRODUCTION

Users of population-based cancer registry data have asked whether the time between data collection and data release can be shortened. Surveillance, Epidemiology, and End Results (SEER) incidence data are generally made available to the public in April of each year, along with the Cancer Statistics Review, on the basis of data submitted the previous November.1 Online information in the SEER program's fact sheets is also based on the November submission.2 A November data submission takes place 22 months after the close of the most recent diagnosis year it includes. For example, the November 2014 SEER incidence data submission, to be released in April 2015, will include cases diagnosed through the 2012 calendar year (ie, released 28 months after the end of 2012). Because SEER is a dynamic database, each subsequent November submission adds previously unreported (delayed) cases and removes a few others (eg, through corrections). Because more cases are added than removed, observed rates are underestimated when they are first reported. Underreporting is largest in the most recent years, and this biases trends downward. Yet the most recent data points are the most important, because any small change may be an early sign of the impact of cancer control activities. To adjust for this, a statistical model has been developed to estimate reporting delay-adjusted rates,3 which are projections that more closely approximate the rates that will be observed after multiple submissions. Starting in 2003 for cases diagnosed in 2000, the National Cancer Institute adopted a reporting delay model by Midthune et al4 to obtain delay-adjusted estimates of cancer incidence rates.

Beginning in 2011, SEER registries were required to submit data not only in November but also in February of each year. The February submission provides a preliminary version of the data for the same diagnosis years as the November submission. Figure 1 illustrates the annual SEER data submission process over several years, as well as which diagnosis years are included in the data reported to the public. Instituting the February submission has allowed SEER to explore providing preliminary incidence estimates approximately 6 to 9 months earlier than is possible with November submissions. Because many cases are added between the February and November submissions, especially for the most recent years, adjusting for reporting delays is even more critical when data based on a February submission are reported. Delay-adjustment factors vary substantially by cancer site, with cancers diagnosed and treated outside hospitals (eg, melanoma) having larger reporting delays. This report provides the first preliminary estimates of SEER incidence data through diagnosis year 2012 and examines the approaches, opportunities, and potential pitfalls of early data release. We report incidence rates for all cancer sites combined, the 4 major cancer sites (lung and bronchus, female breast, prostate, and colon and rectum), and melanoma.

Figure 1.

Annual SEER data submission schedule. SEER indicates Surveillance, Epidemiology, and End Results.

MATERIALS AND METHODS

Data Source and Methodology for Assessing Trends

Data from the SEER 17 program registries were used in this analysis. Nine registries (San Francisco–Oakland, Connecticut, Detroit, Hawaii, Iowa, New Mexico, Seattle, Utah, and Atlanta) have contributed data since 1973. Four registries (San Jose–Monterey, Los Angeles, Alaska Natives, and rural Georgia) have contributed data since 1992. Greater California, Kentucky, Louisiana, and New Jersey joined SEER in 2000. Although some of the registries have cases diagnosed as early as 1973, all of the registries have cases from at least 2000. Beginning in 2011, the registries were asked to submit preliminary data files with individual cancer records each February in addition to the usual November submission of full data files. The most recent diagnosis year covered in these preliminary (February) and full (November) submission file sets was 2 years before the submission year (eg, the February and November 2014 submissions included cases diagnosed through 2012). For a given February submission and the respective prior November submission, the same US Census denominator file was used. For example, the February 2014 and November 2013 rates are based on the same census denominator files for the updated populations in the year of diagnosis.

The Joinpoint program, developed by the National Cancer Institute,5 implements a methodology that is widely used for reporting cancer trends. Joinpoint is used to assess cancer trend data in the Cancer Statistics Review1 as well as in the annual report to the nation on the status of cancer.6 Joinpoint fits a segmented (piecewise linear) model to the logarithm of incidence rates by diagnosis year; it uses permutation tests to determine the number of segments and a grid search to determine the locations of the joinpoints that define the segments.7 The trend between 2 adjacent joinpoints is described by the annual percent change (APC), a transformation of the slope b of the log-scale linear regression between those joinpoints: APC = 100 × (e^b − 1). The APC is interpreted as a constant rate of change over the interval. Because the last joinpoint segment varies in length across series, it is difficult to summarize recent trends across groups consistently. To compare incidence trends for different cancer sites or populations whose trends have different joinpoint locations over a prespecified period (eg, the previous 5 or 10 years), an average annual percent change (AAPC) is used. The AAPC, developed by Clegg et al,8 is a summary measure over a fixed, prespecified interval; it is a weighted geometric mean of the APCs of the segments within that interval, with weights equal to the length of each segment within the interval. Both APCs and AAPCs (including 5- and 10-year AAPCs) are currently reported in the Cancer Statistics Review. Although APCs can change as more diagnosis years accumulate, the AAPC is substantially more stable.
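As a rough illustration of how an APC is obtained from one log-linear segment, the Python sketch below fits ln(rate) = a + b × year by ordinary least squares and converts the slope with APC = 100 × (e^b − 1). It is a minimal sketch under stated assumptions, not the Joinpoint program: it fits a single segment, does not select joinpoints (Joinpoint uses permutation tests and a grid search for that), uses unweighted least squares, and computes no confidence intervals. The function name `fit_apc` and the example rates are hypothetical.

```python
import math

def fit_apc(years, rates):
    """Fit ln(rate) = a + b*year by ordinary least squares; return (APC, b).

    Single-segment sketch only (hypothetical helper): no joinpoint selection,
    constant variance assumed, no confidence interval.
    """
    if len(years) != len(rates) or len(years) < 2:
        raise ValueError("need at least 2 (year, rate) pairs of equal length")
    logs = [math.log(r) for r in rates]
    n = len(years)
    x_bar = sum(years) / n
    y_bar = sum(logs) / n
    sxx = sum((x - x_bar) ** 2 for x in years)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(years, logs))
    b = sxy / sxx                        # slope on the log scale
    apc = 100.0 * (math.exp(b) - 1.0)    # annual percent change
    return apc, b

# Hypothetical rates per 100,000 that fall by exactly 2% per year
years = list(range(2000, 2012))
rates = [100.0 * 0.98 ** (y - 2000) for y in years]
apc, slope = fit_apc(years, rates)
print(f"APC = {apc:.2f}% per year")
```

Because these illustrative rates decline by a constant 2% per year, the fitted APC recovers −2.00; with real registry data the log rates scatter around the line and the fit averages over that noise.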

Estimating the Difference Between February and November Submissions

Because the February submission is made 9 months before the regular November submission, the files submitted in February are less complete than those submitted in November. The difference between the 2 submissions can be measured as the number of cases submitted in February divided by the number submitted in November. Table 1 lists these percentages for 5 cancer sites and all sites combined for the 3 available February submission years, using the most recent diagnosis year in each (2009, 2010, and 2011 cases, respectively). For most sites, the February submission contains approximately 94% of the cases in the corresponding November submission. Completeness relative to the November submission exceeds 92% for each site in the 2011 submissions (2009 cases), 94% in the 2012 submissions (2010 cases), and 91% in the 2013 submissions (2011 cases). Notably, variation is larger across cancer sites (with prostate cancer, melanoma, and lung and bronchus cancer having the lowest completeness) than across submission years, which suggests that delay adjustment should be done separately by site.
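The comparison of site-to-site and year-to-year variation can be made explicit with the Table 1 percentages. The Python sketch below computes, for the 5 individual sites, the range across sites within each diagnosis year and the range across years within each site. The names are illustrative, and the simple max-minus-min range is our choice of spread measure, not one used in the article.

```python
# Table 1: February count / November count (%) for the most recent diagnosis
# year in each submission year. "All sites" is left out so that only the
# 5 individual sites are compared.
TABLE1 = {
    "Colon and rectum": (96.1, 96.0, 94.5),
    "Lung and bronchus": (93.5, 94.3, 91.6),
    "Melanoma of the skin": (92.9, 94.7, 92.8),
    "Female breast": (97.4, 97.4, 96.4),
    "Prostate": (93.2, 94.2, 92.5),
}
YEARS = (2009, 2010, 2011)

def spread(values):
    """Max-minus-min range of a sequence of percentages."""
    values = list(values)
    return max(values) - min(values)

def mean(values):
    values = list(values)
    return sum(values) / len(values)

# Range across sites within each diagnosis year (one column of Table 1)
across_sites = {
    year: spread(pcts[i] for pcts in TABLE1.values())
    for i, year in enumerate(YEARS)
}
# Range across diagnosis years within each site (one row of Table 1)
across_years = {site: spread(pcts) for site, pcts in TABLE1.items()}

print({y: round(r, 1) for y, r in across_sites.items()})
print({s: round(r, 1) for s, r in across_years.items()})
```

On these figures every across-site range (3.2 to 4.8 percentage points) exceeds every across-year range (1.0 to 2.7 points), which is the pattern that motivates site-specific delay adjustment.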

TABLE 1.

Cases Submitted in February as a Percentage of Cases Submitted in November to SEER 17 for All Races and Both Sexes

| Cancer Site | 2009 Cases (a) | 2010 Cases (b) | 2011 Cases (c) |
|---|---|---|---|
| All sites | 94.6% | 95.0% | 93.4% |
| Colon and rectum | 96.1% | 96.0% | 94.5% |
| Lung and bronchus | 93.5% | 94.3% | 91.6% |
| Melanoma of the skin | 92.9% | 94.7% | 92.8% |
| Female breast | 97.4% | 97.4% | 96.4% |
| Prostate | 93.2% | 94.2% | 92.5% |

Abbreviation: SEER, Surveillance, Epidemiology, and End Results.

(a) February 2011 submission count / November 2011 submission count = percentage complete in February with respect to November.

(b) February 2012 submission count / November 2012 submission count = percentage complete in February with respect to November.

(c) February 2013 submission count / November 2013 submission count = percentage complete in February with respect to November.

February Submission Reporting Delay Model

After the end of a calendar year, SEER registries are given a 22-month window within which newly diagnosed cases are required to be submitted to the SEER program. As stated earlier, additional cases may be found and reported after the 22-month period, and other cases may be reclassified because of corrections, including recoding of race and reclassification of a primary cancer as a metastasis of an earlier cancer. Other cases may be removed if the diagnosis is later found not to be cancer. The correction and reclassification process often leads to a loss of a case in one group (eg, whites) and a gain in another (eg, blacks). The discrepancy between when a case is diagnosed and when it is first reported to the National Cancer Institute (or when it is moved into a new category after being reclassified) leads to underreporting, with the largest effect in the most recent diagnosis years.9 Without any adjustment for reporting delay, recent trends will be biased downward.

Previously, cases added and cases removed in subsequent submissions were modeled separately and then combined to obtain an overall reporting delay factor. For the February submission data, we modified the delay model to consider only the net count: for each submission, the model considers the net difference between the number of cases first reported and the total number reported in later submissions. This model performs similarly to the original model of Midthune et al.4

Each model produces a delay factor. This factor can be applied to observed rates to account for the discrepancy between the initial observed rates and the rates that will be observed after many submissions. For example, if the delay-adjustment factor is 1.02, the eventual rate is estimated by multiplying the observed rate by 1.02; equivalently, the observed rate represents approximately 1/1.02 (or 98%) of the eventual rate. Table 2 reports this model-based completeness for both the February and November submissions. For example, according to the delay model, cases for all sites combined diagnosed in 2011, which were first reported in February 2013, represent approximately 91.7% of the eventual case count. As the time since diagnosis increases, the reported counts approach the eventual counts: cases submitted in February 2013 for diagnosis years 2010, 2009, 2008, and 2007 are 97.6%, 98.7%, 99.2%, and 99.6% complete, respectively. The 99.6% completeness for 2007 cases is very close to 100%, so little adjustment is needed for earlier diagnosis years, for which additional data have been collected over more submissions. Adjustment is therefore most important for the most recent diagnosis years.
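A minimal sketch of this arithmetic, assuming that a single all-ages factor of 100/c (where c is the modeled completeness in percent) can be applied directly to an age-adjusted rate. The published adjustment may be applied within finer strata, so this is only an approximation, and the helper names are hypothetical. It combines the all-sites completeness for diagnosis year 2011 from Table 2 with the observed 2011 rates from Table 3.

```python
def delay_factor(completeness_pct):
    """Delay-adjustment factor implied by a modeled completeness percentage.

    A completeness of c% means the observed count is c/100 of the eventual
    count, so the factor is 100/c (eg, 98% complete -> factor of about 1.02).
    """
    if not 0 < completeness_pct <= 100:
        raise ValueError("completeness must be in (0, 100]")
    return 100.0 / completeness_pct

def delay_adjusted_rate(observed_rate, completeness_pct):
    """Scale an observed rate by the delay factor (single-factor approximation)."""
    return observed_rate * delay_factor(completeness_pct)

# All sites, diagnosis year 2011: observed rates per 100,000 (Table 3)
# and modeled completeness (Table 2)
feb = delay_adjusted_rate(413.2, 91.7)   # February 2013 submission
nov = delay_adjusted_rate(442.9, 96.6)   # November 2013 submission
print(f"February 2013: {feb:.1f}   November 2013: {nov:.1f}")
```

The results fall within about 0.1 per 100,000 of the published delay-adjusted rates (450.5 and 458.6); the small residual gap likely reflects rounding of the completeness percentages and the single-factor simplification.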

TABLE 2.

Modeled Completeness Percentages (Submitted Counts With Respect to Eventual Counts) for SEER 17 for All Races and Both Sexes

| Site | Year of Diagnosis | February 2013 Submission | November 2013 Submission | February 2014 Submission |
|---|---|---|---|---|
| All sites | 2007 | 99.6% | 99.5% | 99.7% |
| | 2008 | 99.2% | 99.2% | 99.5% |
| | 2009 | 98.7% | 98.9% | 99.2% |
| | 2010 | 97.6% | 98.3% | 98.6% |
| | 2011 | 91.7% | 96.6% | 97.7% |
| | 2012 | | | 91.5% |
| Colon and rectum | 2007 | 99.8% | 99.8% | 99.9% |
| | 2008 | 99.6% | 99.6% | 99.8% |
| | 2009 | 99.1% | 99.4% | 99.6% |
| | 2010 | 98.3% | 99.0% | 99.2% |
| | 2011 | 94.0% | 97.7% | 98.5% |
| | 2012 | | | 93.7% |
| Lung and bronchus | 2007 | 99.9% | 99.9% | 99.9% |
| | 2008 | 99.7% | 99.8% | 99.9% |
| | 2009 | 99.1% | 99.6% | 99.7% |
| | 2010 | 97.6% | 99.1% | 99.1% |
| | 2011 | 90.9% | 97.1% | 97.8% |
| | 2012 | | | 90.5% |
| Melanoma | 2007 | 99.7% | 99.8% | 99.8% |
| | 2008 | 99.5% | 99.7% | 99.7% |
| | 2009 | 99.1% | 99.4% | 99.5% |
| | 2010 | 98.2% | 98.9% | 99.1% |
| | 2011 | 91.3% | 97.4% | 98.3% |
| | 2012 | | | 91.3% |
| Female breast | 2007 | 99.7% | 99.7% | 99.8% |
| | 2008 | 99.6% | 99.7% | 99.7% |
| | 2009 | 99.3% | 99.5% | 99.5% |
| | 2010 | 99.0% | 99.3% | 99.3% |
| | 2011 | 96.0% | 98.6% | 99.0% |
| | 2012 | | | 95.8% |
| Prostate | 2007 | 99.2% | 99.3% | 99.4% |
| | 2008 | 98.7% | 99.0% | 99.1% |
| | 2009 | 98.0% | 98.5% | 97.9% |
| | 2010 | 96.9% | 97.8% | 96.8% |
| | 2011 | 89.5% | 95.9% | 96.8% |
| | 2012 | | | 89.3% |

Abbreviation: SEER, Surveillance, Epidemiology, and End Results.

As Table 2 shows, completeness varies somewhat across cancer sites, from 89.3% (prostate cancer) to 95.8% (breast cancer) for cases diagnosed in 2012 in the February 2014 submission. Furthermore, the model-based completeness for the most recent diagnosis year improves considerably (by approximately 3 to 6 percentage points) between the early (February 2013) and full (November 2013) submissions.
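The size of this improvement can be tallied directly from Table 2. The short Python sketch below (the dictionary name is ours) subtracts the February 2013 completeness for 2011 cases from the November 2013 completeness for the same cases.

```python
# Table 2, diagnosis year 2011: modeled completeness (%) in the
# (February 2013, November 2013) submissions.
COMPLETENESS_2011 = {
    "All sites": (91.7, 96.6),
    "Colon and rectum": (94.0, 97.7),
    "Lung and bronchus": (90.9, 97.1),
    "Melanoma": (91.3, 97.4),
    "Female breast": (96.0, 98.6),
    "Prostate": (89.5, 95.9),
}

# Percentage-point gain from the February to the November submission
gain = {site: nov - feb for site, (feb, nov) in COMPLETENESS_2011.items()}
for site, points in sorted(gain.items(), key=lambda kv: kv[1]):
    print(f"{site:<18} +{points:.1f} percentage points")
```

The gains run from about 2.6 percentage points for female breast cancer to 6.4 for prostate cancer.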

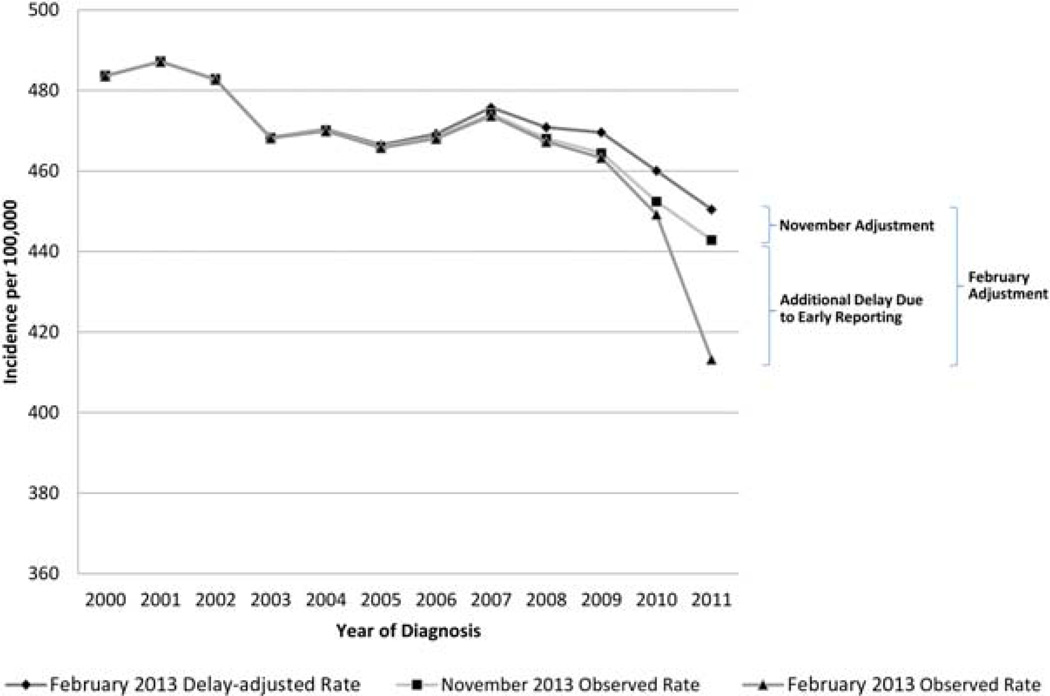

Figure 2 illustrates how the delay model can be used to adjust incidence rates. The figure shows incidence rates for all sites combined from 2000 to 2011. The model-based delay-adjusted rates from the February 2013 submission are higher than the observed incidence rates from the same submission. The delay adjustment needed for the February submission combines the delay adjustment typically used for the November submission with an additional adjustment for the difference between the February and November submissions. The gap between observed and delay-adjusted rates is greatest for the most recent diagnosis year (2011).
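Assuming, for illustration only, that the two components combine multiplicatively (this is not a statement of how the modified model is built), the all-sites completeness values for diagnosis year 2011 in Table 2 (91.7% in February 2013 and 96.6% in November 2013) split the February factor as follows:

```latex
\frac{1}{0.917}
\;=\;
\underbrace{\frac{1}{0.966}}_{\text{November factor}}
\times
\underbrace{\frac{0.966}{0.917}}_{\text{February-to-November factor}}
\;\approx\; 1.0352 \times 1.0534 \;\approx\; 1.0905
```

In this reading, roughly 3.5% of the upward adjustment reflects the usual November reporting delay, and a further 5.3% reflects the gap between the February and November submissions.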

Figure 2.

Observed and delay-adjusted incidence rates for all sites for selected SEER 17 submissions. SEER indicates Surveillance, Epidemiology, and End Results.

RESULTS

Validation of Models

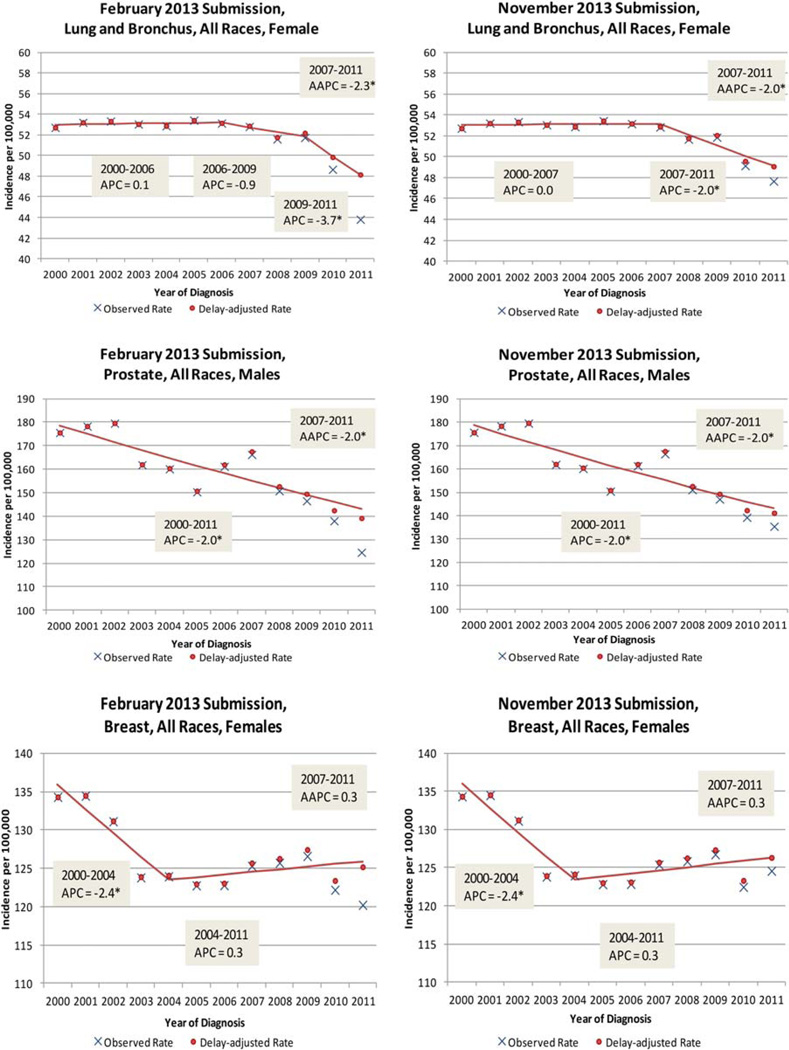

Although the February case counts are less complete than the November counts, the delay model can be applied to estimate the eventual counts and thus to predict rates and trends. To validate the use of early (February) submission data, we first applied the delay model to obtain delay-adjusted rates and then fit the Joinpoint model to those rates. Figure 3 shows Joinpoint models fitted to the delay-adjusted rates, together with the observed rates, from 2 submissions (February 2013 and November 2013) for 3 sites (female lung and bronchus, prostate, and female breast) for diagnosis years 2000 to 2011; observed incidence rates are shown in blue, and delay-adjusted incidence rates are shown in red. Note that the vertical scales differ by cancer site: the lung and bronchus panel begins at 40 cases per 100,000, the prostate panel at 100 cases per 100,000, and the female breast panel at 110 cases per 100,000. Because the adjustment factors are smaller for the November submission, the observed and delay-adjusted rates are closer together in November 2013 for all 3 sites. APCs and 5-year AAPCs are shown in Figure 3. For female lung and bronchus cancer (all races), the February 2013 fit has 2 joinpoints (in 2006 and 2009), whereas the November 2013 fit has only 1 (in 2007). This difference in joinpoints produced different APCs for the most recent years (−3.7 vs −2.0). However, the 5-year AAPCs are similar for the February and November submissions (−2.3 vs −2.0), and both are statistically significant. For prostate cancer and female breast cancer, the February and November fits are similar.

Figure 3.

SEER 17 observed and delay-adjusted incidence rates for lung and bronchus, prostate, and female breast cancer for February and November 2013 SEER submissions (diagnosis years 2000–2011). AAPC indicates average annual percent change; APC, annual percent change; SEER, Surveillance, Epidemiology, and End Results. *APC and AAPC trends are significant (P<.05).

Table 3 shows the observed age-adjusted and delay-adjusted incidence rates from the February and November 2013 submissions for diagnosis year 2011 along with the Joinpoint model fits for data from 2000 through 2011. Although the observed rates for 2011 from the 2 submissions are quite different (6.8% different on average), the delay-adjusted rates are much closer (1.6% different on average), and this indicates that the new February reporting delay model is providing reasonable estimates even though the correction factors are much larger. In summary, the estimates of adjusted rates from the delay model are not substantially affected by the timing of submission: February and November submissions produce similar estimates of the eventual actual rates.
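The text does not state how "different on average" is computed. Assuming it is the unweighted mean, over the 14 rows of Table 3, of the absolute difference between the February and November 2011 rates expressed as a percentage of the November rate, the short Python sketch below reproduces both figures from the published table values. The definition, the function name `mean_pct_diff`, and the row labels are our assumptions.

```python
# 2011 age-adjusted rates per 100,000 transcribed from Table 3, as
# (label, February observed, February delay-adjusted,
#  November observed, November delay-adjusted).
TABLE3_2011 = [
    ("All sites", 413.2, 450.5, 442.9, 458.6),
    ("All sites, male", 461.4, 504.0, 499.8, 520.7),
    ("All sites, female", 379.7, 413.1, 402.8, 414.5),
    ("Colon and rectum", 37.9, 40.3, 40.1, 41.0),
    ("Colon and rectum, male", 43.6, 46.4, 46.2, 47.4),
    ("Colon and rectum, female", 33.2, 35.3, 35.1, 35.8),
    ("Lung and bronchus", 50.1, 55.1, 54.7, 56.4),
    ("Lung and bronchus, male", 58.5, 64.4, 64.2, 66.1),
    ("Lung and bronchus, female", 43.8, 48.2, 47.7, 49.1),
    ("Melanoma", 19.4, 21.3, 21.0, 21.5),
    ("Melanoma, male", 25.4, 27.8, 27.5, 28.3),
    ("Melanoma, female", 15.1, 16.5, 16.2, 16.5),
    ("Female breast", 120.2, 125.2, 124.6, 126.3),
    ("Prostate", 124.6, 139.2, 135.4, 141.2),
]

def mean_pct_diff(pairs):
    """Unweighted mean of |November - February| / November * 100 (assumed definition)."""
    diffs = [abs(nov - feb) / nov * 100.0 for feb, nov in pairs]
    return sum(diffs) / len(diffs)

observed = mean_pct_diff((row[1], row[3]) for row in TABLE3_2011)
adjusted = mean_pct_diff((row[2], row[4]) for row in TABLE3_2011)
print(f"Observed rates differ by {observed:.1f}% on average")
print(f"Delay-adjusted rates differ by {adjusted:.1f}% on average")
```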

TABLE 3.

SEER 17 Cancer Rates for Diagnosis Year 2011 from February and November 2013 Submissions: Validation Check

| Cancer Site | February 2013 Observed Rate | February 2013 Delay-Adjusted Rate | November 2013 Observed Rate | November 2013 Delay-Adjusted Rate | February 2013 APC (Years) | November 2013 APC (Years) | February 2013 AAPC, 5 y/10 y | November 2013 AAPC, 5 y/10 y |
|---|---|---|---|---|---|---|---|---|
| All sites | 413.2 | 450.5 | 442.9 | 458.6 | −0.4a (2000–2011) | −0.4a (2000–2011) | −0.4a/−0.4a | −0.4/−0.4a |
| Male | 461.4 | 504.0 | 499.8 | 520.7 | −0.9a (2000–2011) | −0.8a (2000–2011) | −0.9a/−0.9a | −0.8a/−0.8a |
| Female | 379.7 | 413.1 | 402.8 | 414.5 | −1.0 (2000–2003) | −0.1 (2000–2011) | 0.2/0.1 | −0.1/−0.1 |
| | | | | | 0.2 (2003–2011) | | | |
| Colon and rectum | 37.9 | 40.3 | 40.1 | 41.0 | −2.3a (2000–2009) | −2.2a (2000–2008) | −3.6a/−2.9a | −3.3a/−2.7a |
| | | | | | −4.9a (2009–2011) | −3.6a (2008–2011) | | |
| Male | 43.6 | 46.4 | 46.2 | 47.4 | −2.5a (2000–2008) | −2.8a (2000–2011) | −3.8a/−3.1a | −2.8a/−2.8a |
| | | | | | −4.3a (2008–2011) | | | |
| Female | 33.2 | 35.3 | 35.1 | 35.8 | −2.1a (2000–2009) | −2.1a (2000–2009) | −3.4a/−2.7a | −3.1a/−2.6a |
| | | | | | −4.7a (2009–2011) | −4.1a (2009–2011) | | |
| Lung and bronchus | 50.1 | 55.1 | 54.7 | 56.4 | −1.1a (2000–2009) | −1.1a (2000–2009) | −2.8a/−1.9a | −2.4a/−1.7a |
| | | | | | −4.6a (2009–2011) | −3.7a (2009–2011) | | |
| Male | 58.5 | 64.4 | 64.2 | 66.1 | −1.9a (2000–2009) | −2.0a (2000–2009) | −3.5a/−2.6a | −3.0a/−2.4a |
| | | | | | −5.0a (2009–2011) | −4.0a (2009–2011) | | |
| Female | 43.8 | 48.2 | 47.7 | 49.1 | 0.1 (2000–2006) | 0.0 (2000–2007) | −2.3a/−1.1a | −2.0a/−0.9a |
| | | | | | −0.9 (2006–2009) | −2.0a (2007–2011) | | |
| | | | | | −3.7a (2009–2011) | | | |
| Melanoma | 19.4 | 21.3 | 21.0 | 21.5 | 1.7a (2000–2011) | 1.7a (2000–2011) | 1.7a/1.7a | 1.7a/1.7a |
| Male | 25.4 | 27.8 | 27.5 | 28.3 | 1.9 (2000–2011) | 1.9a (2000–2011) | 1.9a/1.9a | 1.9a/1.9a |
| Female | 15.1 | 16.5 | 16.2 | 16.5 | 1.4a (2000–2011) | 1.3a (2000–2011) | 1.4a/1.4a | 1.3a/1.3a |
| Female breast | 120.2 | 125.2 | 124.6 | 126.3 | −2.4a (2000–2004) | −2.4a (2000–2004) | 0.3/−0.3 | 0.3/−0.3 |
| | | | | | 0.3 (2004–2011) | 0.4 (2004–2011) | | |
| Prostate | 124.6 | 139.2 | 135.4 | 141.2 | −2.0a (2000–2011) | −2.0a (2000–2011) | −2.0a/−2.0a | −2.0a/−2.0a |

Rates are 2011 age-adjusted incidence rates per 100,000.

Abbreviations: AAPC, average annual percent change; APC, annual percent change; SEER, Surveillance, Epidemiology, and End Results.

Cancer sites are for all races and both sexes except where indicated.

(a) APC and AAPC trends are significant (P<.05).

Comparing the Joinpoint fits in Table 3, we find general consistency between the trends based on the February and November 2013 submissions (ie, all sites, both sexes and males; colon and rectum, both sexes and females; lung and bronchus, both sexes and males; melanoma, both sexes, males, and females; female breast; and prostate). In 3 instances, however, a change in trend found with the February submission did not appear with the November submission. For female lung and bronchus cancer (all races), there are 2 joinpoints (3 segments) for the February 2013 submission but only 1 joinpoint (2 segments) for the November 2013 submission. For all sites in females (all races), there is 1 joinpoint (2 segments) for the February 2013 submission but no joinpoint (1 segment) for the November 2013 submission. Male colon and rectum cancer (all races) likewise has 1 joinpoint (2 segments) for the February 2013 submission but none (1 segment) for the November 2013 submission. Even with the loss of a joinpoint, the delay-adjusted rates from the 2 submissions remain close, and the APC trends remain broadly consistent for these 3 groups.

The Joinpoint model can sometimes be sensitive to small changes in rates, and when that occurs, the 5-year AAPC and the 10-year AAPC can provide a better summary for comparing models. Even when there is a change in the number of joinpoints (all sites, females; colon and rectum, males; lung and bronchus, females), the 5- and 10-year AAPCs are quite consistent between the 2 submissions. For cases in which the number of joinpoints is identical between the 2 submissions, the AAPCs are even more consistent. This finding indicates that February submission data can be used to calculate valid estimates of incidence trends, especially when the more stable AAPC measure is being used.
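To make the AAPC concrete, the Python sketch below computes 5- and 10-year AAPCs from the fitted segments for female lung and bronchus cancer in Table 3, using the usual definition: each 1-year interval in the window contributes the log-scale slope ln(1 + APC/100) of the segment containing it, and the mean slope is back-transformed. It is only an illustration: it starts from the rounded APCs printed in Table 3 rather than unrounded slopes, and it computes no confidence intervals or significance tests. The function name `aapc`, the segment format, and the assumption that the 5- and 10-year windows are 2007–2011 and 2002–2011 are ours.

```python
import math

def aapc(segments, start, end):
    """AAPC over [start, end] from fitted segments (first_year, last_year, apc).

    Each 1-year interval (y, y + 1) inside [start, end] contributes the slope
    ln(1 + APC/100) of the segment that contains it; the AAPC is
    100 * (exp(mean slope) - 1), a length-weighted geometric mean.
    """
    slopes = []
    for y in range(start, end):
        for first, last, apc in segments:
            if first <= y and y + 1 <= last:
                slopes.append(math.log1p(apc / 100.0))
                break
        else:
            raise ValueError(f"interval {y}-{y + 1} is not covered by any segment")
    return 100.0 * (math.exp(sum(slopes) / len(slopes)) - 1.0)

# Female lung and bronchus, diagnosis years 2000-2011 (rounded APCs from Table 3)
feb_2013 = [(2000, 2006, 0.1), (2006, 2009, -0.9), (2009, 2011, -3.7)]
nov_2013 = [(2000, 2007, 0.0), (2007, 2011, -2.0)]

for label, segs in (("February 2013", feb_2013), ("November 2013", nov_2013)):
    five = aapc(segs, 2007, 2011)
    ten = aapc(segs, 2002, 2011)
    print(f"{label}: 5-y AAPC {five:.1f}, 10-y AAPC {ten:.1f}")
```

Although the 2 fits have different numbers of joinpoints and different final APCs (−3.7 vs −2.0), the computed AAPCs round to the published values (−2.3/−1.1 and −2.0/−0.9), which illustrates why the AAPC is the more stable summary.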

Preliminary Report of Cancer Cases Diagnosed Through 2012

Table 4 presents observed and delay-adjusted cancer incidence rates for diagnosis year 2012 for all sites, colon and rectum cancer, lung and bronchus cancer, female breast cancer, prostate cancer, and melanoma on the basis of the February 2014 submission. The full set of observed and delay-adjusted incidence rates from 2000 through 2012 for these cancers is presented in the appendix (see online Supporting Information). Table 4 also presents trends for these cancers estimated with Joinpoint regression.

TABLE 4.

SEER 17 Cancer Rates for Diagnosis Year 2012 and Trends Through 2012 From the February 2014 Submission

| Cancer Site | 2012 Observed Rate | 2012 Delay-Adjusted Rate | APC Trends (Years) | AAPC Trends, 5 y | AAPC Trends, 10 y |
|---|---|---|---|---|---|
| All sites | 416.1 | 454.5 | −0.4a (2000–2012) | −0.4a | −0.4a |
| Male | 452.1 | 493.8 | −0.9a (2000–2012) | −0.9a | −0.9a |
| Female | 392.9 | 429.2 | −0.1 (2000–2012) | −0.1 | −0.1 |
| Colon and rectum | 38.2 | 40.8 | −2.5a (2000–2012) | −2.5a | −2.5a |
| Male | 43.6 | 46.5 | −2.8a (2000–2012) | −2.8a | −2.8a |
| Female | 33.7 | 35.9 | −2.3a (2000–2012) | −2.3a | −2.3a |
| Lung and bronchus | 51.1 | 56.4 | −1.0a (2000–2007) | −2.2a | −1.7a |
| | | | −2.2a (2007–2012) | | |
| Male | 59.6 | 65.9 | −2.2a (2000–2012) | −2.2a | −2.2a |
| Female | 44.7 | 49.4 | 0.0 (2000–2007) | −1.8a | −1.0a |
| | | | −1.8a (2007–2012) | | |
| Melanoma of the skin | 20.2 | 22.2 | 1.6a (2000–2012) | 1.6a | 1.6a |
| Male | 26.5 | 29.0 | 1.8a (2000–2012) | 1.8a | 1.8a |
| Female | 15.7 | 17.2 | 1.3a (2000–2012) | 1.3a | 1.3a |
| Female breast | 123.0 | 128.4 | −2.4a (2000–2004) | 0.4 | 0.1 |
| | | | 0.4 (2004–2012) | | |
| Prostate | 105.1 | 117.8 | −2.3a (2000–2012) | −2.3a | −2.3a |

Abbreviations: AAPC, average annual percent change; APC, annual percent change; SEER, Surveillance, Epidemiology, and End Results.

Cancer sites are for all races and both sexes except where indicated.

(a) APC and AAPC trends are significant (P<.05).

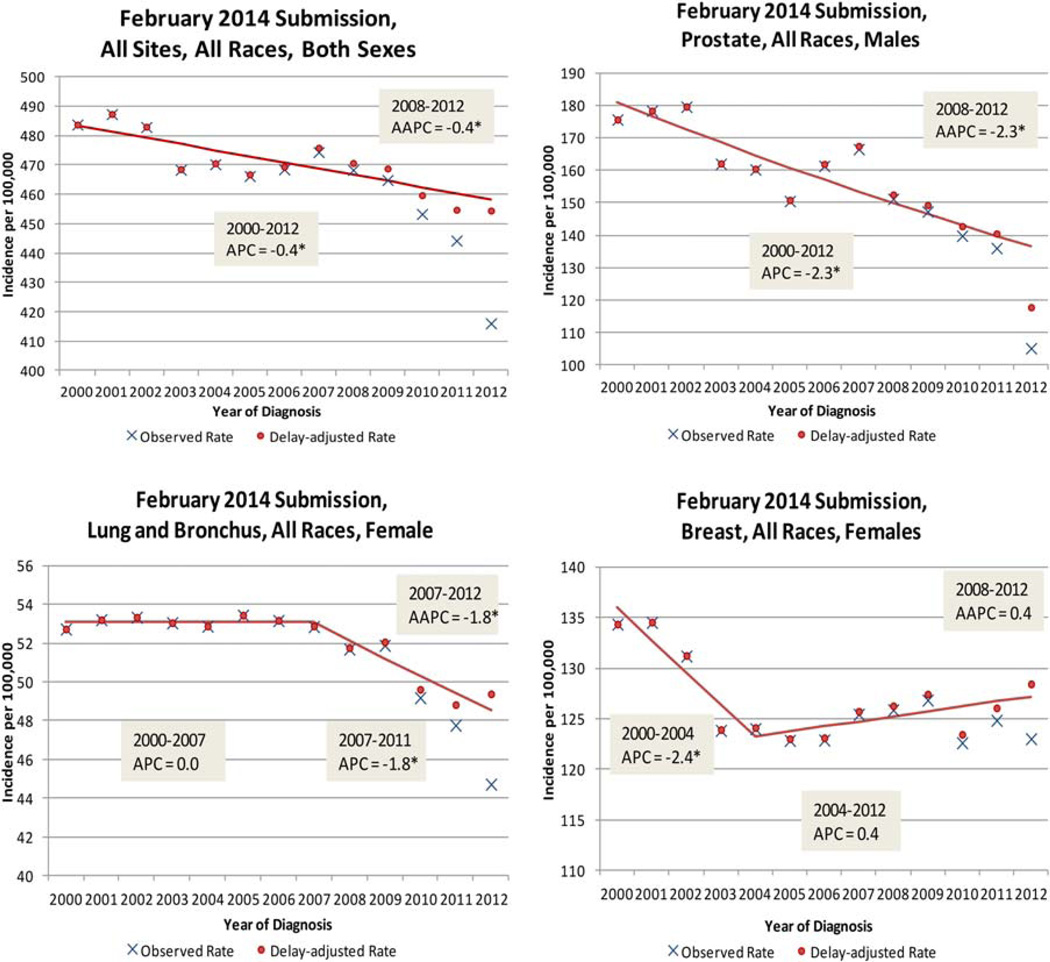

Figure 4 graphically displays the trends in Table 4 for all sites (both sexes), lung and bronchus cancer (females), prostate cancer, and female breast cancer. Incidence trends for all sites (both sexes) continue to decline with the addition of the 2012 data. Although the trend for men is declining for all sites, the trend for women for all sites is relatively flat, even though the delay-adjusted 2012 data point for females is above prior points. For colon and rectum cancer, previous results based on data through 2011 (Table 3) had shown that the falling trends for both sexes and for females had accelerated slightly around 2008 or 2009; the addition of the 2012 data point eliminated that acceleration (Table 4). For lung and bronchus cancer, an acceleration of the declining trend for men that started in 2009, seen in data through 2011, was eliminated with the preliminary 2012 data. For women, the previously observed decline in lung and bronchus cancer that started in 2007 persisted, as did the decline in colon and rectum cancer, although the rate of decline was reduced for both. Rising trends for melanoma persisted with the addition of the 2012 data point. For female breast cancer, a slightly rising (but not statistically significant) trend persisted. The 2012 delay-adjusted rate for prostate cancer was well below prior points, which made the previously observed declining trend somewhat steeper (5-year AAPC of −2.0 through 2011 and −2.3 through 2012).

Figure 4.

SEER 17 observed and delay-adjusted incidence rates for all sites and prostate, breast, and lung and bronchus cancer (diagnosis years 2000–2012). AAPC indicates average annual percent change; APC, annual percent change; SEER, Surveillance, Epidemiology, and End Results. *APC and AAPC trends are significant (P<.05).

DISCUSSION

This article describes the data submission process and reports earlier estimates of SEER incidence data. Preliminary rates, including cases diagnosed through 2012, are presented on the basis of the February 2014 data submission. The usual report will be released in April 2015 and will be based on the November 2014 data submission. Importantly, these rates are based on data through diagnosis year 2012; they are not projections from data through 2010 such as those published in Cancer Facts & Figures 2014.10

Delays in the reporting of SEER incidence data bias the most recent observed rates. Although delay adjustment is already used to provide more accurate incidence rates from the November submission, it would be valuable to report incidence rates and trends sooner than 2 years after diagnosis. The major challenge in presenting earlier estimates based on a February submission rather than a November submission is that the biases from delayed reporting are substantially larger for a February submission. For all sites combined and for the 5 individual cancer sites examined in this article, the modeled completeness of a November submission for the most recent diagnosis year ranges from approximately 96% to 99% of the count that would eventually be observed, whereas for a February submission it ranges from approximately 89% to 96% (Table 2). Cancers treated outside a hospital, such as melanoma and prostate cancer, tend to be at the lower end of these ranges.

Traditionally, statistical models of reporting delay have been used to adjust for this bias in data from November submissions.1,6 This article reports the use of a modified model to derive delay-adjusted rates from February submissions. The validation study comparing delay-adjusted rates based on February and November submissions showed good agreement. However, the larger adjustment factors for a February submission make the final delay-adjusted rates inherently more sensitive to the model, because any minor misspecification is apt to have larger consequences. Despite this potential problem, the delay-adjusted rates derived from the February and November submissions were similar.

Trends in rates from population-based cancer registries are typically characterized with Joinpoint regression, and our validation study compared trends based on the February and subsequent November submissions. Although the validation showed overall consistency, in 3 instances (all sites, females; colon and rectum, males; lung and bronchus, females) a new joinpoint identified with the February submission did not persist in the subsequent November submission. Five- and 10-year AAPCs are inherently more stable than APCs, and the AAPCs from the 2 submissions agreed very well. Because of the additional challenges in estimating delay-adjusted rates from February submissions, we recommend that AAPCs, rather than APCs, be used as the primary approach for characterizing trends. Compared with trends based on data through 2011, trends based on data through 2012 show a slower rate of decline for colon and rectum cancer in women and for lung and bronchus cancer in men. For prostate cancer, the rate of decline based on data through 2012 was faster than that observed in previous submissions.

Although the preliminary estimates presented in this article were limited to all sites combined and 5 individual cancer sites by sex, consideration should be given to releasing a more complete data set in subsequent years. Providing data for cancer sites beyond those included here should be a priority for any future early release, although the degree of missing data for key variables such as race, ethnicity, stage, and other variables will need to be carefully evaluated, because missing data can lead to underestimated rates in the most recent years. In the November 2013 submission of cases diagnosed in 2011, 2.3%, 2.4%, and <0.001% of cases were missing race, ethnicity, and derived American Joint Committee on Cancer stage (7th edition) data, respectively. The February 2013 submission of cases diagnosed in 2011 had similar percentages (2.6%, 2.6%, and 0.662% missing, respectively). Variables missing more often than a predetermined threshold would not be included in the data set. Limitations of the delay-adjusted rates include an inability to predict sudden changes in rates due to changes in cancer screening policies. Reclassification may also be a problem, because site and histology codes may differ between the February and November submissions for some cancer types. Finally, composite modeled completeness may mask variation by registry, so the delay-adjustment factors may fit some registries better than others. Therefore, this variability must be considered before any early release of a data set, and any release would be only in aggregate, without registry identification.

Although electronic data capture holds promise for even timelier release of cancer incidence data, further developments are necessary before that potential can be realized. November submissions do include, as available, cases diagnosed in the year after the latest reported diagnosis year (eg, the November 2013 submission, whose latest reported diagnosis year is 2011, includes 2012 cases). From these submissions, we can evaluate the effect of releasing statistics 1 year earlier. For all sites combined, the November 2012 submission contains approximately 82% of the cases diagnosed in 2011 that appear in the November 2013 submission. On the basis of the November 2012 submission, race and derived American Joint Committee on Cancer stage (7th edition) data are missing for 2.7% and 6.4% of cases diagnosed in 2011, respectively, and ethnicity, as measured by the SEER origin recode variable, is missing for 2.7%.
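The 82% figure indicates how large an adjustment a release 1 year earlier would need. As a simplified illustration, and not the reporting delay model of Midthune et al4, if an earlier submission contains a fraction p of the cases in a later one, scaling the earlier count by 1/p recovers the later count. The function names and case counts below are hypothetical. Because the November 2013 submission is itself not final, this ratio likely understates the adjustment a full delay model would estimate.

```python
def completeness(early_count: int, later_count: int) -> float:
    """Fraction of the later submission's cases already present in the early one."""
    return early_count / later_count


def naive_delay_factor(fraction_complete: float) -> float:
    """Multiplier that scales an early count up to the later submission's count."""
    if not 0 < fraction_complete <= 1:
        raise ValueError("fraction_complete must be in (0, 1]")
    return 1.0 / fraction_complete


# Hypothetical counts consistent with the ~82% completeness reported above.
p = completeness(82_000, 100_000)
print(round(naive_delay_factor(p), 2))  # 1.22

# Same arithmetic for the 92% to 98% completeness of February submissions
# relative to the following November submission.
for frac in (0.92, 0.98):
    print(frac, round(naive_delay_factor(frac), 3))
```

By this arithmetic, a release 1 year earlier would need a multiplier of roughly 1.22 for all sites combined, compared with roughly 1.02 to 1.09 for February submissions, which is consistent with the larger adjustments, and the greater uncertainty, that earlier releases entail.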

More rapid release of population-based cancer rates is important so that the rates can be used for planning cancer control, examining cancer-related policy issues, understanding population-based risk, conducting epidemiologic and health services research, and supporting population-based rapid case ascertainment for clinical studies. This study examines the opportunities for early release as well as the accompanying cautions.


Acknowledgments

We thank Dr. Lynne Penberthy, Susan Scott, and Trish Murphy for their assistance with the figures, tables, and article editing. We also thank the Surveillance, Epidemiology, and End Results registries for their February data submissions and the following principal investigators for their helpful comments: Dr. Charles Lynch, Dr. Xiao-Cheng Wu, Dr. Lloyd Mueller, and Dr. Nan Stroup.

FUNDING SUPPORT

No extramural funding was provided for this project. This work was conducted as part of the authors’ official duties to the National Cancer Institute with assistance from an Information Management Systems contract with the National Cancer Institute.

Footnotes

This article has been contributed to by US Government employees and their work is in the public domain in the USA.

Additional Supporting Information may be found in the online version of this article.

CONFLICT OF INTEREST DISCLOSURES

The authors made no disclosure.

REFERENCES

1. Howlader N, Noone AM, Krapcho M, et al. Cancer Statistics Review, 1975–2011. Bethesda, MD: National Cancer Institute; 2014.

2. Surveillance, Epidemiology, and End Results (SEER) Program. Cancer stat fact sheets. Available at: http://seer.cancer.gov/statfacts/. Accessed November 4, 2014.

3. National Cancer Institute. Cancer query system: delay-adjusted SEER incidence factors. Available at: http://surveillance.cancer.gov/delay/canques.html. Accessed November 4, 2014.

4. Midthune DN, Fay M, Clegg LX, Feuer EJ. Modeling reporting delays and reporting corrections in cancer registry data. J Am Stat Assoc. 2005;100:61–70.

5. Kim HJ, Fay MP, Feuer EJ, Midthune DN. Permutation tests for joinpoint regression with applications to cancer rates. Stat Med. 2000;19:335–351. doi: 10.1002/(sici)1097-0258(20000215)19:3<335::aid-sim336>3.0.co;2-z.

6. Edwards BK, Noone AM, Mariotto AB, et al. Annual report to the nation on the status of cancer, 1975–2010, featuring prevalence of comorbidity and impact on survival among persons with lung, colorectal, breast, or prostate cancer. Cancer. 2014;120:1290–1314. doi: 10.1002/cncr.28509.

7. Kim HJ, Yu B, Feuer EJ. Selecting the number of change-points in segmented line regression. Stat Sin. 2009;19:597–609.

8. Clegg LX, Hankey BF, Tiwari R, Feuer EJ, Edwards BK. Estimating average annual percent change in trend analysis. Stat Med. 2009;28:3670–3682. doi: 10.1002/sim.3733.

9. Clegg LX, Feuer EJ, Midthune DN, Fay MP, Hankey BF. Impact of reporting delay and reporting error on cancer incidence rates and trends. J Natl Cancer Inst. 2002;94:1537–1545. doi: 10.1093/jnci/94.20.1537.

10. American Cancer Society. Cancer Facts & Figures 2014. Atlanta, GA: American Cancer Society; 2014.
