Abstract

Evidence suggests that lateral frontal cortex implements cognitive control processing along its rostro-caudal axis, yet other evidence supports a dorsal–ventral functional organization for processes engaged by different stimulus domains (e.g., spatial vs. nonspatial). This functional magnetic resonance imaging study investigated whether separable dorsolateral and ventrolateral rostro-caudal gradients exist in humans, while participants performed tasks requiring cognitive control at 3 levels of abstraction with language or spatial stimuli. Abstraction was manipulated by using 3 different task sets that varied in relational complexity. Relational complexity refers to the process of manipulating the relationship between task components (e.g., to associate a particular cue with a task) and drawing inferences about that relationship. Tasks using different stimulus domains engaged distinct posterior regions, but within the lateral frontal cortex, we found evidence for a single rostro-caudal gradient that was organized according to the level of abstraction and was independent of processing of the stimulus domain. However, a pattern of dorsal/ventral segregation of processing engaged by domain-specific information was evident in each separable frontal region only within the most rostral region recruited by task demands. These results suggest that increasingly abstract information is represented in the frontal cortex along distinct rostro-caudal gradients that also segregate along a dorsal–ventral axis dependent on task demands.

Keywords: cognitive control, hierarchy, language, prefrontal cortex, spatial, stimulus domain

Introduction

Lateral frontal cortex function is critical for cognitive control (Miller and Cohen 2001; Fuster 2004; Petrides 2005; Duncan 2010). There is clear and abundant evidence from tracer studies (Petrides and Pandya 2006), single-cell recordings (Funahashi et al. 1989), lesion studies (Curtis and D'Esposito 2004), and functional neuroimaging studies (Badre and D'Esposito 2007) that the anatomy of lateral frontal cortex is not homogenous. Despite this large body of work, a unified, comprehensive model of the functional organization of lateral frontal cortex does not exist.

The first proposed organizational scheme for lateral frontal cortex puts forth that caudal frontal cortex is organized by distinct dorsal and ventral modules, such that each region processes different domains of information during tasks that require cognitive control (Goldman-Rakic 1996; Buckner 2003; Sakai and Passingham 2003; Courtney 2004; Petrides 2005; O'Reilly 2010). Support for this proposal derived from studies using language stimuli that observed recruitment of ventrolateral frontal regions (Paulesu et al. 1993; Fiez et al. 1996; Chein et al. 2002) and studies using spatial or pictorial stimuli that recruit more dorsolateral regions (Courtney et al. 1998; Mohr et al. 2006; Yee et al. 2010; Curtis and D'Esposito 2011).

More recently, models of the functional organization of lateral frontal cortex propose a continuous gradient of function along the rostro-caudal axis of lateral frontal cortex (Koechlin and Hyafil 2007; Botvinick 2008; Badre and D'Esposito 2009). These models, drawing inspiration from Fuster's “perception–action cycle” (Fuster 1997), theorize that there is a hierarchical organization in which progressively more rostral (anterior) frontal regions process progressively more abstract representations. Specifically, it is proposed that caudal (posterior) frontal regions are engaged for concrete action decisions that are closer in time and more directly related to choosing a specific motor response, and rostral frontal regions guide behavior over longer lags and at more abstract levels of action contingency (Koechlin et al. 2003; Fuster 2004; Botvinick 2008; Badre and D'Esposito 2009).

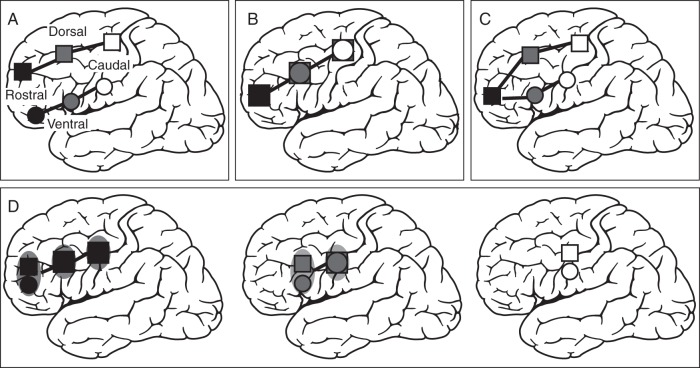

In summary, evidence exists that the lateral frontal cortex is functionally organized along both a rostro-caudal and dorsal–ventral axis. However, it remains unclear how to reconcile these 2 organizational schemes with each other. One hypothesis is that there is a distinct dorsolateral and ventrolateral prefrontal cortex (VLPFC) organization that is maintained throughout the rostro-caudal axis (see Fig. 1A). This is consistent with a recent proposal of dorsal–ventral frontal organization (O'Reilly 2010). Alternatively, the rostro-caudal axis of cognitive control may not be sensitive to processes that differentially engage dorsolateral versus ventrolateral frontal cortex (see Fig. 1B). Another possible organizational scheme could be that cognitive control processes engaged by different stimulus domains are segregated only within the caudal frontal cortex [as originally proposed by Goldman-Rakic (1996)], whereas rostrolateral frontal cortex representations are domain-general (Sakai and Passingham 2003, see Fig. 1C). Finally, a fourth potential outcome of the present study could be an interaction of the cognitive control hierarchy and processes that differentially engage dorsolateral versus ventrolateral frontal areas that does not directly map onto either scheme that has been identified thus far, but rather a hybrid of them (Fig. 1D).

Figure 1.

Hypotheses tested in the present study. Cognitive control representations get more abstract from white to black. (A) Two rostro-caudal gradients of cognitive control separately for the 2 different stimulus domains (i.e., language–ventral, spatial–dorsal). (B) One rostro-caudal gradient of cognitive control. Overlapping activations for language and spatial domains along the rostro-caudal axis in lateral frontal cortex. (C) Lower levels of cognitive control recruit distinct ventral and dorsal subregions within caudal frontal cortex (e.g., language–ventral, spatial–dorsal). In contrast, more rostral regions of the lateral frontal cortex are independent of stimulus domain (i.e., overlapping activations for language and spatial stimuli in rostral regions). (D) Interaction between cognitive control and stimulus domain. Segregation of language (ventral) and spatial (dorsal) stimuli in subregions along the rostro-caudal axis, as a function of the cognitive control hierarchy. A stimulus domain segregation is only present at subregions that are engaged in the highest level of cognitive control.

Materials and Methods

Participants

Twenty-two right-handed native English-speaking participants [12 females, mean age = 24 years, standard deviation (SD) = 6.2 years] took part in this study. Data from 2 additional participants were collected but excluded due to poor behavioral performance. None of the participants had a history of a neurological, psychiatric, or significant medical disorder. Informed consent was obtained from participants in accordance with procedures approved by the Committee for the Protection of Human Subjects at the University of California, Berkeley.

Experimental Design

Three tasks requiring different demands on cognitive control were performed, each with language or spatial stimuli (Fig. 2A). Language stimuli were words (nouns) that represent living or nonliving objects (e.g., living = man, bird, or frog; nonliving = pen, house, or sofa). Additionally, the same words were also categorized as small or large objects, where small was defined as objects that fit into a 20-inch box (e.g., small = bird, pen, or frog; large = man, house, or sofa). Spatial stimuli consisted of random dot patterns with different distributions of the dots at different positions on the screen. Dot density was higher either on the left or right part of the screen, or on the upper or lower part of the screen. Thus, language and spatial stimuli each comprised 2 categories. Language stimuli were judged along the size-category (small or large) and living/nonliving-category. Spatial stimuli were judged along the vertical-category (left or right) and the horizontal-category (upper or lower).

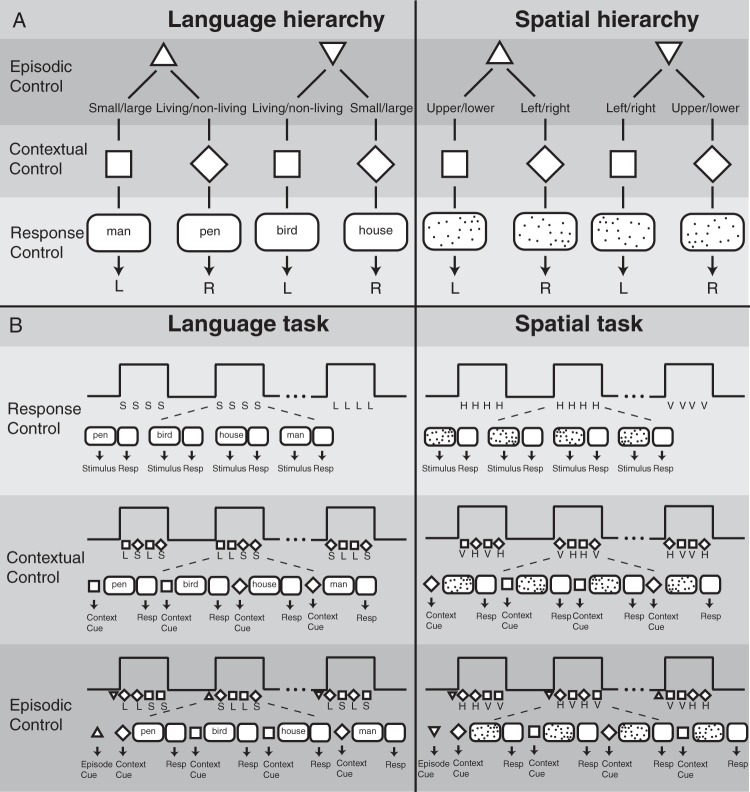

Figure 2.

(A) Schematic description of experimental design. In the Language Domain, participants made small/large or living/nonliving judgments of nouns. In the Spatial Domain, participants judged if a random dot pattern had more dots on the upper or lower part, or left or right part of the screen. Response Control: A stimulus (noun or dot pattern) was perceived and a response (button press) was made according to the type of task. Contextual Control: A cue (square or diamond) indicated which task to perform on each trial. Episodic Control: A cue (upward triangle vs downward triangle) indicated which subsequent cue (square or diamond) indicated which task to perform in a block of trials. (B) Schematic description of experimental tasks. One task block consisted of 4 consecutive trials. Each task block was separated by a resting baseline block of equal duration. Response Control: One task block comprised trials with identical tasks [e.g., Language: small/large (S) or living/nonliving (L) and Spatial: upper/lower (horizontal, H) or left/right (vertical, V) judgment]. Contextual Control: In each block, different tasks (Language: L or S and Spatial: H or V) were conducted based on a cue (square or diamond). Episodic Control: A cue–cue (upward triangle vs downward triangle) at the start of each task block specified the type of cue–task association for the current block.

To probe a hierarchical organization of cognitive control, 3 different levels of control were implemented (see Fig. 2A). Response Control was the lowest level engaging stimulus–response mapping: a stimulus (i.e., a noun or a dot pattern) was presented and a particular response (button press) was given. Contextual Control was the next higher level. At this level, a particular cue predicted the processing of a particular task, which in turn triggered a particular response. The highest level in the hierarchy was Episodic Control. In this case, a particular cue–task association was maintained over a certain episode, but varied across blocks (see Fig. 2B). Thus, this was a 2 × 3 experimental design with DOMAIN (spatial vs. language) and HIERARCHY (Response Control, Contextual Control, and Episodic Control) as factors. The experiment was divided into 6 sessions, one for each behavioral condition (e.g., Response Control in the Language Domain). The order of sessions was pseudorandomized across participants. Each session comprised 16 blocks, separated by rest blocks, and each block contained 4 consecutive trials.

Session 1: Response Control in the Language Domain

Participants judged whether words were living or nonliving in 8 consecutive blocks. After the presentation of the word, a response-mapping screen with the words “living” and “nonliving” was presented. In the other half of the session, participants judged the size of the words (small or large). After the presentation of the word, a response-mapping screen with the words “small” and “large” was presented. This procedure ensured that participants did not need to process any cue–task associations to perform the Response Control task. The order of the 2 tasks was counterbalanced.

Session 2: Response Control in the Spatial Domain

Participants judged whether the dot pattern distribution was higher on the left or on the right part of the screen (vertical classification) in 8 consecutive blocks. After the presentation of the dot pattern, a response-mapping screen with the words “left” and “right” was presented. In the other half of the session, participants judged whether the dot pattern distribution was higher on the top or bottom of the screen (horizontal classification). After the presentation of the dot pattern, a response-mapping screen with the words “upper” and “lower” was presented. The order of the 2 tasks was counterbalanced.

Session 3: Contextual Control in the Language Domain

A cue (square or diamond) immediately before the word indicated whether to perform the living/nonliving-judgment or the size-judgment.

Session 4: Contextual Control in the Spatial Domain

A cue (square or diamond) immediately before the dot pattern indicated whether to perform the horizontal or vertical axis judgment.

Session 5: Episodic Control in the Language Domain

A cue (triangle pointing up or triangle pointing down) at the beginning of each block indicated which cue (square or diamond) determined the type of judgment of the immediately following word. A triangle pointing up indicated that the square triggered the size-judgment and the diamond triggered the living/nonliving-judgment. In contrast, a triangle pointing down indicated that the diamond triggered the size-judgment and the square triggered the living/nonliving-judgment.

Session 6: Episodic Control in the Spatial Domain

A cue (triangle pointing up or triangle pointing down) at the beginning of each block indicated which cue (square or diamond) determined the type of judgment of the immediately following dot pattern. A triangle pointing up indicated that the square triggered the vertical-judgment and the diamond triggered the horizontal-judgment. In contrast, a triangle pointing down indicated that the diamond triggered the vertical-judgment and the square triggered the horizontal-judgment.
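The two-level cue structure of the Episodic Control sessions can be written as a nested lookup. The sketch below is only an illustration of the mapping described for Sessions 5 and 6; the dictionary, the key strings, and the function name are hypothetical and are not taken from the study's materials.

```python
# Hypothetical encoding of the Episodic Control rules (Sessions 5 and 6).
# Outer key: stimulus domain; middle key: block cue (triangle);
# inner key: trial cue (square or diamond); value: judgment to perform.
EPISODIC_RULES = {
    "language": {
        "triangle_up": {"square": "size", "diamond": "living/nonliving"},
        "triangle_down": {"square": "living/nonliving", "diamond": "size"},
    },
    "spatial": {
        "triangle_up": {"square": "vertical", "diamond": "horizontal"},
        "triangle_down": {"square": "horizontal", "diamond": "vertical"},
    },
}


def judgment_for(domain, block_cue, trial_cue):
    """Return the judgment implied by the block cue and the trial cue."""
    return EPISODIC_RULES[domain][block_cue][trial_cue]


if __name__ == "__main__":
    # The same trial cue maps to different judgments depending on the block cue.
    print(judgment_for("language", "triangle_up", "square"))
    print(judgment_for("language", "triangle_down", "square"))
```

In the Contextual Control sessions only the inner mapping is needed, because the cue–task association does not change across blocks.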

In sessions 3–6, the order of cues was randomized with the following constraints: the 4 trials per block comprised 2 size-judgment and 2 living/nonliving-judgment tasks in the Language Domain; and 2 vertical-judgment and 2 horizontal-judgment tasks in the Spatial Domain.
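One simple way to satisfy this constraint is to shuffle a list that already contains each judgment twice. This is a minimal sketch under that assumption; the function name and task labels are hypothetical, and the study's actual randomization procedure is not described beyond the constraint itself.

```python
import random

# Hypothetical task labels for each stimulus domain.
TASKS = {
    "language": ("size", "living/nonliving"),
    "spatial": ("vertical", "horizontal"),
}


def block_task_order(domain, rng):
    """Return a random order of 4 trials containing each judgment exactly twice."""
    order = list(TASKS[domain]) * 2  # two trials per judgment
    rng.shuffle(order)               # randomize their order within the block
    return order


if __name__ == "__main__":
    rng = random.Random(1)  # seeded only to make the illustration repeatable
    for _ in range(3):
        print(block_task_order("language", rng))
```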

Stimuli and Experimental Procedure

All stimulus blocks in every task began with a triangle (2 s), then a rectangle (either a square or diamond, 1 s). The triangle cue was irrelevant in the Contextual and Response Control sessions. The rectangle cues were irrelevant in the Response Control session. Each cue was shown in each session to avoid effects of differences in stimulus properties and trial length. Each block consisted of 4 consecutive trials (Fig. 2B). One trial consisted of a square or a diamond (1 s), followed by the stimulus (word or dot pattern, 1.5 s), a judgment period (2 s), and feedback (0.5 s). Each block lasted 22 s and was followed by a baseline resting block of the same duration. Each session lasted approximately 12 min.
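The stated durations can be checked with a few lines of arithmetic. The sketch assumes that the 1-s square or diamond following the triangle is the cue of the first trial (so it is counted once, as part of that trial) and takes the figure of 16 blocks per session from the Experimental Design section; the variable names are illustrative.

```python
# Durations in seconds, as given in the text.
TRIANGLE_S = 2.0
TRIAL_PARTS_S = {"cue": 1.0, "stimulus": 1.5, "judgment": 2.0, "feedback": 0.5}
TRIALS_PER_BLOCK = 4
BLOCKS_PER_SESSION = 16

trial_s = sum(TRIAL_PARTS_S.values())              # one trial
block_s = TRIANGLE_S + TRIALS_PER_BLOCK * trial_s  # triangle + 4 trials
rest_s = block_s                                   # rest block of equal duration
session_s = BLOCKS_PER_SESSION * (block_s + rest_s)
session_min = session_s / 60.0

print(f"trial = {trial_s} s, block = {block_s} s")
print(f"session = {session_s} s = {session_min:.1f} min")
```

Under these assumptions a block comes to 22 s and a session to 704 s, in line with the approximately 12 min reported.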

The stimulus set in the Language Domain consisted of 192 nouns (mean word length = 5.1 letters, SD = 1.4 letters; mean word frequency = 40.5, SD = 53.2; size ca. 9° × 14° ± 2° visual angle). Words were extracted from the MRC Psycholinguistic Database; word frequency of occurrence was given by the norms of Kucera and Francis (1967). Each of the 3 language sessions consisted of 64 nouns; 16 nouns referred to small-living objects, 16 to small-nonliving objects, 16 to large-living objects, and 16 to large-nonliving objects. The stimulus set in the Spatial Domain comprised 192 dot patterns (30–40 dots pseudorandomly distributed on screen; size ca. 9° × 13° visual angle). Each of the 3 spatial sessions consisted of 64 dot patterns. In 16 cases, more dots were located at the lower-left part of the screen, in 16 at the lower-right, in 16 at the upper-left, and in 16 at the upper-right part of the screen.

Plausibility of semantic categories (size and living/nonliving), as well as of distribution of dot pattern (vertical and horizontal), was validated in a behavioral pre-experiment. Before scanning, training was given, starting with Response Control, then Contextual Control, and finally Episodic Control blocks. Training began either with the Language Domain or with the Spatial Domain, counterbalanced across participants. For training, 88 nouns (22 for each category) and 88 dot patterns were used; these items were not used in the functional magnetic resonance imaging (fMRI) session. Training ensured that participants entered the fMRI session only after reaching a behavioral criterion (i.e., 90% correct answers for the 6 conditions), so that performance was high on each condition in the scanner. Training lasted approximately 40 min. This intensive training procedure differs from previous studies on the hierarchical organization of cognitive control (e.g., Koechlin et al. 2003; Badre and D'Esposito 2007). Prior to each session during fMRI scanning, a short instruction of the upcoming task was presented on the screen.

Functional MRI Data Acquisition

Data were collected on a Siemens MAGNETOM Trio 3-T MR Scanner at the Henry H. Wheeler, Jr., Brain Imaging Center at the University of California, Berkeley. A 32-channel birdcage coil was used. Anatomical images consisted of 160 slices acquired using a T1-weighted magnetization prepared rapid acquisition gradient echo (MPRAGE) protocol [repetition time (TR) = 2300 ms; echo time (TE) = 2.98 ms; field of view (FOV) = 256 mm; matrix size = 256 × 256; voxel size = 1 mm3]. Functional MRI scanning was carried out using a T2*-weighted blood oxygen level-dependent (BOLD)-sensitive gradient-echo echo-planar imaging sequence (TR = 2 s, TE = 25 ms, FOV = 19.2 cm, 64 × 64 matrix, resulting in an in-plane resolution of 3 mm × 3 mm). Thirty-six slices (thickness: 3 mm with an interslice gap of 1 mm) covering the whole brain were acquired (interleaved and descending acquisition). Participants viewed projected stimuli via a mirror mounted on the head coil, and manual responses were obtained via a fiber optic response pad. Six functional sessions, each with 354 volumes and lasting approximately 12 min, were collected.
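The functional acquisition parameters can be cross-checked numerically. The following sketch only restates values given in the text; the variable names are illustrative, and the slab coverage assumes that the 1-mm gap applies between adjacent slices only.

```python
# Functional EPI parameters as reported in the text.
FOV_MM = 192.0          # 19.2 cm field of view
MATRIX = 64             # 64 x 64 acquisition matrix
TR_S = 2.0              # repetition time in seconds
VOLUMES_PER_SESSION = 354
N_SLICES = 36
SLICE_THICKNESS_MM = 3.0
SLICE_GAP_MM = 1.0

in_plane_mm = FOV_MM / MATRIX                  # in-plane voxel edge length
session_s = VOLUMES_PER_SESSION * TR_S         # scan time per session
session_min = session_s / 60.0
# Assumed: gaps lie between slices, so there are N_SLICES - 1 of them.
coverage_mm = N_SLICES * SLICE_THICKNESS_MM + (N_SLICES - 1) * SLICE_GAP_MM

print(f"in-plane resolution: {in_plane_mm} mm")
print(f"session duration: {session_s} s ({session_min:.1f} min)")
print(f"slab coverage: {coverage_mm} mm")
```

With a TR of 2 s, 354 volumes correspond to 708 s, slightly longer than the 704 s taken up by 16 task blocks and 16 rest blocks of 22 s each.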

Functional MRI Data Analysis

MRI data were analyzed using SPM8 (available at http://www.fil.ion.ucl.ac.uk/spm). Preprocessing comprised realignment and unwarp, slice timing, coregistration, segmentation, normalization to MNI space, and smoothing with a 6-mm full-width at half-maximum Gaussian kernel. Normalizing an individual structural T1 image to the SPM8 T1 brain template was processed in 2 steps: Segmentation of the structural T1 image into gray matter, white matter, and cerebrospinal fluid, and estimation of normalization parameters for the segmented images and writing the normalized images with these parameters. This procedure transformed the structural images and all EPI volumes into a common stereotactic space to allow for multisubject analyses. Voxel size was interpolated during preprocessing to isotropic 3 mm3.

Statistical Analysis

BOLD signal change between conditions was analyzed using the general linear model approach implemented in SPM8. A block design matrix including all conditions of interest was specified using the canonical hemodynamic response function. Additionally, motion parameters and sessions were modeled as covariates. Confounds of global signal changes were removed by applying a high-pass filter with a cut-off period of 128 s. In total, there were 16 blocks per condition, each lasting 22 s. The onset of an epoch was set to the first stimulus (i.e., triangle) in each condition. The resulting individual contrast images were submitted to the second-level analysis. Group-level 1-sample t-tests against a contrast value of zero were performed at each voxel. To protect against false-positive activations, a double threshold was applied, by which only regions with a z-score exceeding 3.09 (P < 0.05, FDR corrected) and 30 contiguous voxels were considered. In the main effects contrasts (Figs 4B,C and 5), a less conservative threshold of P < 0.005 (uncorrected) and 30 contiguous voxels was applied. To functionally define frontal regions of interest (ROIs) involved in the 3 levels of cognitive control abstraction, a group contrast of all effects versus baseline was performed. A large area in the left frontal cortex was activated in this contrast (using a threshold of P < 0.05, FDR corrected). To identify separate peak coordinates for left anterior frontal sulcus, left mid-frontal sulcus, and left ventral premotor cortex, a more conservative threshold of P < 0.00005 was applied. The data for the ROI analysis were extracted from 6-mm radius spherical volumes. In each participant's data, the centers of the ROIs were set to the peak voxels in the brain areas that were identified in the contrast of all effects versus baseline.
A 2 × 3 analysis of variance (ANOVA) on the percent signal change was conducted with the factors DOMAIN (Language, Spatial) and HIERARCHY (Response Control, Contextual Control, and Episodic Control).
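To give a sense of the size of a 6-mm radius spherical ROI, the sketch below enumerates the voxel centers that fall inside such a sphere. It assumes the 3-mm isotropic grid produced by preprocessing and counts a voxel when its center lies within or on the sphere; the function name is hypothetical, and this is not the extraction routine used in the study.

```python
import itertools


def sphere_voxels(center, radius_mm=6.0, voxel_mm=3.0):
    """List voxel centers (in mm) whose distance from `center` is <= radius_mm.

    Assumes `center` itself lies on the voxel grid.
    """
    steps = int(radius_mm // voxel_mm)  # grid steps to search in each direction
    voxels = []
    for i, j, k in itertools.product(range(-steps, steps + 1), repeat=3):
        dx, dy, dz = i * voxel_mm, j * voxel_mm, k * voxel_mm
        if dx * dx + dy * dy + dz * dz <= radius_mm ** 2:
            voxels.append((center[0] + dx, center[1] + dy, center[2] + dz))
    return voxels


if __name__ == "__main__":
    roi = sphere_voxels((-45, 50, 16))  # example center on the 3-mm grid
    print(len(roi), "voxels,", len(roi) * 27, "mm^3")
```

Under these assumptions each ROI contains 33 voxels; the percent signal change entered into the ANOVA would then be averaged over them.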

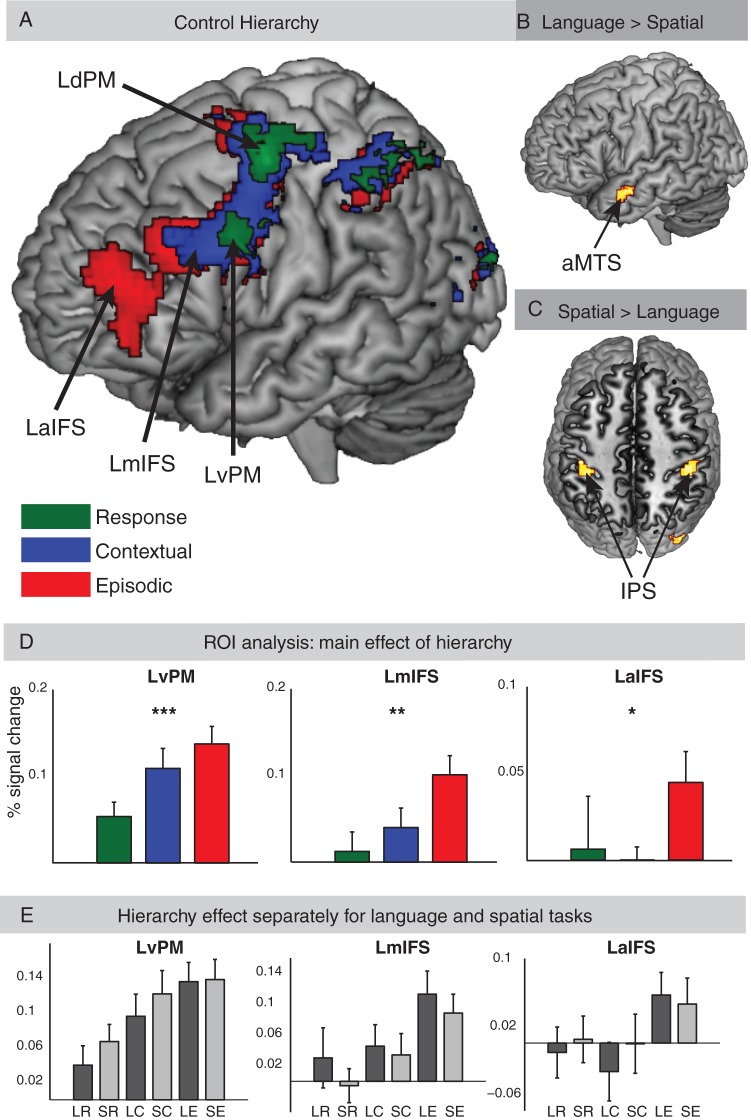

Figure 4.

Patterns of activation across the whole brain for the (A) main effects of cognitive control. Each control condition collapsed across language and spatial tasks, (B) direct contrast of language versus spatial tasks collapsed across all cognitive control conditions, and (C) direct contrast of spatial versus language tasks collapsed across all cognitive control conditions. (D) Analysis of BOLD signal change in lateral frontal ROIs recruited by the cognitive control conditions collapsed across language and spatial tasks. A significant difference in signal change between the 3 different control tasks (Response, Contextual, and Episodic) was observed (*P < 0.05, **P < 0.005, ***P < 0.001). (E) Analysis in lateral frontal ROIs separately for each of the 6 conditions. LR, language task and response control; SR, spatial task and response control; LC, language task and contextual control; SC, spatial task and contextual control; LE, language task and episodic control; SE, spatial task and Episodic Control.

Figure 5.

(A) Patterns of activation for the contrast of Episodic versus Contextual Control (red), Contextual versus Response Control (blue), and Response Control versus Baseline (green), P < 0.005, k = 30 voxels. (B) Patterns of activation for the 3 levels of cognitive control separately for language tasks. (C) Patterns of activation for the 3 levels of cognitive control separately for spatial tasks. (B,C) Activations for Response Control versus Baseline (green), Contextual Control versus Baseline (blue), and Episodic Control versus baseline (red) are shown with an uncorrected threshold of P < 0.001 and k = 30 adjacent voxels. Note that frontal activations in both plots do not survive multiple comparison corrections. See Figure 4A for (corrected) frontal activations of different levels of cognitive control collapsed across language and spatial tasks and see Figure 4B,C for the contrasts of language versus spatial, and vice versa collapsed across all cognitive control conditions.

To identify a possible dorsal–ventral segregation in regions identified in the HIERARCHY effect, 2 different analyses were applied. First, a comparison of individual peak activation for language versus spatial stimuli on the z-axis in the MNI space was conducted. For each participant and for each level of hierarchy, we computed the distance along the z-axis between the activation peaks from the language and spatial task. This value was computed as a difference score (spatial − language), with positive values indicating that the spatial peak was more dorsal (superior) than the language peak. Secondly, a multivariate classification analysis on y- and z-axes was conducted. The z-axis in the MNI space provides only a rough approximation of the dorsal–ventral axis in the frontal cortex. In anatomical space, the dorsal–ventral axis is defined by the plane of the inferior frontal sulcus (IFS), which is tilted along y- and z-axes in the MNI space. To address this concern, we performed a nonlinear pattern classification on y- and z-coordinates (see Fig. 6B). The aim of this analysis was to determine whether in each ROI the location of peak activity classifies the factor DOMAIN in 2-dimensional space. To do so, we investigated whether the location of y- and z-coordinates in a given ROI predicts the domain of the task.
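The z-axis difference score lends itself to a short worked example. The sketch below computes spatial minus language z-coordinates for each participant and a 1-sample t statistic against zero; the function names and the three example peaks are invented for illustration and are not data from the study.

```python
import math
import statistics


def z_difference_scores(language_peaks, spatial_peaks):
    """Per-participant z distance (spatial - language) from (x, y, z) peaks."""
    return [s[2] - l[2] for l, s in zip(language_peaks, spatial_peaks)]


def one_sample_t(values):
    """t statistic and degrees of freedom for a test of mean(values) == 0."""
    n = len(values)
    standard_error = statistics.stdev(values) / math.sqrt(n)
    return statistics.mean(values) / standard_error, n - 1


if __name__ == "__main__":
    # Invented peaks for three hypothetical participants (MNI mm).
    language = [(-45, 50, 10), (-45, 47, 13), (-48, 50, 7)]
    spatial = [(-45, 50, 19), (-42, 53, 16), (-45, 50, 22)]
    scores = z_difference_scores(language, spatial)
    t, df = one_sample_t(scores)
    print(scores, f"t({df}) = {t:.3f}")
```

A positive mean score indicates that spatial peaks lie dorsal to language peaks; a P value would additionally require the t distribution with n − 1 degrees of freedom, which is omitted here.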

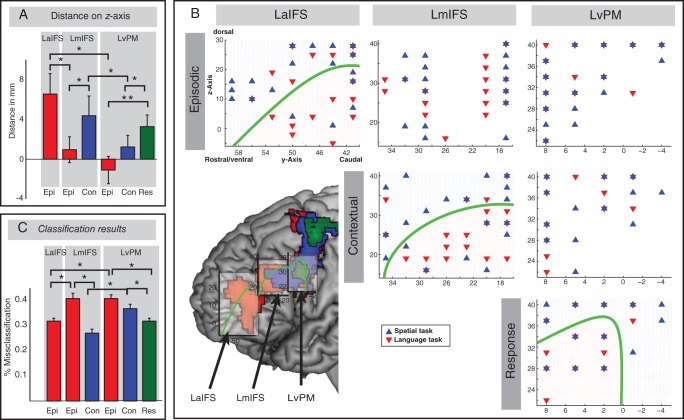

Figure 6.

Classification analysis results. (A) Distance between peak activation for language and spatial tasks on the z-axis. Bars represent mean distance values across participants in the 3 lateral frontal regions (LaIFS, LmIFS, and LvPM) for the cognitive control conditions (Epi, Episodic, Con, Contextual, and Res, Response; *P < 0.05; **P < 0.005). (B) Distribution of peak activity for language and spatial tasks in each participant is plotted in 3 lateral frontal regions for the cognitive control conditions. Individual MNI coordinates of language tasks (red triangles pointing down) and spatial tasks (blue triangles pointing up) were plotted in each ROI (overlapping language and spatial tasks are represented in overlapping triangles pointing down and pointing up). A significant dorsal–ventral segregation is illustrated with a green discrimination line. Lower left: Green discrimination line mapped on whole-brain activation pattern. Squares (light gray) represent bounding boxes of the search space for classification analysis. Bounding boxes were overlaid on activation pattern in lateral frontal regions using the MRIcron software (the coordinates of the bounding boxes are cartoon illustrations). (C) Distribution of the percentage of misclassification errors. A leave-one-participant-out cross-validation was applied on the classification analysis. The low percentage of misclassification errors represents better classification results and stronger dorsal–ventral topographical segregation in a given region and condition (*P < 0.001).

For both analyses, we extracted individual peak maxima from those frontal areas that showed a significant activation for Episodic Control (left anterior IFS, LaIFS [−45 50 16]), Contextual Control (LmIFS [−48 35 31]), and Response Control (left ventral PM, LvPM [−45 2 31]). Bounding boxes of these areas were generated with the Marsbar toolbox (http://marsbar.sourceforge.net/). We defined bounding boxes around coordinates of activation patterns in these 3 regions. In order to take differences in the size of activation between regions into account, the size of bounding boxes was different between the 3 areas. However, the size difference has no effect on statistics, since the comparison of distance on z-axis and classification on y- and z-axes was only conducted within separate bounding boxes. Edge lengths of LaIFS were 15 × 20 × 35 mm (x-y-z). MNI coordinates of the LaIFS bounding box: x-axis = −35 : −50, y-axis = 40 : 60, z-axis = −5 : 30. Edge lengths of LmIFS were 20 × 20 × 25 mm (x-y-z). MNI coordinates of the LmIFS bounding box: x-axis = −35 : −55, y-axis = 15 : 35, z-axis = 15 : 40. Edge lengths of LvPM were 20 × 15 × 20 mm (x-y-z). MNI coordinates of the LvPM bounding box: x-axis = −35 : −55, y-axis = −5 : 10, z-axis = 20 : 40. Bounding boxes are depicted in Figure 6B (lower left part), and they were overlaid on an MNI standard brain and whole-brain main effects using the software MRIcron (http://www.mccauslandcenter.sc.edu/mricro/mricron/). In a next step, peak maxima for the language and spatial tasks were extracted for each participant. For each task, we extracted peak activity only for bounding boxes that showed significant main effects in the whole-brain analysis of HIERARCHY. Thus, in Response Control we extracted local maxima in LvPM, in Contextual Control we extracted local maxima in LvPM and LmIFS, and in Episodic Control we extracted local maxima in LvPM, LmIFS, and LaIFS.
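The bounding-box definitions can be collected in a small table and checked against the stated edge lengths and group peaks. This is an illustrative sketch; the dictionary layout and helper names are hypothetical, and the boxes in the study were generated with Marsbar rather than with code like this.

```python
# Bounding boxes in MNI mm, written as (min, max) per axis.
BOUNDING_BOXES = {
    "LaIFS": {"x": (-50, -35), "y": (40, 60), "z": (-5, 30)},
    "LmIFS": {"x": (-55, -35), "y": (15, 35), "z": (15, 40)},
    "LvPM": {"x": (-55, -35), "y": (-5, 10), "z": (20, 40)},
}

# Boxes searched at each level of cognitive control, as listed in the text.
ROIS_BY_LEVEL = {
    "Response": ["LvPM"],
    "Contextual": ["LvPM", "LmIFS"],
    "Episodic": ["LvPM", "LmIFS", "LaIFS"],
}


def edge_lengths(box):
    """Edge lengths (x, y, z) of a box in mm."""
    return tuple(box[axis][1] - box[axis][0] for axis in "xyz")


def contains(box, peak):
    """True if an (x, y, z) peak lies inside the box, borders included."""
    return all(box[a][0] <= c <= box[a][1] for a, c in zip("xyz", peak))


if __name__ == "__main__":
    for name, box in BOUNDING_BOXES.items():
        print(name, edge_lengths(box))
    print(contains(BOUNDING_BOXES["LaIFS"], (-45, 50, 16)))
```

Each of the three group peaks reported above falls inside its own box, with the LmIFS peak lying on the anterior border (y = 35).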

First, we investigated the distance between peak activations of language and spatial tasks on z-axis in each bounding box. The difference between language peaks and spatial peaks on z-axis was calculated in each participant, resulting in 22 distance values (positive value: language = ventral, spatial = dorsal). Next, 1-sample t-tests against the null hypothesis of no distance between language and spatial tasks on individual distance values were conducted. Post hoc Bonferroni corrections were applied to control for multiple comparisons. Paired sample t-tests were applied to compare conditions between bounding boxes and to compare conditions within bounding boxes. Secondly, z- and y-coordinates of individual peak activations were entered into a multivariate classification algorithm (Krzanowski 2000; Seber 2004). In this function, individual coordinates were randomly divided into a training group (80%) and a testing group (20%). The classification algorithm decoded the individual spatial pattern of 2 conditions (language vs. spatial) in a training group and was then applied to a testing group. A quadratic discrimination function was used, fitting multivariate normal densities with covariance estimates stratified by the group of coordinates of language trials and the group of coordinates of spatial trials. Quality of classification was described by the misclassification error. The misclassification error is an estimate based on the comparison between the training and testing data sets. This is the percentage of observations in the testing data set that are misclassified, weighted by the prior probability for the 2 groups. The classification results were visualized with a function containing the coefficients of the boundary curves between pairs of coordinate groups, illustrating the spatial discrimination between language and spatial task coordinates. 
The boundary between the 2 groups was described by the formula $f = K + Lv + v^{T}Qv$, where v is the z- and y-coordinate vector, K is a constant, L describes the linear contribution, and Q is a 2 × 2 matrix describing the quadratic contribution. K, L, and Q are iteratively determined by minimizing the misclassification in the training group. The classification was performed using the function “classify” as implemented in the Matlab Statistics Toolbox.
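For readers who want to see how a boundary of the form f = K + Lv + vᵀQv arises, the sketch below derives K, L, and Q in closed form from one bivariate normal density per group, which is the textbook construction of a quadratic discriminant. It is a stand-in written in plain Python, not a port of the Matlab routine; the function names, the (y, z) ordering of v, the equal priors, and the example coordinates are all assumptions made for illustration.

```python
import math


def fit_gaussian(points):
    """Mean and 2 x 2 sample covariance of (y, z) points."""
    n = len(points)
    my = sum(p[0] for p in points) / n
    mz = sum(p[1] for p in points) / n
    syy = sum((p[0] - my) ** 2 for p in points) / (n - 1)
    szz = sum((p[1] - mz) ** 2 for p in points) / (n - 1)
    syz = sum((p[0] - my) * (p[1] - mz) for p in points) / (n - 1)
    return (my, mz), ((syy, syz), (syz, szz))


def inverse_and_det(m):
    """Inverse and determinant of a 2 x 2 matrix."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return ((d / det, -b / det), (-c / det, a / det)), det


def matvec(m, v):
    return (m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1])


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]


def quadratic_boundary(language, spatial, prior_language=0.5):
    """K, L, Q such that f(v) > 0 assigns v to the language group."""
    mu1, s1 = fit_gaussian(language)
    mu2, s2 = fit_gaussian(spatial)
    inv1, det1 = inverse_and_det(s1)
    inv2, det2 = inverse_and_det(s2)
    a1, a2 = matvec(inv1, mu1), matvec(inv2, mu2)
    Q = tuple(tuple(-0.5 * (inv1[r][c] - inv2[r][c]) for c in range(2))
              for r in range(2))
    L = (a1[0] - a2[0], a1[1] - a2[1])
    K = (-0.5 * dot(mu1, a1) + 0.5 * dot(mu2, a2)
         - 0.5 * math.log(det1) + 0.5 * math.log(det2)
         + math.log(prior_language / (1.0 - prior_language)))
    return K, L, Q


def boundary_value(v, K, L, Q):
    """Evaluate f(v) = K + Lv + v'Qv."""
    return K + dot(L, v) + dot(v, matvec(Q, v))


def misclassification_error(language, spatial, K, L, Q):
    """Fraction of points falling on the wrong side of the boundary."""
    wrong = sum(boundary_value(v, K, L, Q) <= 0 for v in language)
    wrong += sum(boundary_value(v, K, L, Q) > 0 for v in spatial)
    return wrong / (len(language) + len(spatial))


if __name__ == "__main__":
    # Invented (y, z) peaks: language ventral, spatial dorsal.
    language = [(48, 8), (50, 10), (52, 9), (49, 12), (51, 11)]
    spatial = [(49, 22), (51, 20), (50, 24), (52, 21), (48, 23)]
    K, L, Q = quadratic_boundary(language, spatial)
    print(misclassification_error(language, spatial, K, L, Q))
```

In this construction the coefficients follow directly from the group means and covariances, and the curve f = 0 is the discrimination line of the kind drawn in Figure 6B.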

Two different analyses were conducted to quantify potential classification differences between brain regions as a function of stimulus domain. First, samples of misclassification error values were generated, based on random assignments of groups (language or spatial) to activation coordinates. Groups (language or spatial) were randomly assigned to coordinates in each participant's data and a classification on this data was performed. This was repeated 1000 times, resulting in a random distribution of misclassification error values based on scrambled group-to-data assignments. This procedure was applied to each ROI (LaIFS, LmIFS, and LvPM) and each cognitive control condition (Response, Contextual, and Episodic Control). The actual misclassification error values were then compared with the corresponding random distribution of misclassification errors. Values below the lower boundary of the 90–95% confidence interval of the random distribution were interpreted as a successful classification, that is, language and spatial tasks are represented separately in a given ROI. Values within one SD of the random misclassification distribution were interpreted as unsuccessful classification, that is, there is no spatial separation between the 2 conditions in a given ROI. Secondly, a “leave-one-participant-out” cross-validation was adopted, in which a single participant was iteratively left out of the classification analysis. In each ROI, data from 21 of 22 participants were used for the classification analysis, resulting in a distribution of 22 different misclassification errors. Paired sample t-tests were applied to compare cross-validated misclassification errors between regions and conditions.
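The logic of the scrambled-label distribution and of the leave-one-participant-out scheme can be sketched as follows. To keep the example short, a nearest-centroid rule is swapped in for the quadratic discriminant, so the numbers it produces are not comparable with the reported ones; the function names, the within-participant label swap, and the data layout (one pair of (y, z) peaks per participant) are assumptions.

```python
import random


def centroid(points):
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def dist2(a, b):
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def error_rate(pairs):
    """Misclassification rate of a nearest-centroid rule (stand-in classifier).

    `pairs` holds one (language_peak, spatial_peak) tuple per participant.
    """
    language = [p[0] for p in pairs]
    spatial = [p[1] for p in pairs]
    c_lang, c_spat = centroid(language), centroid(spatial)

    def looks_language(v):
        return dist2(v, c_lang) <= dist2(v, c_spat)

    wrong = sum(not looks_language(v) for v in language)
    wrong += sum(looks_language(v) for v in spatial)
    return wrong / (2 * len(pairs))


def permutation_null(pairs, n_perm=1000, seed=0):
    """Error rates after randomly swapping the two labels within participants."""
    rng = random.Random(seed)
    null = []
    for _ in range(n_perm):
        scrambled = [(s, l) if rng.random() < 0.5 else (l, s) for l, s in pairs]
        null.append(error_rate(scrambled))
    return null


def leave_one_participant_out(pairs):
    """One error rate per left-out participant, computed on the remaining ones."""
    return [error_rate(pairs[:i] + pairs[i + 1:]) for i in range(len(pairs))]


if __name__ == "__main__":
    # Invented peaks for 8 hypothetical participants: language ventral, spatial dorsal.
    pairs = [((50 + i % 3, 10 + i % 2), (50 + (i + 1) % 3, 22 + i % 2))
             for i in range(8)]
    observed = error_rate(pairs)
    null = permutation_null(pairs)
    fraction_as_low = sum(e <= observed for e in null) / len(null)
    print(observed, fraction_as_low, leave_one_participant_out(pairs))
```

The observed error is then located within the scrambled distribution, and the per-fold errors from the leave-one-participant-out loop are what would be compared across regions and conditions.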

Results

The present experimental design allowed for a direct comparison of hierarchical cognitive control demands at 3 levels (Response Control, Contextual Control, and Episodic Control) and stimulus domain (i.e., Language or Spatial).

Behavioral Data

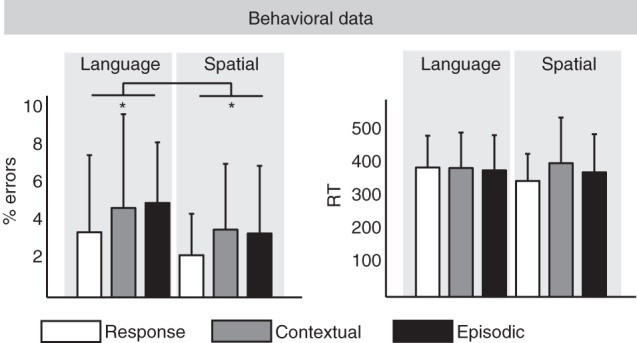

A 2 × 3 ANOVA with the factors DOMAIN (Language, Spatial) and HIERARCHY (Response Control, Contextual Control, and Episodic Control) on the error rates and reaction times was performed (see Fig. 3). The analysis of error rates revealed a main effect of DOMAIN (F(1,21) = 4.48, P < 0.05). Participants made significantly more errors during the language tasks (4.41% errors) than during the spatial tasks (3.07% errors). However, the main effect of HIERARCHY (F(2,42) = 2.01) and the interaction effect (F(2,42) < 1) were not significant. The analysis of reaction times showed no significant main effects or interactions (Fs < 1).

Figure 3.

Behavioral results.

Functional MRI Data

Univariate Analysis

Hierarchy effect

The effect of hierarchy revealed a caudal–rostral gradient of activity within the lateral frontal cortex (see Fig. 4A). Each level of the hierarchy was compared with baseline, collapsed across language and spatial tasks. Response Control blocks activated left dorsal PM (LdPM, ∼BA 6) and LvPM (∼BA 6). Contextual Control blocks activated LdPM and LvPM, and left mid-IFS (LmIFS, ∼BA 9/44). Episodic Control blocks activated LdPM and LvPM, LmIFS, and left and right anterior IFS (LaIFS/RaIFS, ∼BA 45/46). LmIFS activity spread into the inferior frontal gyrus (IFG, pars orbitalis) and middle frontal gyrus (MFG, posterior part). LaIFS activity spread into the left IFG (pars triangularis), the adjacent left MFG (anterior, inferior part), and left orbito-frontal gyrus. All 3 cognitive control tasks led to additional activity in bilateral IPS, bilateral middle occipital gyrus (MOG), bilateral anterior insula (AI), supplementary motor area (SMA, extending into pre-SMA), right dorsal and ventral PM (extending into the right inferior frontal junction, IFJ), and left thalamus (see Tables 1–3).

Table 2.

Contextual Control

| Brain region | BA | x | y | z | Zmax |
|---|---|---|---|---|---|
| L mIFS | 9/44 | −48 | 32 | 31 | 3.83 |
| L PM | 6 | 39 | −1 | 49 | 4.65 |
| R mIFS | 45 | 51 | 38 | 28 | 4.36 |
| R PM | 6 | 45 | 5 | 31 | 4.41 |
| L AI | | −27 | 23 | 7 | 5.97 |
| R AI | | 30 | 29 | 4 | 5.04a |
| L IPS | 7 | −30 | −52 | 46 | 7.25 |
| R IPS | 7 | 27 | −64 | 49 | 5.60a |
| L SMA/pre-SMA | 6/32 | −9 | 11 | 49 | 5.82 |
| L Thalamus | | −12 | −16 | 13 | 5.97 |

Note: Anatomical areas, approximate Brodmann's Area (BA), mean x, y, and z Montreal Neurological Institute (MNI) coordinates, and maximal Z values of the significant activations are presented.

L, left hemisphere; R, right hemisphere; mIFS, mid-inferior frontal sulcus; PM, premotor cortex; AI, anterior insula; IPS, intraparietal sulcus; SMA, supplementary motor area.

a: Zmax values were extracted at a higher threshold (P < 0.0001) because, at the lower threshold, the peak coordinates of the region were included within a larger cluster and could not be separated.

Table 1.

Episodic Control

| Brain region | BA | x | y | z | Zmax |
|---|---|---|---|---|---|
| L aIFS | 45/46 | −36 | 56 | 22 | 4.03 |
| L mIFS | 9/44 | −51 | 32 | 25 | 4.60 |
| L dPM | 6 | −42 | −4 | 55 | 4.91a |
| L vPM | 6 | −45 | 5 | 34 | 6.06 |
| R dPM | 6 | 36 | 2 | 52 | 5.09 |
| R vPM | 6 | 45 | 5 | 31 | 4.72a |
| L AI | | −24 | 23 | 4 | 6.05 |
| R AI | | 24 | 23 | 4 | 4.94a |
| L IPS | 7 | −27 | −61 | 46 | 6.84 |
| R IPS | 7/19 | 30 | −64 | 34 | 5.69a |
| L SMA/pre-SMA | 6/32 | −9 | 11 | 49 | 4.76a |
| L Thalamus | | −15 | −16 | 22 | 4.87 |

Note: Anatomical areas, approximate Brodmann's Area (BA), mean x, y, and z Montreal Neurological Institute (MNI) coordinates, and maximal Z values of the significant activations are presented.

L, left hemisphere; R, right hemisphere; aIFS, anterior inferior frontal sulcus; mIFS, mid-inferior frontal sulcus; dPM, dorsal premotor cortex; vPM, ventral premotor cortex; AI, anterior insula; IPS, intraparietal sulcus; SMA, supplementary motor area.

a: Zmax values were extracted at a higher threshold (P < 0.0001) because, at the lower threshold, the peak coordinates of the region were included within a larger cluster and could not be separated.

Table 3.

Response Control

| Brain region | BA | x | y | z | Zmax |
|---|---|---|---|---|---|
| L vPM | 6 | −45 | 5 | 34 | 6.06 |
| L dPM | 6 | −42 | −4 | 55 | 4.98 |
| R vPM | 6 | 45 | 5 | 31 | 4.42 |
| R dPM | 6 | 39 | −1 | 52 | 3.92 |
| L AI | | −30 | 20 | 7 | 5.10 |
| R AI | | 42 | 20 | 7 | 4.19 |
| L IPS | 7 | −30 | −55 | 46 | 6.32 |
| R IPS | 7 | 27 | −64 | 46 | 5.40 |
| R MOG | 18 | 30 | −88 | 7 | 5.34 |
| L SMA/pre-SMA | 6/32 | −9 | 11 | 49 | 4.70 |
| L Thalamus | | −9 | −16 | 10 | 4.22 |

Note: Anatomical areas, approximate Brodmann's Area (BA), mean x, y, and z Montreal Neurological Institute (MNI) coordinates, and maximal Z values of the significant activations are presented.

L, left hemisphere; R, right hemisphere; dPM, dorsal premotor cortex; vPM, ventral premotor cortex; AI, anterior insula; IPS, intraparietal sulcus; MOG, middle occipital gyrus; SMA, supplementary motor area.

The contrast between Episodic Control and Contextual Control (Fig. 5A) revealed activation in the left MFG and IFG, left pars opercularis, left caudate nucleus, and SMA. The contrast between Contextual Control and Response Control activated the left IFJ, left pars triangularis, left premotor cortex, and left MOG.

Domain effect

Language tasks (compared with spatial tasks, collapsed across hierarchical levels) revealed greater activity in the left anterior middle temporal sulcus (∼BA 21) and the precuneus (∼BA 7). Spatial tasks revealed greater activity in bilateral IPS (∼BA 40) and right MOG (∼BA 19). No frontal cortical area was activated in either direct contrast of language versus spatial tasks (see Fig. 4B,C and Table 4). Contrasts of each hierarchical level versus baseline, computed separately for the spatial and language tasks, are presented in Figure 5B,C.

Table 4.

Main effects

| Brain region | BA | x | y | z | Zmax |
|---|---|---|---|---|---|
| Language > Spatial | | | | | |
| L MTS | 21 | −48 | −1 | −20 | 4.69 |
| L Precuneus | 7 | −6 | −58 | 34 | 4.13 |
| Spatial > Language | | | | | |
| R IPS | 40 | 45 | −34 | 43 | 4.28 |
| L IPS | 40 | −39 | −31 | 40 | 4.18 |
| R MOG | 19 | 36 | −88 | 16 | 3.69 |
| Episodic > Contextual | | | | | |
| L SMA | 6 | −12 | −1 | 67 | 3.74 |
| L Caudate | | −12 | 11 | 13 | 3.60 |
| L MFG/IFG | 45/46 | −48 | 50 | 4 | 2.77 |
| L IFG (opercularis) | 44 | −57 | 20 | 31 | 3.51 |
| Contextual > Response | | | | | |
| L IFJ | 44/6 | −36 | 5 | 28 | 4.29 |
| L IFG (triangularis) | 44 | −45 | 17 | 19 | 3.86 |
| L PM | 6 | −39 | −1 | 43 | 3.28 |
| L MOG | 18 | 30 | −82 | 10 | 3.85 |

Note: Anatomical areas, approximate Brodmann's Area (BA), mean x, y, and z Montreal Neurological Institute (MNI) coordinates, and maximal Z values of the significant activations are presented.

L, left hemisphere; R, right hemisphere; MTS, middle temporal sulcus; IPS, intraparietal sulcus; MOG, middle occipital gyrus; SMA, supplementary motor area; MFG, middle frontal gyrus; IFG, inferior frontal gyrus; PM, premotor cortex; IFJ, inferior frontal junction.

ROI Analysis

A 3 × 2 × 3 ANOVA with the factors ROI (LaIFS, LmIFS, and LvPM), DOMAIN (Language, Spatial), and HIERARCHY (Response Control, Contextual Control, and Episodic Control) was conducted on BOLD percent signal change (see Fig. 4D,E). We found a significant main effect of ROI (F2,42 = 16.24, P < 0.001) and a main effect of HIERARCHY (F2,42 = 8.32, P < 0.001). The 3-way interaction and the main effect of DOMAIN were not significant (Fs < 1). The interaction between ROI and HIERARCHY (F2,42 = 2.57) and the interaction between ROI and DOMAIN (F2,42 = 2.95) also did not reach significance. All effects were Greenhouse-Geisser corrected. Note that hemodynamic differences between ROIs might represent general differences in vascular changes. In addition, testing the full factorial design might not be appropriate when some of the cells contain data that mostly represent noise because activations were sub-threshold.

Thus, in a next step, a 2 × 3 ANOVA with the factors DOMAIN (Language, Spatial) and HIERARCHY (Response Control, Contextual Control, and Episodic Control) was conducted on BOLD percent signal change in each ROI (see Fig. 4D). A main effect of HIERARCHY was found in all 4 lateral frontal regions identified in the map-wise analyses: LaIFS (F2,42 = 3.49, P < 0.05), LmIFS (F2,42 = 5.71, P < 0.005), LvPM (F2,42 = 8.72, P < 0.001), and LdPM (F2,42 = 3.69, P < 0.05). The main effect of DOMAIN and the interaction of the 2 factors were not significant (all Fs < 1.5). Thus, the pattern of activity along the rostro-caudal axis in the lateral frontal cortex was similar between the language and spatial tasks.

Dorsal–Ventral Discrimination Analyses

Whole-brain univariate analyses did not reveal distinctly different regions recruited by language and spatial tasks across the lateral frontal cortex. However, to accept this null finding, a test more sensitive than the whole-brain analysis is necessary. We therefore applied 2 different analyses to test whether topographic separation of stimulus domain existed within each frontal region recruited by different levels of cognitive control (see Table 5 for an overview of the results).

Table 5.

Dorsal–ventral discrimination results

| Condition | LaIFS t-value | LaIFS m-err | LmIFS t-value | LmIFS m-err | LvPM t-value | LvPM m-err |
|---|---|---|---|---|---|---|
| Episodic | 3.34** | 0.318∼ | 0.77 | 0.409 | 0.83 | 0.409 |
| Contextual | | | 2.31∼ | 0.273* | 0.28 | 0.364 |
| Response | | | | | 2.93* | 0.301∼ |

Note: Summary of t-values and misclassification errors (m-err) in frontal regions (LaIFS, LmIFS, and LvPM) and Cognitive Control conditions (Episodic, Contextual, and Response Control).

t-values: ∼P = 0.06; *P < 0.05; **P < 0.005; all Bonferroni-corrected. m-err: ∼misclassification error below the lower boundary of 90% of confidence interval of random distribution; *misclassification error below the 95% of confidence interval.

The first analysis examined the distance on the z-axis between the peak activations within frontal regions (LvPM, LmIFS, and LaIFS) across individual participants for the Language and Spatial tasks (see Fig. 6A). One-sample t-tests on individual distance values revealed a significant difference between Language and Spatial tasks for Episodic Control in LaIFS (t(21) = 3.34, P = 0.003), a significant distance effect for Contextual Control in LmIFS (t(21) = 2.31, P = 0.03), and a significant distance effect for Response Control in LvPM (t(21) = 2.93, P = 0.008). Episodic Control in LmIFS and LvPM, and Contextual Control in LvPM, did not show a significant difference between Language and Spatial tasks on the z-axis (all ts < 1.1, n.s.). Note that we also found whole-brain activation in LdPM; however, we found no significant difference on the z-axis in this region in any condition. In the next step, we compared the distance on the z-axis between the 3 conditions in the 3 regions using paired-sample t-tests. In the Episodic Control condition, we compared the distance on the z-axis between LaIFS and LmIFS and found a significant difference between the 2 regions (t(21) = 2.47, P = 0.02), as well as LmIFS and LvPM (t(21) = 3.84, P < 0.001). In the Contextual Control condition, we found a significant difference in distance between LmIFS and LvPM (t(21) = 2.21, P = 0.04). Within LmIFS, we found a marginally significant difference between Episodic Control and Contextual Control (t(21) = 1.79, P = 0.08). Within LvPM, we found a significant difference between Response Control and Episodic Control (t(21) = 3.02, P = 0.006) and a marginally significant difference between Response Control and Contextual Control (t(21) = 1.98, P = 0.06).
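As an illustration of the distance analysis, the one-sample t statistic on per-participant z-axis distances could be computed as in the following sketch. It rests on assumptions not stated in the text: the distance is taken here as the signed difference (spatial peak z minus language peak z), peaks are given as (x, y, z) tuples, and the function names `z_distances` and `one_sample_t` are hypothetical.

```python
import math
import statistics


def z_distances(spatial_peaks, language_peaks):
    """Per-participant signed z-axis distance (assumed: spatial z minus language z).

    Each argument is a list of (x, y, z) peak coordinates, one per participant,
    in the same participant order.
    """
    return [s[2] - l[2] for s, l in zip(spatial_peaks, language_peaks)]


def one_sample_t(values, popmean=0.0):
    """One-sample t statistic and degrees of freedom against `popmean`."""
    n = len(values)
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)  # sample SD with an n - 1 denominator
    t = (mean - popmean) / (sd / math.sqrt(n))
    return t, n - 1
```

With 22 participants this yields a statistic with 21 degrees of freedom, matching the t(21) values reported above; the P value would then be read from the t distribution.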

In the second analysis, a multivariate classification method was used to investigate whether topographic separations also exist on the y- and z-axes. This analysis was conducted in the same regions and on the same conditions as in the first analysis. We found qualitatively different patterns of topographical segregation in the 3 frontal ROIs for the 3 cognitive control conditions. These findings were essentially the same as those of the first analysis. Episodic Control tasks exhibited different distributions of activation peak coordinates for different stimulus domains in LaIFS (misclassification error: 0.31), such that peak activation of the language task clustered ventral to the IFS, whereas the spatial task clustered dorsal to the IFS (see green line in Fig. 6B). To demonstrate that this classification was qualitatively different from a random distribution of classifications in LaIFS for Episodic Control tasks, we compared the actual misclassification error with misclassification errors of scrambled data-to-group classifications. The actual misclassification error was below the lower boundary of the 90% confidence interval (CI) of the random samples, demonstrating that the classification is qualitatively different from a random distribution. Episodic Control tasks exhibited no topographical segregation in LmIFS (misclassification error: 0.41; within 1 SD of random distribution) or in LvPM (misclassification error: 0.41; within 1 SD of random distribution). Contextual Control tasks exhibited different distributions of activation peak coordinates for different stimulus domains in LmIFS, that is, a dorsal–ventral segregation along the IFS for language and spatial stimuli (misclassification error: 0.27; below the lower boundary of 95% CI). However, Contextual Control tasks exhibited no topographical segregation in LvPM (misclassification error: 0.37; within 1 SD of random distribution). 
Finally, Response Control tasks exhibited a topographical segregation in LvPM (misclassification error: 0.32; below the lower boundary of 90% CI). To further quantify the classification results, a leave-one-participant-out cross-validation was applied. Paired-sample t-tests were applied to compare cross-validated misclassification errors between regions and conditions (see Fig. 6C). Mean misclassification values of the cross-validation were virtually identical to those of the first analysis (with all participants). In addition, the standard deviation was very small (ranging between 0.0117 and 0.0212), pointing toward highly robust classification results across participants. In the Episodic Control condition, we compared misclassification errors between LaIFS and LmIFS and found a significant difference between the 2 regions (t(21) = 17.34, P < 0.001), as well as LmIFS and LvPM (t(21) = 18.82, P < 0.001). In the Contextual Control condition, we found a significant difference in misclassification error between LmIFS and LvPM (t(21) = 21.78, P < 0.001). Within LmIFS, we found a significant difference between Episodic Control and Contextual Control (t(21) = 26.54, P < 0.001). Within LvPM, we found a significant difference between Response Control and Episodic Control (t(21) = 18.19, P < 0.001) and also a significant difference between Response Control and Contextual Control (t(21) = 10.65, P < 0.001).
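The leave-one-participant-out step and the paired comparison of the resulting error values can be sketched as follows. This is a simplified illustration, not the study's method: a one-dimensional midpoint rule on z coordinates stands in for the multivariate classifier, and the names `midpoint_error`, `leave_one_out_errors`, and `paired_t` are hypothetical.

```python
import math
import statistics


def midpoint_error(z_lang, z_spat):
    """Misclassification error of a 1-D midpoint rule on z coordinates (assumed).

    A peak is assigned to the group whose mean z lies on the same side of the
    midpoint between the two group means.
    """
    mean_lang = statistics.fmean(z_lang)
    mean_spat = statistics.fmean(z_spat)
    cut = (mean_lang + mean_spat) / 2
    lang_is_lower = mean_lang <= mean_spat
    wrong = sum((z > cut) == lang_is_lower for z in z_lang)
    wrong += sum((z <= cut) == lang_is_lower for z in z_spat)
    return wrong / (len(z_lang) + len(z_spat))


def leave_one_out_errors(z_lang, z_spat):
    """One error value per left-out participant (index i dropped from both lists)."""
    return [
        midpoint_error(z_lang[:i] + z_lang[i + 1:], z_spat[:i] + z_spat[i + 1:])
        for i in range(len(z_lang))
    ]


def paired_t(a, b):
    """Paired-sample t statistic and degrees of freedom for matched lists a, b.

    Raises ZeroDivisionError if all paired differences are identical.
    """
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = statistics.fmean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1
```

With 22 participants, `leave_one_out_errors` returns 22 error values per region and condition, and `paired_t` applied to two such lists gives a statistic with 21 degrees of freedom.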

Discussion

To our knowledge, this is the first study aimed at investigating the neural underpinnings of manipulating processes that differentially engage both the rostral–caudal and dorsal–ventral axes of lateral frontal cortex. Given evidence for a rostro-caudal gradient of function in the lateral frontal cortex during the processing of increasingly abstract representations (Koechlin et al. 2003; Badre and D'Esposito 2007), we tested whether this axis of functional organization is sensitive to processes engaged by different stimulus domains (e.g., spatial vs. language). To distinguish among 4 possible frontal organization schemes (see Fig. 1), we developed a new experimental design to investigate cognitive control processes engaged by both the abstractness of action representations and the stimulus domain. Abstractness was manipulated by using 3 different task sets that varied in relational complexity, whereas stimulus domain was manipulated using language and spatial stimuli. Relational complexity refers to the process of manipulating the relationship between task components (e.g., to associate a particular cue with a task) and drawing inferences about that relationship (Holyoak and Cheng 2011). Consistent with previous studies, we found that increases in relational complexity were associated with a rostro-caudal gradient of activation in lateral frontal cortex. Moreover, this pattern of activity was similar for both the language and spatial tasks, without clearly distinct activity in dorsal and ventral subregions of frontal cortex. In more directed analyses, within each distinct region of activity along the rostro-caudal axis of lateral frontal cortex, evidence was found for a dorsal–ventral topographic segregation for the processing of different stimulus domains as a function of the level of control hierarchy.

With both spatial and language stimuli, we found dissociable patterns of frontal activity by varying the abstractness of cognitive control processes. Three levels of abstractness were investigated that we labeled Response, Contextual, and Episodic Control (Koechlin et al. 2003). Response Control involved 1 relation (S–R mapping), Contextual Control involved 2 sets of relations (cue–task association and S–R mapping), and Episodic Control involved 3 sets of relations (cue–cue association, cue–task association, and S–R mapping). We found evidence for a rostro-caudal gradient in lateral frontal cortex that depended on the level of abstractness in cognitive control. The most rostral frontal region, LaIFS, was exclusively activated during Episodic Control, the more caudal LmIFS was activated during Episodic and Contextual Control, and the most caudal frontal regions, LvPM and LdPM, were activated during all 3 levels of the control hierarchy. Note that the present experiment differs from a previous one on cognitive control hierarchy by Badre and D'Esposito (2007) in that we did not apply a parametric manipulation for each of the 3 levels of control hierarchy. Badre and D'Esposito (2007) used this type of manipulation to demonstrate that differences in difficulty between the 4 cognitive control levels did not account for the pattern of activity across the frontal rostro-caudal axis. However, unlike that study, we did not find differences in error rates and reaction times for the 3 levels of control in our tasks, eliminating the need for such a parametric manipulation of task difficulty. In this study, we also found that lateral frontal cortex activity for the 3 levels of cognitive control was restricted to the left hemisphere (see Table 4). There are likely multiple possible post hoc explanations for this finding.

The findings from whole-brain analyses were corroborated by an ROI analysis, such that LaIFS exhibited increased activity only in Episodic Control, LmIFS exhibited increased activity during Episodic and Contextual Control, and LvPM and LdPM exhibited increased activity during all 3 conditions. Thus, activity progressed from the most caudal to rostral frontal regions with increasing levels of abstractness in cognitive control, consistent with previous studies utilizing similar tasks (Koechlin et al. 2003; Badre and D'Esposito 2007).

It is important to note that Koechlin et al. (2003) and Badre and D'Esposito (2007) suggested different hypotheses underlying the rostro-caudal organization of lateral frontal cortex. While Koechlin et al. (2003) highlight the importance of reduction of uncertainty during action selection processes and temporal proximity versus temporal remoteness of action selection, Badre and D'Esposito (2007) propose that processing of abstract information (e.g., nested task sets) versus that of less abstract information (e.g., S–R mapping) leads to the observed rostro-caudal gradient. It should be noted that both differences in abstraction (Badre and D'Esposito 2007) and the time scale of cognitive control (Koechlin et al. 2003) were present in our experimental design, such that higher levels of abstractness in the Episodic Control condition (i.e., S–R mapping is nested in cue–task association) also necessitated maintaining task sets over longer time lags (i.e., cue–cue information maintenance in a block). Thus, we could not disentangle the role of these 2 factors in engaging each hierarchy level, nor was it our intention to do so. However, Reynolds et al. (2012) directly tested these 2 factors and found an activation pattern consistent with a third alternative hypothesis, suggesting similar involvement of different subregions in lateral frontal cortex during each hierarchy level, such that responses differed as a function of active maintenance (sustained activation) and flexible task updating (transient activation) of task-relevant representations.

Our results are consistent with a growing body of literature suggesting a hierarchical organization of the lateral frontal cortex (Fuster 2004; Botvinick 2008; Badre and D'Esposito 2009). Evidence for hierarchy is supported by an asymmetrical dependency between different levels of abstractness, that is, first-order stimulus–response mappings are contingent upon more abstract second- (and higher-) order rules. These asymmetrical activation patterns along the rostro-caudal axis were observed in our study. Specifically, we found that the most rostral frontal region exhibited increased activity only during the highest level of abstractness, the mid-frontal region exhibited increased activity at the 2 highest levels, and the caudal frontal region exhibited increased activity at all 3 levels of abstractness (see Fig. 5). Moreover, these findings are consistent with a study that has directly tested the cognitive control hierarchy using patients with focal frontal lesions (Badre et al. 2009).

Additional analyses revealed a dorsal–ventral segregation within each of the lateral frontal regions engaged by the cognitive control conditions. In our first analysis of the effects of processing different stimulus domains, we found a significant difference in the distance between peak activations in lateral frontal subregions for language and spatial tasks on the z-axis (see Fig. 6A). In a second analysis, we took into account the anatomical characteristics of the lateral frontal cortex (i.e., dorsal–ventral axis follows the IFS that is tilted on the y-axis) by using a nonlinear classification analysis of peaks of activation in each participant during performance of either the language or spatial tasks. In this analysis, we corroborated the findings in the first analysis demonstrating that cognitive control processes engaged by the spatial tasks recruited more dorsal portions of each frontal ROI, whereas cognitive control processes engaged by the language tasks recruited more ventral portions (see Fig. 6B). A post hoc interpretation of these unexpected findings could be that, within the same frontal region, there are distinct neuronal populations that can subserve cognitive control processes engaged by language, spatial, or perhaps both types of stimuli. Consistent with this perspective, Rao et al. (1997) demonstrated that, during a working memory task, some neurons within macaque prefrontal cortex are tuned specifically to goal-relevant object or spatial information, while other neurons seem to code for both relevant object and location information. Moreover, Meyer et al. (2011) demonstrated that, in monkeys performing a cognitive control task, the selectivity of domain-specific neurons in prefrontal cortex decreases after training, but some neurons remain biased toward stimulus domain. Thus, these cell-recording studies demonstrated adaptive coding of lateral frontal neurons. 
Similar to these findings, the topographical segregation in our study possibly reflects the adaptive nature of the lateral frontal cortex during information processing necessary for goal-directed behavior. However, this speculative interpretation remains a critical objective for future investigations. A novel finding in our study was that the dorsal–ventral segregation differed between frontal subregions during different behavioral conditions. For instance, LaIFS, which was the most rostral region activated during Episodic Control, demonstrated significantly more dorsal–ventral segregation during the processing of different stimulus domains during Episodic Control in comparison with the more caudal regions LmIFS and LvPM. Likewise, LmIFS, which was the most rostral region activated during Contextual Control, demonstrated significantly more stimulus domain dorsal–ventral segregation during Contextual Control tasks in comparison with the more caudal region LvPM. Finally, a similar pattern existed for LvPM during Response Control. Thus, the cognitive control hierarchy influenced the processes engaged by tasks with different stimulus domains, such that a region exhibited more dorsal–ventral segregation during task performance, if it was the highest level processor for a particular task. How can one account for these findings? According to models of the rostro-caudal axis of lateral frontal cortex (Koechlin et al. 2003; Badre and D'Esposito 2007), the most rostral region implicated in a task processes the most abstract task rules that are relevant for the task. In the present task, the rules that are relevant are domain-specific (i.e., spatial rules for the spatial task and semantic rules for the language task), thus it is possible that at the highest level of abstraction, task rules are represented or processed in a domain-specific fashion. 
In this way, distinct neuronal populations, which are preferentially tuned to or subserve the different relevant domain-specific rules, may be selectively recruited in high-level regions. On subsequent lower levels of processing, task rules may be represented or processed in a more domain-general fashion, and thus distinct neuronal populations representing or processing domain-specific rules need not be recruited. Indeed, it is possible that the processing by higher-level regions that are more closely tied to domain-specific rules may enable lower-level regions to process in a more domain-general fashion (see Fig. 1D). This interpretation is consistent with the findings of Meyer et al. (2011), demonstrating that domain-specific tuning is reduced during training, yet frontal neurons still demonstrate domain-specific tuning when required by the task. In particular, our results suggest that LvPM generates a response (i.e., button press) in a domain-sensitive code, only when there is not an additional level of control prior to a response. This finding seems to be counterintuitive since Response Control should be similar whether or not it has been preceded by a task. A speculative interpretation could be that more rostral frontal areas provide a top-down influence on LvPM when task properties become more abstract, diminishing the domain sensitivity of LvPM. In this way, topographical segregation of stimulus domain may only take place at the specific level of the control hierarchy that is computationally responsible for the information integration at that level.

This study was designed to explore possible different dorsal–ventral interactions on the rostro-caudal cognitive control axis. In our design, we used cognitive control tasks that differed in type of information being processed (i.e., dot patterns vs. words), which likely required the engagement of different types of cognitive control processes. For example, the language tasks used in our study likely required such processes as controlled semantic retrieval and item selection, whereas our spatial tasks likely required such processes as fine spatial discrimination, chunking, or relational processing. There is a wealth of evidence showing that VLPFC is engaged during controlled retrieval/item selection (Badre and Wagner 2007; Race et al. 2009), and that specific regions along its rostro-caudal axis may be differentially engaged in selection versus retrieval or at differing levels of item abstraction (Badre et al. 2005). For example, Badre et al. (2005) showed that semantic retrieval, manipulated by different types of associative strengths between target words (e.g., candle and flame vs. candle and halo), engages rostral VLPFC. In contrast, semantic selection, manipulated by the number of target words to be selected (2 vs. 4 words presented) or the type of judgment task [association (e.g., ivy and league) vs. attribution (e.g., tar and coal)], activated mid-portion of VLPFC. A recent study by Race et al. (2009) investigated VLPFC function during different levels of mnemonic control in a repetition-priming semantic discrimination task. It was demonstrated that control of motor response engaged caudal VLPFC (∼BA 6/44), control of target word selection engaged mid-VLPFC (∼BA 45), and control of judgment task selection engaged rostral PFC (∼BA 47). Our study was not designed to determine the specific processes that lead to topographical segregation along the dorsal–ventral axis. 
Nevertheless, the pattern of segregation that we observed provides a new insight in that there is clearly an interaction between the functions subserved by the dorso-ventral and rostro-caudal frontal axes. Future studies that manipulate cognitive processing independent of stimulus domain are needed to address these issues and to broaden current theory regarding the organization of prefrontal cortex.

Funding

This work was supported by NIH grants (NS40813 and MH63901) and the Leopoldina—National Academy of Science grant (LPDS 2009–2020).

Notes

Conflict of Interest: None declared.

References

- Badre D, D'Esposito M. 2007. Functional magnetic resonance imaging evidence for a hierarchical organization of the prefrontal cortex. J Cogn Neurosci. 19:2082–2099.

- Badre D, D'Esposito M. 2009. Is the rostro-caudal axis of the frontal lobe hierarchical? Nat Rev Neurosci. 10:659–669.

- Badre D, Hoffman J, Cooney JW, D'Esposito M. 2009. Hierarchical cognitive control deficits following damage to the human frontal lobe. Nat Neurosci. 12:515–522.

- Badre D, Poldrack RA, Paré-Blagoev EJ, Insler RZ, Wagner AD. 2005. Dissociable controlled retrieval and generalized selection mechanisms in ventrolateral prefrontal cortex. Neuron. 47:907–918.

- Badre D, Wagner AD. 2007. Left ventrolateral prefrontal cortex and the cognitive control of memory. Neuropsychologia. 45:2883–2901.

- Botvinick MM. 2008. Hierarchical models of behavior and prefrontal function. Trends Cogn Sci (Regul Ed). 12:201–208.

- Buckner RL. 2003. Functional-anatomic correlates of control processes in memory. J Neurosci. 23:3999–4004.

- Chein JM, Fissell K, Jacobs S, Fiez JA. 2002. Functional heterogeneity within Broca's area during verbal working memory. Physiol Behav. 77:635–639.

- Courtney SM. 2004. Attention and cognitive control as emergent properties of information representation in working memory. Cogn Affect Behav Neurosci. 4:501–516.

- Courtney SM, Petit L, Haxby JV, Ungerleider LG. 1998. The role of prefrontal cortex in working memory: examining the contents of consciousness. Philos Trans R Soc Lond B Biol Sci. 353:1819–1828.

- Curtis CE, D'Esposito M. 2004. The effects of prefrontal lesions on working memory performance and theory. Cogn Affect Behav Neurosci. 4:528–539.

- Curtis CE, D'Esposito M. 2003. Success and failure suppressing reflexive behavior. J Cogn Neurosci. 15:409–418.

- Duncan J. 2010. The multiple-demand (MD) system of the primate brain: mental programs for intelligent behaviour. Trends Cogn Sci (Regul Ed). 14:172–179. [DOI] [PubMed] [Google Scholar]

- Fiez JA, Raife EA, Balota DA, Schwarz JP, Raichle ME, Petersen SE. 1996. A positron emission tomography study of the short-term maintenance of verbal information. J Neurosci. 16:808–822. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Funahashi S, Bruce CJ, Goldman-Rakic PS. 1989. Mnemonic coding of visual space in the monkey's dorsolateral prefrontal cortex. J Neurophysiol. 61:331–349. [DOI] [PubMed] [Google Scholar]

- Fuster JM. 1997. The prefrontal cortex: anatomy, physiology, and neuropsychology of the frontal lobe. New York: Lippincott-Raven.

- Fuster JM. 2004. Upper processing stages of the perception-action cycle. Trends Cogn Sci (Regul Ed). 8:143–145.

- Goldman-Rakic PS. 1996. The prefrontal landscape: implications of functional architecture for understanding human mentation and the central executive. Philos Trans R Soc Lond B Biol Sci. 351:1445–1453.

- Holyoak KJ, Cheng PW. 2011. Causal learning and inference as a rational process: the new synthesis. Annu Rev Psychol. 62:135–163.

- Koechlin E, Hyafil A. 2007. Anterior prefrontal function and the limits of human decision-making. Science. 318:594–598.

- Koechlin E, Ody C, Kouneiher F. 2003. The architecture of cognitive control in the human prefrontal cortex. Science. 302:1181–1185.

- Krzanowski WJ. 2000. Principles of multivariate analysis: a user's perspective. Rev Sub. ed USA: Oxford University Press.

- Kucera H, Francis WN. 1967. Computational analysis of present-day American English. Providence, RI: Brown University Press.

- Meyer T, Qi X-L, Stanford TR, Constantinidis C. 2011. Stimulus selectivity in dorsal and ventral prefrontal cortex after training in working memory tasks. J Neurosci. 31:6266–6276.

- Miller EK, Cohen JD. 2001. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 24:167–202.

- Mohr HM, Goebel R, Linden DEJ. 2006. Content- and task-specific dissociations of frontal activity during maintenance and manipulation in visual working memory. J Neurosci. 26:4465–4471.

- O'Reilly RC. 2010. The what and how of prefrontal cortical organization. Trends Neurosci. 33:355–361.

- Paulesu E, Frith CD, Frackowiak RS. 1993. The neural correlates of the verbal component of working memory. Nature. 362:342–345.

- Petrides M. 2005. Lateral prefrontal cortex: architectonic and functional organization. Philos Trans R Soc Lond B Biol Sci. 360:781–795.

- Petrides M, Pandya DN. 2006. Efferent association pathways originating in the caudal prefrontal cortex in the macaque monkey. J Comp Neurol. 498:227–251.

- Race EA, Shanker S, Wagner AD. 2009. Neural priming in human frontal cortex: multiple forms of learning reduce demands on the prefrontal executive system. J Cogn Neurosci. 21:1766–1781.

- Rao SC, Rainer G, Miller EK. 1997. Integration of what and where in the primate prefrontal cortex. Science. 276:821–824.

- Reynolds JR, O'Reilly RC, Cohen JD, Braver TS. 2012. The function and organization of lateral prefrontal cortex: a test of competing hypotheses. PLoS ONE. 7:e30284.

- Sakai K, Passingham RE. 2003. Prefrontal interactions reflect future task operations. Nat Neurosci. 6:75–81.

- Seber GAF. 2004. Multivariate observations. New Jersey: Wiley.

- Yee LTS, Roe K, Courtney SM. 2010. Selective involvement of superior frontal cortex during working memory for shapes. J Neurophysiol. 103:557–563.