Abstract

The processing of social information in the human brain is widely distributed neuroanatomically and finely orchestrated over time. However, a detailed account of the spatiotemporal organization of these key neural underpinnings of human social cognition remains to be elucidated. Here, we applied functional magnetic resonance imaging (fMRI) and magnetoencephalography (MEG) in the same participants to investigate spatial and temporal neural patterns evoked by viewing videos of facial muscle configurations. We show that observing the emergence of expressions elicits sustained blood oxygenation level–dependent responses in the superior temporal sulcus (STS), a region implicated in processing meaningful biological motion. We also found corresponding event-related changes in sustained MEG beta-band (14–30 Hz) oscillatory activity in the STS, consistent with the possible role of beta-band activity in visual perception. Dynamically evolving fearful and happy expressions elicited early (0–400 ms) transient beta-band activity in sensorimotor cortex that persisted beyond 400 ms, at which time it became accompanied by a frontolimbic spread (400–1000 ms). In addition, individual differences in sustained STS beta-band activity correlated with speed of emotion recognition, substantiating the behavioral relevance of these signals. This STS beta-band activity showed valence-specific coupling with the time courses of facial movements as they emerged into full-blown fearful and happy expressions (negative and positive coupling, respectively). These data offer new insights into the perceptual relevance and orchestrated function of the STS and interconnected pathways in social–emotion cognition.

Keywords: coupling, emotion, neural, STS, transient

Introduction

The ability to rapidly decode indicators of the emotional states of others not only provides the basis for social cognition, but also forms the foundation for a skill that has been necessary for survival since the dawn of primitive societies (Darwin 1872; Ekman 1972; Leopold and Rhodes 2010). This capacity is no less crucial for success in negotiating today's interpersonally complex world. Indeed, perturbations of this process are linked to several neuropsychiatric disorders (Adolphs 2010). Dynamically changing configurations of facial muscles provide the most immediate such emotional cues (Darwin 1872), and neurophysiological and imaging studies during processing of facial expressions implicate a spatially distributed neural network including the inferior occipital cortex (IOC), superior temporal sulcus (STS), and parietal, premotor, and frontolimbic regions (Haxby et al. 2000; Trautmann et al. 2009). The occipital and temporoparietal components of this network are implicated in visuo-spatial cognition, whereas the premotor and frontolimbic regions are shown to be involved in motor simulation and mnemonic and/or experiential aspects of facial emotion processing (Haxby et al. 2000; Dolan 2002; Gallese et al. 2004).

The STS, in particular, plays a central role in face processing circuitry. This region possesses strong reciprocal connections with frontal and paralimbic regions (Yeterian and Pandya 1991; Karnath 2001), as well as with the putamen, caudate, and pulvinar nucleus of the thalamus, which has connective inputs from subcortical visual tectum (Ledoux 1998) and input–output connections with visual cortical areas. This unique pattern of connections allows the STS to serve as a functional interface between the ventral and dorsal visual processing streams, thereby mediating the integration of object- and space-related visual information (Karnath 2001; Keysers and Perrett 2004; Stein and Stanford 2008). Furthermore, connections between 1) the STS and primary (Yeterian and Pandya 1991) and secondary visual (Allman and Kaas 1974), parietal, and premotor regions (which we collectively refer to here as “sensorimotor cortex”); and 2) between the STS and frontal, limbic, and paralimbic regions (collectively referred to here as “frontolimbic cortex”) (Ledoux 1998; Haxby et al. 2000; Karnath 2001) may enable perceptually relevant processing of meaningful biological motion (Oram and Perrett 1996; Haxby et al. 2000; Pelphrey et al. 2004; Stein and Stanford 2008), of which dynamic facial expressions are an important example. This integrative role fits well with evidence implicating the STS in the representation of biologically changeable (i.e., moving) attributes of visual stimuli (Haxby et al. 2000; Karnath 2001), a function that is likely pivotal to facial emotion cognition: STS may operate by integrating visuo-spatial information from multiple sources in order to attribute emotional meaning to facial movements (Calder and Young 2005; Stein and Stanford 2008). 
These densely interconnected and functionally specialized regions must cooperate in synchronized fashion in order to collectively decode and process emotional meaning from the dynamic muscle configurations that define changing facial expressions (Haxby et al. 2000).

There is considerable evidence documenting the importance of the beta oscillatory response in normal brain functioning (Varela et al. 2001; Buzsáki and Draguhn 2004; Engel and Fries 2010; Siegel et al. 2011, 2012). Moreover, there is growing evidence of the crucial nature of the beta-band oscillatory response in contextual processing of visual stimuli (Kveraga et al. 2011) and in conscious perception of complex social stimuli (Smith et al. 2006). It has also been suggested that the beta oscillatory response supports perceptually relevant reverberant activity within sensorimotor and fronto-parietal cortices during cognition (Engel and Fries 2010; Siegel et al. 2012).

The potential importance of the beta-band signal in processing social information is also supported by convergence of this oscillatory frequency and the fMRI blood oxygenation level–dependent (BOLD) signal during cognition. For example, a tight relationship between BOLD and beta-band signals has been reported during perceptual cognition (Winterer et al. 2007; Zumer et al. 2010; Stevenson et al. 2011; Scheeringa et al. 2011). Furthermore, signal increases in BOLD were shown to correlate with a decrease in beta-band oscillatory response during perceptual decision-making (Winterer et al. 2007; Donner et al. 2009; Zumer et al. 2010), in line with a perceptually relevant role for decreased beta oscillatory power (Pfurtscheller et al. 1996). Together, these findings suggest a relationship between BOLD and beta-band activity, and underscore the possibility of a behaviorally relevant role for these signals in the processing of social stimuli, especially in the visual domain (Engel and Fries 2010).

To date, however, the local and large-scale neuronal coupling within the beta band (14–30 Hz), such as circuit-level STS oscillatory response to environmentally valid, millisecond-resolved human visual social cognition, remains undefined, and direct anatomical validation of such signals with BOLD fMRI, which is particularly important for magnetoencephalography (MEG) signals in subcortical regions (Cornwell et al. 2008), is lacking. Toward these aims, we designed a combined MEG/fMRI experiment, directly quantifying the relationship between the BOLD response to dynamic facial expressions of emotion with event-related fMRI and the corresponding MEG response to the same stimuli in the same participants with a focus on evoked beta-band response both at the sustained and the millisecond-resolved levels. Building upon extensive work on STS involvement in coding biological motion (Oram and Perrett 1996; Haxby et al. 2000) and in both explicit (Haxby et al. 2000) and implicit emotional cognition (Cornwell et al. 2008), we specifically tested the hypothesis that the BOLD and beta-band responses to the visual cognition of dynamic facial expressions would centrally involve the STS and that the STS beta-band response would be temporally orchestrated in a time-locked, behaviorally relevant manner to the time course over which the emotions emerged from the dynamic facial expressions.

Materials and Methods

Experimental Strategy

We first tested for anatomical consistency between the fMRI BOLD and the oscillatory responses to facial dynamics by implementing a spatiotemporal validation approach: Here, we identified brain regions more responsive to the viewing of videos than static pictures of facial emotions for both fMRI and MEG, and then tested for anatomical convergence and correlation of these “sustained” signals across the 2 modalities. We next confirmed the behavioral relevance of the observed MEG beta-band response by correlating these signals with the time to emotion recognition. Then, we examined transient, millisecond-resolved beta-band signals with a 200-ms sliding window, and tested the association of the time course of this signal with the time course of the emergence of facial expressions. Finally, we tested for inter-regional covariance between STS and frontolimbic beta-band activity.

Participants and Stimuli

We measured BOLD response to facial dynamics with event-related fMRI in 40 right-handed individuals (mean age = 30.54 years, 17 females) who were physically healthy and free of neuropsychiatric disease. A follow-up MEG study was carried out in 21 of the same participants (mean age = 33.09 years, 7 females). All participants provided written informed consent prior to participation in the studies according to NIH IRB guidelines.

During both fMRI and MEG, participants viewed facial cues consisting of still pictures and videos showing either emotional facial expressions (fear or happiness) or emotionally neutral subtle facial movement (naturally occurring eye blinks) portrayed by trained actors. The emotional valence of these stimuli was previously validated with out-of-the-scanner ratings by an independent group of participants (van der Gaag et al. 2007). To ensure continuous attentional tuning during the viewing of the visual stimuli for both imaging modalities, participants were instructed to watch each image presentation from beginning to end and then, within 1 s of the disappearance of the image from the screen, to press one of 2 buttons to indicate the person's gender. This low-level cognitive task requirement and the post-trial timing of the motor component were adopted to direct the participant's attention to the faces and emotional expressions throughout the face-viewing period, while avoiding sensorimotor response from pressing a button during this viewing time. It should be noted that the emotion recognition that occurred during the viewing time in our fMRI and MEG experiments was implicit in nature because participants were not explicitly instructed to recognize the emotional content of the facial expressions. Therefore, to further assess explicit recognition and provide a measure of the perceptual relevance of the neural response to the emotional stimuli, participants watched these same stimuli in a postscanning session and indicated, with a button press, the time at which they recognized the particular emotional expression (fear/happiness) on the dynamic faces. These measures of emotion recognition time were used in follow-up regression analyses with the MEG beta-band data (see below).

Additionally, given that the dynamic nature of emotional expressions may be a strong perceptual trigger of the neural underpinning of recognition, especially in regions such as the STS, known to code biologically relevant incoming stimulus information (Oram and Perrett 1996), we determined another measure of the emergence of the facial expressions, the global time course of the facial muscle movements for each video. To derive these data, we compared each of 29 of the total 30 frames comprising the video with the previous frame using PerceptualDiff software (http://pdiff.sourceforge.net, Last accessed 01/12/2014), and we computed the average time course of these differential movement parameters for all videos in each emotional category relative to the neutral category (see Results). These analyses produced a time series with 30 data points (with the first value being zero because the first frame has no differential movement value) that we cross-correlated with the time course of the evoked transient MEG beta-band activity. The approach of using contrast time courses of facial movement data (i.e., fear > neutral and happy > neutral facial movements) was adopted in order to directly parallel the analyses of the MEG data, which used the classic contrast approach (again, fear > neutral and happy > neutral evoked oscillatory activity) to assess transient, emotion-specific whole-brain beta-band activity and time courses, as described below. To better understand the effects of this method of subtracting the time courses of the facial movements (fear > neutral and happy > neutral facial movements), we also assessed the average facial movement time courses of the fear, happiness and the emotionally neutral expressions separately (i.e., for fearful and happy dynamic expressions without reference to the neutral baseline), as illustrated in the supplementary materials (Supplementary Fig. 1).
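The frame-by-frame procedure described above can be illustrated with a minimal Python sketch. It is not the PerceptualDiff algorithm: the mean absolute pixel difference used here is an assumed stand-in for that tool's perceptual metric, and all function names are hypothetical.

```python
from typing import List, Sequence

Frame = Sequence[Sequence[float]]  # one grayscale frame as rows of pixel intensities


def frame_difference(prev: Frame, curr: Frame) -> float:
    """Mean absolute pixel difference between two equally sized frames.

    Assumption: a simple stand-in for the metric computed by PerceptualDiff.
    """
    total = 0.0
    count = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += abs(b - a)
            count += 1
    return total / count


def movement_time_course(frames: Sequence[Frame]) -> List[float]:
    """One value per frame; the first is 0.0 because frame 1 has no predecessor."""
    course = [0.0]
    for prev, curr in zip(frames, frames[1:]):
        course.append(frame_difference(prev, curr))
    return course


def category_mean(courses: Sequence[Sequence[float]]) -> List[float]:
    """Average the time courses of all videos in one category, frame by frame."""
    n = len(courses)
    return [sum(values) / n for values in zip(*courses)]


def contrast(emotion: Sequence[float], neutral: Sequence[float]) -> List[float]:
    """Emotion > neutral contrast time course (pointwise subtraction)."""
    return [e - n for e, n in zip(emotion, neutral)]
```

For a 30-frame video, `movement_time_course` returns 30 values with a leading zero, matching the length of the time series described in the text.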

MRI Acquisition and Analysis of Sustained Event-Related BOLD Response Evoked by Dynamic Compared with Static Stimuli

To anatomically define the neural response to observing dynamically evolving facial expressions compared with static pictures of the same emotions, we used 3T fMRI with a 16-channel NOVA head coil (TR = 2.210 s, TE = 25, FOV = 20, number of slices = 27, slice thickness = 3.0) during a fully randomized slow event-related presentation of the emotional videos and pictures with interstimulus intervals ranging from 2 to 12 s. Twenty stimuli for each emotional category (fearful, happy, and neutral) were presented as videos (10 for each run) and pictures (10 for each run) in 2 separate experimental runs. To assess the BOLD response to stimulus dynamics, we used SPM5 (http://www.fil.ion.ucl.ac.uk/spm/software/spm5, Last accessed 01/12/2014) for preprocessing (8 mm smoothing), and we modeled the full 1-s stimulus presentation in a first-level analysis comparing videos with static emotional and neutral expressions, henceforth referred to as sustained BOLD response. This analysis enabled us to identify the network of regions that are more responsive to the dynamic aspects of facial expressions than to static pictures regardless of emotional valence (Fig. 1A). After co-registration onto ASSET MPRAGES acquired with the 16-channel Nova head coil (FOV = 20, TE = min full, flip angle = 6), these single-subject first-level statistical images for dynamic stimuli > static stimuli were included in a group average one-sample t-test at the second level. Time-course data representing signal values (in arbitrary units) of the BOLD representation of facial dynamics were extracted from all dynamic relative to static stimuli using the SPM ROI toolbox Marsbar (http://marsbar.sourceforge.net/).

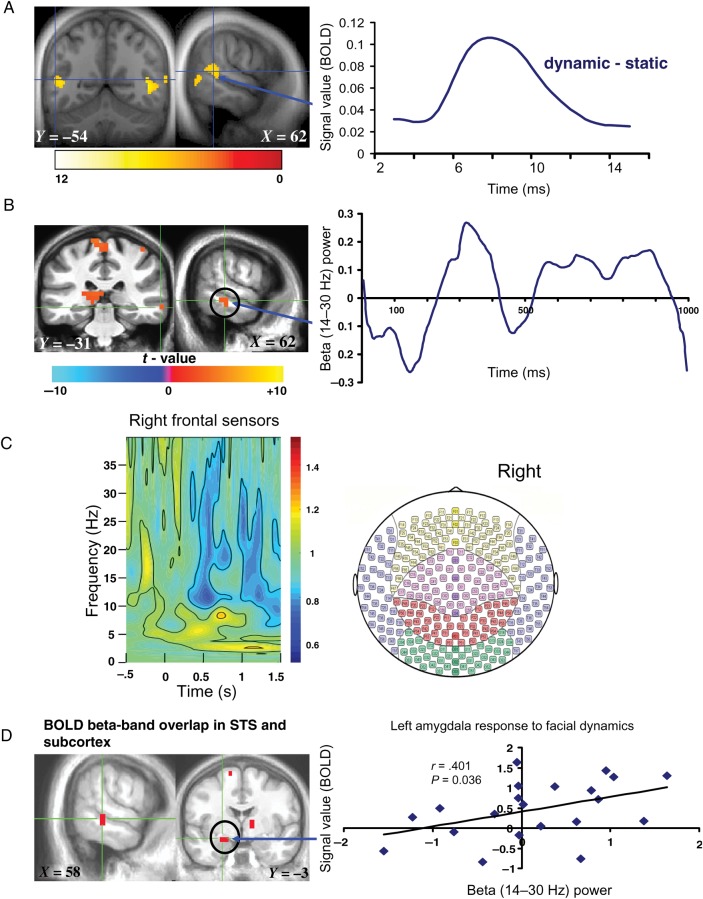

Figure 1.

Sustained neural response to facial dynamics. (A) Left: BOLD response to dynamic > static expressions thresholded at P < 0.001 for display; orange to red clusters survived P < 0.05 FDR corrected; Right: fMRI time course of right STS (note the typical 5–6 s hemodynamic response delay in the BOLD signal, consistent with the relatively low temporal resolution of fMRI compared with the right STS beta-band activity time course measured with MEG in (B), right). (B) Left: MEG beta-band response to facial dynamics thresholded at P < 0.05 FDR corrected; Right: time course of right STS beta-band activity. (C) Time–frequency results illustrating frontal right hemispheric sensor-level spectral distribution from 0.5 s before stimulus onset to 1.5 s after stimulus onset, which includes 0.5 s of prestimulus time for the purpose of setting the scale for the entire time–frequency window of −0.5 to 1.5 s, with the time–frequency maps shown to be predominantly distributed between theta (4–8 Hz), alpha (8–14 Hz), and beta (14–30 Hz) during the 0–1000-ms time window of interest when facial expression dynamics were shown. Note that the blue color in the time–frequency map reliably illustrates beta-band power. (D) Overlap map of the STS and amygdala showing convergent BOLD and beta-band activity derived from a conjunction analysis of BOLD and MEG beta-band response to facial dynamics (conjunction P < 0.0025 uncorrected); scatter plot depicts correlation (Spearman's ρ) between beta-band activity and BOLD signal in left amygdala. The color bars represent t-maps for the various results and X, Y, Z values denote MNI coordinates.

MEG Acquisition and Analysis of Sustained Event-Related Oscillatory Activity Evoked by Dynamic Compared with Static Stimuli

A whole-head array of 275 radial first-order gradiometer/SQUID channels with the CTF system was used to acquire MEG signals. The identical stimulus set described in the fMRI section was used in the same presentation fashion except that the interstimulus intervals were reduced to 2–6 s, and the whole MEG session consisted of 1 experimental run using the same number of stimuli as in the 2 fMRI runs.

The raw MEG signals were processed using a background noise cancellation method that was applied with a third gradient spatial filtering using 30 reference sensors (Vrba et al. 1995; Carver et al. 2012). The data were then high-pass filtered at 0.61 Hz along with DC offset removal (Carver et al. 2012), and time markers were added at the onset of each stimulus type (dynamic and static as well as fear, happy, and neutral) and for the poststimulus fixation period that included the motor response indicating gender. Artifacts such as eye blinks were not removed because the synthetic aperture magnetometry (SAM) beamformer technique employed here is designed to minimize all interfering activity from a given source, including artifacts (Vrba and Robinson 2002).

To first assess the frequency distribution of the MEG signal, we performed a time–frequency analysis at the sensor level to provide a view of the neuromagnetic signals represented across frequency and time for the left and right hemisphere frontal sensors (Fig. 1C shows an illustration of these results in the right hemisphere). Note, however, that the signals recorded by these frontal sensors cannot be considered specific to the underlying cortical regions (Gross et al. 2001); rather, they serve to determine the parameters for source-level analysis, namely the frequencies that showed relevant signals at the sensor level. The raw MEG channel data were therefore analyzed prior to source estimation in order to determine time windows and frequency bands of interest for further investigation. The spectral maps were computed with the NIMH MEG Core Facility Ctf2st toolbox (see http://kurage.nimh.nih.gov/meglab/Meg/Ctf2st, Last accessed 01/12/2014) using Stockwell transforms, which can be thought of as continuous wavelet transforms with a phase correction (Stockwell et al. 1996). Specifically, a window from 500 ms before stimulus presentation (−0.5 s) to 1.5 s after the onset of stimulus presentation was examined, using the average power from −0.5 to 0 s as the baseline for normalization, which set the scale for the entire time–frequency window.
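The baseline normalization step can be sketched as follows. This is a minimal illustration, not the Ctf2st implementation: expressing power as relative change from the mean prestimulus power is an assumption (the text specifies only that the −0.5 to 0 s average was used as baseline), and the function name is hypothetical.

```python
from typing import List, Sequence, Tuple


def baseline_normalize(
    power: Sequence[Sequence[float]],
    times: Sequence[float],
    baseline: Tuple[float, float] = (-0.5, 0.0),
) -> List[List[float]]:
    """Express power[f][t] as relative change from the mean baseline power.

    Assumption: relative change, (power - base) / base, computed separately
    for each frequency row; the baseline window is half-open, [start, end).
    """
    start, end = baseline
    idx = [i for i, t in enumerate(times) if start <= t < end]
    if not idx:
        raise ValueError("no samples fall inside the baseline window")
    normalized = []
    for row in power:
        base = sum(row[i] for i in idx) / len(idx)
        normalized.append([(value - base) / base for value in row])
    return normalized
```

With this convention, negative values indicate a power decrease relative to the prestimulus period and positive values an increase.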

MEG source localization was performed across the entire brain using the SAM beamformer technique. SAM, a scalar, linearly constrained, minimum-variance beamformer, operates by computing a weighted sum of measurements such that the total signal power (variance) from all sensors is attenuated, subject to a unity dipole gain constraint for any given voxel coordinate. The source images are assembled by applying SAM one voxel at a time, at 5-mm intervals over the entire head or region of interest. In this way, the power that remains (after attenuation) is an estimate of the source strength. The source grid is rectangular and the SAM functional images are confined to the boundary of the brain hull, a geometric representation of each individual's anatomical whole-brain volume (Vrba and Robinson 2002). This technique accurately models the forward solution (Nolte et al. 2001), and the source waveforms at locations with large moments are accurate representations of what a cortical electrode would show for the same location (Vrba and Robinson 2002), as has been substantiated by comparing electrocorticography waveforms with MEG source waveforms for epileptic patients with subdural grids (Ishii et al. 2008).
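The unity-gain, minimum-variance constraint described above is commonly written in the following textbook form. It is offered as a sketch rather than as the exact SAM formulation (SAM additionally optimizes source orientation at each voxel). The symbols are assumptions of this note: $\mathbf{C}$ is the sensor covariance matrix, $\mathbf{l}$ the forward solution of a unit dipole at the voxel, $\mathbf{w}$ the beamformer weights, and $\hat{P}$ the estimated source power.

$$
\min_{\mathbf{w}} \; \mathbf{w}^{\top}\mathbf{C}\,\mathbf{w}
\quad \text{subject to} \quad \mathbf{w}^{\top}\mathbf{l} = 1
\qquad \Longrightarrow \qquad
\mathbf{w} = \frac{\mathbf{C}^{-1}\mathbf{l}}{\mathbf{l}^{\top}\mathbf{C}^{-1}\mathbf{l}},
\qquad
\hat{P} = \mathbf{w}^{\top}\mathbf{C}\,\mathbf{w} = \frac{1}{\mathbf{l}^{\top}\mathbf{C}^{-1}\mathbf{l}}
$$

Under this form, the power remaining after attenuation, $\hat{P}$, is the per-voxel estimate of source strength.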

Following SAM analysis, the MEG data were co-registered to structural MRI MPRAGE images (8-channel GE head coil, TE = min full, flip angle = 6, FOV = 20, TI = 725, # of slices = 136) in AFNI (http://afni.nimh.nih.gov/afni, Last accessed 01/12/2014) visualizing the vitamin E capsules placed on each participant as preauricular and nasion fiducial markers. Next, to determine the effects of stimulus dynamics, second-level random-effects analyses were performed using a standard general linear model (GLM) that applied a one-sample, two-tailed t-test in AFNI to assess beta-band (14–30 Hz) response using dynamic stimuli > static stimuli, regardless of emotional valence, as the contrast of interest across the first 1 s of stimulus presentation.
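The second-level test amounts to a voxel-wise one-sample t-test across participants on the dynamic > static contrast values. A minimal sketch follows; it illustrates the statistic only and does not reproduce the AFNI implementation. The function names and the layout of one list of voxel values per participant are assumptions.

```python
import math
import statistics
from typing import List, Sequence


def one_sample_t(values: Sequence[float], popmean: float = 0.0) -> float:
    """t statistic testing whether the mean of `values` differs from `popmean`."""
    n = len(values)
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    return (mean - popmean) / (sd / math.sqrt(n))


def t_map(contrasts_by_subject: Sequence[Sequence[float]]) -> List[float]:
    """One t value per voxel; each inner sequence holds one subject's voxel values."""
    return [one_sample_t(voxel_values) for voxel_values in zip(*contrasts_by_subject)]
```

Each t value would then be compared against a two-tailed critical value with n − 1 degrees of freedom, followed by correction for multiple comparisons.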

Because changes in both lower and higher frequency bands have been implicated in different aspects of human emotional information processing in previous studies (Popov et al. 2013; Lee et al. 2010) and because our sensor analysis indicated stimulus-related activity in some of these frequency bands (see Results), we also analyzed evoked oscillatory activity in the theta (4–8 Hz), alpha (8–14 Hz), low-gamma (30–50 Hz), and high-gamma (60–140 Hz) frequency bands during the viewing of dynamic relative to static facial expressions. These additional analyses allowed us to test the specificity of beta-band activity in response to the dynamic nature of human emotional expressions.

Spatiotemporal Validation of Sustained BOLD and MEG Measures

We combined the superior spatial resolution of fMRI with the superior temporal resolution of MEG in order to cross-validate the spatiotemporal characteristics of our dataset. First, to anatomically validate the sources that are resolved temporally by MEG, we conducted a whole-brain conjunction analysis in individuals studied with the same paradigm in both MEG and fMRI to test for signal overlap in these 2 modalities. For this analysis, the logical AND function in AFNI was used to define voxels activated in both the BOLD and MEG measurements made during the viewing of facial dynamics (videos vs. static pictures as detailed above) at a combined statistical threshold of P < 0.0025, one-tailed.
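The logical AND conjunction can be sketched as follows. The per-modality threshold of P < 0.05 is an assumption chosen because 0.05 × 0.05 gives the reported combined threshold of 0.0025. The function name is hypothetical and the sketch does not reproduce the AFNI implementation.

```python
from typing import List, Sequence


def conjunction_mask(
    p_bold: Sequence[float],
    p_meg: Sequence[float],
    alpha: float = 0.05,
) -> List[bool]:
    """True where a voxel passes the threshold in BOTH modalities (logical AND).

    Assumption: each map is thresholded at `alpha`, so the combined
    threshold is alpha * alpha.
    """
    return [pb < alpha and pm < alpha for pb, pm in zip(p_bold, p_meg)]


# Combined threshold implied by two independent tests at alpha = 0.05.
COMBINED_ALPHA = 0.05 * 0.05
```

Only voxels flagged `True` would enter the overlap map. A voxel that is significant in one modality alone is excluded.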

Next, because subcortical structures have been challenging to localize with MEG, we chose the amygdala to quantitatively assess the relationship of the signals across the 2 modalities because this structure is not only far from the MEG sensors, but also involved in emotion processing. We used 3dROIstats, an AFNI tool, to extract signal values from amygdala voxels that showed both BOLD signal and MEG beta-band activation, and we tested the correlation between the 2 signals in that cluster (Spearman's nonparametric correlation, two-tailed). Evidence for such a spatiotemporal overlap between BOLD and MEG signals would not just validate our MEG source localization, but also allow exploration of transient network interactions involved in coding emotion-specific facial dynamics.
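The rank-based correlation used for the amygdala cluster can be sketched in plain Python. Spearman's ρ is computed here as Pearson's r on average ranks, which is the standard definition when ties are present. The function names are hypothetical, and the P value computation is omitted.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson's r computed on the average ranks of x and y."""
    return pearson_r(average_ranks(x), average_ranks(y))
```

In this setting, `x` and `y` would hold one BOLD value and one beta-band value per participant, each averaged over the conjoint amygdala voxels.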

Determination of Transient Beta-Band Response

Dynamic facial expressions gain emotional salience over time, and their discernment thus requires a rapid, time-dependent series of neural computations. As such, sustained neural responses modeled over seconds (as reported thus far) cannot adequately resolve the neural signals underlying these dynamics and their inter-regional orchestration over time. Therefore, to assess transient oscillatory (beta-band) neural representation of emotion-specific dynamics, we adopted a strategy of applying GLM analysis of the MEG beta-band activity data at the beamformed source level across the whole brain with a series of 200-ms sliding windows across the 1-s presentation reflecting the time till the peak of the emotional expressions in the videos. To measure emotion-specific transient oscillatory activity as it emerged over time, we separately compared beta-band activity during happy or fearful videos with activity during neutral videos with a series of 200-ms sliding windows.

Before undertaking this emotion-specific analysis, we first assessed the sensitivity of the adopted 200-ms time windows by modeling the initial 200 ms of early facial stimulus onset (EFSO) as well as the 200 ms following stimulus offset (poststimulus offset [PSO], a time when participants were making a button press to indicate the observed face gender), and we compared these 2 time periods. We hypothesized that EFSO and PSO would differentially reflect visual/perceptual- and motor response-related beta-band activity, respectively.

Based on the results of this validation (see Results), we next used this approach to examine transient emotion-specific neural response to dynamic facial expressions, comparing beta-band activity during happy or fearful videos with activity during neutral videos. Here, the 200-ms sliding windows analysis was applied across the entire 1-s viewing period, including the time at which the emotions were shown to be most expressed in the videos; a series of nine 200-ms sliding windows spanning the 1-s face presentation (0–200, 100–300, … 800–1000 ms) was created, enabling us to capture transient neural response to emotional dynamics while allowing for temporal overlap such that important signals would not be lost. Activity during viewing of happy and fearful videos was compared with the neutral videos for each emotion separately. This analysis was restricted to the beta band (14–30 Hz) because only beta-band response to stimulus dynamics (videos > static pictures) had survived false discovery rate (FDR) correction for multiple comparisons (see Results).
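The window layout described above (200-ms width, 100-ms step, covering 0–1000 ms) can be generated with a short sketch. The function name and parameter names are hypothetical.

```python
from typing import List, Tuple


def sliding_windows(
    total_ms: int = 1000,
    width_ms: int = 200,
    step_ms: int = 100,
) -> List[Tuple[int, int]]:
    """(start, end) pairs in ms for overlapping windows that fit inside total_ms."""
    return [
        (start, start + width_ms)
        for start in range(0, total_ms - width_ms + 1, step_ms)
    ]
```

With the defaults, the function returns nine windows from (0, 200) to (800, 1000), each overlapping its neighbor by 100 ms.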

Determination of the Temporal and Spatial Distribution of Transient Inter-Regional Beta-Band Coupling

To determine the time course of transient oscillatory responses, we used SAMtime (an in-house toolbox) to extract normalized z-score values of oscillatory response within the beta band during the time windows of interest. This toolbox enables spatiotemporal comparison of event-related changes in oscillatory power as a function of latency relative to marked events. Images of brain activity were derived from measurement of source activity on a regular 3D grid of points within the head. For each location (voxel), the source waveform was parsed into active (fear- or happy-expression-evoked) and control (neutral-expression-evoked) segments, relative to specified event markers. Next, a Stockwell transform was applied to each active and control window, yielding the source power as a function of time and frequency. For each latency, the power in active and control states was compared using a nonparametric Mann–Whitney U-test. The resulting z-score was mapped to the specified voxel; 3D maps of z-values were then generated for each individual latency, and 3D images were assembled across time using AFNI software. The spatiotemporal evolution of activity during each 1000 ms of facial video viewing was then quantified as 100 data points (i.e., 10 ms per data point), representing average z-scores of beta-band (14–30 Hz) power, extracted with 3dROIstats from the functionally activated ROIs in STS and frontolimbic regions for time-course analysis (time-course data are shown in the Results section). The extracted MEG time-course data in STS and frontolimbic clusters, where beta-band activity emerged between 400 and 1000 ms (see Results) in response to fearful and happy dynamic expressions, were used to assess coupling (Pearson's r, two-tailed) between the 100 data points of STS and each frontolimbic beta-band time course of interest.
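The per-latency comparison of active and control power can be illustrated with a Mann–Whitney U statistic converted to a z-score. This is a sketch under stated assumptions, not the SAMtime implementation: it uses the normal approximation without tie or continuity correction, and the function name is hypothetical.

```python
import math
from typing import Sequence


def mann_whitney_z(active: Sequence[float], control: Sequence[float]) -> float:
    """z-score of the Mann-Whitney U statistic for active vs. control samples.

    Assumption: normal approximation, no tie or continuity correction.
    Positive z means active values tend to rank higher than control values.
    """
    pooled = list(active) + list(control)
    order = sorted(range(len(pooled)), key=lambda i: pooled[i])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and pooled[order[j + 1]] == pooled[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average 1-based rank for ties
        i = j + 1
    n1, n2 = len(active), len(control)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (u1 - mean_u) / sd_u
```

Applying such a function at every voxel and latency, with one power value per trial, would yield the kind of z-value maps described above.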

Determination of the Temporal Coupling of Beta-Band Activity with Facial Movements and with Emotion Recognition

To link the time course of the emergence of the emotional expressions to the time course of the neural response, we correlated (Pearson's r, two-tailed) the 30 data-point time series of the global facial movements for fear and happiness with the respective beta-band time series extracted from each functionally activated ROI. To match the 30 data points of the global facial movements, the 100-data-point MEG time courses (covering the 1000 ms of video viewing) underwent a three-point smoothing. Data are only reported for those regions showing significant correlations between the emotional facial movement time courses and the time courses of evoked beta-band activity.
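One way to bring a 100-point MEG time course onto the 30-point grid of the facial movement series is sketched below. The text specifies only a three-point smoothing. The edge handling, the nearest-sample selection of 30 evenly spaced points, and all function names are assumptions made for illustration.

```python
import math
from typing import List, Sequence


def three_point_smooth(series: Sequence[float]) -> List[float]:
    """Moving average over each sample and its immediate neighbours.

    Assumption: at the two edges only the available samples are averaged.
    """
    out = []
    for i in range(len(series)):
        window = series[max(0, i - 1): i + 2]
        out.append(sum(window) / len(window))
    return out


def resample_nearest(series: Sequence[float], n_out: int) -> List[float]:
    """Pick n_out (>= 2) evenly spaced samples, including first and last."""
    n_in = len(series)
    return [series[round(k * (n_in - 1) / (n_out - 1))] for k in range(n_out)]


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def movement_coupling(meg_100: Sequence[float], movement_30: Sequence[float]) -> float:
    """Smooth, downsample to the movement grid, then correlate (Pearson's r)."""
    meg_30 = resample_nearest(three_point_smooth(meg_100), len(movement_30))
    return pearson_r(meg_30, movement_30)
```

A positive return value indicates that beta-band activity rises with facial movement, and a negative value indicates the opposite.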

Additionally, to link the neural responses to the conscious perception of the stimuli, reaction times indicating the speed of emotion recognition for each individual were used as covariates of oscillatory response to facial dynamics using 3dRegAna, a regression analysis tool in AFNI (http://afni.nimh.nih.gov/afni, Last accessed 01/12/2014). A whole-brain voxel-wise regression analysis was performed to assess the relationship between recognition speed for identifying fear and happiness, and regional beta-band response to the same expressions.
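The voxel-wise regression can be illustrated with an ordinary least-squares fit of beta-band response on recognition time, repeated for each voxel. This sketch shows the statistic only and does not reproduce 3dRegAna. The function names and the layout of one list of voxel values per participant are assumptions.

```python
from typing import List, Sequence, Tuple


def ols_slope_intercept(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Least-squares slope and intercept for y regressed on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def slope_map(
    recognition_times: Sequence[float],
    beta_by_subject: Sequence[Sequence[float]],
) -> List[float]:
    """Slope of beta-band response on recognition time, one value per voxel."""
    return [
        ols_slope_intercept(recognition_times, voxel_values)[0]
        for voxel_values in zip(*beta_by_subject)
    ]
```

A negative slope at a voxel would mean that participants with stronger beta-band response there tended to recognize the emotion sooner.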

Results

Observing Dynamic Facial Expressions Evokes Anatomically Convergent Sustained fMRI BOLD Signal and MEG Beta-Band Activity in the STS

A GLM analysis revealed that, compared with static pictures, dynamic facial expressions elicited increased sustained BOLD response in bilateral STS and inferior temporal gyrus at P < 0.05, FDR corrected (Fig. 1A). To further illustrate the extent of our BOLD findings, a list of regions that responded more to dynamic than to static facial stimuli, thresholded at P < 0.005 uncorrected, is given in Table 1 and Supplementary Figure 2.

Table 1.

Dynamics > static (fMRI BOLD at P < 0.005 uncorrected)

| Anatomical description | MNI X | MNI Y | MNI Z | t value |
|---|---|---|---|---|
| Sensorimotor response | | | | |
| Primary visual cortex/fusiform/cerebellum | 49 | −61 | −7 | 11.57 |
| Primary visual cortex/fusiform/cerebellum | −24 | −90 | 12 | 3.35 |
| STS/superior temporal cortex | 48 | −30 | −6 | 6.02 |
| STS/middle temporal cortex | −52 | −52 | 8 | 6.65 |
| Inferior parietal lobule | 61 | −40 | 29 | 5.64 |
| Inferior parietal lobule | −62 | −40 | 33 | 3.23 |
| Presupplementary motor area | 7 | −2 | 68 | 2.38 |
| Premotor cortex | 58 | 24 | 20 | 4.67 |
| Premotor cortex | −54 | −2 | 48 | 2.04 |
| Frontolimbic response | | | | |
| Dorsolateral prefrontal cortex | 52 | 26 | 14 | 4.75 |
| Anterior temporal pole | 54 | 16 | −20 | 2.81 |
| Anterior cingulate cortex | 0 | 4 | 28 | 3.01 |
| Anterior insula/frontal operculum | 44 | 23 | −14 | 3.74 |
| Anterior insula/frontal operculum | −44 | 8 | 4 | 2.04 |
| Dorsal/ventral striatum/amygdala | 18 | 8 | 6 | 3.55 |
| Dorsal/ventral striatum/amygdala | −18 | 4 | 4 | 2.43 |
| Thalamus/pulvinar/habenula/brainstem | 6 | −18 | 6 | 2.27 |
| Pons | 8 | −22 | −28 | 2.18 |
| Subgenual cingulate/medial OFC | 10 | 16 | −22 | 1.92 |
| Lateral OFC | 30 | 32 | −10 | 2.12 |

In line with the fMRI analysis, sustained MEG oscillatory response to observing facial dynamics compared with static images was examined by modeling the full 1-s stimulus presentation duration using SAM (Robinson and Vrba 1999). Convergent with our BOLD findings, sustained beta-band activity (measured in terms of changes in beta-band oscillatory power) was observed in the right STS, striatum, primary visual cortex bilaterally, left amygdala, and left anterior insula at P < 0.05, FDR corrected (Fig. 1B).

To assess the frequency specificity of our observed beta-band response data, we performed whole-brain analyses of evoked oscillatory activity in the theta, alpha, and low- and high-gamma frequency bands, comparing dynamic with static facial expressions, and found no significant response clusters within any of these frequencies at P < 0.05, FDR corrected, suggesting a more robust involvement of beta-band activity in tracking the dynamic aspects of human facial emotional expressions.

To further determine whether the observed sustained fMRI BOLD and MEG beta-band signals occurred within the same anatomical STS regions (which would provide additional verification of the spatial distribution of the MEG signals), we applied a formal conjunction analysis and found that observing facial movements evoked spatially overlapping BOLD signal change and beta-band synchronization in the right STS, right caudate, and left amygdala at P < 0.0025 (Fig. 1D, left).
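As an illustration only, a conjunction of two statistical maps can be sketched as a voxelwise logical AND of the thresholded maps. The text does not spell out how the conjunction was computed; the sketch below assumes each map is thresholded at P < 0.05 and that the two modalities are treated as independent, under which the joint threshold would be 0.05 × 0.05 = 0.0025. The function name, the toy P values, and the per-map threshold are all hypothetical.

```python
def conjunction(p_map_a, p_map_b, alpha=0.05):
    """Return, per voxel, whether both maps survive the threshold (logical AND)."""
    return [pa < alpha and pb < alpha for pa, pb in zip(p_map_a, p_map_b)]


# Toy voxelwise P values for four voxels (hypothetical, not study data).
bold_p = [0.001, 0.20, 0.03, 0.04]
beta_p = [0.010, 0.01, 0.30, 0.02]

overlap = conjunction(bold_p, beta_p)

# Assumed joint threshold if the two maps are independent.
joint_alpha = round(0.05 * 0.05, 6)

print(overlap)      # which voxels survive in both maps
print(joint_alpha)  # joint threshold under the independence assumption
```

Only voxels that pass in both modalities are retained, which is why a conjunction is more conservative than either map alone.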

To quantify the spatial validity of our MEG results in subcortical regions, we independently extracted BOLD and beta-band activity values in the voxels in each individual's left amygdala that showed conjoint activity across the 2 imaging modalities. We found a significant correlation between left amygdala BOLD and beta-band activities (Spearman's nonparametric ρ = 0.401, P = 0.036, two-tailed, see Fig. 1D, right), thereby demonstrating a robust BOLD–beta-band relationship in a behaviorally relevant subcortical region known to process salient aspects of emotional stimuli (Ledoux 1998; Hariri et al. 2000; Cornwell et al. 2008; Pessoa and Adolphs 2010).
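For readers unfamiliar with the statistic, Spearman's ρ is Pearson's correlation computed on ranks. The following is a minimal sketch in plain Python; it computes the coefficient only (not the P value), and the input values are toy numbers rather than the extracted amygdala data. The helper names are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # 1-based average rank for the tied run
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the rank-transformed data."""
    return pearson_r(average_ranks(x), average_ranks(y))


# Toy per-participant values (hypothetical): BOLD vs. beta-band activity.
bold = [1, 2, 3, 4, 5]
beta = [2, 1, 4, 3, 5]
print(spearman_rho(bold, beta))
```

Because only ranks enter the calculation, the coefficient is insensitive to monotonic rescaling of either measure, which suits a comparison across two modalities with different units.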

Taken together, these results, derived from contrasts of dynamic > static stimuli, show robust and convergent BOLD signal change and MEG beta-band response to changing aspects of human facial emotions (with emotions and identity of the faces remaining the same). They are concordant with earlier work demonstrating a spatial relationship between BOLD and beta-band oscillatory responses (Winterer et al. 2007; Zumer et al. 2010; Scheeringa et al. 2011) and reveal an anatomically convergent STS involvement in processing dynamic aspects of human facial expressions (Oram and Perrett 1996; Haxby et al. 2000), thereby providing strong spatiotemporal validation of the observed beta-band neural representation of facial dynamics in cortical and subcortical regions. Because of this cross-modal convergence and because our time–frequency analyses at the sensor level (Fig. 1C) showed a pattern of beta-band activity coinciding with the 1-s time window during which the dynamic facial stimuli were presented, further analyses of behavioral correlations and of transient neural response focused on the beta band.

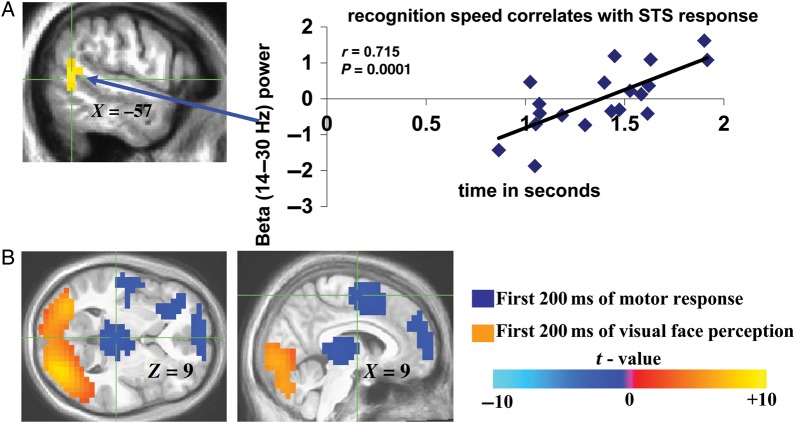

Sustained Beta-Band Activity Correlates with Emotion Recognition Speed

To examine the perceptual relevance of the observed oscillatory activity, 19 of the 21 MEG participants performed a post-MEG assessment of the emotion conveyed by each video clip (behavioral response data were not acquired for 2 individuals because of technical difficulties). Average recognition time for fear and happiness was 1.377 s ± 0.304 (SEM). Individual differences in emotion recognition speed were shown to correlate with left posterior STS beta-band activity at P = 0.047, FDR corrected, using a whole-brain regression analysis in AFNI. By further extracting the beta-band oscillatory power signals from this STS cluster for the purpose of visualizing this important association, we found a correlation (Pearson's R = 0.715, P = 0.0001, two-tailed; Fig. 2A) between beta-band power and recognition speed, together supporting perceptual relevance of the observed sustained STS beta-band signals elicited by facial dynamics during MEG.

Figure 2.

Perceptual correlates of sustained and transient beta-band activity. (A) Location of correlations between left STS beta-band activity and emotion (fear and happiness) recognition speed assessed post-MEG. Corresponding correlation (Pearson's R) graph between reaction time for fear and happiness recognition and the corresponding extracted left posterior STS beta-band activity values for each individual was included to further illustrate the relationship between the observed left beta-band signals shown to correlate with recognition speed in a whole-brain regression analysis. (B) Visual (orange)- and motor (blue)-related beta-band activity elicited by first 200 ms of viewing facial videos compared with first 200 ms of gender identification-related motor response, respectively. Color bar represents t values in (B) (for (B), negative t values are not inhibitory responses, but rather denote positive t values for motor response-evoked beta-band activity compared with visually evoked beta-band activity).

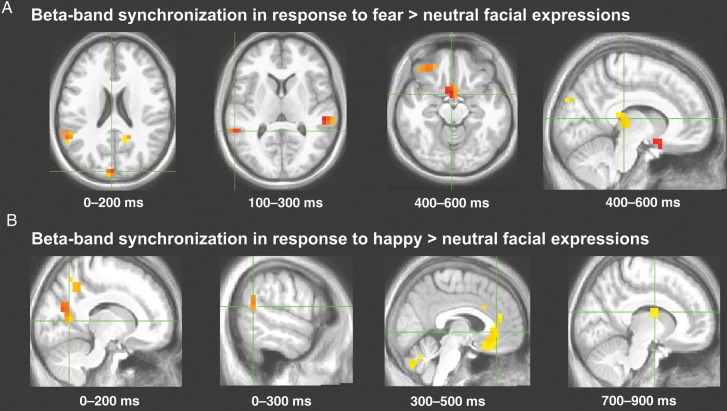

Observing Dynamic Facial Emotions Evokes Emotion-Specific Transient Beta-Band Activity

To better assess the rapid neural computations by which emotion-specific dynamic facial expressions gain salience over time, we next assessed transient oscillatory (beta-band) responses with a GLM analysis of MEG beta-band activity across a series of 200-ms sliding windows spanning the 1-s presentation, including the time till the peak of the emotional expressions in the videos. The sensitivity of the adopted 200-ms time windows was confirmed by the observation that occipital cortical beta-band activity during the initial 200 ms of EFSO was significantly greater than activity during the 200 ms following stimulus offset (PSO) when participants were making a button press; in contrast, the 200-ms PSO period evoked greater motor/premotor, posterior thalamic, and prefrontal beta-band activity than the EFSO period (P < 0.0001, two-tailed, FDR corrected; Fig. 2B, orange and blue clusters, respectively), indicating that the adopted 200-ms time windows are well suited for capturing transient neural processes. This validation paved the way for a valence-specific analysis of transient neural responses to the emotion-conveying aspects of our videos; beta-band responses to fearful and happy dynamic facial expressions were analyzed separately 1) because of the different time courses with which the respective facial expressions evolved (supplementary Fig. 1), and 2) to determine whether the neural substrate of emotionally negative and positive facial expressions was characterized by differing neurofunctional responses at the temporal resolution enabled by our approach.

Whole-brain sliding 200-ms window analysis (sampled at P < 0.0001, two-tailed) revealed the early (0–400 ms) emergence of both fear- and happiness-evoked beta-band activity within a sensorimotor network including occipital, STS, parietal, and premotor cortical regions (Fig. 3A,B, Tables 2 and 3 for millisecond timing and statistics). Beginning at ∼400 ms and continuing for the remainder of the 1000 ms, this early pattern of beta-band activity spread rapidly to frontolimbic areas with a time course similar to the peak of the facial movements (Fig. 3A,B, Tables 2 and 3 for millisecond timing and statistics, Fig. 4).
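The window labels reported in Tables 2 and 3 (0–200, 100–300, ..., 800–1000 ms) suggest 200-ms windows advanced in 100-ms steps. A minimal sketch of that windowing is given below; the 100-ms step is inferred from those labels rather than stated in the text, and the function names, the 10-ms sampling, and the toy power series are hypothetical.

```python
def sliding_windows(epoch_ms=1000, width_ms=200, step_ms=100):
    """Return (start, end) pairs in ms that tile the epoch with overlap."""
    return [(start, start + width_ms)
            for start in range(0, epoch_ms - width_ms + 1, step_ms)]


def window_mean(times_ms, values, window):
    """Mean of the samples whose time falls in [start, end) of the window."""
    start, end = window
    selected = [v for t, v in zip(times_ms, values) if start <= t < end]
    return sum(selected) / len(selected)


windows = sliding_windows()
print(windows)

# Toy power time course sampled every 10 ms (hypothetical values).
times_ms = list(range(0, 1000, 10))
power = [float(t) for t in times_ms]
print([window_mean(times_ms, power, w) for w in windows])
```

With these defaults each sample after the first 100 ms and before the last 100 ms contributes to two adjacent windows, so neighboring windows are not statistically independent.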

Figure 3.

Transient beta-band activity. Locations and millisecond timing for selected regions in which beta-band activity was significantly recruited during perception of dynamic fearful (A) and happy (B) expressions relative to dynamic neutral expressions during specific 200-ms sliding time windows; see Tables 2 and 3 for complete results.

Table 2.

Fear dynamics > neutral dynamics (beta-band power)

| Anatomical description | MNI X | MNI Y | MNI Z | t Values | Time (in ms) |
|---|---|---|---|---|---|
| Sensorimotor response | | | | | |
| IPL | 58 | –20 | 28 | 5.31 | 0–200 |
| *STS/superior temporal cortex* | *–64* | *–42* | *3* | *4.57* | *100–300* |
| STS/middle temporal cortex | 62 | –33 | –2 | 6.08 | 200–400 |
| Motor cortex | –2 | –36 | 68 | 4.21 | 300–500 |
| Inferior temporal cortex | 43 | 0 | –41 | 5.14 | 400–600 |
| Fusiform | –21 | –63 | –20 | 4.02 | 500–700 |
| Postcentral gyrus | –25 | –47 | 54 | 4.34 | 600–800 |
| Fusiform/cerebellum | –17 | –45 | –17 | 4.52 | 700–900 |
| Frontolimbic response | | | | | |
| Pulvinar/habenula | 10 | –26 | 7 | 4.73 | 400–600 |
| Subgenual cingulate/medial OFC | 2 | 9 | –16 | 5.44 | 400–600 |
| Lateral OFC | 43 | 38 | –15 | 5.56 | 400–600 |
| Amygdala/hippocampus | –21 | –13 | –15 | 3.57 | 600–800 |
| Posterior insula | 42 | 2 | –8 | 3.94 | 700–900 |
| ACC | 1 | 36 | 11 | 4.01 | 800–1000 |

The STS cluster shown to correlate with dynamic fear expressions between 400 and 1000 ms is in italics; IPL, inferior parietal lobule; STS, superior temporal sulcus; OFC, orbitofrontal cortex; ACC, anterior cingulate cortex.

Table 3.

Happy dynamics > neutral dynamics (beta-band power)

| Anatomical description | MNI X | MNI Y | MNI Z | t Values | Time (in ms) |
|---|---|---|---|---|---|
| Sensorimotor response | | | | | |
| Middle occipital cortex | 12 | –63 | 17 | 5.49 | 0–200 |
| Precuneus | 12 | –54 | 36 | 4.44 | 0–200 |
| *STS* | *–58* | *–54* | *11* | *4.31* | *0–200* |
| Premotor cortex | 53 | –3 | 16 | 4.79 | 100–300 |
| Lateral occipital cortex | –25 | –84 | –13 | 5.14 | 100–300 |
| Inferior occipitotemporal cortex | 48 | –64 | –22 | 4.83 | 200–400 |
| IOC | 2 | –69 | –13 | 8.36 | 200–400 |
| Fusiform gyrus | –14 | –64 | –17 | 4.56 | 300–500 |
| IPL | 58 | –24 | 40 | 5.01 | 400–600 |
| STS | 66 | –36 | –3 | 4.32 | 500–700 |
| IOC | –7 | –95 | –9 | 4.89 | 700–900 |
| IPS | –33 | –81 | 11 | 4.65 | 800–1000 |
| Frontolimbic response | | | | | |
| Middle cingulate | –43 | –19 | 40 | 4.2 | 300–500 |
| ACC/subgenual cingulate | –3 | 29 | –9 | 4.77 | 300–500 |
| Superior frontal gyrus | –18 | 14 | 62 | 5.23 | 400–600 |
| DLPFC | 33 | 1 | 50 | 4.69 | 500–700 |
| Amygdala | –17 | 0 | –21 | 4.33 | 600–800 |
| Pulvinar/habenula | 9 | –32 | 4 | 4.45 | 600–800 |
| Midbrain | –10 | –11 | –4 | 3.87 | 600–800 |
| Caudate | 9 | –4 | 12 | 4.43 | 700–900 |

The STS cluster shown to correlate with dynamic happy expressions between 400 and 1000 ms is in italics; IPL, inferior parietal lobule; IOC, inferior occipital cortex; ACC, anterior cingulate cortex; DLPFC, dorsolateral prefrontal cortex.

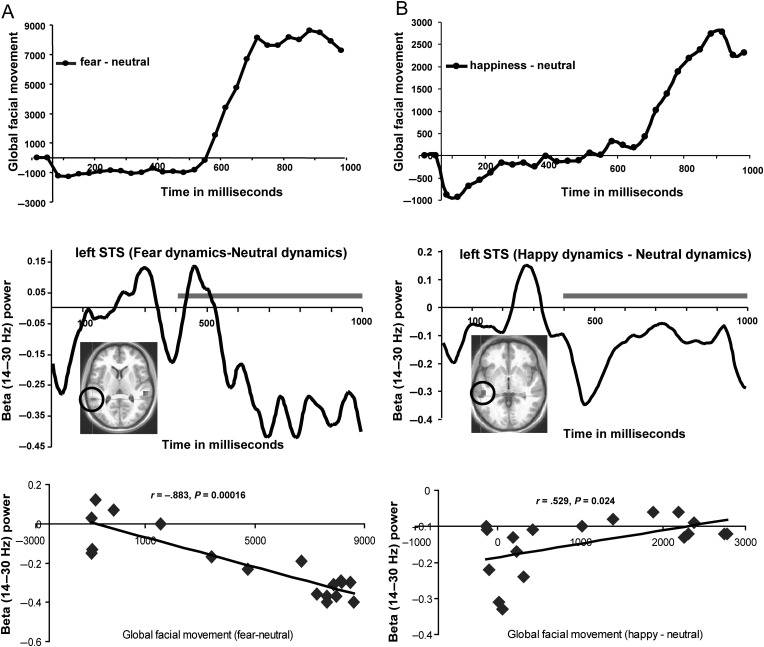

Figure 4.

Time course of STS beta-band activity couples with time course of the emergence of emotional facial dynamics. (A and B) Top depicts the global face movements for fearful and happy expressions, respectively, calculated using the PerceptualDiff image analyzer program; (A and B) Middle depicts the time courses of left STS (encircled ROI) beta-band activity evoked by fearful and happy relative to neutral expressions across the entire 1-s viewing epoch; (A and B) Bottom shows scatter plots illustrating the cross-correlation between the STS beta-band activity time courses and the global facial movement of fearful–neutral and happy–neutral expressions over the 400–1000 ms time courses of the top and middle panels of (A and B), covering a time over which the expressions fully emerge (indicated by gray lines in middle panels of A and B). Time courses of facial expressions (A and B top) represent the time-dependent, frame-to-frame differential facial movement (relative to neutral dynamic expressions) as the fearful and happy dynamic expressions emerged; these time points were averaged over the fearful and happy videos, respectively; the time-course data depicting fearful and happy facial expressions (relative to neutral expressions) consists of the stimulus time courses shown earlier in Jabbi et al. (2013).

Time Course of Evolving Emotional Facial Expressions Correlates with Transient Beta-Band Activity

Next, we tested for regionally specific relationships between the time courses of motoric evolution of the corresponding facial expressions (Fig. 4A,B, top) and the time courses of beta-band responses to fear/happiness (Fig. 4A,B, middle) by correlating across the ∼400–1000 ms period during which the valence-specific facial expressions and the frontolimbic beta-band activity both emerged. Pearson's r, two-tailed, was used for this and all subsequent time course correlations. The 400–1000 ms time course of fearful facial movements correlated negatively with a suppression of the beta-band response in left STS (Fig. 4A, bottom; r = −0.883, P = 0.00016). In contrast, the time course of happy expressions measured from 400 to 1000 ms correlated positively with an increase in left STS beta-band response (Fig. 4B, bottom: r = 0.529, P = 0.024). These results suggest a differential, valence-specific orchestration of left STS: The emergence of dynamic fearful expressions correlated negatively with beta-band activity in the left STS, whereas viewing happy expressions was positively time-locked with another beta-band activity cluster in the left STS (see Tables 2 and 3 for the MNI coordinates). To test the lateral specificity of this finding, we further examined the correlations between beta-band signal values from the right STS cluster responding to fear and found no correlation with 400–1000 ms fearful facial movements (r = −0.135, P = 0.593). Similarly, beta-band signals from the right STS cluster responding to happy expressions showed no correlation with dynamic facial movements of happiness (r = 0.235, P = 0.348). Taken together with our finding of a left STS beta-band activity cluster correlating with recognition speed, these results point to a behaviorally relevant valence-specific involvement of the STS in tracking the dynamic aspects of facial emotional movements during human emotion cognition. 
The time courses of all other visual cortical beta-band activity clusters reported in Tables 2 and 3 showed no such correlations with fear and happy facial expression dynamics. Of interest, the STS response time course that was found to correlate with the time course of emotional facial expression also showed robust correlations with frontolimbic areas such as caudate nucleus, subgenual cingulate/orbitofrontal cortex (OFC), and pulvinar/habenula (see Supplementary Fig. 3 and related data on these correlations in Supplementary Tables 1 and 2), but the validity and the causal nature of these STS–frontolimbic time-course correlations need further assessment (see Schoffelen and Gross 2009).
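The time-course coupling described above amounts to restricting two series to the 400–1000 ms interval and computing Pearson's r between them. A minimal sketch follows, assuming both series share the same time axis; the 100-ms sampling, the helper names, and all values are hypothetical toy data chosen so that one series falls and the other rises as movement increases.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def restrict(times_ms, values, start_ms=400, end_ms=1000):
    """Keep only samples whose time lies within [start_ms, end_ms]."""
    return [v for t, v in zip(times_ms, values) if start_ms <= t <= end_ms]


# Toy series on a shared 0-1000 ms axis (hypothetical, not study data).
times_ms = list(range(0, 1001, 100))
movement = [0.0, 0.1, 0.1, 0.2, 0.4, 0.7, 1.0, 1.2, 1.3, 1.35, 1.4]
beta_suppressed = [0.5 - 0.8 * m for m in movement]  # falls as movement rises
beta_enhanced = [0.2 + 0.6 * m for m in movement]    # rises with movement

r_neg = pearson_r(restrict(times_ms, movement),
                  restrict(times_ms, beta_suppressed))
r_pos = pearson_r(restrict(times_ms, movement),
                  restrict(times_ms, beta_enhanced))
print(r_neg, r_pos)
```

The sign of r distinguishes the two coupling patterns: a negative value when beta-band activity is suppressed as movement increases, a positive value when it increases with movement.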

Discussion

Here, we combined the spatial resolution of BOLD fMRI and the temporal resolution of MEG in the same participants while they observed videos of emerging facial emotional expressions, and we delineated a finely tuned, anatomically and temporally distributed neural response system in which the STS plays a prominent role. We found that compared with static pictures of facial expressions, dynamic videos depicting the evolution of the exact same expressions evoked sustained BOLD response and corresponding event-related changes in beta-band activity in STS and frontolimbic cortices. The robust pattern of oscillatory activity evoked by facial dynamics was specific to the beta band. The convergence of sustained BOLD and beta-band oscillatory response in the STS identifies a robust representation of visually guided cognition in the context of ecologically valid facial emotional expressions (Haxby et al. 2000; Wyk et al. 2009). Because our task involved only an implicit recognition of these facial expressions, the spatiotemporal representation of explicit visual recognition in the context of similar stimuli requires further assessment in future studies.

To assess the behavioral relevance of these spatially and temporally distributed, but cross-modally convergent, oscillatory response patterns, we directly correlated the MEG data with individual differences in reaction time for recognition of the emotion displayed, and found in a whole-brain analysis that only the sustained beta-band activity localized within the left posterior aspect of the superior STS correlated with speed of recognition for fearful and happy facial expressions. This finding is consistent with an fMRI study which, using a multivariate searchlight approach, found an emotion-specific response pattern in the same left superior STS region (Peelen et al. 2010). Our observation of a highly localized, sustained STS beta-band response that was linked directly to the speed with which conscious processing of the emotional meaning of facial expressions is achieved lends support to an important mediatory role of this region in comprehending dynamic social cues. These results are in line with the involvement of the STS in normal and pathological social perception (Pelphrey and Carter 2008; Adolphs 2010), and may contribute to a better understanding of the functional relationship between the identified STS circuitry and the successful visual processing of social–emotional stimuli. For instance, rapid, time-dependent feed-forward/feed-back interaction between STS and frontolimbic pathways may mediate perceptual, attentional, mnemonic, and experiential aspects of social–emotional cognition (Haxby et al. 2000; Dolan 2002; Pessoa and Adolphs 2010).

To temporally resolve the neuronal response patterns underlying the perception of dynamic facial expressions, we also assessed transient oscillatory response within the beta band across the entire brain using sliding 200-ms time windows, an approach that we had shown to reliably capture transient neural representations of sensorimotor and visual oscillatory processes. This analysis revealed that presentation of dynamic fearful and happy expressions elicited early (0–400 ms) activity in a sensorimotor pathway including the occipito-parietal, STS, and premotor cortices. From 400 to 1000 ms, however, this sensorimotor beta-band activity extended rapidly into the frontolimbic system (pulvinar, caudate, cingulate, and amygdala), suggesting a spatiotemporally organized STS beta-band coupling with frontolimbic activity when the facial expressions become emotionally salient.

To test the hypothesis that these transient STS beta-band signals are associated with the emergence of the emotional expressions from the changing conformations of the facial musculature, we cross-correlated the time course of STS beta-band activity from the clusters showing transient response to emerging facial dynamics with the time course of global measures of facial movements while the fearful and happy expressions evolved. We found a valence-specific coupling between the temporal patterns of transient STS beta-band activity and temporal patterns of the facial movement: Negative coupling with the evolution of emotionally negative (fearful) expressions, and positive coupling for the emergence of emotionally positive happy expressions. Together, these results extend the well-documented role of the STS in coding biological motion (Oram and Perrett 1996; Haxby et al. 2000) by providing direct evidence of a beta-band oscillatory pattern in this region that is intimately tuned to the facial movement patterns defining fearful and happy expressions in an ecologically valid paradigm. Additionally, the observed frontolimbic (pulvinar and SGC/OFC, as well as caudate and SGC/OFC) beta-band response time courses were shown to be coupled with STS beta-band activity during the same conditions of observing fearful and happy expressions. The suggestion in our supplemental finding (see Supplementary Fig. 3) that there is a temporal offset between our observed STS beta-band activity and related frontolimbic responses supports the existence of a possible feed-forward/feed-back mechanism within these pathways during visual emotion processing (Ledoux 1998; Karnath 2001), but a more complete understanding of this possibility will require further quantitative exploration, such as assessing inter-regional beta-band phase-locking (Fenske et al. 2006; Jensen and Colgin 2007; Ghuman et al. 2008; Penny et al. 2008; Hipp et al. 2011; Kveraga et al. 2011).

Our results suggest a more distributed and tightly interwoven neuronal representation of human facial emotion expressions than previously appreciated; however, we cannot rule out the influence of a common driver effect on our observed correlations among STS and related frontolimbic beta-band signals. Additionally, disentangling the behavioral implications and causal nature of the coupling between the STS and the distributed network of frontolimbic regional oscillatory response patterns will be a crucial goal for future studies. In summary, these results reveal a neural mechanism whereby visual perception of unfolding facial expressions triggers complex but tightly orchestrated neuronal information flow in the form of STS pathway beta-band activity, consistent with the hypothesized complexity of the spatiotemporally distributed neural mediation of mnemonic and experiential aspects of conscious emotion cognition. The identification of such a behaviorally relevant neural system involving a dynamic interplay within the STS circuitry may guide noninvasive examination of pathological aspects of brain mediation of human affective cognition and functioning.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/.

Authors’ Contributions

M.J. conceived and designed the experiment and wrote the manuscript. M.J., T.N., P.D.K., A.I., C.C., J.S.K., T.H., F.W.C., and B.C. performed the experiments and analyzed the data. P.D.K. and S.E.R. contributed analytical tools and guided the video and MEG time course analysis, respectively. T.N. and Q.C. performed the extraction of MEG and fMRI time-course data, respectively. R.C. and K.F.B. supervised all aspects of this project. All authors discussed the experiments and contributed to the manuscript.

Funding

This work was funded by the Intramural Research Program of the National Institute of Mental Health at the National Institutes of Health. ClinicalTrials.gov Identifier number: NCT00004571; Protocol ID number: 00-M-0085.

Notes

We thank Dr Jerzy Bodurka, Adam Thomas, Sean Marrett, Sandra Moore, Marcella Montequin, Paula Rowser, and all of our colleagues at the NIH NMR centre for their support; Judy Mitchell-Francis and Dani Rubinstein of the NIH MEG core facility for technical support; Gang Chen for his help on realization of the MEG time-course analytical methods; Avniel Ghuman for comments; Shau-Ming Wei, Joel Bronstein, Gabriela Alarcon, Aarthi Padmanabhan, Molly Chalfin, James Zhang, and Anees Benferhat for experimental assistance; Joseph Masdeu and Daniel Eisenberg for medical coverage. Conflict of Interest: None declared.

References

- Adolphs R. 2010. Conceptual challenges and directions for social neuroscience. Neuron. 65:752–767.

- Allman JM, Kaas JH. 1974. A crescent-shaped cortical visual area surrounding the middle temporal area (MT) in the owl monkey (Aotus trivirgatus). Brain Res. 81:199–213.

- Buzsáki G, Draguhn A. 2004. Neuronal oscillations in cortical networks. Science. 304:1926–1929.

- Calder AJ, Young AW. 2005. Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci. 6:641–651.

- Carver FW, Elvevåg B, Altamura M, Weinberger DR, Coppola R. 2012. The neuromagnetic dynamics of time perception. PLoS One. 7:e42618.

- Cornwell BR, Carver FW, Coppola R, Johnson L, Alvarez R, Grillon C. 2008. Evoked amygdala responses to negative faces revealed by adaptive MEG beamformers. Brain Res. 1244:103–112.

- Darwin C. 1872. The expressions of the emotions in man and animals. Introduction, afterword and commentaries by Paul Ekman. Oxford: Oxford University Press; p. 146–344.

- Dolan RJ. 2002. Emotion, cognition, and behavior. Science. 298:1191–1194.

- Donner TH, Siegel M, Fries P, Engel AK. 2009. Buildup of choice-predictive activity in human motor cortex during perceptual decision making. Curr Biol. 19(18):1581–1585.

- Ekman P. 1972. Universals and cultural differences in facial expressions of emotion. In: Cole J, editor. Nebraska Symposium on Motivation 1971. Vol. 19 Lincoln, NE: University of Nebraska Press; p. 207–283.

- Engel AK, Fries P. 2010. Beta-band oscillations—signalling the status quo? Curr Opin Neurobiol. 20:156–165.

- Fenske MJ, Aminoff E, Gronau N, Bar M. 2006. Top-down facilitation of visual object recognition: object-based and context-based contributions. Prog Brain Res. 155:3–21.

- Gallese V, Keysers C, Rizzolatti G. 2004. A unifying view of the basis of social cognition. Trends Cogn Sci. 8:396–403.

- Ghuman AS, Bar M, Dobbins IG, Schnyer DM. 2008. The effects of priming on frontal-temporal communication. Proc Natl Acad Sci USA. 105(24):8405–8409.

- Gross J, Kujala J, Hamalainen M, Timmermann L, Schnitzler A, Salmelin R. 2001. Dynamic imaging of coherent sources: studying neural interactions in the human brain. Proc Natl Acad Sci USA. 98(2):694–699.

- Hariri Ahmad R, Bookheimer Susan Y, Mazziotta John C. 2000. Modulating emotional responses: effects of a neocortical network on the limbic system. Neuroreport. 11:43–48.

- Haxby JV, Hoffman EA, Gobbini MI. 2000. The distributed human neural system for face perception. Trends Cogn Sci. 4:223–233.

- Hipp JF, Engel AK, Siegel M. 2011. Oscillatory synchronization in large-scale cortical networks predicts perception. Neuron. 69:387–396.

- Ishii R, Canuet L, Ochi A, Xiang J, Imai K, Chan D, Iwas M, Takeda M, Snead OC, 3rd, Otsubo H. 2008. Spatially filtered magnetoencephalography compared with electrocorticography to identify intrinsically epileptogenic focal cortical dysplasia. Epilepsy Res. 81(2–3):228–232.

- Jabbi M, Nash T, Kohn P, Ianni A, Rubinstein D, Holroyd T, Carver F, Robinson S, Kippenhan JS, Masdeu J, et al. 2013. Midbrain presynaptic dopamine tone predicts sustained and transient neural response to emotional salience: fMRI, MEG, fDOPA PET. Mol Psychiatry. 18(1):4–6.

- Jensen O, Colgin LL. 2007. Cross-frequency coupling between neuronal oscillations. Trends Cogn Sci. 11:267–269.

- Karnath HO. 2001. New insights into the functions of the superior temporal cortex. Nat Rev Neurosci. 2:568–576.

- Keysers C, Perrett DI. 2004. Demystifying social cognition: a Hebbian perspective. Trends Cogn Sci. 8:501–507.

- Kveraga K, Ghuman AS, Kassam KS, Aminoff EA, Hämäläinen MS, Chaumon M, Bar M. 2011. Early onset of neural synchronization in the contextual association's network. Proc Natl Acad Sci USA. 108:3389–3394.

- Ledoux J. 1998. The emotional brain: the mysterious underpinnings of emotional life. New York: Simon & Schuster; p. 138–178.

- Lee LC, Andrews TJ, Johnson SJ, Woods W, Gouws A, Green GG, Young AW. 2010. Neural responses to rigidly moving faces displaying shifts in social attention investigated with fMRI and MEG. Neuropsychologia. 48:477–490.

- Leopold DA, Rhodes G. 2010. A comparative view of face perception. J Comp Psychol. 124:233–251.

- Nolte G, Fieseler T, Curio G. 2001. Perturbative analytic solutions of the magnetic forward problem for realistic conductors. J Appl Phys. 89(4):2360–2369.

- Oram MW, Perrett DI. 1996. Integration of form and motion in the anterior superior temporal polysensory area (STPa) of the macaque monkey. J Neurophysiol. 76:109–129.

- Peelen MV, Atkinson AP, Vuilleumier P. 2010. Supramodal representations of perceived emotions in the human brain. J Neurosci. 30:10127–10134.

- Pelphrey KA, Carter EJ. 2008. Charting the typical and atypical development of the social brain. Dev Psychopathol. 20:1081–1102.

- Pelphrey KA, Morris JP, McCarthy G. 2004. Grasping the intentions of others: the perceived intentionality of an action influences activity in the superior temporal sulcus during social perception. J Cogn Neurosci. 16(10):1706–1716.

- Penny WD, Duzel E, Miller KJ, Ojemann JG. 2008. Testing for nested oscillation. J Neurosci Methods. 174(1):50–61.

- Pessoa L, Adolphs R. 2010. Emotion processing and the amygdala: from a ‘low road’ to ‘many roads’ of evaluating biological significance. Nat Rev Neurosci. 11:773–783.

- Pfurtscheller G, Stancák A, Jr, Neuper C. 1996. Post-movement beta synchronization. A correlate of an idling motor area? Electroencephalogr Clin Neurophysiol. 98(4):281–293.

- Popov T, Miller GA, Rockstroh B, Weisz N. 2013. Modulation of α power and functional connectivity during facial affect recognition. J Neurosci. 33:6018–6026.

- Robinson SE, Vrba J. 1999. Functional neuroimaging by synthetic aperture magnetometry (SAM). Sendai, Japan: Tohoku University Press.

- Scheeringa R, Fries P, Petersson KM, Oostenveld R, Grothe I, Norris DG, Hagoort P, Bastiaansen MC. 2011. Neuronal dynamics underlying high- and low-frequency EEG oscillations contribute independently to the human BOLD signal. Neuron. 69:572–583.

- Schoffelen JM, Gross J. 2009. Source connectivity analysis with MEG and EEG. Hum Brain Mapp. 30(6):1857–1865.

- Siegel M, Donner TH, Engel AK. 2012. Spectral fingerprints of large-scale neuronal interactions. Nat Rev Neurosci. 13:121–134.

- Siegel M, Engel AK, Donner TH. 2011. Cortical network dynamics of perceptual decision-making in the human brain. Front Hum Neurosci. 5:21.

- Smith ML, Gosselin F, Schyns PG. 2006. Perceptual moments of conscious visual experience inferred from oscillatory brain activity. Proc Natl Acad Sci USA. 103(14):5626–5631.

- Stein BE, Stanford TR. 2008. Multisensory integration: current issues from the perspective of the single neuron. Nat Rev Neurosci. 9:255–266.

- Stevenson CM, Brookes MJ, Morris PG. 2011. β-Band correlates of the fMRI BOLD response. Hum Brain Mapp. 32(2):182–197.

- Stockwell RG, Mansinha L, Lowe RP. 1996. Localization of the complex spectrum: the S transform. IEEE Trans Signal Process. 44:998–1001.

- Trautmann SA, Fehr T, Herrmann M. 2009. Emotions in motion: dynamic compared to static facial expressions of disgust and happiness reveal more widespread emotion-specific activations. Brain Res. 1284:100–115.

- van der Gaag C, Minderaa RB, Keysers C. 2007. Facial expressions: what the mirror neuron system can and cannot tell us. Soc Neurosci. 2:179–222.

- Varela F, Lachaux JP, Rodriguez E, Martinerie J. 2001. The brainweb: phase synchronization and large-scale integration. Nat Rev Neurosci. 2:229–239.

- Vrba J, Robinson SE. 2002. SQUID sensor array configurations for magnetoencephalographic applications. Methods. 15:R51–R89.

- Vrba J, Taylor B, Cheung T, Fife AA, Haid G. et al. 1995. Noise cancellation by a whole-cortex SQUID MEG system. IEEE Trans Appl Supercond. 5:2118–2123.

- Winterer G, Carver FW, Musso F, Mattay V, Weinberger DR, Coppola R. 2007. Complex relationship between BOLD signal and synchronization or desynchronization of human brain MEG oscillations. Hum Brain Mapp. 28:805–816.

- Wyk BC, Hudac CM, Carter EJ, Sobel DM, Pelphrey KA. 2009. Action understanding in the superior temporal sulcus region. Psychol Sci. 20(6):771–777.

- Yeterian EH, Pandya DN. 1991. Corticothalamic connections of the superior temporal sulcus in rhesus monkeys. Exp Brain Res. 83:268–284.

- Zumer JM, Brookes MJ, Stevenson CM, Francis ST, Morris PG. 2010. Relating BOLD fMRI and neural oscillations through convolution and optimal linear weighting. Neuroimage. 49(2):1479–1489.
