Abstract

Objectives

To determine the effectiveness of interventions designed to prevent or reduce publication and related biases.

Study Design and Setting

We searched multiple databases and performed manual searches using terms related to publication bias and known interventions against publication bias. We dually reviewed citations and assessed risk of bias. We synthesized results by intervention and outcomes measured and graded the quality of the evidence (QoE).

Results

We located 38 eligible studies. The use of prospective trial registries (PTR) has increased since 2005 (seven studies, moderate QoE); however, positive outcome-reporting bias is prevalent (14 studies, low QoE), and information in nonmandatory fields is vague (10 studies, low QoE). Disclosure of financial conflict of interest (CoI) is inadequate (five studies, low QoE). Blinding peer reviewers may reduce geographical bias (two studies, very low QoE), and open-access publishing does not discriminate against authors from low-income countries (two studies, very low QoE).

Conclusion

The use of PTR and CoI disclosures is increasing; however, the adequacy of their use requires improvement. The effect of open-access publication and blinding of peer reviewers on publication bias is unclear, as is the effect of other interventions such as electronic publication and authors' rights to publish their results.

Keywords: Publication bias, Geographical bias, Trial registration, Open access, Peer review, Conflict of interest

1. Introduction

What is new?

Key findings

• The use of clinical trial registries has substantially increased since 2005; however, publication bias is still pervasive (the results of many registered trials are never made available). Likewise, although registries should deter positive outcome reporting, this bias is still prevalent and registry entries are often inadequate for independent systematic reviewers to fully detect this source of publication bias.

What this adds to what was known?

• Publication bias and selective outcome-reporting bias represent major threats to the validity of systematic reviews and reduce our ability to produce valid conclusions based on a body of evidence. This review highlights that no empirical studies of current interventions have shown that they reduce this bias.

What is the implication and what should change now?

• Evaluation of the effectiveness of all interventions implemented to reduce publication or related biases is urgently required to focus campaigning and advocacy efforts on those most effective. In addition, for their potential to reduce publication bias to be realized, stricter regulation of trial registries is required, with explicit accountabilities and responsibilities, as well as detailed requirements for entries into mandatory fields and penalties for noncompliance.

Despite substantial global efforts to increase the publication of health-related research, about half of clinical studies remain unpublished [1,2]. As a result, published scientific literature represents an incomplete and biased subset of total research findings [3]. Consequently, the nonpublication of research impedes our ability to make objective and balanced decisions about patient care and resource allocation. Publication bias (also sometimes referred to as dissemination bias) occurs when the publication (or nonpublication) of research depends on the nature and origin of the research and the direction of the results [1,4].

Numerous examples demonstrate the detrimental effect of publication bias on patient care [5–12] and health expenditures [13]. The case of oseltamivir (Tamiflu) for preventing complications of influenza, for example, illustrates the real-world ramifications of publication bias. Billions of dollars were spent worldwide to stockpile oseltamivir based on a published body of evidence that was missing 60% of patient data [13]. Likewise, clinical decisions based on biased bodies of evidence harmed millions of patients who received rosiglitazone [12], gabapentin [10,11], paroxetine [8,9], rofecoxib [6,7], or reboxetine [14].

Despite these examples of the impact of publication bias and the overall evidence that a large proportion of research findings go unpublished, publication bias is difficult to detect when investigating a specific question of interest. Statistically, current methods for assessing publication bias are characterized by low power and depend strongly on the magnitude of the true treatment effect, the distribution of sample sizes, and the availability of a reasonable number of studies [15]. Consequently, the absence of a statistically significant correlation or regression does not necessarily indicate the absence of publication bias. Other methods, such as the funnel plot and related imputation methods such as trim and fill, have low inter-rater reliability or rely on the assumption that asymmetry is exclusively due to bias [16].
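To make the regression approach concrete, the following is a minimal sketch of an Egger-style regression, in which the standardized effect of each study is regressed on its precision and an intercept far from zero suggests funnel-plot asymmetry. The text above does not name a specific test, so the choice of Egger's regression is an assumption, and the effect sizes and standard errors are hypothetical values used only for illustration.

```python
import math


def egger_regression(effects, std_errors):
    """Regress standardized effect (effect / SE) on precision (1 / SE).

    Returns (intercept, se_intercept). An intercept far from zero hints at
    funnel-plot asymmetry; it does not prove publication bias.
    """
    n = len(effects)
    if n < 3:
        raise ValueError("need at least three studies")
    x = [1.0 / se for se in std_errors]                  # precision
    y = [e / se for e, se in zip(effects, std_errors)]   # standardized effect
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual variance with n - 2 degrees of freedom.
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    residual_var = sse / (n - 2)
    se_intercept = math.sqrt(residual_var * (1.0 / n + x_bar ** 2 / sxx))
    return intercept, se_intercept


# Hypothetical log odds ratios and standard errors (not from any included study).
effects = [0.80, 0.65, 0.40, 0.30, 0.25]
std_errors = [0.50, 0.40, 0.25, 0.15, 0.10]
intercept, se_intercept = egger_regression(effects, std_errors)
print(f"intercept = {intercept:.2f}, SE = {se_intercept:.2f}")
```

With only a handful of studies, the standard error of the intercept is typically large, which is one way to see why such tests have low power.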

Increased awareness of the harmful and unethical consequences of publication bias has led to the implementation of several measures to reduce the nonpublication of studies and its related publication bias. In 2010, Song et al. [1] published an updated Health Technology Assessment that states that publication bias occurs during different stages of research but mainly before the presentation of findings at conferences and before the submission of manuscripts to journals. Based on their literature review, they list several measures to reduce publication bias that have either been proposed, such as a right to publication, or already been implemented, such as prospective trial registration, mandatory sponsor guidelines, and others.

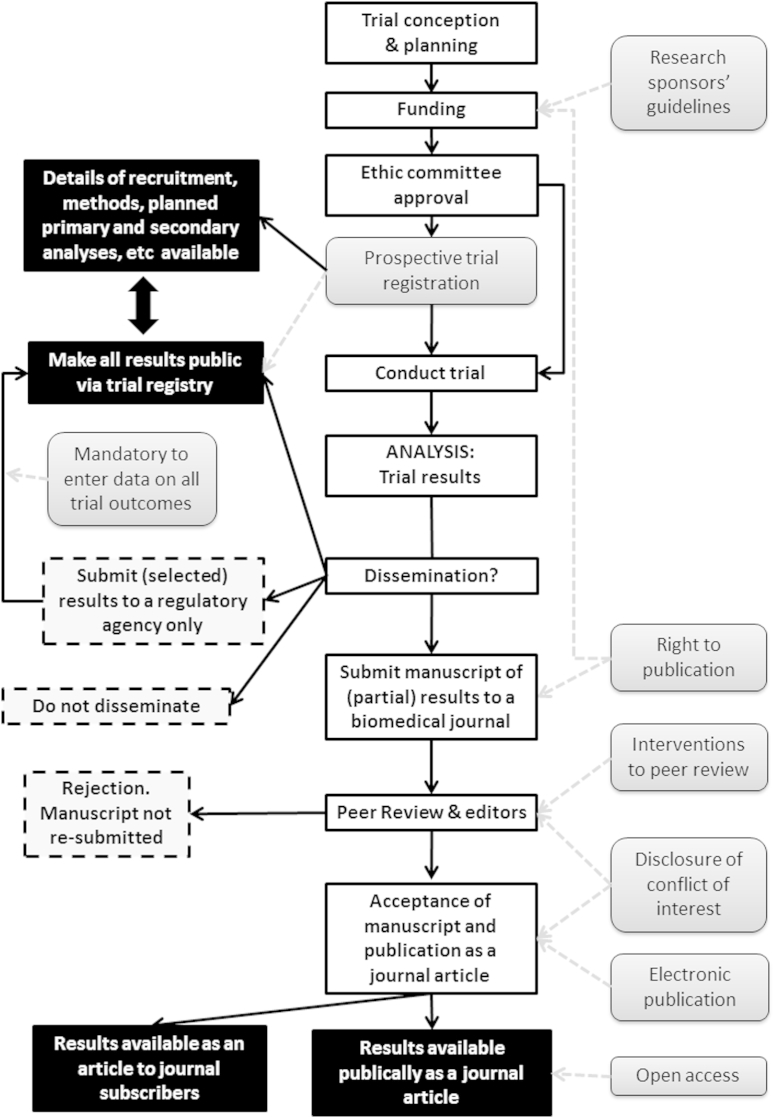

Table 1 provides a summary of the potential mechanisms of measures to reduce publication or related biases as presented by the Song report. Fig. 1 shows the path of trial conception through to the dissemination (or nonpublication) of trial results. The measures identified by Song et al. are shown in light gray shaded boxes, and their point of effect on the pathway is indicated by a light gray dashed line. In this figure, black boxes represent dissemination of results and dashed gray boxes represent nondissemination or publication bias.

Table 1.

Measures to reduce publication bias

| Measure | Potential mechanism |
|---|---|
| Research sponsors' guidelines | Guidelines such as the EU Clinical Trials Directive, the Declaration of Helsinki, and the CONSORT Statement have been developed so that researchers can follow the same sets of standards. Guidelines that stress the importance of reporting both positive and negative findings can help prevent selective reporting of outcomes. |
| Prospective trial registration and mandatory availability of trial results in registry | Prospective registration of trials and mandatory reporting of results within 2 years is required by US law. This is an attempt to prevent the “file-drawer” problem of unfavorable results disappearing. Even without regulation to enforce publication of results, trial registries increase transparency. Since 2005, the ICMJE has applied a policy of accepting only manuscripts of trials that have been prospectively registered; however, not all medical journals adhere to the ICMJE policy. |
| Right to publication | Ensuring that researchers, and not funders, have the proprietary right to publish results of research conducted in their clinics would reduce the “file-drawer” problem of unfavorable results never being published. |
| Peer review | Targets for changes to the publication process might include the peer review process, mandatory disclosure of conflict of interest, or electronic publication. Peer reviewers have been criticized for rejecting manuscripts with negative or null results and for being biased toward their own country or language; one measure that might reduce this bias is blinding of peer reviewers. |
| Disclosure of conflict of interest | Disclosure of conflict of interest enables readers to determine whether the authors of a trial manuscript may have motives to present the results in a more favorable light (and may increase the readers' suspicion of reporting biases). |
| Electronic publication | Because of their unlimited size, electronic journals could accept all methodologically sound manuscripts regardless of the direction of the results (reducing positive outcome-reporting bias). |
| Open-access policy | May mean open access to all trial results or the open-access publishing model, in which authors pay a publishing fee and articles are freely available to all readers. The latter is a method to counteract the barrier of a “pay-wall” for users of the medical literature. |

Abbreviations: CONSORT, Consolidated Standards of Reporting Trials; ICMJE, International Committee of Medical Journal Editors.

Fig. 1.

Process from conception of a clinical trial to dissemination of the results with point of impact of interventions to reduce publication bias. Interventions to reduce publication bias as classified by Song et al. [1] are shown in light gray boxes. The outcomes of the decision to disseminate results and their impact on “publication bias” are shown in black boxes (trial results are partially or fully publicly available) or in dashed-line boxes, which represent trial results that are not available (nonpublication or nondissemination). This is a simplification of a complicated social and organizational system, and some aspects of publication bias, such as gray literature and results available in abstract form, are not reflected in this figure.

To date, however, it remains unclear whether any of these measures achieves its intended goal, that is, to increase the availability of trial results and to reduce publication bias. Therefore, the objective of our systematic review was to identify and appraise empirical studies of interventions designed and implemented to prevent or reduce publication bias to determine their effectiveness.

2. Methods

In this review, we concentrated on publication bias in the context of randomized controlled trials (RCTs) in clinical medicine and only included studies that directly measured the effect of an intervention on reducing publication or a related bias. We used the measures identified by Song et al. [1] as a classification framework and summarized our results in terms of the effects on publication bias or related biases, such as outcome-reporting bias, positive outcome bias, geographical or language bias, and so forth.

We included any empirical research study of a measure to reduce publication bias where an analysis was performed that sought to quantify or determine the success of the intervention in preventing or reducing publication bias or related biases. Many studies have demonstrated the existence of publication bias, and these were not the subject of this review. We did not include studies that merely demonstrated the presence of publication bias—such as the number of conference abstracts of RCTs that were subsequently published in full in journals or associations between industry sponsorship and positive results or delay in publication.

We searched MEDLINE (via PubMed), the Cochrane Library, EMBASE, CINAHL, PsycINFO, AMED, and Web of Science. The full search strategy is presented in “Additional material 1.” We used medical subject headings and key words, focusing on terms for publication bias, related biases (e.g., “selection bias”), and for the known interventions (e.g., “registry,” “prospective registration,” “publishing/ethics,” “disclosure,” “peer review,” “electronic publishing,” “open access,” “right to publication,” “CONSORT statement,” “conflict of interest,” “research sponsor guidelines,” and so forth). We initially searched sources from inception up to May 2012. In a second stage in April–June 2014, we performed updated and extended hand and electronic searches using forward and backward citation and reference tracking of pertinent key references (“snowballing”) [17]. In addition, we personally contacted experts in the field of publication bias for their suggestions of relevant studies. We imported all citations into EndNote X4 (Thomson Reuters).

We retrieved all results from searches and performed independent dual review of abstracts and relevant full-text publications. Disagreements were resolved between the two reviewers or with a third reviewer. A single reviewer performed an assessment of the risk of bias of all empirical studies (low, unclear, or high risk of bias), which was confirmed by a second reviewer. For RCTs, we used the Cochrane Collaboration risk of bias tool, assessing adequacy of randomization, allocation concealment, the impact of attrition, and incomplete reporting [4]. We based the risk of bias assessment for observational studies on criteria outlined by Deeks et al. [18]. For example, we assessed the comparability of groups and the appropriateness of the interpretation of statistical analyses.

We classified the interventions into the categories as listed in Table 1 and presented in Fig. 1. We then summarized the results of the included studies qualitatively.

For each outcome, we graded the quality of the available evidence (QoE) in a four-part hierarchy based on an approach devised by the GRADE (Grading of Recommendations Assessment, Development and Evaluation) working group (high, moderate, low, and very low) [19]. This grading system reflects our confidence that the true effect lies close to that of the estimate of the effect. The factors that influence the QoE for a specific outcome are the overall risk of bias of the studies and suspicion of publication bias, the consistency of the results between the studies, the precision of the pooled result, and the directness of the studies regarding the subject studied, intervention implemented, and outcomes available. Factors that can increase the overall QoE are the presence of a large effect, an observed dose–response relationship, and the nature and direction of plausible confounding. A single reviewer graded the evidence and allocated a rating. A second, senior reviewer confirmed the rating.

3. Results

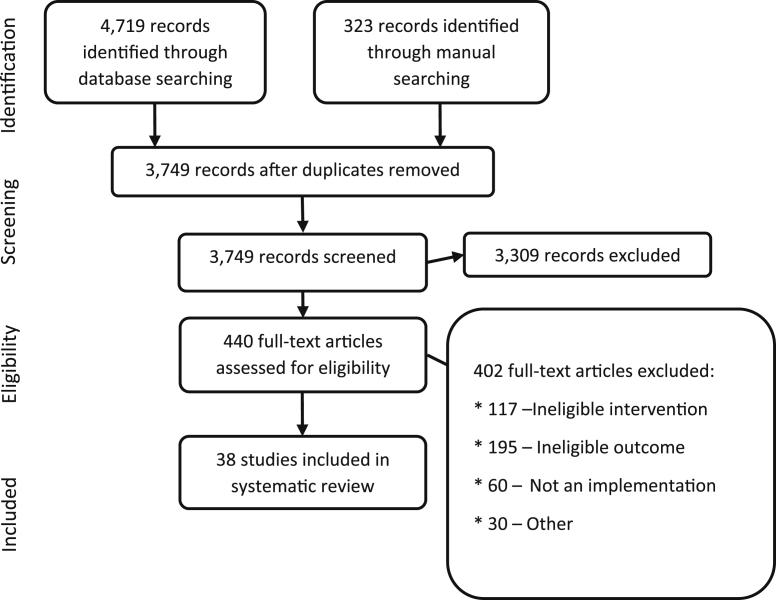

We identified 5,042 citations from searches and reviews of reference lists and screened 3,749 records, after removal of duplicates. Fig. 2 documents the disposition of the review process. We located 38 articles that analyzed the effectiveness of interventions to prevent or reduce publication bias [20–57]. The included research provides evidence regarding: prospective trial registration; the peer review process; disclosure of conflicts of interest (CoIs); and open-access publishing. We did not locate any evidence on the other categories of interventions, that is, research sponsors' guidelines, right to publication, or electronic publication. Table 2/Appendix at www.jclinepi.com provides information on the study design, intervention, results, and risk of bias rating for all included studies. Most of the studies we located were cross-sectional. Some were observational studies that relied on an historical control. Where randomized trials were available (i.e., for blinded peer review), they were often too small to provide adequate statistical power to detect small but potentially important differences. The QoE was generally reduced because of overall risk of bias, indirectness (e.g., because results were from within one medical specialty), or imprecision (where studies were small and confidence intervals included both a potential benefit and harm or no effect). Table 2 presents a summary of the QoE for each intervention.

Fig. 2.

PRISMA: disposition of the literature.

Table 2.

Summary of the evidence for interventions to reduce publication bias

| Intervention | Number of studies | Summary of evidence | Quality of evidence^a |
|---|---|---|---|
| Research sponsors' guidelines | None | No evidence located | |
| Prospective trial registration | 30 studies | The use of trial registries has increased since implementation of the ICMJE policy in 2005 | Moderate |
| | | Selective outcome-reporting bias persists in 30–65% of published reports of trials despite registration | Low |
| | | In approximately 50% of registry entries, it is not possible for readers/reviewers to detect outcome-reporting bias due to missing information | Low |
| Mandatory to enter trial outcome/results data in registry | 1 study | Only 22% of pediatric trials subject to the FDA Amendment Act 2007 had entered results after 12 months; however, only 10% of trials not subject to the act had results. | Very low |
| Right to publication | None | No evidence located | |
| Peer review | 2 studies | Blinding peer reviewers reduces geographical bias against non-US authors | Very low |
| Disclosure of conflict of interest | 5 studies | Between 8% and 29% of authors did not reveal any conflict and up to two-thirds did not fully reveal their financial conflicts of interest | Low |
| Electronic publication | None | No evidence located | |
| Open access | 1 study | Open-access publishing does not increase geographical bias against authors from LMIC | Very low |

Abbreviations: ICMJE, International Committee of Medical Journal Editors; FDA, Food and Drug Administration; LMIC, low- and middle-income countries.

^a Quality of evidence determined using the GRADE system (Grading of Recommendations Assessment, Development and Evaluation).

We begin each results subsection by providing context about the theoretical background of why each specific measure could reduce publication bias. We then summarize the new evidence gained from our systematic review addressing the effectiveness of each measure.

3.1. Prospective trial registration

3.1.1. Theoretical background

Prospective trial registration has multiple foreseeable benefits [1]. First, registration of all clinical trials enables systematic reviewers to know of the existence of a trial and make every attempt to include its results in their review. Unfortunately, as we have seen in the case of the trials of oseltamivir for influenza, merely knowing of the existence of trials does not always enable reviewers to gain access to the trial results [13]. A second benefit of prospective trial registration is that the trial protocols are publicly available. This transparency should discourage (or completely prevent) cases where trialists change prespecified primary or secondary outcomes, sample size calculations, or other methodological aspects of their trial to present more favorable results in a final publication. A third benefit of trial registries is to allow systematic reviewers to easily detect cases where prespecified outcomes or methodological aspects of trials are changed and incorporate this knowledge into their summaries of the evidence [4]. Finally, trial registries can act as repositories for trial results on trial completion.

3.1.2. Evidence about effectiveness

We located 30 studies that evaluated aspects of prospective trial registration related to publication bias [20–49]. These studies offered data on several key issues: whether the International Committee of Medical Journal Editors (ICMJE) policy on accepting only manuscripts of prospectively registered trials has increased the proportion of trials registered (use of registries); whether prospective trial registration decreases the proportion of scientific publications that report different key outcomes or methods than those initially registered (i.e., discourages selective outcome-reporting bias); the quality and accuracy of information contained in trial registries (i.e., for systematic reviewers to be able to detect selective outcome-reporting bias if it occurs); and finally, whether the FDA Amendment Act 2007 mandating that trial results be available in registries is effective.

3.1.2.1. Use of trial registries

Since 2005, the ICMJE has applied a policy of accepting only manuscripts of trials that have been prospectively registered; however, not all biomedical journals adhere to the ICMJE policy [20]. Seven studies indicated that implementation of the ICMJE policy in 2005 has led to an increase in the proportion of trials that are prospectively registered (moderate QoE) [20,23,33,37,39,46,48]. For example, one before and after study of the trial registry clinicaltrials.gov showed that the number of registered records in the 6 months around the implementation of the ICMJE policy in September 2005 increased by 73% from 13,153 to 22,714 [20]. Likewise, a cross-sectional analysis of 137 reports of RCTs in oncology showed that the proportion of registered trials increased from 0% to 80% between 2002 and 2008 [23], and a review of RCTs in oncology revealed that the registration rate increased from 43% to 82% between 2005 and 2009 [39].
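As a quick check of the arithmetic behind the 73% figure, the relative increase can be recomputed from the two record counts reported for clinicaltrials.gov [20]. The function name below is illustrative only.

```python
def percent_increase(before, after):
    """Relative change from `before` to `after`, expressed in percent."""
    return (after - before) / before * 100.0


# Record counts reported for clinicaltrials.gov around September 2005 [20].
records_before = 13_153
records_after = 22_714

increase = percent_increase(records_before, records_after)
print(f"{increase:.1f}% increase")  # rounds to the 73% quoted in the text
```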

3.1.2.2. Discouraging selective outcome-reporting bias

Fourteen studies of registry entries provide evidence that the problem of positive outcome-reporting bias still exists, even for prospectively registered trials (low QoE). Specifically, between 30% and 50% of primary outcomes and up to 65% of secondary outcomes are changed between the first and last entry of study information in trial registries or differ between registry entry and journal publication [22,23,27–30,35,36,40–42,44,47,49].

In one study, it was shown that a noninferiority margin was specified in only 32% of entries of noninferiority trials in clinicaltrials.gov [27]. The very existence of these studies, however, illustrates the improved ability of systematic reviewers to detect this bias when it occurs (e.g., through the “archive feature” of clinicaltrials.gov).

Although we know selective outcome reporting still occurs, it may conceivably have been reduced by prospective trial registration. To quantify this comparison, Rasmussen et al. [23] conducted a cross-sectional analysis of 137 published reports of 115 distinct RCTs that evaluated the 25 oncology drugs newly approved for use by the FDA in the period 2000–2005 and compared those that were prospectively registered with those not registered. All articles were published between 1996 and 2008. The authors did not find any difference between registered and nonregistered RCTs in the likelihood of favoring the test drug [odds ratio (OR) 1.11; 95% confidence interval (CI): 0.44, 2.80]. These results indicate that the use of trial registries is increasing; however, no effect on reducing positive outcome-reporting bias could be seen (very low QoE).
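The imprecision of this estimate can be illustrated by recovering an approximate standard error from the reported confidence interval. The sketch below assumes that the interval is a Wald-type interval that is symmetric on the log odds ratio scale, which the source does not state; the function name and returned keys are illustrative.

```python
import math

Z_95 = 1.959964  # two-sided 95% quantile of the standard normal distribution


def summarize_odds_ratio(or_point, ci_low, ci_high):
    """Approximate SE, z statistic, and two-sided p value from an OR and its 95% CI.

    Assumes the interval is symmetric on the log scale (Wald-type interval).
    """
    se_log_or = (math.log(ci_high) - math.log(ci_low)) / (2 * Z_95)
    z = math.log(or_point) / se_log_or
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p value
    return {
        "se_log_or": se_log_or,
        "z": z,
        "p_value": p_value,
        "includes_null": ci_low <= 1.0 <= ci_high,
    }


# Registered versus nonregistered RCTs favoring the test drug [23].
result = summarize_odds_ratio(1.11, 0.44, 2.80)
print(result)
```

Because the interval spans 1 and is wide, the data are compatible with both a meaningful reduction and a meaningful increase in positive outcome reporting, which is consistent with the very low QoE rating.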

3.1.2.3. Detecting selective outcome-reporting bias

Prospective trial registries could assist systematic reviewers or other independent persons to detect selective outcome-reporting bias by allowing them to cross-check planned primary and secondary outcomes and potential subgroup analyses with those presented in the publications of trial results. For this to be possible, the data in trial registries must be accurate and complete. We located 10 studies that indicate that many entries in trial registries contain missing or faulty information regarding outcomes and important methodological aspects of trials (low QoE) [20,22,24,25,30,31,34,38,43,45]. Nonetheless, the reporting of methods in registered trials is probably better than that of nonregistered trials (one study, very low QoE) [26].

In detail, the largest cross-sectional study of 7,515 registered clinical trials in clinicaltrials.gov conducted in 2007 indicated that only 66% provided details on the primary outcome and only 56% described secondary outcomes [24]. Records entered by the top 10 pharmaceutical companies around the time of the implementation of the ICMJE compulsory trial registration policy were even more imprecise: in 657 records only 31% provided specific information on the primary outcome including the measure and the time frame [20]. When details of primary and secondary outcomes are entered into registries, the entries are often too vague for them to be useful for systematic reviewers to retrospectively detect bias. For example, several studies showed that the reporting of outcome measures and time for follow-up of outcomes was vague [24,30,31]. Examples include: providing an outcome such as “anxiety” but no measurement scale or providing a measurement (specific rating scale) but no time frame or method of analysis (e.g., categorical, change from baseline, period of follow-up).

A similar pattern was seen in the reporting of methodological aspects of trials in registries. One study that evaluated the adequacy of reporting of key methodological study details in 265 records of RCTs retrieved from the seven registries accessible through the World Health Organization meta-registry International Clinical Trials Registry Platform (WHO ICTRP) search portal (which at that time consisted of clinicaltrials.gov plus six primary registries from Australia, China, India, Germany, ISRCTN, and the Netherlands) showed that most records provided no useful information or insufficient detail on allocation concealment (98%), blinding (86%), or harms (90%) [25]. Explicit reporting of sample size calculations was adequate in only 1% of entries. In general, the Australian and Indian registries had a higher proportion of adequate reporting of methods, and these registries also provided specific fields for most of the methodological items assessed. This indicates that specific questions regarding trial methods are necessary to ensure that the information in the registry is usable for systematic reviewers.

Despite the fact that many registry entries contain vague information on outcomes or little information on trial methods, they may still be better than the information available for nonregistered trials. A cross-sectional study of 144 RCTs published in 55 journals compared the adequacy of methodological details such as participant flow and randomization implementation [26]. Reporting was significantly better in publications of RCTs that had been prospectively registered (participant flow: 76% vs. 38%, randomization: 48% vs. 22%). Similarly, where available, the reporting of methods was generally more detailed in registry entries than in the associated publications [42,45].

3.1.2.4. Mandatory availability of results

One study specifically compared the availability of results in the clinicaltrials.gov registry for pediatric trials subject to the FDA Amendments Act [32]. Of the 738 trials that were located and were subject to mandatory reporting, only 22% had reported results after 12 months. This is compared with 10% of trials not subject to mandatory reporting (QoE is very low).

3.2. Changes in the peer review process

3.2.1. Theoretical background

The role of the peer reviewer is to decide on the suitability of a manuscript for publication. The aim is to improve the quality of scientific articles by engaging an “expert” to review the work, thus preventing the publication of manuscripts with flawed methodology or results [1]. Critics of the peer-reviewing process claim that it increases bias because reviewers favor manuscripts from well-known authors or from institutions within their own country (geographical bias) [58,59]. Likewise, peer reviewers may discriminate against women (gender bias) [60].

3.2.2. Evidence of effectiveness

We located two studies where blinded peer review had been compared with anonymous review, and the effect on geographical and gender bias was analyzed [50,51]. Many studies of blinded peer reviewers that focused on acceptance of manuscripts in general or on quality or speed of reviewing in general were not included in this review [61–64].

3.2.2.1. Blinded peer review to reduce geographical bias

Two studies (one small randomized trial [51] and one large before and after study [50]) indicated that blinding peer reviewers reduced geographical bias (very low QoE).

The larger study compared the acceptance of 67,273 abstracts submitted to the American Heart Association's annual Scientific Sessions meeting in 2000 and 2001, when reviewers were aware of the name and institution of submitting authors, with the years 2002 through 2004, when abstracts were submitted anonymously [50]. Blinding of the peer review process significantly reduced the likelihood of preferential acceptance of abstracts from US authors, from countries with English as the official language, and from prestigious institutions (P < 0.001 for all comparisons). The acceptance rate of abstracts from women and men was equal under both open and blinded peer review. In contrast, one smaller randomized trial of blinded peer review of 40 manuscripts submitted to the journal Dermatologic Surgery failed to detect significant differences between the blinded and unblinded reviewers on geographical bias: no significant differences were detected between rates of recommendations to accept, accept with revisions, or reject manuscripts from US or non-US authors [51]. Unfortunately, this trial was underpowered to detect a small but important difference for this outcome.

3.3. Disclosure of CoI

3.3.1. Theoretical background

Many studies have shown that financial CoIs lead authors to favor the drugs produced by certain pharmaceutical companies and present a biased view of the scientific evidence for therapies or to make recommendations that favor certain drugs [54,65–67]. We did not include studies in this review that showed that CoI leads to biased research results or guideline recommendations or studies reporting on the policies of journals or organizations on disclosures of CoIs. In this section, we summarize the evidence that an implemented CoI policy is successful in revealing actual conflicts.

3.3.2. Accuracy of disclosures of CoI

3.3.2.1. Evidence of effectiveness

We located five studies that consistently showed that despite policies requiring authors and guideline panel members to disclose financial conflicts, between 8% and 29% did not reveal any conflict and up to two-thirds did not fully reveal their financial CoIs [52–56]. The authors of the studies cross-checked the declarations of CoI with publicly available information on the transfer of funds from pharmaceutical companies to doctors (e.g., Dollars for Docs), with registries of patent applications, or with previous journal articles from the same authors that contained declarations (see Table 2/Appendix at www.jclinepi.com for details). The QoE is low.

3.4. Open access

3.4.1. Theoretical background

“Open access” describes free access for all readers to publications of clinical trials in scientific journals (a system where users of the scientific literature have unlimited access to publications without paying subscription fees to the publishers of journals). To fund open-access journals, authors must pay “publication charges.” Open-access publishing might reduce publication bias because more space is available in open-access journals for publishing negative and nonsignificant results. On the other hand, moving the burden of payment to the authors of publications may worsen the problem of publication bias if it acts as a barrier to authors deciding to publish trial results. Costs for authors are usually between one and two thousand Euros, and journals often offer discounts for authors from developing countries. Several funders of clinical research in Europe mandate open access to the results of studies that they sponsor and provide funds for the publishing fee [1]. For readers of the literature, open access obviously provides more information for less cost.

3.4.2. Open-access publishing and geographical bias

3.4.2.1. Evidence of effectiveness

One study provided two analyses that sought to determine whether authors from low- or middle-income countries are discouraged from publishing in open-access journals (a cross-sectional comparison of journal types and a before–after analysis of two journals that implemented open-access publishing) [57]. The results are conflicting and very imprecise; however, they indicate that open-access publishing does not increase geographical bias (very low QoE). In detail, the before–after analysis of 485 articles from the two open-access journals (comparing the nature of articles before and after the change in policy) did not show any significant difference for authors from developing countries (OR 1.33; 95% CI: 0.7, 2.52). In contrast, in the cross-sectional comparison of four different infectious diseases journals at the same point in time (two subscription-based journals and two with open-access publishing fees of 1,230 US dollars), significantly fewer articles written by authors from developing countries were published in the journals with publication fees compared with the journals without publication charges (OR 0.25; 95% CI: 0.15, 0.41). This result is likely to be due to other confounding factors related to the differences between the journals.
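One way to illustrate how far apart the two estimates are is a z test on the difference of the log odds ratios. This is a sketch only: it assumes that the two analyses are statistically independent and that both intervals are symmetric on the log scale, neither of which is confirmed by the source, and it is not an analysis performed in the original study [57].

```python
import math

Z_95 = 1.959964  # two-sided 95% quantile of the standard normal distribution


def log_or_and_se(or_point, ci_low, ci_high):
    """Log odds ratio and its approximate SE, recovered from a 95% CI."""
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * Z_95)
    return math.log(or_point), se


def compare_odds_ratios(first, second):
    """z statistic and two-sided p value for the difference of two log ORs.

    Assumes the two estimates come from independent samples.
    """
    log_first, se_first = log_or_and_se(*first)
    log_second, se_second = log_or_and_se(*second)
    z = (log_first - log_second) / math.sqrt(se_first ** 2 + se_second ** 2)
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


before_after = (1.33, 0.70, 2.52)     # before-after analysis of two journals
cross_sectional = (0.25, 0.15, 0.41)  # cross-sectional comparison of four journals

z, p_value = compare_odds_ratios(before_after, cross_sectional)
print(f"z = {z:.2f}, p = {p_value:.5f}")
```

Under these assumptions, the two estimates differ by more than chance alone would readily explain, which fits the description of the results as conflicting.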

4. Discussion

The research and scientific community has been aware of the problem of publication bias, and has been calling for solutions to it, for many decades [68,69]. In 2010, Song et al. [1] identified several possible interventions that may reduce publication bias. To our knowledge, this is the first systematic review that sought to synthesize all the available empirical evidence for the effectiveness of these interventions. We located studies evaluating the success of only four measures, and their results indicate that we have made progress in the overall battle against publication bias but still have much work ahead. The only conclusions that we can support with low or moderate ratings for the quality of the body of evidence are that, although the use of clinical trial registries has substantially increased since the ICMJE policy change in 2005, nonpublication of trial results and positive outcome reporting remain pervasive, and the data available in registries are often not adequate for independent systematic reviewers to recognize this bias. Likewise, the evidence suggests that blinding peer reviewers can reduce geographical bias against non-US authors. On the one hand, this indicates a real potential to reduce related biases; on the other hand, the potential of blinding peer reviewers to affect the larger problem of publication bias is unclear. We did not locate any direct evidence comparing the rates of declarations of CoI over time or a direct connection between CoI disclosure and a reduction in selective outcome reporting; however, studies between 2006 and 2012 show a consistent and persistent pattern of inadequate declaration of financial CoIs. We found no studies on research sponsors' guidelines, right to publication, or electronic publication.

This review has some limitations. Initially, we concentrated our searches on databases; however, it soon became clear that in this complex field, the yield from electronic searches alone would be inadequate. We therefore extended our searches in a second phase to include snowballing techniques and approached experts for suggestions of eligible studies, as suggested by Greenhalgh and Peacock [17] for systematic reviews of complex evidence. Nonetheless, we cannot be sure that we have detected every study of an intervention to reduce publication bias. Another criticism of our review might be that we did not include studies of interventions such as blinded peer review or disclosure of CoI where the measured outcome was not a reduction in bias. We used a “chain of evidence” approach and determined that an improvement in the quality of peer reviews or an increase in citation rates of open-access articles did not constitute a link in the chain to reducing publication bias. In comparison, as shown in Fig. 1, we included many studies on the completeness of trial registries or their uptake because registries represent an increasingly crucial link in the pathway of clinical trial results publication. Likewise, the success of CoI disclosure is important in evaluating its ability to decrease publication bias.

The evidence available on the effectiveness of interventions is limited because it is difficult to design and conduct good trials or natural experiments to test many of the interventions suggested by commentators to reduce publication bias (e.g., changes of whole systems like ethics commissions or changes to national regulations/laws). Nonetheless, some interventions that are often suggested would be amenable to testing in well-conducted studies. For example, one could compare the reporting of results, including the accuracy of reporting of primary and secondary outcomes, in open-access journals with that in subscription journals. Likewise, we could compare the availability of negative or nonsignificant results in open-access and subscription journals. We could analyze how many trial results become public before and after a change to funding or ethics commission rules. We could also analyze more thoroughly the effect of interventions in the peer review system on biases such as outcome-reporting or geographical bias. It seems reasonable that we, as advocates and practitioners of evidence-based medicine, would base our campaigns for specific measures to reduce publication bias on a reasonable level of evidence for their effectiveness.

A second implication of this review is that the one intervention that has been successful in its uptake (trial registries) is unfortunately weakened by a lack of mandatory fields and a lack of regulation to ensure that all data provided are complete, accurate, and not altered, either during the course of a trial or after trial completion but before publication. In addition to mandating the use of registries, responsibility for enforcing the quality of information entered into them must be assigned, and an increase in the number of compulsory fields should be considered. We recommend that fully complete data in the mandatory fields of a trial registry should also be a prerequisite for ethics commission approval, for consideration for publication, and for funding of future clinical trials. Furthermore, a single registry in which all conducted trials can be found, such as the WHO ICTRP, is necessary, with each trial having a unique identifier number. The impact of the policy of mandatory reporting of results of registered trials within 1 year of their completion, as required by US law [70], needs to be evaluated further [43].

Lastly, we did not locate any evidence regarding the mandatory disclosure of all trial data or the format in which these data should be made available. Many researchers now contend that this would be the ultimate panacea for the problem of publication bias, allowing independent persons to evaluate, interpret, and summarize the results of clinical trials [71].

Acknowledgments

This report is the result of work done in the UNCOVER project. The consortium that contributed to the UNCOVER project includes persons from the Austrian Institute of Technology Department for Foresight & Policy Development (coordinating partner) and the University of North Carolina at Chapel Hill Gillings School of Global Public Health. A second report from this work package, which provides a thematic analysis and stakeholder interviews regarding barriers and facilitators of measures to reduce publication bias, has been published [72].

The authors thank Evelyn Auer and Julia Schober for administrative support on this project and the peer reviewer and experts whom we contacted for their advice.

Authors' contributions: All authors contributed to designing the review, participated in reviewing abstracts and full publications, synthesized the results, and contributed to writing the manuscript. In addition, K.T. was responsible for the review team, M.G.V.N. and I.K. performed the electronic searches, and C.K. coordinated the work package for this project.

Footnotes

Conflict of interest: None.

G.G. and K.T. are Director and Associate Director of the Austrian Branch of the Cochrane Collaboration, an organization that strongly advocates reducing publication bias and increasing transparency in clinical pharmaceutical research.

Funding: The research was funded by the European Union's Seventh Framework Program (FP7/2007-2013) under grant agreement number 282574.

References

- 1. Song F., Parekh S., Hooper L., Loke Y.K., Ryder J., Sutton A.J. Dissemination and publication of research findings: an updated review of related biases. Health Technol Assess. 2010;14:iii, ix-xi, 1-193. doi: 10.3310/hta14080.

- 2. Galsworthy M.J., Hristovski D., Lusa L., Ernst K., Irwin R., Charlesworth K. Academic output of 9 years of EU investment into health research. Lancet. 2012;380:971–972. doi: 10.1016/S0140-6736(12)61528-1.

- 3. Chan A.W., Song F., Vickers A., Jefferson T., Dickersin K., Gotzsche P.C. Increasing value and reducing waste: addressing inaccessible research. Lancet. 2014;383:257–266. doi: 10.1016/S0140-6736(13)62296-5.

- 4. Higgins J.P.T., Green S., editors. Cochrane handbook for systematic reviews of interventions version 5.1.0 [updated March 2011] The Cochrane Collaboration; Oxford, UK: 2011. Available at: http://handbook.cochrane.org/. Accessed Oct 3, 2014.

- 5. Caldwell B., Aldington S., Weatherall M., Shirtcliffe P., Beasley R. Risk of cardiovascular events and celecoxib: a systematic review and meta-analysis. J R Soc Med. 2006;99(3):132–140. doi: 10.1258/jrsm.99.3.132.

- 6. Ross J.S., Madigan D., Hill K.P., Egilman D.S., Wang Y., Krumholz H.M. Pooled analysis of rofecoxib placebo-controlled clinical trial data: lessons for postmarket pharmaceutical safety surveillance. Arch Intern Med. 2009;169:1976–1985. doi: 10.1001/archinternmed.2009.394.

- 7. Psaty B.M., Kronmal R.A. Reporting mortality findings in trials of rofecoxib for Alzheimer disease or cognitive impairment: a case study based on documents from rofecoxib litigation. JAMA. 2008;299:1813–1817. doi: 10.1001/jama.299.15.1813.

- 8. Whittington C.J., Kendall T., Fonagy P., Cottrell D., Cotgrove A., Boddington E. Selective serotonin reuptake inhibitors in childhood depression: systematic review of published versus unpublished data. Lancet. 2004;363:1341–1345. doi: 10.1016/S0140-6736(04)16043-1.

- 9. Jureidini J.N., McHenry L.B., Mansfield P.R. Clinical trials and drug promotion: selective reporting of study 329. Int J Risk Saf Med. 2008;20:73–81.

- 10. Vedula S.S., Goldman P.S., Rona I.J., Greene T.M., Dickersin K. Implementation of a publication strategy in the context of reporting biases. A case study based on new documents from Neurontin litigation. Trials. 2012;13:136. doi: 10.1186/1745-6215-13-136.

- 11. Vedula S.S., Bero L., Scherer R.W., Dickersin K. Outcome reporting in industry-sponsored trials of gabapentin for off-label use. N Engl J Med. 2009;361:1963–1971. doi: 10.1056/NEJMsa0906126.

- 12. Nissen S.E., Wolski K. Rosiglitazone revisited: an updated meta-analysis of risk for myocardial infarction and cardiovascular mortality. Arch Intern Med. 2010;170(14):1191–1201. doi: 10.1001/archinternmed.2010.207.

- 13. Jefferson T., Jones M.A., Doshi P., Del Mar C.B., Heneghan C.J., Hama R. Neuraminidase inhibitors for preventing and treating influenza in healthy adults and children. Cochrane Database Syst Rev. 2012:CD008965. doi: 10.1002/14651858.CD008965.pub3.

- 14. Eyding D., Lelgemann M., Grouven U., Harter M., Kromp M., Kaiser T. Reboxetine for acute treatment of major depression: systematic review and meta-analysis of published and unpublished placebo and selective serotonin reuptake inhibitor controlled trials. BMJ. 2010;341:c4737. doi: 10.1136/bmj.c4737.

- 15. Macaskill P., Walter S.D., Irwig L. A comparison of methods to detect publication bias in meta-analysis. Stat Med. 2001;20:641–654. doi: 10.1002/sim.698.

- 16. Terrin N., Schmid C.H., Lau J. In an empirical evaluation of the funnel plot, researchers could not visually identify publication bias. J Clin Epidemiol. 2005;58:894–901. doi: 10.1016/j.jclinepi.2005.01.006.

- 17. Greenhalgh T., Peacock R. Effectiveness and efficiency of search methods in systematic reviews of complex evidence: audit of primary sources. BMJ. 2005;331:1064–1065. doi: 10.1136/bmj.38636.593461.68.

- 18. Deeks J.J., Dinnes J., D'Amico R., Sowden A.J., Sakarovitch C., Song F. Evaluating non-randomised intervention studies. Health Technol Assess. 2003;7:iii-x, 1-173. doi: 10.3310/hta7270.

- 19. Guyatt G.H., Oxman A.D., Kunz R., Vist G.E., Falck-Ytter Y., Schunemann H.J. What is “quality of evidence” and why is it important to clinicians? BMJ. 2008;336:995–998. doi: 10.1136/bmj.39490.551019.BE.

- 20. Zarin D.A., Tse T., Ide N.C. Trial registration at ClinicalTrials.gov between May and October 2005. N Engl J Med. 2005;353:2779–2787. doi: 10.1056/NEJMsa053234.

- 21. Sekeres M., Gold J.L., Chan A.W., Lexchin J., Moher D., Van Laethem M.L. Poor reporting of scientific leadership information in clinical trial registers. PLoS One. 2008;3(2):e1610. doi: 10.1371/journal.pone.0001610.

- 22. Mathieu S., Boutron I., Moher D., Altman D.G., Ravaud P. Comparison of registered and published primary outcomes in randomized controlled trials. JAMA. 2009;302:977–984. doi: 10.1001/jama.2009.1242.

- 23. Rasmussen N., Lee K., Bero L. Association of trial registration with the results and conclusions of published trials of new oncology drugs. Trials. 2009;10:116. doi: 10.1186/1745-6215-10-116.

- 24. Ross J.S., Mulvey G.K., Hines E.M., Nissen S.E., Krumholz H.M. Trial publication after registration in ClinicalTrials.Gov: a cross-sectional analysis. PLoS Med. 2009;6(9):e1000144. doi: 10.1371/journal.pmed.1000144.

- 25. Reveiz L., Chan A.W., Krleza-Jeric K., Granados C.E., Pinart M., Etxeandia I. Reporting of methodologic information on trial registries for quality assessment: a study of trial records retrieved from the WHO search portal. PLoS One. 2010;5(8):e12484. doi: 10.1371/journal.pone.0012484.

- 26. Reveiz L., Cortes-Jofre M., Asenjo Lobos C., Nicita G., Ciapponi A., Garcia-Dieguez M. Influence of trial registration on reporting quality of randomized trials: study from highest ranked journals. J Clin Epidemiol. 2010;63:1216–1222. doi: 10.1016/j.jclinepi.2010.01.013.

- 27. Dekkers O.M., Soonawala D., Vandenbroucke J.P., Egger M. Reporting of noninferiority trials was incomplete in trial registries. J Clin Epidemiol. 2011;64:1034–1038. doi: 10.1016/j.jclinepi.2010.12.008.

- 28. Huic M., Marusic M., Marusic A. Completeness and changes in registered data and reporting bias of randomized controlled trials in ICMJE journals after trial registration policy. PLoS One. 2011;6(9):e25258. doi: 10.1371/journal.pone.0025258.

- 29. Viergever R.F., Ghersi D. The quality of registration of clinical trials. PLoS One. 2011;6(2):e14701. doi: 10.1371/journal.pone.0014701.

- 30. Zarin D.A., Tse T., Williams R.J., Califf R.M., Ide N.C. The ClinicalTrials.gov results database—update and key issues. N Engl J Med. 2011;364:852–860. doi: 10.1056/NEJMsa1012065.

- 31. Jones C.W., Platts-Mills T.F. Quality of registration for clinical trials published in emergency medicine journals. Ann Emerg Med. 2012;60(4):458–464 e1. doi: 10.1016/j.annemergmed.2012.02.005.

- 32. Prayle A.P., Hurley M.N., Smyth A.R. Compliance with mandatory reporting of clinical trial results on ClinicalTrials.gov: cross sectional study. BMJ. 2012;344:d7373. doi: 10.1136/bmj.d7373.

- 33. Reveiz L., Bonfill X., Glujovsky D., Pinzon C.E., Asenjo-Lobos C., Cortes M. Trial registration in Latin America and the Caribbean's: study of randomized trials published in 2010. J Clin Epidemiol. 2012;65:482–487. doi: 10.1016/j.jclinepi.2011.09.003.

- 34. Ross J.S., Tse T., Zarin D.A., Xu H., Zhou L., Krumholz H.M. Publication of NIH funded trials registered in ClinicalTrials.gov: cross sectional analysis. BMJ. 2012;344:d7292. doi: 10.1136/bmj.d7292.

- 35. Shamliyan T., Kane R.L. Clinical research involving children: registration, completeness, and publication. Pediatrics. 2012;129(5):e1291–e1300. doi: 10.1542/peds.2010-2847.

- 36. Smith H.N., Bhandari M., Mahomed N.N., Jan M., Gandhi R. Comparison of arthroplasty trial publications after registration in ClinicalTrials.gov. J Arthroplasty. 2012;27(7):1283–1288. doi: 10.1016/j.arth.2011.11.005.

- 37. van de Wetering F.T., Scholten R.J.P.M., Haring T., Clarke M., Hooft L. Trial registration numbers are underreported in biomedical publications. PLoS One. 2012;7(11):e49599. doi: 10.1371/journal.pone.0049599.

- 38. Wieseler B., Kerekes M.F., Vervoelgyi V., McGauran N., Kaiser T. Impact of document type on reporting quality of clinical drug trials: a comparison of registry reports, clinical study reports, and journal publications. BMJ. 2012;344:d8141. doi: 10.1136/bmj.d8141.

- 39. You B., Gan H.K., Pond G., Chen E.X. Consistency in the analysis and reporting of primary end points in oncology randomized controlled trials from registration to publication: a systematic review. J Clin Oncol. 2012;30:210–216. doi: 10.1200/JCO.2011.37.0890.

- 40. Hannink G., Gooszen H.G., Rovers M.M. Comparison of registered and published primary outcomes in randomized clinical trials of surgical interventions. Ann Surg. 2013;257(5):818–823. doi: 10.1097/SLA.0b013e3182864fa3.

- 41. Li X.Q., Yang G.L., Tao K.M., Zhang H.Q., Zhou Q.H., Ling C.Q. Comparison of registered and published primary outcomes in randomized controlled trials of gastroenterology and hepatology. Scand J Gastroenterol. 2013;48(12):1474–1483. doi: 10.3109/00365521.2013.845909.

- 42. Liu J.P., Han M., Li X.X., Mu Y.J., Lewith G., Wang Y.Y. Prospective registration, bias risk and outcome-reporting bias in randomised clinical trials of traditional Chinese medicine: an empirical methodological study. BMJ Open. 2013;3(7). doi: 10.1136/bmjopen-2013-002968.

- 43. Riveros C., Dechartres A., Perrodeau E., Haneef R., Boutron I., Ravaud P. Timing and completeness of trial results posted at ClinicalTrials.gov and published in journals. PLoS Med. 2013;10(12):e1001566. doi: 10.1371/journal.pmed.1001566.

- 44. Rosenthal R., Dwan K. Comparison of randomized controlled trial registry entries and content of reports in surgery journals. Ann Surg. 2013;257(6):1007–1015. doi: 10.1097/SLA.0b013e318283cf7f.

- 45. Tharyan P., George A.T., Kirubakaran R., Barnabas J.P. Reporting of methods was better in the Clinical Trials Registry—India than in Indian journal publications. J Clin Epidemiol. 2013;66:10–22. doi: 10.1016/j.jclinepi.2011.11.011.

- 46. Babu A.S., Veluswamy S.K., Rao P.T., Maiya A.G. Clinical trial registration in physical therapy journals: a cross-sectional study. Phys Ther. 2014;94:83–90. doi: 10.2522/ptj.20120531.

- 47. Killeen S., Sourallous P., Hunter I.A., Hartley J.E., Grady H.L. Registration rates, adequacy of registration, and a comparison of registered and published primary outcomes in randomized controlled trials published in surgery journals. Ann Surg. 2014;259(1):193–196. doi: 10.1097/SLA.0b013e318299d00b.

- 48. Shamliyan T.A., Kane R.L. Availability of results from clinical research: failing policy efforts. J Epidemiol Glob Health. 2014;4(1):1–12. doi: 10.1016/j.jegh.2013.08.002.

- 49. Viergever R.F., Karam G., Reis A., Ghersi D. The quality of registration of clinical trials: still a problem. PLoS One. 2014;9(1). doi: 10.1371/journal.pone.0084727.

- 50. Ross J.S., Gross C.P., Desai M.M., Hong Y., Grant A.O., Daniels S.R. Effect of blinded peer review on abstract acceptance. JAMA. 2006;295:1675–1680. doi: 10.1001/jama.295.14.1675.

- 51. Alam M., Kim N.A., Havey J., Rademaker A., Ratner D., Tregre B. Blinded vs. unblinded peer review of manuscripts submitted to a dermatology journal: a randomized multi-rater study. Br J Dermatol. 2011;165:563–567. doi: 10.1111/j.1365-2133.2011.10432.x.

- 52. Mayer S. Declaration of patent applications as financial interests: a survey of practice among authors of papers on molecular biology in Nature. J Med Ethics. 2006;32:658–661. doi: 10.1136/jme.2005.014290.

- 53. Okike K., Kocher M.S., Wei E.X., Mehlman C.T., Bhandari M. Accuracy of conflict-of-interest disclosures reported by physicians. N Engl J Med. 2009;361(15):1466–1474. doi: 10.1056/NEJMsa0807160.

- 54. Wang A.T., McCoy C.P., Murad M.H., Montori V.M. Association between industry affiliation and position on cardiovascular risk with rosiglitazone: cross sectional systematic review. BMJ. 2010;340:c1344. doi: 10.1136/bmj.c1344.

- 55. Neuman J., Korenstein D., Ross J.S., Keyhani S. Prevalence of financial conflicts of interest among panel members producing clinical practice guidelines in Canada and United States: cross sectional study. BMJ. 2011;343:d5621. doi: 10.1136/bmj.d5621.

- 56. Norris S.L., Holmer H.K., Ogden L.A., Burda B.U., Fu R. Characteristics of physicians receiving large payments from pharmaceutical companies and the accuracy of their disclosures in publications: an observational study. BMC Med Ethics. 2012;13:24. doi: 10.1186/1472-6939-13-24.

- 57. Liyanage S.S., Raina Macintyre C. Do financial factors such as author page charges and industry funding impact on the nature of published research in infectious diseases? Health Info Libr J. 2006;23:214–222. doi: 10.1111/j.1471-1842.2006.00665.x.

- 58. Link A.M. US and non-US submissions. An analysis of reviewer bias. JAMA. 1998;280:246–247. doi: 10.1001/jama.280.3.246.

- 59. Opthof T., Coronel R., Janse M.J. The significance of the peer review process against the background of bias: priority ratings of reviewers and editors and the prediction of citation, the role of geographical bias. Cardiovasc Res. 2002;56:339–346. doi: 10.1016/s0008-6363(02)00712-5.

- 60. Gilbert J.R., Williams E.S., Lundberg G.D. Is there gender bias in JAMA's peer review process? JAMA. 1994;272:139–142.

- 61. van Rooyen S., Godlee F., Evans S., Smith R., Black N. Effect of blinding and unmasking on the quality of peer review: a randomized trial. JAMA. 1998;280:234–237. doi: 10.1001/jama.280.3.234.

- 62. van Rooyen S., Godlee F., Evans S., Black N., Smith R. Effect of open peer review on quality of reviews and on reviewers' recommendations: a randomised trial. BMJ. 1999;318:23–27. doi: 10.1136/bmj.318.7175.23.

- 63. Van Rooyen S., Godlee F., Evans S., Smith R., Black N. Effect of blinding and unmasking on the quality of peer review. J Gen Intern Med. 1999;14:622–624. doi: 10.1046/j.1525-1497.1999.09058.x.

- 64. Schroter S., Black N., Evans S., Carpenter J., Godlee F., Smith R. Effects of training on quality of peer review: randomised controlled trial. BMJ. 2004;328:673. doi: 10.1136/bmj.38023.700775.AE. Available at: http://onlinelibrary.wiley.com/o/cochrane/clcentral/articles/643/CN-00769643/frame.html.

- 65. Bekelman J.E., Li Y., Gross C.P. Scope and impact of financial conflicts of interest in biomedical research: a systematic review. JAMA. 2003;289:454–465. doi: 10.1001/jama.289.4.454.

- 66. Jorgensen A.W., Hilden J., Gotzsche P.C. Cochrane reviews compared with industry supported meta-analyses and other meta-analyses of the same drugs: systematic review. BMJ. 2006;333:782. doi: 10.1136/bmj.38973.444699.0B.

- 67. Lundh A., Sismondo S., Lexchin J., Busuioc Octavian A., Bero L. Industry sponsorship and research outcome. Cochrane Database Syst Rev. 2012:MR000033. doi: 10.1002/14651858.MR000033.pub2. Available at: http://onlinelibrary.wiley.com/doi/10.1002/14651858.MR000033.pub2/abstract. Accessed March 15, 2014.

- 68. Chalmers I., Moher D. Publication bias. Lancet. 1993;342:1116. doi: 10.1016/0140-6736(93)92099-f.

- 69. Dickersin K., Min Y.I. Publication bias: the problem that won't go away. Ann N Y Acad Sci. 1993;703:135–146; discussion 146–8. doi: 10.1111/j.1749-6632.1993.tb26343.x.

- 70. ClinicalTrials.gov. 2012 [updated August 2012; cited 2012 September 3]. Available at http://clinicaltrials.gov/ct2/about-site/history. Accessed September 3, 2012.

- 71. Gotzsche P.C. Why we need easy access to all data from all clinical trials and how to accomplish it. Trials. 2011;12:249. doi: 10.1186/1745-6215-12-249.

- 72. Kien C., Nussbaumer B., Thaler K.J., Van Noord M.G., Wagner P., Gartlehner G. Barriers to and facilitators of interventions to counter publication bias: thematic analysis of scholarly articles and stakeholder interviews. BMC Health Serv Res. 2014;14:551. doi: 10.1186/s12913-014-0551-z.
