Abstract

In its search for neural codes, the field of visual neuroscience has uncovered neural representations that reflect the structure of stimuli of variable complexity from simple features to object categories. However, accumulating evidence suggests an adaptive neural code that is dynamically shaped by experience to support flexible and efficient perceptual decisions. Here, we review work showing that experience plays a critical role in molding midlevel visual representations for perceptual decisions. Combining behavioral and brain imaging measurements, we demonstrate that learning optimizes feature binding for object recognition in cluttered scenes, and tunes the neural representations of informative image parts to support efficient categorical judgements. Our findings indicate that similar learning mechanisms may mediate long-term optimization through development, tune the visual system to fundamental principles of feature binding, and optimize feature templates for perceptual decisions.

Keywords: perceptual learning, object recognition, categorization

Introduction

Evolution and development have been proposed to shape the organization of the visual system and result in structural neural codes that support visual recognition (Gilbert, Sigman, & Crist, 2001; Simoncelli & Olshausen, 2001). However, long-term experience is not the only means by which sensory processing becomes optimized. Learning through everyday experiences in adulthood has been shown to be a key facilitator for a range of tasks, from simple feature discrimination to object recognition (Fine & Jacobs, 2002; Goldstone, 1998; Schyns, Goldstone, & Thibaut, 1998). Here, we review how long-term experience and short-term training may interact to shape the optimization of visual recognition processes. While long-term experience hones the principles of organization that mediate feature grouping for object recognition, short-term training may establish new principles for the interpretation of natural scenes. Here, we summarize our recent work combining behavioral and brain imaging measurements to investigate the role of experience in optimizing neural coding for perceptual decisions. In particular, we focus on the role of learning in perceptual judgements under sensory uncertainty, such as when detecting stimuli presented in cluttered backgrounds or discriminating between highly similar stimuli. We ask: what are the learning mechanisms that mediate our ability to detect targets in cluttered scenes and assign new objects to meaningful categories?

Learning-dependent mechanisms for visual recognition in cluttered scenes

Recognizing meaningful objects entails integrating information at different levels of visual complexity, from local contours to complex features, independent of image changes (e.g., changes in position, size, pose, or background clutter). To achieve this challenging task, the brain is thought to exploit a network of connections that support the integration of object features based on image regularities that occur frequently in natural scenes (e.g., orientation similarity between neighboring elements; Geisler, 2008; Sigman, Cecchi, Gilbert, & Magnasco, 2001). For example, long-term experience with the high prevalence of collinear edges in natural environments (Geisler, 2008; Sigman et al., 2001) has been shown to result in enhanced sensitivity for the detection of collinear contours in clutter. However, recent work highlights the role of shorter-term training in feature binding and visual recognition in clutter. For example, observers have been shown to learn distinctive target features by exploiting regularities in natural scenes and suppressing background noise (Brady & Kersten, 2003; Dosher & Lu, 1998; Eckstein, Abbey, Pham, & Shimozaki, 2004; Gold, Bennett, & Sekuler, 1999; Li, Levi, & Klein, 2004). In particular, learning has been suggested to enhance the correlations between neurons responding to the features of target patterns while decorrelating neural responses to target and background patterns. As a result, redundancy in the physical input is reduced and target salience is enhanced (Jagadeesh, Chelazzi, Mishkin, & Desimone, 2001), supporting efficient detection and identification of objects in cluttered scenes (Barlow, 1990).

Further, our recent behavioral studies show that short-term experience in adulthood may modify the behavioral relevance (i.e., utility) of atypical contour statistics for the interpretation of natural scenes (Schwarzkopf & Kourtzi, 2008). Although collinearity is a prevalent principle for perceptual integration in natural scenes, we demonstrated that the brain can learn to exploit other image regularities for contour linking (Schwarzkopf & Kourtzi, 2008). In particular, observers learned to use discontinuities typically associated with surface boundaries (e.g., orthogonal or acute alignments) for contour linking and detection. Observers were trained with or without feedback to judge which of the two stimuli presented successively in a trial contained contours rather than only random elements (two-interval forced-choice task). Behavioral performance (i.e., detection accuracy) improved for the trained contours, and this improvement was maintained for a prolonged period (i.e., testing 6 to 8 months after training), suggesting optimization of perceptual integration processes through experience rather than simply transient changes in visual sensitivity. Thus, these findings provide evidence that short-term experience boosts the observers' ability to detect camouflaged targets by shaping the behavioral relevance of image statistics.

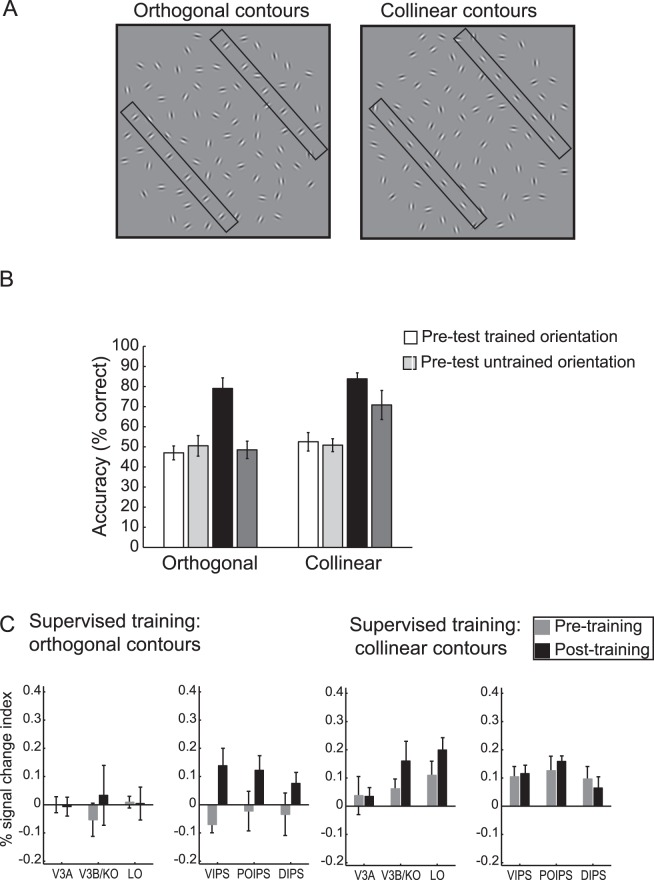

Our recent studies combining behavioral and brain imaging measurements (Zhang & Kourtzi, 2010) indicate two routes to visual learning in clutter with distinct signatures of brain plasticity (Figure 1). We show that long-term experience with statistical regularities (i.e., collinearity) may facilitate opportunistic learning (i.e., learning to exploit image cues), while learning to integrate discontinuities (i.e., elements orthogonal to contour paths) entails bootstrap-based training (i.e., learning new features) for the detection of contours in clutter. In particular, observers were trained on contour detection (two-interval forced-choice task) with auditory error feedback, or simply exposed to contour stimuli while performing a contrast-discrimination task (i.e., judging stimulus contrast) unrelated to contour detection. Learning to integrate collinear contours was shown to occur through frequent exposure, generalize across untrained stimulus features, and shape processing in higher occipitotemporal regions implicated in the representation of global forms. In contrast, learning to integrate discontinuities required task-specific training (bootstrap-based learning), was stimulus-dependent, and enhanced processing in intraparietal regions implicated in attention-gated learning. This is consistent with neuroimaging studies showing that a ventral cortex region becomes specialized through experience and development for letter integration and word recognition (Dehaene, Cohen, Sigman, & Vinckier, 2005), while parietal regions are recruited for recognizing words presented in unfamiliar formats (Cohen, Dehaene, Vinckier, Jobert, & Montavont, 2008). Taken together, these findings suggest that opportunistic learning of statistical regularities shapes bottom-up object processing in occipitotemporal areas, while learning new features and rules for perceptual integration recruits parietal regions involved in the attentional gating of recognition processes.

Figure 1.

Learning to detect contours in cluttered scenes. (A) Examples of stimuli: Collinear contours in which elements are aligned along the contour path and orthogonal contours in which elements are oriented at 90° to the contour path. For demonstration purposes only, two rectangles illustrate the position of the two contour paths in each stimulus. (B) Average behavioral performance across subjects (percent correct) before and after training for collinear and orthogonal contours presented at trained and untrained orientations. (C) fMRI responses for observers trained with orthogonal versus collinear contours. fMRI data (percent signal change for contour minus random stimuli) are shown for trained contour orientations before and after training on orthogonal versus collinear contours. Adapted with permission from Zhang and Kourtzi (2010).

Learning-dependent changes for shape categorization

Assigning novel objects to meaningful categories is critical for successful interactions in complex environments (Miller & Cohen, 2001). Extensive behavioral work on visual categorization (Goldstone, Lippa, & Shiffrin, 2001; Nosofsky, 1986; Schyns et al., 1998) suggests that the brain solves this challenging task by representing the relevance of visual features for categorical decisions rather than simply the physical similarity between objects. Neuroimaging studies have identified a large network of cortical and subcortical areas in the human brain that are involved in visual categorization (Ashby & Maddox, 2005; Keri, 2003) and have revealed a distributed pattern of activations for object categories in the temporal cortex (Hanson, Matsuka, & Haxby, 2004; Haxby et al., 2001; O'Toole, Jiang, Abdi, & Haxby, 2005; Williams, Dang, & Kanwisher, 2007).

Yet, using fMRI to isolate the neural code for flexible category learning is fraught with complexity. First, learning-dependent changes measured by BOLD may reflect different underlying changes in neural selectivity (i.e., sharpening of neuronal tuning to a visual stimulus): There may be enhanced responses to the preferred stimulus, decreased responses to nonpreferred stimuli, or a combination of the two. A number of studies have shown that learning changes the overall responsiveness (i.e., increased or decreased fMRI responses) of visual areas to trained stimuli (Kourtzi, Betts, Sarkheil, & Welchman, 2005; Mukai et al., 2007; Op de Beeck, Baker, DiCarlo, & Kanwisher, 2006; Sigman et al., 2005; Yotsumoto, Watanabe, & Sasaki, 2008). However, considering only the overall magnitude of the fMRI signal (i.e., a univariate measure) does not allow us to discern whether learning-dependent changes in fMRI relate to changes in the overall magnitude of neural responses or changes in the selectivity of neural populations. Second, to quantify changes in (a) perceptual performance and (b) brain responses due to learning, it is useful to vary stimuli parametrically and test changing levels of performance. However, simple univariate fMRI measures may be insufficient to reveal subtle changes in neuronal responses and the links between perceptual and neuronal states. Here, we discuss recent work using multivoxel pattern classification methods that take into account activity across patterns of voxels to investigate the link between learning-dependent changes in neural representations and behavior.
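The contrast between univariate and multivoxel measures can be illustrated with a toy simulation. The sketch below is not the analysis used in the studies reviewed here: the four-voxel patterns, the noise level, and the nearest-centroid decoder are all illustrative assumptions. It shows that two conditions can evoke the same mean response across voxels while remaining separable from their response patterns.

```python
import random


def mean(values):
    return sum(values) / len(values)


def simulate_trial(pattern, noise_sd, rng):
    """Return one noisy multivoxel response around a condition's mean pattern."""
    return [v + rng.gauss(0.0, noise_sd) for v in pattern]


def nearest_centroid(trial, centroids):
    """Predict the label whose centroid is closest (squared Euclidean distance)."""
    def dist(label):
        return sum((t - c) ** 2 for t, c in zip(trial, centroids[label]))
    return min(centroids, key=dist)


rng = random.Random(0)

# Hypothetical mean patterns over 4 voxels: same overall mean, different pattern.
patterns = {"A": [1.0, 0.0, 1.0, 0.0], "B": [0.0, 1.0, 0.0, 1.0]}

train = {k: [simulate_trial(p, 0.3, rng) for _ in range(50)]
         for k, p in patterns.items()}
test = [(k, simulate_trial(p, 0.3, rng))
        for k, p in patterns.items() for _ in range(50)]

# Univariate measure: mean response across voxels and trials, per condition.
univariate = {k: mean([mean(t) for t in trials]) for k, trials in train.items()}
univariate_gap = abs(univariate["A"] - univariate["B"])

# Multivoxel measure: decode the condition from the response pattern.
centroids = {k: [mean(col) for col in zip(*trials)] for k, trials in train.items()}
accuracy = mean([1.0 if nearest_centroid(t, centroids) == k else 0.0
                 for k, t in test])

print(f"univariate gap: {univariate_gap:.3f}, decoding accuracy: {accuracy:.2f}")
```

In this toy case the univariate gap stays near zero while decoding accuracy is high, which is the situation in which a pattern-based analysis is informative and an average-signal analysis is not.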

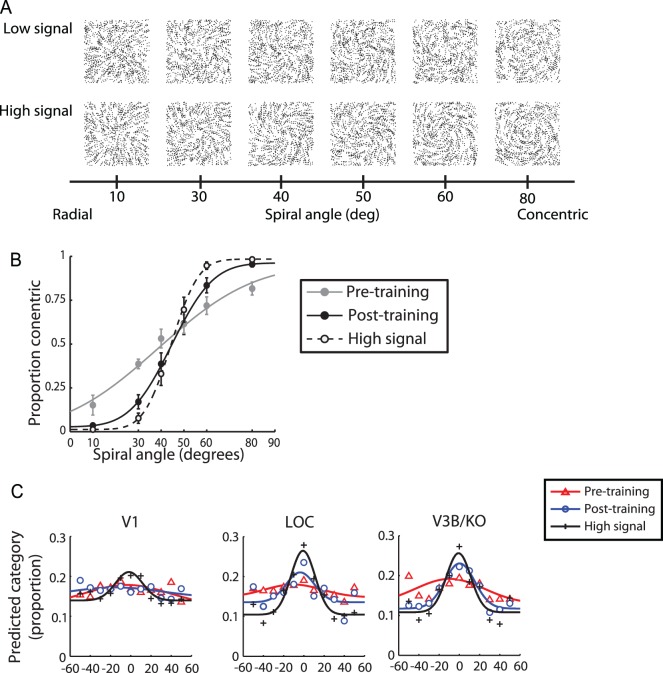

In a first set of studies (Zhang, Meeson, Welchman, & Kourtzi, 2010), we tested the mechanisms that mediate our ability to learn visual shape categories by combining behavioral judgments with high-resolution imaging (i.e., smaller voxels for finer grained measurements) and multivoxel pattern classification analysis tools (Haynes & Rees, 2005; Kamitani & Tong, 2005). To gain insight into the way in which visual form representations change with training, we employed a parametric stimulus space in which we could morph systematically between two different stimulus classes (concentric vs. radial glass patterns; Figure 2A). By adding external noise to the displays, and using fine-scale variations in the morphing space, we were able to characterize observers' behavioral discrimination performance before and after training (Figure 2B). Participants were trained to discriminate concentric from radial patterns with feedback in three training sessions, and were scanned on the same task (without feedback) before and after training. Based on fMRI measurements concurrent with stimulus presentation, we were able to evaluate the ability of the machine-learning algorithm to decode the presented stimuli. We were particularly interested in the choices of the pattern classifier in predicting each of the presented stimuli. Using the distribution of choices for each stimulus we generated fMRI-based voxel tuning functions that described the distribution of choices made by the classifier when given data measured in different visual areas (Figure 2C). Thereby we sought to link learning-based changes in behavioral responses and fMRI responses by comparing psychometric functions and fMRI pattern-based tuning functions before and after training.
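As a rough illustration of how a pattern-based tuning function can be tabulated from classifier choices, the following sketch counts how often the classifier's prediction differs from the viewed stimulus by a given spiral-angle offset. The function name, the three-stimulus space, and the example predictions are hypothetical and are not taken from the original data or analysis code.

```python
from collections import Counter


def pattern_tuning_function(viewed, predicted):
    """Proportion of classifier predictions at each (predicted - viewed) offset."""
    if not viewed or len(viewed) != len(predicted):
        raise ValueError("viewed and predicted must be non-empty and equal length")
    offsets = Counter(p - v for v, p in zip(viewed, predicted))
    total = len(viewed)
    return {d: offsets[d] / total for d in sorted(offsets)}


# Hypothetical spiral angles (degrees) of viewed stimuli and classifier choices.
viewed = [0, 0, 0, 0, 30, 30, 30, 30, 60, 60, 60, 60]
predicted = [0, 0, 30, 0, 30, 0, 30, 60, 60, 60, 30, 60]

tuning = pattern_tuning_function(viewed, predicted)
for offset, proportion in tuning.items():
    print(f"offset {offset:+d} deg: {proportion:.2f}")
```

A peak at zero offset that falls off with increasing offset corresponds to a tuned response: the classifier confuses similar stimuli more often than dissimilar ones.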

Figure 2.

Learning to discriminate global shapes. (A) Stimuli: Low- and high-signal Glass pattern stimuli. (B) Behavioral performance (average data across observers) across stimuli in the morphing space (as indicated by spiral angle) during scanning. The curves indicate the best fit of the cumulative Gaussian function for high-signal stimuli and low-signal stimuli before and after training. Error bars indicate the standard error of the mean. (C) fMRI pattern-based tuning functions for V1, LO, and V3B/KO: The proportion of predictions made to each stimulus condition in terms of the difference in spiral angle between the viewed stimulus and the prediction. Symbols indicate average data across observers; solid lines indicate the best fit of a Gaussian to the data from 1,000 bootstrap samples. Adapted with permission from Zhang et al. (2010).

Comparing the performance of human observers and classifiers demonstrated that learning altered the observers' sensitivity to visual forms and the tuning of fMRI activation patterns in visual areas selective for task-relevant features. For high signal stimuli (i.e., 80% of the dots in the display were aligned to the stimulus shape, while 20% were randomly positioned), we observed a tuned response across visual areas, that is, the classifiers mispredicted similar stimuli more frequently than dissimilar ones. Consistent with the behavioral results, these tuning functions had higher amplitude for high-signal stimuli than low-signal stimuli (i.e., only 45% of the dots in the display were aligned to the stimulus shape) before training in higher visual areas. However, training on low-signal stimuli enhanced the amplitude while it reduced the width of pattern-based tuning functions in higher dorsal and ventral visual areas (Figure 2C). Increased amplitude after training indicates higher stimulus discriminability that may relate to enhanced neural responses for the preferred stimulus category at the level of large neural populations. Reduced tuning width after training indicates fewer classification mispredictions, suggesting that learning decreases neural responses to nonpreferred stimuli. Thus, these findings suggest that learning of visual patterns is implemented in the human visual cortex by enhancing the response to the preferred stimulus category, while reducing the response to nonpreferred stimuli.
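The two tuning parameters discussed above can be made concrete with a Gaussian form. The expression below is a sketch under assumptions: the symbols $b$, $A$, and $\sigma$, the baseline term, and the centering at zero offset are illustrative choices, not the exact parameterization of the original fits.

```latex
p(\Delta\theta) = b + A \exp\!\left( -\frac{\Delta\theta^{2}}{2\sigma^{2}} \right)
```

Here $\Delta\theta$ is the difference in spiral angle between the classifier's prediction and the viewed stimulus, $A$ is the tuning amplitude, $\sigma$ is the tuning width, and $b$ is a baseline proportion of predictions. In these terms, the training effect described above corresponds to a larger $A$ and a smaller $\sigma$.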

Learning-dependent changes for optimal decisions

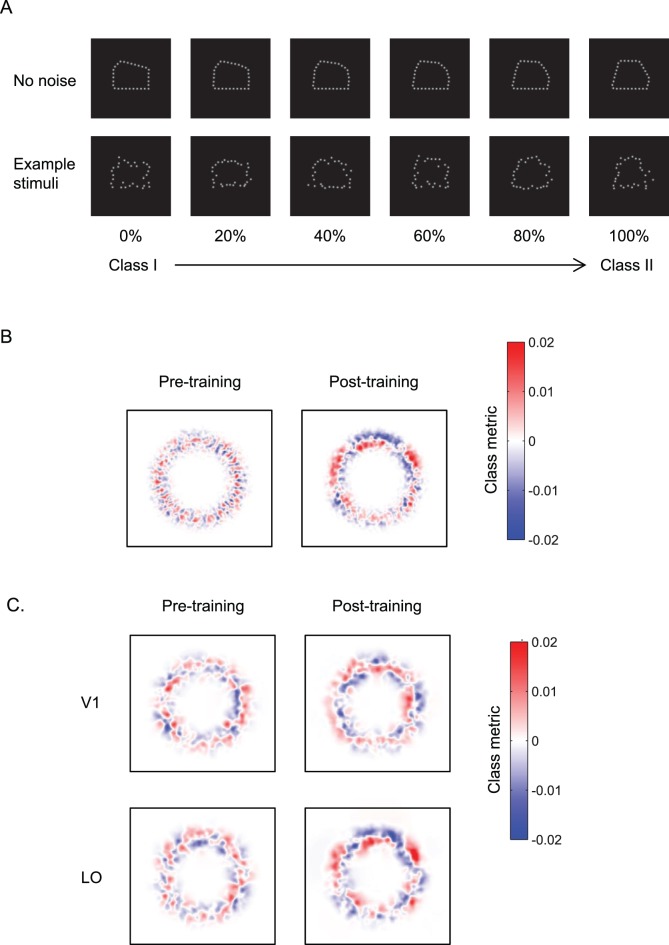

Learning is known to optimize mental templates of object features that are critical for efficient performance in perceptual tasks (e.g., categorical discrimination; Dobres & Seitz, 2010; Dupuis-Roy & Gosselin, 2007; Gold et al., 1999; Li et al., 2004). In recent work (Kuai, Levi, & Kourtzi, 2013), we investigated the mechanisms that the brain uses to optimize templates for perceptual decisions through experience. Observers were trained (three training sessions with feedback) to discriminate visual forms (two classes of polygons) that were randomly perturbed by noise (Figure 3A) and were scanned on the same task (without feedback) before and after training. To identify the specific stimulus components that determine the observer's choice (i.e., the discriminative features), we reverse-correlated behavioral choices and fMRI signals with noisy stimulus trials. This approach has been widely used in psychophysics (for reviews see Abbey & Eckstein, 2002; Eckstein & Ahumada, 2002); however, its application to neuroimaging has been limited by noisy single-trial fMRI signals and the small number of samples that can be acquired during fMRI scans (Schyns, Gosselin, & Smith, 2009; Smith et al., 2008). To overcome these limitations, we used reverse correlation in conjunction with multivoxel pattern analysis. We calculated decision templates based on the choices made by a linear support vector machine (SVM) classifier that decodes the stimulus class from the fMRI data measured on individual stimulus trials. By reverse correlating behavioral and multivoxel pattern responses with noisy stimulus trials, we identified the critical image parts that determine the observer's choice.
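The logic of reverse correlation can be sketched in a few lines: average the noise on trials that led to one choice, subtract the average noise on trials that led to the other, and the locations that drove the decision stand out. The simulation below is a simplified illustration rather than the published analysis. The six-location noise fields, the simulated observer, and its template are hypothetical, and the same function could in principle be applied to choices made by a classifier instead of an observer.

```python
import random


def classification_image(noise_fields, choices):
    """Mean noise on Class I (1) trials minus mean noise on Class II (2) trials."""
    n_loc = len(noise_fields[0])
    sums = {1: [0.0] * n_loc, 2: [0.0] * n_loc}
    counts = {1: 0, 2: 0}
    for field, choice in zip(noise_fields, choices):
        counts[choice] += 1
        for i, value in enumerate(field):
            sums[choice][i] += value
    if counts[1] == 0 or counts[2] == 0:
        raise ValueError("need at least one trial per choice")
    return [sums[1][i] / counts[1] - sums[2][i] / counts[2] for i in range(n_loc)]


rng = random.Random(1)
n_locations, n_trials = 6, 4000

# Hypothetical observer: relies on locations 1 and 4 only, with opposite signs.
template = [0.0, 1.0, 0.0, 0.0, -1.0, 0.0]

noise_fields, choices = [], []
for _ in range(n_trials):
    field = [rng.gauss(0.0, 1.0) for _ in range(n_locations)]
    # Decision variable: template match plus internal noise (an assumption).
    decision_var = sum(w * x for w, x in zip(template, field)) + rng.gauss(0.0, 0.5)
    noise_fields.append(field)
    choices.append(1 if decision_var > 0 else 2)

image = classification_image(noise_fields, choices)
print([round(v, 2) for v in image])
```

Locations that the simulated observer ignores average out to values near zero, whereas the two informative locations take clearly positive and negative values, recovering the sign structure of the template.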

Figure 3.

Learning optimizes decision templates. (A) Sample pentagon-like stimuli comprising 30 equally spaced Gaussian dots with 0.1° SD. Two classes of shapes were generated by varying the location of the pentagon lines that differed in their length. The top panel shows the stimulus space generated by linear morphing between Class I and Class II polygons (stimuli are shown as a function of percent Class II). The bottom panel shows example stimuli with position noise, as presented in the experiment. (B) Behavioral classification images before and after training, averaged across participants. (C) fMRI classification images for V1 and LO before and after training, averaged across participants. Red indicates image locations associated with a Class I decision, while blue indicates image locations associated with a Class II decision. Adapted with permission from Kuai et al. (2013).

Our findings showed that observers learned to integrate information across locations and weight the discriminative image parts. Classification images based on the observers' performance after training showed marked differences between image parts associated with the two stimulus classes (Figure 3B). In contrast, we did not observe any consistent image parts associated with the two stimulus classes before training, ensuring that the classification images reflected the perceived differences between classes rather than local image differences between stimuli.

Having characterized the behavioral decision template, we used fMRI to determine where in the visual cortex this template is implemented. Our results show that classification images in the lateral occipital (LO) area, but not in early visual areas, revealed image parts that were perceptually distinct between the two stimulus classes (Figure 3C). Importantly, there was little information about this perceptual template before training, ensuring that fMRI classification templates reflect the perceived classes rather than stimulus examples. Comparing classification images derived from behavioral and fMRI data showed that similar image parts became more discriminable between the two stimulus classes after training, suggesting correspondence between behavioral and fMRI templates. Further, we found that these templates were also tolerant of stimulus size changes. In particular, after training, we tested observers' performance for stimuli that were presented at different sizes (1.5 or 2 times larger) from the trained stimuli. Our findings showed that behavioral and fMRI classification images for stimuli of trained and untrained size were highly similar, suggesting that learning tunes representations of discriminative image parts in higher ventral cortex that are tolerant to image changes rather than specific local image positions.

Summary

Understanding the midlevel representations that support shape perception remains a challenge in cognitive neuroscience and computer vision. Computational and experimental approaches (Marr, 1982; Pasupathy & Connor, 2002; Wilson & Wilkinson, 2014) are converging in uncovering how object structure is encoded in the human visual cortex. Yet, object representations are surprisingly adaptive to changes in environmental statistics, implying that learning through evolution, development, but also shorter-term experience during adulthood, may shape the object code. Here we propose that adaptive shape coding in the visual cortex is at the core of flexible decision making. We demonstrate that learning optimizes feature binding for object recognition in cluttered scenes, and tunes the neural representations of informative image parts for efficient categorical judgements. Thus, understanding the brain dynamics (i.e., interactions between large-scale networks and local cortical circuits) that support adaptive neural processing in the human brain emerges as the next key challenge in visual neuroscience.

Acknowledgments

This work was supported by a Wellcome Trust Senior Research Fellowship to AEW (095183/Z/10/Z) and grants to ZK from the Biotechnology and Biological Sciences Research Council (H012508), a Leverhulme Trust Research Fellowship (RF-2011-378) and the People Programme (Marie Curie Actions) of the European Union's Seventh Framework Programme FP7/2007-2013/ under REA grant agreement no. PITN-GA-2011-290011.

Commercial relationships: none.

Corresponding author: Zoe Kourtzi.

Email: zk240@cam.ac.uk.

Address: Department of Psychology, University of Cambridge, Cambridge, UK.

References

- Abbey C. K., Eckstein M. P. (2002). Classification image analysis: Estimation and statistical inference for two-alternative forced-choice experiments. Journal of Vision, 2(1):5, 66–78, http://www.journalofvision.org/content/2/1/5, doi:10.1167/2.1.5.

- Ashby F. G., Maddox W. T. (2005). Human category learning. Annual Review of Psychology, 56, 149–178.

- Barlow H. (1990). Conditions for versatile learning, Helmholtz's unconscious inference, and the task of perception. Vision Research, 30, 1561–1571.

- Brady M. J., Kersten D. (2003). Bootstrapped learning of novel objects. Journal of Vision, 3(6):2, 413–422, http://www.journalofvision.org/content/3/6/2, doi:10.1167/3.6.2.

- Cohen L., Dehaene S., Vinckier F., Jobert A., Montavont A. (2008). Reading normal and degraded words: Contribution of the dorsal and ventral visual pathways. NeuroImage, 40, 353–366.

- Dehaene S., Cohen L., Sigman M., Vinckier F. (2005). The neural code for written words: A proposal. Trends in Cognitive Sciences, 9, 335–341.

- Dobres J., Seitz A. R. (2010). Perceptual learning of oriented gratings as revealed by classification images. Journal of Vision, 10(13):8, 1–11, http://www.journalofvision.org/content/10/13/8, doi:10.1167/10.13.8.

- Dosher B. A., Lu Z. L. (1998). Perceptual learning reflects external noise filtering and internal noise reduction through channel reweighting. Proceedings of the National Academy of Sciences, USA, 95, 13988–13993.

- Dupuis-Roy N., Gosselin F. (2007). Perceptual learning without signal. Vision Research, 47, 349–356.

- Eckstein M. P., Abbey C. K., Pham B. T., Shimozaki S. S. (2004). Perceptual learning through optimization of attentional weighting: Human versus optimal Bayesian learner. Journal of Vision, 4(12):3, 1006–1019, http://www.journalofvision.org/content/4/12/3, doi:10.1167/4.12.3.

- Eckstein M. P., Ahumada A. J., Jr. (2002). Classification images: A tool to analyze visual strategies. Journal of Vision, 2(1):i, http://www.journalofvision.org/content/2/1/i, doi:10.1167/2.1.i.

- Fine I., Jacobs R. A. (2002). Comparing perceptual learning tasks: A review. Journal of Vision, 2(2):5, 190–203, http://www.journalofvision.org/content/2/2/5, doi:10.1167/2.2.5.

- Geisler W. S. (2008). Visual perception and the statistical properties of natural scenes. Annual Review of Psychology, 59, 167–192.

- Gilbert C. D., Sigman M., Crist R. E. (2001). The neural basis of perceptual learning. Neuron, 31, 681–697.

- Gold J., Bennett P. J., Sekuler A. B. (1999). Signal but not noise changes with perceptual learning. Nature, 402, 176–178.

- Goldstone R. L. (1998). Perceptual learning. Annual Review of Psychology, 49, 585–612.

- Goldstone R. L., Lippa Y., Shiffrin R. M. (2001). Altering object representations through category learning. Cognition, 78, 27–43.

- Hanson S. J., Matsuka T., Haxby J. V. (2004). Combinatorial codes in ventral temporal lobe for object recognition: Haxby (2001) revisited: Is there a “face” area? NeuroImage, 23, 156–166.

- Haxby J. V., Gobbini M. I., Furey M. L., Ishai A., Schouten J. L., Pietrini P. (2001). Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science, 293, 2425–2430.

- Haynes J. D., Rees G. (2005). Predicting the orientation of invisible stimuli from activity in human primary visual cortex. Nature Neuroscience, 8, 686–691.

- Jagadeesh B., Chelazzi L., Mishkin M., Desimone R. (2001). Learning increases stimulus salience in anterior inferior temporal cortex of the macaque. Journal of Neurophysiology, 86, 290–303.

- Kamitani Y., Tong F. (2005). Decoding the visual and subjective contents of the human brain. Nature Neuroscience, 8, 679–685.

- Keri S. (2003). The cognitive neuroscience of category learning. Brain Research: Brain Research Reviews, 43, 85–109.

- Kourtzi Z., Betts L. R., Sarkheil P., Welchman A. E. (2005). Distributed neural plasticity for shape learning in the human visual cortex. PLoS Biology, 3, e204.

- Kuai S. G., Levi D., Kourtzi Z. (2013). Learning optimizes decision templates in the human visual cortex. Current Biology, 23, 1799–1804.

- Li R. W., Levi D. M., Klein S. A. (2004). Perceptual learning improves efficiency by re-tuning the decision ‘template’ for position discrimination. Nature Neuroscience, 7, 178–183.

- Marr D. (1982). Vision: A computational approach. San Francisco: Freeman & Co.

- Miller E. K., Cohen J. D. (2001). An integrative theory of prefrontal cortex function. Annual Review of Neuroscience, 24, 167–202.

- Mukai I., Kim D., Fukunaga M., Japee S., Marrett S., Ungerleider L. G. (2007). Activations in visual and attention-related areas predict and correlate with the degree of perceptual learning. Journal of Neuroscience, 27, 11401–11411.

- Nosofsky R. M. (1986). Attention, similarity, and the identification-categorization relationship. Journal of Experimental Psychology: General, 115, 39–61.

- O'Toole A. J., Jiang F., Abdi H., Haxby J. V. (2005). Partially distributed representations of objects and faces in ventral temporal cortex. Journal of Cognitive Neuroscience, 17, 580–590.

- Op de Beeck H. P., Baker C. I., DiCarlo J. J., Kanwisher N. G. (2006). Discrimination training alters object representations in human extrastriate cortex. Journal of Neuroscience, 26, 13025–13036.

- Pasupathy A., Connor C. E. (2002). Population coding of shape in area V4. Nature Neuroscience, 5, 1332–1338.

- Schwarzkopf D. S., Kourtzi Z. (2008). Experience shapes the utility of natural statistics for perceptual contour integration. Current Biology, 18, 1162–1167.

- Schyns P. G., Goldstone R. L., Thibaut J. P. (1998). The development of features in object concepts. Behavioral & Brain Sciences, 21, 1–17; discussion 17–54.

- Schyns P. G., Gosselin F., Smith M. L. (2009). Information processing algorithms in the brain. Trends in Cognitive Sciences, 13, 20–26.

- Sigman M., Cecchi G., Gilbert C., Magnasco M. (2001). On a common circle: Natural scenes and Gestalt rules. Proceedings of the National Academy of Sciences, USA, 98, 1935–1940.

- Sigman M., Pan H., Yang Y., Stern E., Silbersweig D., Gilbert C. D. (2005). Top-down reorganization of activity in the visual pathway after learning a shape identification task. Neuron, 46, 823–835.

- Simoncelli E. P., Olshausen B. A. (2001). Natural image statistics and neural representation. Annual Review of Neuroscience, 24, 1193–1216.

- Smith F. W., Muckli L., Brennan D., Pernet C., Smith M. L., Belin P., Gosselin F., Hadley D. M., Cavanagh J., Schyns P. G. (2008). Classification images reveal the information sensitivity of brain voxels in fMRI. NeuroImage, 40, 1643–1654.

- Williams M. A., Dang S., Kanwisher N. G. (2007). Only some spatial patterns of fMRI response are read out in task performance. Nature Neuroscience, 10, 685–686.

- Wilson H. R., Wilkinson F. (2014). Configural pooling in the ventral pathway. In Werner J. S., Chalupa L. (Eds.), The new visual neurosciences (pp. 617–626). Cambridge, MA: MIT Press.

- Yotsumoto Y., Watanabe T., Sasaki Y. (2008). Different dynamics of performance and brain activation in the time course of perceptual learning. Neuron, 57, 827–833.

- Zhang J., Kourtzi Z. (2010). Learning-dependent plasticity with and without training in the human brain. Proceedings of the National Academy of Sciences, USA, 107, 13503–13508.

- Zhang J., Meeson A., Welchman A. E., Kourtzi Z. (2010). Learning alters the tuning of fMRI multi-voxel patterns for visual forms. Journal of Neuroscience, 30, 14127–14133.
