Abstract

The authors investigated the feasibility of using computer-assisted instruction in patients of varying literacy levels by examining patients’ preferences for learning and their ability to use 2 computer-based educational programs. A total of 263 participants 50–74 years of age with varying health literacy levels interacted with 1 of 2 educational computer programs as part of a randomized trial of a colorectal cancer screening decision aid. A baseline survey and a postprogram evaluation survey were completed. More than half (56%) of the participants had limited health literacy. Regardless of literacy level, doctors were the most commonly used source of medical information, used frequently by 85% of both limited and adequate literacy patients. In multivariate logistic regression, only health insurance (OR = 2.35, p = .06) and prior computer use (OR = 0.39, p = .03) were associated with needing assistance to complete the programs, compared with no health insurance and no prior computer use, respectively. Although patients with limited health literacy had less computer experience, the majority completed the programs without any assistance and stated that they learned more than they would have from a brochure. Future research should investigate ways that computer-assisted instruction can be incorporated in medical care to enhance patient understanding.

Communication is essential for the effective delivery of health care. Unfortunately, there is often a mismatch between a clinician's level of communication and a patient's level of comprehension. Evidence shows that patients often misinterpret or do not understand much of the information given to them by clinicians (Baker, Gazmararian, Sudano, & Patterson, 2000). There are many reasons why patients do not understand what clinicians tell them, but key among them is inadequate health literacy—a limited ability to obtain, process, and understand basic health information and services needed to make appropriate health care decisions (Nielsen-Bohlman, Panzer, Hamlin, & Kindig, 2004).

Limited or low health literacy diminishes a person's capacity to engage in fruitful interactions with care providers (Baker et al., 2000). In addition, limited literacy patients rarely self-identify. Many are embarrassed to let the health care team know of their inability to read or understand instructions, which often leads to poor health outcomes, less knowledge of disease management, frequent return visits to the health care provider, and additional out-of-pocket expenses (Gazmararian, Williams, Peel, & Baker, 2003; Howard, Gazmararian, & Parker, 2005; Sudore et al., 2006). This limited literacy can also lead to “ . . . medication errors, missed appointments, adverse medical outcomes, and even malpractice lawsuits” (Weiss, 2003, p. 4).

The first national assessment of adult literacy (Kutner, Greenberg, Jin, & Paulsen, 2006) found more than one third of men and women in the United States to be at the lowest levels of literacy. Among racial and ethnic minorities and low-income adults, however, more than half had basic or below basic literacy skills, which contributes to health disparities. Groups with the highest prevalence of chronic disease and the greatest need for health care have the least ability to read and comprehend information needed to function as patients (Gazmararian et al., 2003; Parker et al., 1999; Wolf et al., 2006).

Traditional patient education relies heavily on written material about disease prevention and management. Unfortunately, most patient education materials are written at too high a grade level for low-literate patients to comprehend essential points (Gazmararian et al., 2003; Nutbeam, 2000; Wolf et al., 2004). Novel methods of patient education are needed to overcome health literacy barriers. One promising method is computer-assisted instruction (CAI), the use of computer programs to deliver an educational message. Computer-assisted instruction offers the potential to overcome literacy barriers by combining graphics, video clips, and audio segments to minimize reliance on the written word. In addition, CAI standardizes the material being presented and allows patients to proceed at their own pace. It also can incorporate interactivity to engage the user and target the content delivered.

Previous studies have demonstrated that CAI can improve knowledge, social support, and some health behaviors (Fox, 2009; Jibaja-Weiss et al., 2011; Murray, Burns, See, Lai, & Nazareth, 2005). Studies examining clinical outcomes have yielded mixed results (Fox, 2009), although a meta-analysis of web- and computer-based programs for smoking cessation found they significantly increased smoking cessation (Fox, 2009; Murray et al., 2005; Myung, McDonnell, Kazinets, Seo, & Moskowitz, 2009). Only a few studies of CAI have specifically targeted low-literacy individuals, and none of these studies report results according to patient literacy level (Andersen, Andersen, & Youngblood, 2011; Garbers et al., 2012). It is unclear whether patients with low health literacy have access to computers, have the ability to navigate a computer program, or would accept CAI. We investigated the feasibility of using CAI to educate patients of varying literacy levels by examining the usability and patient preference data from a randomized controlled trial comparing two computer-based patient programs in a mixed-literacy population.

Method

Study Design

Between November 2007 and September 2008, we enrolled 264 patients with varying health literacy levels in a randomized controlled trial testing the effectiveness of a web-based colorectal cancer screening patient decision aid in a large community-based, university-affiliated internal medicine practice (Miller et al., 2011). The Wake Forest University School of Medicine Institutional Review Board approved the study protocol, and all participants provided written informed consent.

Participants were English-speaking patients between 50 and 74 years of age who were scheduled for a routine medical visit and who were overdue for colorectal cancer screening. Before the medical visit, each participant completed a verbally administered questionnaire that included items about usual sources of health information, trust in various sources of health information, computer access, and computer experience.

After participants completed the questionnaire, they were randomly assigned to interact with one of two web-based educational programs: CHOICE (a colorectal cancer screening decision aid), or YourMeds (an educational program about prescription medication safety). Both programs were developed for a mixed-literacy audience with prominent use of audio narration, graphics, pictures, and animations or video. Both programs were displayed on a computer with a touch screen monitor and external speakers. A research assistant started the assigned program and then left the participant to view the program in privacy. Participants were instructed to contact the research assistant if they had any questions or difficulty using the program.

After completing the program, each participant completed a program evaluation survey. The research assistant also recorded the number of times the participant asked for assistance using the program. Additional details of the study design have been published elsewhere (Miller et al., 2011).

Measurements

Outcomes

For this feasibility study, our main outcome of interest was computer program usability. We determined computer program usability by examining three factors: the number of times patients needed assistance using the programs, patient assessment of ease of computer program use, and patient assessment of ease of understanding the material presented. Secondary outcomes included patients’ self-rated learning from the programs, and patients’ preferences for the programs, as determined by the postprogram surveys.

Predictor

We stratified the study sample on the basis of patient health literacy level (limited literacy vs. adequate literacy). We defined adequate literacy as ability to read above the eighth-grade level, as measured by the Rapid Estimate of Adult Literacy in Medicine (Davis et al., 1993).

Data Analysis

Data analyses were conducted using SAS 9.2 (SAS Institute Inc, 2009). Potential differences between the two literacy groups were assessed using chi-square tests for proportions and t-tests for means. We used chi-square tests to determine whether our outcomes of interest (ability to use program without assistance, ease of use, ease of understanding, self-rated learning, and patient preference) varied by literacy level. We created a multivariate logistic regression model for the outcome of completing the computer programs without assistance. The covariates in the model included patient demographics (age, sex, race, marital status [married/living together vs. other], employment status [employed vs. unemployed], insurance status [insured vs. uninsured], annual household income [<$20,000 vs. $20,000 or greater]) and computer experience (having a home computer, having used a computer, having used e-mail). Because the effect of having computer experience could vary by literacy level, we also tested for a potential interaction between literacy level and computer experience. We used a backward stepping algorithm, forcing patient literacy level to remain in the model but excluding any covariate that was not significant at p < .1.
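To make the bivariate comparisons concrete, the following is a minimal Python sketch of a Pearson chi-square test for a 2 × 2 table. It is an illustration only and not the SAS code used for the analyses. The function name `chi_square_2x2` is hypothetical, and the sketch assumes no continuity correction. With one degree of freedom the p value has the closed form erfc(√(χ²/2)), so only the standard library is needed.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test (no continuity correction) for the table [[a, b], [c, d]].

    Returns the test statistic and the p value for 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be positive")
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Illustrative input: home computer ownership by literacy group (counts from Table 1).
limited_yes, limited_n = 54, 146
adequate_yes, adequate_n = 60, 117
chi2, p_value = chi_square_2x2(
    limited_yes, limited_n - limited_yes,
    adequate_yes, adequate_n - adequate_yes,
)
print(f"chi-square = {chi2:.2f}, p = {p_value:.3f}")
```

A value recomputed this way need not match a published p value exactly, because the tables report rounded values and some survey items have missing responses.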

Results

Research assistants contacted by telephone 401 potentially eligible patients who agreed to participate. Of these patients, 264 were confirmed eligible, enrolled into the study, and randomized with equal probability to view one of the two instructional computer programs. One randomized patient did not view a computer program because of a computer error and was therefore excluded from analysis.

Table 1 describes participants’ characteristics. Mean age was 57.8 (SD = 7.2), and slightly more than half (56%) had limited health literacy. Those most likely to have limited literacy were men, African Americans, insured individuals, and those with lower educational achievement. Limited literacy participants were less likely to report experience with computers or the Internet. Only 37% of limited literacy patients had a home computer, and 42% reported no computer experience. Few limited literacy patients (16%) had ever used the Internet to look for medical information.

Table 1.

Sample demographics (N = 263)

| Patient characteristics | Limited health literacy (n = 146), n (%) | Adequate health literacy (n = 117), n (%) | p* |
|---|---|---|---|
| Sex | | | |
| Female | 86 (59) | 91 (78) | .001 |
| Age, M (SD) | 58.8 (7) | 56.6 (6) | .02† |
| Race | | | |
| African American | 121 (83) | 72 (63) | .001 |
| Marital status | | | |
| Married/living together | 35 (24) | 30 (26) | .01 |
| Annual income | | | |
| <$10,000 | 59 (45) | 37 (32) | |
| $10,000–$19,999 | 44 (34) | 46 (40) | .42 |
| ≥$20,000 | 28 (21) | 32 (28) | |
| Employment | | | |
| Employed | 31 (21) | 36 (31) | .30 |
| Insurance | | | |
| Uninsured | 44 (30) | 52 (44) | .05 |
| Education | | | |
| Less than high school | 65 (44) | 25 (21) | |
| High school diploma/GED | 48 (33) | 38 (33) | .001 |
| Some college or greater | 33 (23) | 54 (46) | |
| Computer access | | | |
| Have a home computer | 54 (37) | 60 (51) | .05 |
| Have Internet at home | 40 (27) | 50 (43) | .01 |
| Have high-speed Internet access at home | 31 (21) | 44 (39) | .01 |
| Prior computer use | | | |
| Have used a PC | | | |
| Yes | 85 (58) | 94 (80) | .001 |
| Have used Internet | | | |
| Yes | 44 (30) | 68 (58) | .001 |
| No | 103 (70) | 49 (42) | |
| Have used e-mail | | | |
| Yes | 32 (22) | 58 (50) | .001 |
| No | 53 (78) | 36 (50) | |
| Frequency of Internet use | | | |
| Never | 101 (70) | 46 (40) | |
| Less often | 16 (11) | 20 (17) | |
| Once or twice per month | 3 (2) | 9 (8) | .001 |
| 1–2 days per week | 8 (6) | 9 (8) | |
| 3–5 days per week | 6 (4) | 8 (7) | |
| Every day | 10 (7) | 24 (21) | |
| Frequency of Internet use for medical information | | | |
| Never | 121 (84) | 63 (55) | |
| Less often | 8 (7) | 23 (20) | |
| Once or twice per month | 6 (4) | 17 (15) | .001 |
| Once per week | 2 (1) | 4 (4) | |
| A few times per week | 4 (3) | 6 (5) | |
| Every day | 3 (2) | 2 (2) | |

*Chi-square test unless otherwise indicated.

†Wilcoxon two-sample t test.

For both limited and adequate literacy patients, doctors were the most frequently used source of information (used “sometimes” or “a lot” by 85% of patients in each group), followed by television (69% vs. 70%), family members (64% vs. 65%), and pharmacists (61% vs. 63%; Table 2). Limited literacy patients were less likely than adequate literacy patients to use and trust the Internet and text-based sources of information such as newspapers, magazines, and books. Overall, health professionals and family members were the sources of information most trusted by both limited and adequate literacy patients.

Table 2.

Use and trust of information sources, by literacy level (N = 263)

| Source of information | Use*: limited literacy (n = 146), n (%) | Use*: adequate literacy (n = 117), n (%) | Use*: p | Trust#: limited literacy (n = 146), n (%) | Trust#: adequate literacy (n = 117), n (%) | Trust#: p |
|---|---|---|---|---|---|---|
| Personal source | | | | | | |
| Doctor | 125 (85) | 99 (85) | .93 | 138 (94) | 114 (97) | .17 |
| Pharmacist | 89 (61) | 73 (63) | .76 | 125 (86) | 107 (92) | .11 |
| Family | 94 (64) | 76 (65) | .92 | 109 (75) | 83 (71) | .50 |
| Nonprint media | | | | | | |
| TV | 102 (69) | 81 (70) | .94 | 94 (64) | 81 (69) | .41 |
| Radio | 49 (33) | 30 (26) | .17 | 57 (40) | 43 (39) | .82 |
| Internet | 26 (18) | 40 (34) | .01 | 48 (40) | 56 (55) | .05 |
| Printed source | | | | | | |
| Newspaper | 56 (38) | 60 (51) | .03 | 69 (48) | 70 (61) | .05 |
| Magazine | 62 (42) | 63 (54) | .06 | 78 (54) | 74 (64) | .09 |
| Book | 75 (51) | 79 (68) | .01 | 97 (67) | 86 (75) | .19 |

*Participants who report using the source of information “sometimes” or “a lot.”

#Participants who report they trust the source of information “somewhat” or “a lot.”

Regardless of literacy level, more than 98% of participants stated the programs were easy to use, were preferred to reading a brochure, and would be recommended to others (Table 3). More than 90% of all patients reported that they learned something important from the programs, and 80% reported that they learned something new. However, limited literacy patients were more likely than adequate literacy patients to state that they learned more from the programs than they would have from a brochure (97% vs. 88%, p = .05).

Table 3.

Patient evaluation of computer-assisted instructional programs, by literacy level (N = 263)

| Computer-assisted instruction questions | Limited literacy: agree, n (%) | Adequate literacy: agree, n (%) | p |
|---|---|---|---|
| I learned something new from watching this program. | 124 (85) | 87 (75) | .24 |
| I learned something important from watching this program. | 134 (91) | 107 (92) | .81 |
| I would like to see other similar computer programs on health topics that interest me. | 139 (95) | 114 (98) | .34 |
| Compared to reading a brochure, I liked watching this computer program more. | 143 (98) | 112 (98) | .59 |
| I learned more from watching this computer program than I would have learned from reading a brochure. | 140 (97) | 101 (88) | .05 |
| This program was easy to use. | 71 (99) | 59 (100) | 1.00 |
| This program was easy to understand. | 72 (100) | 59 (100) | 1.00 |
| I would recommend this program to my friends and neighbors. | 144 (99) | 115 (99) | .36 |

The two programs varied slightly in their complexity. After an initial introduction, CHOICE offered users a touchscreen menu to customize which section was seen next. YourMeds offered the user the option to repeat a section, but it was otherwise linear in terms of its programming (pressing a single touchscreen button advanced the program to the next segment). This difference in complexity was reflected in the amount of assistance required. Among patients with adequate literacy, only 1 person (1.75%) needed any assistance to complete the YourMeds program, compared with 16 (27%) who needed some assistance to complete the CHOICE program.

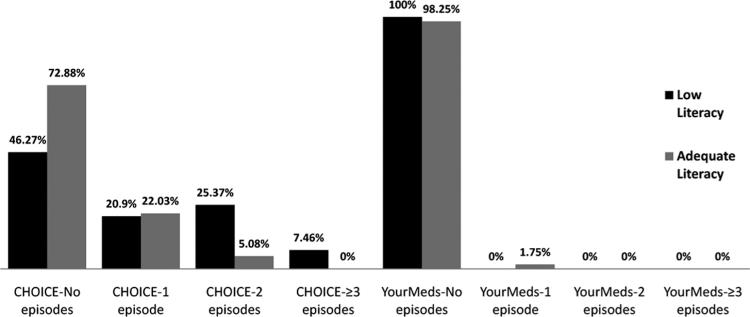

Overall, limited literacy patients were more likely to require assistance than adequate literacy patients. However, neither group needed much help, given that more than three quarters of all participants were able to complete the programs without any assistance (73% for limited literacy vs. 86% for adequate literacy, p = .03). This difference in the amount of assistance needed was seen only for the more complex CHOICE program, where only 5% of adequate literacy patients needed two or more episodes of assistance compared with 33% of limited literacy patients, p < .01 (see Figure 1).

Fig. 1.

Episodes of assistance required while using computer programs. Fisher's exact test: CHOICE, p < .01; YourMeds, p = .44.
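For readers unfamiliar with Fisher's exact test, which suits comparisons like these where some cells are very small, the following is a minimal Python sketch for a 2 × 2 table. It is an illustration only, not the code used in the study, and the function name is hypothetical. The two-sided p value sums the hypergeometric probabilities of all tables that are no more likely than the observed one. The example input uses the Table 3 item “This program was easy to use,” with group denominators of 72 and 59 inferred from the printed percentages (an assumption).

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    total_tables = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x.
        return comb(row1, x) * comb(row2, col1 - x) / total_tables

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Sum every table at least as extreme (no more probable) as the observed one.
    p_value = sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)
    )
    return min(1.0, p_value)


# Agree vs. disagree that the program was easy to use:
# limited literacy 71 of 72 (inferred), adequate literacy 59 of 59 (inferred).
p_easy_to_use = fisher_exact_two_sided(71, 1, 59, 0)
print(f"p = {p_easy_to_use:.2f}")
```

With a single disagreeing respondent, every possible table is at most as likely as the observed one, so the test returns a p value of 1. This agrees with the value printed for that item in Table 3, although the article does not state which test produced it.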

Logistic regression was used to assess the effect of literacy on requiring any assistance after adjustment for other covariates. These results are shown in Table 4. Literacy was not associated with needing assistance in either the full or the reduced model. In the reduced model, only insurance status and lack of computer experience were associated with needing assistance to complete the computer programs. Patients with health insurance were more likely to require some assistance (OR = 2.36, p = .02), compared with those without insurance. Patients who had used a computer before were less likely to require any assistance (OR = 0.45, p = .02), compared with those without prior computer use. In that model, patients with limited health literacy had higher odds of requiring assistance, but the result was not statistically significant in the multivariate analysis (OR = 1.49, p = .25).

Table 4.

Association of individual patient characteristics with needing one or more episodes of assistance to complete the educational computer programs (N = 263)

| Patient characteristics | Full model: odds ratio (95% CI) | Full model: p | Reduced model*: odds ratio (95% CI) | Reduced model*: p |
|---|---|---|---|---|
| Health literacy | | | | |
| Limited | 1.14 (0.53–2.47) | .74 | 1.49 (0.76–2.92) | .25 |
| Adequate | 1 (Ref) | | | |
| Sex | | | | |
| Male | 0.97 (0.46–2.05) | .94 | — | — |
| Female | 1 (Ref) | | | |
| Age† | 0.99 (0.93–1.04) | .65 | — | — |
| Race/ethnicity | | | | |
| White | 0.57 (0.22–1.48) | .25 | — | — |
| Non-White | 1 (Ref) | | | |
| Marital status | | | | |
| Married | 1.37 (0.59–3.17) | .47 | — | — |
| Unmarried | 1 (Ref) | | | |
| Yearly household income | | | | |
| ≥$20,000 | 1.19 (0.47–3.03) | .72 | — | — |
| <$20,000 | 1 (Ref) | | | |
| Employment status | | | | |
| Employed | 0.88 (0.33–2.31) | .79 | — | — |
| Unemployed | 1 (Ref) | | | |
| Health insurance status | | | | |
| Insured | 2.35 (0.98–5.65) | .06 | 2.36 (1.13–4.94) | .02 |
| Uninsured | 1 (Ref) | | | |
| Has used a computer | 0.39 (0.16–0.91) | .03 | 0.45 (0.24–0.86) | .02 |
| Has PC in home | 0.89 (0.40–1.99) | .77 | — | — |
| Has used e-mail | 0.70 (0.27–1.85) | .48 | — | — |

*Reduced model after using a backward-stepping algorithm, removing any covariate with p > .1 and forcing health literacy to remain in the model.

†Odds ratio is for each additional year of age.
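As a reading aid for Table 4, a p value can be approximately recovered from a reported odds ratio and its 95% CI, because a Wald interval is symmetric on the log-odds scale. The Python sketch below assumes the published intervals are Wald intervals; the article does not state how they were computed, and the helper name is hypothetical. Rounding of the published bounds limits the accuracy to roughly two decimal places.

```python
import math

Z_95 = 1.959964  # standard normal quantile for a two-sided 95% interval


def wald_p_from_ci(odds_ratio, ci_lower, ci_upper):
    """Approximate two-sided Wald p value from an odds ratio and its 95% CI."""
    log_or = math.log(odds_ratio)
    # On the log scale, the interval spans 2 * Z_95 standard errors.
    std_error = (math.log(ci_upper) - math.log(ci_lower)) / (2 * Z_95)
    z = log_or / std_error
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


# Rows taken from Table 4: (label, odds ratio, lower bound, upper bound).
rows = [
    ("Limited literacy, reduced model", 1.49, 0.76, 2.92),
    ("Insured, reduced model", 2.36, 1.13, 4.94),
    ("Insured, full model", 2.35, 0.98, 5.65),
]
for label, odds_ratio, lower, upper in rows:
    print(f"{label}: p = {wald_p_from_ci(odds_ratio, lower, upper):.2f}")
```

For these three rows, the recovered values agree with the published p values (.25, .02, and .06) after rounding to two decimals.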

A separate analysis was done to assess the effect of having computer experience stratified by health literacy, as we thought the benefit of computer experience might vary between the two groups. Among those with limited health literacy, those with computer experience were less likely to require assistance (OR = 0.34, p = .01). In comparison, the effect of computer experience on need for assistance was not statistically significant for those with adequate health literacy (OR = 0.88, p = .84).

Because the majority of patients with insurance had either Medicaid (which is associated with lower socioeconomic status) or Medicare coverage (a marker for disability or older age), we reran the multivariate logistic regression model classifying insurance in three levels (uninsured, Medicaid/Medicare, or other insurance). In this post hoc analysis, only those with Medicaid or Medicare were more likely to need assistance compared with those with no insurance (OR = 2.50, p = .02). Patients with other forms of insurance had no significant increase in need for assistance (OR = 1.60, p = .50).

Discussion

Although only a minority of limited health literacy patients reported owning a computer and many had never used one, almost all were able to complete user-friendly touch screen computer programs with minimal assistance. In addition, limited and adequate literacy users overwhelmingly reported that they preferred the programs over traditional printed patient education materials and that they would recommend the programs to friends. Consistent with our findings, previously published surveys have reported that patients with lower socioeconomic status have less computer access, the so-called “digital divide” (Horrigan, 2009). However, we are aware of only one study that has examined computer access and usability by literacy level (Gerber et al., 2005). In that study of a touchscreen diabetes educational intervention, the authors found a similarly low prevalence of computer usage among limited literacy patients. Our study is significant because it demonstrates that CAI is a feasible method for overcoming literacy barriers in medical care, despite limited literacy patients’ lack of computer experience. The study also objectively tracked how much assistance CAI users needed to complete one of the two web-based educational programs (CHOICE [a colorectal cancer screening decision aid], or YourMeds [an educational program about prescription medication safety]).

The ability of computer-naïve individuals to complete these computer programs may be due to the increasing prevalence of touch screen devices in society. Grocery stores, discount stores, and ATMs all use touch screen computer systems to enable self-service. In one recent study targeting low-literacy Mexican Americans, both Hispanic and White respondents reported using an ATM or store self-checkout at least once per week (Andersen et al., 2011). More and more low-income individuals are using computers, often in libraries rather than at home, and computer use by these individuals is expected to increase substantially in the next decade (Strömberg, Ahlén, Fridlund, & Dahlström, 2002). Recent advances in communications technology, particularly with regard to mobile devices, should make it easier to develop even more user-friendly programs that will appeal to users of all literacy levels. We found that more than 98% of limited and adequate literacy patients stated that they preferred our computer programs to a brochure and that they would recommend our programs to a friend, indicating that a single intervention can be used for all patients regardless of literacy level.

The two programs examined in this study varied slightly in complexity (one program included a touchscreen menu allowing users to choose which segment to view next, and the other program progressed linearly). All limited literacy patients were able to complete the simpler, linear program without any assistance, whereas approximately one third needed more than one episode of assistance to complete the program that included a menu. This finding highlights the importance of a simple user interface for any program targeting low-literacy audiences. Future research should investigate how to allow content to be tailored without harming usability.

We also found that limited literacy patients are most likely to rely on and trust personal sources of information such as doctors, pharmacists, and family members. In contrast with adequate literacy patients, those with limited literacy are less likely to use or trust printed sources of information. Given that using the Internet relies heavily on the ability to read and type text, we were not surprised to see that limited literacy patients were also less likely than adequate literacy patients to report Internet usage. Limited literacy patients’ overwhelming acceptance of our computer-based educational programs may indicate that they perceived the programs as part of their doctors’ practice, given that they interacted with them at the medical office. In addition, the programs included pictures and videos of doctors and pharmacists, two trusted sources of information, and this may have enhanced patient acceptance.

We examined self-reported measures of ease of use and an objective measure of how much assistance the user required to complete the program. More than 99% of patients reported the programs were easy to use; however, 14% of low and adequate literacy patients required one episode of assistance or more for the CHOICE program. This discrepancy between patient self-report and observed performance suggests that self-report may overestimate ease of use. We suggest that future studies include objective measures of ease of use to guide the development of the best user interfaces.

Prior studies of CAI consistently show it is effective for improving patients’ knowledge (Fox, 2009; Murray et al., 2005). Although CAI can be a valuable tool for communicating with limited literacy patients, lessons from other CAI studies indicate it needs to be combined with other interventions to be most effective at changing behavior (Bussey-Smith & Rossen, 2007; Gerber et al., 2005; Hamel, Robbins, & Wilbur, 2011; Miller et al., 2005; Miller et al., 2011).

Behavior change is a complex process which requires patients to appreciate a significant risk, see benefit in taking an action, possess self-confidence, and overcome obstacles (Glanz, Rimer, & Viswanath, 2008). Through education, CAI can inform patients of risks and benefits, and perhaps it can also increase self-efficacy. However, other interventions are likely needed to minimize barriers to change and support patients through the change process. Therefore, CAI is likely to be most effective when it is incorporated in a larger, supportive system.

Our study has limitations. First, we measured learning from the program only by patient self-report. Second, our results documenting ease of use are limited to the two computer programs we created. Future research should examine which specific features of programs facilitate ease of use by limited literacy patients. Third, although our study population was racially diverse, our sample was limited to English-speaking patients. Last, this study is limited to the patient population at a single site, and hence the findings may not generalize to other populations.

Patients’ failure to understand often leads to noncompliance, unnecessary emergency room visits, and poor health outcomes. Patients need to be able to follow written and numerical directions regarding their therapeutic regimens and diagnostic tests, ask questions of medical personnel, report prior treatment and conditions, and solve problems that arise during the course of their care. We found that limited literacy patients were able to successfully complete our computer-based patient education programs and rated the programs highly in ease of use and value of information received. Accordingly, CAI is a valuable tool for overcoming literacy barriers. Clinicians need such tools for implementing a patient-centered approach that addresses the challenges in navigating the health care system, removing barriers, and providing understandable preventive and self-care skills for vulnerable populations. Because most limited literacy patients lack home computer access, future studies should investigate ways CAI can be incorporated in medical settings to supplement patient education.

The main implication of this study is that CAI is an acceptable and easy-to-use tool for limited literacy patients seeking health information. Computer-assisted instruction can help remove communication and literacy barriers for patients who have had difficulty understanding their doctors, asking questions in medical encounters, or comprehending printed patient education materials. We recognize a need for health care administrators to work with program managers and clinicians in finding ways to implement CAI in their health care settings to enhance patient–clinician communication and understanding, particularly for limited literacy patients.

Acknowledgments

Funding

This research received funding from the American Cancer Society.

References

- Andersen S, Andersen P, Youngblood NE. Multimedia computerized smoking awareness education for low-literacy Hispanics. Computers, Informatics, Nursing. 2011;29:107–114. doi: 10.1097/NCN.0b013e3181f9dd81.

- Baker DW, Gazmararian JA, Sudano J, Patterson M. The association between age and health literacy among elderly persons. Journals of Gerontology Series B: Psychological Sciences & Social Sciences. 2000;55B(6):S368–S374. doi: 10.1093/geronb/55.6.s368.

- Bussey-Smith KL, Rossen RD. A systematic review of randomized control trials evaluating the effectiveness of interactive computerized asthma patient education programs. Annals of Allergy, Asthma and Immunology. 2007;98:507–516. doi: 10.1016/S1081-1206(10)60727-2.

- Davis TC, Long SW, Jackson RH, Mayeaux EJ, George RB, Murphy PW, Crouch MA. Rapid estimate of adult literacy in medicine: A shortened screening instrument. Family Medicine. 1993;25:391–395.

- Fox MP. A systematic review of the literature reporting on studies that examined the impact of interactive, computer-based patient education programs. Patient Education and Counseling. 2009;77:6–13. doi: 10.1016/j.pec.2009.02.011.

- Garbers S, Meserve A, Kottke M, Hatcher R, Ventura A, Chiasson MA. Randomized controlled trial of a computer-based module to improve contraceptive method choice. Contraception. 2012;86:383–390. doi: 10.1016/j.contraception.2012.01.013.

- Gazmararian JA, Williams MV, Peel J, Baker DW. Health literacy and knowledge of chronic disease. Patient Education and Counseling. 2003;51:267–275. doi: 10.1016/s0738-3991(02)00239-2.

- Gerber BS, Brodsky IG, Lawless KA, Smolin LI, Arozullah AM, Smith EV, Eiser AR. Implementation and evaluation of a low-literacy diabetes education computer multimedia application. Diabetes Care. 2005;28:1574–1580. doi: 10.2337/diacare.28.7.1574.

- Glanz K, Rimer BK, Viswanath K. Health behavior and health education: Theory, research, and practice. 4th ed. Jossey-Bass; San Francisco, CA: 2008.

- Hamel LM, Robbins LB, Wilbur J. Computer- and web-based interventions to increase preadolescent and adolescent physical activity: A systematic review. Journal of Advanced Nursing. 2011;67:251–268. doi: 10.1111/j.1365-2648.2010.05493.x.

- Horrigan J. Home broadband adoption 2009: Broadband adoption increases, but monthly prices do too. 2009. Retrieved from http://www.pewinternet.org/~/media/Files/Reports/2009/Home-Broadband-Adoption-2009.pdf.

- Howard DH, Gazmararian J, Parker RM. The impact of low health literacy on the medical costs of Medicare managed care enrollees. American Journal of Medicine. 2005;118:371–377. doi: 10.1016/j.amjmed.2005.01.010.

- Jibaja-Weiss ML, Volk RJ, Granchi TS, Neff NE, Robinson EK, Spann SJ, Beck JR. Entertainment education for breast cancer surgery decisions: A randomized trial among patients with low health literacy. Patient Education and Counseling. 2011;84:41–48. doi: 10.1016/j.pec.2010.06.009.

- Kutner M, Greenberg E, Jin Y, Paulsen C. The health literacy of America's adults: Results from the 2003 National Assessment of Adult Literacy. (NCES 2006–483) National Center for Education Statistics, U.S. Department of Education; Washington, DC: 2006.

- Miller DP, Jr., Kimberly JR, Jr., Case LD, Wofford JL. Using a computer to teach patients about fecal occult blood screening: A randomized trial. Journal of General Internal Medicine. 2005;20:984–988. doi: 10.1111/j.1525-1497.2005.0081.x.

- Miller DP, Jr., Spangler JG, Case LD, Goff DC, Jr., Singh S, Pignone MP. Effectiveness of a web-based colorectal cancer screening patient decision aid: A randomized controlled trial in a mixed-literacy population. American Journal of Preventive Medicine. 2011;40:608–615. doi: 10.1016/j.amepre.2011.02.019.

- Murray E, Burns J, See TS, Lai R, Nazareth I. Interactive health communication applications for people with chronic disease. Cochrane Database Systematic Review. 2005;19(4):CD004274. doi: 10.1002/14651858.CD004274.pub4.

- Myung SK, McDonnell DD, Kazinets G, Seo HG, Moskowitz JM. Effects of web- and computer-based smoking cessation programs: Meta-analysis of randomized controlled trials. Archives of Internal Medicine. 2009;169:929–937. doi: 10.1001/archinternmed.2009.109.

- Nielsen-Bohlman L, Panzer AM, Hamlin D, Kindig DA, editors. Health literacy: A prescription to end confusion. National Academies Press; Washington, DC: 2004.

- Nutbeam D. Health literacy as a public health goal: A challenge for contemporary health education and communication strategies into the 21st century. Health Promotion International. 2000;15:259–267.

- Parker RM, Williams MV, Weiss BD, Baker DW, Davis TC, Doak CC, Somers SA. Health literacy: Report of the council on scientific affairs. Ad hoc committee on health literacy for the council on scientific affairs. American Medical Association. JAMA. 1999;281:552–557.

- SAS Institute Inc. SAS 9.2 Macro Language: Reference. Author; Cary, NC: 2009.

- Strömberg A, Ahlén H, Fridlund B, Dahlström U. Interactive education on CD-ROM: A new tool in the education of heart failure patients. Patient Education and Counseling. 2002;46:75–81. doi: 10.1016/s0738-3991(01)00151-3.

- Sudore RL, Yaffe K, Satterfield S, Harris TB, Mehta KM, Simonsick EM, Schillinger D. Limited literacy and mortality in the elderly: The health, aging and body composition study. Journal of General Internal Medicine. 2006;21:806–812. doi: 10.1111/j.1525-1497.2006.00539.x.

- Weiss BD. Health literacy: A manual for clinicians. American Medical Association/American Medical Association Foundation; Chicago, IL: 2003.

- Wolf MS, Davis TC, Cross JT, Marin E, Green K, Bennett CL. Health literacy and patient knowledge in a Southern US HIV clinic. International Journal of STD & AIDS. 2004;15:747–752. doi: 10.1258/0956462042395131.

- Wolf MS, Knight SJ, Lyons EA, Durazo-Arvizu R, Pickard SA, Arseven A, Bennett CL. Literacy, race and PSA level among low-income men newly diagnosed with prostate cancer. Urology. 2006;68:89–93. doi: 10.1016/j.urology.2006.01.064.